Surface and Underwater Acoustic Source Recognition Using Multi-Channel Joint Detection Method Based on Machine Learning

Abstract

1. Introduction

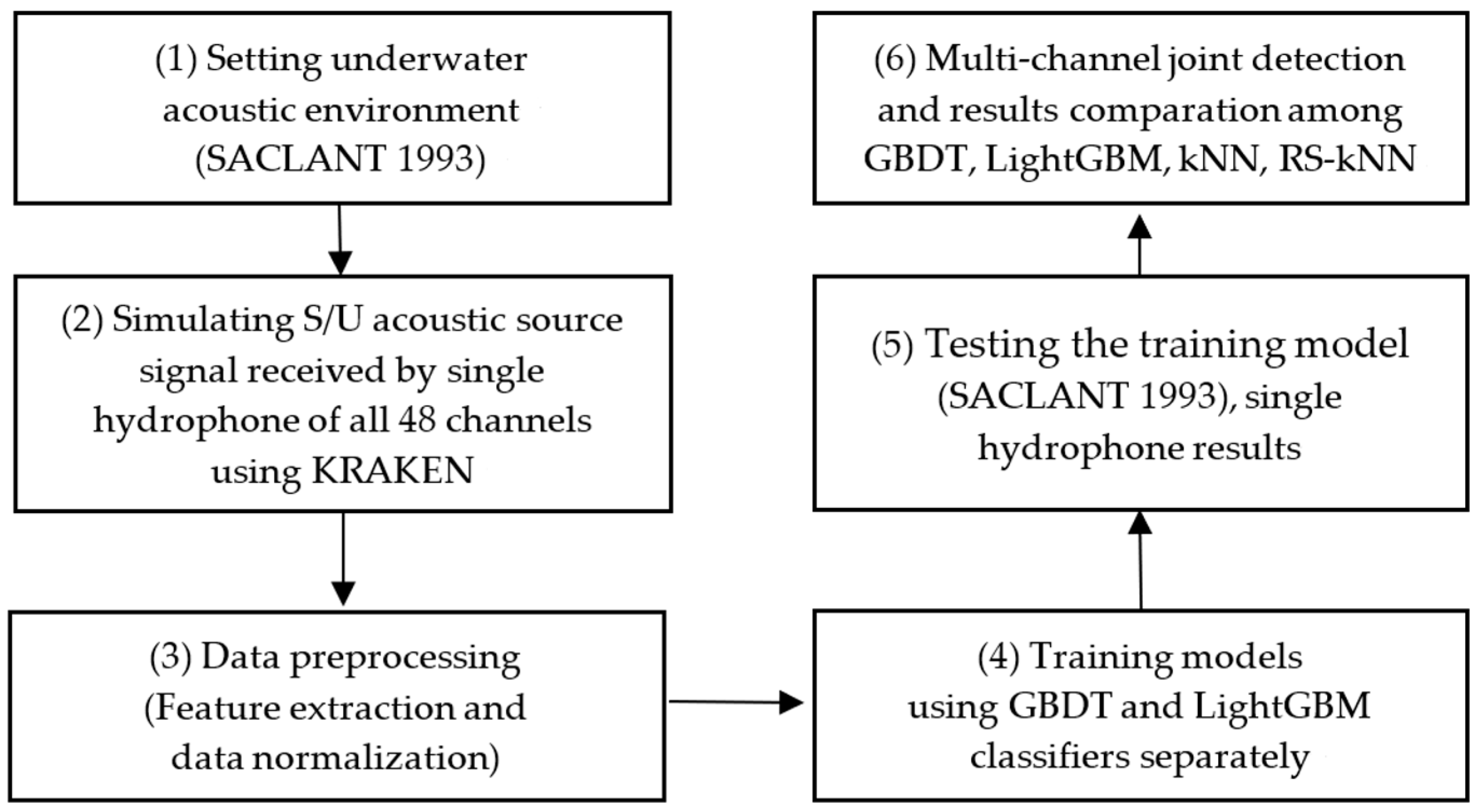

2. Overall Architecture [1]

3. Theory and Method

3.1. KRAKEN [13]

3.2. GBDT [22]

3.3. LightGBM [23]

- (1) Reducing memory usage so that a single machine can process as much data as possible without sacrificing speed;

- (2) Reducing communication cost, improving GPU efficiency, and achieving linear speed-up in computation.
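One mechanism behind the memory reduction in item (1) is the histogram-based split finding described in the LightGBM paper [23]. Each continuous feature is discretized into a small number of bins, which can be stored as 1-byte codes when there are at most 256 bins, and candidate splits are evaluated on per-bin gradient sums rather than on sorted raw values. The NumPy sketch below illustrates that idea only; it is not LightGBM's implementation. The function names, the quantile-based binning, and the regularized second-order gain with `reg_lambda` are illustrative assumptions.

```python
import numpy as np


def build_histogram_bins(x, max_bins=255):
    """Discretize one continuous feature into at most max_bins quantile bins.

    Returns uint8 bin codes (1 byte per value instead of 8 for float64)
    and the bin edges used to assign them.
    """
    if not 2 <= max_bins <= 256:
        raise ValueError("max_bins must be between 2 and 256 to fit in uint8 codes")
    # Interior quantiles give bins holding roughly equal numbers of samples.
    quantiles = np.linspace(0.0, 1.0, max_bins + 1)[1:-1]
    edges = np.unique(np.quantile(x, quantiles))
    # Code k means edges[k-1] <= x < edges[k]; codes range from 0 to len(edges).
    codes = np.searchsorted(edges, x, side="right").astype(np.uint8)
    return codes, edges


def best_split_from_histogram(codes, grad, hess, n_bins, reg_lambda=1.0):
    """Find the best threshold for one feature from gradient/hessian histograms.

    Samples with code <= best_bin go to the left child (x < edges[best_bin]).
    The gain is a regularized second-order split gain (an assumed form).
    """
    g_hist = np.bincount(codes, weights=grad, minlength=n_bins)
    h_hist = np.bincount(codes, weights=hess, minlength=n_bins)
    g_total, h_total = g_hist.sum(), h_hist.sum()
    # Cumulative sums give left-child statistics for every candidate threshold.
    g_left = np.cumsum(g_hist)[:-1]
    h_left = np.cumsum(h_hist)[:-1]
    g_right, h_right = g_total - g_left, h_total - h_left
    gain = (g_left ** 2 / (h_left + reg_lambda)
            + g_right ** 2 / (h_right + reg_lambda)
            - g_total ** 2 / (h_total + reg_lambda))
    best_bin = int(np.argmax(gain))
    return best_bin, float(gain[best_bin])


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.normal(size=10_000)
    y = (x > 0.3).astype(float)
    codes, edges = build_histogram_bins(x)
    # Logistic-loss gradients and hessians at an initial score of 0 (p = 0.5).
    grad, hess = 0.5 - y, np.full_like(x, 0.25)
    best_bin, gain = best_split_from_histogram(codes, grad, hess, len(edges) + 1)
    print(f"{x.nbytes} bytes -> {codes.nbytes} bytes; split at x < {edges[best_bin]:.3f}")
```

Once the histograms are built, the split scan runs over `n_bins` entries instead of over every training sample, which is where the speed benefit of this design comes from.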

4. Data Preprocessing

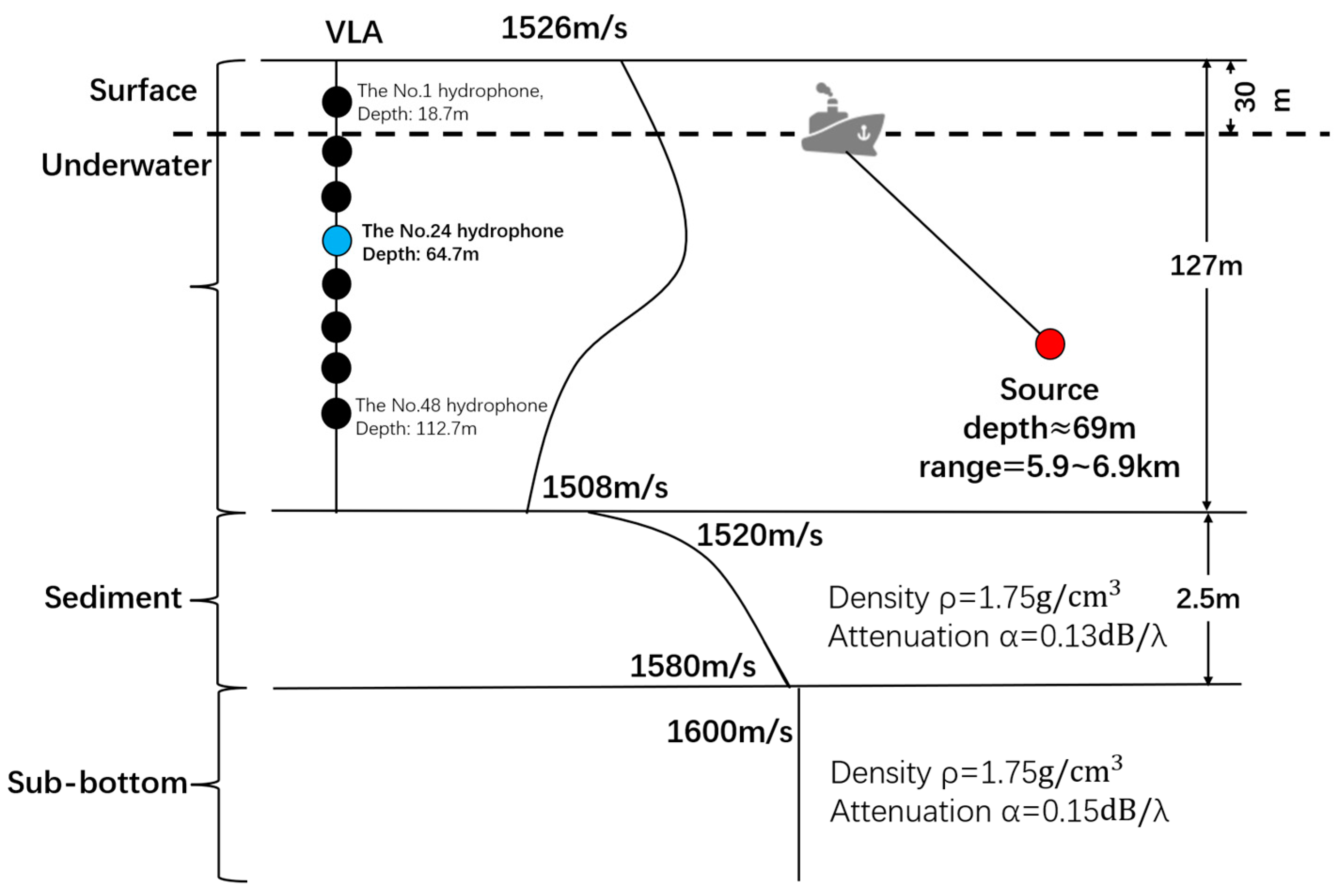

4.1. The Experimental Information of SACLANT 1993

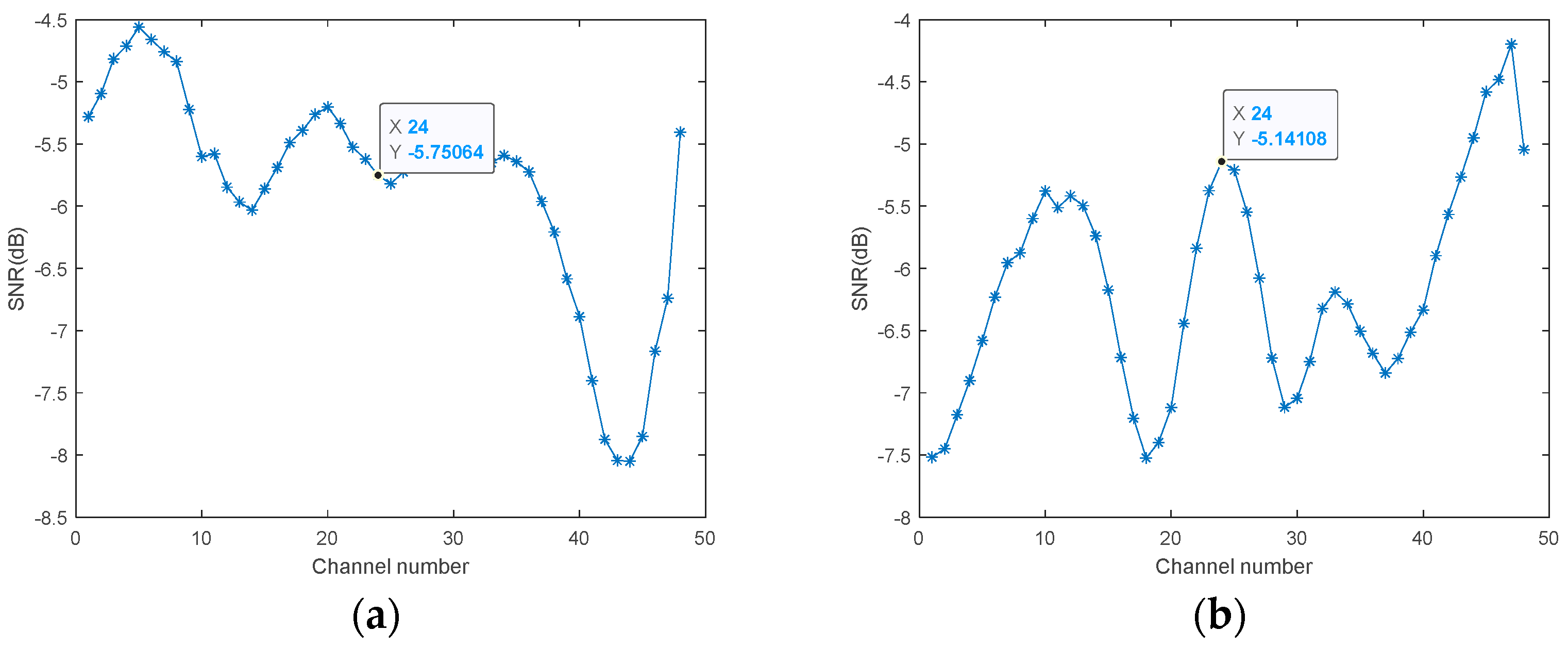

4.2. The Simulation Data

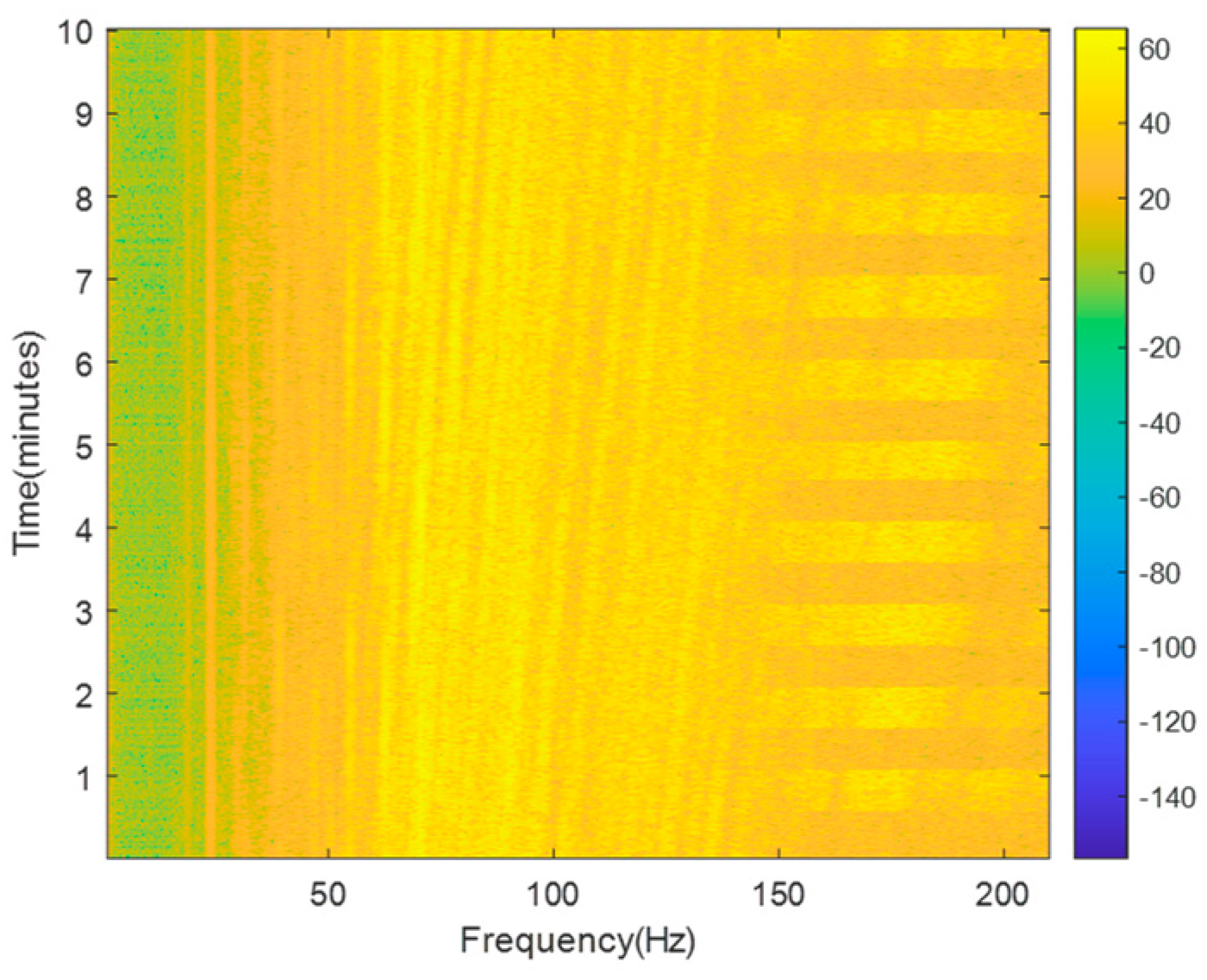

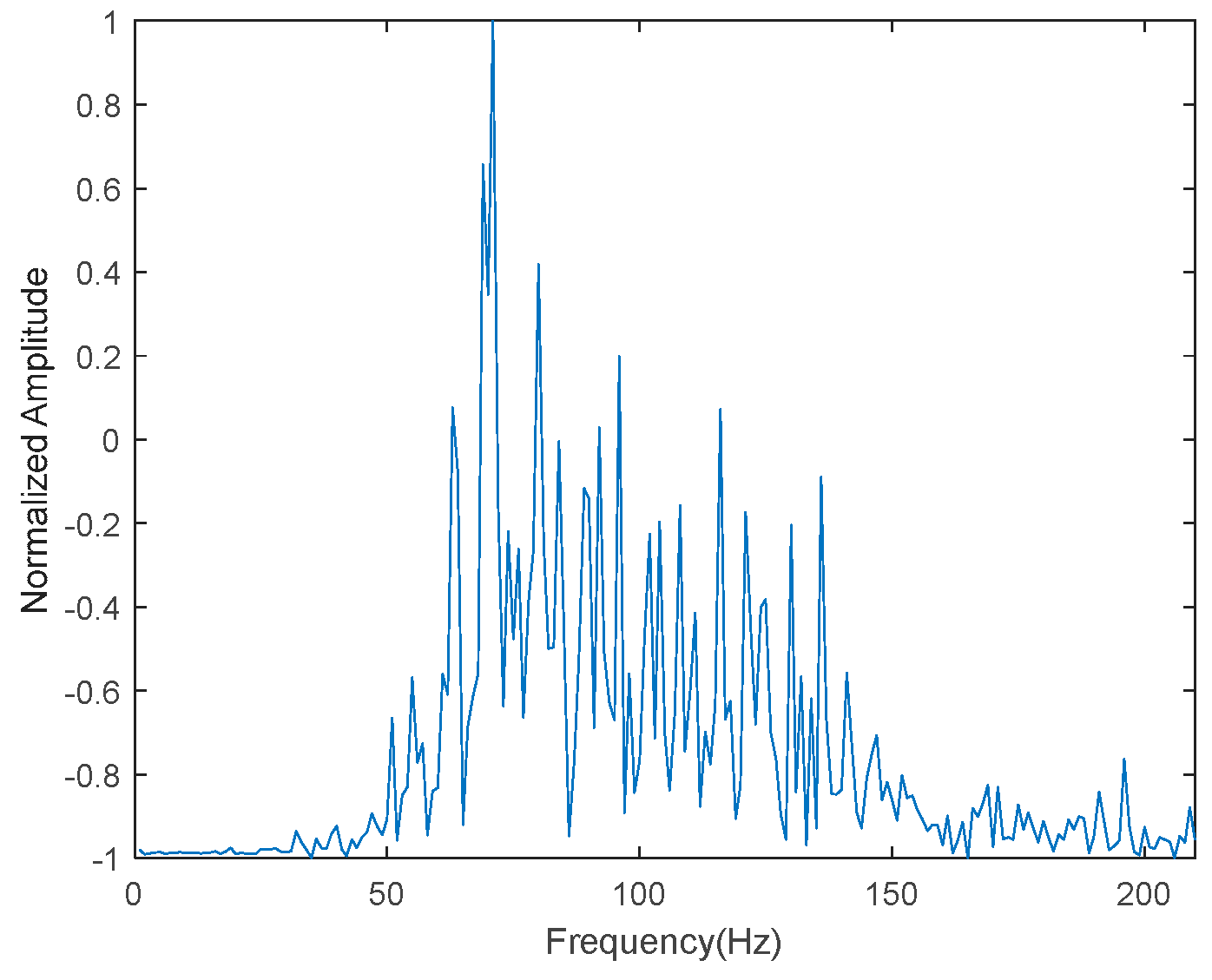

4.3. The Experimental Data

4.4. Feature Extraction

- (1) Using Modules (Magnitudes) as Features

- (2) Using Real and Imaginary Parts as Features
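The two feature sets can be illustrated as follows, assuming (hypothetically) that each sample is a row of complex values, for example the complex pressure received by one hydrophone at several frequency bins. Feature set (1) keeps the modulus |p| of each complex value; feature set (2) splits each value into its real and imaginary parts, which doubles the feature dimension. The array layout and function names are assumptions for illustration, not the authors' code.

```python
import numpy as np


def modulus_features(field):
    """Feature set (1): the modulus |p| of every complex value (same shape as field)."""
    return np.abs(field)


def real_imag_features(field):
    """Feature set (2): real parts followed by imaginary parts along the last axis.

    For an input of shape (n_samples, n_values) the output has shape
    (n_samples, 2 * n_values).
    """
    return np.concatenate([field.real, field.imag], axis=-1)


if __name__ == "__main__":
    # Hypothetical data: 3 samples x 4 complex values each.
    rng = np.random.default_rng(0)
    field = rng.normal(size=(3, 4)) + 1j * rng.normal(size=(3, 4))
    print(modulus_features(field).shape)    # (3, 4)
    print(real_imag_features(field).shape)  # (3, 8)

    # A common phase rotation leaves the moduli unchanged but not the real/imag parts.
    rotated = field * np.exp(1j * 0.7)
    print(np.allclose(modulus_features(rotated), modulus_features(field)))      # True
    print(np.allclose(real_imag_features(rotated), real_imag_features(field)))  # False
```

The last two lines show one practical difference between the representations: the moduli are insensitive to a phase shift common to all values, whereas a classifier trained on real and imaginary parts sees such a shift as a change in every feature.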

5. Results and Analyses

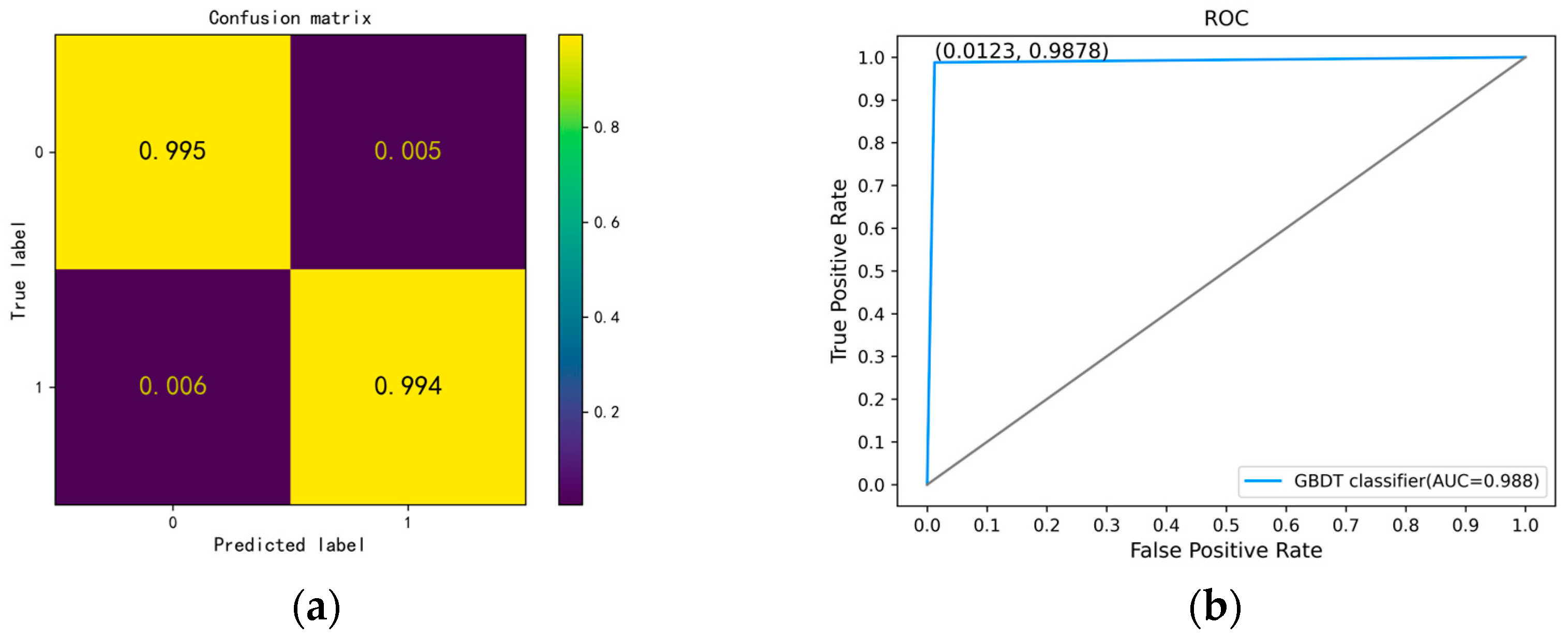

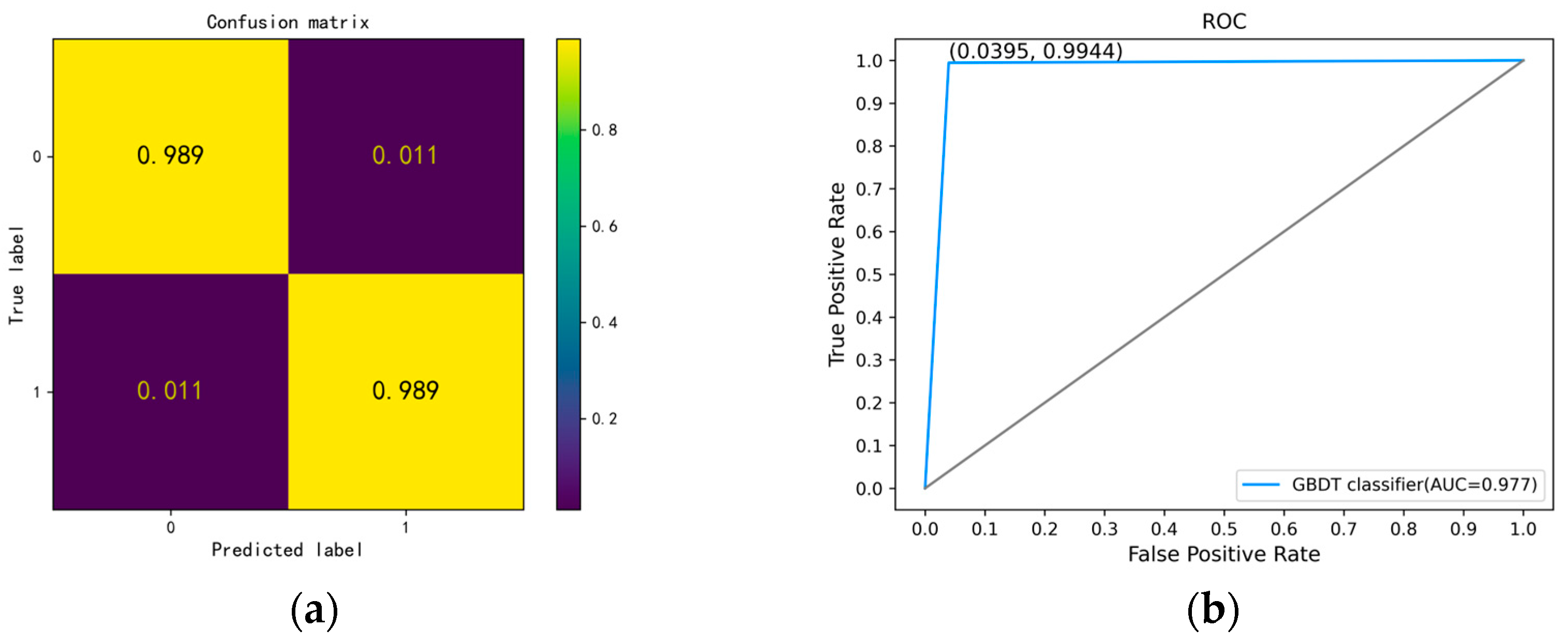

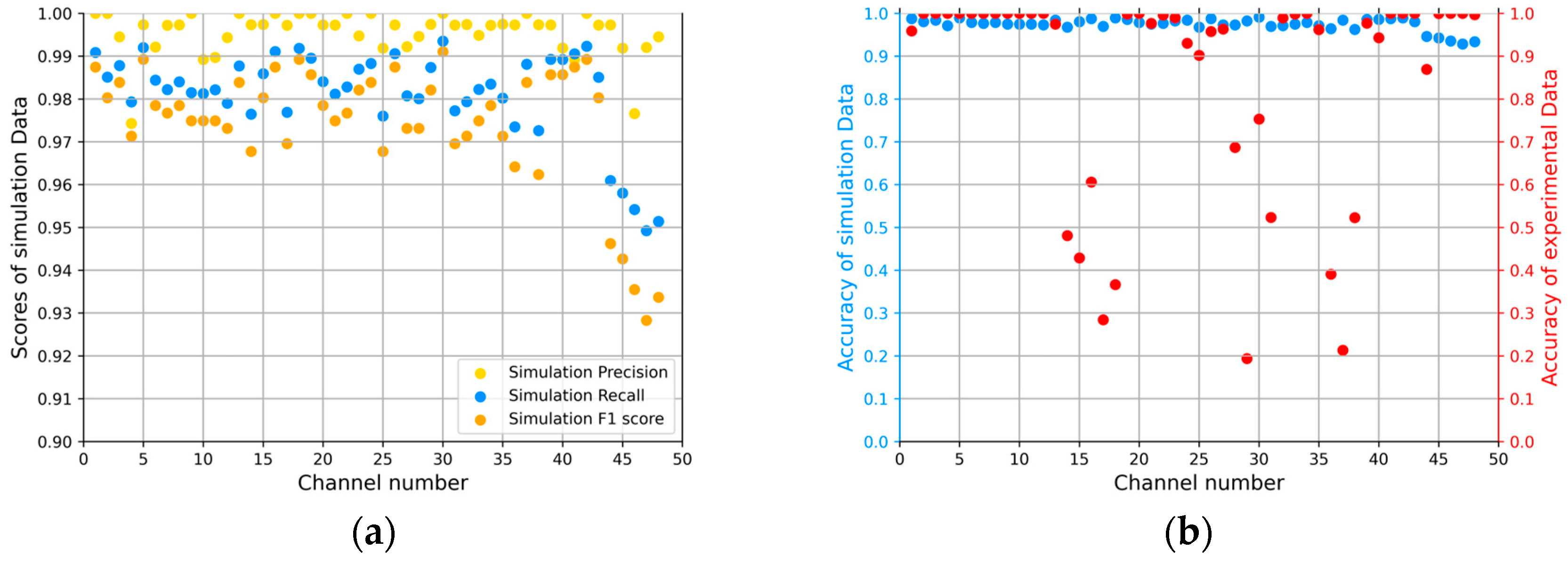

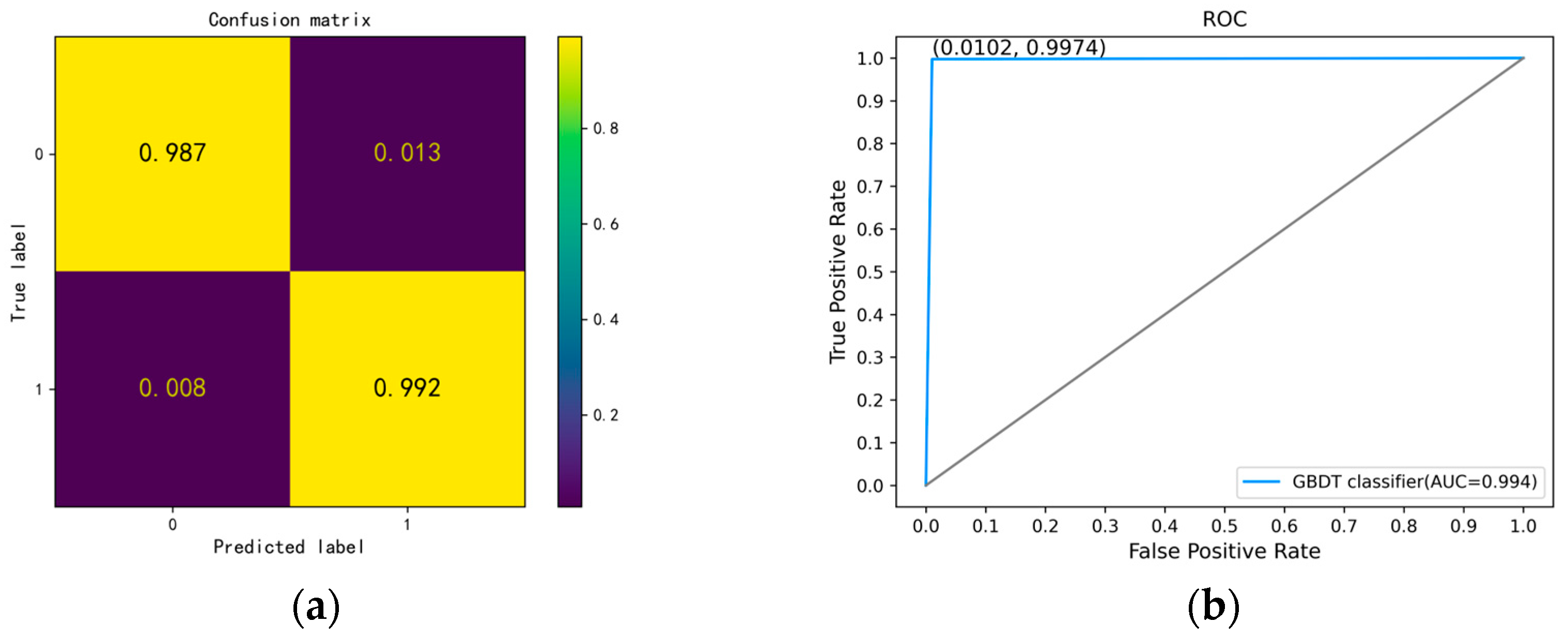

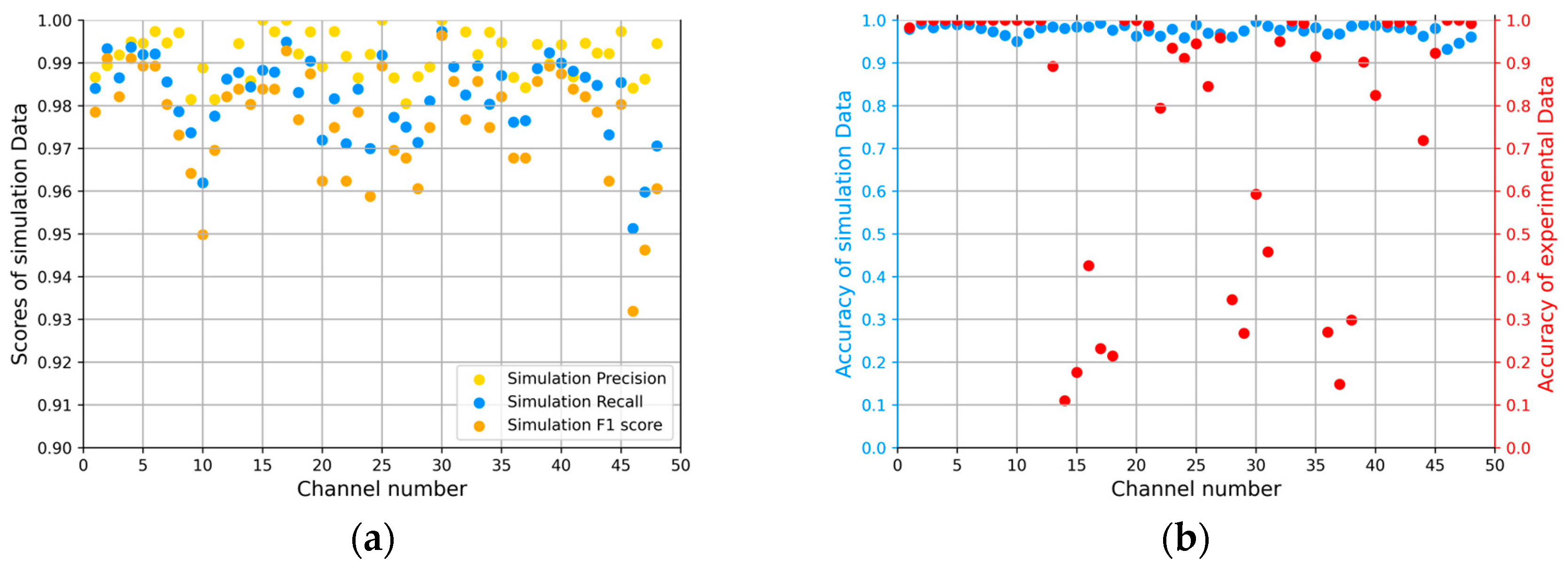

5.1. Results of GBDT

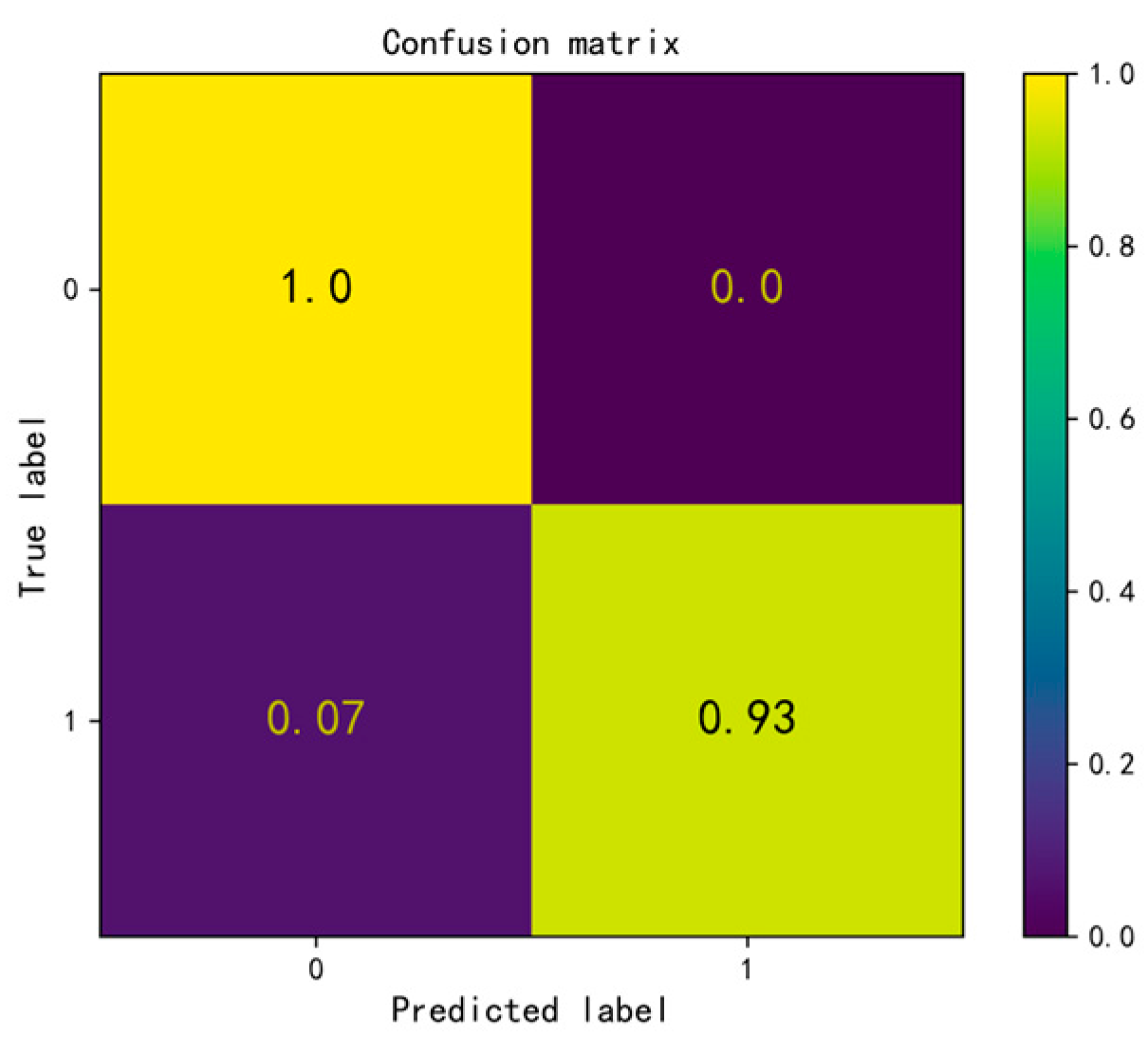

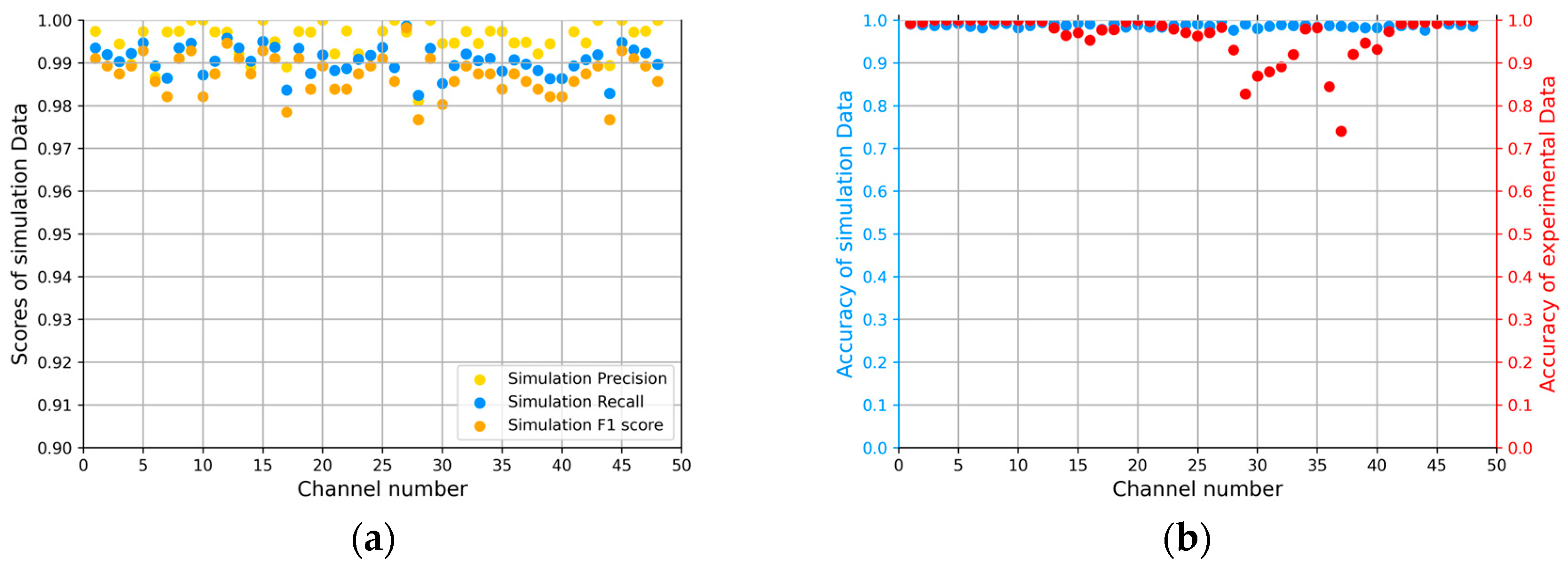

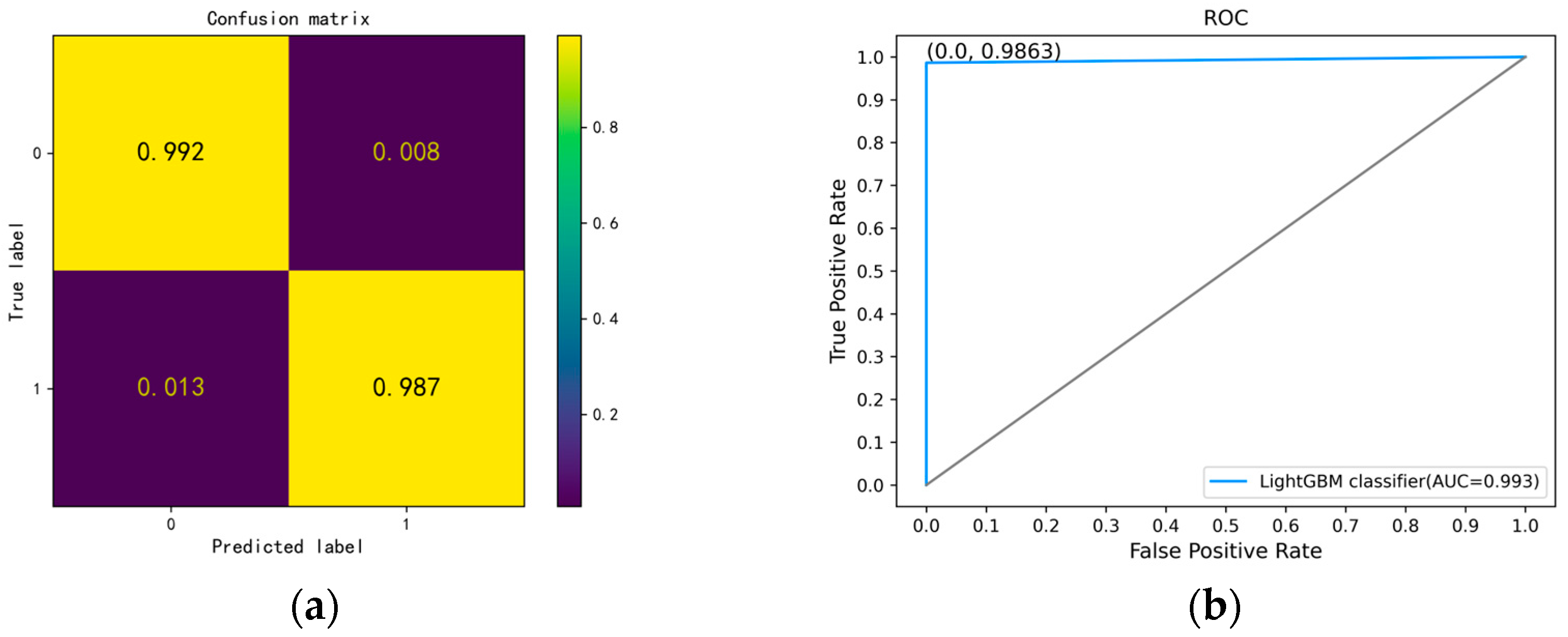

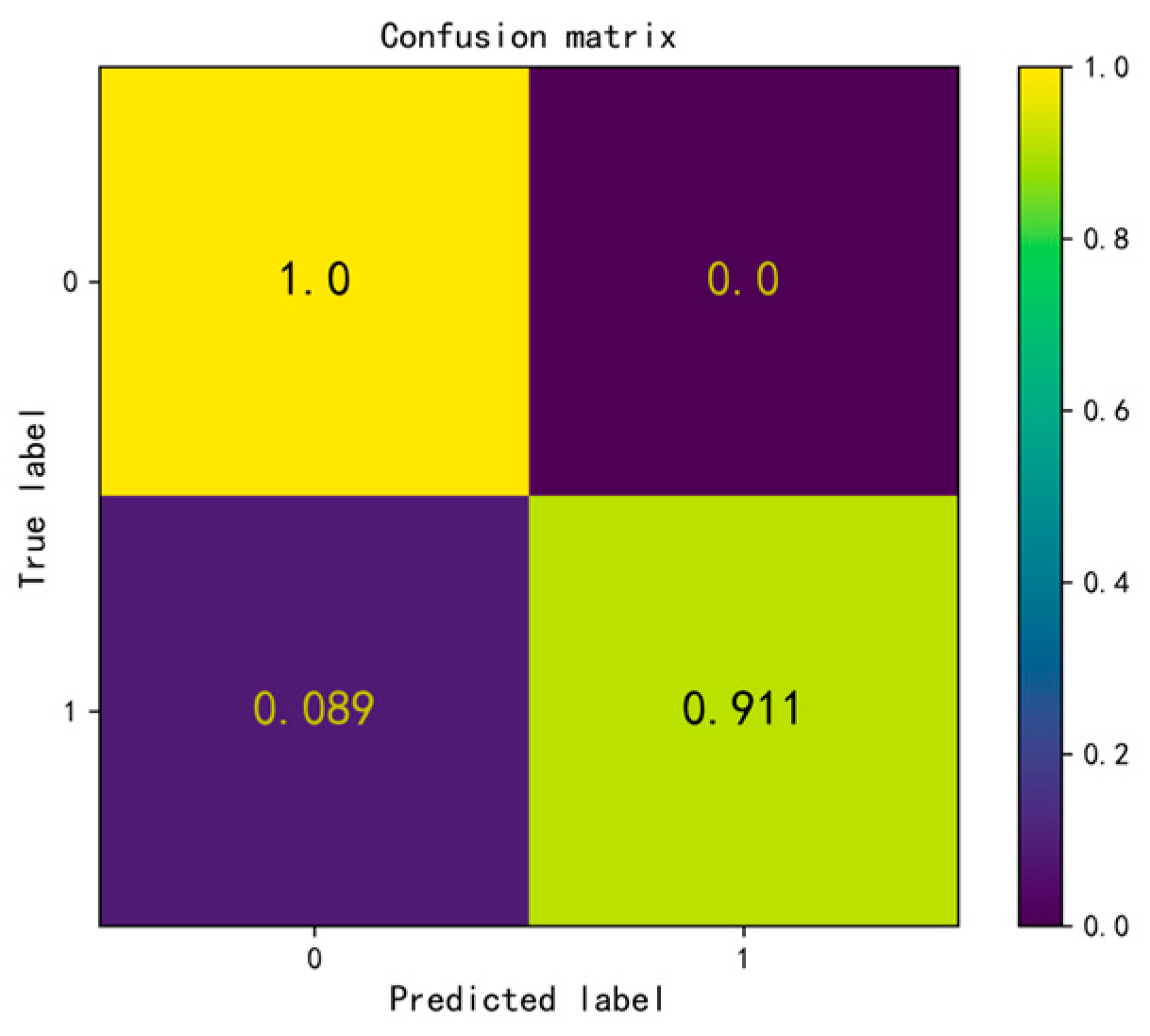

5.1.1. Evaluation of Surface Target Detection Model

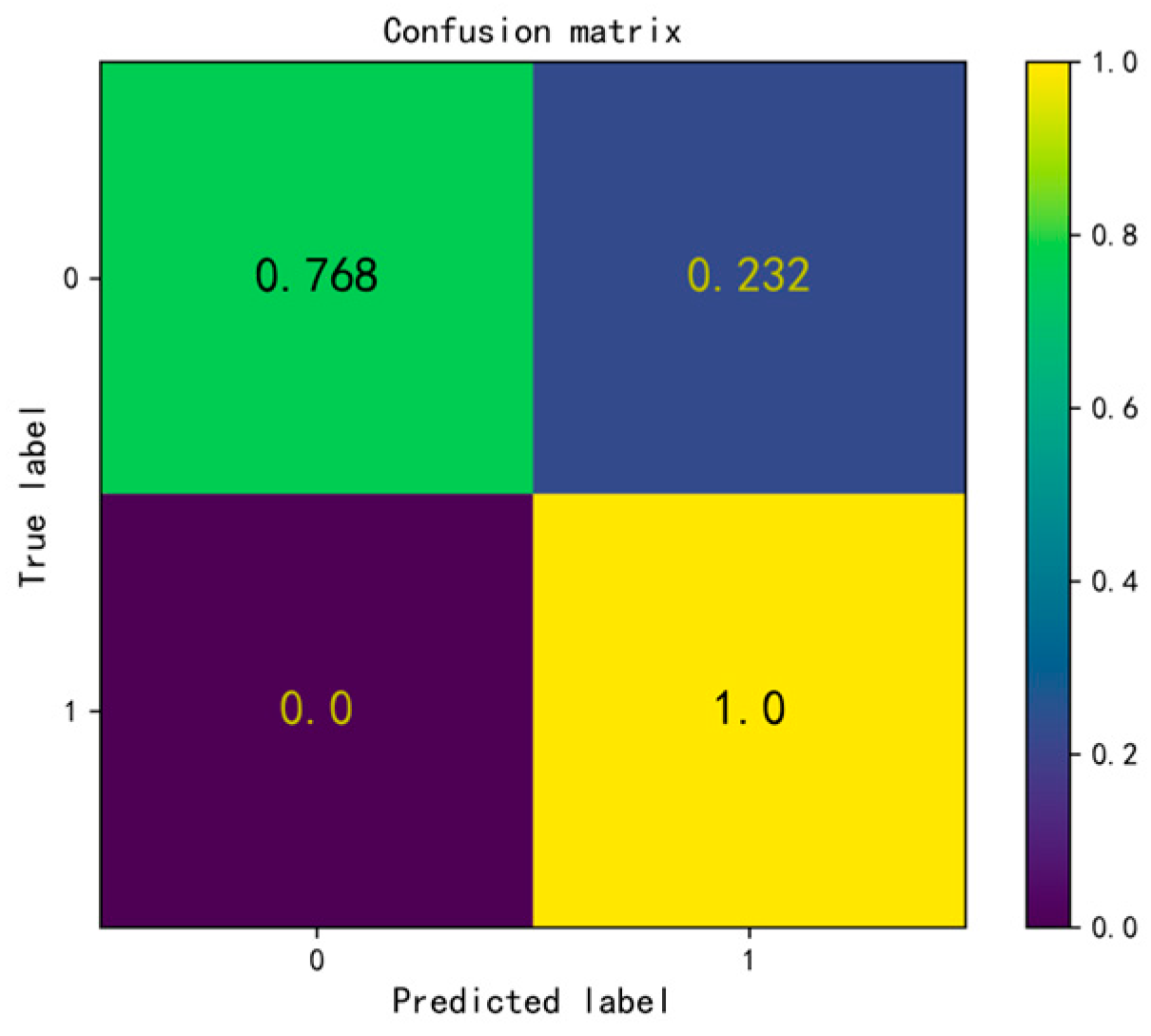

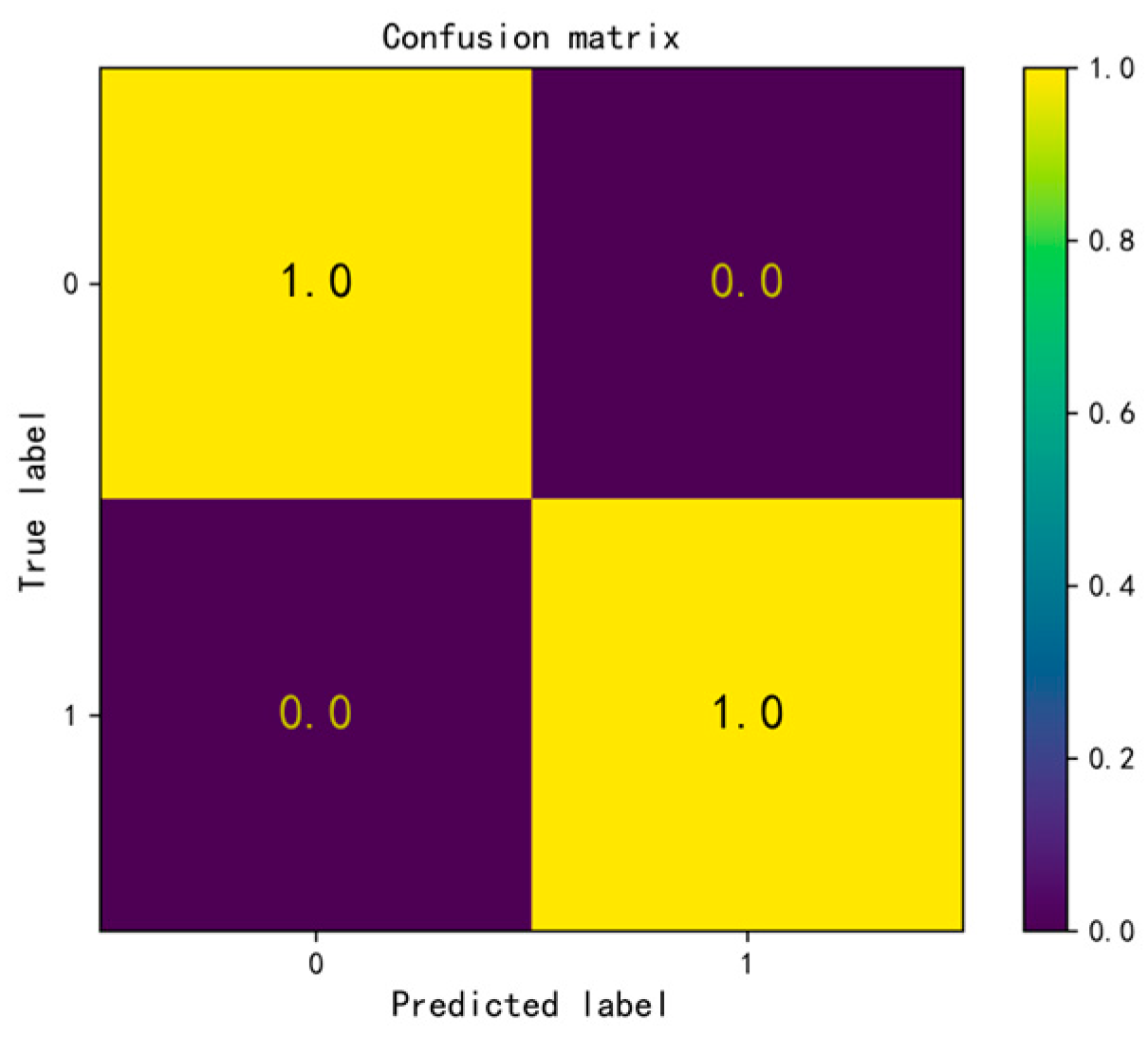

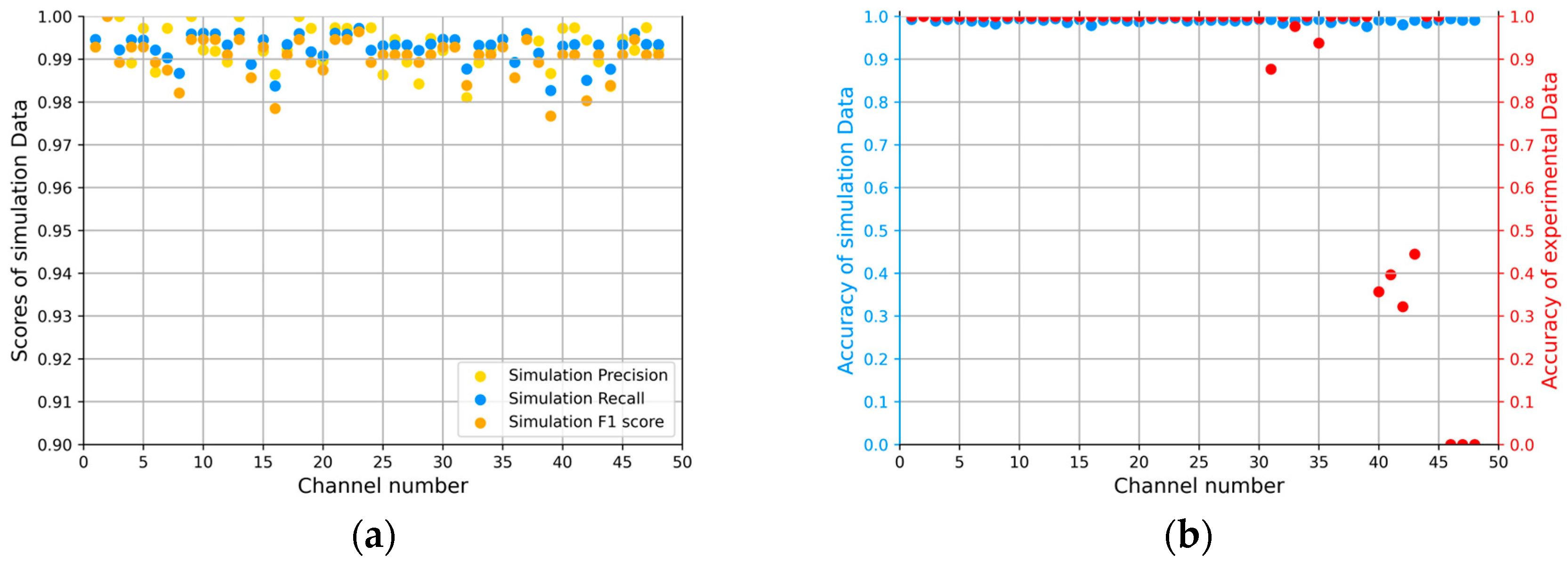

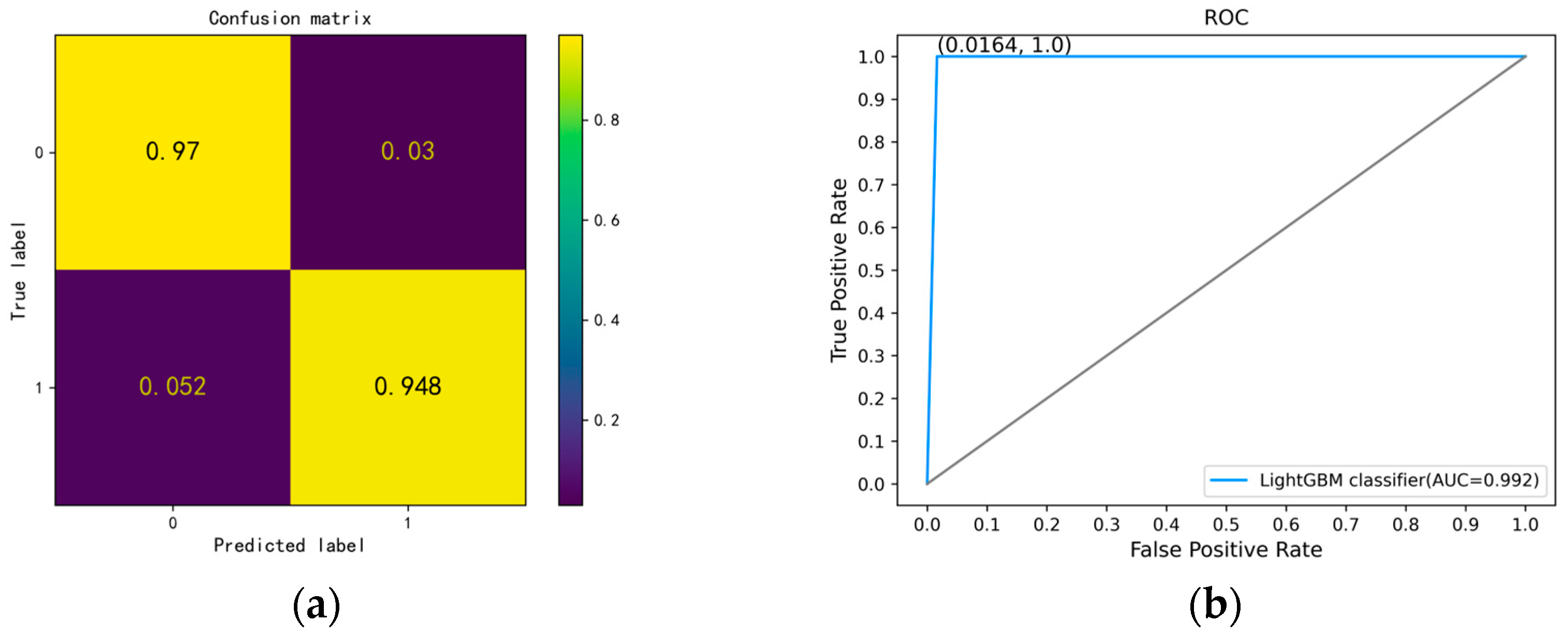

5.1.2. Evaluation of Underwater Target Detection Model

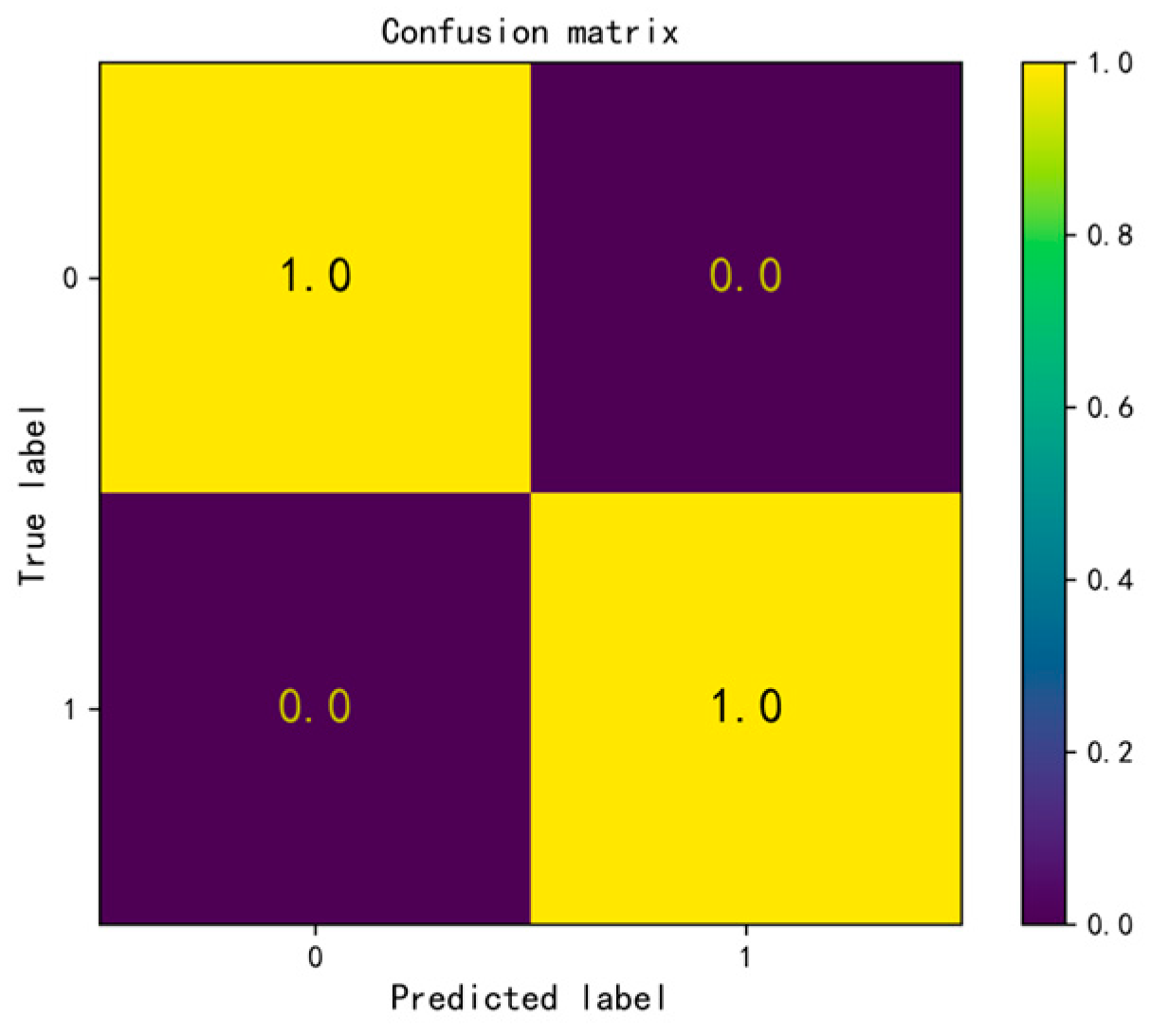

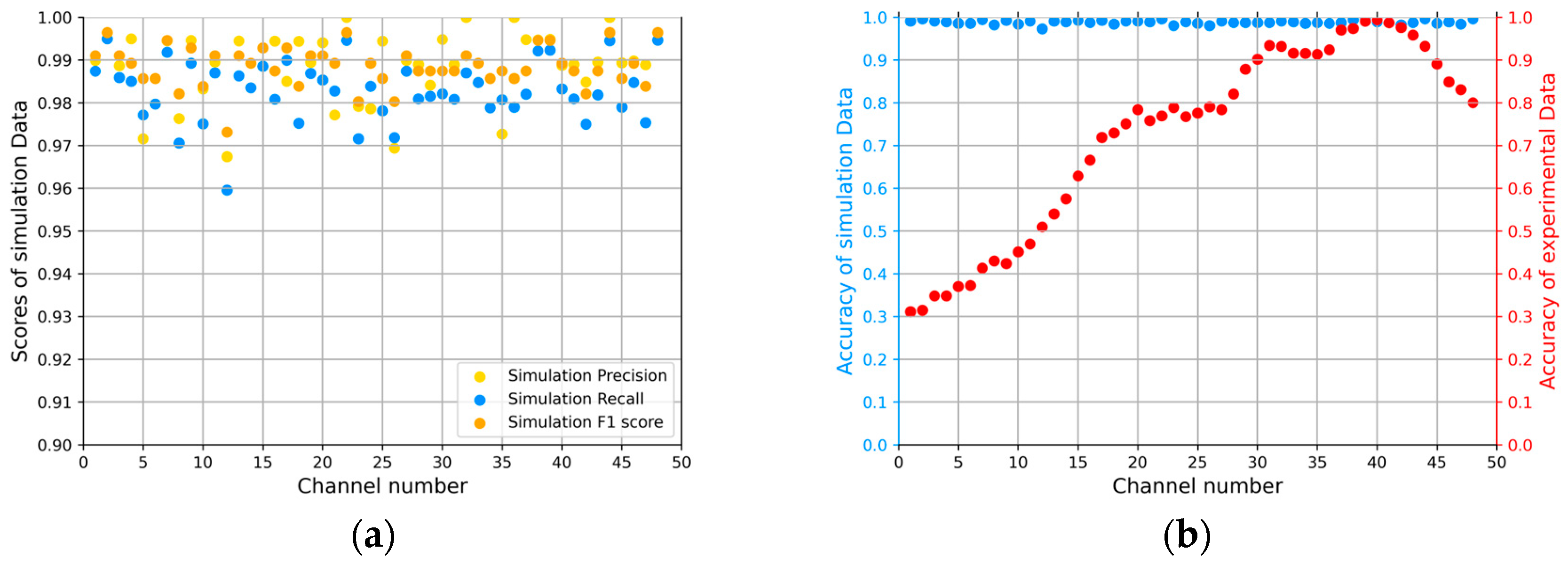

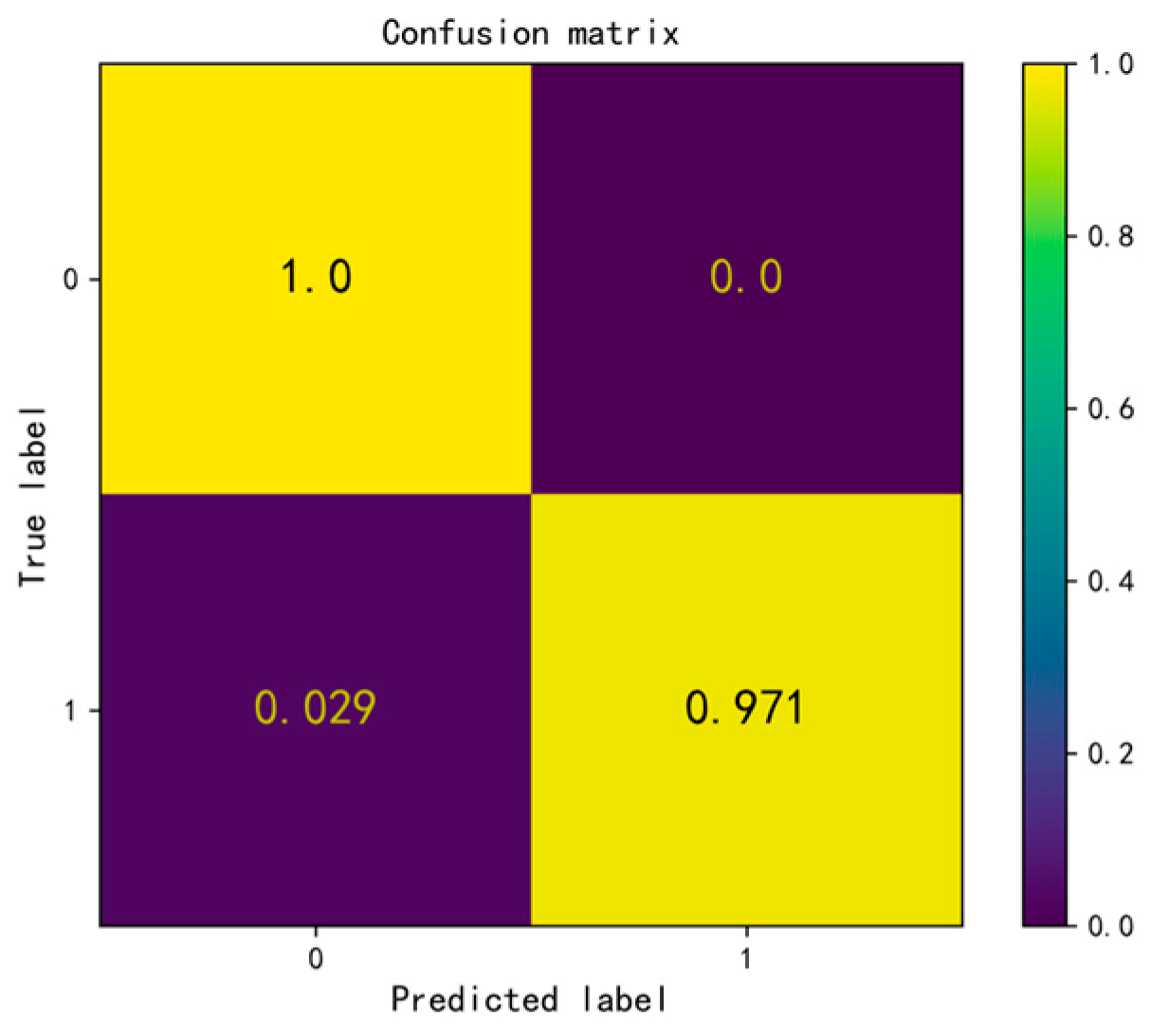

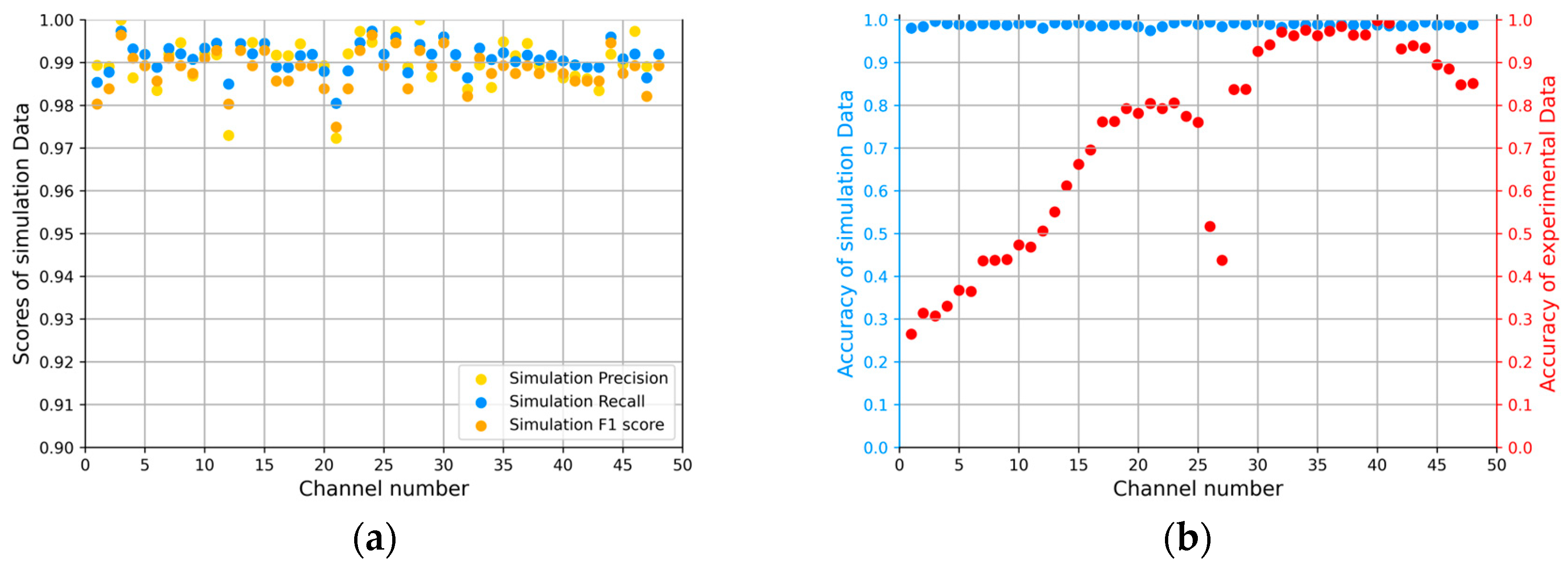

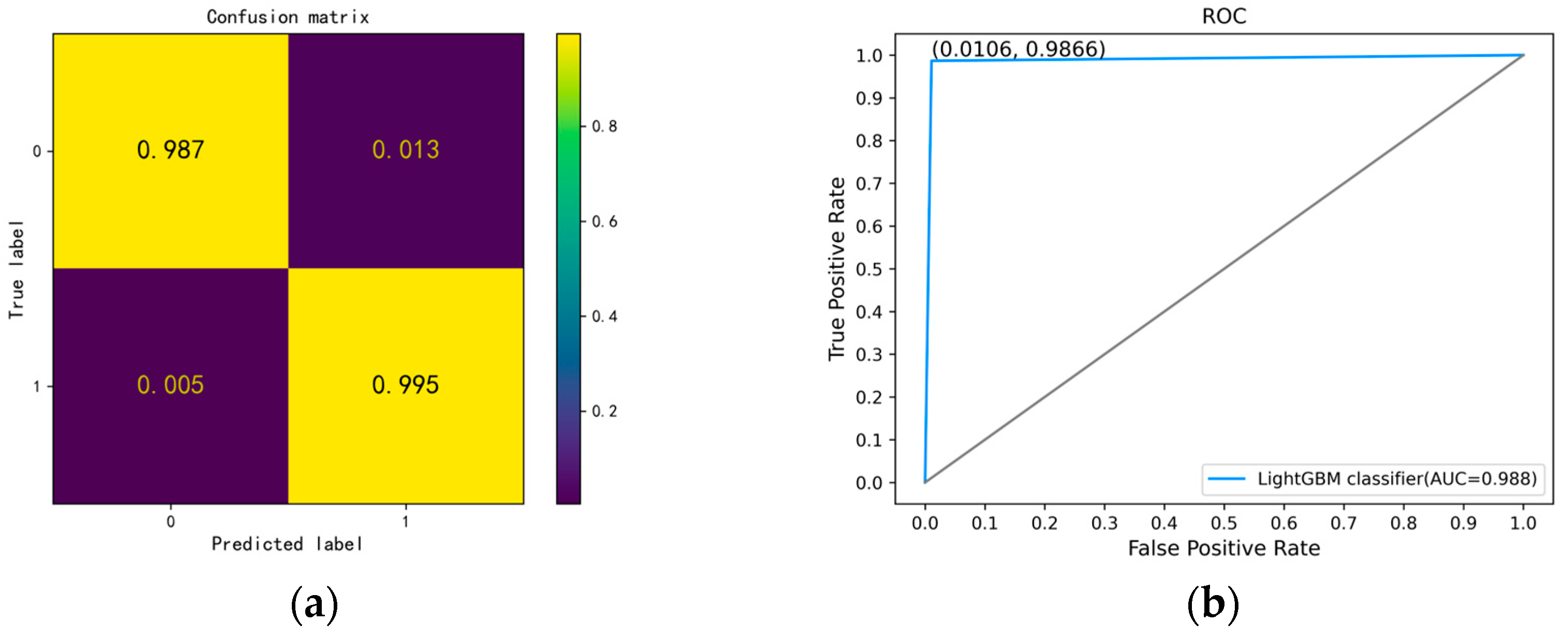

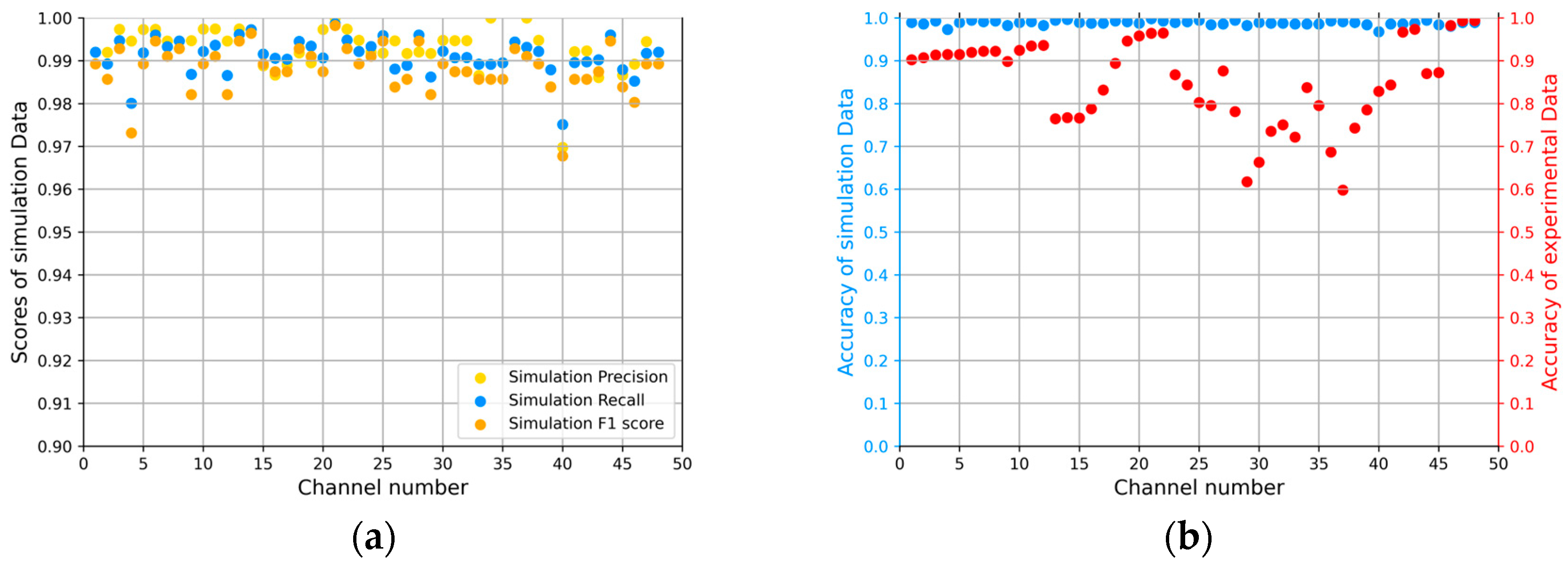

5.2. Results of LightGBM

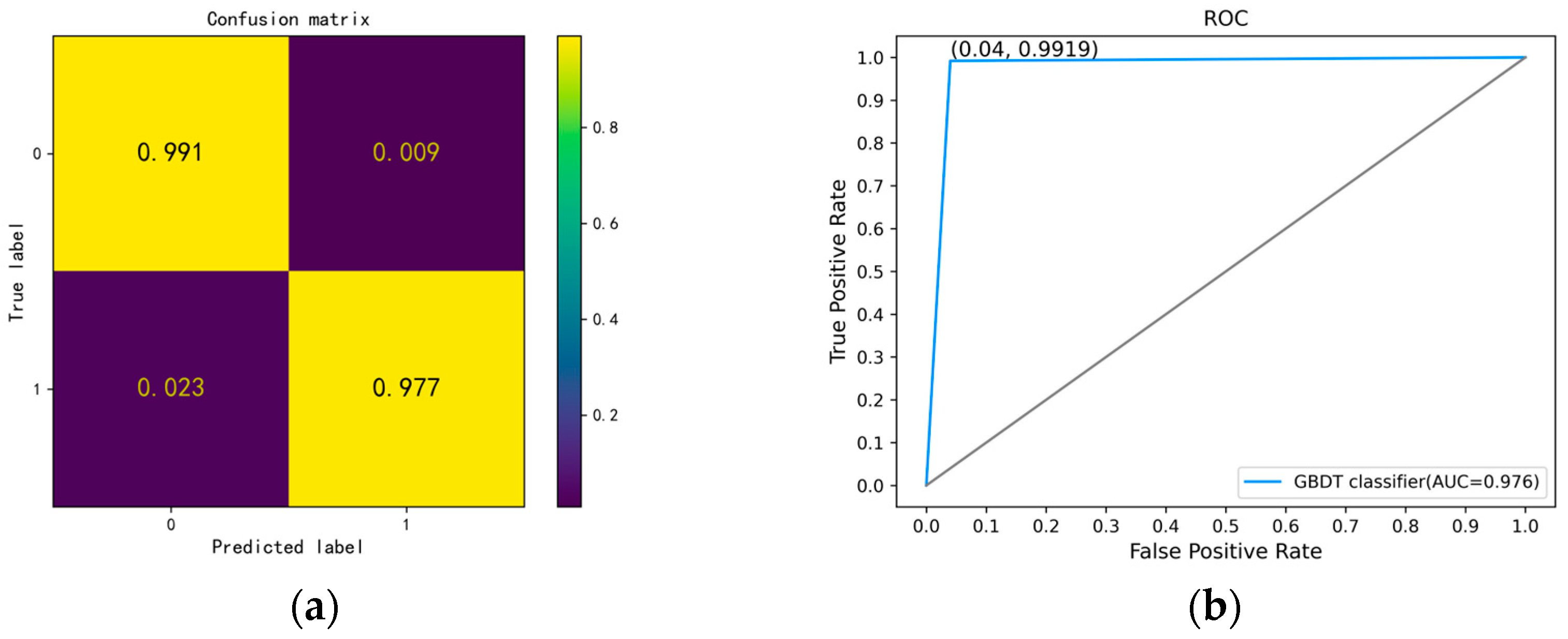

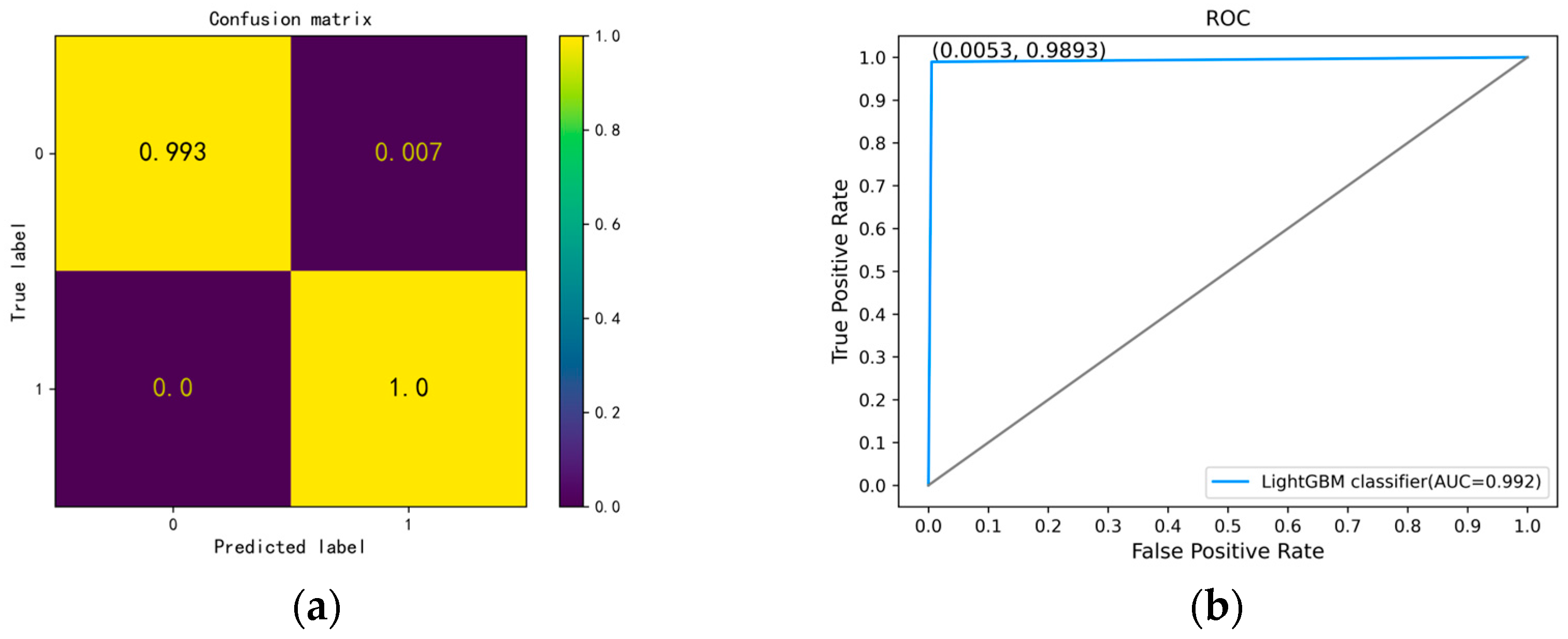

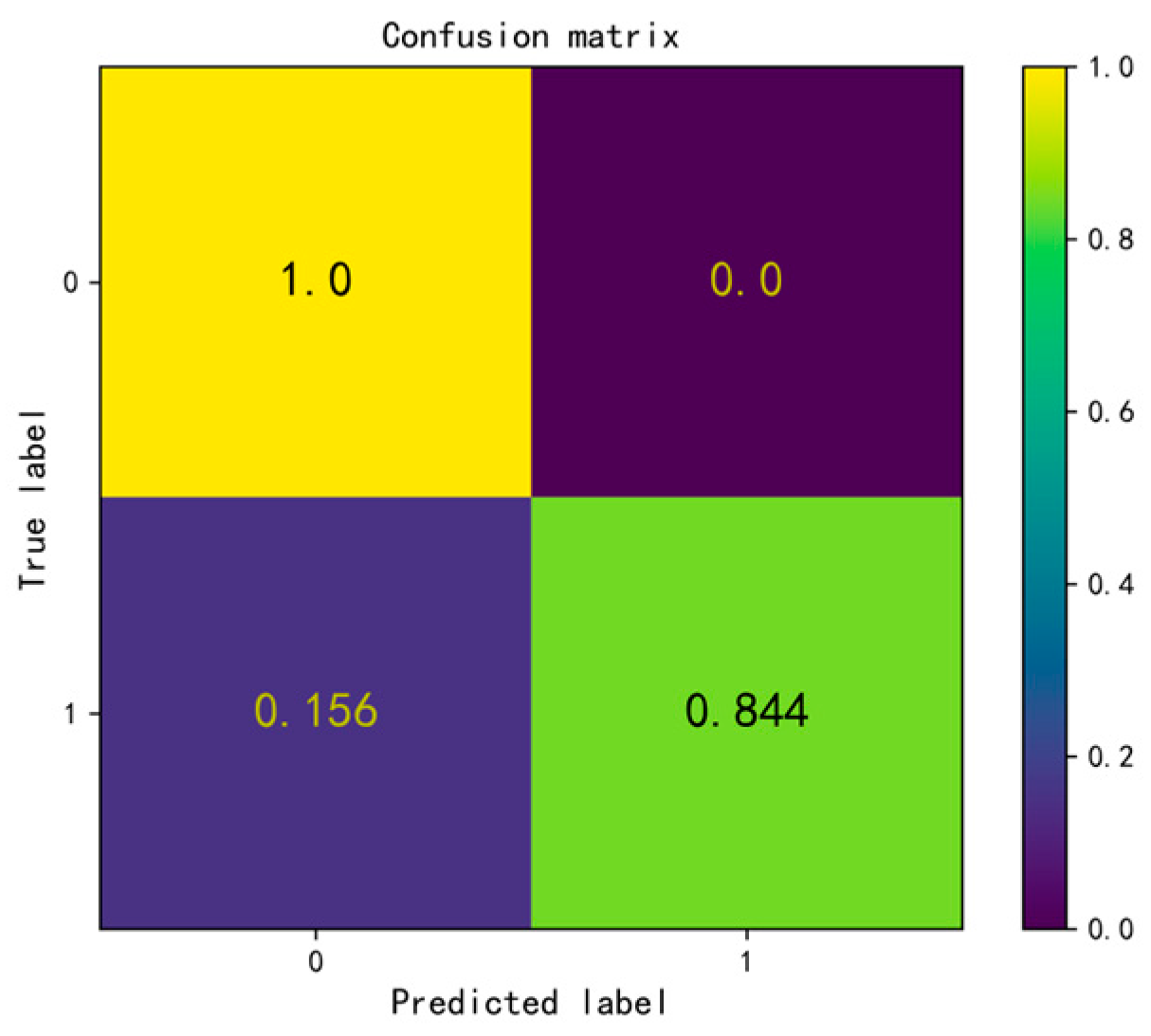

5.2.1. Evaluation of Water Surface Target Detection Model

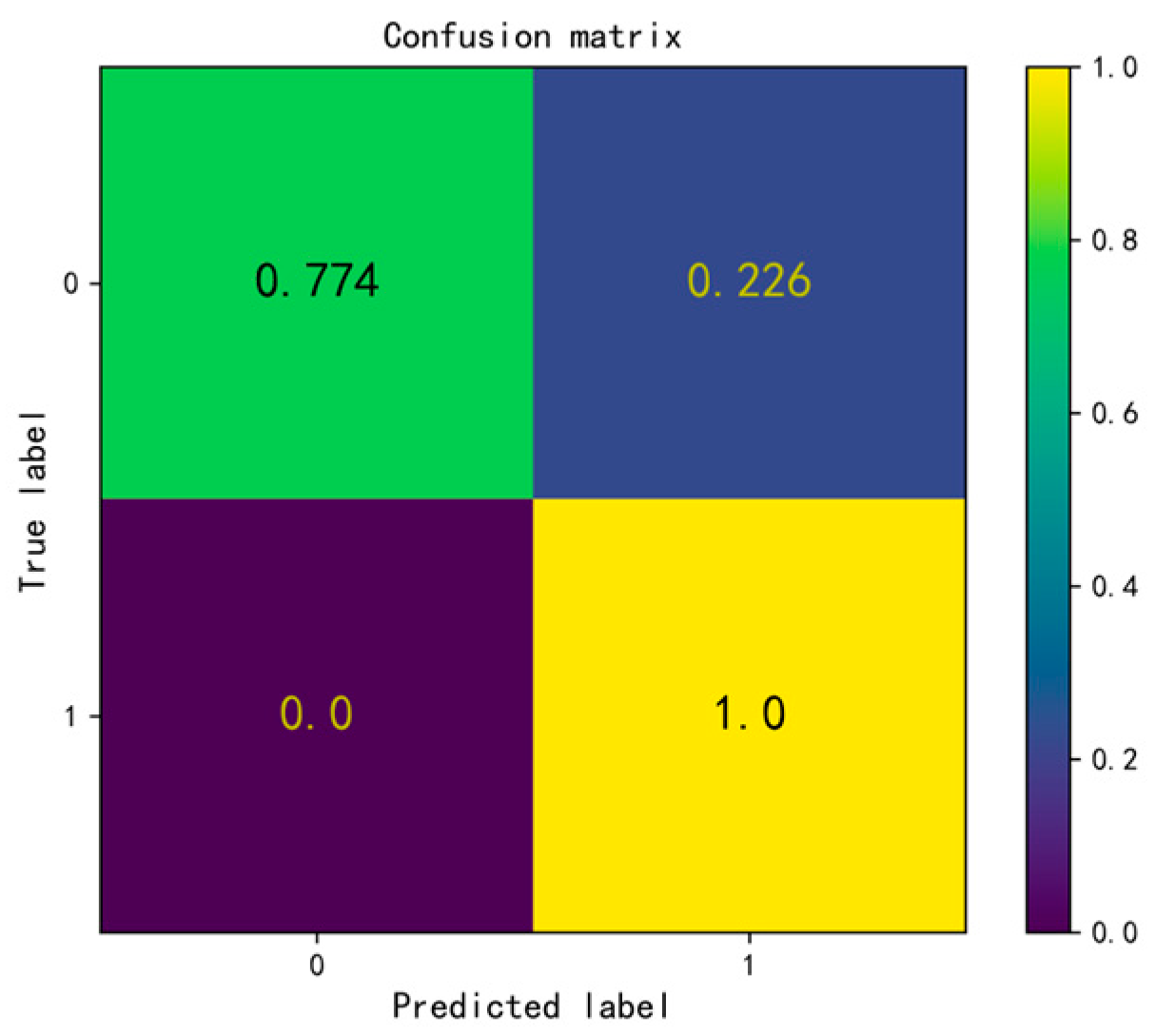

5.2.2. Evaluation of Underwater Target Detection Model

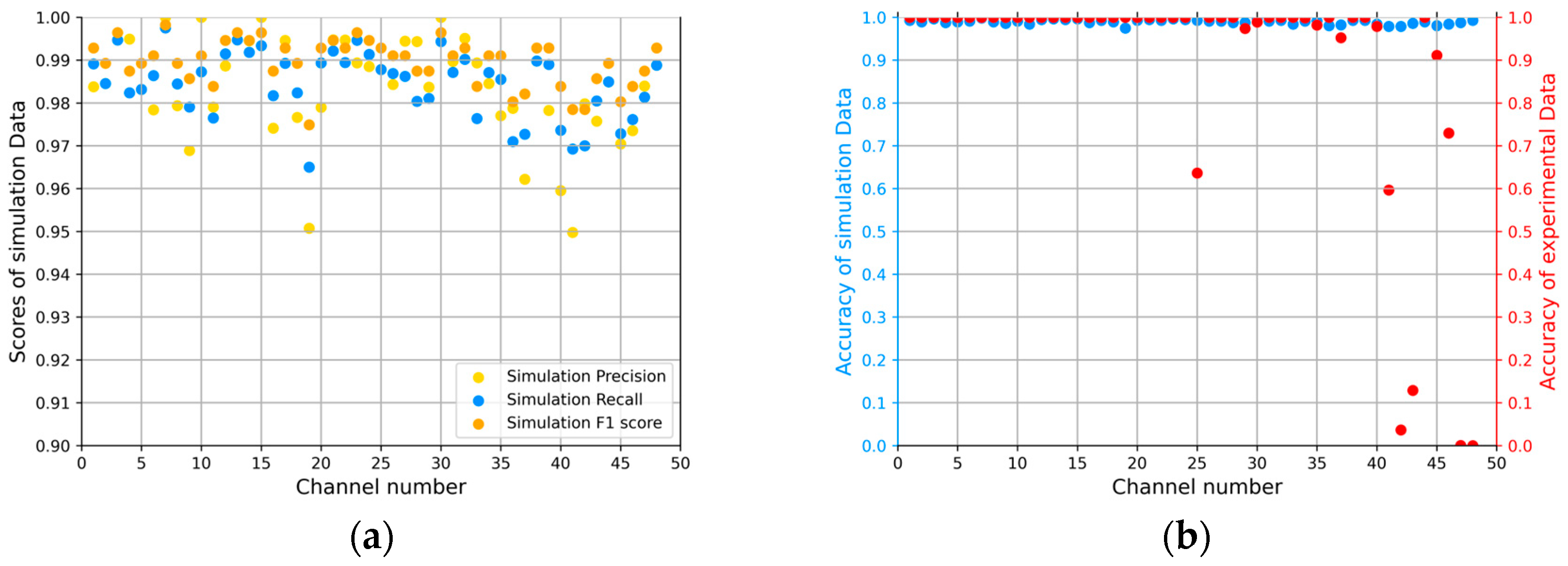

5.3. Multi-Channel Joint Detection and Comparison of Results

6. Conclusions and Discussion

- (1) The results for hydrophone No. 24 using the GBDT model show that the model trained on simulation data effectively solved the S/U acoustic source recognition problem. The final optimal model also achieved a good balance between precision and recall, with good accuracy on the experimental data.

- (2) Using LightGBM to classify the experimental data from hydrophone No. 24 achieved the best balance between precision and recall, with good experimental accuracy.

- (3) Four machine learning methods (kNN, RS-kNN, GBDT, and LightGBM) were applied to each of the 48 hydrophones of the VLA for S/U acoustic source recognition. With modules as features, GBDT and LightGBM outperformed kNN.

- (4) For the surface models, both GBDT and LightGBM reached the threshold on far more hydrophones with module features than with real and imaginary features (41 vs. 15 for GBDT and 40 vs. 15 for LightGBM), because the module features were sufficient for the algorithms to learn from. With module features, GBDT and LightGBM reached the threshold on 41 and 40 of the 48 hydrophones, respectively, well above the best result of the kNN-based methods (30 hydrophones, RS-kNN with real and imaginary features). Conversely, simply splitting each complex value into separate real and imaginary features loses the fact that the two parts jointly describe a single point. The resulting features contain a lot of redundant information, so the features learned by the model are unrepresentative to some extent and lead to incorrect predictions.
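The counts quoted in item (4) are the lengths of the hydrophone lists for the surface models (the first of the two hydrophone threshold tables, whose counts of 41, 40, and 30 match this item). The short script below transcribes those lists, expresses each count as a fraction of the 48 hydrophones, and picks out the best kNN-based result. The dictionary layout and helper names are illustrative; only the hydrophone numbers come from the table.

```python
N_HYDROPHONES = 48

# Hydrophones (numbered 1-48) that reached the threshold with the surface models,
# transcribed from the surface-model threshold table.
SURFACE_PASSES = {
    ("kNN", "modules"): list(range(15, 30)) + [34, 36, 37, 38, 39],
    ("kNN", "real/imag"): list(range(16, 24)) + list(range(26, 44)) + [47, 48],
    ("RS-kNN", "modules"): list(range(15, 40)),
    ("RS-kNN", "real/imag"): list(range(16, 43)) + [46, 47, 48],
    ("GBDT", "modules"): list(range(1, 25)) + list(range(26, 41)) + [44, 45],
    ("GBDT", "real/imag"): list(range(30, 45)),
    ("LightGBM", "modules"): list(range(1, 31)) + list(range(32, 40)) + [44, 45],
    ("LightGBM", "real/imag"): list(range(30, 45)),
}


def pass_counts(table):
    """Number of distinct hydrophones that reached the threshold per (method, feature)."""
    return {key: len(set(hydrophones)) for key, hydrophones in table.items()}


def best_count(counts, methods):
    """Highest count among the given methods, over both feature types."""
    return max(n for (method, _), n in counts.items() if method in methods)


if __name__ == "__main__":
    counts = pass_counts(SURFACE_PASSES)
    for (method, feature), n in counts.items():
        print(f"{method:9s} {feature:10s} {n:2d}/{N_HYDROPHONES} ({n / N_HYDROPHONES:.1%})")
    print("best kNN-based count:", best_count(counts, {"kNN", "RS-kNN"}))
```

Expressed as fractions, the module-feature results of GBDT (41/48) and LightGBM (40/48) correspond to roughly 85% and 83% of the array, against 62.5% (30/48) for the best kNN-based configuration.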

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Zhang, W.; Wu, Y.; Shi, J.; Leng, H.; Zhao, Y.; Guo, J. Surface and Underwater Acoustic Source Discrimination Based on Machine Learning Using a Single Hydrophone. J. Mar. Sci. Eng. 2022, 10, 321.

- Nicolas, B.; Mars, J.I.; Lacoume, J.-L. Source Depth Estimation Using a Horizontal Array by Matched-Mode Processing in the Frequency-Wavenumber Domain. EURASIP J. Adv. Signal Process. 2006, 2006, 065901.

- Tolstoy, A. Matched Field Processing for Underwater Acoustics; World Scientific: Singapore, 1993.

- Wilson, G.R.; Koch, R.A.; Vidmar, P.J. Matched mode localization. J. Acoust. Soc. Am. 1988, 84, 310–320.

- Westwood, E.K. Broadband matched-field source localization. J. Acoust. Soc. Am. 1992, 91, 2777–2789.

- Baggeroer, A.B.; Kuperman, W.A.; Mikhalevsky, P.N. An overview of matched field methods in ocean acoustics. IEEE J. Ocean. Eng. 1993, 18, 401–424.

- Smith, G.B.; Feuillade, C.; Balzo, D.R.D.; Byrne, C.L. A nonlinear matched-field processor for detection and localization of a quiet source in a noisy shallow-water environment. J. Acoust. Soc. Am. 1989, 85, 1158–1166.

- Michalopoulou, Z.-H.; Porter, M.B. Matched-field processing for broad-band source localization. IEEE J. Ocean. Eng. 1996, 21, 384–392.

- Wawrzyniak, N.; Stateczny, A. MSIS Image Postioning in Port Areas with the Aid of Comparative Navigation Methods. Pol. Marit. Res. 2017, 24, 32–41.

- Kazimierski, W.; Zaniewicz, G. Determination of Process Noise for Underwater Target Tracking with Forward Looking Sonar. Remote Sens. 2021, 13, 1014.

- Piskur, P.; Szymak, P. Algorithms for passive detection of moving vessels in marine environment. J. Mar. Eng. Technol. 2017, 16, 377–385.

- Premus, V.E.; Helfrick, M.N. Use of mode subspace projections for depth discrimination with a horizontal line array: Theory and experimental results. J. Acoust. Soc. Am. 2013, 133, 4019–4031.

- Porter, M.B. The KRAKEN Normal Mode Program; SACLANT Undersea Research Centre: La Spezia, Italy, 1991.

- Premus, V.E.; Backman, D. A Matched Subspace Approach to Depth Discrimination in a Shallow Water Waveguide. In Proceedings of the Conference Record of the Forty-First Asilomar Conference on Signals, Systems and Computers, Pacific Grove, CA, USA, 4–7 November 2007; Ocean Acoustical Services and Instrumentation Systems Inc.: Lexington, MA, USA, 2007.

- Yang, T.C. Data-based matched-mode source localization for a moving source. J. Acoust. Soc. Am. 2014, 135, 1218–1230.

- Du, J.; Zheng, Y.; Wang, Z.; Cui, H.; Liu, Z. Passive Acoustic Source Depth Discrimination with Two Hydrophones in Shallow Water. In Proceedings of the OCEANS 2016 Shanghai, Shanghai, China, 10–13 April 2016.

- Conan, E.; Bonnel, J.; Chonavel, T.; Nicolas, B. Source depth discrimination with a vertical line array. J. Acoust. Soc. Am. 2016, 140, EL434–EL440.

- Conan, E.; Bonnel, J.; Nicolas, B.; Chonavel, T. Using the trapped energy ratio for source depth discrimination with a horizontal line array: Theory and experimental results. J. Acoust. Soc. Am. 2017, 142, 2776–2786.

- Liang, G.; Zhang, Y.; Zhang, G.; Feng, J.; Zheng, C. Depth Discrimination for Low-Frequency Sources Using a Horizontal Line Array of Acoustic Vector Sensors Based on Mode Extraction. Sensors 2018, 18, 3692.

- Niu, H.; Reeves, E.; Gerstoft, P. Source localization in an ocean waveguide using supervised machine learning. J. Acoust. Soc. Am. 2017, 142, 1176–1188.

- Niu, H.; Ozanich, E.; Gerstoft, P. Ship localization in Santa Barbara Channel using machine learning classifiers. J. Acoust. Soc. Am. 2017, 142, EL455–EL460.

- Friedman, J.H. Greedy Function Approximation: A Gradient Boosting Machine. Ann. Stat. 2001, 29, 1189–1232.

- Ke, G.; Meng, Q.; Finley, T. LightGBM: A Highly Efficient Gradient Boosting Decision Tree. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017.

- Signal Processing Information Base (SPIB). Available online: http://spib.linse.ufsc.br/ (accessed on 10 October 2021).

- Gingras, D.F.; Gerstoft, P. Inversion for geometric and geoacoustic parameters in shallow water: Experimental results. J. Acoust. Soc. Am. 1995, 97, 34.

- Krolik, L.J. The performance of matched-field beamformers with Mediterranean vertical array data. IEEE Trans. Signal Process. 1996, 44, 2605–2611.

- Choi, J.; Choo, Y.; Lee, K. Acoustic Classification of Surface and Underwater Vessels in the Ocean Using Supervised Machine Learning. Sensors 2019, 19, 3492.

| Parameter | Value |
|---|---|
| Depth of the top hydrophone | 18.7 m |
| Depth of the bottom hydrophone | 112.7 m |
| Hydrophone spacing of the VLA | 2 m |
| Number of hydrophones in the VLA | 48 |
| Source depth | 69 m |
| Source range | 5.9–6.9 km |
| Surface target frequency band | 20–72 Hz |
| Underwater target frequency band | 170–220 Hz |

| Feature | Precision | Recall | F1 Score | Accuracy |
|---|---|---|---|---|
| Modules | 0.9942 | 0.9885 | 0.9913 | 0.9946 |
| Real and imaginary values | 0.9892 | 0.9786 | 0.9839 | 0.9892 |

| Feature | Precision | Recall | F1 Score | Accuracy |
|---|---|---|---|---|
| Modules | 0.9769 | 1 | 0.9883 | 0.9839 |
| Real and imaginary values | 0.9918 | 0.9916 | 0.9918 | 0.9892 |

| Feature | Precision | Recall | F1 Score | Accuracy |
|---|---|---|---|---|
| Modules | 0.9868 | 0.9973 | 0.9921 | 0.9892 |
| Real and imaginary values | 1 | 0.9947 | 0.9974 | 0.9964 |

| Feature | Precision | Recall | F1 Score | Accuracy |
|---|---|---|---|---|
| Modules | 0.9489 | 0.9920 | 0.9699 | 0.9588 |
| Real and imaginary values | 0.9947 | 0.9920 | 0.9933 | 0.9910 |
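Each F1 score in the metric tables is the harmonic mean of the corresponding precision and recall, F1 = 2PR/(P + R), which is why it is a convenient single number for the balance between precision and recall referred to in the conclusions. The check below recomputes F1 for a few rows from the reported precision and recall; since those inputs are already rounded to four decimals, last-digit differences in other rows may simply come from that rounding. Accuracy also depends on the number of true negatives, so it cannot be recovered from precision and recall alone. The function and variable names are illustrative.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; returns 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2.0 * precision * recall / (precision + recall)


# (precision, recall, reported F1) for selected rows of the metric tables
ROWS = [
    (0.9942, 0.9885, 0.9913),  # first table, Modules
    (0.9892, 0.9786, 0.9839),  # first table, Real and imaginary values
    (0.9769, 1.0, 0.9883),     # second table, Modules
    (0.9947, 0.9920, 0.9933),  # fourth table, Real and imaginary values
]

if __name__ == "__main__":
    for p, r, reported in ROWS:
        print(f"P={p:.4f} R={r:.4f} -> F1={f1_score(p, r):.4f} (reported {reported:.4f})")
```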

| Machine Learning Method | Feature | List of Hydrophones That Reached the Threshold | Number of Hydrophones That Reached the Threshold |
|---|---|---|---|
| kNN | Modules | 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 34, 36, 37, 38, 39 | 20 |
| | Real and imaginary values | 16, 17, 18, 19, 20, 21, 22, 23, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 47, 48 | 28 |
| RS-kNN | Modules | 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39 | 25 |
| | Real and imaginary values | 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 46, 47, 48 | 30 |
| GBDT | Modules | 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 44, 45 | 41 |
| | Real and imaginary values | 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44 | 15 |
| LightGBM | Modules | 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 32, 33, 34, 35, 36, 37, 38, 39, 44, 45 | 40 |
| | Real and imaginary values | 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44 | 15 |

| Machine Learning Method | Feature | List of Hydrophones That Reached the Threshold | Number of Hydrophones That Reached the Threshold |
|---|---|---|---|
| kNN | Modules | 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 19, 20, 32, 33, 43, 45, 46, 47, 48 | 21 |
| | Real and imaginary values | 4, 5, 6, 7, 8, 9, 10, 11, 19, 20, 32, 33, 41, 42, 43, 44, 46, 47 | 18 |
| RS-kNN | Modules | 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 19, 20, 21, 23, 24, 32, 33, 34, 39, 41, 42, 43, 44, 45, 46, 47, 48 | 31 |
| | Real and imaginary values | 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 31, 32, 33, 34, 35, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48 | 40 |
| GBDT | Modules | 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 19, 20, 21, 22, 23, 24, 25, 26, 27, 32, 33, 34, 35, 39, 40, 41, 42, 43, 45, 46, 47, 48 | 35 |
| | Real and imaginary values | 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 33, 34, 35, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48 | 42 |
| LightGBM | Modules | 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 19, 20, 21, 23, 24, 25, 27, 32, 33, 34, 35, 39, 41, 42, 43, 45, 46, 47, 48 | 31 |
| | Real and imaginary values | 1, 2, 3, 4, 5, 6, 7, 8, 10, 11, 12, 19, 20, 21, 22, 42, 43, 46, 47, 48 | 20 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yu, Q.; Zhu, M.; Zhang, W.; Shi, J.; Liu, Y. Surface and Underwater Acoustic Source Recognition Using Multi-Channel Joint Detection Method Based on Machine Learning. J. Mar. Sci. Eng. 2023, 11, 1587. https://doi.org/10.3390/jmse11081587
