Abstract

Traditional monitoring of plastic film residue in cotton fields relies on manual sampling, which is inefficient and of limited accuracy, so large-scale nondestructive monitoring is difficult to achieve. This study proposes a UAV-based method for predicting shallow plastic film residue pollution in cotton fields that combines the RDT-Net segmentation model with machine learning. The study focuses on the weight of residual plastic film in the shallow soil layer of cotton fields and on UAV-captured images of surface film, and establishes a technical pathway from UAV image segmentation to weight prediction. First, the UAV images of residual plastic film in cotton fields are processed with the RDT-Net semantic segmentation model, which combines the local feature extraction of ResNet50 with the global context modeling of the Transformer and uses the Dice-CE loss function for precise residue segmentation. A comparative analysis against multiple classic semantic segmentation models shows that RDT-Net performs best, with an mPA, F1 score, and mIoU of 95.88%, 88.33%, and 86.48%, respectively. Second, a correlation analysis was conducted between the surface residual film coverage rate and the shallow residual film weight across 300 sample sets. The results revealed a significant positive correlation, with R2 = 0.79635 and PCC = 0.89239. Last, multiple machine learning prediction models were constructed on the basis of plastic film coverage. The ridge regression model performed best, with a prediction R2 of 0.853 and an RMSE of 0.1009, so that accuracy increased in both the segmentation and prediction stages. Compared with traditional manual sampling, this method substantially reduces the monitoring time per cotton field, significantly decreases monitoring costs, and avoids disturbing the soil structure.
These findings address shortcomings in existing monitoring methods for assessing surface plastic film content, providing an effective technical solution for large-scale, high-precision, nondestructive monitoring of plastic film pollution on farmland surfaces and in the plow layer. It also offers data support for the precise management of plastic film pollution in cotton fields.

1. Introduction

As a core production resource in modern agriculture, agricultural plastic film plays a crucial role in agricultural production in arid and cool-temperate regions of China, thanks to its significant advantages in increasing soil temperature, conserving moisture, and suppressing weeds [1]. However, in Xinjiang, a major cotton-producing region in China, the widespread application of plastic mulch cultivation technology is accompanied by issues such as continuous mulching, low residual film recovery rates, and inadequate recovery technologies, leading to a gradual worsening trend of residual film pollution in cotton fields [2,3,4]. Currently, the residual film amount in Xinjiang’s cotton fields generally ranges from 42 to 540 kg/hm2, with an average of 200 kg/hm2, far exceeding the national limit of 75 kg/hm2. A large amount of residual film in the soil plow layer not only hinders the normal movement of water and nutrients and damages the soil structure, but also inhibits seed germination and crop root growth. Furthermore, if residual film is mixed with seed cotton, it will reduce cotton quality, which has posed a significant threat to the sustainable development of the cotton industry [5,6,7]. The control of residual film pollution requires comprehensive management from the source of the entire industrial chain [8]. Among various measures, in addition to developing efficient and practical residual film recycling equipment, achieving rapid and accurate monitoring and assessment of residual film pollution is not only a scientific basis for quality supervision of residual film recycling operations but also a core support for the precise classification of farmland residual film pollution levels, which is of great significance for constructing a full-chain governance system for residual film [9].

The assessment of residual film pollution in cotton fields is mainly divided into two core scenarios: surface residual film pollution assessment and plow layer residual film pollution assessment. Surface residual film pollution assessment focuses on the quantitative analysis of visible residual film on the cotton field surface, while plow layer residual film pollution assessment targets the monitoring of the distribution and content of buried residual film in the soil plow layer (usually 0–30 cm in depth). Together, they form the key dimensions for a comprehensive assessment of residual film pollution in cotton fields. There are significant differences in technical requirements between different assessment scenarios: surface assessment needs to balance rapidity and large-scale coverage capabilities, while plow layer assessment focuses more on the balance between depth and accuracy. Clarifying the core definitions and technical boundaries of these two types of assessments is a fundamental prerequisite for conducting research on residual film pollution monitoring technologies.

In the field of surface residual film pollution assessment, UAV imaging technology has been widely applied in areas such as farmland soil pollution [10], vegetation coverage [11], and pest and disease monitoring [12] due to its high precision, portability, rapidity, and multiscale information acquisition capabilities, providing technical support for surface residual film monitoring. Some scholars have explored the application of UAVs and deep learning technologies in farmland residual film identification and pollution monitoring: Yang et al. [9] constructed an intelligent monitoring system for farmland residual film pollution that integrates UAV autonomous path planning and rapid residual film image assessment, realizing the integration of UAV automatic sampling and rapid pollution monitoring; Liang et al. [13] conducted a study on UAV-based residual film identification during the seedling emergence stage of tobacco fields, and when the flight height was 50 m, the residual film identification accuracy reached 80.06%. Later, their team optimized the method using image S-component and pulsed neural network algorithms, increasing the residual film identification rate in tobacco fields during the harvest period to 87.49% [14]. However, this method is only applicable to farmlands that are untreated after autumn harvest and have continuous plastic film use, resulting in limitations in applicable scenarios.

Currently, manual sampling remains the main method for plow layer residual film pollution assessment: Du et al. [15] investigated residual film pollution in the 0–20 cm plow layer soil in typical regions of northern China using the plum blossom spot method and found that residual film was mainly concentrated at a depth of 10–15 cm; Wang et al. [16] conducted large-scale monitoring of the 0–30 cm plow layer soil in long-term mulched farmlands in eastern Gansu, eastern Qinghai, and northern Shaanxi, pointing out that the degree of residual film pollution in arid and semi-arid areas was significantly higher than that in semi-arid and semi-humid areas; He et al. [17] monitored residual film in long-term mulched cotton fields through manual stratified sampling and found that the content of residual film increased annually with the increase in mulching years, and the fragmentation degree of residual film increased layer by layer with the increase in tillage depth. Compared with the current situation investigation of plow layer residual film, there are fewer studies on predicting the degree of plow layer residual film pollution. Qiu et al. [18] proposed a prediction method for plow layer residual film in cotton fields by combining UAV imaging and deep learning, and found a strong correlation between the surface residual film area and the shallow residual film weight. They detected the surface residual film area through UAV aerial images and the Deeplabv3 model, and then constructed a linear regression model to fit the actual mass of plow layer residual film. However, the accuracy of the surface residual film segmentation model constructed by this method still needs further improvement.

With the rapid development of deep learning technology, Transformer models based on self-attention mechanisms have been widely applied in sequence analysis and semantic segmentation fields in recent years due to their excellent global feature modeling capabilities [19,20,21,22], and have shown good potential in agricultural scenarios: Guo et al. [23] addressed the problems of resource waste and environmental pollution caused by uniform pesticide application in traditional UAV-based rice field remote sensing image weed segmentation, and proposed a new segmentation model based on CNN-Transformer. Its performance in processing complex and variable weed target shapes is better than that of single CNN or Transformer models, with an mIoU of 72.8%, providing effective technical support for determining weed distribution for precise variable pesticide application in rice fields; Wang et al. [24] took the Guanzhong Plain of Shaanxi Province as the research area, selected ten-day scale VTCI, LAI, and FPAR as remote sensing parameters, and constructed a CNN-Transformer deep learning model that integrates the local feature extraction capability of CNN and the global information extraction capability of Transformer, realizing accurate estimation of winter wheat yield per unit area. The model’s estimation accuracy (R2, RMSE, and MAPE) was 0.70, 420.39 kg/hm2, and 7.65%, respectively, which was better than that of the single Transformer model. It also alleviated the problems of underestimation of high yield and overestimation of low yield, and identified the period from late March to early May as the critical growth period of winter wheat; Wu et al. [25] developed an intelligent multimodal diagnostic system for kidneys based on Transformer, which integrates multimodal information such as images and diagnostic reports for disease prediction, and finally achieved the optimal prediction effect.

Existing monitoring technologies for residual film pollution in cotton fields have obvious limitations: surface residual film assessment methods have limited applicable scenarios or insufficient segmentation accuracy; plow layer residual film assessment relies on manual sampling, resulting in low efficiency and high cost; and studies on predicting plow layer residual film pollution are scarce, making it difficult to meet the demand for full-dimensional, rapid, and accurate monitoring of residual film in cotton fields. As China’s core cotton-producing region, Xinjiang faces particularly prominent residual film pollution problems in cotton fields, and there is an urgent need to break through existing technical bottlenecks and construct an integrated technical solution that considers both surface and plow layer residual film monitoring. This study combines the local extraction capability of ResNet50 and the global correlation modeling capability of the Transformer model, with the surface residual film coverage rate as the main feature, to construct a shallow residual film weight regression prediction model, achieving rapid and accurate detection of the residual film content in the tillage layer of cotton fields. These results can compensate for the deficiency of existing monitoring methods, which can assess only the residual film on the surface, and provide an effective method for the assessment of residual film pollution on the surface and in the plow layer of farmland.

The global feature modeling capability of the Transformer model and the local feature extraction capability of CNN complement each other, providing a technical path for improving the accuracy of residual film identification and the reliability of prediction. Combining the advantages of these two types of models with the actual governance needs of residual film pollution in Xinjiang’s cotton fields is expected to solve the shortcomings of existing monitoring methods and provide technical support for the full-chain governance of residual film pollution in cotton fields.

2. Materials and Methods

2.1. Research Area

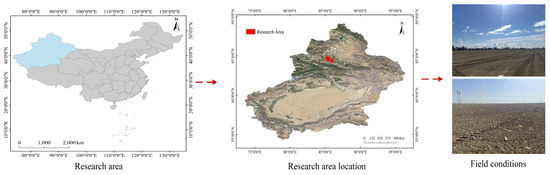

Xinjiang is located in northwestern China, deep in the heart of the Eurasian continent, and has a typical temperate continental climate. Cotton cultivation in this area has long involved mulching, and with the support of this technique, it has become a main cotton production area in China. However, the residual film pollution in cotton fields has become increasingly severe, limiting the sustainable development of the industry. The study area was in the 8th Division of Xinjiang Production and Construction Corps and Manas County, Changji Hui Autonomous Prefecture, Xinjiang Uygur Autonomous Region, at an average altitude of 450 m. This area has long served as a national control monitoring point for residual film and a monitoring point of the Corps. Cotton has been grown using plastic film mulching technology for a long time. After the cotton is harvested in autumn, a series of soil management operations, including mechanical crushing of cotton stalks to induce the return of straw to the field, recovery and treatment of residual plastic film in the field, and plowing of the land, need to be performed. However, these operational processes often fail to completely recover the plastic film, resulting in the formation of fine fragments. When land consolidation operations are performed before spring sowing, the degree of fragmentation of the residual film in the cotton fields in this area further intensifies. The residual film in the plow layer soil exhibits certain regularity, which is typical of residual film pollution in cotton fields in Xinjiang. An overview of the research area is shown in Figure 1.

Figure 1.

Research area.

2.2. Data Acquisition and Processing

2.2.1. Data Acquisition

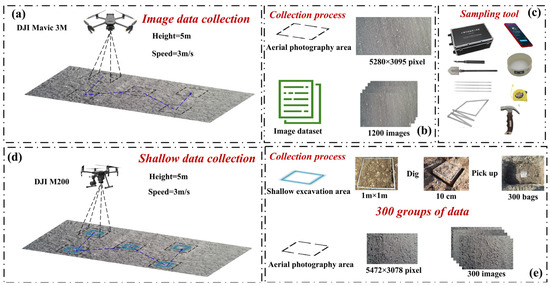

The data used in this study consisted of two components. The first is UAV image data of the cotton field surface, and the second is the weight data of the shallow residual film together with the corresponding UAV image data. The UAV images were collected from 2022 to 2024, when the cotton fields in the study area were in the presowing stage, with a DJI Mavic 3M UAV. The camera pointed vertically downward (90° to the ground), the flight altitude was 5 m, and the flight speed was 2 m/s. The image resolution was 5280 × 3956 pixels. A total of 1200 images were collected for the subsequent training and testing of the semantic segmentation model, as shown in Figure 2a,b. These 1200 images are independent, non-repeated images collected at different locations and times within the study area to ensure the representativeness of the dataset and the generalization ability of the model. The second component comprised the shallow residual film data from 300 sample points in cotton fields collected by the team in 2022 and the corresponding UAV image data. Residual film in the plow layer was collected from each cotton field using the five-point sampling method, with 10 sample points per cotton field, for a total of 300 sampling points, as shown in Figure 2d,e. Each shallow residual film sample was taken from the 1 m × 1 m square covered by the corresponding aerial image. The sampling tools included 1 m × 1 m folding rulers, shovels, and tape measures, as shown in Figure 2c. The sampling depth was 0–10 cm, and all the residual film within this depth range was picked out by hand and placed in labeled bags. The flight altitude for these images was the same as that for the first data component. These samples provided preliminary data for verifying the shallow residual film predictions. The data acquisition process is shown in Figure 2, and the image acquisition information is presented in Table 1.

Figure 2.

Data collection process: (a) Image data collection process of DJI Mavic 3M; (b) Construction of image datasets; (c) Shallow data sampling tool; (d) Shallow verification data collection of DJI M200; (e) Construction of shallow datasets.

Table 1.

Information related to image data collection.

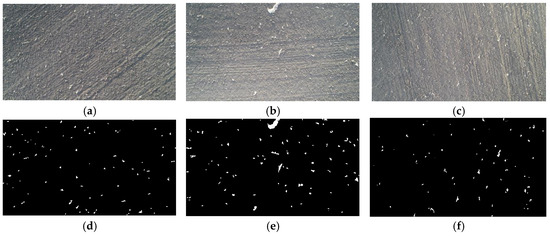

2.2.2. Data Processing

To ensure data integrity and avoid interference with model learning, the collected surface residual film images were cleaned: invalid samples that were blurry, overexposed, underexposed, or contained no residual film were removed, and all images were converted to PNG format. The residual film in each original image was then manually annotated in Photoshop CS5. The manual annotation results are shown in Figure 3. After screening, the images were preprocessed. First, the images were resized to a fixed size of 1200 × 600. Second, the pixel values were normalized to a common range to eliminate scale differences. This step not only accelerated convergence during model training but also matched the image characteristics to the input specifications of the pretrained model. Before training, a data augmentation strategy was applied: random rotation, random cropping to 1024 × 512, random adjustment of brightness and contrast, and the addition of Gaussian noise expanded the sample diversity and reduced the risk of overfitting. Last, the dataset was randomly divided into a training set, a validation set, and a test set at a ratio of 8:1:1. The test set remained independent throughout the entire training process and did not participate in any training stage, ensuring the objectivity and accuracy of model evaluation.

Figure 3.

Schematic of data annotation: (a) Original image 1; (b) Original image 2; (c) Original image 3; (d) Manual label 1; (e) Manual label 2; (f) Manual label 3.
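The preprocessing steps described above (normalization, paired random cropping, and the 8:1:1 random split) can be sketched as follows. This is a minimal illustration rather than the authors' pipeline: the images are synthetic, scaling to [0, 1] is an assumed form of normalization, the 1200 × 600 size is read as width × height, and the sample count of 1000 is hypothetical.

```python
import numpy as np

def normalize(image):
    """Scale 8-bit pixel values to the [0, 1] range (assumed normalization)."""
    return image.astype(np.float32) / 255.0

def random_crop(image, mask, crop_h, crop_w, rng):
    """Cut the same random window out of an image and its label mask."""
    h, w = mask.shape
    top = int(rng.integers(0, h - crop_h + 1))
    left = int(rng.integers(0, w - crop_w + 1))
    window = (slice(top, top + crop_h), slice(left, left + crop_w))
    return image[window], mask[window]

def split_indices(n_samples, rng, ratios=(0.8, 0.1, 0.1)):
    """Randomly partition sample indices into train/validation/test sets."""
    order = rng.permutation(n_samples)
    n_train = int(round(ratios[0] * n_samples))
    n_val = int(round(ratios[1] * n_samples))
    return order[:n_train], order[n_train:n_train + n_val], order[n_train + n_val:]

rng = np.random.default_rng(0)
# Synthetic stand-ins for one resized image (600 rows x 1200 columns) and its mask.
image = rng.integers(0, 256, size=(600, 1200, 3), dtype=np.uint8)
mask = rng.integers(0, 2, size=(600, 1200), dtype=np.uint8)

crop_img, crop_mask = random_crop(normalize(image), mask, 512, 1024, rng)
train_idx, val_idx, test_idx = split_indices(1000, rng)  # 1000 is a hypothetical count
print(crop_img.shape, crop_mask.shape, len(train_idx), len(val_idx), len(test_idx))
```

Cropping the image and the mask with the same window keeps every pixel aligned with its label, which is why the two arrays are handled in a single function.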

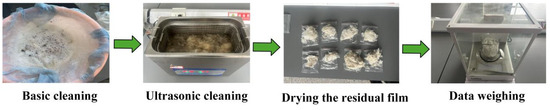

The collected shallow residual film samples were rinsed several times to thoroughly remove impurities such as soil and debris adhering to the surface. After this basic cleaning, an ultrasonic cleaning process was used to remove the fine dirt remaining in the pores and on the surface of the residual film to ensure that the samples were clean. The cleaned residual film was then placed in a well-ventilated, dry area for natural air drying, avoiding direct sunlight and high-temperature baking to prevent physical changes in the film material. After air drying, the residual film was weighed on a high-precision balance and labeled strictly according to the sample numbering rules. The specific process is shown in Figure 4.

Figure 4.

Treatment process of shallow residual film.

2.2.3. Data Analysis

After data processing, the shallow residual film data of 300 samples and the corresponding UAV surface image data were obtained. First, the residual film coverage rate C was calculated for each UAV surface image. Let the size of the aerial image be M × N, and let p(x,y) = 1 for a residual film pixel and p(x,y) = 0 otherwise; then, the residual film coverage rate C is the ratio of the total number of residual film pixels in the image to the total number of image pixels M × N, as shown in Equation (1).

$$C = \frac{1}{M \times N}\sum_{x=1}^{M}\sum_{y=1}^{N} p(x,y) \tag{1}$$
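As a small illustration of Equation (1), the coverage rate can be computed directly from a binary segmentation mask. The function name `coverage_rate` and the toy mask are hypothetical; the sketch assumes that film pixels are encoded as 1 and background pixels as 0.

```python
import numpy as np

def coverage_rate(mask):
    """Fraction of pixels labeled as residual film (value 1) in a binary mask."""
    mask = np.asarray(mask)
    return float(np.count_nonzero(mask == 1)) / mask.size

# Toy 4 x 5 mask with a 2 x 3 block of film pixels: 6 of 20 pixels.
mask = np.zeros((4, 5), dtype=np.uint8)
mask[1:3, 1:4] = 1
print(coverage_rate(mask))  # 0.3
```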

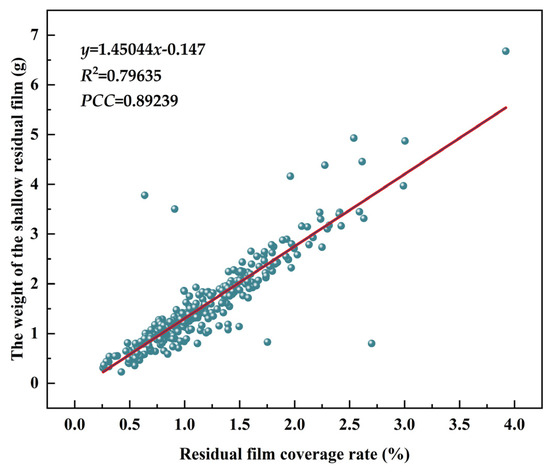

After the residual film coverage rate of 300 samples was obtained, a linear regression fitting analysis was conducted between it and the preprocessed shallow residual film sample data. The results indicated that the R2 of the fitting model was 0.79635 and that the PCC was 0.89239. The corresponding mathematical expression was y = 1.45044x − 0.147 (where x represents the surface residual film coverage rate and y represents the shallow residual film weight). Without eliminating abnormal samples, the surface residual film coverage rate and the shallow residual film weight still displayed a relatively high positive correlation, indicating a stable linear correlation between the two. The linear fitting results are shown in Figure 5.

Figure 5.

Fitting results for the residual film coverage rate and shallow residual film weight.
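The fitting analysis can be illustrated with a short sketch on synthetic data. The data below are generated around the reported line with an assumed noise level and do not reproduce the measured samples. The sketch also shows that, for a simple linear regression, R2 equals the square of the PCC, which agrees with the reported values to rounding (0.89239² ≈ 0.7964).

```python
import numpy as np

rng = np.random.default_rng(42)
# Synthetic stand-ins: coverage rate x and shallow film weight y for 300 samples.
coverage = rng.uniform(0.1, 0.6, size=300)
weight = 1.45 * coverage - 0.147 + rng.normal(0.0, 0.05, size=300)

# Least-squares line y = slope * x + intercept.
slope, intercept = np.polyfit(coverage, weight, deg=1)
fitted = slope * coverage + intercept

# Coefficient of determination and Pearson correlation coefficient.
r2 = 1.0 - np.sum((weight - fitted) ** 2) / np.sum((weight - weight.mean()) ** 2)
pcc = np.corrcoef(coverage, weight)[0, 1]

print(f"slope={slope:.3f}, intercept={intercept:.3f}, R2={r2:.3f}, PCC={pcc:.3f}")
```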

2.3. Construction of a Semantic Segmentation Model for Residual Film in Cotton Fields

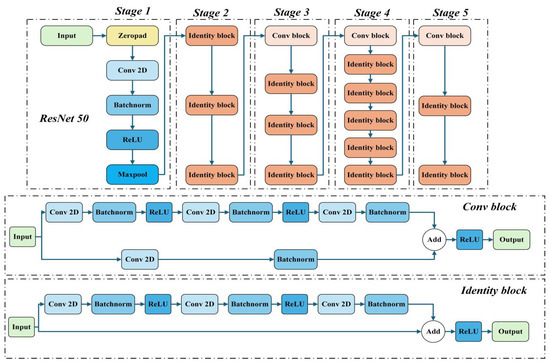

2.3.1. ResNet50 Backbone Network

ResNet [26] has been widely applied in various deep learning network architectures because of its outstanding feature extraction capabilities and its innovative residual structure. This deep residual network can effectively mitigate the vanishing or exploding gradient problem caused by the deepening of network layers, overcoming the main challenges of deep networks, such as decreasing learning efficiency and the difficulty of further increasing model accuracy. The specific network structure is shown in Figure 6. The core components of ResNet50 are the Conv block and the Identity block, and its network architecture is generally divided into five functional stages: one initial convolutional layer and four subsequent residual layers. During feature extraction, the residual blocks reduce the size of the feature map through the stride of the convolutional layers, compressing the spatial dimension, while gradually increasing the number of channels to enhance the feature expression ability, ultimately achieving hierarchical, progressive extraction from low-order texture features to high-order semantic features.

Figure 6.

ResNet50 Backbone Network.

In this study, ResNet50 is selected as the CNN backbone network mainly because its residual structure addresses the problem of vanishing gradients in deep networks and improves the feature extraction ability in small-sample scenarios. ResNet50 is composed mainly of convolutional blocks and identity blocks. The convolutional blocks change the feature dimensions through strided downsampling, whereas the identity blocks keep the dimensions unchanged and are stacked to deepen the network.
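To make the progressive downsampling concrete, the sketch below traces the feature-map size through the five stages for the 1024 × 512 model input. It assumes the cumulative strides and channel counts of a standard ResNet50 and reads 1024 × 512 as width × height; the exact layers tapped for skip connections in RDT-Net are not stated in the text, so the stage names here are illustrative.

```python
# (stage name, cumulative stride, output channels) for a standard ResNet50.
RESNET50_STAGES = [
    ("stage1", 2, 64),      # initial 7x7 convolution
    ("stage2", 4, 256),     # after max pooling and the first residual layer
    ("stage3", 8, 512),
    ("stage4", 16, 1024),
    ("stage5", 32, 2048),
]

def stage_shapes(height, width):
    """Return (name, height, width, channels) of each stage's output."""
    return [(name, height // s, width // s, c) for name, s, c in RESNET50_STAGES]

shapes = stage_shapes(512, 1024)
for name, h, w, c in shapes:
    print(f"{name}: {h} x {w} x {c}")

# If every spatial position of the stage-5 map becomes one token,
# the Transformer would see this many tokens.
tokens = shapes[-1][1] * shapes[-1][2]
print("tokens:", tokens)
```

Under these assumptions the deepest map is 16 × 32 × 2048, so self-attention over it involves only 512 positions, which keeps the cost of global modeling modest.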

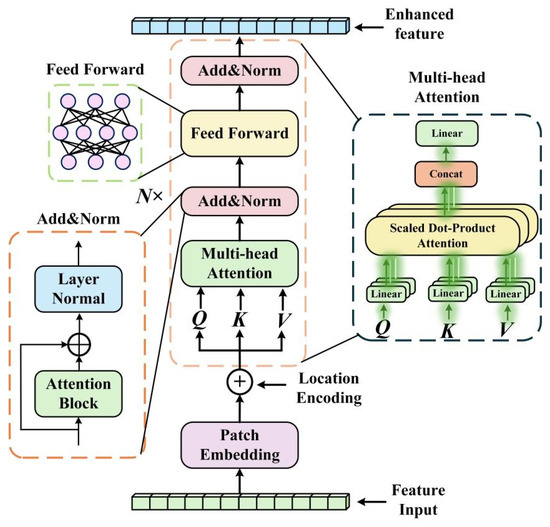

2.3.2. Transformer Enhancement Module

The Transformer is a deep learning model based on a self-attention mechanism that processes data efficiently through parallel computing [27]. To overcome the inherent limitations of traditional CNNs in modeling long-distance dependencies, this paper introduces a Transformer enhancement module, which is placed at the end of the encoder. The main objective of the module is to complement the CNN's reliance on local features with the global context information of the image, thereby improving the semantic understanding and feature correlation capabilities of the model in complex scenes such as cotton field residual film segmentation. The main structure of the Transformer enhancement module is shown in Figure 7 and consists of a patch embedding layer, position encoding, and multilayer Transformer encoders. Patch embedding converts the two-dimensional spatial feature map extracted by the CNN into a serialized representation suitable for processing by the Transformer, reduces the computational complexity through dimension reduction, and maintains spatial relationships by injecting position information. It thus connects convolutional local feature extraction with Transformer global context modeling and combines the complementary advantages of the two architectures. Position encoding injects absolute or relative position information into the Transformer to compensate for the permutation invariance of its self-attention mechanism, enabling the model to understand the spatial order and relative positions of elements in the sequence and to correctly handle data with temporal or spatial structure. The encoder includes a multihead attention mechanism, residual connections, layer normalization, and a feedforward network.
The encoder further improves the nonlinear expression ability of the features through a feedforward neural network. Its residual connections and layer normalization increase both the stability of model training and the efficiency of gradient propagation. By stacking multiple encoder layers, the model extracts features layer by layer and improves its ability to model complex dependencies. Ultimately, the low-dimensional features output by the encoder are mapped to scalar values through a linear layer. The multihead attention mechanism is composed of multiple self-attention mechanisms. In each of them, the input features are projected to a query matrix Q, a key matrix K, and a value matrix V, and the self-attention output is a weighted sum of V with weights determined by the correlation between Q and K [28], as shown in Equation (2).

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{T}}{\sqrt{d_k}}\right)V \tag{2}$$

where softmax is the normalization function of the self-attention weights, $d_k$ is the dimension of the key vectors, whose square root serves as the scaling factor that prevents the dot products from becoming too large, and Attention is the self-attention function.

Figure 7.

Network structure of the Transformer enhancement module.
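The self-attention computation of Equation (2) can be sketched in a few lines of NumPy. This is a single-head illustration with random inputs; the token count and feature dimension are arbitrary, and the learned projections that produce Q, K, and V in the actual module are omitted.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    shifted = x - np.max(x, axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=axis, keepdims=True)

def scaled_dot_product_attention(q, k, v):
    """Return the attention output and the attention weight matrix."""
    d_k = q.shape[-1]
    scores = q @ k.T / np.sqrt(d_k)     # similarity of every query with every key
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ v, weights

rng = np.random.default_rng(0)
n_tokens, d_model = 8, 4                # arbitrary illustrative sizes
q = rng.normal(size=(n_tokens, d_model))
k = rng.normal(size=(n_tokens, d_model))
v = rng.normal(size=(n_tokens, d_model))

output, weights = scaled_dot_product_attention(q, k, v)
print(output.shape, weights.sum(axis=-1))
```

Because every token attends to every other token, each output row mixes information from the whole feature map, which is the global context that the CNN backbone alone does not provide.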

2.3.3. RDT-Net Segmentation Model

To precisely segment residual film in UAV images, this study constructs a hybrid network based on a CNN and a Transformer. The network extracts multiscale local features of the residual film with a ResNet50 backbone, models global feature correlations with a Transformer, and restores resolution with a multiscale fusion decoder. Each stage of ResNet50 outputs features at a different resolution through downsampling, and skip connections fuse these features with the upsampled decoder features, preserving the multiscale detail of the image. A Transformer enhancement module is introduced between ResNet50 and the decoder to capture long-distance dependencies. The features output by Stage 5 are input into the Transformer enhancement module, in which a total of 6 Transformer layers are stacked to increase the segmentation accuracy of the model. The enhanced deep features and the shallow CNN features are then combined through progressive upsampling and feature fusion to restore the image resolution, and the semantic segmentation result for the surface residual film in the UAV image is output.

2.3.4. Method for Predicting the Weight of Shallow Residual Film

The surface residual film coverage rate derived from the UAV images and the shallow residual film weight of the 300 sample groups show a relatively good linear fit. On this basis, this study increases the segmentation accuracy of surface residual film through the RDT-Net hybrid network and then uses machine learning to construct a prediction model for the shallow residual film content, forming a coherent process from precise segmentation to effective prediction. The 300 sets of sample data were randomly divided into a training set and a test set at a ratio of 8:2. Four machine learning models, namely, multiple linear regression (MLR), random forest (RF), ridge regression (RR), and support vector regression (SVR), were selected for comparison. The input feature is the surface residual film coverage rate C obtained from the UAV images through semantic segmentation with the RDT-Net model, and the target label is the measured shallow residual film weight at the corresponding sample point. First, a regression model is constructed on the basis of the training set and fitted to obtain the regression line. Second, the surface residual film coverage rates of all 300 sample groups are input into the model to generate the predicted shallow residual film weights. Last, the predicted and measured values are fitted via regression to determine the overall prediction performance of the model.
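The prediction workflow can be sketched for the ridge regression case with a closed-form, single-feature implementation. This is an illustration under stated assumptions: the data are synthetic, the regularization strength `alpha` is a hypothetical value (the text does not report the one used), and the intercept is left unpenalized.

```python
import numpy as np

def fit_ridge(x, y, alpha):
    """Closed-form ridge regression for one feature with an unpenalized intercept."""
    x_mean, y_mean = x.mean(), y.mean()
    xc, yc = x - x_mean, y - y_mean
    slope = np.dot(xc, yc) / (np.dot(xc, xc) + alpha)  # alpha shrinks the slope
    intercept = y_mean - slope * x_mean
    return slope, intercept

rng = np.random.default_rng(1)
# Synthetic stand-ins for coverage rate C and measured shallow film weight.
coverage = rng.uniform(0.1, 0.6, size=300)
weight = 1.45 * coverage - 0.147 + rng.normal(0.0, 0.05, size=300)

# Random 8:2 split into training and test samples.
order = rng.permutation(300)
train, test = order[:240], order[240:]

slope, intercept = fit_ridge(coverage[train], weight[train], alpha=0.1)
pred = slope * coverage[test] + intercept
rmse = float(np.sqrt(np.mean((weight[test] - pred) ** 2)))
print(f"slope={slope:.3f}, intercept={intercept:.3f}, test RMSE={rmse:.4f}")
```

With `alpha` set to 0 the function reduces to ordinary least squares, so the penalty can be read as a controlled shrinkage of the fitted slope.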

2.4. Evaluation Indicators and Test Environment

2.4.1. Evaluation Indicators

To test and evaluate the performance of the model, this study uses indicators that are commonly used in semantic segmentation experiments and derived from the confusion matrix: pixel accuracy (Pa), mean pixel accuracy (mPA), F1 score, and mean intersection over union (mIoU). The F1 score is jointly determined by the recall and precision. The calculation formulas are given in Equations (3)–(8).

$$\mathrm{Pa} = \frac{TP + TN}{TP + TN + FP + FN} \tag{3}$$

$$\mathrm{mPA} = \frac{1}{2}\left(\frac{TP}{TP + FN} + \frac{TN}{TN + FP}\right) \tag{4}$$

$$\mathrm{Precision} = \frac{TP}{TP + FP} \tag{5}$$

$$\mathrm{Recall} = \frac{TP}{TP + FN} \tag{6}$$

$$F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \tag{7}$$

$$\mathrm{mIoU} = \frac{1}{2}\left(\frac{TP}{TP + FP + FN} + \frac{TN}{TN + FN + FP}\right) \tag{8}$$

where TP is the number of pixels whose true label is residual film and that the model correctly predicts as residual film; FP is the number of pixels whose true label is background but that the model incorrectly predicts as residual film; FN is the number of pixels whose true label is residual film but that the model incorrectly predicts as background; and TN is the number of pixels whose true label is background and that the model correctly predicts as background.
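The segmentation indicators can be computed from the four confusion-matrix counts as in the sketch below. It assumes a two-class problem (residual film and background), so mPA and mIoU are averages over those two classes; the function name and the toy labels are hypothetical, and the sketch does not guard against empty classes.

```python
import numpy as np

def segmentation_metrics(y_true, y_pred):
    """Pa, mPA, precision, recall, F1, and mIoU for binary masks (1 = film)."""
    y_true = np.asarray(y_true).astype(bool)
    y_pred = np.asarray(y_pred).astype(bool)
    tp = np.sum(y_true & y_pred)
    fp = np.sum(~y_true & y_pred)
    fn = np.sum(y_true & ~y_pred)
    tn = np.sum(~y_true & ~y_pred)

    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "Pa": (tp + tn) / (tp + tn + fp + fn),
        "mPA": 0.5 * (tp / (tp + fn) + tn / (tn + fp)),
        "precision": precision,
        "recall": recall,
        "F1": 2 * precision * recall / (precision + recall),
        "mIoU": 0.5 * (tp / (tp + fp + fn) + tn / (tn + fn + fp)),
    }

# Toy example with 8 pixels: TP = 2, FN = 1, FP = 1, TN = 4.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0]
metrics = segmentation_metrics(y_true, y_pred)
print({name: round(float(value), 4) for name, value in metrics.items()})
```

In this toy case Pa is 0.75 while mIoU is about 0.58, which shows why pixel accuracy alone can look optimistic when background pixels dominate.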

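In this study, R2, RMSE, and PCC were used to evaluate how well the model predicts the shallow residual film weight from the surface residual film coverage rate obtained from the UAV images. The calculation formulas are shown in Equations (9)–(11).

$$R^2 = 1 - \frac{\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2} \tag{9}$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2} \tag{10}$$

$$\mathrm{PCC} = \frac{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)\left(\hat{y}_i - \bar{\hat{y}}\right)}{\sqrt{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2}\,\sqrt{\sum_{i=1}^{n}\left(\hat{y}_i - \bar{\hat{y}}\right)^2}} \tag{11}$$

where $y_i$ represents the true value, $\bar{y}$ denotes the mean of the true values, $\hat{y}_i$ indicates the predicted value, $\bar{\hat{y}}$ represents the mean of the predicted values, and n denotes the sample size.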
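The three regression indicators can be written as short functions. The sketch below uses their standard definitions and a four-point toy example; the function names and the numbers are illustrative and are not taken from the study.

```python
import numpy as np

def r2_score(y, y_hat):
    """Coefficient of determination: 1 - residual SS / total SS."""
    y, y_hat = np.asarray(y, float), np.asarray(y_hat, float)
    return 1.0 - np.sum((y - y_hat) ** 2) / np.sum((y - y.mean()) ** 2)

def rmse(y, y_hat):
    """Root mean square error between true and predicted values."""
    y, y_hat = np.asarray(y, float), np.asarray(y_hat, float)
    return np.sqrt(np.mean((y - y_hat) ** 2))

def pcc(y, y_hat):
    """Pearson correlation coefficient between true and predicted values."""
    y, y_hat = np.asarray(y, float), np.asarray(y_hat, float)
    yc, pc = y - y.mean(), y_hat - y_hat.mean()
    return np.sum(yc * pc) / np.sqrt(np.sum(yc ** 2) * np.sum(pc ** 2))

y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.1, 1.9, 3.2, 3.8]
print(r2_score(y_true, y_pred), rmse(y_true, y_pred), pcc(y_true, y_pred))
```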

2.4.2. Test Environment

Network training in this study was performed with the TensorFlow 2.5.0 deep learning framework in a Python 3.8 environment, with the CUDA 11.2 platform used for GPU acceleration. Training was completed on a GPU workstation equipped with an Intel(R) Xeon(R) W-2223 CPU @ 3.6 GHz and an NVIDIA GeForce RTX 3090 graphics card with 24 GB of video memory. The input image size of the model is 1024 × 512, the number of training epochs is 100, the batch size is 6 given the memory capacity, the initial learning rate is 0.0001, and the Adam optimizer is used for training optimization to reduce the risk of overfitting. The specific training parameters are shown in Table 2.

Table 2.

Training parameter settings.

2.5. Dice-CE (Cross-Entropy) Loss Function

To address the severe imbalance between residual film pixels and background pixels in the UAV image data, the Dice loss and the CE loss are combined to increase the stability of model training and the prediction accuracy and to improve the ability of the model to detect small targets. The Dice coefficient [29] is typically used to measure the similarity between two samples, here the predicted and labeled pixels, and is calculated as follows.

$$\mathrm{Dice} = \frac{2\,|X \cap Y|}{|X| + |Y|}$$

where X and Y represent the true label pixels of the category and the model prediction pixels, respectively. The Dice loss is then 1 − Dice.

Dice loss can effectively mitigate the effect of the imbalanced number of pixels between classes. The image dataset in this study is characterized by small segmentation targets and imbalanced categories. Small targets are easily overlooked by the model, and training with a single loss function is prone to saturation. To improve the ability of the model to focus on small targets and to reduce loss saturation, cross-entropy loss [30] is introduced as a component of the total loss. The corresponding formula is as follows:

$$L_{CE} = -\frac{1}{N}\sum_{i=1}^{N}\sum_{c=1}^{M} y_{ic}\,\log\left(p_{ic}\right)$$

where $N$ represents the number of samples, $M$ represents the number of categories, $y_{ic}$ is an indicator that equals 1 when sample $i$ truly belongs to category $c$ and 0 otherwise, and $p_{ic}$ represents the probability predicted by the classifier that sample $i$ belongs to category $c$.
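As an illustration of how the two terms can be combined, the following is a minimal sketch in plain Python for a binary film/background mask. The equal weighting of the two terms, the smoothing constants `eps`, and all function names are assumptions made for this example; the weighting used in the study is not stated here, and the actual TensorFlow implementation may differ.

```python
import math


def dice_loss(y_true, y_pred, eps=1e-6):
    """Soft Dice loss, 1 - 2|X∩Y| / (|X| + |Y|), on flat lists of pixels.

    y_true holds 0/1 labels (1 = film); y_pred holds predicted film probabilities.
    eps (an assumption) avoids division by zero when both masks are empty.
    """
    intersection = sum(t * p for t, p in zip(y_true, y_pred))
    total = sum(y_true) + sum(y_pred)
    return 1.0 - (2.0 * intersection + eps) / (total + eps)


def cross_entropy_loss(y_true, y_pred, eps=1e-7):
    """Mean two-class cross-entropy; probabilities are clipped to avoid log(0)."""
    loss = 0.0
    for t, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)
        loss -= t * math.log(p) + (1 - t) * math.log(1.0 - p)
    return loss / len(y_true)


def dice_ce_loss(y_true, y_pred, dice_weight=1.0, ce_weight=1.0):
    """Weighted sum of the two terms; equal weights are assumed here."""
    return (dice_weight * dice_loss(y_true, y_pred)
            + ce_weight * cross_entropy_loss(y_true, y_pred))


# Imbalanced toy mask: one film pixel among eight.
labels = [0, 0, 0, 1, 0, 0, 0, 0]
good = [0.05, 0.05, 0.05, 0.90, 0.05, 0.05, 0.05, 0.05]
missed = [0.05, 0.05, 0.05, 0.10, 0.05, 0.05, 0.05, 0.05]
print(dice_ce_loss(labels, good), dice_ce_loss(labels, missed))
```

In this toy case, the Dice term reacts strongly when the single film pixel is missed, whereas the averaged CE term is diluted by the many correctly classified background pixels, which is the motivation for combining the two.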

3. Results and Analysis

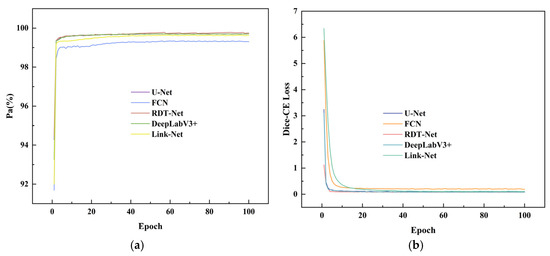

3.1. Model Training Comparison Test

To validate the segmentation performance of the RDT-Net model, this study selects the mainstream and classic semantic segmentation models FCN, Link-Net, DeepLabV3+, and U-Net for comparison. To ensure a fair comparison, key training parameters such as the number of training epochs, the initial learning rate, and the batch size were set identically for all the models, eliminating the influence of parameter differences on the performance evaluation. The learning curves of each model on the validation set of UAV residual film images are shown in Figure 8, which presents the pixel accuracy (PA) curves and the Dice-CE loss curves. The accuracy curve indicates that the RDT-Net model reaches a relatively high accuracy in the early stage of training and that, as the number of epochs increases, the accuracy converges rapidly and stabilizes. Throughout training, the accuracy fluctuates only slightly and remains within a consistently high range, indicating stable training and rapid learning. The Dice-CE loss curve shows that the loss of the RDT-Net model decreases rapidly in the early stage of training and then stabilizes and converges as training progresses, indicating that the model learns the data features efficiently and optimizes the loss effectively. Compared with the curves of FCN, Link-Net, DeepLabV3+, and U-Net, the learning curves of the RDT-Net model are the best throughout training, and their fluctuation amplitude is noticeably smaller, further supporting the stability and convergence efficiency of the model during feature learning and optimization.

Figure 8.

Validation set learning curve: (a) Validation set accuracy learning curve; (b) Validation set loss learning curve.

To evaluate the overall performance of the RDT-Net model objectively, the highest accuracy, the lowest loss value, and the model weight size on the validation set were compared. The values for the RDT-Net model and each comparison model are presented in Table 3. The RDT-Net model proposed in this study performs well on the validation set, with a best recognition accuracy of 99.76% and a lowest loss value of 0.0772. After training, the model weight file is approximately 315 MB. Overall, the model maintains high-precision prediction while keeping the weight size under control, so that residual film pixels can be recognized and segmented accurately within a limited time, balancing performance and practical deployment efficiency.

Table 3.

Comparison of the validation set models.

3.2. Model Test Comparison Experiment

First, to verify the effectiveness of Dice-CE loss, a control experiment was performed during the training of the RDT-Net model. CE loss, Dice loss, and Dice-CE loss were each used as the optimization objective of the model. With all other training parameters held constant, the models trained with the three loss functions were compared on the test set to identify the advantages of Dice-CE loss.

According to the data in Table 4, the model trained with Dice-CE loss achieves a clear improvement in small-target segmentation. Compared with the model trained with only CE loss, the mPA, F1 score, and mIoU increase by 1.37, 1.14, and 1.67 percentage points, respectively. Compared with the model trained with only Dice loss, the three indicators increase by 3.15, 3.69, and 4.76 percentage points, respectively, confirming the advantage of Dice-CE loss in increasing the segmentation accuracy of small targets.

Table 4.

Effect of different loss functions on model performance on the basis of the test set.

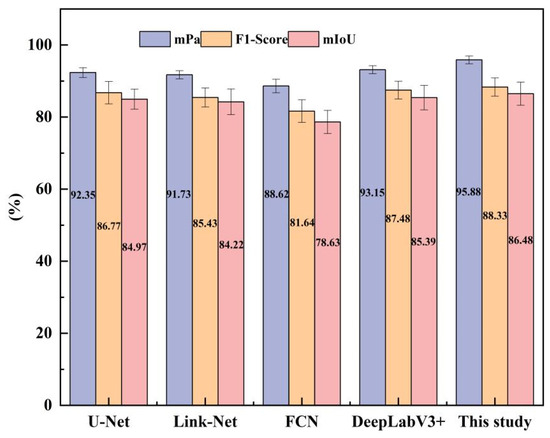

To systematically evaluate the performance and reliability of the RDT-Net model in practical semantic segmentation scenarios, a multimodel comparison experiment was designed. To ensure that the results are comparable, all the models were evaluated on the same test set, and the calculation method of the evaluation indicators and the inference hardware environment were kept identical. The comparison results are shown in Figure 9. The RDT-Net model achieved mPA, F1 score, and mIoU values of 95.88%, 88.33%, and 86.48%, respectively, on the test set. Compared with those of the U-Net model, the mPA, F1 score, and mIoU increase by 3.53, 1.56, and 1.51 percentage points, respectively, and compared with those of the Link-Net model, they increase by 4.15, 2.90, and 2.26 percentage points, respectively. Compared with those of the FCN model, the mPA, F1 score, and mIoU increase by 7.26, 6.69, and 7.85 percentage points, respectively, and compared with those of the DeepLabV3+ model, they increase by 2.73, 0.85, and 1.09 percentage points, respectively. The FCN model yields the poorest residual film segmentation, with mPA, F1 score, and mIoU values of 88.62%, 81.64%, and 78.63%, respectively. The DeepLabV3+ and U-Net models perform relatively well, but further improvement is still needed for segmenting small residual film targets in UAV images.
A comparison of the test set indicators of the proposed model with those of the four comparison models, namely, FCN, U-Net, DeepLabV3+, and Link-Net, shows that RDT-Net achieves the best value for every core evaluation indicator, which supports its suitability for this semantic segmentation task.
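The three indicators can be computed from a pixel-level confusion matrix. The sketch below assumes the common definitions (mPA as the mean per-class pixel accuracy, mIoU as the mean per-class intersection over union, and F1 as the harmonic mean of precision and recall for the film class); the function name and the toy counts are hypothetical and are not results from this study.

```python
def segmentation_metrics(tp, fp, fn, tn):
    """Return (mPA, F1, mIoU) for a two-class task from pixel counts.

    tp/fp/fn/tn count pixels with film treated as the positive class.
    """
    # Per-class pixel accuracy: correctly labeled pixels / true pixels of that class.
    pa_film = tp / (tp + fn)
    pa_background = tn / (tn + fp)
    mpa = (pa_film + pa_background) / 2

    # Per-class IoU: intersection / union of the predicted and true regions.
    iou_film = tp / (tp + fp + fn)
    iou_background = tn / (tn + fp + fn)
    miou = (iou_film + iou_background) / 2

    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return mpa, f1, miou


# Toy counts (not data from the study): 1000 pixels, 100 of them film.
mpa, f1, miou = segmentation_metrics(tp=80, fp=10, fn=20, tn=890)
print(f"mPA={mpa:.4f}  F1={f1:.4f}  mIoU={miou:.4f}")
```

Because film pixels are a small minority, the background class dominates plain pixel accuracy, which is why class-averaged indicators such as mPA and mIoU are more informative here.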

Figure 9.

Results of different model test sets.

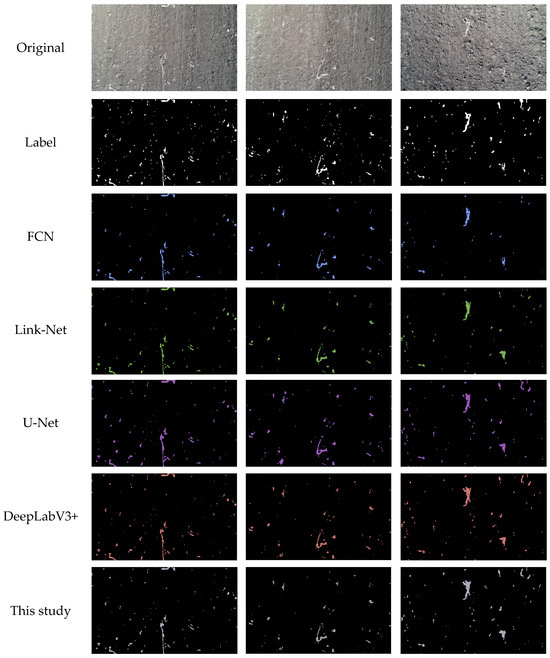

To illustrate the differences in performance among the models in the semantic segmentation of surface residual film in UAV images, the proposed model was visually compared with the four other segmentation models. The segmentation results of each model on the UAV test samples are shown in Figure 10. A comparison of the original images, ground-truth annotations, and predictions of each model reveals clear differences in the accuracy and completeness of the segmented residual film areas. The FCN and Link-Net models show obvious defects: some residual film areas are missed, and several false detections also occur. In contrast, U-Net, DeepLabV3+, and the proposed RDT-Net achieve better segmentation and identify the contours and distributions of most residual film regions more completely. However, the edge segmentation accuracy of U-Net and DeepLabV3+ for extremely small residual films is still insufficient. Specifically, the transition area between the edge of an extremely small residual film and the background is slightly blurred, and these two models fail to achieve precise pixel-level division.

Figure 10.

Effects of different model segmentations.

The above comparison shows that the proposed RDT-Net model achieves the best overall performance in segmenting surface residual film in UAV images. Compared with FCN and Link-Net, which show obvious missed and false detections, and with U-Net and DeepLabV3+, which are deficient at the edges of extremely small residual films, the proposed model identifies residual film areas more accurately, with fewer misjudgments and missed detections, and delineates residual film contours more completely. Even against complex backgrounds, its segmentation of small and medium-sized residual films is more robust, confirming its advantages in the semantic segmentation of surface residual film.

3.3. Regression Prediction of Shallow Residual Film Weights

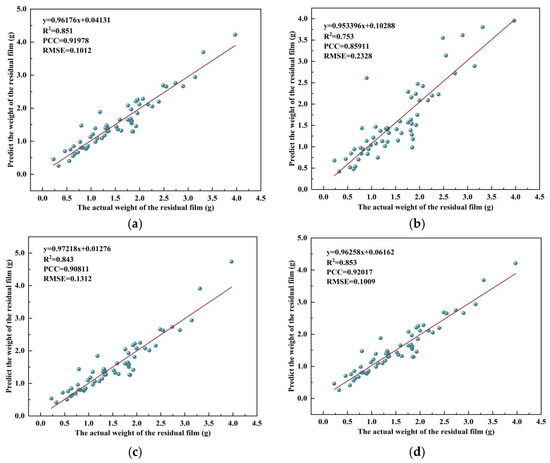

The UAV images corresponding to the 300 groups of shallow residual film samples were input one by one into the trained RDT-Net model, and the surface residual film coverage rate of each group was obtained through model inference. With the UAV-derived residual film coverage rate as the input feature and the measured shallow residual film weight as the label, machine learning regression models were constructed and trained to predict the residual film weight from the coverage rate. The trained models were then used to predict the residual film weights of the 60 groups of data in the test set, and the predictions were compared with the corresponding measured weights through regression fitting to verify the prediction performance. The regression fitting results of the different machine learning models on the test set are shown in Figure 11.
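The coverage rate used as the regression input is, in essence, the fraction of image pixels labeled as film. The following is a minimal sketch, assuming the segmentation output is a binary mask in which 1 marks a film pixel; the function name `coverage_rate` and the toy mask are hypothetical.

```python
def coverage_rate(mask):
    """Fraction of pixels labeled as film (1) in a 2-D binary mask."""
    film_pixels = sum(sum(row) for row in mask)
    total_pixels = sum(len(row) for row in mask)
    if total_pixels == 0:
        raise ValueError("mask is empty")
    return film_pixels / total_pixels


# Toy 4 x 6 mask with 3 film pixels.
mask = [
    [0, 0, 1, 0, 0, 0],
    [0, 1, 1, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
]
print(coverage_rate(mask))  # 3 / 24 = 0.125
```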

Figure 11.

Prediction effects of different machine learning models for the test set: (a) MLR; (b) RF; (c) SVR; (d) RR.

In the task of predicting the weight of shallow residual films, the prediction performance differs considerably among the machine learning models. The ridge regression (RR) model performed the best. A linear fit of the values predicted by this model against the measured shallow residual film weights yielded the regression equation y = 0.96258x + 0.06162. In terms of the quantitative evaluation indicators, R² = 0.853 and RMSE = 0.1009. Overall, this model has relatively high prediction accuracy and can be effectively applied to predict shallow residual film weights.
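With a single input feature, ridge regression has a simple closed form, which makes the role of the regularization strength easy to see. The sketch below is illustrative only: the function names, the value of `alpha`, and the synthetic data are assumptions, and the study's own model was presumably built with a machine learning library rather than this hand-written version.

```python
import math


def fit_ridge_1d(x, y, alpha=1.0):
    """Ridge regression with one feature and an unpenalized intercept.

    Minimizes sum((y - w*x - b)^2) + alpha * w^2; alpha is a hypothetical value.
    """
    n = len(x)
    x_mean = sum(x) / n
    y_mean = sum(y) / n
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    w = sxy / (sxx + alpha)   # alpha shrinks the slope toward zero
    b = y_mean - w * x_mean
    return w, b


def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y_mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - y_mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def rmse(y_true, y_pred):
    """Root mean square error."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


# Synthetic coverage and weight values, for illustration only.
coverage = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
weight = [0.22, 0.41, 0.58, 0.83, 1.01, 1.18]
w, b = fit_ridge_1d(coverage, weight, alpha=0.1)
predicted = [w * c + b for c in coverage]
print(f"w={w:.4f}, b={b:.4f}, R2={r2_score(weight, predicted):.4f}, "
      f"RMSE={rmse(weight, predicted):.4f}")
```

Setting `alpha=0` recovers ordinary least squares; larger values trade a small bias for lower variance, which is the behavior that favors ridge regression on small datasets.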

4. Discussion

This study focuses on a UAV-based prediction method for shallow residual film pollution in cotton fields that uses RDT-Net. A pathway for predicting weight by combining UAV image segmentation with machine learning was constructed, achieving nondestructive, large-scale monitoring and raising the weight prediction R² above 0.85 through the combination of RDT-Net and RR. This addresses the drawbacks of traditional methods, such as low efficiency, high labor intensity, and high cost. The overall research framework comprises two components: the construction of the semantic segmentation algorithm and machine learning modeling. In the early stage of data collection and analysis, a linear fit was performed between the surface residual film coverage obtained by the UAV and the measured shallow residual film weight. The results revealed a significant positive correlation between the two, with an R² of 0.79635 and a PCC of 0.89239, indicating that the coverage rate of the surface residual film effectively reflects the weight of the shallow residual film. In terms of the distribution pattern of residual film in cotton fields, the surface residual film is a direct manifestation of the shallow residual film, and the two are consistent in spatial distribution density and degree of fragmentation. Therefore, the coverage rate can be used as a proxy indicator for monitoring the weight of residual film. The coverage rate is extracted by the RDT-Net model, which outperforms the other semantic segmentation models compared. The problem of segmenting small, scattered residual film targets against the complex background of cotton fields is addressed by combining CNN-based local feature extraction with Transformer-based global context modeling. The model maintains stable performance in residual film image segmentation tasks with different coverage rates. On the test set, the mPA, F1 score, and mIoU reached 95.88%, 88.33%, and 86.48%, respectively.
Compared with the UAV-based farmland residual film pollution monitoring system developed by Yang et al. [9], the mIoU of the semantic segmentation model adopted in this study increased by 0.85 percentage points. Moreover, compared with the shallow residual film prediction method proposed by Qiu et al. [18], this study integrates RDT-Net semantic segmentation with machine learning regression modeling and increases both the accuracy of residual film segmentation and the prediction accuracy of shallow residual film weight. A machine learning model relating the surface residual film coverage rate to the shallow residual film weight was constructed so that the shallow residual film weight can be predicted indirectly from the surface coverage rate. A performance comparison of the various models revealed that the RR model performed the best, with an R² of 0.853 and an RMSE of 0.1009. In terms of data characteristics, although the residual film coverage rate and weight in cotton fields are significantly positively correlated, some outliers arise from minor interfering factors such as soil moisture and residual film thickness. The regularization in ridge regression can reduce the influence of such outliers on the model and help avoid overfitting. In contrast, although random forests have a strong ability to fit nonlinear relationships, they are prone to overfitting and poor generalization when the sample size is limited to 300 groups. The SVR, in turn, is sensitive to the kernel function parameters and is insufficiently stable in small-sample scenarios. This study provides reference data for model selection in agricultural parameter prediction tasks.
Compared with the traditional manual sampling monitoring method [15,16,17], the model in this study significantly increased the monitoring efficiency of residual film pollution in cotton fields and simultaneously reduced the monitoring cost.
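For a simple linear fit with one predictor, the coefficient of determination equals the square of the Pearson correlation coefficient, which is consistent with the values reported for the coverage and weight data (0.89239² ≈ 0.7964). The sketch below checks this identity on synthetic data; the function names and the data are illustrative only and are not measurements from the study.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    x_mean, y_mean = sum(x) / n, sum(y) / n
    sxy = sum((a - x_mean) * (b - y_mean) for a, b in zip(x, y))
    sxx = sum((a - x_mean) ** 2 for a in x)
    syy = sum((b - y_mean) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def linear_fit_r2(x, y):
    """R^2 of the ordinary least-squares line y = w*x + b."""
    n = len(x)
    x_mean, y_mean = sum(x) / n, sum(y) / n
    sxy = sum((a - x_mean) * (b - y_mean) for a, b in zip(x, y))
    sxx = sum((a - x_mean) ** 2 for a in x)
    w = sxy / sxx
    b0 = y_mean - w * x_mean
    ss_res = sum((yi - (w * xi + b0)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - y_mean) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot


# Synthetic data, not measurements from the study.
x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
y = [0.30, 0.35, 0.70, 0.65, 1.10, 1.05]
r = pearson_r(x, y)
print(r ** 2, linear_fit_r2(x, y))  # the two values agree up to rounding error
```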

While the high predictive accuracy of our model is promising, its practical utility in real-world cotton field management deserves further discussion. The correlation between surface coverage and plow layer weight, which our model leverages, is not merely a statistical phenomenon but is governed by field-specific physical processes. First, soil texture significantly influences the migration of residual film. In sandy soils, film fragments may be buried more easily through wind or water erosion, so that less film remains visible at the surface and the plow layer weight inferred from surface coverage may be underestimated. Conversely, in cohesive clay soils, fragments may remain on the surface longer, which has the opposite effect. Second, the history of tillage practices is a critical factor. Fields subjected to deep plowing will likely exhibit a stronger and more homogeneous correlation between surface and subsurface film than those under minimal tillage. Finally, the material properties of the mulch film itself, such as its thickness and biodegradability, affect its fragmentation pattern and density, thereby influencing the appearance of the film in the captured images and the subsequent regression model. Our model, trained on data from the Xinjiang region, inherently encapsulates these local contextual factors. This contextual dependency implies that deployment in regions with markedly different agronomic practices or soil types would require recalibration and, potentially, the incorporation of these factors as auxiliary input features. Despite these considerations, the primary practical value of this study lies in providing a rapid, noninvasive diagnostic tool for identifying cotton fields that are likely to have severe residual film pollution, thereby enabling targeted and cost-effective remediation, which is a significant advance over manual sampling.

In this study, the machine learning prediction model uses the surface residual film coverage rate as its only input feature and does not incorporate potential influencing factors such as residual film thickness, soil type, or film mulching duration. Although the coverage rate alone achieved relatively high prediction accuracy in the experiment, the weight prediction error may increase in cotton fields with large differences in residual film thickness. The robustness of the model could be further increased through multifeature fusion. Moreover, the RDT-Net model has high computational complexity and a large weight file. The model therefore needs to be optimized with lightweight techniques so that it can be deployed on edge computing devices, improving its value in field residual film monitoring.

In subsequent research, multimodal data fusion and model optimization can be explored. On the basis of the temperature difference between residual film and soil, RGB images could be fused with thermal infrared images to identify residual film more accurately. RDT-Net could also be combined with lightweight models, with the model parameters compressed through knowledge distillation to increase the inference speed and meet the real-time monitoring requirements of UAVs. The method could be extended to other film-mulched crops, such as corn and peanuts, with transfer learning used to reduce the data collection cost in new scenarios. Moreover, the model parameters could be optimized on the basis of the soil and residual film characteristics of different climate zones to construct a universal residual film pollution monitoring model.

5. Conclusions

To address the high labor intensity, high cost, and low efficiency of manual monitoring of shallow residual film pollution in cotton fields and to meet the need for nondestructive, high-precision monitoring, this study proposes a UAV-based prediction method for shallow residual film pollution in cotton fields that combines RDT-Net with machine learning. The main conclusions are as follows:

- (1) Through preliminary data collection and analysis of 300 groups of cotton field samples, the surface residual film coverage rate obtained by the UAV was linearly fitted against the measured shallow residual film weight. The results indicated an R² of 0.79635 and a PCC of 0.89239, confirming that the surface coverage rate can be used as an effective proxy for the shallow residual film weight and providing a data foundation for subsequent coverage-based weight prediction.

- (2) To address the problem of segmenting small, scattered residual film targets against the complex background of cotton fields, the proposed RDT-Net model increases the segmentation accuracy of residual films by integrating the local feature extraction ability of ResNet50 with the global context modeling advantage of the Transformer. On the test set, the mPA, F1 score, and mIoU were 95.88%, 88.33%, and 86.48%, respectively, providing reliable technical support for the precise quantification of surface residual film coverage.

- (3) On the basis of the surface residual film coverage rate, multiple machine learning prediction models were constructed, and their performances were compared. The ridge regression model achieved the best prediction performance, with an R² of 0.853 and an RMSE of 0.1009. A technical pathway for indirectly predicting the shallow residual film weight from the surface coverage rate was thus established. This research addresses the deficiency of existing monitoring methods in assessing shallow residual film content and provides an effective approach for monitoring and assessing surface and shallow residual film pollution in farmland.

Author Contributions

Conceptualization, L.M., Z.Z., H.W. and R.Z.; Data curation, L.M.; Formal analysis, L.M. and Z.Z.; Funding acquisition, Z.Z. and R.Z.; Investigation, L.M., Y.C. and S.Y.; Methodology, L.M.; Resources, Z.Z. and H.W.; Supervision, Z.Z., R.Z. and H.W.; Visualization, L.M. and Y.J.; Writing—original draft, L.M.; Writing—review and editing, Z.Z., H.W. and R.Z. All authors have read and agreed to the published version of the manuscript.

Funding

The authors gratefully acknowledge the financial support provided by the National Key R&D Plan of China (2022YFD2002400), the Science and Technology Bureau of Xinjiang Production and Construction Corps (2023AB014), the National Natural Science Foundation of China (32560411), the China Postdoctoral Science Foundation (2025MD774114), the Post Scientist Project of the National Modern Agricultural Industrial Technology System (CARS-15-17), the High-level Talent Initiation Program of Shihezi University (RCZK202442), and the Tianchi Talent Program Project of Xinjiang Uygur Autonomous Region.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Song, Y.; Sun, J.N.; Cai, M.J.; Li, J.Y.; Bi, M.Z.; Gao, M.X. Effects of management of plastic and straw mulching management on crop yield and soil salinity in saline-alkaline soils of China: A meta-analysis. Agric. Water Manag. 2025, 308, 109309.

2. Zhao, Y.; Chen, X.G.; Wen, H.J.; Zheng, X.; Niu, Q.; Kang, J.M. Research Status and Prospect of Control Technology for Residual Plastic Film Pollution in Farmland. Trans. Chin. Soc. Agric. Mach. 2017, 48, 1–14.

3. Wang, P. Measures to Reduce the Pollution of Residual of Mulching Plastic Film in Farmland. Trans. Chin. Soc. Agric. Eng. 1998, 14, 185–188.

4. Xu, G.; Du, X.M.; Cao, Y.Z.; Wang, Q.H.; Xu, D.P.; Lu, G.L.; Li, F.S. Residue levels and morphological characteristics of agricultural plastic films in typical areas. J. Agro-Environ. Sci. 2005, 12, 79–83.

5. Zhang, D.; Liu, H.B.; Hu, W.L.; Qin, X.H.; Ma, X.W.; Yan, C.R.; Wang, H.Y. The status and distribution characteristics of residual mulching film in Xinjiang, China. J. Integr. Agric. 2016, 15, 2639–2646.

6. Zong, R.; Wang, Z.H.; Zhang, J.Z.; Li, W.H. The response of photosynthetic capacity and yield of cotton to various mulching practices under drip irrigation in Northwest China. Agric. Water Manag. 2021, 249, 106814.

7. Zhang, P.; Wei, T.; Han, Q.F.; Ren, X.L.; Jia, Z.K. Effects of different film mulching methods on soil water productivity and maize yield in a semiarid area of China. Agric. Water Manag. 2020, 241, 106382.

8. Yan, C.R.; Liu, E.K.; Shu, C.; Liu, Q.; Liu, S.; He, W.Q. Characteristics of plastic film mulching, residual pollution and prevention technologies in China. J. Agric. Resour. Environ. 2014, 31, 95–102.

9. Yang, J.K.; Zhai, Z.Q.; Li, Y.L.; Duan, H.W.; Cai, F.J.; Lv, J.D.; Zhang, R.Y. Design and research of residual film pollution monitoring system based on UAV. Comput. Electron. Agric. 2023, 12, 108608.

10. Salgado, L.; Lopez-Sanchez, C.A.; Colina, A.; Baragano, D.; Forjan, R.; Gallego, J.R. Hg and As pollution in the soil-plant system evaluated by combining multispectral UAV-RS, geochemical survey and machine learning. Environ. Pollut. 2023, 333, 122066.

11. Kupkova, L.; Cervena, L.; Potuckova, M.; Lysak, J.; Roubalov, M.; Hrazsky, Z.; Brezina, S.; Epstein, H.E.; Mullerova, J. Towards reliable monitoring of grass species in nature conservation: Evaluation of the potential of UAV and PlanetScope multi-temporal data in the Central European tundra. Remote Sens. Environ. 2023, 294, 113645.

12. Yu, G.W.; Ma, B.X.; Zhang, R.Y.; Xu, Y.; Lian, Y.Z.; Dong, F.J. CPD-YOLO: A cross-platform detection method for cotton pests and diseases using UAV and smartphone imaging. Ind. Crops Prod. 2025, 234, 121515.

13. Liang, C.J.; Wu, X.M.; Wang, F.; Song, Z.J.; Zhang, F.G. Research on field plastic film recognition algorithm based on unmanned aerial vehicle. Acta Agric. Zhejiangensis 2019, 31, 1005–1011.

14. Wu, X.M.; Liang, C.J.; Zhang, D.B.; Yu, L.H.; Zhang, F.G. Method for identifying residual plastic film after harvest period based on UAV remote sensing images. Trans. Chin. Soc. Agric. Mach. 2020, 51, 189–195.

15. Du, X.M.; Xu, G.; Xu, D.P.; Zhao, T.K.; Li, F.S. Mulch film residue contamination in typical areas of North China and countermeasures. Trans. Chin. Soc. Agric. Eng. 2005, 21, 225–227.

16. Wang, S.Y.; Fan, T.L.; Cheng, W.L.; Wang, L.; Zhao, G.; Li, S.Z.; Dang, Y.; Zhang, J.J. Occurrence of macroplastic debris in the long-term plastic film-mulched agricultural soil: A case study of Northwest China. Sci. Total Environ. 2022, 831, 154881.

17. He, H.J.; Wang, Z.H.; Guo, L.; Zheng, X.R.; Zhang, J.Z.; Li, W.H.; Fan, B.H. Distribution characteristics of residual film over a cotton field under long-term film mulching and drip irrigation in an oasis agroecosystem. Soil Tillage Res. 2018, 180, 194–203.

18. Qiu, F.S.; Zhai, Z.Q.; Li, Y.L.; Yang, J.K.; Wang, H.Y.; Zhang, R.Y. UAV imaging and deep learning based method for predicting residual film in cotton field plough layer. Front. Plant Sci. 2022, 13, 3748.

19. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 6000–6010.

20. Xie, W.J.; Zhao, M.C.; Liu, Y.; Yang, D.Y. Recent advances in Transformer technology for agriculture: A comprehensive survey. Eng. Appl. Artif. Intell. 2024, 138, 109412.

21. Zhang, J.; Yang, S.Q.; Hu, S.R.; Ning, J.F.; Lan, X.Y.; Wang, Y.S. A dairy goat tracking method via lightweight fusion and Kullback Leibler divergence. Comput. Electron. Agric. 2023, 213, 108189.

22. Xie, Y.; Lu, Z.J. Novel deep-learning method based on LSA-Transformer for fault detection and its implementation in penicillin fermentation process. Measurement 2024, 235, 114871.

23. Guo, Z.H.; Cai, D.D.; Jin, Z.Y.; Xu, Y.T.; Yu, F.H. Research on unmanned aerial vehicle (UAV) rice field weed sensing image segmentation method based on CNN-transformer. Comput. Electron. Agric. 2025, 229, 109719.

24. Wang, P.X.; Du, J.L.; Zhang, Y.; Liu, J.M.; Li, H.M.; Wang, C.M. Yield Estimation of Winter Wheat Based on Multiple Remotely Sensed Parameters and CNN-Transformer. Trans. Chin. Soc. Agric. Mach. 2024, 55, 173–182.

25. Wu, Q.; Li, J.; Zhao, L.; Liu, D.; Wen, J.; Wang, Y.; Wang, Y.; Huang, N.; Jiang, L.; Liu, Q.; et al. A noninvasive model for chronic kidney disease screening and common pathological type identification from retinal images. Nat. Commun. 2025, 16, 6962.

26. He, K.M.; Zhang, X.Y.; Ren, S.Q.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

27. Liao, J.; Liang, Y.X.; Jiang, R.; Xing, H.; He, X.Y.; Wang, H.; Zeng, H.Q.; He, S.W.; Tang, S.O.; Luo, X.W. Classification of Cotton Verticillium wilt Severity Levels Based on Transformer FNN and UAV Hyperspectral Remote Sensing Technology. Trans. Chin. Soc. Agric. Mach. 2025, 56, 240–251.

28. Hossain, M.A.; Sakib, S.; Abdullah, H.M.; Arman, S.E. Deep learning for mango leaf disease identification: A vision transformer perspective. Heliyon 2024, 10, e36361.

29. Yu, Y.; Wang, C.P.; Fu, Q.; Kou, R.K.; Wu, W.Y.; Liu, T.Y. Survey of Evaluation Metrics and Methods for Semantic Segmentation. Comput. Eng. Appl. 2023, 6, 57–69.

30. Kumar, N.; Verma, R.; Sharma, S.; Bhargava, S.; Vahadane, A.; Sethi, A. A Dataset and a Technique for Generalized Nuclear Segmentation for Computational Pathology. IEEE Trans. Med. Imaging 2017, 36, 1550–1560.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).