Abstract

In recent years, grassland degradation has become a global ecological problem. The identification of degraded grassland species is of great significance for monitoring grassland ecological environments and accelerating grassland ecological restoration. In this study, a ground spectral measurement experiment of typical grass species in the typical temperate grassland of Inner Mongolia was performed. An SVC XHR-1024i spectrometer was used to obtain field measurements of the spectra of grass species in the typical grassland areas of the study region from 6–29 July 2021. The parametric characteristics of the grass species’ spectral data were extracted and analyzed. Then, the spectral characteristic parameters + vegetation index, first-order derivative (FD) and continuum removal (CR) datasets were constructed by using principal component analysis (PCA). Finally, the RF, SVM, BP, CNN and the improved CNN model were established to identify Stipa grandis (SG), Cleistogenes squarrosa (CS), Caragana microphylla Lam. (CL), Leymus chinensis (LC), Artemisia frigida (AF), Allium ramosum L. (AL) and Artemisia capillaris Thunb. (AT). This study aims to determine a high-precision identification method based on the measured spectrum and to lay a foundation for related research. The obtained research results show that in the identification results based on ground-measured spectral data, the overall accuracy of the RF model and SVM model identification for different input datasets is low, but the identification accuracies of the SVM model for AF and AL are more than 85%. The recognition result of the CNN model is generally worse than that of the BP neural network model, but its recognition accuracy for AL is higher, while the recognition effect of the BP neural network model for CL is better. 
The overall accuracy and average accuracy of the improved CNN model are all the highest, and the recognition accuracy of AF and CL is stable above 98%, but the recognition accuracy of CS needs to be improved. The improved CNN model in this study shows a relatively significant grass species recognition performance and has certain recognition advantages. The identification of degraded grassland species can provide important scientific references for the realization of normal functions of grassland ecosystems, the maintenance of grassland biodiversity richness, and the management and planning of grassland production and life.

1. Introduction

Grassland ecosystems play an important role in global climate change [1], play an irreplaceable role in water conservation and biodiversity protection, and provide a steady increase in carbon sinks [2]. Adequate grassland resources and healthy grassland ecosystems are essential for maintaining high levels of grassland livestock production, ensuring food security and meeting the basic needs of local pastoralists [3,4]. In recent years, under the dual influence of human activities and natural factors, grasslands have been degraded to varying degrees, and grassland degradation has become one of the global ecological problems [5,6]. The grassland degradation situation in China is particularly serious, with more than 90% of the grassland in a degraded state [7], which threatens the ecological security of grasslands in China. Changes in grassland vegetation community structure are synergistic with grassland degradation [8]. Grassland degradation forces vegetation to develop coping strategies, and the grass species community structure changes accordingly, making the grass species community structure index an important part of the grassland degradation index system. The indicators of grass species community structure include degraded indicator plant populations, dominant indicator plant populations, community species composition, community spatial regularity, population density, and population proportion; thus, the identification results of grass species can be used as the basis for assessing changes in grass species community structure [9]. Therefore, the identification of degraded grassland species is of great significance for monitoring grassland ecological environments and accelerating grassland ecological restoration. The high-precision identification results can lay a solid data foundation for grassland resource surveys.

The identification of vegetation species is generally based on the external characteristics of plants. Compared with other external organs (such as roots, stems and flowers), the leaves of plants are easier to collect and obtain. Therefore, it is the most direct and effective method to use the leaf characteristics of vegetation to identify the types of vegetation species. The earliest methods for obtaining vegetation leaf length, leaf aspect ratio, leaf edge serrations and other traits by manual measurements involved heavy workloads, low work efficiency and difficulties in guaranteeing data objectivity.

With the continuous refinement of vegetation species identification techniques and methods, various scientific methods have been proposed by domestic and foreign scholars for the classification of vegetation species, including spectral characteristic parameter analysis [10,11], spectral mixture analysis [12,13] and the vegetation index [2]. Models such as the random forest model [14] and artificial neural networks [15] were used for grass species identification. The combination of measured spectral data and hyperspectral remote sensing image data, based on parameters such as the vegetation index and spectral characteristics, and statistical analysis methods (such as partial least squares regression analysis, one-way analysis of variance and linear discriminant analysis) can not only extract the effective feature bands for vegetation classification and accurately identify vegetation types [16] but also distinguish newly grown vegetation from all vegetation [17].

In recent years, hyperspectral remote sensing technology has been widely used in grass species identification research, and the identification results have significantly improved [18]. Hyperspectral remote sensing data have superior characteristics that multispectral data cannot match, i.e., high spectral resolution and rich spectral dimension information. They can be used to quantitatively analyze weak spectral characteristic differences in the process of grassland degradation and to estimate the community characteristic parameters of degraded grassland vegetation, making them far more capable of grassland degradation monitoring than multispectral remote sensing data [19]. However, traditional grassland hyperspectral research is limited to the analysis and comparison of spectral reflectance characteristics [20]. There are two general ideas. One involves using spectral transformation processing based on measured spectral data (such as continuum removal, spectral feature parameter extraction and vegetation index extraction) to analyze vegetation spectral features; the other involves using mathematical statistical analysis methods (such as regression analysis and correlation analysis) to analyze the characteristic bands of the spectrum of various types of grass species [21,22,23]. Research on vegetation species identification using various hyperspectral data information extraction methods shows that hyperspectral remote sensing has clearer advantages than multispectral remote sensing in terms of vegetation species identification [15]. The abovementioned studies show that hyperspectral remote sensing technology has strong applicability and feasibility in grass species identification and has great development potential.

In recent years, with the development and improvement of artificial intelligence theories and applications, artificial neural networks (ANNs) as a data mining technology, such as back propagation (BP) neural networks, multilayer perceptron (MLP) neural networks and convolutional neural networks (CNNs), have been gradually introduced into the field of grassland identification research [24]. Artificial neural networks process information by imitating the operation mechanism of the human brain. They have outstanding self-learning, self-organizing and self-adaptive characteristics, showing strong fault tolerance, a high degree of parallel and distributed information processing, and nonlinear expression capabilities. In the field of spectral analysis, the application of ANNs covers many aspects and has become the most widely used pattern recognition technology in spectral analyses [25]. For ground-measured spectra, the BP neural network model can effectively classify the spectral characteristics of different grass species with high accuracy by mining data effectively, thereby identifying grass species [24]. Moreover, studies have shown that the MLP neural network model has high recognition accuracies for natural grasslands in various phases and small changes over time [26].

Currently, there are few high-precision identification methods for the identification of constructive and degraded grass species in typical temperate grasslands. This study made full use of field-measured spectral data to extract and analyze the parametric features of the grass species’ spectral data to distinguish the characteristic differences among various grass species to the greatest extent. Combined with the learning and analysis abilities of the neural network model, the accurate identification of constructive and degraded grass species in typical temperate grasslands can be realized. At the same time, compared with other identification methods, the identification method of ground spectral remote sensing data suitable for typical grassland species was determined. This study aims to realize the accurate identification of constructive and degraded grass species in typical temperate grasslands and provide an important research basis for the degradation monitoring and ecological restoration of typical temperate grasslands in China.

2. Materials and Methods

2.1. Study Area

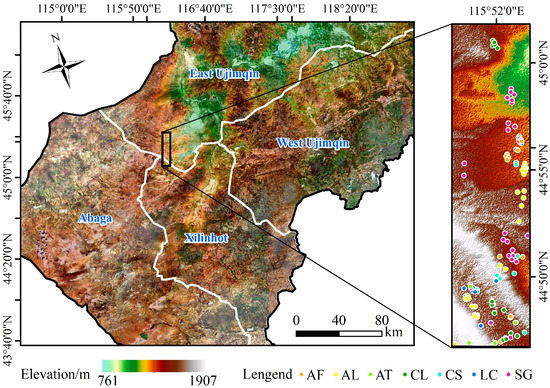

The typical temperate steppe of Xilingol League was taken as the research area in this study. It is located at 115°44′–115°56′ E and 44°46′–45°04′ N, covers an area of about 228.211 km², and lies at the junction of East Ujimqin Banner and Xilinhot City, Xilingol League, Inner Mongolia, China (Figure 1). It belongs to the central region of the Inner Mongolia Plateau, and the terrain is relatively flat, higher in the north and lower in the south. The study area is characterized by cold weather, high wind, little rain, long periods of sunshine, large temperature differences and strong evaporative power. It is dry and windy in spring, rainy and hot in summer, and cold in winter under the influence of the Mongolian high-pressure system. The typical temperate steppe in the study area is mainly composed of communities in which Stipa grandis or Cleistogenes squarrosa is the constructive species. With the change in the environment and the further development of grassland degradation in this area, communities dominated by degraded grass species such as Caragana microphylla Lam., Artemisia frigida and Allium ramosum L. gradually emerged.

Figure 1.

Location map of the study area.

2.2. Data and Preprocessing

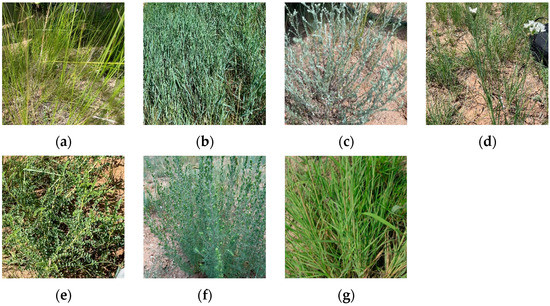

Based on the vegetation type distribution map [27], historical measured data and the specific conditions of the experimental area, ground spectral measurements were performed in the study area. The plant species included Stipa grandis (SG), Leymus chinensis (LC), Artemisia frigida (AF), Allium ramosum L. (AL), Caragana microphylla Lam. (CL), Artemisia capillaris Thunb. (AT) and Cleistogenes squarrosa (CS) (Figure 2).

Figure 2.

Experimental grass species of typical grassland: (a) Stipa grandis (SG); (b) Leymus chinensis (LC); (c) Artemisia frigida (AF); (d) Allium ramosum L. (AL); (e) Caragana microphylla Lam. (CL); (f) Artemisia capillaris Thunb. (AT); (g) Cleistogenes squarrosa (CS).

2.2.1. Data Acquisition

(1) Sample Plot Setting

According to the vegetation type distribution map, previous research data and historical field experiment data, the sample plots with dense grass species distributions and research potential within the study area were selected in advance. In the field experiment, the preset sample plots were adjusted according to the specific conditions, and the optimal sample plots were selected to meet the research needs.

(2) Quadrat Setting

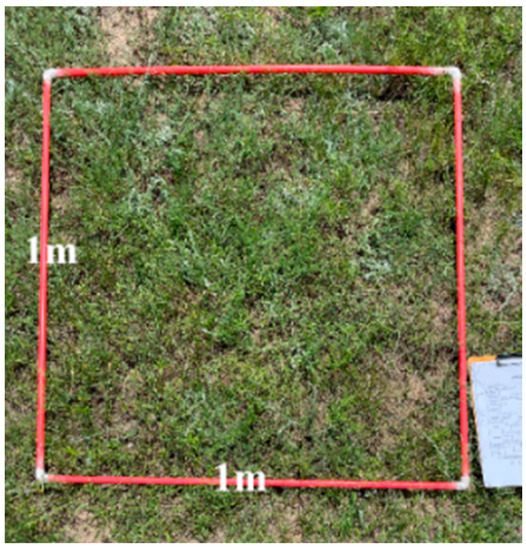

To account for the dominant grass species, degraded types and the quantity of distribution in the sample plots, we randomly set up four 1 × 1 m quadrats in every 30 × 30 m sample plot for spectral collection (Figure 3). When laying out the quadrats, attention was given to selecting ground sampling points that best reflect the homogeneity of vegetation and soil in the study area.

Figure 3.

Diagram of quadrat settings.

(3) Spectra collection

An SVC XHR-1024i spectrometer was used to conduct field measurements of the grass species spectra in typical grassland areas from 6 July to 29 July 2021. The measurements were performed in sunny weather with no wind or only light wind, and the observation time was restricted to between 10:00 and 14:00 every day. A white board was used to calibrate the spectrometer before conducting measurements. The spectrometer probe was held approximately 1 m away from the vegetation so that the target vegetation in the quadrat filled the entire field of view of the probe. During the measurement, the grass species targeted by the spectrometer and the dominant grass species in the plot were recorded. For each species in each quadrat, at least 3 repeated measurements were taken, and they were averaged in subsequent processing.

2.2.2. Data Preprocessing

Spectral reflectance data obtained by field measurements are subject to various interferences during acquisition and transmission, which reduce the quality of the spectral data and directly affect the identification results of grass species. Therefore, it is necessary to smooth and denoise the measured data to improve data quality. First, the measured spectral data are screened, the abnormal spectral data are removed, and the mean value of the spectral data is calculated. Then, the moving average method, which replaces the raw value of a point on the spectral curve with the mean value of the points within a certain range around it, is used to smooth and denoise the spectral data, as shown in Equation (1) [28]:

$$\bar{R} = \frac{1}{n} \sum_{i=1}^{n} R_i \tag{1}$$

where $\bar{R}$ is the mean reflectance within the range and $R_i$ is the reflectance of the sample at point i, which ranges from 1 to n.

The nine-point weighted moving average method is based on the principle of the moving average method. Each of the four points before and after a point on the curve is given a weight according to its distance from that point; the greater the distance, the smaller the weight [28]:

$$R_i' = \sum_{k=-4}^{4} w_k R_{i+k}, \qquad \sum_{k=-4}^{4} w_k = 1 \tag{2}$$

where $R_i'$ is the reflectance of the sample after smoothing, $w_k$ is the weight of the point at offset k, and i − 4 to i + 4 represent the nine points that are weighted, with i as the origin.

In this study, the nine-point weighted moving average method was selected to smooth and denoise the measured spectral data and to complete data preprocessing.
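The smoothing step can be sketched as follows. This is only an illustration: the text does not list the nine weights, so the triangular weights in `WEIGHTS` (largest at the center, decreasing with distance, summing to 1) and the function name `smooth_nine_point` are assumptions, as is the choice to leave the four points at each end of the curve unsmoothed.

```python
# Assumed weights: they fall off linearly with distance from the center
# point and sum to 1. The source does not state the weights it used.
WEIGHTS = [0.04, 0.08, 0.12, 0.16, 0.20, 0.16, 0.12, 0.08, 0.04]


def smooth_nine_point(reflectance):
    """Return a smoothed copy of a reflectance curve.

    Each interior point i is replaced by the weighted mean of points
    i-4 .. i+4; the four points at each end are left unchanged.
    """
    half = len(WEIGHTS) // 2
    smoothed = list(reflectance)
    for i in range(half, len(reflectance) - half):
        window = reflectance[i - half:i + half + 1]
        smoothed[i] = sum(w * r for w, r in zip(WEIGHTS, window))
    return smoothed
```

A flat curve passes through unchanged, while an isolated spike is spread over its eight neighbors, which is the denoising behavior described above.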

2.3. Methodology

2.3.1. Feature Extraction of Ground-Measured Data

(1) Spectral Derivative

A spectral transformation is the deep processing of grass species spectral data by using mathematical transformations. After the grass species spectrum is spectrally transformed, spectral features will be enhanced, which will help amplify the spectral changes between the grass species and improve the separability of grass species. Common spectral transformation methods include spectral derivative transformations and continuum removal transformations. The first-order derivative (FD) spectrum is obtained by taking the first derivative of the grass species spectral data (i.e., the slope of the grass species spectral curve), which reflects the rate of change of the raw data and enhances the difference between grass species. Equation (3) shows the calculation formula of the FD spectrum [29]:

$$FD_{\lambda_i} = \frac{R_{\lambda_{i+1}} - R_{\lambda_{i-1}}}{\Delta\lambda} \tag{3}$$

where $FD_{\lambda_i}$ is the value of the FD spectrum at point i, i is the midpoint of band i − 1 and band i + 1, $R_{\lambda_{i+1}}$ is the value of reflectance at band i + 1, $R_{\lambda_{i-1}}$ is the value of reflectance at band i − 1, and $\Delta\lambda$ is the distance between band i − 1 and band i + 1.
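As a minimal sketch of Equation (3), the FD spectrum can be computed with a central difference over the two neighboring bands. The function name `first_derivative` is hypothetical, and the two end bands are simply dropped because they lack a neighbor on one side.

```python
def first_derivative(wavelengths, reflectance):
    """Central-difference FD spectrum at the interior bands.

    FD at band i is (R[i+1] - R[i-1]) divided by the wavelength distance
    between band i-1 and band i+1. The result has len(reflectance) - 2
    values, aligned with wavelengths[1:-1].
    """
    fd = []
    for i in range(1, len(reflectance) - 1):
        delta = wavelengths[i + 1] - wavelengths[i - 1]
        fd.append((reflectance[i + 1] - reflectance[i - 1]) / delta)
    return fd
```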

(2) Continuum Removal

Continuum removal, also known as envelope removal, is a normalization of spectral data so that the spectral absorption characteristics of various grass species can be compared under one criterion. The continuum removal process can enhance and expand the absorption features of the grass species spectral data, thereby highlighting the differences in spectral features between grass species [18]:

$$CR_{\lambda_i} = \frac{R_{\lambda_i}}{R_{H\lambda_i}} \tag{4}$$

$$R_{H\lambda_i} = R_{start} + K \left( \lambda_i - \lambda_{start} \right) \tag{5}$$

$$K = \frac{R_{end} - R_{start}}{\lambda_{end} - \lambda_{start}} \tag{6}$$

where $CR_{\lambda_i}$ is the value of the continuum at band $i$, $R_{\lambda_i}$ is the value of reflectance at band $i$, $R_{H\lambda_i}$ is the magnitude of the Hull value at band $i$, $K$ is the slope of Hull at the selected absorption start point to end point, $R_{start}$ is the value of reflectance at the absorption start point, $R_{end}$ is the value of reflectance at the absorption end point, and $\lambda_{start}$ and $\lambda_{end}$ are the wavelengths corresponding to the absorption start and end points, respectively.
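One common way to carry out continuum removal over a whole spectrum is to take the upper convex hull of the curve as the Hull and divide each reflectance by the Hull value interpolated at the same wavelength. The sketch below follows that approach; it assumes strictly increasing wavelengths and positive reflectance, and the function names are hypothetical rather than taken from the study.

```python
def upper_hull(wavelengths, reflectance):
    """Indices of the points on the upper convex hull of the spectrum."""
    hull = []
    for i in range(len(wavelengths)):
        # Drop the last hull point while it lies on or below the straight
        # line joining the point before it to the new point i.
        while len(hull) >= 2:
            a, b = hull[-2], hull[-1]
            cross = ((wavelengths[b] - wavelengths[a]) * (reflectance[i] - reflectance[a])
                     - (reflectance[b] - reflectance[a]) * (wavelengths[i] - wavelengths[a]))
            if cross >= 0:
                hull.pop()
            else:
                break
        hull.append(i)
    return hull


def continuum_removed(wavelengths, reflectance):
    """Reflectance divided by the Hull value at each band."""
    hull = upper_hull(wavelengths, reflectance)
    result = []
    seg = 0
    for lam, r in zip(wavelengths, reflectance):
        # Move to the hull segment (absorption start..end) containing lam.
        while wavelengths[hull[seg + 1]] < lam:
            seg += 1
        s, e = hull[seg], hull[seg + 1]
        slope = (reflectance[e] - reflectance[s]) / (wavelengths[e] - wavelengths[s])
        hull_value = reflectance[s] + slope * (lam - wavelengths[s])
        result.append(r / hull_value)
    return result
```

Points on the Hull map to 1, and absorption features appear as dips below 1, which puts different species on a common scale.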

(3) Spectral Characteristic Parameters

The spectral dimension information of the measured spectral data is extremely rich, which makes rational and effective use of the data possible. At the same time, the large data volume and the redundancy it contains increase the amount of calculation and reduce efficiency in application, which is not conducive to the identification of grass species. Therefore, 6 three-edge parameters, 4 peak-valley parameters, 3 three-edge area parameters, and 7 spectral characteristic parameters were selected as spectral characteristic parameters for calculation and analysis in this study (Table 1).

Table 1.

List of spectral characteristic parameters.

The amplitude of the three edges is the maximum value of the FD within a certain range. The position of the three edges is the wavelength corresponding to the amplitude of the three edges. The area of the three edges is the sum of the FD values within a certain range. The blue edge corresponds to 490–530 nm, the yellow edge corresponds to 560–640 nm, and the red edge corresponds to 680–760 nm.

The chlorophyll contained in plant leaves can strongly absorb most of the energy of red and blue light. The “green peak and red valley” in the visible light range is the key difference between the raw spectrum of vegetation and that of other ground objects. The green peak amplitude is the maximum reflectance in the range of 510–560 nm, the green peak position is the wavelength corresponding to the green peak amplitude, the red valley amplitude is the minimum reflectance in the range of 640–680 nm, and the red valley position is the wavelength corresponding to the red valley amplitude.
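The edge and peak-valley parameters defined above reduce to simple searches over a wavelength window. The following sketch uses hypothetical function names; `edge_parameters` takes the window limits as arguments, and it assumes the FD values are aligned one-to-one with the wavelengths passed in.

```python
def edge_parameters(wavelengths, fd, low, high):
    """Amplitude (max FD), position (its wavelength) and area (sum of FD)
    for the bands with low <= wavelength <= high (nm)."""
    in_range = [(d, lam) for lam, d in zip(wavelengths, fd) if low <= lam <= high]
    amplitude, position = max(in_range)
    area = sum(d for d, _ in in_range)
    return amplitude, position, area


def green_peak(wavelengths, reflectance):
    """(amplitude, position): maximum reflectance in 510-560 nm."""
    return max((r, lam) for lam, r in zip(wavelengths, reflectance) if 510 <= lam <= 560)


def red_valley(wavelengths, reflectance):
    """(amplitude, position): minimum reflectance in 640-680 nm."""
    return min((r, lam) for lam, r in zip(wavelengths, reflectance) if 640 <= lam <= 680)
```

For example, `edge_parameters(wavelengths, fd, 490, 530)` would give the blue edge amplitude, position and area.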

(4) Vegetation Index

The vegetation index method combines the spectral reflectance of specific bands and amplifies the difference in spectral characteristics between grass species in the form of a vegetation index. The normalized difference vegetation index (NDVI), ratio vegetation index (RVI), difference vegetation index (DVI), modified soil adjusted vegetation index (MSAVI), transformed vegetation index (TVI) and renormalized difference vegetation index (RDVI) were selected for research. As shown in Table 2, the six vegetation indices of the grass species were calculated according to the corresponding formulas. Bands $R_{red}$ and $R_{nir}$ in the table are obtained by averaging the spectral reflectance within a certain range, where band $R_{red}$ corresponds to 620–670 nm and band $R_{nir}$ corresponds to 841–876 nm.

Table 2.

List of vegetation indices.
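A sketch of how the six indices could be computed from the band-averaged red and near-infrared reflectance is given below. Because the formulas of Table 2 are not reproduced in the text, the expressions here are the commonly published definitions of each index and are an assumption; the function names `band_mean` and `vegetation_indices` are likewise hypothetical.

```python
import math


def band_mean(wavelengths, reflectance, low, high):
    """Mean reflectance over the bands with low <= wavelength <= high (nm)."""
    values = [r for lam, r in zip(wavelengths, reflectance) if low <= lam <= high]
    return sum(values) / len(values)


def vegetation_indices(red, nir):
    """Six indices from band-averaged red and near-infrared reflectance.

    The formulas are the commonly published forms, assumed here because
    the source table is not available.
    """
    ndvi = (nir - red) / (nir + red)
    return {
        "NDVI": ndvi,
        "RVI": nir / red,
        "DVI": nir - red,
        "RDVI": (nir - red) / math.sqrt(nir + red),
        "MSAVI": (2 * nir + 1 - math.sqrt((2 * nir + 1) ** 2 - 8 * (nir - red))) / 2,
        "TVI": math.sqrt(ndvi + 0.5),
    }
```

In use, `red` would come from `band_mean(wavelengths, reflectance, 620, 670)` and `nir` from `band_mean(wavelengths, reflectance, 841, 876)`, matching the band ranges stated above.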

2.3.2. Establishment of Grassland Species Recognition Models

Grassland species recognition in the study area was conducted based on ground-measured spectral data. First, the feature extraction method based on principal component analysis (PCA) was used to reduce the dimensions of the grass species’ spectral data, FD and CR data and to build datasets. Then, the algorithms of RF, SVM, BP neural network and CNN were used to establish the recognition models that are applicable to the measured spectral data in order to identify and analyze the grass species and to study high-precision recognition methods.

(1) Feature Extraction Based on PCA

PCA is a multivariate statistical analysis method that can transform multiple indicators in the high-dimensional space of the raw data via their internal structural relationships to obtain a few mutually independent composite indicators in the low-dimensional space while retaining most information of the original indicators. In the process of applying PCA, multiple independent variables in the raw spectrum are linearly fitted based on the principle of maximum variance, and the original high-dimensional variables are replaced by the converted low-dimensional variables. Usually, the first few principal components that can represent T% of all feature information (T is generally higher than 80%) are selected to reduce the dimensionality of the data. PCA can not only replace the original indicators in the high-dimensional space with a few unrelated principal component factors to reduce the crossover and redundancy of the raw information, but it can also eliminate the need to manually determine the weights, obtain the internal structural relationships between the indicators via the analysis of the raw data and then obtain the weight according to the correlation and variability existing between the indicators, thus making the calculation results more scientific and reliable [40].

Hyperspectral data have many raw wave bands and high dimensionality. The FD and CR datasets can be calculated from the raw spectrum, which further enriches the spectral information of the data and highlights the differences in spectral features among grass species. The PCA method can extract the most effective features from the high-dimensional spectral information, which is conducive to the efficient recognition of grassland plant species.
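The selection of the first few principal components by cumulative contribution rate can be sketched with NumPy as follows. The function name `pca_reduce` is hypothetical, and the default threshold of 0.90 is taken from the 90% cumulative contribution rate used later for dataset construction; any value of T could be passed instead.

```python
import numpy as np


def pca_reduce(X, threshold=0.90):
    """Project samples onto the first k principal components.

    X has one sample per row and one feature (band) per column. k is the
    smallest number of components whose cumulative explained-variance
    ratio reaches `threshold`.
    """
    X = np.asarray(X, dtype=float)
    centered = X - X.mean(axis=0)
    # Rows of vt are the principal axes; squared singular values are
    # proportional to the variance explained by each axis.
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    ratio = s**2 / np.sum(s**2)
    k = int(np.searchsorted(np.cumsum(ratio), threshold)) + 1
    k = min(k, len(s))
    return centered @ vt[:k].T, ratio[:k]
```

The second return value gives the contribution rate of each retained component, which makes it easy to check how much information the reduced dataset keeps.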

(2) Establishment of SVM Models

The SVM algorithm is a model based on the Vapnik–Chervonenkis dimension theory and the principle of structural risk minimization in statistical learning theory. It improves on traditional machine learning models by avoiding the curse of dimensionality when learning from high-dimensional data, so it demonstrates excellent generalization ability and robustness for pattern recognition and can be applied to small-sample learning [41].

The core component of SVM is to maximize the classification boundary, transfer the data to the high-dimensional space in the form of nonlinear mapping using the inner product kernel function and establish the optimal hyperplane for the division of the high-dimensional space. The calculation formula is shown in (7) [41].

The dot product in the optimal hyperplane is represented by the inner product $K(x_i, x_j)$; that is, the data are mapped to a new space, and then the optimization function is calculated, as shown in (9) [41]:

$$Q(\alpha) = \sum_{i=1}^{n} \alpha_i - \frac{1}{2} \sum_{i=1}^{n} \sum_{j=1}^{n} \alpha_i \alpha_j y_i y_j K(x_i, x_j) \tag{9}$$

where $\alpha_i$ is the Lagrange multiplier, which corresponds to the samples one by one, and $y_i$ is the class label of sample $x_i$. The optimal solution of the quadratic function is calculated, and the calculation formula of the optimal classification function is obtained, as shown in (12) [41]:

$$f(x) = \operatorname{sgn}\left( \sum_{i=1}^{n} \alpha_i^{*} y_i K(x_i, x) + b^{*} \right) \tag{12}$$

where $b^{*}$ denotes the threshold value of classification, and $K(x_i, x)$ denotes the kernel function. There are three main types of kernel functions that are widely used in SVM algorithms: radial basis functions, polynomial kernel functions and neural network kernel functions.
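To make the role of the kernel function and the classification threshold concrete, the sketch below evaluates a two-class kernel SVM decision function with a radial basis kernel. It is illustrative only: the support vectors, multipliers, labels, `gamma` and bias are hand-picked hypothetical values, not the result of training, and the function names are assumptions.

```python
import math


def rbf_kernel(x, z, gamma=0.5):
    """Radial basis kernel exp(-gamma * ||x - z||^2)."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, z)))


def svm_decide(x, support_vectors, alphas, labels, bias, kernel=rbf_kernel):
    """Sign of sum_i alpha_i * y_i * K(x_i, x) + b for a two-class SVM."""
    score = sum(a * y * kernel(sv, x)
                for sv, a, y in zip(support_vectors, alphas, labels)) + bias
    return 1 if score >= 0 else -1


# Hypothetical, untrained values used only to exercise the function.
support_vectors = [[0.0, 0.0], [2.0, 2.0]]
alphas = [1.0, 1.0]
labels = [1, -1]
bias = 0.0
```

With these values, a sample close to the first support vector is assigned to class +1 and a sample close to the second to class −1. Identifying several grass species requires combining such two-class decisions, for example one-against-one or one-against-rest.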

(3) Establishment of BP Neural Network Models

The BP neural network is a multilayer feedforward neural network based on the backpropagation training algorithm. It has strong self-learning and adaptive capabilities. It takes the error of the network as the objective function and calculates the minimum value of the objective function based on the gradient search technique, which enhances the classification ability of the network. The damage to some neurons of the BP neural network will not have a fatal impact on the global learning and training of the network, and it can still operate normally with a certain degree of fault tolerance. It generally consists of three layers: an input layer, a hidden layer and an output layer. The BP neural network hidden layer is located between the input layer and output layer, which is not directly related to the outside, but the number of its nodes has a great influence on the network’s accuracy. Therefore, choosing a reasonable number of neurons in the output layer and hidden layer is beneficial for training the network and improving the recognition accuracy [42].

The computation process of the BP neural network can be divided into two stages, namely the forward computation process of the raw data and the back computation process. In the forward propagation process, the input data enter the network from the input layer and pass to the hidden layer, and the results are delivered to the output layer after layer-by-layer computational processing in the hidden layer. In this process, the neurons in each layer only influence the neurons in the next layer. When the output of the output layer does not reach the desired state, the network enters the backpropagation training process. The error of each layer is returned along the original path, and the weights of each neuron are corrected and learned so that the error is minimized.

The calculation formula is shown in (13) [42]. $x_i$ represents the input of the neural network, and the input of each neuron in the hidden layer can be expressed as follows:

$$net_j = \sum_{i} w_{ij} x_i + \theta_j \tag{13}$$

where $w_{ij}$ denotes the weight between input layer neuron $i$ and hidden layer neuron $j$, $\theta_j$ denotes the threshold of hidden layer neuron $j$, and both $w_{ij}$ and $\theta_j$ are adjusted in the direction of error reduction during the training process.
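The forward computation of the hidden layer can be sketched as a weighted sum per neuron followed by an activation. Two points are assumptions here: the threshold is added to the weighted sum (some formulations subtract it), and a sigmoid is used as the activation because the text does not name one. The function names are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def hidden_layer(inputs, weights, thresholds):
    """Outputs of the hidden layer for one input vector.

    weights[j][i] connects input neuron i to hidden neuron j, and
    thresholds[j] is the threshold of hidden neuron j (assumed additive).
    """
    outputs = []
    for w_j, theta_j in zip(weights, thresholds):
        net_j = sum(w * x for w, x in zip(w_j, inputs)) + theta_j
        outputs.append(sigmoid(net_j))
    return outputs
```

During training, the weights and thresholds passed to this function are the quantities that backpropagation adjusts to reduce the output error.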

(4) Establishment of CNN Models

CNN is a type of neural network for deep supervised learning in the field of machine learning [43,44]. It can imitate the signal processing of the visual neural system to learn deep features. Via parameter sharing and sparse connections, it solves the problem of having many parameters in traditional neural networks, which on the one hand is conducive to optimizing the network and on the other hand simplifies the model’s structure and reduces the risk of overfitting [44].

CNN generally includes an input layer, convolutional layer, pooling layer, fully connected layer and objective function. The training process of CNN is divided into two stages: forward propagation and backpropagation. In the forward propagation process, different modules, such as the convolution layer and pooling layer, are superimposed to gradually learn features from the input data and transfer them to the final objective function. In the backpropagation process, the objective function measures the difference between the real value and the predicted value, and this error is used to update the weights and biases.

The input layer is the raw data transmitted into the entire neural network. The convolution layer is the core of the entire convolutional neural network. The network realizes the dimensionality reduction and feature extraction of data via the operation of the convolution layer. The calculation formula of the convolution layer is shown in (14) [44]:

$$x_j^{l} = f\left( \sum_{i \in M_j} x_i^{l-1} * k_{ij}^{l} + b_j^{l} \right) \tag{14}$$

where $x_j^{l}$ is the feature mapping of the current layer, $f$ is the nonlinear activation function, $x_i^{l-1}$ is the mapping of the upper layer features, $M_j$ is the set of feature mappings, $k_{ij}^{l}$ is the weight value corresponding to the filter at $(i, j)$ in layer $l$, and $b_j^{l}$ is the bias.

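The nonlinear activation function processes the linear output of the upper layer and can enhance the characterization ability of the model. The commonly used activation functions in the activation layer are ReLU, tanh and sigmoid, which are calculated by Equations (15)–(17) [44], respectively.

$$\mathrm{ReLU}(x) = \max(0, x) \tag{15}$$

$$\tanh(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}} \tag{16}$$

$$\mathrm{sigmoid}(x) = \frac{1}{1 + e^{-x}} \tag{17}$$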

The pooling layer, also known as the downsampling or subsampling layer, can effectively reduce the amount of computation, improve the network’s efficiency, enhance the generalization ability and prevent overfitting. Commonly used pooling layers include maximum pooling and average pooling, and their calculation formulas are (18) and (19) [45], respectively:

$$p_{\max} = \max_{i \in R_j} a_i \tag{18}$$

$$p_{\mathrm{avg}} = \frac{1}{|R_j|} \sum_{i \in R_j} a_i \tag{19}$$

where $p_{\max}$ denotes max pooling, i.e., the maximum value of the pooled domain; $p_{\mathrm{avg}}$ denotes average pooling, i.e., the average value of the pooled domain; $a_i$ is the activation value of the pooled domain; $R_j$ is the corresponding domain on the feature map.
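The convolution, activation and pooling steps described above can be illustrated on a single-channel 1D signal. This is a minimal sketch with hypothetical function names and an arbitrary kernel; it is not the six-layer network used in the study. As in most CNN implementations, the "convolution" is computed as a sliding dot product without flipping the kernel.

```python
def conv1d_relu(signal, kernel, bias=0.0):
    """Valid-mode 1D convolution layer followed by ReLU.

    Each output is max(0, sum_t kernel[t] * signal[start + t] + bias).
    """
    n_out = len(signal) - len(kernel) + 1
    out = []
    for start in range(n_out):
        z = sum(k * signal[start + t] for t, k in enumerate(kernel)) + bias
        out.append(max(0.0, z))
    return out


def pool1d(features, size, mode="max"):
    """Non-overlapping pooling over windows of `size` values."""
    out = []
    for start in range(0, len(features) - size + 1, size):
        region = features[start:start + size]
        out.append(max(region) if mode == "max" else sum(region) / size)
    return out
```

For the signal `[0, 1, 3, 2, 5, 4]` and the kernel `[-1, 0, 1]`, the convolution layer yields `[3, 1, 2, 2]`; max pooling with size 2 then gives `[3, 2]`, while average pooling gives `[2.0, 2.0]`.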

The full connection layer combines the features transmitted at the lower layers, such as convolution and pooling, in the network to obtain high-level features and complete the classification task.

The objective function, also called the cost function or loss function, is the commander in chief of the entire convolutional neural network. The objective function generally includes the cost function and regularization. The backpropagation of the network is performed using the errors generated by the real values and predicted values of the samples to learn and adjust the network parameters.

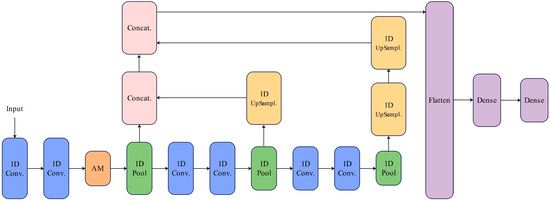

The 1D CNN model set up in this study contains 6 convolutional layers and 3 pooling layers; finally, the softmax classifier is used to complete the recognition. Considering that the 1D CNN can only extract the spectral features of a single layer, there is a loss of detailed information; thus, the 1D CNN model is improved. By upsampling and merging pooling layers of different dimensions, multiscale features are fused, and an attention mechanism (AM) is introduced to redistribute the weight of different layers to obtain more spectral information features, thus improving the recognition performance of the 1D CNN. The improved network structure is shown in Figure 4.

Figure 4.

Diagram of the structure of the improved 1D CNN model.

(5) Dataset Construction

The input data applied to the model are derived from field-measured data, and the spectral feature parameters + vegetation index, spectral FD and CR data are calculated from the grass species spectra. PCA was carried out on the three sets of data, and the first k components were selected so that the cumulative contribution rate exceeded 90%, yielding the spectral characteristic parameters + vegetation index dataset, the FD dataset and the CR dataset. Each dataset contains 256 samples. The three datasets are input into the four recognition models, and each model automatically shuffles and splits the input dataset, using 70% of the data of each grass species as training samples and 30% as validation samples; that is, a total of 179 labeled samples form the training set and 77 samples, whose labels are withheld from the model, form the validation set. The validation set is used to validate and compare the performance of the individual models in identifying unknown spectral data.
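A per-species 70/30 split of this kind can be sketched as follows. The function name `stratified_split`, the fixed random seed and the rounding rule for each species are assumptions; the per-species sample counts of the study are not given in the text.

```python
import random
from collections import defaultdict


def stratified_split(labels, train_fraction=0.7, seed=0):
    """Shuffle the samples of each class and split them by train_fraction.

    Returns two lists of sample indices: training and validation.
    """
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    rng = random.Random(seed)
    train, validation = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        cut = round(train_fraction * len(indices))
        train.extend(indices[:cut])
        validation.extend(indices[cut:])
    return train, validation
```

Splitting within each species, rather than over the pooled samples, keeps every species represented in both sets in roughly the same proportion.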

(6) Accuracy Assessment

The overall accuracy (OA), producer’s accuracy (PA) and average accuracy (AA) are used to evaluate the recognition accuracy of each model [9].

The OA is calculated by Equation (20); it is the ratio of the number of samples correctly classified by the model to the total number of samples. It characterizes the recognition performance of the classifier as a whole and reflects the overall error of the classification results. However, it is strongly affected by the sample distribution: when the distribution is unbalanced and one category has far more samples than the others, that category dominates the OA.

The PA is calculated by Equation (21). It is the ratio of the number of correctly classified samples in each category to the total number of samples in that category, that is, the probability that a random validation sample of that category is assigned its real category in the classification result. It reflects the accuracy for each individual category.

The AA is given by Equation (22). It is the mean of the per-category classification accuracies, so every category receives the same degree of attention regardless of its sample count.
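
The three measures can be computed from a confusion matrix as sketched below. The sketch follows the verbal definitions above; the function name and the three-class matrix are hypothetical and are not taken from the study's results.

```python
def accuracy_metrics(confusion):
    """Compute OA, per-class PA and AA from a square confusion matrix.

    confusion[i][j] counts validation samples of true class i predicted as class j.
    """
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    overall_accuracy = correct / total
    producers_accuracy = [row[i] / sum(row) for i, row in enumerate(confusion)]
    average_accuracy = sum(producers_accuracy) / len(producers_accuracy)
    return overall_accuracy, producers_accuracy, average_accuracy


# Hypothetical, deliberately unbalanced matrix (rows: true class, columns: predicted class).
confusion = [
    [8, 2, 0],
    [1, 9, 0],
    [0, 0, 20],
]
oa, pa, aa = accuracy_metrics(confusion)
```

In this example the large, perfectly classified third class lifts the OA (0.925) above the AA (0.9), which illustrates why the OA is sensitive to an unbalanced sample distribution while the AA weights every category equally.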

3. Results

3.1. Ground-Measured Data Feature Analysis

3.1.1. Grass Species Spectra Analysis

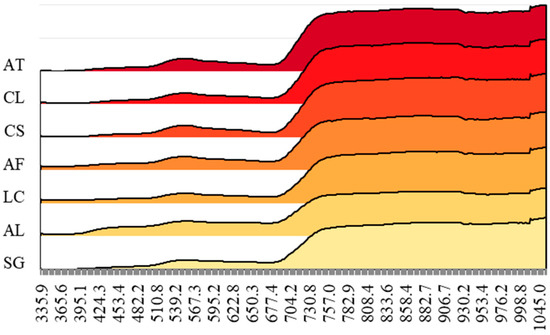

Figure 5 shows the smoothed spectral curves of the grass species. AL has the highest reflectance at the green peak and CL the lowest. At the red valley, the seven grass species rank in the same order of reflectance as at the green peak; the reflectance of AL falls gently toward the red valley, whereas that of the remaining six grass species falls rapidly.

Figure 5. Grass species spectra in the study area.

3.1.2. Spectral Derivative Feature Analysis

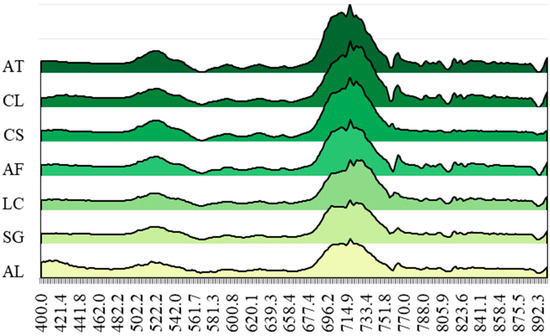

Figure 6 shows the FD spectral curves of typical grassland species in the study area. The first, smaller peak occurs in the range from 480 to 550 nm, and its size reflects, to a certain extent, the chlorophyll-related reflectance of the vegetation. The FD values differ markedly around this peak: AT has the greatest value, followed by CS, while the values of AL and LC are small. The second, larger peak occurs in the red band range of 680 to 750 nm, which is the strong absorption band of chlorophyll, and the seven grass species differ significantly there. The FD peak of AT is the largest, followed by CL, and the peaks of SG and AL are small. Between the two peaks, the FD values of the seven grass species fluctuate little and follow a roughly similar trend; AL maintains a larger FD value than the other grass species, while CS and AT show lower values.

Figure 6. First-order derivative spectra of measured spectral data.
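
A first-order derivative spectrum of this kind can be approximated with finite differences. The sketch below assumes central differences on interior bands and one-sided differences at the two ends; the wavelengths and reflectance values are hypothetical, and the study may use a different differencing scheme.

```python
def first_derivative(wavelengths, reflectance):
    """Approximate dR/d(lambda) with central differences (one-sided at the two ends)."""
    n = len(wavelengths)
    derivative = []
    for i in range(n):
        lo = max(i - 1, 0)
        hi = min(i + 1, n - 1)
        derivative.append(
            (reflectance[hi] - reflectance[lo]) / (wavelengths[hi] - wavelengths[lo])
        )
    return derivative


# Hypothetical reflectance rising from the red valley into the red edge.
wavelengths = [670, 680, 690, 700, 710]
reflectance = [0.05, 0.04, 0.08, 0.20, 0.35]
fd = first_derivative(wavelengths, reflectance)
```

The derivative is negative while the reflectance is still falling toward the red valley and becomes strongly positive along the red edge, which is the behavior behind the second peak in Figure 6.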

3.1.3. Continuum Removal Analysis

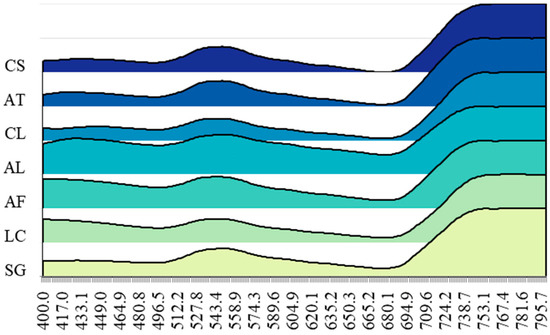

Figure 7 shows the CR curves of the measured spectral data in the visible range. The grass species differ significantly in the range from 400 to 800 nm; the CR value of AL is significantly higher than that of the other grass species, followed by AF. There is a peak at approximately 550 nm (the green reflection peak in the raw spectrum), where the difference between AL and CL is the most pronounced and the difference between AT and CS is smaller. At approximately 680 nm, the curves reach a trough, after which the CR value increases rapidly. This trough corresponds to the red valley in the grass species spectral data, where the difference between AL and CS is clear, while the difference between CL and AT, SG and AF is very weak.

Figure 7. Continuum removal spectra of measured spectral data.
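
Continuum removal is commonly implemented by dividing each reflectance value by the upper convex hull of the spectrum. The sketch below takes that common definition as an assumption; it expects strictly increasing wavelengths and positive reflectance, and the sample spectrum is hypothetical.

```python
def continuum_removed(wavelengths, reflectance):
    """Divide a spectrum by its upper convex hull (the continuum)."""
    hull = []  # indices of the hull vertices, from left to right
    for i in range(len(wavelengths)):
        while len(hull) >= 2:
            a, b = hull[-2], hull[-1]
            cross = (
                (wavelengths[b] - wavelengths[a]) * (reflectance[i] - reflectance[a])
                - (reflectance[b] - reflectance[a]) * (wavelengths[i] - wavelengths[a])
            )
            if cross >= 0:  # vertex b lies on or below the segment from a to i
                hull.pop()
            else:
                break
        hull.append(i)

    result = []
    segment = 0
    for w, r in zip(wavelengths, reflectance):
        # Advance to the hull segment that contains this wavelength.
        while wavelengths[hull[segment + 1]] < w:
            segment += 1
        a, b = hull[segment], hull[segment + 1]
        t = (w - wavelengths[a]) / (wavelengths[b] - wavelengths[a])
        continuum = reflectance[a] + t * (reflectance[b] - reflectance[a])
        result.append(r / continuum)
    return result


# Hypothetical spectrum with an absorption trough near 680 nm.
wavelengths = [550, 600, 650, 680, 720, 760]
reflectance = [0.10, 0.08, 0.05, 0.03, 0.20, 0.40]
cr = continuum_removed(wavelengths, reflectance)
```

The result equals 1 at the hull vertices and drops below 1 inside absorption features, so the depth of the trough near 680 nm becomes directly comparable between spectra with different overall brightness.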

3.1.4. Spectral Characteristic Parameters

From the spectral characteristic parameters in Table 3, the blue-edge position (Eb) differs little among the grass species. The red-edge position (Er) shows a certain gap: AF, AL and CS lie at shorter wavelengths, and SG, LC, CL and AT at relatively longer wavelengths. The yellow-edge position (Ey) shows a clearer difference: AL lies at the shortest wavelength; SG, LC and CS are in the center; AF, CL and AT lie at longer wavelengths. For the three-edge amplitudes, the red-edge amplitude (Erm) differs more among the grass species than the blue-edge amplitude (Ebm) and the yellow-edge amplitude (Eym), with AT and CL being larger and AL being the smallest, consistent with the red-edge FD peaks in Figure 6. The three-edge area parameters behave similarly to the amplitudes: the red-edge area (Era) shows the most significant differences, and its pattern among the grass species resembles that of the amplitude. It can be concluded that the differences between grass species are, in general, most evident in the spectral characteristic parameters of the red edge.

Table 3. List of spectral characteristic parameters of grass species.

It can be seen from the seven spectral feature indices that the Pgm/Vrm value, the Pgm-Vrm normalized value and the Pgm-Vrm value vary similarly across the grass species, with AL the lowest and CS the greatest. The Era/Eba value and the Er-Eb normalized value vary consistently, with AL the lowest and LC the greatest. The Era/Eya value and the Er-Ey normalized value also vary consistently, with AL the greatest and CS the lowest.
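
The position, amplitude and area of an edge can be read from a first-order derivative spectrum as sketched below. The definitions used here are assumptions: the position is taken as the wavelength of the maximum derivative within the window, the amplitude as that maximum, and the area as the plain sum of the derivative values in the window. The 680 to 750 nm window follows the red range mentioned in Section 3.1.2, and the derivative values are hypothetical.

```python
def edge_parameters(wavelengths, derivative, lower, upper):
    """Return (position, amplitude, area) of a derivative spectrum within [lower, upper] nm."""
    window = [(w, d) for w, d in zip(wavelengths, derivative) if lower <= w <= upper]
    position, amplitude = max(window, key=lambda pair: pair[1])
    area = sum(d for _, d in window)  # assumption: area as a plain sum of derivative values
    return position, amplitude, area


# Hypothetical derivative spectrum around the red edge.
wavelengths = [670, 680, 690, 700, 710, 720, 730, 740, 750, 760]
derivative = [-0.001, 0.001, 0.004, 0.009, 0.013, 0.011, 0.007, 0.003, 0.001, 0.000]
er, erm, era = edge_parameters(wavelengths, derivative, 680, 750)
```

Calling the same function with a blue or yellow window would give the corresponding parameters, provided those edges are defined in the same way.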

3.1.5. Vegetation Index

As shown in Table 4, the vegetation indices of each grass species are calculated from their mean spectra. The grass species rank in essentially the same order on all six vegetation indices: CS, CL and LC always have high values, SG, AF and AT remain in the middle and AL remains the lowest. The RVI values of all grass species are substantially greater than the values of the other vegetation indices, which makes the differences among the seven typical grass species more pronounced in the RVI.

Table 4. List of vegetation indices of grass species.
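
The larger magnitude of the RVI follows from how the index is defined. The sketch below uses the standard definitions of the RVI and the NDVI; treating the NDVI as one of the six indices is an assumption, and the band reflectances are hypothetical.

```python
def rvi(nir, red):
    """Ratio vegetation index: near-infrared reflectance divided by red reflectance."""
    return nir / red


def ndvi(nir, red):
    """Normalized difference vegetation index, bounded between -1 and 1."""
    return (nir - red) / (nir + red)


# Hypothetical mean reflectance of a green canopy in the near-infrared and red bands.
nir, red = 0.45, 0.05
ratio_index = rvi(nir, red)
normalized_index = ndvi(nir, red)
```

Because the RVI is an unbounded ratio while the NDVI is confined to the interval from -1 to 1, the same pair of reflectances gives an RVI of about 9 and an NDVI of about 0.8. The two are linked by RVI = (1 + NDVI) / (1 - NDVI), so small NDVI differences near 1 are stretched into large RVI differences.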

3.2. Grassland Species Identification Based on Ground-Measured Spectral Data

3.2.1. Feature Extraction Results and Analysis

The dimensionality reduction results in Table 5 were obtained by applying PCA to the grass species spectra (GSS) data, first-order derivative (FD) data and continuum removal (CR) data and then considering the eigenvalues and cumulative percentages of the principal components. The leading principal components whose cumulative contribution exceeds 90% are selected; that is, the selected components represent more than 90% of the spectral data information. The top two principal components are required for the GSS data, the top five for the FD data and the top three for the CR data. This shows that the FD and CR transformations enhance the characteristics of the raw data and enrich its information content. At the same time, PCA is computationally efficient on the research data, has good applicability and information retention ability and achieves good dimensionality reduction, so that the spectral information is expressed more compactly and provides more effective features for the identification of grassland plant species.

Table 5. Principal component analysis of the grass species spectra data, FD data and CR data.

3.2.2. Identification Results and Accuracy Analysis

The five models (RF, SVM, BP neural network, CNN and improved CNN) were tested on the three input datasets built from the ground-measured spectral data: spectral characteristic parameters + vegetation index, FD and CR. Table 6, Table 7 and Table 8 show the recognition results of the models on each dataset.

Table 6. Recognition results of the models on the spectral characteristic parameters + vegetation index dataset.

Table 7. Recognition results of the models on the FD dataset.

Table 8. Recognition results of the models on the CR dataset.

For the spectral characteristic parameters + vegetation index dataset, the SVM model had the lowest OA, 68.83%; its highest recognition accuracy was 98.7% for AL, followed by AF, SG, CL, AT and LC, and its lowest was 50% for CS. The OA of the RF model was 69.28%, with the highest recognition accuracy of 91.67% for AF and the lowest of 57.04% for CS. The OA of the BP neural network model was 83.12%; its recognition accuracy for AF, CL and CS was 98.7%, but it achieved only 59.34% for LC. The OA of the CNN model was 76.62%; its recognition accuracy for AL reached 98.7%, but LC was the worst at 57.15%. The improved CNN model had the highest OA, 90.91%, and its recognition ability was relatively balanced across the grass species, with an AA of 91.23%.

For the FD dataset, the RF model had the lowest OA, 70.42%; its highest recognition accuracy was 88.89% for AL and its lowest was 57.15% for CS. The SVM model reached its highest accuracy, 98.7%, for AF and its lowest, 50%, for SG. The BP neural network model reached 98.7% for AL, CL and AT, and its lowest accuracy was 66.67% for CS. The OA of the CNN model improved, but its lowest recognition accuracy was 58.33% for CS. The improved CNN model had the highest OA, 97.37%, and its recognition accuracy reached 98.7% for SG, AF, AL, CL and AT.

For the CR dataset, the RF model achieved a recognition accuracy of 92.86% for AF, but its OA of 79.12% was still the lowest. The SVM model achieved 98.7% for AF, but its OA was only 79.22%. The OAs of the BP neural network model and the CNN model improved significantly, to 96.10% and 91.78%, respectively. The OA of the improved CNN model rose to 98.70%, and its accuracy for every grass species except CS reached 98.7%, giving better recognition results.

From the above results, the RF model recognizes AF well in all three datasets, with an accuracy above 80% in each, but its recognition of CS is poor. The SVM model has its highest recognition accuracies for AL and AF in the three datasets, both above 85%, but its accuracy is lower for SG and CS. The BP neural network model is best at recognizing CL, with 98.7% on all three datasets, and is less effective for LC. The CNN model maintains a recognition accuracy of 98.7% for AL, and the improved CNN model a stable 98.7% for AF and CL, but all of the models need to improve their recognition accuracy for CS.

4. Discussion

Comparing the classification results of the same model on different datasets shows that the differences in spectral features among the grass species of typical grasslands do not appear only at individual special locations. Further processing of the grass species spectral data with mathematical transformations enhances the spectral characteristics, amplifies the spectral differences among grass species and improves their separability [21]. Spectral differential transformations can eliminate some atmospheric effects and suppress the interference of vegetation backgrounds, such as soil and shadow [29]. The continuum removal (CR) transformation can eliminate noise and enhance the spectral absorption and reflection characteristics of ground objects [18]. Compared with the spectral characteristic parameters + vegetation index dataset, the spectral differential dataset and the CR dataset constructed in the experiment provide, after PCA, an improved combination of data for identifying the grass species. Therefore, the spectral transformation methods can effectively strengthen the stability of the models and improve the identification accuracy of each model.

In this experiment, different mathematical transformations are used to change the form of the spectral data. The transformed FD data (Figure 6) and CR data (Figure 7) differ greatly between grass species, which enhances the spectral separability of the grass species and can be used effectively to identify them [18]. Comparing the identification results on the spectral differential dataset and the CR dataset shows that spectral transformation methods differ in their ability to handle noise and to extract the original feature information, and hence in how much they enhance model performance. For example, with the improved CNN model, the differential transformation of the spectral data yields an AA of 97.68%, while the CR transformation yields an AA of 98.81%. This indicates that CR makes the data more applicable and distinguishable than the first-order derivative transformation and can further improve the recognition accuracy.

Although the improved CNN model had a lower PA than the BP neural network model for individual grass species on the spectral characteristic parameters + vegetation index dataset, it achieved the highest OA and AA and the best recognition accuracy overall. The original CNN has difficulty exploiting its recognition strengths because of the small data volume, low dimensionality and limited samples of the measured spectra, but its recognition performance improved greatly after modification. Therefore, for the analysis of the measured spectral data, the improved CNN model of this experiment showed more significant grass species recognition performance and had certain recognition advantages.

The large differences in the recognition results of the same model for different input samples arise because the recognition process of a neural network is similar to a black-box simulation between sample inputs and outputs, and the learning process and memory of the network are unstable and closely tied to the training samples [26]. In other words, for a different set of learning samples, the neural network must be trained again from the start, and it retains no memory of the previous learning process. Its training and recognition can therefore only capture the internal laws implied in the current sample data and apply only to that batch of samples. However, neural networks have an important position in the field of spectral analysis due to their powerful self-learning, self-organization and self-adaptive abilities and massive parallel processing capabilities [24], which make them an important classification method for typical grassland plant species identification based on ground spectral data.

In this study, we used ground spectral data to identify plant species in typical grasslands in the study area with RF, SVM and neural network algorithms and investigated how to improve the recognition accuracy of typical grassland plant species based on ground spectral data. However, due to the limitation of the sample distribution, the study area covers only temperate typical grasslands, and no large-scale identification of other grassland species in Inner Mongolia has been performed. The next step is to carry out well-designed large-scale field experiments, obtain more ground observation data, conduct more extensive and in-depth analysis and provide an important scientific reference for grassland degradation monitoring and management.

5. Conclusions

In this study, constructive species and degraded grass species of the typical temperate grassland in Inner Mongolia were identified based on measured spectra. Field-measured data were used to extract and analyze the parametric characteristics of the grass species’ spectral data; to establish the RF model, SVM model, BP neural network model, CNN model and improved CNN model; to complete grass species recognition based on the ground spectra; and to determine a high-precision recognition method based on hyperspectral remote sensing data, laying a foundation for related research.

- In the recognition results based on ground-measured spectral data, for the spectral characteristic parameters + vegetation index dataset, the improved CNN model had the highest OA, 90.91%, and its recognition ability was more balanced across the grass species, with an average accuracy of 91.23%; for the FD dataset, the OA of the improved CNN model was the highest at 97.37%, and its recognition accuracy for SG, AF, AL, CL and AT was 98.7%; for the CR dataset, the OA of the improved CNN model increased to 98.70%, and the accuracies of all grass species except CS reached 98.7%.

- For each input dataset of the ground-measured spectral data, the OA of the RF model and SVM model is low, and the recognition result of the CNN model is generally worse than that of the BP neural network model, but its recognition accuracy for AL is higher, while the BP neural network model is better for the recognition of CL. The OA and AA of the improved CNN model are the highest, and the recognition accuracy is the best overall.

- Neural networks have an important position in the field of spectral analysis due to their powerful self-learning, self-organizing and self-adaptive capabilities and massive parallel processing abilities, and they are important classification methods for typical grassland plant species recognition based on ground truth spectral data. The improved CNN model in this study showed more significant grass species recognition performance and has certain recognition advantages.

Author Contributions

Conceptualization, H.L. and H.W.; funding acquisition, H.W.; methodology, H.L.; supervision, H.W. and X.L.; visualization, J.L., X.Z., J.S. and D.L.; writing—original draft, T.Q., Y.Z., Y.L. and Y.Y.; writing—review and editing, H.L., H.W., X.L. and T.Q. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Key Science and Technology Project of Inner Mongolia (2021ZD0011), the Key Science and Technology Project of Inner Mongolia (2021ZD0015) and the National Key Research and Development Program of China (2022YFF13034).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors would like to thank the National Aeronautics and Space Administration (NASA) for providing NASADEM Data at 30 m.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Manlike, A.; Sawut, R.; Zheng, F.; Li, X.; Abudukelimu, R. Monitoring and Analysing Grassland Ecosystem Service Values in Response to Grassland Area Changes—An Example from Northwest China. Rangel. J. 2020, 42, 179.

2. Kandwal, R.; Jeganathan, C.; Tolpekin, V.; Kushwaha, S.P.S. Discriminating the Invasive Species, ‘Lantana’ Using Vegetation Indices. J. Indian Soc. Remote Sens. 2009, 37, 275–290.

3. Carlier, L.; Rotar, I.; Vlahova, M.; Vidican, R. Importance and Functions of Grasslands. Not. Bot. Horti Agrobot. Cluj-Napoca 2009, 37, 25–30.

4. Thornton, P.K.; Gerber, P.J. Climate Change and the Growth of the Livestock Sector in Developing Countries. Mitig. Adapt. Strat. Glob. Change 2010, 15, 169–184.

5. Zhang, R.; Liang, T.; Guo, J.; Xie, H.; Feng, Q.; Aimaiti, Y. Grassland Dynamics in Response to Climate Change and Human Activities in Xinjiang from 2000 to 2014. Sci. Rep. 2018, 8, 2888.

6. Zheng, K.; Wei, J.; Pei, J.; Cheng, H.; Zhang, X.; Huang, F.; Li, F.; Ye, J. Impacts of Climate Change and Human Activities on Grassland Vegetation Variation in the Chinese Loess Plateau. Sci. Total Environ. 2019, 660, 236–244.

7. Yang, W.; Wang, Y.; He, C.; Tan, X.; Han, Z. Soil Water Content and Temperature Dynamics under Grassland Degradation: A Multi-Depth Continuous Measurement from the Agricultural Pastoral Ecotone in Northwest China. Sustainability 2019, 11, 4188.

8. Mansour, K.; Mutanga, O.; Adam, E.; Abdel-Rahman, E.M. Multispectral Remote Sensing for Mapping Grassland Degradation Using the Key Indicators of Grass Species and Edaphic Factors. Geocarto Int. 2016, 31, 477–491.

9. Marcinkowska-Ochtyra, A.; Jarocińska, A.; Bzdęga, K.; Tokarska-Guzik, B. Classification of Expansive Grassland Species in Different Growth Stages Based on Hyperspectral and LiDAR Data. Remote Sens. 2018, 10, 2019.

10. Kutser, T.; Jupp, D.L.B. On the Possibility of Mapping Living Corals to the Species Level Based on Their Optical Signatures. Estuar. Coastal Shelf Sci. 2006, 69, 607–614.

11. Zeng, S.; Kuang, R.; Xiao, Y.; Zhao, Z. Measured Hyperspectral Data Classification of Poyang Lake Wetland Vegetation. Remote Sens. Inf. 2017, 32, 75–81.

12. Senay, G.B.; Elliott, R.L. Capability of AVHRR Data in Discriminating Rangeland Cover Mixtures. Int. J. Remote Sens. 2002, 23, 299–312.

13. Rajapakse, S.S.; Khanna, S.; Andrew, M.E.; Ustin, S.L.; Lay, M. Identifying and Classifying Water Hyacinth (Eichhornia Crassipes) Using the Hymap Sensor. In Proceedings of the Remote Sensing and Modeling of Ecosystems for Sustainability III, San Diego, CA, USA, 31 August 2006.

14. Elhadi, M.I.A.; Mutanga, O.; Rugege, D.; Ismail, R. Field Spectrometry of Papyrus Vegetation (Cyperus papyrus L.) in Swamp Wetlands of St Lucia, South Africa. In Proceedings of the 2009 IEEE International Geoscience and Remote Sensing Symposium, Cape Town, South Africa, 12 July 2009.

15. Haq, M.A. CNN Based Automated Weed Detection System Using UAV Imagery. Comput. Syst. Sci. Eng. 2022, 42, 837–849.

16. Wang, L.; Sousa, W.P. Distinguishing Mangrove Species with Laboratory Measurements of Hyperspectral Leaf Reflectance. Int. J. Remote Sens. 2009, 30, 1267–1281.

17. Apan, A.; Phinn, S.; Maraseni, T. Discrimination of Remnant Tree Species and Regeneration Stages in Queensland, Australia Using Hyperspectral Imagery. In Proceedings of the 2009 First Workshop on Hyperspectral Image and Signal Processing: Evolution in Remote Sensing, Grenoble, France, 26 August 2009.

18. Li, X.; Wang, H.; Li, X.; Tang, Z.; Liu, H. Identifying Degraded Grass Species in Inner Mongolia Based on Measured Hyperspectral Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 12, 5061–5075.

19. Zomer, R.J.; Trabucco, A.; Ustin, S.L. Building Spectral Libraries for Wetlands Land Cover Classification and Hyperspectral Remote Sensing. J. Environ. Manag. 2009, 90, 2170–2177.

20. Yang, H.; Li, J.; Mu, S.; Yang, Q.; Hu, X.; Jin, G.; Zhao, W. Analysis of Hyperspectral Reflectance Characteristics of Three Main Grassland Types in Xinjiang. Acta. Pratacult. Sin. 2012, 21, 258–266.

21. Yamano, H.; Chen, J.; Tamura, M. Hyperspectral Identification of Grassland Vegetation in Xilinhot, Inner Mongolia, China. Int. J. Remote Sens. 2003, 24, 3171–3178.

22. An, R.; Jiang, D.; Li, X.; Quaye-Ballard, J.A. Using Hyperspectral Data to Determine Spectral Characteristics of Grassland Vegetation in Central and Eastern Parts of Three-River Source. Remote Sens. Technol. Appl. 2014, 29, 202–211.

23. He, L.; An, S.; Jin, G.; Fan, Y.; Zhang, T. Analysis on High Spectral Characteristics of Degraded Seriphidium Transiliense Desert Grassland. Acta. Agrestia Sin. 2014, 22, 271–276.

24. Zhang, F.; Huang, M.; Zhang, J.; Bao, G.; Bao, Y. Identification of Grass Species Based on Hyperspectrum-a Case Study of Xilin Gol Grassland. Bull. Surv. Map. 2014, 7, 66–69.

25. Li, Y.; Wang, J.; Gu, B.; Meng, G. Artificial Neural Network and Its Application to Analytical Chemistry. Spectrosc. Spectr. Anal. 1999, 19, 844–849.

26. Zhang, F.; Yin, Q.; Kuang, D.; Li, F.; Zhou, B. Optimal Temporal Selection for Grassland Spectrum Classification. J. Remote Sens. 2006, 10, 482–488.

27. Zhang, X.S. Vegetation Map of The People’s Republic of China (1:1 000 000 000); Geology Press: Beijing, China, 2007.

28. Rock, B.N.; Williams, D.L.; Moss, D.M.; Lauten, G.N.; Kim, M. High-Spectral Resolution Field and Laboratory Optical Reflectance Measurements of Red Spruce and Eastern Hemlock Needles and Branches. Remote Sens. Environ. 1994, 47, 176–189.

29. Shafri, H.Z.M.; Anuar, M.I.; Seman, I.A.; Noor, N.M. Spectral Discrimination of Healthy and Ganoderma-Infected Oil Palms from Hyperspectral Data. Int. J. Remote Sens. 2011, 32, 7111–7129.

30. Zhang, C.; Cai, H.J.; Li, Z.J. Estimation of Fraction of Absorbed Photosynthetically Active Radiation for Winter Wheat Based on Hyperspectral Characteristic Parameters. Spectrosc. Spect. Anal. 2015, 35, 2644–2649.

31. Zheng, J.J.; Li, F.; Du, X. Using Red Edge Position Shift to Monitor Grassland Grazing Intensity in Inner Mongolia. J. Indian Soc. Remote 2017, 46, 81–88.

32. Blackburn, G.A. Hyperspectral Remote Sensing of Plant Pigments. J. Exp. Bot. 2007, 58, 855–867.

33. Horler, D.N.H.; Barber, J.; Darch, J.P.; Ferns, D.C.; Barringer, A.R. Approaches to Detection of Geochemical Stress in Vegetation. Adv. Space Res. 1983, 3, 175–179.

34. Rouse, J.W.J.; Haas, R.H.; Deering, D.W.; Schell, J.A.; Harlan, J.C. Monitoring Vegetation Systems in the Great Plains with ERTS. In Proceedings of the Third Earth Resources Technology Satellite-1, Greenbelt, MD, USA, 10–14 December 1973.

35. Jordan, C.F. Derivation of Leaf-Area Index from Quality of Light on the Forest Floor. Ecology 1969, 50, 663–666.

36. Richardson, A.J.; Wiegand, C.L. Distinguishing Vegetation from Soil Background Information. Photogramm. Eng. Remote Sens. 1977, 43, 1541–1552.

37. Qi, A.; Chehbouni, A.; Huete, A.R.; Kerr, Y.H.; Sorooshian, S. A Modified Soil Adjusted Vegetation Index. Remote Sens. Environ. 1994, 48, 119–126.

38. Gitelson, A.A.; Kaufman, Y.J.; Stark, R.; Rundquist, D. Novel Algorithms for Remote Estimation of Vegetation Fraction. Remote Sens. Environ. 2002, 80, 76–87.

39. Roujean, J.L.; Breon, F.M. Estimating PAR Absorbed by Vegetation from Bidirectional Reflectance Measurements. Remote Sens. Environ. 1995, 51, 375–384.

40. Singh, L.; Mutanga, O.; Mafongoya, P.; Peerbhay, K. Remote Sensing of Key Grassland Nutrients Using Hyperspectral Techniques in Kwazulu-Natal, South Africa. J. Appl. Remote Sens. 2017, 11, 036005.

41. Mack, B.; Waske, B. In-Depth Comparisons of Maxent, Biased SVM and One-Class SVM for One-Class Classification of Remote Sensing Data. Remote Sens. Lett. 2017, 8, 290–299.

42. Wang, H.; Li, X.; Long, H.; Qiao, Y.; Li, Y. Development and Application of a Simulation Model for Changes in Land-Use Patterns under Drought Scenarios. Comput. Geosci. 2011, 37, 831–843.

43. Jia, K.; Li, Q.; Tian, Y.; Wu, B. A Review of Classification Methods of Remote Sensing Imagery. Spectrosc. Spectral Anal. 2011, 31, 2618–2623.

44. Chen, Y.; Jiang, H.; Li, C.; Jia, X.; Ghamisi, P. Deep Feature Extraction and Classification of Hyperspectral Images Based on Convolutional Neural Networks. IEEE Trans. Geosci. Remote Sens. 2016, 54, 6232–6251.

45. Yu, D.; Wang, H.; Chen, P.; Wei, Z. Mixed Pooling for Convolutional Neural Networks. In International Conference on Rough Sets and Knowledge Technology; Springer: Cham, Switzerland, 2014; Volume 8818, pp. 364–375.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).