Abstract

Background/Objective: The growing volume and complexity of cases presented to emergency departments underline the urgent need for effective clinical-risk-management strategies. Increasing demands for quality and safety in healthcare highlight the importance of predictive tools in supporting timely and informed clinical decision-making. This study aims to evaluate the performance and usefulness of predictive models for managing the clinical risk of people who visit the emergency department. Methods: A systematic review was conducted, including primary observational studies involving people aged 18 and over, who were not pregnant, and who had visited the emergency department; the intervention was clinical-risk management in emergency departments; the comparison was of early warning scores; and the outcomes were predictive models. Searches were performed on 10 November 2024 across eight electronic databases without date restrictions, and studies published in English, Portuguese, and Spanish were eligible. Risk of bias was assessed using the Checklist for Critical Appraisal and Data Extraction for Systematic Reviews of Prediction Modeling Studies as well as the Prediction Model Risk-of-Bias Assessment Tool. The results were synthesized narratively and are summarized in a table. Results: Four studies were included, with sample sizes ranging from 4388 to 448,972 participants. The predictive models identified included the Older Persons' Emergency Risk Assessment score; a new situation awareness model; machine learning and deep learning models; and the Vital-Sign Scoring system. The main outcomes evaluated were in-hospital mortality and clinical deterioration. Conclusions: Despite the limited number of studies, our results indicate that predictive models have potential for managing the clinical risk of emergency department patients, with the risk-of-bias assessment indicating low concern. We conclude that integrating predictive models with artificial intelligence can improve clinical decision-making and patient safety.

1. Introduction

The purpose of emergency departments (EDs) is to provide care to people at clinical risk of experiencing complex disease processes and/or organ failure [,,,,,].

Emergency departments require transdisciplinary approaches [,,,,,] that combine diverse fields of expertise [,] to anticipate clinical risk and standardize assessment processes [,,,,,].

Risk stratification [,,,,,,,], supported by predictive models, can improve the anticipation of patient needs and enhance their safety and quality of care [,,,,,,,,,].

These approaches are aligned with current evidence highlighting the importance of proactive risk management in acute care settings [,,,,,].

A predictive model can support emergency professionals in systematizing decisions and organizing care in transdisciplinary teams [,,,,,,,,,,,,,,,].

Predictive models, typically grounded in algorithmic frameworks derived from machine learning or statistical methodologies, are designed to estimate risk and thereby inform decision-making processes in EDs [,,,,,].

Machine learning approaches, which include supervised and unsupervised techniques such as logistic regression, decision trees, ensemble learning, and neural networks, enable the identification of complex patterns in large clinical datasets. These models have shown increasing promise in predicting outcomes such as hospital admission, intensive care needs, and mortality, complementing or outperforming traditional scoring systems []. Their integration into emergency care may facilitate earlier recognition of deterioration and more targeted interventions.

The objective of this review is to evaluate the performance and usefulness of predictive models for managing the clinical risk of people who visit the ED.

2. Materials and Methods

This systematic review was conducted and reported according to the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) [,] (Appendix A). We performed a systematic review on the use of predictive models for managing the clinical risk of ED patients.

Registration and protocol: This review was registered in PROSPERO under the name “Predictive model for managing the clinical risk of ED patients: A systematic review” with registration number CRD42024556926. No separate protocol was prepared, and no amendments have been made to the information provided at registration.

2.1. Eligibility Criteria

We aimed to identify all predictive models developed until 10 November 2024 for managing the clinical risk of ED patients. The articles needed to fulfill the following population, intervention, comparison, and outcome criteria to be considered for inclusion (Table 1).

Table 1.

Population, intervention, comparison, outcomes, and study (PICOS) design framework [], as well as year and language criteria.

2.2. Information Sources

The following databases were searched from inception until 10 November 2024: CINAHL® Plus, the Health Technology Assessment Database, MedicLatina, MEDLINE®, PubMed, Scopus, the Cochrane Plus Collection, and Web of Science.

Information sources were restricted to peer-reviewed articles to ensure methodological rigor and reliability of the extracted data, and the gray literature and preprints were excluded.

The selection of electronic databases was informed by guidance from the Cochrane Handbook for Systematic Reviews of Interventions (Chapter 4: Searching for and selecting studies) []. MedicLatina was included due to its coverage of peer-reviewed scientific and medical journals from established Latin American and Spanish-language publishers, thereby enabling comprehensive retrieval of the relevant literature published in Spanish.

2.3. Search Strategy

Among the entry terms, we used the following keywords according to Medical Subject Headings: risk assessment (health risk assessment, risk analysis); risk management; risk adjustment (case-mix adjustment); risk factors; early warning score; emergency service; and hospital (emergency departments). The initial search strategy was developed in PubMed and subsequently adapted to meet the specific syntax and indexing requirements of each included database. The full search strategies for all databases are provided in Appendix B.

2.4. Selection Process

Duplicate articles were removed using Rayyan. Two authors (M.R. and L.M.) independently screened titles and abstracts, and conflicting results were discussed in consensus meetings. After screening the titles and abstracts, the full text of each article was assessed for eligibility by the same authors to decide whether or not they should be included in the systematic review.

2.5. Data Collection Process

The method used to collect data from the included articles followed the guidelines of the Cochrane Handbook for Systematic Reviews of Interventions []. One author (M.R.) collected data from each article, and a random check was conducted by another author (L.M.). This check showed no discrepancies.

2.6. Data Items

The following data were extracted from every included article: study details (authors, publication year, language, country where the study was carried out, study aim/research question, design, recruitment source, inclusion and exclusion criteria, type of allocation, stratification, and sample size); characteristics of participants (age, gender, ethnicity, and multimorbidities); intervention details (intervention content, intervention setting, delivery of intervention, and number of participants assessed at follow-up); comparison/control characteristics (type of control program/intervention); and outcomes (primary and secondary outcomes, where between-group differences, total scores/means, and standard deviations in each group were extracted from the study results; methods of outcome measurement, including blinding procedures; and time of outcome measurements).

2.7. Risk-of-Bias Assessment in Included Studies

Risk of bias in the included studies was assessed with the Checklist for Critical Appraisal and Data Extraction for Systematic Reviews of Prediction Modeling Studies (CHARMS) and the Prediction Model Risk-of-Bias Assessment Tool (PROBAST) []. Two review authors (M.R. and L.M.) independently assessed the risk of bias in the included studies, and disagreements were resolved by consensus.

2.8. Effect Measures

The effect measures that we evaluated for the outcomes of predictive models for clinical-risk management were discrimination and calibration.
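As an illustration of these two effect measures, the following minimal sketch computes discrimination as the AUROC (the probability that a randomly chosen patient with the event receives a higher predicted risk than one without it) and two simple calibration summaries. The function names and the toy data are assumptions introduced for this example only; they are not taken from any of the included studies.

```python
from typing import Sequence


def auroc(y_true: Sequence[int], y_prob: Sequence[float]) -> float:
    """Discrimination: share of (event, non-event) pairs ranked correctly; ties count 0.5."""
    positives = [p for y, p in zip(y_true, y_prob) if y == 1]
    negatives = [p for y, p in zip(y_true, y_prob) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUROC needs at least one event and one non-event")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


def calibration_in_the_large(y_true: Sequence[int], y_prob: Sequence[float]) -> float:
    """Calibration: mean predicted risk minus observed event rate (0 = no systematic bias)."""
    return sum(y_prob) / len(y_prob) - sum(y_true) / len(y_true)


def brier_score(y_true: Sequence[int], y_prob: Sequence[float]) -> float:
    """Mean squared difference between predicted risk and observed outcome (lower is better)."""
    return sum((p - y) ** 2 for y, p in zip(y_true, y_prob)) / len(y_true)


# Hypothetical toy data: 1 = event (e.g., in-hospital death), 0 = no event.
outcomes = [0, 0, 1, 0, 1, 1, 0, 1]
risks = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.5, 0.9]

print(f"AUROC: {auroc(outcomes, risks):.3f}")
print(f"Calibration-in-the-large: {calibration_in_the_large(outcomes, risks):+.4f}")
print(f"Brier score: {brier_score(outcomes, risks):.5f}")
```

A negative calibration-in-the-large value means the model under-predicts risk on average, and a positive value means it over-predicts; a model can discriminate well while still being poorly calibrated, which is why the two measures are reported separately.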

2.9. Synthesis Methods

We visually displayed the results of individual studies and syntheses in a table, which groups the characteristics of the included studies, including the authors, year, sample, objectives, assessment tools, interventions, results, and conclusions.

The method used to synthesize the results and our rationale for choosing this method are reported in the text.

Owing to the anticipated methodological and clinical heterogeneity, a meta-analysis was not performed. Instead, a narrative synthesis was undertaken to address heterogeneity across model types, with particular emphasis on identifying and interpreting inconsistencies in the findings—such as divergent effect directions or substantial variations in effect magnitudes—across the included studies.

2.10. Reporting Bias Assessment

To assess potential reporting biases, selective outcome reporting within studies was evaluated by comparing the reported results with those outlined in the study protocols, where available. Although a formal meta-analysis was not conducted, the potential for publication bias was considered by documenting the presence or absence of non-significant findings and assessing whether studies were prospectively registered (e.g., in clinical trial registries). Any discrepancies between registered protocols and final publications were critically appraised and discussed.

2.11. Certainty Assessment

Confidence in the body of evidence for each outcome was assessed using the GRADE (Grading of Recommendations Assessment, Development, and Evaluation) approach [,,], which evaluates the quality of evidence based on factors such as risk of bias, consistency, directness, precision, and publication bias.

3. Results

3.1. Study Selection

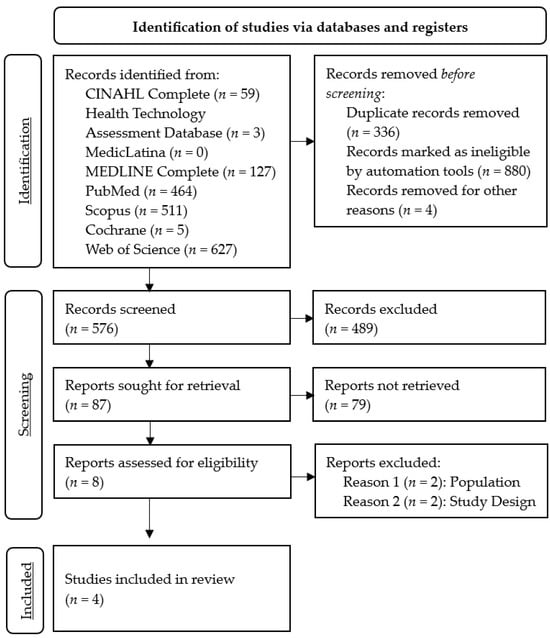

The search process identified 1796 records, of which 576 remained after the removal of records before screening. The exclusion of studies based on titles and abstracts resulted in the retention of eight full-text articles eligible for assessment. Of these, four articles were excluded because the studies did not address the population criteria (n = 2) or the study design criteria (n = 2). Finally, we included four articles in this systematic review, with full details of the study selection summarized in Figure 1.

Figure 1.

PRISMA 2020 flow diagram for systematic review on the use of predictive models for managing the clinical risk of ED patients.
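The counts reported for the selection process can be checked for internal consistency with simple arithmetic. The sketch below is illustrative only: the intermediate totals (records removed before screening and records excluded on title and abstract) are derived here by subtraction and are not stated in the text, and the function and key names are hypothetical.

```python
def prisma_flow() -> dict:
    """Recompute the study-selection flow from the counts reported in Section 3.1."""
    identified = 1796            # records identified by the search
    screened = 576               # records remaining after removal before screening
    full_text_assessed = 8       # full-text articles assessed for eligibility
    excluded_population = 2      # excluded: population criteria not met
    excluded_design = 2          # excluded: study design criteria not met

    # Derived values (implied by subtraction, not reported directly).
    removed_before_screening = identified - screened
    excluded_on_title_abstract = screened - full_text_assessed
    included = full_text_assessed - (excluded_population + excluded_design)

    return {
        "removed_before_screening": removed_before_screening,
        "excluded_on_title_abstract": excluded_on_title_abstract,
        "included": included,
    }


flow = prisma_flow()
for step, count in flow.items():
    print(f"{step}: {count}")
```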

3.2. Study Characteristics

The study characteristics are summarized in Table 2. The four studies utilized different models: the Older Persons' Emergency Risk Assessment (OPERA) score, which is a risk prediction model developed and validated by the authors to predict in-hospital mortality and other outcomes in older adults admitted to the ED []; the new situation awareness (SA) model, which was introduced in an intervention group and consisted of the existing regional early warning score (EWS) system plus five additional subjective parameters (skin observations, dyspnea reported by the patient, pain, clinical intuition or concern, and patients' or relatives' concerns) []; benchmarking ED prediction models, which refer to various machine learning and deep learning models (including logistic regression, random forest, gradient boosting, multilayer perceptron, Med2Vec, and long short-term memory) that were developed and evaluated for predicting three different ED outcomes []; and the Vital-Sign Scoring (VSS) system, which is based on the presence of seven potential vital-sign abnormalities that were used to predict hospital mortality [].

Table 2.

Characteristics of the studies included for systematic review of the use of predictive models for managing the clinical risk of ED patients (n = 4).

Three of the included studies had a prospective design, and all four studies prospectively assessed the performance of the models.

3.3. Risk of Bias in Studies

The results of the risk-of-bias assessment for each included study are presented in Table 3 and Supplementary Materials, for which we used the CHARMS checklist and PROBAST [].

Table 3.

Risk-of-bias and applicability assessments of the development studies.

In [], selection bias may have occurred due to the exclusion of patients without NEWS2 or CFS data, as well as patients with a short length of stay, and there was potential information bias due to missing data, which the authors addressed through multiple imputation. In general, the study design and methods were intended to reduce the risk of bias, but there are still potential sources of bias that could affect the validity of the results.

In [], seasonal variation in the case mix and imbalance in the baseline characteristics between the intervention and control groups may have influenced the results. In addition, there was a lack of fidelity monitoring to ensure that the intervention was implemented as planned.

In [], selection bias due to the single-center nature of the dataset may have limited the generalizability of the results, and measurement bias and residual confounding may have occurred due to the lack of certain potentially important risk factors in the dataset. In addition, bias could have been introduced by the simple imputation method used to handle missing data, which can hide the underlying data structure.

Based on the information provided in [], the primary sources of bias in this study are likely selection bias due to the exclusion of outpatient cases, and information bias due to the need to extract data from patient records in some cases. However, the authors recognize these limitations and provide evidence that they are unlikely to have had a significant impact on the results. Overall, the risk of bias in this study appears to be moderate.

3.4. Results of Individual Studies

The OPERA risk score was derived and validated using data of 8974 and 8391 patients, respectively. The OPERA model included the NEWS2, CFS, acute kidney injury, age, sex, and the Malnutrition Universal Screening Tool (MUST). The OPERA model demonstrated superior performance for predicting in-hospital mortality, with an area under the curve (AUC) of 0.79, compared to NEWS2 (AUC = 0.65) and CFS (AUC = 0.76). The OPERA risk groups were able to predict prolonged hospital stay, with the highest-risk group having an odds ratio of 9.7 for staying more than 30 days. The OPERA model maintained good performance even when excluding the variable requiring a laboratory result (creatinine for acute kidney injury) [].

The new SA model reduced the odds of CD by 21% compared to the control group, but there was no significant impact on mortality, ICU admissions, or readmissions [].
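A 21% reduction in odds corresponds to an odds ratio of 0.79, which is not the same as a 21% reduction in risk. The worked example below makes the conversion explicit; the control-group risk of 0.10 is a hypothetical value chosen purely for illustration and is not reported in the text.

```latex
\begin{align*}
\text{odds} &= \frac{p}{1-p},
\qquad
\mathrm{OR} = \frac{\text{odds}_{\mathrm{SA}}}{\text{odds}_{\mathrm{control}}} = 1 - 0.21 = 0.79 \\[4pt]
p_{\mathrm{SA}} &= \frac{\mathrm{OR}\cdot\text{odds}_{\mathrm{control}}}{1 + \mathrm{OR}\cdot\text{odds}_{\mathrm{control}}} \\[4pt]
\text{odds}_{\mathrm{control}} &= \frac{0.10}{0.90} \approx 0.111
\quad \text{(hypothetical } p_{\mathrm{control}} = 0.10\text{)} \\[4pt]
p_{\mathrm{SA}} &\approx \frac{0.79 \times 0.111}{1 + 0.79 \times 0.111} \approx 0.081
\end{align*}
```

Under this assumed baseline, the absolute risk would fall from 0.100 to about 0.081, a relative risk reduction of roughly 19% rather than 21%. The gap between the odds ratio and the risk ratio widens as the baseline risk increases.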

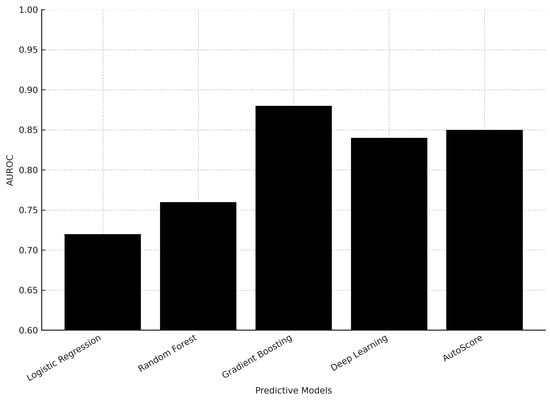

The gradient boosting machine learning model achieved high performance in predicting critical outcomes (Area Under the Receiver Operating Characteristic—AUROC: 0.880) and hospitalization (AUROC: 0.819), but lower performance in predicting 72-h ED reattendance, and deep learning models did not outperform the gradient boosting model. Traditional clinical scoring systems had poor discriminatory performance, but the interpretable AutoScore model achieved better performance in predicting critical outcomes (AUROC 0.846) and hospitalization (AUROC 0.793) using a small number of variables [].

Figure 2 illustrates the comparison of AUROC performance across predictive models, highlighting the superior performance of gradient boosting.

Figure 2.

Comparison of AUROC performance of predictive models for ED risk management.

Most individual vital-sign abnormalities, except seizures and abnormal respiratory rate, were independent predictors of hospital mortality. Higher initial and maximum VSS values were significantly associated with increased hospital mortality. VSS values had a higher predictive power for hospital mortality when collected in the first 15 min after ED admission, compared to the maximum score over the entire stay [].
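The distinction between the initial and the maximum VSS value can be sketched as follows, assuming the score is a simple count of the abnormalities present at each assessment. The thresholds that define each abnormality are not given in the text, so the sketch takes already-determined boolean flags as input; the flag names, the function names, and the example patient are hypothetical, and only abnormalities named in this review are used.

```python
from typing import Dict, List, Tuple


def vss(flags: Dict[str, bool]) -> int:
    """Count the vital-sign abnormalities flagged as present at one assessment."""
    return sum(1 for present in flags.values() if present)


def initial_and_max_vss(assessments: List[Dict[str, bool]]) -> Tuple[int, int]:
    """Return (score at the first assessment, highest score over the ED stay)."""
    if not assessments:
        raise ValueError("at least one assessment is required")
    scores = [vss(a) for a in assessments]
    return scores[0], max(scores)


# Hypothetical patient with three assessments in chronological order.
stay = [
    {"hypotension": True, "hypoxemia": False, "impaired_consciousness": False},
    {"hypotension": True, "hypoxemia": True, "impaired_consciousness": False},
    {"hypotension": True, "hypoxemia": True, "impaired_consciousness": True},
]

initial, maximum = initial_and_max_vss(stay)
print(f"Initial VSS: {initial}, maximum VSS: {maximum}, increase: {maximum - initial}")
```

In this toy example the score rises during the stay, which is the pattern the text associates with higher hospital mortality.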

3.5. Results of Syntheses

The OPERA model performed even better at predicting in-hospital mortality when excluding patients already receiving care or palliative care, with an AUC of 0.80 in the validation cohort [].

Machine learning models, particularly gradient boosting, outperformed other methods in predicting hospitalization and critical outcomes, but struggled with predicting 72-h ED reattendance. While traditional clinical scoring systems performed poorly, the interpretable AutoScore model achieved reasonably good performance on the critical outcome and hospitalization prediction tasks [].

3.6. Reporting Biases

None of the included studies explicitly discussed reporting biases.

3.7. Certainty of Evidence

Based on the study design, methods, and statistical analysis, the certainty of evidence in [] is moderate. The quasi-experimental controlled pre- and post-intervention design, large sample size, and appropriate statistical analysis provide a reasonable level of confidence in the findings, but the inherent limitations of a non-randomized study design prevent this study from achieving higher certainty.

Based on the details provided in [], the certainty of evidence appears to be high. This study was a large, prospective cohort study with a comprehensive set of relevant patient data, conducted ethically and with appropriate oversight. The methodological details suggest a high-quality study with a high degree of certainty in its findings.

Confidence in the body of evidence for each outcome was assessed using the GRADE (Grading of Recommendations Assessment, Development, and Evaluation) approach [,,] (Table 4).

Table 4.

Grade indicating quality of evidence.

We are moderately confident that an estimate of the effect (or association) is correct (i.e., the certainty of the evidence from the included studies is moderate). Our confidence in the effect estimate is limited by the small number of included studies, and the true effect may be substantially different from the effect estimate.

4. Discussion

4.1. Current Research Status

The first study derived and validated the OPERA score, which can help clinicians stratify older patients by risk of mortality and prolonged hospital stay. The model demonstrated good discrimination and calibration, with a small over-prediction of mortality risk, which was addressed. Excluding patients without a documented frailty score limited the sample size, as this assessment may not have been conducted for patients in relatively good health. OPERA performed better for short-term mortality compared to longer-term outcomes, likely due to its focus on acute-illness severity. OPERA provided useful odds ratios for extended hospital stay, which could support discharge planning and resource allocation [,].

The new SA model reduced the odds of CD compared to the existing EWS system, but did not impact secondary outcomes like mortality, ICU admission, or readmissions. The SA model’s wider approach to increasing situational awareness and identifying early signs of deterioration was supported by these findings. The lack of impact on mortality is consistent with previous studies on EWS systems. There was a trend towards reduced ICU admissions in the intervention group, but this was likely due to differences in case mix. A higher EWS at ED entry was associated with increased risk of CD, as seen in other studies [,,,].

Machine learning models demonstrated higher predictive accuracy than traditional scoring systems, but complex deep learning models did not outperform simpler models on the relatively low-dimensional ED data. While machine learning models had higher predictive accuracy, their black-box nature makes them less suitable for clinical decision-making in emergency care, where explainability is important. Traditional scoring systems had lower predictive accuracy, but the interpretable AutoScore system achieved higher accuracy while maintaining the advantages of transparent, point-based scoring systems [,].

VSS, which measures the presence, onset, or worsening of vital-sign instability, is highly predictive of hospital mortality. Both the initial VSS value and its change during the ED stay are relevant, with patients who exhibit an increase in VSS having higher mortality. The individual components of VSS, such as impaired consciousness, hypotension, hypoxemia, and abnormal heart rate, were the strongest predictors of mortality. The lack of independent predictive value of seizures and abnormal respiratory rate may be due to their co-occurrence with other VSS components. These results suggest that using VSS for the rapid identification and treatment of at-risk patients in EDs has the potential to improve outcomes for critically ill patients [,].

4.2. Trends

Gradient boosting methods demonstrated superior AUROC values (0.819–0.880) compared to logistic regression and random forest, while deep learning models did not outperform simpler methods. This suggests that moderately complex models may provide the best balance between predictive accuracy and interpretability in emergency settings. Clinical integration requires models that are not only accurate but also interpretable at the bedside. Tools such as AutoScore provide transparent, point-based frameworks that maintain predictive accuracy while allowing clinicians to understand and trust the decision-making process—an essential factor for adoption in high-pressure emergency settings.

Table 5 provides a schematic overview summarizing the strengths and limitations of traditional scoring systems, machine learning, and deep learning approaches.

Table 5.

Overview of predictive models in ED risk management.

4.3. Limitations

The limitations of the evidence obtained in this study are related to the scarcity of existing studies on predictive models for managing the clinical risk of ED patients. Furthermore, the number of included studies was small, which might be because we only included studies in English, Portuguese, and Spanish. Importantly, the small number of studies included significantly limits the generalizability of our findings.

In practice, predictive models represent objective methods to identify clinical risk or deterioration, supporting timely decision-making and interventions to improve patient outcomes.

4.4. Future Prospects

Future research should focus on integrating explainable machine learning models into electronic health record systems to ensure real-time applicability in clinical workflows. Transparent frameworks, such as AutoScore, may balance predictive performance with interpretability, thereby increasing acceptance among healthcare professionals. Moreover, multicenter external validation studies are essential to confirm generalizability across diverse emergency department populations.

This study contributes to transforming the care model in health services, with repercussions for health policies that will make it possible to reorganize EDs.

5. Conclusions

This systematic review highlights the potential of predictive models in managing clinical risk in EDs. Despite the limited number of studies included, our findings demonstrate that models such as OPERA, machine learning models, the situation awareness model, and the VSS system represent valuable solutions for predicting in-hospital mortality and clinical deterioration. Their implementation may support faster and more informed clinical decisions, as well as optimize resource allocation in high-pressure care settings. However, the strength of our conclusions is limited by the inclusion of only four studies, underscoring the need for more multicenter validation research.

The integration of predictive models with artificial intelligence tools represents an opportunity to significantly enhance patient safety and the quality of care they receive. However, the widespread adoption of such methods requires robust external validation, adaptation to the realities of different emergency services, and special attention to the clinical interpretability of models—an essential condition for their acceptance by healthcare professionals.

Therefore, future research should focus on the development and validation of transparent, efficient predictive models that are integrated into clinical information systems, contributing to a more proactive, safe, and person-centered care paradigm.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/jcm14207245/s1, CHARMS and PROBAST templates.

Author Contributions

Conceptualization, M.J.B.R., L.A.N.d.M., and A.L.d.S.J.; methodology, M.J.B.R. and L.A.N.d.M.; software, M.J.B.R. and L.A.N.d.M.; validation, M.J.B.R., L.A.N.d.M., and A.L.d.S.J.; formal analysis, M.J.B.R. and L.A.N.d.M.; investigation, M.J.B.R., L.A.N.d.M., and A.L.d.S.J.; resources, M.J.B.R. and L.A.N.d.M.; data curation, M.J.B.R., L.A.N.d.M., and A.L.d.S.J.; writing—original draft preparation, M.J.B.R.; writing—review and editing, M.J.B.R., L.A.N.d.M., and A.L.d.S.J.; visualization, M.J.B.R.; supervision, M.J.B.R.; project administration, M.J.B.R.; funding acquisition, M.J.B.R., L.A.N.d.M., and A.L.d.S.J. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by national funds through the UID/04923—Comprehensive Health Research Centre.

Institutional Review Board Statement

This study was conducted in accordance with the Declaration of Helsinki. Ethical review and approval were waived for this study due to its purely observational nature.

Informed Consent Statement

Not applicable.

Data Availability Statement

All data are provided via tables in the text, and via text and tables in the appendices. The included articles are available via the electronic databases utilized in this study.

Acknowledgments

The authors have reviewed and edited the output and take full responsibility for the content of this publication. They would like to express their deepest gratitude to David José Murteira Mendes, whose intellectual guidance and unwavering support were instrumental to conducting this work. His legacy as a scholar and mentor continues to inspire, and this review is dedicated to his memory.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| AUC | Area Under the Curve |
| AUROC | Area Under the Receiver Operating Characteristic |
| CCI | Charlson Comorbidity Index |
| CD | Clinical Deterioration |
| CFS | Clinical Frailty Scale |
| CHARMS | Critical Appraisal and Data Extraction for Systematic Reviews of Prediction Modeling Studies |
| ECI | Elixhauser Comorbidity Index |
| ED | Emergency Department |
| EWS | Early Warning Score |
| GP | General Practitioner |
| GRADE | Grading of Recommendations Assessment, Development, and Evaluation |
| ICD | International Classification of Diseases |
| ICU | Intensive Care Unit |
| Med2Vec | Multi-layer Representation Learning Tool for Medical Concepts |
| MIMIC-IV | Medical Information Mart for Intensive Care IV |
| MUST | Malnutrition Universal Screening Tool |
| NEWS2 | National Early Warning Score 2 |
| NHS | National Health Service |
| OPERA | Older Persons' Emergency Risk Assessment |
| PICOS | Population, Intervention, Comparison, Outcomes, and Study |
| PRISMA | Preferred Reporting Items for Systematic Reviews and Meta-Analyses |
| PROBAST | Prediction Model Risk-of-Bias Assessment Tool |
| PROSPERO | International Prospective Register of Systematic Reviews |
| SA | Situation Awareness |
| VSS | Vital-Sign Scoring |

Appendix A

Table A1.

PRISMA 2020 checklist.

| Section and Topic | Item # | Checklist Item | Location Where Item Is Reported |

|---|---|---|---|

| TITLE | |||

| Title | 1 | Identify the report as a systematic review. | Page 1 Lines 2 and 3 |

| ABSTRACT | |||

| Abstract | 2 | See the PRISMA 2020 for Abstracts checklist. | Page 1 Lines 18 to 41 |

| INTRODUCTION | |||

| Rationale | 3 | Describe the rationale for the review in the context of existing knowledge. | Page 2 Lines 46 to 67 |

| Objectives | 4 | Provide an explicit statement of the objective(s) or question(s) the review addresses. | Page 2 Lines 68 and 69 |

| METHODS | |||

| Eligibility criteria | 5 | Specify the inclusion and exclusion criteria for the review and how studies were grouped for the syntheses. | Page 3 Lines 79 to 84 Table 1 |

| Information sources | 6 | Specify all databases, registers, websites, organizations, reference lists and other sources searched or consulted to identify studies. Specify the date when each source was last searched or consulted. | Page 4 Lines 86 to 96 |

| Search strategy | 7 | Present the full search strategies for all databases, registers and websites, including any filters and limits used. | Page 4 Lines 98 to 104 |

| Selection process | 8 | Specify the methods used to decide whether a study met the inclusion criteria of the review, including how many reviewers screened each record and each report retrieved, whether they worked independently, and if applicable, details of automation tools used in the process. | Page 4 Lines 106 to 110 |

| Data collection process | 9 | Specify the methods used to collect data from reports, including how many reviewers collected data from each report, whether they worked independently, any processes for obtaining or confirming data from study investigators, and if applicable, details of automation tools used in the process. | Page 4 Lines 112 to 115 |

| Data items | 10a | List and define all outcomes for which data were sought. Specify whether all results that were compatible with each outcome domain in each study were sought (e.g. for all measures, time points, analyses), and if not, the methods used to decide which results to collect. | Page 4 Lines 117 to 126 |

| 10b | List and define all other variables for which data were sought (e.g. participant and intervention characteristics, funding sources). Describe any assumptions made about any missing or unclear information. | Page 4 Lines 117 to 126 | |

| Study risk of bias assessment | 11 | Specify the methods used to assess risk of bias in the included studies, including details of the tool(s) used, how many reviewers assessed each study and whether they worked independently, and if applicable, details of automation tools used in the process. | Pages 4 to 5 Lines 128 to 132 |

| Effect measures | 12 | Specify for each outcome the effect measure(s) (e.g. risk ratio, mean difference) used in the synthesis or presentation of results. | Page 5 Lines 134 to 135 |

| Synthesis methods | 13a | Describe the processes used to decide which studies were eligible for each synthesis (e.g. tabulating the study intervention characteristics and comparing against the planned groups for each synthesis (item #5)). | Page 5 Lines 137 to 146 |

| | 13b | Describe any methods required to prepare the data for presentation or synthesis, such as handling of missing summary statistics, or data conversions. | Page 5 Lines 137 to 146 |

| | 13c | Describe any methods used to tabulate or visually display results of individual studies and syntheses. | Page 5 Lines 137 to 146 |

| | 13d | Describe any methods used to synthesize results and provide a rationale for the choice(s). If meta-analysis was performed, describe the model(s), method(s) to identify the presence and extent of statistical heterogeneity, and software package(s) used. | Page 5 Lines 137 to 146 |

| | 13e | Describe any methods used to explore possible causes of heterogeneity among study results (e.g. subgroup analysis, meta-regression). | Page 5 Lines 137 to 146 |

| | 13f | Describe any sensitivity analyses conducted to assess robustness of the synthesized results. | Page 5 Lines 137 to 146 |

| Reporting bias assessment | 14 | Describe any methods used to assess risk of bias due to missing results in a synthesis (arising from reporting biases). | Page 5 Lines 148 to 154 |

| Certainty assessment | 15 | Describe any methods used to assess certainty (or confidence) in the body of evidence for an outcome. | Page 5 Lines 156 to 160 |

| RESULTS | |||

| Study selection | 16a | Describe the results of the search and selection process, from the number of records identified in the search to the number of studies included in the review, ideally using a flow diagram. | Page 5 and 6 Lines 163 to 171 Figure 1 |

| | 16b | Cite studies that might appear to meet the inclusion criteria, but which were excluded, and explain why they were excluded. | Page 5 and 6 Lines 163 to 171 Figure 1 |

| Study characteristics | 17 | Cite each included study and present its characteristics. | Page 6 to 12 Lines 173 to 188 Table 2 |

| Risk of bias in studies | 18 | Present assessments of risk of bias for each included study. | Page 13 Lines 191 to 213 Table 3 |

| Results of individual studies | 19 | For all outcomes, present, for each study: (a) summary statistics for each group (where appropriate) and (b) an effect estimate and its precision (e.g. confidence/credible interval), ideally using structured tables or plots. | Pages 13 and 14 Lines 215 to 243 Figure 2 |

| Results of syntheses | 20a | For each synthesis, briefly summarize the characteristics and risk of bias among contributing studies. | Page 14 to 15 Lines 245 to 252 |

| | 20b | Present results of all statistical syntheses conducted. If meta-analysis was done, present for each the summary estimate and its precision (e.g. confidence/credible interval) and measures of statistical heterogeneity. If comparing groups, describe the direction of the effect. | Page 14 to 15 Lines 245 to 252 |

| | 20c | Present results of all investigations of possible causes of heterogeneity among study results. | Page 14 to 15 Lines 245 to 252 |

| | 20d | Present results of all sensitivity analyses conducted to assess the robustness of the synthesized results. | Page 14 to 15 Lines 245 to 252 |

| Reporting biases | 21 | Present assessments of risk of bias due to missing results (arising from reporting biases) for each synthesis assessed. | Page 15 Line 254 |

| Certainty of evidence | 22 | Present assessments of certainty (or confidence) in the body of evidence for each outcome assessed. | Page 15 Lines 256 to 273 Table 4 |

| DISCUSSION | |||

| Discussion | 23a | Provide a general interpretation of the results in the context of other evidence. | Page 15 to 17 Lines 275 to 340 Table 5 |

| | 23b | Discuss any limitations of the evidence included in the review. | Page 15 to 17 Lines 275 to 340 Table 5 |

| | 23c | Discuss any limitations of the review processes used. | Page 15 to 17 Lines 275 to 340 Table 5 |

| | 23d | Discuss implications of the results for practice, policy, and future research. | Page 15 to 17 Lines 275 to 340 Table 5 |

| OTHER INFORMATION | |||

| Registration and protocol | 24a | Provide registration information for the review, including register name and registration number, or state that the review was not registered. | Page 17 Lines 366 to 369 |

| | 24b | Indicate where the review protocol can be accessed, or state that a protocol was not prepared. | Page 17 Lines 366 to 369 |

| | 24c | Describe and explain any amendments to information provided at registration or in the protocol. | Page 17 Lines 366 to 369 |

| Support | 25 | Describe sources of financial or non-financial support for the review, and the role of the funders or sponsors in the review. | Page 17 Lines 370 and 371 |

| Competing interests | 26 | Declare any competing interests of review authors. | Page 18 Line 382 |

| Availability of data, code and other materials | 27 | Report which of the following are publicly available and where they can be found: template data collection forms; data extracted from included studies; data used for all analyses; analytic code; any other materials used in the review. | Page 18 Lines 375 and 376 |

Appendix B

Appendix B.1. CINAHL® Plus

(risk assessment OR Risk Assessments OR Assessment, Risk OR Health Risk Assessment OR Assessment, Health Risk OR Health Risk Assessments OR Risk Assessment, Health OR Risk Analysis OR Analysis, Risk OR Risk Analyses OR risk management OR Management, Risk OR Management, Risks OR Risks Management OR risk adjustment OR Adjustment, Risk OR Adjustments, Risk OR Risk Adjustments OR Case-Mix Adjustment OR Adjustment, Case-Mix OR Adjustments, Case-Mix OR Case Mix Adjustment OR Case-Mix Adjustments OR risk factors OR Factor, Risk OR Risk Factor) AND (early warning score OR Early Warning Scores OR Score, Early Warning OR Scores, Early Warning OR Warning Scores, Early) AND (emergency service, hospital OR Emergency Services, Hospital OR Hospital Emergency Services OR Services, Hospital Emergency OR Hospital Emergency Service OR Service, Hospital Emergency OR Emergency Hospital Service OR Emergency Hospital Services OR Hospital Service, Emergency OR Hospital Services, Emergency OR Service, Emergency Hospital OR Services, Emergency Hospital OR Hospital Service Emergency OR Emergencies, Hospital Service OR Emergency, Hospital Service OR Hospital Service Emergencies OR Service Emergencies, Hospital OR Service Emergency, Hospital OR Emergency Departments OR Department, Emergency OR Departments, Emergency OR Emergency Department)

Appendix B.2. Health Technology Assessment Database

((risk assessment) OR (Risk Assessments) OR (Assessment, Risk) OR (Health Risk Assessment) OR (Assessment, Health Risk) OR (Health Risk Assessments) OR (Risk Assessment, Health) OR (Risk Analysis) OR (Analysis, Risk) OR (Risk Analyses) OR (risk management) OR (Management, Risk) OR (Management, Risks) OR (Risks Management) OR (risk adjustment) OR (Adjustment, Risk) OR (Adjustments, Risk) OR (Risk Adjustments) OR (Case-Mix Adjustment) OR (Adjustment, Case-Mix) OR (Adjustments, Case-Mix) OR (Case Mix Adjustment) OR (Case-Mix Adjustments) OR (risk factors) OR (Factor, Risk) OR (Risk Factor)) AND ((early warning score) OR (Early Warning Scores) OR (Score, Early Warning) OR (Scores, Early Warning) OR (Warning Scores, Early)) AND ((emergency service, hospital) OR (Emergency Services, Hospital) OR (Hospital Emergency Services) OR (Services, Hospital Emergency) OR (Hospital Emergency Service) OR (Service, Hospital Emergency) OR (Emergency Hospital Service) OR (Emergency Hospital Services) OR (Hospital Service, Emergency) OR (Hospital Services, Emergency) OR (Service, Emergency Hospital) OR (Services, Emergency Hospital) OR (Hospital Service Emergency) OR (Emergencies, Hospital Service) OR (Emergency, Hospital Service) OR (Hospital Service Emergencies) OR (Service Emergencies, Hospital) OR (Service Emergency, Hospital) OR (Emergency Departments) OR (Department, Emergency) OR (Departments, Emergency) OR (Emergency Department))

Appendix B.3. MedicLatina

(risk assessment OR Risk Assessments OR Assessment, Risk OR Health Risk Assessment OR Assessment, Health Risk OR Health Risk Assessments OR Risk Assessment, Health OR Risk Analysis OR Analysis, Risk OR Risk Analyses OR risk management OR Management, Risk OR Management, Risks OR Risks Management OR risk adjustment OR Adjustment, Risk OR Adjustments, Risk OR Risk Adjustments OR Case-Mix Adjustment OR Adjustment, Case-Mix OR Adjustments, Case-Mix OR Case Mix Adjustment OR Case-Mix Adjustments OR risk factors OR Factor, Risk OR Risk Factor) AND (early warning score OR Early Warning Scores OR Score, Early Warning OR Scores, Early Warning OR Warning Scores, Early) AND (emergency service, hospital OR Emergency Services, Hospital OR Hospital Emergency Services OR Services, Hospital Emergency OR Hospital Emergency Service OR Service, Hospital Emergency OR Emergency Hospital Service OR Emergency Hospital Services OR Hospital Service, Emergency OR Hospital Services, Emergency OR Service, Emergency Hospital OR Services, Emergency Hospital OR Hospital Service Emergency OR Emergencies, Hospital Service OR Emergency, Hospital Service OR Hospital Service Emergencies OR Service Emergencies, Hospital OR Service Emergency, Hospital OR Emergency Departments OR Department, Emergency OR Departments, Emergency OR Emergency Department)

Appendix B.4. MEDLINE®

(risk assessment OR Risk Assessments OR Assessment, Risk OR Health Risk Assessment OR Assessment, Health Risk OR Health Risk Assessments OR Risk Assessment, Health OR Risk Analysis OR Analysis, Risk OR Risk Analyses OR risk management OR Management, Risk OR Management, Risks OR Risks Management OR risk adjustment OR Adjustment, Risk OR Adjustments, Risk OR Risk Adjustments OR Case-Mix Adjustment OR Adjustment, Case-Mix OR Adjustments, Case-Mix OR Case Mix Adjustment OR Case-Mix Adjustments OR risk factors OR Factor, Risk OR Risk Factor) AND (early warning score OR Early Warning Scores OR Score, Early Warning OR Scores, Early Warning OR Warning Scores, Early) AND (emergency service, hospital OR Emergency Services, Hospital OR Hospital Emergency Services OR Services, Hospital Emergency OR Hospital Emergency Service OR Service, Hospital Emergency OR Emergency Hospital Service OR Emergency Hospital Services OR Hospital Service, Emergency OR Hospital Services, Emergency OR Service, Emergency Hospital OR Services, Emergency Hospital OR Hospital Service Emergency OR Emergencies, Hospital Service OR Emergency, Hospital Service OR Hospital Service Emergencies OR Service Emergencies, Hospital OR Service Emergency, Hospital OR Emergency Departments OR Department, Emergency OR Departments, Emergency OR Emergency Department)

Appendix B.5. PubMed

(((((((((((((((((((((((((((risk assessment) OR (Risk Assessments)) OR (Assessment, Risk)) OR (Health Risk Assessment)) OR (Assessment, Health Risk)) OR (Health Risk Assessments)) OR (Risk Assessment, Health)) OR (Risk Analysis)) OR (Analysis, Risk)) OR (Risk Analyses)) OR (risk management)) OR (Management, Risk)) OR (Management, Risks)) OR (Risks Management)) OR (risk adjustment)) OR (Adjustment, Risk)) OR (Adjustments, Risk)) OR (Risk Adjustments)) OR (Case-Mix Adjustment)) OR (Adjustment, Case-Mix)) OR (Adjustments, Case-Mix)) OR (Case Mix Adjustment)) OR (Case-Mix Adjustments)) OR (risk factors)) OR (Factor, Risk)) OR (Risk Factor)) AND (((((early warning score) OR (Early Warning Scores)) OR (Score, Early Warning)) OR (Scores, Early Warning)) OR (Warning Scores, Early))) AND ((((((((((((((((((((((emergency service, hospital) OR (Emergency Services, Hospital)) OR (Hospital Emergency Services)) OR (Services, Hospital Emergency)) OR (Hospital Emergency Service)) OR (Service, Hospital Emergency)) OR (Emergency Hospital Service)) OR (Emergency Hospital Services)) OR (Hospital Service, Emergency)) OR (Hospital Services, Emergency)) OR (Service, Emergency Hospital)) OR (Services, Emergency Hospital)) OR (Hospital Service Emergency)) OR (Emergencies, Hospital Service)) OR (Emergency, Hospital Service)) OR (Hospital Service Emergencies)) OR (Service Emergencies, Hospital)) OR (Service Emergency, Hospital)) OR (Emergency Departments)) OR (Department, Emergency)) OR (Departments, Emergency)) OR (Emergency Department))

Appendix B.6. Scopus

TITLE-ABS-KEY ( ( ( ( ( ( ( ( ( ( ( ( ( ( ( ( ( ( ( ( ( ( ( ( ( ( ( ( risk AND assessment ) OR ( risk AND assessments ) ) OR ( assessment, AND risk ) ) OR ( health AND risk AND assessment ) ) OR ( assessment, AND health AND risk ) ) OR ( health AND risk AND assessments ) ) OR ( risk AND assessment, AND health ) ) OR ( risk AND analysis ) ) OR ( analysis, AND risk ) ) OR ( risk AND analyses ) ) OR ( risk AND management ) ) OR ( management, AND risk ) ) OR ( management, AND risks ) ) OR ( risks AND management ) ) OR ( risk AND adjustment ) ) OR ( adjustment, AND risk ) ) OR ( adjustments, AND risk ) ) OR ( risk AND adjustments ) ) OR ( case-mix AND adjustment ) ) OR ( adjustment, AND case-mix ) ) OR ( adjustments, AND case-mix ) ) OR ( case AND mix AND adjustment ) ) OR ( case-mix AND adjustments ) ) OR ( risk AND factors ) ) OR ( factor, AND risk ) ) OR ( risk AND factor ) ) AND ( ( ( ( ( early AND warning AND score ) OR ( early AND warning AND scores ) ) OR ( score, AND early AND warning ) ) OR ( scores, AND early AND warning ) ) OR ( warning AND scores, AND early ) ) ) AND ( ( ( ( ( ( ( ( ( ( ( ( ( ( ( ( ( ( ( ( ( ( emergency AND service, AND hospital ) OR ( emergency AND services, AND hospital ) ) OR ( hospital AND emergency AND services ) ) OR ( services, AND hospital AND emergency ) ) OR ( hospital AND emergency AND service ) ) OR ( service, AND hospital AND emergency ) ) OR ( emergency AND hospital AND service ) ) OR ( emergency AND hospital AND services ) ) OR ( hospital AND service, AND emergency ) ) OR ( hospital AND services, AND emergency ) ) OR ( service, AND emergency AND hospital ) ) OR ( services, AND emergency AND hospital ) ) OR ( hospital AND service AND emergency ) ) OR ( emergencies, AND hospital AND service ) ) OR ( emergency, AND hospital AND service ) ) OR ( hospital AND service AND emergencies ) ) OR ( service AND emergencies, AND hospital ) ) OR ( service AND emergency, AND hospital ) ) OR ( emergency AND departments ) ) OR ( department, AND emergency ) ) OR ( departments, AND emergency ) ) OR ( emergency AND department ) ) )

Appendix B.7. Cochrane Plus Collection

(risk assessment OR Risk Assessments OR Assessment, Risk OR Health Risk Assessment OR Assessment, Health Risk OR Health Risk Assessments OR Risk Assessment, Health OR Risk Analysis OR Analysis, Risk OR Risk Analyses OR risk management OR Management, Risk OR Management, Risks OR Risks Management OR risk adjustment OR Adjustment, Risk OR Adjustments, Risk OR Risk Adjustments OR Case-Mix Adjustment OR Adjustment, Case-Mix OR Adjustments, Case-Mix OR Case Mix Adjustment OR Case-Mix Adjustments OR risk factors OR Factor, Risk OR Risk Factor) AND (early warning score OR Early Warning Scores OR Score, Early Warning OR Scores, Early Warning OR Warning Scores, Early) AND (emergency service, hospital OR Emergency Services, Hospital OR Hospital Emergency Services OR Services, Hospital Emergency OR Hospital Emergency Service OR Service, Hospital Emergency OR Emergency Hospital Service OR Emergency Hospital Services OR Hospital Service, Emergency OR Hospital Services, Emergency OR Service, Emergency Hospital OR Services, Emergency Hospital OR Hospital Service Emergency OR Emergencies, Hospital Service OR Emergency, Hospital Service OR Hospital Service Emergencies OR Service Emergencies, Hospital OR Service Emergency, Hospital OR Emergency Departments OR Department, Emergency OR Departments, Emergency OR Emergency Department)

Appendix B.8. Web of Science

((((((((((((((((((((((((((ALL = (Risk Assessment)) OR ALL = (Risk Assessments)) OR ALL = (Assessment, Risk)) OR ALL = (Health Risk Assessment)) OR ALL = (Assessment, Health Risk)) OR ALL = (Health Risk Assessments)) OR ALL = (Risk Assessment, Health)) OR ALL = (Risk Analysis)) OR ALL = (Analysis, Risk)) OR ALL = (Risk Analyses)) OR ALL = (risk management)) OR ALL = (Management, Risk)) OR ALL = (Management, Risks)) OR ALL = (Risks Management)) OR ALL = (risk adjustment)) OR ALL = (Adjustment, Risk)) OR ALL = (Adjustments, Risk)) OR ALL = (Risk Adjustments)) OR ALL = (Case-Mix Adjustment)) OR ALL = (Adjustment, Case-Mix)) OR ALL = (Adjustments, Case-Mix)) OR ALL = (Case Mix Adjustment)) OR ALL = (Case-Mix Adjustments)) OR ALL = (risk factors)) OR ALL = (Factor, Risk)) OR ALL = (Risk Factor)) AND (((((ALL = (early warning score)) OR ALL = (Early Warning Scores)) OR ALL = (Score, Early Warning)) OR ALL = (Scores, Early Warning)) OR ALL = (Warning Scores, Early)) AND ((((((((((((((((((((((ALL = (emergency service, hospital)) OR ALL = (Emergency Services, Hospital)) OR ALL = (Hospital Emergency Services)) OR ALL = (Services, Hospital Emergency)) OR ALL = (Hospital Emergency Service)) OR ALL = (Service, Hospital Emergency)) OR ALL = (Emergency Hospital Service)) OR ALL = (Emergency Hospital Services)) OR ALL = (Hospital Service, Emergency)) OR ALL = (Hospital Services, Emergency)) OR ALL = (Service, Emergency Hospital)) OR ALL = (Services, Emergency Hospital)) OR ALL = (Hospital Service Emergency)) OR ALL = (Emergencies, Hospital Service)) OR ALL = (Emergency, Hospital Service)) OR ALL = (Hospital Service Emergencies)) OR ALL = (Service Emergencies, Hospital)) OR ALL = (Service Emergency, Hospital)) OR ALL = (Emergency Departments)) OR ALL = (Department, Emergency)) OR ALL = (Departments, Emergency)) OR ALL = (Emergency Department))
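The eight search strings in this appendix share one structure: three concept blocks (risk terms, early warning score terms, and emergency department terms), with synonyms joined by OR inside each block and the blocks joined by AND. The following Python sketch reproduces that structure for illustration only. The function names `or_block` and `build_query` are hypothetical, the term lists are abbreviated to a few entries taken from the strings above, and the sketch does not describe how the authors actually generated or ran their searches.

```python
def or_block(terms, wrap=False):
    """Join the synonyms of one concept with OR.

    wrap=True parenthesizes each term, as in the Health Technology
    Assessment Database string; wrap=False leaves terms bare, as in
    the CINAHL Plus string.
    """
    parts = [f"({t})" if wrap else t for t in terms]
    return "(" + " OR ".join(parts) + ")"


def build_query(concepts, wrap=False):
    """Combine one OR-block per concept with AND."""
    return " AND ".join(or_block(terms, wrap) for terms in concepts)


# Abbreviated term lists (assumption: only a few synonyms per concept are shown).
risk = ["risk assessment", "risk management", "risk adjustment", "risk factors"]
score = ["early warning score", "Early Warning Scores"]
setting = ["emergency service, hospital", "Emergency Department"]

query = build_query([risk, score, setting])
print(query)
```

Passing `wrap=True` yields the variant in which every term carries its own parentheses. Database-specific field tags such as `TITLE-ABS-KEY` or `ALL =` are not handled by this sketch.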

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).