Featured Application

The proposed approach for crowd density estimation and counting, utilizing visible and thermal imaging, can be widely applied in scenarios including urban public security management, intelligent traffic control, security for large-scale events, and commercial passenger flow statistics, thus offering considerable practical value.

Abstract

Crowd counting is a significant task in computer vision. By combining the rich texture information from RGB images with the insensitivity to illumination changes offered by thermal imaging, the applicability of models in real-world complex scenarios can be enhanced. Current research on RGB-T crowd counting primarily focuses on feature fusion strategies, multi-scale structures, and the exploration of novel network architectures such as Vision Transformer and Mamba. However, existing approaches face two key challenges: limited robustness to illumination shifts and insufficient handling of scale discrepancies. To address these challenges, this study develops a robust RGB-T crowd counting framework that remains stable under illumination shifts by introducing two key innovations beyond existing fusion and multi-scale approaches: (1) a cross-modal adaptive fusion module (CMAFM) that actively evaluates and fuses reliable cross-modal features under varying scenarios by simulating a dynamic feature selection and trust allocation mechanism; and (2) a multi-scale aggregation module (MSAM) that unifies features with different receptive fields to an intermediate scale and performs weighted fusion to enhance modeling capability for cross-modal scale variations. The proposed method achieves relative improvements of 1.57% in GAME(0) and 0.78% in RMSE on the DroneRGBT dataset compared to existing methods, and improvements of 2.48% and 1.59% on the RGBT-CC dataset, respectively. It also demonstrates higher stability and robustness under varying lighting conditions. This research provides an effective solution for building stable and reliable all-weather crowd counting systems, with significant application prospects in smart city security and management.

1. Introduction

Crowd density estimation and counting, as a fundamental and critical task in computer vision, focuses on automatically estimating the number of individuals from images or videos and generating high-precision density distribution maps. This technology is widely employed in various scenarios such as urban public security management, intelligent traffic control, security for large-scale events, and commercial passenger flow statistics, holding significant practical importance [1,2,3,4]. By enabling real-time and accurate detection of crowd density, such systems can effectively provide early warnings for potential risks and offer crucial support for resource allocation and decision-making analysis, thereby playing an indispensable role in building smart and safe cities [5].

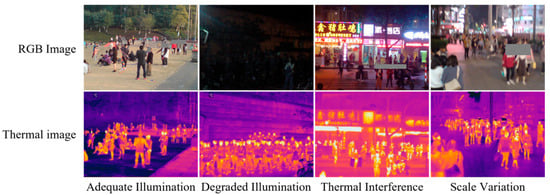

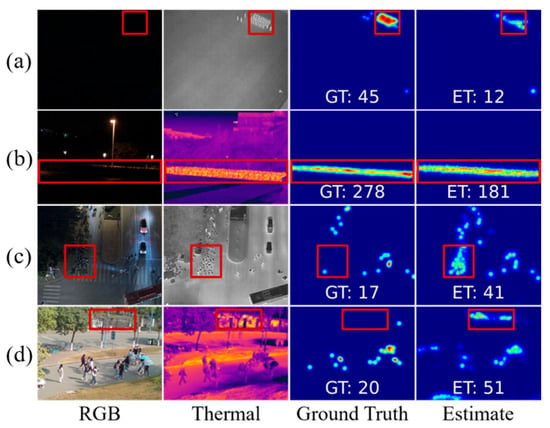

With the advancement of deep learning models and neural networks, significant progress has been made in visible light (RGB) image-based crowd counting research on multiple benchmark datasets [6,7,8]. However, the performance of these methods heavily depends on environmental lighting and weather conditions [9,10]. In low-light scenarios such as nighttime, backlit conditions, or poorly lit indoor environments, visible light images often suffer from decreased signal-to-noise ratio, color distortion, and loss of detailed textures, as illustrated in Figure 1. This leads to a substantial performance degradation in counting models, making it difficult to meet the urgent practical demand for stable operation.

Figure 1. Comparison of RGB and thermal images under different scenarios. RGB images are sensitive to illumination variations, while thermal images are not constrained by lighting conditions. However, thermal imaging is susceptible to interference from other heat sources, and objects at different scales exhibit pronounced differences in representation between visible and thermal modalities.

To overcome the inherent limitations of the visible modality, researchers have increasingly turned to crowd counting methods that combine visible light and thermal imaging (RGB-T) [11,12,13]. Thermal imaging devices capture images by sensing the infrared radiation emitted from object surfaces, independent of external visible light sources. Consequently, they can stably capture clear human silhouette information under challenging visual conditions such as total darkness, haze, rain, and snow, as shown in Figure 1. This characteristic makes thermal imaging a natural complement to visible light: visible light provides rich appearance features and semantic content under good illumination, while thermal imaging offers reliable human structure perception under poor visual conditions. Fusing information from these two modalities therefore makes it possible to build crowd counting systems that are far more robust than those relying on a single modality.

Current RGB-T crowd counting methods primarily focus on investigating how to fuse cross-modal features, such as through multi-stream network designs [14,15,16], attention mechanisms [17,18], and the adoption of advanced backbone networks [19,20]. Although existing RGB-T approaches have advanced in cross-modal fusion, several critical limitations still persist:

- Limited robustness to illumination shifts: In well-lit scenarios, the RGB modality can provide rich detailed information and should be assigned greater confidence; whereas under insufficient illumination or extreme weather conditions, the thermal infrared modality, with its stronger anti-interference capability, should play a more significant role. However, existing methods such as DEFNet [14] and MISF-Net [21] consistently fuse features from different modalities without adaptive weighting, leading to performance fluctuations between bright and dark environments. This indicates their incomplete adaptation to real-world illumination changes.

- Insufficient handling of scale discrepancies: Due to differences in imaging principles, the same target may exhibit scale discrepancies between visible and thermal images. Existing methods, such as MIANet [17] with its hierarchical interaction strategy or CCANet [18] with misalignment-aware fusion, primarily focus on feature interaction at the same scale, thus insufficiently modeling cross-modal scale variations.

To tackle the aforementioned challenges, this paper proposes a novel network architecture that integrates a cross-modal adaptive mechanism with multi-scale feature aggregation. We design a cross-modal adaptive fusion module (CMAFM), which employs a lightweight attention mechanism to extract global contextual information and generates channel-wise independent adaptive weights. This allows for dynamic adjustment of the fusion ratio between RGB and thermal modalities based on imaging conditions, thereby enhancing model robustness in complex scenarios. Simultaneously, we introduce a multi-scale aggregation module (MSAM), which unifies features from different hierarchical levels to an intermediate scale and performs weighted fusion, effectively integrating multi-scale contextual information and improving the model’s adaptability to scale variations. These two modules work synergistically to form an end-to-end RGB-T crowd density estimation and counting framework, demonstrating significant advantages in handling modality reliability fluctuations and scale disparities. The main contributions of this paper are as follows:

- We design a cross-modal adaptive fusion module (CMAFM), which simulates a dynamic feature selection and trust assignment mechanism to proactively evaluate and fuse reliability-aware features from different modalities according to varying scenarios. This module addresses the modality reliability fluctuation problem ignored by most prior RGB-T methods, which treat both modalities equally regardless of imaging conditions.

- We design a multi-scale aggregation module (MSAM) that unifies features from different receptive fields to an intermediate scale and performs weighted fusion, effectively handling cross-modal scale discrepancies that are not adequately addressed in existing multi-scale RGB-T fusion networks.

- The proposed method is thoroughly validated on benchmark datasets. Compared to existing state-of-the-art methods, it effectively enhances crowd density estimation and counting performance. Evaluated using the GAME and RMSE metrics, our method achieves improvements of 1.57%, 4.05%, 5.05%, 4.63%, and 0.78% across different GAME levels and RMSE on the DroneRGBT dataset, and improvements of 2.48%, 1.89%, 2.80%, 0.67%, and 1.59% on the RGBT-CC dataset, respectively. Notably, it exhibits superior robustness under varying illumination conditions, validating the effectiveness of the proposed adaptive fusion and multi-scale aggregation strategy.
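For readers unfamiliar with the two metrics, the sketch below shows how GAME(L) and RMSE are conventionally computed. It is an illustration, not the authors' evaluation code: each density map is assumed to be a plain 2D list whose sum gives the count, and the function names (`region_sum`, `game`, `rmse`) are hypothetical. GAME(L) splits each image into 4^L non-overlapping regions and sums the absolute count error over them, so GAME(0) reduces to the mean absolute error.

```python
import math


def region_sum(density, r0, r1, c0, c1):
    """Sum a 2D density map over rows [r0, r1) and columns [c0, c1)."""
    return sum(sum(row[c0:c1]) for row in density[r0:r1])


def game(preds, gts, level):
    """Grid Average Mean absolute Error over paired lists of density maps."""
    cells = 2 ** level  # the grid is cells x cells, i.e. 4**level regions
    total = 0.0
    for pred, gt in zip(preds, gts):
        h, w = len(gt), len(gt[0])
        for i in range(cells):
            for j in range(cells):
                r0, r1 = i * h // cells, (i + 1) * h // cells
                c0, c1 = j * w // cells, (j + 1) * w // cells
                total += abs(region_sum(pred, r0, r1, c0, c1)
                             - region_sum(gt, r0, r1, c0, c1))
    return total / len(gts)


def rmse(preds, gts):
    """Root mean squared error between predicted and true image-level counts."""
    sq = [(region_sum(p, 0, len(p), 0, len(p[0]))
           - region_sum(g, 0, len(g), 0, len(g[0]))) ** 2
          for p, g in zip(preds, gts)]
    return math.sqrt(sum(sq) / len(sq))


# Toy example: the prediction has the right total but the wrong location.
gt = [[1.0, 0.0], [0.0, 0.0]]
pred = [[0.0, 0.0], [0.0, 1.0]]
game0 = game([pred], [gt], 0)   # counts match globally
game1 = game([pred], [gt], 1)   # two of the four cells are each off by one
```

In the toy example the global count is correct, so GAME(0) and RMSE are zero, while GAME(1) is nonzero because the mass sits in the wrong cell. This is why higher GAME levels are read as a measure of localization quality in addition to counting accuracy.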

2. Related Work

2.1. RGB-Based Crowd Counting

The significant progress and continuous evolution of crowd counting techniques have been driven by advances in deep learning and neural networks [1,7,8]. Current neural network-based research primarily focuses on model architecture design and counting methodologies [10].

In terms of model architecture design, convolutional neural networks (CNNs) have become the mainstream approach due to their powerful capability for local feature extraction and advantage in preserving spatial information. To address the challenge of scale variation caused by perspective distortion (where individuals appear larger nearby and smaller farther away), a typical strategy involves multi-scale network design. Du et al. [22] designed a hierarchical mixture model, which incorporates a competitive collaboration mechanism among experts and a soft pixel-wise gating network to hierarchically fuse multi-scale features for handling scale variations in crowd counting. Gong et al. [23] acquired scene priors by jointly predicting coarse density maps and multi-scale segmentation masks, and designed a spatial-adaptive pyramid module that leverages cross-scale correlation features to refine the density map. To enhance the quality of crowd density maps, some studies have developed context-aware enhancement networks. Wang et al. [24] collaboratively integrated local and cross-layer contextual information from different levels through modules for local context aggregation, guided attention fusion, and multi-level information fusion. Zhu et al. [25] utilized a dense attention dilation module to generate high-granularity density maps, preserving crucial contextual information via attention mechanisms for more accurate counting in complex scenes. Some methods have further advanced crowd counting by employing novel network architectures. Li et al. [26] proposed CCST, a network based on Swin Transformer, and designed a Feature Adaptive Fusion regression Head (FAFHead) to simultaneously tackle uneven crowd density distribution and large head scale span. Huo et al. [27] were the first to introduce the Visual Mamba model into weakly supervised crowd counting. Their method enhanced multi-scale feature fusion through adjacent-scale progressive and hybrid regression bridges, while also boosting feature utilization efficiency and counting performance via quadratic hybrid regression.

Regarding counting methodologies, using Gaussian density maps as supervision signals is a classic and widely adopted approach. Wan et al. [28] proposed an end-to-end trainable adaptive density map generator to replace traditional hand-crafted density maps, allowing optimization specific to the network and dataset. Chen et al. [29] introduced a density-guided dynamic adaptation module and proposed a correntropy-based optimal transport loss function to mitigate the impact of noise in the generated density maps. Liang et al. [30] addressed the issue of overlapping and blurred individual locations in dense crowd localization by proposing a novel Focal Inverse Distance Transform (FIDT) map to precisely describe individual positions. Furthermore, some research has explored point supervision to achieve more accurate counting. Ma et al. [31] proposed a Bayesian loss function based on a probability model, which computes the expected count for each annotated point to replace traditional Gaussian density maps, providing more reliable supervision for network training. Song et al. [32] proposed a purely point-based crowd counting model, employing the Hungarian algorithm for one-to-one point matching to optimize the learning objective, aligning predictions with annotation standards. To address various noises in point annotations, Wan et al. [33] proposed modeling the true locations as random variables through probability modeling and utilized a negative log-likelihood loss to naturally handle the uncertainty of spatial offset noise, thereby improving counting accuracy.

Although RGB-based monomodal crowd counting has achieved remarkable progress under standard illumination conditions, its performance often degrades significantly in complex environments with dim or uneven lighting. This is primarily due to degraded image quality, loss of detail information, and increased noise interference. To overcome this limitation, current research explores RGB-Thermal (RGB-T) based crowd counting methods. By fusing the rich texture information from visible light with the illumination-invariant characteristics of thermal imaging, these methods enable robust, all-weather, and cross-scene counting.

2.2. RGB-T Crowd Counting

Crowd counting methods based on visible and thermal imaging (RGB-T) aim to overcome the limitations of RGB modality concerning illumination variations, occlusions, and background clutter, and are widely applied in public security, intelligent surveillance, and crowd management [11,12]. Despite progress, RGB-T counting still faces several key challenges: (1) dynamic modality reliability due to varying imaging conditions; (2) cross-modal scale variation; and (3) feature misalignment between modalities. Existing fusion strategies often fall short in addressing these challenges comprehensively.

Most prior works focus on cross-modal feature fusion to exploit complementary information. For instance, some methods adopt equal-weight fusion strategies such as element-wise addition or channel concatenation [14,15]. While simple, these approaches implicitly assume equal contribution from each modality and fail to adaptively adjust fusion ratios based on scene conditions (e.g., illumination changes), leading to suboptimal performance in low-light or complex environments.

Another line of research incorporates attention mechanisms to enhance modality-specific features. For example, Wang et al. [17] use multi-scale and channel attention to filter redundant information, while Liu et al. [18] design cross-modal attention to capture complementary features in both channel and spatial dimensions. To address the insufficient handling of scale variations in cross-modal feature fusion, Li et al. [34] propose CSA-Net, which integrates scale-aware cross-modal feature aggregation and channel attention aggregation. By embedding a plug-and-play multi-scale module, the network significantly improves counting accuracy. Although these methods improve feature selectivity, they often operate on single-scale features and lack hierarchical aggregation mechanisms, limiting their ability to handle large variations in crowd scale, density, and distribution.

Recent approaches leverage Transformer-based architectures for global feature interaction. Kong et al. [19] employ a multimodal transformer mixer to enhance bi-modal feature fusion, and Guo et al. [35] explore mutual information maximization to alleviate modality heterogeneity. Pan et al. [36] integrate the Vision Transformer into the model architecture to capture global dependencies and design a corresponding information aggregation module to integrate information from multiple hierarchical levels. Chen et al. [37] propose a hybrid model named TransMambaCC, which combines the analytical capabilities of Transformer with a Mamba-based pyramid module to address the head scale variation observed in crowded scenes. While effective in capturing long-range dependencies, these methods can be computationally intensive and may overlook fine-grained local details crucial for accurate crowd localization.

To address modality misalignment, some works propose explicit alignment mechanisms. Kong et al. [20] introduce differential deformable representations to calibrate feature misalignment, and Kong et al. [38] achieve spatial–semantic fusion through a two-stage alignment. However, these methods often treat alignment as a separate stage rather than an adaptive, end-to-end learnable process, which may limit their flexibility in dynamic scenes.

In summary, existing RGB-T fusion strategies exhibit three primary limitations: (1) lack of adaptive trust allocation under varying imaging conditions; (2) insufficient integration of multi-scale contextual information; and (3) reliance on fixed or computationally heavy fusion architectures that may not generalize well across diverse scenarios. To provide a comprehensive comparison, we select representative methods from each category—including attention-based (MIANet [17], CCANet [18]), Transformer-based (MCN [19], C4-MIM [35]), alignment-focused (CFAF-Net [20], CrowdAlign [38]), and multi-stream designs (DEFNet [14], MC3Net [15])—as benchmarks. This selection ensures that our evaluation encompasses the mainstream technical approaches and highlights the comprehensive advantages of our method in adaptive fusion, multi-scale aggregation, and lightweight design.

3. Methodology

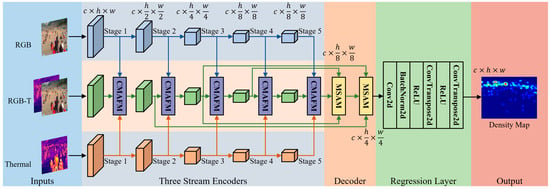

In visible and thermal imaging (RGB-T) based multimodal crowd density estimation and counting tasks, to address the impact of varying imaging conditions on modality reliability and the challenges in representation learning caused by cross-modal scale feature discrepancies, we propose a Cross-modal Adaptive Fusion and Multi-scale Aggregation Network. The overall architecture is illustrated in Figure 2. Firstly, within the Encoder network, we adopt a three-stream structure designed to extract features from the visible, thermal, and fused modalities respectively. Furthermore, we design a cross-modal adaptive fusion module (CMAFM) to perform weighted fusion of cross-modal features input at the same scale, thereby dynamically and selectively emphasizing more informative features while suppressing those potentially containing noise or missing information. Secondly, in the Decoder network, we propose a multi-scale aggregation module (MSAM), which significantly enhances the model’s robustness to scale variations and its comprehensive utilization of contextual information through hierarchical aggregation of feature maps from different receptive fields. Finally, the regression network generates the final density map used for crowd density estimation and counting. The proposed method collectively enhances the model’s capability to represent multimodal and multi-scale crowd features, effectively ensuring improved counting accuracy and robustness.

Figure 2. Overall architecture of the proposed method. The Encoder network employs a three-stream design to extract features from the RGB, Thermal, and multimodal modalities respectively, wherein the CMAFM is utilized for fusing cross-modal features at the same scale. In the Decoder network, the MSAM performs hierarchical aggregation of feature maps from different scales.

3.1. Proposed Cross-Modal Adaptive Fusion Module

Visible light images are rich in texture, color, and detailed information, but their quality severely degrades under poor illumination conditions (e.g., nighttime, backlighting). Thermal infrared images, generated by capturing the thermal radiation emitted by objects, are insensitive to illumination changes and can effectively delineate human silhouettes, but they lack detailed texture information. In crowd counting tasks, these two modalities possess inherent complementarity. Simple feature fusion strategies, such as element-wise addition or channel concatenation, exhibit significant limitations: addition implicitly assumes equal contribution from each modality, while concatenation treats all features equally, placing the burden of feature selection entirely on subsequent convolutional layers. Neither approach can dynamically adjust the fusion strategy based on the specific characteristics of the input content. For instance, in well-lit scenes, the network should place more trust in the detailed information from the RGB modality, whereas in low-light scenarios, it should rely more on the robustness of the TIR modality.

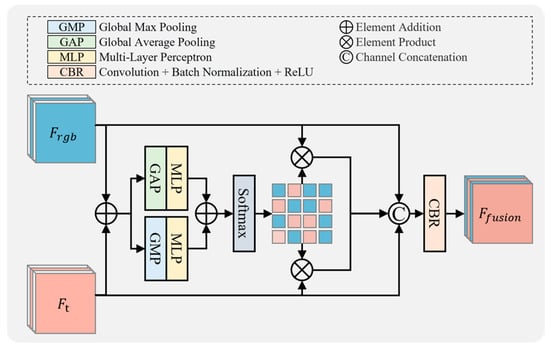

Our proposed cross-modal adaptive fusion module (CMAFM) aims to resolve this issue. It employs a lightweight attention network to extract global contextual information from the fused features and generates a set of adaptive weights that are shared spatially but independent along the channel dimension. These weights are used for the weighted fusion of the input modalities, enabling dynamic and selective emphasis on more informative features and suppression of potentially noisy or uninformative ones. The structure of CMAFM is shown in Figure 3. It takes RGB features and thermal features extracted from the same network level as input, and outputs the fused enhanced features .

Figure 3. Cross-Modal Adaptive Fusion Module.

First, we perform element-wise addition on the features from the two modalities to obtain an initial aggregated feature , which serves as the informational basis for computing the attention weights. Subsequently, we employ two parallel strategies, global average pooling (GAP) and global max pooling (GMP), to compress the spatial dimensions and extract highly abstract global context descriptors. GAP captures the overall statistics of the entire feature map, while GMP captures the most salient local features; their combination forms a more comprehensive contextual representation. The calculation formulas are as follows:
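In illustrative notation (the symbols here are assumptions and may differ from the authors' own), let $F_s = F_{rgb} + F_t \in \mathbb{R}^{c \times h \times w}$ denote the aggregated feature. The two pooled descriptors referred to as Equations (1) and (2) then take the standard form:

$$
z_{avg}(k) = \frac{1}{hw}\sum_{i=1}^{h}\sum_{j=1}^{w} F_s(k,i,j), \qquad
z_{max}(k) = \max_{1 \le i \le h,\; 1 \le j \le w} F_s(k,i,j), \qquad k = 1,\dots,c.
$$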

Next, the two pooled feature vectors are fed into two independent Multilayer Perceptron (MLP). Each MLP consists of a dimensionality reduction layer (with ReLU activation) followed by a dimensionality restoration layer. The outputs from the two MLP branches are then summed to obtain the unnormalized attention scores, denoted as , where the first dimension corresponds to the modality dimension. A Softmax function is then applied along the modality dimension for normalization. This operation ensures that for each channel c, the weight coefficients for the two modalities sum to 1, explicitly representing the network’s allocation of “trust” between the two modalities for that specific channel. The calculation formula for the channel attention weight map is as follows:
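In assumed notation, with $S_m(k)$ the unnormalized score of modality $m \in \{rgb, t\}$ for channel $k$, this normalization is $W_m(k) = \exp(S_m(k)) / \sum_{m'} \exp(S_{m'}(k))$. The plain-Python sketch below illustrates it on made-up scores that stand in for the summed MLP outputs; `modality_softmax` is a hypothetical helper name, not part of the authors' code.

```python
import math


def modality_softmax(scores_rgb, scores_t):
    """Per-channel softmax over the two modalities.

    scores_rgb[k] and scores_t[k] are the unnormalized scores of channel k.
    Returns two weight lists whose entries sum to 1 channel by channel.
    """
    w_rgb, w_t = [], []
    for s_r, s_t in zip(scores_rgb, scores_t):
        m = max(s_r, s_t)  # subtract the max for numerical stability
        e_r, e_t = math.exp(s_r - m), math.exp(s_t - m)
        w_rgb.append(e_r / (e_r + e_t))
        w_t.append(e_t / (e_r + e_t))
    return w_rgb, w_t


# Hypothetical scores for three channels.
scores_rgb = [2.0, 0.0, -1.0]
scores_t = [0.0, 0.0, 1.0]
w_rgb, w_t = modality_softmax(scores_rgb, scores_t)
```

With these scores, the first channel places more trust in RGB, the second splits it evenly, and the third favours the thermal modality, which is the per-channel behaviour the module is designed to learn.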

Subsequently, the generated attention weights are used to recalibrate the original input modality features:

By broadcasting the weights across the entire spatial dimensions, dynamic fusion is achieved, which “assigns different importance to different modalities for different channels”. Finally, to maximally preserve the original information and provide sufficient freedom for the network to learn integration, we concatenate the original bimodal features with the recalibrated bimodal features. The CBR unit comprising a convolution layer, batch normalization (BN), and ReLU activation is used to perform dimensionality reduction and non-linear transformation on the concatenated high-dimensional features, ultimately outputting the fused features. The calculation formula is as follows:
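Written out in assumed notation ($W_{rgb}, W_t \in \mathbb{R}^{c \times 1 \times 1}$ for the channel weights, $\odot$ for channel-wise multiplication with spatial broadcasting, and $[\cdot\,;\cdot]$ for channel concatenation), the recalibration and fusion steps described above would read roughly as:

$$
\tilde{F}_{rgb} = W_{rgb} \odot F_{rgb}, \qquad \tilde{F}_{t} = W_{t} \odot F_{t},
$$

$$
F_{out} = \mathrm{CBR}\big([F_{rgb};\, F_{t};\, \tilde{F}_{rgb};\, \tilde{F}_{t}]\big).
$$

The concatenation order and the kernel size inside the CBR unit are not stated in the text, so they are left unspecified here.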

The complete mathematical model of the cross-modal adaptive fusion module is presented in Algorithm 1.

| Algorithm 1. Cross-Modal Adaptive Fusion. |

| Input: , where c is the number of channels, h is the height, and w is the width. |

| Output: . |

| Step 1: Initial feature aggregation: . |

| Step 2: Global context descriptor extraction: Compute global average-pooled vectors via Equation (1). Compute global max-pooled vectors via Equation (2). |

| Step 3: Channel-wise attention weight generation: Pass and through two independent multi-layer perceptrons (MLPs) with ReLU activation: Apply Softmax along the modality dimension to obtain normalized attention weights and via Equation (3). |

| Step 4: Feature recalibration and using Equations (4) and (5). |

| Step 5: Feature concatenation and adaptive fusion: apply a CBR block via Equation (6). |

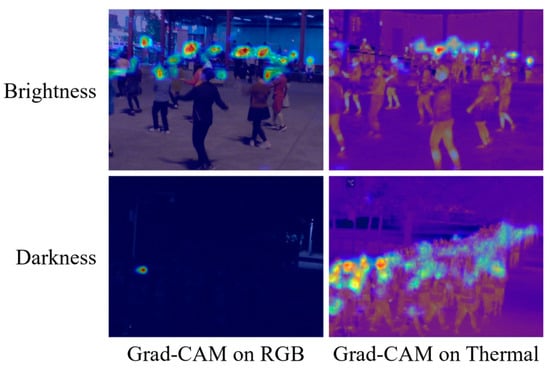

To conduct an interpretability analysis of the CMAFM, we employ gradient-weighted class activation mapping (Grad-CAM) to visualize the gradients of the feature maps output by the CMAFM layer, highlighting its decision-making outcomes under varying illumination conditions, as shown in Figure 4. It can be observed that in bright-light scenes, the CMAFM focuses its attention on regions of the visible-light image containing rich texture features, while in low-light scenes, it concentrates on areas of the thermal image that contain contour features. Based on the above analysis, the proposed CMAFM simulates a dynamic feature selection and trust allocation mechanism. Instead of passively accepting all input features, the network actively assesses the reliability of each modality across different feature channels given the current scene. It achieves adaptive and refined fusion of RGB and TIR modality features, laying a solid foundation for building high-performance bimodal crowd counting models.

Figure 4. Grad-CAM Visualization of CMAFM Decisions across Illumination Conditions.

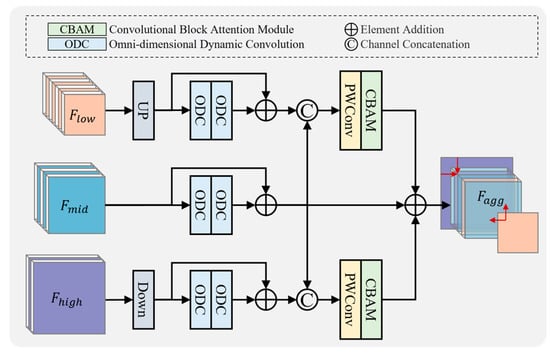

3.2. Proposed Multi-Scale Aggregation Module

To fully integrate the multi-level features extracted from both visible and thermal modalities, we propose the multi-scale aggregation module (MSAM). This module significantly enhances the model’s robustness to scale variations and its comprehensive utilization of contextual information through hierarchical aggregation of feature maps from different receptive fields. MSAM takes the multi-scale feature maps , , and generated from the previous stage as input, where the subscripts indicate the network depth (e.g., corresponds to the deepest layer with the largest receptive field and lowest resolution). Its core idea is to unify all features to an intermediate scale and perform weighted fusion. The overall workflow of the module is depicted in Figure 5.

Figure 5. Multi-Scale Aggregation Module.

First, to enable cross-scale fusion, feature maps of different resolutions are resized to the same spatial dimensions. Then, to enhance the representational capacity of features at each scale and stabilize gradient flow before fusion, we introduce a lightweight feature enhancement block for each input branch. This block consists of two sequentially connected omni-dimensional dynamic convolution (ODConv2d) layers [39], which leverage parallel multi-scale convolutional kernels and an attention mechanism to achieve powerful spatial feature extraction capability with minimal parameters. The calculation is as follows:
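As a sketch in assumed notation, with $F_i$ an input feature map, $\mathrm{Resize}(\cdot)$ the spatial alignment to the intermediate resolution (the interpolation method is not specified in the text), and $\hat{F}_i$ the enhanced output, the per-branch enhancement can be written as:

$$
\hat{F}_i = \mathrm{ODConv}\big(\mathrm{ODConv}(\mathrm{Resize}(F_i))\big)
$$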

where . Subsequently, we concatenate the high-scale and low-scale features with the mid-scale features along the channel dimension, constructing fused features that encompass multi-scale context. We then use a pointwise convolution for feature projection, making the fusion process more efficient. To further enhance the quality of the fused features, we introduce the convolutional block attention module (CBAM) [40] to adaptively calibrate the dimensionality-reduced features, suppressing unimportant background noise and highlighting key features relevant to crowd regions.

Finally, MSAM aggregates the calibrated multi-scale contextual features with the core mid-scale features via a simple element-wise addition operation, producing enhanced features rich in multi-scale information as the module output. The complete mathematical model of the MSAM is presented in Algorithm 2.

| Algorithm 2. Multi-Scale Aggregation. |

| Input: . |

| Output: , where is the spatial size of . |

| Step 1: Spatial alignment and to the spatial dimensions of : |

| Step 2: Apply two sequential ODConv2d layers per branch for feature refinement via Equation (7). |

| Step 3: Concatenate the enhanced low- and high-scale features with the mid-scale features: |

| Step 4: Channel projection: apply a 1 × 1 convolution to reduce channel dimensions, then refine with the convolutional block attention module (CBAM): |

| Step 5: Fuse the attentively calibrated multi-scale features with the original mid-scale features via element-wise addition: |
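Steps 3 to 5 can be summarized compactly. The notation is assumed for illustration only: $\hat{F}_l$, $\hat{F}_m$, and $\hat{F}_h$ denote the enhanced low-, mid-, and high-scale features after spatial alignment, and $F_m$ denotes the mid-scale feature used in the residual addition of Step 5.

$$
F_{cat} = [\hat{F}_l;\, \hat{F}_m;\, \hat{F}_h], \qquad
F_{att} = \mathrm{CBAM}\big(\mathrm{Conv}_{1 \times 1}(F_{cat})\big), \qquad
F_{out} = F_{att} + F_m .
$$

Since the addition is element-wise, the pointwise convolution has to project $F_{cat}$ back to the channel width of $F_m$.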

The design principle of MSAM mimics how the human visual system observes crowded scenes: it requires both a “global overview” (utilizing the large receptive field of to perceive the overall distribution in high-density areas) and “scrutinizing details” (utilizing the fine-grained features of to distinguish adjacent individuals or small groups), ultimately integrating global and local information at an “intermediate perspective” (). It effectively aggregates information from both global context and local details, providing a powerful and scale-robust feature representation for subsequent density map regression.

3.3. Network Loss

To improve the quality of the estimated crowd density maps, we employ the focal inverse distance transform map (FIDTM) [30] to precisely describe individual locations and generate the ground truth density maps. FIDTM effectively alleviates the ambiguity in localization caused by overlapping and blurry annotations in dense crowds, thereby providing more accurate supervision signals.

For the loss function, we adopt the mean squared error (MSE) loss to supervise model training. The MSE loss enforces pixel-level fitting between the predicted density map and the ground truth, which is crucial for generating high-fidelity density distributions. The formulation is as follows:

$$
L_{MSE} = \frac{1}{N}\sum_{i=1}^{N}\left(y_i - \hat{y}_i\right)^2
$$

where $N$ is the total number of pixels in the density map per sample, $y_i$ is the ground truth density value at the i-th pixel, and $\hat{y}_i$ is the predicted density value at the i-th pixel. A decreasing loss indicates that the predicted density map is moving closer to the ground truth density map at the pixel level. Consequently, the total count obtained by summing the density map also becomes more accurate.
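A minimal sketch of this per-sample loss in plain Python is given below. The function name `mse_loss` and the toy maps are illustrative assumptions; in practice a framework-provided MSE implementation would normally be used.

```python
def mse_loss(pred, gt):
    """Mean squared error between two 2D maps of identical shape."""
    n = len(gt) * len(gt[0])  # total number of pixels in the map
    sq_err = sum((p - g) ** 2
                 for row_p, row_g in zip(pred, gt)
                 for p, g in zip(row_p, row_g))
    return sq_err / n


gt = [[0.0, 1.0], [0.0, 0.0]]
pred = [[0.0, 0.5], [0.5, 0.0]]
loss = mse_loss(pred, gt)  # (0.25 + 0.25) / 4
```

Note that the toy prediction has the same total as the ground truth yet a nonzero loss, which reflects the pixel-level nature of the supervision.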

The MSE loss is chosen for the following advantages in the context of crowd density estimation. Firstly, it directly penalizes deviations at each pixel, encouraging the network to produce density maps that are structurally consistent with the ground truth, which is essential for accurate local density estimation and overall count integrity. Secondly, as a convex and smooth function of the prediction, MSE provides stable gradients throughout training, facilitating efficient optimization and convergence of the deep network. Finally, the continuous nature of density maps aligns well with the regression objective of MSE, making it a natural and effective choice for supervising density map prediction tasks.

4. Experiment Details

4.1. Datasets

We evaluate our approach using two extensively adopted benchmark datasets in multimodal crowd counting research.

DroneRGBT [11]: This dataset provides a UAV-based RGB-T crowd counting benchmark, comprising aligned RGB-T images acquired by visible and thermal sensors mounted on drones operating at 30–50 m above ground. It includes a variety of outdoor settings such as campuses, stadiums, and streets, with a total of 3607 RGB-T image pairs—1800 pairs each for training and testing—annotating 175,723 human instances. The per-image count varies from 1 to 403 individuals. Known for its diversity in crowd density, scene layout, and lighting, DroneRGBT is the predominant benchmark for RGB-T crowd counting from a UAV viewpoint.

RGBT-CC [12]: As a reference dataset for RGB-T crowd counting in natural scenes captured from arbitrary viewpoints, RGBT-CC encompasses a broad spectrum of indoor and outdoor environments, including parks, streets, malls, and classrooms, under notable illumination variations and complex lighting. It presents greater complexity in both crowd density distribution and background context compared to DroneRGBT, establishing it as a commonly adopted and more demanding benchmark in contemporary RGB-T crowd counting studies. The dataset consists of 2030 aligned RGB-T images, with 1013 captured in well-lit conditions and 1017 in low-light settings.

4.2. Implementation Details

Our experiments were conducted on a server equipped with an Intel Xeon Gold 5220R CPU (2.2 GHz base frequency) running Ubuntu 22.04. The computational workload was accelerated using an NVIDIA A800 GPU with 24 GB of memory. The implementation was coded in Python 3.10, and all modeling, training, and inference procedures were built upon the PyTorch 2.12 deep learning framework.

To improve the quality of the estimated crowd density maps, we employ the focal inverse distance transform map (FIDTM) [30] to precisely describe individual locations and generate the ground truth density maps. This method converts discrete annotated points into a continuous density distribution using a nonlinear distance decay function. It first applies the Euclidean distance transform to the binary mask of point coordinates to calculate the distance from each pixel to its nearest annotated point. Then, the designed decay function maps these distance values into density weights, with an effective radius of approximately 50 pixels. Unlike traditional fixed Gaussian kernel methods, this approach naturally determines the influence range of each annotated point through its decay function, generating a density map that forms a continuous distribution and better models the spatial correlations between targets.
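The two steps described above (a Euclidean distance transform followed by a nonlinear decay) can be sketched as follows. The decay form 1 / (d^(alpha * d + beta) + c) and the defaults alpha = 0.02, beta = 0.75, c = 1 are assumptions taken from the commonly cited FIDT formulation; this section does not state them, so they should be checked against [30]. The brute-force nearest-point search is only suitable for tiny maps, and `fidt_map` is a hypothetical helper name.

```python
import math


def fidt_map(height, width, points, alpha=0.02, beta=0.75, c=1.0):
    """Build a focal-inverse-distance-style map from (x, y) point annotations.

    alpha, beta and c are assumed defaults, not values given in this text.
    """
    if not points:
        return [[0.0] * width for _ in range(height)]
    result = []
    for y in range(height):
        row = []
        for x in range(width):
            # Step 1: Euclidean distance to the nearest annotated point.
            d = min(math.hypot(x - px, y - py) for px, py in points)
            # Step 2: nonlinear decay; equals 1 at an annotation and falls with d.
            row.append(1.0 / (d ** (alpha * d + beta) + c))
        result.append(row)
    return result


heads = [(2, 2), (7, 5)]          # hypothetical head annotations as (x, y)
fidt = fidt_map(8, 10, heads)
print(fidt[2][2], fidt[2][3], fidt[2][4])
```

Under these assumed parameters the response at a distance of 50 pixels is on the order of 0.001, which is consistent with the effective radius of roughly 50 pixels mentioned in the text.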

During the data loading phase, for both the DroneRGBT and RGBT-CC datasets, we employ the officially split training and testing subsets for training and validation. For the input RGB and thermal infrared image data, we consistently apply random cropping with a crop size of 256 × 256 pixels, and incorporate random scaling and rotation operations to enhance data diversity. All images undergo normalization with channel mean values of [0.485, 0.456, 0.406] and standard deviations of [0.229, 0.224, 0.225].
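A minimal sketch of the cropping and normalization steps is given below. It assumes that the same crop window is applied to the RGB image, the thermal image, and the target map so that all three stay aligned; the text only states that cropping is applied to both input modalities. Random scaling and rotation are omitted, images are plain nested lists, and `paired_random_crop` and `normalize_pixel` are hypothetical helper names.

```python
import random


def paired_random_crop(rgb, thermal, target, size, rng):
    """Cut the same size x size window out of all three aligned inputs."""
    h, w = len(rgb), len(rgb[0])
    if size > h or size > w:
        raise ValueError("crop size exceeds image size")
    top = rng.randint(0, h - size)
    left = rng.randint(0, w - size)

    def crop(img):
        return [row[left:left + size] for row in img[top:top + size]]

    return crop(rgb), crop(thermal), crop(target)


def normalize_pixel(pixel, mean=(0.485, 0.456, 0.406), std=(0.229, 0.224, 0.225)):
    """Channel-wise normalization with the mean and std values given in the text."""
    return tuple((v - m) / s for v, m, s in zip(pixel, mean, std))


rng = random.Random(0)
# A 5 x 6 grid whose entries record their own coordinates, so alignment is visible.
grid = [[(y, x) for x in range(6)] for y in range(5)]
rgb_crop, thermal_crop, target_crop = paired_random_crop(grid, grid, grid, 3, rng)
print(rgb_crop[0][0], thermal_crop[0][0], target_crop[0][0])
```

The experiments use a 256 × 256 window; the 3 × 3 crop here only keeps the example small.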

To ensure a fair comparison with existing methods and to isolate improvements that do not depend on the backbone, we adopt the pre-trained VGG-16 model, which is used by most existing approaches, as the backbone network. Parameters of newly added modules are initialized from a normal distribution. During training, the AdamW [41] optimizer is used to update the model parameters, with a weight decay coefficient of $1 \times 10^{-4}$. The initial learning rate is set to $1 \times 10^{-4}$. In the first 10 epochs, the learning rate is increased to $1 \times 10^{-3}$ using a linear warm-up strategy, and it then decays to $1 \times 10^{-4}$ according to a cosine function [42]. The end-to-end training process runs for 200 epochs with a batch size of 16. To verify the model’s stability, we train with five different random seeds (1, 48, 95, 167, 412) and report the mean and standard deviation over the five trained models.
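The learning-rate schedule described above can be written as a single function of the epoch index. This is a sketch under stated assumptions: the rate is updated once per epoch, the warm-up reaches its peak exactly at epoch 10, and the cosine phase spans the remaining epochs. The function name `learning_rate` and its parameter names are illustrative.

```python
import math


def learning_rate(epoch, total_epochs=200, warmup_epochs=10,
                  base_lr=1e-4, peak_lr=1e-3, final_lr=1e-4):
    """Linear warm-up from base_lr to peak_lr, then cosine decay to final_lr."""
    if epoch < warmup_epochs:
        return base_lr + (peak_lr - base_lr) * epoch / warmup_epochs
    # Fraction of the cosine phase completed, clipped to [0, 1].
    progress = (epoch - warmup_epochs) / max(1, total_epochs - warmup_epochs)
    progress = min(progress, 1.0)
    return final_lr + 0.5 * (peak_lr - final_lr) * (1.0 + math.cos(math.pi * progress))


for epoch in (0, 5, 10, 105, 200):
    print(epoch, learning_rate(epoch))
```

In a PyTorch training loop, a comparable schedule could be assembled from the built-in learning-rate schedulers instead of a hand-written function.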

4.3. Evaluation Metrics

The grid average mean absolute error (GAME) and the root mean square error (RMSE) are widely adopted objective evaluation metrics in crowd counting tasks. Their formulas are defined as follows:

$$\mathrm{GAME}(l) = \frac{1}{N}\sum_{i=1}^{N}\sum_{j=1}^{4^{l}}\left|P_i^{j} - G_i^{j}\right|$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(P_i - G_i\right)^{2}}$$

where $N$ denotes the number of test samples, $G_i$ represents the ground-truth count for the i-th image, and $P_i$ indicates its corresponding predicted count. In the GAME formula, the image is partitioned into $4^{l}$ non-overlapping regions, where $l$ typically ranges from 0 to 3, and the mean absolute error (MAE) is computed separately for each region. Specifically, $G_i^{j}$ and $P_i^{j}$ denote the ground-truth and predicted counts for the j-th region of the i-th image, respectively. The GAME metric is designed to measure the model’s capability in local localization and counting accuracy, whereas RMSE is employed to evaluate the robustness of the model’s overall counting performance.
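To make the difference between global and grid-level errors concrete, the sketch below implements both metrics for maps stored as nested lists. It assumes that region counts are obtained by summing map values inside each cell of a 2^l × 2^l grid; the helper names `region_sums`, `game`, and `rmse` are illustrative.

```python
import math


def region_sums(density, level):
    """Sum a 2-D map over a 2^level x 2^level grid of non-overlapping regions."""
    n = 2 ** level
    h, w = len(density), len(density[0])
    sums = [[0.0] * n for _ in range(n)]
    for y in range(h):
        for x in range(w):
            sums[y * n // h][x * n // w] += density[y][x]
    return sums


def game(pred_maps, gt_maps, level):
    """Grid average mean absolute error over a list of images."""
    total = 0.0
    for pred, gt in zip(pred_maps, gt_maps):
        p, g = region_sums(pred, level), region_sums(gt, level)
        total += sum(abs(pv - gv) for pr, gr in zip(p, g) for pv, gv in zip(pr, gr))
    return total / len(pred_maps)


def rmse(pred_counts, gt_counts):
    """Root mean square error between predicted and ground-truth image counts."""
    n = len(pred_counts)
    return math.sqrt(sum((p - g) ** 2 for p, g in zip(pred_counts, gt_counts)) / n)


# Same total count (2) but every person is placed in the wrong quadrant.
gt_map = [[1.0, 0.0], [0.0, 1.0]]
pred_map = [[0.0, 1.0], [1.0, 0.0]]
print(game([pred_map], [gt_map], 0), game([pred_map], [gt_map], 1))
print(rmse([3.0, 5.0], [1.0, 5.0]))
```

The toy prediction has a perfect global count, so GAME(0) is zero, while GAME(1) exposes the localization error in every quadrant. This is why the higher GAME levels are read as indicators of local counting accuracy.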

5. Experimental Results and Discussion

5.1. Discussion of Experimental Results on the DroneRGBT Benchmark

In the DroneRGBT benchmark, we conducted a comprehensive evaluation of the proposed method and compared it with representative RGB-T crowd counting approaches. For IADM [12] and DEFNet [14], we reproduced their results using their publicly released code, while for other methods, we directly adopted the results reported in their respective papers. The experimental results are summarized in Table 1, where the best and second-best performances are highlighted in bold and underlined, respectively. It can be observed that the proposed method achieves the best results across all evaluation metrics. MCN [19] employs a multimodal transformer mixer to enhance global information fusion, demonstrating outstanding performance in GAME(0) and RMSE, which focus on overall counting quality. MIANet [17] adopts a hierarchical interaction and fusion structure to generate refined density estimates, achieving remarkable results in GAME(1) and GAME(2), which emphasize local counting capability. In comparison to other methods, the proposed approach effectively addresses challenges related to fluctuations in modality reliability and scale variations through the synergy of cross-modal adaptive fusion and multi-scale aggregation. This not only improves global counting performance but also maintains advantages in local counting accuracy. Overall, the proposed method achieves improvements of 1.57%, 4.05%, 5.05%, 4.63%, and 0.78% over the second-best results across the evaluation metrics, demonstrating its effectiveness.

Table 1.

Experimental Results on the DroneRGBT Benchmark.

5.2. Discussion of Experimental Results on the RGBT-CC Benchmark

To evaluate the proposed method more comprehensively, we compared it with current mainstream RGB-T crowd counting approaches on the more challenging RGBT-CC benchmark dataset. The results of the compared methods were taken directly from their original papers. The experimental results are presented in Table 2 and show that our method maintains its leading performance. In contrast, other existing methods exhibit varying performance across the evaluation metrics. MISF-Net [21], which focuses on modality-specific features, achieves the second-best result on GAME(0). By addressing RGB-T cross-modal feature misalignment, it also attains the second-best performance on the GAME(1) metric. C4-MIM [35] leverages mutual information maximization regularization to explore multimodal consistency, achieving the second-best results on both GAME(2) and GAME(3). CFAF-Net [20] employs a differential deformable representation to calibrate feature misalignment, obtaining the second-best result on the RMSE metric. Compared with these second-best results, our proposed method achieves improvements of 2.48%, 1.89%, 2.80%, 0.67%, and 1.59% on GAME(0) to GAME(3) and RMSE, respectively, further supporting its robustness across different datasets.

Table 2.

Experimental Results on the RGBT-CC Benchmark.

5.3. Cross Dataset Validation and Analysis

To comprehensively evaluate the generalization ability of the proposed method, we conducted cross-dataset validation experiments between the DroneRGBT and RGBT-CC datasets, which differ in acquisition perspective, scene distribution, and illumination characteristics. This experiment aims to test whether the model can maintain stable performance without fine-tuning on the target dataset, thereby assessing its adaptability in real-world cross-scenario and cross-device deployment. The experimental results are shown in Table 3. It can be observed that, compared to the within-dataset testing results (see Table 1 and Table 2), both the proposed method and existing methods exhibit a significant performance decline, indicating relatively limited generalization capability. The adaptive weighting mechanism in the proposed CMAFM and the feature aggregation strategy in MSAM may have overfitted to specific patterns of inter-modal correlation, scale distribution, and scene context in the source dataset (training set). When the data distribution of the target dataset (test set) changes considerably, these mechanisms may fail to produce optimal fusion and representation. Although existing methods demonstrate superior performance in within-dataset testing, the cross-dataset experiments reveal that there is still room for improvement in their generalization ability. This points to an important direction for future work: while pursuing high performance, greater emphasis should be placed on enhancing the model’s generalization capability across different domains and scenarios.

Table 3.

Comparison Results of Cross Dataset Testing.

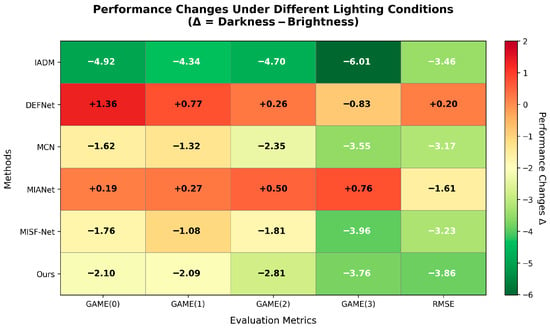

5.4. Experimental Comparison Under Different Illumination Conditions

It is widely recognized that RGB-T multimodal crowd counting research aims to address the limitations of visible-light images under illumination variations. Therefore, experimental comparisons under different lighting conditions are crucial. We categorized the RGBT-CC dataset into bright and dim scenes based on illumination levels to evaluate model performance separately. The experimental results are summarized in Table 4. As shown, existing methods exhibit significant performance disparities across illumination conditions. For instance, DEFNet [14] achieves strong results in bright environments but suffers notable performance degradation in low-light scenarios. Conversely, MISF-Net [21] demonstrates impressive performance under dim lighting but underperforms in well-lit conditions. In contrast, the proposed method consistently achieves optimal or near-optimal results across both bright and dim scenarios, demonstrating comprehensive robustness and stability under varying illumination conditions.

Table 4.

Comparison of Results under Different Lighting Conditions.

To visually reflect the performance differences of various methods under different lighting conditions, we take bright-light scenes as the baseline and analyze their variations in low-light scenes. The experimental results are shown in Figure 6. It can be observed that DEFNet [14] shows positive values (red) across all metrics, indicating a comprehensive performance decline in low-light conditions. MISF-Net [21] exhibits negative values (green) in most metrics, suggesting improved performance in low-light settings. Our method consistently demonstrates distinct negative values (dark green) with larger magnitudes across all metrics, indicating the most significant performance improvement in low-light environments and highlighting its robustness across varying illumination conditions.

Figure 6.

Comparison results of performance changes under different lighting conditions.

The performance fluctuations of the aforementioned methods under varying illumination conditions are primarily attributed to differences in the models’ capabilities to fuse and utilize information from the visible (RGB) and thermal infrared (T) modalities. Under bright conditions, RGB image quality is high. If a model over-relies on visible information, its performance may fluctuate when illumination changes. Conversely, under low-light conditions, visible image quality degrades. If the model can effectively leverage the stable thermal features present in the thermal infrared data, it can maintain satisfactory counting performance. Our proposed cross-modal adaptive fusion module (CMAFM) is designed to address this issue. By extracting cross-modal global contextual information, CMAFM generates adaptive weights that are shared across spatial dimensions yet independent across channel dimensions. These weights are used to perform a weighted fusion of the input modalities, thereby dynamically and selectively emphasizing more informative features while suppressing those potentially contaminated by noise or information loss due to varying illumination. This enables the model to achieve more accurate and robust crowd counting under dynamically changing light and in complex scenes.
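The idea of weights that are shared across spatial positions but independent per channel can be illustrated with a toy example. The sketch below is not the CMAFM itself: it has no learned parameters, and it uses a softmax over globally averaged activations as a stand-in reliability score, which is purely an assumption for illustration. Feature maps are nested lists of shape [C][H][W], and all function names are hypothetical.

```python
import math


def global_avg_pool(feat):
    """Reduce a [C][H][W] feature map to one mean value per channel."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feat]


def channel_weights(rgb, thermal):
    """One (w_rgb, w_thermal) pair per channel; each pair sums to 1.

    Stand-in scoring: softmax over the pooled descriptors of the two modalities.
    """
    weights = []
    for r, t in zip(global_avg_pool(rgb), global_avg_pool(thermal)):
        m = max(r, t)                          # subtract the max for stability
        er, et = math.exp(r - m), math.exp(t - m)
        weights.append((er / (er + et), et / (er + et)))
    return weights


def fuse(rgb, thermal):
    """Weighted sum of the two modalities using the per-channel weights."""
    fused = []
    for (w_r, w_t), ch_r, ch_t in zip(channel_weights(rgb, thermal), rgb, thermal):
        fused.append([[w_r * a + w_t * b for a, b in zip(row_r, row_t)]
                      for row_r, row_t in zip(ch_r, ch_t)])
    return fused


# Channel 0: RGB response collapsed (e.g. a dark scene); channel 1: both equal.
rgb_feat = [[[0.0, 0.0], [0.0, 0.0]], [[2.0, 2.0], [2.0, 2.0]]]
thermal_feat = [[[2.0, 2.0], [2.0, 2.0]], [[2.0, 2.0], [2.0, 2.0]]]
print(channel_weights(rgb_feat, thermal_feat))
print(fuse(rgb_feat, thermal_feat)[0][0][0])
```

Because each weight is a single scalar per channel, every spatial position in that channel is scaled identically, while different channels can favor different modalities.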

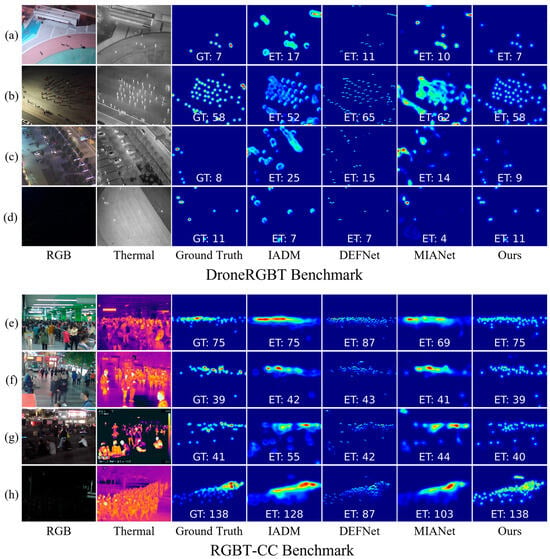

5.5. Qualitative Comparison

5.5.1. Comparison of Density Maps and Count Visualization

To compare crowd density estimation and counting capabilities more intuitively, we conducted a qualitative analysis by visualizing the predicted density maps. We selected samples from the DroneRGBT and RGBT-CC datasets representing different illumination conditions, including bright, dark, and complex mixed scenes. The results are shown in Figure 7. It can be observed that our proposed method effectively estimates crowd density distributions across these varying illuminations and backgrounds, yielding more accurate counts. Notably, in the DroneRGBT example in Figure 7a, despite good illumination, the presence of heat sources similar to humans in the thermal imagery led to erroneous estimations by the comparison methods IADM [12], DEFNet [14], and MIANet [17]. In Figure 7c, where the visible background is complex, the comparison methods similarly produced incorrect estimates. In dark scenes, such as Figure 7d,h, the visible (RGB) modality suffers from severe information loss, making it difficult for models to extract effective texture and appearance features from it. Although the thermal infrared modality provides some thermal signature information, the comparison models still struggle to adequately fuse the bimodal information, ultimately leading to missed detections and underestimation of some individuals. Analysis of the other examples similarly indicates that our proposed method outperforms existing approaches in both crowd density estimation and counting. In summary, the qualitative analysis suggests that our method, through its weighted fusion strategy, selects information dynamically according to its reliability. This allows it to rely adaptively on the more stable information source in changing illumination environments, thereby enhancing the robustness and accuracy of the crowd counting system.

Figure 7.

Visualization Results on the DroneRGBT and RGBT-CC Datasets. (a–d) show the visualization example results from the DroneRGBT dataset. (e–h) show the visualization example results from the RGBT-CC dataset.

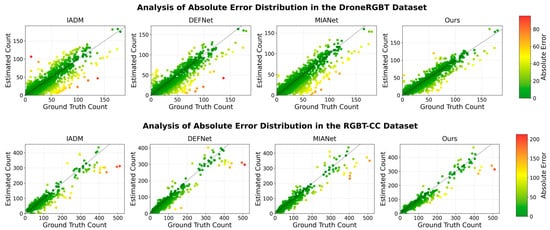

5.5.2. Comparison of Absolute Error Distribution in Counting

To further analyze the overall counting performance of our proposed method on the DroneRGBT and RGBT-CC datasets, we visualized the model’s count predictions against the ground truth using scatter plots, as shown in Figure 8. The scatter color gradient from green to red indicates increasing absolute error. It is evident that, compared to existing methods, the predictions of our proposed method align more closely with the diagonal, reflecting smaller absolute counting errors and demonstrating superior overall counting performance.

Figure 8.

Visualization Results of the Scatter Plot.

5.5.3. Analysis of Failure Cases

To analyze the limitations of the proposed method, we conducted an investigation on the incorrectly counted samples, as shown in Figure 9. In Figure 9a,b, under low-light conditions when the thermal images contain highly dense crowds, the proposed method suffers from missed detections. This occurs because, in extremely crowded and poorly lit scenarios, thermal radiation between individuals tends to overlap and merge, resulting in blurred thermal contours between persons. Although our method adaptively fuses RGB and thermal modal features through the CMAFM module, when the thermal information itself is ambiguous, the model struggles to distinguish highly overlapping individuals from the fused features. Especially in the absence of supporting visible-light texture details, the multi-scale aggregation module (MSAM) also finds it difficult to restore clear individual localization on feature maps with limited resolution. In Figure 9c,d, when the background contains objects with thermal characteristics or visible-light shapes similar to humans, the proposed method yields false detections. This is mainly because the thermal modality is susceptible to interference from other heat sources in the environment, while the visible-light modality may also misinterpret objects resembling human shapes as pedestrians in complex backgrounds. Although the CMAFM module attempts to suppress the influence of unreliable modalities through a weight-assignment mechanism, when both modalities are affected, the model may still incorrectly classify strongly responsive features as human. Moreover, if background objects similar to humans exhibit feature responses across multiple scales, the MSAM may further reinforce false detections during the aggregation of multi-scale contextual information.

Figure 9.

Failure Cases: Red Boxes Highlight Regions with Severe Counting Errors. (a,b) present the results in low-light scenarios. (c,d) show the results for scenes where the background contains objects with thermal characteristics similar to humans.

The above analysis indicates that the proposed method still exhibits certain limitations when dealing with extremely dense and overlapping thermal imaging targets, as well as multimodal background interference. Future work may further optimize the approach by incorporating refined strategies such as background-aware suppression and edge-aware attention mechanisms [47,48,49].

5.6. Computational Complexity Analysis

To comprehensively validate the computational complexity and runtime efficiency of the model, we evaluated it using the number of parameters, floating-point operations (FLOPs), and throughput. The validation data consisted of the test set from RGBT-CC, comprising 800 samples, each with an image resolution of 480 × 640. The experimental results are shown in Table 5. Due to the three-stream network architecture adopted by our proposed method, it exhibits higher values in both the number of parameters and FLOPs compared to IADM [12] and DEFNet [14]. Similarly, in terms of throughput, the inference speed of the proposed method is lower than that of IADM and MIANet [17]. Therefore, lightweight design will be considered in future research.

Table 5.

Comparison Results of Computational Complexity and Operational Efficiency.

Additionally, to assess the computational efficiency of the proposed cross-modal adaptive fusion module (CMAFM) and multi-scale aggregation module (MSAM), we provide a comparative analysis of the number of parameters and FLOPs, aiming to illustrate the impact of each module on the overall model complexity. The results are presented in Table 6. It is particularly noteworthy that the CMAFM module introduces only 0.23 M parameters and 0.04 G FLOPs—an extremely marginal increase—indicating that this module achieves adaptive weighted fusion of cross-modal features with almost no additional computational burden. In contrast, the MSAM module has a relatively large number of parameters (68.90 M) and a high computational cost (192.07 G FLOPs), primarily due to the multiple ODConv2d layers and cross-scale feature fusion operations it contains. Future work may further explore lightweight improvements for this module to reduce computational overhead while preserving its performance advantages.

Table 6.

Comparison Results of Complexity of Proposed Module.

5.7. Ablation Studies

5.7.1. Ablation Experiments on the Proposed Modules

To validate the effectiveness of the proposed cross-modal adaptive fusion module (CMAFM) and multi-scale aggregation module (MSAM) in our method, we conducted ablation studies, with experimental results presented in Table 7. Experiment 1 serves as the baseline. For feature fusion, we used direct element-wise addition, and for multi-scale features, we employed a standard feature pyramid network (FPN) [50]. Experiment 2 introduces the CMAFM module alone. The results show a substantial improvement in crowd counting performance, with gains of 19.67%, 16.66%, 7.57%, 3.86%, and 22.07% on GAME(0) to GAME(3) and RMSE, respectively. This confirms that CMAFM’s dynamic and selective emphasis on more informative cross-modal features significantly boosts overall counting capability. Experiment 3 introduces the MSAM module alone. It achieves improvements of 11.24%, 20.25%, 12.33%, 9.47%, and 15.58% across the five metrics. Notably, the gains on the GAME(1), GAME(2), and GAME(3) metrics are more pronounced, demonstrating that MSAM, through its hierarchical aggregation of feature maps from different receptive fields, significantly enhances the model’s robustness to scale variation and its comprehensive utilization of contextual information, thereby improving local counting accuracy.

Table 7.

Results of Ablation Study on the RGBT-CC Dataset.

Experiment 4 presents the results of our complete proposed method, which integrates both CMAFM and MSAM. This approach achieves the best performance across all evaluation metrics, significantly outperforming the introduction of any single module. Notably, the performance gain from the complete method (a 31.12% improvement in GAME(0) versus the baseline) slightly exceeds the simple sum (30.91%) of the individual gains from CMAFM (19.67%) and MSAM (11.24%). This indicates a synergistic effect between the two modules. The refined cross-modal feature selection enabled by CMAFM and the multi-scale contextual awareness provided by MSAM prove to be complementary. Their coupling allows the model to dynamically integrate the most informative features from both different modalities and different scales, leading to the best performance in both global counting accuracy (reflected in RMSE) and local counting stability (reflected in GAME(1–3)).
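The comparison between the combined gain and the individual gains is simple arithmetic and can be checked directly. The sketch below assumes that each reported percentage is a relative error reduction with respect to the same baseline, i.e. (baseline − new) / baseline × 100; the function name and the example error values passed to it are hypothetical, while the three percentages are those quoted in the text.

```python
def relative_improvement(baseline_error, new_error):
    """Relative error reduction in percent (lower error is better)."""
    return 100.0 * (baseline_error - new_error) / baseline_error


# GAME(0) gains over the baseline, as quoted in the text.
cmafm_gain, msam_gain, full_gain = 19.67, 11.24, 31.12

additive = cmafm_gain + msam_gain                     # gains simply added
compounded = 100.0 * (1.0 - (1.0 - cmafm_gain / 100.0) * (1.0 - msam_gain / 100.0))
surplus = full_gain - additive

print(round(additive, 2), round(compounded, 2), round(surplus, 2))
print(relative_improvement(20.0, 15.0))               # hypothetical errors
```

The combined gain exceeds the additive expectation by about 0.21 percentage points and the compounded expectation (about 28.70%) by a wider margin, so the size of the synergy depends on which reference is used.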

5.7.2. Ablation Experiments on the Proposed MSAM

To further validate the role of key components in the proposed multi-scale aggregation module (MSAM), we conducted additional ablation experiments on the RGBT-CC dataset. Taking the complete proposed method as the base experiment, we ablated two sub-modules in turn: ODConv (Omni-Dimensional Dynamic Convolution) was replaced with standard convolution, and CBAM (Convolutional Block Attention Module) was removed from the model. With all other settings kept consistent with the base experiment, we retrained and evaluated the models. The experimental results are shown in Table 8. When standard convolution replaced ODConv, all metrics worsened significantly, with the GAME(0) and RMSE errors increasing by 24.76% and 12.16%, respectively. This indicates that ODConv, through its parallel multi-scale convolutional kernels and attention mechanism, effectively enhances the spatial representation and scale adaptability of features. Its dynamic convolution mechanism enables the model to better capture contextual information under different receptive fields, thereby improving the quality of multi-scale feature fusion.

Table 8.

Experimental Results of MSAM Component Ablation.

After removing CBAM, the model performance also showed a systematic drop, with GAME(2) and RMSE increasing by 4.00% and 8.76%, respectively. By adaptively calibrating attention across both channel and spatial dimensions, CBAM effectively suppresses background noise and highlights key features related to crowd regions. The introduction of this module significantly enhances the discriminative ability after feature fusion and strengthens the model’s stability in complex backgrounds.

In summary, this ablation experiment further confirms the effectiveness of ODConv and CBAM in the multi-scale aggregation module. They not only individually improve the expressive quality and selectivity of features but also, through synergistic interaction, significantly enhance the model’s adaptability to scale variations and complex backgrounds. This provides important support for the final crowd density estimation and counting performance.

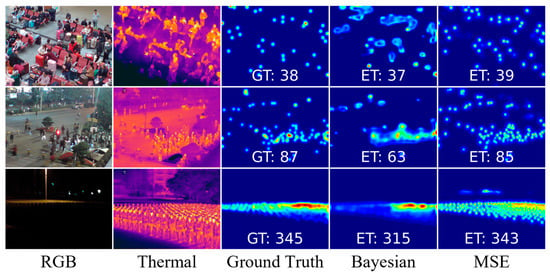

5.7.3. Ablation Experiments on Different Supervisory Losses

To further investigate the impact of different loss functions on model performance, this section presents ablation experiments on the supervisory loss. Keeping the network structure and training strategy unchanged, we trained the model with the mean squared error (MSE) loss and with the probability-based Bayesian loss [x], and evaluated both on the RGBT-CC dataset.

First, we conducted a metric comparison experiment. The results are shown in Table 9, from which it can be seen that the network trained with MSE loss significantly outperforms the version using Bayesian loss across all evaluation metrics. Specifically, in terms of overall counting accuracy (GAME(0)) and robustness (RMSE), MSE loss leads by 16.61% and 14.03%, respectively. For the GAME(1) to GAME(3) metrics, which measure local counting stability, MSE loss also exhibits clear advantages, leading by 6.72%, 9.02%, and 11.59%, respectively. This can be attributed to how well each loss function matches the characteristics of the task. Because the pixel-level density maps generated by FIDTM serve as the supervision signal, training is essentially a continuous regression task. MSE loss directly performs pixel-wise fitting between the predicted density map and the ground-truth density map, effectively driving the model to generate smooth and spatially accurate density estimates, which aligns closely with the objective of density map regression. Bayesian loss, in contrast, was originally designed to handle positional uncertainty in point annotations and computes the expected count for each annotated point through a probabilistic model.

Table 9.

Comparison Results of Different Supervisory Losses.

Second, we performed a visual analysis of the predicted density maps, as shown in Figure 10. It can be observed that compared with the density maps predicted by Bayesian loss, those predicted by MSE loss exhibit more accurate spatial distribution, lower background noise, and more reasonable density values. The fundamental reason for this visual difference lies in the different optimization objectives of the two loss functions. MSE loss forces the model to perform precise pixel-wise regression, making it focus on learning to generate outputs that are as close as possible to the ground-truth continuous density maps in both numerical value and spatial distribution. In contrast, the core of Bayesian loss is to handle the uncertainty of point annotations through a probabilistic model; its optimization objective is more focused on the statistical consistency of the total count rather than precise spatial density distribution.

Figure 10.

Comparison of Density Maps for Different Supervision Losses.

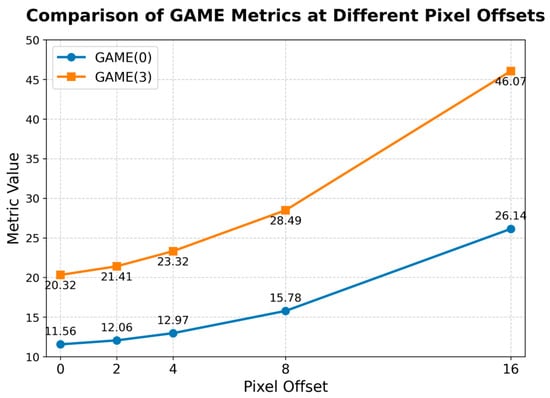

5.7.4. Ablation Experiments on Spatial Registration Errors

In practical applications, spatial registration errors often exist between visible light and thermal imaging sensors, which may arise from device installation deviations, lens distortion, or motion-induced image misalignment. To evaluate the robustness of the proposed model under registration errors, we designed an ablation experiment on spatial offset. We kept the thermal images fixed and applied horizontal rightward and vertical downward pixel shifts to the visible light images, with shift amounts of 0 (no shift), 2, 4, 8, and 16 pixels, respectively. The experiment was conducted on the bright scene subset of the RGBT-CC dataset, using GAME(0) and GAME(3) as evaluation metrics. The results are shown in Figure 11. As can be seen from the figure, as the shift amount of the visible light images increases, both GAME(0) and GAME(3) gradually rise, indicating a decline in model performance with increasing registration error. At a shift of 2 pixels, GAME(0) increases by approximately 4.3%, and GAME(3) by about 5.4%, suggesting that the model possesses a certain tolerance to minor registration errors. When the shift increases to 8 pixels, GAME(0) and GAME(3) rise by approximately 36.5% and 40.2%, respectively, indicating a more noticeable performance degradation. At a shift of 16 pixels, the performance decline becomes even more significant, demonstrating that large-scale spatial misalignment has a substantial impact on the model. Although model performance decreases with increasing registration error, it remains relatively stable under small-scale shifts (≤4 pixels). This robustness can be partly attributed to the proposed cross-modal adaptive fusion module (CMAFM), which dynamically adjusts the fusion ratio of features from the two modalities through channel-independent attention weights, thereby mitigating feature matching conflicts caused by spatial misalignment to some extent. Additionally, the multi-scale aggregation module (MSAM) enhances the perception of local structures and global distributions by fusing features from different receptive fields, thus compensating to some extent for local information loss induced by the shifts.
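The synthetic misregistration used in this experiment can be reproduced with a simple translation of the visible image. The sketch below assumes that the rightward and downward shifts are applied together by the same number of pixels, that the image size is preserved, and that vacated pixels are filled with zeros; none of these details is stated in the text. The function name `shift_right_down` is hypothetical.

```python
def shift_right_down(image, shift, fill=0):
    """Translate a 2-D image right and down by `shift` pixels, keeping its size."""
    h, w = len(image), len(image[0])
    out = [[fill] * w for _ in range(h)]
    for y in range(shift, h):
        for x in range(shift, w):
            out[y][x] = image[y - shift][x - shift]
    return out


rgb_channel = [[1, 2, 3],
               [4, 5, 6],
               [7, 8, 9]]
for row in shift_right_down(rgb_channel, 1):
    print(row)
```

In an evaluation loop, the shifted visible image would be paired with the unmodified thermal image for each of the shift amounts 0, 2, 4, 8, and 16.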

Figure 11.

Experimental Results of Spatial Registration Error Ablation.

6. Conclusions

To address the performance degradation of visible light (RGB) under low illumination and adverse weather conditions, as well as the representational challenges caused by cross-modal scale discrepancies, this paper proposes a novel RGB-T crowd density estimation and counting framework that integrates a cross-modal adaptive mechanism with multi-scale feature aggregation. The designed cross-modal adaptive fusion module (CMAFM) employs a lightweight attention mechanism to adaptively assign modality reliability, thereby dynamically adjusting the contributions of RGB and thermal infrared features under varying imaging conditions. The multi-scale aggregation module (MSAM) enhances the model’s adaptability to scale variations and contextual awareness by performing weighted fusion of global and local features at intermediate scales. Together, these modules significantly improve the model’s representational robustness in complex scenarios.

Extensive experiments demonstrate that the proposed method consistently improves counting and density estimation performance on public benchmarks. On the DroneRGBT dataset, the proposed method achieves relative improvements of approximately 1.57%, 4.05%, 5.05%, 4.63%, and 0.78% in GAME(0)–GAME(3) and RMSE metrics, respectively. On the RGBT-CC dataset, the corresponding improvements are approximately 2.48%, 1.89%, 2.80%, 0.67%, and 1.59%. Quantitative results and ablation studies under varying illumination conditions consistently indicate that the CMAFM effectively mitigates performance degradation caused by modality reliability fluctuations, while the MSAM enhances the modeling capacity for targets of different scales and density distributions.

The proposed method not only achieves the best results on the objective metrics but, more importantly, remains stable in complex scenarios with drastic illumination changes. This characteristic gives the study practical value in three respects. First, the developed system can provide 24/7 crowd monitoring for urban public security management, supporting early warning in critical scenarios such as nighttime surveillance and emergency command. Second, it offers crowd flow statistics and density assessment for intelligent traffic management and security at large-scale events, assisting administrators in resource allocation and risk control. Third, its reduced dependence on visible-light quality is expected to support deployment under adverse weather conditions such as haze, rain, and snow, expanding the deployment scope of intelligent vision systems in real-world settings. Future work may focus on integrating temporal video information, cross-device and cross-domain adaptation, and model compression and edge deployment, to further enhance the system’s real-time performance and generalization capability in practical applications.

In future work, to address the challenging issues in current RGB-T crowd counting and the limitations of existing methods, we will further investigate the following directions:

- Cross-dataset and Cross-device Generalization: Study the adaptability and generalization of models across datasets collected with different devices and under varying scene distributions. Explore domain adaptation and meta-learning strategies to reduce dependence on specific data annotations and enhance the model’s robustness in diverse real-world environments.

- Suppression of Complex Heat Sources and Background Interference: To counter interference from non-human heat sources (such as lights, vehicles, and animals) and complex backgrounds in thermal infrared images, investigate more discriminative feature representations and fusion mechanisms that improve the model’s ability to distinguish targets from interference.

- Adaptive Fusion Strategies for Extremely High-Density Crowds: In highly crowded scenes, study mechanisms for dynamically adjusting the granularity and receptive fields of multimodal fusion, and optimize feature extraction and aggregation to better capture severely occluded and extremely small-scale targets, thereby enhancing counting accuracy.

- Fine-Grained Multimodal Registration and Alignment: Address the spatial misalignment issues between RGB and thermal infrared images caused by differences in perspective, resolution, and imaging principles. Investigate adaptive pixel-level or feature-level registration techniques to mitigate the impact of misalignment on fusion effectiveness and improve the geometric consistency of feature fusion.

These research directions will help overcome the limitations of current methods and advance RGB-T crowd counting technology toward greater efficiency, robustness, and practicality.

Author Contributions

Conceptualization, J.L. and Z.N.; methodology, J.L. and Z.N.; software, J.L.; validation, J.L. and Y.Z.; resources, J.L. and L.T.; data curation, Y.Z. and L.T.; writing—original draft preparation, J.L. and Z.N.; writing—review and editing, Y.Z. and L.T.; funding acquisition, Z.N. and Y.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Sichuan Science and Technology Program (Grant No. 2025ZDZX0009).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original data for DroneRGBT and RGBT-CC presented in this research are openly available at https://github.com/VisDrone/DroneRGBT and https://github.com/chen-judge/RGBTCrowdCounting, respectively (accessed on 11 August 2025).

Acknowledgments

Zuodong Niu expresses gratitude to Ya Qiu for the support.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| RGB-T | Visible Light and Thermal Imaging |
| CMAFM | Cross-Modal Adaptive Fusion Module |
| MSAM | Multi-Scale Aggregation Module |
| MLP | Multilayer Perceptron |
| CNNs | Convolutional Neural Networks |
| FIDTM | Focal Inverse Distance Transform Map |
| MSE | Mean Squared Error |
| GAME | Grid Average Mean Absolute Error |
| RMSE | Root Mean Square Error |


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.