Abstract

Dense Video Captioning (DVC) is a multimedia task that generates a series of temporally localized descriptions for the events unfolding within a video. In contrast to traditional video captioning, which usually produces a single summary or caption for an entire video, DVC requires identifying multiple events within a video, determining their temporal boundaries, and producing a natural language description for each event. This review examines recent techniques, datasets, and evaluation protocols in the field of DVC. We categorize and assess existing methodologies, discuss the characteristics, strengths, and limitations of widely used datasets, and highlight the challenges and opportunities in evaluating DVC models. We also identify current research trends, open challenges, and potential avenues for future work in this domain. The primary contributions of this review are: (1) a comprehensive survey of state-of-the-art DVC techniques, (2) an extensive review of commonly employed datasets, (3) a discussion of evaluation metrics and protocols, and (4) the identification of emerging trends and future directions.

1. Introduction

In the rapidly evolving landscape of computer vision and natural language processing (NLP), video understanding has emerged as a pivotal area of research. With the proliferation of video content across various platforms [1], the need for automated systems that can effectively analyze, interpret, and summarize video data has become increasingly urgent. Dense Video Captioning (DVC) stands at the forefront of this advancement [2], representing a sophisticated task that combines video analysis with language generation.

DVC aims to localize and describe multiple events within long, untrimmed videos [3]. Unlike traditional video captioning, which typically generates a single summary sentence or a sequence of sentences for the entire video, DVC requires the model to detect and caption multiple events occurring at different time intervals within the video. This task is highly challenging due to the complexity and diversity of video content [4], the variable length and overlap of events, and the need for accurate temporal localization and coherent natural language descriptions.

The motivation behind DVC stems from its potential applications in various domains. For instance, in video retrieval systems, DVC can enable more precise and intuitive search capabilities by allowing users to query based on event descriptions [5]. In video accessibility, DVC can provide detailed captions for deaf and hard-of-hearing individuals, enhancing their understanding and enjoyment of video content. Additionally, DVC has applications in content analysis [6], surveillance, and sports video summarization, where automatic event detection and description can save time and resources.

This comprehensive review paper aims to provide a detailed analysis of the state-of-the-art techniques, datasets, and evaluation protocols [7] for Dense Video Captioning (DVC). The paper is structured to cover the following key areas:

Fundamentals of Dense Video Captioning: This section introduces the fundamental concepts and challenges associated with DVC, including the subprocesses of video feature extraction, temporal event localization, and dense caption generation.

Techniques for Dense Video Captioning: In this section, we survey the existing methodologies for DVC, categorizing them into key subprocesses and discussing the strengths and limitations of each approach. We will cover a wide range of techniques, from traditional computer vision methods to deep learning-based architectures, including encoder-decoder models, attention mechanisms, and multimodal fusion techniques.

Datasets for Dense Video Captioning: This section presents an overview of the most widely used datasets for training and evaluating DVC models. We will discuss the characteristics, strengths, and limitations of each dataset, highlighting the challenges and opportunities they present for researchers in the field.

Evaluation Protocols and Metrics: In this section, we review the evaluation metrics commonly employed in DVC research, including BLEU [8], METEOR [9], CIDEr [10], ROUGE-L [11], SODA [12], SPICE [13], and WMD [14]. We will discuss the challenges in evaluating DVC models and the importance of developing more comprehensive and interpretable evaluation protocols.

Emerging Trends and Future Directions: This section summarizes the current research trends in DVC and identifies open challenges and limitations. We will propose potential future directions for DVC research, including improved event recognition and localization algorithms, enhanced multimodal integration, and more efficient and interpretable models.

The contributions of this review paper are as follows:

- (1) A comprehensive survey of the state-of-the-art techniques for Dense Video Captioning, categorizing and analyzing the existing methodologies.

- (2) An in-depth review of the most widely used datasets for DVC, highlighting their strengths and limitations.

- (3) A detailed discussion of the evaluation metrics and protocols commonly employed in DVC research, including challenges and opportunities for future developments.

- (4) Identification of emerging trends and future directions in DVC research, providing valuable insights for researchers and practitioners in the field.

2. Fundamentals of Dense Video Captioning

2.1. Definition and Objectives of DVC

Dense Video Captioning (DVC) represents an advanced multimedia task that strives to automatically produce a series of temporally localized descriptions for events unfolding within a video [15]. Unlike conventional video captioning, which usually offers a solitary summary or caption per video, DVC demands the identification of multiple events within a video, the determination of their exact temporal boundaries, and the generation of a natural language description for each event. The core objective of DVC is to furnish a thorough, detailed, and human-readable comprehension of video content, thereby enhancing video retrieval, indexing, and browsing experiences.
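To make the task definition concrete, the following minimal sketch shows one possible way to represent a DVC output as a list of captioned time intervals. The class name `Event`, its field names, and the example captions are illustrative assumptions, not a format prescribed by any dataset or method discussed here.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Event:
    """One localized, captioned event (hypothetical field names)."""
    start: float   # seconds from the beginning of the video
    end: float     # seconds; must be greater than start
    caption: str   # natural language description of the event

    def __post_init__(self) -> None:
        # Reject intervals that are empty, reversed, or start before 0.
        if not 0.0 <= self.start < self.end:
            raise ValueError("expected 0 <= start < end")


def sort_events(events: List[Event]) -> List[Event]:
    """Order events by start time; overlapping events are allowed."""
    return sorted(events, key=lambda e: (e.start, e.end))


# A made-up DVC output for a short cooking video. The two events overlap
# between 12.0 s and 14.0 s, which a single video-level caption cannot express.
prediction = sort_events([
    Event(12.0, 30.5, "A person chops vegetables on a board."),
    Event(0.0, 14.0, "A person washes vegetables in a sink."),
])
```

Unlike a single video-level caption, this representation keeps every description tied to its own temporal boundaries.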

2.2. Key Subprocesses

Video Feature Extraction (VFE): VFE constitutes the initial critical subprocess in DVC [16,17]. It entails extracting meaningful representations from raw video data for subsequent tasks like event localization and caption generation. This typically involves employing deep learning models, such as Convolutional Neural Networks (CNNs) for spatial feature extraction and Recurrent Neural Networks (RNNs) or Transformers for temporal feature encoding. The aim of VFE is to capture both the visual content and the temporal dynamics of the video.

Temporal Event Localization (TEL): TEL pertains to identifying the start and end times of events within a video. This subprocess necessitates precise temporal segmentation of the video into meaningful event segments. TEL can be tackled using diverse techniques, including sliding window methods, proposal-based methods, and end-to-end detection frameworks. The challenge resides in accurately detecting events, particularly when they overlap or occur in rapid succession.
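As a rough illustration of the sliding window idea mentioned above, the sketch below enumerates fixed-length candidate segments, measures their overlap with temporal intersection over union (tIoU), and removes near-duplicate candidates with greedy non-maximum suppression. The function names, window sizes, scores, and threshold are assumptions chosen for the example. In practice the scores would come from a learned proposal model.

```python
from typing import List, Sequence, Tuple

Segment = Tuple[float, float]  # (start, end) in seconds


def temporal_iou(a: Segment, b: Segment) -> float:
    """Intersection over union of two time intervals."""
    inter = max(0.0, min(a[1], b[1]) - max(a[0], b[0]))
    union = (a[1] - a[0]) + (b[1] - b[0]) - inter
    return inter / union if union > 0 else 0.0


def sliding_window_proposals(duration: float, window: float,
                             stride: float) -> List[Segment]:
    """Enumerate fixed-length candidate segments that fit in the video."""
    proposals = []
    start = 0.0
    while start + window <= duration:
        proposals.append((start, start + window))
        start += stride
    return proposals


def temporal_nms(proposals: Sequence[Segment], scores: Sequence[float],
                 iou_threshold: float) -> List[Segment]:
    """Greedily keep high-scoring segments, dropping heavily overlapping ones."""
    order = sorted(range(len(proposals)), key=lambda i: scores[i], reverse=True)
    kept: List[Segment] = []
    for i in order:
        if all(temporal_iou(proposals[i], k) <= iou_threshold for k in kept):
            kept.append(proposals[i])
    return kept


# Toy example: a 10 s video, 4 s windows, 2 s stride, made-up scores.
proposals = sliding_window_proposals(duration=10.0, window=4.0, stride=2.0)
kept = temporal_nms(proposals, scores=[0.9, 0.8, 0.3, 0.7], iou_threshold=0.3)
```

Because suppression discards any candidate that overlaps a kept segment beyond the threshold, a low threshold can remove events that genuinely overlap in time, which is one reason overlapping events are difficult for this family of methods.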

Dense Caption Generation (DCG): DCG is the culminating subprocess in DVC, where natural language descriptions are crafted for each localized event. This involves encoding the visual and temporal features of the event into a format that can be decoded into a coherent sentence or phrase. DCG often harnesses encoder-decoder architectures, such as sequence-to-sequence models or Transformer-based models, trained on extensive datasets of video-caption pairs. The objective is to generate captions that are both precise and fluent, encapsulating the essential details of the event.

2.3. Challenges in DVC

Event Recognition and Overlapping Events: A primary challenge in DVC is accurately recognizing and differentiating between distinct events, especially when they occur simultaneously or overlap in time. This necessitates models with a robust ability to distinguish between visually similar events and manage temporal ambiguities.

Event Localization Accuracy: Precise event localization is vital for generating meaningful captions. However, achieving high localization accuracy is challenging due to the complexity and variability of video content. Factors like camera motion, occlusions, and varying event durations can all contribute to localization errors.

Multimodal Integration and Natural Language Generation: DVC requires the integration of multiple modalities, encompassing visual, auditory, and temporal information. Effective multimodal fusion is crucial for generating accurate and informative captions. Furthermore, the generated captions must be natural and fluent, which mandates models with a profound understanding of language structure and semantics.

3. Techniques for Dense Video Captioning

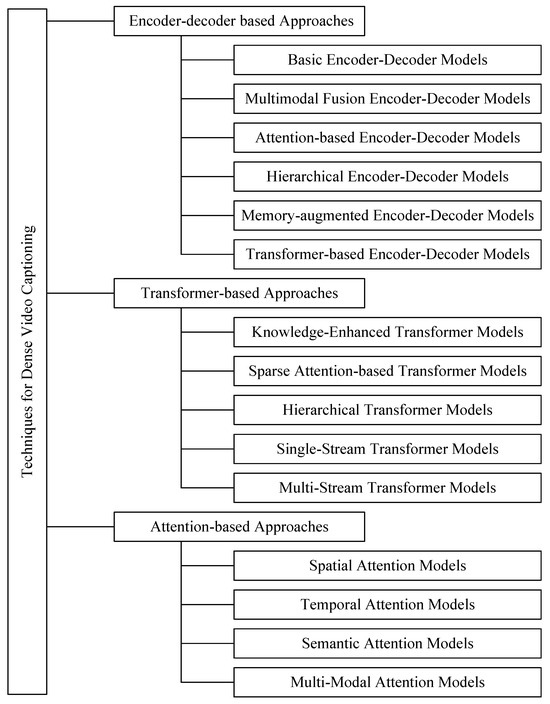

Recent years have seen remarkable progress in video captioning: the automated generation of descriptive text for video content. Central to these advancements are attention-based methodologies, which enable models to dynamically focus on the most relevant spatiotemporal features within videos. This review provides a structured analysis of recent techniques in dense video captioning, emphasizing three methodological pillars: attention mechanisms, transformer architectures, and encoder-decoder frameworks. It highlights innovations in modeling long-range temporal dependencies and integrating multimodal cues (visual, auditory, textual) to enhance caption relevance and fidelity. These advances are particularly valuable given the growing demand for video captioning in human–computer interaction systems, content-based retrieval platforms, and automated video indexing pipelines. As visualized in Figure 1, our analysis examines current approaches through three complementary lenses (attention-driven strategies, transformer-based paradigms, and encoder-decoder architectures) to offer a roadmap for researchers and practitioners in this field.

Figure 1.

Techniques for Dense Video Captioning.

3.1. Encoder-Decoder Based Approaches

3.1.1. Basic Encoder-Decoder Models

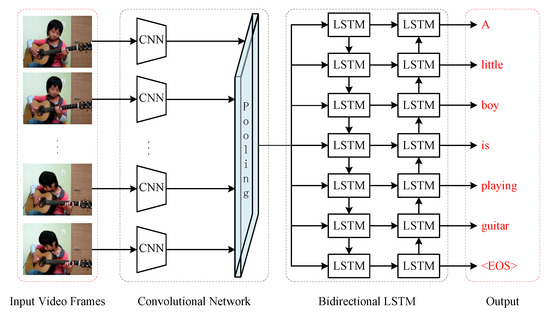

Video captioning aims to generate natural language descriptions of video content, and the basic encoder-decoder framework has been widely adopted for this task. Early methods employed models such as Recurrent Neural Networks (RNNs) and Convolutional Neural Networks (CNNs) to encode video features and decode them into captions. For instance, Venugopalan et al. [18] introduced the first encoder-decoder model for video captioning. As illustrated in Figure 2, fully connected layer features are extracted from each frame using a pre-trained CNN such as VGGNet. These features are then mean-pooled over the entire duration of the video, and the pooled vector is fed into an LSTM (Long Short-Term Memory) network at each time step. The LSTM generates one word per time step, conditioned on both the video features and the preceding word, until the end-of-sentence tag is chosen. Yao et al. [19] further improved this approach by incorporating temporal attention mechanisms.

Figure 2.

Redrawn schematic of the pioneering video caption encoder-decoder network, adapted from [19].

However, these basic models often suffer from limited performance. They struggle to capture complex temporal and spatial dependencies in videos, which leads to generated captions that lack specificity and diversity. This limitation underscores the need for more advanced techniques to enhance the quality and accuracy of video captioning.
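One way to see why mean-pooled frame features struggle with temporal dependencies is a small numerical check. The sketch below uses made-up two-dimensional "frame features" (real CNN features have thousands of dimensions) and shows that mean pooling produces the same video-level vector regardless of frame order. The helper name `mean_pool` is an assumption for illustration.

```python
from typing import List

Vector = List[float]


def mean_pool(frame_features: List[Vector]) -> Vector:
    """Average per-frame feature vectors into one video-level vector."""
    n = len(frame_features)
    dim = len(frame_features[0])
    return [sum(f[d] for f in frame_features) / n for d in range(dim)]


# Toy features for three frames (values are invented for the example).
frames = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]]

forward = mean_pool(frames)
backward = mean_pool(list(reversed(frames)))

# Both orderings collapse to the same vector, so a decoder that only sees
# the pooled vector cannot tell "open then close" from "close then open".
print(forward, backward)
```

This order invariance is one motivation for the attention-based and hierarchical encoders discussed in the following subsections.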

3.1.2. Multimodal Fusion Encoder-Decoder Models

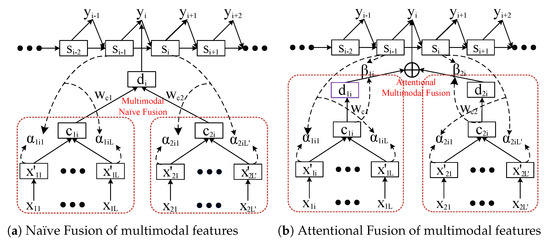

Multimodal fusion approaches aim to integrate information from video, audio, and text to generate more accurate captions. Hori et al. [20] introduced an attention-based method for multimodal fusion in video description, as shown in Figure 3. This method utilizes temporal attention mechanisms to selectively focus on encoded features from specific time frames. By combining visual, motion, and audio features, it captures a more comprehensive representation of the video content, enhancing the quality of the generated captions.

Figure 3.

Redrawn architecture of multimodal fusion encoder-decoder models (adapted from [20]) for video caption generation.

Building upon the idea that videos contain both visual and auditory information, Huang et al. [21] proposed a model that leverages both visual and audio features. Their model employs separate encoders for each modality and generates captions based on a fused multimodal representation. Similarly, Aafaq et al. [22] introduced a model that incorporates early linguistic information fusion through a visual-semantic embedding framework. However, these methods often require costly additional annotations for non-video modalities and often rely on simple fusion techniques, such as concatenation or element-wise summation. These techniques may not fully exploit the complementary nature of multimodal information, highlighting the need for more sophisticated fusion strategies.
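The simple fusion operations mentioned above can be sketched in a few lines. The example below contrasts concatenation, element-wise summation, and a fixed convex combination. All function names and the scalar `alpha` are illustrative assumptions, and none of this reproduces the specific models in [20,21,22].

```python
from typing import List

Vector = List[float]


def fuse_concat(visual: Vector, audio: Vector) -> Vector:
    """Concatenation: keeps both modalities, output size is the sum of sizes."""
    return visual + audio


def fuse_sum(visual: Vector, audio: Vector) -> Vector:
    """Element-wise sum: requires equal sizes and weighs modalities equally."""
    if len(visual) != len(audio):
        raise ValueError("element-wise sum needs vectors of equal length")
    return [v + a for v, a in zip(visual, audio)]


def fuse_weighted(visual: Vector, audio: Vector, alpha: float) -> Vector:
    """Convex combination with a fixed weight alpha on the visual stream."""
    if len(visual) != len(audio):
        raise ValueError("weighted fusion needs vectors of equal length")
    return [alpha * v + (1.0 - alpha) * a for v, a in zip(visual, audio)]


# Toy modality features (made-up values).
visual_feat = [1.0, 2.0]
audio_feat = [3.0, 4.0]

concat = fuse_concat(visual_feat, audio_feat)
summed = fuse_sum(visual_feat, audio_feat)
weighted = fuse_weighted(visual_feat, audio_feat, alpha=0.25)
```

In all three cases the contribution of each modality is fixed in advance and does not depend on what is being described, which is the limitation that attention-based fusion aims to address.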

3.1.3. Attention-Based Encoder-Decoder Models

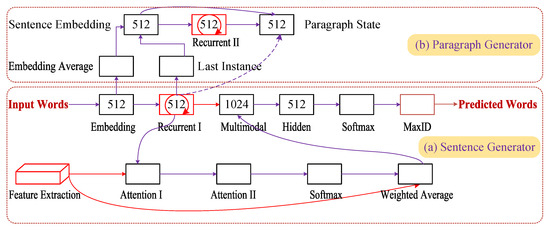

To overcome the limitations of basic encoder-decoder models, attention mechanisms have been introduced. These mechanisms enable the model to focus on salient parts of the video while generating captions by dynamically adjusting the weight assigned to different video frames based on their relevance to the currently generated caption. Yu et al. [23] proposed a hierarchical RNN with an attention mechanism to encode videos at multiple temporal scales. The overall structure of this hierarchical RNN-based attention encoder-decoder framework is illustrated in Figure 4.

Figure 4.

Redrawn hierarchical RNN-based attention encoder-decoder framework for video captioning (adapted from [23]): Input in green, output in blue, recurrent components in red, and sentence generator reinitialization indicated by orange arrow.

Building on this, Pan et al. [24] introduced a spatio-temporal attention model that captures both spatial and temporal attention, further enhancing the model’s ability to focus on important video features. Despite these advancements, attention-based models still face challenges in capturing long-range dependencies and generating diverse captions. Ongoing research continues to explore solutions to these remaining issues.

3.1.4. Hierarchical Encoder-Decoder Models

Hierarchical encoder-decoder models aim to capture both local and global temporal structures in videos by employing multiple encoders and decoders that operate at different temporal scales. Wang et al. [25] proposed a hierarchical recurrent neural encoder that effectively captures both short-term and long-term dependencies in videos, which are then decoded into captions using an LSTM. This approach highlights the potential of hierarchical models in understanding complex video structures.

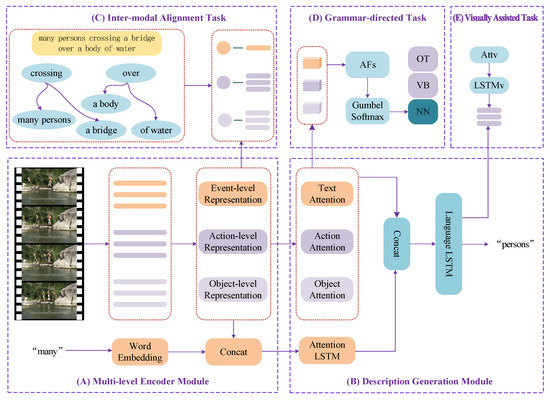

Building on this concept, Gao et al. [26] introduced the Hierarchical Representation Network with Auxiliary Tasks (HRNAT), as illustrated in Figure 5. This framework incorporates auxiliary tasks, such as cross-modality matching and syntax-guiding, to enhance caption generation. The novelty of their approach lies in the shared knowledge and parameters between auxiliary tasks and the main stream, including the Hierarchical Encoder Module, Hierarchical Attention Mechanism, and Language Generation Mechanism. While these hierarchical approaches offer a powerful way to model the complex structure of videos and captions at multiple levels of granularity, they can also increase model complexity and computational cost due to the multiple stages of processing involved.

Figure 5.

Redrawn hierarchical encoder-decoder model (adapted from [26]): HRNAT overview for video captioning (vc-HRNAT) with Event-level (orange), Action-level (light red), and Object-level (green) detail.

3.1.5. Memory-Augmented Encoder-Decoder Models

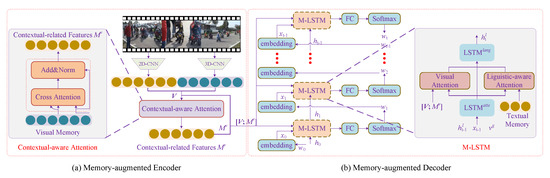

Memory-augmented encoder-decoder models have emerged as a powerful approach to enrich video captioning with external knowledge. These models integrate external memory to store and retrieve pertinent information, enabling them to capture implicit knowledge and produce more diverse captions. Wang et al. [27] proposed a memory-attended recurrent network that leverages an external memory to store visual and textual context. This context is then accessed during caption generation to enhance the overall context of the captions.

Building on this concept, Jing et al. [28] proposed the Memory-based Augmentation Network (MAN), as shown in Figure 6. The MAN consists of two key components: a memory-augmented encoder and a decoder. The encoder integrates a visual memory module that stores diverse visual contexts from the dataset and employs contextual-aware attention to extract essential video-related features. Conversely, the decoder incorporates a symmetric textual memory to capture external linguistic cues from dataset descriptions, using linguistic-aware cross-attention to gather relevant language features for each token at every time step. By leveraging visual and textual memories learned from the video-language dataset, the MAN enhances contextual understanding, thereby improving the quality and diversity of generated captions. While the performance of such memory-augmented models depends on the quality and diversity of the stored information, and maintaining the memory can be computationally intensive, their ability to produce high-quality and diverse captions makes them a promising direction in video captioning research.

Figure 6.

Overview of the proposed MAN architecture for video captioning (redrawn by the authors, adapted from [28]).

3.1.6. Transformer-Based Encoder-Decoder Models

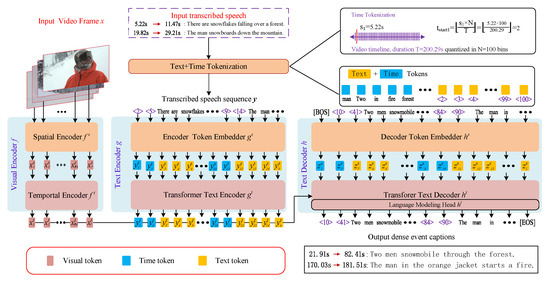

Transformer models, initially designed for natural language processing, have recently gained traction in the field of video captioning due to their exceptional capacity to capture long-range dependencies. Yang et al. [29] pioneered the use of a Transformer-based model for video captioning, which outperformed traditional RNN-based approaches. Building on this success, Zhou et al. [30] further advanced the field by presenting an end-to-end Transformer model for dense video captioning. This model utilizes an encoder for video feature extraction and a decoder for caption generation, significantly enhancing efficiency through parallel processing.

More recently, the introduction of Vid2Seq by Yang et al. [31] has marked a new milestone in video captioning. As depicted in Figure 7, Vid2Seq is a pretrained Transformer-based encoder-decoder model specifically designed for narrated videos. It takes video frames and a transcribed speech sequence as inputs and outputs an event sequence in which each event includes a textual description and the corresponding timestamps in the video. Despite its top performance across multiple benchmarks, it is important to note that Transformer models require substantial data for training, are computationally intensive, and often exhibit less interpretability than RNN-based models due to their reliance on self-attention mechanisms.

Figure 7.

Transformer-based Encoder-Decoder Models for video captioning (redrawn by the authors, adapted from [31]): This approach frames dense event captioning as a sequence-to-sequence task, utilizing special time tokens to enable the model to comprehend and produce token sequences containing both textual semantics and temporal localization details, thereby anchoring each sentence to the corresponding video segment.
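The idea of mixing time tokens and text tokens in one output sequence can be sketched as follows. This is a simplified illustration, not the implementation from [31]: the number of bins, the `<time_k>` token spelling, whitespace word splitting, and the start-end-caption ordering are all assumptions made for the example.

```python
from typing import List, Tuple

EventTuple = Tuple[float, float, str]  # (start_sec, end_sec, caption)

NUM_BINS = 100              # assumption: number of discrete time tokens
PREFIX, SUFFIX = "<time_", ">"


def time_to_token(t: float, duration: float, num_bins: int = NUM_BINS) -> str:
    """Quantize an absolute time into a relative time token."""
    idx = min(num_bins - 1, int(t * num_bins / duration))
    return f"{PREFIX}{idx}{SUFFIX}"


def is_time_token(token: str) -> bool:
    return token.startswith(PREFIX) and token.endswith(SUFFIX)


def token_to_time(token: str, duration: float, num_bins: int = NUM_BINS) -> float:
    """Map a time token back to the lower edge of its bin, in seconds."""
    idx = int(token[len(PREFIX):-len(SUFFIX)])
    return idx * duration / num_bins


def events_to_sequence(events: List[EventTuple], duration: float) -> List[str]:
    """Serialize events, sorted by start time, as: start end word word ..."""
    tokens: List[str] = []
    for start, end, caption in sorted(events):
        tokens.append(time_to_token(start, duration))
        tokens.append(time_to_token(end, duration))
        tokens.extend(caption.split())
    return tokens


def sequence_to_events(tokens: List[str], duration: float) -> List[EventTuple]:
    """Parse a well-formed token sequence back into events."""
    events: List[EventTuple] = []
    i = 0
    while i < len(tokens):
        start = token_to_time(tokens[i], duration)
        end = token_to_time(tokens[i + 1], duration)
        i += 2
        words = []
        while i < len(tokens) and not is_time_token(tokens[i]):
            words.append(tokens[i])
            i += 1
        events.append((start, end, " ".join(words)))
    return events


# Made-up events for a 200 s video.
events = [(50.0, 120.0, "he walks outside"), (20.0, 60.0, "a man opens a door")]
sequence = events_to_sequence(events, duration=200.0)
decoded = sequence_to_events(sequence, duration=200.0)
```

Quantization limits temporal precision to one bin (here 2 s for a 200 s video), so the round trip is exact in this example only because the timestamps fall on bin edges.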

3.2. Discussion: Encoder-Decoder-Based Approaches

Encoder-decoder-based approaches for video captioning have evolved significantly, transitioning from simple CNN-RNN models to complex Transformer-based and modular frameworks. Attention mechanisms have been crucial for improving visual-textual alignment. Despite this progress, challenges persist in generating diverse and accurate captions, capturing long-range dependencies, and incorporating external knowledge. Addressing them remains important because video captioning is vital for applications such as video retrieval and human–computer interaction.

3.3. Transformer-Based Approaches

3.3.1. Knowledge-Enhanced Transformer Models

Knowledge-enhanced transformer models are designed to integrate external knowledge into the video captioning process, aiming to enhance the diversity and accuracy of the generated captions. Gu et al. [32] introduced the Text with Knowledge Graph Augmented Transformer (TextKG) for this purpose. In TextKG, both the external and internal streams utilize the self-attention module to model interactions among multi-modality information. Specifically, the external stream focuses on modeling interactions between knowledge graphs and video content, while the internal stream exploits multi-modal information within videos. This approach allows the model to leverage external knowledge effectively.

Similarly, Zhang et al. [33] proposed a Center-enhanced Video Captioning model with Multimodal Semantic Alignment. This model integrates feature extraction and caption generation into a unified framework and introduces a cluster center enhancement module to capture key information within the multimodal representation. This facilitates more accurate caption generation. Both of these models, TextKG and the Center-enhanced Video Captioning model, effectively utilize external knowledge to overcome the limitations of relying solely on video-description pairs. By incorporating knowledge graphs and memory networks, they capture richer context, thereby enhancing the cognitive capability of the generated captions. However, the performance of these models is contingent upon the quality and coverage of the external knowledge sources they utilize.

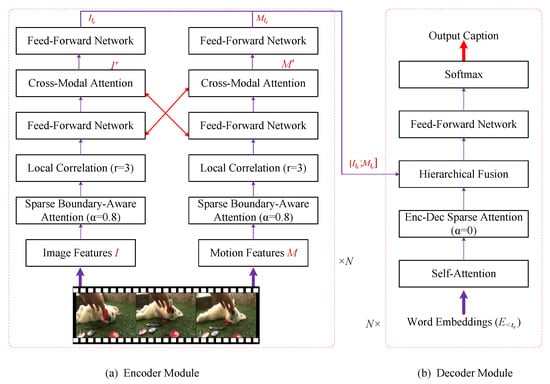

3.3.2. Sparse Attention-Based Transformer Models

Sparse attention-based transformer models have proven effective for video captioning by focusing on informative regions, reducing redundancy and computational complexity. These models enhance efficiency and caption quality via sparse attention mechanisms. Notable examples include the Sparse Boundary-Aware Transformer (SBAT) by Jin et al. [34], which uses boundary-aware pooling and local correlation to capture features and outperform state-of-the-art methods, and the Universal Attention Transformer (UAT) by Im et al. [35], which employs a full transformer structure with ViT, FEGs, and UEA for competitive performance. As illustrated in Figure 8, the encoder-decoder structure with sparse boundary-aware attention is used for video captioning.

Figure 8.

The SBAT architecture (redrawn by the authors, adapted from [34]) integrates a sparse boundary-aware strategy (Sp) into both encoder and decoder multihead attention blocks.

However, these models have limitations. Performance hinges on the accuracy of the sparse attention: if key details are misidentified or omitted, caption quality degrades. Designing these mechanisms requires careful hyperparameter tuning, which is time-consuming and dataset-specific. Moreover, fast-moving objects or complex scenes make it harder to select sparse, informative regions. Efficient models such as SBAT and SnapCap [42] aim to reduce complexity and improve speed, with SnapCap generating captions directly from the compressed video to avoid costly processing.
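The trade-off described above can be illustrated with a generic top-k sparsification of attention scores. This sketch is not the boundary-aware strategy of SBAT [34]. It only shows the general principle that positions outside the selected set receive exactly zero weight. The function name and the example scores are assumptions.

```python
import math
from typing import List


def topk_sparse_attention(scores: List[float], k: int) -> List[float]:
    """Softmax over the k largest scores; every other position gets 0.0."""
    if not 1 <= k <= len(scores):
        raise ValueError("k must be between 1 and len(scores)")
    # Indices of the k highest scores (ties keep their original order).
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    peak = max(scores[i] for i in top)  # subtract the max for stability
    exps = {i: math.exp(scores[i] - peak) for i in top}
    total = sum(exps.values())
    return [exps[i] / total if i in exps else 0.0 for i in range(len(scores))]


# Made-up relevance scores for four frames.
weights = topk_sparse_attention([2.0, 0.1, 2.0, -1.0], k=2)
print(weights)  # frames 1 and 3 are ignored entirely
```

If the scoring function ranks an informative frame below the cut-off, its content cannot influence the caption at all, which is why the choice of `k` and the quality of the scores matter so much.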

3.3.3. Hierarchical Transformer Models

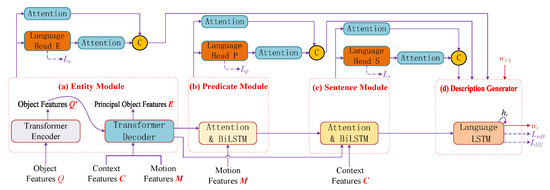

Hierarchical transformer models are designed to capture the intricate hierarchical structure of videos, which encompasses scenes, actions, and objects at multiple levels of granularity. Ye et al. [36] proposed a Hierarchical Modular Network (HMN) specifically for video captioning. This network consists of three key modules: the entity module, which identifies principal objects within the video; the predicate (or action) module, which learns action representations; and the sentence module, which generates the final captions. As illustrated in Figure 9, the HMN adheres to the conventional encoder-decoder paradigm, with the HMN itself serving as the encoder.

Figure 9.

The proposed Hierarchical Modular Network (redrawn by the authors, adapted from [36]) functions as a robust video encoder, linking video representations to linguistic semantics through three levels using entity, predicate, and sentence modules. Each sentence module has its own input, which is extracted from captions, and linguistic guidance.

Similarly, Ging et al. [37] introduced COOT, another hierarchical model for video captioning. COOT includes components for capturing relationships between frames and words, producing clip and sentence features, and generating final video and paragraph embeddings. Both HMN and COOT decompose the video captioning task into sub-tasks, such as scene recognition, action recognition, and caption generation, and then combine the results of these sub-tasks to produce the final caption. By leveraging the hierarchical structure of videos, these models are able to generate captions that are more semantically meaningful and grammatically correct. However, the effective design of these modular architectures requires careful consideration to ensure seamless information flow across the different levels of hierarchy.

3.3.4. Single-Stream Transformer Models

To tackle the challenges of video captioning, single-stream transformer models have emerged, harnessing the potent self-attention mechanism inherent in transformers. These models strive to integrate both visual and textual information within a cohesive framework. Chen et al. [38] presented the Two-View Transformer (TVT) network, which employs a transformer encoder to capture temporal information and a fusion decoder to merge visual and textual modalities. TVT exhibits enhanced performance over RNN-based approaches, underscoring the efficiency and efficacy of transformers in the realm of video captioning.

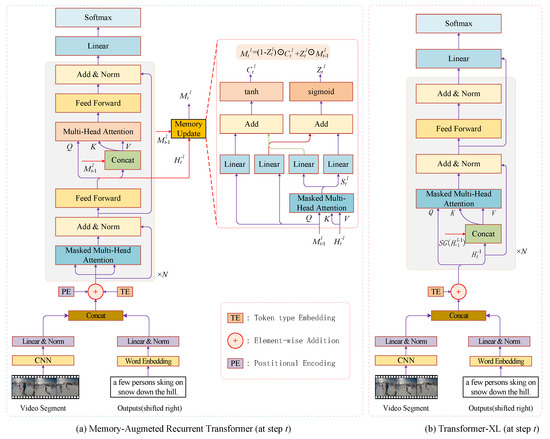

Building on this, Lei et al. [39] introduced MART, which enhances the transformer architecture by incorporating an external memory module to facilitate sentence-level recurrence. This memory module aids in modeling the context of preceding video segments and sentences, thereby producing more coherent paragraph captions. In MART, notably, the encoder and decoder are shared, as illustrated in Figure 10 (left). Initially, video and text inputs are encoded and normalized separately. Figure 10 (right) showcases a modified version of Transformer-XL tailored for video paragraph captioning. Nonetheless, these models encounter difficulties with complex temporal dynamics and multi-modal interactions, and they demand substantial amounts of paired data, which is both costly and time-consuming to acquire.

Figure 10.

Left: the proposed Memory-Augmented Recurrent Transformer (MART) for video paragraph captioning (redrawn by the authors, adapted from [39]). Right: the Transformer-XL model for the same task. Relative PE stands for Relative Positional Encoding, and SG(·) denotes stop-gradient [40].

3.3.5. Multi-Stream Transformer Models

To capture the diverse information in videos more effectively, multi-stream transformer models have been developed. Wang et al. introduced the Collaborative Three-Stream Transformers (COST) framework [41], which is illustrated in Figure 11. This framework comprises Video-Text, Detection-Text, and Action-Text branches, each tailored to model interactions between global video appearances, detected objects, and actions, respectively. By fusing these branches, COST generates comprehensive captions, and a cross-granularity attention module ensures feature alignment across the different streams. This design allows COST to capture intricate interactions between objects and their actions/relations across various modalities.

Figure 11.

The proposed COST method’s network architecture (redrawn by the authors, adapted from [41]) comprises three transformer branches: Action-Text, Video-Text, and Detection-Text. A cross-granularity attention module aligns the interactions modeled by these branches, with Y, H, and X representing their respective interactions.

In a different approach, Sun et al. [42] presented SnapCap, a method that bypasses the reconstruction process and generates captions directly from compressed video measurements. This method is guided by a teacher-student knowledge distillation framework using CLIP. While multi-stream transformer models, such as COST, significantly enhance video captioning performance by leveraging complementary semantic cues, they do come with higher computational costs due to their multiple streams. SnapCap, on the other hand, offers an alternative that may address some of these computational challenges while still aiming for accurate caption generation.

3.4. Discussion: Transformer-Based Approaches

Transformer-based approaches have shown significant promise in video captioning. Basic Transformer models have demonstrated improved performance over RNN-based methods due to their ability to capture long-range dependencies. Enhanced Transformer models, such as VideoBERT, MART, and SBAT, further improve performance by incorporating additional mechanisms like memory augmentation, sparse attention, and boundary-aware pooling. Collaborative frameworks like COST and knowledge graph-augmented models provide additional context to generate more informative captions. Finally, efficient Transformer models like SnapCap and factorized autoregressive decoding enable video captioning in resource-constrained environments.

3.5. Attention-Based Approaches

3.5.1. Spatial Attention Models

Spatial attention models enhance video caption generation by highlighting the regions within frames that are essential for understanding content. Their primary goal is to capture the most prominent areas, extracting the high-resolution visual features necessary for accurate captioning. Complementing temporal attention frameworks, spatial attention mechanisms examine the spatial details of each frame to identify significant objects and activities. This combination of spatial and temporal cues improves the overall comprehension of video content.

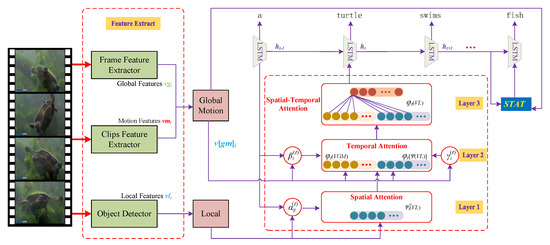

An early notable example is the Attention-Based Multimodal Fusion for Video Description (ABFVD) model by Hori et al. [20], which integrates image and motion attributes through a multimodal attention system. However, ABFVD’s reliance on basic feature combinations may limit its ability to adaptively assess feature relevance across different descriptive contexts. In contrast, Tu et al. [43] introduced the spatial-temporal attention (STAT) model, skillfully combining both spatial and temporal dimensions to focus on relevant regions and frames. As illustrated in Figure 12, the STAT model takes global motion features, local features, and model status information as input, producing dynamic visual representations that are fed into each LSTM decoder iteration. Nevertheless, a significant limitation of spatial attention models is their potential over-reliance on predefined object detection methods or region proposal networks, which may not consistently identify contextually relevant areas. Additionally, these models can be computationally intensive, especially with large and complex scenes, posing challenges for real-time implementation and resource management.

Figure 12.

The STAT unit (redrawn by the authors, adapted from [43]) is depicted, receiving local features, global-motion features, and model status as inputs. It generates dynamic visual features for LSTM decoder iterations. Layer 1 applies spatial attention to local features. Layer 2 focuses on temporal attention for both feature types. Layer 3 fuses two temporal representations.

3.5.2. Temporal Attention Models

Temporal attention models have emerged as a prominent approach in the field of video captioning, enabling dynamic focus on the most relevant frames or temporal segments within a video. Yao et al. [19] were pioneers in this domain, introducing a model that utilizes a soft attention mechanism. This mechanism assigns weights to frames based on their relevance to the current word being generated, allowing the model to selectively attend to various temporal regions for improved caption prediction.
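The soft attention step described above can be sketched in a few lines of plain Python. This is a minimal illustration rather than the model of [19]: the function name `soft_temporal_attention` is hypothetical, and relevance is scored with a simple dot product between each frame feature and the decoder state, whereas the published model learns its scoring function.

```python
import math

def soft_temporal_attention(frame_feats, decoder_state):
    """Return (weights, context) for one decoding step.

    Relevance is scored with a plain dot product, which is an assumption of
    this sketch; a trained model would learn the scoring function.
    """
    scores = [sum(f * h for f, h in zip(feat, decoder_state)) for feat in frame_feats]
    # Softmax over frames (shifted by the max score for numerical stability).
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Context vector: attention-weighted sum of the frame features.
    dim = len(frame_feats[0])
    context = [sum(w * feat[d] for w, feat in zip(weights, frame_feats)) for d in range(dim)]
    return weights, context
```

The weights sum to one, so the context vector is a convex combination of frame features that changes at every decoding step as the decoder state changes.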

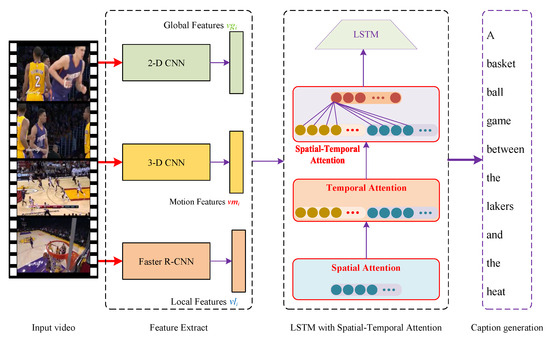

Building on this foundation, Yan et al. [16] proposed the Spatial-Temporal Attention Mechanism (STAT) for video captioning. As illustrated in Figure 13, their framework integrates three types of features (CNN, C3D, and R-CNN) through a two-stage attention mechanism. Initially, given the current semantic context, a spatial attention mechanism guides the decoder to select local features with higher spatial attention weights, representing significant regions within frames. Subsequently, a temporal attention mechanism enables the decoder to select global and motion features, along with the previously identified local features. Ultimately, these three types of features are fused to represent the information of keyframes, enhancing the model’s ability to generate more detailed descriptions.

Figure 13.

The STAT video caption framework (redrawn by the authors, adapted from [16]) utilizes a spatial-temporal attention mechanism and comprises three main modules: (1) Feature extraction, (2) LSTM with spatial-temporal attention, and (3) caption generation.

3.5.3. Semantic Attention Models

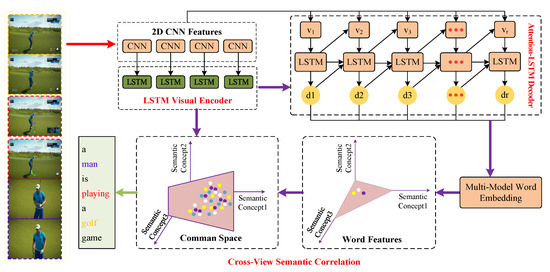

Semantic attention models aim to integrate high-level semantic information into the captioning process, thereby generating more coherent and meaningful captions by understanding the video’s content. Ye et al. [36] proposed a hierarchical network for video captioning that uses three levels to link video representations with linguistic semantics, extracting semantic features at different levels to guide caption generation. Similarly, Gao et al. [44] introduced a unified framework (Figure 14), named aLSTMs, an attention-based Long Short-Term Memory model with semantic consistency. This framework takes the dynamic weighted sum of local spatio-temporal 2D CNN feature vectors and 3D CNN feature vectors as input for the LSTM decoder. It integrates multi-word embedding and a cross-view methodology to project generated words and visual features into a common space, bridging the semantic gap between videos and their corresponding sentences.

Figure 14.

The proposed method, aLSTMs (redrawn by the authors, adapted from [44]), includes: (1) an LSTM visual encoder for video frame processing, (2) an attention-based LSTM for word generation with key feature focus, and (3) a cross-view module aligning sentences with visual content. For demonstration, words are color-coded to indicate their most relevant video frames.

Yousif et al. [45] presented a Semantic-Based Temporal Attention Network (STAN) for Arabic video captioning, utilizing a Semantic Representation Network (SRN) to extract semantic features and employing temporal attention to align keyframes with relevant semantic tags. Guo et al. [46] introduced an attention-based LSTM method focused on semantic consistency, combining attention with LSTM to capture significant video structures and explore multimodal representation correlations. Despite these advancements in improving semantic richness in captions, semantic attention models face challenges related to the availability and quality of semantic features and require sophisticated architectures and training strategies, increasing computational complexity and training time.

3.5.4. Multi-Modal Attention Models

To generate more comprehensive and accurate captions, multi-modal attention models aim to fuse information from various sources, such as visual, auditory, and textual data. Hori et al. [20] introduced an attention-based multimodal fusion model that incorporates visual, motion, and audio features for descriptive video content generation. Similarly, Ji et al. [47] proposed an Attention-based Dual Learning Approach for Video Captioning, which includes a caption generation and a video reconstruction module to bridge the semantic gap between videos and captions by jointly learning both modalities.

Lin et al. [48] presented a multi-modal feature fusion method with feature attention specifically for the VATEX captioning challenge. This method extracts motion, appearance, semantic, and audio features, utilizing a feature attention module to focus on different features during the decoding process. However, these multi-modal attention models often have higher computational complexity due to the integration of multiple modalities. Furthermore, acquiring high-quality multi-modal features, particularly for audio, can be challenging and may require specialized pre-processing and feature extraction techniques.

3.6. Discussion-Attention Based Models

Attention-based approaches have proven to be highly effective in Video Captioning, enabling models to focus on the most relevant visual and temporal information within videos. From traditional Encoder-Decoder frameworks with single-head attention to advanced Transformer models with multi-head attention, the field has witnessed significant progress. Furthermore, the integration of semantic information and object relationships has further enriched the generated captions, pushing the state-of-the-art in this exciting domain. As we continue to explore more sophisticated attention mechanisms and their applications, the potential for creating truly intelligent video description systems remains vast and promising.

4. Datasets for Dense Video Captioning

Dense video captioning is a task that involves generating natural language descriptions for multiple events occurring in a video, and it heavily relies on the availability of well-annotated datasets. This section provides an overview of commonly used datasets for dense video captioning, followed by detailed descriptions, characteristics, limitations, and the data preprocessing and augmentation techniques associated with each dataset. Table 1 presents a comparison of key attributes among various datasets, highlighting benchmark ones that have been utilized in recent video description research [1]. Additionally, it summarizes comparisons involving video caption datasets and 3D gaze-related datasets.

Table 1.

Comparison of video caption datasets across different domains.

4.1. Detailed Descriptions

- (1) MSVD: MSVD [49] (Microsoft Video Description) is a pioneering and widely adopted dataset for video captioning tasks. It features short video clips accompanied by descriptive sentences. Each description in MSVD is a single sentence that provides a concise summary of the clip’s content. The MSVD dataset comprises 1970 video clips, offering a substantial collection for research and development in video captioning.

- (2) TACoS: TACoS [50] (Textually Annotated Cooking Scenes) is a multimodal corpus containing high-quality videos of cooking activities aligned with multiple natural language descriptions. This facilitates grounding action descriptions in visual information. The corpus provides sentence-level alignments between textual descriptions and video segments, alongside annotations indicating similarity between action descriptions. TACoS comprises 127 videos with 2540 textual descriptions, resulting in 2206 aligned descriptions after filtering. It contains 146,771 words and 11,796 unique sentences.

- (3) MPII-MD: MPII-MD [51] is a dataset sourced from movies for video description. It aims to facilitate research on generating multi-sentence descriptions for videos. Each video is annotated with multiple temporally localized sentences that cover key events and details. The dataset contains 94 movies, amounting to 68,000 clips with 68,300 multi-sentence descriptions.

- (4) TGIF: TGIF [52] (Tumblr GIF) is a large-scale dataset designed for animated GIF description, containing 100,000 GIFs collected from Tumblr and 120,000 natural language descriptions sourced via crowdsourcing. Each GIF is accompanied by multiple sentences to capture its visual content. Workers were selected based on language proficiency and performance, and syntactic and semantic validation of their annotations ensures strong visual-textual associations. The dataset is split into 90K training, 10K validation, and 10K test GIFs, providing ample data for model training and evaluation.

- (5) MSR-VTT: MSR-VTT [7] (Microsoft Research Video to Text) is a diverse video dataset tailored for various video-to-text generation tasks, including video captioning and dense video captioning. It spans 20 broad categories, offering a rich variety of video content. Annotations for MSR-VTT are provided by 1327 AMT workers, ensuring each video clip is accompanied by multiple captions that offer comprehensive coverage of various aspects of the video content, enhancing the understanding of video semantics. MSR-VTT comprises 10,000 video clips sourced from 7180 videos, with an average of 20 captions per clip.

- (6) Charades: Charades is a large-scale video dataset designed for activity understanding and video description tasks [53]. It contains videos of people performing daily activities in various indoor scenes. The annotations for Charades include action labels, temporal intervals, and natural language descriptions, collected through Amazon Mechanical Turk to provide comprehensive coverage of video content. Charades comprises 9848 videos, recorded by 267 people in 15 different indoor scenes. Each video is annotated with multiple action classes and descriptions.

- (7) VTW: VTW (Video Titles in the Wild) is a large-scale dataset of user-generated videos automatically crawled from online communities [54]. It contains 18,100 videos with an average duration of 1.5 min, each associated with a concise title produced by an editor. Each video is annotated with a single title sentence describing the most salient event, accompanied by 1–3 longer description sentences. Highlight moments corresponding to the titles are manually labeled in a subset. The dataset spans 213.2 h in total, making it a challenging benchmark for video title generation tasks.

- (8) ActivityNet Captions: ActivityNet Captions [2] is a large-scale dataset for dense video captioning, comprising 20K videos (849 video hours) annotated with 100K temporally localized sentences. Each sentence averages 13.48 words and describes about 36 s of video content. Each video contains 3.65 sentences on average, which collectively cover 94.6% of the video content with 10% overlap, making the dataset well suited to studying concurrent events. Both sentence length and the number of sentences per video follow normal distributions, the latter indicative of varying video durations.

- (9) YouCook2: YouCook2 [4] is a comprehensive cooking video dataset, featuring 2000 YouTube videos across 89 recipes from diverse cuisines. It covers a wide range of cooking styles, ingredients, and utensils, and is primarily used for video summarization, recipe generation, and dense video captioning tasks. YouCook2 includes temporal boundaries of cooking steps and ingredients, along with textual descriptions for each step. These annotations provide detailed, step-by-step insights into the cooking process, making YouCook2 a substantial resource for research and development in cooking-related video analysis.

- (10) BDD-X: The BDD-X [55] dataset is a large-scale video dataset designed for explaining self-driving vehicle behaviors. It contains over 77 h of driving videos with human-annotated descriptions and explanations of vehicle actions. Each video clip is annotated with action descriptions and justifications. Annotators view the videos from a driving instructor’s perspective, describing what the driver is doing and why. The dataset comprises 6984 videos, split into training (5588), validation (698), and test sets (698). Over 26,000 actions are annotated across 8.4 million frames.

- (11) VideoStory: VideoStory [56] is a novel dataset tailored for multi-sentence video description. It comprises 20k videos sourced from social media, spanning diverse topics and engaging narratives. Each video is annotated with multiple paragraphs, containing 123k sentences temporally aligned to video segments. On average, each video has 4.67 annotated sentences. The dataset encompasses 396 h of video content, divided into training (17,098 videos), validation (999 videos), test (1011 videos), and blind test sets (1039 videos).

- (12) M-VAD: M-VAD [57] (Montreal Video Annotation Dataset) is a large-scale movie description dataset consisting of video clips from various movies. It aims to support research on video captioning and related tasks. Each video clip is paired with a textual description. Annotations include face tracks associated with characters mentioned in the captions. The dataset is drawn from 92 movies, resulting in a substantial number of video clips and textual descriptions and providing rich material for video captioning research.

- (13) VATEX: VATEX [58] (Video and Text) is a large-scale dataset comprising 41,269 video clips, each approximately 10 s long, sourced from the Kinetics-600 dataset. Each video clip in VATEX is annotated with 10 English and 10 Chinese descriptions, providing rich linguistic information across two languages and making it a substantial resource for video description tasks.

- (14) TVC: TVC [59] (TV Show Caption) is a large-scale multimodal video caption dataset built upon the TVR dataset. It requires systems to gather information from both video and subtitles to generate relevant descriptions. Each annotated moment in TVR has additional descriptions collected to form the TVC dataset, totaling 262,000 descriptions for 108K moments. Descriptions may focus on video, subtitles, or both. The dataset includes 174,350 training descriptions, 43,580 validation descriptions, and 21,780 public test descriptions, split across numerous videos and moments.

- (15) ViTT: The ViTT [21] (Video Timeline Tags) dataset comprises 8000 untrimmed instructional videos, tailored for tasks that involve video content analysis and tagging. It aims to address the uniformity issue in YouCook2 videos by sampling videos with cooking/recipe labels from YouTube-8M. Each video in ViTT is annotated with an average of 7.1 temporally localized short tags, offering detailed insights into various aspects of the video content. Annotators have identified each step and assigned descriptive yet concise tags. The videos have an average duration of 250 s. The dataset contains a vast array of unique tags and token types, making it a comprehensive resource for video analysis tasks.

- (16) VC_NBA_2022: VC_NBA_2022 [60] is a specialized basketball video dataset designed for knowledge-guided entity-aware video captioning tasks. This dataset is intended to support the generation of text descriptions that include specific entity names and fine-grained actions, particularly tailored for basketball live text broadcasts. VC_NBA_2022 provides annotations beyond conventional video captions. It leverages a multimodal basketball game knowledge graph (KG_NBA_2022) to offer additional context and knowledge, such as player images and names. Each video segment is annotated with detailed captions covering 9 types of fine-grained shooting events and incorporating player-related information from the knowledge graph. The dataset contains 3977 basketball game videos, with 3162 clips used for training and 786 clips for testing. Each video has one text description and associated candidate player information (images and names). The dataset is constructed to focus on shot and rebound events, which are the most prevalent in basketball games.

- (17) WTS: WTS [61] (Woven Traffic Safety Dataset) is a specialized video dataset designed for fine-grained spatial-temporal understanding tasks in traffic scenarios, particularly focusing on pedestrian-centric events. Comprehensive textual descriptions and unique 3D Gaze data are provided for each video event, capturing detailed behaviors of both vehicles and pedestrians across diverse traffic situations. The dataset contains over 1.2k video events, with rich annotations that enable in-depth analysis and understanding of traffic dynamics.

4.2. Characteristics and Limitations of Each Dataset

- (1) MSVD: MSVD is an early and well-established dataset that features short video clips accompanied by concise descriptions. However, its size and diversity are somewhat limited, and the single-sentence descriptions may not fully capture all aspects of the video content.

- (2) TACoS: TACoS is a multimodal corpus that offers video-text alignments along with diverse cooking actions and fine-grained sentence-level annotations. Nevertheless, it is restricted to the cooking domain and thus may lack the diversity needed for broader actions.

- (3) MPII-MD: MPII-MD centers on movie descriptions and contains 94 movies paired with 68k sentences, providing multi-sentence annotations aligned with video clips. However, this dataset is confined to movie-related content and lacks the diversity of topics typically found in social media videos.

- (4) TGIF: TGIF consists of 100K animated GIFs coupled with 120K natural language descriptions, showcasing rich motion information and cohesive visual stories. Yet, due to the nature of animated GIFs, it lacks audio and long-form narratives, which limits its complexity compared to full videos.

- (5) MSR-VTT: MSR-VTT boasts diverse video content spanning various topics, with multiple captions per video segment to offer a comprehensive understanding. However, the annotations may occasionally be redundant or overlapping, and the quality of captions can vary, potentially affecting model training.

- (6) Charades: Charades, comprising 9848 annotated videos of daily activities involving 267 people, covers 157 action classes and facilitates research in action recognition and video description. Nonetheless, the dataset’s focus on home environments restricts diversity in backgrounds and activities, possibly impacting generalization.

- (7) VTW: The VTW dataset holds 18,100 automatically crawled user-generated videos with diverse titles, presenting a challenging benchmark for video title generation. However, the temporal location and extent of highlights in most videos are unknown, posing challenges for highlight-sensitive methods.

- (8) ActivityNet Captions: ActivityNet Captions is a large-scale dataset characterized by diverse video content, fine-grained temporal annotations, and rich textual descriptions. However, the complexity of the videos can make it difficult for models to capture all details accurately, and the annotations vary in granularity.

- (9) YouCook2: YouCook2 zeroes in on cooking activities, making it highly domain-specific with detailed step-by-step annotations. Compared to general-purpose datasets, it has limited diversity in video content, and its annotations are biased towards cooking terminology.

- (10) BDD-X: BDD-X contains over 77 h of driving videos with 26k human-annotated descriptions and explanations, covering diverse driving scenarios and conditions. However, the annotations are rationalizations written by observers and do not capture the drivers’ internal thought processes.

- (11) VideoStory: VideoStory comprises 20k social media videos paired with 123k multi-sentence descriptions, spanning a variety of topics and demonstrating temporal consistency. However, because it tends to focus on engaging content, it may not be representative of social media videos as a whole.

- (12) M-VAD: The M-VAD dataset holds 92 movies with detailed descriptions that cover different genres. It zeroes in on captions for movie clips, which makes it quite suitable for video captioning research. Nevertheless, it lacks character visual annotations as well as proper names in the captions, thereby restricting its application in tasks that require naming capabilities.

- (13) VATEX: VATEX covers a broad range of video activities, rendering it versatile for various video understanding tasks. It offers detailed and diverse annotations that touch upon multiple aspects of the video content. Despite this breadth, it is not deeply specialized in any one domain, and its annotations may not reflect the terminology or nuances of highly specialized fields.

- (14) TVC: TVC stands out as a large-scale dataset equipped with multimodal descriptions and embraces diverse context types such as video, subtitle, or both, along with rich human interactions. However, the annotations within this dataset may vary in their level of detail, and some descriptions could be more centered around one modality.

- (15) ViTT: ViTT centers on text-to-video retrieval tasks, making it immensely valuable for video-text understanding and alignment. It provides detailed annotations that link textual descriptions to corresponding video segments. Still, when compared to general-purpose video datasets, its focus is narrower, concentrating on specific types of videos pertinent to the text-to-video retrieval task. Additionally, the annotations might lean towards the terminology and context commonly seen in text-video pairing scenarios.

- (16) VC_NBA_2022: The VC_NBA_2022 dataset presents a specialized collection of basketball game videos, covering a multitude of in-game events. It delivers detailed and specific annotations for shooting incidents along with player-centric details, offering an exhaustive comprehension of basketball game situations. Nonetheless, the dataset’s annotations could occasionally be skewed towards certain aspects of the game, perhaps overlooking other vital elements. Furthermore, the quality and consistency of these annotations can fluctuate, which might influence the efficacy of model training.

- (17) WTS: WTS has a distinct focus on traffic safety scenarios, making it highly pertinent to studies involving pedestrian and vehicle interactions. It includes thorough annotations comprising textual descriptions and 3D gaze data for in-depth analysis. However, in contrast to general-purpose video datasets, its scope is narrower, as it concentrates solely on traffic safety scenarios. Furthermore, its annotations may exhibit bias towards traffic-related terminology and specific facets of pedestrian and vehicle behavior.

4.3. Data Preprocessing and Augmentation Techniques

Effective data preprocessing and augmentation play a pivotal role in enhancing the performance of dense video captioning models. When dealing with the datasets mentioned, several common techniques are employed to optimize the data for training these models.

Firstly, temporal segmentation is applied to videos. This involves dividing them into meaningful clips based on temporal annotations [62,63,64]. By focusing on relevant parts of the video, this technique helps in improving the accuracy and relevance of the captions generated by the model [65,66,67].

Next, frame sampling is utilized to extract keyframes from each video segment. This step is crucial for reducing computational load while capturing essential visual information [4,7,68]. By selecting representative frames, the model can process the video more efficiently without losing important details [66,69,70,71].
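As a simple illustration of frame sampling, the sketch below picks evenly spaced frame indices from one segment. Uniform sampling is a stand-in chosen for brevity and is not necessarily the keyframe selection used in the cited works; the function name `sample_frame_indices` and its parameters are hypothetical.

```python
def sample_frame_indices(start_frame, end_frame, num_samples):
    """Pick num_samples evenly spaced frame indices in [start_frame, end_frame)."""
    length = end_frame - start_frame
    if length <= 0 or num_samples <= 0:
        return []
    if num_samples >= length:
        # Fewer frames than requested samples: keep every frame.
        return list(range(start_frame, end_frame))
    step = length / num_samples
    # Take the centre of each of the num_samples equal-width bins.
    return [start_frame + int(step * (i + 0.5)) for i in range(num_samples)]
```

For a 100-frame segment and four samples, the bins are 25 frames wide and the selected indices are 12, 37, 62, and 87.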

In addition to visual data preprocessing, text preprocessing is also essential. Captions are cleaned by removing punctuation and stop words, and are then tokenized and lemmatized [64,67]. This process puts the captions into a standardized format, making it easier for the model to learn from them and generate accurate descriptions [64,67].
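A caption-cleaning step of this kind might look like the following sketch, which uses only the Python standard library. The stop-word list is a tiny illustrative sample, the function name `preprocess_caption` is hypothetical, and lemmatization is left out because it needs an external lexicon.

```python
import string

# Tiny illustrative stop-word list; real pipelines use a much larger one.
STOP_WORDS = {"a", "an", "the", "is", "are", "of", "in", "on", "and", "to"}

def preprocess_caption(caption, remove_stop_words=True):
    """Lowercase, strip punctuation, tokenize on whitespace, drop stop words."""
    table = str.maketrans("", "", string.punctuation)
    tokens = caption.lower().translate(table).split()
    if remove_stop_words:
        tokens = [t for t in tokens if t not in STOP_WORDS]
    return tokens
```

Stop-word removal is kept optional here because function words can matter when captions are used as generation targets rather than as retrieval keys.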

To further enhance the diversity and robustness of the training data, data augmentation techniques are employed. One such technique is temporal shifting, where the boundaries of temporal segments are slightly shifted [72]. This helps create more robust models by exposing them to variations in the temporal boundaries of events.
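Temporal shifting can be sketched as a small random jitter applied to each segment boundary. The function name `shift_segment`, the uniform noise, and the default shift of 0.5 s are assumptions made for illustration and are not taken from [72].

```python
import random

def shift_segment(start, end, duration, max_shift=0.5, rng=None):
    """Jitter both boundaries by up to max_shift seconds, kept inside the video."""
    rng = rng or random.Random()
    new_start = start + rng.uniform(-max_shift, max_shift)
    new_end = end + rng.uniform(-max_shift, max_shift)
    # Clamp to the valid time range [0, duration].
    new_start = min(max(0.0, new_start), duration)
    new_end = min(max(0.0, new_end), duration)
    if new_end <= new_start:
        # The jitter collapsed the segment, so fall back to the original one.
        return start, end
    return new_start, new_end
```

Passing a seeded `random.Random` instance makes the augmentation reproducible across training runs.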

Another augmentation technique is caption paraphrasing. By generating paraphrased versions of captions, the diversity of the training data is increased [72,73]. This encourages the model to learn different ways of describing the same event, improving its ability to generate varied and accurate captions [74].

Lastly, synthetic noise is added to video frames or captions. This technique enhances the model’s robustness by exposing it to noisy data during training [71]. By learning to handle synthetic noise, the model becomes better equipped to deal with real-world noise and variations, leading to improved performance in dense video captioning tasks.

5. Evaluation Protocols and Metrics

Assessing video descriptions, whether automatically or manually generated, remains challenging due to the absence of a definitive “correct answer” or ground truth for evaluating accuracy. A single video can be accurately depicted using a range of sentences that display both syntactic and semantic variety. For instance, consider the various captions for vid1447 from the MSVD dataset, as illustrated in Figure 15. One caption states, “Two men are rolling huge tires sideways down a street while spectators watch”, offering one viewpoint. Another says, “Men are flipping large tires in a race”, presenting a different angle. Yet another description notes, “Two men are pushing large tires”, emphasizing a distinct aspect of the action. Variations also emerge in how strength and activity are portrayed, such as “A strong person pulling a big tire”, or the more concise version, “Two men are rolling tires”. Additionally, some captions incorporate extra context, like “A short clip about Jeff Holder, the strongest man in Southern California, who is moving the tire and proving his strength”. Each of these captions captures the essence of the video in a unique yet equally valid way, with differing focuses, levels of detail, and depictions of the actions and participants.

Figure 15.

An instance from the MSVD dataset is provided along with its corresponding ground truth captions. Observe the varied descriptions of the same video clip. Each caption presents the activity either wholly or partially in a distinct manner.

5.1. Key Metrics

Video description merges computer vision (CV) with natural language processing (NLP). BLEU [8], METEOR [9], CIDEr [10], ROUGE-L [11], SODA [12], SPICE [13], and WMD [14] serve as crucial metrics for evaluating auto-generated captions for videos and images. Derived from NLP, these metrics assess various aspects like n-gram overlap, semantic similarity, and uniqueness, providing rapid and cost-effective evaluations that complement human assessments of adequacy, fidelity, and fluency. Each metric has unique strengths and aims to align with professional translation quality. Table 2 summarizes the metric names, purposes, methodologies, and limitations, with detailed descriptions following below.

Table 2.

Summary of Metrics Used for Video Description Evaluation.

5.1.1. BLEU

BLEU (Bilingual Evaluation Understudy) is a metric commonly used in machine translation and has been adapted for video captioning evaluation [8]. It measures the n-gram precision between the generated caption and the reference captions. BLEU scores range from 0 to 1, with higher scores indicating better performance. However, BLEU has limitations in capturing the semantic similarity between captions and may penalize models for generating grammatically correct but semantically different captions from the references.

The core idea behind BLEU is to compute the precision of n-grams (sequences of n consecutive words) between the candidate text and the reference texts. BLEU calculates a weighted geometric mean of the n-gram precisions for different values of n, typically up to 4. Additionally, BLEU incorporates a brevity penalty to penalize candidate texts that are too short. The BLEU score is calculated using the following formula [8]:

$$\mathrm{BLEU} = \mathrm{BP} \cdot \exp\left( \sum_{n=1}^{N} w_n \log p_n \right)$$

where $\mathrm{BP}$ is the brevity penalty, $N$ is the maximum n-gram length (typically 4), $w_n$ is the weight for the n-gram precision of length $n$, typically set to $1/N$ for uniform weighting, and $p_n$ is the modified n-gram precision for length $n$.

The brevity penalty (BP) is defined as:

$$\mathrm{BP} = \begin{cases} 1, & \text{if } c > r \\ e^{1 - r/c}, & \text{if } c \le r \end{cases}$$

where $c$ is the length of the candidate text and $r$ is the effective reference length, taken as the reference length closest to $c$.
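The BLEU computation described above can be written as a short sentence-level sketch in plain Python. It assumes pre-tokenized input, uniform weights, and no smoothing, so any n-gram order with zero matches gives a score of 0. The function names are illustrative and do not belong to any particular toolkit.

```python
import math
from collections import Counter

def ngrams(tokens, n):
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def modified_precision(candidate, references, n):
    """Clipped n-gram precision of one candidate against several references."""
    cand_counts = Counter(ngrams(candidate, n))
    if not cand_counts:
        return 0.0
    max_ref = Counter()
    for ref in references:
        for gram, count in Counter(ngrams(ref, n)).items():
            max_ref[gram] = max(max_ref[gram], count)
    # Each candidate n-gram is credited at most as often as it appears in a reference.
    clipped = sum(min(count, max_ref[gram]) for gram, count in cand_counts.items())
    return clipped / sum(cand_counts.values())

def brevity_penalty(candidate, references):
    c = len(candidate)
    if c == 0:
        return 0.0
    # Effective reference length: closest to c, ties broken toward the shorter.
    r = min((len(ref) for ref in references), key=lambda length: (abs(length - c), length))
    return 1.0 if c > r else math.exp(1.0 - r / c)

def bleu(candidate, references, max_n=4):
    precisions = [modified_precision(candidate, references, n) for n in range(1, max_n + 1)]
    if min(precisions) == 0.0:
        return 0.0  # the geometric mean vanishes; no smoothing in this sketch
    log_mean = sum(math.log(p) for p in precisions) / max_n
    return brevity_penalty(candidate, references) * math.exp(log_mean)
```

The clipping step is what makes the precision "modified": a candidate such as "the the the" scored against "the cat" gets a unigram precision of 1/3 rather than 1.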

5.1.2. METEOR

METEOR (Metric for Evaluation of Translation with Explicit Ordering) is another metric that combines Precision (P) and Recall (R), taking into account both exact word matches and paraphrases [9]. It uses a synonym dictionary and stems words to improve the evaluation of semantic similarity. METEOR is considered more robust than BLEU in handling synonyms and different word orderings. Moreover, it explicitly takes into account the word order in the translated sentence, which is vital for capturing the fluency and grammatical correctness of the translation.

The computation of the METEOR score involves five steps:

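- (1) Word Alignment: The initial step involves aligning words between the machine-generated and reference translations using exact matching, stemming (Porter stemmer), and synonymy matching (WordNet). This alignment is carried out in stages, each focusing on a different matching criterion.

- (2) Precision and Recall Calculation: Once the words are aligned, METEOR calculates Precision ($P$) as the ratio of matched unigrams to the total number of unigrams in the translation, and Recall ($R$) as the ratio of matched unigrams to the total number of unigrams in the reference:

  $$P = \frac{m}{w_t}, \qquad R = \frac{m}{w_r}$$

  where $m$ is the number of matched unigrams, $w_t$ is the number of unigrams in the translation, and $w_r$ is the number of unigrams in the reference.

- (3) F-measure Calculation: METEOR then computes the harmonic mean (F-measure) of Precision ($P$) and Recall ($R$), with more weight given to Recall ($R$):

  $$F_{mean} = \frac{10PR}{R + 9P}$$

- (4) Penalty for Fragmentation: To penalize translations with poor ordering or gaps, METEOR calculates a fragmentation Penalty based on the number of chunks formed by adjacent matched unigrams in the translation and reference:

  $$\mathrm{Penalty} = \gamma \left( \frac{ch}{m} \right)^{\theta}$$

  where $ch$ is the number of chunks, $m$ is the number of matched unigrams, and $\gamma$ and $\theta$ are parameters that control the Penalty.

- (5) Final METEOR Score: The final score combines the F-measure with the fragmentation Penalty:

  $$\mathrm{METEOR} = F_{mean} \cdot (1 - \mathrm{Penalty})$$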
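The five steps above can be illustrated with a small sketch that starts from an already computed alignment. The recall-weighted mean 10PR/(R + 9P) and the penalty constants 0.5 and 3 follow the original METEOR formulation and should be read as assumptions here, since later METEOR versions tune these values. The alignment step itself (stemming and WordNet synonym matching) is not implemented, and the function names are hypothetical.

```python
def count_chunks(alignment):
    """Count chunks in a list of (candidate_index, reference_index) matches.

    A chunk is a maximal run of matches that is contiguous and in the same
    order in both the candidate and the reference.
    """
    chunks = 0
    prev = None
    for cand_i, ref_i in sorted(alignment):
        if prev is None or cand_i != prev[0] + 1 or ref_i != prev[1] + 1:
            chunks += 1
        prev = (cand_i, ref_i)
    return chunks

def meteor_score(alignment, cand_len, ref_len, gamma=0.5, theta=3.0):
    m = len(alignment)
    if m == 0:
        return 0.0
    precision = m / cand_len
    recall = m / ref_len
    # Recall-weighted harmonic mean (assumed constants from the original METEOR).
    f_mean = 10 * precision * recall / (recall + 9 * precision)
    penalty = gamma * (count_chunks(alignment) / m) ** theta
    return f_mean * (1 - penalty)
```

For two identical four-word sentences the alignment forms a single chunk, so the penalty is 0.5 × (1/4)³ and the score is slightly below 1 even for a perfect match.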

5.1.3. CIDEr

CIDEr (Consensus-based Image Description Evaluation) is tailored for assessing image and video captions [10]. It gauges the level of agreement among multiple reference captions by calculating the cosine similarity between the TF-IDF vectors of the candidate caption and those of the references. This metric excels at capturing the relevance and salience of the generated captions, embodying the idea that a high-quality caption should resemble how most humans would describe the image.

CIDEr evaluates captions by: matching n-grams (1−4 words) for grammatical and semantic richness, applying TF-IDF weighting to downplay common n-grams, computing average cosine similarity for each n-gram length to measure overlap, and combining n-gram scores with uniform weights to obtain the final score. The metric’s formula is as follows:

- (1)

- TF-IDF Weightingwhere is the frequency of n-gram in reference caption , is the vocabulary of all n-grams, is the total number of images, and q is a constant typically set to the average length of reference captions.

- (2)

- Cosine Similarity for n-grams of Length nwhere is the candidate caption, is the set of reference captions, and and are the TF-IDF vectors for the n-grams of length n in the candidate and reference captions, respectively.

- (3)

- Combined CIDEr Score:

  $$\text{CIDEr}(c_i, S_i) = \sum_{n=1}^{N} w_n \, \text{CIDEr}_n(c_i, S_i)$$

  where N is the maximum n-gram length (typically 4), and $w_n$ are the weights for different n-gram lengths (typically set to $1/N$ for uniform weights).
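The three CIDEr steps above can be illustrated with a small, dependency-free sketch. It assumes whitespace tokenization, treats each image's reference set as one "document" when counting document frequencies, and clamps the document frequency to at least 1 so that n-grams unseen in any reference do not cause a division by zero. The function names (`ngram_counts`, `tfidf`, `cosine`, `cider`) and the clamping choice are assumptions of this sketch. Refinements used in practice, such as stemming or length penalties, are left out.

```python
import math
from collections import Counter


def ngram_counts(tokens, n):
    """Count the n-grams of length n in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def tfidf(tokens, n, doc_freq, num_images):
    """TF-IDF vector (as a dict) over the n-grams of one caption."""
    counts = ngram_counts(tokens, n)
    total = sum(counts.values())
    vec = {}
    for gram, count in counts.items():
        # Assumption: clamp document frequency to 1 for unseen n-grams.
        idf = math.log(num_images / max(1, doc_freq.get(gram, 0)))
        vec[gram] = (count / total) * idf
    return vec


def cosine(u, v):
    """Cosine similarity of two sparse vectors stored as dicts."""
    dot = sum(w * v.get(g, 0.0) for g, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u > 0 and norm_v > 0 else 0.0


def cider(candidate, references, corpus, max_n=4):
    """Plain CIDEr of one candidate against its references.

    corpus is a list of reference sets (one list of strings per image),
    used only to estimate document frequencies.
    """
    cand = candidate.split()
    refs = [r.split() for r in references]
    score = 0.0
    for n in range(1, max_n + 1):
        # Document frequency: number of images whose references contain the n-gram.
        doc_freq = Counter()
        for image_refs in corpus:
            grams = set()
            for ref in image_refs:
                grams.update(ngram_counts(ref.split(), n))
            doc_freq.update(grams)
        cand_vec = tfidf(cand, n, doc_freq, len(corpus))
        sims = [cosine(cand_vec, tfidf(r, n, doc_freq, len(corpus))) for r in refs]
        score += (sum(sims) / len(sims)) / max_n  # uniform weights w_n = 1/N
    return score


if __name__ == "__main__":
    corpus = [["a man is cooking"], ["a dog runs fast"]]
    print(cider("a man is eating", corpus[0], corpus))
```

Because "a" occurs in the references of every image in this toy corpus, its IDF is zero and it contributes nothing to the similarity, which is the down-weighting of common n-grams described above.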

5.1.4. ROUGE-L

ROUGE (Recall-Oriented Understudy for Gisting Evaluation) is a collection of metrics designed to assess automatic summarization, and it has been adapted for evaluating video captioning as well [11]. Specifically, ROUGE-L measures the longest common subsequence (LCS) between a generated caption and reference captions, with a particular focus on the recall of content. This metric is valuable for gauging the completeness of the generated captions. ROUGE-L offers both Recall ($R_{lcs}$) and Precision ($P_{lcs}$) scores, and the overall ROUGE-L score is typically reported as their F-measure ($F_{lcs}$).

The computation of ROUGE-L involves three steps:

- (1)

- LCS Calculation: For two sequences X and Y, let $LCS(X, Y)$ denote the length of their longest common subsequence.

- (2)

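- Recall and Precision: Recall ($R_{lcs}$) is determined by the ratio of the length of the longest common subsequence (LCS) to the length of the reference sequence. Precision ($P_{lcs}$), on the other hand, is calculated as the ratio of the length of the LCS to the length of the candidate sequence. Taking X as the reference sequence of length $m$ and Y as the candidate sequence of length $n$:

  $$R_{lcs} = \frac{LCS(X, Y)}{m}, \qquad P_{lcs} = \frac{LCS(X, Y)}{n}$$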

- (3)

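- F-Measure: The F-measure ($F_{lcs}$) is the weighted harmonic mean of Recall ($R_{lcs}$) and Precision ($P_{lcs}$):

  $$F_{lcs} = \frac{(1 + \beta^2) \, R_{lcs} \, P_{lcs}}{R_{lcs} + \beta^2 \, P_{lcs}}$$

  where $\beta$ is a parameter that controls the relative importance of Recall and Precision. In ROUGE evaluations, $\beta$ is often set to a very large value, so that $F_{lcs}$ is effectively equal to $R_{lcs}$.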
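As a rough illustration of the three ROUGE-L steps, the sketch below computes the LCS length with standard dynamic programming and then derives Recall, Precision, and the F-measure. It assumes whitespace tokenization and a single reference. The function names and the default `beta=1.2` are choices made for this example only.

```python
def lcs_length(x, y):
    """Length of the longest common subsequence of two sequences."""
    prev = [0] * (len(y) + 1)
    for xi in x:
        curr = [0]
        for j, yj in enumerate(y, start=1):
            if xi == yj:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l(candidate, reference, beta=1.2):
    """Return (recall, precision, f_measure) for one candidate/reference pair."""
    cand, ref = candidate.split(), reference.split()
    lcs = lcs_length(ref, cand)
    if lcs == 0:
        return 0.0, 0.0, 0.0
    recall = lcs / len(ref)
    precision = lcs / len(cand)
    f_measure = (1 + beta ** 2) * recall * precision / (recall + beta ** 2 * precision)
    return recall, precision, f_measure


if __name__ == "__main__":
    print(rouge_l("the cat sat on the mat", "the cat is on the mat"))
```

Increasing `beta` shifts the F-measure toward Recall, which matches the recall-oriented use of ROUGE described above.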

5.1.5. SODA

SODA (Story Oriented Dense Video Captioning Evaluation Framework) is a metric specifically designed to evaluate video story descriptions [12]. It takes into account the temporal ordering of events and penalizes redundant captions to ensure accuracy. SODA assesses the quality and relevance of generated captions by maximizing the sum of Intersection over Union (IoU) scores and computing an F-measure based on METEOR scores. The framework comprises two main components: optimal matching using dynamic programming, and an F-measure specifically tailored for evaluating video story descriptions.

- (1)

- Optimal Matching Using Dynamic Programming: SODA uses dynamic programming to find the best match between generated and reference captions, maximizing the sum of their IoU scores while respecting temporal order. The IoU between a generated caption p and a reference caption g is defined as:

  $$\text{IoU}(p, g) = \frac{\max\left(0, \; \min(e_p, e_g) - \max(s_p, s_g)\right)}{\max(e_p, e_g) - \min(s_p, s_g)}$$

  where $s$ and $e$ represent the start and end times of the event proposals corresponding to the captions.

  Given a set of reference captions G and generated captions P, SODA finds the optimal matching by solving:

  $$S[i][j] = \max \left\{ S[i-1][j], \; S[i-1][j-1] + C_{i,j}, \; S[i][j-1] \right\}$$

  where $S[i][j]$ holds the maximum score of optimal matching between the first i generated captions and the first j reference captions, and C is the cost matrix based on IoU scores.

- (2)

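- F-measure for Evaluating Video Story Description: To penalize redundant captions and ensure the generated captions cover all relevant events, SODA computes Precision and Recall based on the optimal matching and derives F-measure scores:

  $$\text{Precision} = \frac{\sum_{(p, g) \in A} f(p, g)}{|P|}, \qquad \text{Recall} = \frac{\sum_{(p, g) \in A} f(p, g)}{|G|}, \qquad F = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$

  where $A$ is the set of caption pairs in the optimal matching and $f$ represents an evaluation metric like METEOR.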
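The two SODA components can be sketched as follows. This is a simplified illustration under stated assumptions: events are `(start, end)` tuples already sorted by time, the cost of pairing a generated caption with a reference is its temporal IoU multiplied by a caption-quality score, and that score defaults to 1.0 so the example runs without a METEOR implementation. The names `temporal_iou`, `optimal_matching_score`, `soda_f_measure`, and the `caption_score` callback are hypothetical.

```python
def temporal_iou(a, b):
    """IoU of two (start, end) segments."""
    inter = max(0.0, min(a[1], b[1]) - max(a[0], b[0]))
    span = max(a[1], b[1]) - min(a[0], b[0])
    return inter / span if span > 0 else 0.0


def optimal_matching_score(cost):
    """Best total cost of an order-preserving one-to-one matching (DP)."""
    rows = len(cost)
    cols = len(cost[0]) if rows else 0
    S = [[0.0] * (cols + 1) for _ in range(rows + 1)]
    for i in range(1, rows + 1):
        for j in range(1, cols + 1):
            S[i][j] = max(
                S[i - 1][j],                           # skip generated caption i
                S[i][j - 1],                           # skip reference caption j
                S[i - 1][j - 1] + cost[i - 1][j - 1],  # match i with j
            )
    return S[rows][cols]


def soda_f_measure(pred, gold, caption_score=lambda i, j: 1.0):
    """F-measure over the optimal matching of predicted and reference events."""
    if not pred or not gold:
        return 0.0
    cost = [
        [temporal_iou(p, g) * caption_score(i, j) for j, g in enumerate(gold)]
        for i, p in enumerate(pred)
    ]
    total = optimal_matching_score(cost)
    precision = total / len(pred)
    recall = total / len(gold)
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    gold = [(0, 10)]
    print(soda_f_measure([(0, 10)], gold))                    # one exact caption
    print(soda_f_measure([(0, 10), (0, 10), (0, 10)], gold))  # redundant captions
```

Because each reference can be matched at most once, repeating the same caption three times leaves the matched score unchanged but divides Precision by three, which is how redundant captions are penalized.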

5.1.6. SPICE

SPICE (Semantic Propositional Image Caption Evaluation) is a metric for assessing image caption quality by analyzing semantic content. It uses a graph-based semantic representation called a scene graph to encode the objects, attributes, and relationships present in captions, thus abstracting away the lexical and syntactic idiosyncrasies of natural language. It calculates an F-score over logical tuples to reflect semantic propositional similarity, providing a more human-like evaluation.

To generate a scene graph, SPICE employs a two-stage approach: first, a dependency parser establishes syntactic dependencies between words in the caption, resulting in a dependency syntax tree; second, this tree is mapped to a scene graph using a rule-based system, consisting of object nodes, attribute nodes, and relationship edges [13].

SPICE computes a similarity score between a candidate caption c and reference captions S by extracting logical tuples from their parsed scene graphs using a function T:

$$T(G(c)) \triangleq O(c) \cup E(c) \cup K(c)$$

where $G(c)$ is the scene graph for caption c, $O(c)$ is the set of object nodes, $E(c)$ is the set of relationship edges, and $K(c)$ is the set of attribute nodes. Each tuple contains one, two, or three elements, representing objects, attributes, and relationships, respectively.

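SPICE then computes precision P(c, S), recall R(c, S), and F-score based on the matching tuples between the candidate scene graph and the reference scene graphs:

$$P(c, S) = \frac{\lvert T(G(c)) \otimes T(G(S)) \rvert}{\lvert T(G(c)) \rvert}$$

$$R(c, S) = \frac{\lvert T(G(c)) \otimes T(G(S)) \rvert}{\lvert T(G(S)) \rvert}$$

$$\text{SPICE}(c, S) = F_1(c, S) = \frac{2 \cdot P(c, S) \cdot R(c, S)}{P(c, S) + R(c, S)}$$

where ⊗ denotes the tuple matching operator, which returns the set of matching tuples between two scene graphs. For matching tuples, SPICE uses WordNet synonym matching, similar to METEOR.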
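The tuple-level scoring can be illustrated without a parser by supplying the tuples by hand. The sketch below assumes that scene graphs have already been flattened into tuples of length one (object), two (object with attribute), or three (subject, relation, object), and it uses exact string matching in place of WordNet synonym matching. The function name `spice_scores` and the example tuples are hypothetical.

```python
def spice_scores(candidate_tuples, reference_tuples):
    """Return (precision, recall, f1) over exactly matching tuples."""
    cand = set(candidate_tuples)
    ref = set(reference_tuples)
    matched = cand & ref  # exact match stands in for WordNet-based matching
    if not matched:
        return 0.0, 0.0, 0.0
    precision = len(matched) / len(cand)
    recall = len(matched) / len(ref)
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


if __name__ == "__main__":
    candidate = [("girl",), ("girl", "young"), ("girl", "standing-on", "court")]
    reference = [("girl",), ("court",), ("girl", "young"), ("girl", "on", "court")]
    print(spice_scores(candidate, reference))
```

In this example the relation tuples differ only in wording ("standing-on" versus "on"), so they do not match under exact comparison. Cases like this are what synonym-aware matching is meant to handle.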

5.1.7. WMD

WMD (Word Mover’s Distance) is a metric for evaluating text document similarity [14], based on the Earth Mover’s Distance. It measures the minimum cumulative distance that the embedded words of one document (e.g., word2vec vectors) must travel to match those of another, capturing semantic similarity for tasks like document retrieval and classification. Given two documents represented as normalized bag-of-words (nBOW) histograms $d$ and $d'$, WMD calculates the minimum travel distance between their embedded words.

Formally, let $X$ and $X'$ be the sets of embedded word vectors for documents $d$ and $d'$, respectively. The WMD is defined as the solution to the following optimization problem:

$$\min_{T \geq 0} \sum_{i, j} T_{ij} \, c(i, j)$$

subject to:

$$\sum_{j} T_{ij} = d_i \quad \forall i, \qquad \sum_{i} T_{ij} = d'_j \quad \forall j$$

Here, $T$ is a flow matrix where $T_{ij}$ represents the amount of “mass” moved from word i in document $d$ to word j in document $d'$. The cost $c(i, j)$ is the Euclidean distance between the embedded word vectors $x_i$ and $x'_j$:

$$c(i, j) = \lVert x_i - x'_j \rVert_2$$

The constraints ensure that the entire “mass” of each word in the source document is moved to the target document, and vice versa.
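Solving the general WMD problem requires a linear-programming or optimal-transport solver. For a small illustration, the sketch below handles only a special case: two documents with the same number of tokens, each token carrying equal mass. In that case an optimal flow can be taken to be a one-to-one assignment, so brute-force search over permutations is exact, though practical only for very short texts. The function name `wmd_equal_length` and the 2-D toy embeddings are hypothetical and stand in for real word vectors.

```python
import math
from itertools import permutations


def wmd_equal_length(doc_a, doc_b, embed):
    """Exact WMD for two token lists of equal length (uniform token mass)."""
    if len(doc_a) != len(doc_b):
        raise ValueError("this sketch only handles documents of equal length")
    n = len(doc_a)
    if n == 0:
        return 0.0
    # Pairwise Euclidean distances between embedded words.
    cost = [[math.dist(embed[a], embed[b]) for b in doc_b] for a in doc_a]
    # With equal masses 1/n on both sides, an optimal flow is a permutation.
    best = min(
        sum(cost[i][perm[i]] for i in range(n))
        for perm in permutations(range(n))
    )
    return best / n


if __name__ == "__main__":
    # Toy 2-D embeddings (made up for illustration).
    embed = {
        "obama": (0.0, 0.0), "president": (0.0, 1.0),
        "speaks": (3.0, 0.0), "greets": (3.0, 1.0),
    }
    print(wmd_equal_length(["obama", "speaks"], ["president", "greets"], embed))
```

The two toy documents share no words, yet their distance is small because each word has a close neighbor in the other document. This is the property that n-gram overlap metrics cannot capture.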

5.2. Challenges in Evaluation and Benchmarking

Despite the advancements in evaluation metrics, there are still challenges in evaluating and benchmarking DVC models.

- (1)

- Diversity of Video Content: Videos contain a wide range of events, contexts, and interactions, making it difficult to create a comprehensive set of reference captions that capture all possible descriptions. This diversity can lead to inconsistencies in evaluation results.

- (2)

- Semantic Similarity: Current metrics rely heavily on n-gram matching and may not accurately capture the semantic similarity between captions. This can result in models being penalized for generating captions that are semantically correct but differ in wording from the references.

- (3)

- Temporal Localization: DVC involves not only generating accurate captions but also locating the events in the video. Evaluating the temporal localization of events is challenging, as it requires precise annotations and may not be fully captured by existing metrics.

5.3. Discussion on the Interpretability and Fairness of Evaluation Metrics

The interpretability and fairness of evaluation metrics are crucial for the development of DVC models.

- (1)

- Interpretability: Metrics should provide clear insights into the strengths and weaknesses of the models. For example, a low BLEU score may indicate a lack of n-gram overlap, while a high CIDEr score may suggest good consensus with reference captions. However, it is important to interpret these scores in the context of the specific task and dataset.

- (2)

- Fairness: Evaluation metrics should be fair and unbiased, reflecting the true performance of the models. This requires careful selection of reference captions and consideration of the diversity of video content. Metrics should also be robust to variations in annotation quality and consistency.

To address these challenges, future work should focus on developing more sophisticated evaluation metrics that can capture the semantic similarity between captions and account for the diversity of video content. Additionally, there is a need for larger and more diverse datasets with high-quality annotations to support the evaluation and benchmarking of DVC models.

5.4. Benchmark Results

We present an overview of the benchmark results achieved by various methods across multiple video description datasets, including MSVD [49], TACoS [50], MPII-MD [51], TGIF [52], Youtube2text [75], MSR-VTT [7], Charades [53], VTW [54], ActivityNet Captions [2], YouCook2 [4], BDD-X [55], VideoStory [56], M-VAD [57], VATEX [58], TVC [59], ViTT [21], VC_NBA_2022 [60], and WTS [61]. The methods are evaluated using a range of automatic metrics: BLEU [8], METEOR [9], CIDEr [10], ROUGE-L [11], SODA [12], SPICE [13], and WMD [14].

5.4.1. MSVD Dataset: Method Performance Overview

On the MSVD Dataset, various methods have been evaluated using the BLEU, METEOR, CIDEr, and ROUGE-L metrics. As shown in Table 3, several methods have reported results for this dataset, with specific models achieving notable performances. Here, we summarize the best-performing methods, along with their published scores.

Encoder-decoder based approaches: Encoder-decoder architectures have shown promising results on the MSVD dataset. Among the recent methods, Howtocaption [76] stands out with the highest BLEU-4 (70.4), METEOR (46.4), CIDEr (154.2), and ROUGE-L (83.2) scores. This method leverages a combination of advanced encoding and decoding techniques to generate high-quality captions. Other notable methods include KG-VCN [77] and ATMNet [78], which also report competitive scores across all metrics. Among earlier encoder-decoder works, RMN [80] scores below most recent methods, whereas VNS-GRU [79] (BLEU-4 66.5, CIDEr 121.5) remains on par with many of them. Overall, the best reported scores have improved over the years, although the progress is not uniform across methods.

Transformer-based approaches: Transformer-based methods have gained popularity in recent years due to their ability to capture long-range dependencies in sequences. CEMSA [33] is a notable transformer-based approach that reports competitive scores on the MSVD dataset, with BLEU-4 (60.9), METEOR (40.5), CIDEr (117.9), and ROUGE-L (77.9). Other transformer-based methods, such as COST [41] and TextKG [32], also show promising results. However, some transformer-based methods, like SnapCap [42] and UAT-FEGs [35], report lower scores than the strongest encoder-decoder based approaches. This suggests that while transformers are powerful architectures, their effectiveness in video description tasks may depend on the specific implementation and design choices.

Table 3.

Comparison of video captioning methods on the MSVD dataset, showing BLEU-4 (in percent), METEOR, CIDEr, and ROUGE-L scores per sentence for short descriptions.


| No. | Method | Method’s Category | BLEU-4 | METEOR | CIDEr | ROUGE-L | Year |
|---|---|---|---|---|---|---|---|
| 1 | ASGNet [81] | Encoder-decoder | 55.2 | 36.6 | 101.8 | 74.3 | 2025 |
| 2 | CroCaps [82] | Encoder-decoder | 58.2 | 39.79 | 112.29 | 77.41 | 2025 |
| 3 | KG-VCN [77] | Encoder-decoder | 64.9 | 39.7 | 107.1 | 77.2 | 2025 |
| 4 | PKG [83] | Encoder-decoder | 60.1 | 39.3 | 107.2 | 76.2 | 2025 |
| 5 | ATMNet [78] | Encoder-decoder | 58.8 | 41.1 | 121.9 | 78.2 | 2025 |
| 6 | Howtocaption [76] | Encoder-decoder | 70.4 | 46.4 | 154.2 | 83.2 | 2024 |
| 7 | MAN [28] | Encoder-decoder | 59.7 | 37.3 | 101.5 | 74.3 | 2024 |
| 8 | GSEN [84] | Encoder-decoder | 58.8 | 37.6 | 102.5 | 75.2 | 2024 |
| 9 | EDS [85] | Encoder-decoder | 59.6 | 39.5 | 110.2 | 76.2 | 2024 |
| 10 | SATLF [86] | Encoder-decoder | 60.9 | 40.8 | 110.9 | 77.5 | 2024 |
| 11 | GLG [87] | Encoder-decoder | 63.6 | 42.4 | 107.8 | 80.8 | 2024 |
| 12 | CMGNet [88] | Encoder-decoder | 54.2 | 36.9 | 96.2 | 74.5 | 2024 |
| 13 | ViT/L14 [89] | Encoder-decoder | 60.1 | 41.4 | 121.5 | 78.2 | 2023 |
| 14 | CARE [90] | Encoder-decoder | 56.3 | 39.11 | 106.9 | 75.6 | 2023 |
| 15 | VCRN [91] | Encoder-decoder | 59.1 | 37.4 | 100.8 | 74.6 | 2023 |
| 16 | RSFD [92] | Encoder-decoder | 51.2 | 35.7 | 96.7 | 72.9 | 2023 |
| 17 | vc-HRNAT [26] | Encoder-decoder | 57.7 | 36.8 | 98.1 | 74.1 | 2022 |
| 18 | SemSynAN [93] | Encoder-decoder | 64.4 | 41.9 | 111.5 | 79.5 | 2021 |
| 19 | TTA [94] | Encoder-decoder | 51.8 | 35.5 | 87.7 | 72.4 | 2021 |
| 20 | AVSSN [93] | Encoder-decoder | 62.3 | 39.2 | 107.7 | 76.8 | 2021 |
| 21 | RMN [80] | Encoder-decoder | 54.6 | 36.5 | 94.4 | 73.4 | 2020 |
| 22 | SAAT [95] | Encoder-decoder | 46.5 | 33.5 | 81.0 | 69.4 | 2020 |
| 23 | VNS-GRU [79] | Encoder-decoder | 66.5 | 42.1 | 121.5 | 79.7 | 2020 |
| 24 | JSRL-VCT [96] | Encoder-decoder | 52.8 | 36.1 | 87.8 | 71.8 | 2019 |
| 25 | GFN-POS [27] | Encoder-decoder | 53.9 | 34.9 | 91.0 | 72.1 | 2019 |
| 26 | DCM [97] | Encoder-decoder | 53.3 | 35.6 | 83.1 | 71.2 | 2019 |
| 27 | CEMSA [33] | Transformer | 60.9 | 40.5 | 117.9 | 77.9 | 2024 |
| 28 | SnapCap [42] | Transformer | 51.7 | 36.5 | 94.7 | 73.5 | 2024 |
| 29 | COST [41] | Transformer | 56.3 | 37.2 | 99.2 | 74.3 | 2023 |
| 30 | TextKG [32] | Transformer | 60.8 | 38.5 | 105.2 | 75.1 | 2023 |
| 31 | UAT-FEGs [35] | Transformer | 56.5 | 36.4 | 92.8 | 72.8 | 2022 |
| 32 | HMN [36] | Transformer | 59.2 | 37.7 | 104.0 | 75.1 | 2022 |
| 33 | O2NA [98] | Transformer | 55.4 | 37.4 | 96.4 | 74.5 | 2021 |
| 34 | STGN [99] | Transformer | 52.2 | 36.9 | 93.0 | 73.9 | 2020 |
| 35 | SBAT [34] | Transformer | 53.1 | 35.3 | 89.5 | 72.3 | 2020 |
| 36 | TVT [38] | Transformer | 53.21 | 35.23 | 86.76 | 72.01 | 2018 |
| 37 | STAN [45] | Attention | 40.0 | 40.2 | 72.1 | 55.2 | 2025 |
| 38 | IVRC [100] | Attention | 58.8 | 40.3 | 116.0 | 77.4 | 2024 |
| 39 | MesNet [101] | Attention | 54.0 | 36.3 | 100.1 | 73.6 | 2023 |
| 40 | ADL [47] | Attention | 54.1 | 35.7 | 81.6 | 70.4 | 2022 |
| 41 | STAG [102] | Attention | 58.6 | 37.1 | 91.5 | 73.0 | 2022 |
| 42 | SemSynAN [103] | Attention | 64.4 | 41.9 | 111.5 | 79.5 | 2021 |
| 43 | T-DL [104] | Attention | 55.1 | 36.4 | 85.7 | 72.2 | 2021 |
| 44 | SGN [105] | Attention | 52.8 | 35.5 | 94.3 | 72.9 | 2021 |
| 45 | ORG-TRL [106] | Attention | 54.3 | 36.4 | 95.2 | 73.9 | 2020 |
| 46 | MAM-RNN [107] | Attention | 41.3 | 32.2 | 53.9 | 68.8 | 2017 |