Abstract

Large language models (LLMs) have shown remarkable capabilities across a wide range of tasks, including text generation and code synthesis. Their widespread applicability, however, raises substantial concerns about security, privacy, and potential misuse. Among recent legislative efforts, the most notable is the proposed EU AI Act, which classifies specific AI applications as high-risk; privacy regulations such as the GDPR and the HIPAA Privacy Rule impose further requirements on systems that process personal data. This paper introduces SecureLLM, a novel framework that integrates lightweight cryptographic protocols, decentralized fine-tuning strategies, and differential privacy to mitigate data leakage and adversarial attacks in LLM ecosystems. We propose SecureLLM as a conceptual security architecture for LLMs, offering a unified approach that can be adapted and tested in real-world deployments. While extensive empirical benchmarks are deferred to future studies, we include a small-scale demonstration illustrating how differential privacy can reduce membership inference risks with manageable overhead. The SecureLLM framework underscores the potential of combining cryptography, differential privacy, and decentralized fine-tuning to build safer and more compliant AI systems.

1. Introduction

The emergence of large language models (LLMs) has fundamentally changed the landscape of natural language processing (NLP) and artificial intelligence (AI) at large. These powerful models, ranging from GPT-3.5 and GPT-4 to BLOOM and PaLM, have demonstrated increasingly sophisticated abilities in text generation, summarization, translation, and reasoning tasks. Their widespread adoption in various industries (e.g., healthcare, finance, education, and law) attests to their transformative potential. However, this adoption also raises critical security and privacy risks, such as data leakage, model inversion attacks, membership inference attacks, and malicious manipulation through prompt-based attacks (e.g., adversarial prompts or “jailbreaking”) [1,2]. In light of evolving regulatory frameworks, exemplified by the EU AI Act [3], which introduces a tiered approach to AI risk classification, organizations deploying LLMs may need to implement more stringent safeguards if these models fall into “high-risk” categories [4].

Over the past few years, researchers have increasingly recognized that LLMs may inadvertently expose sensitive training data owing to their large parameter spaces and complex internal representations [5]. Recent work has also shown that advanced querying techniques and model distillation strategies can make membership inference attacks more effective, underscoring the urgent need for stronger privacy protection [6]. As LLMs continue to grow (often to hundreds of billions of parameters), adversaries can exploit novel attack surfaces to extract proprietary or user-specific information. This reality has prompted calls for a new generation of secure AI tools that integrate modern cryptography, privacy-preserving technologies, and robust distributed training protocols [7]. In this context, even a minimal proof-of-concept experiment, such as the small-scale differential privacy test reported in Section 5.5, can illustrate how added security measures affect overhead and membership inference risk. In contrast to prior works that focused on a single technique (e.g., differential privacy alone or purely federated training), our contribution lies in holistically integrating (1) lightweight cryptography, (2) decentralized fine-tuning using multi-party computation (MPC), and (3) differentially private inference into a single cohesive framework tailored to large language models. By systematically layering these methods, the SecureLLM architecture aims to minimize data leakage risks across all stages of the LLM pipeline (training, fine-tuning, and inference) rather than only at training time. This combination, rarely treated in a unified manner in the existing literature, supports compliance with emerging regulations and a better balance between efficiency and security in real-world LLM deployments.

Existing research has examined techniques such as differential privacy [8], homomorphic encryption [9], and secure enclaves [10] to bolster the privacy and security of LLM deployments. While each of these techniques has shown promising results individually, comprehensive frameworks that combine cryptographic primitives, differential privacy, and decentralized learning strategies for large models remain relatively unexplored. Closing this gap is pressing given the high stakes of LLM deployments in real-world environments that process sensitive user data, private corporate information, and confidential government communications. Additionally, if these systems are used in contexts deemed high-risk by regulations such as the EU AI Act (e.g., medical diagnostics, recruitment, or critical infrastructure), ensuring robust security and privacy is paramount not only for technical efficacy but also for legal compliance [11].

To address these problems, we propose SecureLLM, which has the following aims:

- Lightweight cryptography: Integrating modular encryption techniques with minimal computational overhead;

- Decentralized fine-tuning: Enabling collaborative training while maintaining confidentiality through multi-party computation (MPC);

- Differentially private inference: Preserving individual user data privacy by injecting carefully calibrated noise during both the training and inference stages.

Our contributions can be summarized as follows:

- Framework design: We introduce a unified approach to safeguarding LLMs through cryptography, differential privacy, and decentralized fine-tuning;

- Conceptual guidance: We present high-level best practices for implementing SecureLLM in real-world deployments and discuss how its security measures could address regulatory requirements for high-risk AI systems, including those under the EU AI Act.

Overview and organization: We first provide background on the security challenges facing LLMs and review related work (Section 2). Section 3 details the proposed SecureLLM framework, including formal formulations for decentralized gradient aggregation and differential privacy noise injection. Section 4 discusses implementation considerations and potential performance impacts. Section 5 outlines potential applications, empirical benchmarks, and future work, and Section 6 provides a comprehensive discussion of the results. Finally, Section 7 concludes the paper.

2. Background

2.1. Security and Privacy Challenges in LLMs

LLMs are exposed to the following forms of security threats, among others:

- Model inversion attacks: Attackers can reconstruct or exfiltrate training data via exposed parameters [12];

- Membership inference attacks: Attackers can determine whether a specific data record was used in training [13];

- Evasion attacks via prompt engineering: Malicious users craft prompts that exploit model vulnerabilities to bypass content filters and elicit restricted behaviors [14].

Recent studies indicate that adversarial prompt engineering yields increasingly sophisticated attacks, in which crafted prompts either degrade the model’s performance or compel it to disclose private training data [15]. Moreover, the mass collection of inference-time user inputs has introduced new privacy hazards, because sensitive personal or corporate information may be cached or shared at inference time [16]. Indeed, as model sizes and training corpora grow, more attack surfaces open to adversaries and malicious actors, increasing the exposure to data leakage and unauthorized model reuse [17].

Another area of growing concern is the unintentional memorization of rare or unique training examples, which can lead to privacy leakage if models reproduce these examples on request [18]. This is especially sensitive in domains such as health and finance, where even brief, unintentional exposure of personally identifiable information (PII) can be illegal and unethical [19]. Recent research has shown that, despite fine-tuning and filtering mechanisms, LLMs can reproduce recognizable segments of their training text when given adversarial prompts, particularly when the training data originate from large, uncurated internet archives.

With the proliferation of public large language model APIs, researchers have also discovered new vectors for indirect data harvesting. Attackers can exploit application integrations—such as plugins or software libraries that connect LLMs to external data sources—to stage complex data extraction campaigns [20]. Moreover, the increasing sophistication of membership inference attacks has led to “label-only” or “black-box” methods that do not require extensive knowledge of the model internals [21]. These threats raise considerable alarm for organizations that rely on LLM-based solutions for critical tasks or that handle personal health records (PHRs), financial documents, or intellectual property.

Recent work has also highlighted the challenge of “prompt chaining”, wherein an attacker crafts multiple queries that leverage partial information revealed in earlier responses [22]. Over time, such queries may accumulate enough fragments to reconstruct confidential data. In practice, distinguishing benign queries from hostile ones can be subtle, particularly where user interactions are not tightly controlled. Security teams are thus exploring user-level audit trails, more sophisticated anomaly detection, and finer-grained content filters as countermeasures against data exfiltration using sequential queries.

An additional concern is the potential misuse of models to generate disinformation or harmful content. Although not always classified as direct privacy leakage, disinformation generation can constitute a security threat in contexts where social engineering or phishing attacks leverage LLM-generated text [23]. For example, adversaries can use a model to produce highly personalized spear-phishing messages that mimic a person’s writing style, drawing on scattered, user-specific information that the model inadvertently reveals. In short, these vulnerabilities call for strong security protocols that protect the training data, preserve user anonymity at inference, and monitor potential interactions with hostile entities. Fragmented solutions, such as adding differential privacy at training time alone, cannot fully address the multifaceted security challenges of LLMs. Methods that integrate cryptography, privacy-preserving training, secure hardware, and robust inference-time monitoring collectively pave the way for safer LLM deployments in real-world, high-stakes environments.

In response to evolving regulatory requirements—particularly those outlined in the EU AI Act for high-risk AI systems—the SecureLLM framework has been designed with a multi-layered security architecture that goes beyond the traditional measures. By integrating lightweight cryptography, decentralized fine-tuning via multi-party computation, and differentially private inference, SecureLLM deploys robust security mechanisms at every stage of the model’s lifecycle. This layered approach not only addresses inherent technical vulnerabilities but also aligns with the regulatory expectations in terms of data protection, user privacy, and accountability in high-risk applications.

2.2. Existing Solutions

Differential privacy: Differential privacy (DP) adds calibrated noise during training, masking the contributions of individual training examples [24]. In recent years, there has been increasing interest in applying DP mechanisms to very large transformer models; for example, several works have investigated adaptive clipping strategies, techniques for distributing noise injection across data-parallel workers, and privacy budgets tailored to billion-parameter models.
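For reference, the guarantee these mechanisms target is usually stated as (ε, δ)-differential privacy, and the noise injected during training is typically Gaussian noise scaled to the query's sensitivity. The block below restates the standard definition and the classical sufficient condition for the Gaussian mechanism; the symbols (M, f, D, D′, S, σ, c) are our own notation and are not taken from the SecureLLM formulations in Section 3.

```latex
% (\varepsilon, \delta)-differential privacy: for all neighbouring datasets D, D'
% (differing in a single record) and every measurable set of outputs S,
\Pr[\mathcal{M}(D) \in S] \;\le\; e^{\varepsilon}\,\Pr[\mathcal{M}(D') \in S] + \delta

% Gaussian mechanism: perturb a query f with noise scaled to its L2 sensitivity
\mathcal{M}(D) = f(D) + \mathcal{N}\!\left(0, \sigma^{2} I\right),
\qquad
\Delta_2 f = \max_{D \sim D'} \lVert f(D) - f(D') \rVert_2

% Classical sufficient condition for (\varepsilon, \delta)-DP when 0 < \varepsilon < 1:
\sigma \;\ge\; c\,\frac{\Delta_2 f}{\varepsilon},
\qquad
c^{2} > 2 \ln\!\left(\frac{1.25}{\delta}\right)
```

In DP training, f is the sum of per-example gradients and Δ₂f is enforced by clipping each per-example gradient to a fixed norm.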

Homomorphic encryption: Fully homomorphic encryption (FHE) enables computation on encrypted data without decryption [25]. Hybrid techniques that rely on partial or approximate homomorphic schemes have been developed to reduce the computational overhead of FHE while maintaining strong security guarantees where raw data confidentiality is essential [26].
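To make the idea of a partially homomorphic scheme concrete, the sketch below implements textbook Paillier encryption, which supports addition of plaintexts under encryption (enough, for instance, to sum integer-encoded gradient values) but not arbitrary computation. It is an illustration under our own assumptions, not a component of SecureLLM: the function names are hypothetical, and the toy modulus is far too small and too structured for real use.

```python
import math
import secrets


def keygen(p: int = 2**31 - 1, q: int = 2**61 - 1):
    # Known Mersenne primes, chosen only because their primality is easy to check;
    # this ~92-bit modulus is insecure. Real deployments use >= 2048-bit moduli.
    n = p * q
    lam = math.lcm(p - 1, q - 1)   # Carmichael function lambda(n)
    mu = pow(lam, -1, n)           # valid for the simplified generator g = n + 1
    return n, (n, lam, mu)         # (public key, private key)


def encrypt(n: int, m: int) -> int:
    """Encrypt 0 <= m < n as c = g^m * r^n mod n^2 with g = n + 1."""
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1   # fresh randomness per ciphertext
        if math.gcd(r, n) == 1:
            break
    return pow(n + 1, m, n2) * pow(r, n, n2) % n2


def decrypt(priv, c: int) -> int:
    n, lam, mu = priv
    l_value = (pow(c, lam, n * n) - 1) // n   # L(x) = (x - 1) / n
    return l_value * mu % n


def add_ciphertexts(n: int, c1: int, c2: int) -> int:
    # Multiplying ciphertexts adds the underlying plaintexts (mod n).
    return c1 * c2 % (n * n)
```

Raising a ciphertext to an integer power k likewise multiplies its plaintext by k, which is why such schemes suit weighted sums but not the non-linear operations inside a transformer.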

Secure enclaves: Secure enclaves are hardware-isolated execution environments that protect sensitive computation from the operating system and other privileged software [27].

Decentralized learning: Recent work in federated and decentralized learning has studied how to train models collaboratively without revealing raw data. Techniques from multi-party computation allow several parties to jointly compute any function while keeping their inputs private [28].
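A minimal illustration of the multi-party computation idea is a secure sum built from additive secret sharing: each party splits its private value into random-looking shares, and only the total is ever reconstructed. The sketch assumes semi-honest parties and secure point-to-point channels; the field size, the fixed-point scale, and all function names are hypothetical choices for illustration.

```python
import secrets

MODULUS = 2**61 - 1   # hypothetical field size for share arithmetic
SCALE = 2**16         # hypothetical fixed-point scale for real-valued gradients


def encode(x: float) -> int:
    return round(x * SCALE) % MODULUS


def decode(v: int) -> float:
    if v > MODULUS // 2:          # upper half of the field represents negatives
        v -= MODULUS
    return v / SCALE


def share(value: int, n_parties: int) -> list[int]:
    """Split value into n additive shares; any n-1 of them are uniformly random."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares


def secure_sum(private_inputs: list[int]) -> int:
    n = len(private_inputs)
    # dealt[i][j] is the share that party i sends to party j.
    dealt = [share(x, n) for x in private_inputs]
    # Party j adds only the shares it received, never seeing another party's input.
    partial = [sum(dealt[i][j] for i in range(n)) % MODULUS for j in range(n)]
    # Combining the published partial sums reveals the total and nothing more.
    return sum(partial) % MODULUS
```

For example, `decode(secure_sum([encode(0.5), encode(-0.25), encode(1.0)]))` recovers 1.25 although no party sees another party's value.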

Table 1 compares existing solutions for LLM security and privacy with the proposed method. While each of these approaches has specific strengths, they tend to be deployed in isolation in practice, which limits their overall security. For instance, although differential privacy can protect training data by bounding the influence of any individual record, it does not address adversarial behavior at inference time, nor does it prevent collusion with untrustworthy entities during training [29]. Similarly, homomorphic encryption can secure raw data but may become computationally expensive for full-scale LLM operations, creating potential bottlenecks in production.

Table 1.

Comparison of the existing solutions for LLM security and privacy relative to the proposed method.

Research since 2021 has intensified efforts to combine or “stack” these techniques to deliver multi-layered defense. One prominent thread explores the synergy between federated learning and secure enclaves: decentralized participants train local models within encrypted or protected hardware, thereby avoiding the need to share raw data with a central server [30]. Another emerging line examines how multi-party computation (MPC) protocols can be adapted to large models, particularly by compressing gradient information before encryption. These solutions remain promising, especially for corporate consortia and cross-institution collaborations, but still demand careful scheduling and key management to prevent partial data leaks.

Additionally, organizations have begun to adopt “explainable AI” (XAI) and accountability frameworks to trace the pathways through which an LLM arrives at specific outputs [31]. Although XAI does not directly encrypt data or apply DP, it offers a supplementary safeguard: if a model inadvertently reveals sensitive content, an audit trail may help pinpoint the underlying cause (e.g., memorized examples in the training set).

Nonetheless, a strong imperative remains for converged architectures that combine cryptography, differential privacy, and decentralized learning into a single system. Such frameworks must demonstrate enough adaptability to function under real-world constraints (e.g., limited GPU availability, variable network bandwidth, evolving regulations) while offering robust countermeasures against adversaries. SecureLLM arises in response to this demand, reducing the complexity and risk of ad hoc integrations.

Taken together, the existing solutions illustrate both the promise and limitations of the current LLM security methods. Researchers and industry practitioners are increasingly acknowledging that only a combination of techniques—applied throughout an AI’s lifecycle—can effectively safeguard against modern threats. This viewpoint motivates the SecureLLM framework, which leverages lightweight cryptographic approaches, decentralized protocols, and differentially private inference to secure LLM training, fine-tuning, and real-world inference scenarios.

In contrast to solutions that focus on a single technique (e.g., differential privacy during training [8] or homomorphic encryption for raw data [9]), SecureLLM addresses every phase of the LLM lifecycle (training, fine-tuning, and inference) through a combination of lightweight cryptography, MPC-based decentralized updates, and differential privacy for inference. This combination reduces the attack surface beyond what any single method can achieve alone. Moreover, SecureLLM balances performance and security by minimizing cryptographic overhead and applying noise injection selectively, with the aim of achieving lower latency than systems based on fully homomorphic encryption (FHE) while offering stronger privacy guarantees than standard federated or enclave-only approaches. For instance, secure enclaves [10] protect computations in trusted hardware but do not inherently mitigate membership inference from public queries, and federated approaches keep data local yet risk exposing plaintext gradients. By layering cryptography, decentralized fine-tuning, and DP-based inference in one unified framework, SecureLLM attains a more holistic security posture. Although exact performance metrics will vary across implementations, a principal advantage of SecureLLM lies in avoiding the steep computational costs of FHE while restricting the exposure of plaintext data and gradients more effectively than federated-only methods. Our small-scale test (Section 5.5) further illustrates how even a partial SecureLLM deployment reduces membership inference vulnerability, underlining its value over narrowly focused solutions.

In recent years, a variety of works have begun to construct composite frameworks that integrate multiple privacy protection strategies. For example, techniques that combine federated learning with differential privacy or with secure multi-party computation have produced encouraging results in balancing privacy guarantees and model utility. However, these hybrid methods often struggle to scale to the size and complexity of modern large language models, or they incur significant computational overhead. In contrast, SecureLLM proposes the systematic integration of lightweight cryptography, decentralized fine-tuning, and differentially private inference, offering a unified solution that not only safeguards privacy across the entire LLM lifecycle but also minimizes the overhead otherwise associated with fully homomorphic or enclave-based schemes. By situating our work within this emerging class of hybrid frameworks, we aim to address existing limitations and offer a scalable, adaptable, and regulation-ready alternative.

2.3. Comparison with Existing References

While our discussion in Section 2.2 outlined several methods for protecting privacy in large language models (LLMs), it is useful to compare these methods side by side and underscore how SecureLLM differs from or improves upon them.

- Differential privacy (DP): Prior works have shown how DP can protect the privacy of individual data points during training by injecting noise into gradients [8,24,29]. For instance, Abadi et al. [32] pioneered per-sample gradient clipping and noise addition to bound the privacy loss in neural network training. These methods, however, typically focus solely on training-time privacy rather than providing security at inference or preventing advanced prompt-based attacks. In contrast, SecureLLM incorporates DP both at training/fine-tuning and inference time, which helps reduce membership inference threats after model deployment.

- Homomorphic encryption (HE): Fully homomorphic encryption techniques enable computation on encrypted data [9,25]. Although this approach theoretically offers strong data confidentiality, it remains computationally demanding for multi-billion-parameter LLMs. Emerging research on leveled and approximate HE [26] has improved its efficiency somewhat, yet production-scale adoption is still hindered by its heavy computational overhead. By contrast, SecureLLM employs lightweight encryption (AES-GCM combined with ephemeral key exchange), which is more tractable for real-time, large-scale gradient exchanges and inference.

- Secure enclaves and trusted execution environments (TEEs): Security frameworks built on hardware enclaves [10,27] provide strong isolation but require specialized hardware and can still be susceptible to side-channel attacks if not properly configured. Moreover, enclaves alone do not inherently mitigate membership inference attacks on model outputs. SecureLLM instead places primary emphasis on decentralized fine-tuning via multi-party computation (MPC), which does not rely on trusted hardware at every node.

- Federated and decentralized learning: Federated or decentralized learning methods keep the data local and transmit model updates (gradients) to a central or distributed aggregator [28]. They prevent raw data from leaving local nodes, yet naive gradient sharing can still reveal sensitive patterns if an adversary intercepts the updates. By adding an encryption layer for gradients plus an MPC-based aggregator, SecureLLM ensures that no single party observes others’ plaintext updates. Complementary techniques, such as top-k sparsification, help curb communication overhead.

- Our added value: SecureLLM is distinguished from these earlier works by holistically weaving together the following:

- Lightweight cryptography for gradient and key management;

- Decentralized fine-tuning under MPC;

- Differentially private inference for post-training protection.

In this way, SecureLLM covers multiple phases of the LLM lifecycle (training, fine-tuning, and inference) in a single framework with a tunable performance overhead.
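The per-sample gradient clipping and noise addition attributed to Abadi et al. [32] in the comparison above can be sketched in NumPy as follows. This is a minimal single-step illustration under our own assumptions (flattened parameters, precomputed per-example gradients, a plain SGD update); privacy accounting across steps is omitted, and the name `dp_sgd_step` is hypothetical rather than part of SecureLLM or any library.

```python
import numpy as np


def dp_sgd_step(params, per_example_grads, lr, clip_norm, noise_multiplier, rng):
    """One DP-SGD update: clip each example's gradient, sum, add Gaussian noise, average.

    per_example_grads has shape (batch_size, num_params).
    """
    batch_size = per_example_grads.shape[0]
    norms = np.linalg.norm(per_example_grads, axis=1, keepdims=True)
    # Scale down any per-example gradient whose L2 norm exceeds clip_norm,
    # which bounds each example's contribution (the sensitivity) by clip_norm.
    factors = np.minimum(1.0, clip_norm / np.maximum(norms, 1e-12))
    clipped = per_example_grads * factors
    # Noise standard deviation is proportional to that sensitivity bound.
    noise = rng.normal(0.0, noise_multiplier * clip_norm, size=params.shape)
    noisy_mean = (clipped.sum(axis=0) + noise) / batch_size
    return params - lr * noisy_mean
```

With `noise_multiplier=0` the function reduces to clipped SGD, which makes the clipping step easy to check in isolation; the noise multiplier together with the sampling rate and number of steps then determines the (ε, δ) guarantee.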

3. Proposed Methods: The SecureLLM Framework

SecureLLM combines cryptography, decentralized fine-tuning, and differential privacy in a layered approach to meeting these requirements. Whereas previous works treated these components in isolation, SecureLLM harmonizes lightweight encryption, collaborative gradient aggregation, and dynamic noise injection within one end-to-end pipeline, affording greater security to modern AI deployments [33]. This integrated design aims to protect sensitive training data and inference processes with minimal overhead and without noticeably degrading core model performance. Building on existing theory of secure distributed learning, SecureLLM’s design philosophy emphasizes modularity, adaptability, and end-to-end protection. By treating data security and privacy as first-class design concerns, we construct each layer so that it can be adapted to varying hardware, data governance policies, and risk profiles. In practice, different organizations might prioritize certain sub-layers or tune parameters differently (e.g., adjusting key rotation intervals in the cryptography layer) depending on their regulatory environment or the sensitivity of their data.

Another central consideration is how to balance the tension between usability and security. In older frameworks, cryptographic protocols sometimes created overhead that rendered large-scale training infeasible. SecureLLM addresses this by combining symmetric and asymmetric cryptography in a way that can be tailored to specific deployment scales. Similarly, differential privacy schemes have traditionally struggled with the trade-off between privacy loss and model utility, but SecureLLM’s approach of distributing noise over both training and inference allows more targeted protection of sensitive interactions.

Below, we detail each of the three layers of SecureLLM, explaining each component’s conceptual underpinnings and technical contributions. Although the layers are presented sequentially, in practice they can be integrated and tuned together, creating a dynamic security ecosystem that responds to real-time performance and compliance needs.

Integration of security layers: The three layers of SecureLLM are designed to work in unison: lightweight cryptography secures data in transit, decentralized fine-tuning aggregates the encrypted gradients securely, and differential privacy at inference protects the final outputs against membership inference. The system is engineered so that the overhead introduced by cryptographic operations is offset by efficient MPC protocols, while the DP noise calibration is tuned to maintain model utility. However, stacking lightweight cryptography, fine-grained decentralization, and differentially private inference can produce interaction effects that influence the overall framework’s effectiveness. For example, sequential stacking can accumulate latency, especially in applications that combine shared ephemeral key rotation with decentralized gradient aggregation. Additionally, the simultaneous use of differential privacy and cryptographic measures may result in compounded noise levels, potentially degrading model accuracy beyond acceptable thresholds. Furthermore, interactions between cryptographic protocols and MPC-based aggregation could inadvertently introduce unforeseen vulnerabilities, such as weakened randomness due to overlapping entropy sources or compromised key exchange, that could undermine other security layers.

To reduce such integration risks, careful and structured parameter tuning is required for every layer. This entails balancing noise injection so that the privacy guarantees do not unduly degrade utility, and coordinating the cryptographic schemes with the scheduling cycles of decentralized aggregators to optimize latency. Additionally, comprehensive cross-layer vulnerability assessments and rigorous penetration testing will be performed in future work to proactively identify and address integration vulnerabilities. By addressing these interaction effects directly, and through a holistic approach to security evaluation, SecureLLM aims to provide robust, practical, and durable security guarantees throughout the entire LLM lifecycle.

To address the compounded noise, cumulative delay, and unforeseen vulnerabilities that may arise from layering lightweight cryptography, decentralized fine-tuning, and differentially private inference, SecureLLM adopts a multi-pronged strategy. First, a systematic cross-layer parameter tuning mechanism is proposed to calibrate noise injection levels, encryption schedules, and aggregation frequencies across the different layers. Adaptive algorithms continuously monitor performance metrics (e.g., throughput, latency, and error rates) and security parameters (e.g., privacy loss and anomaly detection scores), dynamically adjusting these settings to mitigate the effects of compounded noise and delay. Second, the system integrates comprehensive vulnerability assessment processes, including automated penetration testing and anomaly detection, to find and patch unforeseen vulnerabilities caused by inter-layer interactions. Finally, SecureLLM uses end-to-end cryptographic logging and audit trails to provide transparency across all layers, so that integration issues can be diagnosed and fixed quickly. Together, these controls are intended to ensure that the integrated system maintains its security guarantees without a significant loss of performance.
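One concrete way to track the “privacy loss” parameter monitored above is a privacy accountant that refuses further DP releases once a budget is exhausted. The sketch below assumes basic sequential composition, under which mechanisms satisfying (ε_i, δ_i)-DP jointly satisfy (Σε_i, Σδ_i)-DP; this bound is conservative, and tighter accountants (e.g., Rényi-DP or moments accountants) would typically be preferred in practice. The class name and budget values are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class BasicCompositionAccountant:
    """Track cumulative (epsilon, delta) spend under basic sequential composition."""

    epsilon_budget: float
    delta_budget: float
    spent: list = field(default_factory=list)   # history of (eps, delta) charges

    @property
    def epsilon_spent(self) -> float:
        return sum(e for e, _ in self.spent)

    @property
    def delta_spent(self) -> float:
        return sum(d for _, d in self.spent)

    def can_spend(self, eps: float, delta: float = 0.0) -> bool:
        # Small tolerance so floating-point rounding does not block an exact fit.
        return (self.epsilon_spent + eps <= self.epsilon_budget + 1e-12
                and self.delta_spent + delta <= self.delta_budget + 1e-12)

    def spend(self, eps: float, delta: float = 0.0) -> None:
        if not self.can_spend(eps, delta):
            raise RuntimeError("privacy budget exhausted; refuse release or retune noise")
        self.spent.append((eps, delta))
```

An adaptive tuner could consult `can_spend` before each noisy training step or inference release and, when the remaining budget runs low, increase the noise scale or halt further releases.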

Each organization or data provider (labeled as a “local node”) locally encrypts its gradients using lightweight symmetric ciphers (e.g., AES-GCM) and shares only encrypted updates with an MPC-based aggregator. The aggregator, operating under multi-party computation protocols, never decrypts individual gradients but combines them securely into a global model update. Key management for data encryption is handled via ephemeral key exchanges (as further described below). During inference, the model injects calibrated noise into the outputs (Section 3.3) to preserve user-level privacy. This layered pipeline prevents any single party from accessing raw data or unencrypted gradients while also thwarting membership inference attacks at inference time. Recent work on secure multi-party computation and robust aggregation provides practical methods for detecting malicious gradient injections and Sybil attacks [34,35].

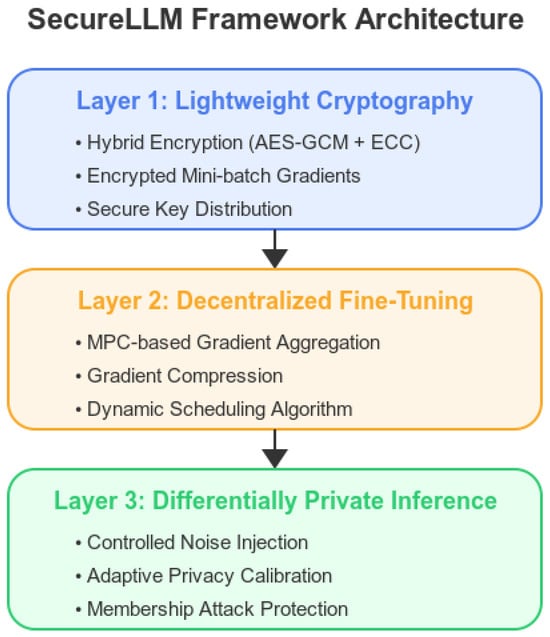

3.1. Layer 1: Lightweight Cryptography

To minimize overhead, we adopt a hybrid encryption approach that uses symmetric-key encryption (e.g., AES-GCM) for bulk data and asymmetric cryptography (e.g., elliptic curve cryptography, ECC) for key exchange. Specifically, we use AES-GCM with a 256-bit key and Curve25519 for the ECC key exchange, providing both robust encryption and efficient key management throughout the training process. For enhanced security, ephemeral keys are generated for each training round using an ECDH protocol and are destroyed immediately after use to prevent replay attacks. During training, we encrypt mini-batches of gradients, ensuring that the data remain confidential even if an attacker gains partial access to the training pipeline. In contrast to conventional schemes that rely solely on homomorphic encryption, which can be prohibitively costly at scale, our method pairs high-speed symmetric encryption for continuous data transfer with robust asymmetric primitives for secure key distribution. Here, “lightweight cryptography” designates cryptographic methods that prioritize low runtime overhead and manageable memory footprints while maintaining strong security guarantees, making them viable for large-scale distributed training [36]. Concretely, typical batch-level encryption and decryption operations add only a small fraction of extra processing time (on the order of milliseconds or less per update) and require minimal additional memory (often a few megabytes at most), relative to the massive parameter storage of modern large language models. Figure 1 presents the architecture of the SecureLLM framework.

Figure 1.

SecureLLM framework architecture.
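The key-exchange half of the hybrid scheme described at the start of this subsection can be sketched with the Python standard library alone. The sketch assumes X25519 as specified in RFC 7748 for the per-round ephemeral ECDH and HKDF-SHA256 (RFC 5869) to turn the shared secret into a 256-bit session key; the helper names and the `securellm-round-` context label are hypothetical. The code is deliberately simple and not constant-time, so it is for illustration only: a deployment would use a vetted cryptographic library and would then pass the derived key to AES-256-GCM, which the standard library does not provide and which is therefore not shown.

```python
import hashlib
import hmac
import secrets

P = 2**255 - 19
A24 = 121665
BASE_POINT = (9).to_bytes(32, "little")


def _clamp(k: bytes) -> int:
    b = bytearray(k)
    b[0] &= 248
    b[31] &= 127
    b[31] |= 64
    return int.from_bytes(b, "little")


def x25519(k: bytes, u: bytes) -> bytes:
    """RFC 7748 Montgomery-ladder scalar multiplication (NOT constant-time)."""
    scalar = _clamp(k)
    x1 = int.from_bytes(u, "little") & ((1 << 255) - 1)   # mask the top bit
    x2, z2, x3, z3, swap = 1, 0, x1, 1, 0
    for t in reversed(range(255)):
        bit = (scalar >> t) & 1
        swap ^= bit
        if swap:
            x2, x3 = x3, x2
            z2, z3 = z3, z2
        swap = bit
        a, b = (x2 + z2) % P, (x2 - z2) % P
        aa, bb = a * a % P, b * b % P
        e = (aa - bb) % P
        c, d = (x3 + z3) % P, (x3 - z3) % P
        da, cb = d * a % P, c * b % P
        x3 = (da + cb) * (da + cb) % P
        z3 = x1 * (da - cb) * (da - cb) % P
        x2 = aa * bb % P
        z2 = e * (aa + A24 * e) % P
    if swap:
        x2, z2 = x3, z3
    return (x2 * pow(z2, P - 2, P) % P).to_bytes(32, "little")


def hkdf_sha256(ikm: bytes, salt: bytes, info: bytes, length: int = 32) -> bytes:
    prk = hmac.new(salt, ikm, hashlib.sha256).digest()   # extract step
    okm, block, counter = b"", b"", 1
    while len(okm) < length:                               # expand step
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


def ephemeral_keypair() -> tuple[bytes, bytes]:
    private = secrets.token_bytes(32)
    return private, x25519(private, BASE_POINT)


def round_key(my_private: bytes, peer_public: bytes, round_id: int) -> bytes:
    shared = x25519(my_private, peer_public)
    if shared == bytes(32):   # RFC 7748 recommends rejecting an all-zero result
        raise ValueError("low-order peer public key rejected")
    info = b"securellm-round-" + str(round_id).encode()   # hypothetical context label
    return hkdf_sha256(shared, salt=bytes(32), info=info)
```

Each node would generate a fresh `ephemeral_keypair()` per round, exchange public keys, and discard its private key once `round_key` returns (Python cannot guarantee the bytes are wiped from memory, another reason to rely on a vetted library). Binding the round number into the HKDF `info` field also gives each round a distinct key even if a key pair were accidentally reused.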

Adopting this hybrid cryptographic method offers several benefits. First, it minimizes latency in large-scale gradient exchanges, which is crucial when collaborating across multiple data centers or organizational units. Second, frequent key rotation becomes more manageable because the asymmetric layer only needs to handle the distribution of new session keys rather than encrypting entire gradients. Third, such a setup can be integrated with emerging post-quantum cryptographic primitives if organizations anticipate long-term risks from quantum-capable adversaries [37]. Because SecureLLM’s cryptographic layer is modular, older algorithms (e.g., RSA) can be replaced with elliptic curve or post-quantum techniques without substantial changes to the rest of the framework.

Moreover, this layer can integrate with both the decentralized fine-tuning and differential privacy layers, ensuring consistent security protocols. For instance, if a gradient aggregator detects anomalous behavior or a suspicious update rate, it can trigger a forced key rotation to mitigate potential key compromise. All such interventions are facilitated through standardized APIs for key management and encryption, allowing the upper layers to remain abstracted from the underlying cryptographic specifics.
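The abstraction described above can be illustrated with a small key-management facade: upper layers only request the current key and report gradient arrivals, while the rotation policy stays hidden behind the interface. The class, its method names, and the sliding-window rate rule used as the “suspicious update rate” trigger are all hypothetical; locally generated random bytes stand in for the fresh key that a real deployment would obtain from a new ECDH exchange.

```python
import secrets
import time
from collections import deque
from typing import Optional


class SessionKeyManager:
    """Hypothetical key-management facade with anomaly-triggered rotation."""

    def __init__(self, max_updates_per_window: int = 100, window_s: float = 60.0):
        self.max_updates = max_updates_per_window
        self.window_s = window_s
        self._arrivals = deque()          # timestamps of recent gradient arrivals
        self.epoch = 0
        self._key = secrets.token_bytes(32)

    def current_key(self) -> tuple:
        return self.epoch, self._key

    def rotate(self) -> None:
        # Placeholder: a deployment would derive the new key from a fresh ECDH
        # exchange instead of local randomness, then announce the new epoch to peers.
        self._key = secrets.token_bytes(32)
        self.epoch += 1

    def record_update(self, now: Optional[float] = None) -> bool:
        """Log one gradient arrival; force a rotation if the rate looks anomalous.

        Returns True when a rotation was triggered.
        """
        now = time.monotonic() if now is None else now
        self._arrivals.append(now)
        # Drop arrivals that fell out of the sliding window.
        while now - self._arrivals[0] > self.window_s:
            self._arrivals.popleft()
        if len(self._arrivals) > self.max_updates:
            self.rotate()
            self._arrivals.clear()
            return True
        return False
```

Because callers only see `current_key()` and the epoch counter, the rotation rule could be swapped for a different anomaly signal without changing the aggregation or DP layers.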

A critical aspect of the cryptography layer is key management. SecureLLM enforces ephemeral key rotation for each training round, ensuring that a key compromised in one round does not grant attackers access to future gradient transmissions. This process may use an established key exchange protocol (e.g., ECDH over Curve25519) under a trusted certificate authority and, if desired, threshold cryptography to distribute partial keys among multiple nodes. Consequently, an attacker who intercepts network traffic and compromises a single key can decrypt at most that round’s transmissions, reducing the risk of data leakage.
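One standard building block for distributing partial keys among nodes is Shamir’s t-of-n secret sharing, sketched below for a 256-bit key. It assumes a prime field large enough to hold the key (here the Mersenne prime 2^521 − 1); the function names are hypothetical. Note that a full threshold scheme would avoid ever reassembling the key in one place (e.g., via threshold decryption), whereas this sketch reconstructs it for simplicity.

```python
import secrets

PRIME = 2**521 - 1   # Mersenne prime; comfortably larger than a 256-bit key


def split_secret(secret: int, threshold: int, n_shares: int) -> list[tuple[int, int]]:
    """Shamir t-of-n sharing: any `threshold` shares reconstruct, fewer reveal nothing."""
    if not 0 <= secret < PRIME:
        raise ValueError("secret must lie in the field")
    if not 1 <= threshold <= n_shares:
        raise ValueError("need 1 <= threshold <= n_shares")
    # Random polynomial of degree threshold-1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]

    def poly(x: int) -> int:
        acc = 0
        for c in reversed(coeffs):   # Horner evaluation
            acc = (acc * x + c) % PRIME
        return acc

    return [(x, poly(x)) for x in range(1, n_shares + 1)]


def recover_secret(shares: list[tuple[int, int]]) -> int:
    """Lagrange interpolation of the polynomial at x = 0."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = num * (-xj) % PRIME
                den = den * (xi - xj) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret
```

For example, a session key split with `split_secret(key, 3, 5)` can be recovered from any three of the five shares, so compromising up to two nodes reveals nothing about the key.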

3.2. Layer 2: Decentralized Fine-Tuning

We employ a decentralized model training protocol inspired by federated learning but optimized for LLMs. SecureLLM uses an MPC-based aggregator that collects encrypted gradient updates from multiple clients and aggregates them without revealing the individual contributions. Importantly, the aggregator is implemented as a multi-node MPC system, which both distributes the computation and ensures that no single node can decrypt individual gradients. Furthermore, digital signatures and robust anomaly detection (e.g., threshold-based rejection) are integrated to validate each update and to mitigate risks from malicious actors, such as Sybil or backdoor attacks. This design helps organizations collaborate on LLM development without exposing proprietary or sensitive data. By encrypting every gradient end to end, SecureLLM mitigates the risk of eavesdropping on partial updates, which could otherwise reveal sensitive data patterns. Additionally, the aggregator itself operates in a cryptographically hardened environment, allowing global model updates to be combined securely even when the participants do not fully trust each other [38].
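The core idea of aggregating without revealing individual contributions can be sketched with additive secret sharing, one common MPC building block; it stands in here for a full protocol such as those provided by MP-SPDZ. The sketch assumes honest-but-curious aggregator nodes, no client dropouts, and a hypothetical fixed-point encoding (ring $\mathbb{Z}_{2^{64}}$, scale $2^{16}$). Each node only ever sees uniformly random-looking shares; only the combination of all node sums reveals the aggregate.

```python
import secrets
from typing import List

MODULUS = 2**64  # ring for additive shares
SCALE = 2**16    # hypothetical fixed-point scale for float gradients


def encode(x: float) -> int:
    return round(x * SCALE) % MODULUS


def decode(v: int) -> float:
    if v >= MODULUS // 2:  # map back to the signed range
        v -= MODULUS
    return v / SCALE


def share_gradient(grad: List[float], n_nodes: int) -> List[List[int]]:
    """Split one client's gradient into n additive shares, one per MPC node."""
    if n_nodes < 2:
        raise ValueError("need at least two aggregator nodes")
    shares = [[secrets.randbelow(MODULUS) for _ in grad] for _ in range(n_nodes - 1)]
    last = [(encode(g) - sum(col)) % MODULUS for g, col in zip(grad, zip(*shares))]
    return shares + [last]


def node_sum(received: List[List[int]]) -> List[int]:
    """Each node adds the shares it holds; it never sees a plaintext gradient."""
    return [sum(col) % MODULUS for col in zip(*received)]


def reconstruct_average(partials: List[List[int]], n_clients: int) -> List[float]:
    """Combining all node partial sums reveals only the aggregate."""
    totals = [sum(col) % MODULUS for col in zip(*partials)]
    return [decode(t) / n_clients for t in totals]


def secure_average(client_grads: List[List[float]], n_nodes: int = 3) -> List[float]:
    """Simulate the full flow: share -> per-node sums -> reconstruct the mean."""
    per_client = [share_gradient(g, n_nodes) for g in client_grads]
    partials = [node_sum([shares[k] for shares in per_client]) for k in range(n_nodes)]
    return reconstruct_average(partials, len(client_grads))
```

Fixed-point encoding is needed because secret sharing works over integers; the chosen scale bounds both precision and the largest gradient sum that avoids wrap-around.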

To handle the large parameter sizes typical of LLMs (often billions of parameters), SecureLLM incorporates gradient compression techniques such as top-k sparsification or quantization to reduce the communication overhead [39]. An adaptive scheduling algorithm determines the optimal intervals for gradient exchange, taking into account the convergence rates, fine-tuning objectives, and participants’ computational resources [40]. This “adaptive scheduling algorithm” continuously monitors the model states (e.g., the gradient variance and loss plateau signals) to dynamically adjust the frequency and volume of gradient sharing, minimizing performance degradation under cryptographic constraints.
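Top-k sparsification, one of the compression techniques named above, can be sketched as follows. The error-feedback residual (carrying the dropped gradient mass into the next round) is a common companion technique and an assumption here, not something the framework text specifies; plain Python lists stand in for framework tensors.

```python
import heapq
from typing import Dict, List


def top_k_sparsify(grad: List[float], k: int) -> Dict[int, float]:
    """Keep the k entries with the largest magnitude as {index: value}."""
    idx = heapq.nlargest(k, range(len(grad)), key=lambda i: abs(grad[i]))
    return {i: grad[i] for i in sorted(idx)}


class TopKCompressor:
    """Top-k with error feedback: dropped values are re-added next round."""

    def __init__(self, dim: int, k: int) -> None:
        self.k = k
        self.residual = [0.0] * dim

    def compress(self, grad: List[float]) -> Dict[int, float]:
        corrected = [g + r for g, r in zip(grad, self.residual)]
        sparse = top_k_sparsify(corrected, self.k)
        # Whatever was not transmitted is kept locally for the next exchange.
        self.residual = [0.0 if i in sparse else v for i, v in enumerate(corrected)]
        return sparse
```

Only the index/value pairs need to be encrypted and sent, so for k much smaller than the parameter count the encrypted payload shrinks roughly in proportion.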

Within this decentralized paradigm, each participant retains control over its local data while contributing only encrypted gradients to the global model state. This setup is particularly appealing for multi-stakeholder consortia, such as hospitals, financial institutions, or government agencies, where data-sharing agreements can be fraught with privacy or compliance issues. Running the aggregator under MPC adds a layer of security by preventing any central server from decrypting updates, thus keeping each participant’s information private even in semi-trusted or partially compromised environments.

Another significant design feature is how SecureLLM combines compression with encryption. Rather than encrypting raw gradients directly, the system first applies methods such as top-k sparsification or quantization, reducing the amount of data that must be encrypted (compression must precede encryption, because well-encrypted data are essentially incompressible). Because compression and encryption are decoupled stages, participants can tailor their compression strategies to their unique datasets, potentially improving overall model convergence while maintaining robust security.
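The compress-then-encrypt ordering can be illustrated with symmetric per-tensor 8-bit quantization. In this sketch (the function names and the per-tensor scaling choice are assumptions), the returned `payload` bytes are what would be passed to the AES-GCM layer, and the scale travels alongside them; each value shrinks from 4 bytes (float32) to 1 byte before any encryption cost is paid.

```python
from array import array
from typing import List, Tuple


def quantize_int8(grad: List[float]) -> Tuple[float, bytes]:
    """Symmetric per-tensor int8 quantization; returns (scale, payload bytes)."""
    max_abs = max((abs(g) for g in grad), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = array("b", (max(-127, min(127, round(g / scale))) for g in grad))
    return scale, q.tobytes()  # payload is what gets encrypted


def dequantize_int8(scale: float, payload: bytes) -> List[float]:
    """Inverse mapping applied after decryption on the receiving side."""
    q = array("b")
    q.frombytes(payload)
    return [v * scale for v in q]
```

The rounding error per entry is at most half the scale, which is why quantization can be more sensitive than top-k for gradients with a few very large outliers.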

In addition to standard decentralized fine-tuning, SecureLLM addresses security threats like Sybil attacks and malicious gradient injection. We propose integrating digital signatures for each gradient update, anomaly detection to flag irregular update patterns, and consensus protocols among nodes to validate gradient integrity. These measures ensure that only legitimate contributions are incorporated into model updates, enhancing the resilience of the decentralized training process.

- Algorithm 1: Secure Decentralized Gradient Aggregation.

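Let $g_i$ be the gradient update from participant $i$ and $s_i$ its digital signature. The aggregator verifies each $s_i$ and computes the aggregated gradient as

$$G = \frac{1}{|\mathcal{S}|} \sum_{i \in \mathcal{S}} g_i,$$

subject to the constraint that each $g_i$ passes an anomaly detection test $\mathcal{A}(g_i)$. A consensus mechanism is then applied such that only gradients satisfying $\mathcal{A}(g_i) = 1$ are used; $\mathcal{S}$ denotes the set of participants whose signatures verify and whose updates are accepted by this consensus.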
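Algorithm 1 can be sketched end to end as follows, under loudly stated substitutions: HMAC-SHA256 with per-participant keys stands in for true digital signatures (a real system would use, e.g., Ed25519 from a vetted library), the anomaly test $\mathcal{A}$ is an L2-norm bound, and "consensus" is a simple majority vote among several validators. All names and thresholds are hypothetical; the aggregate is the mean of the accepted updates.

```python
import hashlib
import hmac
import math
from typing import Callable, Dict, List, Sequence, Tuple

Gradient = List[float]
Validator = Callable[[Gradient], bool]


def encode_grad(g: Gradient) -> bytes:
    return ",".join(repr(x) for x in g).encode()


def sign(key: bytes, g: Gradient) -> bytes:
    """Stand-in 'signature': HMAC-SHA256, not a true public-key signature."""
    return hmac.new(key, encode_grad(g), hashlib.sha256).digest()


def norm_test(tau: float) -> Validator:
    """Anomaly test A(g): accept only updates whose L2 norm is at most tau."""
    return lambda g: math.sqrt(sum(x * x for x in g)) <= tau


def aggregate(updates: Dict[str, Tuple[Gradient, bytes]],
              keys: Dict[str, bytes],
              validators: Sequence[Validator]) -> Tuple[Gradient, List[str]]:
    accepted: List[Gradient] = []
    accepted_ids: List[str] = []
    for pid, (g, sig) in updates.items():
        key = keys.get(pid)
        if key is None or not hmac.compare_digest(sig, sign(key, g)):
            continue  # unknown identity (e.g., Sybil) or forged/tampered update
        votes = sum(1 for v in validators if v(g))
        if votes * 2 > len(validators):  # simple majority consensus on A(g)
            accepted.append(g)
            accepted_ids.append(pid)
    if not accepted:
        raise ValueError("no update passed verification")
    dim = len(accepted[0])
    mean = [sum(g[j] for g in accepted) / len(accepted) for j in range(dim)]
    return mean, accepted_ids
```

In the full design the validated updates would still be secret-shared or encrypted; the sketch shows only the verification and filtering logic on plaintext vectors.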

At the governance level, decentralized training supports more granular record-keeping for each participant’s data contributions, aligning with legal transparency requirements. Entities can adopt digital signatures for each update, improving accountability and traceability without ever exposing raw data. Since these mechanisms sit above the encryption layer, the aggregator can authenticate participants’ updates without needing access to plaintext gradients. Additional threat mitigation: Although SecureLLM primarily addresses gradient-based attacks, it is also designed to mitigate risks such as data poisoning and backdoor attacks through secure data validation and robust anomaly detection. Future extensions will incorporate specific defenses, such as input sanitization and trigger detection, to further enhance its security.

3.3. Layer 3: Differentially Private Inference

During inference, we incorporate a differential privacy layer that injects controlled noise into the query embeddings and model outputs, mitigating membership inference and model inversion attacks. Differential privacy is traditionally applied only during training to protect the training data; here, we extend it to inference-time interactions [41]. The theoretical motivation is that any data the model processes, from training examples to user queries, can be exploited by adversaries through repeated probing [42]. We therefore adapt the standard $(\varepsilon, \delta)$-DP framework to treat both query embeddings and outputs as “private data”, so that the responses to two similar or adjacent queries remain statistically indistinguishable within a defined privacy budget. Our approach builds on established methods for differentially private training, as introduced by Abadi et al. [32].

In our implementation, example values of the privacy parameters $\varepsilon$ and $\delta$ are chosen to balance privacy with utility.

The formal definition of noise injection is as follows. Given the sensitivity $S$ of the model’s output, the noise is sampled from a Gaussian distribution:

$$\eta \sim \mathcal{N}\left(0, \sigma^2 I\right), \qquad \sigma = \frac{S\sqrt{2\ln(1.25/\delta)}}{\varepsilon}.$$

The noisy output is then computed as

$$\tilde{y} = y + \eta,$$

where $y$ is the original output.

As reported in Section 5.5, the baseline model’s membership inference accuracy was 66.3%, whereas the DP variant reduced it to 60.8%, indicating a measurable drop in data memorization. Moreover, the cumulative privacy loss is continuously tracked using a standard Rényi differential privacy (RDP) accountant, so that the overall privacy budget is properly managed across multiple queries.

We also include a noise calibration mechanism that dynamically adjusts the Gaussian or Laplacian noise in the model outputs, maintaining acceptable accuracy while offering strong privacy guarantees [43]. Concretely, we define a sensitivity parameter reflecting how much a single query can change the output distribution. Based on this parameter, the framework injects noise into logits or representation layers before producing responses. Consequently, the privacy budget that once covered only training data now covers cumulative inference queries, preventing attackers from gradually extracting private information through repeated interactions.
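The calibration and injection steps can be sketched with the classical Gaussian mechanism, where the noise scale is $\sigma = S\sqrt{2\ln(1.25/\delta)}/\varepsilon$. This bound is only valid for $0 < \varepsilon < 1$, so the sketch refuses larger values (looser budgets would need a different calibration, such as the analytic Gaussian mechanism). The sensitivity `S` is assumed to be an L2 bound the deployer has already established, for example by clipping logits; the function names are hypothetical.

```python
import math
import random
from typing import List, Optional


def gaussian_sigma(sensitivity: float, epsilon: float, delta: float) -> float:
    """Classical Gaussian-mechanism noise scale (valid for 0 < epsilon < 1)."""
    if not (0 < epsilon < 1 and 0 < delta < 1):
        raise ValueError("classical bound assumes 0 < epsilon < 1 and 0 < delta < 1")
    return sensitivity * math.sqrt(2 * math.log(1.25 / delta)) / epsilon


def privatize_logits(logits: List[float], sensitivity: float, epsilon: float,
                     delta: float, rng: Optional[random.Random] = None) -> List[float]:
    """Return y + eta with eta ~ N(0, sigma^2 I), applied to the output logits."""
    rng = rng or random.Random()
    sigma = gaussian_sigma(sensitivity, epsilon, delta)
    return [y + rng.gauss(0.0, sigma) for y in logits]
```

For instance, with $S = 1$, $\varepsilon = 0.5$, and $\delta = 10^{-5}$ the noise standard deviation is about 9.69, which illustrates why bounding the sensitivity tightly (for example through clipping) matters so much for utility.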

Because we apply noise selectively at certain layers, the performance impact on critical tasks is expected to remain small. Nonetheless, a running privacy budget must be updated with each query to prevent adversaries from assembling partial data over time [44]. Administrators can tune $\varepsilon$ and $\delta$ to the setting: stricter budgets yield stronger privacy at the cost of greater noise, whereas tasks requiring higher accuracy may use a more lenient privacy budget while still maintaining a baseline level of protection.

Practically, implementing differentially private inference can involve specialized noise injection layers or output modifications. The SecureLLM framework treats this as a policy-driven setting, allowing organizations to define their own thresholds for acceptable utility vs. privacy. Logging and audit mechanisms can track real-time noise injections, generating compliance reports or triggering alerts if the cumulative privacy budgets approach critical levels. This flexibility ensures operators can quickly respond if the privacy settings are nearing their constraints.
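A policy-driven budget tracker with alerting might look like this sketch. For simplicity it uses basic sequential composition (summing $\varepsilon$ and $\delta$ across queries), which is looser than the RDP accountant mentioned earlier; the class name and the 80% alert threshold are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PrivacyAccountant:
    """Tracks cumulative (epsilon, delta) using basic sequential composition."""
    epsilon_budget: float
    delta_budget: float
    alert_fraction: float = 0.8  # hypothetical alerting policy
    spent_epsilon: float = 0.0
    spent_delta: float = 0.0
    alerts: List[str] = field(default_factory=list)

    def charge(self, epsilon: float, delta: float) -> bool:
        """Record one query; refuse it (return False) if it would exceed the budget."""
        if (self.spent_epsilon + epsilon > self.epsilon_budget
                or self.spent_delta + delta > self.delta_budget):
            self.alerts.append("refused: budget exhausted")
            return False
        self.spent_epsilon += epsilon
        self.spent_delta += delta
        if self.spent_epsilon >= self.alert_fraction * self.epsilon_budget:
            self.alerts.append(
                f"warning: {self.spent_epsilon:.2f} of {self.epsilon_budget} epsilon used")
        return True
```

The `alerts` list is the kind of record that logging and audit mechanisms could export as compliance reports.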

Overall, combining lightweight cryptography, decentralized fine-tuning, and differentially private inference yields a robust and adaptive security posture. Each layer operates independently yet synergistically: cryptography secures the data in transit, decentralized fine-tuning prevents any single entity from seeing all of the data, and DP-based inference safeguards against attempts to reverse-engineer sensitive content. By uniting these layers, SecureLLM provides a systematic approach to protecting LLM workflows across various sectors and regulatory environments.

3.4. Key Terminology and Definitions

- Lightweight cryptography: A suite of cryptographic techniques aiming for a reduced computational overhead and efficient key exchange, suitable for large-scale, real-time operations [36];

- Dynamic noise injection: The strategy of adaptively adjusting the noise parameters during training and inference to respect privacy budgets while maintaining performance [45];

- Adaptive scheduling algorithm: A mechanism that modulates the frequency and volume of gradient exchanges in decentralized learning systems based on real-time model performance and hardware constraints [40].

4. Discussion of the Implementation

4.1. Cryptographic Choices

AES-GCM can be used for gradient encryption because it balances speed and security. We selected AES-GCM because it is a widely recognized industry standard that benefits from hardware acceleration on most modern CPUs (e.g., the AES-NI instruction set on x86), allowing high throughput with relatively low overhead, which is particularly critical in large-scale LLM training. ECC-based key exchange protocols (e.g., over Curve25519) can be implemented to distribute symmetric keys among multiple training nodes or clients. Curve25519, with its compact key sizes and efficient computations, is especially well suited to frequent key rotation and ephemeral key usage in high-throughput pipelines. While other ECC or classical algorithms (e.g., RSA) could also be employed, we chose Curve25519 to balance speed, security, and the flexibility required in modern LLM ecosystems. This approach enables regular key rotation and prevents long-term key exposure. If computations over encrypted data are required, partially or leveled homomorphic encryption schemes could be explored, though they typically introduce higher computational overhead. In high-stakes settings where minimizing security risks matters more than computational efficiency, such as critical infrastructure or sensitive government applications, these more resource-intensive approaches could be justified by stricter regulatory demands or advanced compliance needs.

We specifically selected AES-GCM for symmetric encryption because of its well-established combination of high performance and robust security. AES-GCM benefits from hardware acceleration on most modern CPUs, enabling encryption and decryption fast enough for real-time operation even in large-scale LLM deployments. Compared with alternatives such as ChaCha20-Poly1305, AES-GCM offers comparable security and is generally more efficient on platforms with AES hardware support. We selected Curve25519 for asymmetric operations and key exchange primarily because of its efficient key generation and key agreement and its comparatively short keys (32 bytes). Curve25519 typically outperforms traditional algorithms such as RSA in speed and memory overhead, making it a practical choice for scenarios requiring frequent ephemeral key exchanges. The symmetric–asymmetric hybrid approach combines the strengths of both: symmetric encryption (AES-GCM) efficiently protects bulk data transfer with minimal latency, while asymmetric cryptography (Curve25519) securely establishes the symmetric session keys. The hybrid approach thus balances latency and security, providing robust data protection without applying asymmetric encryption to all of the data. Moreover, while emerging post-quantum cryptographic algorithms, such as CRYSTALS-Kyber for key exchange and CRYSTALS-Dilithium for digital signatures (standardized by NIST in 2024 as ML-KEM and ML-DSA, respectively), offer compelling future-proofing against quantum adversaries, their larger key and signature sizes and still-maturing implementations pose challenges for real-time encryption in large-scale distributed LLM training.
Nonetheless, the modular design of our cryptographic layer makes future integration of such post-quantum algorithms feasible, so SecureLLM deployments can migrate as quantum-resistant best practices mature.

A further layer of sophistication may be introduced by adopting ephemeral key strategies, wherein key pairs are generated for each training round and discarded after use [46]. This reduces the attack surface, since compromised long-term keys would not automatically grant access to future gradient updates or model states. In practical terms, ephemeral key rotation can be realized through established protocols (e.g., ECDH using Curve25519) while maintaining a separate certificate authority to confirm the validity of participants and manage revocations. Implementers can tune the rotation frequency based on performance constraints and organizational risk thresholds, tailoring security to specific compliance or throughput goals. However, frequent key rotation within highly distributed and heterogeneous networks incurs high operational overhead. The challenges include secure distribution, timely revocation, synchronization among geographically distributed nodes, and additional key-compromise threats arising from misconfigurations, inadequate access controls, and similar weaknesses. When SecureLLM is deployed on uncontrolled networks, countermeasures should include robust, automated, and hardened key management infrastructure: centralized or distributed key management services with strict access controls, threshold cryptographic schemes to reduce single points of failure, and continuous monitoring and anomaly detection for the timely detection of and response to compromised keys. Moreover, Hardware Security Modules (HSMs) or Trusted Platform Modules (TPMs) can securely manage and store cryptographic keys, further lowering the risk of compromise. Strictly defined recovery and revocation procedures, backed by automation and thorough logging, will be necessary to maintain operational security at scale and to meet regulatory compliance requirements.

Organizations that foresee large-scale or long-term LLM deployments may also consider future-proofing their cryptographic choices against quantum threats [47]. One potential route involves replacing classical ECC with post-quantum algorithms (e.g., CRYSTALS-Kyber for key exchange and CRYSTALS-Dilithium for signatures), although these algorithms typically have larger key sizes. In especially high-risk or long-lived data scenarios, where the operational lifetime of the model may outlast current cryptographic standards, post-quantum readiness may become integral to regulatory compliance and forward-looking security. In SecureLLM, this shift could be managed via a modular cryptographic layer that decouples the encryption algorithms from the rest of the pipeline, allowing “plug-and-play” updates as cryptographic standards evolve.

Finally, cryptographic logging techniques, often referred to as “secure audit logging”, may be integrated at this layer. Each cryptographic operation (e.g., key rotation or gradient encryption) can generate verifiable log entries stored on a tamper-evident ledger. Such logs can help demonstrate compliance with regulatory frameworks like the EU AI Act by showing precisely when and how data were protected [48]. While not all deployments require the same level of traceability, many regulated domains benefit from these logs, as they provide robust evidence of when key exchanges occur or when data undergo encryption, supporting both internal governance and external audit requirements.
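A tamper-evident log can be approximated with a simple hash chain, sketched below as one possible realization (not a specific ledger product): each entry's SHA-256 hash covers its own content and the previous entry's hash, so editing or reordering any entry invalidates every later hash. The entry fields are hypothetical. Truncating the tail is not detectable from the chain alone, which is why the latest hash would be anchored externally, for example on a ledger.

```python
import hashlib
import json
import time
from typing import Any, Dict, List

GENESIS = "0" * 64


def _digest(body: Dict[str, Any]) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_entry(log: List[Dict[str, Any]], event: str,
                 details: Dict[str, Any]) -> Dict[str, Any]:
    """Append an entry whose hash covers its content and the previous hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    body = {"ts": time.time(), "event": event, "details": details, "prev": prev_hash}
    entry = {**body, "hash": _digest(body)}
    log.append(entry)
    return entry


def verify_chain(log: List[Dict[str, Any]]) -> bool:
    """Recompute every hash; an edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if body["prev"] != prev_hash or _digest(body) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True
```

An auditor holding only the most recent hash can then verify that the log of key rotations and encryption events has not been altered after the fact.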

4.2. Distributed and Decentralized Training

In a multi-party setting, MPC-based aggregation can collect encrypted gradient updates from each participant. At a high level, each participant locally encrypts its gradient and sends it to an aggregator that operates under an MPC protocol. The aggregator computes the combined gradients without decrypting individual contributions. In principle, specialized libraries (e.g., MP-SPDZ) or secure enclaves can be employed to implement this aggregator securely. For large LLM architectures, top-k sparsification or 8-bit quantization can be applied before encryption to reduce the communication overhead. We specifically chose top-k sparsification because it typically offers a strong balance between gradient fidelity and bandwidth savings. Alternatively, 8-bit quantization can reduce the communication load while preserving the model’s performance, though it may be more sensitive to certain architectures.

When deploying MPC-based aggregation in practice, system designers should carefully evaluate the communication overhead and latency. In many real-world scenarios, multiple sites contribute gradients at varying rates, making their synchronization non-trivial [49]. To alleviate congestion, techniques such as dynamic batching of updates and adaptive scheduling can be used. For instance, participants might transmit updates only when their gradient norm exceeds a specific threshold, thus minimizing unnecessary cryptographic transmissions. Additionally, several groups have begun to research trust frameworks that combine multi-party computation and threshold cryptography [50]. In such a model, the decryption keys are shared among multiple secure computation environments, with a majority required for decryption. No single environment can decrypt the data independently; only when a threshold number of participants agrees can the global model state be partially decrypted for verification. This approach offers an extra layer of assurance if the aggregator resides in an untrusted or semi-trusted domain.
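The norm-threshold rule for sending updates can be sketched as a small local trigger. Accumulating skipped gradients locally, so that no information is discarded, is an assumption of this sketch, as are the class name and the threshold value.

```python
import math
from typing import List, Optional


class ThresholdTrigger:
    """Accumulate local gradients; transmit only when the accumulated norm is large."""

    def __init__(self, dim: int, threshold: float) -> None:
        self.threshold = threshold  # hypothetical tuning parameter
        self.buffer = [0.0] * dim

    def step(self, grad: List[float]) -> Optional[List[float]]:
        self.buffer = [b + g for b, g in zip(self.buffer, grad)]
        norm = math.sqrt(sum(b * b for b in self.buffer))
        if norm < self.threshold:
            return None  # skip this round: no encryption or transmission needed
        update, self.buffer = self.buffer, [0.0] * len(self.buffer)
        return update    # hand off to compression, encryption, and MPC
```

Small updates are thus batched into fewer, larger transmissions, trading some staleness for fewer cryptographic operations.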

Integration with container orchestration platforms (e.g., Kubernetes) and infrastructure-as-code (IaC) tools can also streamline the large-scale deployment of SecureLLM. Each participant runs a secure container instance housing the cryptographic modules and the local data, while the aggregator node(s) operate(s) the MPC protocols. Configuration files can define secure networking policies—such as strict mutual TLS—to prevent eavesdropping on gradient transmissions.

4.3. Differentially Private Inference Setup

During inference, a small amount of calibrated noise can be added to the query embeddings and outputs to maintain privacy guarantees. The exact level of noise depends on the desired $(\varepsilon, \delta)$-DP parameters. For instance, in higher-risk domains (e.g., healthcare), more conservative (smaller) $\varepsilon$ values, often in the range of 1 to 2, may be chosen to strongly limit membership inference attacks, whereas a more lenient value of up to 5 or 8 might be acceptable for less sensitive applications such as a public Q&A service. These ranges reflect a trade-off between utility and formal privacy assurances; further refinements can be guided by regulatory demands or empirical risk analyses. Monitoring the cumulative privacy loss across multiple queries is essential to prevent adversaries from exploiting repeated probing. To critically assess and justify the selection of $\varepsilon$ and $\delta$, future implementations will incorporate a rigorous sensitivity analysis. This analysis will quantify how varying levels of noise injection, driven by different $\varepsilon$ and $\delta$ settings, affect model utility metrics such as accuracy, perplexity, and convergence time across diverse datasets and scenarios. Furthermore, theoretical bounds based on advanced composition techniques [51] will be established to precisely characterize the cumulative privacy loss under realistic inference workloads. We also plan to investigate robustness against different adversarial models, including powerful membership inference attacks that leverage multiple query probing.
By systematically examining how utility loss trades off against stronger privacy guarantees, and by measuring the robustness afforded by the DP layer, we aim to guide parameter selection for various threat models and operational constraints, enabling safer and more transparent deployment decisions.
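Why the choice of composition bound matters for cumulative inference budgets can be seen by comparing basic composition ($k$ queries cost $k\varepsilon$) with the advanced composition theorem of Dwork, Rothblum, and Vadhan, which gives $\varepsilon' = \sqrt{2k\ln(1/\delta')}\,\varepsilon + k\varepsilon(e^{\varepsilon} - 1)$ at the price of an extra $\delta'$. The parameter values in the usage note are purely illustrative.

```python
import math
from typing import Tuple


def basic_composition(eps: float, delta: float, k: int) -> Tuple[float, float]:
    """k adaptive (eps, delta)-DP queries are (k*eps, k*delta)-DP."""
    return k * eps, k * delta


def advanced_composition(eps: float, delta: float, k: int,
                         delta_prime: float) -> Tuple[float, float]:
    """Advanced composition theorem (Dwork, Rothblum, Vadhan)."""
    total_eps = (math.sqrt(2 * k * math.log(1 / delta_prime)) * eps
                 + k * eps * (math.exp(eps) - 1))
    return total_eps, k * delta + delta_prime
```

For 100 queries at $\varepsilon = 0.1$, basic composition gives a total of 10, whereas advanced composition with $\delta' = 10^{-5}$ gives roughly 5.85; accountants based on Rényi DP are typically tighter still for Gaussian noise.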

Practically, differentially private inference can be realized by modifying the model’s final output layer to add noise sampled from a Laplacian or Gaussian distribution [52]. The parameters for these distributions are derived from the bounds that the deploying organization is willing to accept. A more granular approach might assign different privacy budgets per user session, ensuring that users with especially sensitive queries (e.g., medical diagnoses) receive higher levels of protection.

In some cases, organizations may opt for “privacy on demand”, where the noise levels increase for specific query types flagged as high-risk (e.g., queries involving personal identifiers). This selective noise injection can preserve the model’s accuracy for general queries while bolstering the protection in domains requiring stricter regulatory scrutiny [53]. Such an approach necessitates robust monitoring to track the overall privacy consumption, often implemented via a “privacy accountant” component within the inference pipeline.
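A "privacy on demand" policy can be sketched as a query classifier feeding a per-tier privacy parameter. The regular expressions, the tier names, and the $\varepsilon$ values below are hypothetical placeholders; a smaller per-query $\varepsilon$ means more noise for flagged queries, and the session check reflects per-session budgets.

```python
import re
from typing import Dict

# Hypothetical patterns that flag a query as high-risk (personal identifiers).
HIGH_RISK_PATTERNS = [
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),                 # US SSN-like number
    re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+"),               # e-mail address
    re.compile(r"\b(diagnos\w*|prescription)\b", re.IGNORECASE),
]

# Hypothetical per-query epsilon by tier: smaller epsilon means more noise.
EPSILON_BY_TIER: Dict[str, float] = {"general": 0.5, "high_risk": 0.1}


def classify_query(text: str) -> str:
    return "high_risk" if any(p.search(text) for p in HIGH_RISK_PATTERNS) else "general"


def epsilon_for_query(text: str, session_remaining: float) -> float:
    """Pick the per-query epsilon, never exceeding what the session has left."""
    eps = EPSILON_BY_TIER[classify_query(text)]
    if eps > session_remaining:
        raise PermissionError("session privacy budget exhausted")
    return eps
```

A pattern list like this is only a first filter; production systems would likely pair it with a trained PII detector, and every value returned would be charged to the privacy accountant.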

If an organization anticipates high query volumes, caching mechanisms or semantic search layers can reduce repeated computations while still applying differential privacy. Specifically, queries that map to similar embedding vectors can be grouped, with noise introduced at the group level. This lowers the computational overhead but demands a careful design to avoid inadvertently increasing the membership inference risks for users who share similar query patterns.

4.4. Practical Considerations and Infrastructure

Implementing SecureLLM may require the following:

- GPU/TPU support with cryptographic libraries optimized for large-scale matrix operations;

- A network bandwidth sufficient to handle encrypted gradient transmissions;

- Resilient key management frameworks capable of rotating keys, revoking compromised ones, and securely storing them;

- Compliance checks to verify that the cryptographic and DP mechanisms meet legal requirements (e.g., the EU AI Act for high-risk systems).

These elements collectively form the backbone of a production-grade SecureLLM deployment, ensuring privacy-preserving fine-tuning and inference in a range of sectors.

When selecting hardware, organizations should account for the memory overhead introduced by encryption and noise injection, particularly in multi-billion-parameter models [54]. In some setups, specialized hardware accelerators—such as FPGA-based cryptographic engines—can offload encryption tasks, freeing up the main GPUs or TPUs for the core model operations.

On the software side, orchestrating distributed training often involves frameworks like PyTorch (version 2.2.2) or TensorFlow. Wrappers or plugins may be required to intercept gradient tensors, apply compression, encrypt them, and then pass them to the MPC protocol. Ensuring these steps integrate seamlessly—without race conditions or data integrity issues—is a central engineering challenge.

Finally, compliance validation may include automated log scanning to confirm the correct application of cryptographic operations and DP noise injection [55]. Auditors or compliance officers can request detailed reports on key generation, storage, and rotation procedures, as well as the potential resource expenditure for privacy-related tasks. As a result, SecureLLM’s design must incorporate robust security controls and produce auditable documentation that demonstrates its adherence to the regulatory requirements.

While this paper centers on conceptual synergy, real-world infrastructures handling billion-parameter LLMs necessitate the meticulous allocation of GPU/TPU resources for cryptographic workloads, a sufficient network bandwidth for encrypted gradient exchange, and a dynamic privacy accountant to track the DP usage at scale. By limiting the framework to lightweight encryption (rather than fully homomorphic encryption) and partial DP at inference, we aim to keep the overhead manageable. Nevertheless, organizations requiring near-comprehensive data confidentiality or operating in highly regulated verticals may investigate partial or leveled homomorphic encryption, despite its higher overhead, to achieve stricter security guarantees. Detailed analyses of the overhead and costs—especially in multi-organizational settings—are left for future work, focusing on hardware enclaves, container orchestration, and advanced MPC optimizations. Specifically, future benchmarking efforts will systematically investigate how SecureLLM’s cryptographic, decentralized fine-tuning, and differential privacy layers scale with increased model size and complexity. We intend to quantify the overhead across a range of model scales—from smaller transformer architectures to multi-billion-parameter LLMs—to clearly map the computational and memory requirements introduced by SecureLLM. Furthermore, direct comparisons with the baseline models without privacy enhancements and alternative integrated security frameworks (e.g., federated learning combined with homomorphic encryption or secure enclaves) will be conducted. Key metrics will include the convergence time, throughput degradation, memory utilization, cryptographic latency, and inference-time performance. 
By conducting these comparative benchmarks, we aim to give implementers concrete guidance on the practical trade-offs between security guarantees and computational efficiency, allowing them to make well-informed decisions for real deployments.

5. Potential Applications and Future Work

5.1. Use Cases and High-Risk Domains

- Healthcare: Patient records can be encrypted locally and integrated into an LLM for medical diagnosis or recommendation systems, reducing the risk of data leakage. Differentially private inference helps ensure that patients’ details cannot be extracted through repeated queries.

- Finance: Banks and financial institutions might leverage SecureLLM to analyze large volumes of transaction data without revealing sensitive customer information. MPC-based approaches enable multiple branches or institutions to collaboratively train on encrypted data.

- Legal and compliance: Law firms or government agencies can benefit from large-scale language models while mitigating confidentiality risks. Adaptive scheduling algorithms for decentralized training can efficiently manage the workloads across agencies.

Table 2 presents potential high-risk use cases and relevant SecureLLM features. Beyond these primary examples, SecureLLM’s layered approach can be adapted to diverse industries handling sensitive intellectual property. For instance, biotechnology companies working with genome data can use encryption and differential privacy to protect genetic data or clinical trial results from reverse engineering [56], while defense contractors may deploy decentralized fine-tuning to incorporate external partners without exposing critical documents or sensitive information. SecureLLM can also facilitate flexible business models in marketplace settings, enabling various parties to share proprietary datasets under strong confidentiality controls [57].

Table 2.

Potential high-risk use cases and relevant SecureLLM features.

Where the data locality requirements are stringent, incorporating hardware enclaves or secure coprocessors can further mitigate the risks of data leakage. These enclaves may be particularly relevant in regulated environments where local regulations dictate that sensitive data must remain within specific geographical jurisdictions [58].

Recent studies [59,60] have demonstrated the potential of LLMs for non-traditional, security-oriented applications, such as detecting forged financial transactions and identifying smart contract vulnerabilities. By incorporating such capabilities into SecureLLM, where data confidentiality is preserved through robust cryptographic and privacy-preserving techniques, the framework could be extended to secure decentralized finance applications and blockchain networks. This extension would broaden SecureLLM’s applicability and reinforce its potential as a comprehensive security solution across a variety of high-risk domains.

5.2. Alignment with Emerging Regulations

Because SecureLLM integrates cryptographic controls with differential privacy, it naturally aligns with the stricter requirements set by frameworks such as the EU AI Act. For “high-risk” applications affecting employment, healthcare, or public services, encryption and privacy guarantees can serve as proof of due diligence and compliance with data protection mandates. Future refinements may include formal audits, transparent noise parameter logs, and real-time privacy budget monitoring.

Another regulatory shift gaining traction is the EU Cyber Resilience Act, which places strong emphasis on software supply chain security. SecureLLM’s cryptographic safeguards and MPC-based training protocols can generate provable evidence of secure data processing throughout the AI pipeline, helping organizations demonstrate robust compliance during audits [61].

Additionally, SecureLLM is designed to support compliance with regulations such as the GDPR, HIPAA, and the CCPA. Technical measures such as data minimization, strong (e.g., 256-bit) AES-GCM encryption, and effective key management support this compliance. At the organizational level, audit trails and continuous monitoring mechanisms provide transparency and traceability. Together, these measures help uphold stringent data protection and accountability requirements.

As regulators refine the guidance for AI in critical infrastructure—e.g., energy or transportation—further research into secure API endpoints, traceable model outputs, and real-time threat detection may be necessary to keep SecureLLM aligned with emerging standards.

5.3. Next Steps for Large-Scale Testing

Benchmarking: While this paper lays out conceptual strategies, future work should systematically benchmark SecureLLM against baseline models, measuring factors such as overhead, performance, and resilience to advanced attacks. Although our preliminary experiments with DistilGPT-2 on a limited subset of the WikiText-2 dataset demonstrate feasibility and provide initial insights, we recognize that these results may not fully represent the performance, overhead, or adversarial robustness of larger, production-grade LLM deployments. To capture these aspects more accurately, future work will scale SecureLLM evaluations to larger models, such as GPT-3 or GPT-4 variants, trained on extensive datasets across diverse domains. We plan to systematically measure critical performance metrics such as convergence speed, communication and cryptographic overhead, and memory consumption in these scaled-up scenarios. Furthermore, comprehensive adversarial testing against state-of-the-art membership inference, model inversion, and prompt engineering attacks will be conducted to experimentally validate the framework’s resilience at scale. Such broader experimental testing will help ensure that the findings on privacy protection, security overhead, and model accuracy trade-offs are robust and transfer convincingly to real-world deployment settings.

Optimizing cryptography: Investigations into lightweight or partially homomorphic encryption could potentially reduce the computational load, particularly for extremely large LLMs.
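Paillier encryption is one example of a partially homomorphic scheme. Multiplying two Paillier ciphertexts yields an encryption of the sum of their plaintexts, so an aggregator could add encrypted gradient updates without seeing any individual update. The toy sketch below rests on several assumptions:

- The primes are tiny and offer no security.
- Gradients are quantized with an assumed fixed-point scale of 1000.
- The decryption key is held by a party other than the aggregator.

It illustrates the idea only and is not SecureLLM's implementation.

```python
import math
import random
from typing import List, Tuple

# Toy Paillier cryptosystem (additively homomorphic). These primes are far too
# small to be secure; real use needs >= 2048-bit moduli and a vetted library.


def keygen(p: int, q: int) -> Tuple[Tuple[int, int], Tuple[int, int, int]]:
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    g = n + 1                                            # standard generator choice
    n2 = n * n
    # mu = L(g^lam mod n^2)^(-1) mod n, with L(x) = (x - 1) // n
    mu = pow((pow(g, lam, n2) - 1) // n, -1, n)
    return (n, g), (lam, mu, n)


def encrypt(pub: Tuple[int, int], m: int, rng: random.Random) -> int:
    n, g = pub
    n2 = n * n
    r = rng.randrange(1, n)
    while math.gcd(r, n) != 1:  # r must be invertible modulo n
        r = rng.randrange(1, n)
    return (pow(g, m, n2) * pow(r, n, n2)) % n2


def decrypt(priv: Tuple[int, int, int], c: int) -> int:
    lam, mu, n = priv
    return ((pow(c, lam, n * n) - 1) // n * mu) % n


def add_encrypted(pub: Tuple[int, int], c1: int, c2: int) -> int:
    """Ciphertext product = encryption of the plaintext sum (mod n)."""
    n, _ = pub
    return (c1 * c2) % (n * n)


SCALE = 1000  # assumed fixed-point precision for gradient values


def encode(x: float, n: int) -> int:
    return round(x * SCALE) % n  # negative values wrap around modulo n


def decode(m: int, n: int) -> float:
    return (m - n if m > n // 2 else m) / SCALE


def aggregate(client_grads: List[List[float]], p: int = 1009, q: int = 1013,
              seed: int = 0) -> List[float]:
    """Clients encrypt their gradients; the aggregator only multiplies ciphertexts."""
    rng = random.Random(seed)  # illustration only; use a CSPRNG in practice
    pub, priv = keygen(p, q)
    n = pub[0]
    encrypted = [[encrypt(pub, encode(v, n), rng) for v in g] for g in client_grads]
    acc = encrypted[0]
    for row in encrypted[1:]:
        acc = [add_encrypted(pub, a, b) for a, b in zip(acc, row)]
    # Decryption happens at the (assumed separate) key holder, not the aggregator.
    return [decode(decrypt(priv, c), n) for c in acc]
```

For example, `aggregate([[0.12, -0.5], [0.3, 0.25], [-0.07, 0.1]])` recovers the coordinate-wise sums 0.35 and -0.15. No individual update is ever decrypted.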

Adaptive privacy settings: Automated toolkits that adjust the differential privacy parameters in real time could achieve a dynamic trade-off between accuracy and protection.
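The paper does not specify how such a toolkit would decide on adjustments. The sketch below shows one hypothetical feedback rule applied between evaluation rounds. If a measured membership inference accuracy exceeds a target, the noise multiplier grows. If privacy is acceptable but perplexity is too high, the noise is relaxed. All thresholds and the adjustment factor are assumptions. Any change to the noise multiplier would also have to be recorded by the privacy accountant, because the total ε depends on the noise used at every step.

```python
from dataclasses import dataclass


@dataclass
class AdaptiveNoiseController:
    """Hypothetical rule for tuning a DP noise multiplier between rounds."""
    sigma: float = 0.5            # current noise multiplier (0.5 as in Section 5.5)
    sigma_min: float = 0.3        # assumed lower bound
    sigma_max: float = 2.0        # assumed upper bound
    target_mia_acc: float = 0.55  # assumed tolerable attack accuracy
    max_perplexity: float = 50.0  # assumed tolerable utility loss
    step: float = 1.2             # assumed multiplicative adjustment factor

    def update(self, mia_acc: float, perplexity: float) -> float:
        if mia_acc > self.target_mia_acc:
            # Leakage too high: add noise, accepting some loss of utility.
            self.sigma = min(self.sigma * self.step, self.sigma_max)
        elif perplexity > self.max_perplexity:
            # Privacy target met but utility too low: relax the noise slightly.
            self.sigma = max(self.sigma / self.step, self.sigma_min)
        return self.sigma
```

With the Section 5.5 measurements (60.8% attack accuracy, perplexity 43.1), this rule would raise the noise multiplier from 0.5 to 0.6 under the assumed 55% target.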

More broadly, deploying SecureLLM in realistic cloud settings—complete with distributed resources, real-time user queries, and variable network conditions—would shed light on its real-world scalability. For example, container orchestration platforms like Kubernetes might be used to instantiate and tear down secure enclaves on demand, meeting the needs of burst workloads [62].

In tandem with cloud-scale testing, “security-as-code” approaches can formalize the best practices for continuous integration (CI) and continuous deployment (CD). These guidelines could prescribe routine cryptographic performance tests, periodic noise calibration, and multi-party key rotation simulations, helping SecureLLM maintain robust security even as new threats emerge [63].
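In such a pipeline, some security guidelines could be encoded as ordinary tests that fail the build when a deployment drifts out of policy. The sketch below is hypothetical. The policy keys, threshold values, and config format are illustrative assumptions, and real thresholds would come from an organization's own risk assessment.

```python
from typing import Dict, List

# Hypothetical policy thresholds (illustrative values only).
POLICY: Dict[str, float] = {
    "min_noise_multiplier": 0.5,
    "max_key_age_days": 90,
    "min_aes_key_bits": 256,
}


def check_deployment(config: Dict[str, float],
                     policy: Dict[str, float] = POLICY) -> List[str]:
    """Return policy violations; an empty list means the check passes."""
    violations = []
    if config["noise_multiplier"] < policy["min_noise_multiplier"]:
        violations.append("DP noise multiplier below policy minimum")
    if config["key_age_days"] > policy["max_key_age_days"]:
        violations.append("encryption key overdue for rotation")
    if config["aes_key_bits"] < policy["min_aes_key_bits"]:
        violations.append("AES key shorter than required")
    return violations


def test_current_deployment_meets_policy() -> None:
    # A test runner such as pytest would collect this function in CI.
    deployed = {"noise_multiplier": 0.5, "key_age_days": 30, "aes_key_bits": 256}
    assert check_deployment(deployed) == []
```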

Finally, domain-specific user studies could be instructive. In healthcare, for instance, experts could gauge whether differential privacy hampers the interpretability or accuracy of clinical recommendations. Parallel evaluations in finance or legal domains would clarify how users perceive the security–utility trade-offs.

5.4. Compliance Framework Extensions

As AI regulations expand, reliable record-keeping and provable security claims become increasingly important. SecureLLM’s modules could be extended to produce cryptographic proofs of compliance (e.g., zero-knowledge proofs) or to automatically generate official reports for regulatory bodies. Additional extensions may involve hardware enclaves, advanced MPC protocols, and mechanisms for cross-border data transfer compliance.

Integrating zero-knowledge proofs (ZKPs) would let organizations attest to meeting specific security thresholds, e.g., minimum noise levels or maximum key rotation intervals, without revealing sensitive operational details [64]. Storing these proofs on chain or in a tamper-evident ledger would allow third parties to verify compliance without direct access to raw logs or proprietary model weights.
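The tamper-evident ledger part of this idea can be sketched with a simple hash chain. Each entry commits to the previous entry's SHA-256 digest, so altering any stored record invalidates every later link. This covers tamper evidence only and is not a zero-knowledge proof. The record fields and function names are hypothetical. Because anyone who can rewrite the whole chain could also recompute the hashes, the latest digest would need to be published or anchored externally.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, record: Dict) -> str:
    """Hash the previous digest together with a canonical JSON encoding of the record."""
    payload = prev_hash + json.dumps(record, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append(log: List[Dict], record: Dict) -> None:
    """Append a compliance record linked to the current head of the chain."""
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "prev": prev, "hash": _digest(prev, record)})


def verify(log: List[Dict]) -> bool:
    """Recompute every link; any edited, removed, or reordered entry breaks the chain."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev or entry["hash"] != _digest(prev, entry["record"]):
            return False
        prev = entry["hash"]
    return True
```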

As AI adoption becomes increasingly international, cross-border data transfers become a pressing issue. SecureLLM’s decentralized design helps ensure that data never leave their jurisdiction in plaintext, which can simplify compliance with diverse national data protection mandates. Encrypted inference and partial model updates can also be distributed globally without exposing raw training examples [65].

Finally, future developments in MPC could extend beyond gradient aggregation to full “privacy-preserving inference”, in which entire query sequences are processed under encryption. If SecureLLM incorporated zero-knowledge proofs of correct inference, confidence in the model’s security posture would increase further. Such capabilities would support responsible innovation amid mounting regulatory pressure and heightened public concern over data misuse.
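The gradient-aggregation building block that such MPC extensions start from can be sketched with additive secret sharing. Each client splits its value into random shares, one per computing party. Each party sums only the shares it receives, and only the combination of all party totals reveals the aggregate. The sketch assumes honest-but-curious, non-colluding parties, an assumed prime field of 2^61 - 1, and a fixed-point scale of 10^6. It handles one gradient coordinate; vectors would be processed coordinate-wise.

```python
import secrets
from typing import List

PRIME = 2**61 - 1  # assumed field modulus (a Mersenne prime)
SCALE = 10**6      # assumed fixed-point precision for real-valued gradients


def share(value: float, n_parties: int) -> List[int]:
    """Split one fixed-point value into n additive shares that sum to it mod PRIME."""
    if n_parties < 2:
        raise ValueError("need at least two parties")
    secret = round(value * SCALE) % PRIME
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((secret - sum(shares)) % PRIME)
    return shares


def reconstruct(shares: List[int]) -> float:
    total = sum(shares) % PRIME
    if total > PRIME // 2:  # map the field element back to a signed value
        total -= PRIME
    return total / SCALE


def secure_sum(client_values: List[float], n_parties: int) -> float:
    """Aggregate one gradient coordinate without any party seeing a client's value."""
    # Each client splits its value and sends share p to computing party p.
    all_shares = [share(v, n_parties) for v in client_values]
    # Each party sums only its own shares; any single share is uniformly random.
    party_totals = [sum(s[p] for s in all_shares) % PRIME for p in range(n_parties)]
    # Combining all party totals reveals only the aggregate.
    return reconstruct(party_totals)
```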

5.5. Our Minimal Empirical Demonstration

Although this paper is primarily conceptual, we conducted an updated experiment to demonstrate the feasibility of SecureLLM’s differential privacy (DP) module and its impact on both training performance and resistance to membership inference. In this trial, we fine-tuned a smaller but more DP-friendly model (DistilGPT-2) on a 1000-sample subset of the WikiText-2 dataset for two epochs. We compared a baseline (non-private) model against a DP-enabled variant trained with Opacus using a moderate noise multiplier of 0.5. Our goal was to observe how DP affected training overhead, model utility, and vulnerability to membership inference attacks.
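The DP mechanism used in this trial follows the DP-SGD pattern that Opacus implements. Each per-sample gradient is clipped to an L2 bound. The clipped gradients are then summed, and Gaussian noise with standard deviation equal to the noise multiplier times the clipping bound is added before averaging. In Opacus, these two quantities correspond to the `noise_multiplier` and `max_grad_norm` arguments of `PrivacyEngine.make_private`. The clipping bound is not stated above, so any value used with this sketch is an assumption. The plain-Python version below shows a single gradient computation, not the privacy accounting.

```python
import math
import random
from typing import List

Vector = List[float]


def l2_norm(v: Vector) -> float:
    return math.sqrt(sum(x * x for x in v))


def clip(v: Vector, max_norm: float) -> Vector:
    """Scale a per-sample gradient so that its L2 norm is at most max_norm."""
    norm = l2_norm(v)
    factor = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [x * factor for x in v]


def dp_sgd_gradient(per_sample_grads: List[Vector], max_norm: float,
                    noise_multiplier: float, rng: random.Random) -> Vector:
    """Noisy average gradient in the DP-SGD style: clip, sum, add Gaussian noise."""
    clipped = [clip(g, max_norm) for g in per_sample_grads]
    dim = len(clipped[0])
    summed = [sum(g[i] for g in clipped) for i in range(dim)]
    std = noise_multiplier * max_norm  # noise scaled to each sample's max influence
    noisy = [s + rng.gauss(0.0, std) for s in summed]
    return [x / len(per_sample_grads) for x in noisy]
```

A call such as `dp_sgd_gradient(grads, max_norm=1.0, noise_multiplier=0.5, rng=random.Random(0))` mirrors the noise level used in the trial, with an assumed clipping bound of 1.0. Materializing one gradient per sample is also what drives the memory overhead observed under DP.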

Key Observations:

- Training time and memory overhead: The baseline model required 84.2 s to complete two epochs, whereas the DP variant took 124.5 s, a 47.9% increase in total training time. Memory usage increased by about 25% under DP because of per-sample gradient tracking.

- Utility (perplexity): The baseline achieved a test perplexity of 39.8, while the DP-enabled model’s perplexity rose slightly to 43.1. This indicates a modest reduction in the model’s utility when privacy is enforced.

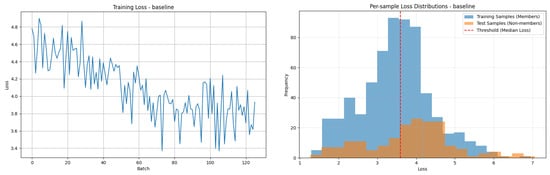

- Membership inference risk: The right-hand side of Figure 2 shows the distribution of per-sample losses for the training (member) and test (non-member) samples. A simple threshold-based classifier achieved 66.3% membership inference accuracy against the baseline model. With DP enabled, this accuracy dropped to 60.8%, suggesting that injecting noise weakens an attacker’s ability to distinguish training data from non-training data.

Figure 2. (Left) Baseline training loss across two epochs, showing gradual convergence on the 1000-sample WikiText-2 subset. (Right) Per-sample loss distributions for training (members) vs. testing (non-members). The red dashed line marks the median loss threshold used in the membership inference attack.

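The threshold attack shown in Figure 2 exploits the fact that models tend to assign lower loss to their training samples. The sketch below predicts "member" whenever a sample's loss falls below the median loss. It assumes the median is taken over the pooled member and non-member losses, since the text does not say which set defines it. On balanced member and non-member sets, an accuracy near 0.5 means the attacker does no better than chance.

```python
from statistics import median
from typing import List


def threshold_mia_accuracy(member_losses: List[float],
                           nonmember_losses: List[float]) -> float:
    """Loss-threshold membership inference: predict 'member' if loss < median loss."""
    threshold = median(member_losses + nonmember_losses)  # assumed pooled median
    correct = sum(1 for loss in member_losses if loss < threshold)
    correct += sum(1 for loss in nonmember_losses if loss >= threshold)
    return correct / (len(member_losses) + len(nonmember_losses))
```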

Although the perplexity values in this updated experiment are far lower than the extremely high values reported in our earlier proof-of-concept (where the perplexities exceeded 20k), it is important to note that we are still using a limited dataset and a small number of epochs. These settings do not reflect the performance of a fully converged, large-scale language model. Nonetheless, they confirm that applying differential privacy is technically feasible and can mitigate membership inference risks at the cost of an increased training overhead and a modest degradation in utility.

Going forward, we plan a series of larger-scale evaluations of SecureLLM in high-risk domains (e.g., healthcare, finance), where data confidentiality is paramount. We will track metrics such as training time, model accuracy, privacy budget consumption, and vulnerability to more advanced membership inference methods or gradient manipulation attacks. We also intend to benchmark SecureLLM against alternative security schemes (e.g., MPC-based methods like CrypTen) to quantify the trade-offs in latency, memory usage, and final model utility as a function of model size and security parameters.

Short Textual Illustrations

To illustrate the qualitative differences between the outputs of the baseline and differentially private (DP) language models, we ran prompt experiments, summarized in Table 3. These examples show how DP affects text generation in practice, beyond the quantitative metrics reported in our membership inference experiments.

Table 3.

Comparison of baseline and DP-enabled language model outputs.

Key observations from textual illustrations:

- Differences in coherence: The DP-enabled outputs demonstrate reduced syntactic coherence compared to that of the baseline outputs. For example, in the prompt “The future of secure AI is”, the baseline produces more structurally coherent text about complex systems, while the DP-enabled output contains disjointed phrases and grammatical inconsistencies.

- Semantic integrity: While the baseline outputs maintain a logical flow, the DP-enabled outputs exhibit disrupted semantics. In the “Differential privacy” example, the DP output contains grammatical errors like “can be used the protect” and fragmented phrases such as “many people it to the informed about their that”.

- Topic relevance: Despite the reduced coherence, both versions attempt to address the given topic. The baseline provides more concrete details (e.g., “protect users from unauthorized traffic”), while the DP-enabled version offers more generalized statements containing privacy-related terminology, albeit with reduced clarity.

- Privacy–utility trade-off: These examples illustrate the cost of enhanced privacy protection. In these samples, the noise introduced by differential privacy mechanisms preserves topic relevance but sacrifices grammatical correctness and semantic coherence.

These textual illustrations complement our quantitative findings by showing how differential privacy concretely affects language generation. They also highlight the trade-off between privacy protection and output quality that practitioners must weigh when deploying secure LLMs.

Performance and Scalability Analysis

Our preliminary evaluations show that SecureLLM introduces a noticeable overhead: in our updated experiment, training time increased by 47.9%. In privacy-sensitive settings, this trade-off may be acceptable given the enhanced privacy protections. Future work will extend these experiments to larger transformer architectures, more extensive datasets, and longer training schedules. Such research will help identify configurations of the cryptography, DP parameters, and decentralized fine-tuning that best balance performance and security in real-world deployments.
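The overhead figure follows directly from the wall-clock times reported above. Applying the same relative-change formula to the reported perplexities gives the corresponding utility cost:

```latex
\Delta_{\text{time}} = \frac{T_{\text{DP}} - T_{\text{base}}}{T_{\text{base}}}
  = \frac{124.5\,\text{s} - 84.2\,\text{s}}{84.2\,\text{s}} = \frac{40.3}{84.2} \approx 0.479,
\qquad
\Delta_{\text{PPL}} = \frac{\mathrm{PPL}_{\text{DP}} - \mathrm{PPL}_{\text{base}}}{\mathrm{PPL}_{\text{base}}}
  = \frac{43.1 - 39.8}{39.8} = \frac{3.3}{39.8} \approx 0.083
```

In this small-scale setting, DP training therefore took about 48% longer, while perplexity rose by about 8%.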

5.6. Detailed Analysis of the Experimental Results

In our minimal empirical demonstration (Section 5.5), we observed both privacy gains and performance trade-offs when introducing differential privacy (DP). Below, we elaborate on those findings and compare them to the related literature.

Training overhead and utility:

- Overhead: We noted a roughly 47.9% increase in the training time for the DP-enabled DistilGPT-2 model (from 84.2 s to 124.5 s). This overhead is consistent with other DP implementations on transformers [8,43], where per-sample gradient clipping and noise addition may require additional memory and computational steps. Future scaling to larger models is expected to exhibit a similar or greater overhead, although system-level optimizations (e.g., microbatching, gradient compression) may mitigate these costs [39].

- Model utility: The DP-enabled model showed a modest increase in test perplexity relative to the baseline (43.1 vs. 39.8), i.e., a modest loss of utility. Although the degradation is relatively small, our results align with prior research on DP [8,24], where mild noise levels (with a noise multiplier ∼0.5–) often preserve an acceptable accuracy. Stricter privacy budgets (lower ε) or more training epochs might increase the perplexity further, underscoring the importance of careful hyperparameter tuning.
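For reference, the sketch below shows how perplexity relates to the training loss. It assumes the standard definition: the exponential of the mean per-token negative log-likelihood, in nats. The tokenization and evaluation stride behind the reported numbers are not stated, and the function name is illustrative.

```python
import math
from typing import List


def perplexity(token_nlls: List[float]) -> float:
    """Perplexity = exp(mean per-token negative log-likelihood, in nats)."""
    if not token_nlls:
        raise ValueError("need at least one token")
    return math.exp(sum(token_nlls) / len(token_nlls))
```

Inverting this definition, the reported perplexities of 39.8 and 43.1 correspond to mean token losses of about ln 39.8 ≈ 3.68 and ln 43.1 ≈ 3.76 nats. DP therefore adds roughly 0.08 nats of loss per token.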

Membership inference resistance:

- Reduction in attack accuracy: As shown on the right-hand side of Figure 2, the membership inference accuracy decreased from 66.3% (baseline) to 60.8% (DP). Although a 5.5-percentage-point drop may seem modest, it indicates that DP blunts an adversary’s ability to distinguish training from non-training samples. This gap is in line with the findings of membership inference studies that injected Gaussian or Laplacian noise at training or inference time [13,29].

- Potential for further improvement: Additional techniques—like adaptive noise schedules or combined inference-time noise injection—could further reduce membership inference risks [41,45]. Integrating an MPC-based approach to inference or restricting repeated queries (budget tracking) might yield even stronger resistance.
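Budget tracking of this kind can be sketched under one assumption: each answered query is produced by an ε-DP mechanism. Under basic sequential composition, k answers then cost the sum of their ε values, so queries are refused once a budget is exhausted. The class and method names are hypothetical. The budget is keyed per client only for illustration. Colluding clients can pool their answers, so a conservative deployment would track one global budget. Tighter accountants (advanced composition, Rényi DP) would permit more queries for the same guarantee.

```python
from collections import defaultdict
from typing import Dict


class PrivacyBudgetTracker:
    """Hypothetical epsilon accounting under basic sequential composition."""

    def __init__(self, total_epsilon: float):
        self.total_epsilon = total_epsilon
        self.spent: Dict[str, float] = defaultdict(float)

    def try_spend(self, client_id: str, epsilon: float) -> bool:
        """Charge epsilon for one query if it fits in the remaining budget."""
        if self.spent[client_id] + epsilon > self.total_epsilon + 1e-12:
            return False  # refuse: answering would exceed the budget
        self.spent[client_id] += epsilon
        return True

    def remaining(self, client_id: str) -> float:
        return max(0.0, self.total_epsilon - self.spent[client_id])
```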

Comparison to larger-scale benchmarks: Recent works using GPT-2 or GPT-3 with DP training often report more pronounced trade-offs because of these models’ scale [5,6]. While our demonstration focuses on a small subset of WikiText-2 and a distilled model, the observed trends—namely the increased overhead and moderate impact on accuracy—mirror established findings in the literature. Our future benchmarking plan includes the following:

- More extensive datasets (e.g., the entirety of WikiText-103 or C4);

- Longer training schedules (up to 10–20 epochs);

- Measurement of the cryptographic overhead alongside DP noise;

- Testing advanced membership inference attacks (e.g., black-box label-only methods).

Interpretation and practical implications: In security-critical settings (healthcare, finance, etc.), a modest increase in perplexity may be an acceptable price for reduced membership inference risk. Our findings suggest that operators can tune the DP noise to an acceptable range (–) to balance data confidentiality and model quality [24,44]. Furthermore, the cryptographic measures of Section 4.1 can be integrated to protect gradient exchanges, while MPC-based fine-tuning ensures that no single node sees raw data or sensitive parameters in plaintext.

Overall, these results indicate that SecureLLM can incorporate DP at a moderate performance cost, in line with the existing literature. In our small-scale setting, this cost was about 48% more training time and 25% more memory. The framework’s modular design also permits deeper privacy protections through additional cryptographic or decentralization schemes when an application demands stricter guarantees.

6. Discussion

By integrating cryptography, decentralized fine-tuning, and differential privacy into a single framework, SecureLLM conceptually addresses a range of threats while aiming to preserve utility in real-world tasks. These threats range from basic membership inference to more sophisticated adversarial prompt exploits. This integrated approach contrasts with earlier, fragmented security solutions and underscores the advantages of a unified design in which each layer reinforces the others.

Although SecureLLM strives to minimize computational overhead, some trade-offs between performance and security remain inevitable. In principle, stricter privacy settings can reduce utility and add training overhead, and more frequent key rotations can introduce latency. Yet these measures also strengthen regulatory compliance and bolster user trust, which are critical considerations in “high-risk” domains like healthcare and finance. Furthermore, SecureLLM’s core components (lightweight cryptography, decentralized fine-tuning, and differential privacy) could be adapted to a broad array of transformer-based models, suggesting wide applicability across industries.