Abstract

The fast advancement of unmanned aerial vehicle (UAV) technology has facilitated its use across a wide range of scenarios. Due to the high mobility and flexibility of drones, the images they capture often exhibit significant scale variations and severe object occlusions, leading to a high density of small objects. However, the existing object detection algorithms struggle with detecting small objects effectively in cross-scale detection scenarios. To overcome these difficulties, we introduce a new object detection model, RPS-YOLO, based on the YOLOv8 architecture. Unlike the existing methods that rely on traditional feature pyramids, our approach introduces a recursive feature pyramid (RFP) structure. This structure performs two rounds of feature extraction, and we reduce one downsampling step in the first round to enhance attention to small objects during cross-scale detection. Additionally, we design a novel attention mechanism that improves feature representation and mitigates feature degradation during convolution by capturing spatial- and channel-specific details. Another key innovation is the proposed Localization IOU (LIOU) loss function for bounding box regression, which accelerates the regression process by incorporating angular constraints. Experiments conducted on the VisDrone-DET2021 and UAVDT datasets show that RPS-YOLO surpasses YOLOv8s, with an mAP50 improvement of 8.2% and 3.4%, respectively. Our approach demonstrates that incorporating recursive feature extraction and exploiting detailed information for multi-scale detection significantly improves detection performance, particularly for small objects in UAV images.

1. Introduction

In recent years, with the rapid development of drone technology and the reduction in manufacturing costs, drones have been widely applied across various industries. However, the high mobility of UAVs leads to a significant variation in the size of objects within images and potential occlusion between objects, resulting in a large number of small objects—those with fewer pixels. The existing object detection algorithms often focus less on small objects during cross-scale detection, leading to feature loss during the convolution process. Therefore, maintaining attention on small objects while accurately detecting large ones is a critical challenge. At the same time, in order to embed the model into the drone platform, it is necessary to control the model parameters within a certain range and maintain a relative balance with the detection accuracy.

Most current research methods encounter accuracy issues when dealing with UAV aerial imagery, particularly in scenarios with a high proportion of small objects. To improve the focus on small objects while maintaining high accuracy for large object detection, this study proposes a Recursive Pyramid Structure-based YOLO (RPS-YOLO) algorithm, which incorporates a recursive feature pyramid structure. The specific improvements are reflected in the following three aspects:

- The YOLOv8 network is enhanced with a recursive feature pyramid (RFP) structure to perform two stages of “processing” on the image—i.e., two rounds of feature extraction. During the first stage, a single downsampling operation is reduced, enabling the model to place greater emphasis on small object detection in multi-scale scenarios.

- In order to prevent feature loss during the convolution process, we designed a new attention mechanism that can supplement salient information with detailed information from both spatial and channel perspectives, thus enabling feature recovery during the convolution process.

- A new bounding box loss function is proposed by incorporating angle loss, accelerating the regression of predicted bounding boxes.

The experimental outcomes on both the UAVDT and VisDrone-DET2021 datasets indicate that, when compared with other leading lightweight networks, the RPS-YOLO model demonstrates enhanced detection accuracy while maintaining an effective balance between computational efficiency and the number of parameters.

The structure of the remaining sections of this paper is outlined as follows: Section 2 provides an overview of related work in drone detection and introduces YOLOv8 and the recursive feature pyramid structure. Section 3 introduces our proposed model and the structure and application of each module. Section 4 presents the results of the experiments, Section 5 discusses them, and Section 6 concludes this paper.

2. Related Works

Object detection algorithms are typically categorized into traditional algorithms and deep learning-based algorithms. Traditional object detection algorithms (e.g., SIFT [1], HOG [2], and DPM [3]) mainly rely on hand-crafted features. These methods lack robustness when dealing with scale and viewpoint variations. Deep learning-based object detection algorithms can be divided into two main categories: one is region proposal-based two-stage algorithms, such as the R-CNN series [4,5,6,7]. These algorithms produce candidate boxes and employ convolutional neural networks (CNNs) to classify them. However, the slow processing speed of two-stage methods limits their effectiveness for real-time UAV image processing. The other category is one-stage algorithms, such as the YOLO series [8,9,10,11,12,13,14,15,16], SSD [17], and RetinaNet [18], which directly predict object categories and bounding box coordinates through a single neural network model, without the need to generate candidate regions first, as in two-stage algorithms. These methods typically offer higher inference speeds but sacrifice detection accuracy to some extent. Additionally, Carion et al. [19] introduced the DETR model by incorporating the Transformer architecture. Specifically, DETR encodes the input image and the target set into two sets and then predicts the class, position, and count of objects by matching these two sets. This transformation simplifies the detection process and eliminates the need for post-processing steps. However, from UAV perspectives, objects are typically small in size, making it challenging to directly apply the previously mentioned detection algorithms to UAV aerial scenarios.

In recent years, many research efforts have emerged to tackle challenges in UAV aerial object detection. Regarding target localization and recognition in UAV images affected by adverse weather, Fang et al. [20] introduced ODFC-YOLO, integrating image dehazing with object detection to enhance detection precision. However, further research is needed to optimize model parameters and computational efficiency. To meet the demands of small object detection in UAV images under complex interference backgrounds while balancing detection accuracy and processing speed, Kong et al. [21] designed the Drone-DETR algorithm based on the RT-DETR framework. This algorithm integrates a small object detection module and a feature fusion attention module, achieving a good balance between a lightweight model and high performance. However, its detection performance degrades under extreme lighting conditions (such as overexposure or low-light environments). Zhao et al. [22] proposed an approach based on RetinaNet, which optimizes the scale and number of anchors through a systematic clustering method and refines focal loss computation; however, it suffers from slower speeds. Bao et al. [23] embedded the STC structure into YOLOv8 to enhance performance for small object detection in UAV images, achieving significant performance improvements but leading to a notable rise in the number of model parameters. Li et al. [24] proposed the Bi-PAN-FPN framework to improve the model’s capability in detecting small objects. However, it neglects the limited small object features present in deeper feature maps, which affects overall detection accuracy. Wang et al. [25] proposed a feature processing module called FFNB, which fully integrates shallow and deep features, improving model recall. At the same time, deep convolution is used to reduce model parameters, but the complexity of the model leads to a decrease in real-time performance. Guo et al. [26] designed the C3D module to replace the C2f module in YOLOv8, aiming to preserve more comprehensive information, but it increases the computational complexity. Zhai et al. [27] added a high-resolution detection branch to the YOLOv8 detection head and used both SPD Conv and GAM attention mechanisms to improve the detection accuracy of small targets. However, this greatly increased the inference time of the model.

2.1. YOLOv8 Detection Algorithm

YOLOv8 [14] is one of the leading object detection methods currently available. When performing detection tasks in different environments and scenarios, it only requires fine-tuning or transfer learning of the pre-trained model to adapt to the specific application needs of a given scenario. This flexibility not only ensures that the algorithm maintains high efficiency but also reduces the time and cost required for development, making it easier to deploy quickly in diverse fields. The architecture of YOLOv8 is shown in Figure 1 (with n C2f modules applied after each convolution or upsampling).

Figure 1.

YOLOv8 network architecture.

YOLOv8 uses the PAN-FPN structure in its main architecture. FPN [28] builds a top-down pathway: deep feature maps, which are lower in resolution but semantically richer, are upsampled and fused with the corresponding shallow feature maps, ultimately generating multi-scale feature maps (orange region in Figure 1). The PAN [29] architecture extends this by first upsampling from the low-resolution feature maps and then downsampling from the high-resolution ones, and linking the two directions together to create an additional pathway.

In particular, PAN introduces a bottom-up path following FPN (green region in Figure 1), where the information from each layer’s feature map is combined with the feature maps from the adjacent upper and lower layers. By combining top-down and bottom-up pathways, PAN-FPN creates a network architecture that efficiently combines shallow spatial details with deep semantic features via feature fusion, enhancing the variety and thoroughness of the resulting features. However, this paper argues that a single round of feature extraction does not capture enough information, and, therefore, multiple rounds of feature extraction are necessary. YOLOv8 incorporates the C2f module within its backbone network, utilizing gradient-split connections to enhance the information flow in the feature extraction process. However, this approach does not completely eliminate feature loss. As a result, it is crucial to take measures to prevent feature loss during the feature extraction stage.

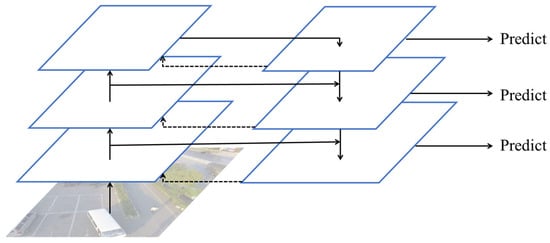

2.2. Recursive Feature Pyramid

While the FPN enhances the detector’s capacity to identify objects at various scales by combining shallow and deep feature maps using upsampling, downsampling, and lateral connections, a single FPN is not enough to capture all the feature information effectively. Based on the “think twice” philosophy, Qiao et al. [30] proposed the recursive feature pyramid (RFP). As shown in Figure 2, the network is constructed on the basis of FPN, incorporating extra feedback connections from the FPN layers into the bottom-up backbone layers. When the recursive structure is unfolded into a sequential process, it results in a backbone for object detection networks that enables multiple passes over the image to capture more semantic information.

Figure 2.

Recursive feature pyramid (RFP).

3. Method

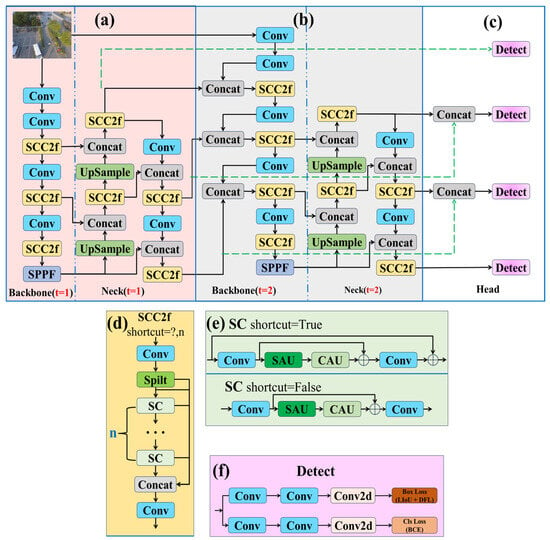

We present RPS-YOLO, a new algorithm based on YOLOv8. As shown in Figure 3, compared to the original YOLOv8, RPS-YOLO integrates an RFP structure to perform two rounds of “processing” on the image, enabling multi-scale feature fusion. To emphasize small objects while performing cross-scale detection, this paper does not apply recursion directly to YOLOv8. Instead, during the first “processing” pass, the network omits one downsampling step, as shown in the backbone in Figure 3a, allowing it to capture more geometric information. During the second “processing” pass, the input features are downsampled five times to obtain features at different scales while also fusing the output from the first “processing” pass, as shown in the backbone in Figure 3b. As shown in Figure 3c, the results from all “processing” stages are combined in the final Head layer to perform multi-scale object detection.

Figure 3.

RPS-YOLO architecture: (a) first “processing”; (b) second “processing”; (c) Head layer; (d) SCC2f module; (e) SC module; (f) detect module.

In deeper network architectures, feature loss is more likely to occur, thus necessitating the design of effective modules for feature restoration. In the backbone network, this paper introduces a new module—Spatial and Channel C2f (SCC2f). As shown in Figure 3d (where n represents the number of bottlenecks), SCC2f uses gradient-split connections. To mitigate feature degradation, the SCC2f module uses an SC module for feature extraction. The SC module consists of a Spatial Attention Unit (SAU) and a Channel Attention Unit (CAU), as shown in Figure 3e. These units enhance the feature representation by leveraging detailed information from both channel and spatial dimensions, thereby restoring the feature representation of the CNN.

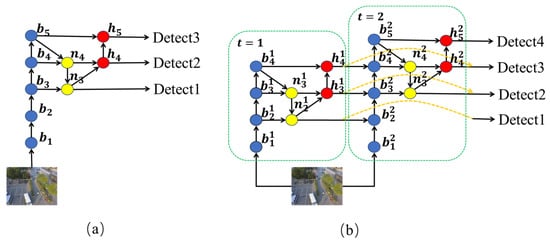

3.1. Multi-Scale Feature Fusion

In convolutional networks, deeper layers capture more semantic features, while shallow layers contain more geometric information. Therefore, to better fuse the feature information from both deep and shallow layers, RPS-YOLO, based on YOLOv8, integrates RFP to perform two rounds of “processing” on the image. As shown in Figure 4b, both “processing” passes utilize the PAN-FPN structure. To place more emphasis on shallow features, the first “processing” pass reduces one downsampling step, while the second “processing” pass incorporates additional feedback connections from the first “processing” pass into the bottom-up backbone layer to generate richer feature information.

Figure 4.

(a) YOLOv8 network structure. (b) RPS-YOLO network structure.

The multi-scale feature fusion structure of YOLOv8 is shown in Figure 4a. Let represent the feature map extracted during downsampling, represent the feature map re-extracted after upsampling, and denotes the feature map produced by the PAN backbone. The subscript represents the feature map that has been downsampled by a factor of , and denotes the convolution operation in each module. When the maximum downsampling number is N and the maximum upsampling number is M, the YOLOv8 structure can be defined by Equations (1)–(3):

where represents the original image. In the RPS-YOLO structure, the feedback connections are added to the FPN, and the corresponding from the two stages are fused, as shown in Figure 4b. The superscript denotes the number of “processing” passes. Let the maximum downsampling number for each “processing” pass be and the maximum upsampling number be . Then, the definition of in RPS-YOLO is given by Equation (4):

where represents the original image. The expressions for and are similar to those in Equation (1). Finally, and are fused with and , respectively, achieving two rounds of “processing” on the image. This results in a broader recognition range, encompassing tiny, small, medium, and large objects.
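To make the two-pass layout more concrete, the following minimal sketch lists the feature map resolutions each “processing” pass would produce. It assumes stride-2 downsampling steps, a 640 × 640 input, and four steps in the first pass (one fewer than the five steps of the second pass); the function name `feature_map_sizes` is hypothetical and is not taken from the RPS-YOLO implementation.

```python
from typing import List


def feature_map_sizes(input_size: int, num_downsamples: int) -> List[int]:
    """Return the spatial side length after each stride-2 downsampling step."""
    sizes = []
    size = input_size
    for _ in range(num_downsamples):
        size //= 2  # assumption: every downsampling step halves the resolution
        sizes.append(size)
    return sizes


# Assumption: the first pass uses one downsampling step fewer than the second.
first_pass = feature_map_sizes(640, 4)
second_pass = feature_map_sizes(640, 5)

# Resolutions present in both passes are the ones that can be fused stage by stage.
shared_scales = [s for s in first_pass if s in second_pass]

print("first pass: ", first_pass)
print("second pass:", second_pass)
print("shared:     ", shared_scales)
```

Under these assumptions, the first pass stops at a finer resolution than the second, which is consistent with the stated goal of keeping more shallow, geometry-rich features for small objects.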

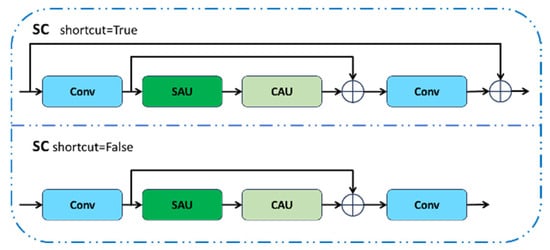

3.2. SC Attention Mechanism

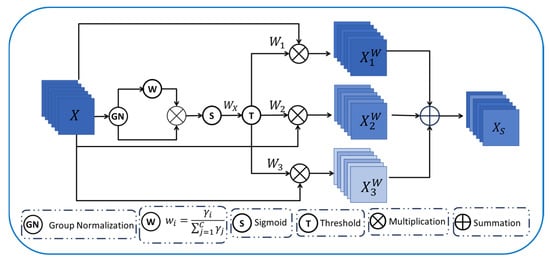

The Spatial and Channel (SC) module, as shown in Figure 5, is applied within the SCC2f module, composed sequentially of the SAU and the CAU. In the bottleneck residual block, the SAU operation begins by extracting spatially refined features from the intermediate input, and then the CAU operation refines the channel-wise features. This process utilizes both spatial- and channel-wise detailed information to recover feature information and enhance the network’s expressive capacity.

Figure 5.

SC module.

3.2.1. Spatial Attention Unit

During the convolution process, the model may experience feature degradation, resulting in the progressive loss of small object details. This paper improves feature representation by extracting detailed information and fusing it with salient information. To avoid misinterpreting background information as either useful or useless, a scaling factor is used to control the threshold range, effectively separating the feature map into three parts, i.e., detailed information, background information, and salient information, as shown in Figure 6.

Figure 6.

Spatial Attention Unit.

To capture the informative content from various feature maps while minimizing the influence of batch size during training, Group Normalization (GN) [31] is used to normalize the feature maps. The scaling factor of the GN layer is then employed to measure the relative importance of information between different feature maps. In this paper, Equation (5) is used to standardize the feature map $X \in \mathbb{R}^{N \times C \times H \times W}$ (N stands for the batch size, C stands for the channel dimension, and H and W correspond to the spatial height and width axes):

$$X_{out} = \mathrm{GN}(X) = \gamma \, \frac{X - \mu}{\sqrt{\sigma^{2} + \varepsilon}} + \beta \tag{5}$$

where $\sigma$ and $\mu$ are the standard deviation and mean of X, $\gamma$ and $\beta$ are the learnable affine transformation parameters, and $\varepsilon$ is a small positive constant introduced to ensure numerical stability during division.

The learnable parameter $\gamma$ of the GN layer is utilized to quantify the spatial pixel variability across each channel and batch. Greater variations in spatial pixels are reflected in richer spatial information, leading to an increase in $\gamma$. The normalized weight $W_{\gamma}$ is derived from Equation (6):

$$W_{\gamma} = \{w_i\} = \frac{\gamma_i}{\sum_{j=1}^{C} \gamma_j}, \quad i, j = 1, 2, \ldots, C \tag{6}$$
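The normalization of the GN scale factors described above can be illustrated with a few lines of Python. This is a sketch under the assumption that each channel weight is its scale factor divided by the sum of all scale factors; the function name `normalized_gn_weights` and the example values are illustrative only.

```python
from typing import List


def normalized_gn_weights(gammas: List[float]) -> List[float]:
    """Divide each per-channel GN scale factor by the sum over all channels."""
    total = sum(gammas)
    if total == 0:
        raise ValueError("the scale factors must not sum to zero")
    return [g / total for g in gammas]


# Illustrative scale factors for three channels; the third channel carries
# the largest spatial variation and therefore receives the largest weight.
weights = normalized_gn_weights([1.0, 1.0, 2.0])
print(weights)
```

Channels with larger scale factors end up with proportionally larger weights, and the weights sum to one.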

The reweighted feature maps’ weights are transformed into the (0, 1) range using the sigmoid activation, generating the weight information . Subsequently, gating is applied using a threshold, where the thresholds are defined in Equations (7) and (8):

where and represent the high and low thresholds, denotes the mean of , represents the variance in , a trainable parameter that adjusts the threshold range. The weight exceeding the threshold is assigned a value of 1, resulting in the weight for salient information. The weight exceeding the threshold or below the threshold is set to 0 to obtain the weight for background information. The weight exceeding the threshold is assigned a value of 0, resulting in the weight for detailed information. The entire process of obtaining W can be represented by Equation (9):

The input feature X is multiplied with , , and to obtain three weighted features: salient information features , background information features , and detailed information features . This successfully partitions the input features into three components: contains rich and expressive spatial content, referred to as salient information, contains irrelevant information, referred to as background information, and contains minimal information, considered as detailed information. Finally, the weighted features , , and are summed to generate the spatially refined feature map .
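The three-way split described above can be sketched as follows. Several details are assumptions made for illustration rather than the paper's definitions: the thresholds are taken as the mean of the weights plus or minus a factor `lam` times their variance, weights above the high threshold are set to 1, and weights in the background and detail ranges keep their original value. All names (`three_way_gate`, `lam`) are hypothetical.

```python
from statistics import mean, pvariance
from typing import List, Tuple


def three_way_gate(
    weights: List[float], lam: float
) -> Tuple[List[float], List[float], List[float]]:
    """Split sigmoid weights into salient, background, and detail masks."""
    mu = mean(weights)
    var = pvariance(weights)
    high = mu + lam * var  # assumed form of the high threshold
    low = mu - lam * var   # assumed form of the low threshold
    salient = [1.0 if w > high else 0.0 for w in weights]
    background = [w if low <= w <= high else 0.0 for w in weights]
    detail = [w if w < low else 0.0 for w in weights]
    return salient, background, detail


# Toy example: three weights that have already passed through a sigmoid.
w = [0.1, 0.5, 0.9]
x = [2.0, 2.0, 2.0]  # matching input feature values
salient, background, detail = three_way_gate(w, lam=1.0)

# Weight the input with each mask and sum the three parts.
refined = [xi * (s + b + d) for xi, s, b, d in zip(x, salient, background, detail)]
print(salient, background, detail, refined)
```

Each position falls into exactly one of the three masks, so the summed output is the input scaled by a single piecewise weight.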

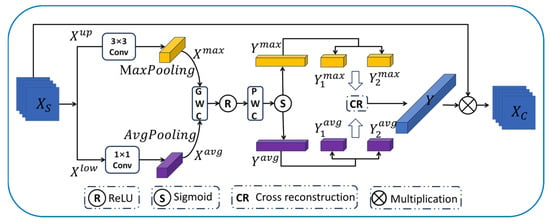

3.2.2. Channel Attention Unit

To extract the salient information from different channel feature maps while retaining global information, the CAU is composed of an up-conversion stage (yellow module in Figure 7) and a down-conversion stage (purple module in Figure 7). The up-conversion stage is used to extract salient information, while the down-conversion stage is used to extract global information.

Figure 7.

Channel Attention Unit.

First, the channels of X are divided into and . is input into the up-conversion stage, where it is first processed by a 3 × 3 convolution to extract feature information, followed by a max-pooling layer to aggregate the salient information of the feature map. On the other hand, is input into the down-conversion stage, where a 1 × 1 convolution is applied to produce a feature map that captures shallow hidden details, followed by an average-pooling layer to capture the global information of the feature map. This process generates two distinct spatial context descriptors, and , which are then passed to a shared network to generate two attention maps. To reduce parameter overhead, the first layer of the network uses Group Convolution (GWC) [32], followed by a ReLU activation layer. Although GWC reduces parameters and computational cost, it limits the flow of information between channel groups. To mitigate this, Pointwise Convolution (PWC) is employed to facilitate the flow of information across feature channels and restore the lost information. In summary, the channel attention calculation is represented by Equations (10) and (11):

where denotes the sigmoid function and and are used to extract feature information. and are shared for the inputs and , with following the ReLU activation function.

To further generate richer information features while saving space, this paper does not directly add the two parts, but instead, it adopts a cross-reconstruction operation to effectively combine and weight these two distinct attention maps. After the cross-reconstruction, the resulting attention maps are concatenated to obtain the final channel attention map. The cross-reconstruction operation is represented by Equation (12):

where denotes concatenation and denotes element-wise addition. Finally, the output is multiplied by X to obtain the final refined feature .
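The parameter saving that motivates Group Convolution in the shared network can be checked with a short calculation. The sketch below counts convolution weights (bias terms excluded); the channel count, kernel size, and number of groups are illustrative assumptions, not values reported in the paper.

```python
def conv_params(c_in: int, c_out: int, kernel_size: int, groups: int = 1) -> int:
    """Number of weights in a 2D convolution, ignoring the bias."""
    if c_in % groups or c_out % groups:
        raise ValueError("channel counts must be divisible by the group count")
    return (c_in // groups) * c_out * kernel_size * kernel_size


# Illustrative setting: 64 input and output channels, 3 x 3 kernel, 4 groups.
standard = conv_params(64, 64, 3)             # ordinary convolution
grouped = conv_params(64, 64, 3, groups=4)    # group convolution (GWC)
pointwise = conv_params(64, 64, 1)            # 1 x 1 pointwise convolution (PWC)

print(standard, grouped, pointwise, grouped + pointwise)
```

With these example values, the grouped layer plus a pointwise layer still uses fewer weights than one standard convolution, while the pointwise layer restores the exchange of information between channel groups.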

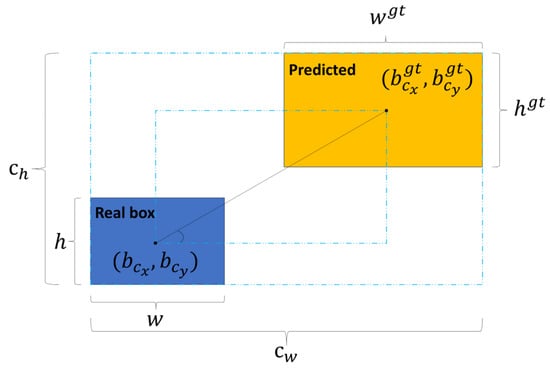

3.3. LIOU Loss Function

The loss function for bounding box regression quantifies the difference between the predicted boxes and the ground truth, enabling the model to generate more accurate location predictions. As a result, a well-designed loss function can significantly enhance the model’s detection performance. YOLOv8 employs CIOU [33] to compute the loss.
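As a reminder of the quantity that all IOU-based losses build on, the following minimal sketch computes the IOU of two axis-aligned boxes. The box format `(x1, y1, x2, y2)` and the function name `iou` are assumptions made for this illustration.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), with x1 < x2 and y1 < y2


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


overlap = iou((0.0, 0.0, 2.0, 2.0), (1.0, 1.0, 3.0, 3.0))
disjoint = iou((0.0, 0.0, 1.0, 1.0), (2.0, 2.0, 3.0, 3.0))
print(overlap, disjoint)
```

For disjoint boxes the IOU is zero regardless of how far apart they are, which is why losses such as CIOU add distance and shape penalties on top of it.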

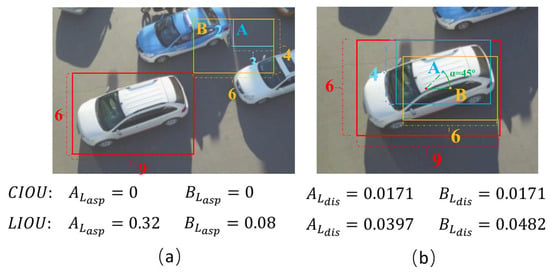

The CIOU bounding box loss function is composed of three components: IOU loss, center distance loss, and aspect ratio loss. Upon investigating the aspect ratio loss, the following observations were made:

- When the predicted box has the same aspect ratio as the ground truth box, but with differing width and height values, the penalty term does not accurately reflect the true discrepancy between the two boxes.

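- The gradients of the aspect ratio term $v = \frac{4}{\pi^{2}} \left( \arctan\frac{w^{gt}}{h^{gt}} - \arctan\frac{w}{h} \right)^{2}$ with respect to the width $w$ and height $h$ of the predicted box are calculated as shown in Equations (13) and (14).

$$\frac{\partial v}{\partial w} = -\frac{8}{\pi^{2}} \left( \arctan\frac{w^{gt}}{h^{gt}} - \arctan\frac{w}{h} \right) \frac{h}{w^{2} + h^{2}} \tag{13}$$

$$\frac{\partial v}{\partial h} = \frac{8}{\pi^{2}} \left( \arctan\frac{w^{gt}}{h^{gt}} - \arctan\frac{w}{h} \right) \frac{w}{w^{2} + h^{2}} \tag{14}$$

It can be derived that $\frac{\partial v}{\partial w} = -\frac{h}{w} \frac{\partial v}{\partial h}$, indicating that the gradients of $w$ and $h$ have opposite signs. Therefore, at any given time, if one of these two variables increases, the other will decrease. To tackle these issues, this paper designs a novel loss function, LIOU, which separates the aspect ratio factor between the predicted box and the ground truth box based on the penalty term in CIOU. It computes the width and height of both the predicted and ground truth boxes individually. Furthermore, to account for the orientation between the predicted boxes and ground truth boxes, an angle loss function is incorporated to prevent the predicted box from potentially “wandering” during the training phase. The calculation formula for LIOU is given by Equation (15).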

where IOU represents the overlap between the predicted and ground truth bounding boxes. The parameters , , , , , and are illustrated in Figure 8. Here, and represent the height and width of the predicted box; represents the Euclidean distance between the centers of the predicted and ground truth boxes; and denote the dimensions of the smallest enclosing box formed by the predicted and ground truth boxes; and and represent the height and width of the ground truth box. represents the angle between the centerline connecting the predicted box and the ground truth box and the coordinate axes, and denote the center coordinates of the predicted boxes and ground truth box, respectively, and represents the angle loss.

Figure 8.

Predicted box (yellow) and real box (blue).

As shown in Figure 9a, when the predicted box has the same aspect ratio as the ground truth box, LIOU can better reflect the difference between the two boxes. As the angle between the line connecting the centers of the ground truth and predicted boxes and the coordinate axis decreases, LIOU applies a greater penalty, as illustrated in Figure 9b. The LIOU loss function addresses the regression direction issue and simplifies the angle loss, thereby reducing the computational cost.

Figure 9.

(a) Aspect ratio loss result. (b) Distance and angle loss result.
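The first observation about the CIOU aspect ratio term can be reproduced numerically. The sketch below evaluates the standard CIOU aspect ratio term for a predicted box that has the same aspect ratio as the ground truth but half its size. For contrast, it also evaluates a width/height-separated penalty; that second function is a hypothetical, EIoU-style stand-in used only to illustrate the idea of separating width and height, and it is not the LIOU formula of Equation (15).

```python
import math


def ciou_aspect_term(w: float, h: float, w_gt: float, h_gt: float) -> float:
    """Aspect ratio term v of CIOU."""
    return (4.0 / math.pi ** 2) * (math.atan(w_gt / h_gt) - math.atan(w / h)) ** 2


def separated_size_penalty(
    w: float, h: float, w_gt: float, h_gt: float, c_w: float, c_h: float
) -> float:
    """Hypothetical penalty that compares width and height separately.

    c_w and c_h are the width and height of the smallest enclosing box.
    """
    return (w - w_gt) ** 2 / c_w ** 2 + (h - h_gt) ** 2 / c_h ** 2


# Predicted box 2 x 1, ground truth 4 x 2: same aspect ratio, different size.
v = ciou_aspect_term(2.0, 1.0, 4.0, 2.0)
p = separated_size_penalty(2.0, 1.0, 4.0, 2.0, c_w=4.0, c_h=2.0)
print(v, p)
```

The aspect ratio term vanishes even though the boxes clearly differ, whereas a penalty that treats width and height separately remains positive.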

4. Experiments

This section evaluates the performance of the model on the publicly available drone datasets VisDrone-DET2021 and UAVDT. It describes the datasets, the experimental environment and training strategy, and the evaluation indicators, and then presents the experiment results and a visualization analysis.

4.1. Dataset

We conducted experiments using the VisDrone-DET2021 [34] and UAVDT [35] datasets, which rank among the most prominent benchmarks for small object detection in UAV imagery.

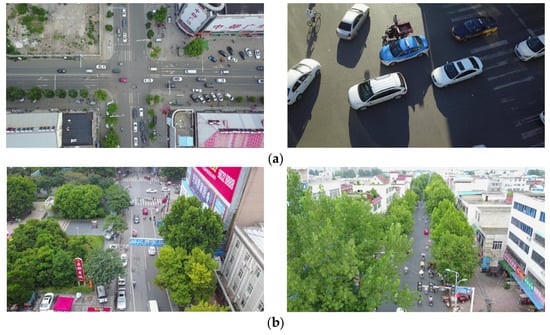

The VisDrone-DET2021 dataset includes real-world images taken by drones across various urban and rural regions, covering a wide range of scenes, lighting conditions, and object categories. Due to variations in drone flight altitude and posture, there is a significant change in object scale, as shown in Figure 10a. Additionally, the presence of obstacles causes occlusions in the images, as depicted in Figure 10b.

Figure 10.

(a) Objects of different sizes. (b) Objects occluded by obstacles.

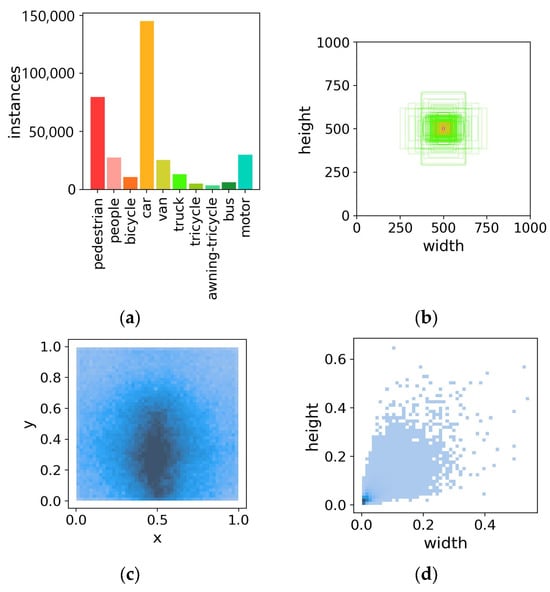

The dataset consists of 6471 images for training, 548 images for validation, and 1580 images for testing, and it covers 10 distinct object categories. An overview of the dataset is provided in Figure 11. The number of objects per category is shown in Figure 11a, with pedestrians and cars making up the majority. Figure 11b visualizes the sizes of the object bounding boxes, where all bounding box centers are fixed at a single point. This clearly indicates that the dataset is composed of a large proportion of small objects. The distribution of bounding box center coordinates is shown in Figure 11c, indicating that most of the centers are concentrated in the lower and central areas of the image. Figure 11d displays a scatter plot illustrating the widths and heights of the bounding boxes, with the darkest color located in the lower-left corner, further confirming that the dataset predominantly consists of small objects.

Figure 11.

VisDrone-DET2021 dataset information: (a) class distribution histogram; (b) bounding box visualization; (c) position distribution; (d) size distribution.

The UAVDT dataset contains a total of 38,327 images, split into 23,258 images for training and 15,069 images for testing. Each image has a resolution of 1080 × 540 pixels, providing a balanced level of detail while maintaining computational efficiency. The annotations in the dataset cover three vehicle types, i.e., car, truck, and bus, with each vehicle type annotated with bounding boxes, indicating the locations of the vehicles within the image. Among them, the total number of labels for cars is 755,688, for trucks is 25,086, and for buses is 18,021.
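The label counts above imply a strong class imbalance, which a short calculation makes explicit. The sketch uses only the numbers reported in this section; the variable names are illustrative.

```python
# Label counts and split sizes as reported for the UAVDT dataset.
uavdt_labels = {"car": 755_688, "truck": 25_086, "bus": 18_021}
uavdt_split = {"train": 23_258, "test": 15_069}

total_labels = sum(uavdt_labels.values())
total_images = sum(uavdt_split.values())

# Share of each vehicle type among all annotated objects.
shares = {name: count / total_labels for name, count in uavdt_labels.items()}

for name, share in shares.items():
    print(f"{name}: {share:.1%}")
print("images:", total_images)
```

Cars account for roughly 95% of all labels, so per-class AP values for trucks and buses rest on far fewer examples than the AP for cars.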

4.2. Experimental Environment and Training Strategy

The experiments were carried out using the PyTorch 1.8.1 framework and the Ultralytics library, with Python 3.8 and CUDA 11.1 on the Ubuntu 18.04 operating system. The hardware configuration included an Intel(R) Xeon(R) Platinum 8255C CPU @ 2.50 GHz and a 24 GB NVIDIA GeForce RTX 3090 GPU. The training and testing images were resized to 640 × 640, with a batch size of 8. The training was conducted for 200 epochs, and the SGD optimizer was used with an initial learning rate of 0.01.
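For readers who want to reproduce a comparable baseline run, the training settings above map onto the Ultralytics training arguments roughly as follows. This is a sketch, not the authors' training script: the dataset file `VisDrone.yaml` and the model file `yolov8s.yaml` are assumptions (the RPS-YOLO model definition is not given in this section), and all other training options are left at the library defaults.

```python
# Training settings taken from the text; the dataset config name is an assumption.
TRAIN_ARGS = {
    "data": "VisDrone.yaml",  # assumed dataset configuration file
    "epochs": 200,
    "imgsz": 640,
    "batch": 8,
    "optimizer": "SGD",
    "lr0": 0.01,              # initial learning rate
}


def train_baseline(model_cfg: str = "yolov8s.yaml"):
    """Train a YOLOv8s baseline with the settings above (requires ultralytics)."""
    from ultralytics import YOLO  # imported lazily so the settings can be inspected without it

    model = YOLO(model_cfg)
    return model.train(**TRAIN_ARGS)


if __name__ == "__main__":
    print(TRAIN_ARGS)
```

Calling `train_baseline()` starts the run; substituting a custom model YAML for `model_cfg` would be the natural place to plug in a modified architecture.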

4.3. Evaluation Indicators

In this study, we utilize mean Average Precision (mAP), the total number of model parameters, and GFLOPs as evaluation metrics.

- mAP (mean Average Precision):

mAP is the mean of the Average Precision (AP) values over all classes, acting as a metric to assess the model’s overall detection performance across all categories. The formula for its calculation is shown in Equation (16).

$$mAP = \frac{1}{N} \sum_{i=1}^{N} AP_i \tag{16}$$

where $AP_i$ denotes the AP for the class indexed by $i$. N stands for the total number of object classes in the training dataset (in this study, N = 10). mAP0.5 refers to the mAP of the detection model when the IOU threshold is set to 0.5, while mAP0.5:0.95 indicates the mAP averaged over IOU thresholds ranging from 0.5 to 0.95 in steps of 0.05.

- AP (Average Precision):

Average precision is the area under the precision-recall curve, i.e., the average of precision (P) over all recall (R) values, with its calculation method outlined in Equation (17).

$$AP = \int_{0}^{1} P(R) \, dR \tag{17}$$

- Precision (P):

Precision is the ratio of the number of true positive predictions to the total number of detected samples, and the formula for its calculation is shown in Equation (18).

$$P = \frac{TP}{TP + FP} \tag{18}$$

where TP is the number of true positives (predicted positive samples that are indeed positive) and FP is the number of false positives (predicted positive samples that are actually negative).

- Recall (R):

Recall is the ratio of the number of true positive predictions to the total number of actual positive samples, and the formula for its calculation is shown in Equation (19).

$$R = \frac{TP}{TP + FN} \tag{19}$$

where FN is the number of false negatives (predicted negative samples that are actually positive).
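The four indicators above can be tied together in a short sketch. The AP function below uses all-point interpolation of the precision-recall curve, which is one common convention and is assumed here for illustration; the evaluation code used in the experiments may interpolate differently. All function names and the toy inputs are hypothetical.

```python
from typing import List, Sequence


def precision(tp: int, fp: int) -> float:
    """Fraction of detections that are correct."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp: int, fn: int) -> float:
    """Fraction of ground truth objects that are detected."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def average_precision(recalls: Sequence[float], precisions: Sequence[float]) -> float:
    """Area under the precision-recall curve (all-point interpolation).

    Expects the points sorted by increasing recall.
    """
    mrec = [0.0] + list(recalls) + [1.0]
    mpre = [0.0] + list(precisions) + [0.0]
    # Make precision monotonically non-increasing from right to left.
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))


def mean_average_precision(aps: List[float]) -> float:
    """Unweighted mean of the per-class AP values."""
    return sum(aps) / len(aps)


# IOU thresholds used for mAP0.5:0.95 (0.5 to 0.95 in steps of 0.05).
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]

p = precision(tp=8, fp=2)
r = recall(tp=8, fn=8)
ap = average_precision([0.5, 1.0], [1.0, 0.5])
m = mean_average_precision([0.5, 0.7])
print(p, r, ap, m, IOU_THRESHOLDS)
```

mAP0.5 corresponds to running this computation with matches defined at an IOU threshold of 0.5, while mAP0.5:0.95 averages the result over all ten thresholds in `IOU_THRESHOLDS`.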

4.4. Experiment Results

4.4.1. Comparison with Large-Scale Networks

To demonstrate the competitiveness of the proposed RPS-YOLO network compared to other object detection networks, we conducted further comparative experiments on the VisDrone-DET2021 dataset. The networks compared include the classical networks Faster R-CNN, CenterNet, and SABL, the UAV object detection network RSOD, and Drone-Yolo, as well as networks TOOD and RT-DETR. The experimental results are presented in Table 1.

Table 1.

Comparison experiment results of large-scale networks and RPS-YOLO on the VisDrone-DET2021 validation dataset.

As shown in Table 1, the RPS-YOLO network maintains strong competitiveness compared to other advanced networks. Among all these models, RPS-YOLO provides the second-highest detection accuracy while using the minimum number of parameters and computational resources. This outstanding detection performance is mainly attributed to the two-stage “processing” of the image and four-scale detection.

4.4.2. Comparison with Lightweight Networks

To assess the performance improvements of the proposed network, we performed comparative experiments between several prominent lightweight networks and the RPS-YOLO network using the VisDrone-DET2021 and UAVDT datasets. The results of the experiments on the VisDrone-DET2021 validation dataset are presented in Table 2.

Table 2.

Comparison experiment results of lightweight and RPS-YOLO networks on the VisDrone-DET2021 validation dataset.

The best result is presented in bold.

Based on the experimental results in Table 2, it is evident that while YOLOv3-Tiny offers a lightweight model, it significantly sacrifices detection accuracy. Compared to the YOLOv6s model, YOLOv5s, YOLOv8s, and YOLOv10s exhibit better detection performance with fewer parameters. However, these models all utilize a three-scale detection structure, which does not fully address the detection challenges posed by high proportions of small objects. Consequently, despite only minor differences in parameters, the approach introduced in this study achieves superior accuracy in object detection. However, due to the two-stage image “processing” involved, the model incurs a higher computational cost and has the lowest FPS. PP-PicoDet-L, despite having the smallest parameter count, exhibits relatively lower detection accuracy. In conclusion, RPS-YOLO outperforms the other models, achieving the highest average detection accuracy and overall detection performance.

The results of the experiments on the UAVDT dataset are presented in Table 3. As observed, the proposed RPS-YOLO network outperforms the YOLOv8s network by achieving a 3.4% improvement in mAP0.5, highlighting the competitive edge of the proposed model.

Table 3.

Comparison experiment results of RPS-YOLO and lightweight networks on the UAVDT dataset.

4.5. Visualization Analysis

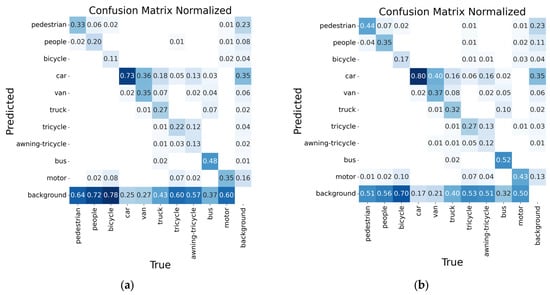

To illustrate the detection capability of the proposed model, comparative experiments were conducted on the VisDrone-DET2021 dataset, and the models were evaluated using confusion matrices and inference results.

To assess how well RPS-YOLO predicts object classes, confusion matrices for both RPS-YOLO and YOLOv8s were plotted, as shown in Figure 12. Each row of the matrix corresponds to a ground truth class, while each column represents a predicted class. The upper triangular area corresponds to false positives (FPs), the lower triangular area to missed detections (false negatives, FNs), and the main diagonal gives the proportion of correctly detected samples (true positives, TPs).

Figure 12. (a) Confusion matrix plot of YOLOv8s. (b) Confusion matrix plot of RPS-YOLO.

As shown in Figure 12, the diagonal of the confusion matrix for RPS-YOLO is more pronounced than that of YOLOv8s, indicating that RPS-YOLO predicts object classes more accurately. YOLOv8s shows a higher rate of misclassification for smaller objects, such as tricycles and bicycles, which points to a considerable number of missed detections or false positives in these categories. The enhanced model reduces the misdetection rate for these objects and thereby improves overall detection performance.
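The per-class quantities read off a confusion matrix can be sketched as follows. This is a minimal illustration, assuming the row and column convention stated above (rows are ground truth, columns are predictions) and the usual per-class bookkeeping, in which false negatives are the off-diagonal entries of a class's row and false positives are the off-diagonal entries of its column. The function name `per_class_counts` and the toy counts are hypothetical and are not taken from Figure 12.

```python
def per_class_counts(cm):
    """Derive TP, FP, FN, precision and recall for each class.

    cm[i][j] is the number of objects whose ground-truth class is i
    and whose predicted class is j (rows: ground truth, columns: prediction).
    """
    n = len(cm)
    stats = []
    for k in range(n):
        tp = cm[k][k]
        # Ground truth is k but the prediction is another class.
        fn = sum(cm[k]) - tp
        # Prediction is k but the ground truth is another class.
        fp = sum(cm[i][k] for i in range(n)) - tp
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        stats.append(
            {"tp": tp, "fp": fp, "fn": fn, "precision": precision, "recall": recall}
        )
    return stats


# Hypothetical 3-class counts, for illustration only.
toy_cm = [
    [50, 5, 5],
    [10, 30, 0],
    [2, 3, 15],
]
stats = per_class_counts(toy_cm)
for k, s in enumerate(stats):
    print(k, s["tp"], s["fp"], s["fn"], round(s["precision"], 3), round(s["recall"], 3))
```

A more pronounced diagonal corresponds to larger `tp` values relative to `fp` and `fn`, which is the pattern the comparison between the two matrices relies on.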

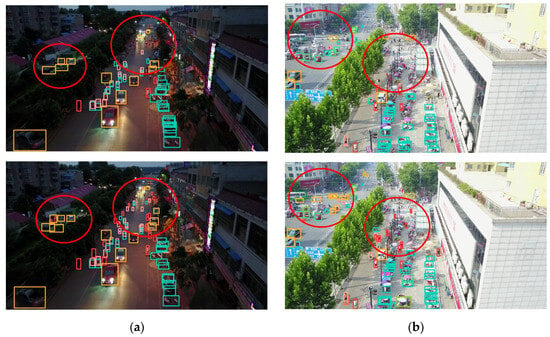

To compare detection performance qualitatively, inference was run with both RPS-YOLO and YOLOv8s on nighttime and densely populated scenes. These scenes contain numerous small and varied objects, making them well suited to this evaluation. The results are presented in Figure 13.

Figure 13. Inference results on the VisDrone-DET2021 dataset for YOLOv8s (top) and RPS-YOLO (bottom): (a) a night scene and (b) a densely populated scene.

As shown in the circled regions in Figure 13a, the proposed method demonstrates superior detection accuracy for small objects located in distant areas of the field of view when compared to YOLOv8s while also improving the recognition accuracy for occluded objects. Similarly, as observed in the circled regions in Figure 13b, the proposed method reduces the misdetection rate for densely packed small objects.

5. Discussion

The experimental results in Table 2 show that, among the lightweight models, RPS-YOLO achieves the best detection accuracy. Compared to YOLOv6s, RPS-YOLO provides an 8.9% higher mAP0.5 with fewer parameters and a lower computational cost. Compared to YOLOv8s, RPS-YOLO improves mAP0.5 by 8.2% on the VisDrone-DET2021 dataset while increasing the parameter count by less than 20%, which we consider an acceptable trade-off.

The experimental results in Table 3 indicate that RPS-YOLO holds a clear accuracy advantage over the other lightweight networks on the UAVDT dataset, achieving an mAP0.5 of 35.3%. Compared to YOLOv3-Tiny and PP-PicoDet-L, RPS-YOLO provides higher detection accuracy, particularly in complex scenes and for small objects, which makes it well suited to tasks that require high-precision multi-scale detection.

We further assessed the impact of each component in the RPS-YOLO model through ablation experiments conducted on the VisDrone-DET2021 validation dataset. Table 4 shows the results of these experiments. YOLOv8s was chosen as the baseline model, and the input image size was fixed at 640 × 640. A checkmark (√) denotes the application of each respective enhancement strategy.

Table 4. RPS-YOLO ablation experiment results on the VisDrone-DET2021 validation dataset.

As shown in Table 4, each enhancement strategy applied to the baseline model improves detection performance to a different degree. Integrating the RFP structure into the baseline model increased mAP0.5 by 5.9%. Because the dataset contains a large number of small targets, prioritizing shallow features during the first round of feature extraction and fusing shallow feature information across scales effectively reduces the false negative rate for small targets.

The SCC2f module was incorporated into the backbone network, substituting the original C2f module. Thanks to its efficient attention mechanism, the SCC2f module improved the focus on smaller targets within the feature maps, resulting in a 1.9% boost in mAP0.5. Additionally, the introduction of LIOU into the regression loss function for bounding boxes addressed the angle loss issue during bounding box regression and decoupled the aspect ratio factor. This improvement boosted the localization accuracy of the bounding boxes, resulting in a 0.4% increase in mAP0.5.

The improved model achieved an 8.2% increase in mAP0.5 on the VisDrone-DET2021 dataset, with most detection metrics showing significant improvement. The recursive feature pyramid structure makes the model more complex, but we consider this cost acceptable given the gain in detection accuracy.
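The reported per-component gains can be checked against the overall improvement with a few lines of arithmetic. The sketch below assumes that the three gains quoted in the text (5.9, 1.9, and 0.4) are successive increments obtained as each component is added in turn, so that they should sum to the overall 8.2. The dictionary keys are illustrative labels only.

```python
import math

# mAP0.5 gains (%) quoted in the ablation discussion, assumed to be
# successive increments as each component is added to the baseline.
gains = {"RFP structure": 5.9, "SCC2f module": 1.9, "LIOU loss": 0.4}

total_gain = sum(gains.values())
# Compare with a tolerance, since floating-point sums are not exact.
matches_reported_total = math.isclose(total_gain, 8.2, abs_tol=1e-9)

# Fraction of the overall gain attributable to each component.
shares = {name: gain / total_gain for name, gain in gains.items()}

for name, gain in gains.items():
    print(f"{name}: +{gain:.1f} ({shares[name]:.0%} of the total)")
print(f"total: +{total_gain:.1f}, matches reported 8.2: {matches_reported_total}")
```

Under this assumption, the RFP structure accounts for roughly 72% of the overall gain, which is consistent with the emphasis placed on recursive feature extraction.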

6. Conclusions

Object detection in UAV aerial imagery poses several challenges, including a high proportion of small objects and large variations in object scale. Many existing models struggle to achieve high detection accuracy while keeping computational resource consumption in check. This paper presents an enhanced YOLOv8 algorithm that integrates a recursive feature pyramid (RFP) structure, leading to the following conclusions:

- Conducting two rounds of feature extraction for multi-scale detection enhances detection accuracy, particularly when small objects are abundant.

- Leveraging fine-grained details effectively mitigates feature degradation during the convolution process, improving the model’s attention to small objects.

- By separating the aspect ratio component in the bounding box regression loss function, the process of regressing predicted bounding boxes is accelerated. Additionally, incorporating angle loss helps to avoid the “wandering” effect in predicted bounding boxes.

The enhanced model improves mAP0.5 by 8.2% and 3.4% on the VisDrone-DET2021 and UAVDT datasets, respectively. However, the two rounds of feature extraction make the network architecture more intricate, which increases computational demands and inference time. The GFLOPs value for YOLOv8s is 28.5, while RPS-YOLO has a GFLOPs value of 37.7, suggesting potential for further optimization in the use of computational resources. Future studies will focus on boosting the model’s detection precision while also enhancing computational efficiency and reducing inference time.
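To put the two GFLOPs figures in proportion, the relative computational overhead can be computed directly. This is a small sketch using only the two values quoted above. The helper name `relative_increase` is hypothetical.

```python
def relative_increase(baseline: float, new: float) -> float:
    """Return the increase from baseline to new as a percentage of baseline."""
    return (new - baseline) / baseline * 100.0


yolov8s_gflops = 28.5   # value quoted in the text for YOLOv8s
rps_yolo_gflops = 37.7  # value quoted in the text for RPS-YOLO

overhead_pct = relative_increase(yolov8s_gflops, rps_yolo_gflops)
print(f"extra compute relative to YOLOv8s: {overhead_pct:.1f}%")
```

The result is an increase of roughly 32% in GFLOPs over the baseline, which is the overhead that future optimization would aim to reduce.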

Author Contributions

Conceptualization, P.L. (Peigang Liu); data curation, C.W.; formal analysis, P.L. (Penghui Lei) and C.W.; funding acquisition, P.L. (Peigang Liu); investigation, C.W.; methodology, P.L. (Penghui Lei); project administration, P.L. (Peigang Liu); resources, P.L. (Peigang Liu); software, P.L. (Penghui Lei); supervision, P.L. (Peigang Liu); validation, C.W.; writing—original draft, P.L. (Penghui Lei); writing—review and editing, P.L. (Penghui Lei) and P.L. (Peigang Liu). All authors have read and agreed to the published version of the manuscript.

Funding

This work was partially funded by the National Key R&D Program of China (grant no. 2019YFF0301800) and the National Natural Science Foundation of China (grant no. 61379106).

Data Availability Statement

The data that support the findings of this study are available in VisDrone-DET2021 at https://doi.org/10.1109/ICCVW54120.2021.00319 and UAVDT at https://doi.org/10.1007/s11263-019-01266-1.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| RPS-YOLO | Recursive Pyramid Structure-based YOLO |
| FPN | Feature Pyramid Network |
| RFP | Recursive Feature Pyramid |
| FPS | Frames Per Second |
| C2f | CSP bottleneck with two convolutions |
| SC | Spatial and Channel |
| SCC2f | Spatial and Channel C2f |
| CAU | Channel Attention Unit |
| SAU | Spatial Attention Unit |
| CIOU | Complete IOU |
| LIOU | Localization IOU |
| IOU | Intersection Over Union |
| mAP | Mean Average Precision |
| UAV | Unmanned Aerial Vehicle |
| SGD | Stochastic Gradient Descent |
| AP | Average Precision |


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).