Abstract

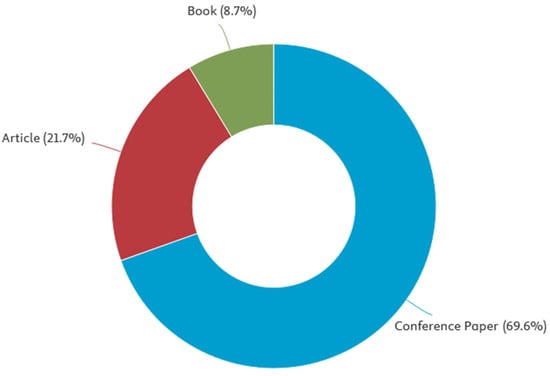

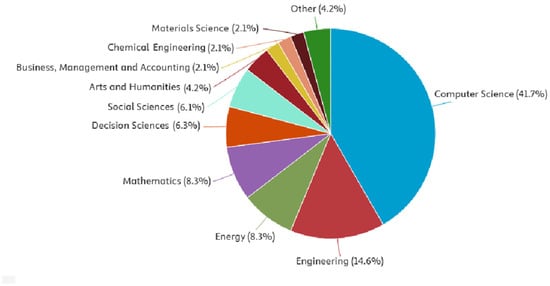

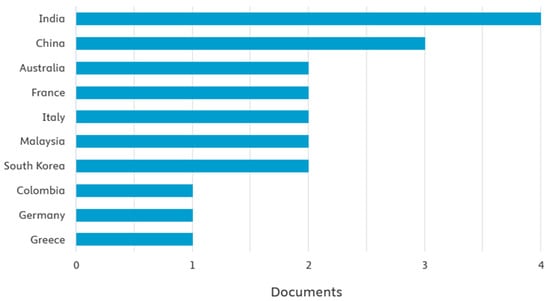

Natural language processing (NLP) has become a fundamental technology for automating the generation, maintenance, and personalization of industrial documentation within the Industry 4.0, 5.0, and emerging Industry 6.0 paradigms. Recent advances in large language models (LLMs), retrieval-augmented generation (RAG), and multimodal architectures have significantly improved the accuracy and contextualization of technical manuals, maintenance reports, and compliance documents. However, persistent challenges such as domain-specific terminology, data scarcity, and the risk of hallucinations highlight the limitations of current approaches in safety-critical manufacturing environments. This review synthesizes state-of-the-art methods, comparing rule-based, neural, and hybrid systems while assessing their effectiveness in addressing industrial requirements for reliability, traceability, and real-time adaptation. Human–AI collaboration and the integration of knowledge graphs are transforming documentation workflows as factories evolve toward cognitive and autonomous systems. The review included 32 articles published between 2018 and 2025. The high percentage of conference papers (69.6%) may indicate a field still in its conceptual phase, which contextualizes the article’s emphasis on proposed architectures rather than their industrial validation. Most research was conducted in computer science, suggesting early stages of technological maturity. The leading countries were China and India, but neither had large publication counts, and no leading researchers or affiliations were observed, suggesting significant research dispersion. However, the most frequently observed SDGs, “industry, innovation and infrastructure” and “good health and well-being”, indicate a clear health context.

1. Introduction

Deep learning (DL) methods have revolutionized natural language processing (NLP), enabling industrial documentation systems to process and generate text with high accuracy and fluency, even in highly specialized areas [,]. Modern DL models, such as transformers and recurrent neural networks (RNNs), learn contextual relationships in text, making them ideal for analyzing and creating complex industrial documentation []. Transformer-based architectures, such as Bidirectional Encoder Representations from Transformers (BERT) and Generative Pre-trained Transformers (GPT), are ideal for tasks such as text summarization, content generation, and question answering, which are crucial for documentation systems []. Pre-trained language models, tuned to specific industrial datasets, support domain-specific vocabulary, ensuring the generated documentation complies with industry standards []. Deep learning-based systems can use sequential models, such as those used in machine translation, to generate documentation in multiple languages, promoting accessibility and global collaboration [,]. Using attention mechanisms, these models identify and highlight critical sections of input data, resulting in the generation of accurate and concise documentation. Integration with optical character recognition (OCR) tools enables DL-based NLP systems to digitize and interpret legacy documents, streamlining the transition to automated workflows []. Reinforcement learning and human feedback loops can enhance a system’s ability to generate consistent and contextually relevant text over time []. These approaches are particularly effective in creating dynamic documentation that updates automatically based on data from sensors, registers, or other sources in real time [,]. 
The scalability of DL techniques already enables industrial organizations to efficiently create massive amounts of documentation, reducing manual effort and increasing overall productivity [,], provided they can afford commercial AI (which is increasingly expensive due to, among other factors, high energy prices).

The genesis of DL in industrial documentation began with the limitations of traditional rule- and template-based systems []. As the complexity and richness of data in industrial operations increased, conventional methods were no longer scalable to meet dynamic documentation needs []. Early machine learning (ML) techniques introduced statistical models for text generation, but they lacked contextual understanding and linguistic depth. The breakthrough came with DL architectures, specifically recurrent neural networks (RNNs) and later transformers, which could effectively model sequential linguistic patterns []. Industrial organizations began exploring these models to automate tasks such as maintenance report generation, compliance documentation, and technical translation [,,]. The introduction of pre-trained models such as Word2Vec, BERT, and GPT marked a turning point, enabling transfer learning in specialized industrial domains []. Fine-tuning these models on domain-specific corpora allowed for more accurate, context-aware generation of safety instructions, operating manuals, and diagnostics []. Integration with IoT and enterprise systems has enabled real-time, data-driven document generation, increasing responsiveness and traceability []. The growing demand for multilingual, adaptive, and accessible content in global industry has further accelerated the implementation of deep learning in documentation []. DL-based NLP represents a fundamental shift in industrial documentation, from manual and reactive processing to intelligent, scalable, and proactive systems []. NLP is becoming an essential technology for generating and managing industrial documentation as manufacturing systems evolve from Industry 4.0 toward Industry 5.0 and the emerging concept of Industry 6.0. In modern industrial environments, machines, sensors, and cyber–physical systems constantly generate large amounts of operational data that must be recorded, interpreted, and communicated. 
Manual documentation processes struggle to keep up with this complexity, creating a need for automated tools that can transform raw technical data into clear and actionable information. NLP enables the automatic generation of reports, maintenance logs, safety instructions, and regulatory compliance records using language models learned from industrial knowledge. In Industry 4.0, NLP’s role is primarily associated with automating text extraction, summarization, and reporting in digitized and connected production systems. With the introduction of a more human-centric philosophy in Industry 5.0, NLP supports better communication between workers and intelligent machines, adapting documentation to the needs and skill levels of users. It also improves operator support through personalized explanations, multilingual interfaces, and more accessible technical communication. In the context of Industry 6.0, NLP is expected to integrate with autonomous and self-optimizing systems, enabling dynamic adaptation of documentation to real-time conditions in distributed industrial networks. These developments underscore the strategic importance of NLP in ensuring the understandability, traceability, and good coordination of complex industrial processes. Analyzing NLP-based documentation in Industry 4.0, 5.0, and 6.0 provides valuable insights into how future manufacturing ecosystems will communicate, collaborate, and maintain operational knowledge.

1.1. Observed Gaps

A significant research gap is the lack of high-quality, domain-specific datasets for training and evaluating large language models (LLMs) in industrial contexts []. Most LLMs are trained on general-purpose text, leading to hallucinations or inaccuracies when applied to technical documentation. Although retrieval-augmented generation (RAG) models strengthen factual grounding, there is little research on optimizing retrieval components for highly structured industrial knowledge bases. Existing models often struggle with multimodal integration, such as combining text instructions with diagrams, graphs, or sensor data []. There is insufficient exploration of real-time document generation, where models dynamically respond to live data streams from industrial systems. Many DL models lack explainability and traceability, which are crucial for security, compliance, and auditability in regulated industries. Little research addresses the generation of multilingual and culturally sensitive documents, which are essential in globalized industrial environments. Human-in-the-loop systems for validating or correcting LLM-generated documentation are under-researched but essential for practical utility []. The literature still lacks robust evaluation frameworks and benchmarks tailored to industrial document generation tasks []. The energy and computational costs of deploying RAG-enhanced LLM systems in resource-constrained industrial environments or at the network edge remain an open challenge [].

1.2. Novelty and Contribution

This review consolidates, for the first time, advances in DL, specifically LLM and RAG architectures, in the crucial field of industrial documentation. It uniquely maps the connections between state-of-the-art NLP technologies and practical documentation needs in Industry 4.0, 5.0, and the emerging Industry 6.0 paradigm []. The study identifies and categorizes real-world applications, demonstrating how AI-generated documentation can improve operational efficiency, security, and regulatory compliance. It contributes a structured analysis of current research limitations, offering a critical perspective on data challenges, explainability, and domain adaptation in industrial NLP []. The inclusion of RAG techniques highlights new opportunities for combining static knowledge with dynamic data in documentation processes. This work proposes a comprehensive set of future research directions based on both technical advances and industrial applicability. The review advances this field by positioning deep learning-based NLP not just as a linguistic tool, but as a strategic enabler for creating intelligent, adaptive industrial ecosystems [,].

1.3. Goal and Research Questions

The main goal of this review is to provide a comprehensive and critical overview of the current state, challenges, and future directions of DL methods in NLP for industrial documentation generation. This includes analyzing the use and effectiveness of advanced architectures such as large language models (LLMs) and retrieval-augmented generation (RAG).

The research questions (RQs) addressed in this article are as follows:

- RQ1: How are deep learning-based NLP methods currently used to generate, manage, and update industrial documentation?

- RQ2: What are the existing research gaps, technological barriers, and untapped opportunities in this interdisciplinary field?

- RQ3: What deep learning methods are currently used in NLP for industrial documentation generation, and how are they being implemented?

- RQ4: To what extent do existing solutions meet requirements such as factual accuracy, regulatory compliance, explainability, and real-time responsiveness?

Additional bibliometric questions concerning the most common publication sources, leading topics, countries, institutions, researchers, and Sustainable Development Goals (SDGs) were included to map the global research landscape. These dimensions reveal where scientific knowledge and innovation are concentrated, helping to identify geographic and institutional factors influencing technological progress. They also allow us to trace how emerging topics (such as NLP or genAI) fit into the broader context of sustainability and the SDGs focused on Industry 4.0/5.0/6.0, demonstrating their societal relevance. Together, these research questions provide a structured framework for understanding not only what is being researched in a given area but also who is developing it and why, offering strategic insights for future research and international collaboration.

2. Materials and Methods

2.1. Dataset

The goal of our bibliometric analysis was to examine both the research landscape and the engineering knowledge and practice surrounding the design, construction, implementation, and operation of DL-based NLP systems for generating industrial documentation. To this end, we used bibliometric methods to analyze scientific publication databases and responses to the RQs formulated above to identify key areas such as the current state-of-the-art, the genesis and evolution of research topics, the origin of publications (institutions, country, and, where possible, funding), and the most influential authors and articles, taking into account their currency. This approach allowed us to obtain a comprehensive picture of current research and industry trends in the analyzed area of digital transformation. The analysis and interpretation of bibliometric data enriches current discussions and conclusions and creates a solid foundation for future research.

2.2. Methods

In this study, we searched four major bibliographic databases: Web of Science (WoS), Scopus, PubMed, and dblp. These databases were selected for their broad coverage and rich citation data, which support in-depth bibliometric analysis of the topic area under investigation (Table 1). We applied various search filters to focus on relevant literature, narrowing the search results to original and review articles in English. After this filtering, each article was manually reviewed to ensure it met the inclusion criteria, which determined the final sample size. Three reviewers participated in the manual review process, and decisions about the inclusion/exclusion of individual articles were made independently. Discrepancies in assessment were resolved by consensus among at least two of the three reviewers. Key features of the dataset were then analyzed, including the most influential authors, research groups/institutions, countries, topic clusters, and emerging trends. This enabled the mapping of the evolution of key terminology and major research developments in the thematic area under analysis. Where possible, temporal trends were tracked to monitor changes in research coverage over time, and publications were grouped into thematic clusters, revealing relationships between different research areas. This process allowed for the identification of the most important themes and subfields within a given research area.

Table 1.

Bibliometric analysis procedure used in the study (own approach).

The study is based on selected elements of the PRISMA 2020 guidelines for bibliographic reviews (PRISMA 2020 Checklist in the Supplementary Materials). The following aspects were considered: rationale (item 3), purpose(s) (item 4), eligibility criteria (item 5), information sources (item 6), search strategy (item 7), selection process (item 8), data collection process (item 9), synthesis methods (item 13a), synthesis results (item 20b), and discussion (item 23a). Tools embedded in the Web of Science (WoS), Scopus, PubMed, and dblp databases were used for bibliometric analysis. This selected review methodology allows for improved categorization by concepts (keywords), research areas, authors/teams, affiliations, countries, documents, and sources.

2.3. Data Selection

In WoS, searches were performed using the “Topic” field (covering title, abstract, author keywords, and Keywords Plus); in Scopus, using the article title, abstract, and keywords; and in PubMed and dblp, using manual keyword sets. Articles were searched in the databases using keywords such as “deep learning,” “natural language processing,” “papers,” and “industry” (Table 2).

Table 2.

Detailed search queries across the four databases.

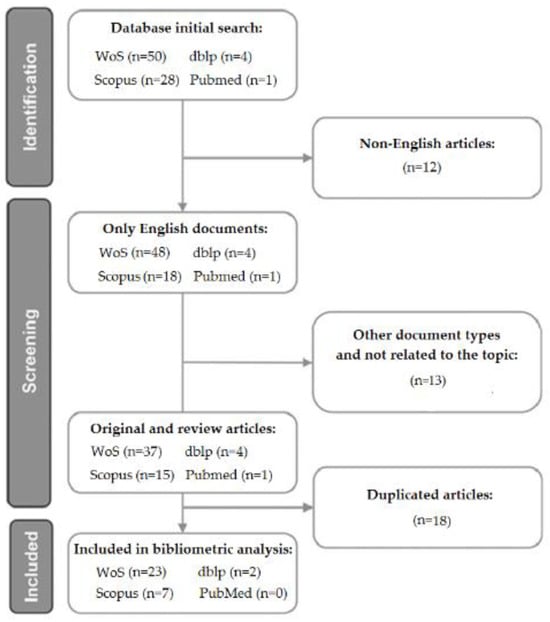

The selected set of publications underwent additional verification through manual re-screening of articles, removing irrelevant items and duplicates, which allowed us to determine the final sample size (Figure 1). We used a combination of methodological quality assessment tools to ensure reliability and validity of the articles.

Figure 1.

PRISMA flow diagram of the review process.

The PRISMA 2020 flow diagram provides a clear, step-by-step overview of how studies were identified, selected, assessed, and ultimately included in the review, supporting methodological accuracy and reproducibility. It begins with the identification phase, which involves collecting records from databases and other sources, capturing the total volume of potentially relevant literature before removing duplicates. In the screening phase, titles and abstracts are filtered based on predefined eligibility criteria, eliminating studies that are clearly unrelated to DL-based NLP for industrial documentation or to digital transformation technologies. In the eligibility phase, full-text articles are thoroughly assessed for methodological quality, relevance to data collection and security, and relevance to topics such as NLP or genAI. Finally, the inclusion phase shows how many articles remain after exclusion, representing the evidence base used for synthesis and analysis. The PRISMA diagram makes the review more transparent by showing not only the volume of literature analyzed but also the rationale for each exclusion step, which strengthens the validity of the conclusions.
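The automated part of the identification and screening phases can be illustrated with a minimal Python sketch. It is not the procedure used in this review: the `Record` fields, the DOI-or-title deduplication key, and the example filter values are assumptions made for illustration, and the manual eligibility assessment by reviewers is not modeled.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """One bibliographic record exported from a database (hypothetical fields)."""
    source: str      # e.g. "WoS", "Scopus", "PubMed", "dblp"
    title: str
    year: int
    language: str
    doi: str = ""


def dedup_key(record):
    """Prefer the DOI; otherwise fall back to a normalized title.

    Assumption: a record exported with a DOI from one database and without
    a DOI from another is NOT matched by this simple key.
    """
    if record.doi:
        return "doi:" + record.doi.strip().lower()
    return "title:" + re.sub(r"[^a-z0-9]+", " ", record.title.lower()).strip()


def remove_duplicates(records):
    """Keep the first record seen for each key (identification phase)."""
    seen, unique = set(), []
    for record in records:
        key = dedup_key(record)
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


def screen(records, first_year=2018, last_year=2025, language="English"):
    """Apply the publication window and language filter (screening phase)."""
    return [
        r for r in records
        if first_year <= r.year <= last_year and r.language == language
    ]


def prisma_counts(records):
    """Count the records remaining after each automated step."""
    unique = remove_duplicates(records)
    screened = screen(unique)
    return {
        "identified": len(records),
        "after_deduplication": len(unique),
        "after_screening": len(screened),
    }
```

Reporting the count after every step, rather than only the final sample size, is what allows a flow diagram to show the rationale for each exclusion.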

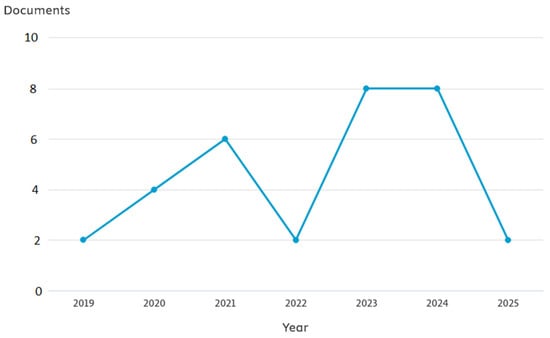

A general summary of the bibliographic analysis results is presented in Table 3 and Figures 2–8. The review included 32 articles published between 2018 and 2025. Articles published before 2018 were excluded because the integration of DL with NLP in document generation for Industry 4.0/5.0/6.0 is a relatively new phenomenon. Significant advances in AI/ML/DL and document generation have only been widely applied to industrial digital transformation processes in the last few years. Including older studies would risk being influenced by outdated methods or technologies that do not reflect current capabilities or trends. Thus, the 2018–2025 timeframe ensures that the review focuses on the most relevant, innovative, and technically feasible approaches.

Table 3.

Summary of results of bibliographic analysis (WoS, Scopus, PubMed, dblp).

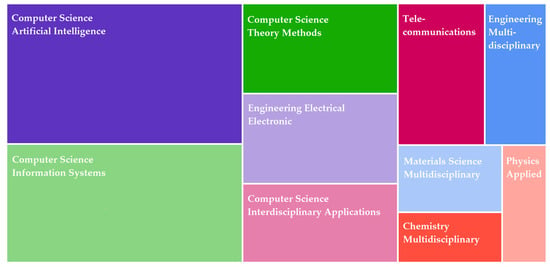

Figure 2.

Publications by categories.

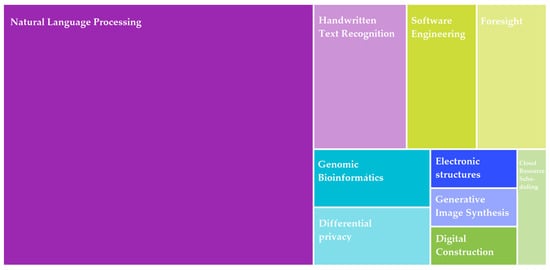

Figure 3.

Publications by micro topics.

Figure 4.

Publications by SDGs.

Figure 5.

Publications by year.

Figure 6.

Publications by type.

Figure 7.

Publications by subject area.

Figure 8.

Publications by country.

These bibliometric findings suggest that the high percentage of conference papers may indicate a field still in its conceptual phase, which contextualizes the article’s emphasis on proposed architectures rather than their industrial validation. Most research was conducted in computer science, suggesting early stages of technological maturity. The leading countries were China and India, but neither had large publication counts, and no leading researchers or affiliations were observed, suggesting significant research dispersion. However, the most frequently observed SDGs, “industry, innovation and infrastructure” and “good health and well-being”, indicate a clear health context.

The final set of 32 publications constitutes a representative sample of the literature across the entire topic area. It reflects the breadth and depth of current research on DL-based NLP technologies in industrial document generation. This number was achieved through a rigorous selection process based on relevance, topical saturation, and scientific impact, ensuring the inclusion of key studies and influential publications. The dataset encompasses a wide range of approaches, methodologies, and applications, ensuring coverage of major research trends and directions. Recurring concepts and findings emerged consistently across all included studies, suggesting comprehensive representation of the field and topical saturation (Figures 5–8). Including highly cited, peer-reviewed publications enhances the credibility and scientific relevance of the review.

Bibliometric analysis is a robust and well-established methodological approach for mapping research trends, identifying thematic concentrations, and assessing the evolution of a field such as NLP in industrial documentation generation within Industry 4.0, 5.0, and 6.0. However, given the exponential growth in publications related to NLP, digital twins, human–machine collaboration, and Industry 4.0/5.0/6.0 over the past three years, the resulting dataset of just 32 articles may seem unexpectedly small. This result can be partially attributed to the nascent and highly specialized nature of the intersection of NLP, industrial documentation, and emerging industrial paradigms, with many studies focusing on broader AI or IoT issues rather than solely on documentation generation. At the same time, some relevant studies were not included due to limitations in search terms, database selection, or narrowly defined inclusion/exclusion criteria. For example, expanding the scope of search terms to include related concepts such as automated technical writing, industrial knowledge extraction, context-aware documentation, or semantic production systems could expand the dataset in the future. Similarly, adding broader databases (e.g., IEEE Xplore, ACM Digital Library) or including gray literature could help capture work that is not explicitly labeled as NLP but is still highly relevant. Although our bibliometric approach remains methodologically rigorous, the relatively small dataset highlights an important limitation: the field is developing, the terminology is inconsistent, and the search strategy may need to be expanded to fully reflect the multidisciplinary nature of the topic. Explicitly considering these factors enhances the credibility and transparency of the study and clarifies that the dataset represents a focused snapshot, not an exhaustive representation of a rapidly evolving field of research.

No affiliations, funding, or authors were dominant.

Our review has several potential limitations that may affect the scope and comprehensiveness of the findings and should be considered when replicating and/or updating the review. Including only English-language sources introduces linguistic bias, potentially excluding relevant studies published in other languages (e.g., Chinese). The literature search was limited to four major academic databases, which may have missed relevant work indexed elsewhere. Excluding gray literature (technical reports, theses, and industry articles) may limit insight into new practices and real-world applications not yet covered by the peer-reviewed sources.

3. Results

DL-based NLP for industrial documentation relies heavily on large language models (LLMs) such as GPT and BERT to understand and generate technical language. These models are often fine-tuned on domain-specific corpora to improve accuracy in tasks such as procedure writing, compliance reporting, and problem solving [,]. Retrieval-augmented generation (RAG) models combine the advantages of LLMs with external knowledge bases, enabling the creation of more accurate and up-to-date documentation. In RAG architectures, the retriever first selects relevant documents from the knowledge base, and the generator then produces context-aware answers or documents []. This approach is particularly valuable in industrial applications where real-time data and historical records must be integrated into the generated documentation. Transformer architectures are fundamental, enabling parallel text processing and attention mechanisms that capture long-range dependencies in industrial language. Optical character recognition (OCR) and computer vision are often used in conjunction with NLP to digitize and process legacy documentation []. Knowledge graphs and structured metadata help ground LLM outputs in factual, machine-readable industrial data, ensuring greater consistency. Cloud-based AI services and edge computing platforms support the deployment of NLP systems directly in production environments []. Together, these technologies enable the creation of scalable, intelligent, and contextual documentation systems that meet the automation and decision support needs of modern industries []. Implementing RAG in industrial documentation processes allows NLP systems to base their outputs on accurate, domain-specific information retrieved from technical databases and equipment records. 
RAG is particularly useful in industrial applications because it reduces hallucinations by ensuring that the generated documentation is directly linked to verified sources such as maintenance logs, sensor histories, and engineering manuals. However, implementing RAG requires highly structured industrial datasets, which often include time-series sensor data, machine configuration files, diagnostic reports, and procedural instructions unique to each production environment. These datasets typically vary significantly in format and quality, making data harmonization and the creation of unified knowledge repositories a significant technical challenge. Industrial datasets also contain sensitive operational information, so RAG processes must incorporate strong privacy, access control, and cybersecurity safeguards. Furthermore, technical documentation in industrial applications must meet stringent regulatory and engineering standards for clarity, accuracy, traceability, and version control. Generated output must adhere to established documentation types (maintenance manuals, compliance reports, operating procedures, and risk assessments) with precise formatting and terminology requirements. RAG-based systems must therefore be carefully tuned using industry taxonomies and domain ontologies to ensure terminological consistency and contextual accuracy. They must also support explainability by linking each generated statement to the source document, which is crucial for operator confidence and regulatory audits. Once these requirements are met, RAG will offer an effective approach to Industry 4.0/5.0/6.0 documentation, but its effectiveness depends on robust data pipelines, domain-specific search mechanisms, and strict adherence to industrial documentation standards.
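The retrieve-then-generate pattern and the source-linking requirement described above can be sketched in a few lines of Python. This is an illustrative simplification under stated assumptions, not an implementation taken from the reviewed studies: retrieval uses bag-of-words cosine similarity as a stand-in for a learned embedding model, the knowledge base is a plain dictionary with hypothetical document IDs, and the generator itself is omitted; the sketch only assembles the grounded prompt that would be passed to an LLM.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase word tokens; a stand-in for a real embedding model."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two term-frequency vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, knowledge_base, top_k=2):
    """Return up to top_k (doc_id, text) pairs with non-zero similarity."""
    q = Counter(tokenize(query))
    scored = [
        (cosine(q, Counter(tokenize(text))), doc_id, text)
        for doc_id, text in knowledge_base.items()
    ]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [(doc_id, text) for score, doc_id, text in scored[:top_k] if score > 0]


def build_grounded_prompt(query, passages):
    """Assemble a prompt that asks the generator to cite source IDs."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in passages)
    return (
        "Answer using only the sources below and cite the source ID "
        "after each statement.\n"
        f"Sources:\n{context}\n"
        f"Task: {query}"
    )


if __name__ == "__main__":
    # Hypothetical knowledge base; the IDs and texts are invented for illustration.
    kb = {
        "MAN-12": "Replace the hydraulic pump filter every 500 operating hours.",
        "LOG-07": "Pump P3 vibration exceeded the alarm threshold on the night shift.",
        "SOP-03": "Wear hearing protection in the stamping hall.",
    }
    query = "hydraulic pump filter replacement interval"
    print(build_grounded_prompt(query, retrieve(query, kb)))
```

Keeping the document ID attached to every retrieved passage is the design choice that makes traceability possible: a reviewer or auditor can check each cited statement against the maintenance log or manual it came from.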

3.1. Traditional Industrial Document Generation

Traditional industrial document generation typically relied on rule-based systems and templates, offering consistency but limited adaptability []. These methods often required domain experts to hand-code linguistic rules, making updates time-consuming and error-prone []. The generated documents were typically rigid, lacking the linguistic variation and contextual nuances inherent in human-generated text. Deep learning methods, on the other hand, have revolutionized this area by enabling data-driven text generation. Pre-trained language models learn complex linguistic patterns from large corpora without manual rule creation. These models support the automatic generation of safety instructions, technical specifications, and maintenance reports with high fluency []. Unlike traditional systems, deep learning (DL) models can adapt to evolving vocabulary and industry jargon through fine-tuning. Hybrid systems are emerging, combining the reliability of rule-based systems with the flexibility of DL for document quality control. Challenges remain in ensuring factual accuracy and regulatory compliance when using neural language models in industry []. As DL evolves, it is transforming industrial documentation, offering scalability, customization, and performance far beyond traditional methods.
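The contrast between template-based generation and a hybrid quality-control step can be made concrete with a short Python sketch. The template wording, the field names, the required patterns, and the list of vague terms are hypothetical choices made for illustration; in a hybrid system the same rule check could be applied to a draft produced by a neural model rather than by the template.

```python
import re
from string import Template

# Hypothetical template of the kind a rule-based system maintains by hand.
MAINTENANCE_TEMPLATE = Template(
    "Machine $machine_id: $component inspected on $date. "
    "Status: $status. Next inspection due in $interval_hours operating hours."
)


def render_report(fields):
    """Template step: raises KeyError if a required field is missing."""
    return MAINTENANCE_TEMPLATE.substitute(fields)


# Hypothetical quality-control rules applied to any draft, including a neural one.
REQUIRED_PATTERNS = {
    "machine identifier": re.compile(r"\bMachine [A-Z]+-\d+\b"),
    "ISO date": re.compile(r"\b\d{4}-\d{2}-\d{2}\b"),
    "inspection interval": re.compile(r"\b\d+ operating hours\b"),
}
FORBIDDEN_TERMS = ("probably", "maybe", "I think")


def check_draft(text):
    """Return a list of rule violations; an empty list means the draft passes."""
    problems = [
        f"missing {name}"
        for name, pattern in REQUIRED_PATTERNS.items()
        if not pattern.search(text)
    ]
    problems += [
        f"vague term: {term}"
        for term in FORBIDDEN_TERMS
        if term.lower() in text.lower()
    ]
    return problems


if __name__ == "__main__":
    report = render_report({
        "machine_id": "CNC-12",
        "component": "spindle bearing",
        "date": "2025-03-14",
        "status": "within tolerance",
        "interval_hours": 250,
    })
    print(report)
    print(check_draft(report))
```

The template guarantees structure but cannot vary its wording, whereas the rule check is independent of how the text was produced, which is why it can serve as a safeguard around a more flexible generator.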

3.2. Document Generation Within Industry 4.0

The Industry 4.0 paradigm emphasizes automation, data exchange, and intelligent systems, requiring advanced methods for generating and managing industrial documentation []. Traditional documentation processes struggle to keep pace with the dynamic, interconnected environments of smart factories. DL approaches to NLP provide scalable solutions for automatically generating technical manuals, compliance reports, and system logs. These models can process real-time data from Internet of Things (IoT) devices to generate contextual, up-to-date documentation on machine performance or maintenance needs []. Transformer-based architectures (GPT, BERT) enable the understanding and generation of complex industrial language with minimal human intervention. Integration with industrial control systems enables DL models to generate documentation that addresses specific events or errors. NLP systems trained on domain-specific corpora can support multilingual documentation, which is crucial in globalized production environments. AI-powered document generation also supports predictive maintenance by generating alerts and repair instructions before failures occur []. Despite their potential, these systems must undergo rigorous validation to meet safety, regulatory, and quality assurance standards in the context of Industry 4.0 []. As DL evolves, it is starting to play a key role in transforming industrial documentation from static records into intelligent, adaptive communication tools.
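Event-driven documentation of the kind described above can be illustrated with a minimal Python sketch that turns sensor readings into maintenance-log entries. The `Reading` fields, the alarm limits, and the log format are assumptions made for illustration; in a DL-based pipeline, entries like these would more plausibly serve as the structured facts handed to a language model than as the final text.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class Reading:
    """One sensor sample (field names are illustrative)."""
    machine_id: str
    sensor: str
    value: float
    unit: str
    timestamp: datetime


# Hypothetical alarm limits; real limits would come from equipment specifications.
LIMITS = {"temperature": 85.0, "vibration": 7.1}


def log_entries(readings, limits=LIMITS):
    """Yield one maintenance-log line for each reading above its limit."""
    for r in readings:
        limit = limits.get(r.sensor)
        if limit is not None and r.value > limit:
            yield (
                f"{r.timestamp.isoformat()} | {r.machine_id} | "
                f"{r.sensor} {r.value:.1f} {r.unit} exceeds limit "
                f"{limit:.1f} {r.unit}; inspection recommended."
            )


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    stream = [
        Reading("PRESS-4", "temperature", 91.24, "degC", now),
        Reading("PRESS-4", "vibration", 3.0, "mm/s", now),
    ]
    for entry in log_entries(stream):
        print(entry)
```

Writing the function as a generator means entries are produced one at a time as readings arrive, which matches the streaming character of IoT data.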

3.3. Document Generation Within Industry 5.0

Industry 5.0 emphasizes human-centric, sustainable (including environmentally friendly) and change-resilient production, which requires more intelligent and adaptive documentation systems. DL-based NLP enables the creation of personalized documentation that takes into account human–machine collaboration and operator preferences []. Unlike Industry 4.0, where automation was the primary goal, Industry 5.0 aims to empower humans, requiring more intuitive and accessible documentation []. NLP models in Industry 5.0 must generate documents that are not only accurate but also understandable and user-friendly to support decision-making. DL techniques enable real-time document adaptation, reflecting changing production conditions, user roles, and sustainability goals []. Context-aware NLP models can adapt technical content to the needs of different users, from engineers to operators, increasing safety and efficiency. Multimodal integration allows for the combination of textual documentation with visual or audio instructions, improving accessibility in human-centric environments. Industrial document generation also benefits from AI-powered ethical design, ensuring transparency and accountability in documentation processes []. DL enhances collaboration by generating documentation that bridges communication between humans and AI-powered systems. In Industry 5.0, DL-based NLP transforms industrial documentation into an intelligent, adaptive, and human-centric component of the manufacturing ecosystem.

3.4. Documents Generation Within Industry 6.0

Industry 6.0 envisions hyperconnected, autonomous, and AI-integrated ecosystems in which documentation must evolve to keep pace with real-time intelligence. DL-based NLP plays a key role in autonomously generating, updating, and interpreting documentation in decentralized, intelligent systems []. Industrial documentation in Industry 6.0 will transition from static text to dynamic, self-adaptive knowledge interfaces powered by AI understanding, although these interfaces still require development [,]. NLP models will generate documents that communicate not only operational data but also ethical, environmental, and self-regulatory machine insights [,]. As human oversight increases, NLP systems must ensure that the generated documents explain complex AI decisions in a transparent and accessible manner [,]. Industrial digital twins (DTs) will leverage DL-based NLP to narrate system behavior, anomalies, and optimization paths in natural language in real time. Multilingual, context-aware document generation will become essential in globally distributed, AI-coordinated industrial networks. In Industry 6.0, documentation will be continuous and conversational, generated on demand via human–AI interaction interfaces such as voice and augmented reality (AR). This will enable documents to serve not only as records but also as collaborative agents, capable of proactive reasoning and knowledge sharing. DL-based NLP in Industry 6.0 will redefine industrial documentation as an intelligent, autonomous layer within cyber–physical–human systems.

The transition from Industry 4.0 to Industry 5.0 and towards the emerging vision of Industry 6.0 provides a valuable contextual framework for understanding the evolving role of NLP in generating industrial documentation. Industry 4.0 ushered in the digital transformation of manufacturing, in which cyber–physical systems, IoT devices, and automation generated massive streams of structured and unstructured data, requiring intelligent documentation tools. In this phase, NLP began supporting tasks such as automated report generation, maintenance records, and real-time operational summaries based on sensor data and machine events. The transition to Industry 5.0 emphasizes human-centric collaboration, prompting NLP systems to adapt to create more intuitive, personalized, and understandable industrial documentation for operators and managers. In this context, NLP helps bridge the gap between workers and increasingly autonomous machines by generating natural-language insights, safety guidelines, and adaptive instructions. Industry 5.0 also introduces ethical and sustainability considerations, motivating NLP research to support transparent and responsible documentation practices that comply with regulatory and environmental requirements. The conceptual direction of Industry 6.0 extends these advances by integrating ubiquitous intelligence, cognitive automation, and decentralized AI ecosystems. In this context, NLP can evolve into multimodal, context-aware systems capable of generating documentation that dynamically adapts to operational states in globally connected industrial networks. NLP-generated documentation in Industry 6.0 aims to support proactive decision-making, self-optimizing systems, and interoperability across domains, rather than simply recording past actions. 
This advancement positions NLP not as a static tool but as a transformative agent, evolving alongside the trajectory of industrial innovation toward increasingly intelligent, human-centric, and autonomous production ecosystems.

Despite significant progress, current NLP systems for industrial documentation often struggle to balance linguistic fluency with the rigorous factual accuracy required in safety-critical environments. Many transformer-based models used in Industry 4.0/5.0/6.0 contexts still tend to hallucinate, exposing a gap between general-purpose training data and industry-specific knowledge. While domain tuning improves handling of technical terminology, it also increases susceptibility to overfitting, limiting the model’s ability to generalize to different machine variants or process updates. Heavy reliance on proprietary datasets in industrial environments prevents broad benchmarking and hinders the measurement of real progress against competing NLP techniques. Current evaluation metrics, such as BLEU or ROUGE, insufficiently capture maintainability-critical dimensions such as procedural correctness or compliance with ISO/IEC standards. Even advanced architectures with enhanced data mining require extensive data curation, exposing the human resource bottleneck behind seemingly automated documentation processes. Human-in-the-loop (HITL) validation remains essential because fully autonomous systems often misinterpret sensor logs or error patterns, demonstrating that AI-based documentation has not yet achieved true industrial robustness. Multimodal NLP solutions promise improved integration of diagrams and schematics, but their accuracy is severely constrained by the scarcity of annotated industrial images. Integrating NLP into Industry 5.0 processes poses management and accountability challenges, especially when models influence human decisions through automatically generated instructions. Although NLP can accelerate documentation cycles, its implementation in operational factories highlights the constant tension between the speed of innovation and the high demands of safety, traceability, and regulatory compliance.
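The limitation of overlap-based metrics noted above can be illustrated with a minimal sketch. It assumes a simplified ROUGE-1-style unigram F1 (not the official ROUGE implementation) and a hypothetical `steps_in_order` check; the maintenance steps are invented for illustration only.

```python
from collections import Counter


def unigram_f1(reference: str, candidate: str) -> float:
    """ROUGE-1-style F1: overlap of word counts, ignoring word order."""
    ref = Counter(reference.lower().split())
    cand = Counter(candidate.lower().split())
    overlap = sum((ref & cand).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


def steps_in_order(reference_steps: list[str], candidate_steps: list[str]) -> bool:
    """Hypothetical procedural check: same steps in the same order."""
    return reference_steps == candidate_steps


# Invented example procedure (assumption, not taken from any real manual).
reference_steps = ["isolate power supply", "remove guard cover", "replace drive belt"]
# Same words, but the guard is removed before the power is isolated.
candidate_steps = ["remove guard cover", "isolate power supply", "replace drive belt"]

score = unigram_f1(" ".join(reference_steps), " ".join(candidate_steps))
ordered = steps_in_order(reference_steps, candidate_steps)
print(f"unigram F1 = {score:.2f}, steps in order = {ordered}")
# prints: unigram F1 = 1.00, steps in order = False
```

The candidate receives a perfect overlap score even though its step order is unsafe, which is the kind of procedural error that a domain-specific metric would need to detect.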

3.5. Cybersecurity of Automatically Generated Industrial Documents

Cybersecurity threats to industrial documents generated by DL-based NLP include the following:

- Threat vectors:

  - Injection of malicious data into training data or prompt inputs;

  - Unauthorized access to generated content (IP theft, industrial espionage);

  - Misleading or falsified generated documentation.

- Attack surfaces:

  - Prompt injection or poisoning of LLM data;

  - Model inversion attacks;

  - Data leakage from fine-tuned models [,,].

Fundamental solutions include leveraging blockchain technology for secure documentation in the following use cases:

- Immutable storage of generated documents;

- Tamper-proof version control;

- Audit trail for document revisions;

- Smart contracts for securely running document workflows.

Other technologies include:

- InterPlanetary File System (IPFS) for decentralized storage;

- Solutions for Industrial Smart Contracts.

The integration framework defines how blockchain and DL NLP interact:

- Generated document hash stored on-chain;

- Metadata (author, timestamp, revision ID) stored in a ledger;

- Verification mechanisms via smart contracts or zero-knowledge proofs;

- Federated/decentralized AI inference for enhanced security [].
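The first three elements of this framework (document hash, metadata record, and verification) can be sketched as follows. This is a minimal illustration under stated assumptions: `DocumentLedger` is a hypothetical in-memory stand-in for an on-chain ledger, not a client for any real blockchain, and the document texts, author name, and timestamps are invented.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class LedgerEntry:
    doc_hash: str         # SHA-256 of the generated document
    author: str
    timestamp: str        # ISO 8601 string supplied by the caller
    revision_id: int
    prev_entry_hash: str  # links entries so edits to history are detectable


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def entry_hash(entry: LedgerEntry) -> str:
    # Canonical JSON so the same entry always hashes identically.
    return sha256_hex(json.dumps(asdict(entry), sort_keys=True).encode("utf-8"))


class DocumentLedger:
    """Hypothetical in-memory stand-in for an on-chain ledger."""

    def __init__(self) -> None:
        self.entries: list[LedgerEntry] = []

    def register(self, document: str, author: str, timestamp: str) -> LedgerEntry:
        prev = entry_hash(self.entries[-1]) if self.entries else "0" * 64
        entry = LedgerEntry(
            doc_hash=sha256_hex(document.encode("utf-8")),
            author=author,
            timestamp=timestamp,
            revision_id=len(self.entries) + 1,
            prev_entry_hash=prev,
        )
        self.entries.append(entry)
        return entry

    def verify_document(self, document: str, revision_id: int) -> bool:
        """True if the document matches the hash recorded for that revision."""
        entry = self.entries[revision_id - 1]
        return entry.doc_hash == sha256_hex(document.encode("utf-8"))

    def verify_chain(self) -> bool:
        """True if every entry still points at the hash of its predecessor."""
        prev = "0" * 64
        for entry in self.entries:
            if entry.prev_entry_hash != prev:
                return False
            prev = entry_hash(entry)
        return True


ledger = DocumentLedger()
ledger.register("Torque spindle bolts to 45 Nm.", "nlp-service", "2025-01-01T08:00:00Z")
ledger.register("Torque spindle bolts to 50 Nm.", "nlp-service", "2025-01-02T08:00:00Z")
print(ledger.verify_document("Torque spindle bolts to 50 Nm.", 2))  # True
print(ledger.verify_document("Torque spindle bolts to 5 Nm.", 2))   # False
print(ledger.verify_chain())                                        # True
```

Only the hash and metadata are stored, so the document text itself can remain off-chain; a real deployment would replace the in-memory list with a smart contract or ledger service.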

4. Discussion

Previous publications on DL NLP for generating industrial documentation are insufficient and focused on specific applications, such as patent searches. There is a lack of industrial systems focused on documentation for technologists/engineers, scalable for both large production lines and small, unattended production lines. The entire context of generating interactive industrial documentation within the cyber–physical systems of Industry 5.0 and 6.0 remains to be further developed. These systems would significantly facilitate human–machine communication (both in the form of AR and brain–computer interface). As part of their own research, the authors are developing automatic generation of extracts from technical documentation at various levels of sophistication and detail, i.e., dedicated to different audiences. It appears that this approach could effectively establish a foundation for creating more advanced systems for generating industrial documentation based on DL NLP, especially RAG. Dynamic analysis of document structure needs to be developed, especially in the area of multimodal and multilingual documents.

The following sections contain the answers to research questions RQ1–RQ4:

- RQ1: How are deep learning-based NLP methods currently used to generate, manage, and update industrial documentation? Results, Section 3.2 and Section 3.3.

- RQ2: What are the existing research gaps, technological barriers, and untapped opportunities in this interdisciplinary field? Results, Section 3.4, Discussion, Section 4.1 and Section 4.6.

- RQ3: What deep learning methods are currently used in NLP for industrial documentation generation, and how are they being implemented? Discussion, Section 4.1 and Section 4.2.

- RQ4: To what extent do existing solutions meet requirements such as factual accuracy, regulatory compliance, explainability, and real-time responsiveness? Discussion, Section 4.5.

Table 4 below provides a comparative overview of NLP approaches for industrial documentation generation.

Table 4.

Comparative overview of NLP methods/technologies for industrial documentation generation.

4.1. Limitations

Current studies often focus on model performance in controlled environments and lack validation in real-world industrial settings with noisy, incomplete, or evolving data. Many approaches using LLMs or RAG are not fine-tuned for domain-specific terminology, leading to reduced accuracy and relevance in technical document generation. Studies frequently overlook user-centric design, failing to assess how well generated documents support end-users such as technicians, operators, or inspectors. There is a lack of longitudinal evaluations, so it remains unclear how these systems perform or degrade over time in production environments. Few studies systematically address security and data privacy, which are critical in sensitive industrial domains where documentation may contain proprietary information. Most research assumes the availability of large, labeled datasets, but data scarcity and confidentiality issues often make such datasets unrealistic in industry. RAG-based systems are rarely tested on domain-specific retrieval mechanisms, and current retrievers may not effectively extract nuanced or structured technical content. Studies commonly neglect regulatory and compliance constraints, which limits the deployability of NLP systems in tightly regulated industries like aerospace or pharmaceuticals. Evaluation metrics used in current research are often generic (e.g., BLEU, ROUGE) and may not capture the functional quality or safety-critical relevance of industrial documentation. There is limited research into human–AI collaboration workflows, meaning it is unclear how experts and AI systems can best co-create or validate industrial documents [].

Several limitations to the use of RAG in industrial documentation can be addressed by analyzing specific data and technical gaps that still hinder effective implementation. A significant problem is that many industrial datasets lack comprehensive metadata, making it difficult for retrieval systems to properly index and contextualize the information needed for accurate document generation. Furthermore, historical documentation is often unstructured, incomplete, or inconsistent, limiting the ability of RAG models to learn precise terminology and procedural relationships. Important data categories (rare failures, expert decision justifications, and tacit operational knowledge) are often missing, leaving significant blind spots in the generated content. From a task perspective, automated reasoning based on complex engineering logic remains poorly addressed, meaning RAG systems struggle to generate or validate documentation involving multi-step procedures or safety-critical dependencies. Similarly, real-time retrieval from rapidly changing sensor data streams remains a technical challenge, limiting RAG’s usefulness in dynamic industrial environments. Technical challenges also arise from integrating heterogeneous data sources across legacy machines, modern IoT devices, and vendor-specific systems, which complicates accurate retrieval. Latency and computational constraints further limit deployment at the industrial edge, where rapid on-device document generation is desirable but challenging. Another unresolved issue is the lack of robust benchmarks tailored to industrial documentation tasks, making it difficult to measure progress or compare system performance. These limitations demonstrate that effective RAG in Industry 4.0/5.0/6.0 requires better data standardization, improved inference capabilities, more robust retrieval architectures, and domain-specific evaluation frameworks.

A classic RAG implementation stores chunked technical instructions as embeddings in a vector database, allowing the generator model to retrieve only the most relevant procedural segments before generating documentation. Hybrid RAG architectures that combine keyword and vector search improve the retrieval of industrial terminology that is not well represented in general-purpose embedding models. In RAG pipelines with retrieval compression, retrieved documents are summarized by an intermediate model before final generation, reducing context window overhead and latency in large factories. Memory-augmented RAG systems maintain a short-term cache of recently retrieved industrial logs, enabling rapid and iterative updates to evolving documentation, such as change-based maintenance reports. A graph-based RAG system integrates knowledge graphs with embeddings, ensuring that retrieval respects hierarchical industrial taxonomies such as IEC 61360. Some RAG implementations use schema-aware chunking, where chunks are split based on metadata from computer-aided design (CAD) table structures or ISO standard sections, rather than arbitrary token limits. Multimodal RAG systems support both text and image retrieval, embedding schematics, wiring diagrams, and sensor graphs alongside text content. Reranking architectures use cross-encoder models to reorder the top-k retrieved items, providing the generator with the most semantically accurate industrial content. A context-aware RAG system uses metadata such as machine type, error code, or operator role to dynamically filter retrieval results before generating documentation. Distributed RAG clusters use sharded vector stores to support retrieval from petabyte-scale industrial knowledge bases, enabling real-time documentation generation across multinational plants. Some implementations add security layers to RAG, where a rule-based validator checks retrieved content for compliance with security standards before allowing the generator to use it. Temporal RAG architectures integrate timestamps, ensuring that retrieval targets the latest sensor logs or event reports, which is particularly useful for predictive maintenance documentation. Retrieval-driven editing systems combine RAG with an editing model that highlights differences between current and previous versions of documentation to support controlled updates. In a fine-tuned industrial RAG, both the retriever and the generator are adapted to the domain using proprietary instructions, improving terminology precision and reducing hallucinations. Agentic RAG frameworks coordinate multiple retrieval and reasoning steps, such as schema retrieval, rule validation, and procedural text assembly, before generating the final industrial documentation.
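Two of the variants described above, combining keyword and vector scores and filtering by metadata such as machine type, can be sketched in a few lines. This is a simplified illustration, not a production retriever: character trigram counts stand in for a learned embedding model, and `Chunk`, `hybrid_retrieve`, the weighting parameter `alpha`, and the sample chunks are all hypothetical.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Chunk:
    text: str
    machine_type: str  # metadata used for context-aware filtering


def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def trigram_vector(text: str) -> Counter:
    """Toy stand-in for a dense embedding: character trigram counts."""
    t = text.lower()
    return Counter(t[i:i + 3] for i in range(len(t) - 2))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def keyword_score(query: str, text: str) -> float:
    """Fraction of query terms that occur verbatim in the chunk."""
    q_terms = tokens(query)
    return len(q_terms & tokens(text)) / len(q_terms) if q_terms else 0.0


def hybrid_retrieve(query: str, chunks: list[Chunk], machine_type: str,
                    k: int = 2, alpha: float = 0.5) -> list[Chunk]:
    """Filter by metadata, then rank by alpha * vector + (1 - alpha) * keyword."""
    q_vec = trigram_vector(query)
    scored = []
    for chunk in chunks:
        if chunk.machine_type != machine_type:
            continue  # context-aware filter: skip other machine types
        vec = cosine(q_vec, trigram_vector(chunk.text))
        kw = keyword_score(query, chunk.text)
        scored.append((alpha * vec + (1 - alpha) * kw, chunk))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [chunk for _, chunk in scored[:k]]


# Invented sample chunks (assumption, for illustration only).
chunks = [
    Chunk("Error E42: spindle overheating, check coolant flow.", "cnc_mill"),
    Chunk("Lubricate the conveyor chain every 200 hours.", "conveyor"),
    Chunk("Replace the coolant filter during scheduled service.", "cnc_mill"),
]
results = hybrid_retrieve("E42 spindle overheating", chunks, machine_type="cnc_mill", k=1)
print(results[0].text)
```

The keyword term lets an exact error code such as `E42` dominate the ranking even when an embedding model would not represent it well, while the metadata filter keeps chunks for other machine types out of the generator's context.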

4.2. Technological Implications

Implementing DL in NLP for industrial documentation improves efficiency, scalability, and consistency, but requires careful management and human oversight. Broader use of DL in NLP significantly accelerates the automation of industrial documentation generation, reducing the need for manual content creation. Advanced models, such as transformers, can understand and replicate domain-specific language, enabling the creation of technical manuals, reports, and compliance documents with high accuracy. DL enables dynamic documentation systems that update in real time based on sensor data or operational changes, improving responsiveness and reducing human intervention. These models can extract, summarize, and format complex technical information from multiple sources, streamlining knowledge consolidation and reuse. Improved multilingual capabilities allow enterprises to generate consistent and accurate documentation in global markets, increasing availability and regulatory compliance. Integration with DTs and IoT systems enables DL-based documentation to reflect real-time machine health and maintenance needs. This technology supports predictive document generation by anticipating user or operator needs based on historical usage patterns and system behavior []. However, this increased automation raises concerns about explainability (eXplainable AI, XAI) [,], version control, and traceability in safety-critical industries such as aerospace and pharmaceuticals, as well as about the vulnerability of these systems to cyberattacks in the context of NIS2 [] and ISO 20001 []. Reliance on large, annotated datasets also poses challenges related to data privacy, domain adaptation, and the cost of high-quality training data [,].

The technological implications of NLP-based industrial documentation become more compelling when embedded in real-world applications. For example, Siemens’ implementation of NLP-based maintenance assistants in manufacturing plants demonstrates how automatic text generation can reduce the burden of manual reporting and improve diagnostic accuracy by drawing insights from sensor records and operator notes. Similarly, ABB’s integration of NLP tools with robotic cell supervision systems demonstrates how natural language alerts can improve operator understanding of machine conditions, supporting safer and more efficient operations. Economically, organizations are already seeing tangible benefits: General Electric’s use of natural language processing to automate turbine maintenance reporting reduced documentation time by up to 40%, supporting the claim that NLP reduces operating costs and increases productivity in high-value industrial environments. These examples help substantiate the argument that automated documentation is not just a theoretical benefit but a practical driver of cost-effectiveness. Socially, integrating NLP into industrial environments supports evolving employee expectations in Industry 5.0 by improving human–machine communication. For example, Volkswagen’s voice-controlled assistance systems on the factory floor allow technicians to access documentation hands-free, reducing cognitive load and fostering a more inclusive work environment, especially for workers with less technical experience. From an ethical perspective, specific threats also deserve attention: cases such as misleading automated compliance reports generated by poorly trained NLP models illustrate how algorithmic bias or flawed language models can lead to incorrect or incomplete safety documentation. This highlights the ethical necessity of transparency, robust validation, and human oversight, grounded in practical experience. These examples deepen the discussion, demonstrating that NLP is already shaping industrial practices and confirming claims about its transformative potential while also illustrating the importance of responsible implementation.

4.3. Economic Implications

Implementing DL with NLP for industrial documentation significantly reduces labor costs by automating time-consuming writing and editing tasks. Companies can shift resources from repetitive documentation work to more valuable analytical and strategic roles, improving overall productivity. Faster document generation shortens product development cycles, accelerating time-to-market and creating a competitive advantage. Consistent and accurate documentation reduces costly errors in operations, maintenance, and compliance, minimizing regulatory fines and equipment downtime. The initial investment in DL infrastructure and training can be high, but the long-term operational savings and productivity gains often outweigh these costs. Small and medium-sized enterprises (SMEs) can face barriers to entry due to the need for technical expertise and high-quality training data in specific domains. The scalability of automated documentation allows companies to expand more efficiently into new markets by generating multilingual or localized documents with minimal additional costs. Higher-quality documentation can increase customer satisfaction and reduce support costs, especially for technical or industrial products [,,,,,,,,,,,,]. Industries requiring extensive documentation, such as manufacturing and energy, can achieve significant cost reductions through the continuous integration of NLP-based solutions. However, the displacement of technical writing positions may create temporary economic challenges, requiring investments in workforce retraining and adaptation.

4.4. Social Implications

In many communities, the broader implementation of AI in industry (offering both products and digital services) is raising social concerns, including fears of job loss. Broader use of NLP in industrial documentation could reduce the need for traditional technical writing roles, leading to employee displacement and requiring social adaptation. Conversely, it could democratize access to complex industrial knowledge, for example, by translating content containing technical jargon into more understandable formats for a wider audience. Increased automation of documentation tasks could improve workplace safety by providing clearer, more consistent instructions and reducing human error. This technology could deepen the digital divide, as workers without access to digital skills or retraining opportunities could be left behind. By supporting the generation of multilingual documents, DL could foster greater inclusivity and equality in global workplaces. The quality and transparency of automatically generated documents could affect public trust in industries that impact health, safety, or the environment [,]. Automation could transform society’s perception of documentation from a static artifact to a dynamic, intelligent tool integrated into daily operations. Increased surveillance or monitoring of employees could occur if deep learning systems are used to track and adapt documentation based on user behavior. Social norms regarding authorship and intellectual contribution could shift as AI increasingly co-authors or fully generates industrial texts. Overall, these technologies have the potential to improve human understanding and safety, but they also require careful management of ethical, labor, and accessibility issues.

4.5. Legal and Ethical Implications

The use of DL NLP in industrial documentation raises legal concerns about liability when AI-generated content contains errors that lead to accidents or regulatory non-compliance. Ensuring intellectual property rights becomes complicated because it can be unclear who owns content generated by AI systems—developers, companies, or end users. Ethical concerns about transparency arise, particularly in safety-critical industries, where stakeholders need to know whether documents were created by humans or machines (and by whose machines). Inadequate disclosure of AI involvement can lead to legal liability if users rely on generated content believing it was verified by humans. Bias in training data can lead to discriminatory or misleading documentation, creating legal and reputational risks for organizations. Data privacy regulations, such as the GDPR, can be violated if DL systems are trained on sensitive or proprietary information without proper consent. Maintaining version control and traceability in AI-generated documents is ethically important to ensure document auditability and trustworthiness. There are concerns about the erosion of professional responsibility, as AI systems may limit human oversight of documentation processes. Ethical implementation requires a clear governance framework to ensure fairness, security, and understandability in automated documentation systems [,]. As AI regulations tighten globally, industries using DL for documentation must ensure compliance to avoid sanctions and maintain ethical integrity.

4.6. Directions for Further Research

Future research should focus on developing and refining LLM and RAG models using domain-specific corpora, including technical manuals, maintenance logs, and compliance documents. This would improve contextual accuracy and reduce hallucinations in the generated content. There is a need to design more efficient search mechanisms tailored to structured and semi-structured industrial databases. This includes incorporating ontologies, knowledge graphs, and ERP data to improve search precision in RAG processes. Investigating models that can generate or update documents in real time, based on data from sensors, DTs, or live production logs, would increase relevance and responsiveness in Industry 4.0 and 5.0 environments. Research should address the explainability of generated documentation, particularly in regulated or safety-critical industries. Techniques such as attention visualization, lineage tracking, and counterfactual reasoning can make results more understandable to users. There is an urgent need to develop standard benchmarks and evaluation metrics specifically for industrial document generation. Metrics should go beyond linguistic quality to assess functional correctness, completeness, and regulatory compliance. Future systems should combine text generation with diagrams, CAD models, video, or voice interfaces. Furthermore, supporting multilingual documentation generation is crucial for global industries and a diverse operator base []. Designing interfaces and workflows in which domain experts can interact with NLP models to validate, edit, and generate control documents will improve usability and security []. Further research should focus on privacy-preserving LLMs and the ethical implications of document automation, especially for proprietary or sensitive industrial information []. 
Developing lightweight or compressed versions of LLM and RAG models for edge devices and low-resource industrial environments is essential for their widespread implementation, especially in production plants lacking high-performance computing infrastructure []. Exploring how models trained in one industry sector (e.g., automotive) can be adapted to others (e.g., aerospace or energy) through transfer learning can reduce the time and cost of implementing NLP systems across industries [,] (Table 5).

Table 5.

Research agenda plan for NLP in industrial documentation.

5. Conclusions

DL has significantly advanced natural language processing in industrial documentation, enabling the creation of more intelligent, scalable, and context-aware systems. LLM and RAG architectures have demonstrated significant potential for generating accurate and adaptive technical content. Despite these advances, challenges such as domain specificity, data scarcity, explainability, and real-time implementation remain key barriers. Current research often lacks robust evaluation frameworks and fails to address key industrial requirements such as compatibility, traceability, and multilingual support. Integrating domain knowledge, structured data, and human-assisted methods is essential to improving the reliability and usability of AI-generated documentation. Future research must focus on building specialized datasets, low-cost implementation strategies, and ethical, transparent NLP systems adapted to industrial environments. As this field evolves, DL-based NLP is poised to transform industrial documentation into a dynamic, intelligent layer within human–cyber–physical ecosystems.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/app152312662/s1: Partial PRISMA 2020 Checklist [].

Author Contributions

Conceptualization, I.R. and D.M.; methodology, I.R. and D.M.; software, I.R. and D.M.; validation, I.R. and D.M.; formal analysis, I.R. and D.M.; investigation, I.R., O.M., M.K. and D.M.; resources, I.R. and D.M.; data curation, I.R., O.M., M.K. and D.M.; writing—original draft preparation, I.R., O.M., M.K. and D.M.; writing—review and editing, I.R., O.M., M.K. and D.M.; visualization, I.R. and D.M.; supervision, I.R.; project administration, I.R.; funding acquisition, I.R. All authors have read and agreed to the published version of the manuscript.

Funding

The work presented in the paper has been financed under a grant to maintain the research potential of Kazimierz Wielki University.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The dataset is available from the authors on request.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| AI | Artificial intelligence |
| DL | Deep learning |
| GDPR | General Data Protection Regulation |
| IoT | Internet of Things |
| ML | Machine learning |
| NLP | Natural language processing |
| SDG | Sustainable development goal |
| SME | Small and medium enterprise |
| XAI | eXplainable artificial intelligence |

References

- Rojek, I.; Dostatni, E.; Mikołajewski, D.; Pawłowski, L.; Wegrzyn-Wolska, K. Modern approach to sustainable production in the context of Industry 4.0. Bull. Pol. Acad. Sci. Tech. Sci. 2022, 70, e143828.

- Corrêa Cordeiro, F.; Ferreira da Silva, P.; Tessarollo, A.; Freitas, C.; de Souza, E.; da Silva Magalhães Gomes, D.; Rocha Souza, R.; Codeço Coelho, F. Petro NLP: Resources for natural language processing and information extraction for the oil and gas industry. Comput. Geosci. 2024, 193, 105714.

- He, S.; Lu, Y. A Modularized Architecture of Multi-Branch Convolutional Neural Network for Image Captioning. Electronics 2019, 8, 1417.

- Kim, H.-S.; Kang, J.-W.; Choi, S.-Y. ChatGPT vs. Human Journalists: Analyzing News Summaries Through BERT Score and Moderation Standards. Electronics 2025, 14, 2115.

- Mikołajewska, E.; Masiak, J. Deep Learning Approaches to Natural Language Processing for Digital Twins of Patients in Psychiatry and Neurological Rehabilitation. Electronics 2025, 14, 2024.

- Kayabas, A.; Topcu, A.E.; Alzoubi, Y.I.; Yıldız, M. A Deep Learning Approach to Classify AI-Generated and Human-Written Texts. Appl. Sci. 2025, 15, 5541.

- Pan, K.; Zhang, X.; Chen, L. Research on the Training and Application Methods of a Lightweight Agricultural Domain-Specific Large Language Model Supporting Mandarin Chinese and Uyghur. Appl. Sci. 2024, 14, 5764.

- Orji, E.Z.; Haydar, A.; Erşan, İ.; Mwambe, O.O. Advancing OCR Accuracy in Image-to-LaTeX Conversion—A Critical and Creative Exploration. Appl. Sci. 2023, 13, 12503.

- Feichter, C.; Schlippe, T. Investigating Models for the Transcription of Mathematical Formulas in Images. Appl. Sci. 2024, 14, 1140.

- Lamaakal, I.; Maleh, Y.; El Makkaoui, K.; Ouahbi, I.; Pławiak, P.; Alfarraj, O.; Almousa, M.; Abd El-Latif, A.A. Tiny Language Models for Automation and Control: Overview, Potential Applications, and Future Research Directions. Sensors 2025, 25, 1318.

- Al-Safi, H.; Ibrahim, H.; Steenson, P. Vega: LLM-Driven Intelligent Chatbot Platform for Internet of Things Control and Development. Sensors 2025, 25, 3809.

- Javed, S.; Usman, M.; Sandin, F.; Liwicki, M.; Mokayed, H. Deep Ontology Alignment Using a Natural Language Processing Approach for Automatic M2M Translation in IIoT. Sensors 2023, 23, 8427.

- Wang, J.; Tang, Y.; He, S.; Zhao, C.; Sharma, P.K.; Alfarraj, O.; Tolba, A. Log Event2vec: Log Event-to-Vector Based Anomaly Detection for Large-Scale Logs in Internet of Things. Sensors 2020, 20, 2451.

- Rojek, I. Hybrid Neural Networks as Prediction Models. In Artificial Intelligence and Soft Computing, Lecture Notes in Artificial Intelligence; Rutkowski, L., Scherer, R., Tadeusiewicz, R., Zadeh, L.A., Zurada, J.M., Eds.; Springer: Berlin/Heidelberg, Germany, 2010; pp. 88–95.

- Rojek, I. Models for Better Environmental Intelligent Management within Water Supply Systems. Water Resour. Manag. 2014, 28, 3875–3890.

- Vaiyapuri, T.; Jagannathan, S.K.; Ahmed, M.A.; Ramya, K.C.; Joshi, G.P.; Lee, S.; Lee, G. Sustainable Artificial Intelligence-Based Twitter Sentiment Analysison COVID-19 Pandemic. Sustainability 2023, 15, 6404. [Google Scholar] [CrossRef]

- Cortés-Caicedo, B.; Grisales-Noreña, L.F.; Montoya, O.D.; Rodriguez-Cabal, M.A.; Rosero, J.A. Energy Management System for the Optimal Operation of PV Generators in Distribution Systems Using the Antlion Optimizer: A Colombian Urban and Rural Case Study. Sustainability 2022, 14, 16083. [Google Scholar] [CrossRef]

- Jamii, J.; Trabelsi, M.; Mansouri, M.; Mimouni, M.F.; Shatanawi, W. Non-Linear Programming-Based Energy Management for a Wind Farm Coupled with Pumped Hydro Storage System. Sustainability 2022, 14, 11287. [Google Scholar] [CrossRef]

- Choi, S.-W.; Lee, E.-B.; Kim, J.-H. The Engineering Machine-Learning Automation Platform (EMAP): A Big-Data-Driven AI Tool for Contractors’ Sustainable Management Solutions for Plant Projects. Sustainability 2021, 13, 10384. [Google Scholar] [CrossRef]

- Alqahtani, E.; Janbi, N.; Sharaf, S.; Mehmood, R. Smart Homes and Families to Enable Sustainable Societies: A Data-Driven Approach for Multi-Perspective Parameter Discovery Using BERT Modelling. Sustainability 2022, 14, 13534. [Google Scholar] [CrossRef]

- Ali, S.; Shirazi, F. A Transformer-Based Machine Learning Approach for Sustainable E-Waste Management: A Comparative Policy Analysis between the Swiss and Canadian Systems. Sustainability 2022, 14, 13220. [Google Scholar] [CrossRef]

- Nam, S.; Yoon, S.; Raghavan, N.; Park, H. Identifying Service Opportunities Based on Outcome-Driven Innovation Framework and Deep Learning: A Case Study of Hotel Service. Sustainability 2021, 13, 391. [Google Scholar] [CrossRef]

- Rojek, I.; Kowal, M.; Stoic, A. Predictive compensation of thermal deformations of ball screws in cnc machines using neural networks. Teh. Vjesn.-Tech. Gaz. 2017, 24, 1697–1703. [Google Scholar]

- Rojek, I.; Mikołajewski, D.; Kotlarz, P.; Tyburek, K.; Kopowski, J.; Dostatni, E. Traditional Artificial Neural Networks Versus Deep Learning in Optimization of Material Aspects of 3D Printing. Materials 2021, 14, 7625. [Google Scholar] [CrossRef] [PubMed]

- Bäckstrand, E.; Djupedal, R.; Öberg, L.M.; de Oliveira Neto, F.G. Unveiling Disparities: NLP Analysis of Software Industry and Vocational Education Gaps. In Proceedings of the Third ACM/IEEE International Workshop on NL-Based Software Engineering, Lisbon, Portugal, 20 April 2024; pp. 9–16. [Google Scholar]

- Lindh-Knuutila, T.; Loftsson, H.; Doval, P.A.; Andersson, S.; Barkarson, B.; Cerezo-Costas, H.; Guðnason, J.; Gylfason, J.; Hemminki, J.; Kaalep, H.J. Microservices at Your Service: Bridging the Gap between NLP Research and Industry. In Proceedings of the 24th Nordic Conference on Computational Linguistics (NoDaLiDa), Tórshavn, Faroe Islands, 22–24 May 2023; pp. 86–91. [Google Scholar]

- RojasGonzález, T.; Rocha, M.A.; Baltazar, R.; Casillas, M.A.; del Valle, J. Voice Control System Through NLP (Natural Language Processing) as an Interactive Model for Scalable ERP Platforms in Industry 4.0. In Proceedings of the Agents and Multi-Agent Systems: Technologies and Applications 2022: Proceedings of 16th KES International Conference, KES-AMSTA, Rhodes, Greece, 20–22 June 2022; pp. 207–217. [Google Scholar]

- Mantzaris, A.V. Benchmark Data NLP.jl: Synthetic Data Generation for NLP Benchmarking. J. Open Source Softw. 2025, 10, 7844. [Google Scholar] [CrossRef]

- Papadimas, C.; Ragazou, V.; Karasavvidis, I.; Kollias, V. Predicting learning performance using NLP: An exploratory study using two semantic textual similarity methods. Knowl. Inf. Syst. 2025, 67, 4567–4595. [Google Scholar] [CrossRef]

- Bourdin, M.; Paviot, T.; Pellerin, R.; Lamouri, S. NLP in SMEs for industry 4.0: Opportunities and challenges. Procedia Comput. Sci. 2024, 239, 396–403. [Google Scholar] [CrossRef]

- Lee, H.; Hyun Kim, J.; Sun Jung, H. ESG-KIBERT: A new paradigm in ESG evaluation using NLP and industry-specific customization. Decis. Support Syst. 2025, 193, 114440. [Google Scholar] [CrossRef]

- Czeczot, G.; Rojek, I.; Mikołajewski, D.; Sangho, B. AI in IIoT Management of Cybersecurity for Industry 4.0 and Industry 5.0 Purposes. Electronics 2023, 12, 3800. [Google Scholar] [CrossRef]

- Rojek, I.; Mroziński, A.; Kotlarz, P.; Macko, M.; Mikołajewski, D. AI-Based Computational Model in Sustainable Transformation of Energy Markets. Energies 2023, 16, 8059. [Google Scholar] [CrossRef]

- Alrashidi, B.; Jamal, A.; Alkhathlan, A. Abusive Content Detection in Arabic Tweets Using Multi-Task Learning and Transformer-Based Models. Appl. Sci. 2023, 13, 5825. [Google Scholar] [CrossRef]

- Al Duhayyim, M.; Alazwari, S.; Mengash, H.A.; Marzouk, R.; Alzahrani, J.S.; Mahgoub, H.; Althukair, F.; Salama, A.S. Metaheuristics Optimization with Deep Learning Enabled Automated Image Captioning System. Appl. Sci. 2022, 12, 7724. [Google Scholar] [CrossRef]

- Kumar, G.; Basri, S.; Imam, A.A.; Khowaja, S.A.; Capretz, L.F.; Balogun, A.O. Data Harmonization for Heterogeneous Datasets: A Systematic Literature Review. Appl. Sci. 2021, 11, 8275. [Google Scholar] [CrossRef]

- Avci, C.; Tekinerdogan, B.; Athanasiadis, I.N. Software architectures for big data: A systematic literature review. Big Data Anal. 2020, 5, 5. [Google Scholar] [CrossRef]

- Maheshwari, H.; Verma, L.; Chandra, U. Overview of Big Data and Its Issues. Int. J. Res. Electron. Comput. Eng. 2019, 7, 256. [Google Scholar]

- Younan, M.; Houssein, E.H.; Elhoseny, M.; Ali, A.A. Challenges and recommended technologies for the industrial internet of things: A comprehensive review. Measurement 2020, 151, 107198. [Google Scholar] [CrossRef]

- Wang, Y.; Jan, M.N.; Chu, S.; Zhu, Y. Use of Big Data Tools and Industrial Internet of Things: An Overview. Sci. Program. 2020, 2020, 8810634. [Google Scholar] [CrossRef]

- Ralph, B.; Stockinger, M. Digitalization and digital transformation in metal forming: Key technologies, challenges and current developments of industry 4.0 applications. In Proceedings of the XXXIX, Colloquium on Metal Forming, Leoben, Austria, 21–25 March 2020. [Google Scholar]

- Kourou, K.D.; Pezoulas, V.C.; Georga, E.I.; Exarchos, T.P.; Tsanakas, P.; Tsiknakis, M.; Varvarigou, T.; De Vita, S.; Tzioufas, A.; Fotiadis, D.I.I. Cohort Harmonization and Integrative Analysis from a Biomedical Engineering Perspective. IEEE Rev. Biomed. Eng. 2018, 12, 303–318. [Google Scholar] [CrossRef] [PubMed]

- Stoyanova, M.; Nikoloudakis, Y.; Panagiotakis, S.; Pallis, E.; Markakis, E.K. A Survey on the Internet of Things (IoT) Forensics: Challenges, Approaches, and Open Issues. IEEE Commun. Surv. Tutor. 2020, 22, 1191–1221. [Google Scholar] [CrossRef]

- Sahu, A.K.; Sahu, A.K.; Sahu, N.K. A Review on the Research Growth of Industry 4.0: IIoT Business Architectures Benchmarking. Int. J. Bus. Anal. 2020, 7, 77–97. [Google Scholar] [CrossRef]

- Khan, M.; Wu, X.; Xu, X.; Dou, W. Big data challenges and opportunities in the hype of Industry 4.0. In Proceedings of the 2017 IEEE International Conference on Communications (ICC), Paris, France, 21–25 May 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1–6. [Google Scholar]

- Sajid, S.; Haleem, A.; Bahl, S.; Javaid, M.; Goyal, T.; Mittal, M. Data science applications for predictive maintenance and materials science in context to Industry 4.0. Mater. Today Proc. 2021, 45, 4898–4905. [Google Scholar] [CrossRef]

- Jagtap, S.; Bader, F.; Garcia-Garcia, G.; Trollman, H.; Fadiji, T.; Salonitis, K. Food Logistics 4.0: Opportunities and Challenges. Logistics 2020, 5, 2. [Google Scholar] [CrossRef]

- De Vass, T.; Shee, H.; Miah, S. IoT in Supply Chain Management: Opportunities and Challenges for Businesses in Early Industry 4.0 Context. Oper. Supply Chain Manag. Int. J. 2021, 14, 148–161. [Google Scholar] [CrossRef]

- Gong, L.; Fast-Berglund, A.; Johansson, B. A Framework for Extended Reality System Development in Manufacturing. IEEE Access 2021, 9, 24796–24813. [Google Scholar] [CrossRef]

- Poria, S.; Cambria, E.; Bajpai, R.; Hussain, A. A review of affective computing: From unimodal analysis to multimodal fusion. Inf. Fusion 2017, 37, 98–125. [Google Scholar] [CrossRef]

- Shoumy, N.J.; Ang, L.-M.; Seng, K.P.; Rahaman, D.; Zia, T. Multimodal big data affective analytics: A comprehensive survey using text, audio, visual and physiological signals. J. Netw. Comput. Appl. 2020, 149, 102447. [Google Scholar] [CrossRef]

- Verma, J.P.; Agrawal, S.; Patel, B.; Patel, A. Big data analytics: Challenges and applications for text, audio, video, and social media data. Int. J. Soft Comput. Artif. Intell. Appl. 2016, 5, 41–51. [Google Scholar] [CrossRef]

- Duwairi, R.; Hayajneh, A.; Quwaider, M. A Deep Learning Framework for Automatic Detection of Hate Speech Embeddedin Arabic Tweets. Arab. J. Sci. Eng. 2021, 46, 4001–4014. [Google Scholar] [CrossRef]

- Pontikis, I.; Koutivas, S.; Stafylas, D. Greek Patent Classification Using Deep Learning. In Proceedings of the Novel & Intelligent Digital Systems: The 2nd International Conference on Novel and Intelligent Digital Systems-NiDS, Athens, Greece, 29–30 September 2022; Volume 556, pp. 372–381. [Google Scholar]

- Trappey, A.J.C.; Trappey, C.V.; Wang, J.W.C. Intelligent compilation of patent summaries using machine learning and natural language processing techniques. Adv. Eng. Inform. 2022, 43, 101027. [Google Scholar] [CrossRef]

- Kwon, B.; Kim, J.; Mun, D. Construction of design requirements knowledge base from unstructured design guidelines using natural language processing. Comput. Ind. 2024, 159, 104100. [Google Scholar] [CrossRef]

- Rahman, M.M.; Finin, T. Deep Understanding of a Document’s Structure. In Proceedings of the Fourth IEEE/ACM International Conference on Big Data Computing, Applications And Technologies, Austin, TX, USA, 5–8 December 2017; pp. 63–73. [Google Scholar]

- Xiong, H.; Wu, Y.; Jin, C.; Kumari, S. Efficient and Privacy-Preserving Authentication Protocol for Heterogeneous Systems in IIoT. IEEE Internet Things J. 2020, 7, 11713–11724. [Google Scholar] [CrossRef]

- James, Y.; Szymanezyk, O. The Challenges of Integrating Industry 4.0 in Cyber Security—A Perspective. Int. J. Inf. Educ. Technol. 2021, 11, 242–247. [Google Scholar] [CrossRef]

- Shao, X.-F.; Liu, W.; Li, Y.; Chaudhry, H.R.; Yue, X.-G. Multistage implementation framework for smart supply chain management under industry 4.0. Technol. Forecast. Soc. Change 2021, 162, 120354. [Google Scholar] [CrossRef]

- Giarelis, N.; Karacapilidis, N. Deep learning and embeddings - based approaches for key phrase extraction: A literature review. Knowl. Inf. Syst. 2024, 66, 6493–6526. [Google Scholar] [CrossRef]

- IEC 61360-1:2017; Standard Data Element Types with Associated Classification Scheme—Part 1: Definitions—Principles and Methods. International Electrotechnical Commission: Geneva, Switzerland, 2017. Available online: https://webstore.iec.ch/en/publication/28560 (accessed on 28 October 2025).

- ISO 15531-1:2004; Industrial Automation Systems and Integration—Industrial Manufacturing Management Data Part 1: General Overview. International Organization for Standardization: Geneva, Switzerland, 2004. Available online: https://www.iso.org/standard/28144.html (accessed on 28 October 2025).

- Wu, C.K.; Li, X.; Yang, Z.L. Natural language processing for smart construction: Current status and future directions. Autom. Constr. 2022, 134, 104059. [Google Scholar] [CrossRef]

- Park, S.; Lee, W.; Lee, J. Learning of indiscriminate distributions of document embeddings for domain adaptation. Intell. Data Anal. 2019, 23, 779–797. [Google Scholar] [CrossRef]

- Mohammadi, H.; Giachanou, A.; Bagheri, A. A Transparent Pipeline for Identifying Sexism in Social Media: Combining Explainability with Model Prediction. Appl. Sci. 2024, 14, 8620. [Google Scholar] [CrossRef]

- Pérez-Landa, G.I.; Loyola-González, O.; Medina-Pérez, M.A. An Explainable Artificial Intelligence Model for Detecting Xenophobic Tweets. Appl. Sci. 2021, 11, 10801. [Google Scholar] [CrossRef]

- Directive (EU) 2022/2555 of the European Parliament and of the Council of 14 December 2022 on Measures for a High Common Level of Cybersecurity Across the Union, Amending Regulation (EU) No 910/2014 and Directive (EU) 2018/1972, and Repealing Directive (EU) 2016/1148 (NIS 2 Directive). Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32022L2555 (accessed on 28 October 2025).

- ISO/IEC 20000-1:2018; Information Technology—Service Management Part 1: Service Management System Requirements. International Organization for Standardization: Geneva, Switzerland, 2018. Available online: https://www.iso.org/standard/70636.html (accessed on 28 October 2025).

- Kim, Y.; Park, K.; Yoo, B. Natural language processing-based approach for automatically coding ship sensor data. Int. J. Nav. Archit. Ocean. Eng. 2024, 16, 100581. [Google Scholar] [CrossRef]

- Pathak, A.R.; Pandey, M.; and Rautaray, S. Empirical evaluation of deep learning models for sentiment analysis. J. Stat. Manag. Syst. 2019, 22, 741–752. [Google Scholar] [CrossRef]

- Li, Z.; Zhang, Q.; Wang, Y.; Wang, S. Social Media Rumor Refuter Feature Analysis and Crowd Identification Basedon XGBoost and NLP. Appl. Sci. 2020, 10, 4711. [Google Scholar] [CrossRef]

- Wu, H.; Zhong, B.; Medjdoub, B.; Xing, X.; Jiao, L. An Ontological Metro Accident Case Retrieval Using CBR and NLP. Appl. Sci. 2020, 10, 5298. [Google Scholar] [CrossRef]