Abstract

This paper presents a systematic literature review on the use of machine learning (ML) for developing adaptive accessible user interfaces (AUI), with emphasis on applications in emerging technologies such as augmented and virtual reality (AR/VR). The review, conducted according to the PRISMA 2020 methodology, included 57 studies published between 2018 and 2025. Among them, we identified 24 papers explicitly describing ML-based adaptive interface solutions. Supervised learning was dominant (83% of these 24 papers), with only isolated cases of reinforcement learning, generative AI, and fuzzy–NLP hybrid paradigms. The analysis of all 57 papers included in the review revealed that adaptive interfaces dominate current research (65%), while intelligent or hybrid systems remain less explored. Mobile platforms were the most prevalent implementation environment (25%), followed by web-based (19%) and multi-platform systems (11%), with immersive (VR/XR) and IoT contexts still emerging. Among the 43 studies addressing accessibility for people with disabilities, most focused on visual impairments (33%), followed by cognitive and learning disorders (19%). The results of this review can inform the creation of accessibility guidelines for emerging AR and VR applications and support the development of inclusive solutions that benefit people with disabilities, older adults, and the general population. The main contribution of this paper lies in identifying existing gaps in the integration of accessibility and Universal Design principles into ML-based adaptive systems and in proposing a new AUI model that enables user-approved, time-delayed adaptations through machine learning, balancing autonomy, personalization, and user control.

1. Introduction

In recent years, the demand for technologies that can improve the quality of life and social inclusion of people with disabilities (PWDs) has increased. According to statistics from the World Health Organization (WHO), 16% of people in the world have a significant form of disability []. Emerging technologies such as augmented and virtual reality and holographic technologies offer new opportunities to improve the living conditions of people with disabilities and older people, enabling them to be better integrated and experience a higher quality of life in everyday activities [].

Emerging technologies refer to innovative achievements that are currently at an early stage of development, adoption or integration in various fields, awaiting potentially widespread acceptance and application []. These technologies often have the potential to significantly impact industry, society and daily life in the future. One of the most important advantages of emerging technologies is the possibility to create a hybrid learning environment []. Apart from the fact that virtual reality (VR), augmented reality (AR) and holograms are new technologies, they are also visualization technologies based on three-dimensional (3D) visualization. A three-dimensional visualization can be defined as the process of creating graphical content using software technologies []. In this process, the object is displayed using a computer or a mobile device equipped with an appropriate software solution so that the visualized 3D object can be viewed from different angles and perspectives. 3D technology currently has a positive effect on the cognitive development and attention of students and helps children with developmental disabilities to perceive abstract three-dimensional concepts [,]. In addition, research has shown that the visualization of teaching or learning content can improve students’ concentration []. The use of the aforementioned technologies in the educational process of students encourages students’ active participation and allows them to experiment in a safe environment, which can significantly improve their understanding of the subject matter and increase their engagement in learning [].

In the context of the use of technology in education, Serious Games (SGs) combine entertainment and education. For many years, aspects of gaming activities have been successfully employed for various educational and training purposes []. Games are shared and enjoyable social activities that allow participants to learn skills as well as develop and improve cognitive and social abilities, while respecting a predefined game structure and rules. The notion of Serious Games shifts the main focus of games, such as traditional video games, from entertainment to education. Serious Games can be used to complement traditional education by providing new and interesting ways of acquiring knowledge and improving skills through problem solving in different gaming scenarios. They can also be an effective training tool in various fields, e.g., medicine or the military. In the military environment, Serious Games help soldiers to rehearse diverse tactical scenarios in a secure, controlled environment. In medicine, Serious Games serve as powerful simulation tools for improving doctors’ diagnostic and intervention capabilities in risk-free virtual settings []. Such games increasingly incorporate elements of emerging technologies such as virtual and augmented reality and holograms. There are many examples of Serious Games applications that make meaningful use of various gaming aspects for education and training. To maximize student engagement, maintain concentration, and encourage reflection on the learning material, educators are increasingly trying to integrate engaging, interactive applications like Serious Games into everyday classroom instruction. This approach not only expands the creative possibilities of teachers but also allows them to introduce students to the subject matter through safe interactive experiences via different sensory channels.

Given the importance of integrating new technologies into educational processes, high-quality user experience (UX) is becoming increasingly important for the success of modern software solutions that integrate these technologies. Namely, successful design requires a balance between functionality and usability so that users, especially people with disabilities, can easily adopt the functionality of the software solution. Such situations often lead to lengthy discussions, as it can be unclear which aspect of the user experience is more important or takes precedence in the context of certain product goals []. If the user experience is neglected, the effectiveness of the software solution may decrease, leading to frustration and lower user engagement. Focusing on the user experience therefore not only improves the acceptance of new technologies but also helps to create safe and stimulating learning environments. One possible solution for an optimal user experience lies in the use of artificial intelligence and machine learning to customize the interface of software solutions to the specific needs of users.

Artificial intelligence (AI) refers to the general ability of computers to mimic human thinking and perform tasks in real-world environments, while machine learning (ML) refers to technologies and algorithms that enable systems to recognize patterns, make decisions, and improve through experience []. This form of AI can gradually improve user interaction and experience. AI is increasingly being used to evaluate [,] and customize user interfaces [], to improve accessibility and user experience. The application of AI to evaluate and customize user interfaces has led to intelligent user interfaces (IUI) and adaptive user interfaces (AUI).

Intelligent user interfaces (IUIs) [] are interfaces between users and computers that enhance efficiency and interaction by using artificial intelligence to adapt to user behavior in real time. These interfaces can infer, adapt, and act based on models of users, tasks, and context, aiming to create more intuitive interactions. IUI development relies on various AI methods and strategies, including intelligent assistants, intelligent decision support systems, intelligent cooperation agents, and dialogue assistants. The goal is to improve the efficiency, effectiveness, and naturalness of human–computer interaction (HCI). The design and use of the interface should consider the specific challenges of users, whether they are elderly [,], who require simpler and more intuitive interfaces, or users on the autism spectrum [], who may have different interaction needs. Whereas intelligent user interfaces primarily use advanced AI methods to adjust and optimize real-time interactions, adaptive user interfaces (AUIs) [] dynamically change their structure, functionality, and content to adapt to a specific user in real time. This adjustment is achieved by monitoring the user’s status, the state of the system, and the environment in which the user is operating, using the appropriate machine learning or artificial intelligence paradigm. A basic AUI architecture typically consists of four key components: event capture, event identification, user pattern recognition, and user intention prediction. The event capture module monitors user–application interactions, recording each action and assigning it a unique identification number [].
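As an illustration only, the four components named above can be sketched as a small pipeline. All class and function names below are hypothetical, and the simple frequency-based predictor is merely a stand-in for whichever ML model an actual AUI would use.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from itertools import count


@dataclass(frozen=True)
class Event:
    event_id: int   # unique identification number assigned at capture
    action: str     # raw action name, e.g. "zoom:in" (hypothetical format)


class EventCapture:
    """Event capture: records each user action and assigns it a unique id."""

    def __init__(self):
        self._ids = count(1)
        self.log = []

    def capture(self, action):
        event = Event(next(self._ids), action)
        self.log.append(event)
        return event


def identify(event):
    """Event identification: map a raw action to a coarse event type."""
    return event.action.split(":", 1)[0]


class PatternRecognizer:
    """User pattern recognition: counts which event type follows which."""

    def __init__(self):
        self.transitions = defaultdict(Counter)
        self._previous = None

    def observe(self, event_type):
        if self._previous is not None:
            self.transitions[self._previous][event_type] += 1
        self._previous = event_type

    def predict_next(self, event_type):
        """User intention prediction: most frequent follower, or None."""
        followers = self.transitions.get(event_type)
        if not followers:
            return None
        return followers.most_common(1)[0][0]


capture = EventCapture()
recognizer = PatternRecognizer()
actions = ["open:menu", "zoom:in", "open:menu", "zoom:in", "open:menu", "scroll:down"]
for action in actions:
    recognizer.observe(identify(capture.capture(action)))

# Most frequent event type observed after "open" in the toy log.
print(recognizer.predict_next("open"))
```

In this toy log, "zoom" follows "open" more often than "scroll" does, so an interface built on such a predictor could, for example, surface zoom controls as soon as the menu is opened.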

To ensure terminological consistency and avoid ambiguity, Table 1 provides concise definitions distinguishing between adaptive, adaptable, intelligent, and conversational user interfaces as discussed in the literature.

Table 1.

Definitions and distinctions between types of user interfaces identified in the literature.

This paper investigates current trends at the intersection of machine learning, adaptive and intelligent user interfaces, and accessibility, particularly in relation to Universal Design principles. The rapid adoption of AI and ML has renewed interest in adaptive and intelligent interfaces, but it remains unclear to what extent these approaches consider accessibility for people with disabilities and adhere to established design principles. This study systematically examines how existing research integrates accessibility considerations, whether classical human–computer interaction guidelines (e.g., predictability, consistency, user control, error prevention) [] are followed, and how Universal Design principles [,] are applied. By analyzing these trends and identifying gaps and shortcomings in prior work, the study provides a foundation for future research on adaptive accessible user interfaces. The following research questions (RQ) were defined:

- RQ1: Is there research related to the application of machine learning models for adapting user interfaces for people with disabilities (PWDs) in accordance with Universal Design?

- RQ2: Which machine learning paradigm is most common in the context of user interface adaptation and accessibility?

- RQ3: In what type of environment are adaptive accessible user interfaces implemented?

- RQ4: What are the challenges in applying machine learning for the development of adaptive accessible user interfaces for people with different types of disabilities?

The rest of this paper is structured as follows: Section 2 describes the research methodology. Section 3 presents the literature review, where the relevant labels were refined and narrowed. Section 4 provides a detailed analysis of the reviewed literature using the selected methodology, along with an overview of the results and a discussion of the research topic. Finally, Section 5 concludes the paper with the main findings, elaboration, and directions for future research.

2. Research Methodology

In this paper, we use the PRISMA 2020 method (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) [], which provides guidelines for conducting systematic reviews and meta-analyses of research described in scientific papers. The PRISMA method ensures transparency and accuracy in identifying, selecting, analyzing, and synthesizing relevant research.

Following the PRISMA 2020 method [], we conducted the literature search and selection steps to analyze machine learning applications for adaptive user interfaces in emerging technologies. This method begins with the identification phase, which aims to recognize relevant works according to a defined search strategy. For this paper, the chosen search strategy involves searching the Web of Science [] and Scopus [] databases, which contain reliable, high-quality papers from various scientific disciplines. Although other databases such as IEEE Xplore and the ACM Digital Library were considered, this review primarily relied on Web of Science (WoS) and Scopus, as together they provide comprehensive coverage of high-quality journals and conferences, including many relevant IEEE and ACM publications. This rationale guided our database selection and is explicitly stated to enhance methodological transparency.

To retrieve results relevant to the research area from the Web of Science database, a query was formed using the logical operators “AND,” “OR,” and “NOT.” We also used the following field tags: TS (Topic: searches the article title, abstract, and keywords), DT (Document type: restricts the search to specific document types), PY (Publication year: restricts the search to a year range), and LA (Language: restricts the search to a given language). The literature search was performed using the following advanced query:

TS=((User interface OR adaptive interface OR intelligent interface) AND (machine learning OR deep learning OR artificial intelligence) AND accessibility) AND DT=(Review OR Article OR Early Access OR Proceedings Paper) AND PY=(2018–2025) AND LA=(English)

To obtain the desired results from the Scopus database, a similar query structure was applied using Scopus-specific field codes and logical operators. We used the following fields: TITLE-ABS-KEY (searches the article title, abstract, and keywords), DOCTYPE (restricts search to specific document types: ar = article, re = review, cp = conference paper), PUBYEAR (restricts search to a year range), and LANGUAGE (limits results to English). The literature search was performed using the following advanced query:

TITLE-ABS-KEY ((“User interface” OR “adaptive interface” OR “intelligent interface”) AND (“machine learning” OR “deep learning” OR “artificial intelligence”) AND accessibility) AND (DOCTYPE (ar OR re OR cp) OR PUBSTAGE (AIP)) AND PUBYEAR > 2017 AND PUBYEAR < 2026 AND LANGUAGE (“english”)

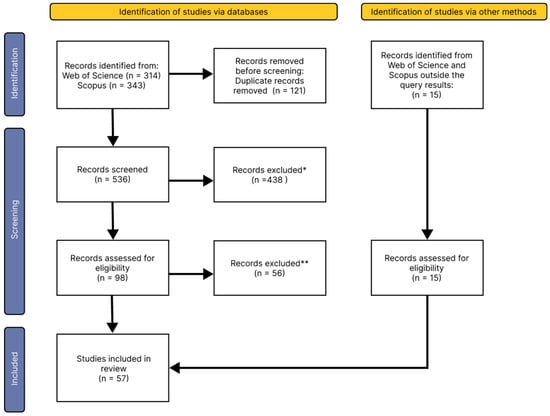

The types of documents included in the search were proceedings papers, articles, review articles, and early access papers, covering publications from 2018 to October 2025. The search was restricted to articles published in English. The query was run on 2 November 2025 on the Web of Science and Scopus databases and yielded 657 results. Of these, 121 papers were found in both databases, and the duplicates were removed, leaving 536 records for screening. All search results, including the union of databases and the duplicates, are provided in Supplementary Table S2 for reproducibility. Papers were mostly tagged in the fields of computer science, electronics, and ergonomics. Based on the research area, 8 additional articles outside the initial query were found and added to the analysis. After reviewing the titles and abstracts, 98 papers were selected for further review, and 438 papers were excluded. The screening of records was carried out by MK, IZ, and FŠM. The results obtained were subsequently reviewed and verified by the supervisor (ŽC).
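The identification and screening counts reported above can be cross-checked with a few lines of arithmetic. This is only a consistency check on the numbers given in the text; the variable names are illustrative, and the 8 additional articles are left out because the stage at which they enter the flow is not stated here.

```python
# Counts reported in the text above.
records_identified = 657      # Web of Science + Scopus results
duplicates_removed = 121      # papers found in both databases
selected_for_review = 98      # kept after title/abstract screening
excluded_at_screening = 438   # dropped after title/abstract screening

# Records left for title/abstract screening after deduplication.
records_screened = records_identified - duplicates_removed

# Every screened record is either selected or excluded.
assert records_screened == selected_for_review + excluded_at_screening

print(records_screened)  # 536
```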

Specific inclusion and exclusion criteria were applied during the screening process to maintain the quality and relevance of the reviewed literature. Many papers were excluded because their scope differed substantially from the aims of this review. A large portion of the retrieved works focused on the development of interfaces for operating or visualizing machine learning models, medical diagnostic systems, or robotic platforms, which fall outside the domain of adaptive user interfaces. Furthermore, several papers employed artificial intelligence or machine learning techniques not to modify the interface itself, but rather to adapt the content or tasks presented within applications (for example, simplifying problem-solving steps, recommending learning materials, or adjusting workflow complexity). Since this review specifically targets adaptive or intelligent user interfaces that employ AI or ML to enhance the accessibility of the interface, particularly through visual, sensory, or interactional adaptations, such content-level adaptability did not meet the inclusion criteria. Finally, brain–computer interfaces (BCIs) and conversational user interfaces (CUIs), such as chatbots and other LLM-based systems, were also excluded: although they represent an important class of interactional systems, they do not involve adaptive modification of the graphical or sensory interface itself. The only exception was a graphical adaptive user interface that also incorporated a BCI and/or a CUI. The primary inclusion criterion was that the research focused on interfaces capable of real-time adaptation to user behavior, preferences, or context. The full inclusion and exclusion criteria are summarized in Table 2. The screening results and process can be found in Supplementary Table S3, and the summary of included papers can be seen in Supplementary Table S4.

Table 2.

Inclusion and Exclusion Criteria for the Literature Review.

After applying these filters, an in-depth reading of the remaining studies was conducted, resulting in 57 papers [,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,] meeting the inclusion criteria. The detailed results of this process are illustrated in Figure 1, and the PRISMA-ScR checklist can be found in Supplementary Table S1.

Figure 1.

PRISMA flowchart showing the number of publications at each stage of the systematic literature review. Records excluded after reading title and abstract *. Records excluded after full-text review **.

For the analysis, the publications were recorded in an Excel spreadsheet and categorized by year of publication, type of publication, type of interface implemented or described in the paper, the environment or device in which the interface operates, and whether the paper refers to people with disabilities and, if so, to what type of disability. The analysis of the papers answers the research questions posed in Section 1 and describes in more detail current solutions and technologies in the field of adaptive accessible user interfaces.

3. Results

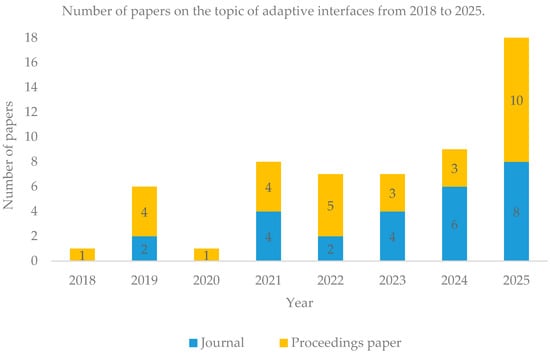

Although most papers were not included in the final analysis, the annual number of publications on applying machine learning to adaptive accessible user interfaces for people with disabilities, the elderly, and accessibility in general has increased significantly since 2018. This trend may be related to the emergence of various AI solutions, which have become especially popular following the introduction of OpenAI’s ChatGPT and other systems that use natural language processing (NLP). Figure 2 shows the yearly distribution of papers included in the final analysis.

Figure 2.

Yearly distribution of reviewed papers on adaptive and intelligent user interfaces from 2018 to 2025 (n = 57). The steady increase after 2020 indicates a growing research interest aligned with the global expansion of AI-based technologies such as ChatGPT and other NLP systems, suggesting that accessibility-related AI research follows general AI adoption trends.

The analysis included papers on adaptive and intelligent user interfaces but excluded interfaces that can only be statically adjusted (adaptable user interfaces) and conversational user interfaces (CUIs). Interfaces with static adjustment allow users to manually configure interface settings in advance, with these settings remaining unchanged during use []. This type of static adaptation is not the focus of the analysis, as it does not address dynamic interface adaptation in real time. CUIs enable interaction through natural language [], most often using voice assistants, but are not included in the analysis because they focus on dialogue rather than real-time user interface adaptation.

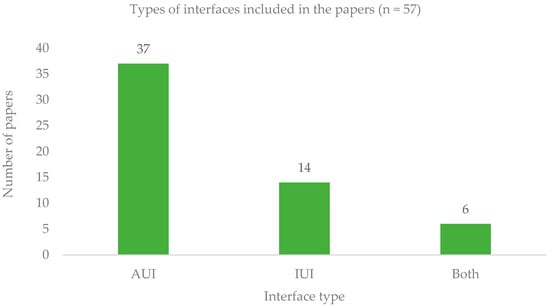

Figure 3 illustrates the distribution of included papers according to interface type. Most papers focused on AUI (n = 37), fewer on IUI (n = 14), and 6 papers combined both AUI and IUI. This indicates that research on applying machine learning predominantly emphasizes adaptation, with comparatively fewer works addressing intelligence or the integration of both paradigms.

Figure 3.

Distribution of analyzed papers (n = 57) according to the type of user interface implemented. Adaptive user interfaces (AUI) dominate with 65% of reviewed papers, indicating a stronger research focus on real-time adaptability compared to reasoning-based intelligence in user interfaces.

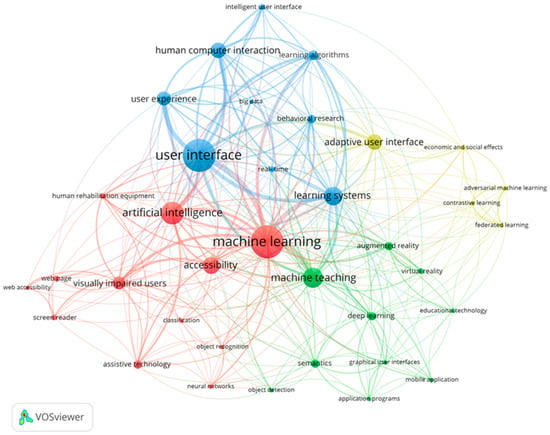

Furthermore, the keyword co-occurrence analysis of the selected papers was performed using the free software tool VOSviewer 1.6.20 []. This allowed us to generate a visual overview of the main topics covered by the papers included in this systematic review. To automate this process, we used Scopus advanced search to export a CSV file with detailed information of all 57 papers selected for this systematic review, including their author keywords and index keywords. Additionally, a special thesaurus text file was created that allowed VOSviewer to perform data cleaning by merging and unifying equivalent keywords. Initially, VOSviewer found 680 keywords in the selected papers, but after applying the thesaurus file it identified 618 unique keywords. For the keyword co-occurrence analysis only those keywords that appear in at least three of the selected papers were considered, limiting the number of keywords to 36. Finally, VOSviewer generated the keyword co-occurrence network map shown in Figure 4.

Figure 4.

Keyword co-occurrence network map of the selected papers built by VOSviewer.
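The keyword cleaning and thresholding steps described above can be approximated in a few lines of code. The sketch below is not VOSviewer's implementation; the thesaurus entries and the toy keyword lists are made up for illustration, and only the general idea (merge equivalent keywords, keep those occurring in at least three papers, count the papers in which each pair co-occurs) follows the text.

```python
from collections import Counter
from itertools import combinations

# Hypothetical thesaurus: maps a keyword variant to its preferred label.
THESAURUS = {"user interface": "user interfaces", "ml": "machine learning"}


def normalize(keywords):
    """Lower-case, apply the thesaurus, and drop duplicates within one paper."""
    cleaned = (k.strip().lower() for k in keywords)
    return {THESAURUS.get(k, k) for k in cleaned}


def cooccurrence(papers, min_occurrences=3):
    """Return (occurrences, links) for keywords found in >= min_occurrences papers."""
    cleaned = [normalize(p) for p in papers]
    occurrences = Counter(k for kws in cleaned for k in kws)
    kept = {k for k, n in occurrences.items() if n >= min_occurrences}
    links = Counter()
    for kws in cleaned:
        for a, b in combinations(sorted(kws & kept), 2):
            links[(a, b)] += 1  # link strength = number of papers containing both
    return {k: occurrences[k] for k in kept}, links


# Toy data, not taken from the reviewed papers.
papers = [
    ["Machine learning", "User interface", "Accessibility"],
    ["ML", "user interfaces"],
    ["machine learning", "User Interfaces", "accessibility"],
    ["machine learning", "deep learning"],
]
occurrences, links = cooccurrence(papers)
print(occurrences)
print(links)
```

In the toy data, only "machine learning" and "user interfaces" reach the threshold of three papers, and the single remaining link has strength 3 because the two keywords share three papers.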

Each node in Figure 4 represents one of the identified keywords, and the size of each node is proportional to the number of occurrences of that keyword in the selected papers. If two nodes are linked, their keywords appear together in at least one of the selected papers. The size of the link between two nodes is proportional to the number of papers in which both keywords appear. The most frequent keywords were “user interfaces” and “machine learning” (each in the keyword lists of 23 selected papers), both with a high number of links to other nodes (28 and 35, respectively). Link size also indicates that the strongest connections are between the keywords “machine learning” and “machine teaching” (which appear together in the keyword lists of 13 selected papers), “machine learning” and “user interface” (in keyword lists of 11 selected papers), “machine learning” and “learning systems” (in keyword lists of 10 selected papers), as well as “user interface” and “user experience” (in keyword lists of 9 selected papers). Additionally, VOSviewer can identify clusters of closely related keywords. In Figure 4, there are four clusters denoted by different colors: the red cluster is dominated by the topic of machine learning, the green cluster is centered around machine teaching, the blue cluster covers user interfaces, and the yellow cluster is centered around adaptive user interfaces. Based on the systematic literature review conducted in this study, the research questions can be answered as follows.

3.1. RQ1 Is There Research Related to the Application of Machine Learning Models for Adapting User Interfaces for People with Disabilities (PWDs) in Accordance with Universal Design?

Although almost all the papers included in the literature review conducted in this study mention accessibility, not all refer to people with disabilities, and very few follow Universal Design principles. Universal Design (UD) [] refers to designing and creating environments that are accessible, understandable, and useful to the widest possible range of people, regardless of age, size, ability, or disability. Only two papers mention the term “Universal Design” (n = 2) [,]. Although UD enables the creation of accessible interfaces for the general population, assistive technology is often still needed for specific cases. UD does not replace assistive technology; both approaches are considered to be complementary. Assistive technology includes various devices and software solutions, such as alternative communication methods for people with speech or motor disabilities, word prediction software, and computer-based environmental controls (e.g., turning lights on or off, opening doors) [].

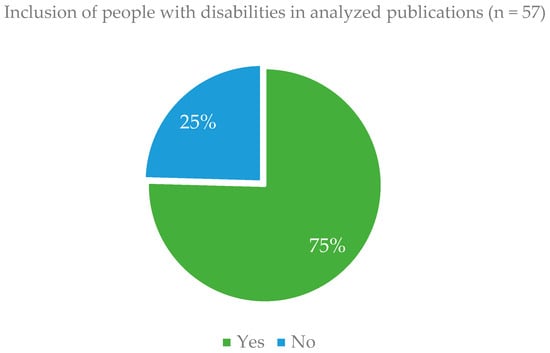

The papers that, in addition to accessibility, also include PWDs account for 75% (n = 43), while those that do not directly mention PWDs, disability, or older people account for 25% (n = 14), as shown in Figure 5.

Figure 5.

Inclusion ratio of target groups in the analyzed papers (n = 57). Three quarters of studies include people with disabilities (75%), while one quarter address accessibility without specific user group focus. The lack of emphasis on Universal Design principles indicates a research gap in generalized accessibility strategies.

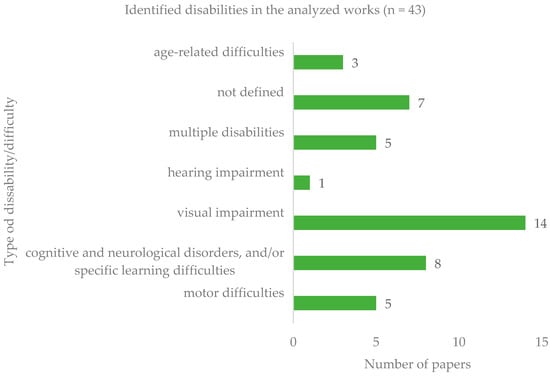

Most works that included PWDs (n = 43) focused on developing and researching solutions for people with visual impairments (n = 14, 33%). The next most frequent were works addressing cognitive and neurological disorders and/or specific learning difficulties (n = 8, 19%), and in a significant number of works the type of difficulty was not defined (n = 7, 16%). Fewer papers focused on motor disabilities (n = 5, 12%), multiple impairments (n = 5, 12%), the elderly (n = 3, 7%), and hearing impairments (n = 1, 2%). In the case of multiple impairments, one study addressed combined visual and hearing difficulties by proposing a mobile solution based on the analysis of touch dynamics []. One study proposed a new concept of IUI combining AR with BCI technology for people with cognitive and motor difficulties []. Furthermore, one study [] presents a mobile application designed for people with diverse needs, incorporating features such as voice navigation, gesture controls, facial recognition, and customizable user interfaces. Another study proposes a Smart Knowledge Delivery System [] with adaptive accessibility using machine learning, aiming to enhance accessibility in learning platforms for users with visual, auditory, and cognitive disabilities. In addition, one work [] focuses on an adaptive workspace intended to support individuals with visual, cognitive, and neurological impairments. Notably, only one paper addressed people with hearing impairments alone. This distribution is shown in Figure 6.

Figure 6.

Distribution of disability types represented in reviewed studies (n = 43). Visual impairments dominate the research focus (33%), followed by cognitive and learning disorders (19%), while hearing impairments were included in only one paper.
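The shares in this distribution follow directly from the per-category counts reported in the text. The short calculation below recomputes them; the category labels are abbreviated for readability, and the rounding to whole percentages is an assumption about how the reported values were obtained.

```python
# Counts per disability category as reported in the text (n = 43).
counts = {
    "visual impairments": 14,
    "cognitive/neurological/learning": 8,
    "not defined": 7,
    "motor disabilities": 5,
    "multiple impairments": 5,
    "elderly": 3,
    "hearing impairments": 1,
}

total = sum(counts.values())

# Share of each category, rounded to the nearest whole percent.
shares = {name: round(100 * n / total) for name, n in counts.items()}

print(total)
print(shares)
```

Because each share is rounded independently, the rounded percentages need not add up to exactly 100.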

In one of the analyzed works, the authors proposed an automated approach to interface generation specifically designed for users with low vision and motor impairments. Their technique enables the transformation of an existing graphical interface into a personalized version, generated from custom interface specifications to match individual accessibility needs []. Intelligent assistive technologies (AT) are used to reduce digital inequalities and often employ machine learning or other forms of AI. They are mentioned or used in some of the papers included in this analysis. For example, ref. [] proposes an AT framework based on neural networks and embedded systems, using a Jetson Nano to process speech and move the cursor on touch-sensitive devices and screens connected to it, such as computers, thereby improving accessibility and interaction for PWDs. The authors in [] state that machine learning and smart devices enable better interaction with digital systems by monitoring user emotions through haptic signals and adapting recommendations based on recognized emotional states. This makes it easier for people with limited digital literacy to use the system, reducing frustration and improving content accessibility. Another example is the user preference model [], which improves the user experience and makes it easier for people with cognitive disabilities to use the system.

The authors proposed a framework that dynamically updates user profiles based on interaction patterns and usage statistics. While navigating the system, user actions and tool usage are monitored to adjust preferences automatically, allowing the interface to adapt over time. Additionally, the framework includes an optional monitoring component that assesses the user’s current context, such as attention, stress, or confusion, to provide timely feedback. This enables the system to determine whether the user requires assistance with specific content, accepts the help provided, or encounters difficulties with the interface or interaction mechanisms [].
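A minimal sketch of such usage-driven profile updating is given below. It is not the cited framework's implementation: the class name, the tool names, and the promotion threshold are all hypothetical, and a simple usage counter stands in for whatever statistics the framework actually collects.

```python
from collections import Counter


class UserProfile:
    """Keeps usage statistics and derives interface preferences from them."""

    def __init__(self, promote_after=3):
        self.tool_usage = Counter()
        self.promote_after = promote_after  # hypothetical threshold

    def record(self, tool):
        """Called whenever the user invokes a tool."""
        self.tool_usage[tool] += 1

    def preferred_tools(self):
        """Tools used often enough to be surfaced in the interface, most used first."""
        return [
            tool
            for tool, uses in self.tool_usage.most_common()
            if uses >= self.promote_after
        ]


profile = UserProfile()
for tool in ["magnifier", "read_aloud", "magnifier", "magnifier", "contrast"]:
    profile.record(tool)

# Tools that crossed the usage threshold in the toy interaction log.
print(profile.preferred_tools())
```

An optional context monitor, as described above, could feed additional signals (for example, detected confusion) into the same profile before the interface is adapted.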

As older populations and some younger people encounter barriers to using technology, intelligent systems must establish dynamic methods to identify accessibility issues and develop new strategies to improve user experience and interaction with computers. Accessibility barriers include difficulties using digital interfaces, such as complex navigation, designs that are not suitable for people with disabilities, or challenges in understanding functionality. Large datasets of user interactions can enable the identification and classification of these barriers, supporting the development of more accessible and adaptive user interfaces.

3.2. RQ2 Which Machine Learning Paradigm Is Most Common in the Context of User Interface Adaptation and Accessibility?

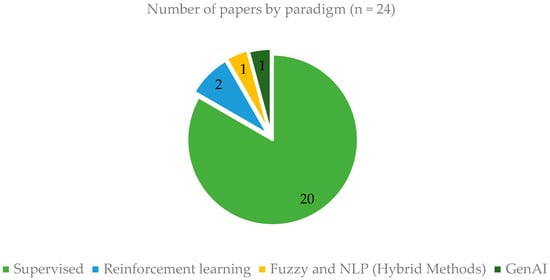

The literature review conducted in this study identified 24 (out of 57) papers that explicitly mention the use of 21 different ML models that can be categorized into three classical paradigms: supervised, unsupervised and reinforcement learning. Although all reviewed papers refer to ML or AI in some context, not all of them clearly describe the specific models, methods, or frameworks used. Most of the identified papers used supervised learning paradigms (n = 20, 83%). Supervised learning involves training a model on labeled data, where the system learns to recognize patterns based on predefined input–output pairs, whereas unsupervised learning relies on unlabeled data [].
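To make the notion of labeled input–output pairs concrete, the sketch below trains a nearest-centroid classifier, chosen here only because it fits in a few lines; it is not one of the models used in the reviewed papers. The features (mean tap error and mean dwell time) and the interface variant labels are hypothetical.

```python
from collections import defaultdict
from math import dist


def fit_centroids(samples):
    """Supervised training: average the feature vectors of each label."""
    grouped = defaultdict(list)
    for features, label in samples:
        grouped[label].append(features)
    return {
        label: tuple(sum(column) / len(rows) for column in zip(*rows))
        for label, rows in grouped.items()
    }


def predict(centroids, features):
    """Assign the label whose centroid is closest to the new sample."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Hypothetical labeled data:
# (mean tap error in px, mean dwell time in s) -> interface variant.
training = [
    ((2.0, 0.4), "default"),
    ((3.0, 0.5), "default"),
    ((11.0, 1.6), "large_targets"),
    ((13.0, 1.8), "large_targets"),
]
centroids = fit_centroids(training)

# Predict the variant for a new, unseen user.
print(predict(centroids, (10.0, 1.5)))
```

An unsupervised method would receive the same feature vectors without the labels and would have to discover the two groups on its own.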

The supervised methods mentioned in the reviewed papers include Support Vector Machines [,], the Multi-layer Perceptron (MLP) [], Convolutional Neural Networks (CNNs) [,,], SSD MobileNet Version 2 implemented in TensorFlow/TFLite [], various decision tree variants [,], the Random Forest model [], logistic regression [], and deep neural networks such as Inception V3 that are trained on large datasets []. Several studies highlighted Transformer models as effective methods for processing natural language and other complex tasks. The Transformer models UIBert [] and ActionBert [], based on deep learning architectures, enable understanding of user interfaces through user interactions and visual elements. UIBert focuses on multimodal representations of user interfaces, while ActionBert leverages user actions for semantic understanding of the interface, contributing to more efficient adaptation and interaction between the user and the system.

No examples of unsupervised learning algorithms were found among the reviewed works. Reinforcement learning appeared in only two papers (n = 2) [,], despite its potential applicability in both AUI and IUI contexts []. Reinforcement learning (RL) is a computational approach centered on goal-directed learning from interaction, in this context the user’s interaction with an interface []. Only one paper was classified as GenAI with an LLM (n = 1) [], although two further papers combined GenAI with another paradigm, one with supervised algorithms [] and one with reinforcement learning [], mostly using GenAI to generate text for an additional CUI. One paper used a fuzzy algorithm combined with NLP (n = 1) []. These paradigms were classified separately from conventional supervised approaches, as Generative AI focuses on deep generative modeling, while fuzzy–NLP hybrids integrate rule-based reasoning and language understanding to address uncertainty in adaptive interactions.
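As a hedged illustration of how reinforcement learning could drive interface adaptation, the sketch below uses an epsilon-greedy multi-armed bandit, one of the simplest RL settings. It is not the method of the cited papers; the variant names and the reward definition (1.0 when the user completes a task, 0.0 otherwise) are assumptions.

```python
import random


class EpsilonGreedyAdapter:
    """Epsilon-greedy bandit over a fixed set of interface variants."""

    def __init__(self, variants, epsilon=0.1, seed=0):
        self.variants = list(variants)
        self.epsilon = epsilon
        self.counts = {v: 0 for v in self.variants}
        self.values = {v: 0.0 for v in self.variants}  # running mean reward
        self._rng = random.Random(seed)

    def choose(self):
        """Explore with probability epsilon, otherwise exploit the best estimate."""
        if self._rng.random() < self.epsilon:
            return self._rng.choice(self.variants)
        return max(self.variants, key=lambda v: self.values[v])

    def update(self, variant, reward):
        """Incrementally update the mean reward observed for a variant."""
        self.counts[variant] += 1
        self.values[variant] += (reward - self.values[variant]) / self.counts[variant]


# Hypothetical reward: 1.0 if the user completed the task, else 0.0.
# epsilon=0.0 disables exploration so that the example is deterministic.
adapter = EpsilonGreedyAdapter(["default", "high_contrast"], epsilon=0.0)
adapter.update("default", 0.0)
adapter.update("high_contrast", 1.0)
adapter.update("high_contrast", 0.0)

print(adapter.choose())
```

With a non-zero epsilon, the adapter would occasionally try a variant that currently looks worse, which is how it keeps learning when a user's needs change over time.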

The distribution of the identified paradigms in the reviewed papers is shown in Figure 7.

Figure 7.

Distribution of identified machine learning paradigms applied to AUI and IUI research from reviewed papers (n = 24). Supervised learning dominates (83%), while reinforcement, generative, and fuzzy–NLP hybrid paradigms appear only sporadically. Note: Although 57 papers were included in the review, only 24 provided sufficient methodological detail for paradigm classification.

In addition to classification by learning paradigm, the identified models can also be grouped according to their model family or algorithmic structure, as shown in Table 3. The most frequently represented group consists of neural networks, including both classical and deep architectures such as MLP, CNNs, Inception, MobileNet, UIBert, and ActionBert. These models are primarily associated with tasks involving pattern recognition, multimodal interaction, and semantic understanding.

Table 3.

Distribution of ML paradigms grouped by model family.

Tree-based and ensemble methods were slightly less common. The identified models were K-Star, PART, Adaptive Boosting (AdaBoost), Gradient Boosting, Random Forest Classifier, and Decision Stumps, which are typically used for classification and decision-making in adaptive systems. Support Vector Machines (SVM) appear in two studies, remaining one of the most established supervised algorithms for classification tasks.

Notably, several studies employed hybrid approaches that combined different model families. Examples include the integration of supervised deep learning with reinforcement learning [] or RL and generative AI models [], as well as the combination of Random Forest and generative AI techniques []. These works are marked in Table 3 as belonging to multiple categories to reflect their mixed-model nature. Finally, Generative AI with Deep Learning (LLMs), fuzzy logic-based hybrid approaches and Logistic Regression Classifier each appeared once, suggesting that these emerging or specialized paradigms are only beginning to be applied in the context of adaptive and intelligent user interfaces.

Overall, the predominance of supervised learning models indicates that adaptive and intelligent user interfaces still rely on well-established, labeled data-driven approaches. The limited presence of reinforcement and generative paradigms suggests that real-time adaptation and autonomous interface behavior remain underexplored. Moreover, the frequent use of neural networks and ensemble methods reflects an emphasis on multimodal perception and decision-level fusion rather than unsupervised or self-learning mechanisms.

This tendency can also be explained by the difficulty of collecting sufficiently large and diverse datasets for adaptive user interface research, where data often depends on specific user populations and interaction contexts. As noted by [], the efficiency of the learning vector and dataset quality significantly affect convergence speed and model accuracy. This highlights the ongoing challenge of developing reliable models of user preferences when the training data from user interactions are limited, reaffirming the multidisciplinary nature of adaptive interface research.

3.3. RQ3 in What Type of Environments Are Adaptive Accessible User Interfaces Implemented?

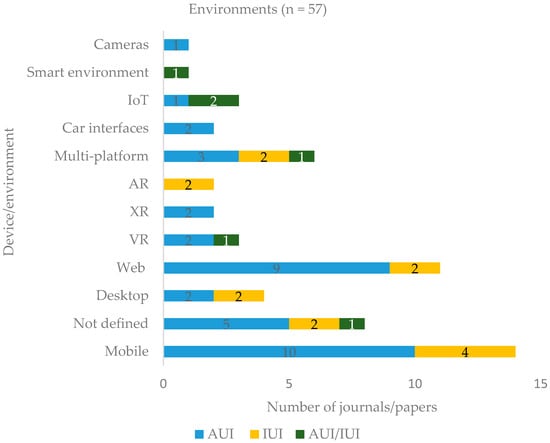

The analysis of the technological environments in which adaptive user interfaces (AUI) were implemented across all 57 studies included in the review reveals a strong prevalence of mobile environments (n = 14, 25%), followed by web-based systems (n = 11, 19%) and multi-platform solutions (n = 6, 11%). In this study, multi-platform denotes adaptive solutions intentionally developed for interoperability across different devices or operating systems (e.g., mobile, web, and desktop), enabling continuity of accessibility features and adaptive behaviors regardless of the platform used. Ten of the 14 mobile solutions were implemented as AUI, while multi-platform systems tended to combine elements of both AUI and IUI paradigms. Desktop environments were less common (n = 4). Immersive contexts such as virtual reality (n = 3) [,,], extended reality (XR) (n = 2) [,] and augmented reality (AR) (n = 2) [,] appeared less frequently. Sensor-based and other systems were the least represented: Internet of Things (IoT) solutions appeared in three papers (n = 3) [,,], while smart environments [] and camera-based systems [] were each represented by one paper. Two papers [,] focused on automotive contexts, suggesting that adaptive accessibility solutions are emerging in vehicle interfaces as well. Both IoT and smart environment solutions combined AUI and IUI, such as mobile-smart home systems using machine learning for hearing-impaired users [] and web-based dashboards generating AI-enhanced descriptions for visually impaired users []. In contrast, eight studies did not specify the technological environment, likely indicating a conceptual or methodological rather than application-oriented focus.
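The reported counts and percentages can be cross-checked with a few lines of arithmetic. The sketch below uses only the per-environment counts stated in this section; the dictionary labels are chosen here for illustration, and it assumes that each study was assigned to exactly one environment category.

```python
# Per-environment study counts as reported in the text.
# Assumption: the categories are mutually exclusive.
environment_counts = {
    "mobile": 14,
    "web": 11,
    "multi-platform": 6,
    "desktop": 4,
    "virtual reality": 3,
    "extended reality": 2,
    "augmented reality": 2,
    "IoT": 3,
    "smart environment": 1,
    "camera-based": 1,
    "automotive": 2,
    "not specified": 8,
}

total = sum(environment_counts.values())


def share(count: int, total_count: int) -> int:
    """Return the percentage share rounded to the nearest whole number."""
    return round(100 * count / total_count)


shares = {name: share(count, total) for name, count in environment_counts.items()}

if __name__ == "__main__":
    print(f"total studies: {total}")
    for name, pct in shares.items():
        print(f"{name:>18}: {environment_counts[name]:2d} ({pct}%)")
```

Under that assumption the counts add up to the 57 included studies, and the rounded shares for mobile, web, and multi-platform environments match the percentages given in the text.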

Several studies emphasize that the environmental context constitutes a fundamental determinant of adaptive interface behavior. The interaction environment defines the conditions under which users engage with computational systems, thereby directly influencing the requirements for adaptation []. However, modeling the environment remains a persistent challenge due to the variability of physical settings, device constraints, and user behavior across contexts. In virtual and mixed-reality environments, these limitations are even more pronounced, as accessibility depends on factors such as spatial layout, reachability, and sensory feedback [,]. Consequently, the environment must be considered an integral component of the adaptation model rather than an external or secondary variable.

Figure 8 shows the distribution of the solutions across environments, together with the type of user interface implemented in each.

Figure 8.

Technological environments in which AUI, IUI or both interfaces were implemented (n = 57). Mobile systems prevail (25%), followed by web (19%) and multi-platform (11%) applications, with immersive environments (VR/AR/XR) and IoT contexts still emerging.

This distribution indicates that adaptive accessible user interfaces are primarily implemented in mobile and web environments, where accessibility and personalization are most easily achieved and tested. Immersive, sensor-based, and automotive contexts remain underexplored, representing potential directions for future research, especially given the potential of IoT and smart environments to enable real-time, context-aware adaptation across multiple modalities.

3.4. RQ4 What Are the Challenges in Applying Machine Learning for the Development of Adaptive Accessible Interfaces for People with Different Types of Disabilities?

The main challenges in developing such interfaces include the size of the user interaction dataset used to train the machine learning model, the selection of an appropriate machine learning paradigm, and ethical issues such as protecting data privacy and preventing algorithmic bias. Algorithmic bias refers to situations where algorithms, especially those using machine learning or AI, make decisions that are unfair or discriminatory against certain groups of people []. Ethical issues are especially important for these systems because personalized learning involves collecting and analyzing sensitive user data.

An adaptive system must be capable of managing the personalized learning paths for each individual user. It should track their activities, interpret them using specific models, and infer the user’s needs and preferences from knowledge gathered from large datasets. This includes monitoring behaviors, habits, and patterns, and analyzing them in comparison with the user’s historical activities [].

In addition to data-related and ethical concerns, several technical and infrastructural challenges arise. These include the storage and management of large, multimodal datasets, which often require scalable cloud or hybrid storage solutions capable of handling continuous data streams from sensors, interfaces, or user devices. The type of storage used (e.g., local databases, cloud-based repositories, or distributed data lakes) directly affects system performance, latency, and data accessibility. Furthermore, developing such systems demands an appropriate software architecture, typically involving modular components for data acquisition, preprocessing, model training, and user interface adaptation. On the hardware level, sufficient computational power is needed for real-time processing, often relying on GPUs or edge computing devices to reduce response time and ensure accessibility even with limited connectivity. Finally, interoperability between software and hardware components represents another challenge, as adaptive systems frequently integrate sensors, assistive devices, and mobile or web platforms, all of which must communicate efficiently and securely.

We also found that very few solutions rely on the principles of Universal Design. Most solutions address specific problems and offer customized approaches for certain situations, rather than creating universal solutions that work for all users.

A small number of studies (n = 7) mention or implement AUI or IUI in new technologies. The term "emerging technologies" is mentioned in [].

4. Discussion and Future Research Directions

4.1. Performance and User Experience of Adaptive Interfaces

The analysis shows that in some contexts, such as automotive interfaces [], adaptive user interfaces did not significantly reduce drivers’ cognitive load compared to static interfaces, contrary to expectations. The results indicated the opposite trend: participants using static interfaces performed better in certain conditions, requiring fewer clicks and solving tasks more quickly, especially in more complex environments (e.g., city driving). The user experience ratings also did not show a clear preference for adaptive interfaces. This suggests that once users become accustomed to a particular design and interaction style, they should not encounter unexpected changes in these elements. This finding can be explained by the principles of Universal Design [], especially those emphasizing simplicity and intuitiveness. In practice, this means maintaining a consistent and predictable design, which helps users navigate more easily and reduces the likelihood of frustration when using the system.

Designers should exercise caution when allowing systems to make changes on behalf of users, as such automation may violate core principles of usable interface design []. When interfaces automatically adapt or modify behavior without explicit user input, they risk breaking predictability, reducing user control, and undermining consistency, which can lead to confusion, frustration, and errors. According to [], effective design must communicate system status, provide clear affordances and feedback, and support error prevention and recovery. Importantly, the goal of adaptive interfaces is not to replace users but to empower them, enabling design choices that give humans control over technology []. These principles reinforce the importance of maintaining user control and predictability in interface design.

4.2. Gaps in Current Applications

Although various applications of AUI have been investigated, no studies have been found that implement such solutions in AR, 3D, or holographic environments. This approach could be valuable for future research, especially in the context of educational games that use adaptive interfaces []. Such games could serve as instruments for research and evaluation of the effectiveness of systems that implement AUI, considering all the challenges and obstacles mentioned above. The research shows the potential of applying AR and VR technologies in education [,], where integrating adaptive interfaces into these environments can significantly improve the user experience and the evaluation of the systems themselves.

4.3. Proposed Adaptive Accessible UI Model

Based on the results of the literature review conducted in this study, a new accessible adaptive user interface model is proposed. Unlike traditional adaptive interfaces [], this model does not apply automatic changes in real time. Instead, it introduces a dedicated user-approval module that allows users to confirm or reject proposed changes before they are implemented. This mechanism minimizes the risk of user dissatisfaction or errors caused by unexpected modifications, since adjustments occur only after explicit user confirmation at predefined intervals. Importantly, the model also includes an option for users to revert or undo previously accepted adaptations at any stage, thereby restoring the interface to an earlier state if the applied changes prove inconvenient or counterproductive. This ensures that user control remains central throughout the adaptation process, reinforcing trust, transparency, and alignment with the principles of Universal Design.

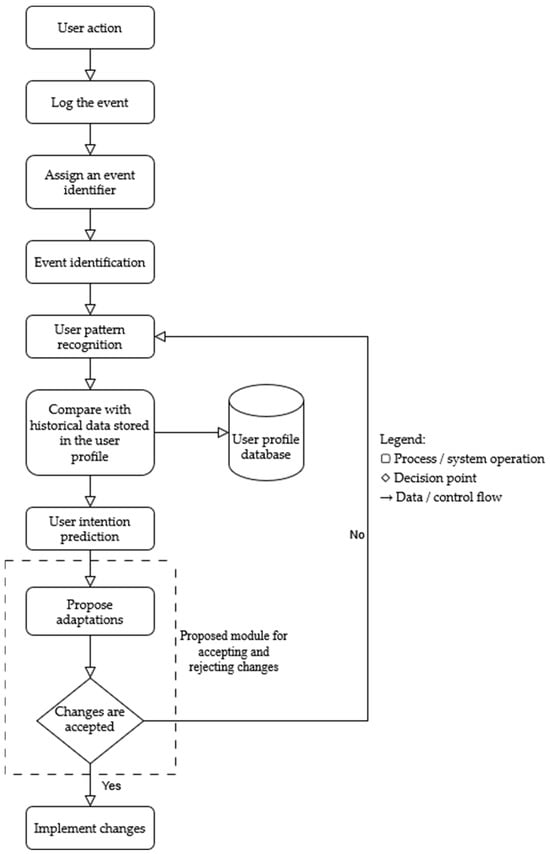

The proposed model consists of several interdependent processes that together enable adaptive yet user-controlled interaction. The system begins with the capture of user actions, which are logged and assigned an event identifier. These events are then processed through an event identification component that classifies the interaction according to predefined categories. The identified events are subsequently analyzed within the user pattern recognition and user intention prediction components, where recognized behavioral patterns are compared with historical data stored in the user profile. To ensure semantic consistency and interoperability of contextual information, the model could employ an ontology-based structure for representing user, device, and environmental parameters []. This would allow the system to interpret contextual relationships formally, facilitating scalable and transparent adaptation across devices and interaction scenarios.

Before any changes are implemented, the proposed adaptations are presented to the user through the module for accepting or rejecting interface changes. User feedback is provided through this module. Either approval or rejection is stored in the user profile and later utilized by the system to refine the pattern recognition and intention-prediction accuracy. In this way, the model supports a continuous learning loop that incorporates user consent into every adaptation stage, maintaining transparency and user autonomy while improving personalization over time. The role of the user in this process is active and transparent. The proposed changes are presented through non-intrusive communication channels—such as dialog windows, notification banners, dedicated accessibility panels, or a preview mode—depending on the interface context. The frequency of adaptation proposals is determined by time-based or event-based triggers but can also be dynamically adjusted according to user interaction patterns and feedback frequency. This design ensures that adaptation remains contextually aware yet non-disruptive, maintaining user control over the process. The complete process flow of the proposed model is illustrated in Figure 9.

Figure 9.

Process flow of the proposed adaptive accessible user interface model.
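The triggering policy for adaptation proposals (time-based or event-based, adjusted according to user feedback) could be sketched as follows. This is a minimal illustration, not part of the proposed model's specification: the class name `ProposalScheduler`, the default thresholds, and the back-off rule after a rejection are all assumptions introduced here.

```python
from dataclasses import dataclass


@dataclass
class ProposalScheduler:
    """Decides when an adaptation proposal may be shown to the user."""

    base_interval_s: float = 300.0   # hypothetical time-based trigger
    base_event_threshold: int = 20   # hypothetical event-based trigger
    backoff: float = 2.0             # assumed slow-down factor after a rejection
    max_factor: float = 8.0          # upper bound on the slow-down
    factor: float = 1.0              # current multiplier on both triggers
    last_prompt_s: float = 0.0
    events_since_prompt: int = 0

    def record_event(self) -> None:
        """Count one user interaction since the last proposal."""
        self.events_since_prompt += 1

    def should_prompt(self, now_s: float) -> bool:
        """True when either the time-based or the event-based trigger fires."""
        time_due = now_s - self.last_prompt_s >= self.base_interval_s * self.factor
        events_due = self.events_since_prompt >= self.base_event_threshold * self.factor
        return time_due or events_due

    def record_decision(self, now_s: float, accepted: bool) -> None:
        """Reset the counters; prompt less often after a rejection."""
        self.last_prompt_s = now_s
        self.events_since_prompt = 0
        if accepted:
            self.factor = 1.0
        else:
            self.factor = min(self.factor * self.backoff, self.max_factor)
```

Slowing down after rejections is one possible way to keep proposals non-disruptive; other policies, such as letting users set the interval themselves, would fit the model equally well.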

4.4. Implementation Considerations

From an implementation perspective, the approval module can operate as an intermediate layer between the front-end interface and the machine learning back end. It exchanges structured data (e.g., JSON requests) indicating the proposed adaptation, while the ML module processes aggregated historical data to refine future proposals.
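As an illustration of the structured data exchanged by the approval module, the following sketch serializes a proposal and parses the user's decision as JSON. The message fields (`proposal_id`, `target`, `proposed_value`, and so on) and the class names are hypothetical; the model itself does not prescribe a message schema.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class AdaptationProposal:
    """Message sent to the front end (all field names are assumptions)."""

    proposal_id: str
    user_id: str
    target: str          # UI setting the adaptation would change
    current_value: str
    proposed_value: str
    reason: str          # short explanation shown to the user


@dataclass
class ApprovalResponse:
    """Message returned by the approval module."""

    proposal_id: str
    decision: str        # "ACCEPT" or "REJECT"


def encode(proposal: AdaptationProposal) -> str:
    """Serialize a proposal to a JSON string."""
    return json.dumps(asdict(proposal), sort_keys=True)


def decode_response(payload: str) -> ApprovalResponse:
    """Parse and validate the user's decision."""
    data = json.loads(payload)
    if data["decision"] not in {"ACCEPT", "REJECT"}:
        raise ValueError(f"unknown decision: {data['decision']!r}")
    return ApprovalResponse(proposal_id=data["proposal_id"], decision=data["decision"])
```

Including a human-readable `reason` in the proposal is a design choice of this sketch, intended to support the transparency goals of the model.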

Ethical and transparency considerations are integral to this model. Users are explicitly informed that the system uses their feedback to improve future adaptations, and they retain control over data sharing and storage preferences. Providing this transparency addresses common ethical concerns regarding user autonomy, consent, and explainability in adaptive AI systems, as highlighted in recent discussions on responsible machine learning and interface personalization.

This model aims to bridge the gap between automation and user autonomy by introducing an intermediary approval stage in accessible adaptive user interface systems. It preserves the benefits of ML-driven personalization while ensuring predictability and trust, key elements identified throughout this study as essential for the acceptance of adaptive accessible user interfaces.

4.5. Implications for Future Research

Future work will focus on the practical implementation and evaluation of the proposed adaptive accessible user interface model based on Universal Design. The ongoing research phase involves testing static adaptable mechanisms within 3D environments. This research includes participants both with and without disabilities, and also aims to address the lack of studies involving users with motor impairments identified in the literature review.

The evaluation will combine subjective and objective measures to assess usability and user satisfaction. The objective data will include task completion time, accuracy of task performance, and the number of skipped tasks, while subjective data will be collected through standardized questionnaires such as the System Usability Scale (SUS) and the NASA Task Load Index (NASA-TLX). These instruments will provide insights into perceived usability, workload, and acceptance. The study has received approval from the institutional ethics committee. No personal data will be collected during the research.
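For reference, the standard SUS scoring rule can be written in a few lines. The sketch below assumes the usual ten-item questionnaire answered on a 1 to 5 scale; the function name is chosen here for illustration and is not taken from the study protocol.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Compute the System Usability Scale score (0 to 100) for one participant.

    Odd-numbered items contribute (response - 1), even-numbered items
    contribute (5 - response); the sum is multiplied by 2.5.
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    if any(not 1 <= r <= 5 for r in responses):
        raise ValueError("each response must be between 1 and 5")
    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += response - 1
        else:
            total += 5 - response
    return total * 2.5
```

A participant who answers 3 on every item obtains a score of 50, the midpoint of the scale.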

Additionally, future work will address bias and fairness issues, which may arise from limited or unbalanced datasets. We are aware that dataset limitations represent a major source of algorithmic bias, as highlighted in our literature review and research questions. The system will include a demo phase designed to familiarize users with the system, its adaptive options, and how their interactions influence the interface behavior. This approach ensures that users understand the system’s functionality and maintains transparency.

In terms of technical direction, future work will also explore the application of advanced machine learning paradigms such as reinforcement learning, self-supervised learning, transfer learning, and synthetic data generation to enhance personalization while maintaining user control and transparency. Additionally, fuzzy logic and generative AI approaches will be considered to address uncertainty in user behavior and enable dynamic adaptation in real time. In line with this, pseudocode for the proposed module is given in Algorithm 1.

Algorithm 1.

Approval-Driven Adaptive Accessible User Interface Algorithm

    Initialize system and load user profile data
    while system is running do
        Capture user interactions and contextual parameters
        Generate event_id for each detected interaction
        Classify event using Event_Identification_Module
        Analyze user patterns via Pattern_Recognition_Module
        Predict potential adaptation using Intention_Prediction_Module
        if adaptation proposal generated then
            Present adaptation proposal to user (non-intrusive UI prompt)
            if user_approval = “ACCEPT” then
                Apply proposed adaptation
                Log adaptation and update user profile
            else if user_approval = “REJECT” then
                Discard adaptation and record user preference
            else if user_action = “UNDO” then
                Revert interface to previous state
                Log undo event and update user profile
            end if
        end if
        Update model based on feedback and new user data
    end while
    Save user profile and adaptation history before exit
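The control flow of Algorithm 1 can be illustrated with a small runnable sketch. It is a simplification under stated assumptions: the event identification, pattern recognition, and intention prediction modules are collapsed into a single hypothetical `predict` callback, the user prompt is a hypothetical `ask_user` callback, and undo is exposed as a separate method so that it can be invoked at any time rather than only when a proposal is pending.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Proposal:
    setting: str
    value: object


@dataclass
class AdaptiveSession:
    ui_state: dict
    predict: Callable[[list], Optional[Proposal]]  # stands in for the prediction modules
    ask_user: Callable[[Proposal], str]            # returns "ACCEPT" or "REJECT"
    events: list = field(default_factory=list)
    history: list = field(default_factory=list)    # earlier UI states, kept for undo
    profile_log: list = field(default_factory=list)

    def handle_event(self, event: str) -> None:
        """Process one captured interaction and, if needed, ask for approval."""
        self.events.append(event)
        proposal = self.predict(self.events)
        if proposal is None:
            return
        decision = self.ask_user(proposal)
        if decision == "ACCEPT":
            self.history.append(dict(self.ui_state))  # snapshot before the change
            self.ui_state[proposal.setting] = proposal.value
        # Both approvals and rejections are recorded for later model refinement.
        self.profile_log.append((proposal.setting, proposal.value, decision))

    def undo(self) -> bool:
        """Revert the most recent accepted adaptation, if there is one."""
        if not self.history:
            return False
        self.ui_state = self.history.pop()
        self.profile_log.append(("undo", None, "UNDO"))
        return True


def zoom_predictor(events: list) -> Optional[Proposal]:
    """Toy rule: three consecutive zoom-in events suggest a larger font."""
    if events[-3:] == ["zoom_in"] * 3:
        return Proposal("font_scale", 1.25)
    return None
```

In this sketch the interface state changes only inside the `ACCEPT` branch, which mirrors the central idea of the model: no adaptation is applied without explicit user confirmation, and every accepted change can be reverted.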

The proposed model also serves as the conceptual foundation for future research. The next phase will involve implementing and empirically validating this framework in controlled experimental settings, integrating insights from this systematic literature review. Specifically, future development will focus on incorporating the selected machine learning paradigms, environment types, and accessibility considerations identified in this study, with particular attention to educational, rehabilitative, and communication-oriented applications. This approach will enable comprehensive testing of the model’s adaptability, user acceptance, and ethical transparency across different technological contexts and user groups.

5. Conclusions

This paper examined the application of machine learning in the development of adaptive accessible user interfaces (AUI), focusing on both people with disabilities and older adults. The systematic literature review revealed that supervised learning remains the predominant paradigm, while reinforcement, generative, and fuzzy logic approaches remain underexplored despite their potential for adaptive interface modeling. Of the analyzed works, 75% explicitly involved people with disabilities, and the integration of Universal Design principles was rare.

The analysis also identified major challenges in this field, including the limited size and diversity of datasets, the selection of appropriate ML paradigms, and ethical concerns such as data privacy and algorithmic bias.

The contributions of this study are threefold. (i) It provides a comparative overview of ML paradigms used for adaptive and intelligent user interfaces within the analyzed period and selected databases, offering what appears to be one of the first such analyses from the perspective of accessibility and Universal Design. (ii) It identifies critical research gaps, particularly the lack of studies involving hearing impairments, age-related difficulties and motor impairments, limited representation of emerging technologies such as AR/VR, and insufficient inclusion of Universal Design guidelines. (iii) It proposes a novel user-approved adaptive interface model that introduces an approval and undo mechanism, balancing automation and user control to enhance transparency, predictability, and user trust.

The proposed model can be implemented in augmented or three-dimensional environments, such as educational serious games, where it would provide dynamic yet user-controlled adaptive experiences. The results of this review can also contribute to the creation of accessibility guidelines for adaptive interfaces in handheld and immersive AR environments, promoting inclusive design that benefits both people with disabilities and the general population.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/app152312538/s1, Table S1: PRISMA-ScR Checklist []; Table S2: Query results; Table S3: Screening Results and Process; Table S4: Summary of Included Studies.

Author Contributions

Conceptualization, M.K., I.Z. and Ž.C.; methodology, M.K., I.Z., F.Š.-M. and Ž.C.; validation, F.Š.-M. and Ž.C.; formal analysis, M.K.; investigation, M.K.; data curation, M.K.; writing—original draft preparation, M.K. and I.Z.; writing—review and editing, M.K., I.Z., F.Š.-M. and Ž.C.; visualization, M.K.; supervision, I.Z. and Ž.C.; project administration, I.Z. and F.Š.-M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
| --- | --- |
| PWD | People with disabilities |
| AUI | Adaptive user interface |
| IUI | Intelligent user interface |
| CUI | Conversational user interfaces |
| AT | Assistive technologies |
| ML | Machine learning |
| NLP | Natural Language Processing |
| MLP | Multi-layer Perceptron |
| CNN | Convolutional Neural Networks |
| XR | Extended reality |
| AR | Augmented reality |
| VR | Virtual reality |
| UD | Universal design |
| IoT | Internet of Things |
| BCI | Brain–computer interface |
| RL | Reinforcement learning |

References

- World Health Organization. Disability. Available online: https://www.who.int/news-room/fact-sheets/detail/disability-and-health (accessed on 30 September 2025).

- Babic, J.; Car, Z.; Gace, I.; Lovrek, I.; Podobnik, V.; Topolovac, I.; Vdovic, H.; Zilak, M. Analysis of Emerging Technologies for Improving Social Inclusion of People with Disabilities; University of Zagreb: Zagreb, Croatia, 2020.

- Rotolo, D.; Hicks, D.; Martin, B.R. What Is an Emerging Technology? Res. Policy 2015, 44, 1827–1843.

- Garlinska, M.; Osial, M.; Proniewska, K.; Pregowska, A. The Influence of Emerging Technologies on Distance Education. Electronics 2023, 12, 1550.

- Keselj, A.; Zubrinic, K.; Milicevic, M.; Kuzman, M. An Overview of 3D Holographic Visualization Technologies and Their Applications in Education. In Proceedings of the 2023 46th MIPRO ICT and Electronics Convention (MIPRO), Opatija, Croatia, 22–26 May 2023.

- Sokołowska, B. Impact of Virtual Reality Cognitive and Motor Exercises on Brain Health. Int. J. Environ. Res. Public Health 2023, 20, 4150.

- Yücelyiǧit, S.; Aral, N. The Effects of Three Dimensional (3D) Animated Movies and Interactive Applications on Development of Visual Perception of Preschoolers. Educ. Sci. 2016, 41, 255–271.

- Gilbert, J.K.; Justi, R. The Contribution of Visualisation to Modelling-Based Teaching. In Modelling-Based Teaching in Science Education; Springer: Cham, Switzerland, 2016.

- Zhao, Y.; Jiang, J.; Chen, Y.; Liu, R.; Yang, Y.; Xue, X.; Chen, S. Metaverse: Perspectives from Graphics, Interactions and Visualization. Vis. Inform. 2022, 6, 56–67.

- Wilkinson, P. A Brief History of Serious Games. In Entertainment Computing and Serious Games; Springer: Cham, Switzerland, 2016; Volume 9970.

- Ciman, M.; Gaggi, O.; Sgaramella, T.M.; Nota, L.; Bortoluzzi, M.; Pinello, L. Serious Games to Support Cognitive Development in Children with Cerebral Visual Impairment. Mob. Netw. Appl. 2018, 23, 1703–1714.

- Santoso, H.B.; Schrepp, M. Importance of User Experience Aspects for Different Software Product Categories. In User Science and Engineering; Communications in Computer and Information Science; Springer: Singapore, 2018; Volume 886.

- Kühl, N.; Schemmer, M.; Goutier, M.; Satzger, G. Artificial Intelligence and Machine Learning. Electron. Mark. 2022, 32, 2235–2244.

- Stige, Å.; Zamani, E.D.; Mikalef, P.; Zhu, Y. Artificial Intelligence (AI) for User Experience (UX) Design: A Systematic Literature Review and Future Research Agenda. Inf. Technol. People 2024, 37, 2324–2352.

- Keselj, A.; Milicevic, M.; Zubrinic, K.; Car, Z. The Application of Deep Learning for the Evaluation of User Interfaces. Sensors 2022, 22, 9336.

- Brdnik, S.; Heričko, T.; Šumak, B. Intelligent User Interfaces and Their Evaluation: A Systematic Mapping Study. Sensors 2022, 22, 5830.

- Muhamad, M.; Abdul Razak, F.H.; Haron, H. A Conceptual Framework for Co-Design Approach to Support Elderly Employability Website. In User Science and Engineering; Communications in Computer and Information Science; Springer: Singapore, 2018; Volume 886.

- Wong, C.Y.; Ibrahim, R.; Hamid, T.A.; Mansor, E.I. Usability and Design Issues of Smartphone User Interface and Mobile Apps for Older Adults. In User Science and Engineering; Communications in Computer and Information Science; Springer: Singapore, 2018; Volume 886.

- Alzahrani, M.; Uitdenbogerd, A.L.; Spichkova, M. Human-Computer Interaction: Influences on Autistic Users. Procedia Comput. Sci. 2021, 192, 4691–4700.

- Heumader, P.; Miesenberger, K.; Murillo-Morales, T. Adaptive User Interfaces for People with Cognitive Disabilities within the Easy Reading Framework. In Computers Helping People with Special Needs; Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, 2020; Volume 12377.

- Liu, J.; Wong, C.K.; Hui, K.K. An Adaptive User Interface Based on Personalized Learning. IEEE Intell. Syst. 2003, 18, 52–57.

- Shneiderman, B.; Plaisant, C.; Cohen, M.; Jacobs, S.; Elmqvist, N.; Diakopoulos, N. Designing the User Interface: Strategies for Effective Human-Computer Interaction, 6th ed.; Pearson Education: Boston, MA, USA, 2017; ISBN 9780134380384.

- Centre for Excellence in Universal Design. About Universal Design. Available online: https://universaldesign.ie/about-universal-design (accessed on 30 September 2025).

- Centre for Excellence in Universal Design. The 7 Principles. Available online: https://universaldesign.ie/about-universal-design/the-7-principles (accessed on 30 September 2025).

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 Statement: An Updated Guideline for Reporting Systematic Reviews. BMJ 2021, 372, 71.

- Clarivate. Web of Science. Available online: https://www.webofscience.com/wos/woscc/advanced-search (accessed on 30 September 2025).

- Elsevier. Scopus: Abstract and Citation Database. Available online: https://www.elsevier.com/products/scopus (accessed on 4 November 2025).

- Kolekar, S.V.; Pai, R.M.; Manohara Pai, M.M. Rule Based Adaptive User Interface for Adaptive E-Learning System. Educ. Inf. Technol. 2019, 24, 613–641. [Google Scholar] [CrossRef]

- Johnston, V.; Black, M.; Wallace, J.; Mulvenna, M.; Bond, R. A Framework for the Development of a Dynamic Adaptive Intelligent User Interface to Enhance the User Experience. In Proceedings of the 31st European Conference on Cognitive Ergonomics: “Design for Cognition”—ECCE 2019, Belfast, UK, 10–13 September 2019; pp. 32–35. [Google Scholar] [CrossRef]

- Gibson, R.C.; Bouamrane, M.M.; Dunlop, M.D. Ontology-Driven, Adaptive, Medical Questionnaires for Patients with Mild Learning Disabilities. In Artificial Intelligence XXXVI; Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, 2019; Volume 11927, pp. 107–121. [Google Scholar] [CrossRef]

- Fernández, C.; Gonzalez-Rodriguez, M.; Fernandez-Lanvin, D.; De Andrés, J.; Labrador, M. Implicit Detection of User Handedness in Touchscreen Devices through Interaction Analysis. PeerJ Comput. Sci. 2021, 7, e487. [Google Scholar] [CrossRef]

- Bessghaier, N.; Soui, M.; Ghaibi, N. Towards the Automatic Restructuring of Structural Aesthetic Design of Android User Interfaces. Comput. Stand. Interfaces 2022, 81, 103598. [Google Scholar] [CrossRef]

- Maddali, H.T.; Dixon, E.; Pradhan, A.; Lazar, A. Investigating the Potential of Artificial Intelligence Powered Interfaces to Support Different Types of Memory for People with Dementia. In Proceedings of the Extended Abstracts of the 2022 CHI Conference on Human Factors in Computing Systems, New Orleans, LA, USA, 29 April–5 May 2022; Volume 226. [Google Scholar] [CrossRef]

- Gomaa, A.; Alles, A.; Meiser, E.; Rupp, L.H.; Molz, M.; Reyes, G. What’s on Your Mind? A Mental and Perceptual Load Estimation Framework towards Adaptive In-Vehicle Interaction While Driving. In Proceedings of the 14th International ACM Conference on Automotive User Interfaces and Interactive Vehicular Applications—AutomotiveUI 2022, Seoul, Republic of Korea, 17–20 September 2022; Volume 22, pp. 215–225. [Google Scholar] [CrossRef]

- Chen, J.; Ganguly, B.; Kanade, S.G.; Duffy, V.G. Impact of AI on Mobile Computing: A Systematic Review from a Human Factors Perspective. In HCI International 2023—Late Breaking Papers; Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, 2023; Volume 14059, pp. 24–38.

- Personalizing the User Interface for People with Disabilities. In Personalized Human-Computer Interaction, Chapter 8; De Gruyter; IEEE Xplore. Available online: https://ieeexplore.ieee.org/document/10790721 (accessed on 10 November 2025).

- Wang, J.; Yang, C.; Fu, E.Y.; Ngai, G.; Leong, H.V. Is Your Mouse Attracted by Your Eyes: Non-Intrusive Stress Detection in off-the-Shelf Desktop Environments. Eng. Appl. Artif. Intell. 2023, 123, 106495.

- Castro, G.P.B.; Chiappe, A.; Rodríguez, D.F.B.; Sepulveda, F.G. Harnessing AI for Education 4.0: Drivers of Personalized Learning. Electron. J. e-Learn. 2024, 22, 1–14.

- Lu, J.; Liu, Y.; Shen, S.; Ren, H. Exploration of a Method for Insight into Accessibility Design Flaws Based on Touch Dynamics. Humanit. Soc. Sci. Commun. 2024, 11, 714.

- Xiao, S.; Chen, Y.; Song, Y.; Chen, L.; Sun, L.; Zhen, Y.; Chang, Y.; Zhou, T. UI Semantic Component Group Detection: Grouping UI Elements with Similar Semantics in Mobile Graphical User Interface. Displays 2024, 83, 102679.

- Ali, A.; Xia, Y.; Navid, Q.; Khan, Z.A.; Khan, J.A.; Aldakheel, E.A.; Khafaga, D. Mobile-UI-Repair: A Deep Learning Based UI Smell Detection Technique for Mobile User Interface. PeerJ Comput. Sci. 2024, 10, e2028.

- Darmawan, J.T.; Sigalingging, X.K.; Faisal, M.; Leu, J.S.; Ratnasari, N.R.P. Neural Network-Based Small Cursor Detection for Embedded Assistive Technology. Vis. Comput. 2024, 40, 8425–8439.

- Lu, J.; Liu, Y.; Lv, T.; Meng, L. An Emotional-Aware Mobile Terminal Accessibility-Assisted Recommendation System for the Elderly Based on Haptic Recognition. Int. J. Hum. Comput. Interact. 2023, 40, 7593–7609.

- Ferdous, J.; Lee, H.N.; Jayarathna, S.; Ashok, V. Enabling Efficient Web Data-Record Interaction for People with Visual Impairments via Proxy Interfaces. ACM Trans. Interact. Intell. Syst. 2023, 13, 1–27.

- Hong, J.; Gandhi, J.; Mensah, E.E.; Zeraati, F.Z.; Jarjue, E.; Lee, K.; Kacorri, H. Blind Users Accessing Their Training Images in Teachable Object Recognizers. In Proceedings of the 24th International ACM SIGACCESS Conference on Computers and Accessibility—ASSETS 2022, Athens, Greece, 23–26 October 2022.

- Meiser, E.; Alles, A.; Selter, S.; Molz, M.; Gomaa, A.; Reyes, G. In-Vehicle Interface Adaptation to Environment-Induced Cognitive Workload. In Adjunct Proceedings of the 14th International ACM Conference on Automotive User Interfaces and Interactive Vehicular Applications, AutomotiveUI 2022, Seoul, Republic of Korea, 17–20 September 2022; Association for Computing Machinery: New York, NY, USA, 2022.

- Akkapusit, P.; Ko, I.Y. Task-Oriented Approach to Guide Visually Impaired People during Smart Device Usage. In Proceedings of the 2021 IEEE International Conference on Big Data and Smart Computing, BigComp 2021, Jeju Island, Republic of Korea, 17–20 January 2021.

- Bai, C.; Zang, X.; Xu, Y.; Sunkara, S.; Rastogi, A.; Chen, J.; Agüera y Arcas, B. UIBert: Learning Generic Multimodal Representations for UI Understanding. In Proceedings of the IJCAI International Joint Conference on Artificial Intelligence, Montreal, QC, Canada, 19–26 August 2021.

- Zouhaier, L.; Hlaoui, Y.B.D.; Ayed, L.B. A Reinforcement Learning Based Approach of Context-Driven Adaptive User Interfaces. In Proceedings of the 2021 IEEE 45th Annual Computers, Software, and Applications Conference, COMPSAC 2021, Madrid, Spain, 12–16 July 2021.

- Da Tavares, J.E.R.; Da Guterres, T.D.R.; Barbosa, J.L.V. Apollo APA: Towards a Model to Care of People with Hearing Impairment in Smart Environments. In Proceedings of the 25th Brazilian Symposium on Multimedia and the Web, WebMedia 2019, Rio de Janeiro, Brazil, 29 October–1 November 2019.

- Guo, A. Crowd-AI Systems for Non-Visual Information Access in the Real World. In Proceedings of the UIST 2018 Adjunct—Adjunct Publication of the 31st Annual ACM Symposium on User Interface Software and Technology, Berlin, Germany, 14 October 2018.

- Lacoche, J.; Duval, T.; Arnaldi, B.; Maisel, E.; Royan, J. Machine Learning Based Interaction Technique Selection for 3D User Interfaces. In Proceedings of the Virtual Reality and Augmented Reality: 16th EuroVR International Conference, EuroVR 2019, Tallinn, Estonia, 23 October 2019; Volume 11883.

- Saif, A.; Zakir, M.T.; Raza, A.A.; Naseem, M. EvolveUI: User Interfaces That Evolve with User Proficiency. In Proceedings of the ACM SIGCAS/SIGCHI Conference on Computing and Sustainable Societies—COMPASS 2024, New Delhi, India, 8–11 July 2024; pp. 230–237.

- Stelea, G.A.; Sangeorzan, L.; Enache-David, N. Accessible IoT Dashboard Design with AI-Enhanced Descriptions for Visually Impaired Users. Future Internet 2025, 17, 274.

- Saleela, D.; Oyegoke, A.S.; Dauda, J.A.; Ajayi, S.O. Development of AI-Driven Decision Support System for Personalized Housing Adaptations and Assistive Technology. J. Aging Environ. 2025, ahead of print.

- Iqbal, M.W.; Ch, N.A.; Shahzad, S.K.; Naqvi, M.R.; Khan, B.A.; Ali, Z. User Context Ontology for Adaptive Mobile-Phone Interfaces. IEEE Access 2021, 9, 96751–96762.

- Auernhammer, J.; Ohashi, T.; Liu, W.; Dritsas, E.; Trigka, M.; Troussas, C.; Mylonas, P. Multimodal Interaction, Interfaces, and Communication: A Survey. Multimodal Technol. Interact. 2025, 9, 6.

- Ramirez-Valdez, O.; Baluarte-Araya, C.; Castillo-Lazo, R.; Ccoscco-Alvis, I.; Valdiviezo-Tovar, A.; Villafuerte-Quispe, A.; Zuñiga-Huraca, D. Control Interface for Multi-User Video Games with Hand or Head Gestures in Directional Key-Based Games. IJACSA Int. J. Adv. Comput. Sci. Appl. 2025, 16, 1.

- Shao, S. Design and Development of Artificial Intelligence-Based Smart Classroom System on B/S Architecture. In Proceedings of the 2025 International Conference on Intelligent Computing and Knowledge Extraction, ICICKE 2025, Bengaluru, India, 6–7 June 2025.

- Romero, O.J.; Haig, A.; Kirabo, L.; Yang, Q.; Zimmerman, J.; Tomasic, A.; Steinfeld, A. A Long-Term Evaluation of Adaptive Interface Design for Mobile Transit Information. In Proceedings of the 22nd International Conference on Human-Computer Interaction with Mobile Devices and Services: Expanding the Horizon of Mobile Interaction, MobileHCI 2020, Oldenburg, Germany, 5–8 October 2020; Volume 11.

- Barbu, M.; Iordache, D.D.; Petre, I.; Barbu, D.C.; Băjenaru, L. Framework Design for Reinforcing the Potential of XR Technologies in Transforming Inclusive Education. Appl. Sci. 2025, 15, 1484.

- Keutayeva, A.; Jesse Nwachukwu, C.; Alaran, M.; Otarbay, Z.; Abibullaev, B. Neurotechnology in Gaming: A Systematic Review of Visual Evoked Potential-Based Brain-Computer Interfaces. IEEE Access 2025, 13, 74940–74962.

- Chang, Y.-S.; Lin, C.-W.; Chen, C.-C. AI-Driven Augmentative and Alternative Communication System for Communication Enhancement in Cerebral Palsy Using American Sign Language Recognition. Sens. Mater. 2025, 37, 3841–3853.

- Partarakis, N.; Zabulis, X. A Review of Immersive Technologies, Knowledge Representation, and AI for Human-Centered Digital Experiences. Electronics 2024, 13, 269.

- Bellscheidt, S.; Metcalf, H.; Pham, D.; Elglaly, Y.N. Building the Habit of Authoring Alt Text: Design for Making a Change. In Proceedings of the 25th International ACM SIGACCESS Conference on Computers and Accessibility, ASSETS 2023, New York, NY, USA, 22–25 October 2023.

- Tschakert, H.; Lang, F.; Wieland, M.; Schmidt, A.; Machulla, T.K. A Dataset and Machine Learning Approach to Classify and Augment Interface Elements of Household Appliances to Support People with Visual Impairment. In Proceedings of the International Conference on Intelligent User Interfaces, IUI’23, Sydney, NSW, Australia, 27–31 March 2023; pp. 77–90.

- Ferdous, J.; Lee, H.N.; Jayarathna, S.; Ashok, V. InSupport: Proxy Interface for Enabling Efficient Non-Visual Interaction With Web Data Records. In Proceedings of the International Conference on Intelligent User Interfaces, IUI’22, Helsinki, Finland, 22–25 March 2022; Volume 22, pp. 49–62.

- Ballı, T.; Peker, H.; Pişkin, Ş.; Yetkin, E.F. RESTORATIVE: Improving Accessibility to Cultural Heritage with AI-Assisted Virtual Reality. Digit. Present. Preserv. Cult. Sci. Herit. 2025, 15, 65–72.

- Stirenko, S.; Gordienko, Y.; Shemsedinov, T.; Alienin, O.; Kochura, Y.; Gordienko, N.; Rojbi, A.; Benito, J.R.L.; González, E.A. User-Driven Intelligent Interface on the Basis of Multimodal Augmented Reality and Brain-Computer Interaction for People with Functional Disabilities. Adv. Intell. Syst. Comput. 2017, 886, 612–631.

- Datta, B.V.P.; Mihir, D.V.S.; Aralaguppi, Y.A.; Virupaxappa, G.; Somasundaram, T.; Department, G.R. IoT and Eye Tracking Based System for Cerebral Palsy Diagnosis and Assistive Technology. In Proceedings of the 2025 5th International Conference on Intelligent Technologies (CONIT), Hubbali, India, 20–22 June 2025; pp. 1–6.

- Kuruppu, K.A.G.S.R.; Kandambige, S.T.; Perera, W.H.T.H.; Cooray, N.T.L.; Nawinna, D.; Perera, J. Smart Agricultural Platform for Sri Lankan Farmers with Price Prediction, Blockchain Security, and Adaptive Interfaces. In Proceedings of the 6th International Conference on Inventive Research in Computing Applications, ICIRCA 2025, Coimbatore, India, 25–27 June 2025; pp. 1538–1544.

- Garg, A.; Reza, S.M.; Babinski, D. Enhancing ADHD Diagnostic Assessments with Virtual Reality Integrated with Biofeedback and Artificial Intelligence. In Proceedings of the 2025 8th International Conference on Information and Computer Technologies, ICICT 2025, Hawaii-Hilo, HI, USA, 14–16 March 2025; pp. 442–447.

- Ropakshi; Kaur, P.; Yadav, V.; Kumar, V.; Chahar, N.K. AccessArc: Transforming Digital Accessibility Through Secure AI-Powered Inclusive Design and Unified Service Integration. In Proceedings of the 1st International Conference on Advances in Computer Science, Electrical, Electronics, and Communication Technologies, CE2CT 2025, Bhimtal, India, 21–22 February 2025; pp. 1327–1332.

- Mohanraj, S.; Babu, C.G.; Sandhya, M.; Prabha, M.R.; Raghul Vishal, T.; Rakesh, B. Voice Bot for Visually Impaired Persons Using NLP. In Proceedings of the 4th International Conference on Smart Technologies, Communication and Robotics 2025, STCR 2025, Sathyamangalam, India, 9–10 May 2025.

- Yogapriya, M.; Swetha, V.; Leena, H.; Kandavel, N.; Murugan, R.T.; Gowri, R. VisionEase: Reconceptualizing Reading for Partial Vision Seniors. In Proceedings of the 2025 International Conference on Data Science, Agents and Artificial Intelligence, ICDSAAI 2025, Chennai, India, 28–29 March 2025.

- Nigam, S.; Waoo, A.A. Advance Collaborative AI-Driven Bots Using RPA to Enhance Workplace Inclusivity for Employees with Disabilities in India. In Proceedings of the 2025 IEEE 14th International Conference on Communication Systems and Network Technologies, CSNT 2025, Bhopal, India, 7–9 March 2025; pp. 610–617.

- Sethukarasi, T.; Yadav, R.; Ramanapriya, V.; Rivekha, J.A. Smart Knowledge Delivery System with Adaptive Accessibility Using Machine Learning. In Proceedings of the 2nd International Conference on Research Methodologies in Knowledge Management, Artificial Intelligence and Telecommunication Engineering, RMKMATE 2025, Chennai, India, 7–8 May 2025.

- He, Z.; Sunkara, S.; Zang, X.; Xu, Y.; Liu, L.; Wichers, N.; Schubiner, G.; Lee, R.; Chen, J. ActionBert: Leveraging User Actions for Semantic Understanding of User Interfaces. In Proceedings of the 35th AAAI Conference on Artificial Intelligence, AAAI 2021, Vancouver, BC, Canada, 2–9 February 2021; Volume 7.

- Miraz, M.H.; Ali, M.; Excell, P.S. Adaptive User Interfaces and Universal Usability through Plasticity of User Interface Design. Comput. Sci. Rev. 2021, 40, 100363.

- Greene, R.J.; Hunt, C.; Acosta, B.; Huang, Z.; Kaliki, R.; Thakor, N. Evaluating the Impact of a Semi-Autonomous Interface on Configuration Space Accessibility for Multi-DOF Upper Limb Prostheses. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, Abu Dhabi, United Arab Emirates, 14–18 October 2024; pp. 154–161.

- Li, X.; Wang, Y. Deep Learning-Enhanced Adaptive Interface for Improved Accessibility in E-Government Platforms. In Proceedings of the 2024 6th International Conference on Frontier Technologies of Information and Computer (ICFTIC), Qingdao, China, 13–15 December 2024.

- Seymour, W.; Zhan, X.; Coté, M.; Such, J. Who Are CUIs Really For? Representation and Accessibility in the Conversational User Interface Literature. In Proceedings of the 5th International Conference on Conversational User Interfaces, CUI 2023, Eindhoven, The Netherlands, 19–21 July 2023.