Abstract

Artificial intelligence (AI) plays an important role in analyzing air quality, providing new insights that enable informed environmental policy decisions at the local level based on air pollution modeling and forecasting. The aim of this study is to analyze various hybrid AI methods to predict, model, and anticipate hourly ground-level ozone concentrations. Ground-level ozone concentrations impact human health and the environment. The data used in this study was downloaded from the website of the Romanian Agency for Environmental Protection and spans five years (2020–2024). The dataset comprises two categories of data: (i) seven meteorological parameters, including temperature (T), relative humidity, precipitation, air pressure, solar brightness, wind direction, and velocity; (ii) twenty air pollutants, including two types of particulate matter, carbon monoxide, sulfur dioxide, ground-level ozone, three types of nitrogen oxide, ammonia, six volatile organic compounds, and five toxic elements. The study follows a six-stage approach: (1) data preprocessing is conducted to identify and address anomalies, outliers, and missing values, while ozone trends are analyzed; (2) correlations between ozone concentrations and other variables are examined, considering only non-missing values; (3) data splitting is carried out in training and testing sets; (4) a total of 27 hybrid AI-based algorithms are applied to determine the optimal predictive model for ozone concentration based on related variables; (5) fifty feature selection methods are applied to find the most relevant predictors for predicting ozone concentration; (6) a novel deep NARMAX model is employed to model and anticipate hourly ozone levels in Craiova. Using a set of statistical metrics, the results of the models are assessed. This research provides a novel perspective on the robustness of the predictive performance of the proposed model.

1. Introduction

Air pollution refers to the presence of harmful substances in the Earth’s atmosphere, often resulting from human activities such as industrial processes, transportation, and energy production []. These pollutants encompass a range of substances, including particulate matter (PM); nitrogen oxides (NOx); sulfur dioxide (SO2); carbon monoxide (CO); toxic elements like lead (Pb), nickel (Ni), arsenic (As), cadmium (Cd), and radon (Nt); and volatile organic compounds (VOCs) like benzene (C6H6), ethylbenzene (C8H10), three isomers of xylene (o-, m-, and p-xylene), and toluene (C7H8) []. The significance of air pollution lies in its ability to compromise air quality, leading to adverse effects on human health, ecosystems, and climate safety [].

Urban environments are characterized by a concentration of diverse activities that contribute to air pollution []. The most significant sources include industrial emissions, road traffic, power plants, construction activities, and residential heating []. Understanding the specific sources of air pollutants is vital for every urban area to develop targeted strategies to mitigate pollution levels [].

The consequences of air pollution are far-reaching and impact the health of urban populations []. Exposure to pollutants has been linked to respiratory and cardiovascular diseases, exacerbation of existing health conditions, and an increased risk of premature mortality []. Furthermore, air pollution adversely affects the environment, leading to soil and water contamination, acid rain, and damage to vegetation [].

Air quality forecasting plays a pivotal role in managing and mitigating the adverse impacts of air pollution on human health and the environment []. Over the years, various AI-based approaches have been utilized to predict and anticipate air quality levels, with statistical models, machine learning (ML) and deep learning (DL) models, and hybrid models emerging as key techniques []. An overview of various AI methods and algorithms applied to tasks in chemical engineering, such as regression, classification, clustering, dimensionality reduction, prediction, and forecasting, can be found in []. Table 1 provides a detailed review of different AI approaches, outlining their strengths and limitations in the context of air pollution and air quality forecasting.

Table 1.

Various AI-based methods employed (or methods that can be employed) in the context of air pollution/quality.

In the literature, there are a few studies on air pollution in the city of Craiova. These studies are based on various approaches (statistical or based on artificial intelligence). In [], the authors conducted a statistical comparison of the main pollutants (PMs, NOx, and SO2) for two consecutive years, emphasizing the air pollution episodes according to the limits established by the European Environment Agency (EEA). The ground-level ozone issue was addressed merely as a tangential outcome of rising NOx levels. Another study [] examines the natural and anthropogenic causes of some pollutants (NOx, SO2, CO, CO2, and O3) in urban agglomerations of Romania. In [], the authors focused on two datasets covering eight months of PM2.5 concentration data. The datasets for Craiova were provided by a community-based sensor network (uRADMonitor) and the Romanian Agency for Environmental Protection. The researchers pinpointed the PM2.5 pollution episodes and highlighted the advantage of using two complementary sensor networks, but did not consider ozone pollution. In [], the authors showed how the uRADMonitor network was built. The uRADMonitor is a community-based sensor network used to monitor air quality in urban areas; in Romania, 630 low-cost sensors are connected to it. The data provided by this independent network was compared with the data provided by the Romanian Agency for Environmental Protection network (148 automatic fixed air quality monitoring stations and 11 mobile stations). Based on a one-year dataset, the researchers studied PM10 pollution levels in the five largest urban areas in Romania by utilizing five annual statistical indicators recommended by the EEA.
It is emphasized again that the key advantage of the independent networks is their ability to generate a considerable volume of open-source data with a high level of granularity (every minute or even every thirty seconds), in contrast to the official network, which produces hourly data. The disadvantages are associated with the various techniques for measurement and sensor calibration. In [], the authors calculated the linear Pearson correlation coefficients between PM10 levels and meteorological parameters using data from thirteen identical sensors located in different areas of Craiova. They also compared the PM10 and PM2.5 concentrations provided by those thirteen sensors with World Health Organization (WHO) recommendations to identify air pollution episodes. Based on hierarchical regression analysis, the researchers analyzed the correlations between meteorological parameters and particulate matter concentrations []. In an educational context (Erasmus+ project, 2021-1-RO01-KA220-HED-000030286), the authors compared air quality in three cities (Craiova, Banska Bystrica, Adana) from three different countries (Romania, Slovakia, and Turkey). This research concluded that results are affected by location, even when identical sensors are used. A significant statistical study of ozone in Craiova [], based on one year of data with high granularity (one minute) and applying a dual-method approach (SPSS and Python), found a strong positive correlation between ozone concentration and temperature and significant negative correlations with relative humidity, PM concentrations, NO2, and CO. The authors used the t-test to check whether the ozone weekday–weekend effect could be observed in Craiova. The result was negative. Also, the researchers did not identify exceedances of the WHO recommendations or of the limits imposed by the EEA for ground-level ozone concentration.

Furthermore, a set of studies uses ML, DL, or hybrid approaches for air pollution prediction and forecasting. For example, based on a four-year dataset (2009–2014) recorded by four air quality monitoring stations that belong to the National Environmental Agency, the authors of [] applied hybrid statistics and artificial intelligence techniques, such as feedforward neural networks (FANNs) and wavelet–feedforward neural networks (WFANNs), to time series of four air pollutants (PM2.5, PM10, NO2, and O3). They investigated when FANN and WFANN can be used successfully as air pollution prediction tools. Another study [] proposed a combination of hybrid models, such as input variable selection (IVS), machine learning (ML), and a regression method, aiming to predict, model, and forecast the daily Air Quality Index (AQI) and particulate matter concentrations. The authors considered a two-year dataset (one-minute granularity) collected by one low-cost sensor that comprised three meteorological parameters (T, P, RH) and seven air pollutants (PM1, PM2.5, PM10, O3, VOC, CH2O, CO2). It was observed that combining feature selection methods with the decision tree (DT), least square regression (LSR), and the nonlinear autoregressive moving average model with exogenous inputs (NARMAX) significantly improved the prediction and forecasting accuracy for PM concentrations and the AQI. Based on Craiova’s weather features, the authors of another study [] showed that photovoltaics are a viable solution to reduce air pollution in a busy city like Craiova. The implications of air pollution for photovoltaic systems were investigated using six random forest models, and the Shapley additive explanations technique was applied to interpret the decision-making model. In [], a daily time series of ozone and fine particulate matter (PM) concentrations was forecasted using a meta-hybrid deep NARMAX model. The three-year dataset contained the following: (i) data at each minute for meteorological parameters (T, P, RH) and air pollutant concentrations (PMs, O3, CO2, VOCs, formaldehyde CH2O); (ii) hourly data on wind direction, wind speed, and sun brightness provided by the National Meteorological Administration.

The current understanding and forecasting of air quality in this urban area (Craiova, Romania) face several limitations:

- Short-term forecasting: most existing models focus on short-term predictions, providing only a limited number of future values per air pollutant.

- Absence of certain variables: existing research often lacks comprehensive studies that incorporate all relevant variables for air pollutant prediction and forecasting.

- Model limitations: many models are constrained by the specific location and dataset used, making them difficult to generalize in other areas.

- Model selection: in some cases, models are chosen and applied without a thorough assessment or justification for their selection.

- Statistical evaluation: some studies rely on a narrow set of statistical metrics and indices, limiting the robustness of model accuracy assessments.

This research aims to overcome these limitations by exploring the use of various hybrid AI methods to predict, model, and forecast hourly ground-level ozone (O3) concentrations. The dataset consists of 27 measured variables, categorized into meteorological parameters (temperature (T), air pressure (P), relative humidity (RH), precipitation, sun brightness, and wind direction and speed) and air pollutants (PM2.5; PM10; CO; SO2; O3; NO; NO2; NOx; NH3; VOCs like benzene (C6H6), toluene (C7H8), ethylbenzene (C8H10), and m-, o-, and p-xylene; and toxic elements like Pb, Cd, Ni, As, and Nt). The methodology follows four main stages. First, data preprocessing is performed to detect and correct anomalies, outliers, and missing values, while ground-level ozone trends in Craiova are analyzed over recent years. In the second stage, correlations between ozone concentrations and other variables are examined, considering only complete data (non-missing values and variables). In the third stage, various hybrid AI algorithms are assessed to determine the optimal predictive model for ozone concentrations. In the last stage, a novel deep NARMAX model is employed to model and forecast hourly ozone levels in Craiova.

This paper is organized into five sections. Section 2 briefly presents the climatic features of the area of interest, air quality monitoring stations that were selected for this study, and the dataset. Mainly, this section focuses on data preprocessing, correlation analysis, and the temporal trends of variables within the dataset. Section 3 provides a brief review of the AI-based models used. It also outlines the evaluation criteria and the overall study framework. Section 4 presents the results and discussion. Finally, Section 5 concludes the paper with the main findings and a brief overview of future challenges and perspectives.

2. Data Description

2.1. Area of Interest and Climate Description

This research focuses on Craiova, a city located in the Oltenia Plain (part of the Romanian Plain) and the key economic, historical, and cultural hub of southwestern Romania. Craiova has 243,765 inhabitants (according to the 2022 census conducted by the National Institute of Statistics). The ongoing growth of the city also has negative impacts, including air pollution caused by rising traffic, a heating system based on an old thermal power station, construction zones, and industrial activity. The city has 285 hectares of green space in its neighborhoods (according to the Air Quality Plan for Craiova (2020–2024)). Unfortunately, the trend is toward a reduction in green areas to make way for the development of residential neighborhoods.

Craiova has a transitional temperate continental climate specific to the Romanian Plain, with sub-Mediterranean influences due to its position in southwestern Romania. The city experiences four distinct seasons. The altitude is 100–120 m. Winters are cold (the average temperature in January is −2.4 °C, but absolute minimum temperatures can drop below −25 °C during Arctic air intrusions), and summers are hot (the average temperature in July is over 22 °C) with frequent heatwaves (absolute maximum temperatures may exceed 40 °C). Over the last three decades, the tendency has been toward longer and hotter summers and milder winters. Also, more snowy days are recorded during early spring [,]. The average annual precipitation varies between 570 and 645 mm. Craiova enjoys about 2200–2250 h of sunshine per year. The highest frequency of winds is from the east and west (42%), followed by the northeast (34%), and the south, southeast, and southwest (24%). Active atmospheric dynamics are a climatic feature of this area. Periods of atmospheric calm in the cold season are characterized by significant temperature inversions and by fog. Using data from the MODIS satellite (land surface temperature and land cover type), five heat islands were identified in the paved areas of the city [].

2.2. Air Quality Monitoring Stations and the Dataset

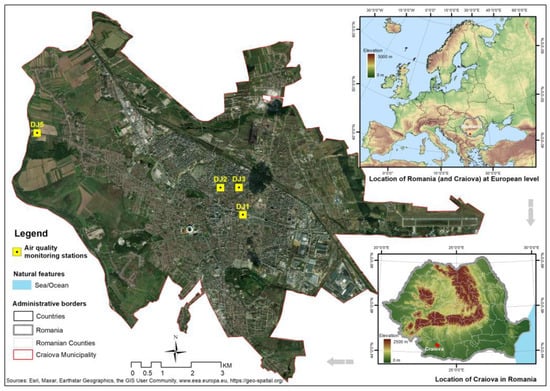

The source of the hourly open-source dataset is the Romanian Agency for Environmental Protection’s website (https://www.calitateaer.ro/). The dataset spans five years (1 January 2020–31 December 2024) and contains two categories of data: (i) seven meteorological parameters and (ii) thirteen air pollutants. Figure 1 shows the distribution of the four stations for air quality monitoring (DJ-1, DJ-2, DJ-3, and DJ-5) in Craiova.

Figure 1.

Distribution of the stations for air pollution monitoring in Craiova.

Table 2 indicates the geographical coordinates of the stations, type, location, and parameters that each station measures. In general, a traffic station assesses the influence of traffic on air quality and has a representative radius of 10–100 m. The industrial station evaluates the influence of industrial activities on air quality and has a representative radius of 100 m–1 km. Urban and suburban stations have a representative radius of 1–5 km.

Table 2.

Features of the stations from Craiova.

Sulfur dioxide concentration is measured by UV fluorescence (ISO/FDIS 10498). The chemiluminescence method is used to measure the concentrations of nitric oxide, nitrogen dioxide, and other nitrogen oxides in the air (ISO 7996/1985). The gravimetric method (according to EN 12341) is the standard method for measuring PM2.5 and PM10 concentrations. The carbon monoxide concentration in the air is determined using the non-dispersive infrared spectrometric method. Lead is determined by atomic absorption spectroscopy (ISO 9855/1993). Ground-level ozone concentration is measured using an electrochemical method.

Table 3 presents all variables that were taken into consideration in this research. The variables are split into inputs (predictors) and the target variable (one output). There are two aims in the following: (1) to find out the correlation between variables and (2) to recognize the most significant predictors for accurately predicting, modeling, and forecasting ozone concentrations. As can be easily observed in Table 3, there are seventeen input variables and only one output variable, ozone concentration.

Table 3.

The considered predictor variables and output.

2.3. Data Pre-Processing

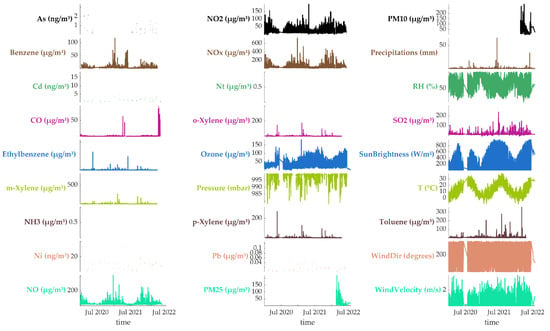

Figure 2 presents the raw hourly data used in this study before preprocessing. The objective is to assess data continuity, verify the availability of all collected variables, and identify suitable variables for the next stage. In that stage, the more continuous variables are selected to analyze correlations between O3 and other variables, ultimately aiding in the development of optimal AI-based models for ozone prediction and forecasting in Craiova. As observed, certain variables exhibit discontinuities, while some, such as Nt and NH3, lack recorded values entirely. Notably, meteorological variables appear to be continuous, with minimal missing data. In contrast, air pollutant data contain several gaps. Specifically, O3, NO, NO2, NOx, CO, C6H6, C8H10, three isomers of xylene (m-, o-, p-xylene), and C7H8 are available for the period between 1 January 2020 and 18 April 2022. A second dataset, covering PM10 and PM2.5, spans from 18 February 2022 to 24 September 2024. A visualization of the evolution in time of pollutant concentrations effectively highlights missing and discontinuous variables by referencing the presence of NaN values in the ozone dataset. Based on this analysis, variables such as As, Cd, Ni, Pb, NH3, Nt, P, PM2.5, and PM10 are excluded from further analyses due to their significant data gaps.

Figure 2.

A visualization of data (including missing values) used in this work.

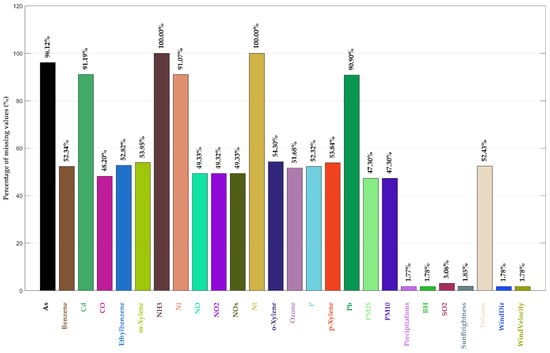

Figure 3 illustrates the percentage of missing data for each variable in the dataset. As previously mentioned, NH3 and Nt have 100% missing values, indicating no recorded data. Additionally, As, Cd, Ni, and Pb are almost entirely absent, with more than 90% missing values. Meteorological variables exhibit near-complete continuity, with missing data percentages below 3%. In contrast, pollutant variables show significant gaps, with approximately 50% missing values.

Figure 3.

Percentage of the missing values in the data used in this work.
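The per-variable missing-data percentages of the kind reported in Figure 3 can be computed with a short routine. The sketch below is only illustrative: the function name `missing_percentage`, the column layout, and the toy values are assumptions and are not taken from the study's dataset or code.

```python
import math


def missing_percentage(columns):
    """Return {name: percent of entries that are None or NaN} per column."""
    result = {}
    for name, values in columns.items():
        n_missing = sum(
            1
            for v in values
            if v is None or (isinstance(v, float) and math.isnan(v))
        )
        # An empty column is reported as 0% missing to avoid division by zero.
        result[name] = 100.0 * n_missing / len(values) if values else 0.0
    return result


# Hypothetical toy data, not values from the study.
toy = {
    "O3": [41.2, None, 38.5, float("nan")],
    "T": [12.1, 12.4, 12.9, 13.0],
    "NH3": [None, None, None, None],
}
pct = missing_percentage(toy)
```

A variable whose percentage is close to 100 would be a candidate for exclusion, in line with the screening described in the text.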

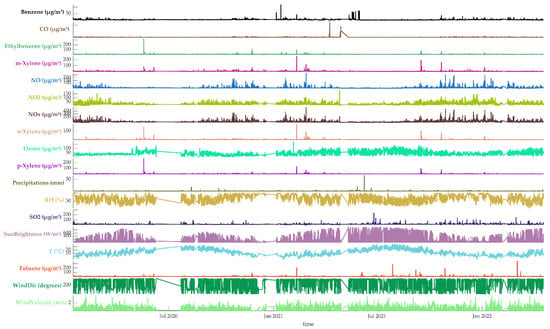

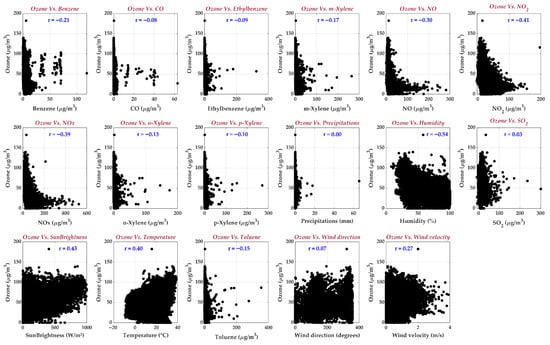

Figure 4 illustrates the distribution of ozone concentrations against the selected variables, excluding missing values. This visualization includes ozone data alongside meteorological parameters and pollutant variables. The main goal of this analysis is to investigate the correlations between ozone levels and the considered variables. The results suggest that ozone concentrations tend to decrease with lower temperatures and sun brightness, and also with higher relative humidity (RH) and elevated NO, NO2, and NOx levels. However, no clear correlation is observed between ozone and the remaining variables. Additionally, the plot highlights specific periods with notably high ozone concentrations, providing valuable insights for further analysis.

Figure 4.

Ozone versus the selected variables, excluding missing values.

Furthermore, Figure 5 illustrates the correlation strength among all selected variables. The strongest correlation is observed between ozone and relative humidity (inverse correlation, r = −0.54), followed by sun brightness (r = 0.43), NO2 (inverse correlation, r = −0.41), temperature (r = 0.40), and NOx (inverse correlation, r = −0.39). A moderate correlation is noted between ozone and NO (inverse correlation, r = −0.30), wind velocity (r = 0.27), and benzene (inverse correlation, r = −0.21). A weaker correlation is found with m-xylene (inverse correlation, r = −0.17), toluene (inverse correlation, r = −0.15), o-xylene (inverse correlation, r = −0.13), and p-xylene (inverse correlation, r = −0.10). However, no significant correlation is identified between ozone and the remaining variables. We may conclude that key variables like temperature and sunlight drive ozone formation, while humidity and pollutants may suppress it.

Figure 5.

Matrix of correlation: ozone versus the selected variables.
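As an illustration of how a Pearson coefficient can be computed when only non-missing values are considered, the sketch below drops every pair in which either value is absent before applying the standard formula. The function name `pearson_complete` and the toy series are hypothetical; the study's own tooling is not specified in the text.

```python
import math


def pearson_complete(x, y):
    """Pearson r computed only on pairs where both values are present."""
    pairs = [
        (a, b)
        for a, b in zip(x, y)
        if a is not None and b is not None
        and not math.isnan(a) and not math.isnan(b)
    ]
    n = len(pairs)
    if n < 2:
        raise ValueError("need at least two complete pairs")
    mean_x = sum(a for a, _ in pairs) / n
    mean_y = sum(b for _, b in pairs) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in pairs)
    var_x = sum((a - mean_x) ** 2 for a, _ in pairs)
    var_y = sum((b - mean_y) ** 2 for _, b in pairs)
    # A constant series has zero variance and would raise ZeroDivisionError.
    return cov / math.sqrt(var_x * var_y)


# Hypothetical hourly values, not data from the study.
ozone = [60.0, 55.0, None, 40.0, 30.0]
humidity = [30.0, 40.0, 50.0, None, 80.0]
r_toy = pearson_complete(ozone, humidity)  # negative for this toy series
```

Dropping incomplete pairs is simple but shrinks the sample, so each coefficient may rest on a different number of hours depending on where the gaps fall.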

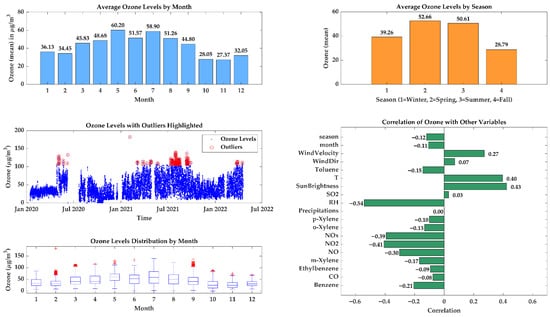

Figure 6 provides a comprehensive analysis of seasonal ozone trends, outliers, and correlations with other variables, including seasonality and monthly variations.

Figure 6.

Ozone trends visualization.

- Monthly trends (first subplot): this plot illustrates average ozone concentrations for each month. Higher ozone levels are observed during summer months (June, July, and August) due to increased solar radiation and higher temperatures, which enhance photochemical reactions. Conversely, lower ozone concentrations are recorded in winter months (December and January) due to reduced sunlight and colder temperatures.

- Seasonal trends (second subplot): a bar chart aggregates monthly ozone data into four seasons (winter, spring, summer, and fall). The highest ozone levels are observed in summer (season 3), driven by higher temperatures and intense sunlight. In contrast, the lowest ozone levels occur in winter (season 1), when photochemical activity is minimal.

- Outlier detection (third subplot): a scatter plot highlights extreme ozone values (outliers) over time. Outliers, marked in red, may indicate pollution spikes, measurement errors, or unusual meteorological conditions. The overall time series exhibits periodic fluctuations, likely influenced by daily and seasonal cycles.

- Correlation analysis (fourth subplot): a horizontal bar chart presents the correlation strength between ozone and other variables. Positive correlations are found with temperature and sun brightness, suggesting that ozone levels increase with higher temperatures and solar radiation. Negative correlations appear with variables like relative humidity, indicating that higher moisture content can suppress ozone formation.

- Ozone distribution by month (fifth subplot): a box plot displays the distribution of ozone concentrations for each month, capturing medians, quartiles, and outliers. The summer months exhibit a wider range of ozone values, likely due to higher photochemical activity, whereas winter months display more stable and lower concentrations.

3. Materials and Methods

3.1. AI-Based Models Employed

The AI-based models used to predict, model, and forecast ozone concentrations are described in this section. The chosen models include statistical AI-based models designed for time series forecasting, machine learning (ML) and deep learning (DL) models suited for prediction tasks, and feature engineering techniques used for dimensionality reduction.

Twenty-three ML and DL models are chosen, applied, and compared for prediction tasks. They are presented in detail in Table 1. To determine the optimal set of predictor variables that can improve the prediction accuracy of the best-performing model, the model obtained in this stage is combined with feature selection (FS) approaches. Table A1 summarizes the FS approaches used here (see Appendix A). Fifty FS methods are used in all.

Moreover, the next phase uses the most suitable statistical models for multi-step-ahead forecasting and modeling of ozone levels, utilizing past data and the latest observations of related predictor variables. A deep nonlinear system identification technique is employed, utilizing a deep nonlinear autoregressive moving average model with exogenous inputs (Deep-NARMAX). This model characterizes a discrete-time nonlinear system with input u and output y, as described by Equation (1). To forecast the upcoming time step t, the model uses historical ozone concentration values at t − 1, t − 2, t − 3, etc., as provided by Equation (2). The most pertinent predictor variable is subjected to a similar procedure, which forecasts its future value based on its past values. Ozone levels for upcoming time frames are iteratively predicted using these predicted values, with the procedure being repeated for succeeding time steps, as described in Section 3.2.

where w is the process noise (the weight), and e is the measurement noise (the error).

where y(t) is the output variable at time t, u(t) is the combination of the predictor variables involved at time t, and e(t) is the model’s error term. Model function F is a nonlinear function that depends on the past values of the output and variable inputs. The previous equation can be presented in another version, in the context of linear models (Equation (3)).

where the coefficients of the model are determined based on input and output data.

Equations (1) and (2) represent the general formulation of nonlinear dynamic systems used in AI-based modeling. Equation (1) corresponds to the state-space form, while Equation (2) expresses the functional dependency used by models such as NARX and NARMAX. This formulation bridges the gap between theoretical dynamic modeling and data-driven learning frameworks adopted in this study.
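For orientation, the textbook forms that match the verbal descriptions of Equations (1) and (2) are sketched below. They are standard system-identification expressions and may differ from the exact notation of the study: the state vector x(t), the functions f and g, and the maximum lags n_y, n_u, and n_e are assumptions introduced only for this illustration.

```latex
% Assumed state-space form matching the description of Equation (1);
% x(t) is a hypothetical state vector, w is process noise, e is measurement noise.
x(t+1) = f\big(x(t),\, u(t)\big) + w(t), \qquad y(t) = g\big(x(t)\big) + e(t)

% Assumed NARMAX form matching the description of Equation (2);
% n_y, n_u, n_e are hypothetical maximum lags of output, input, and error.
y(t) = F\big[\, y(t-1), \dots, y(t-n_y),\; u(t-1), \dots, u(t-n_u),\; e(t-1), \dots, e(t-n_e) \,\big] + e(t)
```

In the second expression, dropping the lagged error terms gives a NARX model, and restricting F to a linear combination of its arguments gives the linear case mentioned in the text.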

To improve clarity, we emphasize the following:

- Equation (1) serves as a conceptual framework connecting AI models with classical system identification theory.

- Equation (2) expresses the functional mapping structure that data-driven models (e.g., NARX, NARMAX) learn during training.

- The innovation lies in integrating this general dynamic representation with AI-based learning and hybrid feature selection to improve model generalization and interpretability.
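The iterative multi-step procedure described earlier in this section, where predicted values are fed back as inputs for the next step, can be sketched as follows. This is a minimal illustration under stated assumptions: `recursive_forecast`, `step_y`, and `step_u` are hypothetical names, the toy one-step models are simple linear rules rather than a trained Deep-NARMAX network, and the moving-average error terms are omitted.

```python
def recursive_forecast(y_hist, u_hist, step_y, step_u, horizon):
    """Iterate one-step models to forecast `horizon` steps ahead.

    step_y(y_lags, u_lags) -> next output from past outputs and predictor values
    step_u(u_lags)         -> next predictor value from its own past values
    Lag lists are ordered oldest to newest.
    """
    y = list(y_hist)
    u = list(u_hist)
    forecasts = []
    for _ in range(horizon):
        y_next = step_y(y, u)  # uses only values up to the previous step
        u_next = step_u(u)     # predictor forecast from its own history
        y.append(y_next)       # predicted values become inputs for later steps
        u.append(u_next)
        forecasts.append(y_next)
    return forecasts


# Hypothetical one-step models with made-up coefficients.
def toy_step_y(y, u):
    return 0.5 * y[-1] + 0.25 * y[-2] + 0.1 * u[-1]


def toy_step_u(u):
    return u[-1]  # persistence: next value equals the latest one


toy_forecast = recursive_forecast([40.0, 44.0], [20.0], toy_step_y, toy_step_u, 2)
```

Because each step consumes earlier predictions, errors can accumulate with the horizon, which is one reason multi-step forecasts are usually evaluated separately from one-step predictions.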

3.2. Evaluation Criteria and Statistical Indices

A method for assessing the performance of obtained outcomes was proposed by the authors in [], introducing a performance score (φ) that indicates the effectiveness of a considered model. This performance score offers a consistent way to evaluate the precision and dependability of the model in forecasting and predicting ozone levels. Equation (4) provides the mathematical expression of the score φ. Poorer model performance is indicated by higher values of φ. Equations (5)–(10) reflect the metrics used in Equation (4): mean bias error (MBE), root mean square error (RMSE), t-statistic (TS) and standard deviation (σ), coefficient of determination (R2), Willmott’s Index of Agreement (WIA), and slope of best-fit line (SBF). Equations (11) and (12) provide the standard deviation (σ) and mean absolute percentage error (MAPE), which can be used for further investigation.

It is important to mention that the statistical indicators employed here are categorized into two groups: dispersion (error-based) and overall performance (correlation-based). For dispersion indicators such as MBE, RMSE, and σ, the best rank corresponds to values closer to zero, whereas for overall performance indicators such as R2, the best rank corresponds to values closer to one.

K represents the total number of measurements, and the remaining parameters correspond to the ith predicted value, the ith measured value, and the mean value of ozone.
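Several of the indicators named above have widely used textbook definitions, sketched below for illustration. These are assumptions about the form of the metrics, since the exact expressions of Equations (4)–(12) may differ (for instance in sign convention or scaling); the performance score φ, TS, and SBF are not reproduced here. The function name `regression_metrics` is hypothetical.

```python
import math


def regression_metrics(measured, predicted):
    """Common definitions of MBE, RMSE, R2, MAPE (as a fraction), and WIA."""
    k = len(measured)
    if k == 0 or k != len(predicted):
        raise ValueError("inputs must be non-empty and of equal length")
    mean_m = sum(measured) / k
    errors = [p - m for p, m in zip(predicted, measured)]
    ss_res = sum(e * e for e in errors)                 # residual sum of squares
    ss_tot = sum((m - mean_m) ** 2 for m in measured)   # total sum of squares
    wia_den = sum(
        (abs(p - mean_m) + abs(m - mean_m)) ** 2
        for p, m in zip(predicted, measured)
    )
    return {
        "MBE": sum(errors) / k,
        "RMSE": math.sqrt(ss_res / k),
        "R2": 1.0 - ss_res / ss_tot,
        # MAPE is undefined when a measured value is zero.
        "MAPE": sum(abs(e / m) for e, m in zip(errors, measured)) / k,
        "WIA": 1.0 - ss_res / wia_den,
    }


# Hypothetical values, not results from the study.
toy_metrics = regression_metrics([10.0, 20.0, 30.0], [12.0, 18.0, 33.0])
```

With these definitions, MBE and RMSE carry the units of the measured quantity, while R2, MAPE, and WIA are dimensionless.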

3.3. Methodology

For precise ozone concentration forecasting, we apply a six-stage methodology that combines data preprocessing, correlation analysis, data splitting, model selection, feature selection, and multi-step-ahead forecasting. Each stage is described below:

1. Data preprocessing is carried out in three steps: (i) min–max scaling is used for normalization to improve model convergence and stability; (ii) Z-scores are used for outlier detection; (iii) the k-nearest neighbors (k-NN) method is used to identify and exclude anomalous data points.

2. Correlation analysis and trend exploration follows two steps: (i) the correlation between ozone concentrations and the other variables is analyzed. (ii) The trends in ozone levels are visualized to achieve insights into temporal patterns.

3. Data splitting. To assess the performance of each model, the dataset is divided into training (70%) and testing (30%) sets.

4. Model selection. To determine the best-performing model for ozone prediction, the performance of 23 ML and DL models is evaluated.

5. Feature selection is carried out in two steps: (i) to increase prediction accuracy, the efficacy of 50 feature selection strategies is assessed. (ii) Based on this evaluation, the best models and most relevant predictor variables are chosen.

6. Multi-step-ahead forecasting with Deep-NARMAX: (i) Future ozone levels are forecasted using a deep-NARMAX model. (ii) Historical ozone data and other relevant variables are used to determine and refine the optimal mathematical formulation for ozone value forecasting.
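Stages 1 and 3 above can be sketched in a few lines. The block below is a minimal illustration, not the study's implementation: the function names, the Z-score threshold of 3, and the chronological (unshuffled) split are assumptions, and the k-NN anomaly step is omitted.

```python
import statistics


def min_max_scale(values):
    """Rescale values linearly to the interval [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant series: no spread to scale
    return [(v - lo) / (hi - lo) for v in values]


def zscore_outliers(values, threshold=3.0):
    """Flag values whose absolute Z-score exceeds the assumed threshold."""
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)
    if sd == 0:
        return [False] * len(values)
    return [abs((v - mean) / sd) > threshold for v in values]


def chronological_split(rows, train_fraction=0.7):
    """Split rows into training and testing parts without shuffling."""
    cut = round(len(rows) * train_fraction)
    return rows[:cut], rows[cut:]


# Hypothetical toy series, not data from the study.
scaled = min_max_scale([10.0, 20.0, 30.0])
flags = zscore_outliers([10.0] * 20 + [100.0])
train, test = chronological_split(list(range(10)))
```

Keeping the split chronological avoids training on observations that come after the test period; a shuffled 70/30 split would be an equally valid reading of the text.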

4. Results and Discussion

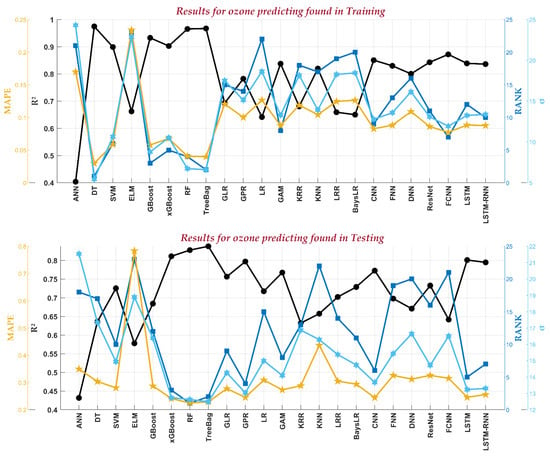

4.1. Best-Performing Model for Ozone Prediction

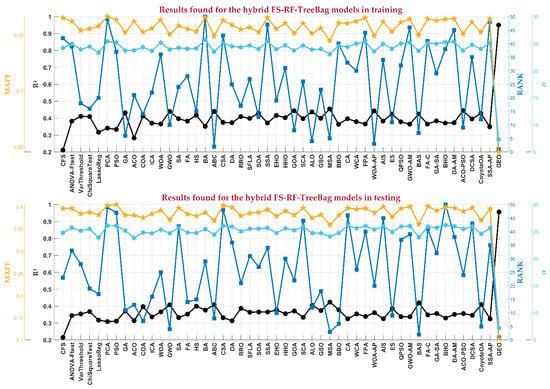

Figure 7 compares ML and DL models for predicting ozone concentration during the training and testing phases. The top three models in the training phase are DT, treebag, and ELM, while in the testing phase, the leading models are RF, treebag, and xGBoost. Based on R2 values, treebag emerges as the best-performing model in both stages, exceeding the confidence threshold of 0.95 in training and achieving approximately 0.89 in testing. Additionally, its MAPE values remain close to zero, demonstrating a strong agreement between measured and predicted ozone concentrations. These findings suggest that these ML models outperform other ML and DL models in hourly ozone prediction. To further improve prediction accuracy in the testing phase, we assessed the hybridization performance of RF, treebag, and xGBoost.

Figure 7.

A comparison of ML and DL for predicting ozone concentration in the training and testing stages.

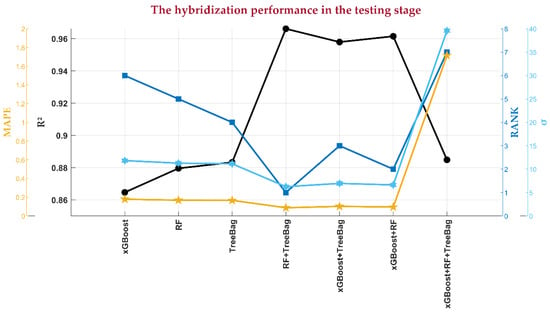

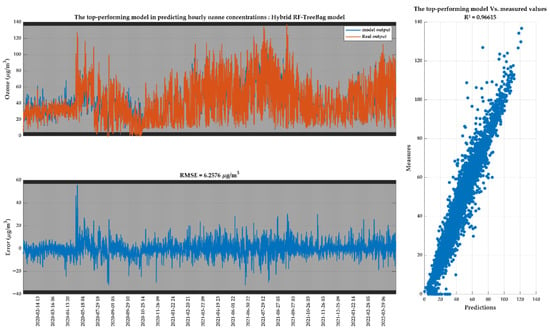

Figure 8 presents the statistical results of this hybridization, showcasing seven possible ozone prediction models derived from the three top-performing ML models. As illustrated, the RF–treebag hybrid model outperforms the others, achieving the highest prediction accuracy. The corresponding statistical metrics in the testing phase are R2 = 0.96615, MBE = 0.002468 μg/m3, RMSE = 6.2576 μg/m3, MAPE = 0.087766, and σ = 6.2582 μg/m3, demonstrating its effectiveness in enhancing ozone concentration prediction.

Figure 8.

A comparison of the top-performing and hybrid top-performing models for predicting ozone concentration in the training and testing stages.
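The count of seven candidate models follows from taking every non-empty subset of the three base models (2³ − 1 = 7). The sketch below enumerates them in Python. How the base predictions are combined inside a hybrid is not specified in this section, so simple averaging is used purely as an assumption; the function name and the toy predictions are hypothetical.

```python
from itertools import combinations

def hybrid_predictions(base_predictions):
    """Average the predictions of every non-empty subset of base models.

    Averaging is an assumed combination rule, used for illustration only.
    """
    names = sorted(base_predictions)
    hybrids = {}
    for size in range(1, len(names) + 1):
        for subset in combinations(names, size):
            columns = [base_predictions[name] for name in subset]
            hybrids["-".join(subset)] = [
                sum(values) / len(values) for values in zip(*columns)
            ]
    return hybrids

# Toy hourly predictions in ug/m3 for three base models (not from the study).
base = {
    "RF": [50.0, 61.0, 72.0],
    "treebag": [52.0, 59.0, 70.0],
    "xGBoost": [47.0, 64.0, 75.0],
}
hybrids = hybrid_predictions(base)
```

Each entry of `hybrids` can then be scored against the measured series with the same statistical metrics, and the best-scoring combination retained.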

Furthermore, for the top-performing RF–treebag hybrid model, Figure 9 presents the predicted versus measured ozone concentrations through a time series plot, error distribution, and scatter plot. These visualizations provide insights into the model’s accuracy, error patterns, and the correlation between predicted and observed values, further validating its effectiveness in ozone concentration prediction. In the subsequent phase, the hybrid RF–treebag model is used to evaluate each feature selection technique and to identify the optimal set of predictors for ozone concentration.

Figure 9.

The top-performing model for predicting ozone concentration vs. measured values.

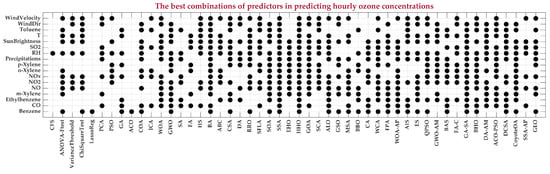

Figure 10 shows the optimal set of predictors for predicting ozone concentrations identified by each FS technique studied.

Figure 10.

The best combinations of predictors in predicting hourly ozone concentrations.

Figure 11 presents the statistical analysis results for the best feature combinations identified by each of the 50 FS techniques. The highest prediction accuracy is achieved using the GEO technique, which identifies the most relevant predictors for ozone concentration as benzene, m-xylene, NO, NOx, p-xylene, precipitation, RH, SO2, sun brightness, toluene, and wind velocity. The corresponding statistical metrics in the training phase are R2 = 0.9554, MBE = −0.01419 μg/m3, RMSE = 7.3224 μg/m3, MAPE = 0.0458, and σ = 7.3227 μg/m3, and in the testing phase, they are R2 = 0.95656, MBE = 0.043114 μg/m3, RMSE = 7.2145 μg/m3, MAPE = 0.10317, and σ = 7.2151 μg/m3. In contrast, other FS techniques showed minimal correlation and weak predictive accuracy for ozone concentration.

Figure 11.

A comparison of FS techniques in predicting ozone concentration in the training and testing stages.
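Wrapper-style feature selection of this kind scores each candidate subset of predictors by the error of a model trained on that subset alone. The Python sketch below illustrates the idea under strong simplifying assumptions: a 1-nearest-neighbour regressor stands in for the RF–treebag model, exhaustive enumeration stands in for a metaheuristic such as GEO (which would explore the same subset space when there are too many features to enumerate), and the data are synthetic. All names are hypothetical.

```python
import math
from itertools import combinations

def one_nn_rmse(train, test, subset):
    """RMSE on `test` of a 1-nearest-neighbour regressor restricted to `subset`."""
    squared_error = 0.0
    for x_test, y_test in test:
        # Nearest training row, measured only on the selected features.
        nearest = min(
            train,
            key=lambda row: sum((row[0][k] - x_test[k]) ** 2 for k in subset),
        )
        squared_error += (nearest[1] - y_test) ** 2
    return math.sqrt(squared_error / len(test))

def best_subset(train, test, n_features):
    """Score every non-empty feature subset and return (subset, rmse) of the best."""
    candidates = (
        subset
        for size in range(1, n_features + 1)
        for subset in combinations(range(n_features), size)
    )
    scored = ((subset, one_nn_rmse(train, test, subset)) for subset in candidates)
    return min(scored, key=lambda item: item[1])

# Synthetic rows: feature 0 drives the target, features 1 and 2 are unrelated.
rows = [((i / 10, (7 * i) % 11, (3 * i) % 5), 2 * (i / 10)) for i in range(40)]
train, test = rows[0::2], rows[1::2]
subset, rmse = best_subset(train, test, n_features=3)
```

On this synthetic data the search keeps only feature 0, because adding either unrelated feature pulls the nearest neighbour away from the true relationship and raises the test RMSE.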

4.2. Best-Performing Model for Ozone Forecasting

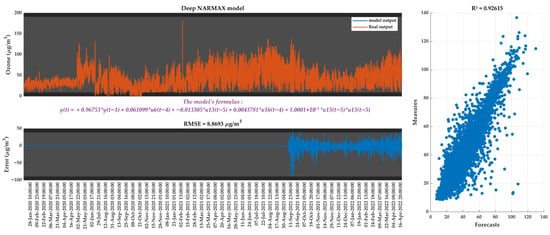

Finding and evaluating the most accurate Deep-NARMAX model for ozone level forecasting is the main goal of this subsection. To accurately forecast future ozone levels, the Deep-NARMAX approach used in this study investigates various historical data configurations for ozone and other relevant variables. The following tuning parameters are part of the model initialization: (1) range of time lags for the input variables (nu = [1 5]); (2) range of time lags for the output variable (ny = [1 5]); (3) range of time lags for the error term (ne = [1 5]); (4) maximum time lag among variables (p_temp = max([nu, ny])); (5) nonlinearity degree of the model (degree = 2); (6) maximum length of the potential model terms (term_len = 5); (7) gate weight threshold for feature selection (threshold = 0.7).
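To give a sense of the search space these settings imply, the Python sketch below lists the lagged regressors and the polynomial candidate terms of a NARMAX-type model. It assumes the usual NARMAX convention in which ny refers to lags of the output y, nu to lags of each input u, and ne to lags of the error e; it considers a single input for brevity and does not model term_len or the gate threshold, whose exact roles are not described here. Function names are hypothetical.

```python
from itertools import combinations_with_replacement

def lagged_regressors(n_inputs, ny=(1, 5), nu=(1, 5), ne=(1, 5)):
    """Names of the lagged output, input and error regressors."""
    names = [f"y(t-{k})" for k in range(ny[0], ny[1] + 1)]
    for j in range(1, n_inputs + 1):
        names += [f"u{j}(t-{k})" for k in range(nu[0], nu[1] + 1)]
    names += [f"e(t-{k})" for k in range(ne[0], ne[1] + 1)]
    return names

def candidate_terms(regressors, degree=2):
    """All monomials in the regressors up to `degree`, plus the constant term."""
    terms = [()]  # the empty tuple stands for the constant term
    for d in range(1, degree + 1):
        terms += list(combinations_with_replacement(regressors, d))
    return terms

regressors = lagged_regressors(n_inputs=1)
terms = candidate_terms(regressors, degree=2)
print(len(regressors), len(terms))
```

With one input, lags 1 to 5, and degree 2, this gives 15 regressors and 136 candidate terms (1 constant, 15 linear, 120 quadratic). The count grows quickly with the number of inputs, which is why a pruning or term-selection step is needed.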

The top-performing Deep-NARMAX model for multi-step-ahead forecasting of hourly ozone concentrations is illustrated in Figure 12. This figure presents the forecasted ozone concentrations and the corresponding forecast errors, as well as RMSE and R2 values. The most accurate forecasting model is based on the following key predictors: ozone concentration at time (t − 1), solar brightness at time (t − 5), and wind direction and nitrogen dioxide (NO2) at time (t − 4). The statistical evaluation of this model demonstrates strong predictive performance, with low dispersion in error metrics and an R2 value approaching 0.95, aligning with the confidence level threshold. These results confirm that the proposed Deep-NARMAX model is a reliable and efficient approach for forecasting ozone concentrations with high accuracy. The statistical analysis is given in Table 4.

Figure 12.

Multi-step-ahead time series forecasting of ozone concentrations using the Deep-NARMAX model.
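Multi-step-ahead forecasting with an autoregressive model is commonly done recursively: each forecast is appended to the output history and reused as a lagged value for the next step. The Python sketch below shows this mechanism only. The one-step model `toy_model` and its coefficients are hypothetical and are not the fitted Deep-NARMAX model; the lag structure (output at t − 1, one input at t − 4) merely mirrors the kind of terms discussed above.

```python
def recursive_forecast(history, exogenous, model, steps):
    """Forecast `steps` values, feeding each prediction back as a lagged output."""
    outputs = list(history)
    forecasts = []
    for step in range(steps):
        t = len(history) + step          # index of the value being forecast
        y_next = model(outputs, exogenous, t)
        outputs.append(y_next)           # the forecast becomes y(t-1) at the next step
        forecasts.append(y_next)
    return forecasts

def toy_model(outputs, exogenous, t):
    """Hypothetical one-step model: y(t) = 0.5*y(t-1) + 2.0*u(t-4)."""
    return 0.5 * outputs[t - 1] + 2.0 * exogenous[t - 4]

history = [40.0, 44.0, 48.0, 52.0]                  # measured y(0..3)
exogenous = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0]     # input u(0..6), toy values
forecasts = recursive_forecast(history, exogenous, toy_model, steps=3)
```

Because the input enters with a lag of four steps in this toy model, the first four forecasts depend only on input values that have already been measured; longer horizons would require forecasting the input as well.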

Table 4.

A statistical performance analysis.

5. Conclusions

In this study, an advanced deep AI-based approach was implemented to predict, model, and forecast ozone concentrations. The results demonstrated high accuracy in both prediction and forecasting of ground-level ozone concentrations.

5.1. Key Conclusions

- Among the 27 ML and DL models evaluated, the hybrid RF–treebag model emerged as the top-performing model for predicting hourly ozone concentrations in both training and testing. This model achieved excellent results in the testing stage, with R2 = 0.96615, MBE = 0.002468 μg/m3, RMSE = 6.2576 μg/m3, MAPE = 0.087766, and σ = 6.2582 μg/m3.

- Of the 50 FS techniques assessed, the GEO technique was identified as the best feature selection method, providing the most effective predictor combination for ozone concentration prediction. The analysis indicated that the most relevant predictors for ozone concentration are C6H6, isomers of xylene, NO, NOx, precipitation, RH, SO2, sun brightness, C7H8, and wind velocity. When coupled with the hybrid RF–treebag model, this approach yielded strong predictive accuracy, with R2 = 0.95656, MBE = 0.043114 μg/m3, RMSE = 7.2145 μg/m3, MAPE = 0.10317, and σ = 7.2151 μg/m3.

- A novel Deep-NARMAX model was successfully developed for forecasting and modeling future ozone concentrations. The model relies on key predictors, including ozone concentration at time (t − 1), sun brightness at time (t − 1), and month at time (t − 2). Its statistical evaluation confirmed robust predictive performance, achieving R2 = 0.93614, MBE = −0.26051 μg/m3, RMSE = 8.279 μg/m3, MAPE = 0.032824, and σ = 8.2752 μg/m3.

5.2. Challenges

- There is no long-term research applying the hybrid ML-DL approach to ground-level ozone based on the dataset supplied by the Romanian Agency for Environmental Protection (member of the EEA network). The amount of missing data in the Romanian Agency for Environmental Protection network has to be clarified and properly understood.

- No study compares the quality of air pollution data from different open sources (independent low-cost sensor networks, the EEA network, and satellite data) or combines these sources so that gaps in one dataset can be filled with information from another.

- Improving prediction and forecasting techniques for air pollution allows decision-makers to select the best solutions for the benefit of their local communities.

5.3. Limitations

The main limitations of this research include the percentage of missing data from the Romanian Agency for Environmental Protection, the frequency of the dataset (hourly), the small number of air quality monitoring stations (four), and the uneven distribution of the stations in Craiova.

5.4. Perspectives

Using satellite datasets and comparing them with the results from independent networks and from the National Environmental Agency network might help to better understand ozone pollution locally. Several satellites provide data on ozone concentration: NASA satellites (Aura, Terra, and Aqua), European Space Agency satellites (Sentinel-5 Precursor, Envisat), NOAA satellites (Suomi NPP, NOAA-20, and GOES), Japanese satellites (GOSAT), Russian satellites (Meteor-M series), Canadian satellites (SCISAT-1), and Indian satellites (INSAT-3D and INSAT-3DR).

Author Contributions

Conceptualization, Y.E.M. and M.T.U.; methodology, Y.E.M.; software, Y.E.M.; formal analysis, Y.E.M. and M.T.U.; investigation, Y.E.M. and M.T.U.; resources, M.T.U.; writing—original draft preparation, Y.E.M. and M.T.U.; writing—review and editing, Y.E.M. and M.T.U.; visualization, Y.E.M.; supervision, Y.E.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

(a) The dataset, models, or codes supporting this study’s findings are available from the corresponding author upon a reasonable request. (b) All data, models, and code generated or used during this study appear in the submitted article.

Acknowledgments

The Fulbright program’s support is gratefully acknowledged. The dataset was downloaded from the National Environmental Agency (Romania) website, and the authors express their gratitude for the opportunity to work with open-source data.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Table A1.

Summary of feature selection methods employed in this work.

| Method | Description | Key Parameters | Advantages | Disadvantages | Reference |
|---|---|---|---|---|---|
| Correlation-based feature selection (CFS) | Selects features with high correlation to target and low inter-feature correlation. | Correlation threshold | Simple and computationally inexpensive. | May miss nonlinear relationships. | [] |
| ANOVA F-Test | Measures statistical significance of each feature with the target variable. | p-value threshold | Well suited for identifying significant features. | Limited to linear dependencies. | [] |
| Variance threshold | Removes features with low variance. | Variance threshold | Simple and eliminates uninformative features. | Does not consider the target variable. | [] |
| Chi-square test | Measures dependence between categorical features and the target variable. | None | Effective for categorical data. | Not suitable for continuous features. | [] |
| Lasso regularization | Shrinks coefficients of less important features to zero. | Regularization parameter λ | Performs feature selection and regression simultaneously. | Assumes linear relationships. | [] |
| Principal component analysis (PCA) | Reduces dimensionality by projecting data onto principal components. | Number of components | Captures variance and reduces redundancy. | Does not select original features directly. | [] |
| Particle swarm optimization (PSO) | Simulates particle movement to optimize feature subsets. | Number of particles, inertia weight | Efficient and widely applicable. | May converge to local optima. | [] |
| Genetic algorithm (GA) | Uses evolutionary principles like crossover and mutation. | Population size, mutation rate | Handles discrete and continuous search spaces. | Can be computationally intensive. | [] |
| Ant colony optimization (ACO) | Simulates pheromone-based movement to optimize feature selection. | Number of ants, evaporation rate | Good exploration of search space. | Requires parameter tuning and is computationally intensive. | [] |
| Coyote optimization algorithm (COA) | Models social behavior of coyotes in packs. | Number of coyotes | Simple and adaptive. | Limited theoretical validation. | [] |
| Imperialist competitive algorithm (ICA) | Simulates imperialistic competition among nations to optimize selection. | Number of nations, assimilation rate | Strong global search ability. | May converge slowly. | [] |
| Whale optimization algorithm (WOA) | Mimics humpback whales’ bubble-net hunting behavior. | Number of whales, search space size | Balances exploration and exploitation. | Requires careful parameter tuning. | [] |
| Grey wolf optimizer (GWO) | Simulates leadership hierarchy and hunting mechanism of wolves. | Number of wolves | Simple and effective. | Can be slow to converge for large feature sets. | [] |
| Simulated annealing (SA) | Combines stochastic exploration with cooling schedule. | Initial temperature, cooling rate | Effective for avoiding local optima. | Sensitive to parameter settings. | [] |
| Firefly algorithm (FA) | Mimics fireflies’ light-based attraction. | Light absorption, randomness factor | Balances exploration and exploitation. | May be slower for high-dimensional data. | [] |
| Harmony search (HS) | Inspired by the process of musical improvisation to find optimal solutions. | Harmony memory size, pitch adjustment rate | Simple and effective for continuous optimization. | Requires parameter tuning. | [] |
| Bat algorithm (BA) | Mimics echolocation behavior of bats for optimization. | Loudness, pulse rate, frequency | Balances exploration and exploitation. | Can become trapped in local optima. | [] |
| Artificial bee colony (ABC) | Simulates foraging behavior of honeybees. | Number of bees, limit for abandonment | Effective for global search. | May converge slowly for large datasets. | [] |
| Crow search algorithm (CSA) | Models crows’ intelligence to hide and retrieve solutions. | Awareness probability, flight length | Effective for global optimization. | May converge slowly. | [] |
| Dragonfly algorithm (DA) | Simulates static and dynamic swarming behavior of dragonflies. | Separation, alignment, cohesion weights | Effective for feature-rich datasets. | Computationally intensive for large-scale problems. | [] |
| Raven roosting optimization (RRO) | Inspired by ravens’ intelligence and cooperation for optimization. | Awareness probability, social behavior | Effective for combinatorial problems. | Limited theoretical validation. | [] |
| Shuffled frog-leaping algorithm (SFLA) | Mimics frog leaping behavior for cooperative optimization. | Number of frogs, memeplex division | Good exploration properties. | Convergence speed depends on parameters. | [] |
| Seagull optimization algorithm (SOA) | Mimics the behavior of seagulls in their search for food. | None | Balances exploration and exploitation. | May require parameter fine-tuning. | [] |
| Salp swarm algorithm (SSA) | Simulates salps’ chain-based movement in the search space. | Number of salps, adaptive parameters | Good convergence properties. | May require parameter tuning. | [] |
| Elephant herding optimization (EHO) | Simulates the social behavior of elephants in herds. | Clan size, migration rate | Handles multimodal problems effectively. | Sensitive to parameter settings. | [] |
| Harris hawks optimization (HHO) | Mimics cooperative hunting strategy of Harris hawks. | None | Good balance of exploration and exploitation. | May require parameter fine-tuning for large feature sets. | [] |
| Grasshopper optimization algorithm (GOA) | Mimics swarming behavior of grasshoppers for optimization. | Control parameters | Efficient for nonlinear problems. | May converge slowly for complex datasets. | [] |
| Sine cosine algorithm (SCA) | Uses sine and cosine functions to guide search. | Amplitude control | Effective in global optimization. | Requires parameter tuning. | [] |
| Ant lion optimization (ALO) | Simulates ant lions’ hunting mechanism to find optimal solutions. | Elite rate, trap radius | Strong exploration and exploitation. | Computationally expensive. | [] |
| Glowworm swarm optimization (GSO) | Inspired by glowworms’ luminescence for decision-making. | Luciferin update, vision range | Effective for multimodal optimization. | Slower convergence in large search spaces. | [] |
| Monkey search algorithm (MSA) | Models monkeys’ climbing behavior to search for solutions. | Climbing rate, jumping rate | Good convergence rate. | Sensitive to parameter settings. | [] |
| Biogeography-based optimization (BBO) | Models species migration among habitats for optimization. | Migration rate, mutation rate | Good for constrained optimization problems. | May converge prematurely. | [] |
| Cultural algorithm (CA) | Incorporates cultural evolution principles for optimization. | Knowledge space, acceptance criteria | Enhances search efficiency. | Complexity in implementation. | [] |
| Wolf colony algorithm (WCA) | Simulates hunting and social behavior of wolves. | Number of wolves, hunting radius | Effective for large-scale problems. | Requires careful tuning. | [] |
| Flower pollination algorithm (FPA) | Simulates pollination process of flowers. | Switch probability, step size | Handles multimodal optimization. | Requires parameter tuning. | [] |
| Whale optimization algorithm with adaptive parameters (WOA-AP) | Enhanced whale optimization algorithm with adaptive parameters. | Adaptation rate | Improves performance of standard WOA. | Computationally expensive. | [] |
| Artificial immune system (AIS) | Mimics immune response mechanisms to optimize feature selection. | Clonal selection, mutation rate | Handles high-dimensional problems well. | Complex algorithm structure. | [] |
| Eagle strategy (ES) | Models eagles’ soaring and hunting behavior for optimization. | Search radius, soaring phase | Strong balance between local and global search. | Requires computational resources. | [] |
| Quantum particle swarm optimization (QPSO) | Incorporates quantum behavior to enhance PSO. | Contraction–expansion coefficient β | Efficient in balancing exploration and exploitation. | Requires parameter tuning and is computationally heavy. | [] |
| Grey wolf optimizer with adaptive mechanism (GWO-AM) | Enhanced grey wolf optimizer with adaptive parameters. | Adaptation function | Improved convergence and efficiency. | Parameter dependency. | [] |
| Beetle antennae search (BAS) | Models beetle behavior using antennae for directional search. | Step size, antennae length | Lightweight and easy to implement. | Limited exploration capabilities in large feature spaces. | [] |
| Adaptive firefly algorithm (FA-C) | Introduces chaos into FA for enhanced exploration. | Light absorption, randomness factor | Avoids premature convergence. | Can be slower for large search spaces. | [] |
| Hybrid genetic algorithm and simulated annealing (GA-SA) | Integrates GA’s global search with SA’s local refinement. | Population size, mutation rate, temperature | Excellent global and local search. | Computationally intensive. | [] |
| Black hole optimization algorithm (BHO) | Models stars being pulled toward the best solution (black hole). | Event horizon | Simple and effective in feature-rich datasets. | May not handle multimodal problems effectively. | [] |
| Dragonfly algorithm with adaptive mechanism (DA-AM) | Modified dragonfly algorithm with adaptive learning. | Adaptive learning rate | Better adaptation to changing environments. | Higher computational complexity. | [] |
| Hybrid ant colony and PSO (ACO-PSO) | Combines ACO’s pheromone-based approach with PSO’s movement optimization. | Number of ants, particles, inertia weight | Captures global and local search effectively. | May require significant computational resources. | [] |
| Dynamic crow search algorithm (DCSA) | Enhances CSA with dynamic flight length and awareness. | Awareness probability, flight length | Improved convergence properties. | May converge slowly without proper tuning. | [] |
| Coyote optimization algorithm (CoyoteOA) | Models coyote social behavior for optimization. | Social adaptability, pack size | Adaptive and robust in dynamic environments. | Requires more empirical validation. | [] |
| Salp swarm algorithm with adaptive parameters (SSA-AP) | Enhanced SSA with parameter adaptation. | Adaptive parameter function | Faster convergence than standard SSA. | Sensitive to initial parameters. | [] |
| Golden eagle optimizer (GEO) | Mimics predatory behavior of golden eagles. | Flight radius | Efficient for large feature spaces. | Requires parameter tuning. | [] |

References

- Siriopoulos, C.; Samitas, A.; Dimitropoulos, V.; Boura, A.; AlBlooshi, D.M. Chapter 12-Health economics of air pollution. In Pollution Assessment for Sustainable Practices in Applied Sciences and Engineering; Mohamed, A.-M.O., Paleologos, E.K., Howari, F.M., Eds.; Butterworth-Heineman: Oxford, UK, 2021; pp. 639–679. [Google Scholar] [CrossRef]

- Mondal, A.; Mondal, S.; Ghosh, P.; Das, P. Analyzing the interconnected dynamics of domestic biofuel burning in India: Unravelling VOC emissions, surface-ozone formation, diagnostic ratios, and source identification. RSC Sustain. 2024, 2, 2150–2168. [Google Scholar] [CrossRef]

- Manisalidis, I.; Stavropoulou, E.; Stavropoulos, A.; Bezirtzoglou, E. Environmental and Health Impacts of Air Pollution: A Review. Front. Public Health 2020, 8, 14. [Google Scholar] [CrossRef] [PubMed]

- Udristioiu, M.T.; EL Mghouchi, Y.; Yildizhan, H. Prediction, modelling, and forecasting of PM and AQI using hybrid machine learning. J. Clean. Prod. 2023, 421, 138496. [Google Scholar] [CrossRef]

- El Mghouchi, Y.; Udristioiu, M.T.; Yildizhan, H.; Brancus, M. Forecasting ground-level ozone and fine particulate matter concentrations at Craiova city using a meta-hybrid deep learning model. Urban Clim. 2024, 57, 102099. [Google Scholar] [CrossRef]

- El Mghouchi, Y.; Udristioiu, M.T.; Yildizhan, H. Multivariable Air-Quality Prediction and Modelling via Hybrid Machine Learning: A Case Study for Craiova, Romania. Sensors 2024, 24, 1532. [Google Scholar] [CrossRef]

- Rich, D.Q.; Balmes, J.R.; Frampton, M.W.; Zareba, W.; Stark, P.; Arjomandi, M.; Hazucha, M.J.; Costantini, M.G.; Ganz, P.; Hollenbeck-Pringle, D.; et al. Cardiovascular function and ozone exposure: The Multicenter Ozone Study in oldEr Subjects (MOSES). Environ. Int. 2018, 119, 193–202. [Google Scholar] [CrossRef]

- Emberson, L. Effects of ozone on agriculture, forests and grasslands. Philos. Trans. A Math. Phys. Eng. Sci. 2020, 378, 20190327. [Google Scholar] [CrossRef]

- Du, J.; Qiao, F.; Lu, P.; Yu, L. Forecasting ground-level ozone concentration levels using machine learning. Resour. Conserv. Recycl. 2022, 184, 106380. [Google Scholar] [CrossRef]

- Zaini, N.; Ahmed, A.N.; Ean, L.W.; Chow, M.F.; Malek, M.A. Forecasting of fine particulate matter based on LSTM and optimization algorithm. J. Clean. Prod. 2023, 427, 139233. [Google Scholar] [CrossRef]

- Karthikeyan, A.; Priyakumar, U.D. Artificial intelligence: Machine learning for chemical sciences. J. Chem. Sci. 2022, 134, 2. [Google Scholar] [CrossRef] [PubMed]

- Zhang, H.; Zhang, W.; Palazoglu, A.; Sun, W. Prediction of ozone levels using a Hidden Markov Model (HMM) with Gamma distribution. Atmos. Environ. 2012, 62, 64–73. [Google Scholar] [CrossRef]

- Kaur, J.; Parmar, K.S.; Singh, S. Autoregressive models in environmental forecasting time series: A theoretical and application review. Environ. Sci. Pollut. Res. 2023, 30, 19617–19641. [Google Scholar] [CrossRef]

- Gao, W.; Xiao, T.; Zou, L.; Li, H.; Gu, S. Analysis and Prediction of Atmospheric Environmental Quality Based on the Autoregressive Integrated Moving Average Model (ARIMA Model) in Hunan Province, China. Sustainability 2024, 16, 8471. [Google Scholar] [CrossRef]

- Saravanan, D.; Kumar, K.S. IoT based improved air quality index prediction using hybrid FA-ANN-ARMA model. Mater. Today: Proc. 2022, 56, 1809–1819. [Google Scholar] [CrossRef]

- Muzakki, N.F.; Putri, A.Z.; Maruli, S.; Kartiasih, F. Forecasting the Air Quality Index by Utilizing Several Meteorological Factors Using the ARIMAX Method (Case Study: Central Jakarta City). J. JTIK J. Teknol. Inf. Dan Komun. 2024, 8, 569–586. [Google Scholar] [CrossRef]

- Bhatti, U.A.; Yan, Y.; Zhou, M.; Ali, S.; Hussain, A.; Qingsong, H.; Yu, Z.; Yuan, L. Time Series Analysis and Forecasting of Air Pollution Particulate Matter (PM2.5): An SARIMA and Factor Analysis Approach. IEEE Access 2021, 9, 41019–41031. [Google Scholar] [CrossRef]

- Yi, M.; Lin, F. A Hybrid Air Quality Prediction Method Based on VAR and Random Forest. J. Comput. Commun. 2025, 13, 142–154. [Google Scholar] [CrossRef]

- Xu, X.; Zhang, Y. Soybean and Soybean Oil Price Forecasting through the Nonlinear Autoregressive Neural Network (NARNN) and NARNN with Exogenous Inputs (NARNN–X). Intell. Syst. Appl. 2022, 13, 200061. [Google Scholar] [CrossRef]

- Maleki, H.; Sorooshian, A.; Goudarzi, G.; Baboli, Z.; Tahmasebi Birgani, Y.; Rahmati, M. Air pollution prediction by using an artificial neural network model. Clean Techn. Environ. Policy 2019, 21, 1341–1352. [Google Scholar] [CrossRef]

- Naveen, S.; Upamanyu, M.S.; Chakki, K.M.C.; Hariprasad, P. Air Quality Prediction Based on Decision Tree Using Machine Learning. In Proceedings of the 2023 International Conference on Smart Systems for Applications in Electrical Sciences (ICSSES), Tumakuru, India, 7–8 July 2023. [Google Scholar]

- Liu, C.C.; Lin, T.C.; Yuan, K.Y.; Chiueh, P.T. Spatio-temporal prediction and factor identification of urban air quality using support vector machine. Urban Clim. 2022, 41, 101055. [Google Scholar] [CrossRef]

- Baran, B. Prediction of Air Quality Index by Extreme Learning Machines. In Proceedings of the 2019 International Artificial Intelligence and Data Processing Symposium (IDAP), Malatya, Turkey, 21–22 September 2019. [Google Scholar] [CrossRef]

- Shyamala, K.; Sujatha, R. Modified Extreme Gradient Boosting Algorithm for Prediction of Air Pollutants in Various Peak Hours. In Advancements in Smart Computing and Information Security; Springer: Cham, Switzerland, 2024; pp. 125–141. [Google Scholar] [CrossRef]

- Reddy, P.D.; Parvathy, L.R. Prediction Analysis using Random Forest Algorithms to Forecast the Air Pollution Level in a Particular Location. In Proceedings of the 2022 3rd International Conference on Smart Electronics and Communication (ICOSEC), Trichy, India, 20–22 October 2022; pp. 1585–1589. [Google Scholar] [CrossRef]

- Abellán, J.; Masegosa, A.R. Bagging Decision Trees on Data Sets with Classification Noise. In Foundations of Information and Knowledge Systems; Link, S., Prade, H., Eds.; Springer: Berlin/Heidelberg, Germany, 2010; pp. 248–265. [Google Scholar] [CrossRef]

- Lesaffre, E.; Marx, B.D. Collinearity in generalized linear regression. Commun. Stat.-Theory Methods 1993, 22, 1933–1952. [Google Scholar] [CrossRef]

- Liu, H.; Yang, C.; Huang, M.; Wang, D.; Yoo, C. Modeling of subway indoor air quality using Gaussian process regression. J. Hazard. Mater. 2018, 359, 266–273. [Google Scholar] [CrossRef]

- Sonu, S.B.; Suyampulingam, A. Linear Regression Based Air Quality Data Analysis and Prediction using Python. In Proceedings of the 2021 IEEE Madras Section Conference (MASCON), Chennai, India, 27–28 August 2021; pp. 1–7. [Google Scholar] [CrossRef]

- Ravindra, K.; Rattan, P.; Mor, S.; Aggarwal, A.N. Generalized additive models: Building evidence of air pollution, climate change and human health. Environ. Int. 2019, 132, 104987. [Google Scholar] [CrossRef] [PubMed]

- Vovk, V. Kernel Ridge Regression. In Empirical Inference: Festschrift in Honor of Vladimir, N. Vapnik; Schölkopf, B., Luo, Z., Vovk, V., Eds.; Springer: Berlin/Heidelberg, Germany, 2013; pp. 105–116. [Google Scholar] [CrossRef]

- Al-Eidi, S.; Amsaad, F.; Darwish, O.; Tashtoush, Y.; Alqahtani, A.; Niveshitha, N. Comparative Analysis Study for Air Quality Prediction in Smart Cities Using Regression Techniques. IEEE Access 2023, 11, 115140–115149. [Google Scholar] [CrossRef]

- Tella, A.; Balogun, A.L.; Adebisi, N.; Abdullah, S. Spatial assessment of PM10 hotspots using Random Forest, K-Nearest Neighbour and Naïve Bayes. Atmos. Pollut. Res. 2021, 12, 101202. [Google Scholar] [CrossRef]

- Su, Y. Prediction of air quality based on Gradient Boosting Machine Method. In Proceedings of the 2020 International Conference on Big Data and Informatization Education (ICBDIE), Zhangjiajie, China, 23–25 April 2020; pp. 395–397. [Google Scholar] [CrossRef]

- Xie, X.; Zuo, J.; Xie, B.; Dooling, T.A.; Mohanarajah, S. Bayesian network reasoning and machine learning with multiple data features: Air pollution risk monitoring and early warning. Nat. Hazards 2021, 107, 2555–2572. [Google Scholar] [CrossRef]

- Athira, V.; Geetha, P.; Vinayakumar, R.; Soman, K.P. DeepAirNet: Applying Recurrent Networks for Air Quality Prediction. Procedia Comput. Sci. 2018, 132, 1394–1403. [Google Scholar] [CrossRef]

- Krishan, M.; Jha, S.; Das, J.; Singh, A.; Goyal, M.K.; Sekar, C. Air quality modelling using long short-term memory (LSTM) over NCT-Delhi, India. Air Qual. Atmos. Health 2019, 12, 899–908. [Google Scholar] [CrossRef]

- Rao, K.S.; Devi, G.L.; Ramesh, N. Air Quality Prediction in Visakhapatnam with LSTM based Recurrent Neural Networks. Int. J. Intell. Syst. Appl. 2019, 11, 18–24. [Google Scholar] [CrossRef]

- Chakma, A.; Vizena, B.; Cao, T.; Lin, J.; Zhang, J. Image-based air quality analysis using deep convolutional neural network. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 3949–3952. [Google Scholar] [CrossRef]

- Gong, X.; Liu, L.; Huang, Y.; Zou, B.; Sun, Y.; Luo, L.; Lin, Y. A pruned feed-forward neural network (pruned-FNN) approach to measure air pollution exposure. Environ. Monit. Assess. 2023, 195, 1183. [Google Scholar] [CrossRef]

- Zaini, N.; Ean, L.W.; Ahmed, A.N.; Malek, M.A. A systematic literature review of deep learning neural network for time series air quality forecasting. Environ. Sci. Pollut. Res. 2022, 29, 4958–4990. [Google Scholar] [CrossRef]

- Zhang, B.; Zou, G.; Qin, D.; Ni, Q.; Mao, H.; Li, M. RCL-Learning: ResNet and convolutional long short-term memory-based spatiotemporal air pollutant concentration prediction model. Expert Syst. Appl. 2022, 207, 118017. [Google Scholar] [CrossRef]

- Liu, J.; Xing, J. Identifying Contributors to PM2.5 Simulation Biases of Chemical Transport Model Using Fully Connected Neural Networks. J. Adv. Model. Earth Syst. 2023, 15, e2021MS002898. [Google Scholar] [CrossRef]

- Chang, S.W.; Chang, C.L.; Li, L.T.; Liao, S.W. Reinforcement Learning for Improving the Accuracy of PM2.5 Pollution Forecast Under the Neural Network Framework. IEEE Access 2020, 8, 9864–9874. [Google Scholar] [CrossRef]

- Samal, K.K.R.; Babu, K.S.; Das, S.K. Temporal convolutional denoising autoencoder network for air pollution prediction with missing values. Urban Clim. 2021, 38, 100872. [Google Scholar] [CrossRef]

- Kavasidis, I.; Lallas, E.; Gerogiannis, V.C.; Charitou, T.; Karageorgos, A. Predictive maintenance in pharmaceutical manufacturing lines using deep transformers. Procedia Comput. Sci. 2023, 220, 576–583. [Google Scholar] [CrossRef]

- Dong, J.; Zhang, Y.; Hu, J. Short-term air quality prediction based on EMD-transformer-BiLSTM. Sci. Rep. 2024, 14, 20513. [Google Scholar] [CrossRef]

- Tian, J.; Liang, Y.; Xu, R.; Chen, P.; Guo, C.; Zhou, A.; Pan, L.; Rao, Z.; Yang, B. Air Quality Prediction with Physics-Informed Dual Neural ODEs in Open Systems. arXiv 2025, arXiv:2410.19892. [Google Scholar] [CrossRef]

- Luo, J.; Gong, Y. Air pollutant prediction based on ARIMA-WOA-LSTM model. Atmos. Pollut. Res. 2023, 14, 101761. [Google Scholar] [CrossRef]

- Park, J.; Seo, Y.; Cho, J. Unsupervised outlier detection for time-series data of indoor air quality using LSTM autoencoder with ensemble method. J. Big Data 2023, 10, 66. [Google Scholar] [CrossRef]

- Anas, H.; El Mghouchi, Y.E.; Halima, Y.; Nawal, A.; Mohamed, C. Novel climate classification based on the information of solar radiation intensity: An application to the climatic zoning of Morocco. Energy Convers. Manag. 2021, 247, 114770. [Google Scholar] [CrossRef]

- Hector, I.; Panjanathan, R. Predictive maintenance in Industry 4.0: A survey of planning models and machine learning techniques. PeerJ Comput. Sci 2024, 10, e2016. [Google Scholar] [CrossRef] [PubMed]

- Condurache-Bota, S.; Draşovean, R.M.; Tigau, N. Analysis of the particulate matter long term emissions in Romania by sectors of activities. Math. Phys. Theor. Mech. 2023, 46, 90–100. [Google Scholar] [CrossRef]

- Moldovan, C.S.; Mateescu, M.D.; Sbirna, L.S.; Ionescu, C.; Sbirna, S. Study regarding concentration of main air pollutants in Craiova (Romania)–Comparison between the first half of 2011 and the similar period of 2010. In SESAM; INSEMEX: Petrosani, Romania, 2011; p. 368. [Google Scholar]

- Buzatu, G.D.; Dodocioiu, A.M. Air quality study in Craiova municipality based on data provided by uRADm independent sensor network. Ann. Univ. Craiova Biol. Hortic. Food Prod. Process. Technol. Environ. Eng. 2022, 27, 63. [Google Scholar] [CrossRef]

- Velea, L.; Udriștioiu, M.T.; Puiu, S.; Motișan, R.; Amarie, D. A Community-Based Sensor Network for Monitoring the Air Quality in Urban Romania. Atmosphere 2023, 14, 840. [Google Scholar] [CrossRef]

- Udristioiu, M.T.; Velea, L.; Motisan, R. First results given by the independent air pollution monitoring network from Craiova city Romania. AIP Conf. Proc. 2023, 2843, 040001. [Google Scholar] [CrossRef]

- Pekdogan, T.; Udriștioiu, M.T.; Yildizhan, H.; Ameen, A. From Local Issues to Global Impacts: Evidence of Air Pollution for Romania and Turkey. Sensors 2024, 24, 1320. [Google Scholar] [CrossRef]

- Yildizhan, H.; Udriștioiu, M.T.; Pekdogan, T.; Ameen, A. Observational study of ground-level ozone and climatic factors in Craiova, Romania, based on one-year high-resolution data. Sci. Rep. 2024, 14, 26733. [Google Scholar] [CrossRef]

- Dunea, D.; Pohoata, A.; Iordache, S. Using wavelet–feedforward neural networks to improve air pollution forecasting in urban environments. Environ. Monit. Assess. 2015, 187, 477. [Google Scholar] [CrossRef] [PubMed]

- Dudáš, A.; Udristioiu, M.T.; Alkharusi, T.; Yildizhan, H.; Sampath, S.K. Examining effects of air pollution on photovoltaic systems via interpretable random forest model. Renew. Energy 2024, 232, 121066. [Google Scholar] [CrossRef]

- Velea, L.; Bojariu, R.; Burada, C.; Udristioiu, M.T.; Paraschivu, M.; Burce, R.D. Characteristics of extreme temperatures relevant for agriculture in the near future (2021–2040) in Romania. Sci. Pap. Ser. E Land Reclam. Earth Obs. Surv. Environ. Eng. 2021, 10, 70–75. [Google Scholar]

- Brâncuș, M.M.; Burada, C.; Mănescu, C. The impact of late and early snowfall on urban areas in southwestern Romania. In Proceedings of the Climate, Water and Society: Changes and Challenges, 36th Conference of the International Association of Climatology, Bucharest, Romania, 3–7 July 2023; pp. 3–7. [Google Scholar]

- Udristioiu, M.T.; Velea, L.; Bojariu, R.; Sararu, S.C. Assessment of urban heat Island for Craiova from satellite-based LST. AIP Conf. Proc. 2017, 1916, 040004. [Google Scholar] [CrossRef]

- Badescu, V. Assessing the performance of solar radiation computing models and model selection procedures. J. Atmos. Sol.-Terr. Phys. 2013, 105, 119–134. [Google Scholar] [CrossRef]

- Quinlan, J.R. Induction of decision trees. Mach. Learn. 1986, 1, 81–106. [Google Scholar] [CrossRef]

- Variance Threshold as Early Screening to Boruta Feature Selection for Intrusion Detection System. Available online: https://ieeexplore.ieee.org/abstract/document/9608852 (accessed on 15 March 2025).

- Wang, J.; Zhang, H.; Wang, J.; Pu, Y.; Pal, N.R. Feature Selection Using a Neural Network with Group Lasso Regularization and Controlled Redundancy. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 1110–1123. [Google Scholar] [CrossRef] [PubMed]

- Rahmat, F.; Zulkafli, Z.; Ishak, A.J.; Abdul Rahman, R.Z.; Stercke, S.D.; Buytaert, W.; Tahir, W.; Ab Rahman, J.; Ibrahim, S.; Ismail, M. Supervised feature selection using principal component analysis. Knowl. Inf. Syst. 2024, 66, 1955–1995. [Google Scholar] [CrossRef]

- Ahmad, I. Feature Selection Using Particle Swarm Optimization in Intrusion Detection. Int. J. Distrib. Sens. Netw. 2015, 11, 806954. [Google Scholar] [CrossRef]

- Bai, Y.; Xie, J.; Liu, C.; Tao, Y.; Zeng, B.; Li, C. Regression modeling for enterprise electricity consumption: A comparison of recurrent neural network and its variants. Int. J. Electr. Power Energy Syst. 2021, 126, 106612. [Google Scholar] [CrossRef]

- Cortes, C.; Vapnik, V. Support-Vector Networks. Mach. Learn. 1995, 20, 273–297. [Google Scholar] [CrossRef]

- Najibi, F.; Apostolopoulou, D.; Alonso, E. Enhanced performance Gaussian process regression for probabilistic short-term solar output forecast. Int. J. Electr. Power Energy Syst. 2021, 130, 106916. [Google Scholar] [CrossRef]

- Mousavirad, S.J.; Ebrahimpour-Komleh, H. Feature selection using modified imperialist competitive algorithm. In Proceedings of the ICCKE 2013, Mashhad, Iran, 31 October–1 November 2013; pp. 400–405. [Google Scholar] [CrossRef]

- Got, A.; Moussaoui, A.; Zouache, D. Hybrid filter-wrapper feature selection using whale optimization algorithm: A multi-objective approach. Expert Syst. Appl. 2021, 183, 115312. [Google Scholar] [CrossRef]

- Kubalík, J.; Derner, E.; Žegklitz, J.; Babuška, R. Symbolic Regression Methods for Reinforcement Learning. IEEE Access 2021, 9, 139697–139711. [Google Scholar] [CrossRef]

- Abdel-Basset, M.; Ding, W.; El-Shahat, D. A hybrid Harris Hawks optimization algorithm with simulated annealing for feature selection. Artif. Intell. Rev. 2021, 54, 593–637. [Google Scholar] [CrossRef]

- van der Heide, E.M.M.; Veerkamp, R.F.; van Pelt, M.L.; Kamphuis, C.; Athanasiadis, I.; Ducro, B.J. Comparing regression, naive Bayes, and random forest methods in the prediction of individual survival to second lactation in Holstein cattle. J. Dairy Sci. 2019, 102, 9409–9421. [Google Scholar] [CrossRef]

- Moayedikia, A.; Ong, K.L.; Boo, Y.L.; Yeoh, W.G.; Jensen, R. Feature selection for high dimensional imbalanced class data using harmony search. Eng. Appl. Artif. Intell. 2017, 57, 38–49. [Google Scholar] [CrossRef]

- Rodrigues, D.; Pereira, L.A.M.; Nakamura, R.Y.M.; Costa, K.A.P.; Yang, X.S.; Souza, A.N.; Papa, J.P. A wrapper approach for feature selection based on Bat Algorithm and Optimum-Path Forest. Expert Syst. Appl. 2014, 41, 2250–2258. [Google Scholar] [CrossRef]

- Huang, G.B.; Zhu, Q.Y.; Siew, C.K. Extreme learning machine: Theory and applications. Neurocomputing 2006, 70, 489–501. [Google Scholar] [CrossRef]

- Maulud, D.; Abdulazeez, A.M. A Review on Linear Regression Comprehensive in Machine Learning. J. Appl. Sci. Technol. Trends 2020, 1, 140–147. [Google Scholar] [CrossRef]

- Hastie, T.J. Generalized Additive Models. In Statistical Models in S; Routledge: New York, NY, USA, 1992. [Google Scholar]

- Brabazon, A.; Cui, W.; O’Neill, M. The raven roosting optimisation algorithm. Soft Comput. 2016, 20, 525–545. [Google Scholar] [CrossRef]

- Maaroof, B.B.; Rashid, T.A.; Abdulla, J.M.; Hassan, B.A.; Alsadoon, A.; Mohammadi, M.; Khishe, M.; Mirjalili, S. Current Studies and Applications of Shuffled Frog Leaping Algorithm: A Review. Arch. Comput. Methods Eng. 2022, 29, 3459–3474. [Google Scholar] [CrossRef]

- Jia, H.; Xing, Z.; Song, W. A New Hybrid Seagull Optimization Algorithm for Feature Selection. IEEE Access 2019, 7, 49614–49631. [Google Scholar] [CrossRef]

- Hegazy, A.H.E.; Makhlouf, M.A.; El-Tawel, G.H.S. Improved salp swarm algorithm for feature selection. J. King Saud Univ.-Comput. Inf. Sci. 2020, 32, 335–344. [Google Scholar] [CrossRef]

- Song, Y.; Liang, J.; Lu, J.; Zhao, X. An efficient instance selection algorithm for k nearest neighbor regression. Neurocomputing 2017, 251, 26–34. [Google Scholar] [CrossRef]

- Moe, S.J.; Wolf, R.; Xie, L.; Landis, W.G.; Kotamäki, N.; Tollefsen, K.E. Quantification of an Adverse Outcome Pathway Network by Bayesian Regression and Bayesian Network Modeling. Integr. Environ. Assess. Manag. 2021, 17, 147–164. [Google Scholar] [CrossRef]

- Zivkovic, M.; Jovanovic, L.; Ivanovic, M.; Krdzic, A.; Bacanin, N.; Strumberger, I. Feature Selection Using Modified Sine Cosine Algorithm with COVID-19 Dataset. In Evolutionary Computing and Mobile Sustainable Networks; Suma, V., Fernando, X., Du, K.L., Wang, H., Eds.; Springer: Singapore, 2022; pp. 15–31. [Google Scholar] [CrossRef]

- Emary, E.; Zawbaa, H.M. Feature selection via Lèvy Antlion optimization. Pattern Anal. Applic. 2019, 22, 857–876. [Google Scholar] [CrossRef]

- Shahrom, M.A.S.M.; Zainal, N.; Aziz, M.F.A.; Mostafa, S.A. A Review of Glowworm Swarm Optimization Meta-Heuristic Swarm Intelligence and its Fusion in Various Applications. Fusion: Pract. Appl. 2023, 13, 89–102. [Google Scholar] [CrossRef]

- Hafez, A.I.; Hassanien, A.E.; Zawbaa, H.M.; Emary, E. Hybrid Monkey Algorithm with Krill Herd Algorithm optimization for feature selection. In Proceedings of the 2015 11th International Computer Engineering Conference (ICENCO), Cairo, Egypt, 29–30 December 2015; pp. 273–277. [Google Scholar] [CrossRef]

- Rostami, O.; Kaveh, M. Optimal feature selection for SAR image classification using biogeography-based optimization (BBO), artificial bee colony (ABC) and support vector machine (SVM): A combined approach of optimization and machine learning. Comput. Geosci. 2021, 25, 911–930. [Google Scholar] [CrossRef]

- Sarbazi-Azad, S.; Saniee Abadeh, M.; Mowlaei, M.E. Using data complexity measures and an evolutionary cultural algorithm for gene selection in microarray data. Soft Comput. Lett. 2021, 3, 100007. [Google Scholar] [CrossRef]

- Li, Z. A local opposition-learning golden-sine grey wolf optimization algorithm for feature selection in data classification. Appl. Soft Comput. 2023, 142, 110319. [Google Scholar] [CrossRef]

- Natekin, A.; Knoll, A. Gradient boosting machines, a tutorial. Front. Neurorobot. 2013, 7, 21. [Google Scholar] [CrossRef]

- Nadimi-Shahraki, M.H.; Zamani, H.; Mirjalili, S. Enhanced whale optimization algorithm for medical feature selection: A COVID-19 case study. Comput. Biol. Med. 2022, 148, 105858. [Google Scholar] [CrossRef]

- Ming, L.; Zhao, J. Feature selection for chemical process fault diagnosis by artificial immune systems. Chin. J. Chem. Eng. 2018, 26, 1599–1604. [Google Scholar] [CrossRef]

- Dhal, K.G.; Das, A.; Sasmal, B.; Ghosh, T.K.; Sarkar, K. Eagle Strategy in Nature-Inspired Optimization: Theory, Analysis, Applications, and Comparative Study. Arch. Comput. Methods Eng. 2024, 31, 1213–1232. [Google Scholar] [CrossRef]

- Wu, Q.; Ma, Z.; Fan, J.; Xu, G.; Shen, Y. A Feature Selection Method Based on Hybrid Improved Binary Quantum Particle Swarm Optimization. IEEE Access 2019, 7, 80588–80601. [Google Scholar] [CrossRef]

- Zhang, L.; Chen, X. A Velocity-Guided Grey Wolf Optimization Algorithm with Adaptive Weights and Laplace Operators for Feature Selection in Data Classification. IEEE Access 2024, 12, 39887–39901. [Google Scholar] [CrossRef]

- Chen, T.; Guestrin, C. XGBoost: A Scalable Tree Boosting System. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794. [Google Scholar] [CrossRef]

- Chakraborty, K.; Mehrotra, K.; Mohan, C.K.; Ranka, S. Forecasting the behavior of multivariate time series using neural networks. Neural Netw. 1992, 5, 961–970. [Google Scholar] [CrossRef]

- Perez, M.; Marwala, T. Microarray data feature selection using hybrid genetic algorithm simulated annealing. In Proceedings of the 2012 IEEE 27th Convention of Electrical and Electronics Engineers in Israel, Eilat, Israel, 14–17 November 2012; pp. 1–5. [Google Scholar] [CrossRef]

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32. [Google Scholar] [CrossRef]

- Chen, Y.; Gao, B.; Lu, T.; Li, H.; Wu, Y.; Zhang, D.; Liao, X. A Hybrid Binary Dragonfly Algorithm with an Adaptive Directed Differential Operator for Feature Selection. Remote Sens. 2023, 15, 3980. [Google Scholar] [CrossRef]

- Menghour, K.; Souici-Meslati, L. Hybrid ACO-PSO Based Approaches for Feature Selection. Int. J. Intell. Eng. Syst. 2016, 9, 65–79. [Google Scholar] [CrossRef]

- Chin, V.J.; Salam, Z. Coyote optimization algorithm for the parameter extraction of photovoltaic cells. Sol. Energy 2019, 194, 656–670. [Google Scholar] [CrossRef]

- Ahmed, S.; Mafarja, M.H.; Aljarah, I. Feature Selection Using Salp Swarm Algorithm with Chaos. In Proceedings of the 2nd International Conference on Intelligent Systems, Metaheuristics & Swarm Intelligence, New York, NY, USA, 24–25 March 2018; Association for Computing Machinery: New York, NY, USA, 2019; pp. 65–69. [Google Scholar] [CrossRef]

- Panahi, M.; Sadhasivam, N.; Pourghasemi, H.R.; Rezaie, F.; Lee, S. Spatial prediction of groundwater potential mapping based on convolutional neural network (CNN) and support vector regression (SVR). J. Hydrol. 2020, 588, 125033. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).