Daily Peak Load Prediction Method Based on XGBoost and MLR

Abstract

1. Introduction

2. Overall Framework of Peak Load Forecasting Model

3. Framework Modules

3.1. ICEEMDAN Algorithm Mechanism

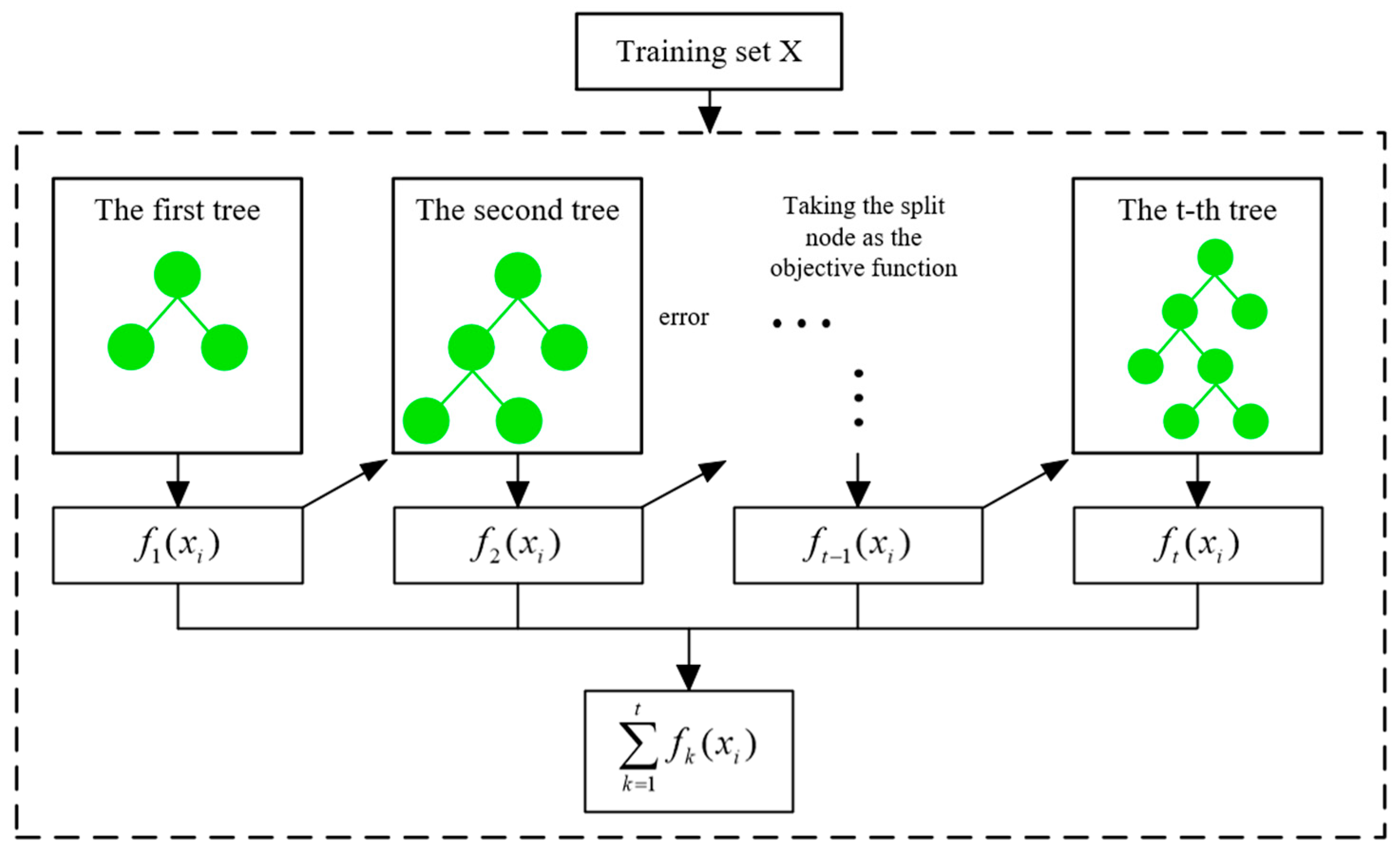

3.2. XGBoost Algorithm Mechanism
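
As a minimal sketch of the boosting idea behind XGBoost, the following pure-Python code fits depth-1 regression trees (stumps) on a single feature with squared loss, using the regularized leaf weight $w = -G/(H+\lambda)$ and the corresponding split gain. It is a simplified illustration, not the `xgboost` library and not necessarily the configuration used in this work; the function names, the toy data, and the values of `lr` and `lam` are all assumptions.

```python
def fit_stump(xs, grads, lam=1.0):
    """Choose the threshold with the largest regularized gain (squared loss, hessian = 1)."""
    order = sorted(range(len(xs)), key=lambda i: xs[i])
    G, H = sum(grads), float(len(xs))
    # Fallback: no split, a single leaf with weight -G / (H + lam).
    best = (0.0, None, -G / (H + lam), -G / (H + lam))
    GL, HL = 0.0, 0.0
    for k in range(len(order) - 1):
        i, j = order[k], order[k + 1]
        GL += grads[i]
        HL += 1.0
        if xs[i] == xs[j]:
            continue  # cannot place a threshold between equal feature values
        GR, HR = G - GL, H - HL
        gain = 0.5 * (GL ** 2 / (HL + lam) + GR ** 2 / (HR + lam) - G ** 2 / (H + lam))
        if gain > best[0]:
            best = (gain, (xs[i] + xs[j]) / 2, -GL / (HL + lam), -GR / (HR + lam))
    return best[1:]  # (threshold, left weight, right weight)


def stump_predict(stump, x):
    threshold, w_left, w_right = stump
    if threshold is None:
        return w_left
    return w_left if x < threshold else w_right


def boost(xs, ys, n_rounds=50, lr=0.3, lam=1.0):
    """Additive training: each round fits a stump to the current gradients."""
    base = sum(ys) / len(ys)
    preds = [base] * len(xs)
    stumps = []
    for _ in range(n_rounds):
        # Gradient of 0.5 * (pred - y)^2 with respect to pred; the hessian is 1.
        grads = [p - y for p, y in zip(preds, ys)]
        stump = fit_stump(xs, grads, lam)
        stumps.append(stump)
        preds = [p + lr * stump_predict(stump, x) for p, x in zip(preds, xs)]
    return base, lr, stumps


def boost_predict(model, x):
    base, lr, stumps = model
    return base + lr * sum(stump_predict(s, x) for s in stumps)


# Hypothetical toy data: a step from 1 to 5 at x = 4.5.
xs = [float(i) for i in range(10)]
ys = [1.0] * 5 + [5.0] * 5
model = boost(xs, ys)
train_mse = sum((boost_predict(model, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)
```

Each round shrinks the remaining residual, so the training error on this toy series falls well below that of the constant baseline (the mean of `ys`).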

3.3. Multiple Linear Regression Mechanism
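
Multiple linear regression models the target as an intercept plus a weighted sum of the input features, with the weights chosen to minimize the squared error. The sketch below solves the normal equations $(A^{\top}A)\beta = A^{\top}y$ by Gauss-Jordan elimination in pure Python. The function names and the toy data are hypothetical and serve only to illustrate the mechanism.

```python
def fit_mlr(X, y):
    """Ordinary least squares with an intercept, solved via the normal equations."""
    # Prepend a constant 1.0 to every row so the first coefficient is the intercept.
    A = [[1.0] + list(row) for row in X]
    n = len(A[0])
    # Augmented system [A^T A | A^T y].
    M = [
        [sum(r[i] * r[j] for r in A) for j in range(n)]
        + [sum(r[i] * t for r, t in zip(A, y))]
        for i in range(n)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        if abs(M[col][col]) < 1e-12:
            raise ValueError("features are collinear; the normal equations are singular")
        for r in range(n):
            if r != col:
                factor = M[r][col] / M[col][col]
                M[r] = [a - factor * b for a, b in zip(M[r], M[col])]
    return [M[i][n] / M[i][i] for i in range(n)]


def predict_mlr(coef, row):
    return coef[0] + sum(c * v for c, v in zip(coef[1:], row))


# Hypothetical toy data generated exactly from y = 2 + 3*x1 - x2.
X = [[1.0, 2.0], [2.0, 1.0], [3.0, 4.0], [4.0, 3.0], [5.0, 5.0]]
y = [2 + 3 * a - b for a, b in X]
coef = fit_mlr(X, y)
```

Because the toy targets are noise-free, `coef` recovers the generating intercept and slopes up to floating-point error, and `predict_mlr(coef, [6.0, 2.0])` evaluates the fitted plane at a new point.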

3.4. Parallel Ensemble Learning Method of Bagging Mechanism
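
Bagging trains each ensemble member on a bootstrap resample of the training set and averages the members' predictions. Because the members do not depend on one another, they can be trained in parallel. The following is a minimal sketch under stated assumptions: the base learner is a one-feature least-squares line chosen for brevity (not the base learner of this work), training is sequential, and all names and data are hypothetical.

```python
import random


def fit_line(xs, ys):
    """Closed-form least-squares line; returns (slope, intercept)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        # Degenerate resample (all x identical): fall back to a constant model.
        return 0.0, mean_y
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sxx
    return slope, mean_y - slope * mean_x


def bagging_fit(xs, ys, n_estimators=25, seed=0):
    """Fit one base learner per bootstrap resample (sampling with replacement)."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_estimators):
        idx = [rng.randrange(n) for _ in range(n)]
        models.append(fit_line([xs[i] for i in idx], [ys[i] for i in idx]))
    return models


def bagging_predict(models, x):
    """Average the predictions of all ensemble members."""
    return sum(slope * x + intercept for slope, intercept in models) / len(models)


# Hypothetical noise-free toy data from y = 2x + 1, used only to keep the check exact.
xs = [float(i) for i in range(10)]
ys = [2 * x + 1 for x in xs]
models = bagging_fit(xs, ys)
prediction = bagging_predict(models, 10.0)
```

On real, noisy load data the members would differ from one another, and averaging them is what reduces the variance of the combined forecast.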

3.5. Sparrow Search Optimization Algorithm Mechanism

4. Case Analysis

4.1. Research Data and Evaluation Metrics

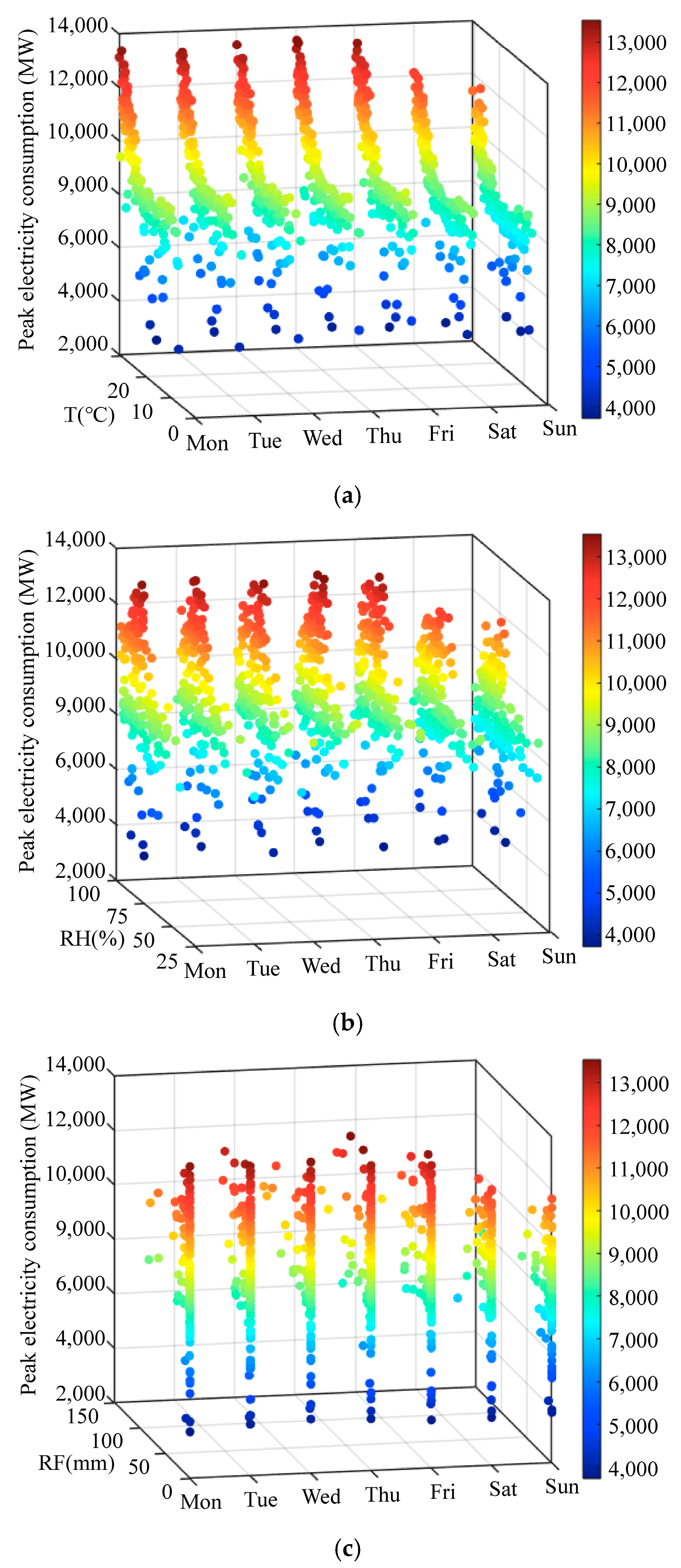

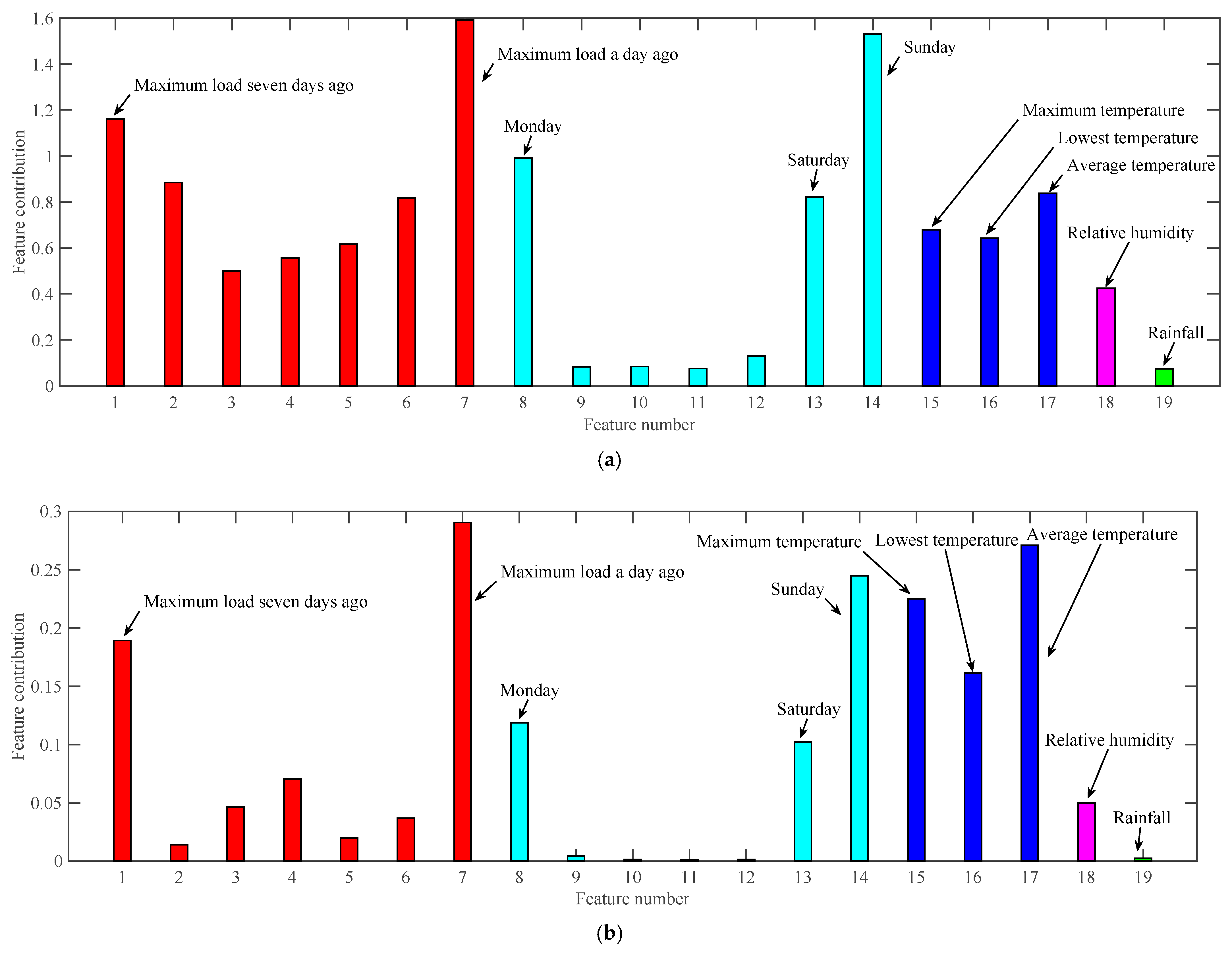
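
The three indicator columns in the comparison tables are unlabeled in this text. As a minimal sketch, assuming the indicators are the commonly used RMSE, MAE, and MAPE (an assumption that the source does not confirm), such error measures could be computed as follows. The function names and the sample values are hypothetical.

```python
import math


def rmse(actual, predicted):
    """Root mean squared error."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


def mae(actual, predicted):
    """Mean absolute error."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def mape(actual, predicted):
    """Mean absolute percentage error, in percent; actual values must be nonzero."""
    return 100.0 * sum(abs(a - p) / abs(a) for a, p in zip(actual, predicted)) / len(actual)


# Hypothetical daily peak loads and forecasts, for illustration only.
actual = [100.0, 200.0, 400.0]
predicted = [110.0, 190.0, 380.0]
scores = (rmse(actual, predicted), mae(actual, predicted), mape(actual, predicted))
```

RMSE is never smaller than MAE on the same errors, and MAPE is scale-free, which makes it convenient when comparing months with different load levels.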

4.2. Data Characteristics Analysis

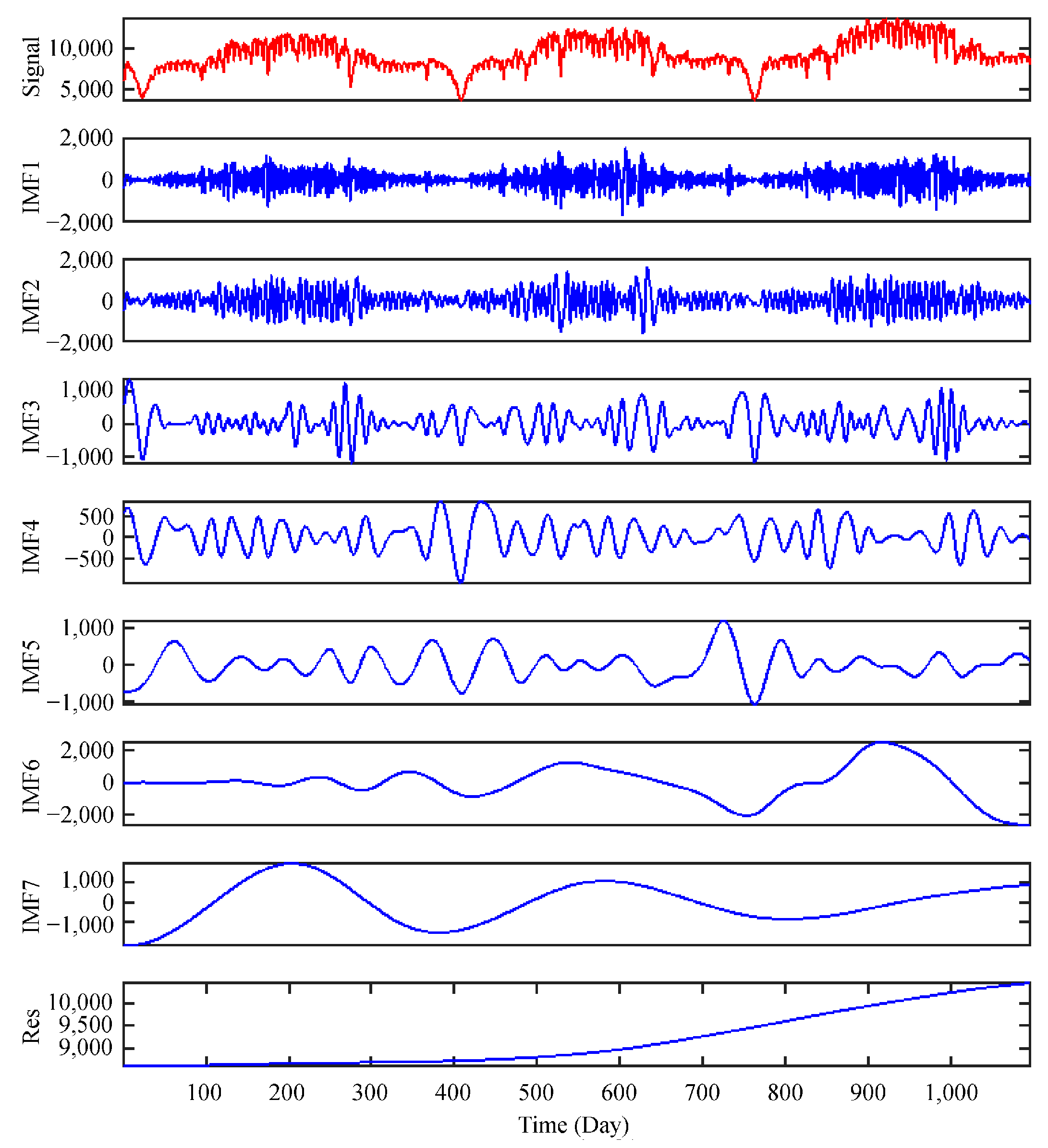

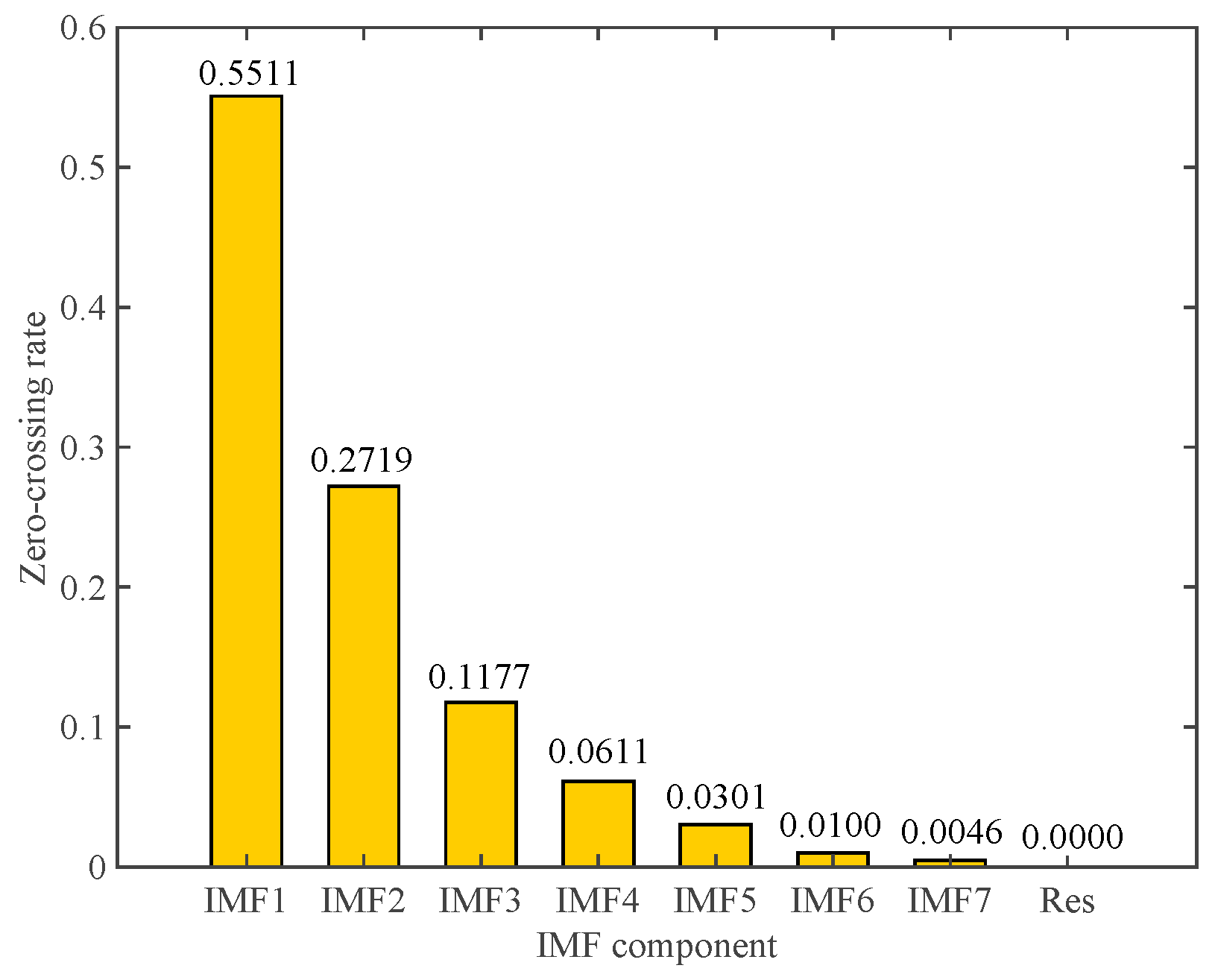

4.3. Sequence ICEEMDAN Decomposition

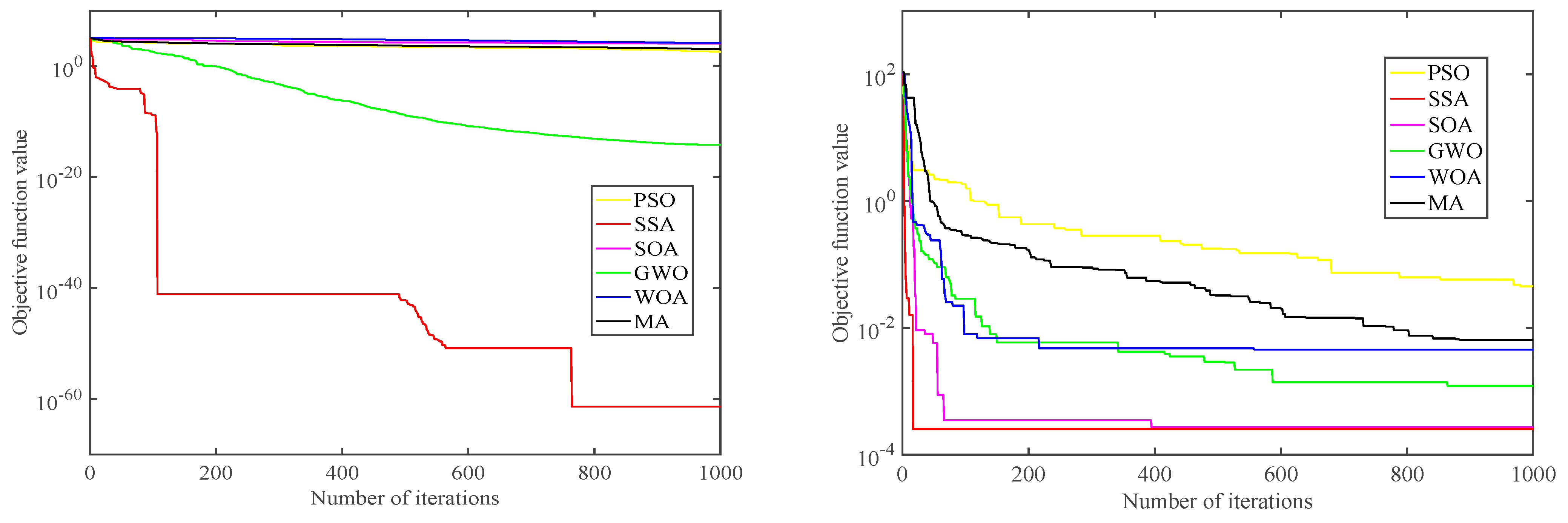

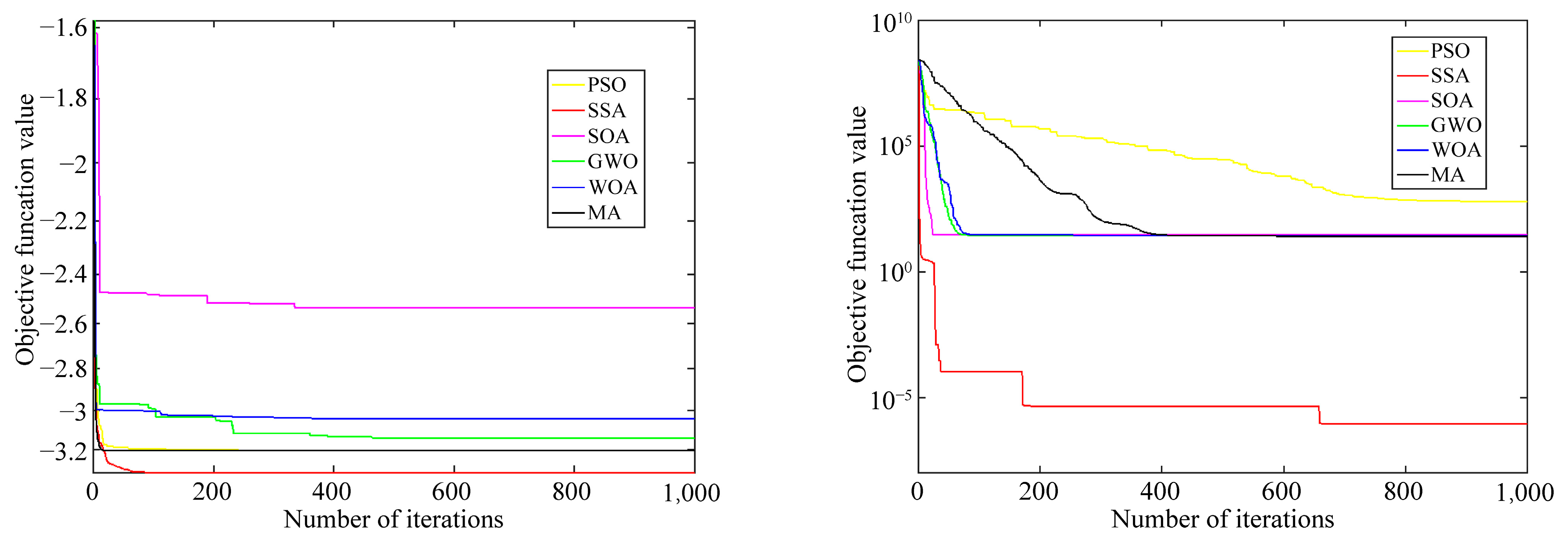

4.4. Hyperparameter Search Process

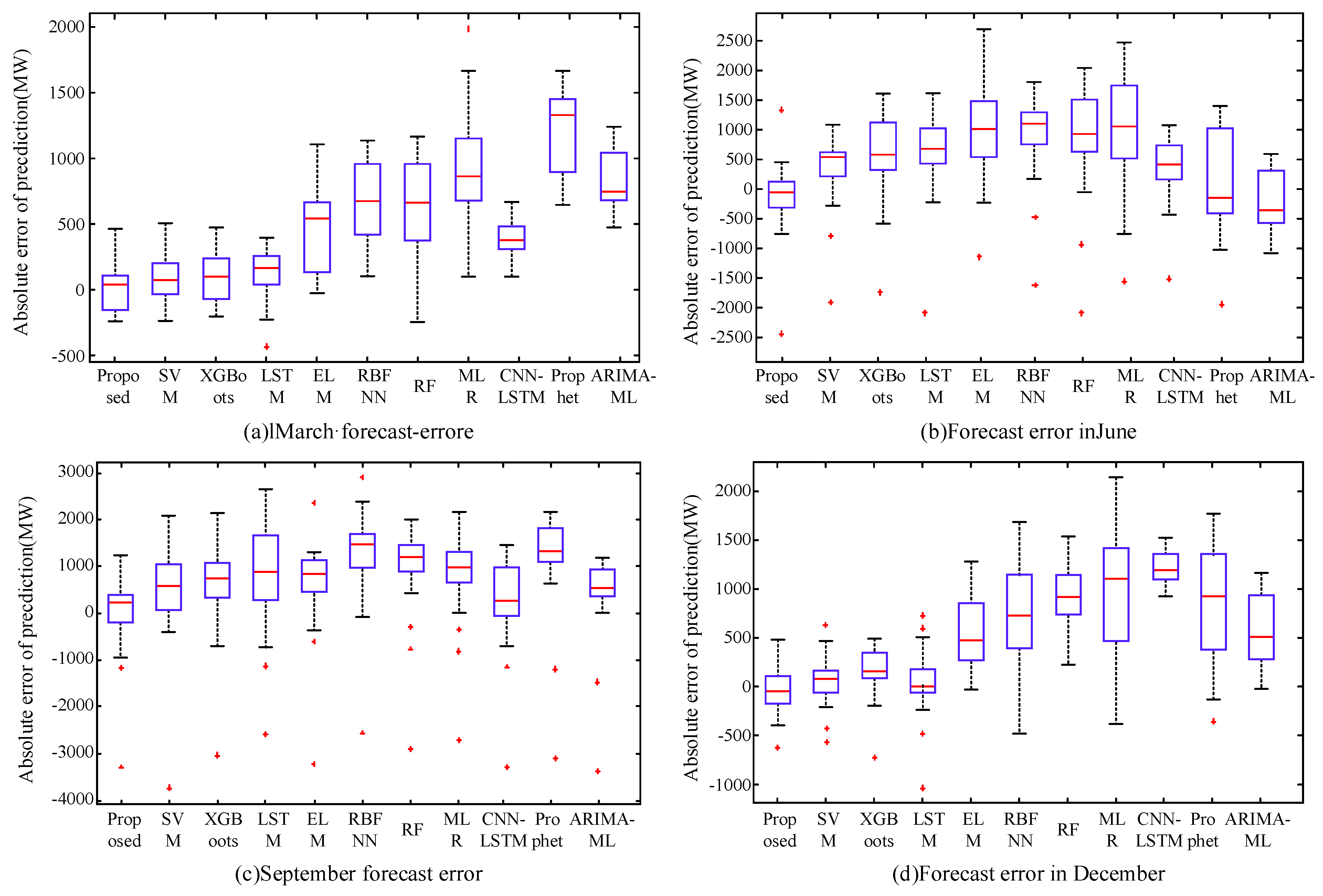

4.5. Comparative Analysis of Prediction Models

4.6. Scalability Verification

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Gao, Y.; Tahir, M.; Siano, P.; Bi, Y.; Hu, S.; Yang, J. Optimization of renewable energy-based integrated energy systems: A three-stage stochastic robust model. Appl. Energy 2025, 377, 124635.

- Meng, Q.; Jin, X.; Luo, F.; Wang, Z.; Hussain, S. Distributionally robust scheduling for benefit allocation in regional integrated energy system with multiple stakeholders. J. Mod. Power Syst. Clean Energy 2024, 12, 1631–1642.

- Wood, M.; Matrone, S.; Ogliari, E.; Leva, S. Comparing peak electricity load forecasting models for an industrial and a residential building. Math. Comput. Simul. 2026, 240, 303–316.

- Gao, Y.; Zhao, Y.; Hu, S.; Tahir, M.; Yuan, W.; Yang, J. A three-stage adjustable robust optimization framework for energy base leveraging transfer learning. Energy 2025, 319, 135037.

- Hong, Y.Y.; Apolinario, G.F.D.G.; Cheng, Y.H. Week-ahead daily peak load forecasting using hybrid convolutional neural network. IFAC PapersOnLine 2023, 56, 372–377.

- Gao, Z.; Yin, X.; Zhao, F.; Meng, H.; Hao, Y.; Yu, M. A two-layer SSA-XGBoost-MLR continuous multi-day peak load forecasting method based on hybrid aggregated two-phase decomposition. Energy Rep. 2022, 8, 12426–12441.

- Lianbing, L.I.; Guoqiang, G.A.; Weiguang, C.H.; Wenjie, F.U.; Chao, Z.H.; Shasha, Z.H. Ultra short term load power prediction considering feature recombination and BiGRU-Attention-XGBoost model. Mod. Electr. Power 2023, 41, 1–11.

- Cai, Q.; Chao, Z.; Su, B.; Wang, L.; Duan, Q.; Wen, Y.; Li, B. Short-term load forecasting method based on a novel robust loss neural network algorithm. Power Syst. Technol. 2020, 44, 4132–4139.

- Yu, Y.-L.; Li, W.; Sheng, D.-R.; Chen, J.-H. A hybrid short-term load forecasting method based on improved ensemble empirical mode decomposition and back propagation neural network. J. Zhejiang Univ. Sci. A Appl. Phys. Eng. 2016, 17, 101–114.

- Pang, H.; Gao, J.; Du, Y. A short-term load probability density prediction based on quantile regression of time convolution network. Power Syst. Technol. 2020, 44, 1343–1350.

- Fan, S.; Li, L.; Wang, S.; Liu, X.; Yu, Y.; Hao, B. Application analysis and exploration of artificial intelligence technology in power grid dispatch and control. Power Syst. Technol. 2020, 44, 401–411.

- Wang, S.; Wang, X.; Wang, S.; Wang, D. Bi-directional long short-term memory method based on attention mechanism and rolling update for short-term load forecasting. Int. J. Electr. Power Energy Syst. 2019, 109, 470–479.

- Chang, Y.; Sun, H.; Gu, T.; Du, W.; Wang, Y.; Li, W. Monthly forecast of wind power generation using historical data expansion method. Power Syst. Technol. 2021, 45, 1059–1068.

- Xu, Y.; Xiang, Y.; Ma, T. VMD-GRU short-term power load forecasting model based on optimized parameters of particle swarm algorithm. J. North China Electr. Power Univ. (Nat. Sci. Ed.) 2023, 50, 38–47.

- Li, Y.; Liu, X.; Xing, F.; Wen, G.; Lu, N.; He, H.; Jiao, R. Daily peak load prediction based on correlation analysis and bi-directional long short-term memory network. Power Syst. Technol. 2021, 45, 2719–2730.

- Rafi, S.H.; Al-Masood, N.; Deeba, S.R.; Hossain, E. A short-term load forecasting method using integrated CNN and LSTM network. IEEE Access 2021, 9, 32436–32448.

- Tang, X.; Dai, Y.; Liu, Q.; Dang, X.; Xu, J. Application of bidirectional recurrent neural network combined with deep belief network in short-term load forecasting. IEEE Access 2019, 7, 160660–160670.

- Afrasiabi, M.; Mohammadi, M.; Rastegar, M.; Stankovic, L.; Afrasiabi, S.; Khazaei, M. Deep-based conditional probability density function forecasting of residential loads. IEEE Trans. Smart Grid 2020, 11, 3646–3657.

- Al-Rakhami, M.; Gumaei, A.; Alsanad, A.; Alamri, A.; Hassan, M.M. An ensemble learning approach for accurate energy load prediction in residential buildings. IEEE Access 2019, 7, 48328–48338.

- Shi, J.; Ma, L.; Li, C.; Liu, N.; Zhang, J. Peak load forecasting method based on serial-parallel ensemble learning. Chin. J. Electr. Eng. 2020, 40, 4463–4472, 4726.

- Yu, Y.; Wang, Z.; Chen, X.; Feng, Q. Particle swarm optimization algorithm based on teaming behavior. Knowl. Based Syst. 2025, 318, 113555.

- Jin, Z.; Li, X.; Qiu, Z.; Li, F.; Kong, E.; Li, B. A data-driven framework for lithium-ion battery RUL using LSTM and XGBoost with feature selection via Binary Firefly Algorithm. Energy 2025, 314, 134229.

- Wang, L.; Peng, L.; Xiong, X.; Li, Y.; Qi, Y.; Hu, X. Research on high-speed constant tension spinning control strategy based on vibration detection and enhanced firefly algorithm based FOPID controller. Measurement 2025, 117, 117789.

- Meng, Q.; Xu, J.; Ge, L.; Wang, Z.; Wang, J.; Xu, L.; Tang, Z. Economic optimization operation approach of integrated energy system considering wind power consumption and flexible load regulation. J. Electr. Eng. Technol. 2024, 19, 209–221.

- Meng, Q.; Zu, G.; Ge, L.; Li, S.; Xu, L.; Wang, R.; He, K.; Jin, S. Dispatching strategy for low-carbon flexible operation of park-level integrated energy system. Appl. Sci. 2022, 12, 12309.

- Liang, B.; Feng, W. Bearing fault diagnosis based on ICEEMDAN deep learning network. Processes 2023, 11, 2440.

| Ref. | Hybrid Model | Tree Ensemble Algorithm | Heuristic Algorithm |
|---|---|---|---|
| [7] | × | √ | × |
| [8,9,10,11] | × | × | × |
| [12,13] | √ | × | × |
| [14] | × | × | × |
| [19] | √ | √ | × |
| [20] | √ | √ | PSO |
| [21] | √ | √ | GA |
| Proposed | √ | √ | SSA |

| Algorithm | Indicators | March | June | September | December |
|---|---|---|---|---|---|
| Proposed | | 177.15 | 576.27 | 817.91 | 263.61 |
| | | 141.84 | 361.58 | 547.97 | 193.36 |
| | | 1.65 | 3.31 | 5.13 | 2.23 |
| XGBoost | | 207.17 | 920.11 | 1137.34 | 296.93 |
| | | 173.76 | 773.18 | 942.41 | 241.15 |
| | | 1.99 | 6.47 | 8.23 | 2.75 |
| RF | | 731.85 | 1167.39 | 1303.76 | 1021.70 |
| | | 666.01 | 1032.67 | 1198.35 | 940.47 |
| | | 7.55 | 8.66 | 10.38 | 10.54 |
| SVM | | 182.65 | 732.98 | 1041.73 | 241.24 |
| | | 139.56 | 575.15 | 769.36 | 174.64 |
| | | 1.60 | 5.02 | 6.85 | 1.99 |
| LSTM | | 220.55 | 922.71 | 1317.93 | 316.98 |
| | | 190.11 | 800.63 | 1090.02 | 202.57 |
| | | 2.20 | 6.82 | 9.40 | 2.30 |
| RBFNN | | 716.19 | 1205.61 | 1603.76 | 886.89 |
| | | 658.71 | 1120.36 | 1463.08 | 781.68 |
| | | 7.48 | 9.59 | 12.58 | 8.80 |
| ELM | | 529.59 | 1262.32 | 1123.58 | 668.41 |
| | | 430.84 | 1113.24 | 934.17 | 570.69 |
| | | 4.86 | 9.37 | 8.20 | 6.43 |
| CNN-LSTM | | 205.16 | 836.25 | 1003.27 | 289.24 |
| | | 177.77 | 739.46 | 830.53 | 221.85 |
| | | 1.84 | 2.35 | 3.27 | 2.64 |
| Prophet | | 1235.23 | 1835.26 | 1300.98 | 1653.01 |
| | | 1039.9 | 1644.27 | 1124.42 | 1265.07 |
| | | 15.81 | 18.41 | 11.26 | 15.28 |
| ARIMA-ML | | 976.53 | 1022.18 | 989.36 | 1021.02 |
| | | 836.66 | 898.4 | 749.1 | 815.38 |
| | | 9.53 | 9.82 | 9.66 | 9.91 |
| MLR | | 1039.51 | 1377.68 | 1222.65 | 1158.14 |
| | | 950.33 | 1194.96 | 1089.15 | 1015.69 |
| | | 10.95 | 9.92 | 9.41 | 11.49 |

| Algorithm | | | |
|---|---|---|---|
| Proposed | 11.44 | 9.47 | 1.28 |
| RF | 34.64 | 28.91 | 3.79 |
| XGBoost | 35.82 | 32.90 | 4.35 |
| SVM | 22.31 | 18.93 | 2.48 |
| LSTM | 21.59 | 17.80 | 2.40 |
| RBFNN | 37.11 | 32.96 | 4.34 |
| ELM | 25.18 | 21.50 | 2.94 |
| CNN-LSTM | 20.18 | 17.23 | 2.36 |
| Prophet | 32.58 | 27.02 | 4.21 |
| ARIMA-ML | 28.38 | 24.07 | 3.26 |
| MLR | 30.67 | 23.49 | 3.99 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cao, B.; Chen, Y.; Hu, S.; Guo, Y.; Liu, X.; Wang, Y.; Cheng, X.; Zhang, Q.; Yang, J. Daily Peak Load Prediction Method Based on XGBoost and MLR. Appl. Sci. 2025, 15, 11180. https://doi.org/10.3390/app152011180