Abstract

To address the challenges of low detection accuracy and weak generalization in UAV aerial imagery caused by complex ground environments, significant scale variations among targets, dense small objects, and background interference, this paper proposes an improved lightweight multi-scale small-object detection model, MBD-YOLO (MBFF module, BiMS-FPN, and Dual-Stream Head). Specifically, to enhance multi-scale feature extraction capabilities, we introduce the Multi-Branch Feature Fusion (MBFF) module, which dynamically adjusts receptive fields through parallel branches and adaptive depthwise convolutions, expanding the receptive field while preserving detail perception. We further design a lightweight Bidirectional Multi-Scale Feature Aggregation Pyramid Network (BiMS-FPN), integrating bidirectional propagation paths and a Multi-Scale Feature Aggregation (MSFA) module to mitigate feature spatial misalignment and improve small-target detection. Additionally, the Dual-Stream Head with NMS-free architecture leverages a task-aligned architecture and dynamic matching strategies to boost inference speed without compromising accuracy. Experiments on the VisDrone2019 dataset demonstrate that MBD-YOLO-n surpasses YOLOv8n by 6.3% in mAP50 and 8.2% in mAP50–95, with accuracy gains of 17.96–55.56% for several small-target categories, while increasing parameters by merely 3.1%. Moreover, MBD-YOLO-s achieves superior detection accuracy, efficiency, and generalization with only 12.1 million parameters, outperforming state-of-the-art models and proving suitable for resource-constrained embedded deployment scenarios. The superior performance of MBD-YOLO, which harmonizes high precision with low computational demand, fulfills the critical requirements for real-time deployment on resource-limited UAVs, showing great promise for applications in traffic monitoring, urban security, and agricultural surveying.

1. Introduction

Unmanned Aerial Vehicles (UAVs), also known as drones, are unpiloted aircraft operated remotely or autonomously via integrated program systems []. Equipped with high-resolution cameras and intelligent algorithms, they enable dynamic monitoring and data collection of ground-based targets. Compared to traditional satellite remote sensing or ground-based photogrammetry, UAVs offer distinct advantages. First, they feature lower operational costs and faster data acquisition capabilities, allowing for real-time capture of high-quality imagery. Second, UAVs possess high maneuverability, enabling them to perform missions effectively in complex environments, such as densely built urban areas or disaster sites. Finally, their high-resolution imaging capability (typically reaching approximately 2000 × 1500 pixels) establishes a foundation for fine-grained target identification []. These advantages have led to the widespread application of UAVs across various fields, including traffic supervision, urban planning, agricultural monitoring, power line inspection, and emergency disaster response [].

UAV image target detection aims to classify and locate objects within aerial images using computer vision algorithms. The field faces three major challenges that traditional detection settings rarely present. First, objects from different categories often exhibit highly varying scales due to the UAV’s flight altitude and viewing angle, which complicates unified feature extraction and impairs detection accuracy. Second, in aerial scenarios, dense distribution and mutual occlusion of objects frequently lead to false negatives (missed detections) or false positives (false alarms); moreover, small objects carry limited feature information and are often obscured by background noise, further degrading detection performance. Third, varying illumination, weather conditions, and terrain degrade image quality, increasing the risk of confusion between objects and the background []. These problems severely constrain the accuracy and reliability of UAV target detection and motivate the demand for efficient methods that meet real-world application requirements.

In the early stages of UAV target detection, methods primarily combined handcrafted feature detection with traditional classifiers. Typical approaches included SIFT (Scale-Invariant Feature Transform) feature point matching [], HOG-LBP (Histogram of Oriented Gradients–Local Binary Patterns) feature fusion [], and SVM (support vector machine)-driven segmentation techniques []. Although these methods achieved preliminary success in laboratory environments, they were heavily reliant on manual feature selection, which imposed significant limitations on their accuracy, objectivity, robustness, and generalization capability. Conversely, deep learning architectures are characterized by several key strengths: superior generalization, the capacity for end-to-end optimization, and inherent parallelism that boosts computational efficiency []. Thus, researchers increasingly favor utilizing deep learning algorithms to extract target features from UAV aerial images for the purpose of enhancing detection accuracy.

Current deep learning-based object detection models fall into two major classes: two-stage algorithms and one-stage algorithms []. Two-stage algorithms, including R-CNN [], SPPNet [], Fast R-CNN [], and Faster R-CNN [], share a common framework: an initial stage generates a set of candidate object regions (region proposals), which Convolutional Neural Networks (CNNs) then process to perform classification and bounding-box regression. Although these algorithms are accurate and robust in complex scenes and when locating small targets, they suffer from substantial computational requirements and long inference times. In contrast, one-stage detectors, represented by SSD [], RT-DETR [], and the YOLO family of models [], use an end-to-end unified design and a global feature-sharing mechanism. They estimate object categories and spatial coordinates simultaneously, directly on the convolutional feature layers, and thereby bypass the candidate region generation and screening steps that give two-stage approaches their higher computational complexity (e.g., FLOPs and parameters) []. This substantially reduces computational redundancy and enables faster detection.

The YOLO (You Only Look Once) series stands as one of the most representative one-stage detection algorithms. YOLOv1 [] pioneered the single-stage detection paradigm by conceptualizing the detection task through a regression-based framework that outputs bounding-box parameters and class-specific confidence values in one pass, thereby significantly increasing detection speed. However, it exhibited weaker recall rates and localization accuracy. YOLOv2 [] enhanced bounding box prediction by introducing the Anchor mechanism, improved stability through Batch Normalization (BatchNorm) and high-resolution fine-tuning, and boosted generalization via multi-scale training. These innovations enabled it to surpass two-stage models in both speed and accuracy for the first time. YOLOv3 [] introduced a multi-scale prediction head (an early form of Feature Pyramid Networks, FPNs), leveraging three distinct feature scales to detect objects of different sizes, which markedly improved small-target detection capability. It adopted the novel Darknet-53 backbone network [], optimizing residual connections for greater efficiency. YOLOv4 [] integrated the CSPDarknet backbone [], Mish activation function, and SPP/SPPF modules [,] to strengthen feature fusion. YOLOv4 also proposed Mosaic data augmentation [] to enhance small-sample learning ability and incorporated PANet (Path Aggregation Network) [] for cross-scale feature interaction [], achieving real-time state-of-the-art (SOTA) performance on the COCO dataset []. YOLOv5 [] prioritized industrial deployment friendliness. It introduced adaptive anchor box calculation (AutoLearning Bounding Boxes), introduced the Focus slicing module to reduce dimensionality and computational costs, and utilized mixed-precision training alongside model compression techniques (e.g., Nano/Small versions) to address edge device deployment requirements. 
YOLOv6 [] employed the EfficientRep backbone (based on a RepVGG-like structure) [], significantly boosting inference speed. It also incorporated an Anchor-Aware mechanism to refine box predictions, effectively mitigating missed detections. YOLOv7 [] pioneered dynamic label assignment and model scaling techniques for adaptive positive/negative sample matching. It designed the E-ELAN (Extended Efficient Layer Aggregation Network) module to enhance feature extraction, outperforming contemporary models in both accuracy and speed. YOLOv8 [] replaced YOLOv5’s C3 module with the novel C2f module (featuring a richer gradient flow design). Additionally, it strategically adjusted channel dimensions across differently scaled model variants (e.g., n, s, m, l, x), significantly improving overall model efficiency. Compared to previous YOLO iterations, YOLOv8 demonstrates substantial gains in both speed and accuracy, making it highly suitable for real-time object detection applications []. This superior combination of performance and efficiency serves as the primary rationale for adopting the YOLOv8 architecture as the baseline for the improved model proposed in this paper.

While deep learning-based detectors, particularly the YOLO series, have become the de facto standard for real-time object detection and have demonstrated significant progress, they still exhibit inherent limitations when confronted with the extreme challenges of UAV imagery. To optimize the capabilities of YOLO architectures for object detection in UAV-captured imagery, numerous researchers have focused on optimizing multi-scale feature fusion, attention mechanisms, and lightweight architectures []. Yang et al. [] developed SAFPN (Spatially Aligned Feature Pyramid Network), which addresses the spatial misalignment problem inherent in traditional FPNs via a spatial alignment module. Zeng et al. [] proposed PA-YOLO, which incorporates dedicated detection layers and a dynamic upsampling operator within the ASD-FPN structure, thereby minimizing feature loss. Both approaches significantly improve detection accuracy. Furthermore, enhancement of detection accuracy can be achieved by employing optimized attention mechanisms to suppress interference from complex backgrounds. Xu et al. [] introduced GFSPP-YOLO, which integrates the CBAM (Convolutional Block Attention Module) attention mechanism with Grouped Spatial Pyramid Pooling. This enables channel-spatial feature recalibration, enhancing detection precision and lowering computational complexity. Kim et al. [] designed ECAP-YOLO, innovatively replacing the traditional SPP layer with an Efficient Channel Attention Pyramid (ECAP). By enhancing feature representation through channel attention, ECAP-YOLO achieved improved detection accuracy on the VEDAI dataset []. Wang et al. [] developed Gold-YOLO, which strengthens the interaction between shallow-layer features (critical for small objects) and deep semantic features (critical for large objects) through a Path Aggregation Network (PANet). 
By synergistically combining SA (Spatial Attention) layers and CA (Channel Attention) layers, it dynamically focuses on critical features, significantly enhancing detection robustness in complex scenarios (e.g., dense targets, occlusions). Zhang et al. [] proposed Drone-YOLO, which employs a sandwich-fusion module based on depthwise separable convolutions to enhance spatial details in low-level backbone features, achieving improved detection accuracy on the VisDrone2019 dataset. Moreover, lightweight architectural design is a crucial approach for balancing computational efficiency and detection accuracy. Li et al. [] introduced Simplify-YOLOv5m, which employs depthwise separable convolutions and a Slim Neck architecture, achieving a favorable trade-off between computational performance and recognition precision in insulator detection tasks. Liu et al. [] proposed SF-YOLOv5, which reduces parameters by pruning high-level feature maps, demonstrating that high-level features can be effectively replaced by efficient fusion methods for small-target detection. Fan et al. [] proposed LUD-YOLO, which tackles the problem of feature deterioration during transmission and integration by incorporating upsampling operations within the Feature Pyramid Network (FPN) and Progressive Feature Pyramid Network (PFPN). Additionally, the model applies a Dynamic Sparse Attention mechanism within the C2f module, enabling flexible computational allocation and content-aware processing. This design makes LUD-YOLO readily deployable on edge devices like UAVs.

Although existing models have considerably advanced detection performance and network efficiency, further improvement is still required to accurately identify multi-scale objects, especially small instances, in challenging environments. Furthermore, for resource-constrained embedded devices, computational efficiency and inference speed must be optimized further to meet the requirements of practical deployment. To address these challenges in UAV aerial image object detection, this paper proposes MBD-YOLO (MBFF module, BiMS-FPN, and Dual-Stream Head), an improved lightweight multi-scale small-target detection model for UAVs based on YOLOv8. MBD-YOLO targets two shortcomings of current approaches: low detection accuracy for multi-scale targets (especially small objects) and insufficiently lightweight models. The primary contributions of this work are summarized as follows:

- We propose a Multi-Branch Feature Fusion (MBFF) architecture, replacing the fixed-receptive-field design of the traditional C2f module. By dynamically adjusting convolutional kernel sizes, MBFF enables collaborative extraction of multi-scale features. This module significantly enhances feature representation capability for small targets, reduces the parameter count, and effectively mitigates feature degradation caused by extreme scale variations in UAV imagery.

- We present a lightweight Bidirectional Multi-Scale Feature Aggregation Pyramid Network (BiMS-FPN), which integrates bidirectional propagation paths and a Multi-Scale Feature Aggregation (MSFA) module into the conventional FPN structure. The BiMS-FPN effectively resolves the spatial misalignment problem inherent in standard FPNs, reinforces geometric consistency across multi-resolution features, and significantly boosts detection accuracy for small targets, while concurrently reducing computational overhead and model parameters.

- We employ the state-of-the-art Dual-Stream Head, featuring a dual-branch task-aligned architecture. This head, combined with hierarchical distillation techniques and a dynamic matching metric, facilitates efficient end-to-end inference. The One-to-Many branch strengthens training supervision, whereas the One-to-One branch enables Non-Maximum Suppression (NMS)-free inference, collectively improving the recall rate for small targets.

- Extensive experiments conducted on the VisDrone2019 dataset demonstrate that MBD-YOLO achieves an optimal balance between model lightweightness and detection accuracy. Compared to the baseline model YOLOv8, our approach exhibits superior detection performance for multi-scale objects, particularly small targets. Additionally, regarding the model parameter count, MBD-YOLO outperforms several recent state-of-the-art YOLO algorithms.

2. Materials and Methods

2.1. MBD-YOLO Model Architecture

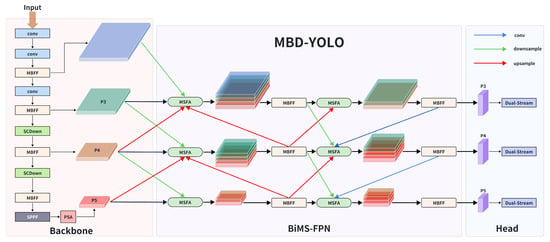

This paper introduces MBD-YOLO, a real-time, end-to-end framework for processing Unmanned Aerial Vehicle (UAV) images. In comparative tests, the proposed model outperforms the standard YOLOv8 model. The system is composed of three principal elements: a backbone that integrates the Multi-Branch Feature Fusion (MBFF) module, a neck based on the Bidirectional Multi-Scale Feature Aggregation Pyramid Network (BiMS-FPN), and a head adopting the Dual-Stream Head design. The overall design is visualized in Figure 1.

Figure 1. The overall structure of the MBD-YOLO model.

The MBD-YOLO framework employs a three-tier cascaded architecture for end-to-end UAV target detection. Firstly, within the backbone network, the conventional C2f module of YOLOv8 is replaced by the Multi-Branch Feature Fusion (MBFF) module, which incorporates parallel processing paths with diverse receptive fields and adaptive depthwise blocks to dynamically adjust convolutional kernels []. This optimization enhances multi-scale feature extraction while strengthening local and global contextual modeling capabilities []. Secondly, the neck network leverages the BiMS-FPN to establish a bidirectional propagation mechanism: the top-down path progressively upsamples features via the MBFF module to refine feature representations, while the bottom-up path downsamples features to enhance spatial precision. Cross-scale connections are fused through the Multi-Scale Feature Aggregation (MSFA) module, which combines adaptive average pooling with feature concatenation to preserve information integrity []. Finally, the detection head adopts the Dual-Stream architecture [], utilizing a hierarchical feature fusion strategy to aggregate multi-scale features—P3 (1/8 scale for minute targets), P4 (1/16 scale for medium targets), and P5 (1/32 scale for large targets)—with its end-to-end NMS-free design significantly reducing inference latency [].
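To make the P3/P4/P5 scales concrete, the following minimal sketch computes the feature-map grid produced at each stride. The 640 × 640 input size is an assumption (the resolution is not stated in this section), and `pyramid_grid_sizes` is a hypothetical helper name used only for illustration.

```python
def pyramid_grid_sizes(image_size=640, strides=(8, 16, 32)):
    """Return {level: (grid_side, num_cells)} for a square input image.

    image_size=640 is an assumed input resolution, not a value from the paper.
    """
    sizes = {}
    for level, stride in zip(("P3", "P4", "P5"), strides):
        side = image_size // stride          # spatial side of the feature map
        sizes[level] = (side, side * side)   # each cell yields one prediction location
    return sizes


grids = pyramid_grid_sizes()
for level, (side, cells) in grids.items():
    print(f"{level}: {side} x {side} grid, {cells} prediction cells")
```

Under this assumption, the 1/8-scale P3 map has sixteen times as many cells as the 1/32-scale P5 map, which is why it is the level best suited to minute targets.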

2.2. Key Modules

2.2.1. Multi-Branch Feature Fusion—MBFF

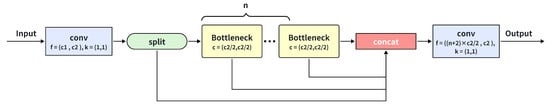

The C2f module (CSP Bottleneck with 2 Convolutions), an enhancement of YOLOv5’s C3 module in YOLOv8, aims to improve gradient flow diversity and reduce computational costs through a multi-branch feature reuse mechanism. As shown in Figure 2, the processing pipeline initiates with a 1 × 1 convolutional layer that increases the channel depth of the input features. This output is then equally divided: one segment is directly channeled to a concatenation operation to maintain the original information integrity, while the other is processed through a sequence of Bottleneck blocks for advanced feature extraction. Each Bottleneck block incorporates an optional residual connection (shortcut = True) to mitigate gradient vanishing, with all branch features finally concatenated and compressed by a cv2 convolution for lightweight output. However, this module exhibits inherent limitations:

Figure 2.

The structure of the C2f module.

- Fixed-size convolutional kernels restrict receptive-field diversity, hindering adaptation to multi-scale targets and significantly degrading detection performance for small or occluded objects;

- Direct concatenation lacks adaptive weight allocation, resulting in insufficient feature fusion capability and ineffective integration of multi-scale contextual information;

- Static computational resource allocation fixes the number of Bottleneck blocks per branch (n = 2), preventing dynamic adjustment based on target complexity, thereby causing redundant computations or inadequate feature extraction.

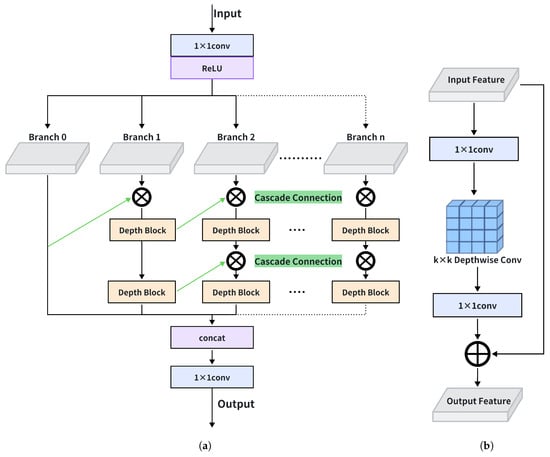

To address the inherent constraints of the conventional C2f module in capturing multi-scale features, we introduce the Multi-Branch Feature Fusion (MBFF) module, as depicted in Figure 3a. MBFF’s core innovation lies in its multi-branch parallel processing architecture, which significantly enhances feature extraction capability. Unlike C2f’s simplistic dual-branch design, MBFF introduces multiple parallel branches with varied receptive fields, facilitating extensive multi-level feature capture. To ensure a lightweight design, each branch employs a depthwise block (Figure 3b), integrating k × k depthwise convolutional kernels that process each input channel independently—performing spatial feature extraction without cross-channel computation—thereby reducing the computational load and number of parameters. MBFF’s feature fusion mechanism surpasses C2f’s simple concatenation by adopting a hierarchical feature aggregation strategy that progressively integrates features from different branches. This design preserves critical feature data while enhancing multi-context awareness. Additionally, MBFF’s re-parameterization design maintains robust feature representation capabilities while enabling efficient inference.

Figure 3. Overall structure of the Multi-Branch Feature Fusion (MBFF) module. (a) Schematic diagram of the MBFF module. (b) Schematic diagram of the depth block module.

Figure 3a depicts the Multi-Branch Feature Fusion (MBFF) module’s four-stage operational workflow: initial feature projection, depth block operation, cascade feature fusion, and final feature aggregation. The detailed mathematical formulation is presented as follows.

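Given an input feature map $X \in \mathbb{R}^{B \times C_{in} \times H_s \times W_s}$, where $B$ denotes the batch size, $C_{in}$ represents the input channels, and $H_s$ and $W_s$ are the spatial dimensions, the feature projection is computed as follows:

$$F_0 = \sigma\left(\mathbf{W}_p * X + \mathbf{b}_p\right), \quad F_0 \in \mathbb{R}^{B \times C_{mid} \times H_s \times W_s}$$

where

- $\sigma$ is the ReLU activation function;

- $\mathbf{W}_p$ and $\mathbf{b}_p$ denote the learnable weights and bias of the 1 × 1 convolution;

- $C_{mid}$ is the expanded channel dimension, which is determined by the number of parallel branches;

- $W$ is the number of parallel branches.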

Each branch contains d depth blocks. The depth block operation DB combines two point-wise convolutions with a depthwise convolution of kernel size k × k and operates at an intermediate channel dimension.

The cascade feature fusion process is indexed by the branch and by the depth level within that branch. In addition to its own features, each branch receives the cascaded features from the previous branch.

The final feature aggregation concatenates the resulting features along the channel dimension and applies a final 1 × 1 convolution to the total concatenated channels.

This hierarchical fusion mechanism enables efficient multi-scale feature learning while maintaining linear computational complexity relative to the branch count W and depth d.
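The parameter savings that motivate depthwise blocks can be checked with simple arithmetic. The sketch below compares a standard k × k convolution with a depthwise k × k convolution followed by a point-wise 1 × 1 convolution. The channel count (64) and kernel size (3) are illustrative assumptions, not the actual MBFF layer configuration, and the helper names are hypothetical.

```python
def standard_conv_params(c_in, c_out, k, bias=False):
    """Weights of a dense k x k convolution: every output channel sees every input channel."""
    return c_in * c_out * k * k + (c_out if bias else 0)


def depthwise_conv_params(channels, k, bias=False):
    """Weights of a depthwise k x k convolution: one filter per channel, no cross-channel mixing."""
    return channels * k * k + (channels if bias else 0)


def pointwise_conv_params(c_in, c_out, bias=False):
    """Weights of a 1 x 1 convolution, which performs the cross-channel mixing."""
    return standard_conv_params(c_in, c_out, 1, bias)


# Illustrative values only (assumed, not taken from the paper).
channels, k = 64, 3
standard = standard_conv_params(channels, channels, k)
separable = depthwise_conv_params(channels, k) + pointwise_conv_params(channels, channels)

print(f"standard 3x3 conv:        {standard} parameters")
print(f"depthwise + point-wise:   {separable} parameters")
print(f"ratio: {separable / standard:.3f}")
```

Because each added branch or depth level contributes a fixed number of such blocks, the total parameter count grows linearly with the branch count W and depth d, consistent with the complexity statement above.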

2.2.2. Bidirectional Multi-Scale Feature Aggregation Pyramid Network—BiMS-FPN

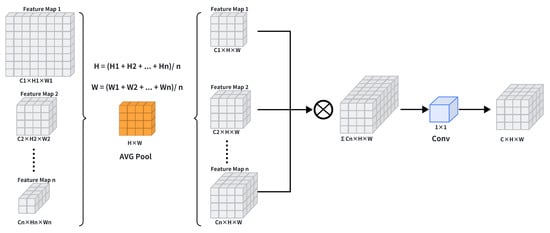

Because the PANet structure [] used in the traditional YOLO series suffers from feature redundancy and cannot meet the edge deployment requirements of target recognition in UAV imagery, we introduce the Multi-Scale Feature Aggregation (MSFA) module and construct BiMS-FPN on the bidirectional propagation mechanism of BiFPN []. Specifically, the top-down path progressively upsamples features through the MBFF module to enhance feature representation, while the bottom-up path downsamples features to extract fine-grained spatial details. Cross-scale connections are ultimately fused by the MSFA module. The core innovation lies in the MSFA module, which handles lateral connections within the BiMS-FPN architecture. MSFA employs an adaptive mechanism to integrate features from different scales (e.g., P3, P4, P5 levels), aiming to resolve the spatial misalignment and information degradation inherent in conventional FPNs while maintaining low computational complexity [].

As shown in Figure 4, the MSFA module first performs intelligent downsampling on input feature maps via adaptive average pooling. It then dynamically adjusts output dimensions to match target scales, followed by hierarchical integration of the pooled multi-scale features through a refined concatenation strategy.

Figure 4. The structure of the MSFA.

The specific computational steps are outlined below:

- Feature Downsampling: Input feature maps (e.g., the P4 level from the backbone) undergo downsampling via Adaptive Average Pooling []. The pooling kernel size is dynamically adjusted based on the spatial characteristics of the input features, formulated as $F_{down} = \mathrm{AdaptiveAvgPool}(F_{in})$, where $F_{in}$ denotes the input feature. This operation reduces the spatial dimensions to those of the target scale while preserving the channel count (C) to mitigate information loss.

- Multi-Scale Feature Alignment: Downsampled features undergo spatial alignment with adjacent-scale features (e.g., P3 or P5). This step is critical because features from different pyramid levels are naturally misaligned due to variations in sampling strides and receptive fields, which can severely impair the effectiveness of subsequent fusion operations []. The MSFA module employs learnable masks to automatically correct spatial offsets, ensuring geometric consistency across multi-resolution features and preventing pixel-level misalignment during fusion.

- Hierarchical Concatenation and Output: Aligned features are concatenated along the channel dimension: $F_{cat} = \mathrm{Concat}(\tilde{F}_{down}, F_{adj})$, where $\tilde{F}_{down}$ is the aligned downsampled feature and $F_{adj}$ is the adjacent-scale feature.

- Channel Compression & Output Generation: The concatenated result is processed by a convolution layer to compress channel dimensionality, yielding the final fused feature representation: $F_{out} = \mathrm{Conv}(F_{cat})$.
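The downsampling and concatenation steps above can be sketched in plain Python. This is a simplified illustration under stated assumptions: the bin boundaries follow the usual floor/ceil adaptive pooling rule, feature maps are nested lists with one 2D grid per channel, and the learnable alignment masks and the final compression convolution are deliberately omitted. The function names are hypothetical.

```python
import math


def adaptive_avg_pool2d(fmap, out_h, out_w):
    """Average-pool one channel (list of rows) to out_h x out_w.

    Window boundaries adapt to the input size: output cell i covers
    rows floor(i * H / out_h) up to ceil((i + 1) * H / out_h).
    """
    in_h, in_w = len(fmap), len(fmap[0])
    pooled = []
    for i in range(out_h):
        r0 = (i * in_h) // out_h
        r1 = math.ceil((i + 1) * in_h / out_h)
        row = []
        for j in range(out_w):
            c0 = (j * in_w) // out_w
            c1 = math.ceil((j + 1) * in_w / out_w)
            window = [fmap[r][c] for r in range(r0, r1) for c in range(c0, c1)]
            row.append(sum(window) / len(window))
        pooled.append(row)
    return pooled


def pool_and_concat(fine_channels, coarse_channels):
    """Pool every fine-scale channel to the coarse resolution, then
    concatenate along the channel axis (channel count is preserved by pooling)."""
    out_h, out_w = len(coarse_channels[0]), len(coarse_channels[0][0])
    pooled = [adaptive_avg_pool2d(ch, out_h, out_w) for ch in fine_channels]
    return pooled + coarse_channels


# One 4 x 4 fine-scale channel holding 0..15, one 2 x 2 coarse-scale channel.
fine = [[[float(r * 4 + c) for c in range(4)] for r in range(4)]]
coarse = [[[0.0, 0.0], [0.0, 0.0]]]
fused = pool_and_concat(fine, coarse)
print(len(fused), "channels at", len(fused[0]), "x", len(fused[0][0]))
print(fused[0])
```

Because the output size is a parameter rather than the kernel size, the same routine maps any input resolution onto the target pyramid level, which is the property the MSFA description relies on.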

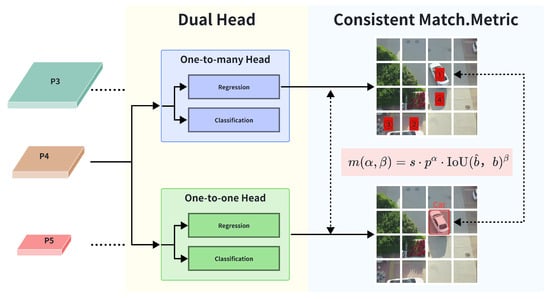

2.2.3. Improved YOLOv8 Detection Head—Dual-Stream Head

The traditional YOLOv8 detection head shows clear limitations in multi-scale feature fusion and contextual inference. In particular, the final stage of inference relies on Non-Maximum Suppression (NMS) to remove redundant predicted boxes, which increases inference latency and requires continual tuning of its parameters. To address these challenges, we design the Dual-Stream Head with reference to the Consistent Dual Assignments [] training strategy, which removes the NMS dependency at inference through dual-branch processing and end-to-end optimization []. Unlike YOLOv8’s single-branch approach with mandatory NMS, the Dual-Stream Head (Figure 5) introduces a dual-head architecture consisting of two functionally specialized components: a one-to-many head (One-to-Many Head), which uses multi-prediction assignment [] to enrich training supervision; and a one-to-one head (One-to-One Head), which implements NMS-free inference. Both heads operate on enhanced multi-scale features generated by a backbone network containing large-kernel convolution (7 × 7) and partial self-attention (PSA) mechanisms for long-distance dependency modeling, followed by a neck module combining spatial pyramid pooling (SPP) and a path aggregation network (PAN) for cross-scale P3 (1/8), P4 (1/16), and P5 (1/32) context integration. This structure allows each scale to specialize in specific object sizes: high-resolution P3 features excel at detecting small objects, P4 balances medium-sized targets, and coarse-grained P5 captures large objects, addressing a critical weakness in YOLOv8’s scale-agnostic processing.

Figure 5. The structure of the Dual-Stream Head.

The computational framework employs a unified matching metric that simultaneously addresses classification confidence and localization precision:

$$m(\alpha, \beta) = s \cdot p^{\alpha} \cdot \mathrm{IoU}(\hat{b}, b)^{\beta}$$

where

- $p$ denotes the classification score;

- $\hat{b}$ represents the predicted bounding box;

- $b$ is the ground-truth bounding box;

- $\alpha$ and $\beta$ are tunable hyperparameters balancing task-specific weights;

- $s$ is a learnable scaling factor.

During training, the dual heads implement divergent label assignment strategies governed by this metric:

- One-to-Many Head ($m_{o2m}$): employs $\alpha_{o2m}$ and $\beta_{o2m}$ to allocate the top-k = 10 predictions per ground truth;

- One-to-One Head ($m_{o2o}$): uses $\alpha_{o2o}$ and $\beta_{o2o}$ for strict one-to-one matching (top-k = 1).
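The two assignment strategies can be illustrated with a small sketch that scores candidate predictions against one ground-truth box and keeps the top-k. It assumes the metric has the product form of classification score and IoU raised to two exponents; the exponent values 0.5 and 6.0, the fixed scaling factor s = 1, and the toy boxes are hypothetical, and k = 2 stands in for the top-k = 10 used by the One-to-Many Head because the example has only three candidates.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    iw = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    ih = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = iw * ih
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def matching_metric(score, pred_box, gt_box, alpha, beta, s=1.0):
    """Assumed form: s * score^alpha * IoU^beta (s is held constant here)."""
    return s * (score ** alpha) * (iou(pred_box, gt_box) ** beta)


def assign_top_k(predictions, gt_box, alpha, beta, k):
    """Return indices of the k predictions with the highest matching metric."""
    ranked = sorted(
        range(len(predictions)),
        key=lambda i: matching_metric(predictions[i][0], predictions[i][1], gt_box, alpha, beta),
        reverse=True,
    )
    return ranked[:k]


ALPHA, BETA = 0.5, 6.0  # hypothetical exponents, for illustration only
gt = (10.0, 10.0, 50.0, 50.0)
preds = [
    (0.90, (12.0, 12.0, 50.0, 50.0)),  # confident, slightly off
    (0.60, (10.0, 10.0, 50.0, 50.0)),  # less confident, perfectly localized
    (0.95, (30.0, 30.0, 70.0, 70.0)),  # very confident, poorly localized
]

one_to_many = assign_top_k(preds, gt, ALPHA, BETA, k=2)  # several positives per ground truth
one_to_one = assign_top_k(preds, gt, ALPHA, BETA, k=1)   # exactly one positive per ground truth
print("one-to-many positives:", one_to_many)
print("one-to-one positive:  ", one_to_one)
```

With a large IoU exponent, the poorly localized but highly confident prediction is ranked last, which shows how the metric ties classification confidence to localization quality.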

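Both heads are optimized via a joint loss function combining classification and regression components. The classification loss uses Binary Cross-Entropy between the classification score $p$ and the target label $y$:

$$\mathcal{L}_{cls} = -\left[\, y \log p + (1 - y) \log (1 - p) \,\right]$$

The regression loss combines Complete IoU (CIoU) and Distribution Focal Loss (DFL) []. The CIoU term accounts for bounding-box geometry:

$$\mathcal{L}_{CIoU} = 1 - \mathrm{IoU}(\hat{b}, b) + \frac{\rho^{2}}{c^{2}} + \alpha_v v$$

where

- $\rho$ is the Euclidean distance between box centers;

- $c$ is the diagonal length of the smallest enclosing rectangle;

- $v$ measures aspect ratio consistency, weighted by the CIoU trade-off coefficient $\alpha_v$;

- $\mathcal{L}_{DFL}$ models boundary distributions via discrete probability predictions.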
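As a worked illustration of the CIoU term, the sketch below evaluates it for axis-aligned boxes in (x1, y1, x2, y2) format. It follows the commonly used CIoU definition, in which the aspect-ratio term is v = (4/π²)(arctan(w_gt/h_gt) − arctan(w/h))² and the trade-off weight is v / ((1 − IoU) + v); whether the authors' implementation matches this in every detail is an assumption. The small `eps` is added only for numerical safety.

```python
import math


def ciou_loss(pred, gt, eps=1e-9):
    """CIoU loss for two (x1, y1, x2, y2) boxes, using the commonly used definition."""
    px1, py1, px2, py2 = pred
    gx1, gy1, gx2, gy2 = gt
    pw, ph = px2 - px1, py2 - py1
    gw, gh = gx2 - gx1, gy2 - gy1

    # Overlap term.
    iw = max(0.0, min(px2, gx2) - max(px1, gx1))
    ih = max(0.0, min(py2, gy2) - max(py1, gy1))
    inter = iw * ih
    union = pw * ph + gw * gh - inter + eps
    iou = inter / union

    # Squared distance between box centers (rho^2).
    rho2 = ((px1 + px2) / 2 - (gx1 + gx2) / 2) ** 2 + ((py1 + py2) / 2 - (gy1 + gy2) / 2) ** 2

    # Squared diagonal of the smallest enclosing rectangle (c^2).
    cw = max(px2, gx2) - min(px1, gx1)
    ch = max(py2, gy2) - min(py1, gy1)
    c2 = cw ** 2 + ch ** 2 + eps

    # Aspect-ratio consistency v and its trade-off weight.
    v = (4 / math.pi ** 2) * (math.atan(gw / (gh + eps)) - math.atan(pw / (ph + eps))) ** 2
    weight = v / ((1 - iou) + v + eps)

    return 1 - iou + rho2 / c2 + weight * v


gt_box = (10.0, 10.0, 50.0, 50.0)
perfect = ciou_loss(gt_box, gt_box)
shifted = ciou_loss((15.0, 15.0, 55.0, 55.0), gt_box)
disjoint = ciou_loss((0.0, 0.0, 10.0, 10.0), (20.0, 20.0, 30.0, 30.0))
print(f"perfect={perfect:.4f} shifted={shifted:.4f} disjoint={disjoint:.4f}")
```

Unlike plain IoU, the loss keeps growing with center distance even when the boxes do not overlap, so it still provides a useful training signal for badly misplaced predictions of small targets.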

During inference, gradient truncation deactivates the One-to-Many Head, reducing latency by 46% while the One-to-One Head outputs optimal detections without NMS [].
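For contrast, the post-processing step that the One-to-One Head avoids can be sketched as classic greedy NMS. This is a generic textbook version with an assumed IoU threshold of 0.5, not the YOLOv8 implementation; it shows the extra sorting and pairwise IoU work, and the threshold that would otherwise need tuning.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    iw = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    ih = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = iw * ih
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(detections, iou_threshold=0.5):
    """Keep the highest-scoring box, drop boxes overlapping it above the threshold, repeat.

    detections: list of (score, box). iou_threshold=0.5 is an assumed value.
    """
    remaining = sorted(detections, key=lambda d: d[0], reverse=True)
    kept = []
    while remaining:
        best = remaining.pop(0)
        kept.append(best)
        remaining = [d for d in remaining if iou(best[1], d[1]) <= iou_threshold]
    return kept


detections = [
    (0.90, (0.0, 0.0, 10.0, 10.0)),
    (0.80, (1.0, 1.0, 11.0, 11.0)),    # duplicate of the first box
    (0.70, (50.0, 50.0, 60.0, 60.0)),  # separate object
]
kept = greedy_nms(detections)
print([score for score, _ in kept])
```

A head trained with one-to-one matching is expected to emit a single prediction per object, so this loop and its threshold are unnecessary at inference time.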

3. Experiments and Results

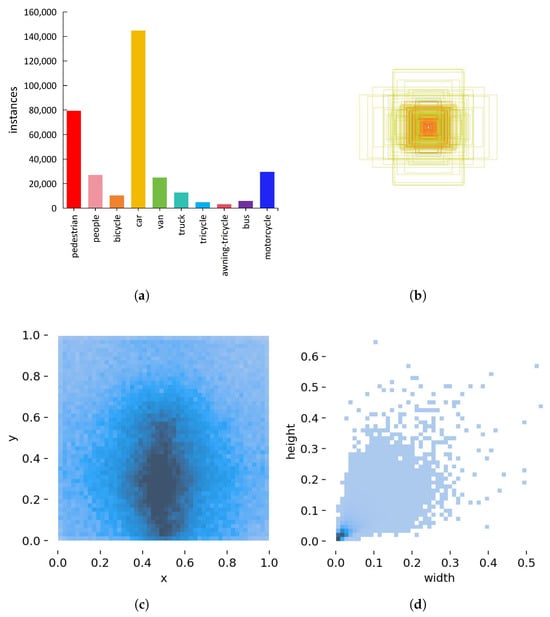

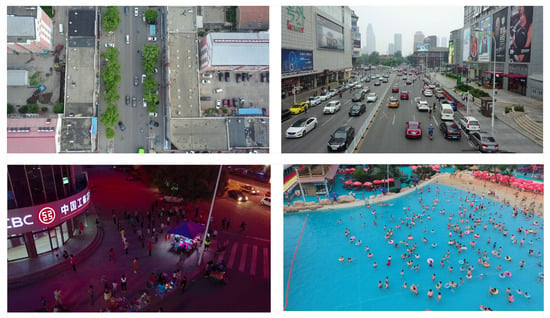

3.1. Datasets

The VisDrone2019 dataset, a large-scale collection of UAV-captured aerial images, was created by the AISKYEYE team from Tianjin University in 2018. Data acquisition spanned 14 different cities in China. This benchmark comprises ten distinct object classes: pedestrian, people, bicycle, car, van, truck, tricycle, awning-tricycle, bus, and motorcycle. As revealed in Figure 6, the dataset exhibits significant class imbalance: instances of “pedestrian” and “people” vastly outnumber other categories. Targets are predominantly small-scale, with bounding-box size distributions indicating an exceptionally high proportion of minute objects. Spatial analysis reveals non-uniform distribution of target centers across the image plane, where heatmaps highlight dense concentration in central regions. Furthermore, scatter plots of target width versus height demonstrate a strong positive correlation, indicating that objects generally exhibit regular or near-square aspect ratios. Consequently, VisDrone2019 imposes heightened demands on models to detect small-scale, center-clustered targets in urban scenarios under UAV top-down perspectives. Moreover, the extensive variety and intricacy of the scenes (Figure 7) establish a solid basis for assessing model robustness under demanding real-world conditions.

Figure 6. Statistics and distribution of VisDrone2019 dataset. (a) Distribution of object instances per category. (b) Spatial distribution of object centers (center-biased). (c) Density heatmap of normalized object center locations. (d) Distribution of normalized object sizes.

Figure 7. VisDrone2019 dataset samples.

The VisDrone2019 dataset is officially partitioned into a training set (6471 images), a validation set (548 images), and a test set (1580 images). Our experiments strictly follow this official split. All models are trained on the training set, with the validation set used for hyperparameter tuning and early stopping. The final performance comparison reported in this paper is based solely on the test set, ensuring an unbiased evaluation of the model’s generalization capability.

3.2. Experimental Environment and Parameter Configuration

The primary experimental setup for this work, outlined in Table 1, is derived from the default YOLO YAML configuration. Hyperparameters were first initialized from the default configuration of YOLOv8, and we then performed a limited grid search on the validation set to tune critical parameters such as the initial learning rate (lr0) and weight decay, balancing detection accuracy against training stability. The final values were chosen to achieve stable convergence and the best performance on the validation set. The baseline models were tuned under the same protocol to ensure a fair comparison.

Table 1. Experimental hyperparameter configuration.

3.3. Evaluation Metrics

To evaluate the model’s performance, the standard metrics of Precision (P), Recall (R), Average Precision (AP), and mean Average Precision (mAP) were adopted for assessing detection accuracy. Computational efficiency was gauged using parameters and GFLOPs [].

The Precision metric measures the ratio of correctly identified positive predictions (true positives) to all instances the model classified as positive. It reflects the model’s reliability when it declares the presence of a target object. The calculation is defined by the following formula:

$$P = \frac{TP}{TP + FP}$$

Within this formulation, a True Positive ($TP$) denotes the accurate detection of a target, whereas a False Positive ($FP$) represents the incorrect classification of a non-target object as positive. Consequently, Precision functions as a measure of the reliability of the model’s positive outputs. A model exhibiting high precision is usually correct when it reports a detection; however, this characteristic is frequently accompanied by an elevated rate of False Negatives (FNs).

Recall quantifies the proportion of actual positive instances that the model successfully detects. A higher Recall value indicates that the model misses fewer targets. The recall formula is as follows:

$$R = \frac{TP}{TP + FN}$$
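As a small illustration of these two definitions, Precision is TP / (TP + FP) and Recall is TP / (TP + FN). The Python sketch below computes both from raw counts; the helper name `precision_recall` and the counts are hypothetical and are not results from this paper.

```python
def precision_recall(tp: int, fp: int, fn: int) -> tuple[float, float]:
    """Return (precision, recall) computed from detection counts."""
    # Guard against empty denominators (no predictions / no ground truth).
    precision = tp / (tp + fp) if (tp + fp) > 0 else 0.0
    recall = tp / (tp + fn) if (tp + fn) > 0 else 0.0
    return precision, recall


if __name__ == "__main__":
    # Hypothetical counts chosen for easy arithmetic.
    p, r = precision_recall(tp=80, fp=20, fn=120)
    print(f"precision={p:.2f}, recall={r:.2f}")
```

With these illustrative counts the detector is right in most of its positive outputs but still misses many targets, which is the high-precision, low-recall regime mentioned above.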

In object detection, AP (Average Precision) is a key metric for evaluating a model’s detection accuracy for an individual category. It is computed as the area under the Precision–Recall curve and thus reflects the model’s performance across different confidence thresholds. A higher AP value indicates better detection performance for that category. Since object detection tasks typically involve multiple categories, mAP (mean Average Precision) is used as well. mAP is the average of the AP values across all categories and measures the model’s overall performance on the entire dataset. A higher mAP value indicates that the model detects all categories well, which avoids the one-sidedness of relying on a single-category AP. AP and mAP are calculated as follows:

$$AP = \int_{0}^{1} P(R)\,dR$$

$$mAP = \frac{1}{N}\sum_{i=1}^{N} AP_i$$

where $P(R)$ is the precision as a function of recall, $N$ is the number of categories, and $AP_i$ is the AP of the $i$-th category.
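To make the "area under the Precision–Recall curve" concrete, the following Python sketch computes AP for one category from a ranked list of detections and then averages per-category AP values into mAP. It assumes all-point interpolation with a monotone precision envelope, which is one common convention; evaluation toolkits may use a different interpolation, so the numbers need not match those of any particular framework. The function names and the toy inputs are hypothetical.

```python
def average_precision(scores, is_tp, num_gt):
    """AP for one category: area under the interpolated P-R curve."""
    if num_gt == 0 or not scores:
        return 0.0
    # Rank detections by descending confidence.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Precision envelope: make precision non-increasing in recall.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    # Sum rectangle areas wherever recall increases.
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(ap_per_class):
    """mAP: arithmetic mean of the per-category AP values."""
    return sum(ap_per_class) / len(ap_per_class)


if __name__ == "__main__":
    # Toy example: four detections, two ground-truth objects.
    ap = average_precision([0.9, 0.8, 0.7, 0.6], [True, False, True, False], 2)
    print(f"AP = {ap:.4f}")
```

Whether a detection counts as a true positive depends on the IoU threshold used for matching, which is what distinguishes metrics such as mAP50 from mAP50-95.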

Parameters are the complete set of learnable weights within a model, and their number determines the model’s architectural complexity and memory footprint. A higher parameter count typically indicates greater model capacity but also increases memory usage and computational overhead. For resource-constrained deployment scenarios (e.g., edge devices), models with fewer parameters are preferred. For convolutional layers, the parameter count can be calculated as follows:

$$\mathrm{Params} = \sum_{l=1}^{L}\left(C_{in}^{l} \times C_{out}^{l} \times K_{l}^{2} + C_{out}^{l}\right)$$

where $L$ is the total number of layers, $C_{in}^{l}$ and $C_{out}^{l}$ denote the input/output channels of layer $l$, $K_{l}$ is the kernel size, and the bias term $C_{out}^{l}$ is included.
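As a worked illustration, the parameter count of a standard convolutional layer is the product of input channels, output channels, and the squared kernel size, plus one bias per output channel. The Python sketch below applies this per layer and sums over layers. It assumes ordinary (non-grouped) convolutions; depthwise or grouped convolutions would need a different count. The helper names and the two-layer example are hypothetical.

```python
def conv_params(c_in: int, c_out: int, k: int, bias: bool = True) -> int:
    """Learnable parameters of one standard k x k convolution."""
    return c_in * c_out * k * k + (c_out if bias else 0)


def total_params(layers) -> int:
    """Sum parameters over (c_in, c_out, k) layer descriptions."""
    return sum(conv_params(c_in, c_out, k) for c_in, c_out, k in layers)


if __name__ == "__main__":
    # Hypothetical two-layer stem, for illustration only.
    layers = [(3, 16, 3), (16, 32, 3)]
    print(total_params(layers))  # 448 + 4640 = 5088
```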

GFLOPs (giga floating-point operations) is the principal metric for inference-stage computational cost. It counts the floating-point operations executed in a single forward pass, expressed in billions ($10^{9}$) of operations. Lower GFLOPs values indicate higher computational efficiency, a critical attribute for real-time systems. For convolutional layers, GFLOPs is computed as follows:

$$\mathrm{GFLOPs} = \frac{1}{10^{9}}\sum_{l=1}^{L} 2 \times C_{in}^{l} \times C_{out}^{l} \times K_{l}^{2} \times H_{out}^{l} \times W_{out}^{l}$$

where $C_{in}^{l}$, $C_{out}^{l}$, and $K_{l}$ are the input channels, output channels, and kernel size of layer $l$, $H_{out}^{l} \times W_{out}^{l}$ is the output feature map size of layer $l$, and the factor of 2 accounts for both multiplication and addition operations. This metric helps evaluate hardware compatibility and energy consumption.
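The operation count of a convolutional layer can be illustrated in the same way: each output position needs one multiply and one add per input channel and kernel element, hence the factor of 2. The Python sketch below is a minimal example under the assumption of standard (non-grouped) convolutions with bias operations ignored; the helper names and the example layer are hypothetical.

```python
def conv_gflops(c_in: int, c_out: int, k: int, h_out: int, w_out: int) -> float:
    """Forward-pass cost of one standard k x k convolution, in GFLOPs."""
    # 2 operations (multiply + add) per weight per output position.
    flops = 2 * c_in * c_out * k * k * h_out * w_out
    return flops / 1e9


def total_gflops(layers) -> float:
    """Sum GFLOPs over (c_in, c_out, k, h_out, w_out) layer descriptions."""
    return sum(conv_gflops(*layer) for layer in layers)


if __name__ == "__main__":
    # Hypothetical first layer: 3 -> 16 channels, 3 x 3 kernel,
    # producing a 320 x 320 output map.
    print(f"{conv_gflops(3, 16, 3, 320, 320):.4f} GFLOPs")
```

The cost scales with the output resolution, which is why high-resolution feature maps for small-object detection tend to dominate the total budget.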

These metrics establish a balanced evaluation framework that jointly considers detection accuracy and computational efficiency. Precision and recall quantify detection reliability, while mAP50 (mAP at an IoU threshold of 0.5) and mAP50-95 (mAP averaged over IoU thresholds from 0.5 to 0.95 in steps of 0.05) provide overall assessments across categories and localization strictness. Parameters and GFLOPs determine deployment viability on resource-constrained UAV platforms.

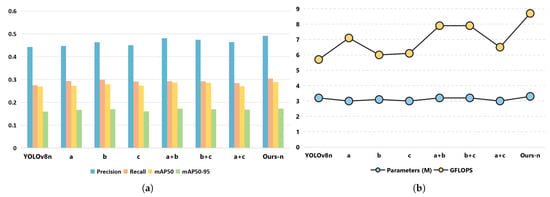

3.4. Ablation Experiment

To systematically evaluate the contribution of each component of the model and demonstrate the necessity of the design, ablation experiments were conducted on the VisDrone2019 dataset with YOLOv8n as the baseline model. Three core modules were incrementally integrated: the Multi-Branch Feature Fusion (MBFF) module for backbone enhancement, the Bidirectional Multi-Scale Feature Aggregation Pyramid Network (BiMS-FPN) for cross-scale feature aggregation in the neck, and the Dual-Stream Head for adaptive object detection. Eight experimental configurations were evaluated: the baseline YOLOv8n; individual module additions (a: MBFF, b: BiMS-FPN, c: Dual-Stream Head); combined modules (a + b: MBFF + BiMS-FPN, b + c: BiMS-FPN + Dual-Stream Head, a + c: MBFF + Dual-Stream Head); and the full MBD-YOLO model (Ours-n: MBFF + BiMS-FPN + Dual-Stream Head). The ablation results are presented in Table 2 and Figure 8.
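The eight configurations correspond to every subset of the three modules, from the empty set (the YOLOv8n baseline) to the full set (Ours-n). The short Python sketch below enumerates them; it only illustrates the experimental design, and the names `MODULES` and `ablation_configs` are hypothetical.

```python
from itertools import combinations

# Module labels as used in the ablation study.
MODULES = {"a": "MBFF", "b": "BiMS-FPN", "c": "Dual-Stream Head"}


def ablation_configs():
    """All subsets of the three modules, smallest first."""
    configs = []
    for size in range(len(MODULES) + 1):
        configs.extend(combinations(MODULES, size))
    return configs


if __name__ == "__main__":
    for keys in ablation_configs():
        name = " + ".join(MODULES[k] for k in keys) or "baseline (YOLOv8n)"
        print(name)
```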

Table 2.

Results of ablation experiments.

Figure 8.

Analysis of ablation experiment results. (a) Histogram of ablation experiment detection accuracy. (b) Line graph of ablation experiment calculation efficiency.

As shown in Table 2, the sequential integration of the MBFF module, BiMS-FPN, and Dual-Stream Head into the baseline model consistently improves key accuracy metrics—Precision, Recall, mAP50, and mAP50-95. To evaluate synergistic effects between modules, three dual-module combinations were tested: the MBFF + BiMS-FPN (a+b) configuration achieved the highest mAP50-95 and Precision, but incurred higher computational costs (GFLOPs). In contrast, the BiMS-FPN + Dual-Stream Head (b+c) combination yielded lower accuracy, mAP50, and mAP50-95 than a+b under similar GFLOPs, while the MBFF + Dual-Stream Head (a+c) group exhibited the lowest accuracy, underscoring the MBFF module’s dependency on cross-scale fusion capabilities. The fully integrated model (Ours-n) synergizes all three modules, achieving state-of-the-art performance in UAV-based object detection: 0.491 Precision (10.8% improvement over baseline), 0.304 Recall (10.5% gain), 0.172 mAP50-95 (8.2% increase), and 0.289 mAP50 (6.3% enhancement), with only a modest 3.1% parameter increase. Collectively, MBD-YOLO significantly enhances aerial image recognition accuracy without an excessive computational burden, effectively addressing the challenge of extreme scale variations in aerial targets.

Furthermore, Figure 8 visually demonstrates the performance metrics of MBD-YOLO and its overall model efficacy. The figure clearly indicates that while the enhanced model—integrating the MBFF module, BiMS-FPN, and Dual-Stream Head—achieves superior detection accuracy, there remains room for optimization in terms of parameter efficiency and model size.

3.5. Comparison Experiment

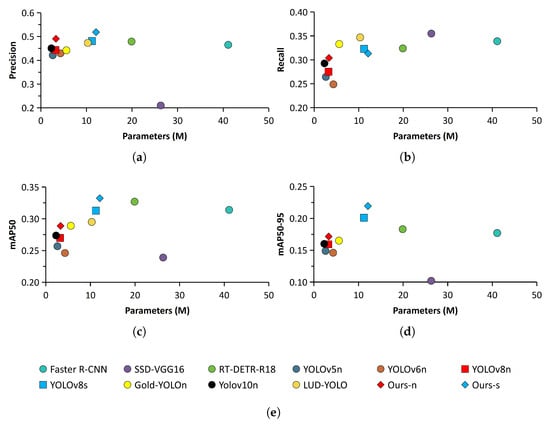

To further evaluate MBD-YOLO, comparative experiments were conducted on the VisDrone2019 dataset against ten classical models. These include the two-stage detector Faster R-CNN [], the one-stage detectors RT-DETR-R18 [] and SSD-VGG16 [], and YOLO variants: YOLOv5n [], YOLOv6n [], YOLOv8n [], YOLOv8s [], Gold-YOLOn [], LUD-YOLO [], and YOLOv10n []. Table 3 and Figure 9 summarize Precision, Recall, mAP50, mAP50-95, and Parameters for all twelve compared algorithms, including the two lightweight versions of MBD-YOLO (Nano and Small). Crucially, all models were trained without pretrained weight initialization to effectively mitigate overfitting during training.

Table 3.

A comparison of the performance of the other ten models, MBD-YOLO-n, and MBD-YOLO-s on the test data of the VisDrone2019 dataset.

Figure 9.

Analysis of comparison experiment results. (a) Parameter–Precision scatter plot comparison. (b) Parameter–Recall scatter plot comparison. (c) Parameter–mAP50 scatter plot comparison. (d) Parameter–mAP50-95 scatter plot comparison. (e) Distribution of legend.

As evidenced by the results in Table 3 and Figure 9, the proposed MBD-YOLO algorithm attains a higher level of detection accuracy relative to established object detection approaches. The two-stage framework of Faster R-CNN utilizes a Region Proposal Network (RPN) for initial region generation, followed by a refinement step for classification and regression. While this design yields high precision in complex scenes, its computational redundancy results in poor real-time performance, hindering deployment in time-sensitive systems. SSD-VGG16, a single-stage multi-scale detector, leverages the VGG backbone to directly predict targets across multiple feature layers, balancing speed and accuracy. However, it exhibits weak small-target recognition capability, struggling to detect the abundant small objects typical in aerial imagery. RT-DETR-R18, an end-to-end Transformer-based detector, models global dependencies through self-attention mechanisms, enabling robust detection of occluded objects. Nevertheless, its training demands large-scale datasets and high hardware requirements, making it unsuitable for edge devices. YOLOv5n adopts a lightweight architecture using the Focus slicing module to reduce computation and CSPNet to compress parameters, yet suffers from limited accuracy in complex scenarios. YOLOv6n utilizes a multi-branch structure during training, but fuses to a single path for inference acceleration, achieving fast inference speeds at the cost of weak generalization and significant accuracy drops on new datasets. Gold-YOLOn enhances small-target recognition by fusing multi-path features via spatial-channel dual attention mechanisms, but compromises detection accuracy for medium-scale objects. LUD-YOLO employs Differentiable Neural Architecture Search (DNAS) to automatically compress models and reduce parameters, yet relies on specialized equipment for data calibration and exhibits poor scene transferability. 
In contrast, MBD-YOLO demonstrates exceptional performance: the Nano version achieves the highest accuracy among the sub-5M parameter models, surpassing YOLOv8n and YOLOv10n by 10.8% and 8.9% in precision; the Small version attains the highest scores in Precision, mAP50, and mAP50-95, while maintaining a compact parameter count (12.1 M).

In addressing object detection challenges within UAV-captured aerial imagery, the introduced MBD-YOLO framework exhibits outstanding precision in identifying objects, increased flexibility across various scenarios, and remarkable stability in performance. Its simplified structural design also allows for effective integration onboard drone-embedded systems, highlighting its considerable practical value and promising applicability.

3.6. Experimental Results Analysis

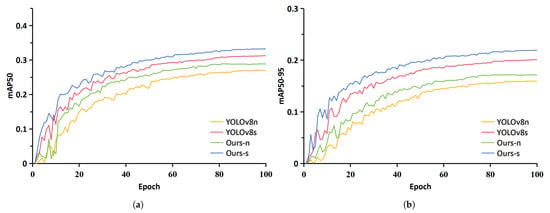

For the visual verification of the proposed algorithm’s optimized performance, a training comparison was carried out on the VisDrone2019 dataset between the two configurations of the MBD-YOLO framework (Nano and Small) and the reference models YOLOv8n and YOLOv8s, emphasizing the mAP50 and mAP50-95 metrics. As shown in Figure 10, both variants of MBD-YOLO consistently exceed the baseline models across all training epochs in terms of mAP50 and mAP50-95, conclusively demonstrating the enhanced capability of the proposed model in object detection.

Figure 10.

Performance indicators of different models’ training processes.

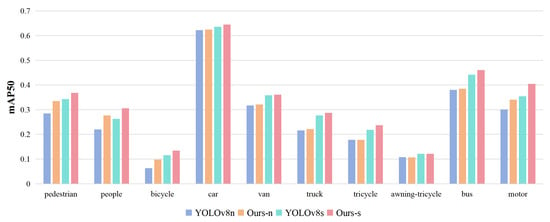

To validate MBD-YOLO’s detection performance across different target categories in the VisDrone2019 dataset, we compared its Nano and Small variants with baseline models (YOLOv8n/YOLOv8s) under identical conditions, analyzing model parameters and per-category mAP50. As shown in Table 4 and Figure 11, both MBD-YOLO versions surpassed their baselines in mAP50 across all ten categories. Specifically, MBD-YOLO-Nano achieved significant gains for small targets: pedestrians (+17.96%), people (+25.91%), and bicycles (+55.56%); moderate improvements for medium targets: cars (+0.48%), vans (+1.26%), and motorcycles (+12.96%); and enhanced detection of large targets: trucks (+2.78%) and buses (+1.32%). Similarly, MBD-YOLO-Small excelled in small-target categories: pedestrians (+7.29%), people (+15.97%), bicycles (+15.52%), and tricycles (+8.72%); showed robust performance for medium targets: cars (+1.42%), vans (+0.56%), and motorcycles (+13.80%); and showed improved accuracy in detection of large targets: trucks (+3.99%) and buses (+4.30%). These results demonstrate MBD-YOLO’s comprehensive optimization, dramatically boosting small-target detection while consistently enhancing medium- and large-target recognition.
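The per-category percentages above read as relative gains over the corresponding baseline, i.e., (new − baseline) / baseline × 100; this interpretation is an assumption, since the formula is not stated explicitly. The Python sketch below shows the arithmetic with hypothetical mAP50 values that are not taken from Table 4.

```python
def relative_gain(baseline: float, improved: float) -> float:
    """Relative improvement over the baseline, in percent."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (improved - baseline) / baseline * 100.0


if __name__ == "__main__":
    # Hypothetical per-category mAP50 values, for illustration only.
    gain = relative_gain(baseline=0.20, improved=0.25)
    print(f"{gain:+.2f}%")
```

A relative measure explains why categories with a low baseline score, typically the small-target classes, can show very large percentage gains from modest absolute improvements.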

Table 4.

Performance comparison between the proposed MBD-YOLO models (MBD-YOLO-n and MBD-YOLO-s) and the baseline object detection models (YOLOv8n and YOLOv8s).

Figure 11.

Comparison of detection accuracy across categories among different models.

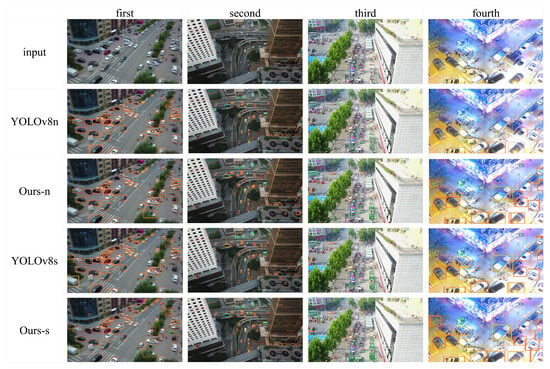

3.7. Visualization Analysis

To visually assess the efficacy of the MBD-YOLO model, we performed qualitative tests on the VisDrone2019 test set, with illustrative results provided in Figure 12. A set of representative images was chosen from the dataset to exhibit detection performance under diverse and demanding environments. Figure 12 sequentially presents detection outcomes from four models under challenging conditions: (1) an intersection scene with motion-blurred imagery due to camera shake, (2) an aerial road scenario amidst dense high-rise buildings, (3) a complex street environment with overlapping multi-category targets, and (4) a night-time street setting under streetlight illumination.

Figure 12.

Comparison of object detection results on the VisDrone2019 dataset.

In the first column’s intersection scene, camera vibration induced focal instability, resulting in severe loss of texture details and blurred target contours. For the second column’s aerial urban scenario, spatial occlusion from dense building clusters created alternating highlight and shadow zones, destabilizing color and luminance feature distributions. Concurrently, static interferences—such as curtain wall reflections and outdoor AC units—exhibited morphological similarities to target vehicles, significantly increasing false detection risks. In the third column’s complex street scene, overlapping multi-category targets (e.g., bicycles and cars) with blurred boundaries and uneven spatial distribution caused feature representation conflicts and classification interference. The fourth night-time scenario relied on high-luminosity features from vehicle lights and reflective markers, yet edge details were compromised by streetlight glare and headlight halo effects.

As demonstrated in Figure 12, MBD-YOLO consistently delivered superior performance across all scenarios. Its multi-scale feature fusion mechanism effectively compensated for detail loss and reconstructed blurred target edges, thereby boosting recall rates for overlapping small objects while reducing missed and false detections. These results validate MBD-YOLO’s robust adaptability to diverse environmental conditions.

4. Discussion

To address the challenges of low detection accuracy for multi-scale and small objects in UAV aerial imagery, this paper introduces the MBD-YOLO model, which significantly enhances UAV target detection performance across diverse object sizes in complex urban backgrounds. Moreover, its lightweight architecture fulfills deployment requirements for edge devices.

To address the low detection accuracy for multi-scale objects (especially small targets) and the structural complexity of UAV aerial image analysis, we propose a novel Multi-Branch Feature Fusion (MBFF) module. This module replaces the traditional C2f structure with parallel computation paths and adaptive convolutional kernels, jointly optimizing depthwise convolution and hierarchical feature aggregation to achieve efficient multi-scale feature extraction and improved small-target detection accuracy. Furthermore, we design the Bidirectional Multi-Scale Feature Aggregation Pyramid Network (BiMS-FPN), which integrates a bidirectional propagation mechanism and cross-scale connection fusion to resolve the spatial misalignment and information degradation inherent in conventional FPNs while reducing computational overhead and model parameters. Finally, the hierarchical feature distillation of the Dual-Stream Head retains shallow features and allocates attention dynamically, significantly boosting the recall rate for small targets.

Among other approaches in this field, traditional UAV detection relies primarily on manual monitoring. However, this approach struggles to achieve large-scale airspace coverage, particularly in complex terrain or adverse weather conditions, where blind spots persist. Additionally, manual operation is susceptible to fatigue-induced misjudgments and cannot sustain continuous monitoring. Low-altitude micro-targets often blend with background clutter, leading to missed detections or false positives. Deep learning-based object detection methods effectively address these challenges. Current object detection approaches fall into two main categories: two-stage and single-stage. For UAV deployment, single-stage detection algorithms (such as the YOLO series) are typically a better choice than two-stage algorithms. The core reason lies in the extreme demands that UAVs place on real-time performance and computational efficiency: UAVs must process sensor video or photo information in real time to accomplish tasks such as obstacle avoidance, autonomous navigation, and target tracking. Any significant delay could result in mission failure or even collisions. At the same time, onboard computing resources (e.g., Jetson series chips), though designed for embedded systems, remain computationally constrained. Thus, the substantial speed and efficiency advantages of single-stage algorithms enable them to meet the stringent low-latency and low-power requirements of UAV deployment at the cost of an acceptable loss in accuracy. However, in UAV scenarios, these algorithms still face challenges such as insufficient small-object feature extraction, poor scene adaptability, and imbalanced computation–accuracy trade-offs. The proposed MBD-YOLO model integrates the MBFF module, BiMS-FPN, and Dual-Stream Head. While maintaining a lightweight structure, it significantly enhances the recognition of multi-scale targets, particularly small objects, in complex UAV backgrounds and scenarios.

However, the proposed model exhibits certain limitations. The dataset utilized in this study may not comprehensively encompass all complex scenarios, such as UAV imagery captured in maritime environments or under dense fog conditions, potentially leading to suboptimal generalization in specific situations. Furthermore, all experiments were conducted exclusively using RGB imagery, without integrating other sensor modalities (e.g., LiDAR and thermal imaging), which could lead to performance degradation for target detection under adverse weather conditions.

5. Conclusions

In UAV aerial target detection tasks, pervasive challenges include significant scale variations, minute target sizes, and complex environmental backgrounds. This paper introduces MBD-YOLO, a UAV target detection model based on YOLOv8. By replacing the conventional C2f module with the novel Multi-Branch Feature Fusion (MBFF) module, the model achieves enhanced multi-scale feature extraction and improved accuracy for small targets. Furthermore, the BiMS-FPN resolves issues of spatial misalignment and information degradation in multi-scale feature fusion, strengthening anti-interference capability in complex backgrounds while reducing computational overhead and model parameters. Finally, the Dual-Stream Head leverages hierarchical feature distillation and dynamic attention allocation to boost recall rates for small targets. Future research will focus on further optimizing computational efficiency, extending validation to diverse datasets (e.g., COCO and DOTA), and adapting multimodal sensor fusion (e.g., LiDAR and thermal imaging) to enhance performance under adverse weather conditions. Beyond these technical aspects, we will explore the practical application potential of the model in high-impact scenarios such as precision agriculture and livestock monitoring, where UAVs could be deployed to detect cattle infected with New World screwworm flies—a task that similarly involves detecting minute objects in cluttered environments. Adapting and evaluating the model for such specific use cases represents a highly promising direction for future work.

Author Contributions

All authors contributed to the study conception and design. Conceptualization, B.X. and D.C.; methodology, B.X. and D.C.; software, B.X. and Z.W.; validation, B.X., K.S. and X.P.; formal analysis, B.X., X.P. and K.S.; investigation, B.X., K.S. and C.L.; data curation, K.S. and X.P.; writing—original draft preparation, B.X.; writing—review and editing, Z.W. and B.X.; supervision, Z.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Geological Survey Project of the China Geological Survey (Grant No. DD20243170, “Natural Resources Monitoring (Langfang Center)”).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The public VisDrone2019 dataset can be downloaded from https://github.com/VisDrone/VisDrone-Dataset (accessed on 22 March 2025). The codes used during the study are available from the corresponding author upon request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Osmani, K.; Schulz, D. Comprehensive Investigation of Unmanned Aerial Vehicles (UAVs): An In-Depth Analysis of Avionics Systems. Sensors 2024, 24, 3064.

- Yuan, S.; Li, Y.; Bao, F.; Xu, H.; Yang, Y.; Yan, Q.; Zhong, S.; Yin, H.; Xu, J.; Huang, Z.; et al. Marine environmental monitoring with unmanned vehicle platforms: Present applications and future prospects. Sci. Total Environ. 2023, 858, 159741.

- Yang, Z.; Yu, X.; Dedman, S.; Rosso, M.; Zhu, J.; Yang, J.; Xia, Y.; Tian, Y.; Zhang, G.; Wang, J. UAV remote sensing applications in marine monitoring: Knowledge visualization and review. Sci. Total Environ. 2022, 838, 155939.

- Seidaliyeva, U.; Ilipbayeva, L.; Taissariyeva, K.; Smailov, N.; Matson, E.T. Advances and Challenges in Drone Detection and Classification Techniques: A State-of-the-Art Review. Sensors 2024, 24, 125.

- Mu, K.; Hui, F.; Zhao, X. Multiple Vehicle Detection and Tracking in Highway Traffic Surveillance Video Based on SIFT Feature Matching. Inf. Process. Syst. 2016, 12, 183–195.

- Yuan, X.; Hao, X.; Chen, H.; Wei, X. Robust Traffic Sign Recognition Based on Color Global and Local Oriented Edge Magnitude Patterns. IEEE Trans. Intell. Transp. Syst. 2014, 15, 1466–1477.

- Chen, S.; Shi, W.; Zhou, M.; Min, Z.; Chen, P. Automatic Building Extraction via Adaptive Iterative Segmentation With LiDAR Data and High Spatial Resolution Imagery Fusion. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 2081–2095.

- Bouguettaya, A.; Zarzour, H.; Kechida, A.; Taberkit, A.M. Vehicle Detection From UAV Imagery With Deep Learning: A Review. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 6047–6067.

- Zhao, Y.; Ma, Q.; Lei, G.; Wang, L.; Guo, C. Research on Lightweight Tracking of Small-Sized UAVs Based on the Improved YOLOv8N-Drone Architecture. Drones 2025, 9, 551.

- Bharati, P.; Pramanik, A. Deep Learning Techniques—R-CNN to Mask R-CNN: A Survey. In Computational Intelligence in Pattern Recognition; Das, A.K., Nayak, J., Naik, B., Pati, S.K., Pelusi, D., Eds.; Springer: Singapore, 2020; pp. 657–668.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the Computer Vision—ECCV 2016, Cham, Switzerland, 17 September 2016; Volume 9905, pp. 21–37.

- Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs Beat YOLOs on Real-time Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 16965–16974.

- Terven, J.; Córdova-Esparza, D.M.; Romero-González, J.A. A Comprehensive Review of YOLO Architectures in Computer Vision: From YOLOv1 to YOLOv8 and YOLO-NAS. Mach. Learn. Knowl. Extr. 2023, 5, 1680–1716.

- Olorunshola, O.; Jemitola, P.; Ademuwagun, A. Comparative study of some deep learning object detection algorithms: R-CNN, fast R-CNN, faster R-CNN, SSD, and YOLO. Nile J. Eng. Appl. Sci. 2023, 1, 70–80.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

- Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Nida, N.; Irtaza, A.; Javed, A.; Yousaf, M.H.; Mahmood, M.T. Melanoma lesion detection and segmentation using deep region based convolutional neural network and fuzzy C-means clustering. Int. J. Med Inform. 2019, 124, 37–48.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Hua, W.; Chen, Q.; Chen, W. A new lightweight network for efficient UAV object detection. Sci. Rep. 2024, 14, 13288.

- Xue, Z.; Lin, H.; Wang, F. A Small Target Forest Fire Detection Model Based on YOLOv5 Improvement. Forests 2022, 13, 1332.

- Hao, W.; Zhili, S. Improved Mosaic: Algorithms for more Complex Images. J. Phys. Conf. Ser. 2020, 1684, 012094.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

- Wang, C.Y.; Liao, H.Y.M.; Wu, Y.H.; Chen, P.Y.; Hsieh, J.W.; Yeh, I.H. CSPNet: A New Backbone that can Enhance Learning Capability of CNN. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 1571–1580.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the Computer Vision—ECCV 2014, Zurich, Switzerland, 6–12 September 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer: Cham, Switzerland, 2014; pp. 740–755.

- Khanam, R.; Hussain, M. What is YOLOv5: A deep look into the internal features of the popular object detector. arXiv 2024, arXiv:2407.20892.

- Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A Single-Stage Object Detection Framework for Industrial Applications. arXiv 2022, arXiv:2209.02976.

- Ding, X.; Zhang, X.; Ma, N.; Han, J.; Ding, G.; Sun, J. RepVGG: Making VGG-Style ConvNets Great Again. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 13733–13742.

- Wang, C.; Bochkovskiy, A.; Liao, H. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

- Varghese, R.; Sambath, M. Yolov8: A novel object detection algorithm with enhanced performance and robustness. In Proceedings of the 2024 International Conference on Advances in Data Engineering and Intelligent Computing Systems (ADICS), Chennai, India, 18–19 April 2024; pp. 1–6.

- Zhao, X.; Zhang, H.; Zhang, W.; Ma, J.; Li, C.; Ding, Y.; Zhang, Z. MSUD-YOLO: A Novel Multiscale Small Object Detection Model for UAV Aerial Images. Drones 2025, 9, 429.

- Chen, C.; Zheng, Z.; Xu, T.; Guo, S.; Feng, S.; Yao, W.; Lan, Y. YOLO-Based UAV Technology: A Review of the Research and Its Applications. Drones 2023, 7, 190.

- Yang, F.; Ouyang, T.; Liu, C. Deformer-FPN: FPN network based on deformable convolution and lightweight upsampling operator for ship detection in SAR image. In Proceedings of the 2024 IEEE International Conference on Signal, Information and Data Processing (ICSIDP), Zhuhai, China, 22–24 November 2024; pp. 1–5.

- Zeng, M.; He, S.; Zeng, Q.; Niu, Y.; Zhang, R. PA-YOLO: Small Target Detection Algorithm with Enhanced Information Representation for UAV Aerial Photography. IEEE Sens. Lett. 2025, 9, 1–4.

- Xu, S.; Ji, Y.; Wang, G.; Jin, L.; Wang, H. GFSPP-YOLO: A Light YOLO Model Based on Group Fast Spatial Pyramid Pooling. In Proceedings of the 2023 IEEE 11th International Conference on Information, Communication and Networks (ICICN), Xi’an, China, 17–20 August 2023; pp. 733–738.

- Kim, M.; Jeong, J.; Kim, S. ECAP-YOLO: Efficient Channel Attention Pyramid YOLO for Small Object Detection in Aerial Image. Remote Sens. 2021, 13, 4851. [Google Scholar] [CrossRef]

- Razakarivony, S.; Jurie, F. Vehicle detection in aerial imagery: A small target detection benchmark. J. Vis. Commun. Image Represent. 2016, 34, 187–203. [Google Scholar] [CrossRef]

- Wang, C.; He, W.; Nie, Y.; Guo, J.; Liu, C.; Han, K.; Wang, Y. Gold-yolo: Efficient object detector via gather-and-distribute mechanism. Adv. Neural Inf. Process. Syst. 2023, 36, 51094–51112. [Google Scholar]

- Zhang, Z. Drone-YOLO: An Efficient Neural Network Method for Target Detection in Drone Images. Drones 2023, 7, 526. [Google Scholar] [CrossRef]

- Li, Y.; Hu, B.; Wei, S.; Gao, D.; Shuang, F. Simplify-YOLOv5m: A Simplified High-Speed Insulator Detection Algorithm for UAV Images. IEEE Trans. Instrum. Meas. 2025, 74, 1–14. [Google Scholar] [CrossRef]

- Liu, H.; Sun, F.; Gu, J.; Deng, L. SF-YOLOv5: A Lightweight Small Object Detection Algorithm Based on Improved Feature Fusion Mode. Sensors 2022, 22, 5817. [Google Scholar] [CrossRef]

- Fan, Q.; Li, Y.; Deveci, M.; Zhong, K.; Kadry, S. LUD-YOLO: A novel lightweight object detection network for unmanned aerial vehicle. Inf. Sci. 2025, 686, 121366. [Google Scholar] [CrossRef]

- Chen, Y.; Dai, X.; Liu, M.; Chen, D.; Yuan, L.; Liu, Z. Dynamic convolution: Attention over convolution kernels. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), ELECTR NETWORK, Seattle, WA, USA, 14–19 June 2020; pp. 11030–11039. [Google Scholar]

- Wang, S.; Zhao, Z.; Chen, Y.; Mao, Y.; Cheung, J. Enhancing Thyroid Nodule Detection in Ultrasound Images: A Novel YOLOv8 Architecture with a C2fA Module and Optimized Loss Functions. Technologies 2025, 13, 28. [Google Scholar] [CrossRef]

- Zhong, S.; Wen, W.; Qin, J. SPEM: Self-adaptive Pooling Enhanced Attention Module for Image Recognition. In MultiMedia Modeling; Dang-Nguyen, D.T., Gurrin, C., Larson, M., Smeaton, A.F., Rudinac, S., Dao, M.S., Trattner, C., Chen, P., Eds.; Springer: Cham, Switzerland, 2023; pp. 41–53.

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J. YOLOv10: Real-time end-to-end object detection. Adv. Neural Inf. Process. Syst. 2024, 37, 107984–108011.

- Dong, Y.; Xie, X.; An, Z.; Qu, Z.; Miao, L.; Zhou, Z. NMS-Free Oriented Object Detection Based on Channel Expansion and Dynamic Label Assignment in UAV Aerial Images. Remote Sens. 2023, 15, 5079.

- Wu, Y.; Yao, Q.; Fan, X.; Gong, M.; Ma, W.; Miao, Q. PANet: A Point-Attention Based Multi-Scale Feature Fusion Network for Point Cloud Registration. IEEE Trans. Instrum. Meas. 2023, 72, 1–13.

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. arXiv 2019, arXiv:1911.09070.

- Feng, J.; Yi, C. Lightweight Detection Network for Arbitrary-Oriented Vehicles in UAV Imagery via Global Attentive Relation and Multi-Path Fusion. Drones 2022, 6, 108.

- Yang, J.; Chen, F.; Das, R.K.; Zhu, Z.; Zhang, S. Adaptive-Avg-Pooling Based Attention Vision Transformer for Face Anti-Spoofing. In Proceedings of the 49th IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; pp. 3875–3879.

- Huang, Z.; Wei, Y.; Wang, X.; Liu, W.; Huang, T.S.; Shi, H. AlignSeg: Feature-Aligned Segmentation Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 550–557.

- Chen, Y.; Chen, Q.; Hu, Q.; Cheng, J. DATE: Dual Assignment for End-to-End Fully Convolutional Object Detection. arXiv 2022, arXiv:2211.13859.

- Zong, Z.; Song, G.; Liu, Y. DETRs with Collaborative Hybrid Assignments Training. arXiv 2022, arXiv:2211.12860.

- Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU loss: Faster and better learning for bounding box regression. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 12993–13000.

- Powers, D.M.W. Evaluation: From precision, recall and F-measure to ROC, informedness, markedness and correlation. arXiv 2020, arXiv:2010.16061.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).