Abstract

Background: In recent years, there has been remarkable growth in AI-based applications in healthcare, with a significant breakthrough marked by the launch of large language models (LLMs) such as ChatGPT and Google Bard. Patients and health professional students commonly use these models because they are easily accessible. The increasing use of LLMs in healthcare necessitates an evaluation of their ability to generate accurate and reliable responses. Objective: This study assessed the performance of LLMs in answering orthodontic-related queries through a systematic review and meta-analysis. Methods: A comprehensive search of PubMed, Web of Science, Embase, Scopus, and Google Scholar was conducted up to 31 October 2024. The quality of the included studies was evaluated using the Prediction model Risk of Bias Assessment Tool (PROBAST), and R Studio software (Version 4.4.0) was employed for meta-analysis and heterogeneity assessment. Results: Out of 278 retrieved articles, 10 studies were included. The most commonly used LLM was ChatGPT (10/10, 100% of papers), followed by Google’s Bard/Gemini (3/10, 30% of papers), and Microsoft’s Bing/Copilot AI (2/10, 20% of papers). Accuracy was primarily evaluated using Likert scales, while the DISCERN tool was frequently applied for reliability assessment. The meta-analysis indicated that ChatGPT-4 and the other LLMs did not differ significantly in generating responses to queries related to the specialty of orthodontics. The forest plot revealed a standardized mean difference of 0.01 [CI: −0.42 to 0.44]. No heterogeneity was observed between the experimental group (ChatGPT-3.5, Gemini, and Copilot) and the control group (ChatGPT-4). However, most studies exhibited a high PROBAST risk of bias due to the lack of standardized evaluation tools. 
Conclusions: ChatGPT-4 has been extensively used for a variety of tasks and has demonstrated advanced and encouraging outcomes compared to other LLMs, and thus can be regarded as a valuable tool for enhancing educational and learning experiences. While LLMs can generate comprehensive responses, their reliability is compromised by the absence of peer-reviewed references, necessitating expert oversight in healthcare applications.

1. Introduction

Orthodontic treatment focuses on the diagnosis and management of various dental issues, including severe malocclusions, overcrowding, jaw misalignments, impacted teeth, congenital anomalies, and complications arising from previous dental procedures. For complex cases, treatment options may include traditional braces, clear aligners, lingual braces, palatal expanders, temporary anchorage devices, or surgical orthodontics. This type of treatment is often lengthy and requires a high level of technical skill, with the average duration typically ranging from 14 to 33 months, which may exceed patient expectations [1]. In recent years, the internet has become a significant source of medical information for patients; however, the accuracy and quality of the information found online can often be questionable [2]. It is important to note that not all online resources provide clinically reliable or sufficiently high-quality information [3,4,5].

Recent developments in the field of technology have led to the exponential growth of artificial intelligence (AI). The term AI model is used to describe the application of technology in designing and developing computer-based applications that can mimic the functioning of the human brain [6]. Several AI-based models have been designed for application in the medical sciences, including dentistry. These models have significantly contributed by providing innovative solutions for complex challenges and assisting dentists in efficiently diagnosing oral diseases. Effective clinical decision-making and treatment planning can ultimately enhance the quality of care delivered to the patients [7,8]. Additionally, autonomous ultraviolet disinfection robots have become essential tools in the decontamination processes within healthcare settings [9]. In recent years, there has been remarkable growth in AI-based applications in healthcare, particularly with the launch of large language models (LLMs) such as ChatGPT and Google Bard [10]. These LLMs have gained tremendous popularity among AI users due to their easy accessibility to the public. The LLMs are based on neural networks that undergo extensive training on large datasets, primarily derived from internet sources such as Wikipedia, research articles, web pages, digital books, etc. This training is accomplished through deep-learning algorithms and advanced modeling techniques [11,12,13]. These models are capable of generating fluent and coherent text, translating languages, addressing user queries, and performing various language-related tasks [14].

Researchers have reported on the performance of LLMs in answering queries and questions related to various board certification exams across multiple fields of health sciences [12,15]. Health professional students commonly use these models because they are the most easily accessible resources. However, some studies have raised concerns about the performance of these models in certain contexts. Research indicates that these LLMs are capable of generating convincing but false or inaccurate information, which presents a challenge for individuals who may lack the experience to distinguish between accurate and misleading information [16]. Therefore, from an educational perspective, the accuracy and reliability of these resources are paramount [17]. Given these reported limitations, researchers have expressed serious concerns regarding the use of these LLMs in healthcare [18]. Consequently, authors have recommended using these technologies solely as assistive tools rather than as direct sources of information [19].

With advancements in technology, these LLMs have become increasingly popular among patients due to their easy accessibility for information at any point in time [20]. However, there are serious concerns regarding the incorrect answers, false information, and irrelevant and unrealistic responses generated by these LLMs [20]. The reliability, accuracy, and quality of the generated responses are critical, as they can influence the patients’ decision-making, compliance with the treatment, and also the doctor–patient relationship [21]. Therefore, these LLMs come with limitations and ethical considerations related to credibility and trustworthiness [22].

Hence, considering the importance of the output accuracy of these LLMs, this systematic review and meta-analysis aims to evaluate the performance of the most widely used AI-based LLMs in generating information relevant to orthodontics. The findings will help assess the credibility of the information generated by these LLMs, with a focus on enhancing patient education and the dissemination of high-quality information.

2. Materials and Methods

Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) criteria [23] were referred to and adopted for this paper.

The data search was performed based on the PICO (Problem/Patient, Intervention/Indicator, Comparison, and Outcome) criteria detailed in Table 1.

Table 1.

Description of the PICO (P = Population; I = Intervention; C = Comparison; O = Outcome) elements.

2.1. Search Strategy

Research papers published until 31 October 2024 that refer to the utilization of AI-based LLMs for generating information on orthodontics-related inquiries were examined. A comprehensive search was conducted across prominent online databases, including PubMed, Web of Science, Embase, Scopus, and Google Scholar. The search strategy was developed by integrating medical subject headings (MeSH) with relevant search phrases, utilizing Boolean operators such as AND and OR. Keywords employed to search articles in electronic databases included Artificial Intelligence OR Chatbots OR ChatGPT OR Gemini OR Copilot OR Generative Models OR Natural Language Processing OR Machine Learning OR Decision Support Systems OR Large Language Models AND Dental Education AND Knowledge AND Information AND technology AND Clear Aligners AND Dentistry, as well as Lingual Orthodontics AND Patient Information AND Digital Orthodontics AND Orthodontics AND Dental License Examinations AND Board Exams AND Multiple Choice Questions AND Patients OR Comparison OR Performance OR Reliability OR Accuracy OR Validity.

2.2. Study Selection

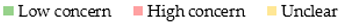

Two authors (F.B. and S.B.K.) with substantial academic and research experience independently conducted an extensive search for articles across multiple online databases, resulting in the identification of 278 articles, along with one additional article discovered through a manual search. Subsequently, a total of 279 articles were reviewed to eliminate duplicates. During this process, 220 articles were excluded due to duplication. The remaining 59 manuscripts were then subjected to a thorough evaluation to determine their eligibility.

2.3. Eligibility Criteria

Inclusion criteria:

The inclusion criteria consisted of original research articles focused on LLMs in orthodontics, articles published in the English language, articles that provided sufficient quantitative data, and articles that were available in full-text format.

Exclusion criteria:

Studies that investigated LLMs in areas other than orthodontics, as well as articles that provided limited quantitative data—such as editorial letters, correspondence, opinion letters, conference abstracts, and non-academic sources such as magazines and websites—were excluded. The details are presented in Figure 1.

Figure 1.

PRISMA 2020 flow diagram for new systematic reviews, which included database searches, registers, and other sources.

3. Results

Qualitative data were extracted from the ten articles that were finalized. All of these articles were published in the current year.

3.1. Data Extraction

After applying the eligibility criteria, the number of articles that met the inclusion criteria for the systematic review was reduced to ten. Two reviewers (K.I. and M.A.) extracted the data and recorded them in Microsoft Excel spreadsheets (version 2021, Microsoft Corporation, Redmond, WA, USA). Disputes at any screening phase were resolved by consensus or through discussion with a third reviewer (A.A.).

3.2. Quality Assessment

The articles were evaluated for quality using the Prediction model Risk Of Bias Assessment Tool (PROBAST) [24]. Two reviewers (K.I. and M.A.) demonstrated significant concordance, achieving an 82% agreement level as assessed by Cohen’s kappa. Hence, these two authors conducted the risk of bias assessment for all ten finalized articles.
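As a rough illustration of how inter-rater agreement of this kind can be quantified, the following minimal sketch computes Cohen's kappa from two reviewers' categorical judgements. The function name `cohens_kappa` and the example ratings are hypothetical and are not taken from the review; they only show the chance-corrected agreement calculation, kappa = (p_o − p_e) / (1 − p_e).

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who labelled the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must label the same non-empty set of items.")
    n = len(rater_a)
    # Observed agreement: proportion of items given identical labels.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Expected chance agreement from each rater's marginal label frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    labels = counts_a.keys() | counts_b.keys()
    expected = sum(counts_a[label] * counts_b[label] for label in labels) / n**2
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical risk-of-bias judgements for ten studies (illustrative only).
reviewer_1 = ["high", "high", "high", "low", "high",
              "unclear", "high", "high", "low", "high"]
reviewer_2 = ["high", "high", "high", "low", "high",
              "high", "high", "high", "low", "high"]
print(round(cohens_kappa(reviewer_1, reviewer_2), 2))
```

Kappa is usually preferred over raw percentage agreement because it discounts the agreement that two reviewers would reach by chance alone.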

3.3. Qualitative Data of the Studies

Five out of the ten studies included in this review were quantitative, enabling a meta-analysis to assess the performance differences in LLMs through external validation concerning patient-related inquiries. Further research with larger sample sizes and lower effect sizes is necessary to clarify the significant functioning of these LLMs. Most of the LLMs have been applied to assess the information related to impacted canines, interceptive orthodontic treatment, and orthognathic surgery [25,26]; general questions related to orthodontic treatment [27,28,29,30]; and information related to orthodontic clear aligners, temporomandibular disorders (TMDs), and rapid palatal expansion [31,32,33,34]. The application of LLMs is summarized in Table 2.

Table 2.

Details of the studies that have used AI-based LLMs for generating responses related to orthodontics.

3.4. Study Characteristics

The study characteristics comprised the first author’s details; publication year; study objective; type of AI algorithms and details of datasets used for testing the LLMs; testing and evaluation methods; comparison with other LLMs/human experts; performance of the LLMs, and conclusions and recommendations.

3.5. Outcome Measures

The performance of the LLMs reported in the included studies was evaluated in terms of the accuracy, reliability, usability, and readability of the responses generated by the LLMs.

3.6. Risk of Bias (RoB) Assessment and Applicability Concerns

The present review evaluates research papers related to orthodontics that focused exclusively on the external validation of prediction models. Studies of this nature can adopt PROBAST for a robust and comprehensive analysis. PROBAST serves as a tool to assess the risk of bias (RoB) for both prediction model development studies with external validation and prediction models that are solely subjected to external validation [24]. PROBAST provides a systematic and clear methodology for evaluating the RoB and the relevance of studies that create, validate, or revise prediction models for personalized predictions. It consists of four key domains: participants, predictors, outcomes, and analysis. Within these domains, there are 20 signaling questions designed to support a structured assessment of RoB, which is characterized by deficiencies in study design, execution, or analysis that result in systematically biased estimates of the predictive performance of the model. As observed in the present systematic review, most of the external validation studies of prediction models are subjective in nature, relying on expert opinion, which introduces bias. All ten papers included in the final analysis evaluated the general prediction models available (ChatGPT-4) rather than focusing on predictive models specific to a particular specialty of medicine. Three of the ten studies (Hatia A et al. [26], Daraqel B et al. [27], and Tanaka et al. [33]) were qualitative, while the remaining studies limited their quantitative analysis to continuous assessment scores. Notably, few studies [29,31,32] employed valid subjective rating scales, such as DISCERN, the Global Quality Scale (GQS), and the accuracy of information (AOI) index. In contrast, most of the other studies adopted their own definitions of readability and accuracy. None of the studies in the present review used an objective scale for assessing responses. 
Furthermore, the awareness of predictors prior to the commencement of validation was not reported in any of the studies. The area under the receiver operating characteristic (ROC) curve was not reported as part of the validation in any of these studies, which further contributes to bias in interpreting the actual effectiveness of the models in accurately addressing orthodontic clinical queries. Two studies [29,30] raised concerns regarding low clinical applicability. Validation studies require a minimum of 100 participants to ensure a reliable outcome; otherwise, the risk of bias and concerns about the applicability of the model performance estimates become more pronounced [24]. The details are presented in Table 3.

Table 3.

Risk of Bias Assessment and Applicability Concerns.

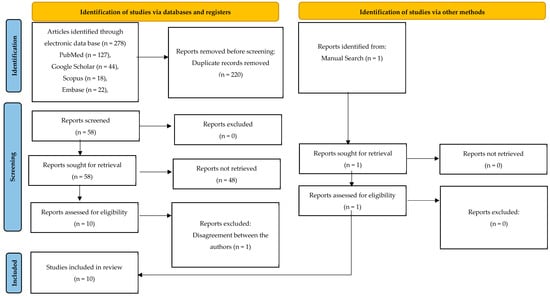

3.7. Statistical Protocol

The meta-analysis was conducted on continuous data (mean scores) obtained from various predictive models. All the studies included in the review utilized ChatGPT-4. A comparison was made to analyze the variance between ChatGPT-4 and other predictive models. The validation performance of ChatGPT-4 was independently compared against ChatGPT-3.5, Gemini, and Copilot in the study by Dursun et al. [31]. Similarly, ChatGPT-4 was compared with ChatGPT-3.5, Google Bard, and Microsoft Bing in the study by Makrygiannakis et al. [30]. Two other studies (Vassis S et al. [28] and Kılınc et al. [29]) compared ChatGPT-4 with ChatGPT-3.5; the study by Naureen et al. [34] compared the responses of ChatGPT-4 with those of Google Bard. R Studio software (version 4.4.0, 2024, the R Foundation for Statistical Computing, Vienna, Austria) was used for the meta-analysis and forest plot generation. The mean score of the experimental group (ChatGPT-3.5, Gemini, and Copilot) was 1.28 (±0.93) and that of the control group (ChatGPT-4) was 1.27 (±0.77). The standardized mean difference was used to compare ChatGPT-4 with the other models. From the forest plot, we can infer that the effect size of most of the studies was high, and most studies exhibited wide confidence intervals, indicating a lesser relevance of responses to predictors, as well as fewer predictors used for eliciting responses. Except for the study by Vassis S et al. [28], the studies demonstrated a higher effect size, in the range of 0.5 to 0.8. The results were highly homogeneous, as the I² value was zero. The pooled estimate lay on the line of no effect. The pooled effect size indicates that no predictive model is currently more efficient than the others. All the predictive models are general models and not specific to the specialty in question, and the datasets used to train these models may be insufficient at this moment. 
The details are presented in Figure 2.

Figure 2.

Forest plot comparing ChatGPT-4 with other LLMs [28,29,30,31,34].
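The pooling described in Section 3.7 can be sketched in a few lines. This is a minimal illustration, not the analysis actually run in R: it assumes Cohen's d as the standardized mean difference and an inverse-variance fixed-effect model, neither of which is specified in the text, and the group sizes (`n = 20`) are hypothetical placeholders. Only the group means and standard deviations (1.28 ± 0.93 and 1.27 ± 0.77) come from the review.

```python
import math


def cohen_d(mean_exp, sd_exp, n_exp, mean_ctl, sd_ctl, n_ctl):
    """Standardized mean difference (Cohen's d) and its approximate variance."""
    pooled_var = ((n_exp - 1) * sd_exp**2 + (n_ctl - 1) * sd_ctl**2) / (n_exp + n_ctl - 2)
    d = (mean_exp - mean_ctl) / math.sqrt(pooled_var)
    var_d = (n_exp + n_ctl) / (n_exp * n_ctl) + d**2 / (2 * (n_exp + n_ctl))
    return d, var_d


def pool_fixed_effect(effects):
    """Inverse-variance pooling of (effect, variance) pairs.

    Returns the pooled effect, its 95% confidence interval, and I² (in %).
    """
    weights = [1.0 / var for _, var in effects]
    pooled = sum(w * d for w, (d, _) in zip(weights, effects)) / sum(weights)
    se = math.sqrt(1.0 / sum(weights))
    # Cochran's Q measures the dispersion of study effects around the pooled effect.
    q = sum(w * (d - pooled) ** 2 for w, (d, _) in zip(weights, effects))
    df = len(effects) - 1
    i_squared = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return pooled, (pooled - 1.96 * se, pooled + 1.96 * se), i_squared


# Group means and SDs as reported in the text; the sample sizes are assumed.
d, var_d = cohen_d(1.28, 0.93, 20, 1.27, 0.77, 20)
print(f"SMD = {d:.3f}, variance = {var_d:.3f}")
```

With nearly identical group means, d is close to zero whatever the sample sizes, which is consistent with the near-null pooled estimate reported above. An I² of zero means that the observed spread of study effects is no larger than would be expected from sampling error alone.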

4. Discussion

This paper aims to report on the performance of various LLMs that have been utilized to answer queries related to the specialty of orthodontics. A total of ten original research articles were finalized for evaluation in this systematic review and meta-analysis.

4.1. LLMs That Have Been Applied for Generating Answers to Questions Related to Orthodontics

Most of the reported studies have utilized ChatGPT-3.5 and ChatGPT-4 [25,26,27,28,29,30,31,32,33,34], Google’s Bard/Gemini [27,31,34], and Microsoft’s Bing/Copilot AI [30,31].

The most commonly used LLM was ChatGPT. LLMs are rooted in natural language processing (NLP), which enables computer systems to comprehend natural language inputs and includes techniques such as machine learning [11]. These LLMs are developed using neural networks and are trained on large text datasets from various sources. Once trained, they generate coherent, human-like conversational responses to queries, questions, or prompts based on the data on which they were trained. This process is accomplished through deep-learning algorithms and advanced modeling [11,12,13]. Another LLM, Google Bard, now known as Gemini, was recently introduced by Google and made available for public access. A key difference between Gemini and ChatGPT is that Gemini uses real-time internet access to extract the most up-to-date information while generating responses to queries [35]. Another widely recognized LLM, Bing Chat, which has been relaunched as Copilot, was introduced by Microsoft. This model addresses limitations encountered with ChatGPT by staying up to date with current events through internet access [36].

4.2. Methods That Have Been Adopted for Assessing the Level of Accuracy and Reliability of the Responses Generated for Questions Related to Orthodontics

Most of the reported studies have employed Likert scales to evaluate the accuracy of the responses, which were assessed by experienced specialists [25,26,28,30,34].

The most commonly used tool for assessing the reliability of the responses was DISCERN, a validated and reliable instrument developed for healthcare professionals and patients to evaluate the reliability of health-related texts [37,38,39,40]. It comprises 16 questions: the first 8 relate to reliability, the next 7 assess information on treatment options, and the last question assesses the overall quality of the text. Each question is rated on a 5-point scale, with 1 indicating low quality and 5 indicating high quality [37].
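The structure of the DISCERN instrument described above can be illustrated with a short sketch that splits 16 item ratings into the three sections. The function name `score_discern`, the dictionary keys, and the example ratings are hypothetical; summing the items within each section is one common way of reporting DISCERN results and is assumed here rather than taken from the included studies.

```python
def score_discern(ratings):
    """Summarise 16 DISCERN item ratings (each an integer from 1 to 5)."""
    if len(ratings) != 16:
        raise ValueError("DISCERN has exactly 16 questions.")
    if any(not isinstance(r, int) or not 1 <= r <= 5 for r in ratings):
        raise ValueError("Each rating must be an integer from 1 to 5.")
    return {
        "reliability": sum(ratings[:8]),           # questions 1-8, range 8-40
        "treatment_options": sum(ratings[8:15]),   # questions 9-15, range 7-35
        "overall_quality": ratings[15],            # question 16, range 1-5
    }


# Hypothetical ratings for a single chatbot response (illustrative only).
example = [3, 4, 3, 2, 3, 4, 3, 3, 4, 4, 3, 4, 3, 4, 4, 4]
print(score_discern(example))
```

Reporting the sections separately keeps the reliability judgement distinct from the quality of the treatment information, which is useful when LLM responses are fluent but unsupported by sources.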

The quality and usefulness of the responses generated by the LLMs were assessed using the Global Quality Scale (GQS), on which the quality of information is rated on a five-point Likert scale. A score of 1 indicates poor quality, 2 generally poor quality, 3 moderate quality, 4 good quality, and 5 excellent quality. This scale is designed to assess the quality, flow, and ease of use of information available online [41,42,43].

In one study, the quality of responses generated by the LLMs was assessed using the AOI index. This index measures the quality and completeness of the responses generated by the LLMs on a 10-point visual analog scale. It comprises five domains: factual accuracy, corroboration, consistency, clarity and specificity, and relevance of response. A higher score suggests that the responses are more accurate and complete [44].

The most common tool used for assessing the readability of the responses generated by the LLMs was the Flesch Reading Ease Score (FRES), a valid tool for evaluating the readability of generated text and grading how easily it can be comprehended. Readability was measured using a Flesch Reading Ease Score calculator. Scores range from 0 to 100, and the score determines whether the text is classified as easy or difficult to read [45,46].
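For reference, the standard Flesch Reading Ease formula is 206.835 − 1.015 × (words per sentence) − 84.6 × (syllables per word). The sketch below applies it to pre-counted totals; the function name and the example counts are hypothetical, and syllable counting is deliberately left to the caller because automated syllable counters differ between calculators.

```python
def flesch_reading_ease(total_words, total_sentences, total_syllables):
    """Flesch Reading Ease Score from pre-counted text statistics."""
    if total_words <= 0 or total_sentences <= 0:
        raise ValueError("Word and sentence counts must be positive.")
    words_per_sentence = total_words / total_sentences
    syllables_per_word = total_syllables / total_words
    return 206.835 - 1.015 * words_per_sentence - 84.6 * syllables_per_word


# Hypothetical counts for one chatbot response (illustrative only).
score = flesch_reading_ease(total_words=100, total_sentences=5, total_syllables=150)
print(round(score, 1))
```

Higher scores indicate easier text. Longer sentences and longer words both lower the score, which is why detailed clinical explanations tend to be rated as harder to read.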

4.3. Application and Performance of AI Based LLMs for Answering Queries Related to the Specialty of Orthodontics

AI has gained considerable popularity among healthcare professionals, as these sophisticated models have demonstrated commendable performance in various fields of dentistry [7]. In orthodontics, AI models have performed exceptionally well when applied to detecting cephalometric landmarks, assessing the need for orthodontic tooth extractions, estimating skeletal maturity and age, predicting post-operative orthodontic corrections, and assisting with treatment planning and clinical decision-making [22,23]. These models have displayed performance equivalent to that of experienced orthodontists [47,48].

With the recent advent of AI-based LLMs, especially ChatGPT, Google Bard, and Copilot, there has been a significant increase in awareness among AI users. These models can provide a wide range of information in real time and furnish users with the most appropriate, current, and precise information. The GPT-3.5 language model serves as the foundation for the publicly available version of ChatGPT. In contrast, the more recent GPT-4 version is accessible only through the ChatGPT Plus premium subscription. Following this, Microsoft launched the Bing Chat AI chatbot, which is currently known as Copilot [30]. Copilot addresses several key issues frequently encountered with ChatGPT by having live internet access, offering footnotes with links to the sources of the information provided, and staying up to date on current events through internet connectivity [11]. Additionally, Google launched Gemini, formerly known as Bard, a generative artificial intelligence chatbot that accesses the internet in real time to provide responses that reflect the most recent facts [27].

Recently, these LLMs have gained significant popularity among health professional students and patients. For example, they have already demonstrated a wide range of applications in the field of health sciences. These include identifying research topics, assisting with clinical and laboratory diagnosis, assisting with medical education, improving the sharing of knowledge among healthcare professionals, gathering medical knowledge, and facilitating medical consultation [49,50].

ChatGPT received significant attention after achieving high accuracy when passing the United States Medical Licensing Examination (USMLE) and other prestigious medical university examinations [51,52]. Hatia A et al. [26] reported on the performance of ChatGPT in answering questions and solving clinical scenarios related to interceptive orthodontics in terms of the accuracy and completeness of the responses. The results indicated that the LLM performance was good, with an accuracy rate of 46% for questions related to orthodontic clinical cases, and the responses generated were complete in 54.3% of cases. Based on these findings, the authors concluded that the performance of ChatGPT was insufficient to replace the specialists’ intellectual work. Another study, conducted by Alkhamees A [25], examined the performance of ChatGPT-4 in terms of the accuracy, reliability, and quality of information generated on orthodontic topics, which included questions related to impacted canines, interceptive orthodontic treatment, and orthognathic surgery. The responses generated by the LLM were of good quality for all the topics, with 68.7% rated as good or very good by experienced orthodontists. However, the authors cautioned against relying solely on the information from the LLM, as it was not 100% accurate and should not be considered an alternative to a specialist opinion.

Daraqel B et al. [27] reported on a study comparing the performance of two renowned LLMs, ChatGPT-3.5 and Google Bard, in terms of response accuracy, completeness, generation time, and response length when answering general orthodontic questions. The performance of these LLMs was assessed by five orthodontists. The authors reported that the responses generated for the queries were of high quality. ChatGPT outperformed Google Bard in terms of accuracy and completeness; however, responses were generally obtained faster with Google Bard. Another similar comparative study was conducted by Dursun D et al. [31] to assess and compare the performance of various LLMs, namely ChatGPT-3.5, ChatGPT-4, Gemini, and Copilot, in terms of the accuracy, reliability, quality, and readability of responses generated for questions related to orthodontic clear aligners. In this study, the authors used standardized assessment tools: a modified DISCERN scale for reliability, the GQS for quality, and the FRES for readability. The authors reported that all the LLMs provided generally accurate, moderately reliable, and moderate- to good-quality answers to questions about clear aligners. Hence, these LLMs need to be supplemented with more evidence-based information and improved readability. In a similar study, Kılınc D. D et al. [29] reported on the performance of ChatGPT-3.5 and ChatGPT-4 regarding the reliability and readability of responses to questions about orthodontic treatment. In this study, the authors used the Flesch–Kincaid tool to assess the readability of the responses and the DISCERN tool to evaluate their reliability. The authors observed that the reliability of the responses improved during the second evaluation, while the readability decreased. Hence, the authors concluded that the LLMs were capable of generating comprehensive responses; however, the responses were not scientifically valid, as they were not based on peer-reviewed references.

Naureen S et al. [34] reported on a study evaluating and comparing the performance of ChatGPT-4 and Google Bard regarding the accuracy of their responses to questions related to orthodontic diagnosis and treatment modalities. The performance was assessed by human experts using a five-point Likert scale. The authors reported that ChatGPT-4 outperformed Google Bard, providing more accurate and up-to-date information.

Vassis S et al. [28] reported on a study to assess and compare the performance of ChatGPT-3.5 and ChatGPT-4 regarding the reliability and validity of informing patients about orthodontic side effects. The performance was evaluated by orthodontists using a 5-point Likert scale. The experts rated the responses as neither deficient nor satisfactory; therefore, the information generated cannot replace a consultation at the orthodontic clinic. However, the patients perceived the responses generated by the LLMs as very useful and comprehensive. Nearly 80% of the patients reported that they preferred the information generated by the LLMs over the standard text information. Makrygiannakis M.A. et al. [30] reported on the performance of Google’s Bard and OpenAI’s ChatGPT-3.5 and ChatGPT-4 regarding the clinical relevance of their responses to questions about orthodontics. The responses were evaluated by two orthodontists using a 10-point Likert scale. The authors reported that all these LLMs generated valuable, evidence-based information in orthodontics. However, the responses occasionally lacked comprehensiveness, scientific accuracy, clarity, and relevance. Therefore, these responses need to be considered with caution, as there is a potential risk of making incorrect decisions. Tanaka OM et al. [33] reported a study on the performance of ChatGPT-4.0 regarding the accuracy and quality of information related to clear aligners, temporary anchorage devices, and digital imaging in orthodontics using a 5-point Likert scale. Five orthodontists evaluated the information and concluded that the majority of the responses generated by the LLM were within the range of good to very good. However, the authors suggested that efforts should be made to enhance the reliability of the information provided. A similar study conducted by Demirsoy K K et al. [32] evaluated the performance of ChatGPT-4 regarding the accuracy, relevance, and reliability of information related to orthodontics. The assessment was conducted by orthodontists, dental students, and individuals seeking treatment using the GQS and the DISCERN scale. The authors reported that ChatGPT has significant potential for usability in patient information and education in orthodontics. However, the authors suggested that ongoing refinement and customization are essential for optimizing its effectiveness in clinical practice.

AI-based LLMs have been extensively tested for their performance in the health sector, including in dentistry. In the present systematic review, out of the 10 included articles, only a few used standardized tools to assess accuracy, quality, reliability, and readability [29,31,32]. Despite being commonly used by health professionals and patients seeking healthcare, these LLMs have certain limitations. These include a tendency to generate superficial responses, which may be factually incorrect, commonly referred to as hallucinations [53,54]. Additionally, the information generated by the LLMs may lack details on its source or citations, which can mislead users and undermine the credibility of the information [55]. To overcome some of these limitations, researchers have recommended formulating prompts specifically in English, as this can influence the quality of the output by enabling the LLM to draw data from wider databases [54]. LLMs rely heavily on the data used during their training, which can lead to biases arising from misinformation, inaccuracies, or outdated content within the training datasets. Additionally, a significant limitation of these models is the inconsistency and unreliability of their responses when the same question is posed multiple times [56]. 
The lack of standardized assessment tools to evaluate the accuracy and quality of the responses generated by LLMs is a critical issue that warrants attention, particularly in assessing their potential advantages and risks prior to their deployment in healthcare settings. Furthermore, ethical and legal concerns arise regarding the use of data, especially when patient information is involved in the training process. This presents a potential risk, as these models may inadvertently memorize and disclose sensitive information if adequate security measures are not implemented.

In this systematic review, the number of included studies was limited, and most of these studies did not utilize standardized tools for evaluating the content and quality of the responses generated by the LLMs. The included studies did not exhibit a uniform approach to the presentation of results. A majority of these studies were qualitative, highlighting a need for future research to address these limitations by incorporating quantitative findings, thereby enhancing the overall quality of the studies. Consequently, the findings from these studies cannot be generalized. It is therefore premature to draw definitive conclusions from the studies included in this analysis. We recommend that future research incorporates a larger number of samples through patient queries or prompts to achieve a broader range of results.

5. Conclusions

The widespread adoption of AI-based LLMs in healthcare prompted this meta-analysis to evaluate their accuracy, reliability, and performance in addressing orthodontic-related queries. The most commonly used LLM was ChatGPT (10/10, 100% of papers), followed by Google’s Bard/Gemini (3/10, 30% of papers) and Microsoft’s Bing/Copilot AI (2/10, 20% of papers). LLMs like ChatGPT-4 have been extensively utilized for various tasks within the specialty of orthodontics and have demonstrated advanced and promising outcomes in comparison with other LLMs. Therefore, they can be regarded as tools that can enhance educational and learning experiences for their users. However, the studies analyzed exhibited a high risk of bias (RoB), primarily due to the lack of standardized evaluation tools. This underscores the need for future research to develop and apply uniform assessment frameworks. Although LLMs can provide comprehensive responses, their reliability is often compromised by the lack of peer-reviewed references. Consequently, expert review remains essential to ensure the trustworthiness of AI-generated information in healthcare. It is imperative for developers and regulatory authorities to collaborate in upholding ethical standards, which is crucial for the responsible incorporation of LLMs into medical practice. This collaboration will help ensure data protection while fostering innovation. There is an urgent need for standardized tools to evaluate the accuracy and quality of the information produced by LLMs.

Author Contributions

Conceptualization, S.B.K. and F.A.; methodology, M.A., K.I. and O.G.S.; software, K.I.; validation, A.A. and A.S.A.; formal analysis, K.I.; investigation, M.A. and A.S.A.; resources, F.A.; data curation, K.I.; writing—original draft preparation, S.B.K.; writing—review and editing, N.A. and O.G.S.; visualization, F.A.; supervision, S.B.K.; project administration, F.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Tsichlaki, A.; Chin, S.Y.; Pandis, N.; Fleming, P.S. How Long Does Treatment with Fixed Orthodontic Appliances Last? A Systematic Review. Am. J. Orthod. Dentofac. Orthop. 2016, 149, 308–318.

2. Crispino, R.; Mannocci, A.; Dilena, I.A.; Sides, J.; Forchini, F.; Asif, M.; Frazier-Bowers, S.A.; Grippaudo, C. Orthodontic Patients and the Information Found on the Web: A Cross-Sectional Study. BMC Oral Health 2023, 23, 860.

3. Mulimani, P.; Vaid, N. Through the Murky Waters of “Web-Based Orthodontics”, Can Evidence Navigate the Ship? APOS Trends Orthod. 2017, 7, 207–210.

4. Arun, M.; Usman, Q.; Johal, A. Orthodontic Treatment Modalities: A Qualitative Assessment of Internet Information. J. Orthod. 2017, 44, 82–89.

5. Daraz, L.; Morrow, A.S.; Ponce, O.J.; Beuschel, B.; Farah, M.H.; Katabi, A.; Alsawas, M.; Majzoub, A.M.; Benkhadra, R.; Seisa, M.O.; et al. Can Patients Trust Online Health Information? A Meta-Narrative Systematic Review Addressing the Quality of Health Information on the Internet. J. Gen. Intern. Med. 2019, 34, 1884–1891.

6. Thurzo, A.; Urbanová, W.; Novák, B.; Czako, L.; Siebert, T.; Stano, P.; Mareková, S.; Fountoulaki, G.; Kosnáčová, H.; Varga, I. Where Is the Artificial Intelligence Applied in Dentistry? Systematic Review and Literature Analysis. Healthcare 2022, 10, 1269.

7. Khanagar, S.B.; Al-Ehaideb, A.; Maganur, P.C.; Vishwanathaiah, S.; Patil, S.; Baeshen, H.A.; Sarode, S.C.; Bhandi, S. Developments, Application, and Performance of Artificial Intelligence in Dentistry—A Systematic Review. J. Dent. Sci. 2020, 16, 508–522.

8. Kishimoto, T.; Goto, T.; Matsuda, T.; Iwawaki, Y.; Ichikawa, T. Application of Artificial Intelligence in the Dental Field: A Literature Review. J. Prosthodont. Res. 2022, 66, 19–28.

9. Pandya, V.S.; Morsy, M.S.M.; Halim, A.A.; Alshawkani, H.A.; Sindi, A.S.; Mattoo, K.A.; Mehta, V.; Mathur, A.; Meto, A. Ultraviolet Disinfection (UV-D) Robots: Bridging the Gaps in Dentistry. Front. Oral Health 2023, 4, 1270959.

10. Labadze, L.; Grigolia, M.; Machaidze, L. Role of AI Chatbots in Education: Systematic Literature Review. Int. J. Educ. Technol. High. Educ. 2023, 20, 56.

11. Vaishya, R.; Misra, A.; Vaish, A. ChatGPT: Is This Version Good for Healthcare and Research? Diabetes Metab. Syndr. Clin. Res. Rev. 2023, 17, 102744.

12. Sallam, M. ChatGPT Utility in Healthcare Education, Research, and Practice: Systematic Review on the Promising Perspectives and Valid Concerns. Healthcare 2023, 11, 887.

13. Fergus, S.; Botha, M.; Ostovar, M. Evaluating Academic Answers Generated Using ChatGPT. J. Chem. Educ. 2023, 100, 1672–1675.

14. Eggmann, F.; Weiger, R.; Zitzmann, N.U.; Blatz, M.B. Implications of Large Language Models such as ChatGPT for Dental Medicine. J. Esthet. Restor. Dent. 2023, 35, 1098–1102.

15. Kung, T.H.; Cheatham, M.; Medenilla, A.; Sillos, C.; De Leon, L.; Elepaño, C.; Madriaga, M.; Aggabao, R.; Diaz-Candido, G.; Maningo, J.; et al. Performance of ChatGPT on USMLE: Potential for AI-Assisted Medical Education Using Large Language Models. PLOS Digit. Health 2023, 2, e0000198.

16. Li, H.; Moon, J.T.; Purkayastha, S.; Celi, L.A.; Trivedi, H.; Gichoya, J.W. Ethics of Large Language Models in Medicine and Medical Research. Lancet Digit. Health 2023, 5, e333–e335.

17. Dipalma, G.; Inchingolo, A.D.; Inchingolo, A.M.; Piras, F.; Carpentiere, V.; Garofoli, G.; Azzollini, D.; Campanelli, M.; Paduanelli, G.; Palermo, A.; et al. Artificial Intelligence and Its Clinical Applications in Orthodontics: A Systematic Review. Diagnostics 2023, 13, 3677.

18. Beam, A.L.; Drazen, J.M.; Kohane, I.S.; Leong, T.-Y.; Manrai, A.K.; Rubin, E.J. Artificial Intelligence in Medicine. N. Engl. J. Med. 2023, 388, 1220–1221.

19. Abd-alrazaq, A.; AlSaad, R.; Alhuwail, D.; Ahmed, A.; Healy, P.M.; Latifi, S.; Aziz, S.; Damseh, R.; Alrazak, S.A.; Sheikh, J. Large Language Models in Medical Education: Opportunities, Challenges, and Future Directions. JMIR Med. Educ. 2023, 9, e48291.

20. Acar, A.H. Can Natural Language Processing Serve as a Consultant in Oral Surgery? J. Stomatol. Oral Maxillofac. Surg. 2024, 125, 101724.

21. Shi, W.; Zhuang, Y.; Zhu, Y.; Iwinski, H.; Wattenbarger, M.; Wang, M.D. Retrieval-Augmented Large Language Models for Adolescent Idiopathic Scoliosis Patients in Shared Decision-Making. Available online: https://openreview.net/forum?id=Of21JJE4Kk (accessed on 24 September 2024).

22. van Dis, E.A.M.; Bollen, J.; Zuidema, W.; van Rooij, R.; Bockting, C.L. ChatGPT: Five Priorities for Research. Nature 2023, 614, 224–226.

23. Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 Statement: An Updated Guideline for Reporting Systematic Reviews. Br. Med. J. 2021, 372, n71.

24. Wolff, R.F.; Moons, K.G.M.; Riley, R.D.; Whiting, P.F.; Westwood, M.; Collins, G.S.; Reitsma, J.B.; Kleijnen, J.; Mallett, S. PROBAST: A Tool to Assess the Risk of Bias and Applicability of Prediction Model Studies. Ann. Intern. Med. 2019, 170, 51.

25. Alkhamees, A. Evaluation of Artificial Intelligence as a Search Tool for Patients: Can ChatGPT-4 Provide Accurate Evidence-Based Orthodontic-Related Information? Cureus 2024, 16, e65820.

26. Hatia, A.; Doldo, T.; Parrini, S.; Chisci, E.; Cipriani, L.; Montagna, L.; Lagana, G.; Guenza, G.; Agosta, E.; Vinjolli, F.; et al. Accuracy and Completeness of ChatGPT-Generated Information on Interceptive Orthodontics: A Multicenter Collaborative Study. J. Clin. Med. 2024, 13, 735.

27. Daraqel, B.; Wafaie, K.; Mohammed, H.; Cao, L.; Mheissen, S.; Liu, Y.; Zheng, L. The Performance of Artificial Intelligence Models in Generating Responses to General Orthodontic Questions: ChatGPT vs. Google Bard. Am. J. Orthod. Dentofac. Orthop. 2024, 165, 652–662.

28. Vassis, S.; Powell, H.; Petersen, E.; Barkmann, A.; Noeldeke, B.; Kristensen, K.D.; Stoustrup, P. Large-Language Models in Orthodontics: Assessing Reliability and Validity of ChatGPT in Pretreatment Patient Education. Cureus 2024, 16, e68085.

29. Kılınç, D.D.; Mansız, D. Examination of the Reliability and Readability of Chatbot Generative Pretrained Transformer’s (ChatGPT) Responses to Questions about Orthodontics and the Evolution of These Responses in an Updated Version. Am. J. Orthod. Dentofac. Orthop. 2024, 165, 546–555.

30. Makrygiannakis, M.A.; Giannakopoulos, K.; Kaklamanos, E.G. Evidence-Based Potential of Generative Artificial Intelligence Large Language Models in Orthodontics: A Comparative Study of ChatGPT, Google Bard, and Microsoft Bing. Eur. J. Orthod. 2024, cjae017.

31. Dursun, D.; Geçer, R.B. Can Artificial Intelligence Models Serve as Patient Information Consultants in Orthodontics? BMC Med. Inform. Decis. Mak. 2024, 24, 211.

32. Kurt Demirsoy, K.; Buyuk, S.K.; Bicer, T. How Reliable Is the Artificial Intelligence Product Large Language Model ChatGPT in Orthodontics? Angle Orthod. 2024, 94, 602–607.

33. Tanaka, O.M.; Gasparello, G.G.; Hartmann, G.C.; Casagrande, F.A.; Pithon, M.M. Assessing the Reliability of ChatGPT: A Content Analysis of Self-Generated and Self-Answered Questions on Clear Aligners, TADs and Digital Imaging. Dent. Press J. Orthod. 2024, 28, e2323183.

34. Naureen, S.; Kiani, H.G. Assessing the Accuracy of AI Models in Orthodontic Knowledge: A Comparative Study between ChatGPT-4 and Google Bard. J. Coll. Physicians Surg. Pak. 2024, 34, 761–766.

35. Rahaman, M.S.; Ahsan, M.M.T.; Anjum, N.; Rahman, M.M.; Rahman, M.N. The AI Race Is On! Google’s Bard and Openai’s Chatgpt Head to Head: An Opinion Article. SSRN Electron. J. 2023.

36. Cascella, M.; Semeraro, F.; Montomoli, J.; Bellini, V.; Piazza, O.; Bignami, E. The Breakthrough of Large Language Models Release for Medical Applications: 1-Year Timeline and Perspectives. J. Med. Syst. 2024, 48, 22.

37. Charnock, D.; Shepperd, S.; Needham, G.; Gann, R. DISCERN: An Instrument for Judging the Quality of Written Consumer Health Information on Treatment Choices. J. Epidemiol. Community Health 1999, 53, 105–111.

38. Meade, M.; Dreyer, C. Web-Based Information on Orthodontic Clear Aligners: A Qualitative and Readability Assessment. Aust. Dent. J. 2020, 65, 225–232.

39. Patel, A.; Cobourne, M.T. The Design and Content of Orthodontic Practise Websites in the UK Is Suboptimal and Does Not Correlate with Search Ranking. Eur. J. Orthod. 2014, 37, 447–452.

40. Seehra, J.; Cockerham, L.; Pandis, N. A Quality Assessment of Orthodontic Patient Information Leaflets. Prog. Orthod. 2016, 17, 15.

41. Zainab, A.; Sakkour, R.; Handu, K.; Mughal, S.; Menon, V.; Shabbir, D.; Mehmood, A. Measuring the Quality of YouTube Videos on Anxiety: A Study Using the Global Quality Scale and Discern Tool. Int. J. Community Med. Public Health 2023, 10, 4492–4496.

42. Livas, C.; Delli, K.; Ren, Y. Quality Evaluation of the Available Internet Information Regarding Pain during Orthodontic Treatment. Angle Orthod. 2012, 83, 500–506.

43. Weil, A.G.; Bojanowski, M.W.; Jamart, J.; Gustin, T.; Lévêque, M. Evaluation of the Quality of Information on the Internet Available to Patients Undergoing Cervical Spine Surgery. World Neurosurg. 2014, 82, e31–e39.

44. Dourado, G.B.; Volpato, G.H.; de Almeida-Pedrin, R.R.; Pedron Oltramari, P.V.; Freire Fernandes, T.M.; de Castro Ferreira Conti, A.C. Likert Scale vs Visual Analog Scale for Assessing Facial Pleasantness. Am. J. Orthod. Dentofac. Orthop. 2021, 160, 844–852.

45. Kincaid, J.; Fishburne, R.; Rogers, R.; Chissom, B. Derivation of New Readability Formulas (Automated Readability Index, Fog Count and Flesch Reading Ease Formula) for Navy Enlisted Personnel. Available online: https://stars.library.ucf.edu/istlibrary/56 (accessed on 22 November 2024).

46. Phan, A.; Jubril, A.; Menga, E.; Mesfin, A. Readability of the Most Commonly Accessed Online Patient Education Materials Pertaining to Surgical Treatments of the Spine. World Neurosurg. 2021, 152, e583–e588.

47. Khanagar, S.B.; Albalawi, F.; Alshehri, A.; Awawdeh, M.; Iyer, K.; Alsomaie, B.; Aldhebaib, A.; Singh, O.G.; Alfadley, A. Performance of Artificial Intelligence Models Designed for Automated Estimation of Age Using Dento-Maxillofacial Radiographs—A Systematic Review. Diagnostics 2024, 14, 1079.

48. Khanagar, S.B.; Al-Ehaideb, A.; Vishwanathaiah, S.; Maganur, P.C.; Patil, S.; Naik, S.; Baeshen, H.A.; Sarode, S.S. Scope and Performance of Artificial Intelligence Technology in Orthodontic Diagnosis, Treatment Planning, and Clinical Decision-Making—A Systematic Review. J. Dent. Sci. 2021, 16, 482–492.

49. Dave, T.; Athaluri, S.A.; Singh, S. ChatGPT in Medicine: An Overview of Its Applications, Advantages, Limitations, Future Prospects, and Ethical Considerations. Front. Artif. Intell. 2023, 6, 1169595.

50. Lee, P.; Bubeck, S.; Petro, J. Benefits, Limits, and Risks of GPT-4 as an AI Chatbot for Medicine. N. Engl. J. Med. 2023, 388, 1233–1239.

51. Gilson, A.; Safranek, C.W.; Huang, T.; Socrates, V.; Chi, L.; Taylor, R.A.; Chartash, D. How Does ChatGPT Perform on the United States Medical Licensing Examination? The Implications of Large Language Models for Medical Education and Knowledge Assessment. JMIR Med. Educ. 2023, 9, e45312.

52. Subramani, M.; Jaleel, I.; Krishna Mohan, S. Evaluating the Performance of ChatGPT in Medical Physiology University Examination of Phase I MBBS. Adv. Physiol. Educ. 2023, 47, 270–271.

53. Mello, M.M.; Guha, N. ChatGPT and Physicians’ Malpractice Risk. JAMA Health Forum 2023, 4, e231938.

54. Reis, F.; Lenz, C.; Gossen, M.; Volk, H.-D.; Drzeniek, N.M. Practical Applications of Large Language Models for Health Care Professionals and Scientists. JMIR Med. Inform. 2024, 12, e58478.

55. Harrer, S. Attention Is Not All You Need: The Complicated Case of Ethically Using Large Language Models in Healthcare and Medicine. eBioMedicine 2023, 90, 104512.

56. Lareyre, F.; Raffort, J. Ethical Concerns Regarding the Use of Large Language Models in Healthcare. EJVES Vasc. Forum 2024, 61, 1.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).