A Lightweight YOLOv11n-Based Framework for Highway Pavement Distress Detection Under Occlusion Conditions

Abstract

Featured Application

1. Introduction

2. Related Work

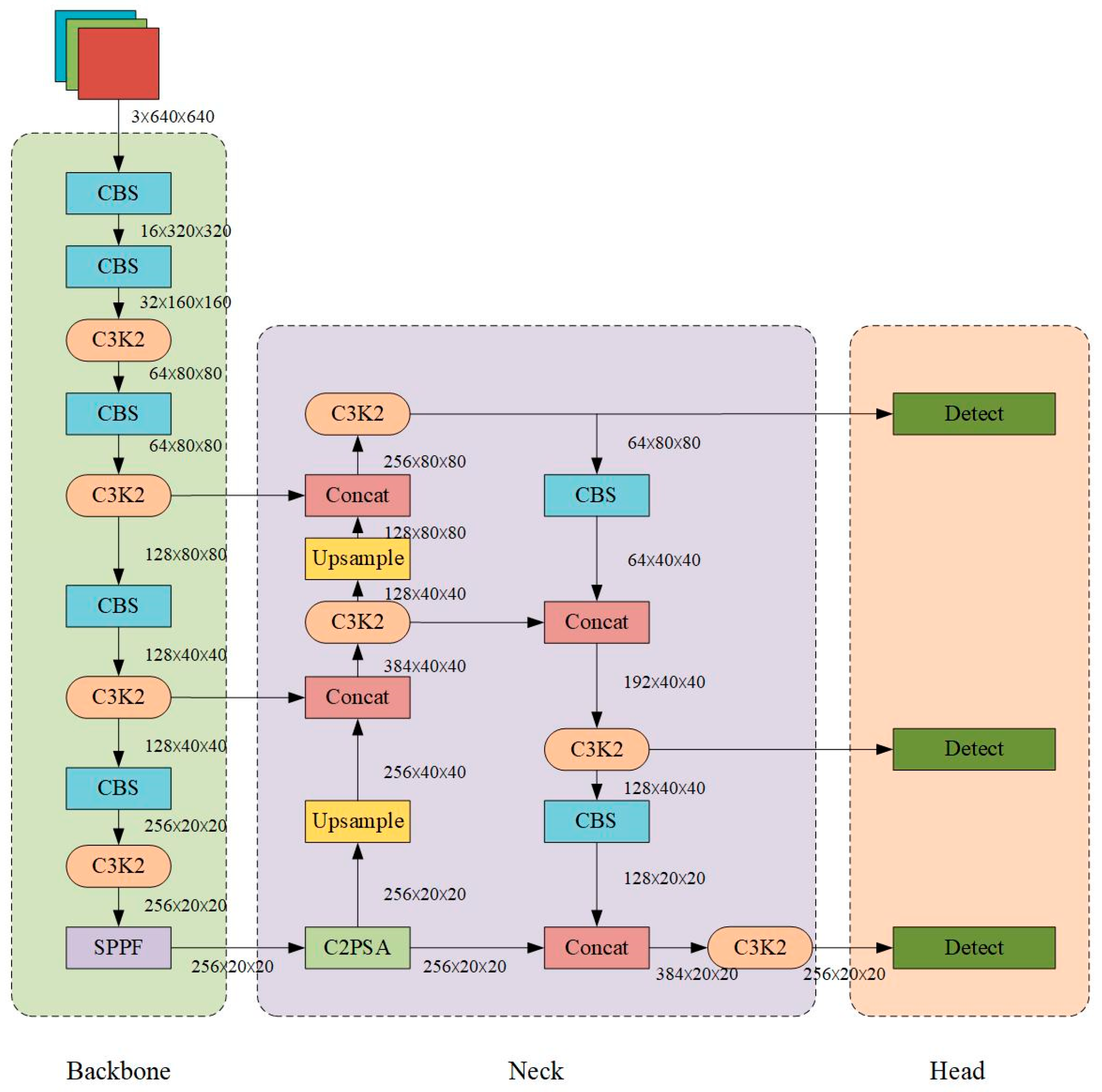

YOLOv11

3. Methodology

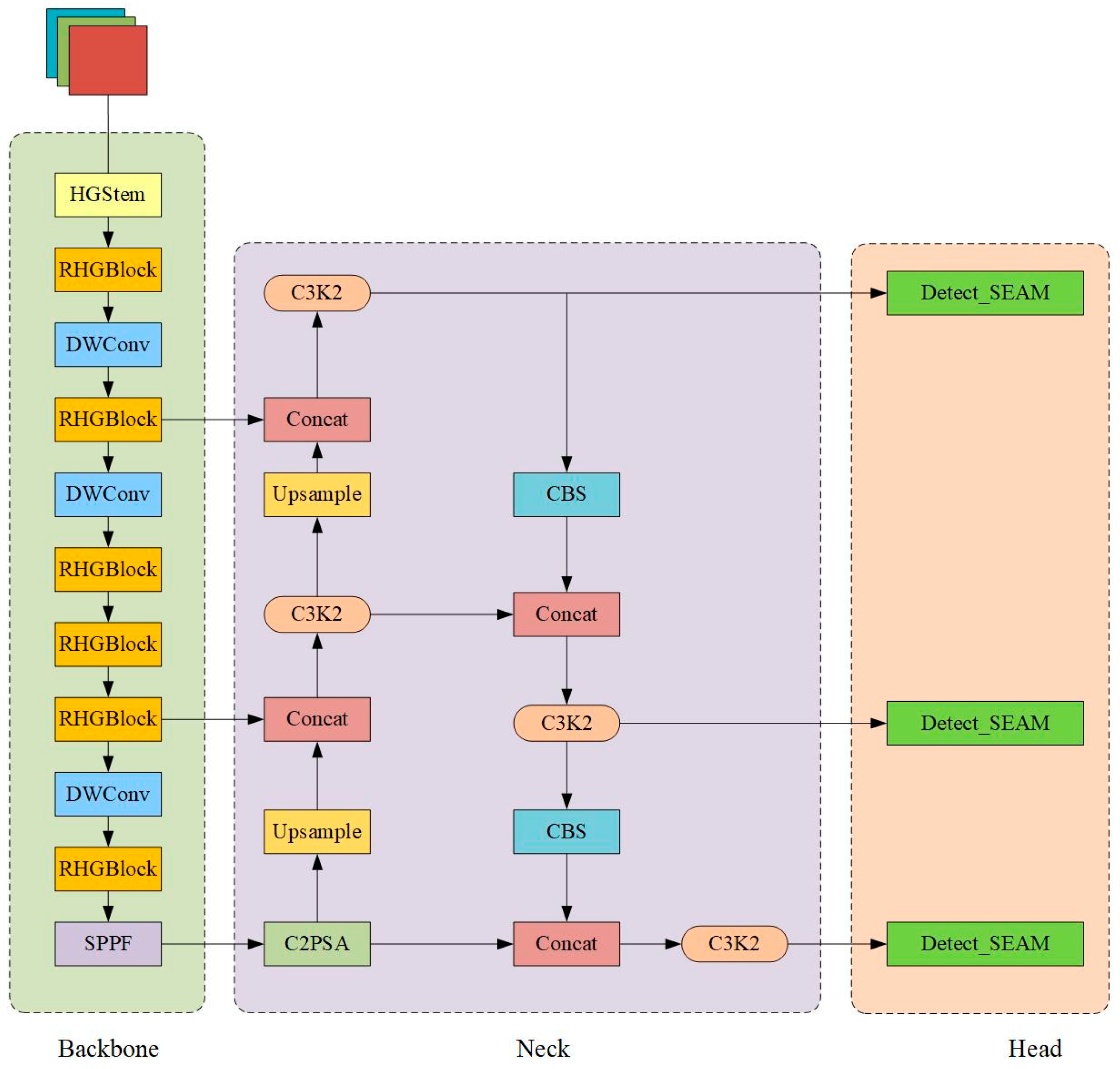

3.1. Improved YOLOv11n

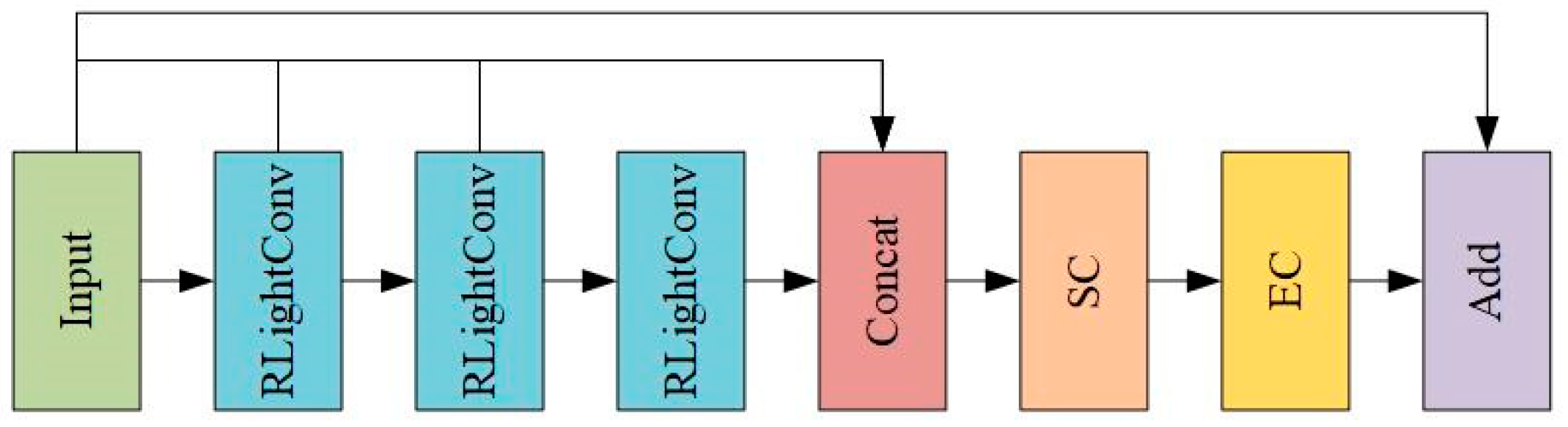

3.2. RHGNetV2

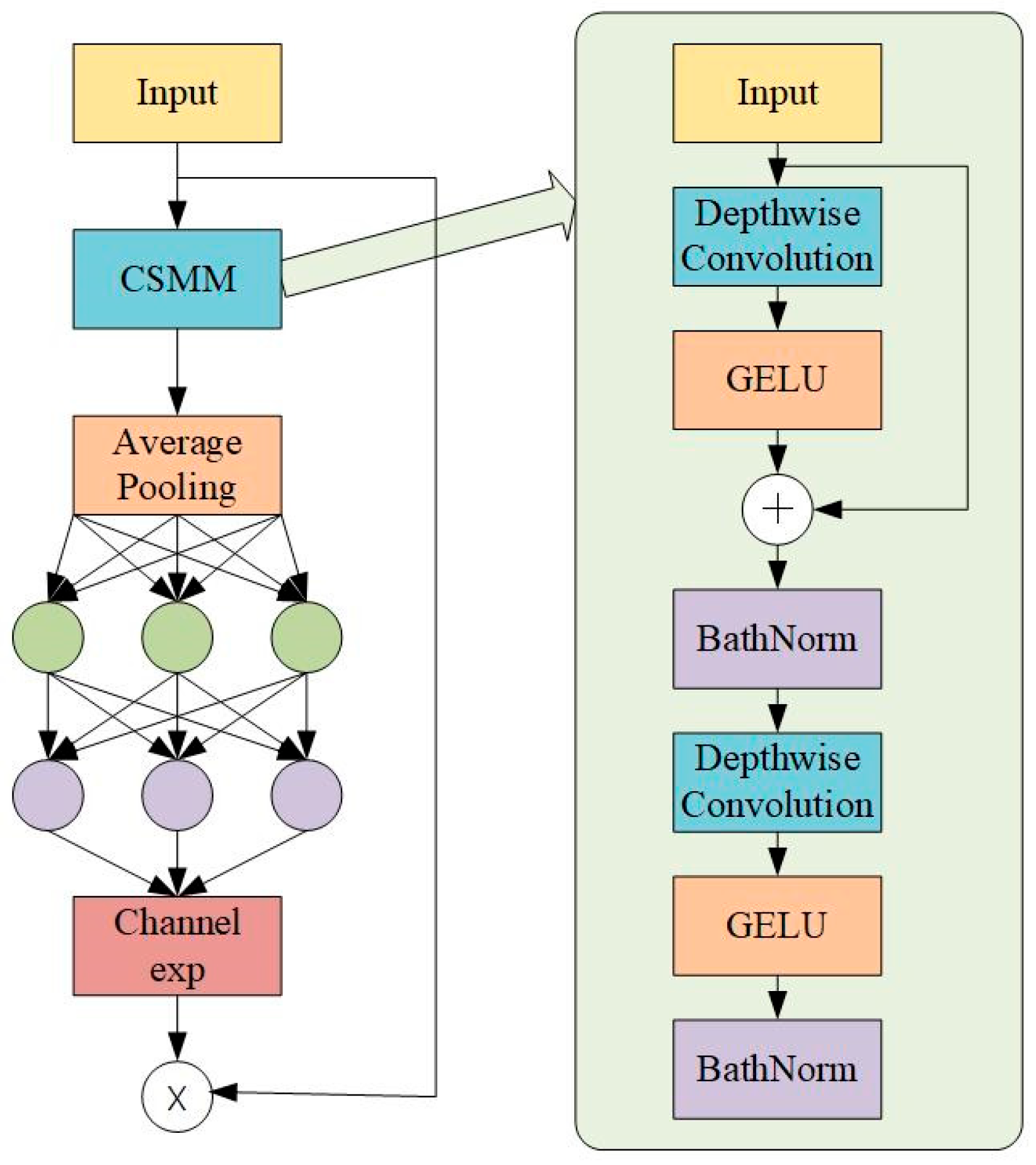

3.3. Detect_SEAM

4. Experimental Design and Results Analysis

4.1. Experimental Environment

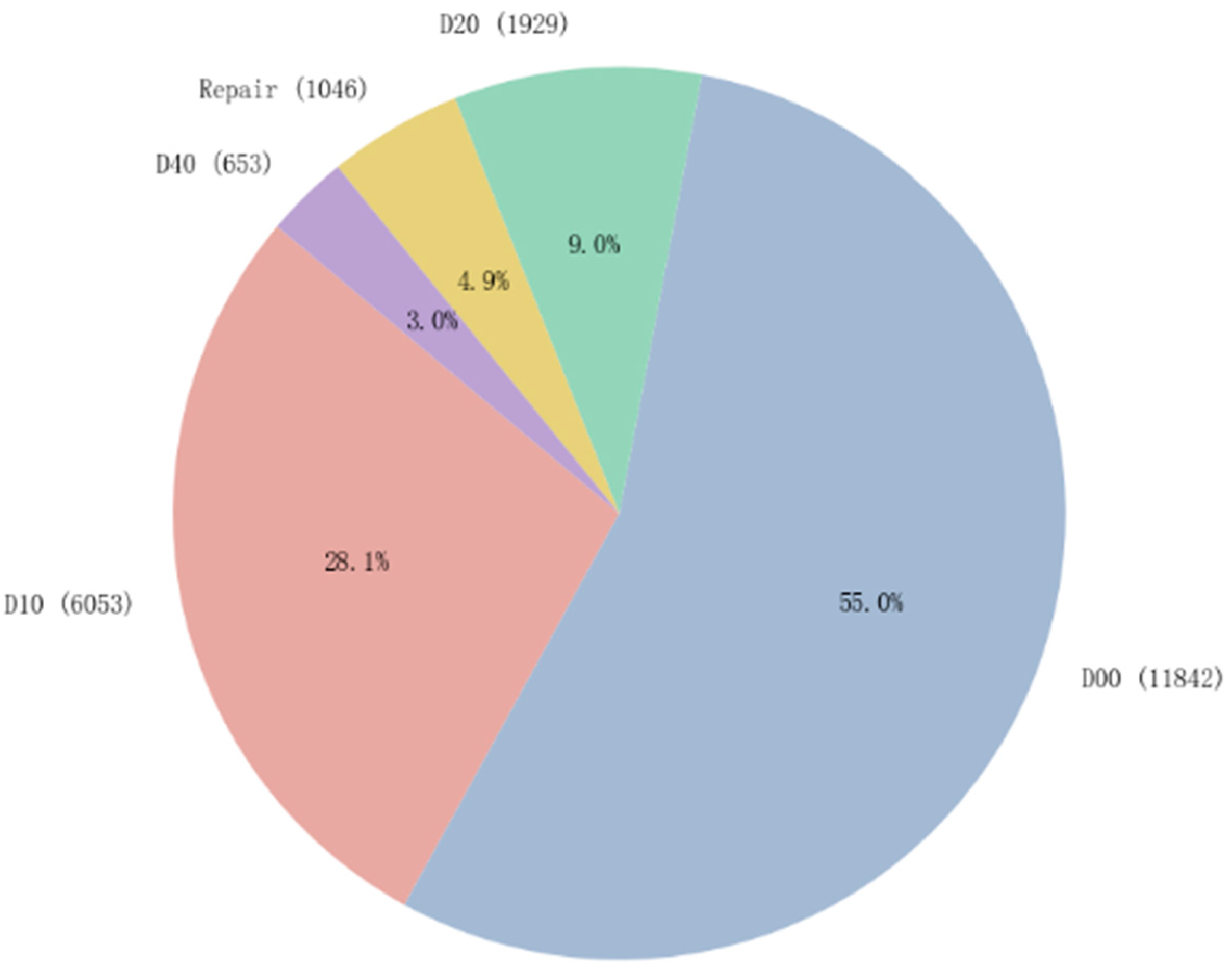

4.2. Dataset

4.3. Evaluation Metrics
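The P, R, F1 and mAP@0.5 columns in the result tables follow the standard detection definitions: P = TP/(TP + FP), R = TP/(TP + FN), F1 = 2PR/(P + R), and mAP@0.5 is the mean over classes of the average precision (AP), where a prediction counts as a true positive when its IoU with a ground-truth box is at least 0.5. The pure-Python sketch below is illustrative only. The AP routine uses VOC-style all-point interpolation, which is one common variant and not necessarily the one used in this study, and the step that matches predictions to ground-truth boxes is omitted. As a consistency check, the harmonic mean of the aggregate YOLOv11n values (P = 74.96%, R = 63.40%) is about 68.70%, whereas the tables report 67.84%. This suggests, though the tables do not say so, that the reported F1 is averaged per class or taken at a different operating point.

```python
"""Standard detection metrics behind the P, R, F1 and mAP@0.5 columns (illustrative sketch)."""
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in corner format


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(ix2 - ix1, 0.0) * max(iy2 - iy1, 0.0)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision(tp: int, fp: int) -> float:
    """Fraction of predictions that are correct."""
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp: int, fn: int) -> float:
    """Fraction of ground-truth objects that are found."""
    return tp / (tp + fn) if tp + fn else 0.0


def f1_score(p: float, r: float) -> float:
    """Harmonic mean of precision and recall (works for fractions or percentages)."""
    return 2 * p * r / (p + r) if p + r else 0.0


def average_precision(recalls: Sequence[float], precisions: Sequence[float]) -> float:
    """All-point interpolated AP (VOC 2010+ style) from a PR curve sorted by increasing recall."""
    mrec = [0.0, *recalls, 1.0]
    mpre = [0.0, *precisions, 0.0]
    # Precision envelope: make precision non-increasing when read from right to left.
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum rectangle areas wherever recall changes.
    return sum(
        (mrec[i + 1] - mrec[i]) * mpre[i + 1]
        for i in range(len(mrec) - 1)
        if mrec[i + 1] != mrec[i]
    )


def mean_ap(per_class_ap: Sequence[float]) -> float:
    """Mean of per-class APs; for mAP@0.5 each AP comes from matches at IoU >= 0.5."""
    return sum(per_class_ap) / len(per_class_ap) if per_class_ap else 0.0


if __name__ == "__main__":
    print(f"F1 from aggregate YOLOv11n P and R: {f1_score(74.96, 63.40):.2f}%")
    print(f"Toy AP: {average_precision([0.5, 1.0], [1.0, 0.5]):.2f}")
```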

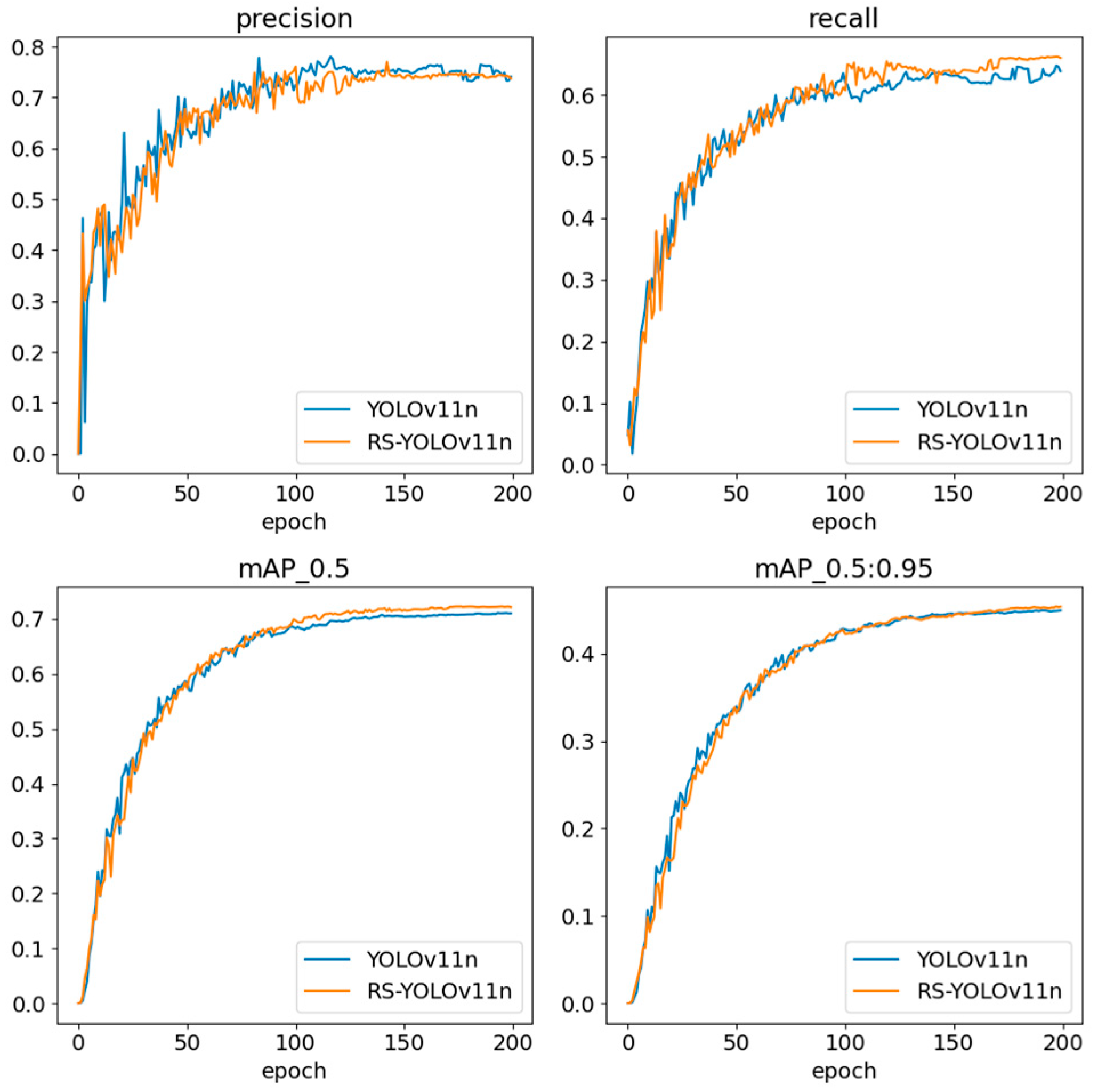

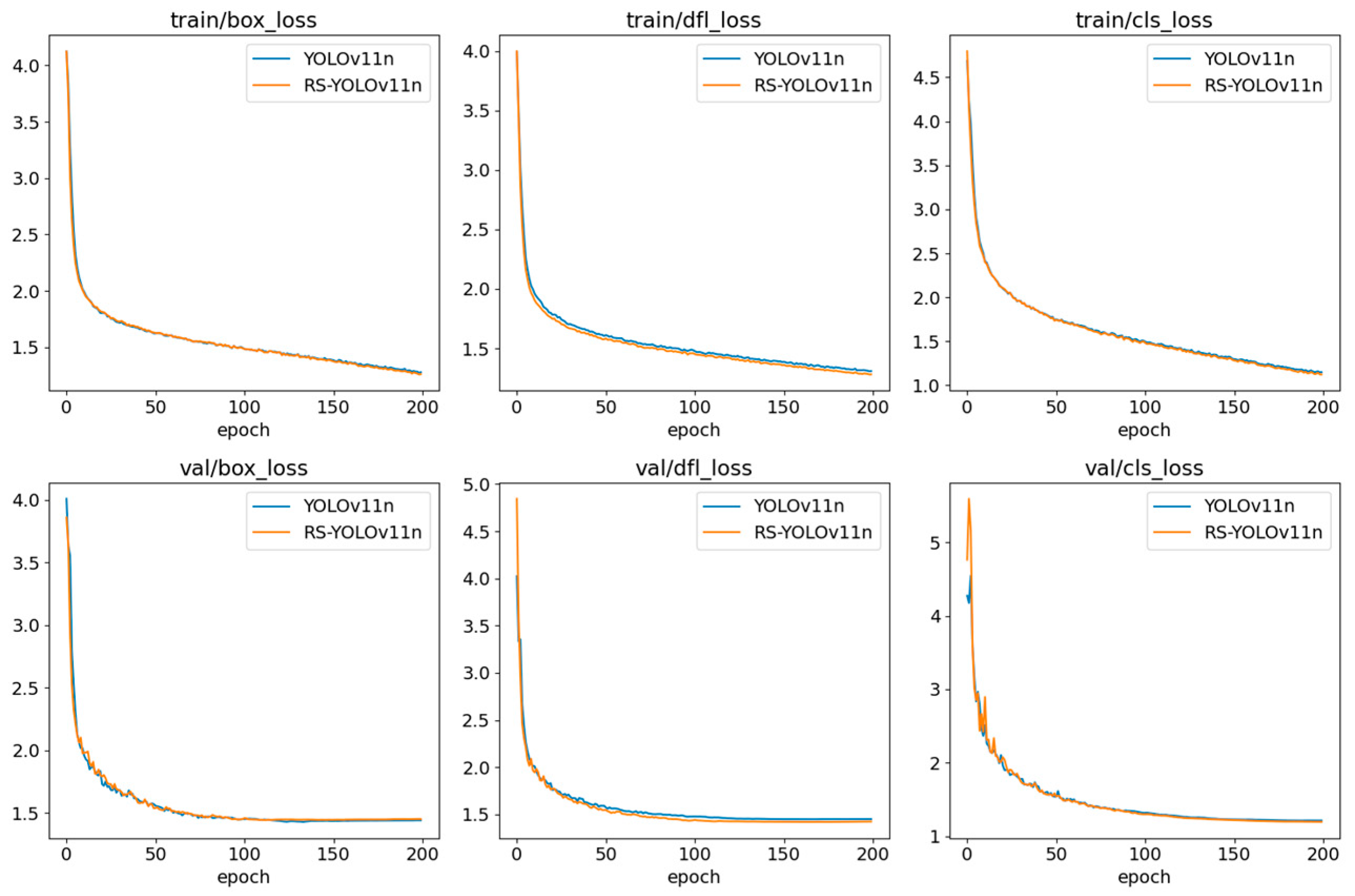

4.4. Ablation Study

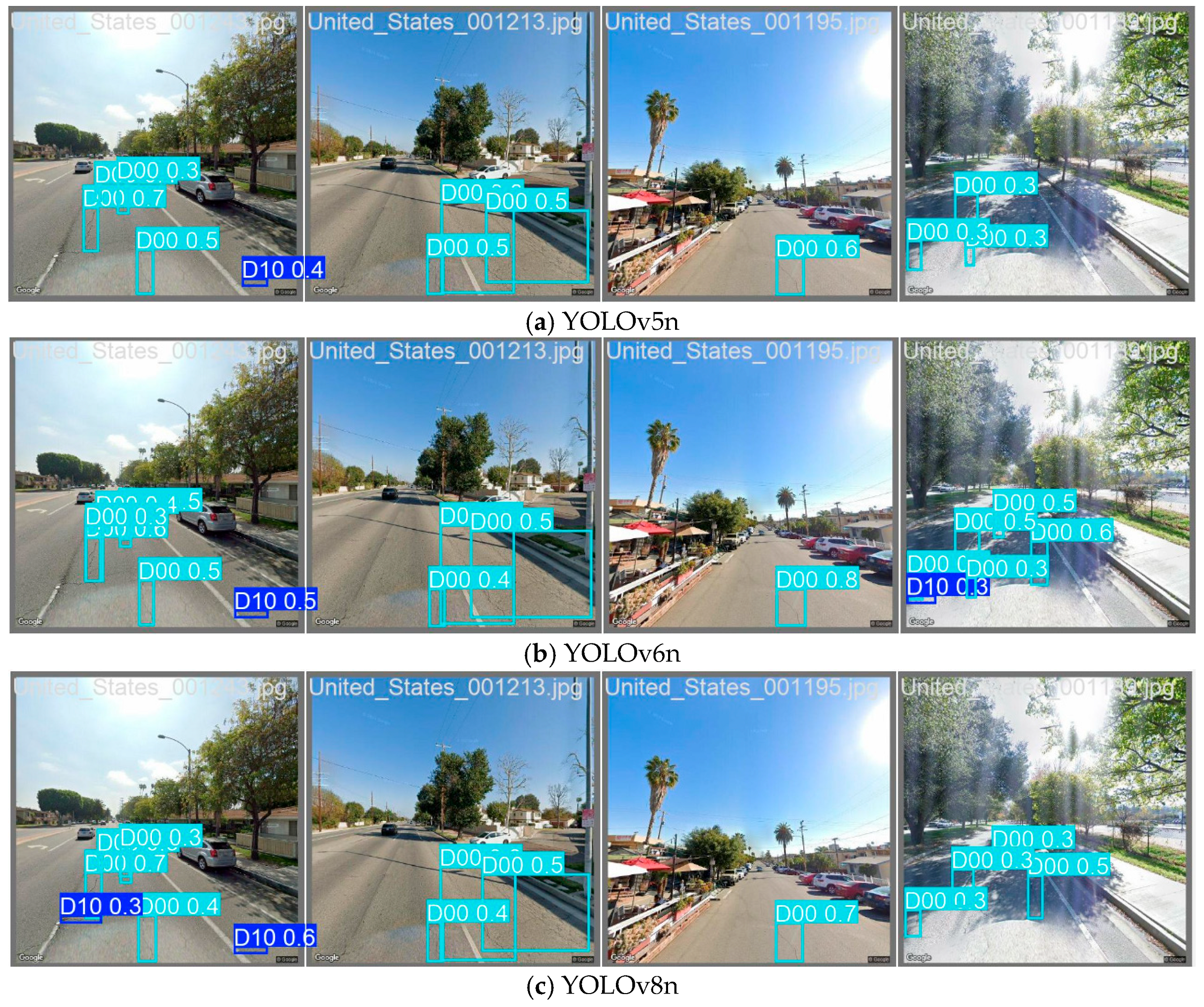

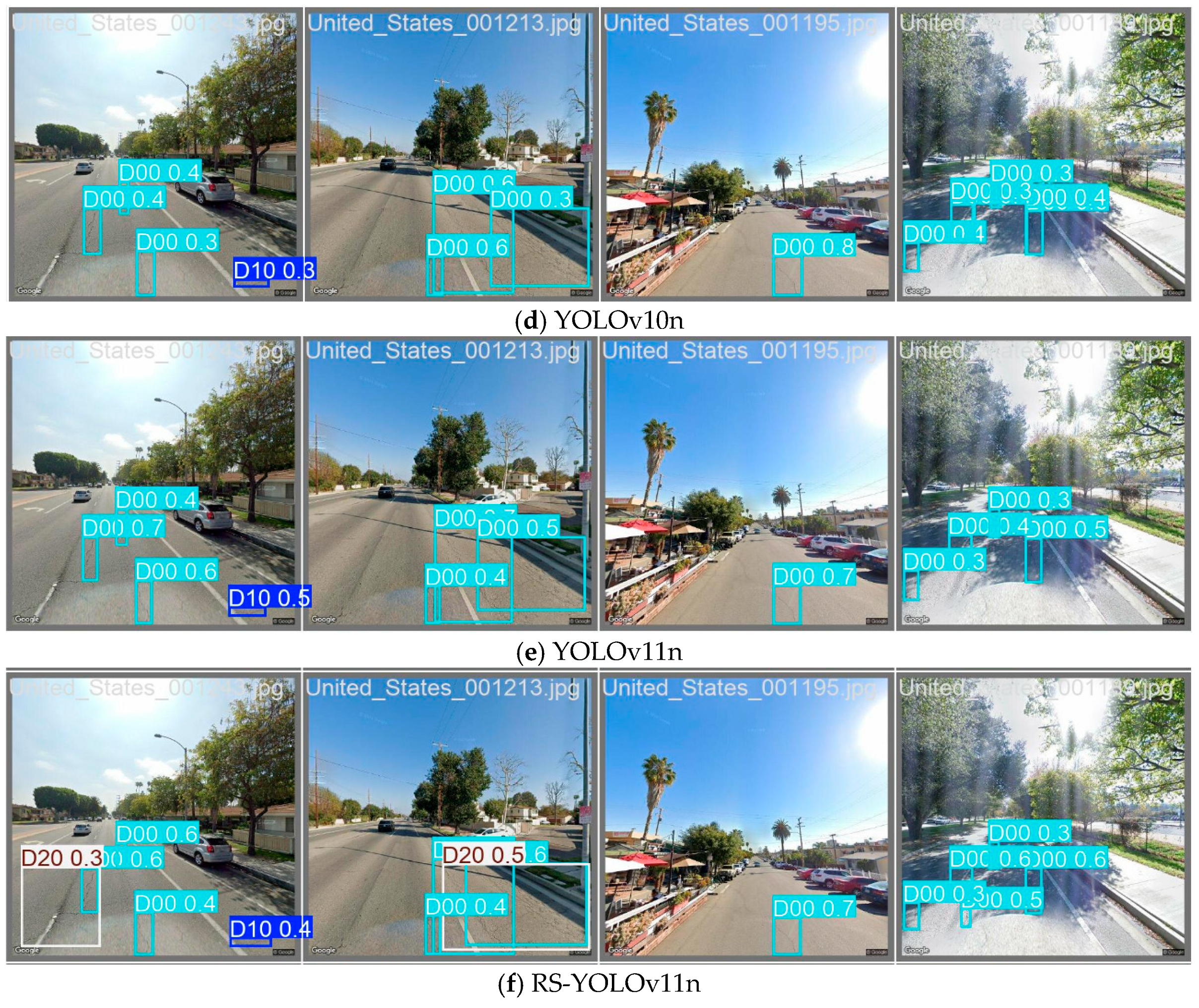

4.5. Comparative Experiments

5. Conclusions

6. Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Experimental environment.

| Component | Configuration |
|---|---|
| Operating system | Windows 11 |
| CPU | Intel Core i7-14650HX (2.20 GHz) |
| GPU | NVIDIA GeForce RTX 4050 |
| PyTorch version | PyTorch 2.2.2 |
| Python version | Python 3.10.14 |
| CUDA version | CUDA 12.1 |

Training hyperparameters.

| Parameter | Value |
|---|---|
| imgsz | 640 |
| epochs | 200 |
| batch | 32 |
| workers | 4 |
| optimizer | SGD |
| iou | 0.7 |
| lr0 | 0.01 |
| lrf | 0.01 |
| momentum | 0.937 |
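The parameter names in the hyperparameter table (imgsz, lr0, lrf, workers, iou) match the training arguments of the Ultralytics YOLO framework, which suggests, but does not confirm, that training used Ultralytics. Under that assumption, lr0 is the initial learning rate, lrf is the final learning rate expressed as a fraction of lr0, and iou is the IoU threshold for non-maximum suppression during validation. The sketch below records the table as a dictionary and reproduces the shape of Ultralytics' default linear learning-rate decay (warmup is ignored), showing that the learning rate falls from 0.01 to 0.01 × 0.01 = 1e-4 over the 200 epochs.

```python
"""Training hyperparameters from the table and the implied linear LR decay (sketch)."""

# Key names match Ultralytics training arguments (assumption: Ultralytics was used).
HYP = {
    "imgsz": 640,
    "epochs": 200,
    "batch": 32,
    "workers": 4,
    "optimizer": "SGD",
    "iou": 0.7,
    "lr0": 0.01,
    "lrf": 0.01,
    "momentum": 0.937,
}


def linear_lr(epoch: int, lr0: float, lrf: float, epochs: int) -> float:
    """Learning rate at `epoch` under linear decay from lr0 to lr0 * lrf.

    Mirrors the shape of Ultralytics' default (non-cosine) schedule; warmup is ignored.
    """
    factor = max(1 - epoch / epochs, 0) * (1.0 - lrf) + lrf
    return lr0 * factor


if __name__ == "__main__":
    for ep in (0, 50, 100, 150, 200):
        lr = linear_lr(ep, HYP["lr0"], HYP["lrf"], HYP["epochs"])
        print(f"epoch {ep:3d}: lr = {lr:.6f}")
```

If Ultralytics is installed, the same dictionary could be passed as keyword arguments to a model's `train()` method together with a dataset YAML, which is not specified here.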

Ablation study results.

| Model | P/% | R/% | F1/% | mAP@0.5/% | Parameters/(×10⁶) | Size/MB | FPS/(f/s) | GFLOPs |
|---|---|---|---|---|---|---|---|---|
| YOLOv11n | 74.96 | 63.40 | 67.84 | 70.85 | 2.58 | 5.20 | 288.87 | 6.30 |
| YOLOv11n + RHGNetV2 | 74.43 | 65.41 | 68.81 | 72.16 | 2.13 | 4.50 | 240.58 | 5.70 |
| YOLOv11n + SEAM | 74.38 | 63.19 | 67.55 | 70.88 | 2.49 | 5.10 | 267.02 | 5.80 |
| RS-YOLOv11n | 75.56 | 64.43 | 68.45 | 71.49 | 2.04 | 4.30 | 228.07 | 5.20 |
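To make the ablation trade-offs explicit, the sketch below copies the relevant columns of the ablation table and expresses each variant relative to the YOLOv11n baseline: mAP@0.5 as an absolute change in percentage points, and parameters, weight size, FPS and GFLOPs as percentage changes. It only restates the published numbers; no new measurements are involved.

```python
"""Relative change of each ablation variant versus the YOLOv11n baseline (values from the table)."""

# Columns copied from the ablation table: mAP@0.5 (%), parameters (millions),
# weight size (MB), FPS (frames/s) and GFLOPs.
ABLATION = {
    "YOLOv11n": {"map50": 70.85, "params_m": 2.58, "size_mb": 5.20, "fps": 288.87, "gflops": 6.30},
    "YOLOv11n + RHGNetV2": {"map50": 72.16, "params_m": 2.13, "size_mb": 4.50, "fps": 240.58, "gflops": 5.70},
    "YOLOv11n + SEAM": {"map50": 70.88, "params_m": 2.49, "size_mb": 5.10, "fps": 267.02, "gflops": 5.80},
    "RS-YOLOv11n": {"map50": 71.49, "params_m": 2.04, "size_mb": 4.30, "fps": 228.07, "gflops": 5.20},
}


def pct_change(base: float, new: float) -> float:
    """Percentage change from base to new (negative means a reduction)."""
    return (new - base) / base * 100.0


def ablation_deltas(rows: dict, baseline: str = "YOLOv11n") -> dict:
    """Express every non-baseline row relative to the baseline row."""
    base = rows[baseline]
    out = {}
    for name, row in rows.items():
        if name == baseline:
            continue
        out[name] = {
            # Accuracy: absolute difference in percentage points.
            "map50_pts": round(row["map50"] - base["map50"], 2),
            # Efficiency: relative change in percent.
            "params_pct": round(pct_change(base["params_m"], row["params_m"]), 2),
            "size_pct": round(pct_change(base["size_mb"], row["size_mb"]), 2),
            "fps_pct": round(pct_change(base["fps"], row["fps"]), 2),
            "gflops_pct": round(pct_change(base["gflops"], row["gflops"]), 2),
        }
    return out


if __name__ == "__main__":
    for name, d in ablation_deltas(ABLATION).items():
        print(name, d)
```

For RS-YOLOv11n this gives +0.64 mAP@0.5 points, about 20.9% fewer parameters, 17.3% smaller weights and 17.5% fewer GFLOPs, alongside roughly 21% lower measured FPS. The RHGNetV2-only variant shows the largest mAP@0.5 gain (+1.31 points).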

Comparison with other lightweight YOLO models.

| Model | P/% | R/% | F1/% | mAP@0.5/% | Parameters/(×10⁶) | Size/MB | FPS/(f/s) | GFLOPs |
|---|---|---|---|---|---|---|---|---|
| YOLOv5n | 72.09 | 62.19 | 65.87 | 68.67 | 2.50 | 5.00 | 324.89 | 7.10 |
| YOLOv6n | 67.20 | 62.90 | 64.09 | 67.37 | 4.23 | 8.30 | 343.37 | 11.80 |
| YOLOv8n | 76.06 | 61.24 | 67.15 | 71.09 | 3.01 | 6.00 | 307.99 | 8.10 |
| YOLOv10n | 68.59 | 65.47 | 66.52 | 68.99 | 2.27 | 5.50 | 228.65 | 6.50 |
| YOLOv11n | 74.96 | 63.40 | 67.84 | 70.85 | 2.58 | 5.20 | 288.87 | 6.30 |
| RS-YOLOv11n | 75.56 | 64.43 | 68.45 | 71.49 | 2.04 | 4.30 | 228.07 | 5.20 |
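The comparison table is easiest to read as an accuracy versus efficiency trade-off. The sketch below copies the mAP@0.5, parameter, GFLOPs and FPS columns and computes a Pareto front for each efficiency measure: a model stays on the front unless another model is at least as accurate and at least as cheap (or fast), and strictly better on one of the two. The helper names are illustrative, not part of the original study.

```python
"""Pareto front of accuracy (mAP@0.5) versus an efficiency measure, using the comparison table."""

# Columns copied from the comparison table.
MODELS = {
    "YOLOv5n": {"map50": 68.67, "params_m": 2.50, "gflops": 7.10, "fps": 324.89},
    "YOLOv6n": {"map50": 67.37, "params_m": 4.23, "gflops": 11.80, "fps": 343.37},
    "YOLOv8n": {"map50": 71.09, "params_m": 3.01, "gflops": 8.10, "fps": 307.99},
    "YOLOv10n": {"map50": 68.99, "params_m": 2.27, "gflops": 6.50, "fps": 228.65},
    "YOLOv11n": {"map50": 70.85, "params_m": 2.58, "gflops": 6.30, "fps": 288.87},
    "RS-YOLOv11n": {"map50": 71.49, "params_m": 2.04, "gflops": 5.20, "fps": 228.07},
}


def pareto_front(points: dict) -> list:
    """points maps name -> (accuracy, cost); higher accuracy and lower cost are better.

    A point is kept unless another point is at least as good on both axes
    and strictly better on at least one.
    """
    front = []
    for name, (acc, cost) in points.items():
        dominated = any(
            a >= acc and c <= cost and (a > acc or c < cost)
            for other, (a, c) in points.items()
            if other != name
        )
        if not dominated:
            front.append(name)
    return front


def front_for(metric: str, minimize: bool = True) -> list:
    """Front of mAP@0.5 against one efficiency column; FPS is maximized by negating it."""
    sign = 1.0 if minimize else -1.0
    return pareto_front({n: (m["map50"], sign * m[metric]) for n, m in MODELS.items()})


if __name__ == "__main__":
    print("params:", front_for("params_m"))
    print("GFLOPs:", front_for("gflops"))
    print("FPS:", front_for("fps", minimize=False))
```

With these numbers, RS-YOLOv11n is the only Pareto-optimal model for mAP@0.5 versus parameters and versus GFLOPs. For mAP@0.5 versus FPS, the front is YOLOv5n, YOLOv6n, YOLOv8n and RS-YOLOv11n, because the three older models run faster on the test hardware.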

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

How to Cite

MDPI and ACS Style: Li, W.; Luo, X.; Yang, C.; Fang, M.; Liu, W. A Lightweight YOLOv11n-Based Framework for Highway Pavement Distress Detection Under Occlusion Conditions. Appl. Sci. 2025, 15, 9664. https://doi.org/10.3390/app15179664

AMA Style: Li W, Luo X, Yang C, Fang M, Liu W. A Lightweight YOLOv11n-Based Framework for Highway Pavement Distress Detection Under Occlusion Conditions. Applied Sciences. 2025; 15(17):9664. https://doi.org/10.3390/app15179664

Chicago/Turabian Style: Li, Wei, Xiao Luo, Changhao Yang, Miao Fang, and Weiyu Liu. 2025. "A Lightweight YOLOv11n-Based Framework for Highway Pavement Distress Detection Under Occlusion Conditions" Applied Sciences 15, no. 17: 9664. https://doi.org/10.3390/app15179664

APA Style: Li, W., Luo, X., Yang, C., Fang, M., & Liu, W. (2025). A Lightweight YOLOv11n-Based Framework for Highway Pavement Distress Detection Under Occlusion Conditions. Applied Sciences, 15(17), 9664. https://doi.org/10.3390/app15179664