Abstract

Sound source localization (SSL) adds a spatial dimension to auditory perception, allowing a system to pinpoint the origin of speech, machinery noise, warning tones, or other acoustic events. These capabilities facilitate robot navigation, human–machine dialogue, and condition monitoring. While existing surveys provide valuable historical context, they typically address general audio applications and do not fully account for robotic constraints or the latest advancements in deep learning. This review addresses these gaps by offering a robotics-focused synthesis, emphasizing recent progress in deep learning methodologies. We start by reviewing classical methods such as time difference of arrival (TDOA), beamforming, steered-response power (SRP), and subspace analysis. Subsequently, we delve into modern machine learning (ML) and deep learning (DL) approaches, discussing traditional ML and neural networks (NNs), convolutional neural networks (CNNs), convolutional recurrent neural networks (CRNNs), and emerging attention-based architectures. We then explore data and training strategies, the two cornerstones of DL-based SSL. Studies are further categorized by robot type and application domain to help researchers identify relevant work for their specific contexts. Finally, we highlight the current challenges in SSL in general, regarding environmental robustness, sound source multiplicity, and specific implementation constraints in robotics, as well as data and learning strategies in DL-based SSL. We also sketch promising directions to offer an actionable roadmap toward robust, adaptable, efficient, and explainable DL-based SSL for next-generation robots.

1. Introduction

Sound source localization (SSL) is the task of estimating the location or direction of a sound-emitting source relative to the sensor (microphone array). In robotic systems, SSL serves as a crucial component of robot audition, greatly enhancing a robot’s perceptual capabilities []. An accurate SSL module enables a robot to orient its sensors towards the active speaker, disambiguate simultaneous talkers, or navigate autonomously towards an event that is audible but not visible. SSL serves various functional roles in robotics. For instance, speech localization is critical for human–robot interaction (HRI), enabling a robot to accurately identify the location of a person giving a command, even in noisy environments [,]. While the domestic and service applications of SSL in HRI are well-documented, its role in industrial settings is crucial yet often understated, particularly for ensuring Occupational Health and Safety (OHS). Accurate SSL allows a robot to precisely identify and respond to human voice commands, including critical safety alerts, and to detect the presence of humans to avoid collisions on a noisy factory floor. Localizing machinery noise is also vital for fault detection and condition monitoring in industrial manufacturing. This allows a robot to detect the acoustic signature of a failing component and inspect the production line before it leads to a catastrophic and potentially dangerous system failure [,,]. Furthermore, the detection of specific warning tones or the sudden appearance of new sound sources can be integrated into simultaneous localization and mapping (SLAM). This provides audio-goal seeking capabilities and can furnish acoustic landmarks or constraints when vision is occluded, enriching the robot’s understanding of its environment. 
SSL is also a core component in other robotic applications, including autonomous vehicles [], aerial robots [,], search-and-rescue systems [,,], and security robots [,], where localizing auditory events is essential for effective decision making.

Localization in robotics can alternatively be performed with vision, light detection and ranging (LiDAR), Wi-Fi, Bluetooth, the Global Positioning System (GPS), infrared (IR), and radio frequency identification (RFID). However, each of these techniques has its pros and cons []. For example, cameras are susceptible to occlusion, darkness, glare, or privacy constraints [,]; LiDAR and Wi-Fi-based techniques are highly accurate in localization but expensive [,]; IR-based and Bluetooth localization are accurate only under specific conditions, since IR signals are easily obscured by physical obstacles and Bluetooth is range-limited; GPS is rendered unreliable by the presence of multiple building walls []; and RFID-based localization suffers from interference when multiple RFID tags and readers are present in the scene []. Acoustic waves, in contrast, propagate around obstacles and in complete darkness, furnishing a complementary channel that often operates beyond the line of sight of on-board cameras. SSL is therefore not a rival but a synergistic partner to these modalities, enriching multi-sensor fusion and improving the robustness of perceptual pipelines.

Despite these advantages, sound-based localization is notoriously sensitive to microphone geometry, environmental reverberation and noise, and the presence of multiple simultaneous sound emitters. Early robotic SSL systems, dating back to the Squirt robot in 1989 [], relied on classical signal-processing algorithms []. These techniques model microphone spacing, speed of sound, and narrow-band free-field assumptions analytically; they achieve good performance in anechoic rooms or with a single talker but degrade quickly under strong reverberation, diffuse noise, and source motion. The last decade has witnessed a paradigm shift toward data-driven learning, mirroring breakthroughs in computer vision and speech recognition.

At the core of this shift lies deep learning, a class of machine learning techniques based on multi-layered neural networks capable of automatically extracting informative features from raw audio or spectrograms. Unlike classical signal-processing methods that rely on predefined rules or handcrafted features, deep learning models learn hierarchical representations directly from the data. This makes them especially effective in real-world SSL scenarios, where factors such as reverberation, background noise, and multiple overlapping sources present significant challenges. Over time, deep learning architectures have evolved to better capture both spatial and temporal information in acoustic signals, resulting in more robust and accurate localization systems.

Common deep learning-based SSL architectures include convolutional neural networks (CNNs) for spatial feature extraction from spectrograms, and convolutional–recurrent hybrids (CRNNs) that combine CNNs with recurrent layers to capture temporal dynamics. Further improvements such as the residual and densely connected networks facilitate the training of deeper models by improving gradient flow and encouraging feature reuse. More recently, attention-based transformers have demonstrated a remarkable ability to learn spatial features directly from raw waveforms or spectrograms []. These models are typically trained on large collections of synthetic or recorded room impulse responses, which help them generalize to unseen environments with varying noise and reverberation. The growing availability of low-cost microphone arrays and compact edge computing devices has also enabled the deployment of such models on mobile robotic platforms, opening up new opportunities for real-time, robust sound localization across a range of robotic applications.

A careful look at existing reviews reveals many surveys on sound source localization over the past three decades; to highlight recent work, we summarize those published after 2017 in Table 1. A closer look at these recent reviews indicates that none has explored SSL on robotic platforms with a particular focus on deep learning models.

Table 1.

Comparative summary of sound-source-localization (SSL) review papers.

Of the eight post-2017 SSL reviews in Table 1, only 3/8 (37.5%) have a robotics focus at all, and none of the three focuses primarily on deep learning methods. One review is robotics-domain-specific (condition-monitoring robots) but does not center on DL-SSL. To make this explicit, we add a column “DL-SSL on robots?” in Table 1, which shows the absence of such a synthesis to date. This quantitative snapshot motivates our contribution: an up-to-date synthesis of the literature bridging these two rapidly evolving fields. In this regard, Rascon and Meza’s seminal survey [], which is arguably the closest to ours because of its robotic theme, predates the DL boom, since it covered papers published before 2017. Liaghat et al. [] systematically reviewed SSL methods published between 2011 and 2021 with a broader scope, without focusing on specific applications (e.g., robotics) or on DL models. In contrast, Grumiaux et al. [] focused on new methods (from 2011 to 2021), especially DL methods and their challenges. Their review is very informative for the general domain of SSL and has been well cited, but it does not restrict its focus to robotics. Desai and Mehendale [] surveyed SSL works by the number of microphones, i.e., two microphones mimicking the human auditory system (binaural) versus multiple microphones, and by method (classical vs. convolutional neural networks). They also provided useful general information on SSL, such as the challenges of each SSL system, but did not focus on robotic SSL. Zhang et al. [] had a different focus: they explored nonverbal sound in human–robot interaction by offering new taxonomies of function (perception vs. creation) and form (vocables, mechanical sounds, etc.).
While not strictly about SSL, their review identified how sound contributes to robot perception and communication, and emphasized underexplored aspects like robot-generated sound and shared datasets. Its focus was novel and important for understanding how SSL integrates into broader auditory HRI. Jekaterynczuk and Piotrowski [] offered an extensive comparison of classical and AI-based SSL methods, classifying them by microphone configuration, signal parameters, and neural architectures. They categorized SSL applications into civil and military domains and did not focus on robotics specifically. Lv et al. [] recently published a focused review on SSL in condition-monitoring robots (CMRs). They reviewed diverse SSL techniques, including traditional and machine learning models. Their review was specifically narrowed to CMRs, and since SSL has not been extensively explored there, they proposed a framework for future studies in this field. They also encouraged researchers who use mobile robots for condition monitoring tasks to add SSL to their existing monitoring modalities, such as visual and infrared sensing. The most recent review on SSL was carried out by Khan et al. [], who explored traditional SSL as well as machine learning models. They also categorized works by application, such as industrial domains, medical science, and speech enhancement.

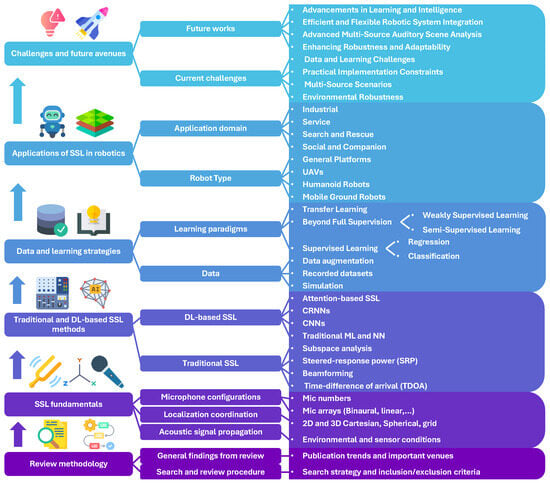

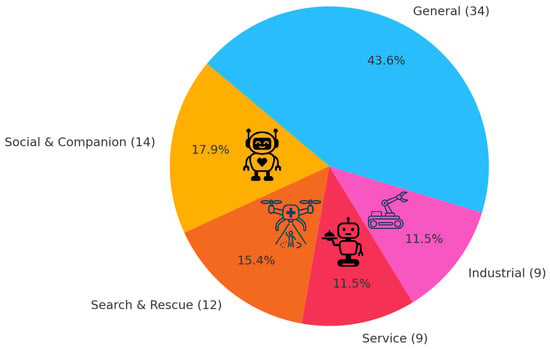

This review fills the gap left by other surveys by covering peer-reviewed literature from 2013 to 2025 in which different methods (especially machine learning and deep learning) in SSL are applied to, or evaluated on, robotic platforms. It also explains the fundamental facets of SSL, traditional SSL methods, and DL methods. We also explore data, a pillar of deep learning models, together with different training strategies; we then map SSL functions to concrete robotic tasks, including service, social, search-and-rescue, and industrial applications, and outline open challenges and future avenues. To clarify the structure of our review, Figure 1 depicts its different aspects.

Figure 1.

Overall review structure.

The remainder of this review is organized as follows. Section 2 details our literature search and review methodology. Section 3 revisits the acoustic foundations of SSL and the key environmental assumptions. Section 4 briefly outlines SSL methods, from traditional approaches to contemporary deep learning architectures. Section 5 discusses the dataset and learning strategies underpinning DL-based SSL. Section 6 surveys how these techniques are deployed across the different classes of robots and applications, and Section 7 identifies research challenges and future avenues.

2. Review Methodology

Our goal is to paint a clear picture of how different methods, especially deep learning approaches, in sound source localization (SSL) are currently being used, tested, and deployed on robotic platforms. To achieve this, we carried out a targeted, multi-stage literature search prioritizing breadth of coverage over exhaustive enumeration.

2.1. Literature Search Strategy

Our search commenced by querying major engineering and robotics databases, including IEEE Xplore, ACM Digital Library, ScienceDirect, and SpringerLink. This was complemented by the extensive use of Google Scholar to capture publications from emerging workshops and arXiv preprints that may have subsequently undergone peer review. We also manually inspected conference proceedings from key robotics venues such as ICRA, IROS, RSS, and IEEE RO-MAN, alongside principal audio forums like ICASSP, WASPAA, Interspeech, DCASE, and EUSIPCO.

The core of our search strategy involved combining three conceptual blocks using Boolean operators. The primary block focused on sound localization terms, connected via “AND” to a block of robotics-related keywords. For instance, a common search string was:

("sound source locali*" OR "acoustic locali*" OR "sound source detection" OR "DOA estimation") AND (robot* OR "mobile robot*" OR "service robot*" OR "industrial robot*" OR cobot* OR humanoid* OR "legged robot*" OR drone* OR UAV* OR quadrotor* OR multirotor*)

Variations such as “speaker localisation”, “binaural CNN”, or “SELD robot” were iteratively added as citation chaining uncovered new terminology and relevant keywords.

2.2. Inclusion and Exclusion Criteria

To ensure the relevance and quality of the reviewed literature, a strict set of inclusion and exclusion criteria was applied during the screening process. Papers were primarily included if they were

- Peer-reviewed publications, encompassing journal articles, full conference papers, or workshop papers with archival proceedings.

- Published within the window of 1 January 2013 to 1 May 2025, capturing the significant surge of deep learning applications in robotics.

- Relevant to a robotic context, meaning the work either (i) evaluated SSL on a physical or simulated robot, or (ii) explicitly targeted a specific robotic use-case (e.g., service, industrial, aerial, field, or social human–robot interaction). Some studies that have not directly implemented SSL on robotic platforms, such as simulations and conceptual frameworks, were also included if their findings were directly and explicitly transferable to the robotic context.

- Written in English.

Conversely, studies were excluded if they were patents, magazine tutorials, or non-archival extended abstracts. Articles limited to headphone spatial audio, hearing aids, pure speech recognition, or architectural acoustics that lacked direct robotic relevance were also discarded.

The screening procedure involved an initial scan of titles and abstracts to filter out papers clearly outside the defined scope. For the remaining records, a thorough full-text inspection was conducted. During this phase, particular attention was paid to the microphone configuration, the specific learning architecture employed, the evaluation protocol, and the presence of direct robotic experimentation or use-case targeting. Citation snowballing, both forward and backward, was applied to ensure that influential papers cited by the shortlisted works, or citing them, were not overlooked. This meticulous, iterative process ultimately converged on a corpus of 78 papers, which form the basis of this comprehensive review.

2.3. Publication Trends and Venues

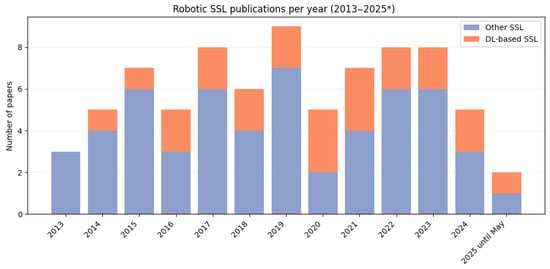

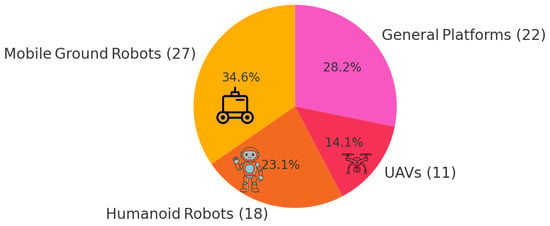

Figure 2 illustrates the evolving landscape of SSL research in robotics through its annual publication trends. As observed, annual publications in SSL for robotics have consistently remained above five papers since 2014, reaching a peak of nine papers in 2019. The seemingly lower count for 2025, however, is attributed to our review’s cut-off date of May 2025. Deep learning approaches, notably absent before 2015, began to gain significant traction that year. Since 2020, they have consistently accounted for approximately one-third of all SSL-for-robotics publications, contributing a steady 2–3 papers annually and underscoring their growing prominence in the field.

Figure 2.

Number of robotics SSL publications with DL (orange) and non-DL approaches (blue).

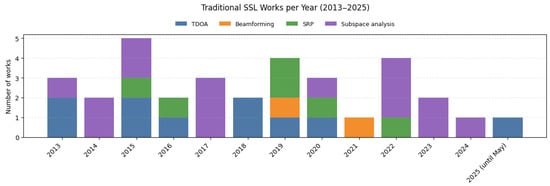

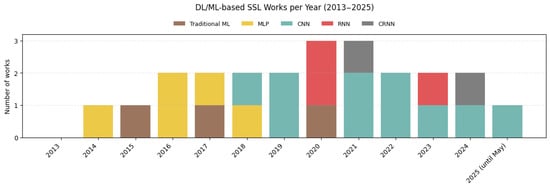

To look more closely at specific approaches, Figure 3 and Figure 4 break down the yearly counts of robotic SSL papers by method for both traditional and DL/ML approaches.

Figure 3.

Number of robotics SSL publications using: time difference of arrival (TDOA) (blue column), beamforming (orange column), steered response power (SRP) (green column), and subspace analysis (purple column).

Figure 4.

Number of robotics SSL papers using: traditional ML (yellow column), multilayer perceptron (MLP) (brown column), convolutional neural networks (CNNs) (cyan column), recurrent neural networks (RNNs) (red column), and convolutional–recurrent networks (CRNNs) (gray column).

Figure 3 shows that, among traditional SSL methods, subspace analysis is the most frequently used approach across the years, with TDOA also popular, especially before 2020. SRP and beamforming, however, appear less often, particularly in recent years. For DL/ML-based works (Figure 4), traditional ML and MLP models are more common before 2018; after that, most studies adopt CNNs, RNNs, or hybrid CRNNs. CNNs in particular account for most DL-based papers after 2019, indicating a shift from dense-layer MLPs toward convolutional architectures. Recurrent layers, used after dense layers (RNNs) or after convolutional layers (CRNNs), have also been frequently reported in robotic SSL tasks in recent years.

To guide future research dissemination in this dynamic field, Table 2 presents the most common publication venues (those with two or more papers) from our corpus of 78 reviewed articles. Among these 78 papers, 36 were published as conference papers, 1 as a Ph.D. thesis [], and 41 as journal papers. The table highlights the significant role of prominent conferences in SSL-for-robotics research. Notably, the two most prestigious robotics conferences, IROS (IEEE/RSJ International Conference on Intelligent Robots and Systems) and ICRA (IEEE International Conference on Robotics and Automation), show substantial contributions, with 11 and 3 papers, respectively. ICASSP (International Conference on Acoustics, Speech, and Signal Processing), a key conference venue in acoustic signal processing, also features prominently with 4 papers in our review. In terms of journals, Table 2 indicates that only the IEEE Sensors Journal has contributed more than two papers to our review corpus; other journals are represented by fewer articles each.

Table 2.

Most common publication venues among the robotic SSL papers in our review. In the third column, “C” and “J” stand for conference and journal, respectively.

3. SSL Fundamentals

Sound source localization (SSL) is the process of determining the spatial location of one or more sound sources based on measurements from acoustic sensors. This section provides an overview of the fundamental principles and terminology that form the foundation of SSL in robotics applications.

Sound propagates through air as pressure waves, traveling at approximately 343 m per second under standard conditions. When these waves encounter microphones or other acoustic sensors, they are converted into electrical signals that can be processed to extract spatial information. The core principle underlying SSL is that sound reaches different spatial positions at different times and with different intensities, creating patterns that can be analyzed to determine the source’s location. In the context of robotics, SSL typically involves estimating direction of arrival (DOA) and distance or complete position. DOA is defined as an angle or vector pointing toward the sound source, often expressed in terms of azimuth (horizontal angle) and elevation (vertical angle). Distance is the range between the sound source and the receiver, and the position is the complete three-dimensional coordinates of the sound source in space. According to the objectives of each study, SSL tasks can include estimating DOA, distance, or position of the sound source.
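To make the relationship between DOA, distance, and position concrete, the following minimal Python sketch converts between the three quantities. The axis convention (azimuth measured counter-clockwise from the +x axis in the horizontal plane, elevation measured upward from that plane) and the function names are assumptions chosen for illustration; individual studies may use different conventions.

```python
import math


def doa_to_unit_vector(azimuth_deg, elevation_deg):
    """Convert a DOA given as (azimuth, elevation) in degrees to a 3D unit vector.

    Assumed convention: azimuth is counter-clockwise from +x in the
    horizontal plane; elevation is measured upward from that plane.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    return (math.cos(el) * math.cos(az),
            math.cos(el) * math.sin(az),
            math.sin(el))


def position_from_doa(azimuth_deg, elevation_deg, distance_m):
    """Combine a DOA with a distance to obtain the full 3D position."""
    ux, uy, uz = doa_to_unit_vector(azimuth_deg, elevation_deg)
    return (distance_m * ux, distance_m * uy, distance_m * uz)


def position_to_doa(x, y, z):
    """Recover (azimuth_deg, elevation_deg, distance_m) from a 3D position."""
    distance = math.sqrt(x * x + y * y + z * z)
    if distance == 0.0:
        raise ValueError("DOA is undefined for a source at the receiver origin")
    azimuth = math.degrees(math.atan2(y, x))
    # Clamp to guard against rounding slightly outside [-1, 1].
    ratio = max(-1.0, min(1.0, z / distance))
    elevation = math.degrees(math.asin(ratio))
    return azimuth, elevation, distance
```

For example, under this convention `position_from_doa(90.0, 0.0, 2.0)` places a source 2 m away along the +y axis, and `position_to_doa` inverts the mapping.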

As noted by Rascon and Meza [], the majority of SSL systems in robotics focus primarily on DOA estimation, as distance estimation presents additional challenges and is often less critical for many applications. One challenge in SSL is that a sound source (or several sources) may be active, i.e., emitting sound, or inactive during the localization task. Treating every source as always active may therefore be unrealistic in a practical setting. To deal with this challenge, as extensively pointed out in the survey by Grumiaux et al. [], source activity detection can be performed either before localization (as a separate task) or simultaneously within the SSL task; for example, a neural network can predict both the location and the activity of a sound source []. It is important to note that SSL is broader than sound event detection (SED): SSL estimates the location of sound sources in addition to detecting their presence. Therefore, in many studies, SSL is referred to as sound event localization and detection (SELD) []. It is also worth mentioning that sound source separation is another task that has to be handled in SSL when more than one sound source is active []. Accordingly, some studies address sound source counting as well as localization (such as []), while many assume prior knowledge of the number of sound sources in the scene (e.g., []).

3.1. Acoustic Signal Propagation

Understanding acoustic signal propagation is essential for developing effective SSL systems. In ideal free-field conditions, sound waves propagate spherically from a point source, with amplitude decreasing in inverse proportion to the distance from the source. However, real-world environments introduce several complexities:

Reverberation: Sound reflections from surfaces create multiple paths between the source and receiver, complicating the localization process.

Diffraction: This phenomenon occurs when sound waves bend or spread around obstacles or through openings (like doorways or windows). It allows sound to be heard even when there is no direct line of sight to the source.

Refraction: This is the bending of sound waves as they pass from one medium into another, or as they travel through a medium where the speed of sound changes gradually. The speed of sound can vary due to changes in temperature, wind, or medium density.

Background noise (external noise) and sensor imperfection (internal noise): Ambient sounds, or noise generated by the robot’s own operation [], can mask or interfere with the target source; this is referred to as external noise. In addition, imperfections in the microphones or the audio acquisition system (e.g., analog-to-digital converters) can cause the recorded signal to deviate from the true sound. This internal noise is inherent to the sensing hardware and its associated electronics.

These phenomena significantly impact the performance of SSL systems and have driven the development of increasingly sophisticated algorithms. To cope with them, some studies adopt simplifying assumptions. For example, some early studies assumed that the environment has no reverberation; this setting is called “anechoic”. Despite not being realistic in most applications, the anechoic setting has been assumed in many SSL works [,,]. To deal with noise, some studies applied denoising techniques (e.g., []), while others examined the effect of the noise level, quantified by the signal-to-noise ratio (SNR), on the localization of sound sources [,,,].
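As an illustration of how SNR is typically quantified when studying noise robustness, the sketch below computes the SNR in decibels from a clean signal and a noise signal, and scales white Gaussian noise to reach a target SNR. The helper names and the choice of white Gaussian noise are assumptions for illustration; real robot ego-noise is usually not white.

```python
import math
import random


def power(samples):
    """Mean power (mean of squared samples) of a signal."""
    return sum(x * x for x in samples) / len(samples)


def snr_db(signal, noise):
    """Signal-to-noise ratio in dB: 10 * log10(P_signal / P_noise)."""
    return 10.0 * math.log10(power(signal) / power(noise))


def add_noise_at_snr(signal, target_snr_db, seed=0):
    """Add white Gaussian noise scaled so that the mixture has the target SNR.

    Returns the noisy signal and the scaled noise that was added.
    """
    rng = random.Random(seed)
    noise = [rng.gauss(0.0, 1.0) for _ in signal]
    # Choose the gain so that P_signal / (gain^2 * P_noise) = 10^(SNR/10).
    gain = math.sqrt(power(signal) / (power(noise) * 10.0 ** (target_snr_db / 10.0)))
    scaled = [gain * n for n in noise]
    noisy = [s + n for s, n in zip(signal, scaled)]
    return noisy, scaled


# Hypothetical test tone: 440 Hz sine sampled at 16 kHz for 0.1 s.
tone = [math.sin(2.0 * math.pi * 440.0 * n / 16000.0) for n in range(1600)]
noisy_tone, added_noise = add_noise_at_snr(tone, target_snr_db=10.0)
```

Sweeping `target_snr_db` over a range of values is one simple way to probe how a localization method degrades as the noise level rises.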

A core challenge in practical SSL is that the estimated DOA, denoted as $\hat{\theta}$, is not a perfect representation of the true DOA, $\theta$. The difference between these two values is the localization error, $\epsilon_{\theta}$, which can be expressed as

$$\epsilon_{\theta} = \hat{\theta} - \theta$$

This error arises from various sources, which can be modeled as additive terms to the ideal acoustic signal. For a simple DOA model based on the time difference of arrival (TDOA), the estimated time difference between two microphones, $\hat{\tau}$, is a function of the true time difference $\tau$ and multiple error sources:

$$\hat{\tau} = \tau + \epsilon_{\mathrm{sync}} + \epsilon_{\mathrm{prop}} + \epsilon_{\mathrm{rev}} + \epsilon_{\mathrm{SNR}}$$

where

- $\epsilon_{\mathrm{sync}}$ is the synchronization error, which arises from imperfect clock synchronization between microphones or channels.

- $\epsilon_{\mathrm{prop}}$ is the propagation speed error, caused by variations in the speed of sound due to changes in temperature, humidity, or wind.

- $\epsilon_{\mathrm{rev}}$ represents the reverberation error, which results from multipath propagation, causing the direct sound to be masked or delayed by reflections.

- $\epsilon_{\mathrm{SNR}}$ is the signal-to-noise error, stemming from ambient noise and sensor imperfections.

In robotic SSL, several physical modeling assumptions are commonly made to simplify the problem, but they are particularly susceptible to failure in real-world, dynamic environments:

- Free-field propagation: The assumption of a free-field environment (anechoic) is rarely valid in indoor robotics. As a robot navigates an office, factory, or home, reverberation artifacts are the most significant source of error. The direct path signal is often overshadowed by reflections, leading to inaccurate TDOA or phase-based estimates. To mitigate this, advanced methods should learn robust features that are invariant to reverberation.

- Constant propagation speed: The speed of sound is assumed to be a constant 343 m/s. However, temperature and wind gradients in a real environment can cause this assumption to fail. While less impactful than reverberation in most indoor settings, spatial variations in temperature or air movement can introduce a non-negligible propagation speed error. For precision applications or outdoor robots, this assumption should be relaxed by using temperature and wind measurements to dynamically correct the speed of sound.

- Point source model: The assumption that the sound originates from a single point is valid for small sources at a distance, but it fails for extended sound sources (e.g., a person speaking, a large machine). The phase and amplitude can vary across the source, which can confuse DOA algorithms that rely on simple time or phase differences.
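The impact of the constant-speed assumption can be illustrated with a short sketch. It assumes a far-field source and a single microphone pair, for which the TDOA is d·sin(θ)/c with θ measured from broadside, and it uses the common linear approximation c ≈ 331.3 + 0.606·T m/s (T in °C) for the speed of sound in dry air. The microphone spacing, temperature, and function names are hypothetical choices for illustration only.

```python
import math


def speed_of_sound(temp_c):
    """Approximate speed of sound in dry air (m/s) at temperature temp_c (deg C)."""
    return 331.3 + 0.606 * temp_c


def tdoa_far_field(theta_deg, mic_spacing_m, c):
    """TDOA (s) of a far-field plane wave for one microphone pair.

    theta_deg is measured from the broadside direction of the pair.
    """
    return mic_spacing_m * math.sin(math.radians(theta_deg)) / c


def doa_from_tdoa(tau_s, mic_spacing_m, c):
    """Invert the far-field model: theta = asin(c * tau / d), in degrees."""
    ratio = max(-1.0, min(1.0, c * tau_s / mic_spacing_m))
    return math.degrees(math.asin(ratio))


def doa_bias_from_speed_mismatch(true_theta_deg, mic_spacing_m, temp_c,
                                 assumed_c=343.0):
    """DOA error (deg) when the TDOA is generated at the true temperature
    but decoded with a fixed assumed speed of sound."""
    tau = tdoa_far_field(true_theta_deg, mic_spacing_m, speed_of_sound(temp_c))
    return doa_from_tdoa(tau, mic_spacing_m, assumed_c) - true_theta_deg


# Hypothetical case: 0.2 m spacing, source at 60 deg, air at 35 deg C.
bias_deg = doa_bias_from_speed_mismatch(60.0, 0.2, 35.0)
```

In this hypothetical warm-air case the decoded angle falls a few degrees short of the true one, and the bias grows toward endfire, where the arcsine mapping is steepest. Replacing `assumed_c` with `speed_of_sound(measured_temp)` removes the bias in this idealized model.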

Understanding these error sources and the conditions under which model assumptions fail is crucial for designing robust SSL systems for robotics. A successful system must either explicitly model these errors or learn to be invariant to their effects.

3.2. Coordinate Systems and Terminology

SSL systems typically employ either Cartesian or spherical coordinates in both 2D and 3D localization. In Cartesian coordinates, the positions of sound sources are expressed with respect to the X, Y, and Z axes in 3D (X and Y in 2D). In spherical coordinates, the location of a sound source is expressed in terms of radius (distance), azimuth, and elevation angles. In 2D localization, many studies focused on azimuth and distance (horizontal localization []) or azimuth and elevation (directional localization). Some works restricted their objective to the azimuth angle alone (1D localization) relative to the microphone array position, and in some cases they discretized the 360-degree azimuth range into a grid, such as 8 sectors [] or a finer grid (e.g., 360 sectors []). This discretization allows SSL to be treated as a classification task in machine learning, where each class corresponds to a specific subregion in which a sound source may lie [].
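The azimuth discretization described above can be sketched as a pair of helper functions that map an angle to a class index and back. The sector layout (equal-width sectors starting at 0 degrees) and the function names are assumptions for illustration; some works instead center the first sector on 0 degrees.

```python
def azimuth_to_class(azimuth_deg, num_classes):
    """Map an azimuth in degrees to a sector index in [0, num_classes)."""
    width = 360.0 / num_classes
    index = int((azimuth_deg % 360.0) // width)
    # Guard against rounding that could push the index to num_classes.
    return index % num_classes


def class_to_azimuth(class_index, num_classes):
    """Return the center azimuth (degrees) of a sector."""
    width = 360.0 / num_classes
    return (class_index + 0.5) * width


def max_quantization_error(num_classes):
    """Worst-case angular error (degrees) when reporting the sector center."""
    return 360.0 / num_classes / 2.0
```

With 8 sectors the worst-case quantization error is 22.5 degrees, whereas 360 sectors reduce it to 0.5 degrees at the price of a much larger output layer and fewer training examples per class.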

In robotics applications, the choice of coordinate system often depends on the specific requirements of the task and the configuration of the robot’s sensors. Also, it is worth mentioning that studies focusing on mobile robots might benefit from using the robot-centric coordinate (centered on the microphones mounted on the robot [,]). On the other hand, a fixed and static coordinate system defined by the environment itself (e.g., a corner of a room, a fixed marker) may be preferred for stationary applications, e.g., industrial robotic arms that have fixed locations in the workplace.
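The relationship between a robot-centric frame and a fixed environment frame can be sketched in 2D as a rotation by the robot's heading followed by a translation by its position. The function names, the planar simplification, and the convention that yaw is measured counter-clockwise from the world +x axis are assumptions for illustration.

```python
import math


def robot_to_world(source_xy_robot, robot_xy_world, robot_yaw_deg):
    """Transform a 2D source position from the robot frame to the world frame.

    source_xy_robot: (x, y) of the source relative to the robot's array.
    robot_xy_world:  (x, y) of the robot in the fixed environment frame.
    robot_yaw_deg:   robot heading, counter-clockwise from world +x.
    """
    yaw = math.radians(robot_yaw_deg)
    xr, yr = source_xy_robot
    xw = robot_xy_world[0] + math.cos(yaw) * xr - math.sin(yaw) * yr
    yw = robot_xy_world[1] + math.sin(yaw) * xr + math.cos(yaw) * yr
    return xw, yw


def azimuth_robot_to_world(azimuth_robot_deg, robot_yaw_deg):
    """Convert a robot-relative azimuth to a world-frame azimuth in [0, 360)."""
    return (azimuth_robot_deg + robot_yaw_deg) % 360.0
```

For a mobile robot, the pose used here would typically come from odometry or SLAM, so any pose error propagates directly into the world-frame source estimate.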

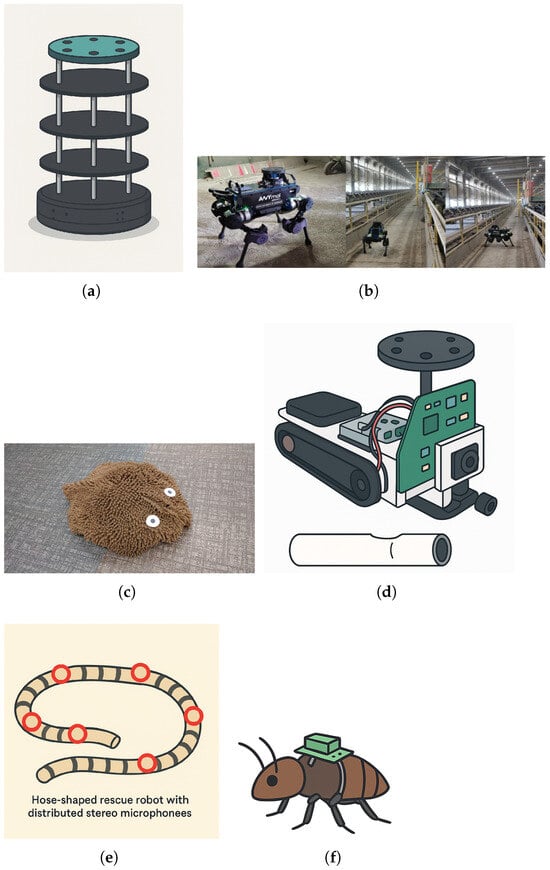

3.3. Microphone Numbers and Array Configurations

The arrangement of microphones plays a crucial role in SSL performance. Before explaining the different configurations, it is important to describe an open challenge in this regard. As a general rule, a larger number of microphones leads to higher localization accuracy []. However, adding microphones increases the computational load and cost, and consequently the latency of localization (which may prevent real-time operation). The variation in microphone number and arrangement also adds design hyperparameters (how many microphones? which arrangement is better?). Because these hyperparameters differ from study to study, there is no reference SSL system design that can serve as a benchmark. On the other hand, given the constraints of each study, e.g., different objectives, different financial and computational budgets, and design constraints, studies cannot be expected to follow the same microphone design. Various microphone configurations have been used in SSL studies in robotics, as shown in Figure 5. They can be categorized as follows:

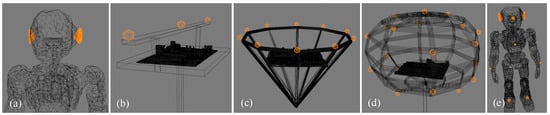

Figure 5.

Different microphone array configurations used in SSL robotics studies: (a) Binaural microphone array; (b) Linear array; (c) Circular array; (d) Spherical array; and (e) Microphones distributed in robot parts.

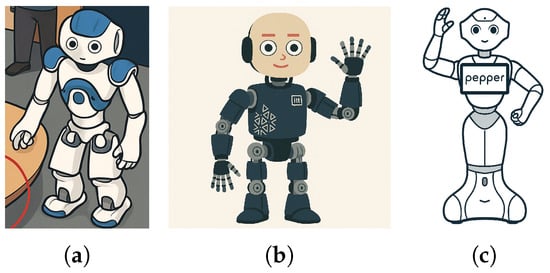

Binaural arrays: These mimic human hearing with two microphones [], often placed on a robot’s head or within a dummy head structure [,,], as represented in Figure 5a. They are particularly common in humanoid robots or simple mobile robots [,], and offer natural spatial cues but may have limited resolution. The robot’s head (or dummy head) can significantly affect sound waves through diffraction: sound waves bend around the head, leading to fluctuations in the time difference of arrival (TDOA), especially for sounds traveling around the front and back. Binaural SSL for robots also faces the front–back ambiguity challenge, which means that the robot may struggle to differentiate between a sound coming from “ahead” or “behind” without additional cues or head movements. Binaural arrays are excellent at localizing sounds in the horizontal plane (azimuth) but offer very limited resolution for elevation (vertical direction); it is difficult for them to tell whether a sound is coming from above or below. Because of these limitations, binaural arrays struggle with multiple simultaneous sound sources unless advanced separation techniques are used.

Linear arrays: Microphones are arranged in a straight line (see Figure 5b), providing good resolution in one dimension but suffering from front–back ambiguity and limited elevation estimation. The microphones are typically equally spaced, but non-uniform spacing can also be used to optimize performance []. In addition to the simplicity of design and implementation, this configuration is easier to calibrate than complex arrangements. A linear array mounted on the front or top of a robot can effectively localize sounds in the horizontal plane ahead of the robot [,]. This is useful for directional voice commands or detecting sounds from the front.

Circular arrays: As shown in Figure 5c, the microphones are arranged in a circle, offering 360-degree coverage in the horizontal plane and eliminating front–back ambiguity. They are often mounted on the “head” or “torso” of social robots to perceive sounds from any direction around them, which is crucial for engaging with multiple people in a room [,,].

Spherical arrays: Microphones distributed over a spherical surface, as can be seen in Figure 5d, providing full three-dimensional coverage and the ability to decompose the sound field into spherical harmonics. The spherical arrays can enable a robot [] or a drone [,,] to precisely localize and track multiple speakers in 3D space, understanding who is speaking from where in complex social settings.

Arbitrary geometries: This refers to microphone configurations in which the individual microphone elements are positioned without adhering to any particular regular geometry. The placement might be dictated by factors external to optimal acoustic design, such as the physical constraints of a platform, aesthetic considerations, the repurposing of existing sensors, or opportunistic placement in an environment. In robotics, microphones could be placed in different parts of the body, as can be seen in Figure 5e, e.g., the head, torso, and limbs of a humanoid robot [,] or distributed inside the room [,]. Irregular microphone placements have also been implemented on non-humanoid platforms, such as a hose-shaped robot [,].

The choice of the number of microphones and the array configuration depends on the aims of each study. In robotics, many researchers want to mimic the human auditory system and therefore choose binaural arrays; indeed, binaural is noted as the most used configuration in SSL in robotics []. Interestingly, some researchers went beyond simply placing two microphones on the robot’s head by designing external pinnae to mimic the human auditory system more closely [,,]. The number of microphones also varies widely across studies [], from SSL studies with a single microphone [,,] to those with very dense microphone arrays (e.g., 64 microphones []).

4. From Traditional SSL to DL Models

As robots are increasingly deployed in real-world settings, ranging from domestic assistants to industrial inspectors, the demands placed on SSL systems have grown considerably. These systems must now contend with complex, noisy, and reverberant environments, while maintaining low latency, minimal power consumption, and high spatial accuracy. Over the past decades, SSL methodologies have evolved in response to these challenges, beginning with analytically grounded, signal-processing techniques that exploit the physical properties of sound propagation and microphone arrays. While traditional methods such as time difference of arrival (TDOA), beamforming, and subspace analysis laid a robust foundation, their performance often degrades under non-ideal conditions and hardware constraints typical of mobile and embedded robotic systems. These limitations, in turn, have catalyzed a shift towards data-driven and learning-based paradigms, promising greater adaptability and robustness in real-world applications.

4.1. Traditional Sound Source Localization Methods

Before neural networks entered the scene, robot audition drew almost exclusively on classical array-signal-processing. Although most of today’s deep models replace, or embed, those analytical blocks, an understanding of their logic remains essential because the same acoustic constraints (microphone geometry, reverberation, noise, and multipath) still apply. Below, we revisit the four pillars that have shaped the field, i.e., time-delay estimation, beamforming, steered-response power (SRP), and subspace analysis. Throughout, we highlight why each method proved attractive for robots and where its weaknesses motivated the subsequent shift to learning-based pipelines.

4.1.1. Time Difference of Arrival (TDOA)

Time difference of arrival (TDOA) is one of the foundational techniques in sound source localization (SSL), widely applied due to its conceptual simplicity and computational efficiency. The method relies on the fundamental observation that a sound wave will arrive at different microphones in an array at slightly different times, depending on the location of the source relative to the array []. By measuring these inter-microphone time delays, one can infer the direction or position of the sound source []. At the heart of TDOA-based SSL is the cross-correlation of audio signals recorded at different microphones. This process identifies the time lag at which the similarity between two signals is maximized, corresponding to the delay caused by the sound wave’s path difference. To enhance the performance of this basic cross-correlation, especially in noisy or reverberant environments, researchers often employ generalized cross-correlation (GCC), which introduces a frequency-dependent weighting to the correlation process []. Among the variants of GCC, the phase transform (PHAT) weighting, commonly known as GCC-PHAT, has proven particularly effective in robotics contexts [,]. By emphasizing phase information and attenuating amplitude components, GCC-PHAT improves robustness to reverberation and environmental noise. Once the time delays are estimated, spatial localization is achieved through hyperbolic positioning. Each pairwise time difference corresponds to a hyperbolic curve (in 2D) or a hyperboloid surface (in 3D) upon which the sound source must lie. The intersection of these geometric constraints from multiple microphone pairs yields an estimate of the source’s location.
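As a rough illustration of the delay-estimation step described above, the following minimal sketch estimates the delay between two microphone signals with GCC-PHAT and converts it to an angle. It assumes a single source, a two-microphone pair in the far field, and a speed of sound of 343 m/s; the function names `gcc_phat` and `far_field_angle`, the 0.2 m spacing, and the synthetic white-noise test signal are illustrative choices, not taken from any of the cited works.

```python
import numpy as np

def gcc_phat(x1, x2, fs, max_tau=None):
    """Return the delay of x2 relative to x1, in seconds, via GCC-PHAT."""
    n = len(x1) + len(x2)                    # zero-pad so the correlation is linear, not circular
    X1 = np.fft.rfft(x1, n=n)
    X2 = np.fft.rfft(x2, n=n)
    cross = X2 * np.conj(X1)                 # cross-power spectrum
    cross /= np.abs(cross) + 1e-12           # PHAT weighting: keep phase, drop magnitude
    cc = np.fft.irfft(cross, n=n)            # generalized cross-correlation
    max_shift = n // 2
    if max_tau is not None:                  # optionally restrict to physically possible lags
        max_shift = max(1, min(int(fs * max_tau), max_shift))
    # Reorder so that the array covers lags -max_shift .. +max_shift.
    cc = np.concatenate((cc[-max_shift:], cc[:max_shift + 1]))
    lag = int(np.argmax(np.abs(cc))) - max_shift
    return lag / fs

def far_field_angle(tau, mic_distance, c=343.0):
    """Angle from broadside (degrees) for a two-microphone pair, far-field assumption."""
    return float(np.degrees(np.arcsin(np.clip(c * tau / mic_distance, -1.0, 1.0))))

# Synthetic check (assumed data): white noise delayed by 5 samples at the second microphone.
fs = 16000
rng = np.random.default_rng(0)
x1 = rng.standard_normal(4096)
x2 = np.concatenate((np.zeros(5), x1[:-5]))
tau = gcc_phat(x1, x2, fs, max_tau=0.2 / 343.0)   # lags limited to a 0.2 m spacing
angle = far_field_angle(tau, mic_distance=0.2)
print(f"delay = {tau * fs:.0f} samples, angle = {angle:.1f} deg")
```

With more than one microphone pair, each estimated delay would define one hyperbolic constraint, and the source position would follow from intersecting them, for example by least squares.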

Despite its popularity, the TDOA method is not without limitations. One major challenge is its sensitivity to noise and reverberation [,], particularly in enclosed environments with reflective surfaces. Although GCC-PHAT offers partial mitigation, its effectiveness diminishes in highly cluttered acoustic spaces. Additionally, the spatial resolution of TDOA-based systems is inherently limited by the sampling rate of the microphones and the inter-microphone distances, which constrain the granularity of detectable delays. Another well-documented drawback is the so-called front–back ambiguity, which arises primarily in linear microphone arrays. In such configurations, the system may struggle to distinguish whether a sound originates from in front of or behind the array due to symmetric time-delay profiles. Moreover, traditional TDOA techniques face difficulties in multi-source environments [], where overlapping sound signals can interfere with accurate time-delay estimation, leading to degraded performance or incorrect localization. Nonetheless, TDOA remains a cornerstone of SSL research and application, especially in scenarios where computational simplicity and real-time performance are prioritized. Its principles have also served as a basis for hybrid systems that integrate TDOA with more advanced signal processing or learning-based techniques, expanding its utility in modern robotic auditory systems [,,,,,,,,,].
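The resolution limit mentioned above can be made concrete with a small back-of-the-envelope calculation. The sketch below assumes integer-sample delay estimation, a far-field source, and a speed of sound of 343 m/s; the spacing and sampling rate are illustrative values, and the helper name `tdoa_resolution` is hypothetical.

```python
import math

def tdoa_resolution(mic_distance, fs, c=343.0):
    """Integer-lag resolution of one microphone pair (far-field assumption)."""
    max_lag = math.floor(mic_distance * fs / c)      # largest physically possible lag, in samples
    n_lags = 2 * max_lag + 1                         # distinct integer lags from -max_lag to +max_lag
    # Angle change produced by a one-sample lag step near broadside.
    step_deg = math.degrees(math.asin(min(1.0, c / (fs * mic_distance))))
    return max_lag, n_lags, step_deg

max_lag, n_lags, step_deg = tdoa_resolution(mic_distance=0.1, fs=16000)
print(max_lag, n_lags, round(step_deg, 2))
```

For a 10 cm pair sampled at 16 kHz, only nine integer lags are distinguishable, and one lag step near broadside corresponds to roughly 12°. Sub-sample interpolation of the correlation peak, a higher sampling rate, or a wider spacing are the usual ways to refine this.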

4.1.2. Beamforming

Beamforming is a spatial signal processing technique that plays a dual role in auditory systems: it not only facilitates sound source localization (SSL) but also enhances the quality of the captured audio by amplifying signals from a desired direction while attenuating noise from others. In robotic contexts, this capability is invaluable for tasks such as speech recognition, situational awareness, and interaction in acoustically complex environments [,,]. At its core, beamforming involves the constructive combination of sound signals from multiple microphones, typically after delaying them to align with a hypothesized source direction. When the hypothesized direction matches the true direction of arrival (DOA), the signals reinforce, resulting in a high beam response. The simplest implementation of this principle is the delay-and-sum (DS) beamformer, which sums delayed microphone signals to estimate the DOA. More sophisticated approaches like the minimum variance distortionless response (MVDR) beamformer, also known as Capon’s beamformer [], improve interference rejection by minimizing the total output power subject to a distortionless (unit-gain) constraint in the desired direction. The linearly constrained minimum variance (LCMV) beamformer allows multiple spatial constraints, making it suitable for multi-target environments []. Adaptive beamforming methods extend this paradigm by dynamically adjusting parameters in response to changes in the environment, enhancing resilience to noise and interference.
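To make the delay-and-sum principle concrete, the sketch below scans candidate directions with a frequency-domain DS beamformer on a simulated uniform linear array. It is a minimal illustration under stated assumptions: a single far-field source, ideal omnidirectional microphones, no noise or reverberation, and a speed of sound of 343 m/s. The helper names, the four-microphone geometry, and the 30° test source are hypothetical.

```python
import numpy as np

def simulate_linear_array(source, mic_x, fs, theta_deg, c=343.0):
    """Plane wave from angle theta (measured from broadside) reaching mics on the x-axis."""
    freqs = np.fft.rfftfreq(len(source), d=1.0 / fs)
    arrival = -mic_x * np.sin(np.deg2rad(theta_deg)) / c      # arrival time per mic (s)
    spectrum = np.fft.rfft(source)
    delayed = spectrum[None, :] * np.exp(-2j * np.pi * freqs[None, :] * arrival[:, None])
    return np.fft.irfft(delayed, n=len(source), axis=1)

def delay_and_sum_scan(signals, mic_x, fs, angles_deg, c=343.0):
    """Output power of a delay-and-sum beamformer for each candidate angle."""
    n_samples = signals.shape[1]
    spectra = np.fft.rfft(signals, axis=1)                    # (n_mics, n_bins)
    freqs = np.fft.rfftfreq(n_samples, d=1.0 / fs)
    power = np.empty(len(angles_deg))
    for i, theta in enumerate(np.deg2rad(angles_deg)):
        tau = -mic_x * np.sin(theta) / c                      # hypothesized arrival times
        steer = np.exp(2j * np.pi * freqs[None, :] * tau[:, None])   # phase shifts that undo them
        beam = np.sum(spectra * steer, axis=0)                # align, then sum across microphones
        power[i] = np.sum(np.abs(beam) ** 2)
    return power

fs = 16000
mic_x = np.arange(4) * 0.05                                   # 4 mics, 5 cm spacing (assumed)
rng = np.random.default_rng(0)
signals = simulate_linear_array(rng.standard_normal(2048), mic_x, fs, theta_deg=30.0)
angles = np.arange(-90, 91)
power = delay_and_sum_scan(signals, mic_x, fs, angles)
estimate = int(angles[np.argmax(power)])
print("estimated DOA:", estimate, "deg")
```

The scan only covers angles from -90° to 90° because a linear array cannot tell front from back, which is the ambiguity noted earlier for this geometry.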

In the survey by Lv et al. [], some promising developments in beamforming were explained that could be implemented as beamforming-based SSL in robotics. For example, a novel beamforming technique by Yang et al. [] tailored for far-field, large-scale environments, significantly improved localization accuracy across multiple sound sources. In low signal-to-noise ratio (SNR) conditions, Liu et al. [] introduced a novel MVDR-based beamforming (MVDR-HBT) algorithm, which leverages statistical signal properties to boost robustness and precision. Zhang et al. [] extended beamforming to periodic, steady-state sound sources by developing a high-resolution cyclic beamforming method that supports both localization and fault diagnosis in machinery. While beamforming offers considerable advantages, it is not without its challenges. Traditional beamforming techniques tend to suffer from poor dynamic range and limited real-time performance, particularly in large environments or when precise temporal resolution is required. Moreover, accurate array calibration remains a critical prerequisite, since small discrepancies in microphone positioning or gain can lead to substantial localization errors. The computational complexity of adaptive beamforming in real-time applications can also place significant demands on embedded processors typical of mobile robotic platforms and UAVs [,]. Nonetheless, beamforming remains a vital technique in SSL that could be used in many robotic applications. Its capacity for both directional enhancement and localization makes it particularly attractive for systems that require perceptual robustness in dynamic or noisy environments.

4.1.3. Steered-Response Power (SRP)

Steered response power (SRP) methods constitute a prominent category of traditional SSL techniques. These methods operate on the principle of beamforming: the microphone array is virtually “steered” in multiple candidate directions, and the response power, essentially the energy of the summed signals after delay alignment, is computed for each direction. The underlying assumption is that, when the beamformer is steered towards the true source direction, the accumulated energy or response power will be maximized. Among the various SRP techniques, the SRP-PHAT (phase transform) algorithm is widely recognized for its robustness and practical effectiveness. It incorporates the phase transform weighting strategy from GCC-PHAT into the SRP framework, attenuating amplitude information and emphasizing phase cues to improve reliability in reverberant environments [].
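The steering procedure described above can be sketched as a sum of PHAT-weighted cross-spectra over all microphone pairs, evaluated at the delay each candidate direction would produce. The example below is a minimal sketch, not a reference implementation: it assumes a single far-field source in the horizontal plane, a noise-free simulation, and a speed of sound of 343 m/s. The function names, the four-microphone circular array of 5 cm radius, and the 1° azimuth grid are illustrative assumptions.

```python
import numpy as np
from itertools import combinations

def simulate_array(source, mic_pos, fs, azimuth_deg, c=343.0):
    """Plane wave from a given azimuth reaching microphones at 2D positions mic_pos."""
    az = np.deg2rad(azimuth_deg)
    arrival = -(mic_pos @ np.array([np.cos(az), np.sin(az)])) / c   # arrival time per mic (s)
    freqs = np.fft.rfftfreq(len(source), d=1.0 / fs)
    spectrum = np.fft.rfft(source)
    delayed = spectrum[None, :] * np.exp(-2j * np.pi * freqs[None, :] * arrival[:, None])
    return np.fft.irfft(delayed, n=len(source), axis=1)

def srp_phat(signals, mic_pos, fs, azimuths_deg, c=343.0):
    """Steered-response power with PHAT weighting over a grid of azimuths."""
    n_mics, n_samples = signals.shape
    spectra = np.fft.rfft(signals, axis=1)
    freqs = np.fft.rfftfreq(n_samples, d=1.0 / fs)
    az = np.deg2rad(azimuths_deg)
    directions = np.stack((np.cos(az), np.sin(az)), axis=1)         # (n_dirs, 2)
    arrival = -(directions @ mic_pos.T) / c                         # (n_dirs, n_mics)
    power = np.zeros(len(az))
    for i, j in combinations(range(n_mics), 2):
        cross = spectra[i] * np.conj(spectra[j])
        cross /= np.abs(cross) + 1e-12                              # PHAT: phase only
        tdoa = arrival[:, i] - arrival[:, j]                        # expected delay per direction
        steer = np.exp(2j * np.pi * np.outer(tdoa, freqs))          # (n_dirs, n_bins)
        power += np.real(steer @ cross)                             # accumulate over pairs
    return power

fs = 16000
mic_angles = np.deg2rad([0, 90, 180, 270])
mic_pos = 0.05 * np.stack((np.cos(mic_angles), np.sin(mic_angles)), axis=1)
rng = np.random.default_rng(1)
signals = simulate_array(rng.standard_normal(2048), mic_pos, fs, azimuth_deg=120.0)
azimuths = np.arange(0, 360)
srp_map = srp_phat(signals, mic_pos, fs, azimuths)
estimate = int(azimuths[np.argmax(srp_map)])
print("estimated azimuth:", estimate, "deg")
```

The cost of this search grows with the number of grid points times the number of microphone pairs, which is why coarse-to-fine or hierarchical grids are attractive on embedded hardware.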

One of the most valuable attributes of SRP methods (particularly SRP-PHAT) is their resilience in acoustically challenging settings. Unlike traditional cross-correlation techniques, SRP methods perform well even in the presence of significant reverberation, making them suitable for indoor or cluttered robotic applications. Another advantage is their capacity for multi-source localization. By analyzing the response power map across a spatial grid, systems can identify multiple peaks, each corresponding to a potential sound source. This ability makes SRP methods appealing in scenarios where robots must interact with multiple humans or monitor several machines simultaneously. However, SRP approaches are not without challenges. Chief among them is computational complexity. Evaluating the steered response across a dense spatial grid requires substantial processing, which can be burdensome for real-time systems or power-constrained platforms. Moreover, the spatial resolution of the localization is directly tied to the granularity of the search grid. Finer grids improve accuracy but exacerbate computational demands. Discretization also introduces errors, particularly when the true source lies between predefined grid points. Additionally, while SRP-PHAT improves robustness to reverberation, performance may still degrade in noisy environments or when multiple sources overlap in time and frequency, leading to ambiguities in peak detection. Despite these limitations, SRP-based methods, especially when paired with optimization strategies or hierarchical search techniques, remain a powerful tool in the SSL toolbox. They bridge the gap between theoretical accuracy and real-world applicability and continue to be refined for emerging robotic use cases that demand high reliability in dynamic, reverberant, and multi-source auditory scenes [,,,,,].

4.1.4. Subspace Analysis

Subspace-based methods form one of the most analytically powerful approaches in the domain of sound source localization (SSL). These techniques, including the well-known multiple signal classification (MUSIC) and estimation of signal parameters via rotational invariance techniques (ESPRIT), operate by analyzing the eigenspectrum of the spatial covariance matrix derived from multichannel microphone signals. Their strength lies in the decomposition of this matrix into orthogonal signal and noise subspaces, which enables the extraction of direction-of-arrival (DOA) information with high angular precision. The MUSIC algorithm remains a prominent representative of this class. It estimates the covariance matrix of the received signals and then applies eigendecomposition to distinguish the dominant signal subspace from the residual noise subspace. A spatial pseudo-spectrum is then generated by scanning over candidate directions; peaks in this spectrum correspond to estimated DOAs, exploiting the principle that the array steering vectors of true sources are orthogonal to the noise subspace [].
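The MUSIC steps outlined above (covariance estimation, eigendecomposition, and pseudo-spectrum scan) can be sketched for the narrowband case as follows. The example assumes a uniform linear array with half-wavelength spacing, a known number of uncorrelated far-field sources, and complex narrowband snapshots generated synthetically; the function names, the eight-microphone array, and the two test directions are illustrative assumptions.

```python
import numpy as np

def steering_matrix(n_mics, angles_deg, spacing_wl=0.5):
    """Steering vectors of a uniform linear array; spacing is given in wavelengths."""
    m = np.arange(n_mics)[:, None]
    sines = np.sin(np.deg2rad(np.asarray(angles_deg, dtype=float)))[None, :]
    return np.exp(-2j * np.pi * spacing_wl * m * sines)       # (n_mics, n_angles)

def music_spectrum(snapshots, n_sources, angles_deg, spacing_wl=0.5):
    """MUSIC pseudo-spectrum from narrowband snapshots of shape (n_mics, n_snapshots)."""
    n_mics = snapshots.shape[0]
    cov = snapshots @ snapshots.conj().T / snapshots.shape[1] # sample covariance matrix
    _, eigvecs = np.linalg.eigh(cov)                          # eigenvalues in ascending order
    noise = eigvecs[:, : n_mics - n_sources]                  # noise subspace
    proj = noise.conj().T @ steering_matrix(n_mics, angles_deg, spacing_wl)
    return 1.0 / (np.sum(np.abs(proj) ** 2, axis=0) + 1e-12)  # large where a(theta) is orthogonal to noise

def top_peaks(spectrum, angles_deg, k):
    """Angles of the k highest local maxima of the pseudo-spectrum, sorted ascending."""
    inner = np.arange(1, len(spectrum) - 1)
    is_peak = (spectrum[inner] > spectrum[inner - 1]) & (spectrum[inner] >= spectrum[inner + 1])
    peaks = inner[is_peak]
    best = peaks[np.argsort(spectrum[peaks])[-k:]]
    return np.sort(np.asarray(angles_deg)[best])

# Synthetic scene (assumed): two uncorrelated sources at -20 and 35 degrees, about 20 dB SNR.
rng = np.random.default_rng(0)
n_mics, n_snap = 8, 200
A = steering_matrix(n_mics, [-20.0, 35.0])
S = rng.standard_normal((2, n_snap)) + 1j * rng.standard_normal((2, n_snap))
N = 0.1 * (rng.standard_normal((n_mics, n_snap)) + 1j * rng.standard_normal((n_mics, n_snap)))
X = A @ S + N
angles = np.arange(-90.0, 90.5, 0.5)
estimates = top_peaks(music_spectrum(X, 2, angles), angles, k=2)
print("estimated DOAs:", estimates)
```

Two caveats apply to this sketch: the sources are uncorrelated and their number is given in advance. With coherent sources, as in strong reverberation, the signal subspace loses rank and the peaks degrade.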

One of the significant limitations of traditional MUSIC is its dependency on accurate and noise-free estimation of the covariance matrix. In real-world scenarios with limited data or low signal-to-noise ratios (SNRs), these estimations can become unreliable. To address this, Zhang and Feng [] proposed the use of a non-zero delay sample covariance matrix (SCM) combined with pre-projection techniques to filter out noise and improve signal subspace estimation. Similarly, Weng et al. [] introduced the SHD-BMUSIC algorithm, which operates in the spherical harmonic domain and integrates wideband beamforming to enhance source discrimination, particularly in scenarios involving closely spaced or multiple sources. Despite their theoretical elegance and high spatial resolution, subspace methods are not without drawbacks. They are sensitive to coherent or correlated sound sources, a common condition in reverberant environments, which can collapse the signal subspace and compromise accuracy. Moreover, these methods demand significant computational resources [], primarily due to eigendecomposition and exhaustive spatial scanning. They also typically require a substantial number of temporal snapshots to yield stable covariance estimates, reducing their responsiveness in rapidly changing acoustic scenes. Nonetheless, subspace methods remain an essential pillar of SSL research. Their precision in controlled environments and potential for multi-source resolution make them attractive for applications in collaborative mobile ground robotics [,,,] and UAVs [,,,,,] as well as humanoid audition [,,,,,]. Ongoing research aims to mitigate their limitations through techniques such as subspace smoothing, sparse array design, and integration with learning-based models, enabling their gradual transition from theoretical benchmarks to practical solutions in robotic auditory systems.

Table 3 lists the information regarding the traditional SSL works in robotics that used the four typical localization families. As the table shows, a considerable number of papers in the last decade still rely on these classical SSL approaches (especially subspace analysis using MUSIC) in robotics. Interestingly, the vast majority of these studies target one stationary sound source in their localization experiments. This emphasis reflects an inherent limitation of traditional SSL techniques in tracking multiple or moving emitters with high accuracy.

Table 3.

Studies on robotic platforms in our review that used the typical traditional SSL approaches (i.e., TDOA, beamforming, SRP, and subspace analysis). “S/M” denotes static/moving sound sources.

Regarding the localization accuracy of traditional methods, a unified comparison is challenging due to the varied metrics and experimental conditions found in the literature. As shown in the last column of Table 3, studies report accuracy using different metrics, such as angular or distance error, and across a wide range of setups. For example, in a large-scale outdoor experiment covering a field of 240 × 160 × 80 m³, a mean distance error of 6.03 m [] can be considered a successful outcome, aligning with the typical accuracy of open-field GPS. In contrast, very high precision can be reported in close-range indoor experiments, such as the 2–10 cm error achieved by [] with the sound source 1.5–3 m away. The effect of distance on accuracy is also highlighted by [], which reported a range of azimuth and elevation errors (0.8–8.8° and 1.4–10.4°, respectively) as the sound source moved from 25.3 to 151.5 m away. Noise is another critical factor. In [], it was shown that while TDOA and subspace methods perform well in non-noisy conditions, they can lead to significant localization errors (e.g., a 100° azimuth error for TDOA-based GCC-PHAT) in extremely noisy environments with a signal-to-noise ratio (SNR) as low as −30 dB. Beyond the model and environment, the microphone itself can influence accuracy; ref. [] demonstrated that different pinnae designs on a humanoid’s binaural microphone setup resulted in varying azimuth RMSE values, even when using the same SSL model. Furthermore, some studies report accuracy as a success rate within a specific error threshold instead of a direct error value. For instance, ref. [] reported that subspace analysis achieved a 74–95% success rate within a threshold of 2.5° for angle and 20 cm (at 5 m) for distance estimation.
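Because the studies above mix mean error, RMSE, and threshold-based success rates, it can help to see how the three relate on the same set of estimates. The sketch below is purely illustrative: the angle values are made up, the 2.5° threshold is borrowed from the example above, and the helper names are hypothetical.

```python
import math

def angular_error_deg(estimate_deg, truth_deg):
    """Smallest absolute difference between two angles, with wrap-around at 360 degrees."""
    return abs((estimate_deg - truth_deg + 180.0) % 360.0 - 180.0)

def summarize(estimates_deg, truths_deg, threshold_deg=2.5):
    """Mean angular error, RMSE, and fraction of estimates within the threshold."""
    errors = [angular_error_deg(e, t) for e, t in zip(estimates_deg, truths_deg)]
    mean_error = sum(errors) / len(errors)
    rmse = math.sqrt(sum(e * e for e in errors) / len(errors))
    success_rate = sum(e <= threshold_deg for e in errors) / len(errors)
    return mean_error, rmse, success_rate

# Made-up azimuth estimates; note that 359 vs. 1 degree is a 2-degree error, not 358.
mean_error, rmse, success_rate = summarize([359.0, 14.0, 90.0], [1.0, 10.0, 90.0])
print(mean_error, round(rmse, 3), round(success_rate, 3))
```

The same estimates give a mean error of 2°, an RMSE of about 2.6°, and a success rate of two out of three, which illustrates why figures reported under different metrics cannot be compared directly.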

Computational latency is a crucial factor for real-time robotic SSL, yet it has been largely overlooked in many studies. While this information is not widely available, some papers provide important comparisons. For example, ref. [] noted that TDOA (GCC-PHAT) was twenty times faster than subspace analysis (MUSIC) on the same hardware, processing one second of signal in approximately 1.5 s. Similarly, ref. [] found that a TDOA-based SSL was over 50% faster than SRP-PHAT for localizing a referee whistle, enabling a closer-to-real-time performance. A few researchers have directly addressed this challenge by developing computationally efficient models. For example, ref. [] proposed a lightweight SRP model (SRP-PHAT-HSDA) that is up to 30 times more efficient than a typical SRP, allowing for real-time localization on low-cost embedded hardware. In a similar vein, ref. [] introduced a modified MUSIC-based method called optimal hierarchical SSL (OH-SSL) that reduced the processing time from 1.073 s to a mere 0.028 s, making real-time processing feasible.

4.2. DL-Based SSL

Traditional SSL methods rely on explicit mathematical models of sound propagation and array geometry. While effective in controlled conditions, they often struggle in challenging real-world environments, such as those with high noise levels, reverberation, multiple or moving sound sources, and unknown or changing array configurations. Machine learning (ML) and especially deep learning (DL) have emerged as transformative approaches in SSL by enabling models to learn intricate patterns directly from data, with little or no need for pre-processing of the input data [,], often outperforming traditional methods in challenging scenarios. This section examines the evolution, methodologies, architectures, and innovations in deep learning-based SSL for robotics applications.

4.2.1. Traditional Machine Learning and Neural Networks

Before “deep” became fashionable, researchers had already tried to map acoustic features to directions with classical machine learning. The support-vector machine (SVM) is a well-known ML model that has been implemented in some SSL works as well as in sound event classification [,,]. Interestingly, in some SVM-based sound source localization works, features from traditional SSL have been used, such as an SVM using TDOA features [] or an SVM based on beamforming [,]. K-nearest neighbors (KNN) is another classical ML model that has been used in sound source localization [] and sound source classification [,].

Traditional machine learning depends primarily on the quality and relevance of the hand-crafted features extracted from the raw audio signals (e.g., TDOA values from GCC []). In contrast, neural networks are able to automatically learn suitable features directly from raw multi-channel audio data or low-level spectrograms. Early research used simple neural networks (NNs) for the SSL task, either in the simplest form of a shallow neural network (with a single hidden layer) or as NNs with multiple hidden layers, called multi-layer perceptrons (MLPs) []. Shallow neural networks were not frequently used in SSL [], since a single hidden layer was not sufficient for the model to learn the sound features needed for accurate localization. Song et al. [] used a shallow neural network with fuzzy inference, called the fuzzy neural network (FNN), for fault detection from sound signals using a plant machinery diagnosis robot (MDR). In contrast, many SSL works used deeper neural networks, with multiple hidden layers, to effectively learn the sound features during the training phase. To the best of our knowledge, in the early 2000s, some early works used shallow MLPs in SSL, such as NNs with two hidden layers [,]. Kim et al. [] used MLPs for both sound counting and localization, such that one MLP detected the number of sound sources, and then a different MLP localized each of the sound sources. Also, an MLP was used in two studies by Davila-Chacon et al. [,] on a humanoid robot to estimate the azimuth angle and also to improve automatic speech recognition (ASR). In robotics, Youssef et al. [] used an MLP to estimate the sound source azimuth angle by exploiting the binaural signal received by two microphones mounted on a humanoid robot. Another robotic study by Murray and Ervin [] used an MLP for estimating the elevation angle while sounds were recorded through artificial pinnae, mimicking the human auditory system. In another robotic study, He et al. [] proposed an MLP-based model for simultaneously detecting and localizing multiple sound sources in human–robot interaction scenarios and showed that their model outperformed traditional SSL methods, such as MUSIC-based ones. Similarly, Takeda and Komatani used MLPs in their works and showed that the MLP model outperforms MUSIC in SSL studies with a robotic theme [,,].

It is important to note that the neural network architecture differs from study to study and involves many hyperparameters. For example, the activation function in each layer, the number of hidden layers, the number of nodes in each layer, and the type of input data (which could be the raw signal, a spectrogram, or sound features extracted by traditional SSL methods) can all differ between NNs. Therefore, hyperparameter selection through an ablation study could help in finding the best architecture. Another challenge in ML-based SSL is to ensure that the quantity and quality of the training data are sufficient, so that the model can learn effectively in the training phase and avoid common issues such as overfitting [].

4.2.2. Convolutional Neural Networks (CNNs)

A convolutional neural network is a widely used DL architecture composed of learnable convolution kernels, interleaved with nonlinear activations, and, typically, pooling or normalization layers []. The defining feature is weight sharing: the same kernel is slid across the input, allowing the network to detect local patterns regardless of their absolute position. CNNs were first popularized for image analysis [], yet their inductive biases, namely translation invariance and local receptive fields, map naturally to spectro-temporal audio representations. Compared to analytic TDOA or MUSIC pipelines, CNNs require no hand-crafted feature selection [], can fuse information across multiple microphones and hundreds of frequency bins in a single forward pass, and can be trained to ignore ego-noise unique to a given platform. Their computation is a chain of dense tensor operations that map efficiently onto embedded GPUs, making real-time deployment feasible even on small service robots.

As pointed out in the survey by Grumiaux et al. [], the first CNN-based SSL study was performed by Hirvonen [] in 2015. In this study, a CNN model was trained to classify an audio signal containing one speech or musical source into spatial regions. Some studies used raw audio waveforms as input for the CNN architecture [,,]. This approach eliminates the need for hand-crafted features but may require larger models and more training data. However, other CNN-based SSL studies typically used input in the form of a multichannel short-time Fourier transform (STFT) spectrogram [,]. These multichannel spectrograms are typically used as 3D tensors, with one dimension for time (or frames), one for frequency (bins), and one for channel. Some works used features from conventional SSL methods, e.g., 2D images of DOA features extracted by beamforming [] or GCC features [,], as input for the CNN architecture. Convolutional layers are the core hidden layers in CNNs, and 1D (Conv1D), 2D (Conv2D), and 3D convolutional layers have all been used in SSL works. It is generally held that a higher convolution dimension captures features better but adds computational cost []. Even so, some works suggest using hybrid convolutional dimensions in SSL [], e.g., a set of Conv2D layers for frame-wise feature extraction followed by several Conv1D layers in the time dimension for temporal aggregation [].
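The 3D input tensor described above can be illustrated with a small NumPy-only STFT. This is a sketch under assumptions: the 512-sample Hann window, the 256-sample hop, the four-channel random input, and the choice to stack magnitude and phase along the channel axis are illustrative and do not reproduce any specific cited pipeline.

```python
import numpy as np

def multichannel_stft(signals, n_fft=512, hop=256):
    """STFT of signals with shape (n_mics, n_samples); returns (n_frames, n_bins, n_mics)."""
    n_samples = signals.shape[1]
    n_frames = 1 + (n_samples - n_fft) // hop
    window = np.hanning(n_fft)
    # Sample indices of every frame: shape (n_frames, n_fft).
    idx = np.arange(n_fft)[None, :] + hop * np.arange(n_frames)[:, None]
    frames = signals[:, idx] * window                  # (n_mics, n_frames, n_fft)
    spec = np.fft.rfft(frames, axis=-1)                # (n_mics, n_frames, n_bins)
    return np.transpose(spec, (1, 2, 0))               # time, frequency, channel

rng = np.random.default_rng(0)
audio = rng.standard_normal((4, 16000))                # 4 microphones, 1 s at 16 kHz (assumed)
spec = multichannel_stft(audio)
# One possible CNN input: magnitude and phase stacked along the channel axis.
features = np.concatenate((np.abs(spec), np.angle(spec)), axis=-1)
print(spec.shape, features.shape)
```

Keeping the phase, rather than the magnitude alone, matters for localization because inter-channel phase differences carry the time-delay information that classical methods exploit explicitly.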

In robotics, several pioneering studies demonstrate the effectiveness of CNNs in SSL tasks, particularly in overcoming the limitations of traditional methods in complex, real-world scenarios. Notably, these approaches leverage CNNs to map binaural or multi-microphone audio features directly to sound source locations or directions. Nguyen et al. [] explored an autonomous sensorimotor learning framework for a humanoid robot (iCub) to localize speech using a binaural hearing system. Their contribution involved an automated data collection and labeling procedure, and they successfully trained a CNN with white noise to map audio features to the relative angle of a sound source. Crucially, their experiments with speech signals showed the CNN’s capability to localize sources even without explicit spectral feature handling, highlighting the network’s inherent ability to learn robust mappings. Similarly, Boztas [] focused on providing auditory perception for humanoid robots, emphasizing the importance of localizing moving sound sources in scenarios where cameras might be ineffective. By recording audio from four microphones on a robot’s head and combining it with odometry data, the study demonstrated that a CNN could accurately estimate the location of a moving sound source, thus validating the robot’s ability to sense sound positions with high accuracy, akin to living creatures.

Furthermore, CNN-based SSL models are pushing the boundaries to address more complex robotic challenges. He et al. [] proposed using neural networks for the simultaneous detection and localization of multiple sound sources in human–robot interaction, moving beyond the single-source focus of many conventional methods. They introduced a likelihood-based output encoding for arbitrary numbers of sources and investigated sub-band cross-correlation features, demonstrating that their proposed methods significantly outperform traditional spatial spectrum-based approaches on real robot data. Jo et al. [] presented a three-step deep learning-based SSL method tailored for human–robot interaction (HRI) in intelligent robot environments. Their approach prioritizes minimizing noise and reverberation by extracting excitation source information (ESI) using linear prediction residual to focus on the original sound components. Subsequently, GCC-PHAT is applied to cross-correlation signals from adjacent microphone pairs, with these processed single-channel, multi-input cross-correlation signals then fed into a CNN. This design, which avoids complex multi-channel inputs, allows the CNN architecture to independently learn TDOA information and classify the sound source’s location. While not directly tested on a robot, Pang et al. [] introduced a multitask learning approach using a time–frequency CNN (TF-CNN) for binaural SSL, aiming to simultaneously localize both azimuth and elevation under unknown acoustic conditions. Their method extracts interaural phase and level differences as input, mapping them to sound direction, and shows a promising localization performance, explicitly stating its usefulness for human–robot interaction. Similarly, Ko et al. [] proposed a multi-stream CNN model for real-time SSL on low-power IoT devices, including its application to camera-based humanoid robots. Their model processes multi-channel acoustic data through parallel CNN layers to capture frequency-specific delay patterns, achieving high accuracy on noisy data with a low processing time, further emphasizing its applicability for robots mimicking human reactions in crowded environments. CNN-based SSL has also been tested in a multi-robot configuration. In this regard, Mjaid et al. [] introduced AudioLocNet, a novel lightweight CNN that frames SSL as a classification task over a polar grid, letting each robot infer both azimuth and range to locate and communicate with each other.
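Framing localization as classification over a polar grid, as in the last example above, amounts to mapping each (azimuth, range) pair to a cell index that a network can predict. The sketch below illustrates only that label encoding. It is not the AudioLocNet implementation: the number of sectors, the ring boundaries, and the function names are hypothetical choices made for illustration.

```python
N_AZIMUTH = 8                           # hypothetical: eight 45-degree sectors
RANGE_EDGES = [0.0, 1.0, 2.0, 3.0]      # hypothetical ring boundaries in metres

def encode(azimuth_deg, range_m):
    """Map a source position to the index of the polar-grid cell that contains it."""
    sector = 360.0 / N_AZIMUTH
    az_bin = int((azimuth_deg % 360.0) // sector)
    for ring in range(len(RANGE_EDGES) - 1):
        if RANGE_EDGES[ring] <= range_m < RANGE_EDGES[ring + 1]:
            return ring * N_AZIMUTH + az_bin
    raise ValueError("range outside the grid")

def decode(class_index):
    """Return the centre (azimuth in degrees, range in metres) of a grid cell."""
    ring, az_bin = divmod(class_index, N_AZIMUTH)
    sector = 360.0 / N_AZIMUTH
    azimuth = (az_bin + 0.5) * sector
    distance = 0.5 * (RANGE_EDGES[ring] + RANGE_EDGES[ring + 1])
    return azimuth, distance

label = encode(95.0, 1.2)               # a source at 95 degrees, 1.2 m away
centre = decode(label)
print(label, centre)
```

With this kind of encoding the best achievable error is bounded by the cell size, so the grid resolution trades localization precision against the number of output classes the network has to learn.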

4.2.3. Convolutional Recurrent Neural Networks (CRNNs)

A CNN treats each input window independently; it excels at learning spatial–spectral patterns, but it has no mechanism to remember how those patterns evolve over time. Sound localization in the wild, however, is inherently temporal. Robots rotate their heads, sources start and stop speaking, and early reflections arrive a few milliseconds after the direct path. To deal with temporal dependency, recurrent neural network (RNN) architectures add state vectors that are updated at every time step, enabling the model to maintain a memory of past observations and to capture temporal dependencies that a vanilla CNN cannot represent. In the context of sound localization, an RNN ingests a sequence of time–frequency frames, propagates information through gated recurrent units (GRUs) [] or long short-term memory (LSTM) cells [], and outputs either frame-wise direction estimates or a smoothed trajectory of source positions. Regarding RNNs in SSL, only a few works, such as those by Nguyen et al. [] and Wang et al. [], used RNNs without combining them with CNNs []. The former work implemented an RNN to match and fuse sound event detection (SED) and DOA predictions, and the latter used a bidirectional LSTM to identify speech-dominant time–frequency units for DOA estimation. Despite these successes, pure RNN solutions remained limited in SSL (e.g., two SSL works in robotics [,]) because the recurrent layers themselves could not learn spatial filters or phase relations from raw spectra. This bottleneck set the stage for convolutional–recurrent hybrids (CRNNs), which delegate spatial feature extraction to a front-end CNN and let the RNN specialize in temporal reasoning, thus combining the best of both worlds.

CRNNs have been regularly implemented for SSL since 2018 []. A typical CRNN architecture in SSL consists of several convolutional layers followed by a recurrent unit, such as a bidirectional gated recurrent unit (BGRU) or LSTM, and a fully connected layer. This kind of model has been shown to be robust to overlapping sounds and noisy environments [,]. Regarding the localization of multiple sound sources, some CRNN-based SSL works used a separate CRNN for sound source counting in addition to one for localization [], some assumed prior knowledge of the sound sources and localized each with a trained model [], and some simultaneously localized multiple sound sources within a single CRNN model [,].
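The data flow of such a CRNN can be sketched as a plain NumPy forward pass. This is only an illustrative toy under several assumptions: a single-channel spectrogram-like input, one convolutional layer applied along frequency, a bidirectional GRU without bias terms, 36 azimuth classes, and random untrained weights. All names and sizes are hypothetical and do not reproduce any cited model.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def conv_block(frames, kernels):
    """frames: (T, F), kernels: (K, W). Valid 1-D correlation along frequency,
    ReLU, then global max-pooling over frequency. Returns (T, K)."""
    width = kernels.shape[1]
    windows = np.lib.stride_tricks.sliding_window_view(frames, width, axis=1)
    fmap = np.maximum(windows @ kernels.T, 0.0)       # (T, F - W + 1, K)
    return fmap.max(axis=1)

def init_gru(rng, in_dim, hidden):
    """Random GRU weights; W* act on the input, U* on the previous state."""
    return {name: 0.1 * rng.standard_normal((hidden, in_dim if name[0] == "W" else hidden))
            for name in ("Wz", "Uz", "Wr", "Ur", "Wh", "Uh")}

def gru(seq, p, reverse=False):
    """seq: (T, D). Returns the hidden state at every time step, shape (T, H)."""
    hidden = p["Uz"].shape[0]
    h = np.zeros(hidden)
    out = np.zeros((len(seq), hidden))
    order = range(len(seq) - 1, -1, -1) if reverse else range(len(seq))
    for t in order:
        x = seq[t]
        z = sigmoid(p["Wz"] @ x + p["Uz"] @ h)             # update gate
        r = sigmoid(p["Wr"] @ x + p["Ur"] @ h)             # reset gate
        h_new = np.tanh(p["Wh"] @ x + p["Uh"] @ (r * h))   # candidate state
        h = (1.0 - z) * h + z * h_new
        out[t] = h
    return out

def crnn_forward(frames, params):
    """CNN front end -> bidirectional GRU -> fully connected softmax per frame."""
    feats = conv_block(frames, params["kernels"])
    states = np.concatenate((gru(feats, params["fwd"]),
                             gru(feats, params["bwd"], reverse=True)), axis=1)
    logits = states @ params["W_out"].T + params["b_out"]
    logits -= logits.max(axis=1, keepdims=True)            # numerical stability
    probs = np.exp(logits)
    return probs / probs.sum(axis=1, keepdims=True)

rng = np.random.default_rng(0)
T, F, K, W, H, n_classes = 20, 64, 8, 5, 16, 36            # assumed toy sizes
params = {
    "kernels": 0.1 * rng.standard_normal((K, W)),
    "fwd": init_gru(rng, K, H),
    "bwd": init_gru(rng, K, H),
    "W_out": 0.1 * rng.standard_normal((n_classes, 2 * H)),
    "b_out": np.zeros(n_classes),
}
frames = rng.standard_normal((T, F))                       # stand-in for spectral features
probs = crnn_forward(frames, params)                       # (T, n_classes) azimuth posteriors
```

The output is one distribution over azimuth classes per time frame; in a multi-source setting the softmax would typically be replaced by per-class sigmoid outputs, which is a design choice not shown here.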

In robotics, CRNNs have only recently (after 2020) been used in SSL tasks. For example, Kim et al. [] addressed the critical challenge of ego noise generated by mobile robots (a robot vacuum cleaner), which significantly degrades SSL performance. Their approach proposed a multi-input–multi-output (MIMO) noise suppression algorithm built upon an extended time-domain audio separation network (TasNet []) architecture. They evaluated their approach with CNN (U-Net []) and CRNN (obtained by adding an LSTM block) models across different noise levels. Aside from the work of Kim et al., other CRNN architectures in SSL have been used only indirectly in robotic applications. For instance, Mack et al. [] implemented a Conv-BiLSTM model to estimate DOA angles from spectrogram inputs of human voice and artificial noise, with possible applications in robotics. Jalayer et al. [] implemented ConvLSTM-based SSL for the localization of humans and machines (CNC machines) using raw audio signals in a noisy industrial environment, which can be applied to scenarios with industrial robots in the scene. Two studies by Altayeva et al. [] and Akter et al. [] implemented ConvLSTM-based sound detection and classification for various sound events in an urban environment, which could be applied to security robots in urban areas. Varnita et al. [] similarly implemented ConvLSTM-based sound event localization and detection (SELD), which could be used in practice for accurate sound-based navigation and event awareness in robots.

4.2.4. Attention-Based SSL

The intuition behind attention is simple yet powerful: rather than processing an input sequence with a fixed, locality-biased kernel, a model can learn to select and weight the time–frequency regions that matter most for the task at hand. Introduced by Bahdanau et al. [] for machine translation and generalized in the Transformer of Vaswani et al. [], attention replaces recurrence with a data-driven affinity matrix that relates every element of the input to every other element. Each output representation is thus a context-aware combination of the entire input, enabling the network to capture long-range dependencies and intricate phase relationships across microphone channels that are essential for precise sound localization [].
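As a concrete illustration of the affinity matrix described above, the sketch below implements single-head scaled dot-product self-attention over a sequence of feature frames in NumPy. The projection matrices are random and the dimensions are assumed toy values; the code only shows how every frame is related to every other frame and is not tied to any specific SSL model.

```python
import numpy as np

def self_attention(X, Wq, Wk, Wv):
    """X: (T, D) sequence of frames. Returns (output, affinity) with shapes (T, d_v), (T, T)."""
    Q, K, V = X @ Wq, X @ Wk, X @ Wv
    scores = Q @ K.T / np.sqrt(K.shape[1])        # pairwise similarity of all frames
    scores -= scores.max(axis=1, keepdims=True)   # numerical stability
    A = np.exp(scores)
    A /= A.sum(axis=1, keepdims=True)             # row-wise softmax: affinity matrix
    return A @ V, A                               # each output mixes the whole input

rng = np.random.default_rng(0)
T, D, d_k = 50, 32, 16                            # assumed toy sizes
X = rng.standard_normal((T, D))                   # stand-in for time-frequency features
Wq, Wk, Wv = (0.1 * rng.standard_normal((D, d_k)) for _ in range(3))
context, affinity = self_attention(X, Wq, Wk, Wv)
```

Row `t` of `affinity` holds the weights with which frame `t` attends to all `T` frames, so the cost of this step grows quadratically with the sequence length.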

The first wave of attention-augmented SSL networks (after 2020) kept the overall CRNN structure and simply inserted a self-attention layer after the recurrent stack. Phan et al. [] and Schymura et al. [] reported consistent gains over the CRNN baseline: the localization error decreased while the models learned to focus automatically on time frames where sources were active. Mack et al. [] placed two successive attention blocks, the first operating directly on phase spectrograms and the second on convolutional feature maps, to generate dynamic masks that emphasize frequency bins dominated by the target speaker. Following the broader “attention-is-all-you-need” trend, fully Transformer-style encoders are now replacing recurrent back-ends altogether. Multi-head self-attention (MHSA) layers were coupled with lightweight CNN front-ends by Emmanuel et al. [] and Yalta et al. [], cutting computation time while winning the SELD track. Many recent studies have directly implemented Transformers for SSL [,,]; for instance, the most recent one by Zhang et al. [] extends this trend by coupling a CNN front-end with a Transformer encoder that attends over sub-band spatial-spectrum features, enabling the network to identify true DOA peaks and suppress spurious ones, thereby delivering state-of-the-art localization accuracy for multiple simultaneous sources under heavy noise and reverberation.

Although Transformer-style architectures already set the performance bar on SSL benchmarks, documented examples of their on-board use in mobile or humanoid robots remain scarce. Yet the very property that makes attention models excel in controlled evaluations, namely their ability to focus dynamically on the most informative time–frequency cues, addresses exactly the challenges faced by robots in the wild. In a bustling factory or a crowded café, an attentive SSL front-end can learn to highlight the spectral traces of a target speaker while suppressing background chatter, motor ego-noise, and sporadic impacts, thus preserving a stable acoustic focus for downstream speech recognition, navigation, or event detection. Because multi-head self-attention offers a global receptive field and permutation-invariant decoding, it naturally accommodates multi-source tracking without handcrafted heuristics, making it a promising cornerstone for robots that must localize critical events in real time and interact seamlessly with humans in complex soundscapes. Admittedly, full Transformers demand more computation and memory than lightweight CNNs, but recent engineering advances bring their inference cost within reach of embedded platforms. Sparse attention variants reduce the quadratic complexity of self-attention, slashing both latency and RAM with negligible loss in angular accuracy [,]. Further savings arise from weight-sharing across heads and post-training quantization [], allowing multi-head self-attention to execute comfortably on Jetson-class GPUs or Edge-TPUs already found on many service, inspection, and aerial robots. These techniques suggest that attention-driven SSL is poised to become a practical and powerful component of next-generation robotic audition systems.

Table 4 summarizes the studies that apply machine learning and deep learning techniques to SSL on robotic platforms. Early work (2013–2017) relied mainly on shallow neural networks and MLPs or on classical feature-reduction and machine learning methods (e.g., PCA and SVM). From 2017 onward, however, the field has shifted decisively toward convolutional, recurrent, and hybrid (CRNN) architectures. These models can jointly exploit the spatial–spectral structure of microphone signals and their temporal dynamics, enabling, for example, the localization of several simultaneously active sound sources [] or the tracking of moving emitters [,,]. Notably, to the best of our knowledge, Transformer-based networks, now state-of-the-art in many audio tasks, have not yet been adopted for robotic SSL, leaving a promising avenue for future research.

Table 4.

Machine and deep learning based SSL studies on robotic platforms.

Regarding localization accuracy, we observed that studies report a variety of metrics, making a direct comparison challenging. For example, some works provide an angular error (azimuth, elevation, or both) or a distance error, while others report localization accuracy based on a specific prediction threshold. This inconsistency stems from diverse experimental setups, including different environmental conditions and sound source configurations. Despite this, several studies have directly compared traditional and ML/DL-based SSL methods, often demonstrating the latter’s superiority in challenging scenarios. For instance, ref. [] reported that a proposed ML-based model significantly outperformed traditional SRP in high-noise (SNR = −10 dB) and highly reverberant environments, achieving 40–50% lower angular error and 50% higher localization accuracy. Similarly, two studies by Takeda et al. [,] showed that MLP-based methods were more accurate than subspace analysis (MUSIC) in reverberant conditions. Importantly, DL-based approaches (MLPs and CNNs) have proven more effective in multi-source scenarios. For example, ref. [] found that DL-based models maintained a low angular error (below 10°) when localizing multiple sources, whereas SRP and MUSIC errors increased to 20–40°. More recently, ref. [] demonstrated that a CNN-based model achieved 98.75% accuracy for single-source localization (within a 15° threshold), a significant improvement over TDOA-based methods (GCC and GCC-PHAT), which achieved around 70% accuracy.
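The two families of metrics mentioned above, angular error and threshold-based accuracy, are related in a simple way, as the following sketch shows for azimuth estimates. The function names and the example angles are hypothetical; the 15° default only mirrors the threshold quoted above and is not a standard shared by all studies.

```python
import numpy as np

def angular_error_deg(pred_deg, true_deg):
    """Absolute azimuth error in degrees, wrapped to the range [0, 180]."""
    diff = np.asarray(pred_deg, dtype=float) - np.asarray(true_deg, dtype=float)
    return np.abs((diff + 180.0) % 360.0 - 180.0)   # 350 deg vs 10 deg gives 20, not 340

def threshold_accuracy(pred_deg, true_deg, threshold_deg=15.0):
    """Fraction of estimates whose angular error is within the threshold."""
    return float(np.mean(angular_error_deg(pred_deg, true_deg) <= threshold_deg))

# Illustrative values only (not taken from any cited study).
pred = [350.0, 10.0, 90.0, 200.0]
true = [10.0, 350.0, 100.0, 170.0]
errors = angular_error_deg(pred, true)       # array([20., 20., 10., 30.])
mae = float(errors.mean())                   # 20.0
acc = threshold_accuracy(pred, true)         # 0.25
```

The example also illustrates why the two metrics are not interchangeable: the same set of estimates yields a mean error of 20° but an accuracy of only 25% at a 15° threshold, so results reported with different metrics or thresholds cannot be compared directly.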

In addition to comparing against traditional methods, some studies have also compared different DL architectures. Ref. [] reported that a CNN-based model outperformed an MLP for both azimuth and elevation detection in noisy conditions (SNR = −5 dB), showing more than a 10% gain in accuracy. For tracking moving sources, ref. [] showed that LSTM and CNN models were considerably more accurate than MLPs, with localization errors (MAE) amounting to approximately 25% of the MLP’s error. Hybrid architectures also show promise, as [] demonstrated that a combined CNN and LSTM model (ConvLSTM) surpassed a pure CNN in localizing both human speech and machine-generated sounds. However, the performance of these models can still be impacted by the number of sources, as shown in [], where adding a second and a third simultaneous source increased the azimuth error from 2.1° to 4.7° and 12°, respectively. Ref. [] reported a similar effect, noting that more sources reduced accuracy, especially in noisy conditions. The distance between the sound source and the microphone array is another critical factor, similar to what was reported for traditional SSL (Table 3). The authors in [] found that an MLP’s azimuth error slightly decreased as the source moved from 1 m to 2.8 m, while [] showed that an LSTM’s accuracy dropped by about 7% when the source distance increased from 1 m to 2 m.

As with traditional methods, most ML/DL-based studies have overlooked the critical factors of computational latency and real-time performance. This lack of reporting is a notable gap in the literature. However, a few works have provided this information. For example, ref. [] reported that their ML-based model (PCA + DFE) had a latency of just 220 ms, enabling real-time SSL. Ref. [] also highlighted that, while SVM-based SSL requires a long training time, its localization processing time is shorter than that of traditional methods such as TDOA, facilitating closer-to-real-time performance.

5. Data and Learning Strategies

Deep learning sound-source localization requires extensive and diverse corpora as well as training paradigms that bridge the gap between laboratory conditions and everyday robotics. Mobile robots face motor ego-noise, rapidly changing geometries, overlapping talkers, and strong reverberation. We review (i) how training data are generated or collected, (ii) the augmentation schemes that mitigate over-fitting, and (iii) supervised, semi-/weakly-supervised, self-supervised, and transfer-learning paradigms that turn those data into robust models.

5.1. Data

Simulation pipelines: Most systems bootstrap with synthetic data because collecting ground-truth directions for every time frame is costly. The standard recipe convolves dry (reverberation-free) audio with room impulse responses (RIRs) generated by an image-source method (ISM) [] or its GPU-accelerated variants []. Open-source libraries such as Habets’ RIR generator [], Pyroomacoustics [], and ROOMSIM [,] can render millions of multichannel RIRs spanning room sizes, reverberation times, and source–array poses []. Models trained on such corpora generalize surprisingly well [,]; e.g., the VAST dataset [] covers a wide variety of geometries simulated in ROOMSIM, and mappings learned on this virtual dataset were shown to generalize to real test data. Simulated data nevertheless have limitations; for example, a simulated acoustic room (e.g., generated by Pyroomacoustics) is inherently unable to generate external diffuse noise or to simulate obstacles or separating walls within the room [,]. Dry-signal choice matters: mixing speech, noise, and sound events outperforms noise-only training (Krause et al. []). For robotics, narrowing the gap between simulation and reality requires specific considerations, such as ego-noise (generated by the robot itself) and, when microphones are mounted on a mobile robot, the simulation of recordings from moving microphones []. Achieving a high-fidelity simulation of SSL in robotics can avoid risky and costly field trials.
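The convolve-and-mix recipe can be sketched in a few lines of NumPy. The sketch below is a deliberately simplified stand-in: instead of an ISM simulator, it uses a hypothetical `toy_rir` made of a direct-path impulse plus an exponentially decaying noise tail, white noise replaces the dry recording, and the per-microphone delays are assumed values. A realistic pipeline would obtain the RIRs from one of the simulators named above.

```python
import numpy as np

def toy_rir(delay_samples, fs, rt60=0.3, rng=None):
    """Hypothetical RIR: unit direct path at `delay_samples`, then a noise tail
    whose amplitude envelope falls by 60 dB after `rt60` seconds."""
    if rng is None:
        rng = np.random.default_rng()
    n = int(fs * rt60)
    t = np.arange(n) / fs
    tail = rng.standard_normal(n) * 10.0 ** (-3.0 * t / rt60)
    rir = np.zeros(delay_samples + 1 + n)
    rir[delay_samples] = 1.0
    rir[delay_samples + 1:] = 0.2 * tail
    return rir

def add_noise(clean, snr_db, rng):
    """Add white noise scaled so that the overall SNR equals `snr_db`."""
    noise = rng.standard_normal(clean.shape)
    scale = np.sqrt(np.mean(clean ** 2) / (np.mean(noise ** 2) * 10.0 ** (snr_db / 10.0)))
    return clean + scale * noise, scale * noise

rng = np.random.default_rng(0)
fs = 16000
dry = rng.standard_normal(fs)                 # 1 s of white noise as a stand-in for dry audio
mic_delays = [10, 13, 16, 19]                 # assumed direct-path delays (samples) per microphone
reverberant = np.stack([np.convolve(dry, toy_rir(d, fs, rng=rng))[:len(dry)]
                        for d in mic_delays])
noisy, noise = add_noise(reverberant, snr_db=0.0, rng=rng)
measured_snr_db = 10.0 * np.log10(np.mean(reverberant ** 2) / np.mean(noise ** 2))
```

Because the source position (here encoded only through `mic_delays`) is chosen by the simulation, every generated example comes with an exact ground-truth label, which is the main reason synthetic data are attractive for training.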

Recorded datasets: Real corpora remain indispensable for evaluation. Regarding data collection, several international challenges organized in recent years have motivated the public sharing of datasets. Among the most widely used real-recorded benchmarks for modern localization networks are the sound-event localization and detection (SELD) datasets released by the Detection and Classification of Acoustic Scenes and Events (DCASE) challenge []. Open science is a guiding principle of DCASE: every task ships an openly licensed dataset, a baseline system, and a fixed evaluation protocol, enabling reproducible comparison across systems and years. For SSL, the relevant tasks included the localization of static sound sources [] as well as moving ones [,,]. The latest developments of the SELD task include the use of audio-visual input [] and, in 2024, distance estimation []. These datasets were recorded in real-world reverberant and noisy environments containing different sound types (e.g., human speech and barking dog sounds). As highlighted in the survey by Grumiaux et al. [], the datasets of the DCASE challenges have become the benchmark for deep learning-based SSL, e.g., [,]. Although the recordings are not captured on mobile robots, the datasets’ noisy, reverberant, multi-source scenarios mirror many robotic deployments and could therefore serve as an invaluable data source for some robotic research. The acoustic source localization and tracking (LOCATA) challenge [] provides another comprehensive data corpus encompassing scenarios from a single static source to multiple moving speakers, using various microphone arrays (from a 15-microphone planar array to a 12-microphone robot head array and even binaural hearing aids). This dataset could be very beneficial for robotic SSL since it includes data captured with a pseudo-spherical array of 12 microphones integrated into a prototype head for the humanoid robot NAO.