Abstract

With the global surge in patent filings, accurately evaluating similarity between patent documents has become increasingly critical. Traditional similarity assessment methods—primarily based on unimodal inputs such as text or bibliographic data—often fall short due to the complexity of legal language and the semantic ambiguity that is inherent in technical writing. To address these limitations, this study introduces a novel multimodal patent similarity evaluation framework that integrates weak AI techniques and conceptual similarity analysis of patent drawings. This approach leverages a domain-specific pre-trained language model optimized for patent texts, statistical correlation analysis between textual and bibliographic information, and a rule-based classification strategy. These components, rooted in weak AI methodology, significantly enhance classification precision. Furthermore, the study introduces the concept of conceptual similarity—as distinct from visual similarity—in the analysis of patent drawings, demonstrating its superior ability to capture the underlying technological intent. An empirical evaluation was conducted on 9613 patents in the manipulator technology domain, yielding 668,010 document pairs. Stepwise experiments demonstrated a 13.84% improvement in classification precision. Citation-based similarity assessment further confirmed the superiority of the proposed multimodal approach over existing methods. The findings underscore the potential of the proposed framework to improve prior art searches, patent examination accuracy, and R&D planning.

1. Introduction

As global patent filings continue to surge, the number of active patents reached approximately 18.59 million as of 2023, representing a 1.78-fold increase from 10,821,400 in 2014 []. Within such a vast database, accurately identifying similar technologies has become a critical task in the patent ecosystem. This difficulty in discovering and distinguishing similar technologies arises from various factors. The complexity of technical expressions written in legal terminology [] and issues of synonymy [,] often hinder the accurate understanding of the underlying technological similarities. Consequently, patent examiners, ecosystem stakeholders, and inventors face substantial time and financial burdens when conducting prior art searches or attempting to avoid infringement. Inaccurate search results can further lead to serious issues, including delays in patent examinations, legal disputes, and misdirection in R&D planning.

Patent documents are inherently multimodal, composed of textual descriptions, bibliographic metadata, and drawing images. Each component reflects a different aspect of the invention, and their integrated use enables more accurate similarity evaluation. The text component describes the technical content and implementation methods of the invention in detail, while the bibliographic data (such as IPC classification codes, citation information, and inventor details) indicate the technological domain and its relevance [,,,]. Liu et al. (2011) demonstrated improved search accuracy by combining bibliographic fusion with text mining techniques []. Evaluating patent similarity using bibliographic data, such as citations or backward citations, remains a valid approach today [,,]. Yoo et al. (2023) proposed a formula that combines similarity scores based on bibliographic features (e.g., IPC codes, citation data, inventor details) with text-based similarity using LLaMA2 [].

However, extracting documents that are similar to the query document is challenging when relying solely on simple keywords or a simple weighted combination of text and bibliographic information. This difficulty arises because the search data are imbalanced and unlabeled, and because the criteria for weighting are ambiguous.

Several studies have also explored the combined use of text and images. Csurka et al. (2011) incorporated patent drawings into a multimodal patent retrieval system that used text, images, and IPC codes []. Vrochidis et al. (2014) proposed a content- and concept-based image retrieval method by leveraging both the visual similarity of patent drawings and their annotated text descriptions []. Patent drawings, in particular, visually represent the complex structure and principles of an invention, supporting a clearer interpretation of the claims. However, when classified purely from a visual perspective, patent drawings often take the form of binary images with over nine different categories, which are not directly aligned with the technological classification. Furthermore, a single drawing may include elements from multiple categories, making automated classification into a single type challenging. As a result, visual similarity does not necessarily correspond to technological similarity. Moreover, most existing patent similarity studies focus on text alone, or combine text with either bibliographic or image data [,], while integrated approaches that simultaneously consider all three components of patent documents remain extremely rare.

This study evaluated patent similarity using a model-agnostic multimodal approach [], weak AI techniques, and the conceptual similarity of patent drawings in order to overcome the limitations of existing similarity evaluation methods and improve the precision of similar document classification. The proposed method demonstrated higher accuracy and precision than conventional approaches. By reducing noise in search results and minimizing unnecessary similarity judgments, the approach offers meaningful time and cost savings for patent examiners, research institutes, and industry practitioners. An overview of the proposed method is presented in Table 1.

Table 1.

Overview of existing research and proposed methods.

The contributions of this study are as follows. First, the concept of conceptual similarity in patent drawings is introduced, and it is empirically demonstrated that this measure better reflects the technical intent of an invention than visual similarity. Second, a statistical correlation between textual similarity and bibliographic similarity is analyzed and validated, providing a new standard for evaluating document similarity. Lastly, the practical applicability of rule-based classification methods and the usefulness of stepwise evaluation strategies are also confirmed.

2. Theoretical Background

2.1. Multimodal Approach

The multimodal approach refers to a methodology that integrates multiple modalities such as text, images, audio, and video to solve problems, leveraging complementary information from diverse sources to achieve higher accuracy than unimodal methods. Baltrušaitis et al. (2018) categorized modality integration methods into model-agnostic approaches and model-based approaches, which include kernel-based models, graphical models, and neural networks [].

A widely used model-based approach is one that utilizes neural networks. Most state-of-the-art multimodal neural network models are Transformer-based, with ViLBERT, UNITER, CLIP, and BLIP being representative examples [,,,,]. A major advantage of deep neural network approaches is that they can learn from large amounts of data and effectively learn inter-modality interactions. In other words, multimodal fusion models combine two semantically related modalities into a single vector representation. However, deep learning-based models are difficult to apply directly to long texts and elaborate drawing images, such as those in patent documents, due to limitations in input length, semantic mismatches between text and images, and structural complexity.

Model-agnostic approaches can be broadly classified into three types: early fusion, late fusion, and hybrid fusion. Early fusion combines features from different modalities at the input stage, enabling the model to learn correlations and interactions from the outset. Late fusion processes each modality independently and merges the results at the final stage using mechanisms such as averaging. Hybrid fusion integrates the advantages of both methods within a unified framework []. Shutova et al. (2016) proposed two techniques: intermediate fusion, which concatenates L2-normalized linguistic and visual vectors, and late fusion, which calculates independent similarity scores and averages them []. Morvant et al. (2014) proposed a method based on the concept of majority voting, where each modality’s output is treated as a voter and weighted optimally during fusion []. Khanna and Sasikumar (2013) proposed a rule-based system for multimodal emotion recognition. They constructed rules to classify emotions based on features extracted from various modalities and combined the results from multiple rules with a confidence formula to select the most likely emotion [].
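The difference between concatenation-based fusion and late fusion can be illustrated with a minimal Python sketch. The function names and the toy two-dimensional vectors are hypothetical and are not taken from the cited works; cosine similarity is assumed as the comparison measure.

```python
import math

def l2_normalize(vec):
    """Scale a vector to unit length (returned unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na > 0 and nb > 0 else 0.0

def concat_fusion_similarity(text_a, image_a, text_b, image_b):
    """Feature-level fusion: concatenate L2-normalized modality vectors, then compare."""
    fused_a = l2_normalize(text_a) + l2_normalize(image_a)
    fused_b = l2_normalize(text_b) + l2_normalize(image_b)
    return cosine(fused_a, fused_b)

def late_fusion_similarity(text_a, image_a, text_b, image_b):
    """Late fusion: score each modality independently, then average the scores."""
    return (cosine(text_a, text_b) + cosine(image_a, image_b)) / 2.0

# Toy example: the text vectors are orthogonal, the image vectors are identical.
late = late_fusion_similarity([1, 0], [1, 1], [0, 1], [1, 1])
concat = concat_fusion_similarity([1, 0], [1, 1], [0, 1], [1, 1])
```

With unit-normalized inputs and plain cosine similarity, the two functions return the same value, because the dot product of the concatenated vectors is the sum of the per-modality cosines. The strategies diverge once unequal weights are used or a model is trained on the fused vector.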

This study adopts a model-agnostic approach, using a rule-based system to combine textual and bibliographic information and an averaging method to combine text and image data. Rule-based merging relies on statistical analysis results, thereby avoiding errors that arise from arbitrary weighting. The averaging method offers the advantages of simple computation and ease of application to datasets with similar structures.

2.2. Weak AI

John Searle (1980) was the first to introduce the concepts of strong AI and weak AI []. Strong AI refers to the view that an appropriately programmed computer genuinely possesses understanding and other cognitive states, whereas weak AI is defined as a tool that simulates intelligent behavior. Russell and Norvig (2016) described weak AI as a system focused on solving specific problems, characterized by its ability to provide solutions within defined problem domains, execute clearly defined tasks, and emphasize output performance rather than replicating human cognitive processes []. Core features of weak AI include a problem-centric design, imitation and execution, goal-directed functionality, and operation within a limited scope.

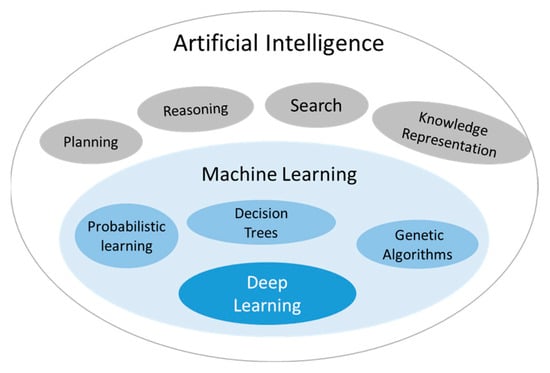

Building on these theoretical developments, Gary Marcus and Ernest Davis (2019) expanded the conceptual boundaries of AI to include not only machine learning and traditional programming but also rule-based algorithms and human-curated knowledge systems []. The categories of AI are summarized in Figure 1.

Figure 1.

Artificial intelligence and some of its subfields [].

The proposed method in this study reflects the core attributes of weak AI through three distinct components: a domain-specific language model for patent documents, a statistical correlation analysis, and a rule-based classification system.

2.2.1. Domain-Specific Solution: Pre-Trained Language Model for Patents

Since the introduction of the BERT model, various pre-trained language models have been developed. These include SciBERT for scientific papers [], BioBERT for biomedical applications [], Legal-BERT for legal documents [], and PatentBERT for patent documents []. Domain-specific models can measure semantic similarity between texts in a given field more accurately than general language models. Patent texts express technology in legal terms and therefore require language models specialized for patents [].

The BERT-for-Patents model, based on BERT-Large, was trained on over one million publicly available patents from the U.S. Patent and Trademark Office. It employs a tokenizer optimized for patent texts and comprises 346 million parameters. The model generates 1024-dimensional feature vectors without fine-tuning and applies either the CLS token or mean pooling for sentence-level embeddings []. Yu et al. (2024) presented an ensemble model combining BERT-for-Patents with three other BERT variants, applying weighted adjustments to improve semantic similarity and achieve higher accuracy in CPC classification tasks [].
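The two pooling options mentioned above can be sketched in plain Python. This is an illustrative sketch only: it assumes that token-level vectors and an attention mask (1 for real tokens, 0 for padding) have already been produced by an encoder, and the helper names `mean_pool` and `cls_pool` are hypothetical rather than part of any library.

```python
def mean_pool(token_vectors, attention_mask):
    """Average the token vectors whose mask entry is 1, ignoring padding positions."""
    dim = len(token_vectors[0])
    total = [0.0] * dim
    count = 0
    for vec, keep in zip(token_vectors, attention_mask):
        if keep:
            count += 1
            for i, value in enumerate(vec):
                total[i] += value
    if count == 0:
        raise ValueError("attention mask selects no tokens")
    return [t / count for t in total]

def cls_pool(token_vectors):
    """Use the vector at the first position (the CLS token) as the sentence embedding."""
    return list(token_vectors[0])

# Toy input: three token positions with 2-dimensional vectors; the last one is padding.
tokens = [[1.0, 2.0], [3.0, 4.0], [100.0, 100.0]]
mask = [1, 1, 0]
pooled = mean_pool(tokens, mask)   # average of the first two vectors only
first = cls_pool(tokens)
```

Masking matters for mean pooling because padded positions would otherwise pull the average toward values that carry no meaning; in a real model the vectors would be 1024-dimensional rather than 2-dimensional.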

2.2.2. Statistical Approach

This component analyzes the correlation between textual and bibliographic similarity using statistical methods. The advantage of statistical analysis lies in its ability to quantify the strength and direction of relationships between variables, offering insight into how one variable influences another. These correlation coefficients can also inform strategies for improving model inputs and implementing effective weighting schemes.

Previous studies have used IPC co-classification analysis [,,,] or backward citation information [,,,] to analyze the similarity of two patent technologies. However, there has been no empirical evidence of a statistical correlation between text similarity and bibliographic similarity, such as IPC co-classification codes or backward citation information. Such a relationship can be tested through correlation analysis of text similarity and bibliographic similarity for patent data within a given technological category.

2.2.3. Rule-Based System

Rule-based systems classify input data based on predefined criteria derived from domain expertise []. Although unsupervised learning techniques like clustering are often employed in the absence of labeled data, such methods pose challenges in evaluation and interpretability []. In contrast, rule-based systems offer enhanced transparency and explainability by providing clear reasoning for each classification outcome, despite limitations in scalability and generalization. This study uses statistical analysis results to classify document pairs into four types using an if–then method.

2.2.4. Performance Evaluation Method

The classification results obtained using a rule-based system are evaluated using a confusion matrix. This matrix systematically identifies discrepancies between predicted and actual classes and consists of four components: true positive (TP), false positive (FP), false negative (FN), and true negative (TN). A confusion matrix is primarily a tool for analyzing the classification results of machine learning models []. However, it is also useful in rule-based classification systems, as it makes the impact of each rule on classification accuracy easy to identify. Performance evaluation metrics include accuracy, precision, recall, and F1 score; MAP and NDCG can additionally be used.

Thus, this study’s approach to assessing patent document similarity reflects the typical characteristics of weak AI, which focuses on solving specific problems: the use of domain-specific language models, statistical correlation analysis, data classification using a rule-based system, and performance evaluation utilizing a confusion matrix. Weak AI is optimized to achieve clear goals within a limited scope and has strengths in its interpretability, ease of implementation, and reliability, making it an efficient and practical alternative to complex AI models [].

2.3. Conceptual Similarity in Patent Drawings

In patent documents, drawings visually describe the technical characteristics and functional effects of an invention. Therefore, the conceptual similarity of patent drawings is grounded in the core principles and functional resemblances of the underlying technological invention. This notion of conceptual similarity is particularly critical in patent infringement lawsuits, where it is used extensively to interpret the technical scope of patent claims and to assess whether a competing product is structurally and functionally identical, or merely a design-around that maintains substantive equivalence.

However, an important characteristic of patent drawings is that the subject matter of a patent is often not an object with a specific shape. When no physical shape exists, as in chemical inventions or method inventions, flowcharts that express the content of the chemical formula or the order of the method are often used as drawings. Kravets et al. (2017) classified patent drawings into eight types: technical drawings, chemical structures, program codes, sequences of genes, flowcharts and UML diagrams, diagrams or graphs, mathematical formulas, and tables []. Jiang et al. (2020) and Castorena et al. (2020) added symbols as a further type [,]. Because visual similarity is evaluated from the properties or characteristics of the images themselves, and because the drawing types are so varied, visual similarity has difficulty directly reflecting the content of the technology.

To address these issues, Csurka et al. (2012), Vrochidis et al. (2012), and Vrochidis et al. (2014) separately stored patent drawings and texts and proposed image retrieval based on visual similarity and concept-based image retrieval functions, respectively [,,]. However, it was difficult to directly compare the similarity between patent drawings and texts. Therefore, in this section, we propose the conceptual similarity of patent drawings, which has rarely been addressed in patent similarity assessment.

Image similarity is generally categorized into visual similarity and conceptual similarity. Terms used in prior research, such as visual, perspective, and content-based image, all refer to the visual content of an image [,,,]. In contrast, the term contextual is often used interchangeably with concept-based or context-based approaches [,,,,,,]. Visual similarity is commonly used for image retrieval, classification, or prediction tasks. Conceptual similarity, on the other hand, leverages various forms of associated textual data (including descriptions, keywords, tags, annotations, captions, labels, and comments) to retrieve semantically similar images []. Conceptual similarity evaluates the degree to which particular entities or ideas share similar purposes, functions, structures, or meanings across different contexts.

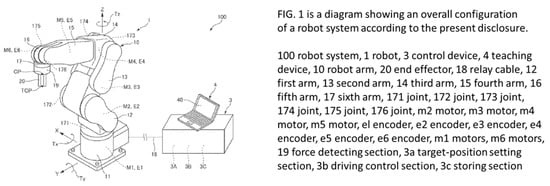

This study evaluates the conceptual similarity of patent drawings using the “BRIEF DESCRIPTION OF THE DRAWINGS” and “DESCRIPTION OF EXEMPLARY EMBODIMENTS” in patent documents. Examples of a patent document are shown in Figure 2.

Figure 2.

Patent application examples (adapted from U.S. Patent Publication No. 20220134571A1, available via the USPTO database []).

3. Data and Methodology

3.1. Overview of Data Analysis and Similarity Evaluation Methods

This section outlines the key components used to evaluate similarity in patent documents, which are typically categorized into three types: textual information, bibliographic information, and visual content such as drawings. Within the textual category, the abstract and the independent claim are the most directly relevant components for evaluating technological similarity. The abstract provides a summary of the patented technology, while the independent claim specifies the configuration or method underlying the invention. Textual data serve as the primary source of information for assessing technological similarity between patents. In terms of bibliographic information, patent classification codes and cited references have been identified in prior research as highly correlated with patent similarity. Additionally, the filing year is utilized to analyze the temporal correlation of patent similarity over time.

Finally, patent drawings, which serve to describe or illustrate the technology, are also considered as relevant indicators in evaluating patent similarity. The summary of the patent document elements relevant to similarity evaluation is shown in Table 2.

Table 2.

Patent document elements relevant to similarity evaluation.

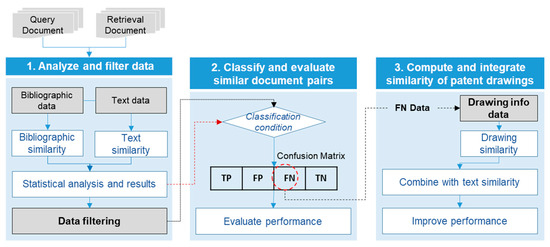

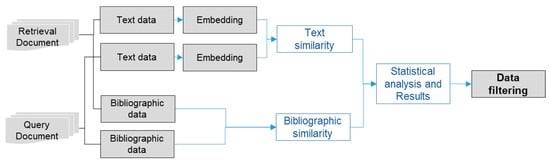

To assess the similarity of patent documents, this study uses three elements of patent documents (text, bibliographic information, and drawings) and combines a multimodal approach, weak AI techniques, and the conceptual similarity of patent drawings. In Step 1, the textual and bibliographic similarities between two documents (Query Document and Search Document) are calculated, and noisy data are filtered out based on statistically significant results. In Step 2, the statistical analysis results and a rule-based system are used to classify the filtered data into four types. In Step 3, the conceptual similarity of patent drawings is calculated for the FN-classified dataset and combined with text similarity. The experimental results are then used to verify that classification accuracy improves and that the method outperforms single-modality and arithmetic combination methods. The process of the similarity assessment for patent documents using a multimodal approach is shown in Figure 3.

Figure 3.

Similarity assessment process for patent documents using a multimodal approach (conceptualized and developed as part of this study).

The main methodologies employed in this study are as follows. Text embedding is performed using the Anferico/BERT-for-Patents model with a mean pooling approach. Statistical analyses include Spearman correlation coefficients and one-way ANOVA. Cosine similarity is applied to textual information, while Jaccard similarity is used for bibliographic data. Similarity scores are combined using scalar multiplication and the geometric mean. Classification is conducted using a rule-based system, and model performance is evaluated using precision and accuracy metrics. The summary of the methods is shown in Table 3.

Table 3.

Methods for assessing similarity in patent documents.

3.2. Step 1: Similarity Analysis and Data Filtering

3.2.1. Calculating Text Similarity

Textual information includes both the abstract and the representative claim columns of patent documents. The BERT model is used to obtain text embeddings, and the cosine similarity is calculated for each column’s embeddings. The combined similarity of the two text columns is computed using scalar multiplication, which multiplies the two similarity values arithmetically. Scalar multiplication retains large values while suppressing smaller ones, thus accentuating the difference in similarity between documents.
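As a minimal sketch of this calculation, the following Python code computes the cosine similarity for each text column and multiplies the two values. The function names and the toy vectors are hypothetical; in the actual pipeline the inputs would be the embeddings of the abstracts and representative claims.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # assumption: a zero vector is treated as dissimilar to everything
    return dot / (norm_a * norm_b)

def combined_text_similarity(abstract_q, abstract_s, claim_q, claim_s):
    """Multiply the abstract similarity by the claim similarity."""
    return cosine_similarity(abstract_q, abstract_s) * cosine_similarity(claim_q, claim_s)

# Toy embeddings: identical abstracts, claims at a 45-degree angle.
score = combined_text_similarity([1.0, 0.0], [1.0, 0.0], [1.0, 0.0], [1.0, 1.0])

# Multiplication widens the gap between strong and moderate pairs:
# column similarities (0.9, 0.9) vs. (0.6, 0.6) differ by 0.3 per column,
# while their products (0.81 vs. 0.36) differ by 0.45.
gap_after_product = 0.9 * 0.9 - 0.6 * 0.6
```

The last two lines illustrate the property described above: for similarity values between 0 and 1, the product penalizes pairs that are only moderately similar in both columns more heavily than pairs that are highly similar in both.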

Bali et al. (2024) evaluated the BERT model as a language model capable of measuring context-aware similarity [], and Yu et al. (2024) showed quantitatively, through individual performance and ensemble contribution, that the Anferico/BERT-for-Patents model is effective in measuring patent document similarity []. Blume et al. (2024) stated that fine-tuning of the BERT model is necessary to determine similarity in a way that is suitable for patents [].

3.2.2. Calculating Bibliographic Information Similarity

The similarity assessment using bibliographic information incorporates three variables: the complete CPC classification codes, the representative CPC classification code, and backward citation data. Of the two available types of citation data, only backward citations are used, so that similarity is evaluated from the perspective of the patent application period. The Jaccard similarity metric is applied to measure the degree of overlap between sets of complete CPC codes and between sets of citations. For the representative CPC classification, similarity is operationalized using a three-level coding scheme: a value of 2 is assigned when both documents share an identical CPC code (e.g., B25J-0001/00 and B25J-0001/00), a value of 1 is given when the two codes match up to the fourth hierarchical level (e.g., B25J-0001/00 and B25J-0001/03), and a value of 0 is applied when the codes differ entirely (e.g., B25J-0001/00 and B25J-0009/0003). An overview of the bibliographic similarity calculation methods is presented in Table 4.

Table 4.

Bibliographic information similarity calculation methods.
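The bibliographic measures described in this subsection can be sketched as follows. Two points are assumptions made for illustration and are not stated in the text: matching "up to the fourth hierarchical level" is interpreted as sharing the part of the code before the slash, which is consistent with the three examples given, and the Jaccard similarity of two empty sets is defined as 0. The sample code sets are hypothetical.

```python
def jaccard(set_a, set_b):
    """Jaccard similarity: size of the intersection divided by size of the union."""
    a, b = set(set_a), set(set_b)
    if not a and not b:
        return 0.0  # assumption: two empty sets are treated as having no overlap
    return len(a & b) / len(a | b)

def cpc_main_match(code_q, code_s):
    """Three-level score for representative CPC codes: 2 identical, 1 same main group, 0 otherwise."""
    if code_q == code_s:
        return 2
    # Assumption: the fourth hierarchical level is the text before the slash.
    if code_q.split("/")[0] == code_s.split("/")[0]:
        return 1
    return 0

# Examples taken from the three-level coding scheme in the text.
identical = cpc_main_match("B25J-0001/00", "B25J-0001/00")
same_group = cpc_main_match("B25J-0001/00", "B25J-0001/03")
different = cpc_main_match("B25J-0001/00", "B25J-0009/0003")

# Hypothetical complete CPC code sets sharing one of three distinct codes.
cpc_all = jaccard({"B25J-0001/00", "B25J-0009/16"}, {"B25J-0009/16", "B25J-0015/00"})
```

The same `jaccard` function applies unchanged to sets of backward citation identifiers.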

3.2.3. Correlation Analysis Between Textual and Bibliographic Similarity

To examine the relationship between textual similarity and bibliographic similarity, correlation analyses are conducted using appropriate statistical methods tailored to the characteristics of the variables. Spearman’s rank correlation coefficient is applied to analyze the relationships between textual similarity and both complete classification codes and citation-based similarity, due to the non-linear nature of the variables []. In contrast, one-way analysis of variance (ANOVA) is used to assess the relationship between textual similarity and representative classification code groups, testing for mean differences across multiple groups []. Table 5 summarizes the correlation methods and corresponding similarity ranges used in this analysis.

Table 5.

Correlation analysis methods between textual and bibliographic information.
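For reference, Spearman's rank correlation can be computed as the Pearson correlation of the ranks, with tied values receiving their average rank. The sketch below is a plain-Python illustration under that standard definition; it is not the code used in this study, and the sample values are hypothetical.

```python
import math

def average_ranks(values):
    """Assign 1-based ranks, giving tied values the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def pearson(x, y):
    """Pearson correlation coefficient; NaN if either input is constant."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return float("nan")
    return cov / math.sqrt(var_x * var_y)

def spearman(x, y):
    """Spearman's rho: Pearson correlation applied to the rank-transformed data."""
    return pearson(average_ranks(x), average_ranks(y))

# A monotonic but non-linear relationship still yields a perfect rank correlation.
rho = spearman([1, 2, 3, 4], [1, 4, 9, 16])
```

Because only the ordering of values matters, the coefficient is insensitive to the non-linear scaling that motivates the use of Spearman's method here. Average ranking of ties is relevant for similarity columns in which many pairs share the same value, such as 0.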

The correlation analysis between bibliographic similarity and text similarity is repeated for each filing year of the searched document, calculating a correlation index for each year. This correlation is related to the time-series flow of the query document and the searched document. Based on the query document, the filing year of the searched document with the highest correlation index is identified, and the start date of the searched document is adjusted to that filing year. This approach helps reduce false positives (FPs) and false negatives (FNs) by narrowing the time span of the retrieved documents and refining the classification of similar document sets. The overall input, analysis, and filtering procedures for the full dataset are illustrated in Figure 4.

Figure 4.

Procedure for data input, analysis, and filtering stages.

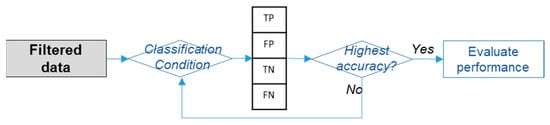

3.3. Step 2: Classification and Evaluation of Similar Document Pairs

Step 2 involves classifying all document pairs into four categories based on classification criteria. If the representative classification code group has a value of 2 and the overall classification code similarity or citation similarity is close to 1, the document pair can be considered very similar. Accordingly, by treating bibliographic similarity as the actual similarity (x) and text similarity as the predicted value (y), four classification categories can be defined.

The threshold value of textual similarity refers to the value that corresponds to the highest accuracy in classifying document pairs. As illustrated in Figure 5, by using both the actual similarity criteria and predicted similarity criteria in conjunction with a confusion matrix, document pairs can be categorized into four types as shown in Table 6.

Figure 5.

Method and procedure for classifying document pairs.

Table 6.

Document pairs classification using confusion matrix.

3.4. Step 3: Conceptual Similarity Calculation and Integration of Patent Drawings

In Step 3, conceptual similarity between patent drawings is measured using their accompanying textual descriptions, and this similarity is then integrated with textual similarity to improve document pair classification performance. The document pairs used here are those classified as FN in Step 2. FN pairs are actually similar (positive) document pairs that were missed because their text similarity fell below the threshold. Extracting the patent drawings and descriptions shown in Figure 2 gives the result presented in Figure 6.

Figure 6.

Example of patent drawing and description (extracted from U.S. Patent Publication No. 20220134571A1, available via the USPTO database []).

The description text attached to each patent drawing is embedded, and the cosine similarity between the resulting embeddings is calculated. To combine the drawing-based conceptual similarity with textual similarity, the geometric mean is used. Compared to the arithmetic mean, which can be skewed by an extremely large value in one similarity measure, the geometric mean provides a more stable metric by reducing the impact of such outliers.
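The effect of the geometric mean can be illustrated with a short Python sketch. The function names and the sample similarity values are hypothetical, and rejecting negative inputs is an assumption made here because the square root of a negative product is undefined.

```python
import math

def geometric_mean(text_sim, drawing_sim):
    """Geometric mean of two non-negative similarity scores."""
    if text_sim < 0 or drawing_sim < 0:
        raise ValueError("geometric mean requires non-negative similarities")
    return math.sqrt(text_sim * drawing_sim)

def arithmetic_mean(text_sim, drawing_sim):
    """Arithmetic mean, shown only for comparison."""
    return (text_sim + drawing_sim) / 2.0

# Hypothetical pair: one very high similarity and one low similarity.
high, low = 0.95, 0.20
geo = geometric_mean(high, low)
ari = arithmetic_mean(high, low)
```

For this pair the arithmetic mean is 0.575, while the geometric mean is lower because the low score limits the combined value. A pair therefore needs reasonably high similarity in both modalities to obtain a high combined score.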

4. Experiments and Results

4.1. Description of Data Collection, Sampling, and Variables

In this study, manipulator technology was selected as the experimental subject, and U.S. patent application data were collected. Manipulator technology corresponds to the CPC classification code group B25J, which is a complex technology with approximately 290 subgroups. For the experiment, 70 patents filed in 2021 were set as the query dataset, and 9543 patents filed between 2000 and 2020 were set as the search dataset to establish the basis for experimentation and analysis. The overall data collection and sampling process is summarized in Table 7.

Table 7.

Overview of patent datasets used in the experiment, including Query/Search datasets and collected image data.

As shown in Table 8, the number of applications in the Search Dataset increased gradually from 2000 and surpassed 1000 in 2018. Table 9 shows the distribution by technology classification. Within the main groups of the representative classification codes, B25J-0009 (Program-controlled manipulators) accounted for 5683 applications and B25J-0015 (Gripping heads) for 1493 applications, together representing 75.2% of the total data.

Table 8.

Number of patent applications by year in Search Dataset.

Table 9.

Number of patent applications by CPC in Search Dataset.

These datasets consist of seven columns, and the variable names and data formats are shown in Table 10.

Table 10.

Variable names and data formats.

4.2. Results and Analysis

4.2.1. Results of Textual and Bibliographic Similarity Calculations and Correlation Analysis

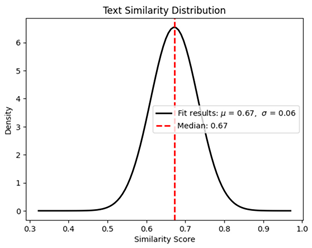

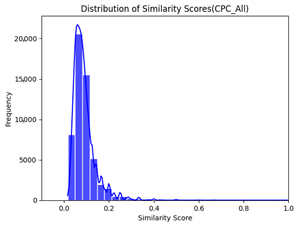

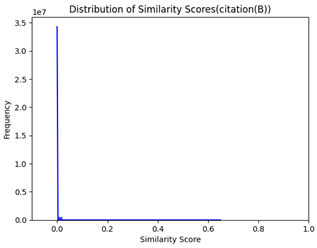

Pairwise similarity was calculated by matching each of the 70 query patents with each of the 9543 search patents, yielding 668,010 records with six columns: query_index (index of query patents), search_index (index of search patents), CPC_Main_match (similarity of representative CPC codes), CPC_All_match (similarity of complete CPC codes), citation(B)_match (similarity of backward citations), and text_bert (combined similarity of representative claims and abstracts, hereafter “textual similarity”). Table 11 presents the descriptive statistics and data distributions for the four similarity columns, excluding the two index columns.

Table 11.

Descriptive statistics for the four similarity columns.

text_bert shows a bell-shaped distribution ranging from 0.32 to 0.97, whereas the values of CPC_All_match and citation(B)_match are clustered below 0.1. CPC_Main_match took the value 0 (different classification codes) in 378,870 cases (56.7%), 1 (same group unit) in 278,698 cases (41.7%), and 2 (identical CPC_Main codes) in 10,442 cases (1.56%). The distributions of the four similarity measures are thus highly imbalanced.

First, we estimated the relationship between the variables by calculating Spearman’s correlation between text similarity and each of the three bibliographic similarities. The Spearman coefficient between text_bert and CPC_Main_match was 0.23, and that between text_bert and CPC_All_match was 0.21, indicating a weak positive correlation. In contrast, the coefficient between text_bert and citation(B)_match was 0.041, indicating a very weak correlation.

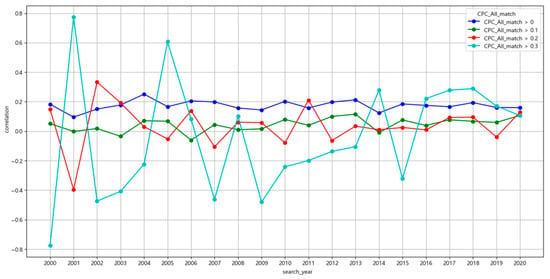

Second, Spearman correlations with text_bert were computed under four criteria for CPC_All_match (① greater than 0, ② greater than 0.1, ③ greater than 0.2, ④ greater than 0.3); the results are shown in Figure 7. For criteria ① to ③, the correlation coefficient does not change significantly by year. For criterion ④, relatively few data points exceed 0.3, so the correlation coefficient fluctuates widely by year. In 2018, however, the correlation coefficient increased somewhat for most criteria. In conclusion, the yearly correlation analysis between text similarity and CPC co-classification similarity showed that search documents filed from 2018 onward had the highest correlation with the query documents.

Figure 7.

Yearly correlation between text similarity and full classification code similarity based on experimental results of this study.

Finally, a one-way ANOVA was performed using CPC_Main_match and text_bert. The F-statistics for the entire dataset and the post-2018 dataset were 19,279.1 and 8104.45, respectively, and the p-values were all below 0.05, indicating statistical significance. This indicates that the between-group variation is significantly greater than the within-group variation in both datasets. The results of the statistical analysis for both the entire dataset and the post-2018 dataset are presented in Table 12.

Table 12.

Correlation analysis results between bibliographic similarity and textual similarity.

Restricting the search set to patents filed from 2018 onward, based on the statistical findings, reduces the comparison pairs to 273,980 (3914 search patents × 70 query patents).

4.2.2. Classification Criteria and Results of Similar Document Pairs

In this experiment, actual similar document pairs were defined using two variables (overall classification code similarity and representative classification code group) combined with an AND operation. The criteria for actual similar document pairs were “representative classification code group is 1 or more” and “overall classification code similarity is greater than 0.” Conversely, the criteria for actual dissimilar document pairs were “representative classification code group is 0” and “overall classification code similarity is also 0.” The conditional expressions are shown in Table 13.

Table 13.

Classification criteria for document pairs.
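The criteria above can be sketched as a small rule-based labeling function. This is a minimal illustration under stated assumptions: the function and parameter names are hypothetical, the predicted class is taken to be "text similarity strictly greater than the threshold", and pairs that satisfy neither rule are returned as None because their handling is not described in this section.

```python
from typing import Optional


def label_pair(main_group: int, cpc_all_similarity: float) -> Optional[bool]:
    """Actual label of a document pair from the two bibliographic variables.

    True  -> actual similar pair    (group >= 1 AND overall similarity > 0)
    False -> actual dissimilar pair (group == 0 AND overall similarity == 0)
    None  -> neither rule applies (handling is an assumption of this sketch)
    """
    if main_group >= 1 and cpc_all_similarity > 0:
        return True
    if main_group == 0 and cpc_all_similarity == 0:
        return False
    return None


def confusion_cell(actual_similar: bool, text_bert: float,
                   threshold: float = 0.77) -> str:
    """Map an actual label and a text similarity score to TP, FP, FN, or TN."""
    predicted_similar = text_bert > threshold  # assumed comparison operator
    if actual_similar:
        return "TP" if predicted_similar else "FN"
    return "FP" if predicted_similar else "TN"


# Hypothetical pair: shares a representative group, but text similarity is 0.76.
actual = label_pair(main_group=1, cpc_all_similarity=0.25)
cell = confusion_cell(actual, text_bert=0.76)
```

Because each rule is a plain conditional expression, the reason for any individual label can be read directly from the two input values.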

Based on the classification criteria for similar document pairs, Table 14 presents the classification results for textual similarity thresholds ranging from the average value of 0.68 to 0.80. The average value of text_bert in the dataset since 2018 is 0.68. When the threshold was increased in steps of 0.01, accuracy peaked at 0.886 at a threshold of 0.77 and then gradually decreased. At this accuracy, the corresponding precision is 0.617, and MAP, which represents ranking quality, is 0.7138. Accordingly, 0.77 was adopted as the text similarity threshold for predicting similar document pairs.

Table 14.

Classification performance of document pairs under varying mean text similarity thresholds (0.68–0.80).

The meaning of each indicator is as follows. The classification results at a threshold of 0.77 show that TNs (237,044 cases) account for approximately 86.5% of the entire dataset (273,980 cases); the remainder consists of FNs (27,682 cases), TPs (5711 cases), and FPs (3543 cases). In such an imbalanced dataset, the high proportion of TNs makes accuracy an unsuitable metric for evaluating model performance. In this study, accuracy is therefore used solely as a criterion for determining the text similarity threshold. Precision refers to the proportion of data that are actually positive among those predicted by the model as positive. In this study and experiment, precision is the most important performance metric, because low precision increases the number of false positives and therefore the review cost. Recall refers to the proportion of actually similar document pairs whose text similarity exceeds the threshold. Lowering the text threshold increases recall but decreases precision. This demonstrates that precision and recall generally exhibit a trade-off in data where the boundary between positive and negative is unclear. Finally, the F1 score evaluates the balance between precision and recall, but like recall, it is a secondary indicator to precision.
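The indicators can be recomputed directly from the four confusion matrix counts reported for the threshold of 0.77. The sketch below uses the standard definitions; the function name is hypothetical, and the counts are those given in the text.

```python
def classification_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Standard binary classification metrics from confusion matrix counts."""
    total = tp + fp + fn + tn
    precision = tp / (tp + fp)   # share of predicted positives that are correct
    recall = tp / (tp + fn)      # share of actual positives that are found
    return {
        "accuracy": (tp + tn) / total,
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / (precision + recall),
        "tn_share": tn / total,  # illustrates the class imbalance
    }


# Counts reported in the text for a text similarity threshold of 0.77.
metrics = classification_metrics(tp=5711, fp=3543, fn=27682, tn=237044)
```

With these counts, accuracy and precision round to the reported 0.886 and 0.617, while recall is much lower, which is consistent with the large number of FNs and with the decision to treat precision as the primary metric.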

In addition to this binary classification evaluation, we added MAP and NDCG@10 to evaluate the quality of similarity-based ranking. Both indices measure “whether similar documents are well ranked at the top when sorting patent documents with high similarity based on the query document” [,]. The MAP index is also used to evaluate search performance in patent searches []. The values of MAP and NDCG@10, evaluated with a precision focus at a threshold of 0.77, are 0.7138 and 0.7656, respectively. This indicates that some FPs are at the top of the ranking and TPs are not completely ranked at the top.
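The two ranking indices can be illustrated with a short sketch. It assumes binary relevance labels (1 for an actually similar document, 0 otherwise) listed in ranked order for each query document, and it normalizes average precision by the number of relevant documents in the ranked list; both choices, as well as the function names and sample rankings, are assumptions of this sketch rather than details taken from the study.

```python
import math


def average_precision(relevance):
    """Average precision of one ranked list of binary relevance labels."""
    hits = 0
    precision_sum = 0.0
    for rank, rel in enumerate(relevance, start=1):
        if rel:
            hits += 1
            precision_sum += hits / rank  # precision at this relevant position
    return precision_sum / hits if hits else 0.0


def mean_average_precision(rankings):
    """MAP: mean of the average precision over all query documents."""
    return sum(average_precision(r) for r in rankings) / len(rankings)


def ndcg_at_k(relevance, k=10):
    """NDCG@k: discounted gain of the ranking divided by that of the ideal order."""
    def dcg(labels):
        return sum(rel / math.log2(pos + 1)
                   for pos, rel in enumerate(labels[:k], start=1))

    ideal = dcg(sorted(relevance, reverse=True))
    return dcg(relevance) / ideal if ideal else 0.0


# Hypothetical ranked results for two query documents (1 = similar, 0 = not).
rankings = [[1, 0, 1, 0], [0, 1, 1, 0]]
map_score = mean_average_precision(rankings)
ndcg_scores = [ndcg_at_k(r, k=10) for r in rankings]
```

Both scores equal 1 only when every similar document is ranked above every dissimilar one, so values below 1 reflect FPs placed above TPs in the ranking.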

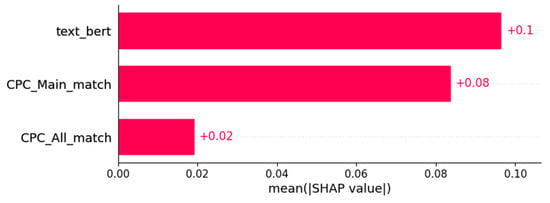

Using the classification results with a threshold value of 0.77, SHapley Additive exPlanations (SHAP) analysis was performed to analyze the impact of the variables on the classification results. As shown in Figure 8, the average SHAP values of the three variables (features) used in the conditional expression were calculated as 0.1 for text_bert, 0.08 for CPC_Main_match, and 0.02 for CPC_All_match. SHAP analysis is used to explain the output of a machine learning model and serves as an indicator of its explainability []. It can also be used to determine the importance or priority of variables used in rules in rule-based systems.

Figure 8.

Global feature importance plot based on experimental results of this study.

4.2.3. Calculation of Conceptual Similarity and Precision Improvement Results

In the third experimental phase, we derived conceptual similarity between representative images and combined it with text similarity. The image dataset used in this step targets data classified as a FN at a threshold of 0.77. While these FN data meet the criteria for true similar documents, their text similarity values are below the threshold. Because of limitations in data collection, among the 3502 FN pairs with text similarity greater than 0.75, we selected 365 document pairs and extracted descriptive texts for each representative image. These 365 pairs include 53 query documents and 157 search documents.

Calculating the conceptual similarity of the drawings and combining it with text similarity revealed that 204 document pairs previously classified as false negatives (FNs) were reclassified as true positives (TPs), increasing the number of TPs from 5711 to 5915. Consequently, precision improved by 1.30% (from 0.617 to 0.625) compared to before applying conceptual similarity to the drawings. These results are summarized in Table 15.

Table 15.

Changes in classification performance metrics by stage (based on a threshold of 0.77).

4.3. Summary and Validation of Experimental Results

As summarized in Table 15, precision increased step by step: 0.549 in Stage 1 (entire dataset classification), 0.617 in Stage 2 (filtered dataset classification), and 0.625 in Stage 3 (combined with conceptual similarity).
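The stepwise gains can be checked with simple arithmetic. The sketch below computes the relative improvement between stages from the reported precision values and recomputes the Stage 3 precision from the reported counts, assuming that the number of FPs (3543) is unchanged when 204 FNs are reclassified as TPs. The function name is hypothetical.

```python
def relative_gain(before: float, after: float) -> float:
    """Relative improvement from `before` to `after`, in percent."""
    return (after - before) / before * 100


# Precision values reported for the three stages.
stage_precision = {"stage1": 0.549, "stage2": 0.617, "stage3": 0.625}

gain_overall = relative_gain(stage_precision["stage1"], stage_precision["stage3"])
gain_stage3 = relative_gain(stage_precision["stage2"], stage_precision["stage3"])

# Stage 3 precision recomputed from counts: 204 FNs become TPs, FPs unchanged
# (the unchanged FP count is an assumption of this sketch).
tp_stage3 = 5711 + 204
fp_stage3 = 3543
precision_stage3 = tp_stage3 / (tp_stage3 + fp_stage3)
```

Under these assumptions, the overall relative gain from Stage 1 to Stage 3 is about 13.84%, the gain from Stage 2 to Stage 3 is about 1.30%, and the recomputed Stage 3 precision rounds to 0.625.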

Finally, we conducted three additional experiments to validate our research methodology. The first experiment validated the proposed method. We compared the number of document pairs with a citation similarity greater than 0 in the dataset under three conditions: when text similarity alone was used, when text similarity and overall classification code similarity were combined with a weight of 0.5, and when the proposed method was used. Citation similarity was excluded from the experimental variables because it had a very low correlation with text similarity, but it can be used to verify the experimental results.

As shown in Table 16, the numbers of data points with a citation similarity greater than 0 were 82, 57, and 106, respectively, indicating that the proposed method includes the largest number of similar document pairs.

Table 16.

Comparison of similarity evaluation methods.

In the second experiment, we computed visual similarity for 365 drawing images using the ResNet-50 model and compared it with conceptual similarity. As summarized in Table 17, visual similarity showed lower similarity values and larger variance compared to conceptual similarity.

Table 17.

Comparison of visual similarity and conceptual similarity in drawings.

When visual similarity was combined with text similarity, only 39 document pairs exceeded the threshold, compared with 204 document pairs in the conceptual similarity analysis. This demonstrates that the conceptual similarity approach is more effective at extracting similar documents that would otherwise be missed in data classified as false negatives (FNs).

The third experiment compared the results of calculating similarity by concatenating text and image embeddings with the results of combining conceptual similarity. As shown in Table 18, the embedding combination method exhibited a wider range of minimum and maximum values than the conceptual similarity combination method, and the variance was over seven times greater. High variance indicates high data volatility and low stability, which increases the likelihood of errors in the decision-making process. Furthermore, the embedding combination method presents limitations in terms of explainability, making the interpretation of the results difficult.

Table 18.

Comparison of embedding combination and conceptual similarity combination results.

Unlike conceptual similarity, concatenating embedding data from different formats is a widely used method in recent multimodal deep learning models, such as CLIP. While some studies have combined images and text from design patents [], embedding-level concatenation methods offer little benefit for learning complex technical descriptions and patent drawings together in patent data. The proposed method independently reflects the similarity of each modality and, when combined, emphasizes only cases where both similarities are simultaneously high, making it easy to identify differences in document similarity.
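The contrast between the two fusion strategies can be sketched as follows. This is a hedged illustration only: the vectors are made up, and the geometric mean is used as a stand-in combination rule that is high only when both modality similarities are high. It is an assumption of this sketch and is not necessarily the combination formula used in this study.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def embedding_concat_similarity(text_a, image_a, text_b, image_b):
    """Embedding-level fusion: concatenate modalities, then compare once."""
    return cosine(text_a + image_a, text_b + image_b)


def per_modality_similarity(text_sim, concept_sim):
    """Score-level fusion (assumed rule): geometric mean of the two scores."""
    return math.sqrt(text_sim * concept_sim)


# Made-up low-dimensional embeddings for two documents (assumption).
text_a, image_a = [0.9, 0.1, 0.3], [0.2, 0.8]
text_b, image_b = [0.8, 0.2, 0.4], [0.7, 0.1]

text_sim = cosine(text_a, text_b)        # similarity of the text modality
concept_sim = cosine(image_a, image_b)   # stand-in for conceptual similarity
concat_score = embedding_concat_similarity(text_a, image_a, text_b, image_b)
combined_score = per_modality_similarity(text_sim, concept_sim)
```

In the score-level variant, the contribution of each modality stays visible as a separate number, which is what makes the combined result easier to interpret than a single score over concatenated embeddings.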

5. Conclusions

This study employed a multimodal approach, weak AI, and the conceptual similarity of patent drawings to improve the precision of similarity evaluation between patent documents. The experiments confirmed a statistically significant positive correlation between text similarity and bibliographic similarity, with a particularly high correlation in recently retrieved documents based on the query documents. Through data filtering and rule-based classification, similar document pairs could be effectively classified, and by combining the conceptual similarity of patent drawings with text similarity, classification precision was further improved.

From an academic perspective, this study newly proposed the concept of conceptual similarity in patent diagrams, demonstrating that it better reflects technical intent compared to visual similarity. Furthermore, the statistical correlation analysis between text and bibliographic information provided a new standard for evaluating document similarity, and it was confirmed that the temporal context serves as an important criterion in evaluating patent document similarity. Based on these academic achievements, the practical implications of this study are as follows. The proposed method can be applied even in large-scale unlabeled or imbalanced data environments. The rule-based system and three-stage progressive evaluation method increase the model’s explainability, enabling reproducibility and application. Furthermore, compared to existing machine learning and deep learning models, the proposed method offers practical significance in that it efficiently reduces the execution time and computing resources required for analysis processes, including classification learning.

This study presents a useful method for prioritizing search results based on similarity when a patent database system generates a large number of search results, focusing on query documents. However, there are cases where two technically dissimilar patents show high textual similarity. A limitation of this study is that it does not logically clarify the difference between semantic and technical similarity in a language model. Future research should utilize SHAP to quantify or visualize the words and sentences that influence the similarity assessment of two patent texts. This approach is consistent with reviews of SHAP applications across NLP and with recent research on similarity model interpretation [].

Furthermore, the proposed method was evaluated only in the manipulator technology domain, and its applicability to other domains has not been verified. Therefore, future research is needed to broaden the scope of similarity assessment and to generalize the research model.

Author Contributions

Conceptualization, H.K.; methodology, H.K.; software, H.K.; validation, H.K. and G.G.; formal analysis, H.K.; investigation, H.K.; resources, G.G.; data curation, H.K.; writing—original draft preparation, H.K.; writing—review and editing, H.K. and G.G.; visualization, H.K.; supervision, G.G.; project administration, G.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original data, including query/search patent metadata and representative drawings used in conceptual similarity analysis, are openly available in Figshare at https://doi.org/10.6084/m9.figshare.29603543. A detailed list of image file names and corresponding patent IDs is included in two csv files (patent_query_data(70).csv, patent_search_data(9543).csv).

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| ANOVA | Analysis of Variance |
| CPC | Cooperative Patent Classification |
| MAP | Mean Average Precision |
| NDCG | Normalized Discounted Cumulative Gain |

References

- World Intellectual Property Organization. IP Statistics Data Center: International Patent Filings by Applicants. World Intellectual Property Organization. Available online: https://www3.wipo.int/ipstats/key-search/search-result?type=KEY&key=205 (accessed on 1 June 2025).

- Yoo, Y.; Jeong, C.; Gim, S.; Lee, J.; Schimke, Z.; Seo, D. A Novel Patent Similarity Measurement Methodology: Semantic Distance and Technological Distance. arXiv 2023, arXiv:2303.16767.

- Bali, A.; Bhagwat, A.; Bhise, A.; Joshi, S. Semantic Similarity Detection and Analysis for Text Documents. In Proceedings of the 2024 Second International Conference on Emerging Trends in Information Technology and Engineering (ICETITE), Vellore, India, 22–23 February 2024; pp. 1–9.

- Zhang, L.; Li, J.; Wang, C. Automatic synonym extraction using Word2Vec and spectral clustering. In Proceedings of the 2017 36th Chinese Control Conference (CCC), Dalian, China, 26–28 July 2017; pp. 5629–5632.

- Liu, S.H.; Liao, H.L.; Pi, S.M.; Hu, J.W. Development of a Patent Retrieval and Analysis Platform—A hybrid approach. Expert Syst. Appl. 2011, 38, 7864–7868.

- Preschitschek, N.; Niemann, H.; Leker, J.; Moehrle, M. Anticipating industry convergence: Semantic analyses vs IPC co-classification analyses of patents. Foresight 2013, 15, 446–464.

- Zhang, Y.; Shang, L.; Huang, L.; Porter, A.L.; Zhang, G.; Lu, J.; Zhu, D. A hybrid similarity measure method for patent portfolio analysis. J. Informetr. 2016, 10, 1108–1130.

- Ashtor, J.H. Investigating cohort similarity as an ex ante alternative to patent forward citations. J. Empir. Leg. Stud. 2019, 16, 848–880.

- Rodriguez, A.; Kim, B.; Turkoz, M.; Lee, J.M.; Coh, B.Y.; Jeong, M.K. New multi-stage similarity measure for calculation of pairwise patent similarity in a patent citation network. Scientometrics 2015, 103, 565–581.

- Whalen, R.; Lungeanu, A.; DeChurch, L.; Contractor, N. Patent similarity data and innovation metrics. J. Empir. Leg. Stud. 2020, 17, 615–639.

- Csurka, G.; Renders, J.M.; Jacquet, G. XRCE’s Participation at Patent Image Classification and Image based Patent Retrieval Tasks of the CLEF IP 2011, CLEF 2011 Workshop (Notebook Papers/Labs/Workshop). In Proceedings of the Multilingual and Multimodal Information Access Evaluation, Amsterdam, The Netherlands, 19–22 September 2011.

- Vrochidis, S.; Moumtzidou, A.; Kompatsiaris, I. Enhancing patent search with content based image retrieval. In Professional Search in the Modern World: COST Action IC1002 on Multilingual and Multifaceted Interactive Information Access; Springer: Cham, Switzerland, 2014; pp. 250–273.

- Jiang, S.; Luo, J.; Pava, G.R.; Hu, J.; Magee, C.L. A convolutional neural network based patent image retrieval method for design ideation. In Proceedings of the International Design Engineering Technical Conferences and Computers and Information in Engineering Conference (IDETC/CIE), ASM International, Virtual, 17–19 August 2020; Volume 83983, p. V009T09A039.

- Kucer, M.; Oyen, D.; Castorena, J.; Wu, J. DeepPatent: Large scale patent drawing recognition and retrieval. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–8 January 2022; pp. 2309–2318.

- Baltrušaitis, T.; Ahuja, C.; Morency, L.P. Multimodal machine learning: A survey and taxonomy. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 423–443.

- Lu, J.; Batra, D.; Parikh, D.; Lee, S. ViLBERT: Pretraining task agnostic visiolinguistic representations for vision and language tasks. In Proceedings of the 33rd International Conference on Neural Information Processing Systems, NeurIPS, Vancouver, BC, Canada, 8–14 December 2019; pp. 13–23.

- Chen, Y.C.; Li, L.; Yu, L.; El Kholy, A.; Ahmed, F.; Gan, Z.; Liu, J. UNITER: Universal image text representation learning. In Proceedings of the ECCV Workshops, Glasgow, UK, 23–28 August 2020.

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning Transferable Visual Models from Natural Language Supervision. In Proceedings of the 38th International Conference on Machine Learning (ICML 2021), Online, 18–24 July 2021; Volume 139, pp. 8748–8763.

- Li, J.; Li, D.; Xiong, C.; Hoi, S. BLIP: Bootstrapping Language-Image Pre-training for Unified Vision-Language Understanding and Generation. In Proceedings of the 39th International Conference on Machine Learning (ICML 2022), Baltimore, MD, USA, 17–23 July 2022; Volume 162, pp. 12888–12900.

- Shutova, E.; Kiela, D.; Maillard, J. Black holes and white rabbits: Metaphor identification with visual features. In Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL HLT), San Diego, CA, USA, 12–17 June 2016; pp. 160–170.

- Morvant, E.; Habrard, A.; Ayache, S. Majority vote of diverse classifiers for late fusion. In Proceedings of the Structural, Syntactic, and Statistical Pattern Recognition: Joint IAPR International Workshop, S+SSPR 2014, Joensuu, Finland, 20–22 August 2014; Lecture Notes in Computer Science. Springer: Berlin/Heidelberg, Germany, 2014; Volume 8621, pp. 153–162.

- Khanna, P.; Sasikumar, M. Rule based system for recognizing emotions using multimodal approach. Int. J. Adv. Comput. Sci. Appl. 2013, 4, 32–39.

- Searle, J.R. Minds, brains, and programs. Behav. Brain Sci. 1980, 3, 417–457.

- Russell, S.J.; Norvig, P. Artificial Intelligence: A Modern Approach; Pearson: London, UK, 2016.

- Marcus, G.; Davis, E. Rebooting AI: Building Artificial Intelligence We Can Trust; Vintage: New York, NY, USA, 2019.

- Beltagy, I.; Lo, K.; Cohan, A. SciBERT: A Pretrained Language Model for Scientific Text. In Proceedings of the EMNLP-IJCNLP, Hong Kong, China, 3–7 November 2019; pp. 3615–3620.

- Lee, J.; Yoon, W.; Kim, S.; Kim, D.; Kim, S.; So, C.H.; Kang, J. BioBERT: A pre trained biomedical language representation model for biomedical text mining. Bioinformatics 2020, 36, 1234–1240.

- Chalkidis, I.; Fergadiotis, M.; Malakasiotis, P.; Aletras, N.; Androutsopoulos, I. LEGAL-BERT: The Muppets Straight out of Law School. In Findings of the Association for Computational Linguistics: EMNLP 2020; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020.

- Srebrovic, R.; Yonamine, J. How AI, and Specifically BERT, Helps the Patent Industry. Google Blog. Available online: https://services.google.com/fh/files/blogs/bert_for_patents_white_paper.pdf (accessed on 1 June 2025).

- Yu, L.; Liu, B.; Lin, Q.; Zhao, X.; Che, C. Semantic Similarity Matching for Patent Documents Using Ensemble BERT related Model and Novel Text Processing Method. arXiv 2024, arXiv:2401.06782.

- Qiu, Z.; Wang, Z. Patent Citation Network Simplification and Similarity Evaluation Based on Technological Inheritance. IEEE Trans. Eng. Manag. 2021, 70, 4144–4161.

- Grosan, C.; Abraham, A. Rule Based Expert Systems. In Intelligent Systems: A Modern Approach; Springer: Berlin/Heidelberg, Germany, 2011; pp. 149–185.

- Almuqati, M.T.; Sidi, F.; Mohd Rum, S.N.; Zolkepli, M.; Ishak, I. Challenges in Supervised and Unsupervised Learning: A Comprehensive Overview. Int. J. Adv. Sci. Eng. Inf. Technol. 2024, 14, 1449–1455.

- Liang, J. Confusion Matrix: Machine Learning; POGIL Activity Clearinghouse: Lancaster, PA, USA, 2022; Volume 3.

- Kravets, A.; Lebedev, N.; Legenchenko, M. Patents Images Retrieval and Convolutional Neural Network Training Dataset Quality Improvement. In Proceedings of the IV International Research Conference “Information Technologies in Science, Management, Social Sphere and Medicine” ITSMSSM 2017, Tomsk, Russia, 5–8 December 2017; Atlantis Press: Paris, France, 2017; pp. 287–293.

- Castorena, J.; Bhattarai, M.; Oyen, D. Learning Spatial Relationships between Samples of Patent Image Shapes. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops CVPRW 2020, Seattle, WA, USA, 14–19 June 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 172–173.

- Vrochidis, S.; Moumtzidou, A.; Kompatsiaris, I. Concept Based Patent Image Retrieval. World Pat. Inf. 2012, 34, 292–303.

- Ghauri, J.A.; Müller Budack, E.; Ewerth, R. Classification of Visualization Types and Perspectives in Patents. In Proceedings of the International Conference on Theory and Practice of Digital Libraries TPDL 2023, Zadar, Croatia, 26–29 September 2023; Springer: Cham, Switzerland, 2023; pp. 182–191.

- Sidiropoulos, P.; Vrochidis, S.; Kompatsiaris, I. Content Based Binary Image Retrieval Using the Adaptive Hierarchical Density Histogram. Pattern Recognit. 2011, 44, 739–750.

- Vrochidis, S.; Papadopoulos, S.; Moumtzidou, A.; Sidiropoulos, P.; Pianta, E.; Kompatsiaris, I. Towards content-based patent image retrieval: A framework perspective. World Pat. Inf. 2010, 32, 94–106.

- Achille, A.; Ver Steeg, G.; Liu, T.Y.; Trager, M.; Klingenberg, C.; Soatto, S. Interpretable measures of conceptual similarity by complexity constrained descriptive auto encoding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–21 June 2024; pp. 11062–11071.

- Bartolini, I. Context Based Image Similarity Queries. In Proceedings of the Adaptive Multimedia Retrieval: User, Context, and Feedback—Third International Workshop, AMR 2005, Revised Selected Papers, Glasgow, UK, 28–29 July 2005; Springer: Berlin/Heidelberg, Germany, 2006; pp. 222–235.

- Franzoni, V.; Milani, A.; Pallottelli, S.; Leung, C.H.; Li, Y. Context based image semantic similarity. In Proceedings of the 2015 12th International Conference on Fuzzy Systems and Knowledge Discovery, FSKD 2015, Zhangjiajie, China, 15–17 August 2015; pp. 1280–1284.

- Huang, L.; Milne, D.; Frank, E.; Witten, I.H. Learning a concept based document similarity measure. J. Am. Soc. Inf. Sci. Technol. 2012, 63, 1593–1608.

- Nguyen, C.T. Bridging semantic gaps in information retrieval: Context based approaches. In Proceedings of the ACM VLDB 2010, Singapore, 13–17 September 2010.

- United States Patent and Trademark Office, U.S. Patent Application No. 17/514,052. Available online: https://ppubs.uspto.gov/pubwebapp/static/pages/ppubsbasic.html (accessed on 1 July 2025).

- Chen, Y.L.; Chiu, Y.T. An IPC based vector space model for patent retrieval. Inf. Process. Manag. 2011, 47, 309–322.

- Jang, H.; Kim, S.; Yoon, B. An eXplainable AI (XAI) model for text-based patent novelty analysis. Expert Syst. Appl. 2023, 231, 120839.

- Chikkamath, R.; Parmar, V.R.; Otiefy, Y.; Endres, M. Patent classification using BERT for patents on USPTO. In Proceedings of the 2022 5th International Conference on Machine Learning and Natural Language Processing, Sanya, China, 23–25 December 2022; pp. 20–28.

- Lee, J.S.; Hsiang, J. PatentBERT: Patent Classification with Fine Tuning a Pre-Trained BERT Model. arXiv 2019, arXiv:1906.02124.

- Gómez, J.; Vázquez, P.P. An Empirical Evaluation of Document Embeddings and Similarity Metrics for Scientific Articles. Appl. Sci. 2022, 12, 5664.

- Achananuparp, P.; Hu, X.; Shen, X. The Evaluation of Sentence Similarity Measures. In Proceedings of the Data Warehousing and Knowledge Discovery: 10th International Conference, DaWaK 2008, Turin, Italy, 2–5 September 2008; Springer: Berlin/Heidelberg, Germany, 2008; pp. 305–316.

- Arsov, N.; Dukovski, M.; Evkoski, B.; Cvetkovski, S. A Measure of Similarity in Textual Data Using Spearman’s Rank Correlation Coefficient. arXiv 2019, arXiv:1911.11750.

- Verma, J.P. One Way ANOVA: Comparing Means of More than Two Samples. In Data Analysis in Management with SPSS Software; Springer: New Delhi, India, 2012; pp. 221–254.

- Blume, M.; Heidari, G.; Hewel, C. Comparing Complex Concepts with Transformers: Matching Patent Claims against Natural Language Text. arXiv 2024, arXiv:2407.10351.

- Magdy, W.; Leveling, J.; Jones, G.J.F. Exploring Structured Documents and Query Formulation Techniques for Patent Retrieval. In Proceedings of the Workshop of the Cross Language Evaluation Forum for European Languages CLEF 2009, Corfu, Greece, 30 September–2 October 2009; Springer: Berlin/Heidelberg, Germany, 2009; pp. 410–417.

- Salih, A.M.; Raisi-Estabragh, Z.; Galazzo, I.B.; Radeva, P.; Petersen, S.E.; Lekadir, K.; Menegaz, G. A Perspective on Explainable Artificial Intelligence Methods: SHAP and LIME. Adv. Intell. Syst. 2025, 7, 2400304.

- Lo, H.C.; Chu, J.M.; Hsiang, J.; Cho, C.C. Large Language Model Informed Patent Image Retrieval. arXiv 2024, arXiv:2404.19360.

- Mosca, E.; Szigeti, F.; Tragianni, S.; Gallagher, D.; Groh, G. SHAP-Based Explanation Methods: A Review for NLP Interpretability. In Proceedings of the 29th International Conference on Computational Linguistics COLING 2022, Gyeongju, Republic of Korea, 12–17 October 2022; International Committee on Computational Linguistics: Gyeongju, Republic of Korea, 2022; pp. 4593–4603.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).