Abstract

With the widespread application of unmanned aerial vehicle technology in search and detection, express delivery and other fields, the requirements for unmanned aerial vehicle dynamic area coverage algorithms have become more demanding. For unknown dynamic environments, this paper proposes an improved Dual-Attention Mechanism Double Deep Q-network area coverage algorithm. First, a dual-channel attention mechanism is designed to process flight environment information; it extracts and fuses the features of local obstacle information and full-area coverage information. Then, building on the traditional Double Deep Q-network algorithm, an adaptive exploration decay strategy and a coverage reward function are designed based on the real-time area coverage rate to meet the requirement of a low repeated coverage rate. The proposed algorithm can avoid dynamic obstacles and achieve global coverage with a low repeated coverage rate. Finally, with Python 3.12 and PyTorch 2.2.1 as the training platform, simulation results show that, compared with the Soft Actor–Critic algorithm, the Double Deep Q-network algorithm, and the Attention Mechanism Double Deep Q-network algorithm, the proposed algorithm completes the area coverage task in a dynamic and complex environment with a lower repeated coverage rate and higher coverage efficiency.

1. Introduction

Unmanned aerial vehicle (UAV) area coverage technology is widely used in fields such as express delivery, reconnaissance, and search and rescue [1,2]. In these scenarios, UAVs commonly fly in an unknown environment. As a classic trial-and-error learning method, reinforcement learning (RL) can drive UAVs to continuously learn obstacle avoidance strategies and optimize path planning through exploration and exploitation [3,4,5]. Although many area coverage algorithms have been studied and applied, reduced coverage efficiency caused by repeated coverage remains a common problem in current algorithms. Reducing the repeated coverage rate and improving coverage efficiency therefore remain valuable research issues.

The Q-learning reinforcement learning algorithm was applied to the area coverage problem for an unknown environment in [6]. The method has a low repeated coverage rate, but it cannot handle area coverage tasks in complex environments. An improved Deep Q-Network (DQN) algorithm was proposed to realize static area coverage exploration tasks in [7]. A spiral cooperative search method was proposed in [8]; the spiral method is best suited to regular circular areas without obstacles. To address area coverage tasks in complex environments, a deep deterministic policy gradient algorithm framework based on the Q-learning algorithm was proposed in [9] and simulated in environments containing different numbers and sizes of obstacles. By introducing Gaussian noise into the action space to enhance exploration, an improved Double Deep Q-network (DDQN) algorithm was designed to improve both the coverage time and the coverage rate [10]. A coverage path planning method based on a diameter-height model was proposed in [11]. The diameter-height model preserves mountainous terrain features more effectively, but parallel spiral ascents increase the path length. For large-scale search areas, a vehicle–UAV collaborative coverage method was proposed in [12], which divides large areas into sub-areas, uses vehicles to transport UAVs, and has the UAVs cover each sub-area. However, the algorithms mentioned above mainly focus on area coverage path planning in static environments and cannot handle dynamic obstacles effectively.

For unknown dynamic environments, the area coverage rate was used as an optimization function in [13]; the improved method outperformed fundamental models but did not address redundancy. A full coverage algorithm combining obstacle guidance and a backtracking mechanism was proposed in [14]. A three-dimensional real-time path planning method was proposed in [15] by integrating the improved Lyapunov guidance vector field, an interfered fluid dynamical system, and a varying receding-horizon optimization strategy derived from model predictive control. Experimental results demonstrate its applicability to target tracking and obstacle avoidance in complex dynamic environments. However, its effectiveness in area coverage tasks remains unvalidated, and its high computational complexity makes deployment challenging. A modified artificial potential field-HOAP method based on position prediction of obstacles was proposed in [16]. A dynamic window approach for local path planning based on velocity obstacles was proposed in [17]; however, it does not guarantee global optimality. An obstacle trajectory prediction classification method based on nonlinear model predictive control with the PANOC non-convex solver was proposed in [18]; however, as a local path planner, it has limited capability for processing global information. An improved Deep Q-Network algorithm based on a Dynamic Data Memory mechanism was proposed for dynamic visual detection in [19], but that work does not validate whether the method can accomplish the area coverage task. The algorithms mentioned above have made some adjustments and improvements for dynamic environments. However, these methods are either unsuitable for unknown environments or lack the capability to process global environment information.

Motivated by the above discussions, the UAV area coverage problem for an unknown dynamic environment is investigated in this paper. To improve on the coverage efficiency of existing methods, environmental information processing and coverage strategy improvement are the main considerations. The Attention Mechanism (AM) algorithm simulates human visual attention and is widely applied in pattern recognition; it dynamically allocates weights so that the model focuses on the most important aspects of the input data and disregards irrelevant or secondary elements [20,21,22,23]. It can learn weight information across different channels and spatial positions, which can be used to process global environmental information [24,25]. By introducing the AM algorithm into the area coverage problem, an improved Dual-Attention Mechanism Double Deep Q-network (DAM-DDQN) algorithm is proposed in this paper. The DAM-DDQN algorithm can avoid unknown dynamic obstacles effectively and achieve global coverage rapidly with a low repeated coverage rate.

The main contributions of this paper are as follows:

- (1) A DAM-DDQN algorithm has been proposed for dynamic obstacle avoidance in UAV area coverage. It can not only avoid dynamic, unknown obstacles, but also improve the coverage efficiency by reducing the repeated coverage rate.

- (2) A DAM is designed to realize the feature information fusion of local obstacle information and full-area coverage information for the flight environment.

- (3) An adaptive exploration decay DDQN algorithm is proposed. In this algorithm, the decay strategy is designed based on the real-time coverage rate. Meanwhile, a reward function is designed to solve sparse reward problems. The improved DDQN algorithm accelerates the convergence process.

The organization of this paper is summarized as follows: Section 2 describes the problem to be studied. Section 3 introduces the DAM-DDQN algorithm, which was designed for this study and includes state and action space modeling, an improved dual-channel grid attention mechanism and an improved DDQN algorithm. Section 4 presents the experimental simulation analysis. Finally, conclusions are presented in Section 5.

2. Problem Formulation

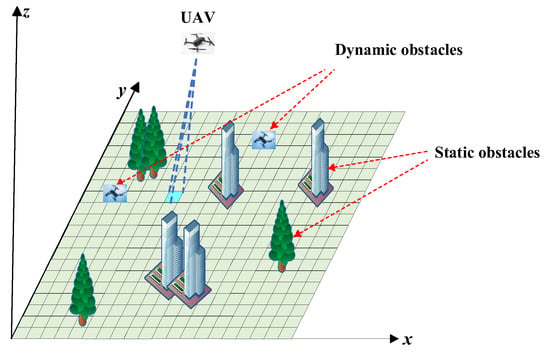

UAV area coverage path planning aims to completely cover a given target area with an optimal path within a limited time. In this paper, we consider UAV area coverage for a dynamic complex environment and the area coverage environment is shown in Figure 1. The target area environment comprises static obstacles, such as trees and buildings, as well as dynamic obstacles, such as other flying UAVs.

Figure 1. UAV area coverage environment.

For a UAV area coverage task such as reconnaissance and detection, constantly changing the flying altitude does not help the execution of the task and also degrades the performance of the airborne sensors. Therefore, this paper does not consider changes in UAV flight altitude [26,27,28]. To facilitate the verification of the algorithm, the following assumptions are made:

Assumption 1.

The UAV flies in a single horizontal plane at a fixed altitude.

Assumption 2.

The scanning area of the UAV is fixed: it scans one grid cell at a time.

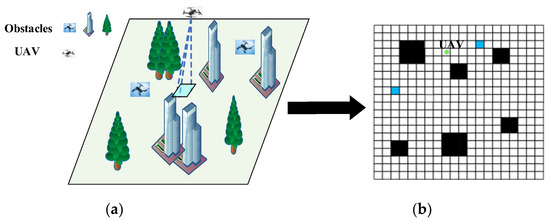

Based on the above assumptions, the target area coverage environment is modeled as a two-dimensional simulation grid map as shown in Figure 2.

Figure 2. UAV area coverage simulation environment model. (a) UAV target area coverage environment; (b) Two-dimensional simulation grid map.

In Figure 2b, the green dot denotes the UAV, black squares denote static obstacles that the UAV cannot traverse and must avoid, and blue squares denote dynamic obstacles that the UAV must avoid with real-time judgment.

The objective of this work is to complete the area coverage task by designing an optimal path that has a low repeated coverage rate while avoiding obstacles.

3. Area Coverage Algorithm Based on DAM-DDQN

In this section, the state space, action space, reward function and DAM-DDQN algorithm are given for the area coverage problem mentioned in Section 2.

3.1. UAV State Space

The reinforcement learning algorithm needs state information to learn effective strategies for area coverage path planning. The state information includes the current position of the UAV and the information on its neighbors. The neighbor information contains three parts: whether a neighbor is an obstacle, whether it is a boundary and whether it is a covered area. The state of the UAV therefore consists of two components: the current position of the UAV and the information on its neighbors.

The position coordinates of the UAV are bounded by the numbers of columns and rows of the grid map.

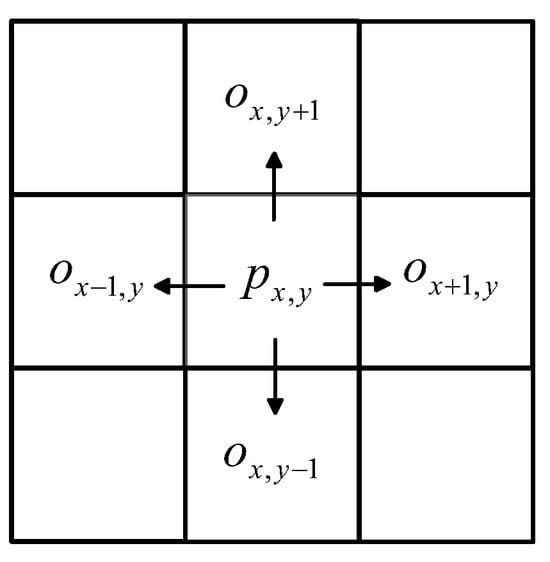

The neighbor information of the UAV includes information on neighbors in all four directions, as shown in Figure 3.

Figure 3. The neighbor information of the UAV.

In Figure 3, the arrows indicate the directions of the four neighbors of the UAV's current position. The neighbor information therefore consists of four entries: the information on the left, upper, right and lower neighbors.
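As a minimal sketch of how such a state could be assembled from a grid map, the snippet below returns the UAV position together with the information on its four neighbors. The cell codes (`FREE`, `OBSTACLE`, `COVERED`, `BOUNDARY`), the function name `build_state` and the (column, row) indexing are illustrative assumptions, not the encoding used in this paper.

```python
# Hypothetical cell codes; the paper does not specify a numeric encoding.
FREE, OBSTACLE, COVERED, BOUNDARY = 0, 1, 2, 3

# Neighbor order follows the text: left, upper, right, lower.
# Row index grows downward, so "upper" means row - 1 (an assumption).
NEIGHBOR_OFFSETS = [(-1, 0), (0, -1), (1, 0), (0, 1)]


def build_state(grid, col, row):
    """Return (col, row, left, upper, right, lower) for the UAV position."""
    n_rows, n_cols = len(grid), len(grid[0])
    neighbors = []
    for d_col, d_row in NEIGHBOR_OFFSETS:
        c, r = col + d_col, row + d_row
        if 0 <= c < n_cols and 0 <= r < n_rows:
            neighbors.append(grid[r][c])
        else:
            neighbors.append(BOUNDARY)  # the neighbor lies outside the map
    return (col, row, *neighbors)


grid = [
    [FREE, OBSTACLE, FREE],
    [COVERED, FREE, FREE],
    [FREE, FREE, FREE],
]
state = build_state(grid, 0, 0)  # UAV in the top-left corner
```

In the corner position, the left and upper entries are reported as boundaries, which is the information the agent needs to avoid leaving the map.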

3.2. UAV Action Space

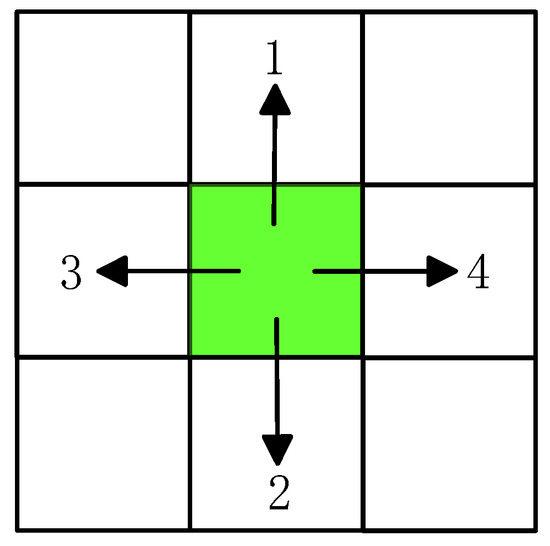

In this paper, the UAV is considered as a particle and it needs to adjust its movement to achieve area coverage. The movement direction of the UAV is set to up, down, left and right. As shown in Figure 4, the actions of the UAV are set to four directional movements which can be represented by 1 to 4.

Figure 4. UAV movement directions.

In Figure 4, the arrows indicate the movement direction of the UAV after it selects an action, and the actions are numbered 1 to 4. The action space of the UAV is therefore the set {1, 2, 3, 4}, which is Equation (4).

Equation (4) gives the maximum action space of the UAV. When the UAV is on a boundary of the map, for example the upper boundary, the action that would move it outside the map is unavailable and the selectable action space shrinks accordingly.
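The boundary-dependent action space can be sketched as a simple filter. The mapping of action numbers to directions below is an assumption (the actual numbering is fixed by Figure 4), and `valid_actions` is a hypothetical helper name.

```python
# Hypothetical mapping of action ids 1-4 to (d_col, d_row) moves.
# Row index grows downward, so "up" is d_row = -1 (an assumption).
ACTIONS = {1: (0, -1), 2: (0, 1), 3: (-1, 0), 4: (1, 0)}  # up, down, left, right


def valid_actions(col, row, n_cols, n_rows):
    """Return the action ids that keep the UAV inside the grid map."""
    allowed = []
    for action, (d_col, d_row) in ACTIONS.items():
        if 0 <= col + d_col < n_cols and 0 <= row + d_row < n_rows:
            allowed.append(action)
    return allowed


interior = valid_actions(5, 5, 20, 20)   # all four actions are available
top_edge = valid_actions(5, 0, 20, 20)   # "up" is removed on the upper boundary
```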

3.3. Reward Function Design

Reinforcement learning obtains the optimal UAV area coverage path by maximizing the cumulative reward. To improve the area coverage efficiency, the UAV should cover as much previously uncovered area as possible. Meanwhile, the UAV must avoid all obstacles and must not fly outside the boundary. Accordingly, the instantaneous reward at each step contains two components: an obstacle avoidance reward and a coverage reward.

In the UAV area coverage task, the requirement for obstacle avoidance must be met. Therefore, a large obstacle collision penalty is set so that the UAV gradually learns effective avoidance strategies. The obstacle avoidance reward is defined in Equation (5):

Based on the exploration mechanism, the objective of the UAV area coverage is to search and traverse the entire area with a low repeated coverage rate. Therefore, when a new area is covered, the UAV receives a reward value. Conversely, if there is repeated coverage, a penalty is applied. The coverage reward is defined in Equation (6).

The three reward parameters are adjusted based on the actual area coverage environment. Through extensive experimental tests, 30, 15 and 5 are chosen as their respective values.

The instantaneous reward at each step is defined as follows:

The UAV records the reward and selects an action at each step of every episode. After each episode, the cumulative reward of that episode is obtained. The cumulative reward is defined and calculated as shown in Equation (8).

where γ denotes the discount factor and t denotes the step index. A smaller γ places greater emphasis on the immediate reward; γ typically takes a value in [0, 1].
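The reward design above can be illustrated with a short sketch. Two points are assumptions rather than statements from the paper: the values 30, 15 and 5 are taken, in that order, as the collision penalty, the reward for a newly covered cell and the penalty for a repeated cell; and the two reward components are simply added. The cumulative reward is computed as the standard discounted sum.

```python
# Assumption: 30, 15 and 5 map to these three roles in this order.
COLLISION_PENALTY = 30.0
NEW_CELL_REWARD = 15.0
REPEAT_PENALTY = 5.0


def step_reward(collided, newly_covered):
    """Instantaneous reward = obstacle avoidance term + coverage term."""
    avoidance = -COLLISION_PENALTY if collided else 0.0
    if collided:
        coverage = 0.0  # no cell is covered on a collision (assumption)
    elif newly_covered:
        coverage = NEW_CELL_REWARD
    else:
        coverage = -REPEAT_PENALTY
    return avoidance + coverage


def discounted_return(rewards, gamma):
    """Cumulative reward: sum over t of gamma**t * r_t, computed backwards."""
    total = 0.0
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


rewards = [
    step_reward(collided=False, newly_covered=True),    # new cell
    step_reward(collided=False, newly_covered=False),   # repeated cell
    step_reward(collided=True, newly_covered=False),    # collision
]
episode_return = discounted_return(rewards, gamma=0.5)
```

With a small γ such as 0.5, the late collision penalty is strongly discounted, which illustrates why a smaller γ emphasizes the immediate reward.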

3.4. DAM-DDQN Area Coverage Algorithm

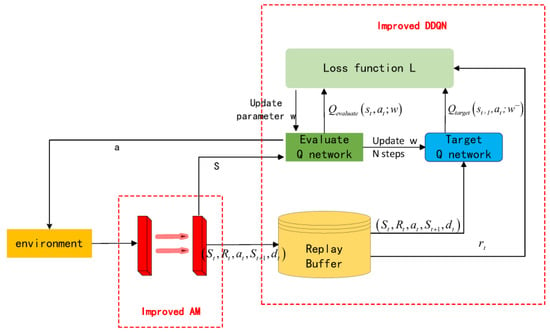

Figure 5 shows the framework of the DAM-DDQN algorithm proposed in this paper. An improved dual-channel grid attention mechanism is introduced into the deep reinforcement learning algorithm. It pre-processes the environmental information, which is then used for training and computation in the DDQN. By leveraging the feature extraction capabilities of the AM, the algorithm balances local dynamic obstacle information and global information, which enables the UAV to rapidly obtain a global coverage path with low repeated coverage while avoiding dynamic obstacles.

Figure 5. Framework of DAM-DDQN area coverage algorithm.

3.4.1. DAM Algorithm for Unknown Dynamic Environments

In area coverage, dynamic obstacles are important local information. The UAV coverage area contains unknown and uncertain obstacles. The AM is characterized by its ability to extract local features and prioritize dynamic obstacle information, which enables the UAV to respond effectively to sudden obstacles.

- (1) Traditional AM algorithm

The traditional AM algorithm simulates human visual attention and has widespread applications in pattern recognition [29]. The core concept involves dynamically allocating weights to enable the model to focus on the most important aspects of the input data while disregarding irrelevant or secondary elements. Figure 6 shows the network structure diagram of the AM.

Figure 6. AM network structure.

In UAV area coverage, the AM uses multiple convolutional neural network layers to extract the local obstacle feature information of the unknown coverage environment. A sigmoid function then produces the attention weights of the extracted features. Through a dot product operation and flattening, the AM yields local obstacle feature information with different attention weights, which enables the UAV to identify and avoid obstacles quickly.

- (2) Improved DAM algorithm

Although the AM algorithm enables the UAV to achieve dynamic obstacle avoidance, it produces redundant flight paths, which waste resources and cause high repetition rates in area coverage [30].

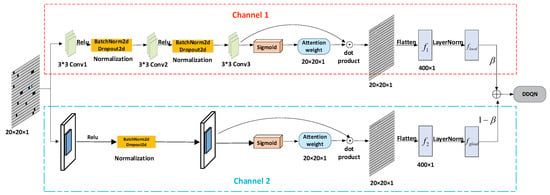

To address this issue, a DAM structure is designed in this paper. For the designed DAM structure, a full-area coverage feature extraction mechanism has been added to the existing single-channel dynamic obstacle feature extraction mechanism. Figure 7 shows the DAM network structure.

Figure 7. DAM network structure.

In Figure 7, Channel 1 extracts obstacle features, while Channel 2 extracts full-area coverage features. Through normalization and weighted feature fusion, the UAV can avoid obstacles, improve flight efficiency and reduce path redundancy and repeated coverage of the area.

Channel 1 uses a three-layer convolutional neural network to extract local obstacle information. The first layer extracts obstacle contours and simple edge information. The second layer extracts the local arrangement patterns of obstacle groups. The third layer extracts local features related to the movement directions of dynamic obstacles. The network extracts the local main feature by calculating the inner product over the corresponding areas, as shown in Equation (9).

where m and n index the convolution kernel and i and j index the spatial dimensions of the grid environment. The input is the 20 × 20 grid map, and the convolution kernel parameters and the learned bias vector are updated dynamically during training. Equation (9) describes the processing of a local 3 × 3 window of the input feature map: the window is multiplied element by element with the convolution kernel, and the results are summed to obtain the main local features of the input feature map.

The local main feature is mapped to the interval [0, 1] through the sigmoid function. The sigmoid function is defined in Equation (10):

The local attention weights are obtained as follows:

Through dot product operations, flattening and normalization, we obtain the local obstacle feature information, as shown in Equation (12).
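The Channel 1 pipeline (3 × 3 window sum, sigmoid weights, product, flattening, normalization) can be sketched in plain Python for a single layer. This is an illustration under assumptions, not the trained three-layer network: zero padding is used so that the output keeps the grid size, the attention weights are multiplied element-wise with the extracted feature, and normalization divides by the sum of absolute values.

```python
import math


def conv2d_same(grid, kernel, bias=0.0):
    """3x3 sliding-window sum with zero padding (output keeps the grid size)."""
    n_rows, n_cols = len(grid), len(grid[0])
    k = len(kernel) // 2
    out = [[bias] * n_cols for _ in range(n_rows)]
    for i in range(n_rows):
        for j in range(n_cols):
            for m in range(-k, k + 1):
                for n in range(-k, k + 1):
                    r, c = i + m, j + n
                    if 0 <= r < n_rows and 0 <= c < n_cols:
                        out[i][j] += grid[r][c] * kernel[m + k][n + k]
    return out


def sigmoid(x):
    """Map a real value to the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def local_attention_features(grid, kernel, bias=0.0):
    """Feature -> sigmoid weights -> element-wise product -> flatten -> normalize."""
    feature = conv2d_same(grid, kernel, bias)
    weighted = [f * sigmoid(f) for row in feature for f in row]
    norm = sum(abs(v) for v in weighted) or 1.0  # guard against an all-zero map
    return [v / norm for v in weighted]


obstacle_map = [[0.0] * 4 for _ in range(4)]
obstacle_map[1][1] = 1.0                      # one obstacle cell
kernel = [[1.0] * 3 for _ in range(3)]        # toy kernel; real kernels are learned
local_features = local_attention_features(obstacle_map, kernel)
```

In this toy case, only the nine cells whose 3 × 3 window contains the obstacle receive a non-zero weight, which is the sense in which the channel focuses on local obstacle information.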

Channel 2 uses a two-layer fully connected neural network to extract global grid map coverage information. The first layer compresses the 20 × 20 high-dimensional grid information into 64 low-dimensional features to extract the grid map coverage information. The second layer converts these features back to the original grid dimensions to generate the global main feature information, as shown in Equations (13) and (14).

In these equations, the output of the first layer is the compressed feature, and each of the two fully connected layers has its own weight matrix and bias vector.

The global main feature is mapped to the interval [0, 1] through the sigmoid function, and the global attention weights are obtained as follows:

Through a dot product operation, flattening and normalization, we obtain the global grid map coverage feature information, as shown in Equation (16).
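A rough sketch of the Channel 2 data flow (400 inputs compressed to 64 features, expanded back to 400, then sigmoid attention and normalization) is given below. The weights are random placeholders instead of trained parameters, and the ReLU between the two layers, the element-wise use of the attention weights and the normalization by the sum of absolute values are assumptions made for illustration.

```python
import math
import random


def linear(x, weights, bias):
    """Fully connected layer: y = W x + b."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def init_layer(n_in, n_out, rng):
    """Small random weights; a trained network would learn these values."""
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-scale, scale) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def global_attention_features(coverage_flat, layer1, layer2):
    """Compress to a hidden vector, expand back, then apply sigmoid attention."""
    hidden = [max(0.0, h) for h in linear(coverage_flat, *layer1)]  # ReLU (assumption)
    feature = linear(hidden, *layer2)
    weighted = [f / (1.0 + math.exp(-f)) for f in feature]  # f * sigmoid(f)
    norm = sum(abs(v) for v in weighted) or 1.0
    return [v / norm for v in weighted]


rng = random.Random(0)
layer1 = init_layer(400, 64, rng)   # 20 x 20 grid -> 64 features
layer2 = init_layer(64, 400, rng)   # 64 features -> 20 x 20 grid
coverage_flat = [1.0 if i < 120 else 0.0 for i in range(400)]  # toy coverage map
global_features = global_attention_features(coverage_flat, layer1, layer2)
```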

Finally, the combined feature information about the obstacle and grid map coverage can be computed using the following equation:

where the weight coefficient balances the contributions of the two channels. In this paper, to enable the UAV to avoid static and dynamic obstacles in time, the weight coefficient is set to 0.65 through experimental tests.
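One common way to realize such a weighted fusion is a convex combination of the two feature vectors. The sketch below assumes that form and assumes that the coefficient 0.65 weights the obstacle channel; `fuse_features` and `alpha` are hypothetical names.

```python
def fuse_features(local_features, global_features, alpha=0.65):
    """Weighted fusion; alpha weights the obstacle channel (an assumption)."""
    if len(local_features) != len(global_features):
        raise ValueError("both channels must produce vectors of equal length")
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return [
        alpha * loc + (1.0 - alpha) * glob
        for loc, glob in zip(local_features, global_features)
    ]


fused = fuse_features([1.0, 0.0], [0.0, 1.0])  # roughly [0.65, 0.35]
```

A larger coefficient makes the fused feature more sensitive to nearby obstacles, at the cost of paying less attention to the coverage map.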

The output of the DAM algorithm is then fed into the neural network of the DDQN to realize the UAV area coverage.

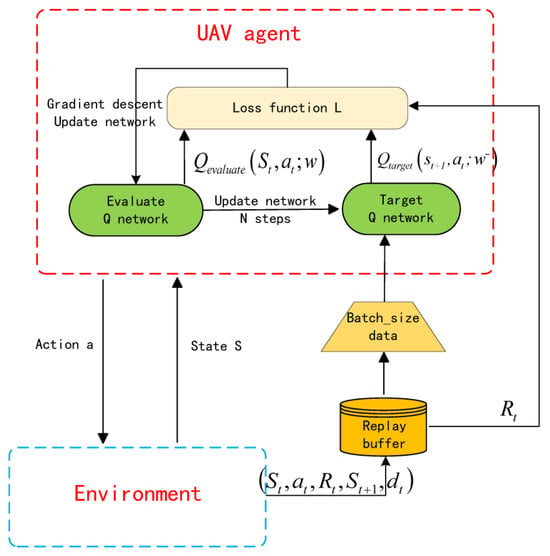

3.4.2. Improved DDQN Algorithm

The DDQN algorithm utilizes two neural networks for action selection and action value estimation. The DDQN structure is shown in Figure 8. It primarily addresses point-to-point path planning problems using a goal-oriented approach with relatively straightforward training conditions, which can be accomplished using the fundamental algorithm [31].

Figure 8. DDQN structure.

In Figure 8, the UAV selects an action based on the current state through the evaluation Q-network, interacts with the environment, acquires experience data such as states and rewards, and stores them in the experience replay buffer. During the offline training process of the UAV, a batch of data is randomly sampled from the experience replay buffer. The evaluation Q-network calculates the action value for the current state, and the target Q-network uses fixed parameters to calculate the target value for the next state. The parameters of the evaluation Q-network are updated through the loss function and gradient descent. Every N steps, the parameters of the evaluation Q-network are copied to the target Q-network, which makes the training more stable and reduces value estimation bias.
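The experience replay and periodic synchronization described above can be sketched with the standard library. The class and function names, the transition layout and the capacity are illustrative assumptions; in an actual PyTorch implementation the synchronization step would copy the network parameters.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-capacity store of (state, action, reward, next_state, done) tuples."""

    def __init__(self, capacity, seed=None):
        self._buffer = deque(maxlen=capacity)  # oldest transitions are dropped first
        self._rng = random.Random(seed)

    def push(self, state, action, reward, next_state, done):
        self._buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        """Uniform random mini-batch without replacement."""
        return self._rng.sample(list(self._buffer), batch_size)

    def __len__(self):
        return len(self._buffer)


def should_sync_target(step, sync_every):
    """True on every `sync_every`-th training step (the 'every N steps' rule)."""
    return step > 0 and step % sync_every == 0


buffer = ReplayBuffer(capacity=3, seed=0)
for t in range(5):
    buffer.push(t, 1, 0.0, t + 1, False)   # toy transitions with integer states
batch = buffer.sample(2)
```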

The evaluation Q value and the target Q value are calculated by Equations (18) and (19).

In these equations, the target Q value combines the instantaneous reward with the discounted value of the next state. The discount factor weights that future value, the evaluation and target Q-networks each have their own parameters, and the next state is the state that follows the current state after the current action is taken.

The loss function is used to optimize and update the network parameters. The loss function is defined as shown in Equation (20) to ensure that the output of the DDQN more closely approximates the target value.
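For reference, the standard Double DQN target selects the next action with the evaluation network and scores it with the target network. The sketch below shows that standard form together with a mean squared error loss; the choice of mean squared error and the handling of terminal states are assumptions, since the exact forms of Equations (18) to (20) are not reproduced here.

```python
def ddqn_target(reward, gamma, q_eval_next, q_target_next, done):
    """Double DQN target: select with the evaluation net, score with the target net."""
    if done:
        return reward  # no future value after a terminal step (assumption)
    best_action = max(range(len(q_eval_next)), key=q_eval_next.__getitem__)
    return reward + gamma * q_target_next[best_action]


def mse_loss(predictions, targets):
    """Mean squared error between evaluated Q values and target Q values."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


q_eval_next = [1.0, 3.0, 2.0, 0.5]     # evaluation net prefers action index 1
q_target_next = [4.0, 2.0, 5.0, 1.0]   # target net scores that action as 2.0
target = ddqn_target(reward=15.0, gamma=0.9, q_eval_next=q_eval_next,
                     q_target_next=q_target_next, done=False)
loss = mse_loss([16.0], [target])
```

Note that the target uses 2.0 and not the target network's own maximum of 5.0; decoupling selection from evaluation in this way is what reduces overestimation in Double DQN.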

The action selection is determined based on the evaluated Q values. To maintain random exploration, action selection typically adopts an ε-greedy strategy [32], as shown in Equation (21).

where ε is the exploration probability, which is a constant. If ε is too large, over-exploration prevents the algorithm from converging and results in low learning efficiency. If ε is too small, the algorithm tends to fall into a local optimum. It is hard to choose an optimal value.
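The ε-greedy rule can be written in a few lines. This is the textbook form; the function name and the optional `rng` argument are illustrative.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """With probability epsilon pick a random action, otherwise the greedy one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=q_values.__getitem__)


q_values = [0.2, 1.5, -0.3, 0.9]
greedy_action = epsilon_greedy(q_values, epsilon=0.0)                  # always index 1
random_action = epsilon_greedy(q_values, epsilon=1.0, rng=random.Random(0))
```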

In this paper, the objective of area coverage path planning is to achieve global coverage and avoid dynamic obstacles with a low repeated coverage rate. Finding an optimal solution relies not only on the reward function but also on an effective exploration mechanism for the unknown environment. To improve training effectiveness and avoid local optima, we design an adaptive exploration probability based on the coverage rate in Equation (22).

In Equation (22), the exploration probability depends on the real-time coverage rate of the UAV and on two coverage adjustment parameters. With this adaptive exploration probability, the UAV can complete the area coverage training task effectively.
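To illustrate the general idea of tying exploration to coverage, the sketch below uses a purely hypothetical linear schedule. It is not Equation (22): the functional form and the parameters `eps_start` and `eps_end` are assumptions chosen only to show exploration shrinking as the coverage rate grows.

```python
def adaptive_epsilon(coverage_rate, eps_start=0.9, eps_end=0.05):
    """Hypothetical schedule: exploration shrinks linearly as coverage grows."""
    if not 0.0 <= coverage_rate <= 1.0:
        raise ValueError("coverage_rate must lie in [0, 1]")
    return eps_end + (eps_start - eps_end) * (1.0 - coverage_rate)


schedule = [adaptive_epsilon(c / 10) for c in range(11)]  # coverage 0.0 .. 1.0
```

Early in an episode, when little of the map is covered, the agent explores often; near full coverage it mostly exploits the learned Q values.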

3.4.3. DAM-DDQN Algorithm Implementation

The implementation process of the dynamic obstacle avoidance area coverage DAM-DDQN algorithm is outlined in Algorithm 1.

Algorithm 1. DAM-DDQN

4. Simulation Analysis

4.1. Simulation Platform

To verify the effectiveness of the improved DAM-DDQN algorithm, the DAM-DDQN model is implemented in Python, and experiments are conducted on a self-built deep reinforcement learning experimental platform that includes both hardware and software environments.

For the hardware configuration, a computer running the Windows 11 system is used, equipped with an Intel Core i5-13500H@2.60GHz CPU (Intel, Santa Clara, CA, USA), 32 GB of RAM, and an NVIDIA GeForce RTX 4050 GPU (NVIDIA, Santa Clara, CA, USA). This GPU is utilized to accelerate training computations.

In terms of the software platform, the experimental environment is built on the PyTorch 2.2.1 deep learning framework, with Python 3.12, Matplotlib 3.10, NumPy 1.26 and CUDA 12.0 as the supporting software.

Both the training and testing of the model are carried out in this integrated hardware and software environment.

4.2. Parameter Setting

In real scenarios, the flight environment of the UAV is commonly unknown. Without considering changes in UAV flight altitude, we establish a 20 × 20 two-dimensional grid simulation environment with static and dynamic obstacles to verify the area coverage performance of the proposed algorithm. The main parameters of the proposed algorithm are shown in Table 1.

Table 1. Algorithm parameter settings.

4.3. Evaluation Criteria

- (1) Coverage rate

The grid environment model includes no-fly zones (black static obstacles and blue dynamic obstacles) and free-flight zones (white cells), and the coverage objective is to cover all free-flight areas with a low repeated coverage rate. The coverage rate is calculated as shown in Equation (23).

In Equation (23), the coverage rate is the ratio of the number of grid cells that the UAV has searched and covered to the number of free cells, that is, the total number of grid cells in the environment minus the number of cells occupied by obstacles. The core goal of training is to learn a high-coverage strategy in a short period of time and thereby achieve high-coverage path planning.
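The coverage rate can be computed directly from a per-cell visit count and an obstacle mask. The data layout (nested lists) and the function name are assumptions for illustration.

```python
def coverage_rate(visit_counts, obstacle_mask):
    """Covered free cells divided by all free cells (total minus obstacles)."""
    covered = free = 0
    for count_row, obstacle_row in zip(visit_counts, obstacle_mask):
        for count, is_obstacle in zip(count_row, obstacle_row):
            if is_obstacle:
                continue  # obstacle cells are excluded from the denominator
            free += 1
            covered += count > 0
    return covered / free if free else 0.0


visit_counts = [[1, 0, 2],
                [0, 0, 1]]
obstacle_mask = [[False, True, False],
                 [False, False, False]]
rate = coverage_rate(visit_counts, obstacle_mask)  # 3 covered of 5 free cells
```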

- (2) Repeated coverage rate

During actual flight, obstacles and locally complex environments can easily cause the UAV to hover around a local optimum and cover cells repeatedly. This paper therefore records the repeated coverage rate as a flight mission statistic, calculated as shown in Equation (24).

In Equation (24), the repeated coverage rate is computed from the total number of grid cells covered two or more times. A high repeated coverage rate means that there are many useless paths. Given that high coverage is achieved, the learned path should complete the coverage in as few steps as possible while keeping the repeated coverage rate low.
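A matching sketch for the repeated coverage rate is shown below. The numerator follows the definition in the text (cells covered two or more times); taking the number of free cells as the denominator is an assumption, since the exact form of Equation (24) is not reproduced here.

```python
def repeated_coverage_rate(visit_counts, obstacle_mask):
    """Cells visited two or more times, divided by free cells (assumed denominator)."""
    repeated = free = 0
    for count_row, obstacle_row in zip(visit_counts, obstacle_mask):
        for count, is_obstacle in zip(count_row, obstacle_row):
            if is_obstacle:
                continue
            free += 1
            repeated += count >= 2
    return repeated / free if free else 0.0


visit_counts = [[1, 0, 2],
                [3, 1, 1]]
obstacle_mask = [[False, True, False],
                 [False, False, False]]
repeat_rate = repeated_coverage_rate(visit_counts, obstacle_mask)  # 2 of 5 free cells
```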

- (3) Coverage total steps

In the experiment, each update to the status of the UAV is regarded as part of the learning process. The process of the UAV moving from a random initial position to achieving full coverage of the map is considered a trial. Given a sufficient number of steps, the UAV will achieve full coverage of the entire environment. We define the total number of flight steps in a coverage trial as the coverage total steps. A small value means that the UAV area coverage has few redundant flight paths.

4.4. Simulation and Analysis

In this subsection, we consider two simulation scenarios: (1) an environment without dynamic obstacles; (2) an environment with dynamic obstacles. The proposed DAM-DDQN algorithm is compared with the SAC, DDQN and AM-DDQN algorithms to verify its area coverage performance.

- (1) Simulation of an environment without dynamic obstacles

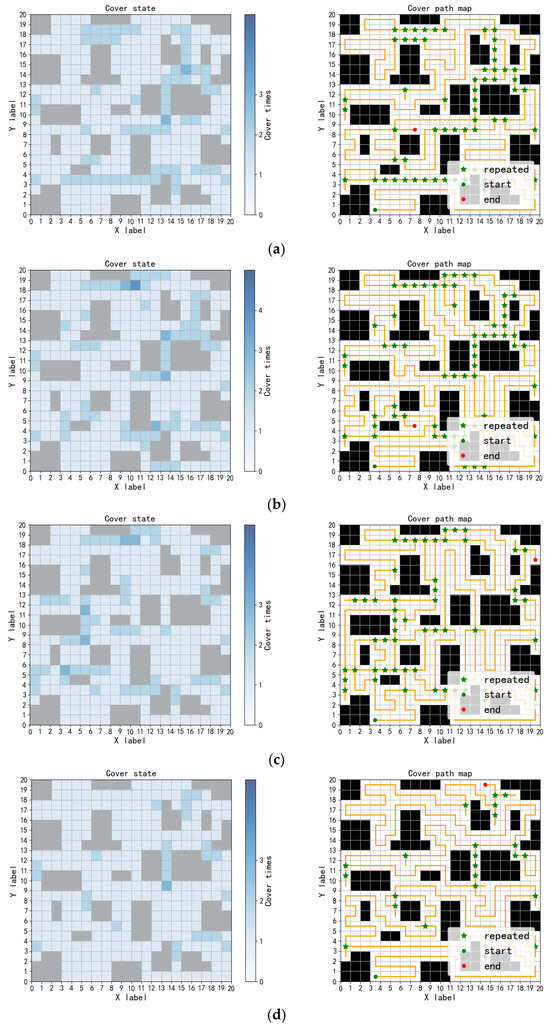

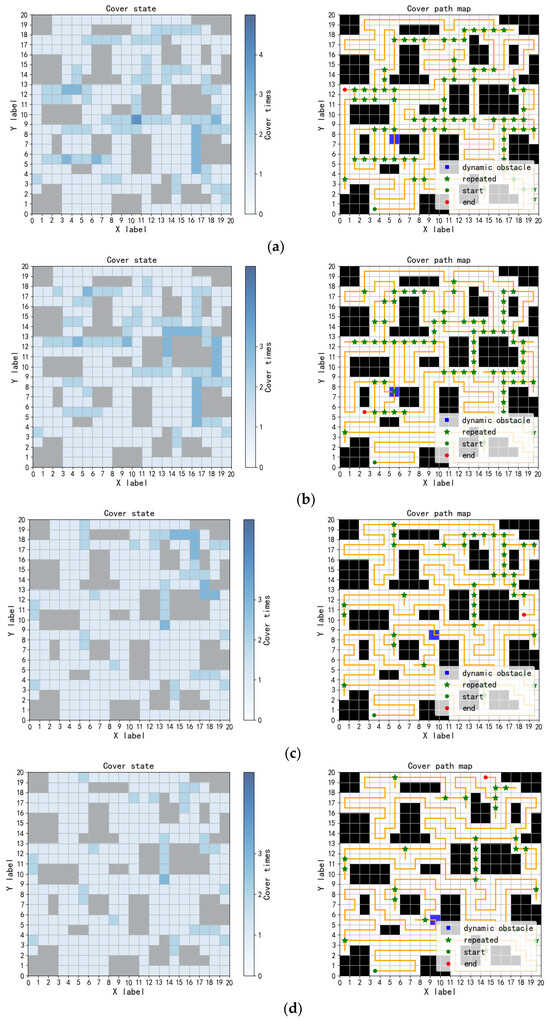

The simulation environment is a 20 × 20 grid environment containing static obstacles distributed at a density of 30%. The path planning results for area coverage are shown in Figure 9.

Figure 9. Area coverage results of the four algorithms in an environment without dynamic obstacles. (a) SAC; (b) DDQN; (c) AM-DDQN; (d) DAM-DDQN.

Figure 9 shows the area coverage path planning results of the four algorithms. The left diagram in each panel of Figure 9 shows the number of times each grid cell is covered, with deeper colors indicating more coverage times; 0, 1, 2 and 3 denote the coverage counts. The right diagram shows the final coverage path of the UAV. Green dots indicate the start points of the UAV and red dots indicate the end points. Yellow lines depict the coverage path of the UAV and green stars mark areas of repeated coverage. Figure 9 shows that the method proposed in this paper has fewer redundant paths and a lower repeated coverage rate.

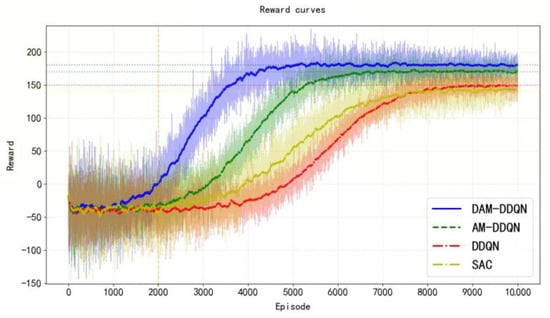

The training reward curves are shown in Figure 10.

Figure 10. Reward curves for simulation training in environments without dynamic obstacles using four algorithms.

The four horizontal dotted lines (in blue, green, red, and yellow) respectively represent the fluctuation averages of the final converged reward values for the four algorithms. The vertical yellow dotted line denotes the approximate boundary between the intense oscillation phase and the stable ascending phase of the training process.

Because of the ε-greedy strategy, the UAV selects a random action with some probability at each decision moment. Therefore, training results based on reinforcement learning usually do not converge to a fixed value and instead fluctuate, as seen in Figure 10.

As shown in Figure 10, the reward convergence speeds of the SAC and DDQN algorithms are similar, with the SAC algorithm converging slightly faster. However, the SAC algorithm converges to a lower reward value. Therefore, the DDQN algorithm was selected as the base algorithm. The improved DAM-DDQN algorithm has a shorter training time and a faster convergence speed. By the 4000th episode, it has already approached the converged reward value, and it subsequently remains stable at the highest reward value.

Since each experiment involves an element of randomness, even if the starting point of the UAV and the distribution of obstacles remain the same, the coverage results may differ each time. To increase the reliability of the results, 200 area coverage simulations were conducted randomly on a 20 × 20 map with 30% obstacles. The results are shown in Table 2.

Table 2. Comparison of simulation data for training in environments without dynamic obstacles.

Table 2 shows that all four algorithms can complete the area coverage task and avoid obstacles within a limited number of steps. However, they differ in the repeated coverage rate, coverage total steps and training episodes. The repeated coverage rates of the SAC, DDQN, AM-DDQN and DAM-DDQN algorithms are 23.10%, 21.04%, 15.89% and 8.90%, respectively. The improved DAM-DDQN algorithm has the lowest repeated coverage rate, which indicates that it has fewer redundant flight paths. This is also reflected in the coverage total steps: the average coverage total steps of the four algorithms are 339, 335, 321 and 298, respectively. Meanwhile, the DAM-DDQN algorithm needs almost half as many training episodes as the traditional DDQN algorithm. This demonstrates that the proposed DAM-DDQN algorithm can effectively reduce the repeated coverage rate and redundant flight paths with a shorter training time.

- (2) Simulation of an environment with dynamic obstacles

In real flight environments, in addition to static obstacles such as buildings and trees, there are also some dynamic obstacles such as flying animals and other UAVs. Therefore, randomly moving dynamic obstacles are added to the simulation map to verify the effectiveness of the algorithm. Dynamic obstacles can move freely within the grid environment.
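A randomly moving obstacle on a grid can be simulated with a few lines. The move set (four directions plus staying in place) and the rule that the obstacle stays put when the chosen cell is blocked or outside the map are assumptions for illustration; the paper states only that dynamic obstacles move freely within the grid environment.

```python
import random

MOVES = [(0, -1), (0, 1), (-1, 0), (1, 0), (0, 0)]  # up, down, left, right, stay


def move_obstacle(position, blocked, n_cols, n_rows, rng):
    """Pick a random move; stay put if the chosen cell is blocked or off the map."""
    col, row = position
    d_col, d_row = rng.choice(MOVES)
    target = (col + d_col, row + d_row)
    inside = 0 <= target[0] < n_cols and 0 <= target[1] < n_rows
    return target if inside and target not in blocked else position


rng = random.Random(1)
static_obstacles = {(1, 2), (2, 1)}   # cells the dynamic obstacle may not enter
position = (2, 2)
trajectory = [position]
for _ in range(200):
    position = move_obstacle(position, static_obstacles, 5, 5, rng)
    trajectory.append(position)
```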

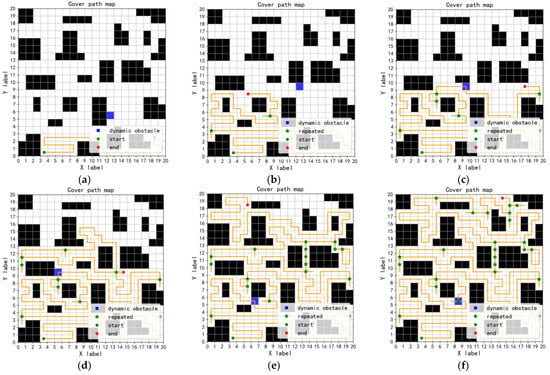

Figure 11 shows the area coverage process at six different time steps for the DAM-DDQN algorithm. The blue square represents a movable dynamic obstacle.

Figure 11. Dynamic obstacle avoidance area coverage path process record with DAM-DDQN. (a) 30 steps; (b) 95 steps; (c) 140 steps; (d) 203 steps; (e) 275 steps; (f) 309 steps.

It can be seen in Figure 11 that the UAV can learn the optimal path gradually, which enables it to perfectly avoid obstacles and achieve area coverage. Under the same conditions, the coverage path results of the SAC, DDQN, AM-DDQN, and DAM-DDQN algorithms are shown in Figure 12.

Figure 12. Simulation coverage path results of the four algorithms in an environment with dynamic obstacles. (a) SAC; (b) DDQN; (c) AM-DDQN; (d) DAM-DDQN.

In Figure 12, the blue cells denote freely movable dynamic obstacles, and the other legend entries have the same meaning as in the static environment. When facing static and dynamic obstacles, the UAV can respond quickly and maneuver around them to avoid collision under all four algorithms. However, Figure 12 shows that the proposed DAM-DDQN method has fewer redundant paths and a lower repeated coverage rate in the dynamic environment.

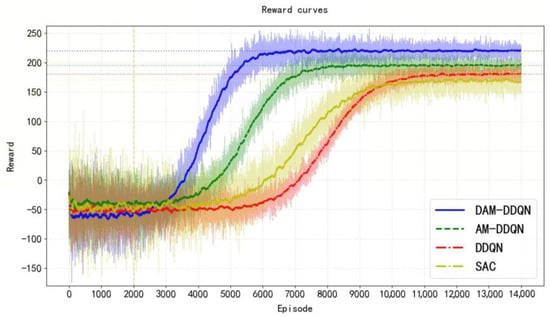

The training reward curves are shown in Figure 13.

Figure 13. Reward curves for simulation training in environments with dynamic obstacles using four algorithms.

In Figure 13, the four horizontal dotted lines and the vertical yellow dotted line denote the same meaning as those in Figure 10. The training time is slightly longer due to the uncertainty of the dynamic obstacle. However, under the same conditions, the DAM-DDQN algorithm converges faster and achieves higher reward values than the three other algorithms.

After 200 simulations for the above four algorithms under the same conditions, the average values of the evaluation criteria are shown in Table 3.

Table 3.

Comparison of simulation data for training in environments with dynamic obstacles.

Table 3 shows that, for every algorithm, both the repeated coverage and the training time in the dynamic environment differ significantly from those in the static environment. This is because dynamic obstacles introduce uncertainty, which increases the repeated coverage rate, coverage total steps, and training episodes. However, compared with the SAC, DDQN, and AM-DDQN algorithms, the proposed DAM-DDQN algorithm significantly reduces the average repeated coverage rate, average coverage total steps, and average training episodes.

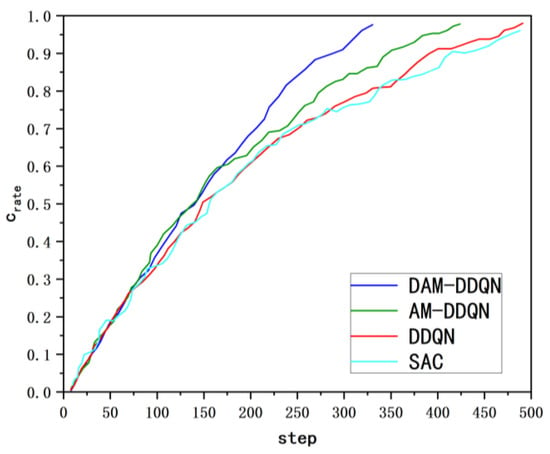

Figure 14 shows the relationship between the coverage rate and the number of coverage steps for the four algorithms during the area coverage process corresponding to Figure 12. A steeper curve indicates a faster coverage speed, while fewer fluctuations indicate a lower repeated coverage rate. As shown in Figure 14, incorporating the dual-channel grid attention mechanism into the DDQN algorithm results in a faster convergence speed and smaller fluctuations.

Figure 14.

Curves of the four algorithms during the coverage process.
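To make the two quantities read from such curves concrete, the following minimal sketch computes a coverage-rate-versus-steps curve and a repeated coverage rate from a recorded path. The definitions used here are assumptions for illustration only: coverage rate is taken as the fraction of free cells visited so far, and repeated coverage rate as the fraction of steps that land on an already covered cell. The function names and the 2 x 3 toy map are likewise hypothetical.

```python
def coverage_curve(path, free_cells):
    """Return the cumulative coverage rate after each step of `path`.

    Assumed definition: number of distinct free cells visited so far,
    divided by the total number of free cells.
    """
    visited = set()
    curve = []
    for cell in path:
        if cell in free_cells:
            visited.add(cell)
        curve.append(len(visited) / len(free_cells))
    return curve


def repeated_coverage_rate(path):
    """Return the fraction of steps that revisit an already covered cell.

    This is one plausible definition, stated as an assumption.
    """
    visited = set()
    repeats = 0
    for cell in path:
        if cell in visited:
            repeats += 1
        visited.add(cell)
    return repeats / len(path)


# Toy usage on a hypothetical 2 x 3 map without obstacles.
free_cells = {(r, c) for r in range(2) for c in range(3)}
path = [(0, 0), (0, 1), (0, 2), (0, 1), (1, 1), (1, 0), (1, 1), (1, 2)]
curve = coverage_curve(path, free_cells)
repeat_rate = repeated_coverage_rate(path)
```

In this toy path, the two revisits of already covered cells appear as flat segments of the curve, which is why a path with fewer repeats yields a steeper curve under these assumed definitions.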

In this section, the SAC, DDQN, AM-DDQN, and DAM-DDQN algorithms are simulated in unknown static and dynamic environments. The results show that all the algorithms can effectively avoid dynamic obstacles and achieve area coverage path planning. However, compared to the other three algorithms, the proposed DAM-DDQN algorithm achieves a significantly lower repeated coverage rate, fewer coverage total steps, and fewer training episodes.

5. Conclusions

In this paper, a DAM-DDQN algorithm is proposed to address the UAV area coverage problem in unknown dynamic environments. A DAM is designed to fuse the features of local obstacle information and full-area coverage information from the flight environment. An improved DDQN algorithm is proposed based on an adaptive exploration strategy to reduce the repeated coverage rate. The proposed DAM-DDQN algorithm can achieve area coverage and avoid obstacles with a lower repeated coverage rate. The simulation results verify the effectiveness of the proposed algorithm. However, in a large-scale complex environment, it is difficult for a single UAV to complete full-area coverage quickly and reliably due to the limitations of endurance and exploration range. In future work, we will focus on the multi-UAV unknown dynamic area coverage problem.

Author Contributions

Conceptualization, J.H. and Y.L.; methodology, J.H. and H.L.; simulation and validation, H.L.; writing—original draft preparation, J.H. and H.L.; writing—review and editing, C.C. and X.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Key Research and Development Program of Shaanxi (2024GX-ZDCYL-03-06, 2025CY-YBXM-096), the Youth Innovation Team of Shaanxi Universities (2023997), and the Young Talent Nurturing Program of Shaanxi Provincial Science and Technology Association (20240109).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available in the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Li, W.; Zhen, Z. Intelligent surveillance and reconnaissance mode of police UAV based on grid. In Proceedings of the 2021 7th International Symposium on Mechatronics and Industrial Informatics (ISMII), Zhuhai, China, 22–24 January 2021; pp. 292–295.

- Wang, S.; Han, Y.; Chen, J.; Zhang, Z.; Wang, G.; Du, N. A deep-learning-based sea search and rescue algorithm by UAV remote sensing. In Proceedings of the 2018 IEEE CSAA Guidance, Navigation and Control Conference (CGNCC), Xiamen, China, 10–12 August 2018; pp. 1–5.

- Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction; MIT Press: Cambridge, MA, USA, 1998.

- Ohi, A.Q.; Mridha, M.F.; Monowar, M.M.; Hamid, M.A. Exploring optimal control of epidemic spread using reinforcement learning. Sci. Rep. 2020, 10, 22106.

- Van Hasselt, H.; Guez, A.; Silver, D. Deep reinforcement learning with double q-learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; Volume 30.

- Tan, X.; Han, L.; Gong, H.; Wu, Q. Biologically inspired complete coverage path planning algorithm based on Q-learning. Sensors 2023, 23, 4647.

- Saha, O.; Ren, G.; Heydari, J.; Ganapathy, V.; Shah, M. Deep reinforcement learning based online area covering autonomous robot. In Proceedings of the 2021 7th International Conference on Automation, Robotics and Applications (ICARA), Prague, Czech Republic, 4–6 February 2021; pp. 21–25.

- Hou, T.; Li, J.; Pei, X.; Wang, H.; Liu, T. A Spiral Coverage Path Planning Algorithm for Nonomnidirectional Robots. J. Field Robot. 2025, 42, 2260–2279.

- Aydemir, F.; Cetin, A. Multi-agent dynamic area coverage based on reinforcement learning with connected agents. Comput. Syst. Sci. Eng. 2023, 45, 215–230.

- Wang, Y.; Wang, Z.; Xing, N.; Zhao, S. UAV Coverage Path Planning Based on Deep Reinforcement Learning. In Proceedings of the 2023 IEEE 6th International Conference on Computer and Communication Engineering Technology (CCET), Beijing, China, 4–6 August 2023; pp. 143–147.

- Zhang, N.; Yue, L.; Zhang, Q.; Gao, C.; Zhang, B.; Wang, Y. A UAV Coverage Path Planning Method Based on a Diameter–Height Model for Mountainous Terrain. Appl. Sci. 2025, 15, 1988.

- Zhang, N.; Zhang, B.; Zhang, Q.; Gao, C.; Feng, J.; Yue, L. Large-Area Coverage Path Planning Method Based on Vehicle–UAV Collaboration. Appl. Sci. 2025, 15, 1247.

- Tong, D.; Wei, R. Regional coverage maximization: Alternative geographical space abstraction and modeling. Geogr. Anal. 2017, 49, 125–142.

- Le, A.V.; Parween, R.; Elara Mohan, R.; Khanh Nhan, N.H.; Enjikalayil, R. Optimization complete area coverage by reconfigurable hTrihex tiling robot. Sensors 2020, 20, 3170.

- Yao, P.; Wang, H.; Su, Z. Real-time path planning of unmanned aerial vehicle for target tracking and obstacle avoidance in complex dynamic environment. Aerosp. Sci. Technol. 2015, 47, 269–279.

- Feng, J.; Zhang, J.; Zhang, G.; Xie, S.; Ding, Y.; Liu, Z. UAV dynamic path planning based on obstacle position prediction in an unknown environment. IEEE Access 2021, 9, 154679–154691.

- Liu, W.; Zhang, B.; Liu, P.; Pan, J.; Chen, S. Velocity obstacle guided motion planning method in dynamic environments. J. King Saud Univ.-Comput. Inf. Sci. 2024, 36, 101889.

- Lindqvist, B.; Mansouri, S.S.; Agha-mohammadi, A.; Nikolakopoulos, G. Nonlinear MPC for collision avoidance and control of UAVs with dynamic obstacles. IEEE Robot. Autom. Lett. 2020, 5, 6001–6008.

- Liu, J.; Luo, W.; Zhang, G.; Li, R. Unmanned Aerial Vehicle Path Planning in Complex Dynamic Environments Based on Deep Reinforcement Learning. Machines 2025, 13, 162.

- Liu, Z.; Cao, Y.; Chen, J.; Li, J. A hierarchical reinforcement learning algorithm based on attention mechanism for UAV autonomous navigation. IEEE Trans. Intell. Transp. Syst. 2022, 24, 13309–13320.

- Guo, M.H.; Xu, T.X.; Liu, J.J.; Liu, Z.N.; Jiang, P.T.; Mu, T.J. Attention mechanisms in computer vision: A survey. Comput. Vis. Media 2022, 8, 331–368.

- Niu, Z.; Zhong, G.; Yu, H. A review on the attention mechanism of deep learning. Neurocomputing 2021, 452, 48–62.

- Rizzolatti, G.; Craighero, L. Advances in Psychological Science; Psychology Press: Montreal, QC, Canada, 1998; pp. 171–198.

- Guo, F.; Zhang, Y.; Tang, J.; Li, W. YOLOv3-A: A traffic sign detection network based on attention mechanism. J. Commun. 2021, 42, 87–99.

- Qiu, S.; Wu, Y.; Anwar, S.; Li, C. Investigating attention mechanism in 3D point cloud object detection. In Proceedings of the 2021 International Conference on 3D Vision (3DV), London, UK, 1–3 December 2021; pp. 403–412.

- Cabreira, T.M.; Brisolara, L.B.; Paulo, R.F.J. Survey on coverage path planning with unmanned aerial vehicles. Drones 2019, 3, 4.

- Yuan, J.; Liu, Z.; Lian, Y.; Chen, L.; An, Q.; Wang, L.; Ma, B. Global optimization of UAV area coverage path planning based on good point set and genetic algorithm. Aerospace 2022, 9, 86.

- Cao, Y.; Cheng, X.; Mu, J. Concentrated coverage path planning algorithm of UAV formation for aerial photography. IEEE Sens. J. 2022, 22, 11098–11111.

- Wu, X.; Wang, G.; Shen, N. Research on obstacle avoidance optimization and path planning of autonomous vehicles based on attention mechanism combined with multimodal information decision-making thoughts of robots. Front. Neurorobotics 2023, 17, 1269447.

- Chame, H.F.; Chevallereau, C. A top-down and bottom-up visual attention model for humanoid object approaching and obstacle avoidance. In Proceedings of the 2016 XIII Latin American Robotics Symposium and IV Brazilian Robotics Symposium (LARS/SBR), Recife, Brazil, 8–12 October 2016; pp. 25–30.

- Westheider, J.; Rückin, J.; Popović, M. Multi-UAV adaptive path planning using deep reinforcement learning. In Proceedings of the 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Detroit, MI, USA, 1–5 October 2023; pp. 649–656.

- Zhang, D.G.; Zhao, P.Z.; Cui, Y.; Chen, L.; Zhang, T.; Wu, H. A new method of mobile ad hoc network routing based on greed forwarding improvement strategy. IEEE Access 2019, 7, 158514–158524.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).