Abstract

This work presents the development of NOVA, an educational virtual assistant designed for the Parallel Computing course, built using a Retrieval-Augmented Generation (RAG) architecture combined with Large Language Models (LLMs). The assistant operates entirely in Spanish, supporting native-language learning and increasing accessibility for students in Latin American academic settings. It integrates vector and relational databases to provide an interactive, personalized learning experience that supports the understanding of complex technical concepts. Its core functionalities include the automatic generation of questions and answers, quizzes, and practical guides, all tailored to promote autonomous learning. NOVA was deployed in an academic setting at Universidad Politécnica Salesiana. Its modular architecture includes five components: a relational database for logging, a vector database for semantic retrieval, a FastAPI backend for application logic, a Next.js frontend for user interaction, and an integration server for workflow automation. The system uses the GPT-4o mini model to generate context-aware, pedagogically aligned responses. To evaluate its effectiveness, a test suite of 100 academic tasks was executed: 55 question-and-answer prompts, 25 practical guides, and 20 quizzes. NOVA achieved a 92% excellence rating, a 21-second average response time, and 72% retrieval coverage, confirming its potential as a reliable AI-driven tool for enhancing technical education.

1. Introduction

The integration of artificial intelligence (AI) in education has reshaped instructional practices, particularly through intelligent tutoring systems (ITS), virtual learning environments, and adaptive educational agents [,,]. These systems aim to personalize content delivery, provide real-time feedback, and improve learner engagement. Among the most transformative technologies are Large Language Models (LLMs), which enable natural language interaction and the generation of dynamic content []. When embedded in educational platforms, LLMs can support students in the construction of knowledge through context-sensitive explanations, automated assessments, and guided learning activities [,]. Recent initiatives have explored these models in diverse domains, including language learning, STEM education, and collaborative problem-solving [,,].

Courses in computing, engineering, and data science often involve complex abstract reasoning and domain-specific terminology, which require pedagogical tools capable of aligning content to curricular standards while maintaining conceptual clarity. In response to these challenges, researchers have begun to propose AI-powered educational assistants tailored to specialized domains [,,]. These efforts highlight the potential of intelligent systems to facilitate autonomous learning, especially when combined with retrieval mechanisms that ensure factual grounding and relevance [,].

Parallel computing is a foundational paradigm in contemporary computer science, underpinning advances in high-performance systems, big data processing, and artificial intelligence. Despite its growing importance, parallel programming presents pedagogical challenges, especially within undergraduate computer science curricula [,]. The technical complexity of the subject, which requires the understanding of concepts like concurrency, synchronization, and memory architecture, often relegates it to advanced levels of coursework [,]. This approach delays the exposure of students to parallel problem-solving strategies, potentially limiting their ability to internalize parallel thinking early in their academic journey.

Recent studies emphasize the importance of restructuring the way parallel programming is taught. Conte et al. demonstrated through empirical experiments involving 252 students that early exposure, even among those without prior computing knowledge, can produce learning outcomes comparable to or better than those of advanced students, especially when combined with active methodologies such as Team-Based Learning (TBL) and Problem-Based Learning (PBL) []. Neto et al., in a systematic review, reaffirmed the importance of using practical platforms such as low-cost clusters and single-board computers to deliver hands-on experiences in parallelism through tools such as OpenMP, MPI, and CUDA []. These findings suggest that educational strategies grounded in practice and accessibility can bridge gaps in learning complex computing paradigms.

Despite the growing interest in LLMs for educational applications [,,], challenges related to factual inaccuracies and limited contextual grounding persist, especially when addressing highly specialized technical content []. To mitigate these issues, hybrid frameworks such as Retrieval-Augmented Generation (RAG) have emerged as a promising solution. By integrating domain-specific knowledge sources into the generation process, RAG enhances the factual consistency and contextual relevance of AI-generated outputs [,,]. This architecture is particularly suited for instructional settings that demand alignment with curricular content and high levels of technical precision.

More advanced models, such as KG-RAG, take this a step further by incorporating structured knowledge graphs into the retrieval process. This approach not only mitigates hallucination risks but also helps maintain conceptual continuity across educational queries, aligning well with instructional goals. Reported experiments indicate that KG-RAG can significantly improve learning outcomes, with up to a 35% increase in student performance in controlled evaluations []. Such models have shown the capacity to ground answers in course materials and assist independent learning [,,,].

Although conversational AI is increasingly used in education, current intelligent tutoring systems and LLM-based assistants are overwhelmingly geared toward English-language resources, leaving Spanish-speaking learners with limited and fragmented support, particularly in advanced technical subjects such as parallel computing. This lack of linguistically and domain-appropriate study materials restricts self-directed learning, widens the achievement gap, and impedes early exposure to parallel thinking skills. Consequently, there is a pressing need for an educational assistant that (i) grounds its answers in authoritative Spanish-language sources and (ii) aligns explanations with the cognitive demands of parallel computing courses.

Therefore, in this study, we present NOVA, an intelligent virtual educational assistant developed for the Parallel Computing course at the Universidad Politécnica Salesiana. The design of NOVA was guided by the pedagogical and technical challenges outlined earlier: the complexity of parallel computing concepts, the limitations of generic LLMs in providing contextually aligned explanations, and the additional barrier posed by the predominance of English-language technical resources for Spanish-speaking students. To address these challenges, NOVA adopts a Retrieval-Augmented Generation (RAG) architecture, which allows the system to ground its responses in a curated corpus of course-specific materials, mitigating factual inaccuracies and ensuring conceptual alignment with curricular objectives.

The modular architecture comprises five distinct components: a relational database for interaction logging, a vector database for semantic retrieval, a FastAPI backend for orchestration, a Next.js frontend for user interaction, and an automation server for workflow management. This decomposition was chosen to enable scalability, maintainability, and a clear separation of responsibilities. The design supports real-time retrieval of relevant content, integration of user feedback, and delivery of culturally and linguistically appropriate instructional resources, making it particularly suitable for the demands of specialized technical education.

The main contributions of this paper include:

- Development and deployment of a RAG-based educational assistant optimized for Spanish-speaking students.

- Integration of domain-specific, parallel computing instructional materials into the retrieval framework.

- Empirical evaluation of the system’s effectiveness, measuring both the accuracy of responses and alignment with curricular objectives.

- Insights into the strengths and limitations of the RAG approach in technical educational settings.

The remainder of this article is structured as follows. Section 2 reviews related work in AI-based educational systems and parallel computing instruction. Section 3 outlines the design and implementation of the proposed assistant, detailing its system architecture and content pipeline. Section 4 presents the experimental setup and evaluation methodology. Section 5 discusses the results in terms of learning outcomes and system usability. Finally, Section 6 concludes with implications, limitations, and directions for future research.

2. Related Works

The integration of AI into educational environments has significantly advanced the personalization of learning. In particular, LLMs have emerged as key enablers of natural interaction and tailored feedback []. However, LLMs often exhibit factual hallucinations and a lack of contextual precision []. RAG frameworks have been developed to address these shortcomings by grounding responses in curated, domain-specific knowledge repositories, enhancing reliability in educational applications [,].

Kabudi et al. [] analyzed 147 studies published between 2014 and 2020 and identified a wide range of AI-enabled adaptive learning systems designed to support individualized instruction across multiple domains. Their study emphasized the potential of these systems to improve the quality of learning experiences and highlighted a significant gap between the design of such systems and their real-world implementation in higher education settings: most interventions remain at the prototype stage, and few are validated through deployment in formal academic environments.

LLM-based intelligent tutors have been piloted in a variety of educational settings. Chen [] proposed integrating ChatGPT (GPT-4) and Microsoft Copilot Studio into Microsoft Teams to support syntax instruction, demonstrating statistically significant improvements in learner outcomes. Oprea and Adela [] reviewed the transformative potential of LLMs in education, noting their effectiveness in promoting learner engagement. These studies confirm that LLMs can effectively personalize feedback and improve engagement, but they also highlight their limitations in terms of factual consistency and curricular alignment. Additionally, they predominantly target English-speaking learners and general education topics, overlooking advanced STEM domains and non-English speakers.

To mitigate factual inaccuracies and improve alignment, RAG-based approaches have been proposed. Dong et al. [] introduced KG-RAG, which combines knowledge graphs with RAG to deliver coherent and conceptually structured answers, reporting a 35% improvement in assessment scores. Similarly, Modran et al. [] proposed an LLM-based tutoring system indexed with LlamaIndex, showing improved engagement in engineering courses. NotebookLM, evaluated by Tufiño [], grounded AI interactions in course documents to support collaborative learning and reduce hallucinations. These RAG-based systems demonstrate superior factuality and alignment compared with pure LLMs, yet remain largely focused on English-language, non-specialized contexts. Taken together, these studies illustrate the promise of LLM-based and RAG-enhanced tutoring systems but also expose two persistent gaps: the lack of solutions tailored to advanced technical domains such as parallel computing, and the near-total absence of Spanish-language AI-powered educational tools. As Spanish is one of the most widely spoken languages globally, this linguistic gap represents an important equity concern in STEM education. NOVA directly addresses these deficits by providing a modular RAG-based virtual assistant grounded in curriculum-aligned materials in Spanish for parallel computing.

In the specific domain of computer science education, particularly parallel computing, Conte et al. [] demonstrated that early exposure to parallel programming, even among students without prior computing knowledge, yields comparable or superior learning outcomes when supported by active methodologies such as PBL and TBL. Neto et al. [] systematically reviewed parallel programming education, emphasizing the value of hands-on learning with low-cost clusters and standard parallel programming libraries.

To better situate our contribution, Table 1 summarizes a comparative analysis of the most relevant studies, focusing on domain specificity, AI architecture, level of personalization, and deployment maturity. This comparison highlights how our assistant uniquely targets complex technical education through the modular integration of Retrieval-Augmented Generation and Large Language Models, applied specifically to a university-level parallel computing course taught in Spanish, a context largely ignored by prior work.

Table 1.

Comparison of AI-Powered Educational Assistants Applied to Technical Subjects and NOVA’s Unique Spanish-Language Contribution.

3. Materials and Methods

The development of NOVA followed an iterative prototyping approach, consistent with design-based research principles, aimed at creating and refining an educational assistant that aligns with the pedagogical and linguistic needs of Spanish-speaking students in a Parallel Computing course. The system was developed through successive cycles of implementation, internal testing, and informal feedback from instructors and students to ensure that functionality, content, and user experience met curricular and cultural expectations. The primary design objectives were to support autonomous learning, ensure the availability of Spanish-language resources in a highly technical domain, and integrate officially validated course materials into the retrieval corpus.

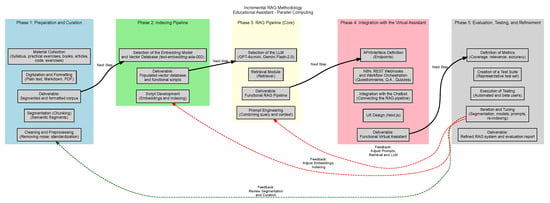

The resulting methodology is organized into five well-defined phases, each contributing to the transformation of instructional materials into an adaptive assistant. As shown in Figure 1, the pipeline incorporates feedback loops and continuous iteration across segmentation, indexing, model selection, and system integration to ensure accuracy, contextual relevance, and pedagogical value.

Figure 1.

Incremental RAG methodology for the development of the educational virtual assistant in parallel computing. The architecture is structured in five phases: (1) preparation and curation, (2) indexing pipeline, (3) RAG core pipeline, (4) integration with the virtual assistant, and (5) evaluation and refinement.

3.1. Phase 1: Preparation and Curation

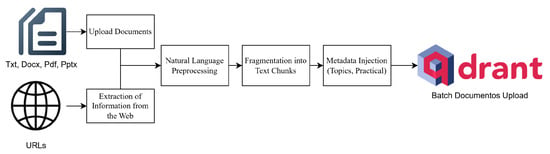

This phase focused on the structured preparation of educational materials for integration into the virtual assistant system. The complete pipeline followed in this phase, ranging from document ingestion to aggregation and vector indexing, is illustrated in Figure 2, which provides a visual overview of the steps involved in preparing the corpus for semantic retrieval.

Figure 2.

Data preparation and ingestion process for Phase 1. The flow includes document loading, natural language preprocessing, semantic chunking, metadata enrichment, and storage in the Qdrant vector database.

The process began with the collection of instructional resources corresponding to four thematic units of the Parallel Computing course, covering topics such as high-performance computing, parallel architectures, application development, and clustering techniques. The dataset included official lecture slides, laboratory practices, and exercise guides validated by instructors from the past four academic periods, excluding duplicated content and incorporating additional resources that had not been previously used. Table 2 summarizes the core units, number of documents, and types of educational materials included in the dataset.

Table 2.

Summary of core units, number of documents, and types of educational materials included in the knowledge base.

In the digitization and formatting stage, files in TXT, DOCX, PDF, and PPTX formats were uploaded, alongside unprotected URLs. Document ingestion was handled using the document loaders provided by the LangChain library [] (through their load() methods), while web content was extracted using Selenium-based scraping tools []. These technologies enabled effective retrieval of educational data from multiple sources, regardless of their original structure.

The extracted text underwent an intensive cleaning and preprocessing stage using the NLTK natural language processing library []. This included the removal of URLs, non-alphanumeric symbols, extraneous punctuation, unnecessary spacing, list markers, quotations, specific date formats, and technical abbreviations. The objective was to preserve only relevant textual content, ensuring high-quality semantic search outcomes in subsequent stages.
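The cleaning steps above can be approximated with regular expressions. The sketch below is illustrative only: it uses Python’s standard `re` module instead of NLTK, and every pattern (the date format, the list-marker rule, the set of allowed punctuation) is an assumption rather than NOVA’s actual rule set. Accented Spanish characters survive because `\w` is Unicode-aware for `str` patterns.

```python
import re

# Illustrative patterns only; NOVA's exact cleaning rules are not specified.
LIST_MARKER_RE = re.compile(r"^\s*(?:[-*•]|\d+[.)])\s+", re.MULTILINE)
URL_RE = re.compile(r"https?://\S+|www\.\S+")
DATE_RE = re.compile(r"\b\d{1,2}[/-]\d{1,2}[/-]\d{2,4}\b")  # e.g. 23/06/2025
QUOTES_RE = re.compile("[\"'“”«»‘’]")
# Anything that is not a word character, whitespace, or basic punctuation.
NON_ALNUM_RE = re.compile(r"[^\w\s.,;:¿?¡!()-]")
REPEAT_PUNCT_RE = re.compile(r"([.,;:!?])\1+")  # "!!" -> "!"
SPACES_RE = re.compile(r"\s+")


def clean_text(text: str) -> str:
    """Strip list markers, URLs, dates, quotes, stray symbols, and extra spacing."""
    text = LIST_MARKER_RE.sub("", text)
    text = URL_RE.sub(" ", text)
    text = DATE_RE.sub(" ", text)
    text = QUOTES_RE.sub("", text)
    text = NON_ALNUM_RE.sub(" ", text)
    text = REPEAT_PUNCT_RE.sub(r"\1", text)
    return SPACES_RE.sub(" ", text).strip()
```

For example, `clean_text("- Ver https://example.com el 23/06/2025: “MPI” y OpenMP!!  #paralelo")` reduces the line to plain words and punctuation while keeping the technical terms.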

Following preprocessing, the content was segmented into semantically meaningful units, or “chunks.” Only fragments exceeding 200 characters were retained to ensure sufficient information density. Each chunk was enriched with metadata, such as the original source and topic classification, allowing effective filtering and organization. The processed batches were then indexed in the Qdrant vector database using the text-embedding-3-small model, configured to produce 768-dimensional vectors []. This indexing step ensured compatibility with the assistant’s retrieval infrastructure.
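A minimal chunking sketch that applies the 200-character threshold and attaches metadata to each fragment. Grouping paragraphs up to a hypothetical `max_chars` limit is a simple stand-in for the semantic segmentation described above, and the metadata keys (`source`, `unit`, `topic`, `chunk_index`) are illustrative names.

```python
from dataclasses import dataclass, field


@dataclass
class Chunk:
    text: str
    metadata: dict = field(default_factory=dict)


def chunk_document(text: str, source: str, unit: str, topic: str,
                   max_chars: int = 1000, min_chars: int = 200) -> list:
    """Group paragraphs into chunks of at most max_chars and drop short fragments."""
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks, current = [], ""
    for para in paragraphs:
        candidate = f"{current}\n\n{para}" if current else para
        if len(candidate) <= max_chars:
            current = candidate
        else:
            if current:
                chunks.append(current)
            current = para  # an oversized paragraph is kept whole in this sketch
    if current:
        chunks.append(current)
    # Keep only fragments longer than min_chars, then enrich them with metadata.
    return [
        Chunk(c, {"source": source, "unit": unit, "topic": topic, "chunk_index": i})
        for i, c in enumerate(x for x in chunks if len(x) > min_chars)
    ]
```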

3.2. Phase 2: Indexing Pipeline

This phase focused on transforming the structured corpus into semantically indexed content suitable for retrieval through vector similarity. The process involved selecting and configuring embedding models, integrating them with a scalable vector database, and developing the backend logic required for ingestion and storage.

The first step consisted of evaluating appropriate embedding models capable of generating high-quality vector representations of the curated text. Two models were selected for different tasks within the pipeline. The model text-embedding-ada-002 from OpenAI was used for tasks involving semantic search and similarity computation, owing to its strong representational capacity and integration support. In parallel, the model text-embedding-3-small was chosen for large-scale document ingestion []. This model was configured to produce vectors with a dimensionality of 768, facilitating scalable indexing while maintaining semantic accuracy.

With the embedding strategy defined, implementation of the backend indexing system began. The backend was developed using Python 3.10 and LangChain, leveraging LangChain’s integration with the Qdrant vector database. The system was deployed in a Dockerized environment, which simplified configuration and scaling. Once initialized, the Qdrant client served as the primary interface for all document-related operations, including ingestion, modification, and retrieval. The backend exposed the standard create, read, update, and delete (CRUD) operations, which supports modularity and maintainability.

A complete set of methods was implemented to manage vector data within Qdrant. These methods included the validation of collection existence, the creation and registration of new collections, the ingestion of documents along with metadata, and the retrieval of stored content in structured formats. In addition, the backend supported the deletion and update of both individual documents and entire collections, allowing for full control over the dataset. Documents were organized within collections based on instructional units and themes, with metadata assigned to support targeted retrieval operations.
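The set of CRUD methods described above can be sketched as a small class. To keep the example self-contained it stores points in an in-memory dictionary; it is a hypothetical stand-in that mirrors the listed operations and does not reproduce the qdrant-client API.

```python
import uuid


class InMemoryVectorStore:
    """Hypothetical stand-in for the Qdrant-backed CRUD layer (not the qdrant-client API)."""

    def __init__(self):
        # collection name -> {point_id: {"vector": [...], "text": str, "metadata": dict}}
        self._collections = {}

    def collection_exists(self, name):
        return name in self._collections

    def create_collection(self, name):
        if self.collection_exists(name):
            raise ValueError(f"collection {name!r} already exists")
        self._collections[name] = {}

    def add_document(self, name, vector, text, metadata):
        point_id = str(uuid.uuid4())
        self._collections[name][point_id] = {
            "vector": list(vector), "text": text, "metadata": dict(metadata),
        }
        return point_id

    def get_documents(self, name, **filters):
        """Return documents whose metadata matches every key=value filter."""
        return [
            {"id": pid, **doc}
            for pid, doc in self._collections[name].items()
            if all(doc["metadata"].get(k) == v for k, v in filters.items())
        ]

    def update_document(self, name, point_id, **changes):
        self._collections[name][point_id].update(changes)

    def delete_document(self, name, point_id):
        del self._collections[name][point_id]

    def delete_collection(self, name):
        del self._collections[name]
```

Organizing collections by instructional unit, as in the text, would map to one `create_collection` call per unit, with topic and source kept in each point’s metadata.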

The development of indexing scripts was a critical deliverable of this phase. These scripts automated the loading of cleaned textual fragments, the generation of embeddings using the selected models, and the structured insertion of these vectors into the Qdrant collections. Each entry was enriched with metadata such as topic identifiers and the original source, supporting contextual filtering in later stages of the retrieval pipeline.

3.3. Phase 3: RAG Pipeline (Core)

This phase focused on designing and implementing the RAG pipeline, the core mechanism for generating contextually grounded educational content in response to user queries. The pipeline comprises four main components: model selection, semantic retrieval, prompt engineering, and the orchestration of content generation flows for different academic resources. All prompts, user inputs, and system outputs were developed and evaluated in Spanish, ensuring full alignment with the instructional language of the course and facilitating broader adoption across Latin American institutions.

The first component involved the selection of LLMs suitable for the assistant’s architecture. GPT-4o mini was chosen as the primary model for handling complex queries due to its robust comprehension capacity and operational efficiency [], along with its context window of up to 128,000 input tokens and a score of 82% on the Massive Multitask Language Understanding (MMLU) benchmark []. For lightweight tasks, the assistant employed Gemini 2.0 Flash, a high-speed model suited to fast responses with lower cognitive demand [].

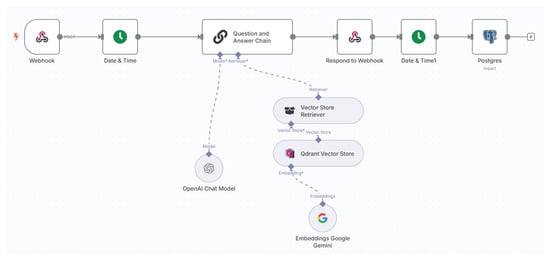

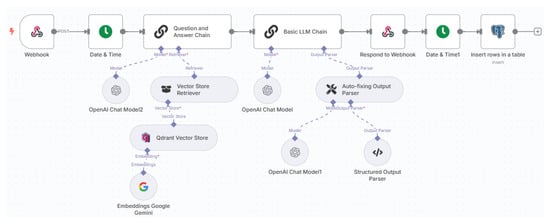

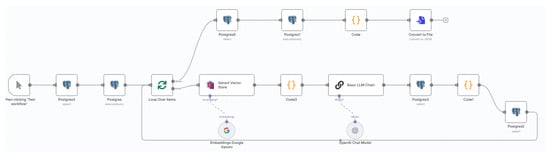

To complement the generation process, a semantic retrieval module was developed to extract the most relevant information from the vector database prior to generation. The assistant uses a vector similarity search in Qdrant to retrieve the five most relevant content fragments based on the user’s query, filtered by metadata parameters such as unit and topic. These filters, defined at the moment of document ingestion, ensure that the language model receives highly contextualized input. Figure 3 shows the workflow of the question-and-answer generation process.
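The retrieval step can be illustrated with a brute-force top-k search that applies metadata filters before ranking by cosine similarity. Qdrant performs this server-side with an approximate index, so the sketch only shows the logic; the point layout (`vector`, `metadata`) is an assumption. As in any vector search, the query and the stored fragments must be embedded with the same model so that their vectors share a dimensionality.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(query_vector, points, k=5, **filters):
    """Return the k points most similar to query_vector among those matching all filters."""
    candidates = [
        p for p in points
        if all(p["metadata"].get(key) == value for key, value in filters.items())
    ]
    ranked = sorted(candidates, key=lambda p: cosine(query_vector, p["vector"]), reverse=True)
    return ranked[:k]
```

Filtering first, as the text describes, means a highly similar fragment from the wrong unit or topic can never displace a relevant one from the requested unit.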

Figure 3.

Workflow for the generation of questions and answers. The assistant receives a student query, retrieves relevant fragments based on metadata, and returns a contextualized explanation with code examples. Red asterisks indicate required object-type variables for the proper functioning of the workflow node, for example, “Model.” Each node must be connected to an object of the type requested (e.g., GPT-4o-mini), and it cannot be connected to an incompatible object.

Once the relevant context is retrieved, prompt engineering plays a critical role in shaping the interaction with the language model. Custom prompt templates were designed for each type of academic resource. In the question-and-answer flow, the assistant is instructed to explain the concept in detail, provide sample code, and present an interactive explanation aligned with the topic selected by the student. An example of the prompt structure applied in this flow is shown in Algorithm 1.
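For illustration, a question-and-answer prompt could be assembled as follows. This is a hypothetical template, not the one in Algorithm 1: it only encodes the three instructions described above and numbers the retrieved fragments. NOVA’s prompts are written in Spanish; the English wording here is for readability, apart from the explicit instruction to answer in Spanish.

```python
# Hypothetical template encoding the three instructions of the Q&A flow.
QA_TEMPLATE = """You are NOVA, an educational assistant for the Parallel Computing course.
Answer in Spanish.

Unit: {unit}
Topic: {topic}

Retrieved context:
{context}

Student question: {question}

Instructions:
1. Explain the concept in detail, relying only on the context above.
2. Include a sample code snippet.
3. Present the explanation interactively and keep it aligned with the selected topic.
"""


def build_qa_prompt(question, unit, topic, fragments):
    """Fill the template, numbering the retrieved fragments for traceability."""
    context = "\n".join(f"[{i}] {frag}" for i, frag in enumerate(fragments, start=1))
    return QA_TEMPLATE.format(unit=unit, topic=topic, context=context, question=question)
```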

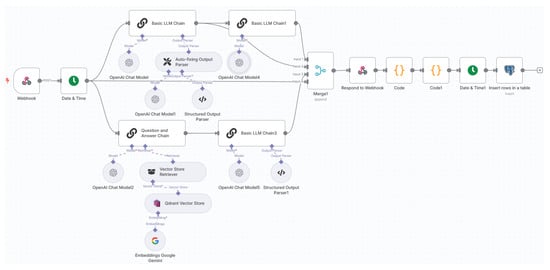

In the quiz generation flow, a structured prompt is used to create both theoretical and coding questions. The assistant is guided to generate a problem statement with a real-world use case, provide a traditional solution, and suggest a parallel computing code base to be completed by the student. The results are returned in JSON format and stored for later review. This process is shown in Figure 4.
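Because the quiz flow returns JSON, its output can be validated before it is stored. The sketch assumes a hypothetical schema (an `items` list whose entries carry `kind`, `statement`, `sequential_solution`, and `parallel_skeleton`); the field names actually used by NOVA are not given in the text.

```python
import json
from dataclasses import dataclass


@dataclass
class QuizItem:
    kind: str                 # hypothetical label: "theory" or "code"
    statement: str            # problem statement with a real-world use case
    sequential_solution: str  # traditional (sequential) solution
    parallel_skeleton: str    # parallel code base for the student to complete


REQUIRED_KEYS = {"kind", "statement", "sequential_solution", "parallel_skeleton"}


def parse_quiz(raw: str) -> list[QuizItem]:
    """Parse the model's JSON output and reject items with missing fields."""
    data = json.loads(raw)
    items = []
    for entry in data["items"]:
        missing = REQUIRED_KEYS - set(entry)
        if missing:
            raise ValueError(f"quiz item missing fields: {sorted(missing)}")
        items.append(QuizItem(**{k: entry[k] for k in REQUIRED_KEYS}))
    return items
```

Rejecting malformed items at this point keeps incomplete questions out of the stored quiz set that instructors later review.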

Figure 4.

Workflow for quiz generation. The assistant builds theory and code-based questions from course content and instructor instructions, providing detailed, structured output for each evaluation item. Red asterisks indicate required object-type variables for the proper functioning of the workflow node, for example, “Model.” Each node must be connected to an object of the type requested (e.g., GPT-4o-mini), and it cannot be connected to an incompatible object.

The final generation flow supports the creation of practical guides. These prompts are structured to generate a detailed problem description, a list of functional requirements, and an evaluation rubric based on a total score of ten points. Unlike the previous flows, the context in this case is restricted exclusively to documents tagged as laboratory practices, ensuring thematic and instructional alignment. Each response is saved and associated with metadata for future evaluation and analysis, as illustrated in Figure 5.
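Two checks implied by this flow are sketched below: restricting the context to fragments tagged as laboratory practices, and confirming that the generated rubric sums to ten points. The metadata key `content_type`, the tag `lab_practice`, and the rubric layout are hypothetical names chosen for illustration.

```python
def lab_context(fragments, unit, topic):
    """Keep only fragments tagged as laboratory practices for the requested unit and topic."""
    return [
        f for f in fragments
        if f["metadata"].get("content_type") == "lab_practice"
        and f["metadata"].get("unit") == unit
        and f["metadata"].get("topic") == topic
    ]


def validate_rubric(rubric, total=10.0):
    """Check that the rubric criteria add up to the expected total score."""
    points = sum(criterion["points"] for criterion in rubric)
    if abs(points - total) > 1e-9:
        raise ValueError(f"rubric sums to {points}, expected {total}")
    return points
```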

Figure 5.

Workflow for the generation of practical guides. The assistant processes filtered content tagged as lab exercises, producing a scenario, functional requirements, and a rubric aligned with course themes. Red asterisks indicate required object-type variables for the proper functioning of the workflow node, for example, “Model.” Each node must be connected to an object of the type requested (e.g., GPT-4o-mini), and it cannot be connected to an incompatible object.

The integration of all components (language model selection, semantic retrieval, and task-specific prompt engineering) resulted in a flexible, scalable architecture capable of delivering educational responses tailored to the course structure and instructional goals. This phase concluded with the deployment of a fully functional RAG pipeline, which forms the intelligent core of the educational assistant and enables real-time, pedagogically relevant interactions between students and the system.

Algorithm 1.

Prompt template used for the question-and-answer generation flow.

3.4. Phase 4: Integration with the Virtual Assistant

This phase focused on operationalizing the functionality of the system through a complete software architecture that connects users with the educational assistant. This was accomplished by defining a formal application interface, implementing service orchestration via workflow automation, integrating the retrieval and generation pipeline into a chatbot component, and deploying a fully interactive front-end interface based on modern web technologies. The system was named NOVA (short for Innovative Assistant), as it represents a next-generation approach to virtual educational support.

The first step involved the design of a RESTful API using FastAPI []. Each endpoint serves as a high-level interface, encapsulating authentication through JSON Web Tokens (JWT) [], parameter validation, and coordination of semantic retrieval and generative logic. Key endpoints such as /qa, /quiz, and /practice accept a JSON payload that includes the instructional unit, the topic, and optional user-defined parameters. Once validated, each request is assigned a unique trace identifier and forwarded to the corresponding webhook on the n8n platform []. In parallel, the server logs performance metrics and stores the complete transaction in PostgreSQL, ensuring full traceability.
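The request path described above (validation, trace identifier, forwarding to n8n) can be sketched with the standard library alone. This is not NOVA’s FastAPI code: a dataclass stands in for the request model, JWT authentication is omitted, and the webhook URLs are hypothetical (5678 is n8n’s default port). The function only builds the outgoing request; sending it and logging to PostgreSQL are left out.

```python
import json
import urllib.request
import uuid
from dataclasses import dataclass, field

# Hypothetical webhook URLs; the real n8n endpoints are not given in the text.
WEBHOOKS = {
    "qa": "http://n8n:5678/webhook/qa",
    "quiz": "http://n8n:5678/webhook/quiz",
    "practice": "http://n8n:5678/webhook/practice",
}


@dataclass
class AssistantRequest:
    unit: str
    topic: str
    options: dict = field(default_factory=dict)  # optional user-defined parameters

    def __post_init__(self):
        if not self.unit or not self.topic:
            raise ValueError("unit and topic are required")


def build_forward_request(endpoint, payload):
    """Validate the payload, attach a trace ID, and build the POST to the matching webhook."""
    if endpoint not in WEBHOOKS:
        raise KeyError(f"unknown endpoint: {endpoint}")
    req = AssistantRequest(**payload)  # unexpected keys raise TypeError
    trace_id = str(uuid.uuid4())
    body = json.dumps({"trace_id": trace_id, "unit": req.unit,
                       "topic": req.topic, "options": req.options}).encode("utf-8")
    forward = urllib.request.Request(
        WEBHOOKS[endpoint], data=body,
        headers={"Content-Type": "application/json"}, method="POST",
    )
    return trace_id, forward
```

Returning the trace identifier alongside the request lets the caller store it with the transaction record, which is what makes a later end-to-end trace possible.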

Each webhook represents a workflow associated with a specific assistant function. Upon receiving a request, the workflow initiates a semantic query in Qdrant, filtering documents by unit, topic, and, in some cases, practice metadata. The top five fragments are retrieved and injected into the prompt, which is sent to the selected Large Language Model. The model response is post-processed, formatted as plain text for question answering or as JSON for quizzes and guides, and returned to the front end. The same workflow logs execution time and stores all inputs and outputs for further analysis. This architecture decouples client and server logic, allows horizontal scaling, and enables educators to modify assistant behavior without changing backend code.
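The per-webhook workflow (retrieve five fragments, build the prompt, call the model, post-process, log timing) is sketched below as a plain function. The `retrieve`, `generate`, and `log` callables are hypothetical interfaces injected by the caller; in NOVA these steps are n8n nodes rather than Python code.

```python
import json
import time


def run_workflow(resource_type, query, retrieve, generate, log):
    """Retrieve context, call the model, post-process the output, and log timing.

    retrieve(query, k), generate(prompt), and log(record) are hypothetical callables.
    """
    start = time.perf_counter()
    fragments = retrieve(query, k=5)
    prompt = query + "\n\nContext:\n" + "\n".join(fragments)
    raw = generate(prompt)
    # Plain text for Q&A; JSON for quizzes and practical guides.
    output = raw.strip() if resource_type == "qa" else json.loads(raw)
    elapsed = time.perf_counter() - start
    log({"type": resource_type, "query": query, "prompt": prompt,
         "output": output, "seconds": elapsed})
    return output
```

Injecting the retriever and model as callables mirrors the decoupling described in the text: either can be swapped without touching the orchestration logic.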

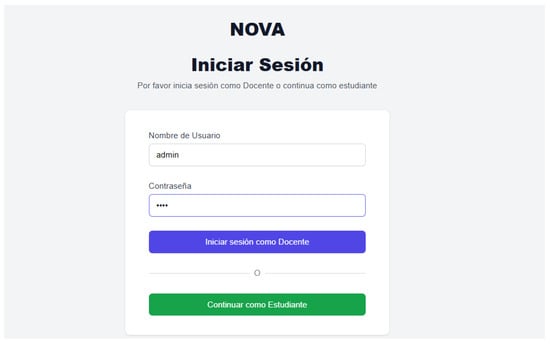

The front-end system was developed using Next.js. Authentication and access control are managed through a security model based on JWT, which ensures that users can only access content permitted by their roles. As shown in Figure 6, the login page serves as the authentication gateway for both students and instructors. Unauthorized users are redirected to a custom access-denied view, reinforcing secure navigation across the application.
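The role check can be illustrated with a minimal HS256 JSON Web Token verifier built on `hmac` and `hashlib`. The signing algorithm, the `role` claim, and the role names are assumptions, since the text does not specify them; a production deployment would normally rely on a maintained JWT library rather than hand-rolled code.

```python
import base64
import hashlib
import hmac
import json
import time


def _b64url_encode(data: bytes) -> str:
    # JWT segments use base64url without "=" padding.
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(segment: str) -> bytes:
    # Restore the stripped padding before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def sign_hs256(claims: dict, secret: bytes) -> str:
    """Create a compact HS256 token (used here only to exercise authorize)."""
    header = _b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = _b64url_encode(json.dumps(claims).encode())
    signature = hmac.new(secret, f"{header}.{payload}".encode("ascii"), hashlib.sha256).digest()
    return f"{header}.{payload}.{_b64url_encode(signature)}"


def authorize(token: str, secret: bytes, allowed_roles: set) -> dict:
    """Verify algorithm, signature, and expiry, then check the role claim."""
    header, payload, signature = token.split(".")
    if json.loads(_b64url_decode(header)).get("alg") != "HS256":
        raise PermissionError("unexpected algorithm")
    expected = hmac.new(secret, f"{header}.{payload}".encode("ascii"), hashlib.sha256).digest()
    # Constant-time comparison avoids leaking signature bytes through timing.
    if not hmac.compare_digest(expected, _b64url_decode(signature)):
        raise PermissionError("invalid signature")
    claims = json.loads(_b64url_decode(payload))
    if claims.get("exp", float("inf")) < time.time():
        raise PermissionError("token expired")
    if claims.get("role") not in allowed_roles:
        # A front end would redirect here to the access-denied view.
        raise PermissionError("role not allowed")
    return claims
```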

Figure 6.

Login page of NOVA. This interface handles secure access for both students and instructors using JWT-based authentication (all labels in the interface are in Spanish, as the assistant is designed for native-language use in Latin American academic environments).

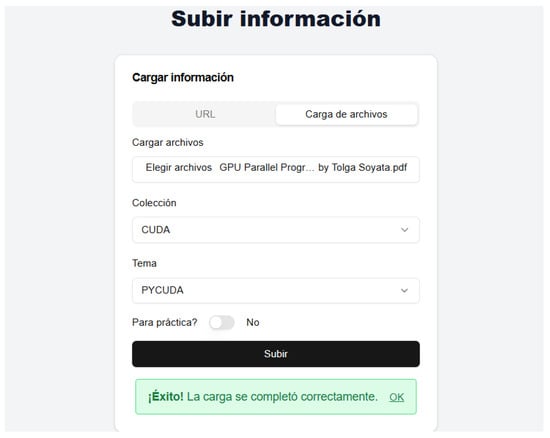

Once authenticated, instructors gain access to dashboards where they can upload instructional materials, inspect stored content fragments, and monitor system usage metrics. Figure 7 presents the interface for uploading content to the assistant’s knowledge base. In contrast, students interact directly with the assistant by submitting questions, generating quizzes, or creating guided practice assignments through a streamlined interface.

Figure 7.

Upload page in NOVA. This interface allows instructors to submit new knowledge artifacts by selecting the unit, topic, and content type (e.g., e-book, presentations, lab practice). These data are used to index and contextualize future responses (all labels in the interface are in Spanish, as the assistant is designed for native-language use in Latin American academic environments).

The chatbot (question and answer) module acts as the main entry point for student interaction. When a user sends a message, the system adds a conversation identifier and forwards the request to the /chat endpoint. The backend then coordinates the retrieval of relevant fragments via Qdrant and injects them into the prompt submitted to GPT-4o mini. The generated response is streamed to the user interface using Server-Sent Events (SSE), enabling real-time feedback.
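Server-Sent Events deliver a stream as `data:` lines, with each event terminated by a blank line. The generator below formats model tokens in that wire format and tags each event with the conversation identifier; the JSON field names and the final `done` event are assumptions for illustration.

```python
import json
import uuid


def sse_events(tokens, conversation_id=None):
    """Yield Server-Sent Events frames for a stream of generated tokens."""
    conversation_id = conversation_id or str(uuid.uuid4())
    for token in tokens:
        # json.dumps escapes newlines, so each event fits on a single "data:" line.
        payload = json.dumps({"conversation_id": conversation_id, "delta": token})
        yield f"data: {payload}\n\n"
    # Hypothetical terminal event so the client knows the answer is complete.
    yield "event: done\ndata: {}\n\n"
```

In FastAPI, a generator like this can be returned through `StreamingResponse(..., media_type="text/event-stream")`, and the browser can consume it with the standard `EventSource` interface.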

By the end of this phase, the educational assistant had been deployed as a functional system hosted on a secure public domain with HTTPS encryption (for scientific validation, NOVA is temporarily hosted at https://cloudcomputing.ups.edu.ec/asistente-educativo, accessed on 23 June 2025; access is intended exclusively for academic evaluation purposes and may be discontinued after the end of the research project). It integrates five Docker containers (PostgreSQL, Qdrant, FastAPI, Next.js, and n8n), all orchestrated under a single configuration. The result is a modular, extensible, and production-ready educational assistant that supports robust authentication, interaction logging, and seamless integration with current and future educational needs.

3.5. Phase 5: Evaluation, Testing, and Refinement

The final methodological phase involved the design and execution of a rigorous evaluation protocol to ensure the pedagogical alignment, functional reliability, and iterative improvement of the educational assistant. Recognizing that retrieval-augmented generation systems can encounter issues at each of their stages (retrieval, augmentation, and generation), this phase established metrics, workflows, and processes for identifying and addressing such limitations.

To begin, a set of core evaluation metrics was defined to assess the effectiveness of the assistant. These included coverage, to measure the proportion of retrieved content effectively used in responses; relevance, to ensure contextual alignment between queries and content; and accuracy, to verify that responses met academic expectations and avoided hallucinations. The metric design accounted for the complexity of parallel computing topics and the need for high-quality support in self-directed learning environments. These metrics were conceived as descriptive indicators within the specific instructional context, without predefined acceptability thresholds or inferential statistical testing, since the purpose of the evaluation was to demonstrate feasibility and pedagogical alignment rather than statistical significance.

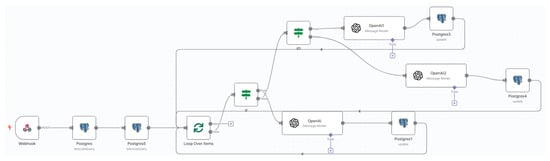

Custom end-to-end (E2E) evaluation workflows were created using the n8n platform []. These workflows enabled comprehensive testing by processing user interactions from input to final output and routing them through conditional logic based on resource type (e.g., Q&A, practical guides, or quizzes). Each type of deliverable was evaluated using specialized prompts that reflected the pedagogical criteria expected for that resource. The evaluation process was automated, with trace records filtered from the PostgreSQL database and scored according to structured rubrics. Figure 8 illustrates the flow used for the evaluation of academic resources.

Figure 8.

Workflow used for the end-to-end evaluation of academic resources, including practical guides, quizzes, and Q&A modules.
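The conditional routing in the n8n workflow can be approximated in plain Python as a dispatch table keyed by resource type. In this sketch, the resource-type labels, the rubric text, and the `score_fn` callable (a stand-in for the LLM evaluator) are all hypothetical. In NOVA itself, the traces are read from PostgreSQL and the routing is done by n8n nodes.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical rubric summaries, one per deliverable type.
RUBRICS: dict[str, str] = {
    "qa": "Is the answer correct, grounded in the context, and clearly explained?",
    "guide": "Does the guide state goals, requirements, and a usable grading rubric?",
    "quiz": "Are the questions answerable, aligned with the topic, and unambiguous?",
}


@dataclass
class Trace:
    trace_id: int
    resource_type: str
    question: str
    response: str


def evaluate_traces(
    traces: list[Trace], score_fn: Callable[[str, Trace], int]
) -> dict[int, int]:
    """Route each trace to the rubric for its type and collect a 1-3 score."""
    scores: dict[int, int] = {}
    for trace in traces:
        rubric = RUBRICS.get(trace.resource_type)
        if rubric is None:
            continue  # skip traces whose type has no evaluator
        score = score_fn(rubric, trace)
        if score not in (1, 2, 3):
            raise ValueError(f"score out of range for trace {trace.trace_id}: {score}")
        scores[trace.trace_id] = score
    return scores


def stub_score(rubric: str, trace: Trace) -> int:
    """Stub evaluator for illustration: rates any non-empty response as 3."""
    return 3 if trace.response.strip() else 1


traces = [
    Trace(1, "qa", "What is MPI_Bcast?", "It sends data from a root to all ranks."),
    Trace(2, "quiz", "Quiz on CUDA", ""),
    Trace(3, "unknown", "n/a", "n/a"),
]
print(evaluate_traces(traces, stub_score))  # {1: 3, 2: 1}
```

Injecting `score_fn` keeps the routing logic testable without calling a model. A real evaluator would send the rubric and trace to the LLM.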

A separate workflow was dedicated to coverage testing [], assessing whether the most relevant information retrieved from the vector store was actually used by the language model when generating responses. This included calculating the ratio of used to retrieved fragments, verifying contextual alignment, and tracking the influence of document metadata. Figure 9 presents the coverage evaluation pipeline.

Figure 9.

Coverage evaluation workflow to analyze how retrieved context influences the generation phase. Red asterisks indicate required object-type variables for the proper functioning of the workflow node, for example, “Model.” Each node must be connected to an object of the type requested (e.g., GPT-4o-mini), and it cannot be connected to an incompatible object.
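The coverage ratio can be written as the share of retrieved fragments that the generated response actually uses. The sketch assumes that each fragment has an identifier and that the set of used identifiers is already known, for example from citations in the response or from the evaluator's judgment. How NOVA decides that a fragment was "used" is not specified here, so that step is left as an input.

```python
def coverage(retrieved_ids: list[str], used_ids: set[str]) -> float:
    """Fraction of retrieved fragments that appear among the used fragments.

    Used identifiers that were never retrieved are ignored by the intersection.
    """
    if not retrieved_ids:
        return 0.0
    retrieved = set(retrieved_ids)
    return len(retrieved & used_ids) / len(retrieved)


def mean_coverage(cases: list[tuple[list[str], set[str]]]) -> float:
    """Average per-response coverage over a test suite."""
    if not cases:
        return 0.0
    return sum(coverage(r, u) for r, u in cases) / len(cases)


# Two hypothetical responses, each with top-5 retrieval.
cases = [
    (["f1", "f2", "f3", "f4", "f5"], {"f1", "f2", "f3", "f4"}),  # 4 of 5 used
    (["g1", "g2", "g3", "g4", "g5"], {"g1", "g2", "g3"}),        # 3 of 5 used
]
print(mean_coverage(cases))
```

This is a macro-average (each response counts equally). Pooling all fragments across responses would give a micro-average, which can differ when responses retrieve different numbers of fragments.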

To support these metrics, a representative test suite was assembled based on real course scenarios []. This suite included question prompts, guide generation requests, and quiz creation tasks derived from course syllabi and materials, and ensured consistent coverage across instructional topics. All interactions were logged into the trace database and classified by type for later analysis.

Following initial evaluations, iterative tuning was performed to improve system performance. These refinements targeted several components of the architecture: the chunking logic was refined so that content is split along semantic boundaries; metadata filters were adjusted for more precise document retrieval; and prompt engineering strategies were adapted to the instructional tone and level of specificity required by each resource type. Updated content was reindexed so that these changes were reflected in subsequent queries.
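The exact segmentation rules used in NOVA are not given. As one illustration of semantically aware chunking, the sketch below splits text on paragraph boundaries (blank lines) and packs whole paragraphs into chunks up to a character budget. It also carries the last paragraph over as overlap so that context is not cut mid-idea. The `max_chars` default and the one-paragraph overlap are assumptions.

```python
def chunk_paragraphs(text: str, max_chars: int = 800, overlap: bool = True) -> list[str]:
    """Pack whole paragraphs into chunks of at most max_chars characters.

    A single paragraph longer than max_chars becomes its own chunk. When
    overlap is True, each new chunk starts with the last paragraph of the
    previous chunk, as long as that still fits within the budget.
    """
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks: list[str] = []
    current: list[str] = []
    for para in paragraphs:
        candidate = "\n\n".join(current + [para])
        if current and len(candidate) > max_chars:
            chunks.append("\n\n".join(current))
            carried = current[-1:] if overlap else []
            # Drop the carried paragraph if it would not leave room for the new one.
            if carried and len("\n\n".join(carried + [para])) > max_chars:
                carried = []
            current = carried + [para]
        else:
            current.append(para)
    if current:
        chunks.append("\n\n".join(current))
    return chunks


doc = "MPI basics.\n\nBroadcast sends data from the root.\n\nScatter splits data."
for chunk in chunk_paragraphs(doc, max_chars=60):
    print(repr(chunk))  # two chunks; the second repeats the "Broadcast" paragraph
```

Production pipelines often measure the budget in tokens rather than characters. The paragraph-packing logic stays the same.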

The scoring criteria were implemented using structured evaluation prompts, such as the one shown in Algorithm 2, which defines the end-to-end evaluation logic for chatbot responses in the Q&A module.

Algorithm 2. End-to-End Evaluation Prompt for Q&A Responses Generated by the Virtual Assistant

Require: User question, contextual input, assistant’s response

Ensure: Evaluation score (1: Poor, 2: Fair, 3: Excellent)
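Only the inputs (Require) and output (Ensure) of Algorithm 2 are recoverable here. The sketch below is therefore a reconstruction of its interface, not the authors' prompt. It defines one function that assembles the three inputs into an evaluation prompt and another that extracts the 1 to 3 score from the evaluator's reply. The prompt wording and the `SCORE: n` reply convention are assumptions.

```python
import re
from typing import Optional

# Hypothetical prompt template; the authors' actual wording is not reproduced here.
EVAL_TEMPLATE = """You are evaluating an answer produced by a course assistant.
Question:
{question}

Context retrieved from course materials:
{context}

Assistant's response:
{response}

Rate the response: 1 = Poor, 2 = Fair, 3 = Excellent.
Reply with a line of the form SCORE: <1|2|3> followed by a brief justification."""

# Matches "SCORE: 3" but not "SCORE: 30".
SCORE_RE = re.compile(r"SCORE:\s*([123])\b")


def build_eval_prompt(question: str, context: str, response: str) -> str:
    """Fill the evaluation template with the three inputs listed under Require."""
    return EVAL_TEMPLATE.format(question=question, context=context, response=response)


def parse_score(reply: str) -> Optional[int]:
    """Extract the 1-3 score from the evaluator's reply; None if it is missing."""
    match = SCORE_RE.search(reply)
    return int(match.group(1)) if match else None


prompt = build_eval_prompt(
    "What does a broadcast do in MPI?",
    "Slide 12: broadcast sends the root's data to every process.",
    "It copies data from the root process to all other processes.",
)
print(parse_score("SCORE: 3\nThe answer is grounded in the context."))  # 3
```

Returning `None` for an unparseable reply lets the workflow flag the trace for re-evaluation instead of silently assigning a score.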

Finally, the structured evaluation and tuning protocol established in this phase allowed for scalable validation and laid the foundation for generating a complete system evaluation report. This methodological rigor ensured that the educational assistant evolved on the basis of concrete academic criteria, reinforcing its alignment with the instructional needs of parallel computing courses.

4. Results

This section presents the outcomes derived from the evaluation of the educational assistant system deployed in the Parallel Computing course. The evaluation process was designed to measure the system’s performance from both technical and academic perspectives, using a structured suite of one hundred test cases. These cases included question-and-answer tasks, quiz generation, and practical guide creation.

Each test followed a complete sequence of retrieval and generation, allowing for the collection of metrics such as response time, quality rating, and relevance of contextual fragments. Additionally, qualitative analysis was conducted to evaluate instructional clarity and contextual alignment. The results are presented in the following subsections, which reflect the assistant’s capability to support autonomous learning and deliver value in real academic scenarios.

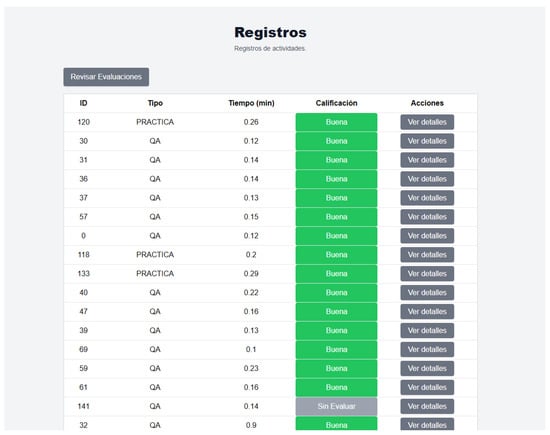

4.1. System Response and Content Evaluation

A total of 100 test cases were executed: 55 question-and-answer interactions, 25 guide requests, and 20 quiz generation prompts. Each response was automatically logged and assessed on a scale from 1 to 3, where 3 indicated excellent quality, 2 acceptable, and 1 insufficient, as detailed in Table 3. Across all cases, 92 percent received a score of 3, indicating that the automated evaluator judged them to be of high quality, instructionally relevant, and consistent with teacher guidelines. These scores were derived entirely from the automated evaluation workflow described in Section 3.5 (Algorithm 2).

Table 3.

Evaluation of academic responses by resource type.

Response time varied by task complexity. Simple tasks such as question-and-answer delivery were completed within 5 to 10 s. More elaborate requests like quizzes and guides took between 25 and 35 s, with a global average of 21 s. In most cases, the assistant generated content that included explanations, coding examples, or assessment rubrics aligned with the course’s educational materials.
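Because the test suite mixes task types in unequal numbers, the global average response time is a count-weighted mean of the per-type averages. With $n_{\text{QA}} = 55$, $n_{\text{guide}} = 25$, and $n_{\text{quiz}} = 20$ test cases, and $\bar{t}_k$ denoting the mean response time of type $k$:

```latex
\bar{t} = \frac{\sum_{k} n_k \, \bar{t}_k}{\sum_{k} n_k}
        = \frac{55\,\bar{t}_{\text{QA}} + 25\,\bar{t}_{\text{guide}} + 20\,\bar{t}_{\text{quiz}}}{100}
```

Since Q&A requests make up more than half of the suite, their shorter times carry the largest weight in $\bar{t}$.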

To evaluate the effectiveness of the retrieval component, a coverage metric was used. This metric is the proportion of fragments retrieved from the vector database that are actually used in the generated response. The average coverage score was 72 percent, indicating that most responses were grounded in the relevant course materials. Coverage at this level reduces reliance on the internal parametric knowledge of the LLM, which lowers the risk of hallucinations and helps keep the generated educational content factually consistent and aligned with the instructional objectives.

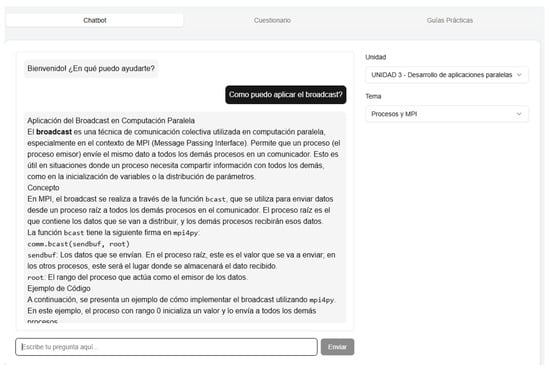

4.2. Instructional Alignment and Case Examples

Selected test cases were analyzed to evaluate how well the assistant aligned with the academic content and learning objectives. In the case of questions and answers, the assistant delivered structured responses using terminology and examples consistent with the course slides and teacher guides. For example, in a query about the application of broadcast in parallel computing using the MPI library, the assistant retrieved fragments from source materials and constructed precise explanations with corresponding code. The response included a clear definition, technical context, usage details, and a demonstration using the mpi4py library. This structured explanation aligned with the course content and provided students with both conceptual understanding and practical implementation guidance (see Figure 10).

Figure 10.

Example of a question-and-answer interaction. The assistant explains the concept of broadcast in parallel computing with a structured explanation and a code snippet aligned with the MPI library (all labels in the interface are in Spanish, as the assistant is designed for native-language use in Latin American academic environments).

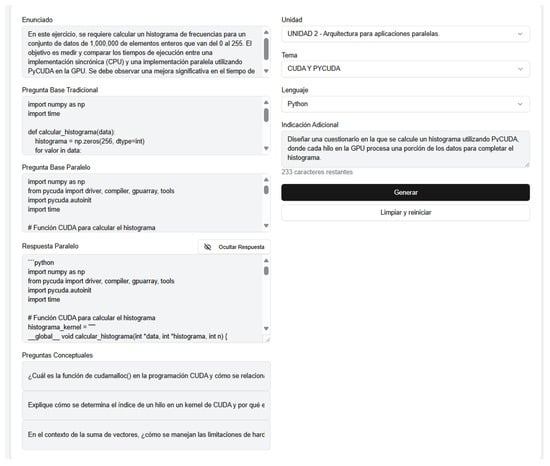

Quizzes produced by the assistant combined conceptual understanding with code-based challenges. Many included scenarios in which students had to compare execution performance between sequential and parallel implementations. These quizzes reflected classroom practices and emphasized the application of core concepts. For example, in a request related to histogram calculation using CUDA and PyCUDA, the assistant generated a side-by-side implementation of the solution on both CPU and GPU, followed by multiple-choice questions that assessed the student’s understanding of parallel execution and memory handling. This structured output demonstrated the assistant’s ability to scaffold learning through problem solving and targeted questioning (see Figure 11).

Figure 11.

Example of a quiz generated by the assistant. The quiz includes a problem statement, sequential and parallel base code, an expected output, and concept-based multiple-choice questions (all labels in the interface are in Spanish, as the assistant is designed for native-language use in Latin American academic environments).
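The quiz layout in Figure 11 (problem statement, sequential and parallel base code, expected output, and multiple-choice questions) maps naturally onto a small data structure. The sketch below is a hypothetical schema, not NOVA's internal format. Its validation rule (at least two options and exactly one correct option per question) is an assumption about how such quizzes could be checked before being shown to students.

```python
from dataclasses import dataclass, field


@dataclass
class Choice:
    text: str
    correct: bool = False


@dataclass
class Question:
    prompt: str
    choices: list[Choice]

    def is_valid(self) -> bool:
        """A question needs at least two options and exactly one correct one."""
        return len(self.choices) >= 2 and sum(c.correct for c in self.choices) == 1


@dataclass
class Quiz:
    problem_statement: str
    sequential_code: str
    parallel_code: str
    expected_output: str
    questions: list[Question] = field(default_factory=list)

    def invalid_questions(self) -> list[int]:
        """Indices of questions that fail validation."""
        return [i for i, q in enumerate(self.questions) if not q.is_valid()]


quiz = Quiz(
    problem_statement="Compute a 256-bin histogram of a large byte array.",
    sequential_code="# CPU loop over the array (placeholder)",
    parallel_code="# CUDA kernel using atomicAdd on a shared histogram (placeholder)",
    expected_output="Identical histograms on CPU and GPU",
    questions=[
        Question("Why are atomic operations needed in the GPU version?", [
            Choice("Several threads may update the same bin concurrently", True),
            Choice("Atomics make the kernel run sequentially"),
        ]),
        Question("Which version is expected to scale better?", [
            Choice("CPU"), Choice("GPU"),  # no option marked correct
        ]),
    ],
)
print(quiz.invalid_questions())  # [1]
```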

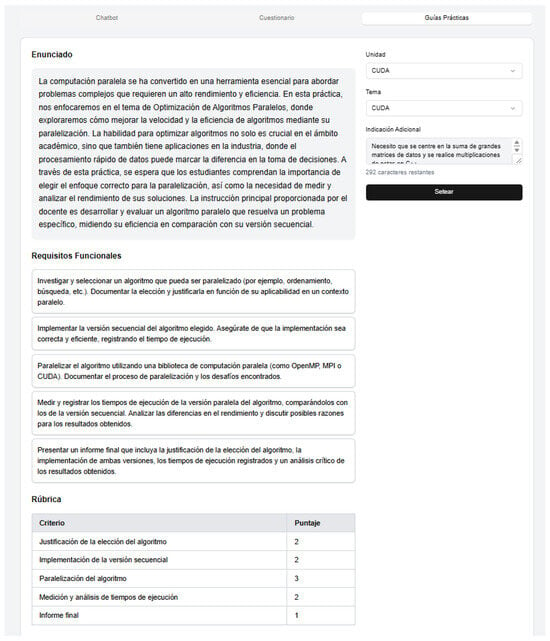

For practical guides, the assistant generated complete instruction sets, including functional goals, evaluation rubrics, and partially completed code fragments. These guides adhered to the standards defined by instructors and covered specific topics such as multiprocessing, MPI, CUDA, thread synchronization, and parallel programming in general. The generated responses followed a format that supported both formative and summative evaluation. For example, in a guide focused on optimizing parallel algorithms using CUDA, the assistant generated a scenario in which students had to implement and evaluate the addition and multiplication of large matrices, comparing the efficiency of the parallel version with its sequential counterpart. The guide included detailed implementation instructions, functional requirements, and a grading rubric addressing justification, parallelization, execution measurements, and critical analysis (see Figure 12).

Figure 12.

Practical guide generation page. Students can define the unit and topic, then receive a detailed scenario, functional requirements, and a rubric to guide their implementation (all labels in the interface are in Spanish, as the assistant is designed for native-language use in Latin American academic environments).
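The grading rubric in the generated guides addresses four criteria: justification, parallelization, execution measurements, and critical analysis. A weighted rubric of that shape can be sketched as follows. The weights and the 0 to 4 performance levels are hypothetical and would be set by the instructor.

```python
# Hypothetical weights (must sum to 1.0) for the four criteria named in the guide.
RUBRIC_WEIGHTS = {
    "justification": 0.20,
    "parallelization": 0.35,
    "execution_measurements": 0.25,
    "critical_analysis": 0.20,
}
MAX_LEVEL = 4  # each criterion is scored on a 0..4 performance scale


def grade(levels: dict[str, int], max_points: float = 10.0) -> float:
    """Convert per-criterion levels into a weighted grade out of max_points."""
    if abs(sum(RUBRIC_WEIGHTS.values()) - 1.0) > 1e-9:
        raise ValueError("rubric weights must sum to 1")
    missing = RUBRIC_WEIGHTS.keys() - levels.keys()
    if missing:
        raise ValueError(f"missing criteria: {sorted(missing)}")
    total = 0.0
    for criterion, weight in RUBRIC_WEIGHTS.items():
        level = levels[criterion]
        if not 0 <= level <= MAX_LEVEL:
            raise ValueError(f"level out of range for {criterion}: {level}")
        total += weight * level / MAX_LEVEL
    return total * max_points


sample_levels = {
    "justification": 4,
    "parallelization": 3,
    "execution_measurements": 4,
    "critical_analysis": 2,
}
print(grade(sample_levels))
```

Weighting parallelization most heavily is only one possible choice. It reflects the guide's focus on comparing parallel and sequential versions.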

4.3. User Interaction Monitoring and System Usage

The user interface allowed both students and instructors to interact with the assistant in different ways. Each request generated a trace that was stored with metadata, including the type of resource, response time, and evaluation score. These data were visualized in a dashboard accessible to administrators, providing visibility into system usage patterns and performance.

The dashboard included metrics on the most used services, average processing time, and the percentage of high-quality outputs. These analytics provided continuous feedback for system refinement and served as a foundation for future expansion. Figure 13 shows the dashboard used to monitor these interactions.

Figure 13.

System usage dashboard with records of interactions, response times, and evaluation scores (all labels in the interface are in Spanish, as the assistant is designed for native-language use in Latin American academic environments).
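The dashboard indicators (most used services, average processing time, and share of high-quality outputs) are simple aggregations over the trace table. The sketch below uses Python's built-in `sqlite3` module as a stand-in for PostgreSQL. The `traces` table and its column names are hypothetical, and the sample rows are illustrative, not data from the study. The same `GROUP BY` query should run on PostgreSQL with little or no change.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    """CREATE TABLE traces (
           id INTEGER PRIMARY KEY,
           resource_type TEXT NOT NULL,   -- 'qa', 'guide', or 'quiz'
           response_seconds REAL NOT NULL,
           score INTEGER NOT NULL CHECK (score BETWEEN 1 AND 3)
       )"""
)
# Illustrative rows only.
conn.executemany(
    "INSERT INTO traces (resource_type, response_seconds, score) VALUES (?, ?, ?)",
    [("qa", 6.0, 3), ("qa", 8.0, 3), ("qa", 9.0, 2), ("quiz", 30.0, 3), ("guide", 28.0, 3)],
)

# Requests per service, mean latency, and share of excellent (score 3) outputs.
DASHBOARD_SQL = """
SELECT resource_type,
       COUNT(*) AS requests,
       AVG(response_seconds) AS avg_seconds,
       100.0 * SUM(CASE WHEN score = 3 THEN 1 ELSE 0 END) / COUNT(*) AS pct_excellent
FROM traces
GROUP BY resource_type
ORDER BY requests DESC, resource_type
"""

for row in conn.execute(DASHBOARD_SQL):
    print(row)
```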

5. Discussion

The implementation and evaluation of the educational virtual assistant for the Parallel Computing course indicate that a retrieval-augmented generation architecture, when supported by a structured knowledge base and carefully designed workflows, can produce accurate and pedagogically aligned academic content. The assistant’s consistent performance across question-and-answer sessions, quizzes, and practical guides supports the initial working hypothesis that generative language models, when properly guided by contextual data, can replicate instructional tasks commonly performed by educators.

The high scores, with 92% of responses rated as excellent and an average coverage of 72% of retrieved fragments, indicate that combining a semantically indexed academic corpus with targeted prompt engineering and LLM generation contributes to a reliable content delivery mechanism. The materials used in this study were drawn from a structured academic dataset covering four core units of the Parallel Computing course, including subtopics such as CUDA, PyCUDA, the Message Passing Interface (MPI), and clustering.

These preliminary findings suggest that NOVA’s high relevance and accuracy scores are attributable to its modular architecture, the inclusion of validated course materials, careful prompt tuning, and temperature adjustment. The observed coverage (72%) likely reflects both the limitations of the curated corpus, since certain answers required information not explicitly present in the uploaded materials, and the controlled retrieval strategy (top-5 fragments). To mitigate this limitation, prompt tuning and a temperature between 0.2 and 0.35 were employed. This configuration strikes a balance: it directs the model to base its responses primarily on the context provided by the instructor’s materials, while still allowing it to draw on its internal knowledge to fill gaps when the corpus lacks sufficient detail. A lower temperature, closer to 0, would likely have led the model to decline to answer when the context was insufficient. This balance is intended to keep answers thematically aligned with the course while maintaining overall quality.
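To make the role of temperature concrete: in standard temperature sampling, the model's logits $z_i$ are divided by the temperature $T$ before the softmax, giving $p_i = \exp(z_i/T) / \sum_j \exp(z_j/T)$. Lower $T$ concentrates probability on the highest-scoring token and makes outputs more deterministic. The toy logits below are invented for illustration. They are not taken from GPT-4o mini, whose internals are not exposed.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Temperature-scaled softmax; temperature must be positive."""
    if temperature <= 0:
        raise ValueError("temperature must be > 0 (T -> 0 approaches greedy decoding)")
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # toy scores for three candidate tokens
for t in (0.2, 0.35, 1.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

In this toy case, the top token receives over 99% of the probability mass at $T = 0.2$, compared with about 63% at $T = 1$. This illustrates why the 0.2 to 0.35 range yields fairly stable, context-driven answers.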

Compared with prior systems such as Chen’s ChatGPT-based tutor, which focuses on syntax instruction and general engagement, and KG-RAG applied in finance education, which achieved a 35% improvement in assessments, NOVA demonstrates competitive performance (92% excellent responses, 72% coverage) in a more specialized STEM domain and in Spanish. The NotebookLM-based tutor and Modran’s RAG-based tutor show promising results but do not report comparable quantitative metrics, whereas NOVA provides a transparent evaluation within a highly technical, non-English educational setting. It is important to note that these comparisons rest on architectural and contextual differences rather than on controlled experiments or inferential statistical analyses. While NOVA demonstrates promising performance in a highly specialized and linguistically inclusive setting, we do not claim statistically validated superiority over other RAG-based systems at this stage.

These findings are consistent with earlier evidence from natural language processing and education research, where systems enhanced by retrieval components tend to reduce hallucinations and improve alignment with factual content. In this context, the assistant’s results suggest that retrieval-augmented generation systems, when supplied with domain-aligned, high-quality materials and refined prompts, can serve not only as technical support tools but also as credible educational agents. Their ability to adapt content dynamically while preserving instructional coherence points to new possibilities for scalable, curriculum-integrated AI assistance in technical education and positions such systems as valuable contributors to evolving pedagogical models.

From a pedagogical perspective, NOVA can support flipped classrooms by providing preparatory materials and contextual answers prior to in-class activities. It also facilitates self-paced review and exam preparation by making validated course content accessible interactively. Future integration into instructional workflows could further enhance autonomous learning in technical education.

In broader terms, these results contribute to ongoing discussions around the use of artificial intelligence in higher education. The assistant can reduce the time required to generate instructional content and has the potential to promote student autonomy by providing on-demand access to contextualized explanations and exercises. This aligns with pedagogical frameworks that emphasize active learning and learner-centered instruction, particularly in technical domains where tailored resources are often scarce.

Building on this, NOVA also demonstrates how linguistically inclusive educational tools can reduce barriers in STEM education for underrepresented Spanish-speaking populations. Its architecture can be adapted to accommodate regional dialects or even other low-resource languages, promoting equity and accessibility in technical learning environments. We also acknowledge, however, that even though NOVA was developed and evaluated entirely in Spanish, cultural and linguistic biases can still arise due to regional variations in the language or the way instructional materials are curated. Such biases could manifest themselves in terminology, examples, or pedagogical approaches that may not fully align with the expectations of students in different Spanish-speaking contexts. To mitigate these risks, the course instructors wrote the knowledge base and carefully reviewed it to ensure that the content reflects appropriate terminology, examples, and educational practices for the intended audience.

In terms of deployment, NOVA relies on a modular stack (Qdrant, FastAPI, Next.js, n8n) that is easily extensible to other domains by replacing the knowledge base and metadata. This design enables other institutions to adopt and adapt NOVA with minimal changes, opening possibilities for broader dissemination and open-source collaboration. We acknowledge, however, that reliance on commercial LLMs and dedicated vector databases entails costs that may be challenging for some institutions. The flexible architecture allows adaptation to different levels of infrastructure, making it possible to tailor deployments to available resources.

In future work, expanding the assistant’s capabilities to support multimodal content, adaptive feedback mechanisms, and domain transfer across subjects would provide a more comprehensive learning experience. Additional research could explore how the assistant’s output affects student outcomes over time, integrating metrics of academic performance and learner engagement to further validate its impact on digital education. Future iterations may also explore cost-effective deployment strategies, including open-source language models and lightweight configurations, to improve accessibility for institutions with limited computational resources. Planned work also includes controlled validation studies involving real students to assess the effectiveness, usability, and perceived pedagogical value of the assistant in authentic classroom settings.

Limitations

This study has several limitations. The evaluation was conducted on a limited sample of 100 test cases designed by the development team, without direct input from end users during validation. Metrics such as coverage and relevance rely on subjective interpretation of retrieval adequacy and may be influenced by manual annotation of metadata and corpus selection. The absence of formal usability testing or inter-rater reliability analysis further limits the generalizability of the findings. Finally, because the corpus was specific to one institution and course, potential overfitting to its content cannot be ruled out. Nonetheless, responses affected by these issues were relatively infrequent and did not substantially detract from the overall perceived quality or pedagogical value of the outputs, as reflected in the high excellence rating.

6. Conclusions

This work presented the design, implementation, and evaluation of NOVA, an educational virtual assistant tailored for the Parallel Computing course at Universidad Politécnica Salesiana. Built on a Retrieval Augmented Generation (RAG) framework and operating entirely in Spanish, NOVA demonstrated the potential of integrating curated academic corpora, semantic search, and Large Language Models to support technical education in Latin American contexts.

The assistant delivered contextualized responses for question-and-answer sessions, quiz generation, and the creation of practical guides, aligning its outputs with instructional materials and demonstrating strong pedagogical relevance. These outcomes support the hypothesis that RAG architectures, when combined with pedagogically aligned datasets and specialized prompt engineering, can produce coherent, instructionally sound responses in complex subjects such as parallel computing.

The use of Spanish as the system’s operating language helps address the accessibility gap in AI-enhanced educational tools by enabling native-language interaction and culturally appropriate explanations. This localization effort extends the relevance of RAG-based assistants to underrepresented academic regions and opens the door to broader integration across other domains and languages.

Future research will focus on extending NOVA’s capabilities, exploring its long-term impact on learning, and broadening its application to other academic fields.

Author Contributions

Conceptualization, G.A.L.-P., L.A.A.-N., and K.D.P.-G.; methodology, G.A.L.-P., L.A.A.-N., and K.D.P.-G.; software, L.A.A.-N. and K.D.P.-G.; validation, G.A.L.-P., L.A.A.-N., and K.D.P.-G.; formal analysis, G.A.L.-P.; investigation, G.A.L.-P., L.A.A.-N., and K.D.P.-G.; resources, G.A.L.-P.; data curation, G.A.L.-P.; writing—original draft preparation, L.A.A.-N. and K.D.P.-G.; writing—review and editing, G.A.L.-P.; visualization, L.A.A.-N. and K.D.P.-G.; supervision, G.A.L.-P.; project administration, G.A.L.-P.; funding acquisition, G.A.L.-P. All authors have read and agreed to the published version of the manuscript.

Funding

This research has been supported by the Universidad Politécnica Salesiana (UPS).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. Due to academic privacy policies and ongoing research extensions, the datasets are not publicly available but can be shared upon reasonable request for scientific validation purposes.

Acknowledgments

During the preparation of this manuscript, the authors used Draw.io for the creation of the architecture diagram, Graphviz (via Python) for the methodology diagram, and native tools from the n8n software suite to generate the workflow diagrams. Additionally, ChatGPT (OpenAI, GPT-4) was used to assist in the structuring, drafting, and refinement of the manuscript. All outputs generated by these tools were thoroughly reviewed and edited by the authors, who take full responsibility for the final content of this publication.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Anghel, G.A.; Zanfir, C.M.; Matei, F.L.; Voicu, C.D.; Neacșa, R.A. The Integration of Artificial Intelligence in Academic Learning Practices: A Comprehensive Approach. Educ. Sci. 2025, 15, 616.

- Alam, A. Harnessing the Power of AI to Create Intelligent Tutoring Systems for Enhanced Classroom Experience and Improved Learning Outcomes. In Proceedings of the Intelligent Communication Technologies and Virtual Mobile Networks, Tirunelveli, India, 16–17 February 2023; Rajakumar, G., Du, K.L., Rocha, Á., Eds.; Springer: Singapore, 2023; pp. 571–591.

- Feng, W.; Lai, X.; Zhang, X.; Fan, X.; Du, Y. Research on the construction and application of intelligent tutoring system for English teaching based on generative pre-training model. Syst. Soft Comput. 2025, 7, 200232.

- Ge, Y.; Hua, W.; Mei, K.; Ji, J.; Tan, J.; Xu, S.; Li, Z.; Zhang, Y. OpenAGI: When LLM Meets Domain Experts. In Proceedings of the Advances in Neural Information Processing Systems, New Orleans, LA, USA, 10–16 December 2023; Oh, A., Naumann, T., Globerson, A., Saenko, K., Hardt, M., Levine, S., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2023; pp. 5539–5568.

- Chen, W.Y. Intelligent Tutor: Leveraging ChatGPT and Microsoft Copilot Studio to Deliver a Generative AI Student Support and Feedback System within Teams. arXiv 2024, arXiv:2405.13024.

- Oprea, S.; Adela, B. Transforming Education With Large Language Models: Trends, Themes, and Untapped Potential. IEEE Access 2025, 13, 87292–87312.

- Li, Z.; Wang, Z.; Wang, W.; Hung, K.; Xie, H.; Wang, F.L. Retrieval-augmented generation for educational application: A systematic survey. Comput. Educ. Artif. Intell. 2025, 8, 100417.

- Singh, M.; Sun, D. Evaluating Minecraft as a game-based metaverse platform: Exploring gaming experience, social presence, and STEM outcomes. Interact. Learn. Environ. 2025, 1–23.

- Wei, X.; Wang, L.; Lee, L.K.; Liu, R. The effects of generative AI on collaborative problem-solving and team creativity performance in digital story creation: An experimental study. Int. J. Educ. Technol. High. Educ. 2025, 22, 23.

- Modran, H.A.; Bogdan, I.C.; Ursuțiu, D.; Samoilă, C.; Modran, P.L. LLM Intelligent Agent Tutoring in Higher Education Courses Using a RAG Approach. In Proceedings of the Futureproofing Engineering Education for Global Responsibility, Tallinn, Estonia, 24–27 September 2024; Auer, M.E., Rüütmann, T., Eds.; Springer: Cham, Switzerland, 2025; pp. 589–599.

- Dong, C.; Yuan, Y.; Chen, K.; Cheng, S.; Wen, C. How to Build an Adaptive AI Tutor for Any Course Using Knowledge Graph-Enhanced Retrieval-Augmented Generation (KG-RAG). arXiv 2023, arXiv:2311.17696.

- Tophel, A.; Chen, L.; Hettiyadura, U.; Kodikara, J. Towards an AI tutor for undergraduate geotechnical engineering: A comparative study of evaluating the efficiency of large language model application programming interfaces. Discov. Comput. 2025, 28, 76.

- Zhu, H.; Xiang, J.; Yang, Z. UnrealMentor GPT: A System for Teaching Programming Based on a Large Language Model. Comput. Appl. Eng. Educ. 2025, 33, e70023.

- Newhall, T. Teaching parallel and distributed computing in a single undergraduate-level course. J. Parallel Distrib. Comput. 2025, 202, 105092.

- Gowanlock, M. Teaching parallel and distributed computing using data-intensive computing modules. J. Parallel Distrib. Comput. 2025, 202, 105093.

- Conte, D.J.; de Souza, P.S.L.; Martins, G.; Bruschi, S.M. Teaching Parallel Programming for Beginners in Computer Science. In Proceedings of the 2020 IEEE Frontiers in Education Conference (FIE), Uppsala, Sweden, 21–24 October 2020; pp. 1–9.

- Gowanlock, M.; Gallet, B. Data-Intensive Computing Modules for Teaching Parallel and Distributed Computing. In Proceedings of the Data-Intensive Computing Modules for Teaching Parallel and Distributed Computing, Portland, OR, USA, 17–21 June 2021; pp. 350–357.

- Carneiro Neto, J.A.; Alves Neto, A.J.; Moreno, E.D. A Systematic Review on Teaching Parallel Programming. In Proceedings of the 11th Euro American Conference on Telematics and Information Systems, Aveiro, Portugal, 1–3 June 2022; Association for Computing Machinery: New York, NY, USA, 2022.

- Swacha, J.; Gracel, M. Retrieval-Augmented Generation (RAG) Chatbots for Education: A Survey of Applications. Appl. Sci. 2025, 15, 4234.

- Chu, Y.; He, P.; Li, H.; Han, H.; Yang, K.; Xue, Y.; Li, T.; Krajcik, J.; Tang, J. Enhancing LLM-Based Short Answer Grading with Retrieval-Augmented Generation. arXiv 2025, arXiv:2504.05276.

- Raihan, N.; Siddiq, M.L.; Santos, J.C.; Zampieri, M. Large Language Models in Computer Science Education: A Systematic Literature Review. In Proceedings of the 56th ACM Technical Symposium on Computer Science Education V. 1, SIGCSE TS 2025, Pittsburgh, PA, USA, 26 February–1 March 2025; Association for Computing Machinery: New York, NY, USA, 2025; pp. 938–944.

- Tufino, E. NotebookLM: An LLM with RAG for active learning and collaborative tutoring. arXiv 2025, arXiv:2504.09720.

- Dakshit, S. Faculty Perspectives on the Potential of RAG in Computer Science Higher Education. arXiv 2024, arXiv:2408.01462.

- Kuratomi, G.; Pirozelli, P.; Cozman, F.G.; Peres, S.M. A RAG-Based Institutional Assistant. arXiv 2025, arXiv:2501.13880.

- Reicher, H.; Frenkel, Y.; Lavi, M.J.; Nasser, R.; Ran-milo, Y.; Sheinin, R.; Shtaif, M.; Milo, T. A Generative AI-Empowered Digital Tutor for Higher Education Courses. Information 2025, 16, 264.

- Xu, X.; Liu, S.; Zhu, L.; Long, Y.; Zeng, Y.; Lu, X.; Li, J.; Dong, Y. Development and evaluation of a retrieval-augmented large language model framework for enhancing endodontic education. Int. J. Med. Inform. 2025, 203, 106006.

- Teng, D.; Wang, X.; Xia, Y.; Zhang, Y.; Tang, L.; Chen, Q.; Zhang, R.; Xie, S.; Yu, W. Investigating the utilization and impact of large language model-based intelligent teaching assistants in flipped classrooms. Educ. Inf. Technol. 2025, 30, 10777–10810.

- Kabudi, T.; Pappas, I.; Olsen, D.H. AI-enabled adaptive learning systems: A systematic mapping of the literature. Comput. Educ. Artif. Intell. 2021, 2, 100017.

- Jeong, S.; Baek, J.; Cho, S.; Hwang, S.J.; Park, J.C. Adaptive-RAG: Learning to Adapt Retrieval-Augmented Large Language Models through Question Complexity. arXiv 2024, arXiv:2403.14403.

- Parra-Zambrano, B.E.; León-Paredes, G.A. A Web Approach for the Extraction, Analysis, and Visualization of Sentiments in Social Networks Regarding the Public Opinion on Politicians in Ecuador Using Natural Language Processing and High-Performance Computing Tools. In Proceedings of the Information Technology and Systems, Temuco, Chile, 24–26 January 2024; Rocha, Á., Ferrás, C., Hochstetter Diez, J., Diéguez Rebolledo, M., Eds.; Springer: Cham, Switzerland, 2024; pp. 457–466.

- Loper, E.; Bird, S. NLTK: The Natural Language Toolkit. arXiv 2002, arXiv:cs/0205028.

- Pan, J.J.; Wang, J.; Li, G. Survey of vector database management systems. VLDB J. 2024, 33, 1591–1615.

- Goel, R. Using text embedding models as text classifiers with medical data. arXiv 2024, arXiv:2402.16886.

- Hesham, A.; Hamdy, A. Fine-Tuning GPT-4o-Mini for Programming Questions Generation. In Proceedings of the 2024 International Conference on Computer and Applications (ICCA), Cairo, Egypt, 17–19 December 2024; pp. 1–6.

- Hendrycks, D.; Burns, C.; Basart, S.; Zou, A.; Mazeika, M.; Song, D.; Steinhardt, J. Measuring Massive Multitask Language Understanding. arXiv 2021, arXiv:2009.03300.

- Thelwall, M. Is Google Gemini better than ChatGPT at evaluating research quality. J. Data Inf. Sci. 2025, 10, 1–5.

- Mabotha, E.; Mabunda, N.E.; Ali, A. Performance Evaluation of a Dynamic RESTful API Using FastAPI, Docker and Nginx. In Proceedings of the Performance Evaluation of a Dynamic RESTful API Using FastAPI, Docker and Nginx, Batman, Turkiye, 4–6 December 2024; pp. 174–181.

- Rushdy, E.; Khedr, W.; Salah, N. Framework to secure the OAuth 2.0 and JSON web token for rest API. J. Theor. Appl. Inf. Technol. 2021, 99, 2144–2161.

- Barra, F.L.; Rodella, G.; Costa, A.; Scalogna, A.; Carenzo, L.; Monzani, A.; Corte, F.D. From prompt to platform: An agentic AI workflow for healthcare simulation scenario design. Adv. Simul. 2025, 10, 29.

- Tuyishime, A.; Basciani, F.; Di Salle, A.; Cánovas Izquierdo, J.L.; Iovino, L. Streamlining Workflow Automation with a Model-Based Assistant. In Proceedings of the 2024 50th Euromicro Conference on Software Engineering and Advanced Applications (SEAA), Paris, France, 28–30 August 2024; pp. 180–187.

- Horgan, J.R.; London, S.; Lyu, M.R. Achieving software quality with testing coverage measures. Computer 1994, 27, 60–69.

- Breakey, K.M.; Levin, D.; Miller, I.; Hentges, K.E. The Use of Scenario-Based-Learning Interactive Software to Create Custom Virtual Laboratory Scenarios for Teaching Genetics. Genetics 2008, 179, 1151–1155.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).