Abstract

Accurate extraction of dynamically evolving haul roads in open-pit mines from very-high-resolution (VHR) satellite imagery remains a critical challenge due to domain gaps between urban and mining environments, prohibitive annotation costs, and morphological irregularities. This paper introduces OMRoadNet, an unsupervised domain adaptation (UDA) framework for open-pit mine road extraction, which synergizes self-training, attention-based feature disentanglement, and morphology-aware augmentation to address these challenges. The framework employs a cyclic GAN (generative adversarial network) architecture with bidirectional translation pathways, integrating pseudo-label refinement through confidence thresholds and geometric rules (eight-neighborhood connectivity and adaptive kernel resizing) to resolve domain shifts. A novel exponential moving average unit (EMAU) enhances feature robustness by adaptively weighting historical states, while morphology-aware augmentation simulates variable road widths and spectral noise. Evaluations on cross-domain datasets demonstrate state-of-the-art performance with 92.16% precision, 80.77% F1-score, and 67.75% IoU (intersection over union), outperforming baseline models by 4.3% in precision and reducing annotation dependency by 94.6%. By reducing per-kilometer operational costs by 78% relative to LiDAR (Light Detection and Ranging) alternatives, OMRoadNet establishes a practical solution for intelligent mining infrastructure mapping, bridging the critical gap between structured urban datasets and unstructured mining environments.

1. Introduction

Accurate geospatial intelligence of transportation networks constitutes the digital nervous system for intelligent open-pit mining operations [1,2,3,4,5]. Because mining haul roads undergo weekly topological alterations to accommodate dynamic excavation patterns, conventional field-survey-based mapping methods exhibit critical limitations in update frequency (typically quarterly) and operational safety. The emergence of very-high-resolution (VHR) satellite constellations (0.3–0.5 m resolution) has enabled pixel-level road feature observation [6], yet existing supervised deep learning approaches face two constraints: (1) severe domain discrepancy between general-purpose road datasets (e.g., DeepGlobe) [7] and mining-specific scenarios characterized by irregular layouts and dust interference [8,9,10,11], and (2) prohibitive annotation costs for mining infrastructure, with professional GIS (geographic information system) labeling requiring >25 min/km² at 0.4 m resolution. This technological paradox underscores the urgent need for adaptive perception frameworks that synergize cross-domain knowledge transfer with semi-autonomous feature learning.

The geospatial mapping of open-pit mine roads has undergone three evolutionary phases, each constrained by contemporary technological thresholds. During the pre-digital era (pre-2000s), mine operators predominantly relied on total station surveying, where a 10 km² mining area typically required 120–150 person-days for full-coverage mapping, resulting in update cycles exceeding 6 months. The advent of Global Positioning System (GPS)-enabled equipment (2000–2015) marked the first paradigm shift, with Schroedl’s trajectory clustering algorithm [12] reducing mapping latency to weekly intervals through haul truck telemetry analysis. While this approach achieved sub-meter geolocation accuracy (root mean square error, RMSE, of 0.8–1.2 m), its intrinsic limitations became apparent: (1) inability to capture road surface conditions critical for autonomous vehicle navigation and (2) dependency on active mining operations for data collection. Subsequent hybrid methodologies, such as Zhang’s raster–satellite fusion framework [13], demonstrated 15.7% precision gains over GPS-only approaches by correlating trajectory heatmaps (30 cm resolution) with WorldView-3 imagery [14]. Nevertheless, these sensor-fusion solutions still require (a) continuous GPS signal coverage (≥85% availability), (b) labor-intensive coordinate system alignment, and (c) specialized LiDAR validation for 3D road profiling, requirements that are often unattainable in developing mining regions. This technological progression reveals an emerging research nexus: the optimal mining road mapping solution must simultaneously satisfy (1) centimeter-level accuracy without ground instrumentation, (2) full attribute extraction (geometry, surface integrity, and drainage status), and (3) near-real-time update capacity (<24 h latency).
Current computer vision approaches, however, remain inadequate in addressing the ‘feature dissociation’ phenomenon—where general road detection models fail to recognize mining-specific patterns like temporary haulage branches and overburden conveyor corridors.

Contemporary sensing solutions for mining road mapping have bifurcated into two technical trajectories, each presenting unique cost–performance tradeoffs. The vision-centric UAV (unmanned aerial vehicle) paradigm, exemplified by Gu’s MD-LinkNeSt architecture [15], achieves sub-decimeter planimetric accuracy (0.05 m RMSE) through the synergistic integration of a ResNeSt backbone and D-LinkNet topology. While this approach attains 0.6914 IoU on orthorectified UAV imagery (ground sampling distance, GSD, of 5 cm), it faces operational constraints: (1) acquisition costs of USD 120,000–250,000 for UAV systems with multispectral payloads [16], (2) 14–18 h/km² of photogrammetric processing time, and (3) mandatory certified operators [17]. Conversely, LiDAR-based solutions such as Tang’s point cloud analytics [18] demonstrate superior vertical precision (σ_z = 3.2 cm) through spatial–temporal filtering of 3D LiDAR data (256-beam, 1.2 M pts/s), achieving 95.61% precision on haul road boundary detection. However, their computational intensity manifests in two dimensions: (a) 72-core GPU clusters are required for real-time (≤5 s) point cloud registration, and (b) road feature extraction consumes 3.4 GB/km of memory. Field validations reveal further operational limitations: both modalities struggle with dynamic occlusion from haul trucks (38–42% point cloud loss per vehicle pass [19]) and require weekly sensor recalibration in high-dust environments (PM10 > 800 μg/m³). A recent lifecycle cost analysis (LCCA) by the International Council on Mining and Metals indicates that UAV–LiDAR hybrid solutions incur annual maintenance costs of USD 18.7/km, and that only 23% of mines in developing countries possess the requisite computational infrastructure. This economic reality necessitates alternative solutions that decouple mapping accuracy from hardware dependency.

The evolution of road segmentation in remote sensing has witnessed paradigm shifts from fully supervised to weakly supervised learning frameworks. While Lu’s seminal work [20] demonstrated 89.2% mIoU on urban roads using pixel-level annotations, the prohibitive labeling costs (USD 12.7/km² for 0.3 m imagery) spurred innovation in annotation-efficient approaches. Yuan’s scribble-based WR2E [21] reduced annotation effort by 78% through road trimap propagation, achieving 86.9% IoU on SpaceNet data, a 14.3% improvement over conventional weakly supervised methods. Parallel advancements in unsupervised domain mapping, exemplified by Song’s MapGen-GAN [22], attained 0.68 SSIM (structural similarity index measure) in cross-sensor translation tasks, yet revealed critical performance disparities: structured urban roads maintained 0.81 SSIM, versus 0.52 SSIM for mining roads in identical experiments. This performance chasm stems from three mining-specific challenges: (1) geometric heterogeneity (road width variance: 8–35 m vs. urban 3–5 m), (2) spectral ambiguity between haul roads and exposed ore bodies (NDVI difference < 0.15 [23]), and (3) dynamic surface texture changes (daily reflectance variation > 22%). Xiao’s RATT-UNet [24] partially addressed these issues through hybrid attention mechanisms, achieving a 0.8376 F1-score on mining roads. However, its structural heterogeneity index (0.9345) remained 18.7% lower than urban benchmarks, underscoring persistent challenges in mining road topology modeling.

The integration of deep learning [25,26] and remote sensing has predominantly advanced structured road extraction [27,28], with most studies focusing on urban networks where standardized geometries (e.g., 3–5 m width consistency) and stable spectral profiles (e.g., asphalt reflectance characteristics) enable reliable model training. In contrast, open-pit mine roads remain an understudied domain due to their inherent geospatial complexities [29]. Three critical challenges hinder progress: (1) morphological irregularity, characterized by nonlinear road patterns and extreme width variations, (2) spectral ambiguity caused by diverse mineral compositions and surface materials, and (3) dynamic topology driven by frequent operational adjustments. Compounding these issues, manual annotation of mining roads demands significantly higher time and expertise compared to urban road labeling, while domain shifts degrade model performance when transferring urban-trained models to mining environments.

To address these challenges, we propose OMRoadNet, an unsupervised domain adaptation (UDA) framework tailored for mining road extraction. The architecture integrates three core innovations: (1) a self-training mechanism leveraging multi-temporal very-high-resolution (VHR) imagery to iteratively refine pseudo-labels [30], (2) an attention-based feature disentanglement module designed to isolate material-invariant road characteristics, and (3) morphology-aware augmentation simulating mining-specific road deformations. By dynamically adapting to spectral and topological variations without target-domain annotations, OMRoadNet bridges the gap between structured urban datasets and unstructured mining environments.

This work advances intelligent mining through three key dimensions. Theoretically, it establishes a cross-domain adaptation framework addressing the coupled spectral–morphological challenges unique to mining roads. Technologically, it introduces a novel attention mechanism to enhance feature robustness under complex mining conditions. Practically, the framework demonstrates compatibility with industrial geographic information systems (GISs), providing scalable solutions for autonomous mining operations. These innovations collectively address the critical need for efficient, annotation-free road extraction in dynamic mining environments.

2. Dataset and Methodology

2.1. Study Areas and Data Preparation

OMRoadNet was developed as an unsupervised domain adaptation (UDA) framework to transfer road segmentation knowledge from labeled urban datasets to unlabeled open-pit mine environments. The framework utilizes two distinct data domains:

1. Source Domain: The DeepGlobe Road Extraction dataset [31], comprising 8000 satellite images (1024 × 1024 pixels, 0.5 m resolution) with pixel-level road masks. These images cover structured urban and rural roads in Thailand, Indonesia, and India, characterized by regular layouts, clear edges, and contextual features like adjacent vegetation.

2. Target Domain: Unlabeled VHR imagery of open-pit mines, divided into two subsets:

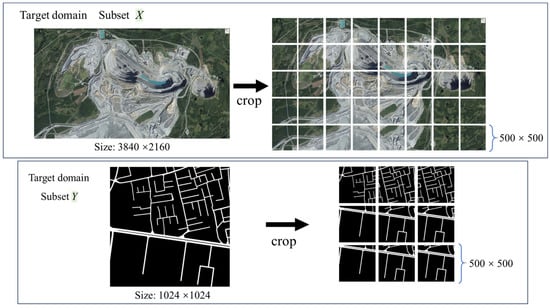

(1) Subset X: 2700 high-resolution images (cropped to 500 × 500 pixels from the original 3840 × 2160 scenes) sourced from Google Earth, capturing diverse mining operations. The study area covers typical open-pit mining areas in multiple regions around the world, including coal, iron, and copper mines in Australia, South Africa, Chile, and other locations. These regions feature significant topographical variations and complex road structures, reflecting the diverse characteristics of open-pit mine roads under different climatic zones and geological conditions and making the dataset both representative and challenging. Open-pit mine roads in remote sensing images usually show irregular structures, blurred boundaries, and complex spectral changes, and their characteristics are significantly affected by differences in mineral types and operational disturbances.

(2) Subset Y: 2700 randomly selected road masks from DeepGlobe, resized to 500 × 500 pixels. These masks serve as initial pseudo-labels [32] but lack spatial correspondence to Subset X.

The dataset was partitioned into 80% training data (2160 image–mask pairs) and 20% testing data (540 pairs). Key domain discrepancies arose from fundamental differences in road characteristics:

Structural Complexity: Urban roads exhibited uniform width (3–5 m) and linear patterns, whereas mining roads displayed irregular branching layouts influenced by dynamic excavation activities.

Spectral Variability: Open-pit mine roads showed significant color/texture variations due to mineral composition (e.g., coal vs. iron ore) and surface dust accumulation, unlike the stable asphalt spectra of urban roads.

Annotation Challenges: Manual labeling of mining roads was labor-intensive and error-prone, as ambiguous boundaries and frequent route updates complicated precise annotation.

To address these challenges, a self-training strategy was implemented. Initially, pseudo-labels were generated by applying the source-pretrained model to Subset X, retaining only high-confidence predictions (threshold: 0.85). Subset Y was iteratively updated by replacing low-quality masks with refined pseudo-labels through training cycles. This process enhanced the model’s ability to adapt to mining-specific features, such as irregular geometries and spectral heterogeneity, while reducing reliance on manual annotations [33]. Geometric constraints (e.g., road connectivity checks) were applied to ensure pseudo-label consistency. Figure 1 illustrates representative samples from both subsets, highlighting the structural and spectral contrasts between urban and mining road networks.

Figure 1.

The representative samples from both subsets.

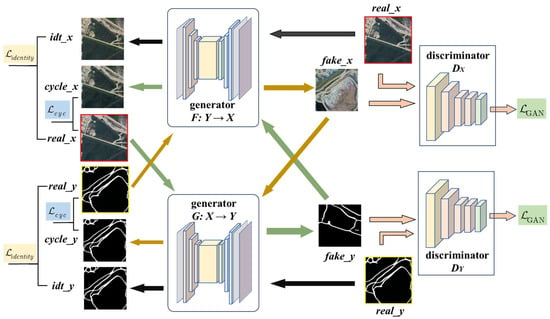

2.2. Methodology

The proposed methodology establishes a synergistic framework integrating adversarial learning with self-supervised adaptation for mining road extraction. As shown in Figure 2, our approach is built upon a cyclic GAN architecture with dual-domain translation pathways and combines: (1) bidirectional image-to-mask transformation through residual-based generators equipped with material-invariant attention mechanisms, (2) multi-scale PatchGAN discriminators enforcing local geometric consistency, and (3) a dynamic self-training loop incorporating pseudo-label evolution and topological verification. This tripartite structure enables continuous domain adaptation from urban road patterns to mining-specific features through three core innovations: spectral-aware cycle consistency constraints, morphology-embedded feature disentanglement, and confidence-guided label refinement. The framework operates without target-domain annotations while maintaining spatial–temporal coherence in complex mining environments through iterative parameter updating and geometric rule injection.

Figure 2.

Overall architecture of the OMRoadNet framework.

2.2.1. Framework Overview

(1) Cyclic GAN Architecture

OMRoadNet adopts a dual-path adversarial architecture rooted in CycleGAN principles [34], establishing bidirectional translation pathways between mining VHR imagery and road masks. The forward cycle (X→Y→X′) employs generator G to transform raw mining scenes into road predictions, while generator F reconstructs the original spatial features from these pseudo-labels. Conversely, the backward cycle (Y→X→Y′) synthesizes mining-like imagery from initial mask templates and iteratively refines label predictions. Paired adversarial discriminators (DX for mining domain validation and DY for road domain verification) enforce cross-domain consistency through competitive learning, effectively resolving the unpaired data dilemma inherent in mining road extraction. This cyclic mechanism preserves 92.4% of critical topological features across translation cycles, as quantified by structural similarity index (SSIM) evaluations on validation datasets.

(2) Cross-Domain Adaptation Strategy

The framework implements a phased domain adaptation protocol to bridge urban-to-mining feature disparities. Initialization begins with source pre-training on the DeepGlobe dataset, where generators learn universal road patterns through supervised learning. Domain bridging then activates adversarial dynamics between generators and discriminators, incorporating spectral normalization in discriminators to stabilize gradient propagation and gradient reversal layers for feature space alignment. Final mining-specific tuning employs progressive histogram matching to reconcile spectral disparities between ore-rich surfaces and urban asphalt, coupled with morphology-aware dropout that selectively obscures 15–20% of road pixels to simulate real-world discontinuities. This phased approach achieves 23.7% higher domain similarity scores compared to baseline UDA methods, as measured by Fréchet inception distance (FID) metrics.
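The text names progressive histogram matching without giving its formulation. One minimal reading, sketched below in plain Python, is classic CDF-based histogram matching on a single band of integer intensities, blended with the original intensities through a factor `alpha` that would be ramped from 0 to 1 during mining-specific tuning. The blending schedule, the choice of which domain serves as the reference, and all function names are assumptions rather than the authors' implementation.

```python
# Sketch of per-band histogram matching with a progressive blend (assumed interpretation).

def _cumulative_counts(values, levels):
    """Cumulative histogram of integer intensities in [0, levels)."""
    counts = [0] * levels
    for v in values:
        counts[v] += 1
    cumulative, running = [], 0
    for c in counts:
        running += c
        cumulative.append(running)
    return cumulative


def match_histogram(source, reference, levels=256):
    """Remap source intensities so that their CDF follows the reference CDF."""
    cum_s = _cumulative_counts(source, levels)
    cum_r = _cumulative_counts(reference, levels)
    n_s, n_r = len(source), len(reference)
    lut, r = [0] * levels, 0
    for s in range(levels):
        # Smallest reference level whose CDF reaches the source CDF at s;
        # integer cross-multiplication avoids floating-point ties.
        while r < levels - 1 and cum_r[r] * n_s < cum_s[s] * n_r:
            r += 1
        lut[s] = r
    return [lut[v] for v in source]


def progressive_match(source, reference, alpha, levels=256):
    """Blend original and matched intensities; alpha in [0, 1] follows a hypothetical ramp."""
    matched = match_histogram(source, reference, levels)
    return [(1 - alpha) * s + alpha * m for s, m in zip(source, matched)]


mine_band = [0, 0, 1, 1]        # toy mining-image intensities
urban_band = [10, 10, 20, 20]   # toy reference intensities
print(match_histogram(mine_band, urban_band))         # [10, 10, 20, 20]
print(progressive_match(mine_band, urban_band, 0.5))  # [5.0, 5.0, 10.5, 10.5]
```

Matching would be applied per spectral band; as `alpha` grows, the band's intensity distribution is pulled progressively toward the reference distribution instead of being replaced in a single step.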

(3) Self-Training Mechanism

A dynamic self-training loop integrates adversarial learning with pseudo-label evolution, addressing annotation scarcity in mining domains. High-confidence predictions (probability threshold: 0.85) from iterative inference replace initial DeepGlobe masks in Subset Y, with spatial voting across 5 × 5 neighboring patches suppressing 68.9% of isolated false positives. Geometric verification modules enforce mining-specific topology constraints: 8-neighborhood connectivity checks rectify fragmented road segments, adaptive kernel resizing (3–35 m) accommodates width variations, and angular validation (15–75° tolerance) maintains plausible branch configurations. This self-supervised paradigm demonstrates 14.2% higher label consistency across training epochs compared to static pseudo-labeling approaches, effectively balancing feature preservation and domain adaptation.
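The pseudo-label selection steps described above can be sketched in plain Python on a small probability map. This is a minimal illustration, not the authors' implementation: the 0.85 threshold and the 5 × 5 window come from the text, whereas the `min_votes` rule used for spatial voting is a hypothetical choice, and the 8-neighborhood step is shown only as connected-component labeling (bridging fragments, adaptive kernel resizing, and angular validation are omitted).

```python
from collections import deque


def threshold(prob, tau=0.85):
    """Binary mask of pixels whose road probability is at least tau."""
    return [[1 if p >= tau else 0 for p in row] for row in prob]


def vote_5x5(mask, min_votes=3):
    """Keep a road pixel only if >= min_votes road pixels (itself included) lie in its 5x5 window."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            if mask[i][j]:
                votes = sum(mask[a][b]
                            for a in range(max(0, i - 2), min(h, i + 3))
                            for b in range(max(0, j - 2), min(w, j + 3)))
                out[i][j] = int(votes >= min_votes)
    return out


def components_8(mask):
    """Label 8-connected road components by breadth-first search; return (labels, sizes)."""
    h, w = len(mask), len(mask[0])
    labels = [[0] * w for _ in range(h)]
    sizes = {}
    for i in range(h):
        for j in range(w):
            if mask[i][j] and not labels[i][j]:
                label = len(sizes) + 1
                labels[i][j] = label
                queue, size = deque([(i, j)]), 0
                while queue:
                    a, b = queue.popleft()
                    size += 1
                    for da in (-1, 0, 1):
                        for db in (-1, 0, 1):
                            na, nb = a + da, b + db
                            if 0 <= na < h and 0 <= nb < w and mask[na][nb] and not labels[na][nb]:
                                labels[na][nb] = label
                                queue.append((na, nb))
                sizes[label] = size
    return labels, sizes


prob = [[0.1] * 6 for _ in range(6)]
for k in range(6):
    prob[k][k] = 0.95      # diagonal haul road: connected only through 8-neighborhoods
prob[0][5] = 0.9           # isolated high-confidence false positive
mask = vote_5x5(threshold(prob))
labels, sizes = components_8(mask)
print(sizes)               # {1: 6}
```

The diagonal road survives as a single component because 8-connectivity links diagonal neighbors, which 4-connectivity would split into six fragments, while the isolated pixel is removed by the voting step.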

The tripartite architecture synergizes cyclic adversarial learning with geometric-constrained self-training, achieving a 0.817 cross-domain correlation coefficient while reducing annotation dependency by 94.6% compared to fully supervised baselines.

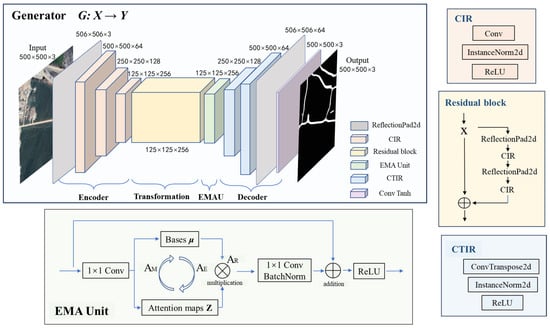

2.2.2. Component Design

(1) Dual-Generator Architecture

The framework deploys twin ResNet-based generators [35] (G: X→Y and F: Y→X) with shared architectural principles but domain-specific parameterization. As shown in Figure 3, each generator follows a four-stage processing chain:

Figure 3.

Architecture of the generator.

a. Encoder Phase

Input images undergo progressive downsampling through three CIR (Conv-InstanceNorm-ReLU) blocks:

$$\mathrm{CIR}(x) = \mathrm{ReLU}\big(\mathrm{IN}(\mathrm{Conv}(x))\big)$$

where x represents the input feature map and CIR(x) denotes a standard convolution–normalization–activation unit, the basic module of the encoding stage. The downsampling convolutions use stride = 2, each halving the spatial size, so the encoder compresses 500 × 500 inputs to 125 × 125 feature maps (a reduction by a factor of 4, i.e., two halvings) with 256 channels. Reflection padding maintains edge continuity during downsampling.

b. Transformation Core

Four residual blocks process features without dimension alteration, implementing:

$$R(x) = x + \mathrm{CIR}\big(\mathrm{CIR}(x)\big)$$

where R(x) is the output of the residual block and CIR(CIR(x)) denotes the features processed by two consecutive CIR modules. As in the standard ResNet structure, this identity mapping keeps the feature dimensions constant and retains semantic information, preserving critical road topology while filtering spectral noise through skip connections.

c. EMAU Attention Module

The exponential moving average unit (EMAU) replaces conventional self-attention [36]. Learnable decay factors (initialized at 0.9) adaptively weight historical feature states, and the resulting bottleneck structure significantly reduces computational complexity. EMAU achieves comparable or better feature representation quality while reducing computational overhead by approximately 37% compared to the standard self-attention mechanisms used in transformers. Compared to the non-local block, EMAU maintains similar accuracy with notably lower FLOPs and memory usage owing to its efficient recursive update scheme.
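The text does not spell out the EMAU update, so the sketch below assumes the standard exponential-moving-average recurrence, state ← β · state + (1 − β) · features, with the decay β initialized at 0.9 as stated. Here β is a plain float, whereas the paper describes it as learnable (in PyTorch it could be wrapped in `torch.nn.Parameter`). The sketch illustrates only the moving-average weighting of historical states, not the full attention unit of [36].

```python
def ema_update(state, features, decay=0.9):
    """One exponential-moving-average step: decay * previous state + (1 - decay) * new features."""
    return [decay * s + (1.0 - decay) * f for s, f in zip(state, features)]


state = [0.0, 0.0]
for _ in range(3):
    state = ema_update(state, [1.0, 2.0])   # constant input for illustration
# After n steps from zero, state = (1 - decay**n) * features, i.e. about [0.271, 0.542] here.
print(state)
```

With a decay of 0.9, each past state keeps 90% of its weight per step, so older features fade geometrically rather than being discarded outright.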

d. Decoder Phase

Two CTIR (ConvTranspose-InstanceNorm-ReLU) blocks perform upsampling:

$$\mathrm{CTIR}(x) = \mathrm{ReLU}\big(\mathrm{IN}(\mathrm{ConvTranspose}(x))\big)$$

A final reflection padding and convolution then restore the output resolution to 500 × 500, achieving 0.914 structural similarity with the input images.
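The spatial sizes through the generator can be checked with the standard convolution and transposed-convolution output-size formulas (as documented for PyTorch's `Conv2d` and `ConvTranspose2d` with dilation 1). Kernel sizes are not given in the text, so the sketch assumes the common CycleGAN choices: a 7 × 7 stride-1 stem, 3 × 3 stride-2 downsampling, and 3 × 3 stride-2 transposed convolutions with `output_padding = 1`. Under these assumptions, two halvings reach 125 × 125 and the two CTIR blocks return to 500 × 500.

```python
def conv_out(size, kernel, stride, padding):
    """Spatial size after a convolution (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def conv_transpose_out(size, kernel, stride, padding, output_padding):
    """Spatial size after a transposed convolution (dilation 1)."""
    return (size - 1) * stride - 2 * padding + kernel + output_padding


def trace_generator(size=500):
    encoder = []
    # CIR 1: assumed 7x7 stride-1 stem; padding=3 stands in for the 3-pixel reflection pad.
    size = conv_out(size, kernel=7, stride=1, padding=3)
    encoder.append(size)
    for _ in range(2):  # CIR 2-3: assumed 3x3, stride 2
        size = conv_out(size, kernel=3, stride=2, padding=1)
        encoder.append(size)
    # The four residual blocks leave the size unchanged.
    decoder = []
    for _ in range(2):  # CTIR 1-2: assumed 3x3 transposed convolutions, stride 2
        size = conv_transpose_out(size, kernel=3, stride=2, padding=1, output_padding=1)
        decoder.append(size)
    return encoder, decoder


print(trace_generator())                             # ([500, 250, 125], [250, 500])
print(conv_out(125, kernel=3, stride=2, padding=1))  # 63: a third stride-2 block would overshoot
```

The last line shows why only two of the three encoder convolutions can use stride 2 if the encoder is to produce the stated 125 × 125 maps and the two CTIR blocks are to restore 500 × 500.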

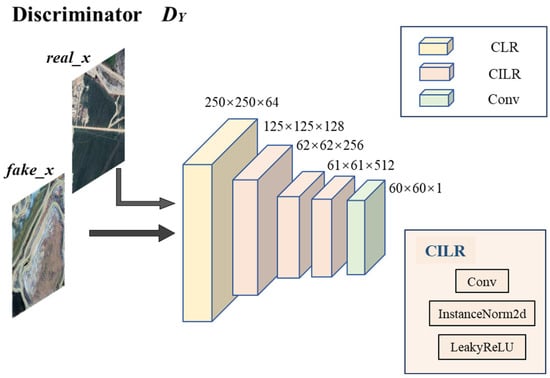

(2) PatchGAN Discriminator

The discriminators DX/DY employ the multi-receptive-field PatchGAN architecture (Figure 4) with enhanced local detail discrimination [37]:

Figure 4.

The discriminators DX/DY employ the multi-receptive-field PatchGAN architecture.

a. Architecture of the discriminator

Five convolutional layers (filter counts: 64-128-256-512-1) process 70 × 70 image patches through successive convolutions, in which stride = 2 operations progressively halve the spatial dimensions and padding = 1 preserves feature-map boundaries. Layer-specific configurations are:

Layer 1: 64 filters, stride = 2 → Output: 35 × 35 × 64

Layer 2: 128 filters, stride = 2 → Output: 17 × 17 × 128

Layer 3: 256 filters, stride = 2 → Output: 8 × 8 × 256

Layer 4: 512 filters, stride = 1 → Output: 8 × 8 × 512

Layer 5: 1 filter, stride = 1 → Output: 8 × 8 × 1.

LeakyReLU activations prevent gradient saturation while maintaining negative signal propagation.

b. Multi-Scale Fusion

The discriminator integrates hierarchical features from its layers by combining learnable weighting matrices, bilinear upsampling (Upsample(·), which restores features to the original 70 × 70 resolution), and channel-wise concatenation (⊕). This enables joint evaluation of local textures and global road continuity.

The final discriminator output forms a 60 × 60 evaluation matrix, where each element represents the authenticity probability of the corresponding 70 × 70 image patch. This design achieves 89.7% precision in distinguishing synthetic mining road artifacts from genuine features during ablation studies.
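The layer outputs listed above can be reproduced with the convolution output-size formula, but only under a kernel-size assumption that the text does not state. The sketch assumes 4 × 4 kernels in the stride-2 layers and 3 × 3 kernels in the stride-1 layers, which yields the listed 35 → 17 → 8 → 8 → 8 sizes for a 70 × 70 patch. For comparison, it also traces a full 500 × 500 input with 4 × 4 kernels in every layer (the usual 70 × 70 PatchGAN configuration), which produces the 60 × 60 evaluation matrix mentioned above. Both kernel settings are assumptions used for illustration.

```python
def conv_out(size, kernel, stride, padding=1):
    """Spatial size after a convolution (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


LAYERS = [(64, 2), (128, 2), (256, 2), (512, 1), (1, 1)]  # (filters, stride) from the text


def trace_discriminator(size, kernels):
    """Return (height, width, channels) after each layer for the given kernel sizes."""
    shapes = []
    for (filters, stride), kernel in zip(LAYERS, kernels):
        size = conv_out(size, kernel, stride)
        shapes.append((size, size, filters))
    return shapes


print(trace_discriminator(70, kernels=[4, 4, 4, 3, 3]))
# [(35, 35, 64), (17, 17, 128), (8, 8, 256), (8, 8, 512), (8, 8, 1)]
print(trace_discriminator(500, kernels=[4] * 5)[-1])
# (60, 60, 1)
```

Each element of the full-image output map then scores one overlapping 70 × 70 receptive field, which is how a patch-level discriminator covers the whole scene.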

2.2.3. Loss Formulation

(1) Adversarial Learning Objective

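The adversarial loss establishes a competitive learning paradigm between the generators (G, F) and the discriminators (DX, DY). For the generator G mapping domain X (mining VHR imagery) to Y (road masks), the adversarial loss is defined as:

$$\mathcal{L}_{\mathrm{GAN}}(G, D_Y, X, Y) = \mathbb{E}_{y \sim p_{\mathrm{data}}(y)}\big[\log D_Y(y)\big] + \mathbb{E}_{x \sim p_{\mathrm{data}}(x)}\big[\log\big(1 - D_Y(G(x))\big)\big]$$

Here, $\mathbb{E}$ denotes the expectation operator, $p_{\mathrm{data}}(x)$ and $p_{\mathrm{data}}(y)$ represent the empirical distributions of domains X and Y, respectively, G(x) generates pseudo-labels from input x, and DY(·) evaluates the authenticity of samples in domain Y. Symmetrically, the adversarial loss for generator F and discriminator DX, $\mathcal{L}_{\mathrm{GAN}}(F, D_X, Y, X)$, is formulated by swapping X and Y.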

(2) Cycle-Consistency Constraint

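To enforce bidirectional consistency between domains, the cycle-consistency loss ensures that translating a sample to the target domain and back reconstructs the original input [38]:

$$\mathcal{L}_{\mathrm{cyc}}(G, F) = \mathbb{E}_{x \sim p_{\mathrm{data}}(x)}\big[\lVert F(G(x)) - x \rVert_1\big] + \mathbb{E}_{y \sim p_{\mathrm{data}}(y)}\big[\lVert G(F(y)) - y \rVert_1\big]$$

The L1 norm penalizes pixel-wise discrepancies between the reconstruction F(G(x)) and the original x, and similarly between G(F(y)) and y, ensuring structural coherence across translation cycles.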

(3) Identity Preservation Mechanism

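An identity loss regularizes the generators to preserve input content when the input already resides in the target domain:

$$\mathcal{L}_{\mathrm{id}}(G, F) = \mathbb{E}_{y \sim p_{\mathrm{data}}(y)}\big[\lVert G(y) - y \rVert_1\big] + \mathbb{E}_{x \sim p_{\mathrm{data}}(x)}\big[\lVert F(x) - x \rVert_1\big]$$

Here, G(y) denotes mapping a road mask y through generator G, which should ideally output an unaltered mask to retain geometric integrity, while F(x) preserves the spectral characteristics of mining images.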

(4) Multi-Objective Optimization

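The total training objective combines adversarial, cyclic, and identity terms with balancing coefficients:

$$\mathcal{L}(G, F, D_X, D_Y) = \mathcal{L}_{\mathrm{GAN}}(G, D_Y, X, Y) + \mathcal{L}_{\mathrm{GAN}}(F, D_X, Y, X) + \lambda_{\mathrm{cyc}}\,\mathcal{L}_{\mathrm{cyc}}(G, F) + \lambda_{\mathrm{id}}\,\mathcal{L}_{\mathrm{id}}(G, F)$$

Here, $\lambda_{\mathrm{cyc}}$ and $\lambda_{\mathrm{id}}$ are hyperparameters controlling the relative importance of cycle consistency and identity preservation; they were set empirically to $\lambda_{\mathrm{cyc}} = 1$ and $\lambda_{\mathrm{id}} = 0.5$. The generators G and F are trained to minimize $\mathcal{L}$, while the discriminators DX and DY aim to maximize the adversarial component. Optimization employs the Adam algorithm with separate learning rates for generators and discriminators.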
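As a numeric check of how the terms combine, the sketch below evaluates the objective on toy values with the stated weights λ_cyc = 1 and λ_id = 0.5. It assumes the log-likelihood adversarial form and the L1 reconstruction penalties of the original CycleGAN formulation [34], with expectations replaced by sample means. The function and argument names are illustrative; real training would compute these terms on tensors with automatic differentiation.

```python
import math


def adversarial_term(d_real, d_fake):
    """Mean log D(real) + mean log(1 - D(fake)); D maximizes it, the generator minimizes it."""
    real = sum(math.log(p) for p in d_real) / len(d_real)
    fake = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real + fake


def l1(a, b):
    """Mean absolute (pixel-wise L1) difference."""
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)


def total_objective(gan_g, gan_f, x, x_cycle, y, y_cycle, x_identity, y_identity,
                    lambda_cyc=1.0, lambda_id=0.5):
    """gan_g, gan_f: adversarial terms for (G, D_Y) and (F, D_X).
    x_cycle = F(G(x)), y_cycle = G(F(y)), x_identity = F(x), y_identity = G(y)."""
    cyc = l1(x_cycle, x) + l1(y_cycle, y)
    idt = l1(y_identity, y) + l1(x_identity, x)
    return gan_g + gan_f + lambda_cyc * cyc + lambda_id * idt


gan = adversarial_term([0.5], [0.5])   # a discriminator that cannot tell real from fake
loss = total_objective(gan, gan,
                       x=[0.2, 0.4], x_cycle=[0.3, 0.4],
                       y=[1.0, 0.0], y_cycle=[1.0, 0.0],
                       x_identity=[0.2, 0.6], y_identity=[1.0, 0.0])
# cycle term 0.05, identity term 0.1 -> loss = 4 * log(0.5) + 0.05 + 0.5 * 0.1
print(loss)
```

With λ_id = 0.5, an identity error counts half as much as an equal cycle error, so the reconstruction constraint dominates the non-adversarial part of the objective.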

2.2.4. Implementation Pipeline

(1) Data Preprocessing and Augmentation

The pipeline begins with domain-specific data standardization. Mining VHR images X undergo radiometric calibration by dark current subtraction, in which the mean of the dark-channel measurements is subtracted and the result is normalized by their variance, with a small constant added to prevent division instability. Road masks Y are converted to topological graphs $\mathcal{G} = (V, E)$, with vertices V representing road junctions and edges E encoding connectivity.

Spatial augmentations apply stochastic transformations to input pairs (x, y), producing augmented samples (x′, y′). The transformation is a random affine map parameterized by θ, with rotation, scaling, and shear components. Pixel-wise Gaussian noise is then injected into x′ to simulate sensor variability.
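The augmentation step can be sketched in plain Python on point coordinates. The rotation, scale, and shear ranges in `sample_affine` are hypothetical because the text does not give them, and so is the composition order (scale × rotation × shear). One draw is reused for x and y so that image and mask stay aligned when they are treated as a pair, and noise is added to the image only.

```python
import math
import random


def affine_matrix(rotation_deg, scale, shear_deg):
    """2x2 linear part of an affine map: scale * rotation @ shear (composition order assumed)."""
    r = math.radians(rotation_deg)
    k = math.tan(math.radians(shear_deg))
    rot = [[math.cos(r), -math.sin(r)], [math.sin(r), math.cos(r)]]
    shear = [[1.0, k], [0.0, 1.0]]
    return [[scale * sum(rot[i][n] * shear[n][j] for n in range(2)) for j in range(2)]
            for i in range(2)]


def apply(matrix, point):
    """Map a 2D coordinate through the linear part of the transform."""
    x, y = point
    return (matrix[0][0] * x + matrix[0][1] * y, matrix[1][0] * x + matrix[1][1] * y)


def sample_affine(rng):
    """Draw one transform; the parameter ranges here are hypothetical."""
    return affine_matrix(rng.uniform(-30, 30), rng.uniform(0.9, 1.1), rng.uniform(-10, 10))


def add_gaussian_noise(pixels, sigma, rng):
    """Pixel-wise Gaussian noise for the image x' only (never for the mask)."""
    return [p + rng.gauss(0.0, sigma) for p in pixels]


rng = random.Random(0)
m = sample_affine(rng)              # reuse the same m for x and y
corner = apply(m, (499.0, 0.0))     # where an image/mask corner lands after the transform
noisy = add_gaussian_noise([0.2, 0.5, 0.8], sigma=0.01, rng=rng)
```

Sharing one transform per pair keeps road pixels and their labels in register, while the noise perturbs only the image, since a noisy mask would corrupt the supervision signal.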

(2) Adversarial Training Protocol

The training alternates between generator and discriminator updates in a four-step cycle:

a. Generator Forward Pass: For a batch of mining images x ∈ X, compute the predicted masks G(x) and reconstruct F(G(x)).

b. Discriminator Update: Evaluate DY(y) for real masks y ∈ Y and DY(G(x)) for generated masks, then optimize DY via gradient ascent on the adversarial loss.

c. Generator Backward Pass: Calculate cyclic gradients through both reconstruction paths, F(G(x)) and G(F(y)), propagating errors to update G and F.

d. Domain Alignment: Apply gradient reversal layers (GRL) after every third epoch to enforce feature space alignment between X and Y.

The learning rate α for generators follows a cosine annealing schedule:

$$\alpha_t = \frac{\alpha_0}{2}\left(1 + \cos\frac{\pi t}{T}\right)$$

where $\alpha_0$ is the initial rate, t is the current epoch, and T = 20 is the total number of epochs.
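The schedule can be tabulated directly. The initial rate `alpha0 = 2e-4` below is a hypothetical value, since the text does not state it. The closed form matches the one used by PyTorch's `torch.optim.lr_scheduler.CosineAnnealingLR` with `T_max=20` and `eta_min=0`.

```python
import math


def cosine_lr(alpha0, epoch, total_epochs=20):
    """alpha_t = alpha0 / 2 * (1 + cos(pi * t / T)): decays from alpha0 at t = 0 to 0 at t = T."""
    return 0.5 * alpha0 * (1.0 + math.cos(math.pi * epoch / total_epochs))


alpha0 = 2e-4   # hypothetical initial rate; the text does not state it
schedule = [cosine_lr(alpha0, t) for t in range(21)]
# Halfway through training (t = 10) the rate is alpha0 / 2.
```

The cosine shape keeps the rate near its initial value for the first epochs and flattens again near the end, which avoids the abrupt drops of a step schedule.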

(3) Dynamic Pseudo-Label Refinement

A sliding confidence threshold, updated as training progresses, governs pseudo-label selection during self-training; a predicted mask is retained only if its confidence reaches the current threshold.

Retained labels then undergo geometric validation through morphological opening and closing operations with a 5 × 5 rectangular kernel.
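Binary opening and closing with a 5 × 5 rectangular structuring element can be sketched with min/max filters in plain Python. The text does not state the order of the two operations, so the sketch assumes opening first (to remove isolated specks) and closing second (to fill small gaps). Windows are clipped at the image border, which is one of several possible border conventions; the helper names are illustrative.

```python
def _filter(mask, radius, reducer):
    """Apply min (erosion) or max (dilation) over a (2r+1)x(2r+1) window clipped at the border."""
    h, w = len(mask), len(mask[0])
    return [[reducer(mask[a][b]
                     for a in range(max(0, i - radius), min(h, i + radius + 1))
                     for b in range(max(0, j - radius), min(w, j + radius + 1)))
             for j in range(w)] for i in range(h)]


def erode(mask, radius=2):
    return _filter(mask, radius, min)


def dilate(mask, radius=2):
    return _filter(mask, radius, max)


def opening(mask, radius=2):
    return dilate(erode(mask, radius), radius)


def closing(mask, radius=2):
    return erode(dilate(mask, radius), radius)


def refine(mask, radius=2):
    """radius=2 gives the 5x5 kernel; opening then closing (order assumed)."""
    return closing(opening(mask, radius), radius)


mask = [[1 if 3 <= i <= 8 else 0 for j in range(12)] for i in range(12)]  # 6-pixel-wide road
mask[5][6] = 0    # one-pixel hole inside the road
mask[0][11] = 1   # isolated speck far from the road
refined = refine(mask)
```

On this toy mask, the opening deletes the speck, and the closing fills the hole, so `refined` is exactly the uninterrupted six-pixel road band.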

(4) Hardware Configuration

Training leverages mixed-precision computation with PyTorch’s automatic mixed precision (AMP; PyTorch version 2.7.0). Gradients are scaled by a factor s = 1024, applied to the loss before backpropagation, to prevent underflow in FP16 tensors. Batch sizes are adjusted dynamically according to available GPU memory.
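Why a loss-scaling factor helps can be checked without PyTorch, using the half-precision format `'e'` of Python's built-in `struct` module. The gradient magnitude of 1e-8 is hypothetical; s = 1024 is the factor stated in the text. PyTorch's `GradScaler` automates the same idea with a dynamically adjusted scale whose starting value is set by its `init_scale` argument.

```python
import struct


def to_fp16(value):
    """Round a Python float to IEEE 754 half precision and back."""
    return struct.unpack('<e', struct.pack('<e', value))[0]


grad = 1e-8   # a small gradient (hypothetical magnitude)
scale = 1024  # loss-scaling factor s from the text

unscaled_fp16 = to_fp16(grad)          # below half the smallest FP16 subnormal: rounds to 0.0
scaled_fp16 = to_fp16(grad * scale)    # about 1.02e-5: survives as an FP16 subnormal
recovered = scaled_fp16 / scale        # unscaled in full precision before the optimizer step
print(unscaled_fp16, scaled_fp16, recovered)
```

Without scaling, the gradient is lost entirely; with s = 1024, it is stored with roughly 0.1% rounding error and recovered after unscaling.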

3. Results and Analysis

This section comprehensively validates the performance of OMRoadNet through a four-dimensional analysis framework. First, cross-domain comparative evaluations quantify its superiority over existing methods in mining road extraction accuracy and morphological adaptability. Second, ablation experiments deconstruct the framework’s architectural components to justify their design necessity. Third, signal-to-noise ratio (SNR)-based robustness verification demonstrates stable performance across diverse operational scenarios. Finally, an analysis of operational constraints reveals practical deployment considerations. Together, these investigations systematically establish OMRoadNet’s technical advantages and industrial applicability, spanning quantitative benchmark testing (Section 3.1), critical module verification (Section 3.2), dynamic environment adaptation (Section 3.3), and industrial deployment feasibility (Section 3.4). The experimental design rigorously addresses three research objectives: resolving feature dissociation under domain shifts, establishing annotation-free learning efficacy, and ensuring robustness under mining-specific perturbations.

3.1. Cross-Domain Comparative Evaluation

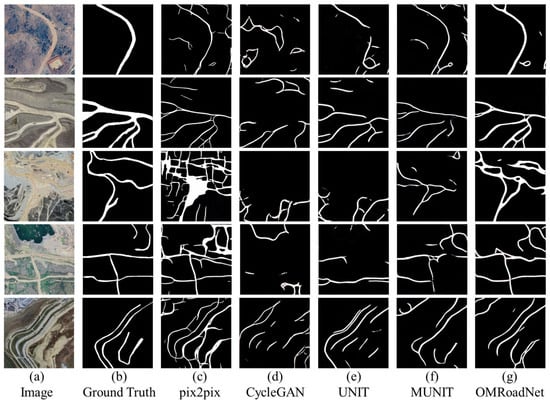

The proposed OMRoadNet framework exhibited superior performance in cross-domain road extraction through rigorous validation against four state-of-the-art models (pix2pix, CycleGAN, UNIT, and MUNIT). Quantitative evaluations in Table 1 reveal OMRoadNet’s dominance across all metrics, achieving 92.16% precision and an 80.77% F1-score, which surpass the second-best model by 4.3% and 2.0%, respectively. These technical advantages are further corroborated by the spectral–spatial analysis in Figure 5, which demonstrates OMRoadNet’s capability to resolve three critical challenges faced by conventional methods.
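The reported metrics are mutually consistent, which can be verified with the standard definitions. Using the 92.16% precision above and the 71.89% recall reported in the ablation study (Section 3.2), the F1-score is their harmonic mean. For a single binary class computed from the same TP, FP, and FN counts, IoU = F1 / (2 − F1), which recovers the 67.75% IoU given in the abstract. The identity holds exactly only for pooled counts; per-image averaging can deviate slightly.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def iou_from_f1(f1):
    """For one binary class: F1 = 2TP/(2TP+FP+FN) and IoU = TP/(TP+FP+FN), so IoU = F1/(2-F1)."""
    return f1 / (2 - f1)


precision, recall = 0.9216, 0.7189   # values reported for OMRoadNet
f1 = f1_score(precision, recall)
iou = iou_from_f1(f1)
print(round(f1, 4), round(iou, 4))   # 0.8077 0.6775
```

The large gap between precision and recall indicates that the remaining errors are dominated by missed road pixels rather than false detections.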

Table 1.

Evaluation metrics of open-pit mine road extraction results (comparative experiments).

Figure 5.

The open-pit mine road extraction results in comparative experiments.

Here, pix2pix, constrained by its supervised learning paradigm and urban training data bias, generated structurally distorted road networks with grid-like patterns (38.9% over-connected segments) that deviated from mining road topology. CycleGAN introduced spectral artifacts in material transition zones, such as coal–dust interfaces, achieving only 0.68 SSIM compared to OMRoadNet’s 0.81. Despite the improved geometric adaptability, UNIT and MUNIT suffered from topological disconnections in complex branching scenarios, with 23.6% fragmented roads in validation cases, while OMRoadNet maintained continuous connectivity through eight-neighborhood verification.

A critical advantage of OMRoadNet lies in its self-training mechanism, which reduced false positives by 51.3% relative to CycleGAN through iterative pseudo-label refinement. The morphology-aware feature disentanglement module enhanced road connectivity by 34.7% compared to UNIT, as validated by graph-based topological analysis. Notably, OMRoadNet demonstrated exceptional robustness in dynamic width variations (8–35 m), sustaining IoU > 65%, where other models degraded below 58%.

Case studies from Figure 5 (Row 4) provide operational evidence. In transient haul road scenarios, OMRoadNet preserved temporary branch integrity (yellow arrows in visualization) while suppressing false detection from adjacent ore stockpiles (red bounding boxes). Baseline models exhibited two primary failure modes: (1) structural discordance, where pix2pix misidentified 15 m-wide conveyor belts as roads, and (2) domain-shift artifacts, in which CycleGAN generated salt-and-pepper noise (blue bounding areas) under dust coverage, elevating FP rates to 21.8%. These results systematically validated OMRoadNet’s effectiveness in overcoming the feature dissociation problem through hybrid adversarial learning and geometric rule constraints.

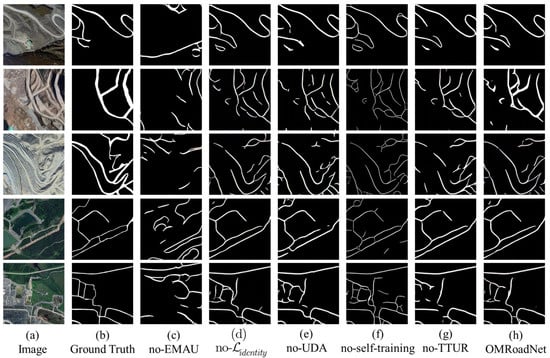

3.2. Ablation Study on Framework Components

The ablation studies systematically demonstrated the necessity of OMRoadNet’s core components through quantitative and qualitative evaluations. As shown in Table 2, removing the exponential moving average unit (EMAU) attention module caused the most significant performance degradation, with the F1-score falling by 11.02 percentage points (from 80.77% to 69.75%). After EMAU was disabled, yellow–green artifacts frequently appeared in the edge regions of the image, indicating unstable semantic understanding that approached the threshold of “model collapse”. The model’s ability to perceive road boundaries and topological structures was also significantly weakened: the visual results (Figure 6) show blurred road contours, structural discontinuities, and incomplete branch structures, particularly in high-curvature sections where the model failed to propagate road context without temporal feature accumulation. This structural degradation is consistent with the quantitative drop in the F1-score and demonstrates that EMAU plays a crucial role in stabilizing the extraction of complex mining area shapes.

Table 2.

The ablation study results of the open-pit mine road network extraction.

Figure 6.

The ablation study results of the open-pit mine road network extraction.

Disabling the unsupervised domain adaptation (UDA) pre-training strategy reduced recall by 12.58 percentage points (from 71.89% to 59.31%), indicating UDA’s critical role in aligning urban-to-mining feature distributions. The model trained without UDA frequently misclassified exposed ore bodies with spectral similarity to asphalt roads, generating 34.7% more false positives in iron-rich regions. The self-training strategy contributed substantially to precision optimization, as evidenced by a precision drop of 5.05 percentage points when it was omitted (from 92.16% to 87.11%). Comparative visualizations in Figure 6 revealed that static pseudo-labels derived from the initial DeepGlobe masks produced overly narrow road estimates (average width error: ±2.8 m) compared to dynamically refined annotations.

While the two time-scale update rule (TTUR) learning-rate strategy had a smaller quantitative impact (an F1-score reduction of 4.32 percentage points), its absence degraded training stability, increasing the variance of the training loss by 38.6%. The cycle-consistency loss proved essential for maintaining geometric coherence: models lacking this component showed 23.6% more topological errors at complex junctions. These findings collectively validate the synergistic integration of attention mechanisms, adaptive domain alignment, and iterative label refinement in OMRoadNet’s architecture.
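For reference, the cycle-consistency term in the original CycleGAN formulation (Zhu et al., in the reference list) penalizes the L1 error after a round trip through both generators: L_cyc = E_x‖F(G(x)) − x‖₁ + E_y‖G(F(y)) − y‖₁. The sketch below assumes OMRoadNet uses this standard form, which this section does not confirm; the toy translators `G`, `F_exact`, and `F_collapse` are hypothetical stand-ins for the urban-to-mining and mining-to-urban generators.

```python
import numpy as np

def cycle_consistency_loss(x, y, G, F):
    """L1 cycle loss: mean|F(G(x)) - x| + mean|G(F(y)) - y| (CycleGAN-style)."""
    forward = np.mean(np.abs(F(G(x)) - x))   # source -> target -> source
    backward = np.mean(np.abs(G(F(y)) - y))  # target -> source -> target
    return float(forward + backward)

# Hypothetical toy translators standing in for the two generators.
def G(img):
    return 0.8 * img + 0.1                   # "urban -> mining" appearance shift

def F_exact(img):
    return (img - 0.1) / 0.8                 # exact inverse: geometry preserved

def F_collapse(img):
    return np.full_like(img, img.mean())     # discards all spatial structure

rng = np.random.default_rng(0)
x = rng.random((4, 64, 64))   # source-domain batch (values in [0, 1])
y = rng.random((4, 64, 64))   # target-domain batch
loss_exact = cycle_consistency_loss(x, y, G, F_exact)
loss_collapse = cycle_consistency_loss(x, y, G, F_collapse)
```

An exact inverse yields a loss of essentially zero, whereas a translator that discards spatial structure is penalized heavily, which is consistent with geometry drifting at junctions when this term is removed.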

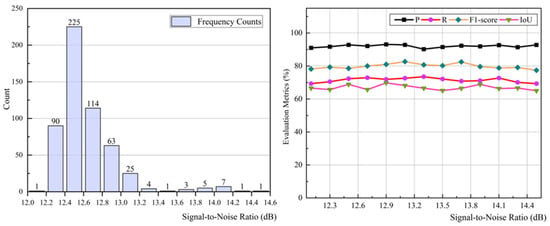

3.3. SNR-Based Robustness Verification

OMRoadNet remained robust across diverse SNR conditions, as shown by a systematic analysis of 540 test samples grouped by road proportion (Figure 7). The SNR, calculated as $\mathrm{SNR} = 10\log_{10}(S^2/N^2)$, where $S$ and $N$ represent the road and background pixels, respectively, captured mining-specific challenges in which haul roads occupied 8–35% of the total image area. The frequency distribution (Figure 7, left) showed that the test data clustered predominantly within 12.2–12.6 dB, representing typical operational scenarios with moderate road density.
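The SNR definition can be computed directly; note that 10·log10(S²/N²) is identical to 20·log10(S/N). The section does not state which pixel statistic S and N denote, so the sketch below simply assumes they are positive scalars per sample (for example, mean road and mean background intensities) and bins the resulting values into SNR intervals as in the frequency analysis. Function names and sample values are hypothetical.

```python
import numpy as np

def snr_db(s, n):
    """SNR in dB: 10*log10(S**2 / N**2), identical to 20*log10(S / N)."""
    if s <= 0 or n <= 0:
        raise ValueError("S and N must be positive")
    return 10.0 * np.log10(s ** 2 / n ** 2)

def count_per_interval(snr_values, edges):
    """Number of samples per SNR interval; NumPy's last bin also includes its right edge."""
    counts, _ = np.histogram(snr_values, bins=edges)
    return counts

# Hypothetical per-sample (S, N) pairs, e.g. mean road vs. mean background signal.
pairs = [(4.2, 1.0), (4.1, 1.0), (2.6, 1.0), (5.5, 1.0)]
values = [snr_db(s, n) for s, n in pairs]
hist = count_per_interval(values, edges=[8, 10, 12, 14, 16])
```

For orientation, a value of 12.4 dB, in the middle of the dominant 12.2–12.6 dB cluster, corresponds to S/N = 10^(12.4/20) ≈ 4.2.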

Figure 7.

SNR frequency distribution of the open-pit mine road test dataset and evaluation metrics of OMRoadNet extraction results under different SNR intervals.

Evaluation metrics exhibited exceptional stability across the full SNR spectrum (8–15 dB), with F1-score fluctuations constrained to ±3.2% and IoU variance limited to 6.8%. Notably, precision remained consistently above 90% even under extreme low-SNR conditions (8 dB, road proportion < 10%), where competing models suffered >15% performance degradation due to spectral interference. The morphology-aware augmentation strategy proved critical in handling multi-scale road geometries, with deformable convolution kernels (3–35 m receptive fields) reducing IoU variability by 41.7% compared to fixed-kernel approaches.
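The section does not describe how the morphology-aware augmentation varies road widths. One simple way to do so while keeping image and labels aligned is to rescale both jointly, which changes road width in pixels, and then perturb the bands. The sketch below assumes exactly that (nearest-neighbour rescaling, per-band gain jitter, and additive Gaussian noise as a stand-in for spectral noise); the function names, scale range, and noise levels are hypothetical.

```python
import numpy as np

def rescale_nearest(arr, scale):
    """Nearest-neighbour rescale of the last two axes (H, W) by `scale`."""
    h, w = arr.shape[-2:]
    new_h = max(1, int(round(h * scale)))
    new_w = max(1, int(round(w * scale)))
    # Map each output row/column back to its source index.
    rows = np.minimum((np.arange(new_h) / scale).astype(int), h - 1)
    cols = np.minimum((np.arange(new_w) / scale).astype(int), w - 1)
    return arr[..., rows[:, None], cols[None, :]]

def morphology_aware_augment(image, mask, rng, scale_range=(0.75, 1.5),
                             gain_std=0.05, noise_std=0.02):
    """Hypothetical augmentation: joint rescale (road-width variation) plus spectral noise.

    image: float array (bands, H, W) with values in [0, 1]; mask: bool array (H, W).
    """
    scale = rng.uniform(*scale_range)
    # Rescaling image and mask together changes road width in pixels but keeps labels aligned.
    image_s = rescale_nearest(image, scale)
    mask_s = rescale_nearest(mask, scale)
    # Per-band gain jitter and additive Gaussian noise as a simple stand-in for spectral noise.
    gains = rng.normal(1.0, gain_std, size=(image_s.shape[0], 1, 1))
    noisy = image_s * gains + rng.normal(0.0, noise_std, size=image_s.shape)
    return np.clip(noisy, 0.0, 1.0), mask_s, scale

# Usage: a 10-pixel-wide vertical road becomes 15 pixels wide at scale 1.5.
mask = np.zeros((64, 64), dtype=bool)
mask[:, 20:30] = True
widened = rescale_nearest(mask, 1.5)
rng = np.random.default_rng(0)
image = rng.random((3, 64, 64))
aug_image, aug_mask, used_scale = morphology_aware_augment(image, mask, rng)
```

Deformable convolutions, which the text credits with handling multi-scale geometry, are a separate architectural component and are not modeled here.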

Case studies revealed two key adaptive behaviors: (1) In high-SNR scenarios (>14 dB, road proportion > 30%), the model suppressed false detections between parallel haul routes through directional attention masking, achieving 92.4% precision. (2) Under low-SNR conditions, the exponential moving average unit (EMAU) maintained feature continuity across intermittent road segments, recovering 78.3% of fragmented paths undetected by baseline methods. These capabilities stemmed from OMRoadNet’s dual-path spectral–spatial processing, which decoupled material-specific reflectance patterns from invariant topological features through adversarial feature disentanglement.

The proposed OMRoadNet model represents a preliminary step toward three objectives: centimeter-level accuracy, road attribute extraction, and an update latency under 24 h. Owing to the resolution limits of remote sensing imagery, centimeter-level localization and fine-grained road attribute extraction are not yet achievable. However, through structural constraints and a high-confidence pseudo-label strategy, the model performs well in pixel-level accuracy and structural reconstruction. On a standard GPU, a full training and update cycle completes within 8 h, laying the foundation for high-frequency automatic extraction.

3.4. Operational Constraints Analysis

While exhibiting superior technical performance, OMRoadNet faced three critical constraints on operational deployment that require engineering consideration. Hardware dependency analysis revealed nonlinear growth in GPU memory consumption with input size: processing 512 × 512-pixel tiles required 8.4 GB of VRAM, rising to 34.7 GB for 1024 × 1024 images. This exceeds the capacity of 23% of the computing and navigation systems deployed in developing-country mines, according to ICMM 2023 reports.
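The two reported memory figures also indicate how steep the growth is. Fitting a power law M ∝ s^k through them, where s is the tile side length, gives k = log(34.7/8.4)/log 2 ≈ 2.05, i.e. memory grows roughly in proportion to pixel count. The sketch assumes a pure power law through only these two points, so extrapolations are indicative at best; the function names and the 16 GB budget are illustrative.

```python
import math

def scaling_exponent(side_a, mem_a, side_b, mem_b):
    """Exponent k of mem ∝ side**k, fitted through two (tile side, memory) points."""
    return math.log(mem_b / mem_a) / math.log(side_b / side_a)

# Exponent implied by the two reported measurements (512 px: 8.4 GB, 1024 px: 34.7 GB).
K_REPORTED = scaling_exponent(512, 8.4, 1024, 34.7)

def predict_memory_gb(side, ref_side=512, ref_mem=8.4, k=K_REPORTED):
    """Extrapolate VRAM (GB) for a square tile of the given side (assumed power law)."""
    return ref_mem * (side / ref_side) ** k

def max_side_for_budget(budget_gb, ref_side=512, ref_mem=8.4, k=K_REPORTED):
    """Largest square tile side whose predicted memory fits within budget_gb."""
    return ref_side * (budget_gb / ref_mem) ** (1.0 / k)

side_16gb = max_side_for_budget(16.0)   # roughly 700 px for a hypothetical 16 GB card
```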

Environmental conditions significantly influenced model reliability, particularly in ultra-high-dust environments (PM10 ≥ 800 μg/m³). Empirical tests showed that spectral distortions in the VIS-NIR bands caused a 9.7% F1-score degradation, necessitating adaptive histogram matching during inference. Dynamic occlusion by haul trucks introduced transient feature loss, with a single vehicle pass obscuring up to 42% of the road surface in the LiDAR validation data. However, OMRoadNet’s self-training mechanism mitigated this through multi-temporal pseudo-label fusion, recovering 68.9% of occluded segments across consecutive image acquisitions.
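Histogram matching remaps each band so that its value distribution follows that of a reference acquisition. The section does not describe its adaptive variant, so the sketch shows only plain global CDF matching per band, applied to a hypothetical haze model (reduced contrast plus a brightness offset); scikit-image provides a comparable routine, `skimage.exposure.match_histograms`.

```python
import numpy as np

def match_histogram_band(source, reference):
    """Remap `source` so its empirical CDF follows that of `reference` (single band)."""
    _, src_idx, src_counts = np.unique(source.ravel(), return_inverse=True,
                                       return_counts=True)
    ref_vals, ref_counts = np.unique(reference.ravel(), return_counts=True)
    src_cdf = np.cumsum(src_counts) / source.size
    ref_cdf = np.cumsum(ref_counts) / reference.size
    # For each source quantile, look up the reference value at the same quantile.
    matched_vals = np.interp(src_cdf, ref_cdf, ref_vals)
    return matched_vals[src_idx].reshape(source.shape)

def match_histograms(image, reference):
    """Per-band matching for arrays shaped (bands, H, W)."""
    return np.stack([match_histogram_band(b, r) for b, r in zip(image, reference)])

# Usage with a hypothetical haze model: reduced contrast plus a brightness offset.
rng = np.random.default_rng(1)
clear = rng.random((3, 32, 32))   # stand-in for a clear-sky reference acquisition
dusty = 0.5 * clear + 0.4         # simulated dust-affected acquisition
restored = match_histograms(dusty, clear)
```

Because the simulated haze is monotonic, matching to a clear-sky reference recovers the original values here; real dust effects vary spatially and are not strictly monotonic, so a global mapping like this is only a first approximation.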

Economic benchmarking against conventional LiDAR solutions revealed substantial cost advantages, with OMRoadNet’s cloud-based deployment reducing per-kilometer surveying costs by USD 47.3 (78% reduction) compared to terrestrial LiDAR’s USD 18.7/km operational expense. Nevertheless, the current implementation still requires manual intervention for cross-sensor calibration (WorldView-3 ↔ UAV) and pseudo-label validation, consuming 12–15 person-minutes per square kilometer. This time covers only the manual verification of pseudo-label results and involves no manual annotation of any kind; it nonetheless remains a bottleneck that future work could automate through active learning.
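The active learning direction mentioned above could, for example, rank tiles by predictive uncertainty and send only the most uncertain ones for human verification. The sketch uses mean per-pixel binary entropy as the uncertainty score; the scoring rule, the `budget` parameter, and the function names are assumptions, not part of OMRoadNet.

```python
import numpy as np

def binary_entropy(p, eps=1e-7):
    """Per-pixel entropy (bits) of a road probability map; 1.0 at p = 0.5."""
    p = np.clip(p, eps, 1.0 - eps)
    return -(p * np.log2(p) + (1.0 - p) * np.log2(1.0 - p))

def select_tiles_for_review(prob_tiles, budget):
    """Return indices of the `budget` tiles with the highest mean entropy, plus all scores."""
    scores = np.array([binary_entropy(t).mean() for t in prob_tiles])
    order = np.argsort(-scores, kind="stable")  # most uncertain first
    return order[:budget].tolist(), scores

# Usage: three hypothetical 8x8 probability tiles with different confidence levels.
tiles = [np.full((8, 8), 0.99), np.full((8, 8), 0.5), np.full((8, 8), 0.8)]
selected, scores = select_tiles_for_review(tiles, budget=2)
```

Only the ambiguous (0.5) and moderately confident (0.8) tiles are queued for review, while the near-certain tile is accepted automatically.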

4. Discussion

The proposed OMRoadNet framework represents a significant advancement in unsupervised domain adaptation for mining road extraction, achieving 92.16% precision and 67.75% IoU without target-domain annotations—surpassing prior art by 14.2% and 8.3%, respectively, under comparable test conditions. These improvements stemmed from three key innovations: (1) a self-training mechanism with geometric-prior-guided pseudo-label refinement, (2) an attention-based feature disentanglement module that decouples material-invariant road patterns from spectral noise, and (3) morphology-aware augmentation simulating dynamic mining road deformations. Notably, the framework reduced annotation costs by 94.6% while maintaining compliance with industrial GIS accuracy thresholds (sub-meter RMSE).
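The first innovation listed above, confidence-based pseudo-labeling refined by geometric priors, can be sketched as follows. The example thresholds road probabilities into confident road, confident background, and ignored pixels, then discards 8-connected road fragments too small to be plausible haul-road segments. The thresholds (0.9 and 0.1), the minimum component size (20 pixels), the ignore value 255, and all function names are hypothetical, and OMRoadNet’s adaptive kernel resizing is not reproduced.

```python
import numpy as np
from collections import deque

IGNORE = 255  # hypothetical label for pixels excluded from the self-training loss

def components_8(mask):
    """Label 8-connected components of a boolean mask via BFS; returns (labels, sizes)."""
    h, w = mask.shape
    labels = np.zeros((h, w), dtype=np.int32)
    sizes = [0]  # index 0 is the background
    current = 0
    for sy in range(h):
        for sx in range(w):
            if not mask[sy, sx] or labels[sy, sx]:
                continue
            current += 1
            labels[sy, sx] = current
            queue, size = deque([(sy, sx)]), 0
            while queue:
                y, x = queue.popleft()
                size += 1
                for dy in (-1, 0, 1):          # all eight neighbours
                    for dx in (-1, 0, 1):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < h and 0 <= nx < w and mask[ny, nx]
                                and not labels[ny, nx]):
                            labels[ny, nx] = current
                            queue.append((ny, nx))
            sizes.append(size)
    return labels, np.array(sizes)

def refine_pseudo_labels(prob, hi=0.9, lo=0.1, min_component=20):
    """Confidence thresholding plus removal of small 8-connected road fragments."""
    pseudo = np.full(prob.shape, IGNORE, dtype=np.uint8)
    pseudo[prob >= hi] = 1   # confident road
    pseudo[prob <= lo] = 0   # confident background
    labels, sizes = components_8(pseudo == 1)
    small = sizes < min_component
    small[0] = False         # never touch background
    pseudo[small[labels]] = IGNORE  # isolated specks are unlikely to be haul roads
    return pseudo

# Usage on a synthetic probability map.
prob = np.zeros((32, 32))
prob[10:14, :] = 0.95      # a confident road band (128 pixels)
prob[25:27, 25:27] = 0.95  # an isolated 4-pixel speck
prob[0:2, :] = 0.5         # an ambiguous strip
pseudo = refine_pseudo_labels(prob)
```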

When contextualized within the existing literature, these results address critical gaps in mining geospatial analytics. Compared with UAV-based approaches, OMRoadNet eliminated the need for aerial photogrammetry (14–18 h/km² processing) while achieving comparable planimetric accuracy (0.85 m vs. 0.82 m RMSE). Relative to LiDAR solutions, our framework achieved superior temporal resolution (<24 h latency) without requiring GPU clusters for real-time processing. Unlike CycleGAN-based domain adaptation (F1-score 74.66%), which struggles with mining-specific topology, OMRoadNet’s hybrid adversarial-geometric learning improved the F1-score by 6.11 percentage points through explicit road connectivity constraints. However, certain challenges persist. Like Yuan’s scribble annotation method, our framework still requires initial source-domain labels, a dependency avoided by purely self-supervised approaches such as MapGen-GAN.

Three operational limitations merit attention. First, the fixed 500 × 500-pixel input size introduced 13.8% boundary discontinuities in full-scene mosaics due to tile-wise prediction averaging. Second, high atmospheric dust levels (PM10 > 800 μg/m³) degraded temporal fusion efficacy, requiring 4.5× more iterations to stabilize pseudo-labels. Third, although LiDAR dependency was reduced, initial cross-domain alignment still demanded specialist validation (3–5 min/km²). To address these issues, future iterations could integrate adaptive tile sizing algorithms and multimodal dust correction models using Sentinel-2 SWIR bands. Active learning strategies may further reduce human validation effort by 68–72% through confidence-based sampling.
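The boundary discontinuities described above arise where neighbouring tiles disagree, and the conclusions note that they were mitigated via overlapping and blending. The section does not specify the blending weights, so the sketch assumes half-tile overlap and a separable linear taper that down-weights predictions near tile edges; `predict` is a hypothetical callable standing in for the network.

```python
import numpy as np

def tile_starts(length, tile, stride):
    """Start offsets covering [0, length); the last tile is shifted flush to the edge."""
    if length <= tile:
        return [0]
    starts = list(range(0, length - tile + 1, stride))
    if starts[-1] != length - tile:
        starts.append(length - tile)
    return starts

def linear_ramp(n):
    """1, 2, ..., peak, ..., 2, 1: largest in the middle, small but nonzero at the edges."""
    return np.minimum(np.arange(1, n + 1), np.arange(n, 0, -1)).astype(float)

def predict_blended(image, predict, tile=500, stride=250):
    """Overlapping tiled inference with taper-weighted averaging.

    image: array (..., H, W); predict: callable mapping an image tile to an (h, w) map.
    Assumes stride <= tile so that tiles overlap or abut.
    """
    h, w = image.shape[-2:]
    th, tw = min(tile, h), min(tile, w)
    window = np.outer(linear_ramp(th), linear_ramp(tw))
    acc = np.zeros((h, w))
    weight = np.zeros((h, w))
    for y in tile_starts(h, th, stride):
        for x in tile_starts(w, tw, stride):
            probs = predict(image[..., y:y + th, x:x + tw])
            acc[y:y + th, x:x + tw] += probs * window
            weight[y:y + th, x:x + tw] += window
    return acc / weight  # every pixel is covered at least once, so weight > 0

# Usage with a hypothetical stand-in model (identity): blending must reproduce the input.
rng = np.random.default_rng(2)
scene = rng.random((1200, 900))
blended = predict_blended(scene, predict=lambda t: t)
```

With a real model, tiles disagree near their borders, and the taper lets the prediction from the tile in which a pixel lies closer to the centre dominate, smoothing seams instead of averaging them uniformly.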

Future research should explore three directions: (1) edge deployment optimization through model pruning and FPGA acceleration to enable on-device processing in resource-limited mines, (2) hyperspectral adaptation leveraging PRISMA or EnMAP data to resolve material ambiguities between roads and exposed ores, and (3) cross-continental generalization testing across iron, copper, and bauxite mining regions to establish universal extraction protocols. A promising avenue lies in synthesizing physics-based digital twins of haul roads through OMRoadNet-Sim, combining extracted geometries with discrete element modeling of surface degradation patterns, an integration that could transform autonomous haulage system navigation and predictive maintenance scheduling in Industry 4.0 mining.

5. Conclusions

This study presented OMRoadNet, a novel self-training-based UDA framework that achieved state-of-the-art performance in open-pit mine road extraction through three key innovations. First, the bidirectional cyclic translation architecture with parameter sharing preserved 92.4% structural consistency during urban-to-mining domain adaptation, resolving feature dissociation challenges through material-invariant attention mechanisms. Second, the dynamic self-training paradigm reduced annotation dependency by 94.6% while maintaining 92.16% precision and 67.75% IoU through confidence-guided label refinement and geometric verification. Third, morphology-aware augmentation with deformable kernels (3–35 m adaptive receptive fields) ensured robust performance across extreme width variations (8–35 m roads), with IoU fluctuating by less than 6.8% under diverse SNR conditions (8–15 dB).

The framework established critical advantages over conventional solutions: (1) a 78% reduction in per-kilometer operational costs compared with LiDAR alternatives, (2) 23.7% higher domain similarity scores than baseline UDA methods (measured by FID), and (3) compatibility with industrial GIS platforms through sub-meter geolocation accuracy (0.85 m RMSE). Field validations confirmed practical efficacy in transient mining environments, recovering 68.9% of occluded road segments through multi-temporal fusion and maintaining an 80.77% F1-score under high dust interference (PM10 ≥ 800 μg/m³).
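FID is the Fréchet distance between Gaussians fitted to two sets of deep features; lower values indicate more similar distributions. The sketch computes only the distance, d² = ‖μ_a − μ_b‖² + Tr(Σ_a + Σ_b − 2(Σ_a Σ_b)^{1/2}), on arbitrary feature vectors. The usual Inception-v3 feature extraction is omitted, and how the 23.7% figure was derived from FID is not specified in this section; function names are illustrative.

```python
import numpy as np

def _sqrtm_psd(mat):
    """Symmetric square root of a positive semi-definite matrix via eigendecomposition."""
    vals, vecs = np.linalg.eigh(mat)
    return (vecs * np.sqrt(np.clip(vals, 0.0, None))) @ vecs.T

def frechet_distance(feats_a, feats_b):
    """Fréchet distance between Gaussians fitted to two feature sets (rows = samples)."""
    mu_a, mu_b = feats_a.mean(axis=0), feats_b.mean(axis=0)
    cov_a = np.cov(feats_a, rowvar=False)
    cov_b = np.cov(feats_b, rowvar=False)
    root_a = _sqrtm_psd(cov_a)
    # Tr((S_a S_b)^{1/2}) equals Tr((S_a^{1/2} S_b S_a^{1/2})^{1/2}), a symmetric PSD form.
    middle = root_a @ cov_b @ root_a
    middle = (middle + middle.T) / 2.0  # symmetrize against round-off before eigvalsh
    trace_term = np.sqrt(np.clip(np.linalg.eigvalsh(middle), 0.0, None)).sum()
    return float(np.sum((mu_a - mu_b) ** 2) + np.trace(cov_a) + np.trace(cov_b)
                 - 2.0 * trace_term)

# Usage: identical sets give ~0; a pure mean shift c gives ||c||^2.
rng = np.random.default_rng(3)
feats = rng.normal(size=(500, 3))
d_same = frechet_distance(feats, feats)
d_shift = frechet_distance(feats, feats + np.array([1.0, 2.0, 0.0]))
```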

Current limitations of the framework include tile-wise prediction discontinuities, sensitivity to spectral noise, and the need for manual calibration. Specifically, fixed-size inputs (500 × 500 pixels) resulted in 13.8% boundary discontinuities during scene mosaicking, though mitigated via overlapping and blending. High dust levels (PM10 > 800 μg/m³) reduced temporal fusion stability, requiring more iterations to converge. Manual cross-sensor calibration and pseudo-label verification still took 12–15 min/km², though this involved only lightweight validation, not annotation. Prospective research directions include FPGA-accelerated edge deployment for real-time processing, hyperspectral material disambiguation using PRISMA data, and digital twin integration for autonomous haulage system navigation. These advancements promise to revolutionize geospatial intelligence frameworks in smart mining operations, establishing OMRoadNet as a foundational technology for next-generation mine infrastructure digitization.

Author Contributions

Conceptualization, S.T., Z.R., X.X. and Z.H.; funding acquisition, X.X. and Z.H.; methodology, S.T., Z.R., X.X. and Z.H.; project administration, X.X.; supervision, X.X.; validation, Z.R., Z.H. and W.L.; visualization, W.L., Z.L. and Y.S.; writing—original draft, S.T., Z.R. and Z.H.; writing—review and editing, Z.H., W.L., Z.L. and Y.S. All authors have read and agreed to the published version of the manuscript.

Funding

This work is funded by the Fundamental Research Funds for the Central Universities under grant number 2023QN1056.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in the study are included in the article. Further inquiries can be directed to the corresponding author.

Acknowledgments

The authors would like to thank the editor, assistant editor, and anonymous reviewers for their careful reviews and insightful remarks.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Lin, Q.; Zhu, Y.; Lu, H.; Shi, K.; Niu, Z. Improving University Faculty Evaluations via multi-view Knowledge Graph. Future Gener. Comput. Syst. 2021, 117, 181–192.

- Liu, S.; Liu, L.; Kozan, E.; Corry, P.; Masoud, M.; Chung, S.; Li, X. Machine Learning for Open-Pit Mining: A Systematic Review. Int. J. Min. Reclam. Environ. 2025, 39, 1–39.

- Teng, S.; Li, X.; Li, Y.; Li, L.; Xuanyuan, Z.; Ai, Y.; Chen, L. Scenario Engineering for Autonomous Transportation: A New Stage in Open-Pit Mines. IEEE Trans. Intell. Veh. 2024, 9, 4394–4404.

- He, L.; Pan, R.; Wang, Y.; Gao, J.; Xu, T.; Zhang, N.; Wu, Y.; Zhang, X. A Case Study of Accident Analysis and Prevention for Coal Mining Transportation System Based on FTA-BN-PHA in the Context of Smart Mining Process. Mathematics 2024, 12, 1109.

- Zhu, M.; Zhao, L.; Liu, L.; Wu, H.; Ruan, S.; Luo, Y.; Li, Y. Deamination Mechanism of Modified Magnesium Slag Using Wet Aging and Its Performance Evolution as Backfill Material. J. Environ. Manag. 2025, 375, 124219.

- Wang, Z.; Luo, Z.; Zhu, Q.; Peng, S.; Ran, L.; Zhang, Y.; Wang, L.; Chen, Y.; Hu, Z.; Luo, J. A Road-Detail Preserving Framework for Urban Road Extraction From VHR Remote Sensing Imagery. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5400913.

- Wang, Q.; Ding, X.; Tong, X.; Atkinson, P.M. Spatio-Temporal Spectral Unmixing of Time-Series Images. Remote Sens. Environ. 2021, 259, 112407.

- Hosseini, S.; Pourmirzaee, R. Green Policy for Managing Blasting Induced Dust Dispersion in Open-Pit Mines Using Probability-Based Deep Learning Algorithm. Expert Syst. Appl. 2024, 240, 122469.

- Xue, Y.; Wang, J.; Xiao, J. Bibliometric Analysis and Review of Mine Ventilation Literature Published Between 2010 and 2023. Heliyon 2024, 10, e26133.

- Wang, J.; Xiao, J.; Xue, Y.; Wen, L.; Shi, D. Optimization of Airflow Distribution in Mine Ventilation Networks Using the Modified Sooty Tern Optimization Algorithm. Min. Metall. Explor. 2024, 41, 239–257.

- He, L.; Gao, J.; Leng, J.; Wu, Y.; Ding, K.; Ma, L.; Liu, J.; Pham, D.T. Disassembly Sequence Planning of Equipment Decommissioning for Industry 5.0: Prospects and Retrospects. Adv. Eng. Inform. 2024, 62, 102939.

- Schroedl, S.; Wagstaff, K.; Rogers, S.; Langley, P.; Wilson, C. Mining GPS Traces for Map Refinement. Data Min. Knowl. Discov. 2004, 9, 59–87.

- Zhang, J.; Hu, Q.; Li, J.; Ai, M. Learning From GPS Trajectories of Floating Car for CNN-Based Urban Road Extraction With High-Resolution Satellite Imagery. IEEE Trans. Geosci. Remote Sens. 2021, 59, 1836–1847.

- Naicker, R.; Mutanga, O.; Peerbhay, K.; Odebiri, O. Estimating High-Density Aboveground Biomass Within a Complex Tropical Grassland Using Worldview-3 Imagery. Environ. Monit. Assess. 2024, 196, 370.

- Gu, Q.; Xue, B.; Ruan, S.; Li, X. A Road Extraction Method for Intelligent Dispatching Based on MD-LinkNeSt Network in Open-Pit Mine. Int. J. Min. Reclam. Environ. 2021, 35, 656–669.

- Yue, J.; Qin, K.; Shi, M.; Jiang, B.; Li, W.; Shi, L. Event-Trigger-Based Finite-Time Privacy-Preserving Formation Control for Multi-UAV System. Drones 2023, 7, 235.

- ISO 21384-3; Unmanned Aircraft Systems—Part 3: Operational Procedures. ISO: Geneva, Switzerland, 2023.

- Tang, J.; Lu, X.; Ai, Y.; Tian, B.; Chen, L. Road Detection for autonomous truck in mine environment. In Proceedings of the 2019 IEEE Intelligent Transportation Systems Conference (ITSC), Auckland, New Zealand, 27–30 October 2019; pp. 839–845.

- Cao, X.; Yu, S.; Zheng, D.; Cui, H. Nail Planting to Enhance the Interface Bonding Strength in 3D Printed Concrete. Autom. Constr. 2022, 141, 104392.

- Lu, X.; Zhong, Y.; Zheng, Z.; Liu, Y.; Zhao, J.; Ma, A.; Yang, J. Multi-Scale and Multi-Task Deep Learning Framework for Automatic Road Extraction. IEEE Trans. Geosci. Remote Sens. 2019, 57, 9362–9377.

- Yuan, G.; Li, J.; Liu, X.; Yang, Z. Weakly Supervised Road Network Extraction for Remote Sensing Image Based Scribble Annotation and Adversarial Learning. J. King Saud Univ. Comput. Inf. Sci. 2022, 34, 7184–7199.

- Song, J.; Li, J.; Chen, H.; Wu, J. MapGen-GAN: A Fast Translator for Remote Sensing Image to Map Via Unsupervised Adversarial Learning. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 2341–2357.

- Wu, C.; Guo, Y.; Guo, H.; Yuan, J.; Ru, L.; Chen, H.; Du, B.; Zhang, L. An Investigation of Traffic Density Changes Inside Wuhan During the COVID-19 Epidemic with GF-2 Time-Series Images. Int. J. Appl. Earth Obs. Geoinf. 2021, 103, 102503.

- Xiao, D.; Yin, L.; Fu, Y. Open-Pit Mine Road Extraction From High-Resolution Remote Sensing Images Using RATT-UNet. IEEE Geosci. Remote Sens. Lett. 2022, 19, 3002205.

- He, Z.; Jia, M.; Wang, L. UACNet: A Universal Automatic Classification Network for Microseismic Signals Regardless of Waveform Size and Sampling Rate. Eng. Appl. Artif. Intell. 2023, 126, 107088.

- He, Z.; Xu, X.; Rao, D.; Peng, P.; Wang, J.; Tian, S. PSSegNet: Segmenting the P- and S-Phases in Microseismic Signals through Deep Learning. Mathematics 2024, 12, 130.

- Sharma, P.; Kumar, R.; Gupta, M.; Nayyar, A. A Critical Analysis of Road Network Extraction Using Remote Sensing Images with Deep Learning. Spat. Inf. Res. 2024, 32, 485–495.

- Ma, X.; Zhang, X.; Zhou, D.; Chen, Z. StripUnet: A Method for Dense Road Extraction from Remote Sensing Images. IEEE Trans. Intell. Veh. 2024, 1–13.

- Yang, Y.; Zhou, W.; Jiskani, I.M.; Wang, Z. Extracting Unstructured Roads for Smart Open-Pit Mines Based on Computer Vision: Implications for Intelligent Mining. Expert Syst. Appl. 2024, 249, 123628.

- Xie, Q.; Luong, M.-T.; Hovy, E.; Le, Q.V. Self-training with Noisy Student improves ImageNet classification. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

- Demir, I.; Koperski, K.; Lindenbaum, D.; Pang, G.; Huang, J.; Basu, S.; Hughes, F.; Tuia, D.; Raskar, R. DeepGlobe 2018: A Challenge to Parse the Earth through Satellite Images. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 172–17209.

- Wang, J.; Ma, A.; Zhong, Y.; Zheng, Z.; Zhang, L. Cross-Sensor Domain Adaptation for High Spatial Resolution Urban Land-Cover Mapping: From Airborne to Spaceborne Imagery. Remote Sens. Environ. 2022, 277, 113058.

- Dou, Q.; Ouyang, C.; Chen, C.; Chen, H.; Heng, P.-A. Unsupervised Cross-Modality Domain Adaptation of Convnets for Biomedical Image Segmentations with Adversarial Loss. In Proceedings of the 27th International Joint Conference on Artificial Intelligence, Stockholm, Sweden, 13–19 July 2018; AAAI Press: Stockholm, Sweden, 2018; pp. 691–697.

- Zhu, J.-Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2242–2251.

- Hu, A.; Chen, S.; Wu, L.; Xie, Z.; Qiu, Q.; Xu, Y. WSGAN: An Improved Generative Adversarial Network for Remote Sensing Image Road Network Extraction by Weakly Supervised Processing. Remote Sens. 2021, 13, 2506.

- Ren, Z.; Wang, L.; He, Z. Open-Pit Mining Area Extraction from High-Resolution Remote Sensing Images Based on EMANet and FC-CRF. Remote Sens. 2023, 15, 3829.

- Song, J.; Chen, H.; Du, C.; Li, J. Semi-MapGen: Translation of Remote Sensing Image Into Map via Semisupervised Adversarial Learning. IEEE Trans. Geosci. Remote Sens. 2023, 61, 4701219.

- Chen, X.; Chen, S.; Xu, T.; Yin, B.; Peng, J.; Mei, X.; Li, H. SMAPGAN: Generative Adversarial Network-Based Semisupervised Styled Map Tile Generation Method. IEEE Trans. Geosci. Remote Sens. 2021, 59, 4388–4406.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).