Abstract

The traditional particle swarm optimization (PSO) algorithm often suffers from slow convergence and a tendency to fall into local optima. To overcome these problems, this paper proposes an enhanced variant featuring adaptive selection. First, a composite chaotic mapping model integrating the Logistic and Sine mappings is used to initialize the population, improving its diversity and exploration capability. Next, adaptive inertia weights are introduced to balance the global and local search capabilities of the algorithm. The population is then divided into three subpopulations (elite, ordinary, and inferior particles) based on their fitness values, with each group using a distinct position update strategy. Finally, a particle mutation strategy is incorporated to avoid convergence to local optima. Experimental results show that our algorithm outperforms existing algorithms on standard benchmark functions. In practical engineering applications, our algorithm also performs better than other metaheuristic algorithms.

1. Introduction

As technology advances and societal needs evolve, optimization techniques have become essential across many industries. Traditional optimization methods often struggle with complex, multidimensional problems, which makes optimal solutions difficult to find. This has spurred interest in more efficient and intelligent optimization algorithms. One notable example is particle swarm optimization (PSO) [1], which can handle both continuous and mixed-space optimization problems and has therefore become a valuable tool. PSO has been applied effectively in numerous domains, including parameter optimization [2], neural network training [3], clustering analysis [4], combinatorial optimization [5], pattern recognition [6], and image processing [7]. Furthermore, the intelligent optimization capabilities of PSO offer significant potential for advances in artificial intelligence [8] and machine learning [9]. Comprehensive research on the PSO algorithm can provide deeper insight into its underlying mechanisms and performance, thereby supporting its improvement and wider application.

Numerous researchers have worked to improve PSO in terms of running performance, convergence, and parameter settings. To address high-dimensional complex problems, Shi et al. introduced a method for adjusting the inertia weight factor so that it decreases linearly from 0.9 to 0.4 [10]. They subsequently set the inertia weights to random values between 0.5 and 1 and designed a new algorithm, PSO-RIW, which showed improved robustness in experimental tests [11]. Ratnaweera et al. proposed a variable acceleration factor mechanism that proved more effective than time-varying inertia weights for optimizing unimodal functions in simulations [12]. Arumugam et al. introduced the GLBEST-PSO algorithm, which modifies the inertia weights by averaging the global and individual optima during the iterations [13]. Chegini et al. developed the PSOSCALF algorithm by hybridizing PSO with the sine cosine algorithm and Lévy flight, which enhances the exploration capability of PSO and helps particles escape local optimal positions [14]. A recent study [15] introduced a self-learning strategy together with a nonlinear weight factor strategy to improve the local search capability of the algorithm, and used Lévy flights to perturb the positions of the population so that it could escape local optima. The authors of [16] presented a normal cloud model that adjusts the position range of the wolf population, the optimal individual, and the dispersion of the wolf population. This approach effectively improved the algorithm's local exploitation ability and convergence accuracy.
Furthermore, several novel metaheuristic algorithms have been developed, including the dung beetle optimization algorithm [17], the vulture search algorithm [18], the sand cat swarm optimization algorithm [19], and adaptive hybrid mutation differential evolution (a-HMDE) [20]. Dixit et al. [21] proposed a differential evolution algorithm based on particle swarm optimization that uses differential evolution variants in the initial stages to broaden the search, identify more promising solutions, and reduce the risk of premature convergence. Seyyedabbasi et al. [22] introduced the comprehensive learning particle swarm optimizer (CLPSO) for complex multimodal problems; this method uses the historical optimal experience of other particles to enhance population diversity and global search capability. Other researchers have combined concepts from various optimization algorithms to improve convergence speed and search accuracy. These advances have enabled the widespread application of PSO in power network optimization [23], mechanical design [24], and logistics and distribution scheduling [25].

Although much research has been conducted on the PSO algorithm, its main problems have not been fully solved. First, the algorithm suffers from premature convergence; for example, on complex multimodal functions it easily falls into local optima. Meanwhile, it is highly sensitive to parameter settings such as the inertia weight and acceleration coefficients, yet there is no general mechanism for setting these parameters. Second, it often struggles to balance global and local search: too much emphasis on global search leads to inefficiency and slow convergence, while too much emphasis on local search increases the risk of falling into a local optimum. Third, in high-dimensional optimization problems, the search space expands exponentially, computational complexity rises significantly, and the algorithm is more likely to converge to a local optimum. In addition, the interactions between particles are complex, which makes it difficult to guide the search direction effectively. In practice, engineering optimization problems often contain both equality and inequality constraints, so methods are needed that handle these constraints while maintaining the performance of the algorithm. Therefore, substantial further work on improving the algorithm is still required.

This paper proposes an adaptive selection particle swarm optimization algorithm and investigates its applications. Central to the algorithm is the integration of enhancement strategies into the standard particle swarm approach, which allows the particles to perform a more nuanced search within the solution space, thereby enhancing population diversity. The enhancement strategies examined in this paper include the following:

- Initializing the particle swarm using hybrid chaotic mapping to achieve a more uniform distribution of the population within the solution space, thereby enhancing population diversity.

- Dynamically adjusting the inertia weights through adaptive weighting, allowing particles to search with appropriate precision at each stage.

- Dividing the population into three subsets and updating the velocity and position of the particles by applying cross-learning and social learning mechanisms to the elite particles, which aims to improve the exploration performance of the particles.

- Applying the differential evolution strategies DE/best/1 and DE/rand/1 to ordinary particles to make better use of them, and introducing a mutation mechanism to prevent the improved algorithm from converging to local optimal solutions during the search process.

A variety of strategies, including composite chaotic mapping for population initialization, adaptive inertia weights, an elite particle learning mechanism, and a differential evolution strategy for ordinary particles, are integrated to develop a novel particle swarm optimization algorithm referred to as APSO. This integration seeks to enhance the algorithm’s search efficiency, mitigate convergence to local optima, and bolster its robustness. To assess the effectiveness of the improved APSO algorithm, we conduct tests and comparisons with other algorithms, evaluating the optimal value, standard deviation, and average value for each. Ultimately, the algorithm is applied to real-world engineering problems to derive practical solutions.

2. PSO Algorithm

This section reviews the fundamental concepts and equations of the traditional particle swarm optimization algorithm and explains the parameters used in these equations. Furthermore, a flowchart outlining the steps of the algorithm gives readers a clearer understanding of the particle swarm optimization process.

2.1. Principle

The PSO algorithm is a widely recognized method of population-based intelligent optimization. Similar to other algorithms in this category, it typically commences by randomly initializing a set of solutions, referred to as particles. The algorithm then iteratively updates these solutions, guiding the entire population toward enhanced fitness. The ultimate objective is to identify the optimal solution to the problem within a constrained number of iterations.

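If the PSO algorithm with N particles is used to solve a problem in a J-dimensional search space, the position and velocity of the i-th particle ($i = 1, 2, \ldots, N$) at the t-th iteration can be expressed as $X_i^t = (x_{i1}^t, x_{i2}^t, \ldots, x_{iJ}^t)$ and $V_i^t = (v_{i1}^t, v_{i2}^t, \ldots, v_{iJ}^t)$, respectively. During each iteration, PSO updates the velocity and position of each particle in each dimension as follows:

$$v_{ij}^{t+1} = v_{ij}^{t} + c_1 r_1 \left(p_{ij}^{t} - x_{ij}^{t}\right) + c_2 r_2 \left(g_{j}^{t} - x_{ij}^{t}\right) \tag{1}$$

$$x_{ij}^{t+1} = x_{ij}^{t} + v_{ij}^{t+1} \tag{2}$$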

Equations (1) and (2) are the iterative update formulas of the original PSO algorithm [1]. In these equations, $c_1$ and $c_2$ are acceleration factors that control the convergence speed and typically take the value 2; $r_1$ and $r_2$ are random numbers uniformly distributed on [0, 1]; $x_{ij}^t$ denotes the position component of particle i in the j-th dimension at the t-th iteration; $p_{ij}^t$ denotes the individual optimal position of particle i; and $g_j^t$ denotes the global optimal position of the group. After each iteration, the individual and global optimal positions are updated according to the following rules:

$$P_i^{t+1} = \begin{cases} X_i^{t+1}, & f\left(X_i^{t+1}\right) < f\left(P_i^{t}\right) \\ P_i^{t}, & \text{otherwise} \end{cases} \qquad G^{t+1} = \arg\min_{P_k^{t+1},\ k = 1, \ldots, N} f\left(P_k^{t+1}\right) \tag{3}$$

where $f(\cdot)$ is the objective function used to compute the fitness value of a position. In many applications, particle velocities must also be confined to an interval $[v_{\min}, v_{\max}]$ to meet practical requirements. However, researchers observed that the original algorithm converged slowly on certain problems because its search behavior was overly global, which degraded its performance. To address this issue, Shi and Eberhart [11] introduced an inertia weight $\omega$ into the velocity update of Equation (1), giving Equation (4):

$$v_{ij}^{t+1} = \omega\, v_{ij}^{t} + c_1 r_1 \left(p_{ij}^{t} - x_{ij}^{t}\right) + c_2 r_2 \left(g_{j}^{t} - x_{ij}^{t}\right) \tag{4}$$

The formula consists of three components. The first is the “inertia component”, which reflects how much of the particle's current velocity, scaled by the inertia weight, carries over to the next step, thereby preventing excessive oscillation during the search. The inertia weight $\omega$ decreases linearly from 0.9 to 0.4 over the course of the iterations. This variant has been shown to outperform alternative versions in numerous experiments and applications and is therefore referred to as the standard PSO algorithm [26]. Unless otherwise specified, all references to the PSO algorithm in the following sections denote the standard PSO algorithm. The second component is the “individual cognition” of the particle, which represents learning from its own historical optimal position. The third component is the “social cognition” of the particle, which represents learning from the optimal position of the group.

The superposition of “individual cognition” and “social cognition” allows particles to perform what is termed a “partial local search”, while the “inertia component” supports a “partial global search”; together, they enable a “biased global search”. These terms describe the overall trend of the search behavior over a long search process rather than strictly “global” or “local” search. The flight velocity of a particle is thus composed of these three components, which gradually guide the particles of the population toward a single point.
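To make Equations (2) and (4) concrete, the following Python sketch performs one inertia-weight update for a single particle and computes the linearly decreasing weight, written here in one common form as $\omega^t = \omega_{\max} - (\omega_{\max} - \omega_{\min})\,t / T_{\max}$. The function names, the default velocity bounds of [-1, 1], and drawing fresh $r_1$, $r_2$ for every dimension are illustrative assumptions rather than details given in the text.

```python
import random


def linear_inertia_weight(t, t_max, w_max=0.9, w_min=0.4):
    """Inertia weight that falls linearly from w_max (t = 0) to w_min (t = t_max)."""
    return w_max - (w_max - w_min) * t / t_max


def update_particle(x, v, p_best, g_best, w, c1=2.0, c2=2.0,
                    v_min=-1.0, v_max=1.0, rng=random):
    """One inertia-weight PSO step: velocity as in Eq. (4), position as in Eq. (2).

    x, v, p_best, g_best are lists of length J. Returns (new_position, new_velocity);
    each velocity component is clamped to [v_min, v_max] before moving the particle.
    """
    new_x, new_v = [], []
    for j in range(len(x)):
        r1, r2 = rng.random(), rng.random()
        vj = (w * v[j]                          # inertia component
              + c1 * r1 * (p_best[j] - x[j])    # individual cognition
              + c2 * r2 * (g_best[j] - x[j]))   # social cognition
        vj = max(v_min, min(v_max, vj))         # keep velocity inside its interval
        new_v.append(vj)
        new_x.append(x[j] + vj)
    return new_x, new_v
```

When a particle already sits on both its individual and global optima, only the inertia term acts, so the particle simply coasts with its velocity damped by $\omega$.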

2.2. Algorithm Implementation

Step 1: Based on the characteristics of the optimization problem, set the algorithm parameters, including the particle swarm size N, the search dimension J, the upper and lower bounds of the search space, the maximum number of iterations $T_{\max}$, the maximum velocity $v_{\max}$, the minimum velocity $v_{\min}$, the inertia weight $\omega$, and the learning factors $c_1$ and $c_2$; then randomly initialize the positions and velocities of the particles.

Step 2: Check the boundary constraints on the initialized positions and velocities of the particles and calculate their fitness values. Then sort the fitness values and update the velocity and position of each particle according to Equations (4) and (2).

Step 3: Based on the updated velocities and positions, update the optimal positions and optimal fitness values of the individual particles and of the group. Then check whether the termination condition is met; if so, proceed to Step 4; otherwise, return to Step 2.

Step 4: Output the optimal solution of the problem.
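Steps 1 to 4 can be assembled into a minimal runnable loop. The sketch below is an illustration under stated assumptions, not a reference implementation: it minimizes a sphere function, sets $v_{\max}$ to 20% of the search range with $v_{\min} = -v_{\max}$, clips positions to the bounds as the boundary check, uses a fixed iteration budget as the termination condition, and updates the individual and global optima immediately after each particle moves. The function names `sphere` and `standard_pso` are hypothetical.

```python
import random


def sphere(x):
    """Unimodal test function with minimum 0 at the origin."""
    return sum(xi * xi for xi in x)


def standard_pso(f, dim, lb, ub, n_particles=30, t_max=200,
                 w_max=0.9, w_min=0.4, c1=2.0, c2=2.0, seed=0):
    """Minimize f over [lb, ub]^dim with linearly decreasing inertia-weight PSO."""
    rng = random.Random(seed)
    v_max = 0.2 * (ub - lb)      # assumption: velocity bound is 20% of the range
    v_min = -v_max
    # Step 1: random initial positions and velocities
    X = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(n_particles)]
    V = [[rng.uniform(v_min, v_max) for _ in range(dim)] for _ in range(n_particles)]
    P = [x[:] for x in X]        # individual optimal positions
    p_fit = [f(x) for x in X]    # their fitness values
    best = min(range(n_particles), key=lambda i: p_fit[i])
    g, g_fit = P[best][:], p_fit[best]   # global optimal position and fitness
    for t in range(t_max):               # fixed iteration budget as termination rule
        w = w_max - (w_max - w_min) * t / t_max
        for i in range(n_particles):
            # Step 2: velocity (Eq. 4) and position (Eq. 2) updates with bound checks
            for j in range(dim):
                r1, r2 = rng.random(), rng.random()
                vij = (w * V[i][j] + c1 * r1 * (P[i][j] - X[i][j])
                       + c2 * r2 * (g[j] - X[i][j]))
                V[i][j] = max(v_min, min(v_max, vij))
                X[i][j] = max(lb, min(ub, X[i][j] + V[i][j]))
            # Step 3: update individual and global optima
            fx = f(X[i])
            if fx < p_fit[i]:
                P[i], p_fit[i] = X[i][:], fx
                if fx < g_fit:
                    g, g_fit = X[i][:], fx
    return g, g_fit              # Step 4: output the best solution found
```

For example, `standard_pso(sphere, dim=5, lb=-5.0, ub=5.0)` returns the best position found and its fitness; because the seed is fixed, repeated calls give the same result.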

3. Improved PSO Algorithm

Building upon the standard PSO algorithm, this section introduces four enhancement strategies: composite chaotic mapping for population initialization, adaptive inertia weights, an elite particle learning mechanism, and an ordinary particle mutation strategy. Each of them enhances specific aspects of the standard algorithm, including population initialization, inertia weights, particle velocity and position updates, and cross-mutation. We provide a detailed description of the algorithmic formulas, parameters, and the significance of each improved strategy. Furthermore, a flowchart illustrating the improved adaptive PSO algorithm, referred to as APSO, along with the steps for its implementation, is also presented.

3.1. Population Initialization with Composite Chaotic Mapping

In the traditional framework of particle swarm optimization algorithms, the initialization of particle populations typically relies on random methods. However, this random initialization can sometimes result in a less uniform distribution of the population within the solution space, increasing the risk of the algorithm converging to a local optimum. This, in turn, negatively impacts both the search efficiency and solution accuracy of the algorithm. To address this issue, the diversity of the population can be enhanced through chaotic mapping. The fundamental principle involves employing specific mapping techniques of chaotic sequences to translate the optimization variables into chaotic variable space, thereby leveraging the characteristics of chaos to significantly enhance population diversity. Several common chaotic mapping expressions are shown in Table 1.

Table 1.

Common chaotic mappings.

Different chaotic mappings have distinct properties and affect optimization algorithms in different ways. Logistic mappings improve space traversal [27]; however, they may leave particles clustered in some regions and other regions empty. Sine mappings have a simpler structure and higher efficiency, which can improve search accuracy and optimization performance. Tent mappings distribute particles more uniformly, although their iterates may fall into a periodic sequence in later iterations. Cubic and Chebyshev mappings are highly sensitive to initial values, which results in an uneven distribution across the mapping region. Given these limitations of traditional chaotic mappings, researchers increasingly use improved chaotic mappings to initialize population-based intelligent optimization algorithms. Among these, the Logistic and Sine mappings are two of the most extensively studied. A composite chaotic mapping that integrates the Sine and Logistic mappings offers clear advantages in spatial traversal, population diversity, and distribution uniformity, while retaining a simple structure and high computational efficiency [28]. We therefore initialize the population with a composite chaotic mapping that combines the Sine and Logistic mappings, to improve search efficiency and solution accuracy. The definition of the composite chaotic mapping and the expression for the initial values are presented below:

where a, b, c are denoted as the control parameters with the range of values , , , respectively; and denote the random number and the initial value of particle i in the d-th dimension space of the initial position, respectively; and denote the lower bound and upper bound of the range of values; and the range of values of the particle is .
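As an illustration of chaotic initialization, the following Python sketch uses a widely cited Logistic-Sine composite map, $z_{k+1} = \left[r z_k (1 - z_k) + (4 - r)\sin(\pi z_k)/4\right] \bmod 1$ with $r \in (0, 4]$, and maps the chaotic values to the search range via $x = lb + z\,(ub - lb)$. This map is an assumed stand-in, not necessarily the composite mapping of Equations (5) and (6): its single parameter r does not correspond to the control parameters a, b, and c. Iterating one chaotic sequence per dimension across the particles, the burn-in length, and the function names are likewise assumptions.

```python
import math
import random


def logistic_sine(z, r=3.9):
    """One step of a Logistic-Sine composite map (assumed stand-in for Eq. (5)).

    Adds the Logistic term r*z*(1-z) to the Sine term (4-r)*sin(pi*z)/4
    and wraps the sum back into [0, 1).
    """
    return (r * z * (1.0 - z) + (4.0 - r) * math.sin(math.pi * z) / 4.0) % 1.0


def chaotic_init(n_particles, dim, lb, ub, r=3.9, burn_in=20, seed=None):
    """Build n_particles positions in [lb, ub]^dim from one chaotic sequence per dimension."""
    rng = random.Random(seed)
    z = [rng.uniform(0.01, 0.99) for _ in range(dim)]  # random starting values in (0, 1)
    for _ in range(burn_in):                            # discard transient iterates
        z = [logistic_sine(zd, r) for zd in z]
    population = []
    for _ in range(n_particles):
        z = [logistic_sine(zd, r) for zd in z]          # next chaotic value per dimension
        z = [zd if zd > 0.0 else rng.random() for zd in z]  # leave the fixed point at 0
        population.append([lb + zd * (ub - lb) for zd in z])
    return population
```

Because consecutive particles take consecutive values of the chaotic sequence, neighboring particles are decorrelated by the map's sensitivity to initial conditions rather than by a pseudo-random generator alone.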

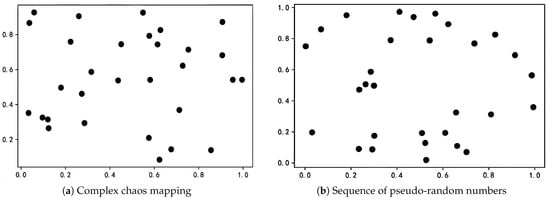

To assess the feasibility of the population initialized using composite chaotic mapping, we compare it to a population initialized with a pseudo-random sequence. Figure 1 illustrates the distribution of the composite chaotic mapping initialized population alongside that of the pseudo-random sequence initialized population.

Figure 1.

Initialized population distribution map using two methods.

Figure 1 shows that initializing the population with composite chaos combines the advantages of the Sine and Logistic mappings: the spatial probability density of the population is distributed more uniformly, and its spatial traversability is improved [29]. In contrast, the population initialized with a traditional random number sequence, despite its high degree of randomness, shows particle aggregation. Such aggregation can hamper the early iterations of the algorithm, causing premature convergence of the particles and potentially trapping them in local optimal solutions. Consequently, composite chaotic initialization increases the diversity of the population and improves the efficiency of the optimization search.

3.2. Adaptive Inertia Weights

Adaptive inertia weighting dynamically adjusts each particle's inertia weight according to its fitness value. This allows the algorithm to adapt its search strategy to different situations and thus locate the optimal solution more effectively. Specifically, when a particle's fitness value is lower (better) than the average fitness of the population, the strategy assigns it a smaller inertia weight to support a fine local search. Conversely, when a particle's fitness exceeds the average fitness of the population, it is assigned a larger inertia weight to strengthen global search. The expression for these adaptive weights is presented below:

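where $\omega_i^t$ denotes the adaptive inertia weight of particle i at the t-th iteration; $\omega_{\max}$ and $\omega_{\min}$ denote the maximum and minimum values of the inertia weight, respectively; and $f_i^t$, $f_{best}^t$, and $f_{avg}^t$ denote the current fitness value of particle i and the best and average fitness values of the population at the t-th iteration, respectively. $f_{avg}^t$ is computed using Equation (8):

$$f_{avg}^{t} = \frac{1}{N} \sum_{i=1}^{N} f_i^{t} \tag{8}$$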
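A commonly used fitness-based rule consistent with this description can be sketched as follows, assuming minimization (lower fitness is better). Particles at or below the average receive $\omega = \omega_{\min} + (\omega_{\max} - \omega_{\min})(f_i - f_{best})/(f_{avg} - f_{best})$, and the others receive $\omega_{\max}$. This piecewise form is an assumption and may differ from Equation (7) in detail; the fallback to $\omega_{\min}$ when all fitness values are equal is also an assumption.

```python
def adaptive_weights(fitness, w_max=0.9, w_min=0.4):
    """Per-particle inertia weights from current fitness values (minimization assumed)."""
    f_best = min(fitness)
    f_avg = sum(fitness) / len(fitness)   # average fitness, as in Eq. (8)
    weights = []
    for f in fitness:
        if f <= f_avg and f_avg > f_best:
            # better-than-average particles: interpolate between w_min and w_max
            w = w_min + (w_max - w_min) * (f - f_best) / (f_avg - f_best)
        elif f <= f_avg:
            # all particles share the same fitness: assume a fine local search
            w = w_min
        else:
            # worse-than-average particles: largest weight for global search
            w = w_max
        weights.append(w)
    return weights
```

For fitness values `[0.0, 1.0, 2.0, 5.0]` the average is 2.0, so the weights are about 0.4, 0.65, 0.9, and 0.9. The outlier 5.0 pulls the average up and pushes the third particle to the maximum weight, which illustrates the sensitivity to extreme values discussed next.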

The average fitness is strongly influenced by extreme values, which makes the inertia weight adjustments overly sensitive and frequent and can greatly reduce the stability and efficiency of the algorithm. Furthermore, the average fitness value depends heavily on the choice of fitness function, so it does not accurately represent individual differences within the population and conflates the search history of individuals with the current position information of the particles. As a result, the algorithm becomes more complex and harder to tune in practice. This problem is especially pronounced when most particles in the population cluster around a local optimum: the average fitness value can then be misleading and cause premature convergence to a local optimal solution. Therefore, although adjusting the inertia weights solely by the average fitness of the population can improve performance in certain contexts, it has several drawbacks. To address them, the particle population can be divided into segments according to fitness, and the proportion of particles in each segment can be used as the criterion for adjusting the inertia weights. This approach reflects the differences among individuals in the population more accurately while reducing reliance on specific fitness values. It allows more flexible and dynamic weight adjustments that balance global and local search, ultimately improving the accuracy and convergence speed of the algorithm. The following expression defines the adaptive inertia weights based on this proportional division of the particle swarm:

where denotes the degree of deviation of particle i from the optimal particle or the dominant particle swarm at the t-th iteration, denotes the upper limit of the inertia weight of the dominant particle swarm, and denotes the coefficient of , which is designed to make the adaptive inertia weight transition smoothly throughout the iteration process.

The degree of deviation of a particle from the optimal particle or the dominant particle population is calculated using Equation (10):

where denotes the cut-off value of the t-th iteration for dividing the dominant and disadvantaged particles, and denotes the median of the dominant particle’s adaptation value.

When , this can be written as the following equation:

Since is continuous, the segmented points have equal values, and the formula for is denoted below:

When , the inertia weight of particle i in the inferior particle swarm is shown below:

where is proportional to , so the maximum inertia weight of the inferior particle population is given by the following equation:

Let and get the expansion as

Further simplification yields the formula

3.3. Elite Particle Learning Mechanism

The algorithm classifies particles by their fitness values and designates the subset with the lowest fitness values, that is, the best-performing particles, as elite particles. Over successive iterations, these elite particles play a critical role in the search for the global optimal solution, enabling a more precise search for the optimal position and thereby improving the efficiency of the algorithm and the quality of its solutions. In the particle swarm optimization framework, the two main position vectors in the iterative process are the historical optimal position and the global optimal position, both of which are essential to the velocity update of the particles. The elite particle learning mechanism exploits both its own historical optimal position and the global optimal position to locate the global optimal solution efficiently and accurately. The velocity of an elite particle is calculated using the following formula:

The velocity update of an elite particle consists of three parts: the first is the previous velocity; the second is cross-learning between the individual optimal position $P_i^t$ and the global optimal position $G^t$; and the third is social cognition based on the global optimal position. The new velocity of the elite particle is the sum of these three parts.

The position update of the elite particles is calculated using Equation (22):

Elite particles improve the iteration speed and search accuracy of the algorithm by combining cross-learning and social learning. These strategies help prevent premature convergence, which would otherwise keep the swarm from reaching the global optimal solution. Cross-learning combines the strengths of different particles to generate new search directions while continuously tracking and retaining their historical optimal positions. The resulting adaptive adjustment of velocity and position increases the diversity of the particle swarm and helps particles move beyond local optima to explore a broader search space. Social learning, in turn, lets the algorithm exploit collective intelligence and information sharing, which accelerates convergence, damps oscillations caused by excessive velocity, and improves search efficiency and solution quality. Together, the two learning strategies balance the exploration and exploitation capabilities of the particles and improve the overall performance of the particle swarm algorithm.

3.4. Mutation Strategies for Ordinary Particles

In particle swarm optimization algorithms, ordinary particles often become trapped in local optima and depend heavily on the current best individual in the population, thereby neglecting unexplored regions of the solution space. This reduces the efficiency of the global search. The global best individual may itself be only a local optimum rather than the true optimal solution of the problem, so this dependence both slows the convergence of the algorithm and weakens the global search of the particles. Because particles differ from one another, different update rules can be applied to different particles, which increases the diversity of the population. Ordinary particles are therefore updated iteratively with the differential evolution (DE) algorithm. The DE/best/1 variant guides the search using the best individual in the population together with information from other individuals, which improves the efficiency and effectiveness of the search [30]. In this variant, two distinct individuals are randomly selected from the population and their difference is computed. This difference vector, scaled and combined with the best individual in the population, acts as the mutation applied to the target individual and produces a new mutated individual. The velocity update formula of DE/best/1 is given in Equation (23):

$$v_{ij}^{t+1} = g_{j}^{t} + F \left(x_{r_1 j}^{t} - x_{r_2 j}^{t}\right) \tag{23}$$

where $g_j^t$ denotes the global optimal position of the current population in dimension j; F denotes the differential scaling factor, which takes values in the interval [0, 2]; $r_1$ and $r_2$ are distinct random indices in the population that satisfy $r_1 \neq r_2 \neq i$; and t denotes the current iteration number.
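The DE/best/1 update of Equation (23) can be sketched as follows. The helper name `de_best_1`, the default F = 0.5, and the use of `random.sample` to draw two distinct indices different from i are illustrative choices; the population is assumed to contain at least three particles.

```python
import random


def de_best_1(X, i, g_best, F=0.5, rng=random):
    """DE/best/1 mutant for particle i: g_best + F * (X[r1] - X[r2]), with r1 != r2 != i."""
    candidates = [k for k in range(len(X)) if k != i]
    r1, r2 = rng.sample(candidates, 2)   # two distinct indices, neither equal to i
    return [g_best[j] + F * (X[r1][j] - X[r2][j]) for j in range(len(g_best))]
```

Because the mutant is anchored at the global best, the difference vector only controls how far the new velocity scatters around it; as the swarm contracts, the differences shrink and the update becomes a fine search near $g^t$.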

In the mutation stage of the DE/rand/1 strategy, three distinct individuals are randomly selected from the population. The difference between two of them is scaled and added to the third, generating a new mutated individual and thereby increasing the diversity of the population. Unlike the stochastic mutation based on composite chaos, the DE/rand/1 strategy is systematic and directed during the evolutionary process, which supports an efficient search and optimization of the particles [31]. The velocity update formula for DE/rand/1 is as follows:

$$v_{ij}^{t+1} = x_{r_1 j}^{t} + F \left(x_{r_2 j}^{t} - x_{r_3 j}^{t}\right) \tag{24}$$

Here, $r_1$, $r_2$, and $r_3$ are the indices of three distinct particles randomly selected from the current population that satisfy $r_1 \neq r_2 \neq r_3 \neq i$. The DE/best/1 and DE/rand/1 variants are thus used in the velocity update of ordinary particles. During the iterations, if the absolute value of the ratio of a particle's current fitness to its previous fitness is greater than or equal to 0.9, its fitness has changed little and it may be trapped in a local optimum, so it is marked as a mutated particle. Each mutated particle is assigned a predetermined crossover mutation probability CR, which determines which gene loci are inherited directly from the parent individual and which undergo mutation, thereby increasing the diversity of the population. The crossover mutation is defined as follows:

$$u_{ij}^{t} = \begin{cases} v_{ij}^{t+1}, & \text{rand}_j \le CR \ \text{or} \ j = d \\ x_{ij}^{t}, & \text{otherwise} \end{cases} \tag{25}$$

where $u_{ij}^t$ denotes the new individual after crossover mutation, $\text{rand}_j$ denotes a random number uniformly distributed on [0, 1], and d denotes an integer chosen at random from [1, J], which ensures that at least one element of the new individual comes from the mutant vector $v_i^{t+1}$. The position of a mutated particle is then updated as follows:

Overall, these strategies aim to balance the exploration and exploitation of the particles by strengthening their global search capability. This improves the performance of the algorithm and enables the particles to locate the optimal solution within the global solution space more accurately.
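The DE/rand/1 update of Equation (24), the 0.9 fitness-ratio test for marking stagnating particles, and the crossover step described for Equation (25) can be sketched together as follows. The crossover is written as standard DE binomial crossover, which matches the description of inheriting some components from the parent while taking at least one from the mutant. The function names, the default F and CR values, and treating a zero previous fitness as stagnation are assumptions.

```python
import random


def de_rand_1(X, i, F=0.5, rng=random):
    """DE/rand/1 mutant for particle i: X[r1] + F * (X[r2] - X[r3]), indices distinct and != i."""
    candidates = [k for k in range(len(X)) if k != i]
    r1, r2, r3 = rng.sample(candidates, 3)   # needs at least four particles
    return [X[r1][j] + F * (X[r2][j] - X[r3][j]) for j in range(len(X[i]))]


def is_stagnating(f_curr, f_prev, threshold=0.9):
    """Mark a particle for mutation when |f_curr / f_prev| >= threshold."""
    if f_prev == 0:
        return True   # assumption: a zero previous fitness counts as stagnation
    return abs(f_curr / f_prev) >= threshold


def binomial_crossover(x, v, CR=0.9, rng=random):
    """Take component j from mutant v if rand_j <= CR or j == d, otherwise from parent x."""
    J = len(x)
    d = rng.randrange(J)   # this component always comes from the mutant
    return [v[j] if (rng.random() <= CR or j == d) else x[j] for j in range(J)]
```

With CR = 0, exactly one component (index d) comes from the mutant; with CR = 1, the new individual equals the mutant. Intermediate values trade off how much of the parent is preserved.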

3.5. Steps of the Improved Algorithm

Step 1: Set the initialization parameters of the improved algorithm, including the particle swarm size (population size), the search dimension (dimensions), the upper and lower bounds of the search space (upper bound and lower bound), the maximum number of iterations (max iterations), and the maximum and minimum velocities $v_{\max}$ and $v_{\min}$. Then initialize the population particles with the composite chaotic mapping according to Equations (5) and (6).

Step 2: Verify the boundary conditions and compute the fitness values based on the initialized positions and velocities of the individual particles. Subsequently, sort the fitness values and update the velocities and positions of the particles in alignment with Equations (2) and (4).

Step 3: Organize the particle population into subsets based on the magnitude of the fitness values, categorizing 15% of the particles with the lowest fitness values as elite particles, 15% with the highest fitness values as inferior particles, and the remaining 70% as ordinary particles.

Step 4: Dynamically adjust the inertia weight in the velocity Formula (4) using the adaptive inertia weight scheme of Section 3.2.

Step 5: Update each subset separately. For elite particles, apply Formulas (21) and (22) to update the particle velocity and position. For ordinary particles, apply Formula (23) or (24) to update the particle velocity. Apply the crossover mutation Formula (25) to particles with larger fitness values to produce new individuals, and use Formula (26) to update the positions of the mutated particles.

Step 6: Evaluate the fitness of the particles after updating their velocities and positions, and update the optimal positions and fitness values of the individuals and the group. Then determine whether the termination condition is met. If it is, proceed to Step 7; otherwise, return to Step 3.

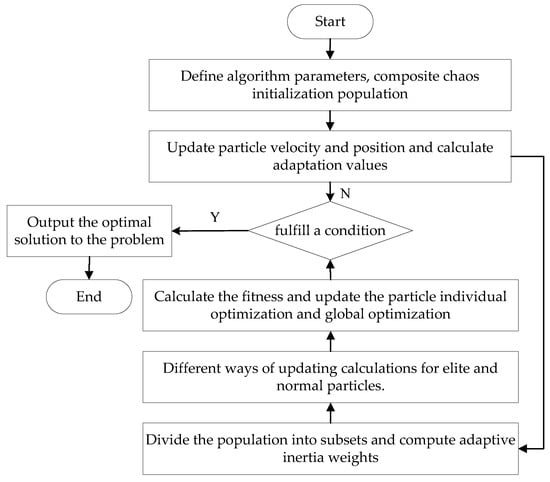

Step 7: Output the optimal solution to the problem. The flowchart of the improved algorithm is shown in Figure 2.

Figure 2.

Flowchart of the improved algorithm APSO.

3.6. Complexity Analysis

Building on the classical particle swarm optimization algorithm, APSO introduces several improvements: population initialization by composite chaotic mapping, adaptive inertia weights, an elite particle learning mechanism, and a mutation strategy for ordinary particles. These measures aim to improve the search efficiency and solution quality of the algorithm and to avoid premature convergence to local optima.

Algorithm 1 shows the computational framework of APSO. Lines 4–6 and 7–18 correspond to the parameter initialization stage and the iterative optimization stage, respectively. The first stage sets parameters such as the population size, search-space dimension, maximum number of iterations, learning factors, velocity bounds, and position bounds. The second stage divides the particles into subpopulations, dynamically adjusts their adaptive inertia weights, and then iterates over all particles to update their velocities and positions, compute fitness values, and update the individual and global best solutions. The time bottleneck lies in lines 7–18, whose two nested for loops dominate the overall time complexity.

Algorithm 1.

Computational framework for the algorithm APSO.
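Because the body of Algorithm 1 is not reproduced here, the following Python sketch mirrors only its two-stage structure: a parameter initialization stage followed by an iterative stage in which an outer loop over iterations and an inner loop over particles dominate the running time. Uniform random initialization replaces the chaotic initialization, a fitness-based inertia weight is applied to elite and ordinary particles alike, and a Gaussian perturbation around the global best stands in for the mutation of the worst 15% of particles. These are simplifying assumptions, not Formulas (21)–(26), and the function `apso_skeleton` is hypothetical.

```python
import numpy as np


def apso_skeleton(objective, dim, lower, upper, pop_size=30, max_iter=200,
                  c1=1.5, c2=1.5, w_min=0.4, w_max=0.9, seed=0):
    """Hypothetical two-stage skeleton; group-specific rules are placeholders."""
    rng = np.random.default_rng(seed)
    # --- Stage 1: parameter and population initialization ---
    v_max = 0.2 * (upper - lower)                     # assumed velocity bound
    X = rng.uniform(lower, upper, size=(pop_size, dim))
    V = rng.uniform(-v_max, v_max, size=(pop_size, dim))
    fit = np.array([objective(x) for x in X])
    pbest, pbest_fit = X.copy(), fit.copy()
    g = int(np.argmin(fit))
    gbest, gbest_fit = X[g].copy(), float(fit[g])
    n_inferior = max(1, round(0.15 * pop_size))
    history = []                                      # best-so-far value per iteration
    # --- Stage 2: iterative optimization ---
    for _ in range(max_iter):                         # outer loop: iterations
        inferior = set(np.argsort(fit)[-n_inferior:].tolist())
        f_min, f_avg = fit.min(), fit.mean()
        for i in range(pop_size):                     # inner loop: particles
            if i in inferior:
                # placeholder mutation: resample near the global best
                X[i] = gbest + rng.normal(0.0, 0.05 * (upper - lower), dim)
            else:
                # fitness-based adaptive inertia weight (stand-in formula)
                if fit[i] > f_avg or f_avg == f_min:
                    w = w_max
                else:
                    w = w_min + (w_max - w_min) * (fit[i] - f_min) / (f_avg - f_min)
                r1, r2 = rng.random(dim), rng.random(dim)
                V[i] = (w * V[i] + c1 * r1 * (pbest[i] - X[i])
                        + c2 * r2 * (gbest - X[i]))
                V[i] = np.clip(V[i], -v_max, v_max)
                X[i] = X[i] + V[i]
            X[i] = np.clip(X[i], lower, upper)        # boundary handling
            fit[i] = objective(X[i])
            if fit[i] < pbest_fit[i]:                 # update personal best
                pbest[i], pbest_fit[i] = X[i].copy(), fit[i]
                if fit[i] < gbest_fit:                # update global best
                    gbest, gbest_fit = X[i].copy(), float(fit[i])
        history.append(gbest_fit)
    return gbest, gbest_fit, history
```

Excluding the cost of the objective itself, each inner-loop pass is O(D) per particle, so this skeleton runs in O(T · N · D) time for T iterations, N particles, and D dimensions, plus an O(N log N) sort per iteration. The returned `history` is the kind of best-so-far series plotted as a convergence curve.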

4. Experiments

The CEC benchmark functions provide a standardized framework for assessing the efficiency of optimization algorithms at various scales [32]. Their mathematical formulations are complex and diverse, which makes them effective for measuring and evaluating the optimization performance of algorithms, and comparative analyses on them help researchers tune algorithms to achieve better results. To thoroughly assess the proposed algorithm, the benchmark functions with diverse characteristics listed in Table 2 are used for testing [32]. These functions cover unimodal, multimodal, and composite types and are well suited to verifying key indicators of an algorithm, such as convergence speed, local search accuracy, global exploration ability, and high-dimensional adaptability.

Table 2.

Fourteen representative benchmarking functions, where the symbols S, M, B, T, and V denote the shape of the function as unimodal, multimodal, bowl, plate, and valley, respectively, and the optimal values of all the functions are zero.
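Table 2 itself is not reproduced here. For illustration only, the sketch below implements three classical functions with shapes of the kinds named in the caption (the bowl-shaped Sphere, the valley-shaped Rosenbrock, and the multimodal Rastrigin), each with minimum value zero; we do not claim these are the exact functions listed in Table 2.

```python
import numpy as np


def sphere(x):
    """Unimodal, bowl-shaped; minimum 0 at x = 0."""
    x = np.asarray(x, dtype=float)
    return float(np.sum(x ** 2))


def rosenbrock(x):
    """Narrow curved valley; minimum 0 at x = (1, ..., 1)."""
    x = np.asarray(x, dtype=float)
    return float(np.sum(100.0 * (x[1:] - x[:-1] ** 2) ** 2 + (1.0 - x[:-1]) ** 2))


def rastrigin(x):
    """Highly multimodal; minimum 0 at x = 0."""
    x = np.asarray(x, dtype=float)
    return float(10.0 * x.size + np.sum(x ** 2 - 10.0 * np.cos(2.0 * np.pi * x)))
```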

This study demonstrates the overall performance of the proposed algorithm on randomly selected benchmark functions, comparing it with the standard PSO algorithm [26], several enhanced PSO algorithms (NIPSO [27], IMPSO [28], CLPSO [2], and IFEHPSO [29]), and other metaheuristic algorithms (GWO [33], WOA [34], SSA [35], and BOA [33]). To ensure reliable simulation results and a fair comparison, the population size and the number of iterations were fixed at 30 and 200, respectively, for all algorithms, and each algorithm was run independently 30 times on each benchmark function. The average, standard deviation, and optimal value of the results are used as evaluation metrics.
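A minimal sketch of this evaluation protocol: run an optimizer 30 times with independent seeds and report the mean, standard deviation, and best (minimum) final value. `random_search` is a hypothetical stand-in optimizer with the same budget of 30 × 200 evaluations, and the population standard deviation (`ddof=0`) is an assumption, since the estimator used is not stated.

```python
import numpy as np


def summarize_runs(final_values):
    """Mean, standard deviation (ddof=0, assumed), and best of per-run results."""
    r = np.asarray(final_values, dtype=float)
    return float(r.mean()), float(r.std()), float(r.min())


def random_search(objective, seed, dim=5, evals=30 * 200, lower=-10.0, upper=10.0):
    """Hypothetical stand-in optimizer: best of uniformly sampled points."""
    rng = np.random.default_rng(seed)
    samples = rng.uniform(lower, upper, size=(evals, dim))
    return min(objective(x) for x in samples)


def run_trials(optimizer, objective, n_runs=30):
    """Run the optimizer n_runs times with independent seeds and summarize."""
    finals = [optimizer(objective, seed=s) for s in range(n_runs)]
    return summarize_runs(finals)
```

Replacing `random_search` with any optimizer that accepts an objective and a `seed` keyword yields the three statistics reported per function.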

The experiments were conducted on a server equipped with a CPU (Intel Core i7, 3.20 GHz, 12 cores, 24 threads) and 32 GiB of DDR4 RAM (3600 MHz). The operating system was Windows 10, the integrated development environment was Spyder 5.4, and the experimental programs were written in Python 3.12.

4.1. Comparative Analysis of the Enhanced Algorithm and Standard PSO Algorithm

The optimization effectiveness of an algorithm can be evaluated through the mean and optimal values, while the standard deviation indicates its stability. Table 3 presents the results of the enhanced PSO algorithm compared with those of the standard PSO algorithm.

Table 3.

Comparison of the enhanced algorithm APSO and the standard algorithm PSO in terms of the average and optimal values of the solutions.

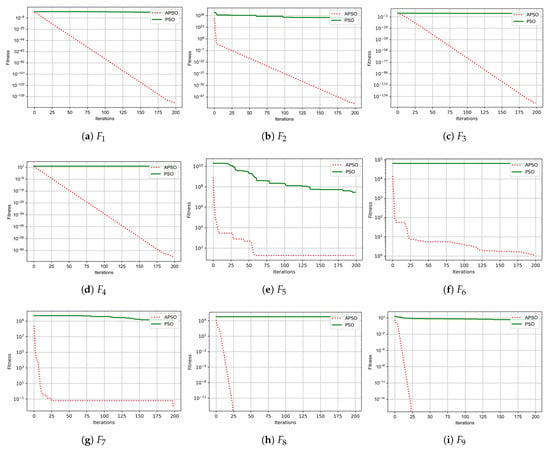

The comparative experiments show a marked improvement in the optimization performance of the enhanced PSO algorithm over the standard PSO algorithm. On the test functions, the optimal values achieved by the improved algorithm consistently approach the theoretical optima, indicating effective optimization. As shown in Table 3, both algorithms exhibit a standard deviation of 0.00E+00, suggesting stable performance. Except for two functions on which the relative gap between the results is small, APSO substantially improves solution accuracy on the remaining functions, which further supports the efficacy of the improvements. The convergence curves below compare APSO and the conventional PSO on various test functions.

Figure 3 shows that, on two of the functions, APSO converges at a rate comparable to that of the standard PSO, although it still requires fewer iterations to converge. On the remaining test functions, APSO converges markedly faster than the standard PSO, giving a more pronounced overall convergence advantage.

Figure 3.

Convergence curves of the algorithms APSO and PSO for 200 iterations on nine functions.

4.2. Comparative Analysis of the Enhanced Algorithm and the Alternative PSO Optimization Techniques

To evaluate the overall performance of the enhanced algorithm, we tested it on 10 benchmark functions; Table 4 reports the mean, standard deviation, and optimal value obtained by each algorithm on each function. APSO performs best on most of the test functions and reaches the global minimum on some of them. In terms of optimal values, APSO ranks first on nine functions and second on another. Its mean, standard deviation, and optimal value are better than those of the other algorithms in most cases, with particularly strong optimal values and small standard deviations that reflect the stability of the improved algorithm. The optimal values obtained by APSO lie close to the theoretical optima of the test functions, which illustrates the effectiveness and feasibility of the improved search. Notably, several of the comparison PSO algorithms obtain identical average and optimal values on one function, suggesting that they tend to become trapped in a local optimum there.

Table 4.

Performance comparison of the improved algorithm APSO with other representative algorithms on 10 functions in terms of the average, standard deviation, and optimal value of the solutions.

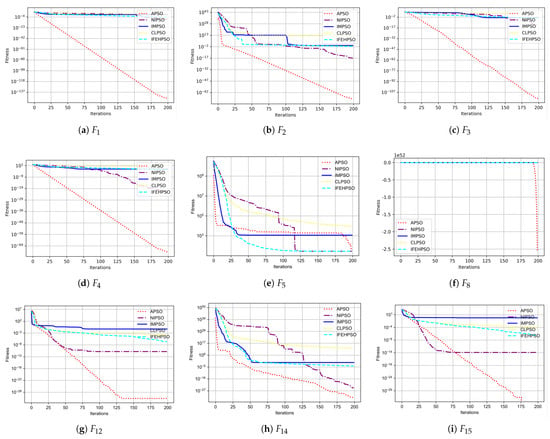

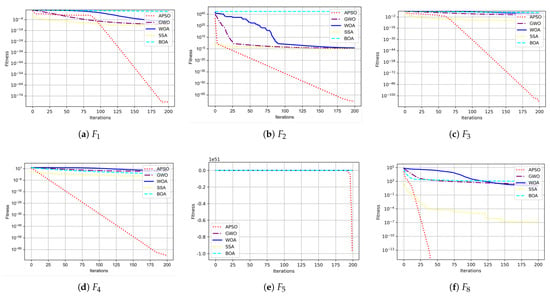

Figure 4 compares the convergence of APSO with that of NIPSO, IMPSO, CLPSO, and IFEHPSO on various test functions. The convergence curves show that, in most cases, APSO converges significantly faster than the other PSO variants over the 200 iterations and quickly reaches the optimal level. APSO converges at nearly the same rate on four of these functions, in each case faster than the other algorithms. This suggests that the improvements effectively accelerate convergence.

Figure 4.

Convergence curves of the proposed algorithm APSO and four other representative algorithms for 200 iterations on nine functions.

4.3. A Comparison of Our Algorithm APSO with Other Metaheuristic Algorithms

Table 5 compares APSO with other metaheuristic algorithms; APSO ranks among the top two in terms of mean, standard deviation, and optimal value. Notably, APSO reaches an optimal value of 0 on three test functions, the best result for those functions, which demonstrates its accuracy and convergence efficiency in locating the global optimum. Although SSA obtains better optimal values than APSO on three functions, APSO performs better overall, ranking first in average results on 7 of the 10 functions.

Table 5.

Comparison of our improved algorithm APSO and other metaheuristic algorithms on ten functions in terms of the average, standard deviation, and optimal value of the solutions.

Figure 5 shows the convergence curves of APSO and the other metaheuristic algorithms. In most cases, the curve of APSO descends considerably faster than those of the other algorithms, indicating a faster convergence rate and confirming the effectiveness of the enhancements.

Figure 5.

Convergence curves of the proposed algorithm APSO and the four other algorithms for 200 iterations on ten functions.

4.4. Higher-Dimensional Function Analysis

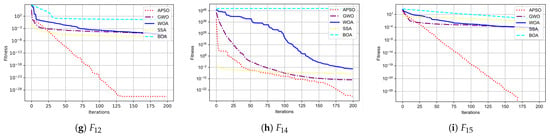

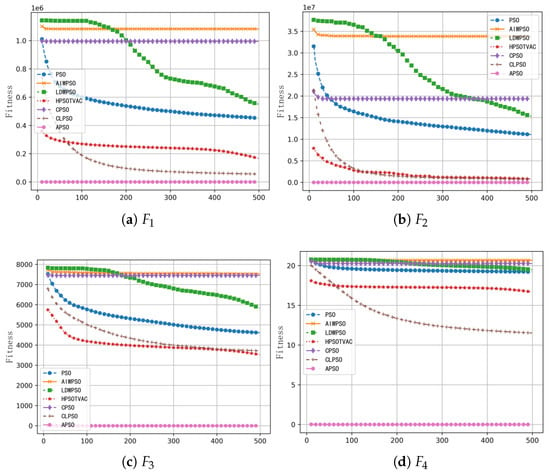

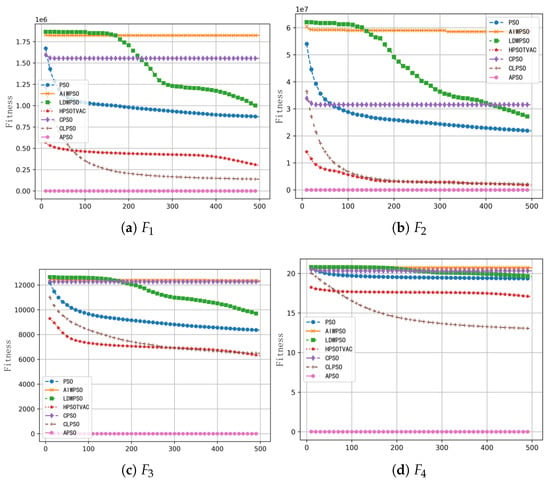

To verify the performance of APSO on high-dimensional optimization problems, we compared it with the standard particle swarm algorithm and five typical improved algorithms, namely PSO, AIWPSO, LDWPSO, HPSOTVAC, CPSO, and CLPSO. Two classical unimodal functions and two multimodal functions are selected for the experimental evaluation, with the particle dimension set to 500 and 800. The population size and the number of iterations are the same for all algorithms. To avoid the interference of randomness, each algorithm is run 30 times independently. Table 6 gives the statistical results, including the average value, standard deviation, maximum value, and optimal value, with the best results in bold. Figures 6 and 7 show the convergence curves of the seven algorithms for dimensions 500 and 800, respectively.

Table 6.

The experimental results of four representative benchmarking functions on dimensions of 500 and 800, respectively.

Figure 6.

Convergence curves of the seven testing algorithms for dimensions of 500.

Figure 7.

Convergence curves of the seven testing algorithms for dimensions of 800.

The data in Table 6 and Figures 6 and 7 show that APSO outperforms the other algorithms on high-dimensional optimization problems, converging accurately with good stability and robustness on both unimodal and multimodal functions. The overall performance of APSO is therefore better than that of the comparison algorithms, making it well suited to high-dimensional optimization problems.

5. Engineering Application

With the rapid development of Industry 4.0 and intelligent manufacturing, the efficiency of particle swarm optimization makes it a source of innovative solutions for energy saving, emission reduction, and resource optimization in industrial manufacturing, and it has shown significant application value in many industrial fields. In pressure vessel design for the chemical and petroleum industries, parameters such as wall thickness and the diameter-to-height ratio are optimized to reduce material costs while ensuring safety. In industrial spring manufacturing, multi-objective optimization of parameters such as wire diameter and the number of active coils improves both fatigue life and weight reduction. In automobile reducer production, key parameters such as gear modulus and tooth width can be optimized to reduce weight and improve transmission efficiency. The algorithm can also be applied to multimodal non-separable problems, black-box optimization with moderate variable coupling, and continuous or semi-continuous search spaces. These typical applications show that particle swarm optimization contributes to the development of industry and intelligent manufacturing.

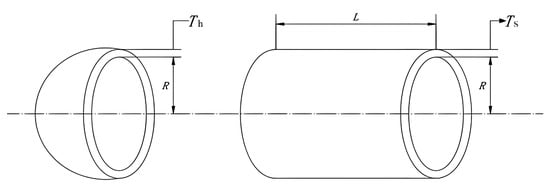

5.1. Pressure Vessel Design

In the pressure vessel design problem, the objective is to minimize the total cost of manufacturing the vessel, including material and welding costs, while ensuring that the vessel operates normally. The vessel is a cylinder whose two ends are capped by hemispherical heads. The problem involves four design variables: the shell thickness $T_s$, the head thickness $T_h$, the inner radius R, and the length L of the cylindrical section excluding the heads. A schematic diagram of the pressure vessel is shown in Figure 8.

Figure 8.

Structure diagram of pressure vessel.

The mathematical model of the pressure vessel design is as follows:

- Design variable: ;

- Objective function: ;

- Constraint condition: , , , .

- Variable range: , , , .

The fitness function is the sum of the objective function and a penalty function, and it serves as the basis for evaluating the feasibility of a solution. When a solution violates the constraints, a penalty is added to its fitness value; making this penalty large forces the search to stay within the feasible region during the iterations. In this way, the constraints are transformed into penalty terms in the fitness function, so that the final design satisfies all the constraints while minimizing cost. The objective function itself evaluates the cost of a design.
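The formulas in the mathematical model above were lost in extraction. The sketch below therefore uses the standard pressure vessel formulation widely used in the metaheuristics literature, which we assume, but cannot confirm, matches the paper's model. The static quadratic penalty and its coefficient `1e6` are hypothetical choices illustrating "objective plus penalty".

```python
import numpy as np


def pressure_vessel_cost(x):
    """Standard cost model; x = (Ts, Th, R, L)."""
    ts, th, r, l = x
    return (0.6224 * ts * r * l + 1.7781 * th * r ** 2
            + 3.1661 * ts ** 2 * l + 19.84 * ts ** 2 * r)


def pressure_vessel_constraints(x):
    """Standard constraints written as g_i(x) <= 0."""
    ts, th, r, l = x
    return np.array([
        -ts + 0.0193 * r,                                       # shell thickness
        -th + 0.00954 * r,                                      # head thickness
        -np.pi * r ** 2 * l - (4.0 / 3.0) * np.pi * r ** 3 + 1_296_000.0,  # volume
        l - 240.0,                                              # length limit
    ])


def penalized_fitness(x, penalty=1e6):
    """Fitness = cost + penalty * (sum of squared constraint violations)."""
    violation = np.sum(np.maximum(pressure_vessel_constraints(x), 0.0) ** 2)
    return pressure_vessel_cost(x) + penalty * violation
```

For a feasible design the penalty term vanishes and the fitness equals the cost; any violation raises the fitness sharply, steering particles back toward the feasible region.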

To solve this constrained optimization problem, we compare the improved adaptive particle swarm optimization algorithm (APSO) with GWO, WOA, SSA, and BOA. For reliability, all algorithms were evaluated on the same test function. To eliminate performance fluctuations, each algorithm was run 30 times independently, and the average of its optimal solutions was taken as the performance index. Table 7 shows the detailed comparative results.

Table 7.

Optimal solution of each algorithm in pressure vessel design.

According to the optimal costs in Table 7, APSO obtains the best design for the pressure vessel problem and significantly reduces the total cost: the cost drops from 7167 to 6352, a saving of about 815 (approximately 11.4%). These results show that APSO is feasible and effective for such practical engineering applications and outperforms the comparable algorithms in optimizing the design parameters of pressure vessels.

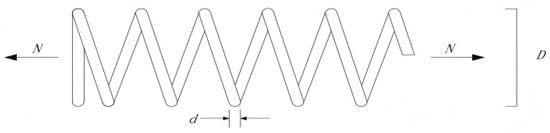

5.2. Tension Spring Design

The tension/compression spring problem is a classic structural engineering design problem. Its objective is to minimize the weight of the spring subject to four constraints, on deflection, shear stress, surge frequency, and outer diameter, which ensure safety and stability. The design variables are the wire diameter d, the mean coil diameter D, and the number of active coils N. The structure of the tension/compression spring is illustrated in Figure 9.

Figure 9.

Schematic diagram of tension spring.

The mathematical model of the design is as follows:

- Design variable: ;

- Objective function: ;

- Constraint condition: , , , .

- Variable range: , , .

In this design, the ranges of d, D, and N bound the search space and serve as the lower and upper bounds of each particle. Because a candidate (d, D, N) may violate the constraints, a penalty function is added to the fitness function.
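The model formulas above did not survive extraction, so this sketch uses the standard tension/compression spring formulation from the metaheuristics literature, assumed (not confirmed) to match the paper's model, including the commonly used variable ranges in `LOWER` and `UPPER`. The static quadratic penalty with coefficient `1e6` is a hypothetical choice.

```python
import numpy as np


def spring_weight(x):
    """Spring weight (N + 2) * D * d^2; x = (d, D, N)."""
    d, D, N = x
    return (N + 2.0) * D * d ** 2


def spring_constraints(x):
    """Deflection, shear stress, surge frequency, outer diameter: g_i(x) <= 0."""
    d, D, N = x
    return np.array([
        1.0 - D ** 3 * N / (71785.0 * d ** 4),
        (4.0 * D ** 2 - d * D) / (12566.0 * (D * d ** 3 - d ** 4))
        + 1.0 / (5108.0 * d ** 2) - 1.0,
        1.0 - 140.45 * d / (D ** 2 * N),
        (D + d) / 1.5 - 1.0,
    ])


LOWER = np.array([0.05, 0.25, 2.0])     # bounds on d, D, N (assumed standard ranges)
UPPER = np.array([2.0, 1.3, 15.0])


def spring_fitness(x, penalty=1e6):
    """Weight plus a static quadratic penalty on constraint violations."""
    violation = np.sum(np.maximum(spring_constraints(x), 0.0) ** 2)
    return spring_weight(x) + penalty * violation
```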

In order to verify the performance of the algorithm, we compared the improved algorithm with other metaheuristic algorithms such as GWO, WOA, SSA and BOA. To ensure the fairness of verification, each algorithm was run 30 times independently, and the minimum of the running results was taken as the optimal solution. The experimental results are shown in Table 8.

Table 8.

Optimal solution of the algorithm in the design of tension and compression springs.

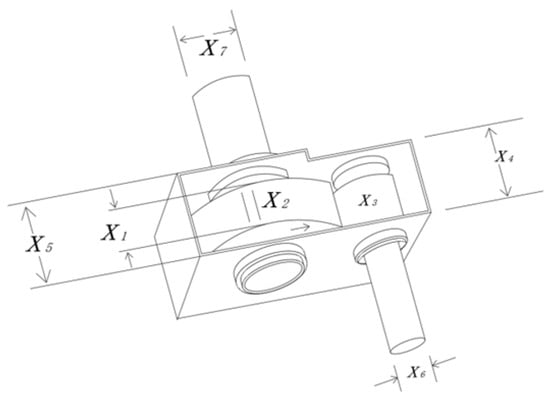

5.3. Reducer Optimization

The speed reducer optimization problem aims to minimize the weight of the reducer subject to constraints on the bending stress of the gear teeth, the lateral deflection of the shafts, the surface pressure, and the stresses in the shafts. As shown in Figure 10, the reducer has seven design variables: the face width b, the module of the teeth m, the number of teeth on the pinion z, the length $l_1$ of the first shaft between bearings, the length $l_2$ of the second shaft between bearings, the diameter $d_1$ of the first shaft, and the diameter $d_2$ of the second shaft. Solving this constrained problem yields the values of the design variables and the corresponding weight of the reducer.

Figure 10.

Schematic diagram of the reducer.

The mathematical model of the design is as follows:

- Design variable: ;

- Objective function: ;

- Constraint condition:,, ,,,,,,,,.

- Variable range: .

In the speed reducer design, the seven design variables are optimized to minimize the weight of the reducer, and their value ranges serve as the upper and lower bounds of the particle swarm search. Because a solution may violate the constraints, a large penalty term is added to the fitness function so that the particles are driven toward solutions satisfying all the conditions during the search.
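As with the other engineering problems, the model formulas above were lost, so this sketch uses the standard 11-constraint speed reducer formulation from the metaheuristics literature, assumed (not confirmed) to match the paper's model. The number of teeth `z` is treated as continuous here, although many studies round it to an integer; the penalty coefficient `1e6` is a hypothetical choice.

```python
import numpy as np


def reducer_weight(x):
    """Standard weight model; x = (b, m, z, l1, l2, d1, d2)."""
    b, m, z, l1, l2, d1, d2 = x
    return (0.7854 * b * m ** 2 * (3.3333 * z ** 2 + 14.9334 * z - 43.0934)
            - 1.508 * b * (d1 ** 2 + d2 ** 2)
            + 7.4777 * (d1 ** 3 + d2 ** 3)
            + 0.7854 * (l1 * d1 ** 2 + l2 * d2 ** 2))


def reducer_constraints(x):
    """Eleven standard constraints written as g_i(x) <= 0."""
    b, m, z, l1, l2, d1, d2 = x
    return np.array([
        27.0 / (b * m ** 2 * z) - 1.0,                  # bending stress of teeth
        397.5 / (b * m ** 2 * z ** 2) - 1.0,            # surface stress
        1.93 * l1 ** 3 / (m * z * d1 ** 4) - 1.0,       # deflection of shaft 1
        1.93 * l2 ** 3 / (m * z * d2 ** 4) - 1.0,       # deflection of shaft 2
        np.sqrt((745.0 * l1 / (m * z)) ** 2 + 16.9e6) / (110.0 * d1 ** 3) - 1.0,
        np.sqrt((745.0 * l2 / (m * z)) ** 2 + 157.5e6) / (85.0 * d2 ** 3) - 1.0,
        m * z / 40.0 - 1.0,
        5.0 * m / b - 1.0,
        b / (12.0 * m) - 1.0,
        (1.5 * d1 + 1.9) / l1 - 1.0,
        (1.1 * d2 + 1.9) / l2 - 1.0,
    ])


LOWER = np.array([2.6, 0.7, 17.0, 7.3, 7.3, 2.9, 5.0])   # assumed standard bounds
UPPER = np.array([3.6, 0.8, 28.0, 8.3, 8.3, 3.9, 5.5])


def reducer_fitness(x, penalty=1e6):
    """Weight plus a static quadratic penalty on constraint violations."""
    violation = np.sum(np.maximum(reducer_constraints(x), 0.0) ** 2)
    return reducer_weight(x) + penalty * violation
```

Particles would be initialized and clipped within `LOWER` and `UPPER`, and `reducer_fitness` used as the value to minimize.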

In order to verify the efficiency of our algorithm, the improved algorithm is compared with other metaheuristic algorithms such as GWO, WOA, SSA and BOA. Each algorithm was run 30 times independently, and the average of the minimum values of the results of each run was taken as the optimal value. The experimental results are shown in Table 9.

Table 9.

The optimal values of various swarm optimization algorithms for reducer design.

According to Table 9, the optimal solution obtained by APSO for the reducer problem is better than those of the other metaheuristic algorithms. Its advantage is relatively limited, as the optimal value differs from the second-best value by only about one-thousandth, but it still has practical value as an alternative scheme for engineering design. Compared with the baseline algorithms, APSO optimizes the key design parameters and reduces the weight of the reducer, showing a slight advantage and some application potential for this type of engineering optimization problem.

6. Conclusions and Future Work

With the rapid advancement of swarm intelligence optimization, researchers have proposed numerous novel and effective population-based methods and achieved significant results in related optimization research. Improvement strategies for these algorithms continue to evolve, highlighting the great potential of swarm intelligence algorithms. This paper focuses on particle swarm optimization, enhancing it with four improvement strategies to obtain an adaptive selection PSO algorithm. To validate its effectiveness and applicability, we compared the improved algorithm with the standard particle swarm algorithm, other PSO variants, and other metaheuristic algorithms on multiple benchmark functions. The experimental results indicate that our algorithm performs better in accuracy, stability, and convergence.

To verify its practical effectiveness, we applied the improved algorithm to real-world engineering problems and compared its results with the optimal solutions obtained by other metaheuristic algorithms. The results indicate that our algorithm surpasses the comparison algorithms in both solution quality and efficiency. The enhancements significantly strengthen its optimization capability, reducing the computational burden, accelerating convergence, and providing robust performance, which enables better solutions to various engineering problems.

Although the performance of the algorithm has been enhanced, there is still room for further improvement. For example, mechanisms from genetic algorithms and simulated annealing could be integrated to enhance the global search capability, or the algorithm could be combined with deep learning [36], recurrent neural networks [37,38], and graph neural networks [39] to optimize hyperparameters; subspace decomposition, parallel computing, and sparse coding could mitigate the curse of dimensionality and improve computational efficiency; and reinforcement learning or fuzzy logic could adjust the inertia weights and acceleration coefficients dynamically to balance local and global exploration.

Author Contributions

Conceptualization, Z.J. and L.-B.H.; Methodology, D.Z. and X.-Y.L.; Validation, Z.J. and X.-Y.L.; Formal analysis, L.-B.H.; Investigation, D.Z.; Writing—original draft, Z.J. and X.-Y.L.; Writing—review & editing, L.-B.H.; Visualization, D.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Scientific Research Start-up Funds of Guangdong Ocean University under Grant 060302112201; the Scientific Research Innovation Team Project of Guangxi Minzu Normal University under Grant KYTD202406; and the Integrated Characteristic Construction Project of “Institution (Research Institute)-Discipline (Degree Program)-Team (Think Tank)-Innovation Platform-Incubation Project” at Guangxi Minzu Normal University under Grant 1024/10300130.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Acknowledgments

We thank the reviewers for their valuable comments that have significantly improved the quality of this article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95-International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; IEEE: Piscataway, NJ, USA, 1995; Volume 4, pp. 1942–1948.

- Cai, B.; Zhu, X.; Qin, Y. Parameters optimization of hybrid strategy recommendation based on particle swarm algorithm. Expert Syst. Appl. 2021, 168, 114388.

- Miramontes, I.; Melin, P.; Prado-Arechiga, G. Particle Swarm Optimization of Modular Neural Networks for Obtaining the Trend of Blood Pressure. In Intuitionistic and Type-2 Fuzzy Logic Enhancements in Neural and Optimization Algorithms; Springer: Berlin/Heidelberg, Germany, 2020; pp. 225–236.

- Pu, Q.; Gan, J.; Qiu, L.; Duan, J.; Wang, H. An efficient hybrid approach based on PSO, ABC and k-means for cluster analysis. Multim. Tools Appl. 2022, 81, 19321–19339.

- Yuan, X.; Tian, H.; Zhang, W.; Zhao, H.; Zhao, Z.; Zhang, N. CA-PSO: A Combinatorial Auction and Improved Particle Swarm Optimization based Computation Offloading Approach for E-Healthcare. In Proceedings of the ICC 2022-IEEE International Conference on Communications, Seoul, Republic of Korea, 16–20 May 2022; pp. 3850–3855.

- Batista, G.C.; Silva, W.L.S.; de Oliveira, D.L.; Saotome, O. Automatic speech patterns recognition of commands using SVM and PSO. Multim. Tools Appl. 2019, 78, 31709–33173.

- Wu, S. Simulation of classroom student behavior recognition based on PSO-KNN algorithm and emotional image processing. Intell. Fuzzy Syst. 2022, 40, 7273–7283.

- Ebrahimi, S.; Fayek, A.R.; Sumati, V. Hybrid Artificial Intelligence HFS-RF-PSO Model for Construction Labor Productivity Prediction and Optimization. Algorithms 2021, 14, 214.

- Garai, S.; Paul, R.K.; Yeasin, M.; Paul, A.K. CEEMDAN-Based Hybrid Machine Learning Models for Time Series Forecasting Using MARS Algorithm and PSO-Optimization. Neural Process. Lett. 2024, 56, 92.

- Eberhart, R.C.; Shi, Y. Comparing inertia weights and constriction factors in particle swarm optimization. In Proceedings of the 2000 Congress on Evolutionary Computation, La Jolla, CA, USA, 16–19 July 2000; IEEE: Piscataway, NJ, USA, 2000; pp. 84–88.

- Eberhart, R.C.; Shi, Y. Tracking and optimizing dynamic systems with particle swarms. In Proceedings of the 2001 Congress on Evolutionary Computation, Seoul, Republic of Korea, 27–30 May 2001; IEEE: Piscataway, NJ, USA, 2001; pp. 94–100.

- Ratnaweera, A.; Halgamuge, S.K.; Watson, H.C. Self-organizing hierarchical particle swarm optimizer with time-varying acceleration coefficients. IEEE Trans. Evol. Comput. 2004, 8, 240–255.

- Arumugam, M.S.; Rao, M.V.C. On the improved performances of the particle swarm optimization algorithms with adaptive parameters, cross-over operators and root mean square (RMS) variants for computing optimal control of a class of hybrid systems. Appl. Soft Comput. 2008, 8, 324–336.

- Chegini, S.N.; Bagheri, A.; Najafi, F. PSOSCALF: A new hybrid PSO based on Sine Cosine algorithm and Levy flight for solving optimization problems. Appl. Soft Comput. 2018, 73, 697–726.

- Li, Y.; Than, T.; Zhao, H.; Wang, X. An improved sine cosine optimization algorithm with self-learning strategy and Lévy flight. J. Chongqing Univ. 2019, 42, 5666.

- Zhang, Z.; Rao, S.H.; Zhang, S.J. Grey wolf optimization algorithm based on adaptive normal cloud model. Control Decis. 2021, 36, 2562–2568.

- Xue, J.K.; Shen, B. Dung beetle optimizer: A new meta-heuristic algorithm for global optimization. J. Supercomput. 2023, 79, 7305–7336.

- Alsattar, H.A.; Zaidan, A.A.; Zaidan, B.B. Novel meta-heuristic bald eagle search optimization algorithm. Artif. Intell. Rev. 2020, 53, 2237–2264.

- Liang, J.J.; Qin, A.K.; Suganthan, P.N.; Baskar, S. Comprehensive learning particle swarm optimizer for global optimization of multimodal functions. IEEE Trans. Evol. Comput. 2006, 10, 281–295.

- Mostafa, R.R.; Khedr, A.M.; Al Aghbari, Z.; Afyouni, I.; Kamel, I.; Ahmed, N. An adaptive hybrid mutated differential evolution feature selection method for low and high-dimensional medical datasets. Knowl.-Based Syst. 2024, 283, 111218.

- Dixit, A.; Mani, A.; Bansal, R. An adaptive mutation strategy for differential evolution algorithm based on particle swarm optimization. Evol. Intell. 2021, 15, 1–15.

- Seyyedabbasi, A.; Kiani, F. Sand Cat swarm optimization: A nature-inspired algorithm to solve global optimization problems. Eng. Comput. 2023, 39, 2627–2651.

- Zhang, S. Optimal Control of Hybrid Energy Storage System of New Energy Power Generation System Based on Improved Particle Swarm Algorithm. Interconnect Netw. 2022, 22, 1–18.

- Jia, W.; Liu, X.; Zhang, C.; Qiu, M.; Tan, Y.; Yu, Z. Design of Zero-Differential Steering Controller for Tracked Vehicles with Hydraulic-Mechanical Transmission Based on Particle Swarm Optimization Algorithm. IEEE Access 2023, 11, 32187–32200.

- Zhu, J.; Li, Q.; Ying, S.; Zheng, Z. Research on Parallel Task Scheduling Algorithm of SaaS Platform Based on Dynamic Adaptive Particle Swarm Optimization in Cloud Service Environment. Int. J. Comput. Intell. Syst. 2024, 17, 260.

- Assareh, E.; Behrang, M.A.; Assari, M.R.; Ghanbarzadeh, A. Application of PSO (particle swarm optimization) and GA (genetic algorithm) techniques on demand estimation of oil in Iran. Energy 2010, 35, 5223–5229.

- Liu, L.; Jiang, W.; Zhou, H.; Pu, C.; Qian, P.; Liu, B. A Novel Particle Swarm Optimization Algorithm Incorporating Improved Sine Chaos Mapping. J. Xi’an Jiaotong Univ. 2023, 57, 182–193.

- Wang, P.; Lei, Y.; Agbedanu, P.R.; Zhang, Z. Makespan-driven workflow scheduling in clouds using immune-based PSO algorithm. IEEE Access 2020, 8, 29281–29290.

- Wang, Y.; Li, X.-M.; Geng, G.-H.; Zhou, L.; Duan, Y.-Z. Hybrid Particle Swarm Optimization Algorithm Based on Intuitionistic Fuzzy Entropy. Acta Electron. Sin. 2021, 49, 2381–2389.

- Lin, M.; Wang, Z.; Zheng, W. Hybrid particle swarm-differential evolution algorithm and its engineering applications. Soft Comput. 2023, 27, 16983–17010.

- Shang, J.; Li, G. A Hybrid Composite Differential Evolution and Multi-objective Particle Swarm Optimization Evolutionary Algorithm and Its Application. IEEE Access 2024, 12, 74417–74431.

- Nenavath, H.; Jatoth, R.K.; Das, S. A synergy of the sine-cosine algorithm and particle swarm optimizer for improved global optimization and object tracking. Swarm Evol. Comput. 2018, 43, 1–30.

- Arora, S.; Singh, S. Butterfly optimization algorithm: A novel approach for global optimization. Soft Comput. 2019, 23, 715–734.

- Tarafdar, A.; Karmakar, K.; Das, R.K.; Khatua, S. Multi-criteria scheduling of scientific workflows in the Workflow as a Service platform. Comput. Electr. Eng. 2023, 105, 108458.

- Xue, J.; Shen, B. A novel swarm intelligence optimization approach: Sparrow search algorithm. Syst. Sci. Control Eng. 2020, 8, 22–34.

- Zhang, Y.; Geng, G. Finite-Time Convergent Modified Davidenko Method for Dynamic Nonlinear Equations. IEEE Trans. Circuits Syst. II Express Briefs 2023, 70, 1630–1634.

- Dai, L.; Zhang, Y.; Geng, G. Norm-Based Finite-Time Convergent Recurrent Neural Network for Dynamic Linear Inequality. IEEE Trans. Ind. Inform. 2024, 20, 4874–4883.

- Fu, J.; Zhang, Y.; Geng, G.; Liu, Z. Recurrent Neural Network with Scheduled Varying Gain for Solving Time-Varying QP. IEEE Trans. Circuits Syst. II Express Briefs 2024, 71, 882–886.

- Zhang, Y.; Liao, B.; Geng, G. GNN Model With Robust Finite-Time Convergence for Time-Varying Systems of Linear Equations. IEEE Trans. Syst. Man Cybern. Syst. 2024, 54, 4786–4797.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).