Abstract

The intelligent development of continuous casting quality engineering is an essential step toward the efficient production of high-quality billets. However, many quality defects require strong expertise to handle. To reduce reliance on expert experience and improve the intelligent management of billet quality knowledge, we focus on constructing a Domain-Specific Knowledge Graph (DSKG) for the quality engineering of continuous casting. To achieve joint extraction of billet quality defect entities and relations, we propose a Self-Attention Partition and Recombination Model (SAPRM). SAPRM divides domain-specific sentences into three parts: entity-related, relation-related, and shared features, which serve the Named Entity Recognition (NER) and Relation Extraction (RE) tasks. Furthermore, to address entity ambiguity and repetition in triples, we propose a semi-supervised incremental learning method for knowledge alignment, in which adversarial training is leveraged to enhance alignment performance. In the experiments, for knowledge extraction, the NER and RE precision of our model reached 86.7% and 79.48%, respectively; RE precision improved by 20.83% compared to the baseline with the sequence labeling method. For knowledge alignment, the precision of our model reached 99.29%, a 1.42% improvement over baseline methods. Consequently, the proposed model with the partition mechanism can effectively extract domain knowledge, and the semi-supervised method can take advantage of unlabeled triples. Our method can adapt to the domain features and construct a high-quality knowledge graph for the quality engineering of continuous casting, providing an efficient solution for billet defect issues.

1. Introduction

Continuous casting refers to a continuous process of passing liquid steel through a continuous casting machine [1]. As a modern steel manufacturing technology, it is an important energy-saving part of the production process of the steel industry [2]. The continuous casting machine is mainly composed of different devices (e.g., the ladle, tundish, stopper rod, mold, and pulling machine), secondary cooling equipment, and cutting equipment. Continuous casting is widely used because of its high efficiency, environmental protection, energy saving, and many other advantages. Continuous casting, steelmaking, and continuous rolling have become the three main drivers of progress and innovation in the iron and steel industry.

During continuous casting, the quality of the cast slab cannot be guaranteed due to several factors such as raw material defects, improper worker operations, and unreasonable process parameter settings. Therefore, organizing and utilizing collected continuous casting quality events, along with the corresponding recommendations and solutions provided by experts, makes it possible to take effective measures when quality events occur during continuous casting. In this way, continuous casting efficiency and quality can be improved.

Knowledge graphs (KGs) were first proposed by Google in 2012. They are graphical data structures used to describe and organize knowledge. They represent entities, concepts, and relationships in the real world as nodes and edges. The relationships between edges and nodes can map complex logic in the real world, thus achieving knowledge storage and querying. Currently, although General KGs (GKGs) cover a wide range of content, the depth of knowledge in specific domains is still insufficient [3]. Accordingly, GKGs cannot be directly applied to the quality engineering of continuous casting.

The so-called Domain-Specific KG (DSKG) focuses on a specific domain, which can structure domain-specific knowledge and be of great significance for guiding domain-specific work. Currently, DSKGs have been extensively studied in fields such as power grids [4] and medicine [5,6,7], but there is little research on the quality engineering of continuous casting. Therefore, we propose a method for constructing a DSKG for quality engineering of continuous casting. It utilizes the advantages of Deep Learning (DL) in Natural Language Processing (NLP) to extract knowledge from unstructured text in the continuous casting domain, and ultimately constructs a high-quality DSKG for quality engineering of continuous casting through knowledge alignment. The contributions of this work are two-fold.

- A joint knowledge extraction method for quality engineering of continuous casting based on self-attention partition and recombination.

- A knowledge alignment method for continuous casting based on semi-supervised incremental learning and adversarial training.

The remainder of this paper is organized as follows: Section 2 introduces the related works on knowledge extraction, knowledge alignment, and DSKGs. Section 3 describes the construction of the DSKG for quality engineering of continuous casting. Section 4 introduces a Self-Attention Partition and Recombination Model (SAPRM) to achieve joint extraction of entities and relations for quality engineering of continuous casting, and a knowledge alignment method for continuous casting based on semi-supervised incremental learning. Section 5 presents the experiments and analyzes the results. Section 6 concludes our work.

2. Related Works

2.1. Knowledge Extraction

The key step in constructing a KG is knowledge extraction. Knowledge extraction consists of two parts: Named Entity Recognition (NER) and Relation Extraction (RE).

Currently, there are two main types of knowledge extraction approaches. One is the pipeline model, which first performs NER and then RE, such as the PL marker [8], proposed by Deming Ye et al. Pipeline approaches are flawed because they do not consider the close connection between NER and RE, and they also suffer from error propagation.

To overcome these issues, the joint extraction of entities and relations has been proposed, and it demonstrates stronger performance on both tasks. HGERE [9], proposed by Yan Z et al., is a joint entity and relation extraction model equipped with a span pruning mechanism and a higher-order interaction module. It extracts features of entities and relations within a hypergraph. However, with a stronger encoder, the performance improvement is not significant, and computational efficiency remains an issue. RFBFN [10], proposed by Zhe L et al., extracts entities and relations simultaneously based on a Transformer. PRGC [11], proposed by Zheng H et al., performs a joint extraction of entities and relations by aligning the head and tail entities identified through relation recognition. UIE [12], proposed by Lu Y et al., uses prompt-based fine-tuning of Large Language Models (LLMs) to unify four types of natural language Information Extraction (IE) tasks, including entity extraction and relation extraction. N Ngo et al. proposed the DA4JIE [13] framework, which jointly solves four IE tasks in an Unsupervised Domain Adaptation (UDA) setting. They employed an instance-relational domain adaptation method and incorporated context-invariant graph learning. However, a method that explicitly learns to find the optimal connections for the relation graph might produce better performance for their problem.

M Van Nguyen et al. proposed an information extraction model [14] that learned cross-instance dependencies through different layers of a Pre-trained Language Model (PLM) and cross-type dependencies via the Chow-Liu algorithm. However, this method does not explore other graph kernels to compute graph similarity, and recent structure learning methods are not evaluated. Li Y et al. formulated the real-world Attribute Extraction (AE) task as a unified Attribute Tree [15] and proposed a simple but effective tree-generation model to extract both in-schema and schema-free attributes from texts. However, one of the datasets used in their work is still small, which introduces randomness bias during model training and may affect the final experimental results. They also did not conduct experiments on LLMs. Zhang R et al. proposed a table-to-graph generation model [16], which unified the tasks of coreference resolution and relation extraction with a table filling framework, and leveraged a coarse-to-fine strategy to facilitate information sharing among these subtasks. However, experiments with this model were only conducted on domain-general documents. Additionally, it takes relatively more time and computational resources than models that only extract relations.

2.2. Knowledge Alignment

Knowledge alignment, also known as knowledge matching, aims to determine whether entities represent the same object in the physical world. Measuring the similarity between entities is a key task in knowledge alignment. Kang S et al. calculated entity semantic similarities based on entity descriptions and knowledge embeddings to judge whether to align two entities [17]. Zhou L used a pre-trained model, taking the semantic similarity between two entities as the alignment criterion [18]. Jiang T et al. measured whether entities could be aligned by assessing the match of entity attributes and rules. However, literal heterogeneity still exists in relation pairs, and they did not adopt embedding-based methods to align relation pairs. Additionally, logical rules can be obtained from an ontology knowledge base as well as extracted from data [19].

Dettmers T. et al. used the similarity of entity structures as a standard for entity alignment, but their model was still shallow compared to convolutional architecture found in Computer Vision (CV). Additionally, they did not consider how to enforce large-scale structures in embedding space so as to increase the number of interactions between embeddings [20].

2.3. Domain-Specific Knowledge Graph

In recent years, with the development of KG technology, researchers have begun applying it in the field of quality issue diagnosis and analysis. For instance, Zhao et al. constructed a KG to identify and resolve quality issues in nut–bolt pairs based on gated graph neural networks [21]. Li developed a KG for quality issues in power equipment by using logs from power devices, improving fault detection and maintenance efficiency. However, the construction process lacks automation, standardized top-level design, and quality evaluation criteria [4]. Payal Chandak et al. created a KG for precision medicine based on the study of drug–disease relationships. It has a positive impact on guiding clinical medication, but its functionality can be enhanced by integrating deep graph neural networks [5]. Yang Z et al. constructed a medical KG by jointly extracting knowledge through integrating global and local contexts. Although the model architecture can be optimized to support parallel computing, it does not consider the alignment of Chinese medical text entities [6]. Sun Z et al. used table labeling and convolutional neural network (CNN) methods for joint extraction to build a medical KG. However, this method does not solve the problem of class imbalance in biomedical datasets, and does not explain the application of convolution to table methods [7].

Ren H et al. constructed a DSKG for industrial equipment, providing guidance for the use of industrial equipment. Yet they did not consider the impact of newly added entities and relations on fine-tuned results and the issue of data imbalances [22]. Liu P et al. built a KG in the aviation assembly domain based on joint extraction and reinforcement learning. But they did not consider the issue of fuzzy entity boundaries, and some particular or generic relations may be useful to improve the KG structure [23]. Wang T et al. used federated learning to extract entities and relations in sedimentology, and constructed a sedimentological DSKG. But they did not use self-created sedimentological corpus to mimic the imbalanced data where federated learning is performed to solve joint extraction task [24].

3. Problem Modeling

3.1. Characteristics of Quality Engineering Texts of Continuous Casting

To ensure the continuous casting quality, steel enterprises and relevant departments have systematically compiled industry standards and written books on continuous casting quality based on expert experiences. The corpus comprehensively covers potential quality defects that may arise during continuous casting, as well as the corresponding causes and solutions.

Compared to daily texts, quality engineering texts in continuous casting domain have the following characteristics. Firstly, these texts are highly specialized, containing a large number of proprietary terms and technical jargon in continuous casting domain, such as crystallizer, secondary cooling zone, surface cracks on square billets, equiaxed grains, etc. Those KGs applicable to general domain cannot cover the quality engineering texts related to continuous casting. Secondly, the proportion of entities and relationships in the corpus is significant. In the general domain, a sentence may only contain one triple, while in the continuous casting domain, each sentence may contain multiple triples that need to be extracted.

Therefore, in the process of constructing a DSKG for quality engineering of continuous casting, the following difficulties and challenges exist. Firstly, the classification of entities and relations in quality engineering of continuous casting is more complex and specialized. Unlike the standardized categorization of entities and relations in the general domain, the classification of entities and relations in the ontology model of this field needs to be combined with expert knowledge and engineering experience to ensure the coverage and accuracy of the ontology. Secondly, the data sources for quality engineering of continuous casting are almost all unstructured, since the data mostly come from documents and industry standards, and there is no existing corpus that can be used directly. Annotating data and building a dataset are therefore also challenges in this domain.

3.2. DSKG Modeling for Quality Engineering of Continuous Casting

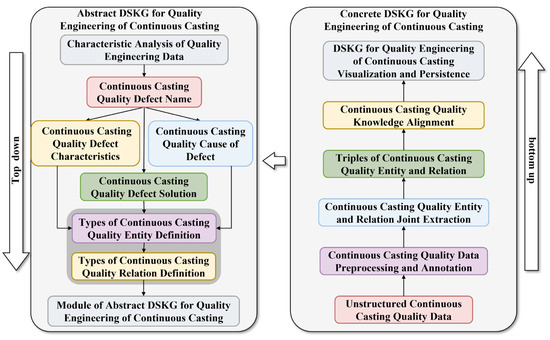

Owing to the relatively stable core components of the texts in the quality engineering of continuous casting, where these core elements can be further refined into various types of unstructured information, we adopt a combined approach of top-down and bottom-up to construct a DSKG for quality engineering of continuous casting. The combined approach leverages the advantages of both: the top-down strategy allows us to initially define a schema layer, providing a structured framework for knowledge organization; meanwhile, the bottom-up approach enables us to iteratively refine and update the schema based on patterns observed during knowledge extraction. This combination ensures that the resulting knowledge graph is more complete, adaptable, and reliable. The construction process of the DSKG for quality engineering of continuous casting is shown in Figure 1.

Figure 1.

Construction process of the DSKG for quality engineering of continuous casting.

Firstly, through analysis of the quality engineering texts of continuous casting, an abstract KG is designed by using a top-down approach. Then, guided by this abstract KG, a DSKG is constructed by using a bottom-up approach.

3.2.1. Construction of the Abstract KG

Abstract KG is the organizational structure of a KG, which describes the data model of entities and relations between entities within a domain. Under the guidance of domain experts, we analyze the content of quality engineering texts of continuous casting. Meaningful concepts and relations between concepts in quality engineering of continuous casting have been extracted and refined. This constitutes the ontology model of the DSKG for quality engineering of continuous casting.

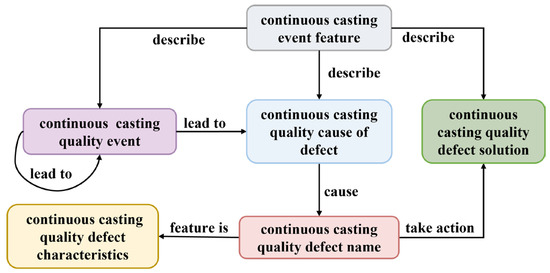

Figure 2 illustrates the entity elements and their relations in the ontology model.

Figure 2.

Entities and relations in abstract KG.

Specifically, the ontology model consists of six entity elements: continuous casting defect name, continuous casting event, continuous casting cause of the defect, continuous casting defect characteristics, continuous casting defect solution, and continuous casting event feature. These elements are interconnected by five relationships: lead to, cause, describe, taken action, and feature is.

3.2.2. Construction of the Concrete KG

The construction process of a concrete KG mainly involves four steps: preprocessing, knowledge extraction, knowledge alignment, and knowledge storage.

Preprocessing involves converting unstructured natural language into the required input format for the knowledge extraction stage. We extract and preprocess data as follows:

(i) Scan professional books and other textual materials, extract the natural language content, remove tables and images, segment long texts into sentences by using periods, and obtain sentence-level corpora.

(ii) Convert traditional Chinese characters to simplified Chinese characters, and remove rare characters from sentences.

(iii) Remove ‘#’ and ‘*’ symbols to avoid special symbols affecting knowledge extraction.

(iv) Convert the Chinese representation of chemical elements into chemical symbols.

(v) Convert textual descriptions of size comparisons into symbols.

(vi) Verify the closure of punctuation marks.
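The preprocessing steps above can be sketched in Python as follows. This is a minimal illustration, not the authors' pipeline: the element and comparison mappings and the set of paired punctuation marks are hypothetical examples, and step (ii) is omitted because traditional-to-simplified conversion needs an external conversion table.

```python
import re

# Hypothetical example mappings; the paper does not list its actual tables.
ELEMENT_SYMBOLS = {"碳": "C", "锰": "Mn", "硅": "Si"}
COMPARISON_SYMBOLS = {"大于等于": ">=", "小于等于": "<=", "大于": ">", "小于": "<"}
BRACKET_PAIRS = {"(": ")", "（": "）", "“": "”", "《": "》"}


def split_sentences(text):
    """Step (i): segment text into sentences on the Chinese full stop."""
    return [s.strip() + "。" for s in text.split("。") if s.strip()]


def normalize(sentence):
    """Steps (iii)-(v): drop '#' and '*', map element names and comparisons."""
    sentence = re.sub(r"[#*]", "", sentence)
    for name, symbol in ELEMENT_SYMBOLS.items():
        sentence = sentence.replace(name, symbol)
    # Replace longer phrases first so "大于等于" is not consumed by "大于".
    for phrase in sorted(COMPARISON_SYMBOLS, key=len, reverse=True):
        sentence = sentence.replace(phrase, COMPARISON_SYMBOLS[phrase])
    return sentence


def punctuation_closed(sentence):
    """Step (vi): check that paired punctuation marks are balanced."""
    closers = {close: open_ for open_, close in BRACKET_PAIRS.items()}
    stack = []
    for ch in sentence:
        if ch in BRACKET_PAIRS:
            stack.append(ch)
        elif ch in closers:
            if not stack or stack.pop() != closers[ch]:
                return False
    return not stack
```

A plain character-level replacement such as this one would also rewrite element names inside compound terms, so a production table would likely need context rules.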

According to the schema layer defined by the abstract KG for quality engineering of continuous casting, the processed corpus sentences need to be annotated to identify the entities and the relations between them. Owing to the wide application of NLP, specialized tools are now available for data annotation. We leverage the doccano annotation tool to annotate entities and relations in the continuous casting quality texts.

Due to the specialized and knowledge-intensive nature of quality engineering texts of continuous casting, we propose the SAPRM for joint entity and relation extraction. The purpose of this model is to extract domain-related entities and relations from quality engineering texts of continuous casting. This lays the foundation for building a DSKG for quality engineering of continuous casting.

Due to inevitable phenomena such as recording errors and the use of abbreviations and symbols in natural language texts, duplicates still exist among the extracted entities. Accordingly, we propose a knowledge alignment model based on semi-supervised incremental learning. This model aims to align entities with ambiguity issues, thereby further improving the quality of the constructed DSKG for quality engineering of continuous casting.

Table 1 presents examples of some operational entities, indicating whether two entities can be merged based on their meanings. Given the characteristics of domain-specific entities, the entity alignment task for continuous casting knowledge fusion faces the following challenges.

Table 1.

Examples of entity pairs in quality engineering of continuous casting.

The first challenge is lack of context. Continuous casting quality entities are relatively short in length. After knowledge extraction, there may be a lack of context.

The second challenge is data quality. The knowledge of quality engineering of continuous casting contains a large number of professional terms. Entities pointing to the same concept may differ because of formatting, units, capitalization, spacing, abbreviations of terms, input errors, etc., which makes knowledge fusion more difficult.

To eliminate semantic conflicts in quality knowledge units of continuous casting and address the issues of synonymy and morphological variation among entities, we propose to fuse redundant or ambiguous continuous casting knowledge units by using an entity alignment model to ensure the quality of the DSKG for quality engineering of continuous casting.

Additionally, it is important to consider persistent storage of the DSKG for quality engineering of continuous casting. We use Neo4j as the database to store the knowledge.
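As a hedged illustration of the storage step, the sketch below turns one aligned triple into a parameterized Cypher `MERGE` statement that could be sent to Neo4j. The label and relationship identifiers are hypothetical renderings of the six entity elements and five relationships of the ontology model, the function name is ours, and the sketch does not enforce which entity types each relationship may connect, since that is defined only in Figure 2.

```python
# Hypothetical identifiers for the ontology's entity elements and relationships.
ENTITY_TYPES = {
    "DefectName", "Event", "DefectCause",
    "DefectCharacteristic", "DefectSolution", "EventFeature",
}
RELATION_TYPES = {"LEAD_TO", "CAUSE", "DESCRIBE", "TAKEN_ACTION", "FEATURE_IS"}


def triple_to_cypher(head, head_type, relation, tail, tail_type):
    """Return a Cypher MERGE statement and its parameters for one triple."""
    if head_type not in ENTITY_TYPES or tail_type not in ENTITY_TYPES:
        raise ValueError("unknown entity type")
    if relation not in RELATION_TYPES:
        raise ValueError("unknown relation type")
    # Labels and relationship types cannot be passed as query parameters,
    # so they are validated against the schema above and interpolated.
    query = (
        f"MERGE (h:{head_type} {{name: $head}}) "
        f"MERGE (t:{tail_type} {{name: $tail}}) "
        f"MERGE (h)-[:{relation}]->(t)"
    )
    return query, {"head": head, "tail": tail}
```

Using `MERGE` rather than `CREATE` keeps repeated triples from producing duplicate nodes or edges, which complements the alignment step.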

In conclusion, we completed the entire process from unstructured knowledge to constructing and storing the DSKG for quality engineering of continuous casting.

4. Method

4.1. Joint Extraction Based on Self-Attention Partition and Recombination for Quality Engineering of Continuous Casting

We propose the SAPRM for knowledge extraction. This model divides the joint extraction task into two sub-tasks: NER and RE. Formally, we are given an input sequence T = {t1,t2,…,tn} of length n, where ti represents the i-th token in the sequence. For NER, the goal is to extract a set E of entities of all types, while for RE, the aim is to find a relation set R. Combining the results of NER and RE, the model extracts the triple set S for the quality engineering of continuous casting. In each triple (e1, r, e2) of S, e represents an entity and r represents the relationship between two entities.

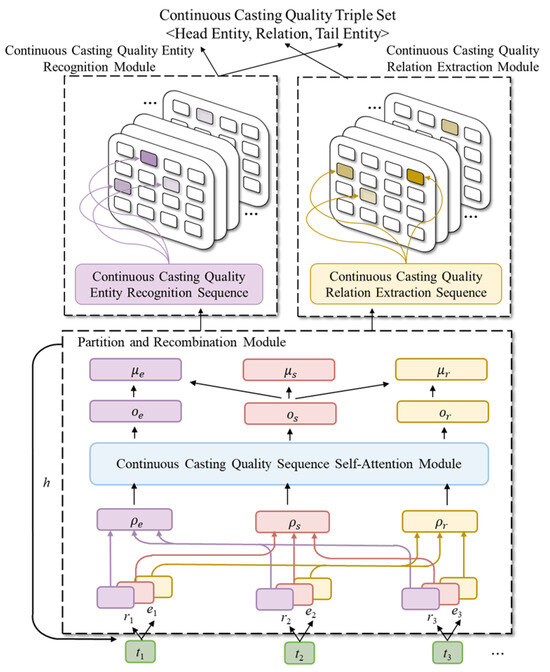

Specifically, the SAPRM consists of three parts: the partition and recombination module, the NER module, and the RE module. The partition and recombination module divides the input data into different segments based on the characteristics of the texts, which are then used as inputs for the NER module and the RE module. The overall structure of SAPRM is shown in Figure 3. The pseudocode is shown in Algorithm 1.

| Algorithm 1 SAPRM |
| --- |
| Input: Continuous casting quality sequence T |
| Output: Continuous casting quality triple set <entity, relation, entity> |
| for each t in T do |
| ρs = ρs + (t*e ∩ t*r) //get shared partition from token t with entity gate and relation gate |
| ρe = ρe + (t*e − ρs) //get NER-related partition from token t with entity gate and shared partition |
| ρr = ρr + (t*r − ρs) //get RE-related partition from token t with relation gate and shared partition |
| end for |
| oe, os, or = attention(ρe, ρs, ρr) //calculate attention score in attention module |
| μe = oe + os, μr = or + os |
| he = tanh(μe), hr = tanh(μr) |
| for pos in length(T) do |
| if is_head_start_pos(pos, he) is true then entities += (T[pos, end_pos], k) end if |
| end for |
| for e1, e2 in entities do |
| if is_correct_relation(e1, e2, hr) is true then triples += (e1, r, e2) end if |
| end for |
| return continuous casting quality triple set |

Figure 3.

Overall structure of SAPRM.

4.1.1. Partition and Recombination Module of Continuous Casting Quality Sequence

The partition and recombination module consists of three steps: partition, self-attention mechanism, and recombination. In this module, each token is first divided into three partitions: entity partition, relation partition, and shared partition. Then, based on the features required by each task, certain partitions are selected and combined to filter the features for that task.

- Partition of Continuous Casting Quality Sequence

This step divides each token into three parts: the entity-related part, the relation-related part, and the shared part. For an input sequence T = {t1,t2,…,tn}, we leverage an entity gate and a relation gate to segment the sequence into three partitions based on their relevance to the specific tasks. For example, the entity gate separates neurons into two partitions: NER-related and NER-unrelated. The shared partition is formed by combining the partition results from both gates, and the information in the shared partition can be regarded as valuable to both tasks. The partition result of each token is shown in Equation (1).

As shown in Equation (1), and are the results partitioned by entity gate and relation gate , is the current continuous casting quality token, h is the hidden layer’s output from last epoch, and is defined by Equation (2).

The output of each gate can be seen as an approximation of a binary gate of the form (0,…,0,1,…,1), thereby identifying a segmentation point within the sequence. Subsequently, the input data are divided into three parts, namely the entity partition, the relation partition, and the shared partition, as shown in Equation (3). The first two partitions store intra-task information, which contains only the features specific to the NER task or the RE task; this improves the model's focus on each task. The third stores inter-task information, which provides sentence-level global information about contextual dependencies.

As shown in Equation (3), ρs, ρe, and ρr are the shared sequence, the NER-related sequence, and the RE-related sequence. In the notation of Algorithm 1, t*e is the part of the token concerned with NER, t*r is the part of the token concerned with RE, and t*e ∩ t*r is the shared part of the current token. Additionally, ∩ is the operator that takes the intersection of two operands, and − is the operator that takes the difference between two operands.
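The partition step can be illustrated with a small Python sketch. It rests on two assumptions that the text does not confirm: each gate is modeled as a cumulative softmax, which is one common way to approximate a gate of the form (0,…,0,1,…,1), and the intersection of the two gates is taken as their element-wise product. The function names are ours.

```python
import math


def cummax(logits):
    """Cumulative softmax: a soft approximation of the gate (0,...,0,1,...,1)."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    gate, running = [], 0.0
    for e in exps:
        running += e / total
        gate.append(running)
    return gate


def partition(entity_logits, relation_logits):
    """Split neurons into NER-only, RE-only, and shared parts."""
    e_gate = cummax(entity_logits)
    r_gate = cummax(relation_logits)
    # Assumption: the soft intersection is the element-wise product.
    shared = [e * r for e, r in zip(e_gate, r_gate)]
    ner_only = [e - s for e, s in zip(e_gate, shared)]  # entity gate minus shared
    re_only = [r - s for r, s in zip(r_gate, shared)]   # relation gate minus shared
    return ner_only, re_only, shared
```

With this choice, the NER-only and shared parts always add back up to the entity gate, which mirrors the difference operator in Equation (3).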

- Self-Attention Mechanism

Next, we input the aforementioned three partitions into the self-attention component. Taking the NER-related partition as an example, we use the NER-related partition as the query matrix and combine the NER-related partition with the shared partition to form the key and value matrices of the self-attention mechanism, as shown in Equation (4).

The output of self-attention module for the NER-related partition is shown in Equation (5).

In this way, we can calculate the output of self-attention module for the RE-related partition and the shared partition as well.
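A minimal sketch of this attention step for the NER-related partition is given below. It uses standard scaled dot-product attention without learned projection matrices, and it assumes that "combining" the NER-related and shared partitions means concatenating them along the token axis; both simplifications are ours.

```python
import math


def softmax(row):
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-width vectors."""
    scale = math.sqrt(len(queries[0]))
    outputs = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / scale for k in keys]
        weights = softmax(scores)
        outputs.append([
            sum(w * v[d] for w, v in zip(weights, values))
            for d in range(len(values[0]))
        ])
    return outputs


def ner_attention(ner_part, shared_part):
    """Queries come from the NER partition; keys and values see both parts."""
    context = ner_part + shared_part  # assumption: concatenate along tokens
    return attention(ner_part, context, context)
```

The RE-related output would follow the same pattern with the RE-related partition as the query.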

- Recombination of Continuous Casting Quality Sequence

As shown in Equation (6), we recombine the three partitions oe, os, and or, which were calculated by the self-attention module.

As shown in Equation (6), the results are the NER partition, shared partition, and RE partition of the continuous casting quality sequence after recombination; in the notation of Algorithm 1, the NER and RE partitions are μe = oe + os and μr = or + os.

The final output form of the partition recombination component is shown in Equation (7). The obtained he and hr represent the filtered sequences, which are used for the NER and RE tasks, respectively.

As shown in Equation (7), h is the output of the hidden layer, while he and hr are the continuous casting quality sequences for NER and RE.

4.1.2. NER Module of Continuous Casting Quality

The objective of the NER module is to identify and categorize all entity spans in a given sentence. Specifically, the task is treated as a type-specific table filling problem. Given a set of entity types ε, for each type k, we fill out a table whose elements represent the probability of tokens ti and tj being the start and end positions of an entity of type k. For each token pair (ti, tj), we concatenate the token-level features of ti and tj with the sentence-level global features, and pass them through a fully connected layer and an ELU activation function to obtain the entity representation for that pair of tokens, as shown in Equation (8).

As shown in Equation (8), the result is the representation of an entity that begins with token ti and ends with token tj.

From this entity representation, we obtain the probability of the span being an entity of type k through a feedforward network, as shown in Equation (9).

As shown in Equation (9), the output is the probability that the span starting with token ti and ending with token tj is an entity of type k, and σ is the sigmoid activation function.
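To make the table-filling formulation concrete, the following sketch reads entity spans out of per-type probability tables. The decision threshold of 0.5 and the function name are assumptions; the paper does not state how the probabilities are turned into final decisions.

```python
def decode_entities(tokens, tables, threshold=0.5):
    """Read entity spans out of per-type start/end probability tables.

    tables maps an entity type k to an n x n matrix whose cell [i][j] is
    the probability that tokens i..j form an entity of type k.
    """
    entities = []
    for entity_type, table in tables.items():
        for i, row in enumerate(table):
            for j in range(i, len(row)):  # an end cannot precede its start
                if row[j] >= threshold:
                    span = " ".join(tokens[i:j + 1])
                    entities.append((i, j, entity_type, span))
    return entities
```

Because every type has its own table, overlapping spans of different types can be extracted from the same sentence.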

4.1.3. RE Module of Continuous Casting Quality

The RE module aims to identify all triples in the sentence. In this module, only the starting position of each entity needs to be predicted, as the entire entity span has already been identified by the NER module. Similar to the NER module, the RE module is also treated as a relation-specific table-filling problem. Given a set of relations R, for each relation r, a relation table is constructed whose elements represent the probability of tokens ti and tj being the head and tail entities of that relation. Therefore, we can use one table to extract all triples related to relation r. For each triple, we obtain the feature representation by concatenating the token-level features of ti and tj with the sentence-level global features, as shown in Equation (10).

As shown in Equation (10), the result is the triple representation, which starts with token ti and ends with token tj.

Using the feature representation of the triple, the probability that the triple is correct is obtained through a feedforward neural network, as shown in Equation (11).

As shown in Equation (11), the output is the probability of tokens ti and tj being the starting tokens of the continuous casting quality subject and object entities with relation r.

4.1.4. Loss Function

We leverage BCELoss as the loss function, as shown in Equation (12).

Our work aims to achieve NER and RE in the quality engineering of continuous casting; hence, the loss functions are defined separately for the NER module and the RE module, as shown in Equation (13).

As shown in Equation (13), the two losses are the loss function for continuous casting quality NER and the loss function for continuous casting quality RE; each compares the true category of the continuous casting quality entity or relation with the predicted category.

The total loss function is shown in Equation (14).

As shown in Equation (14), L is the total loss function, which combines the loss function for continuous casting quality NER and the loss function for continuous casting quality RE.
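The loss computation can be sketched as follows. The binary cross-entropy is the standard mean-reduced form; the total loss is written here as an equally weighted sum of the NER and RE losses, which is an assumption, since the exact form of Equation (14) is not recoverable from the text.

```python
import math


def bce_loss(targets, probs, eps=1e-12):
    """Mean binary cross-entropy between 0/1 targets and probabilities."""
    total = 0.0
    for y, p in zip(targets, probs):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(targets)


def total_loss(ner_targets, ner_probs, re_targets, re_probs):
    # Assumption: the two task losses are added with equal weight.
    return bce_loss(ner_targets, ner_probs) + bce_loss(re_targets, re_probs)
```

In practice the targets and probabilities would be the flattened cells of the NER and RE tables, respectively.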

4.2. Knowledge Alignment Method for Quality Engineering of Continuous Casting Based on Semi-Supervised Incremental Learning

In Section 4.1, we leverage the SAPRM to obtain structured unit knowledge in the quality engineering of continuous casting. This section integrates the extracted continuous casting quality entities by using knowledge alignment method to enhance the quality of knowledge.

Formally, in the DSKG for the quality engineering of continuous casting, knowledge is represented as K = (E,R,T), where E represents entities, R represents relationships between entities, and T = (E,R,E) represents the triples in the KG. The fusion of redundant or ambiguous knowledge can be regarded as a matching task for entity pairs. Therefore, formally, its definition is as shown in Equation (15).

As shown in Equation (15), K is the quality engineering knowledge of continuous casting, and e is an entity of type k.

The knowledge fusion task involves finding all similar entities and generating matching results, each of which represents two entities that have the same physical meaning.

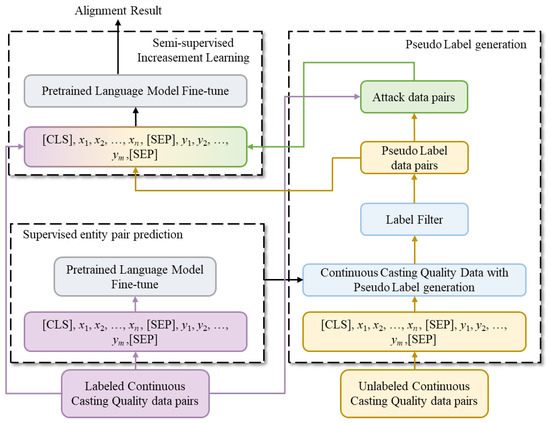

To achieve the above goal, we propose a DL model based on semi-supervised incremental learning for knowledge alignment, and a KG is refined based on the aligned triples. The proposed DL model based on semi-supervised incremental learning includes a supervised learning module, pseudo-label generation module, and incremental learning module. Figure 4 provides the overall flowchart of the knowledge alignment model, and the pseudocode is shown in Algorithm 2.

| Algorithm 2 Knowledge Alignment Method Based on Semi-supervised Incremental Learning |
| --- |
| Input: labeled pairs of entities Wlabel and unlabeled pairs of entities Wunlabel |
| Output: finely-tuned BERT |
| BERTFT ← fine-tune(BERT(e1, e2)) for (e1, e2) in Wlabel |
| for (e1, e2) in Wunlabel |
| pl ← BERTFT((e1, e2)) |
| Wtotal ← Wtotal + (e1, e2) if confidence(pl) ≥ threshold |
| end for |
| for w in Wunlabel |
| ep ← replace w with w’ if importance(w) ≥ threshold |
| m ← BERTFT(ep, e2) |
| Wtotal ← Wtotal + (ep, e2) if m changed |
| end for |
| BERTFT ← fine-tune(BERT(e1, e2)) for (e1, e2) in Wtotal |
| return BERTFT |

Figure 4.

Process of knowledge alignment.

4.2.1. Supervised Learning Module

In the supervised learning module, we fine-tune BERT [25] by using labeled data. The input format of entity pairs is defined in Equation (16).

As shown in Equation (16), I is the input sequence of continuous casting quality knowledge, [CLS] and [SEP] are special tokens in BERT, and e1 and e2 are the tokens of the first and second continuous casting quality entities, respectively.

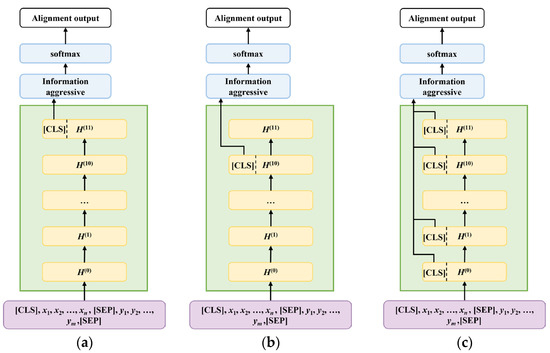

This module consists of a BERT component and the result prediction component. The result prediction component aggregates the outputs from different layers of BERT to increase prediction accuracy. Figure 5 illustrates this, where Figure 5a indicates the aggregation layer only using the output from the last layer, Figure 5b indicates the use of the output from the second-to-last layer, and Figure 5c indicates aggregation of the outputs from the last two layers and the first two layers to retain features from both deep and shallow layers simultaneously.

Figure 5.

Different output structures of our method. (a) The aggregation layer using the output from the last layer; (b) The aggregation layer using the output from the second-to-last layer; (c) The aggregation layer using the outputs from the last two layers and the first two layers.

We leverage the output of the 12th layer of the BERT component as the aggregation layer, as shown in Figure 5a. This can be expressed as in Equation (17).

As shown in Equation (17), H is the output of continuous casting quality knowledge alignment, obtained by aggregating the parameters of the 12th layer of BERT.
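The three output structures of Figure 5 can be illustrated with a small sketch. It assumes each layer output has already been pooled into one vector and that structure (c) combines layers by an element-wise mean; the pooling and the mean are assumptions, and `aggregate` and its mode names are hypothetical.

```python
from typing import List, Sequence

Vector = List[float]


def mean_vectors(vectors: Sequence[Vector]) -> Vector:
    """Element-wise mean of equally sized vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def aggregate(layer_outputs: Sequence[Vector], mode: str = "last") -> Vector:
    """Pick or combine per-layer outputs, ordered from layer 1 to layer 12."""
    if mode == "last":               # Figure 5a: last layer only
        return list(layer_outputs[-1])
    if mode == "second_to_last":     # Figure 5b: second-to-last layer only
        return list(layer_outputs[-2])
    if mode == "deep_and_shallow":   # Figure 5c: first two and last two layers
        return mean_vectors([*layer_outputs[:2], *layer_outputs[-2:]])
    raise ValueError(f"unknown mode: {mode}")


# Twelve toy layer outputs; layer i is represented by the vector [i, 2i].
layers = [[float(i), float(2 * i)] for i in range(1, 13)]
h_last = aggregate(layers, "last")
h_mixed = aggregate(layers, "deep_and_shallow")
```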

4.2.2. Pseudo-Label Generation Module for Continuous Casting Quality Knowledge

Because the number of continuous casting quality knowledge units is large, constructing and labeling every entity pair extracted from them is very costly. Semi-supervised learning methods, however, can also use unlabeled data to train the model. Therefore, we propose a pseudo-label generation module to leverage unlabeled data.

The input of the pseudo-label generation module is the set of unlabeled continuous casting quality entity pairs. The module makes predictions with the knowledge alignment model that was fine-tuned in the previous stage. Based on the output probability values, high-confidence predictions are taken as pseudo-labels for the entity pairs, as shown in Equation (18).

$$p = \mathrm{FTB}(e_1, e_2) \tag{18}$$

As shown in Equation (18), $p$ is the confidence of a continuous casting quality entity pair, FTB is the pretrained language model after its first fine-tuning, and $(e_1, e_2)$ is an unlabeled entity pair in the test set.
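One common way to obtain such a confidence is to apply a softmax to the classifier logits and take the largest probability. The sketch below assumes that reading; the threshold value, the logits, and the function names are hypothetical and not taken from the paper.

```python
import math
from typing import List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def select_pseudo_labels(
    pairs: Sequence[Tuple[str, str]],
    logits_per_pair: Sequence[Sequence[float]],
    threshold: float = 0.9,
) -> List[Tuple[Tuple[str, str], int, float]]:
    """Keep (pair, predicted label, confidence) where confidence >= threshold."""
    selected = []
    for pair, logits in zip(pairs, logits_per_pair):
        probs = softmax(logits)
        confidence = max(probs)
        if confidence >= threshold:
            selected.append((pair, probs.index(confidence), confidence))
    return selected


pairs = [("mold flux", "mold powder"), ("bulging", "tundish")]
logits = [[-2.0, 3.0], [0.1, 0.2]]   # hypothetical model outputs
pseudo = select_pseudo_labels(pairs, logits)
```

With these toy logits only the first pair is confident enough to be kept; the second stays unlabeled.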

4.2.3. Incremental Learning Module of Continuous Casting Quality Knowledge

The incremental learning module selects pseudo-labeled samples based on their confidence probability and adds them to the labeled samples as incremental data. The BERT component is then fine-tuned again.

To overcome the negative impact of random noise on model performance, we incorporate adversarial training into the incremental learning module. We leverage the BERT-ATTACK model [26] to generate perturbed samples for adversarial training. The BERT-ATTACK model is based on BERT and generates adversarial samples by calculating the importance score of each token $w$ in the input sequence, as shown in Equation (19). If the importance of a token exceeds a certain threshold, the token is replaced by a synonym $w'$, which transforms the input sequence into a perturbed sequence. If the output of the model changes after this transformation, the attack is considered successful. Each successful attack adds one adversarial sample.

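$$I_{w} = o_y(S) - o_y(S_{\setminus w}) \tag{19}$$

As shown in Equation (19), following the notation of BERT-ATTACK [26], $I_w$ is the importance score of token $w$, $S$ is the input sequence, $y$ is the ground truth of the target model, $S_{\setminus w}$ is the input sequence with token $w$ masked, and $o_y(S_{\setminus w})$ is the target model output without token $w$.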
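The masking-based importance score can be illustrated with a toy target model. In this sketch, `toy_score` is a hypothetical stand-in for the target model's output on the true label, and the keyword weights, synonym table, and threshold are invented for illustration only.

```python
from typing import Callable, Dict, List

MASK = "[MASK]"


def importance_scores(tokens: List[str], score_fn: Callable[[List[str]], float]) -> List[float]:
    """Score drop on the true label when each token is masked in turn."""
    base = score_fn(tokens)
    scores = []
    for i in range(len(tokens)):
        masked = tokens[:i] + [MASK] + tokens[i + 1:]
        scores.append(base - score_fn(masked))
    return scores


def perturb(tokens: List[str], scores: List[float],
            synonyms: Dict[str, str], threshold: float) -> List[str]:
    """Replace tokens whose importance reaches the threshold with a synonym."""
    return [synonyms.get(t, t) if s >= threshold else t
            for t, s in zip(tokens, scores)]


def toy_score(tokens: List[str]) -> float:
    """Hypothetical target model: the score rises with keyword hits."""
    weights = {"crack": 0.6, "longitudinal": 0.3}
    return 0.1 + sum(weights.get(t, 0.0) for t in tokens)


sentence = ["longitudinal", "surface", "crack"]
scores = importance_scores(sentence, toy_score)
attacked = perturb(sentence, scores, {"crack": "fissure"}, threshold=0.5)
```

In a full attack, the perturbed sequence would be kept as an adversarial sample only if the model prediction changes, as described above.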

After obtaining the adversarial samples, we combine them with the labeled data and pseudo-labeled data as input for incremental learning. The BERT model is then fine-tuned further on this combined data. The final prediction results are obtained through the result prediction component.

5. Experiment and Analysis

5.1. Experimental Environment and Parameters Setting

The experiments consist of two main parts: an analysis of SAPRM and an analysis of the continuous casting quality knowledge alignment method. In addition, we present the storage and visualization of the DSKG for quality engineering of continuous casting.

5.1.1. Experimental Data

The data details used in the DSKG construction process are illustrated in Table 2.

Table 2.

Data details in DSKG.

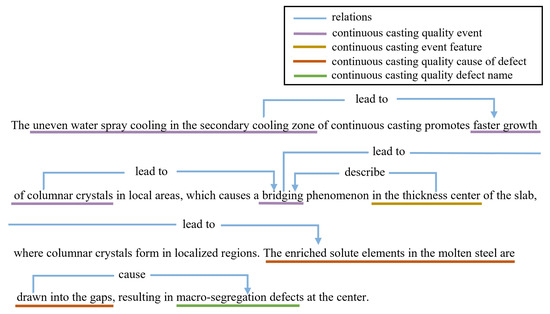

An example of data annotation is shown in Figure 6.

Figure 6.

Example of data labeling.

5.1.2. Hyperparameter Setting of DSKG Construction Method for Quality Engineering of Continuous Casting

The hyperparameter settings for the SAPRM and the knowledge alignment model are presented in Table 3. All the hyperparameters were tuned empirically within reasonable ranges via grid search based on validation performance.

Table 3.

Hyperparameters of SAPRM and knowledge alignment algorithm.

5.2. Evaluation of SAPRM

5.2.1. Performance of SAPRM

To validate the performance of SAPRM in the DSKG construction for quality engineering of continuous casting, we compare it with TPLinker [27], PRGC [11], PFN [28], and TablERT-CNN [29]; the results are shown in Table 4. The symbol † indicates that all models use BERT-BASE-CHINESE as the encoder.

Table 4.

Experiment results of SAPRM.

For the NER task, SAPRM outperforms PFN in both precision and F1 score. For the RE task, SAPRM significantly outperforms the other models in terms of precision, recall, and F1 score. Specifically, compared to PFN, SAPRM achieves a 1.72% improvement in NER precision, a 2.69% improvement in RE precision, and a 4.31% improvement in RE F1 score.

SAPRM thus achieves the best performance on the continuous casting quality engineering knowledge extraction task, which shows that it is an effective approach for this task.

5.2.2. Ablation Study on SAPRM

To demonstrate the positive impact of each part of the SAPRM on the overall performance, ablation experiments are conducted, and the results are shown in Table 5.

Table 5.

Ablation experiment results of SAPRM.

We conduct the ablation study from four aspects. For the number of layers in the self-attention module, we try one layer and two layers. Because the data scale is limited, increasing the self-attention module to two layers makes the parameters overly complex and the model fails to converge. Therefore, we set the number of layers to 1.

If the self-attention module is removed, the performance of SAPRM decreases significantly. For the NER task, the accuracy decreases by 6.38%, and for the RE task, the accuracy decreases by 3.18%. This indicates that the self-attention module plays an important role in SAPRM.

For the partition module, if partition is not performed and knowledge extraction is conducted directly, the performance of SAPRM is also significantly affected. For the NER task, the accuracy decreases by 7.85%, and for the RE task, the accuracy decreases by 4.74%. This illustrates the necessity and effectiveness of segmenting the training sequence into three partitions: entity-related, shared, and relation-related.

Additionally, removing the global mechanism also degrades the overall performance of SAPRM. Consequently, combining local features and global contexts is necessary for improving the performance of SAPRM.

The ablation study demonstrates that each module of our SAPRM model plays an indispensable positive role in the results, and proves the effectiveness of this model in handling knowledge extraction tasks in quality engineering of continuous casting.

5.2.3. Experiment on Loss Function

The loss function we propose consists of two parts: the quality entity recognition loss of continuous casting and the quality relation extraction loss of continuous casting. Therefore, we explore the proportion of these two parts of the loss by setting the hyperparameter λ to different values, as shown in Table 6.

Table 6.

Influence of different λ settings.

After modifying the proportions of the two parts of the loss function, the performance of the model decreased. Among the different λ values, λ with value 1 still maintains the best performance, while λ with value 0.67 performs second best in all cases. Consequently, we set the hyperparameter λ as 1.

5.2.4. Generalization Capability

To verify that our model has a certain degree of generalization capability, experiments are conducted on three general datasets: ADE, WebNLG, and SciERC. The symbol ◊ marks datasets evaluated with macro-F1, while ♦ marks those evaluated with micro-F1. The symbol ⸸ indicates that BERT-BASE-CASED is used as the BERT encoder, while ‡ indicates that SciBERT [30] is used as the BERT encoder. The results are shown in Table 7. They indicate that our model outperforms other methods in the NER task on the WebNLG dataset and achieves the second-best performance in the RE task. On the ADE and SciERC datasets, our model outperforms other methods in both the NER task and the RE task.

Table 7.

Experiment results on generalization capability.

According to the generalization experiment, although our model does not rely heavily on any particular dataset structure, it achieves better performance in domains characterized by a relatively small number of entity types with high occurrence, such as the ADE dataset, which contains short biomedical sentences rich in fine-grained entities. The SAPRM architecture, through its partition-and-recombination mechanism, can dynamically capture task-specific features and preserve contextual information, which contributes to its strong performance even on complex scientific texts in SciERC and redundant encyclopedic data in WebNLG with long sentences, overlapping relations, or diverse entity types.
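Because the datasets above are scored with either macro-F1 or micro-F1, a short example may help show how the two averages differ. The per-type counts below are invented for illustration and are not taken from ADE, WebNLG, or SciERC.

```python
from typing import Dict, Tuple

Counts = Tuple[int, int, int]  # (true positives, false positives, false negatives)


def f1(tp: int, fp: int, fn: int) -> float:
    """F1 score from raw counts; returns 0.0 when undefined."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def macro_f1(per_type: Dict[str, Counts]) -> float:
    """Average the per-type F1 scores, so every type counts equally."""
    return sum(f1(*c) for c in per_type.values()) / len(per_type)


def micro_f1(per_type: Dict[str, Counts]) -> float:
    """Pool the counts over all types first, so frequent types dominate."""
    tp = sum(c[0] for c in per_type.values())
    fp = sum(c[1] for c in per_type.values())
    fn = sum(c[2] for c in per_type.values())
    return f1(tp, fp, fn)


counts = {"frequent_type": (90, 10, 10), "rare_type": (1, 1, 3)}
macro, micro = macro_f1(counts), micro_f1(counts)
```

With these counts the macro average is pulled down by the rare type, while the micro average stays close to the score of the frequent type, which is why the two metrics are not directly comparable across datasets.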

5.3. Evaluation of Continuous Casting Quality Knowledge Alignment Method

5.3.1. Performance of Continuous Casting Quality Knowledge Alignment Method

The knowledge alignment results are shown in Table 8. For different pre-trained models, BERT is replaced with ALBERT [36] and ERNIE [37] for comparison. The ERNIE model is a large-scale pre-trained language model proposed by Baidu. When using the ERNIE model, the accuracy of the model decreases by 4.48%; when using ALBERT, the accuracy of the model decreases by 1.42%.

Table 8.

Experiment results of alignment.

This result indicates that using BERT as the backbone of our proposed model yields the best performance.

To investigate the influence of different output structures of BERT on the results, experiments are conducted by changing the output structure, and the results are shown in Table 9.

Table 9.

Results of different output structures.

It can be observed that the differences between the output structures are small, and using the last layer directly as the output achieves the best performance. Using the second-to-last layer, or aggregating the last two layers and the first two layers, also yields decent results.

BERT may suffer from catastrophic forgetting due to its large number of layers. By adjusting the weight decay hyperparameter, the negative impact of catastrophic forgetting can be mitigated. Table 10 shows the influence of different weight decay settings on the results. When the weight decay is set to $1 \times 10^{-4}$, our continuous casting quality engineering knowledge alignment model achieves the best performance.

Table 10.

Results under different weight decay settings.
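To make the role of this hyperparameter concrete, the sketch below shows how a weight decay term enters a single parameter update. It assumes plain gradient descent with L2-style decay; the optimizer actually used is not stated in this section, and the learning rate in the example is a hypothetical value.

```python
from typing import List


def sgd_step(params: List[float], grads: List[float],
             lr: float, weight_decay: float) -> List[float]:
    """One gradient step where each weight is also pulled toward zero.

    The decay contributes weight_decay * p to the effective gradient,
    so larger weights shrink faster than smaller ones.
    """
    return [p - lr * (g + weight_decay * p) for p, g in zip(params, grads)]


# With zero gradients, only the decay term changes the weights.
updated = sgd_step([1.0, -2.0], [0.0, 0.0], lr=0.1, weight_decay=1e-4)
```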

5.3.2. Ablation Study on Continuous Casting Quality Knowledge Alignment Method

Table 11 presents the ablation experiments for our continuous casting quality engineering knowledge alignment task.

Table 11.

Ablation experiment results of alignment.

For adversarial training, if this part is removed, the accuracy of the knowledge alignment model decreases by 0.71%, and the F1 score decreases by 0.74%. This indicates the effectiveness of adversarial training for the model. For incremental learning and adversarial training, removing them results in a decrease in accuracy by 1.38%, a decrease in recall by 11.89%, and a decrease in the F1 score by 7.03%.

The improvement that adversarial training brings to the model is relatively small compared to other modules. A possible reason is that, because knowledge in quality engineering of continuous casting is highly specialized, the success rate of the attack model is low, so few adversarial samples are generated and the effect of adversarial training is limited. Even so, the performance degradation caused by removing adversarial training still shows its value for the model we propose. Adversarial training is introduced because incremental learning brings in noisy and erroneous samples. Accordingly, when incremental learning is removed in the ablation, adversarial training must be removed together with it. These results demonstrate that incremental learning and adversarial training significantly enhance the continuous casting quality engineering knowledge alignment task, and that these two modules play a very important role in the model we propose.

5.4. Storage of DSKG for Quality Engineering of Continuous Casting

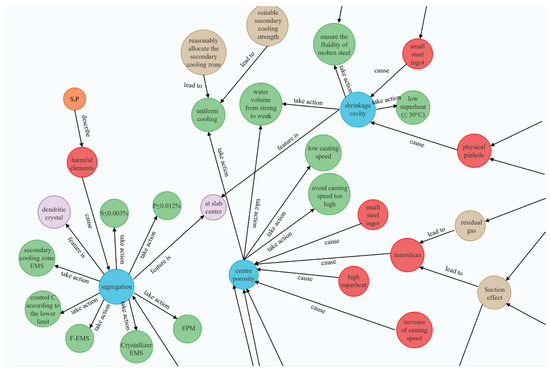

As shown in Figure 7, we leverage the Neo4j database for persistent storage and visualization of the DSKG for quality engineering of continuous casting. The specific node and relationship data are shown in Table 2. In short, the process we propose successfully constructed a DSKG for quality engineering of continuous casting from continuous casting quality text and achieved persistent storage for future application.

Figure 7.

DSKG for quality engineering of continuous casting.
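As an illustration of how aligned triples might be written to Neo4j, the sketch below builds a parameterized Cypher `MERGE` statement for one triple. The `Entity` label, the `name` property, the helper names, and the example triple are assumptions and do not describe the schema actually used. The relationship type is placed in the query text because Cypher parameters cannot stand in for relationship types.

```python
from typing import Dict, Tuple


def quoted_type(relation: str) -> str:
    """Backtick-quote a relation name so it can serve as a relationship type."""
    name = relation.strip()
    if not name or "`" in name:
        # Reject names that cannot be quoted safely in this simple sketch.
        raise ValueError(f"unsupported relation name: {relation!r}")
    return f"`{name}`"


def triple_to_cypher(head: str, relation: str, tail: str) -> Tuple[str, Dict[str, str]]:
    """Return a Cypher query and its parameters for one (head, relation, tail)."""
    query = (
        "MERGE (h:Entity {name: $head}) "
        "MERGE (t:Entity {name: $tail}) "
        f"MERGE (h)-[:{quoted_type(relation)}]->(t)"
    )
    return query, {"head": head, "tail": tail}


query, params = triple_to_cypher("longitudinal crack", "caused by", "uneven cooling")
```

Using `MERGE` rather than `CREATE` means that writing the same triple twice leaves a single node pair and a single relationship, which fits a graph built from already deduplicated triples.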

6. Conclusions

We propose a method for constructing a DSKG for the quality engineering of continuous casting based on knowledge extraction and alignment. We design the SAPRM for joint entity and relation extraction, and a semi-supervised adversarial learning approach for knowledge alignment. The experimental results show that, in the knowledge extraction part, the entity and relation extraction accuracies of our method improved by 1.72% and 2.69%, respectively, compared to the best baseline, while in the knowledge alignment part, the accuracy of our method reaches 99.29%. These results demonstrate the effectiveness of our partition mechanism in knowledge extraction and of adversarial training in knowledge alignment. The constructed DSKG not only enhances automation in the quality management of continuous casting but also substantially reduces the reliance on expert experience, offering a scalable and efficient solution for industrial applications. For future work, we plan to leverage large language models (LLMs) to strengthen our DSKG. We also plan to extend our DSKG to a multi-modal KG by utilizing continuous casting quality images.

Author Contributions

Conceptualization, X.W. (Xiaojun Wu); methodology, Y.S.; software, Y.S. and X.W. (Xinyi Wang); validation, X.W. (Xiaojun Wu), Y.S., X.W. (Xinyi Wang) and H.L.; formal analysis, X.W. (Xiaojun Wu); investigation, Q.G.; resources, Q.G.; data curation, X.W. (Xiaojun Wu), Y.S., H.L. and X.W. (Xinyi Wang); writing—original draft preparation, Y.S.; writing—review and editing, X.W. (Xiaojun Wu), X.W. (Xinyi Wang), H.L. and Q.G.; visualization, X.W. (Xiaojun Wu); supervision, X.W. (Xiaojun Wu); project administration, X.W. (Xiaojun Wu); funding acquisition, Q.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Shaanxi Province Innovation Capacity Support Plan 2024RS-CXTD-23.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available upon request from the corresponding author.

Acknowledgments

The authors would like to thank the anonymous reviewers for their valuable feedback.

Conflicts of Interest

Author Qi Gao was employed by the company China National Heavy Machinery Research Institute Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Birs, I.R.; Muresan, C.; Copot, D.; Ionescu, C.-M. Model identification and control of electromagnetic actuation in continuous casting process with improved quality. IEEE-CAA J. Autom. Sin. 2023, 10, 203–215.

- Thomas, B.G. Review on modeling and simulation of continuous casting. Steel Res. Int. 2018, 89, 1700312.

- Abu-Salih, B. Domain-specific knowledge graphs: A survey. J. Netw. Comput. Appl. 2021, 185, 103076.

- Li, Y. Research on Some Problems of Power Equipment Defect Text Mining and Application Based on Knowledge Graph. Master’s Thesis, Zhejiang University, Hangzhou, China, 2022.

- Chandak, P.; Huang, K.; Zitnik, M. Building a knowledge graph to enable precision medicine. Sci. Data 2023, 10, 67.

- Yang, Z.; Huang, Y.; Feng, J. Learning to leverage high-order medical knowledge graph for joint entity and relation extraction. In Findings of the Association for Computational Linguistics: ACL 2023; Association for Computational Linguistics: Toronto, ON, Canada, 2023; pp. 9023–9035.

- Sun, Z.; Xing, L.; Zhang, L.; Cai, H.; Guo, M. Joint biomedical entity and relation extraction based on feature filter table labeling. IEEE Access 2023, 11, 127422–127430.

- Ye, D.; Lin, Y.; Li, P.; Sun, M. Packed Levitated Marker for Entity and Relation Extraction. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Dublin, Ireland, 22–27 May 2022; pp. 4904–4917.

- Yan, Z.; Yang, S.; Liu, W.; Tu, K. Joint Entity and Relation Extraction with Span Pruning and Hypergraph Neural Networks. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, Singapore, 6–10 December 2023; pp. 7512–7526.

- Li, Z.; Fu, L.; Wang, X.; Zhang, H.; Zhou, C. RFBFN: A relation-first blank filling network for joint relational triple extraction. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics: Student Research Workshop, Dublin, Ireland, 22–27 May 2022; pp. 10–20.

- Zheng, H.; Wen, R.; Chen, X.; Yang, Y.; Zhang, Y.; Zhang, Z.; Zhang, N.; Qin, B.; Ming, X.; Zheng, Y. PRGC: Potential Relation and Global Correspondence Based Joint Relational Triple Extraction. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), Online, 1–6 August 2021; pp. 6225–6235.

- Lu, Y.; Liu, Q.; Dai, D.; Xiao, X.; Lin, H.; Han, X.; Sun, L.; Wu, H. Unified Structure Generation for Universal Information Extraction. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Dublin, Ireland, 22–27 May 2022; pp. 5755–5772.

- Ngo, N.; Min, B.; Nguyen, T. Unsupervised domain adaptation for joint information extraction. In Findings of the Association for Computational Linguistics: EMNLP 2022; Association for Computational Linguistics: Abu Dhabi, United Arab Emirates, 2022; pp. 5894–5905.

- Van Nguyen, M.; Min, B.; Dernoncourt, F.; Nguyen, T. Learning cross-task dependencies for joint extraction of entities, events, event arguments, and relations. In Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing, Abu Dhabi, United Arab Emirates, 7–11 December 2022; pp. 9349–9360.

- Li, Y.; Xue, B.; Zhang, R.; Zou, L. AtTGen: Attribute tree generation for real-world attribute joint extraction. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Toronto, ON, Canada, 9–14 July 2023; pp. 2139–2152.

- Zhang, R.; Li, Y.; Zou, L. A novel table-to-graph generation approach for document-level joint entity and relation extraction. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Toronto, ON, Canada, 9–14 July 2023; pp. 10853–10865.

- Kang, S.; Ji, L.; Liu, S.; Ding, Y. Cross-Lingual entity alignment model based on the similarities of entity descriptions and knowledge embeddings. Acta Electron. Sin. 2019, 47, 1841–1847.

- Zhou, L. Research on Intelligent Diagnosis and Maintenance Decision Method of Train Control On-Board Equipment in High-speed Railway. Ph.D. Thesis, Lanzhou Jiaotong University, Lanzhou, China, 2024.

- Jiang, T.; Bu, C.; Zhu, Y.; Wu, X. Two-Stage Entity Alignment: Combining Hybrid Knowledge Graph Embedding with Similarity-Based Relation Alignment. In Proceedings of the Pacific Rim International Conference on Artificial Intelligence, Yanuca Island, Fiji, 26–30 August 2019; pp. 162–175.

- Dettmers, T.; Minervini, P.; Stenetorp, P.; Riedel, S. Convolutional 2D knowledge graph embeddings. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018.

- Zhenbing, Z.; Jikun, D.; Yinghui, K.; Dongxia, Z. Construction and application of bolt and nut pair knowledge graph based on GGNN. Power Syst. Technol. 2020, 45, 98–106.

- Ren, H.; Yang, M.; Jiang, P. Improving attention network to realize joint extraction for the construction of equipment knowledge graph. Eng. Appl. Artif. Intell. 2023, 125, 106723.

- Liu, P.; Qian, L.; Zhao, X.; Tao, B. The construction of knowledge graphs in the aviation assembly domain based on a joint knowledge extraction model. IEEE Access 2023, 11, 26483–26495.

- Wang, T.; Zheng, L.; Lv, H.; Zhou, C.; Shen, Y.; Qiu, Q.; Li, Y.; Li, P.; Wang, G. A distributed joint extraction framework for sedimentological entities and relations with federated learning. Expert Syst. Appl. 2023, 213, 119216.

- Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 2–7 June 2019; Association for Computational Linguistics: Minneapolis, MN, USA, 2019; pp. 4171–4186.

- Li, L.; Ma, R.; Guo, Q.; Xue, X.; Qiu, X. BERT-ATTACK: Adversarial Attack Against BERT Using BERT. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; pp. 6193–6202.

- Wang, Y.; Yu, B.; Zhang, Y.; Liu, T.; Zhu, H.; Sun, L. TPLinker: Single-stage Joint Extraction of Entities and Relations Through Token Pair Linking. In Proceedings of the 28th International Conference on Computational Linguistics, Barcelona, Spain, 8–13 December 2020; pp. 1572–1582.

- Theodoropoulos, C.; Moens, M.-F. An information extraction study: Take in mind the tokenization! In Proceedings of the Conference of the European Society for Fuzzy Logic and Technology, Palma, Spain, 4–8 September 2023; Springer Nature: Cham, Switzerland, 2023; pp. 593–606.

- Ma, Y.; Hiraoka, T.; Okazaki, N. Joint entity and relation extraction based on table labeling using convolutional neural networks. In Proceedings of the Sixth Workshop on Structured Prediction for NLP, Dublin, Ireland, 27 May 2022; Association for Computational Linguistics: Dublin, Ireland, 2022; pp. 11–21.

- Beltagy, I.; Lo, K.; Cohan, A. SciBERT: A Pretrained Language Model for Scientific Text. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; pp. 3615–3620.

- Wang, J.; Lu, W. Two are Better than One: Joint Entity and Relation Extraction with Table-Sequence Encoders. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; pp. 1706–1721.

- Eberts, M.; Ulges, A. Span-based joint entity and relation extraction with transformer pre-training. In ECAI 2020; IOS Press: Amsterdam, The Netherlands, 2020; pp. 2006–2013.

- Wei, Z.; Su, J.; Wang, Y.; Tian, Y.; Chang, Y. A Novel Cascade Binary Tagging Framework for Relational Triple Extraction. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 1476–1488.

- Wang, Y.; Sun, C.; Wu, Y.; Yan, J.; Gao, P.; Xie, G. Pre-training entity relation encoder with intra-span and inter-span information. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; pp. 1692–1705.

- Zhong, Z.; Chen, D. A Frustratingly Easy Approach for Entity and Relation Extraction. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Online, 6–11 June 2021; pp. 50–61.

- Sy, C.Y.; Maceda, L.L.; Canon, M.J.P.; Flores, N.M. Beyond BERT: Exploring the Efficacy of RoBERTa and ALBERT in Supervised Multiclass Text Classification. Int. J. Adv. Comput. Sci. Appl. 2024, 15, 223.

- Sun, Y.; Wang, S.; Li, Y.; Feng, S.; Tian, H.; Wu, H.; Wang, H. ERNIE 2.0: A continual pre-training framework for language understanding. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 8968–8975.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).