Abstract

GPT (Generative Pre-trained Transformer) is a generative language model that demonstrates outstanding performance in the field of text generation. Generally, the attention mechanism of the transformer model behaves similarly to a copy distribution. However, due to the absence of a dedicated encoder, it is challenging to ensure that the input is retained for generation. We propose a model that emphasizes the copy mechanism in GPT. We generate masks for the input words to initialize the distribution and explicitly encourage copying through training. To demonstrate the effectiveness of our approach, we conducted experiments to restore ellipsis and anaphora in dialogue. In a single domain, we achieved 0.4319 (BLEU), 0.6408 (Rouge-L), 0.9040 (simCSE), and 0.9070 (BERTScore), while in multi-domain settings we obtained 0.4611 (BLEU), 0.6379 (Rouge-L), 0.8902 (simCSE), and 0.8999 (BERTScore). Additionally, we evaluated the operation of the copy mechanism on out-of-domain data, yielding excellent results. We anticipate that applying the copy mechanism to GPT will be useful for utilizing language models in constrained situations.

1. Introduction

GPT (Generative Pre-trained Transformer) has achieved great success in the field of natural language processing. This model demonstrates exceptional performance in various text generation tasks, producing innovative results in several applications such as machine translation, text summarization, and question answering. The success of GPT has become a significant milestone showcasing the potential of large language models (LLMs).

However, in environments where LLMs cannot be used, sLLMs (small LLMs) in the form of GPT often struggle to achieve the desired results with zero-shot learning alone [1]. We introduce a copying mechanism to GPT that preserves keywords found in the input even when training data are limited. As far as we know, encoder–decoder transformer models such as T5 [2] have achieved excellent results with a copying mechanism in applications requiring specific contexts, but the mechanism has not yet been applied to GPT.

We validated the capabilities of the copying mechanism for GPT through tasks involving ellipsis and anaphora resolution in conversation. Ellipsis and anaphora processing are among the challenging problems in natural language processing. In human conversations, as shown in Table 1, information is often explicitly omitted, or substitutes are used based on already shared context, avoiding the repetition of previous dialogue content. In Table 1, User1 uses anaphora (‘그걸 (it)’) at the end of the conversation. While humans can recognize omitted information as the original information through contextual clues in such phenomena, it is very difficult for machines to interpret this appropriately [3].

Table 1.

The bold parts indicate the portions that need to be resolved. In the example below, standard GPT omits the word LLM that needs to be resolved.

Previous studies have addressed ellipsis and anaphora resolution in ways similar to coreference resolution [4] or machine reading comprehension [5]. However, in a question-and-answer environment with a mixture of various domains, it is necessary to reference the content from the same domain as the sentences where ellipsis and anaphora occur, making simple coreference resolution insufficient. Particularly in spoken dialogue, two additional factors must be considered. The first is processing time. Among existing methods, the mention pair approach [6] generates all possible candidates for classification, which requires a significant amount of time. The second is contextual consideration. It is essential to reference only the appropriate contextual content in dialogue to handle ellipsis and anaphora.

In this paper, we conducted experiments on ellipsis and anaphora resolution through prompt modification based on whether the history and current information share the same context in a conversational environment with context switching, as well as through a copying mechanism for GPT. We demonstrated that the copying mechanism in GPT shows significant performance improvements not only in the training domain but also in domains outside of training.

The contributions of this paper are as follows:

- We improved the performance of ellipsis and anaphora resolution by introducing a copying mechanism to the GPT structure.

- We proposed a method for prompt modification in conversational environments where context switching occurs.

- We demonstrated that the proposed method shows significant performance improvements not only in the training domain but also in domains outside of training (https://github.com/cwnu-airlab/CGM, accessed on 14 December 2024).

2. Related Work

2.1. Conversational Question Answering

Question answering (QA) systems provide information in response to questions formatted in various forms, including structured and unstructured data, but are not limited to these categories. They can be viewed as a case of conversational artificial intelligence, and, with advancements in technology, a research topic has emerged focused on conversational question answering (conversational QA), which involves understanding multiple dialogue contexts to generate responses [7]. In QA conducted in a conversational manner, accurate answers must be generated by retrieving or extracting information based not only on the current user query but also on previous dialogue history [8]. A key task in this process is to appropriately select the dialogue history that the QA model should reference to understand the question effectively. Previous studies have adopted dynamic history selection methods based on attention mechanisms [9] and reinforcement learning [10]. This paper demonstrates the effectiveness of a copying mechanism based on the GPT architecture to address one of the issues that arise in conversational QA: ellipsis and anaphora resolution.

2.2. Ellipsis and Anaphora Resolution

Ellipsis and anaphora are phenomena that frequently occur in multi-turn conversations, but they are also considered challenging problems to resolve.

Ellipsis can be regarded as a type of anaphora; however, unlike anaphora, nothing in the sentence marks the omitted parts, and the omitted meanings do not take on any special form, so it is crucial to identify only the omitted meanings from the prior information [11]. Previous studies have integrated a Seq2Seq architecture with a copying mechanism to address ellipsis resolution in conversation [12]. Existing Seq2Seq models often suffer from problems such as focusing excessively on certain pieces of information, repetitively generating the same words, or encountering Out-Of-Vocabulary (OOV) issues.

Anaphora refers to different expressions that denote the same entity and is resolved using methods similar to coreference resolution, which links any phrases or clauses that refer to the same physical entity. Recently, research has been conducted on resolving anaphora and coreference by utilizing transformer-based models like BERT and SpanBERT [13], which implicitly integrate common sense and contextual information through complex embeddings [14]. Such models are advantageous for establishing connections between substitute expressions, as they can understand the bidirectional context of any given sentence. However, since these models classify by comparing all possible candidates, they require significant classification time.

2.3. Copy Mechanism

The copying mechanism is a method that copies specific parts of the input sequence directly to the output sequence, primarily applied to Seq2Seq models for tasks such as machine translation [15] and document summarization [16]. The copying mechanism determines whether to generate new tokens or directly copy tokens from the encoder’s input sequence by using the attention mechanism during the decoding process. This approach combines the ability of language models to generate new words with the advantages of the copying mechanism to overcome OOV, effectively addressing various problems encountered in traditional Seq2Seq models [17].

In this paper, we constructed a generative model for sentence restoration by applying the Pointer Generator [18], which utilizes the copying mechanism, to the GPT architecture. The Pointer Generator decides whether to copy input tokens using the context vector generated from the encoder’s attention distribution and the switch. Since GPT consists solely of a decoder structure, there are several differences when compared with the actual functioning of the Pointer Generator:

- In the Pointer Generator, Bahdanau attention [19] is used to determine which tokens in the encoder’s input sequence receive higher weights, which then informs the calculation of the context vector. In this paper, we used 1 for tokens present in the input and 0 for tokens not present in the input.

- When creating the switch, the Pointer Generator utilizes the hidden states of both the encoder and decoder, along with the decoder’s input. In contrast, we generated the switch using only the decoder’s hidden states. In the GPT architecture, there is no encoder; instead, the final output hidden state of the decoder is assumed to contain information from all previous tokens, and the current decoder’s hidden state is employed.

Through this structure, we activated the copying mechanism to add weights to the tokens included in the input sequence, thereby increasing the probability of selecting the weighted tokens from the input sequence.
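For comparison, the original Pointer Generator [18] can be summarized by the two equations below. The notation is that of See et al., not of this paper: $h_t^{*}$ is the attention-based context vector, $s_t$ the decoder state, $x_t$ the decoder input, $a^{t}$ the attention distribution over source positions, and $P_{vocab}$ the generation distribution. It is given only as a reference point for the two differences listed above.

```latex
\begin{aligned}
p_{gen} &= \sigma\left(w_{h^{*}}^{\top} h_t^{*} + w_{s}^{\top} s_t + w_{x}^{\top} x_t + b_{ptr}\right) \\
P(w) &= p_{gen}\, P_{vocab}(w) + \left(1 - p_{gen}\right) \sum_{i:\, w_i = w} a_i^{t}
\end{aligned}
```

In the decoder-only setting described here, the attention-based copy term is replaced by a 0/1 indicator over input tokens, and the switch depends only on decoder-side quantities.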

3. The Proposed Method

3.1. Problem Definition

To resolve ellipsis and anaphora using the copying mechanism, information about the parts where ellipsis and anaphora occur is necessary. This paper aims to resolve the current utterance (prompt text), P, where ellipsis and anaphora have occurred, to obtain the resolved utterance. We check whether the two given inputs, the resolved previous utterances R and the current utterance P, belong to the same domain, and, depending on whether they are in the same domain or not, we input either both R and P together or only P to the model. We set the length of R to 2. Additionally, we assume that all ellipsis and anaphora have been resolved prior to the current utterance.

3.2. Domain Classification

As shown in Figure 1, if the inputs R and P given to the model belong to the same domain, the predicted label is set to 1, and, if they belong to different domains, it is set to 0. The classified result is multiplied by R, so the reference is kept only when the label is 1. We trained the classifier using a corpus that combines IT domain and Finance domain data. The model determines whether the previous utterance belongs to the same domain as the current utterance.

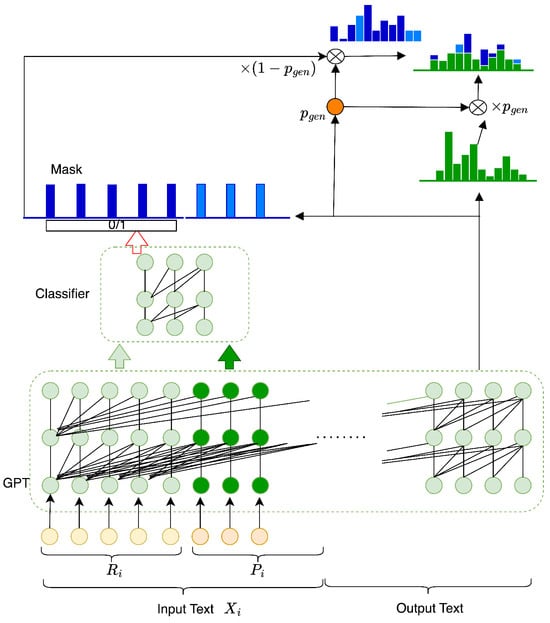

Figure 1.

Copy mechanism architecture using Mask.

3.3. Baseline

We used the Ko-GPT-Trinity (https://huggingface.co/skt/ko-gpt-trinity-1.2B-v0.5 accessed on 14 December 2024) generative model, which has a GPT [20] structure, fine-tuned for the task of resolving ellipsis and anaphora, as our baseline model. The baseline model’s input is as follows:

In this setup, the resulting prompt is used as the input for the generative model, with the references concatenated in front of the current utterance, P, based on the result of the domain classifier. If the inputs belong to the same domain, the previous information, R, is concatenated. However, if they are from different domains, the previous information should not be referenced, so no reference information is included. The elements are separated by the delimiter ‘;’ to specify the parts that need to be resolved.
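The gating and concatenation described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function name, the exact spacing around the delimiter, and the use of the ‘@@’ placeholder when the classifier rejects the reference are assumptions (the text only states that ‘@@’ is inserted when there is no previous information).

```python
from typing import List, Sequence

DELIMITER = ";"      # separator between prompt elements, as described in the text
NO_REFERENCE = "@@"  # placeholder when no previous information is attached (assumed usage)


def build_baseline_prompt(references: Sequence[str], current: str, same_domain: int) -> str:
    """Concatenate references in front of the current utterance, gated by the classifier label.

    same_domain is the (hypothetical) domain-classifier output: 1 keeps the
    references, 0 drops them so that only the current utterance is used.
    """
    kept: List[str] = list(references) if same_domain == 1 else []
    parts = kept if kept else [NO_REFERENCE]
    return DELIMITER.join(parts + [current])
```

For example, with two resolved previous utterances and a label of 1, the prompt contains both references followed by the current utterance; with a label of 0, only the placeholder and the current utterance remain.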

3.4. Copy-Mechanism GPT with Mask

We conducted resolution experiments using a model called CGM (Copy-Mechanism GPT with Mask), which applies the copying mechanism to the generative model. The architecture is shown in Figure 1. Like the baseline, we use prompts as in Equation (1) based on the classifier’s results, but we adjust the prediction probabilities of the generative model through operations using the Mask and the switch value, $p_{gen}$, and then determine the final probability, $P_{final}$. The Mask is a one-dimensional vector created by marking tokens used in the input prompt as 1 and others as 0; it is then combined with $h_t$, which is the GPT’s last hidden value at the current time, $t$. $p_{gen}$ is a value obtained by passing the Mask and $h_t$ through a linear layer and then applying a sigmoid function, acting as a switch to determine how much weight to give to tokens used in the input. The formula for generating $p_{gen}$ is as follows:

$$p_{gen} = \sigma\left(w_m^{\top}\,\mathrm{Mask} + w_h^{\top} h_t\right)$$

The value $p_{gen}$ is multiplied by $P_{GPT}$, which is the prediction probability of the existing generative model, GPT. Because $p_{gen}$ lies between 0 and 1, multiplying by $p_{gen}$ decreases the prediction probability value. Additionally, the value obtained by subtracting $p_{gen}$ from 1 is multiplied by the Mask, indicating the weight that the input tokens marked in the Mask will receive. Through this process, the Mask and the predicted probabilities are combined, allowing the tokens used in the provided prompt to receive additional weights. The final predicted probability can be expressed as follows:

$$P_{final}(i) = p_{gen}\,P_{GPT}(i) + \left(1 - p_{gen}\right)\mathrm{Mask}_i$$

Here, $w_m$ and $w_h$ are weight vectors. $\mathrm{Mask}_i$ equals 1 if the $i$-th token is present in the input, and 0 otherwise.
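The mixing step described in this subsection can be sketched in a few lines of plain Python. It is an illustrative reading of the prose, not the released implementation: the function names, the split of the linear layer into one weight vector for the Mask and one for the hidden state, and the optional bias are assumptions. The switch scales the GPT probabilities, and one minus the switch scales the 0/1 Mask over the vocabulary.

```python
import math
from typing import List, Sequence


def build_mask(input_token_ids: Sequence[int], vocab_size: int) -> List[float]:
    """Vocabulary-sized 0/1 vector: 1 for token ids that occur in the input prompt."""
    mask = [0.0] * vocab_size
    for token_id in input_token_ids:
        mask[token_id] = 1.0
    return mask


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def switch_value(mask: Sequence[float], hidden: Sequence[float],
                 w_mask: Sequence[float], w_hidden: Sequence[float],
                 bias: float = 0.0) -> float:
    """Linear layer over the Mask and the last hidden state, followed by a sigmoid.

    The two weight vectors and the bias are hypothetical parameters.
    """
    score = sum(w * m for w, m in zip(w_mask, mask))
    score += sum(w * h for w, h in zip(w_hidden, hidden))
    return sigmoid(score + bias)


def mix_with_mask(p_gpt: Sequence[float], mask: Sequence[float], switch: float) -> List[float]:
    """Per vocabulary entry: switch * P_GPT + (1 - switch) * Mask."""
    return [switch * p + (1.0 - switch) * m for p, m in zip(p_gpt, mask)]
```

With a switch of 0.5, a token that appears in the prompt and has GPT probability 0.1 ends up with a score of about 0.55, while a token absent from the prompt with GPT probability 0.4 drops to 0.2. Note that, read literally, the mixed scores are not renormalized; whether a normalization step follows is not stated in the text.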

3.5. Training

We trained the model using standard cross entropy loss. The learning rate was set to and the batch size was set to 8. We used a pre-trained GPT-2 model and fine-tuned it using our training data. For experiments with the classifier, we additionally trained only the classifier model separately.

4. Experimental Results

4.1. Experimental Corpus

In our experiments, we used dialogue data about financial products and IT contract products. Table 2 shows the data used in the experiments (since these data were provided by Korea Telecom and cannot be fully disclosed, we have published a portion of the data on GitHub (https://github.com/cwnu-airlab/CGM, accessed on 14 December 2024)). Additionally, to verify the generality of our proposed method, we conducted experiments using the data published in [12]. Table 3 shows the proportion of ellipsis and anaphora, and the number of dialogues in the experimental dataset.

Table 2.

The statistics of experimental dataset.

Table 3.

The number of ellipsis and anaphora in experimental dataset.

4.2. Comparing Models

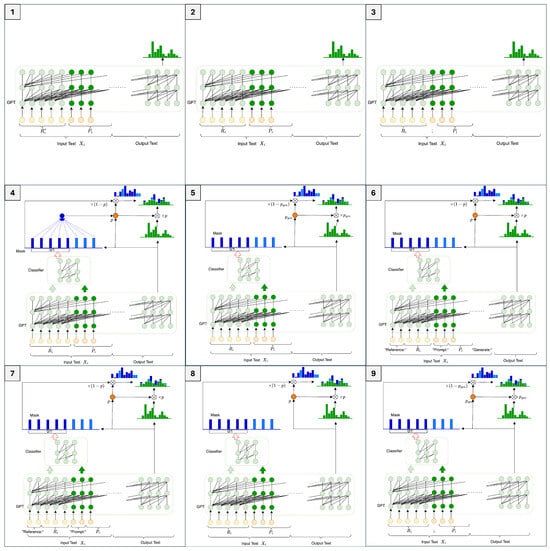

We implemented and compared the following models to verify whether the model preserves the given keywords effectively in environments with limited training data. The detailed model structure is shown in Figure 2.

Figure 2.

Comparing models.

- : a general GPT-2 structure without a copying mechanism, using a prompt that sequentially concatenates the unresolved previous utterances, , and the current utterance, P, without any delimiters.

- : a general GPT-2 structure without a copying mechanism, using a prompt that concatenates the resolved previous utterances, R, and the current utterance, P, in sequence without any delimiters.

- Baseline: a version of that inserts the special character ‘@@’ when there is no previous information and uses the delimiter ‘;’ between each element.

- PG-GPT: a model that applies the Pointer Generator structure to GPT, using a Mask based on the input tokens to copy the information instead of a context vector based on attention distribution.

- CGM: a variant of PG-GPT that does not use the Mask when generating the switch.

- CGM + HardPrompt A: a modified model within the CGM structure that changes the prompt structure. Prompt A is ‘Reference: R Prompt: P Generate:’.

- CGM + HardPrompt B: prompt B excludes the ‘Generate:’ part from CGM + HardPrompt A.

- CGM + 0/1 Mask only: a structure within CGM that does not use the last hidden state when generating the Mask.

- CGM + SepTwice: a model that modifies the prompt structure within CGM, using the delimiter twice before the current utterance, P, in the prompt.
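The prompt variants in the list above differ only in how the reference R and the current utterance P are laid out. The sketch below illustrates the three modified layouts; the function names are hypothetical, and the exact whitespace as well as the choice of ‘;’ as the doubled delimiter are assumptions based on the descriptions.

```python
def hard_prompt_a(reference: str, current: str) -> str:
    """Prompt A: 'Reference: R Prompt: P Generate:'."""
    return f"Reference: {reference} Prompt: {current} Generate:"


def hard_prompt_b(reference: str, current: str) -> str:
    """Prompt B: Prompt A without the trailing 'Generate:' part."""
    return f"Reference: {reference} Prompt: {current}"


def sep_twice_prompt(reference: str, current: str, delimiter: str = ";") -> str:
    """SepTwice: the delimiter appears twice before the current utterance."""
    return f"{reference}{delimiter}{delimiter}{current}"
```

Keeping the layouts as separate functions makes it easy to swap the prompt format while leaving the model and the Mask construction unchanged, which mirrors how the variants are compared in the experiments.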

4.3. Experimental Results and Analysis

4.3.1. Evaluation Metrics

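In our experiments, we used BLEU [21], Rouge-L [22], simCSE [23], and BERTScore [24]. The BLEU score evaluates fluency by calculating the n-gram precision between candidate and reference sentences, with a brevity penalty (BP):

$$\mathrm{BLEU} = \mathrm{BP} \cdot \exp\left(\sum_{n=1}^{N} w_n \log p_n\right)$$

where $p_n$ is the n-gram precision and $w_n$ is the weight for each n-gram precision. The brevity penalty penalizes short translations:

$$\mathrm{BP} = \begin{cases} 1 & \text{if } c > r \\ e^{\,1 - r/c} & \text{if } c \le r \end{cases}$$

where c is the length of the candidate sentence and r is the length of the reference sentence. Rouge-L evaluates the longest common subsequence (LCS) between a candidate sequence and a reference sequence. Given a reference sequence X of length m and a candidate sequence Y of length n, Rouge-L is calculated as:

$$R_{lcs} = \frac{LCS(X, Y)}{m}, \qquad P_{lcs} = \frac{LCS(X, Y)}{n}, \qquad F_{lcs} = \frac{(1 + \beta^{2})\, R_{lcs}\, P_{lcs}}{R_{lcs} + \beta^{2} P_{lcs}}$$

where LCS(X, Y) is the length of the longest common subsequence between X and Y, and $\beta$ weights recall relative to precision. As another metric to evaluate the model’s restoration capability, we calculate F1 through exact matching between the reference sequence and candidate sequence. We evaluated how well the restoration target was reconstructed using BLEU, Rouge-L, and F1, which indicate how accurately words match, and assessed how well the intent expressed in the user’s current utterance was maintained using simCSE and BERTScore, which represent semantic similarity between two sentences. simCSE computes the cosine similarity between the sentence embeddings $e_x$ and $e_y$ to measure semantic similarity:

$$\mathrm{sim}(x, y) = \frac{e_x \cdot e_y}{\lVert e_x \rVert\, \lVert e_y \rVert}$$

BERTScore computes token-level cosine similarity between BERT embeddings of two sentences, with importance weighting:

$$R_{\mathrm{BERT}} = \frac{\sum_{x_i \in x} \mathrm{idf}(x_i) \max_{y_j \in y} x_i^{\top} y_j}{\sum_{x_i \in x} \mathrm{idf}(x_i)}, \qquad P_{\mathrm{BERT}} = \frac{\sum_{y_j \in y} \mathrm{idf}(y_j) \max_{x_i \in x} x_i^{\top} y_j}{\sum_{y_j \in y} \mathrm{idf}(y_j)}, \qquad F_{\mathrm{BERT}} = \frac{2\, P_{\mathrm{BERT}}\, R_{\mathrm{BERT}}}{P_{\mathrm{BERT}} + R_{\mathrm{BERT}}}$$

where x and y are the reference and candidate sentences, respectively, $x_i$ and $y_j$ are their normalized token embeddings, and idf is the inverse-document-frequency importance weight.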
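To make the word-matching metrics concrete, the following sketch computes the BLEU brevity penalty, the LCS length, an LCS-based Rouge-L F-score, and the cosine similarity used by embedding-based metrics. It is a simplified illustration under stated assumptions: inputs are pre-tokenized lists (or embedding vectors for the cosine), `beta = 1` is an assumed default, and the reported scores in this paper were presumably produced with standard evaluation packages rather than this code.

```python
import math
from typing import Sequence


def brevity_penalty(c: int, r: int) -> float:
    """BLEU brevity penalty: 1 if candidate length c > reference length r, else exp(1 - r / c)."""
    if c == 0:
        return 0.0
    return 1.0 if c > r else math.exp(1.0 - r / c)


def lcs_length(x: Sequence[str], y: Sequence[str]) -> int:
    """Length of the longest common subsequence, via row-by-row dynamic programming."""
    prev = [0] * (len(y) + 1)
    for xi in x:
        curr = [0]
        for j, yj in enumerate(y, start=1):
            curr.append(prev[j - 1] + 1 if xi == yj else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l(reference: Sequence[str], candidate: Sequence[str], beta: float = 1.0) -> float:
    """LCS-based F-score; recall uses the reference length, precision the candidate length."""
    lcs = lcs_length(reference, candidate)
    if lcs == 0:
        return 0.0
    recall = lcs / len(reference)
    precision = lcs / len(candidate)
    return (1 + beta ** 2) * recall * precision / (recall + beta ** 2 * precision)


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between two embedding vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0
```

For a four-token reference and a three-token candidate that is a subsequence of it, recall is 3/4 and precision is 1, giving an F-score of 6/7 with `beta = 1`.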

4.3.2. IT Domain Utterance Restoration Experiments

Table 4 shows the performance results. We conducted experiments in a single domain to verify the restoration effect of the proposed method. We compared the performance by changing each element.

Table 4.

Experimental result of utterance restoration in a single domain. Bold represents the best performance in each evaluation indicator.

Using the resolved information, R, we achieved higher performance compared with using the simple history, and adding special characters to distinguish each sentence improved performance across all metrics. Additionally, the BERTScore and simCSE scores confirmed that the resolved sentences effectively preserved the original intent expressed by the user. In particular, the CGM-based model that applied the copying mechanism achieved higher performance in BLEU and Rouge-L than the baseline, as it operates by directly copying the input tokens for output. In the restaurant reservation data as well, although the proposed method showed slightly lower performance in terms of sentence fluency and intent preservation, it demonstrated high performance in Rouge-L and F1, which indicate restoration capability.

Table 5 presents examples of each model’s result. In the current utterance, P, “But what if there’s an accident because of the robot?”, the virtual product ‘AI delivery robot’ was substituted with ‘robot’, yet it can be observed that the ‘AI delivery robot’ was effectively restored by referencing the previous history.

Table 5.

Examples of sentence restoration by model. Bold represents the keyword that needs to be recovered in the result of models.

Table 6 shows the resolution performance for each utterance type. In most performance metrics, CGM-based models applying the copy mechanism recorded high performance. For anaphora and ellipsis types, which require accurately copying necessary keywords from previous time points, CGM showed relatively high performance by copying even detailed keywords, as shown in Table 7, unlike other CGM-based models that only copy higher-level concept keywords. While other models simply connected the product name and the current question and failed to replace ‘the first product’ with the product name, CGM was able to find ‘the first product’ in the answer and correctly restore it. Furthermore, as shown in Table 8, CGM can accurately convey the exact meaning of the user’s utterance by copying only ‘card payments’, excluding other unnecessary words. On the other hand, for the ‘Others’ type, CGM recorded lower performance compared with other models. This is because, in the current copy mechanism structure for GPT, it is not possible to assign concentrated weights to specific parts of the given input information, so it failed to find the correct parts to copy. Table 9 shows an example of this. Compared with other models that correctly restored the product name without altering the user’s intent, CGM additionally restored ‘service customer’, which altered the user’s intent. In the restaurant reservation data as well, similar to the IT domain, CGM-based models showed generally high performance in Rouge-L and F1 metrics.

Table 6.

Experimental results by utterance type. Bold represents the best performance in each evaluation indicator.

Table 7.

Examples of anaphora resolution. Bold represents the keyword that needs to be recovered in the result of models.

Table 8.

Examples of ellipsis type restoration.

Table 9.

Examples of other type restoration. Due to the limitations of the Mask, the current information is not emphasized, leading to the incorrect copying of previous information.

4.3.3. Detecting Domain

We also conducted experiments in a dialogue environment where domain shifts occur to verify the effectiveness of the copy mechanism on out-of-domain data.

For four CGM-based models that showed relatively high performance in single-domain environments, we examined their performance with and without the use of a domain classifier. The results are shown in Table 10, which demonstrate that using the domain classifier improves performance in BLEU and ROUGE, indicating more accurate word matching across all models.

Table 10.

Sentence restoration experiment results based on the inclusion of domain classification.

5. Conclusions

We propose a copying mechanism structure for GPT-based sLLMs and validate its ability to preserve keywords found in the input by applying it to the tasks of resolving ellipsis and anaphora in conversation. To adapt the existing Pointer Generator structure, which is based on an encoder–decoder architecture, to the GPT structure, we adopted a Mask structure based on input tokens. Additionally, we conducted various experiments on ellipsis and anaphora resolution across different structures beyond the existing Pointer Generator structure, demonstrating that the Pointer Generator can be introduced within the GPT architecture. Notably, through experiments on ellipsis and anaphora resolution in conversations with domain transitions, we showed that adopting the Pointer Generator structure enables effective resolution of ellipsis and anaphora, even for domains outside the training data. By implementing the copying mechanism using the Mask in the GPT architecture, we hope to facilitate the use of language models in restricted situations, such as with limited training data. However, the current structure has limitations: it cannot specify which parts of the input information play an important role in generation, and, since it is based on tokenizer token IDs, it cannot handle OOV occurrences. We leave these issues for future research.

Author Contributions

Data curation, J.-W.C. (Ji-Won Cho); formal analysis, J.-W.C. (Ji-Won Cho), J.O. and J.-W.C. (Jeong-Won Cha); methodology, J.-W.C. (Ji-Won Cho), J.O. and J.-W.C. (Jeong-Won Cha); project administration, J.-W.C. (Jeong-Won Cha); software, J.-W.C. (Ji-Won Cho); supervision, J.-W.C. (Jeong-Won Cha); writing—original draft, J.-W.C. (Ji-Won Cho) and J.O.; writing—review and editing, J.O. and J.-W.C. (Jeong-Won Cha). All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by Institute for Information & Communications Technology Planning & Evaluation (IITP) grant funded by the Korea government (MSIT) (No. 2022-0-00320, RS-2022-II220320, Artificial intelligence research about cross-modal dialogue modeling for one-on-one multi-modal interactions).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Xu, S.; Li, H.; Yuan, P.; Wu, Y.; He, X.; Zhou, B. Self-Attention Guided Copy Mechanism for Abstractive Summarization. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 1355–1362.

- Raffel, C.; Shazeer, N.M.; Roberts, A.; Lee, K.; Narang, S.; Matena, M.; Zhou, Y.; Li, W.; Liu, P.J. Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer. J. Mach. Learn. Res. 2019, 21, 140:1–140:67.

- González, J.L.V.; Rodríguez, A.F. Importance of Pronominal Anaphora Resolution in Question Answering Systems. In Proceedings of the Annual Meeting of the Association for Computational Linguistics, Hong Kong, China, 3–6 October 2000.

- Aralikatte, R.; Lamm, M.; Hardt, D.; Søgaard, A. Ellipsis and Coreference Resolution as Question Answering. arXiv 2019, arXiv:1908.11141.

- Aralikatte, R.; Lamm, M.; Hardt, D.; Søgaard, A. Ellipsis Resolution as Question Answering: An Evaluation. In Proceedings of the Conference of the European Chapter of the Association for Computational Linguistics, Kiev, Ukraine, 21–23 April 2021.

- Park, C.; Choi, K.H.; Lee, C.; Lim, S. Korean Coreference Resolution with Guided Mention Pair Model Using Deep Learning. ETRI J. 2016, 38, 1207–1217.

- Zaib, M.; Zhang, W.E.; Sheng, Q.Z.; Mahmood, A.; Zhang, Y. Conversational question answering: A survey. Knowl. Inf. Syst. 2021, 64, 3151–3195.

- Choi, E.; He, H.; Iyyer, M.; Yatskar, M.; Yih, W.T.; Choi, Y.; Liang, P.; Zettlemoyer, L. QuAC: Question Answering in Context. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; pp. 2174–2184.

- Qu, C.; Yang, L.; Qiu, M.; Zhang, Y.; Chen, C.; Croft, W.B.; Iyyer, M. Attentive History Selection for Conversational Question Answering. In Proceedings of the 28th ACM International Conference on Information and Knowledge Management, Beijing, China, 3–7 November 2019.

- Qiu, M.; Huang, X.; Chen, C.; Ji, F.; Qu, C.; Wei, W.; Huang, J.; Zhang, Y. Reinforced History Backtracking for Conversational Question Answering. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 2–9 February 2021.

- Mutal, J.; Gerlach, J.; Bouillon, P.; Spechbach, H. Ellipsis Translation for a Medical Speech to Speech Translation System. In Proceedings of the European Association for Machine Translation Conferences/Workshops, Lisbon, Portugal, 4–6 May 2020.

- Quan, J.; Xiong, D.; Webber, B.L.; Hu, C. GECOR: An End-to-End Generative Ellipsis and Co-reference Resolution Model for Task-Oriented Dialogue. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Hong Kong, China, 3–7 November 2019.

- Joshi, M.; Chen, D.; Liu, Y.; Weld, D.S.; Zettlemoyer, L.; Levy, O. SpanBERT: Improving Pre-training by Representing and Predicting Spans. Trans. Assoc. Comput. Linguist. 2019, 8, 64–77.

- Liu, R.; Mao, R.; Luu, A.T.; Cambria, E. A brief survey on recent advances in coreference resolution. Artif. Intell. Rev. 2023, 56, 14439–14481.

- Gülçehre, C.; Ahn, S.; Nallapati, R.; Zhou, B.; Bengio, Y. Pointing the Unknown Words. arXiv 2016, arXiv:1603.08148.

- Gu, J.; Lu, Z.; Li, H.; Li, V.O.K. Incorporating Copying Mechanism in Sequence-to-Sequence Learning. arXiv 2016, arXiv:1603.06393.

- Al-Sabahi, K.; Yang, K. Supervised Copy Mechanism for Grammatical Error Correction. IEEE Access 2023, 11, 72374–72383.

- See, A.; Liu, P.J.; Manning, C.D. Get To The Point: Summarization with Pointer-Generator Networks. arXiv 2017, arXiv:1704.04368.

- Bahdanau, D.; Cho, K.; Bengio, Y. Neural Machine Translation by Jointly Learning to Align and Translate. CoRR 2014, arXiv:1409.0473.

- Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language Models are Unsupervised Multitask Learners. OpenAI Blog 2019, 1, 9.

- Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.J. Bleu: A Method for Automatic Evaluation of Machine Translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, Philadelphia, PA, USA, 6–12 July 2002; pp. 311–318.

- Lin, C.Y. ROUGE: A Package for Automatic Evaluation of Summaries. In Text Summarization Branches Out; Association for Computational Linguistics: Barcelona, Spain, 2004; pp. 74–81.

- Gao, T.; Yao, X.; Chen, D. SimCSE: Simple Contrastive Learning of Sentence Embeddings. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, Punta Cana, Dominican Republic, 7–11 November 2021; pp. 6894–6910.

- Zhang, T.; Kishore, V.; Wu, F.; Weinberger, K.Q.; Artzi, Y. BERTScore: Evaluating Text Generation with BERT. arXiv 2019, arXiv:1904.09675.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).