Real-Time Recognition of Korean Traditional Dance Movements Using BlazePose and a Metadata-Enhanced Framework

Abstract

1. Introduction

2. Background Works

2.1. Classification and Characteristics of Korean Traditional Dance Movements

| Dance Movement Classification Method | Classification | Description |

|---|---|---|

| Body-Based Movement Classification | Classification by body parts (head, shoulders, hands, feet, knees) | Lee Eun-joo (1996) proposed a method for categorizing dance movements by body parts, such as the head, arms, feet, and legs, into applied movements [5]. Later, Lee Bo-reum (2022) refined this method, systematizing a detailed classification based on specific body parts suitable for Korean court dance education [27]. |

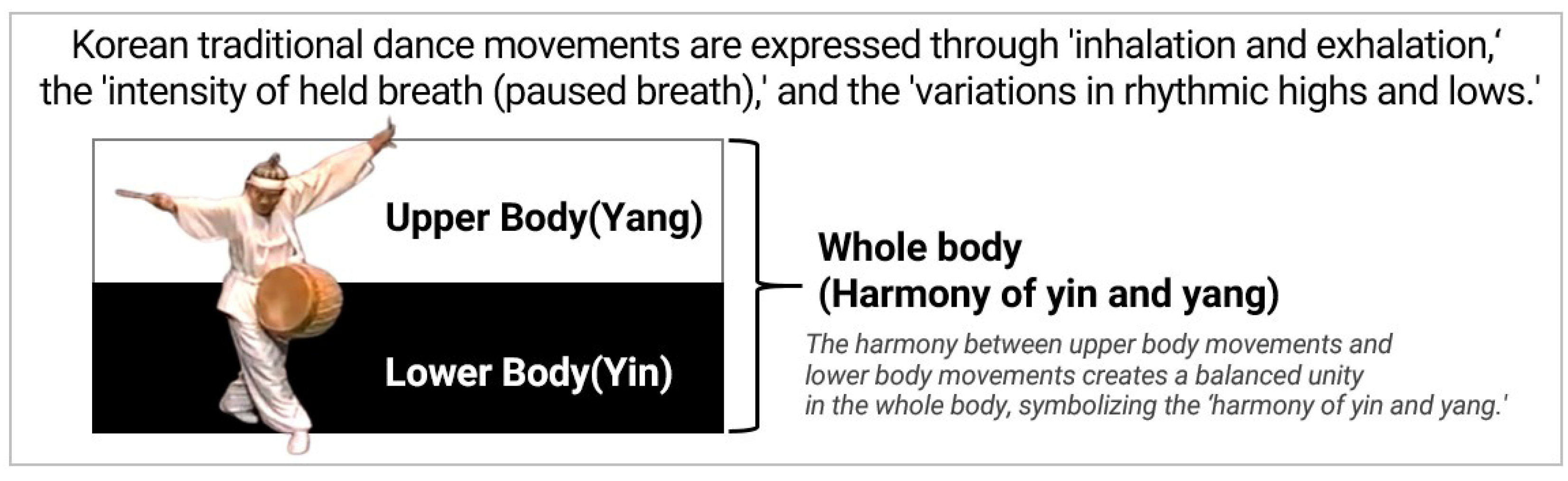

| Dance Movement Element-Based Classification | Upper body dance movement—48 items/ Lower body dance movement—48 items | This is a classification method that subdivides the upper and lower body into 48 items each, utilizing the breathing techniques of Korean dance and the concept of yin and yang. Seo Hee-joo (2003) systematized this [15], while Cha Su-jeong (2005) constructed 48 movements distinguishing the lower body and upper body for traditional dance education [16]. |

| Upper body dance Movement—18 items/ Lower body dance movement—18 items | Heo Sun-sun developed 18 upper and lower body movements based on traditional philosophy, including the concepts of heaven, earth, and humanity, as well as yin-yang and the five elements, emphasizing their connection to breathing [17,18,19,28]. | |

| Dance Type-Based Movement Classification | Movement classification by dance type | The traditional Korean fan dance, known as “buchaechum,” is a modern dance created by dancers and has developed under the influence of other traditional dances, such as the sword dance. The fan dance consists of a total of 21 movements [20], while the Honam sword dance consists of 11 movements [21,23]. |

| Labanotation-Based Movement Element Classification | Standardization of traditional dance movement elements based on Western techniques (LMA, Laban Movement Analysis) | Kang Sung-beom (2004) analyzed the key elements of Korean traditional dance through Laban Movement Analysis (LMA), and subsequently [24], Choi Won-sun (2016, 2018) utilized LMA analysis as a method to reassess the value of the intangible cultural heritage of Jindo [25,26]. |

2.2. Applications of AI in Dance Movement Analysis and Creation

2.3. AI Model Research on Motion Recognition

3. Materials and Methods

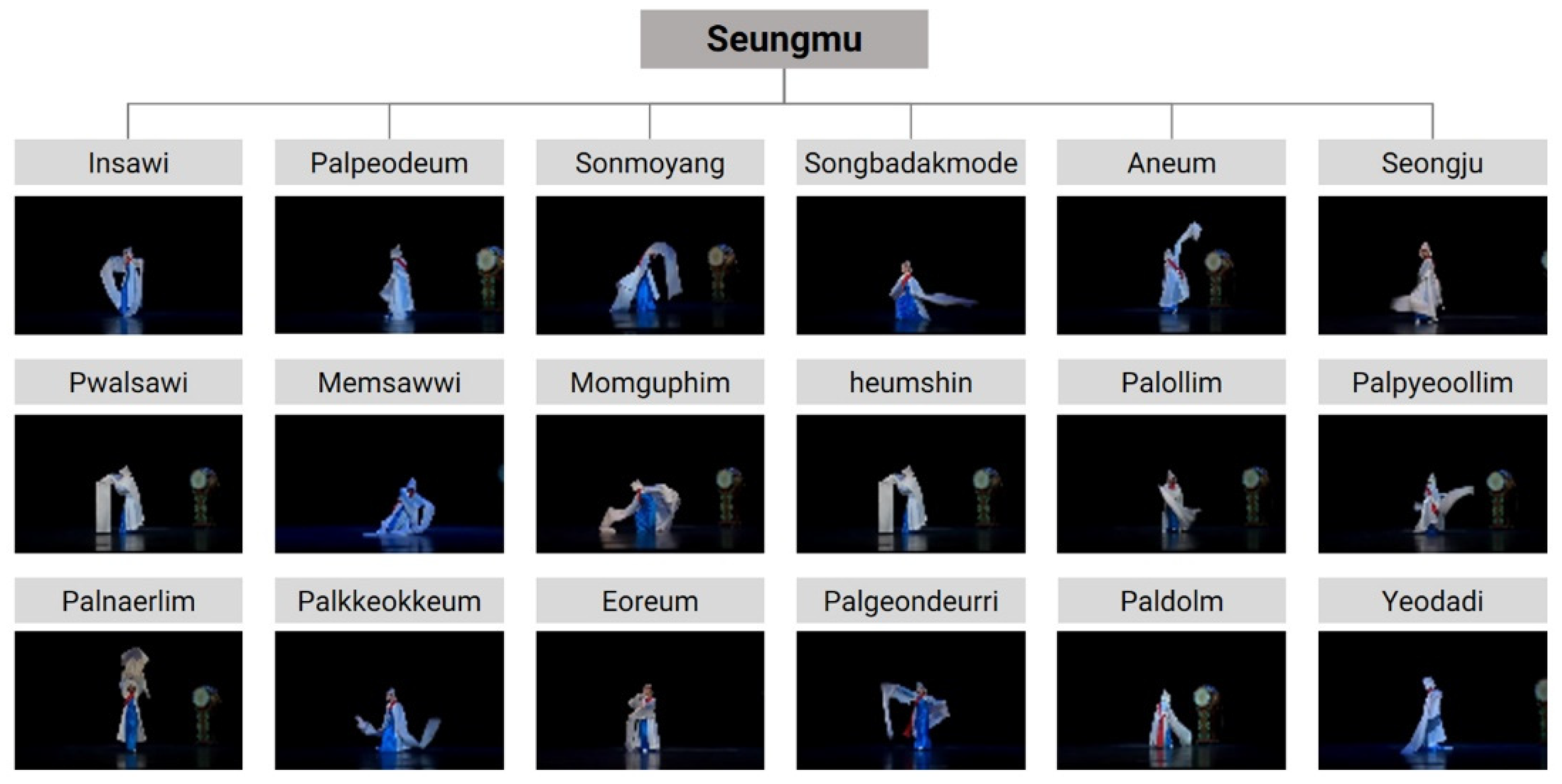

3.1. Establishing a Classification Framework for Korean Traditional Dance Movements

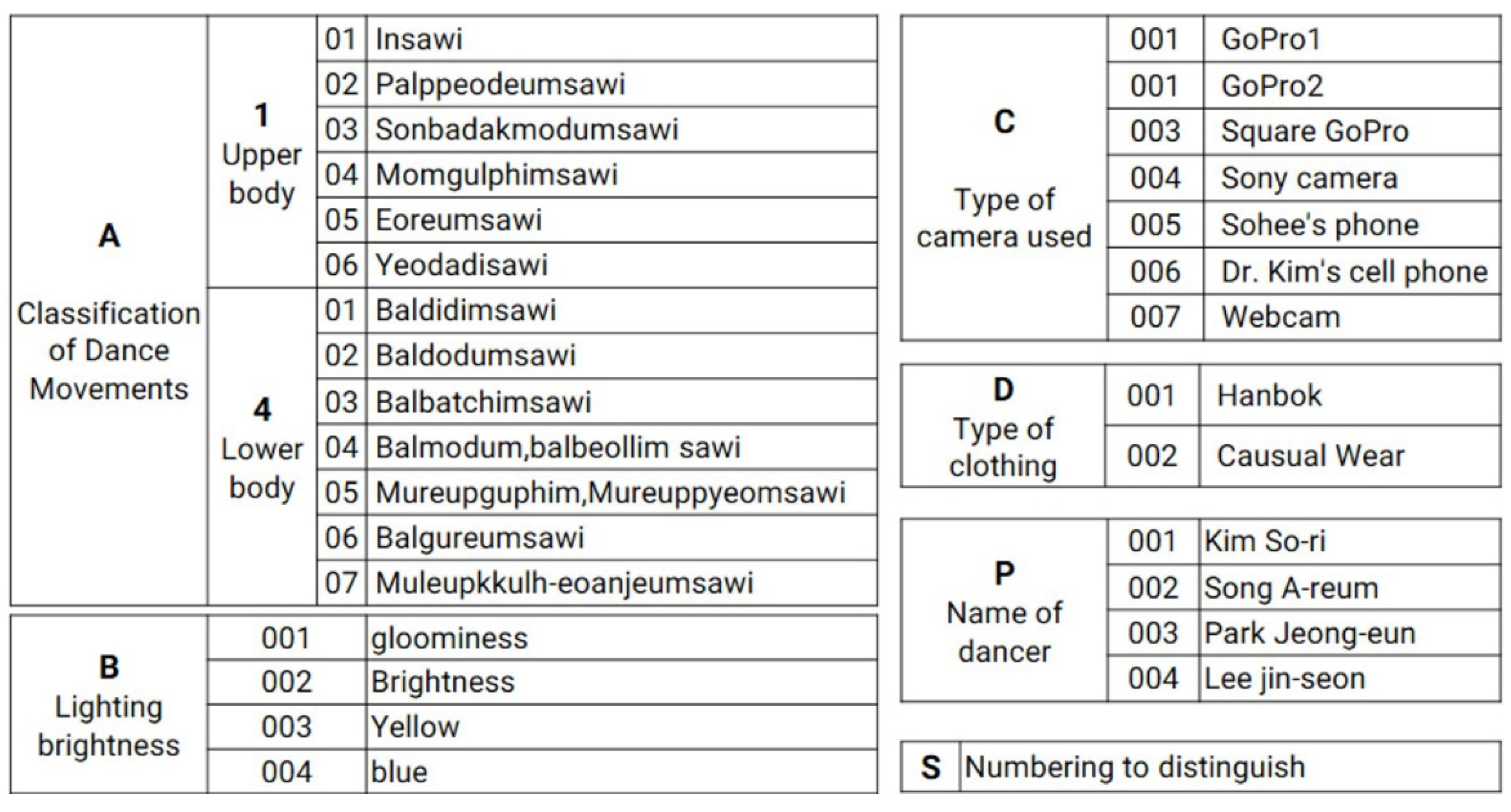

3.2. Establishing a Metadata Set for Traditional Dance Training

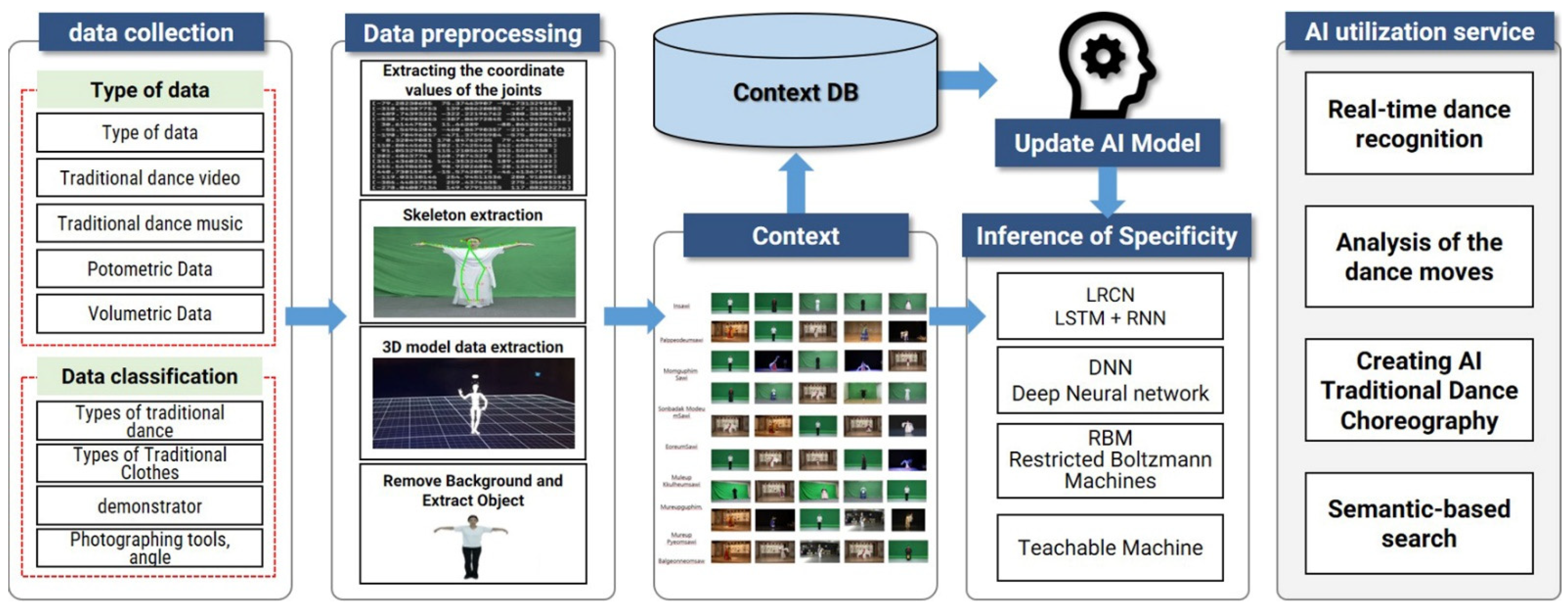

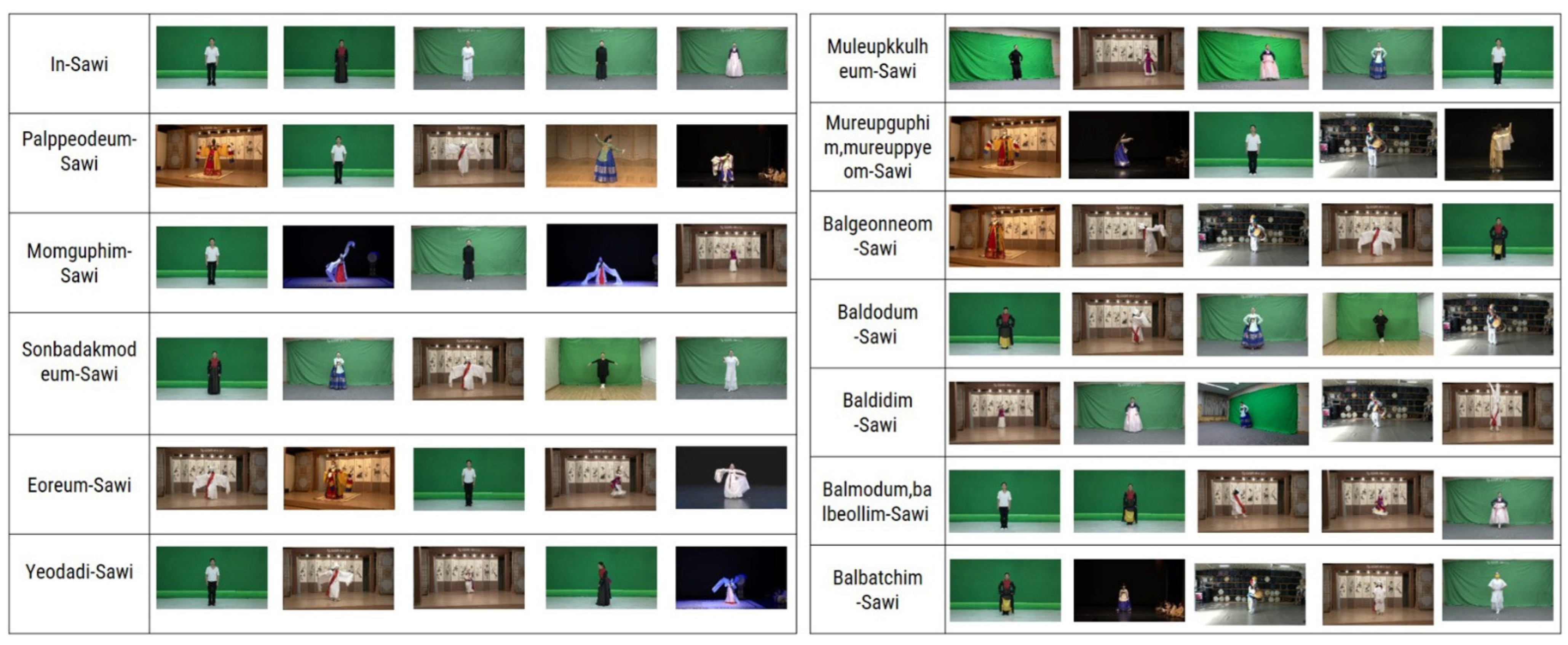

3.2.1. Collecting Video Data on Traditional Dance

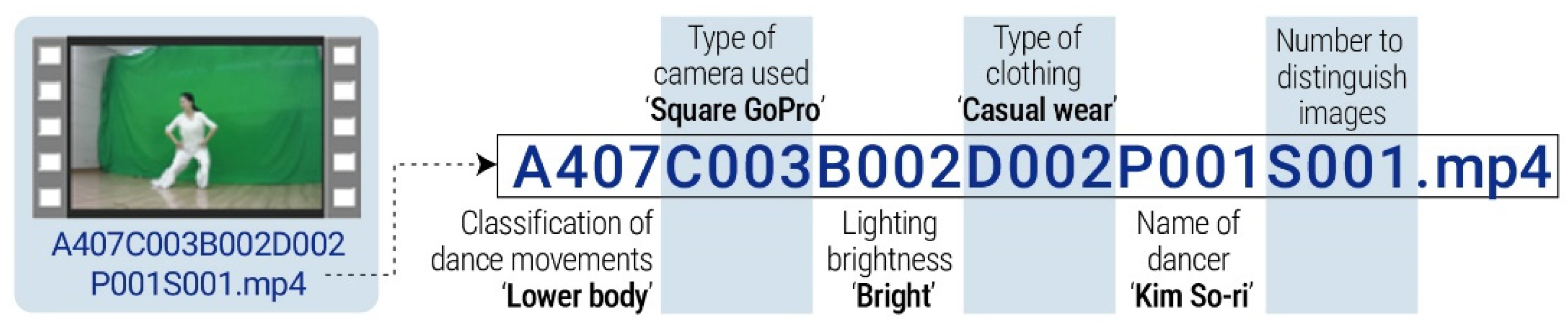

3.2.2. Generating a Metadata Set for Traditional Dance Training

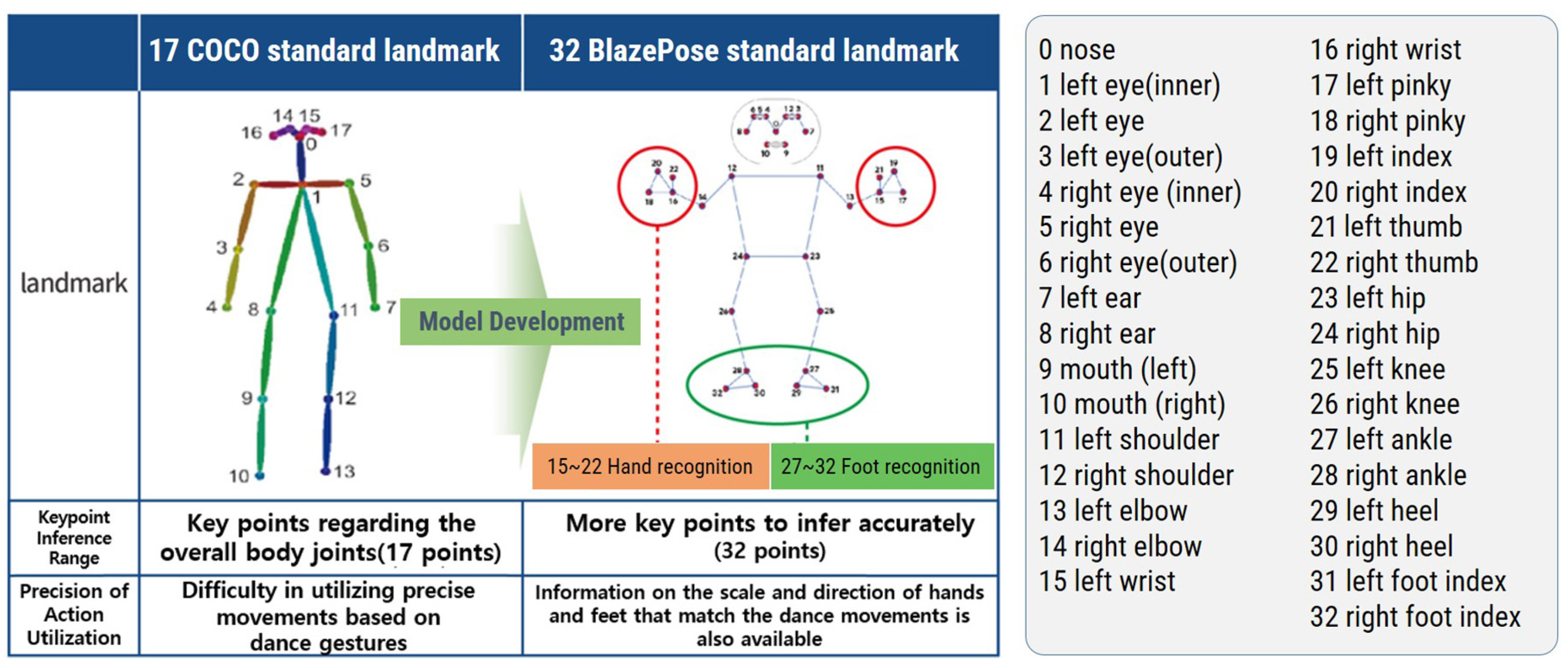

3.3. Development of an AI Prototype Specialized in Traditional Dance Movements

4. Results

5. Limitations and Future Work

6. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Park, K.-S. Dance Movements; Ilji Publishing: Seoul, Republic of Korea, 1982.
2. Jung, B.-H. The Aesthetics of Korean Dance; Jipmoondang: Seoul, Republic of Korea, 2004.
3. Jung, B.-H. The Archetype and Recreation of Korean Traditional Dance; Minsokwon: Seoul, Republic of Korea, 2011.
4. Kim, Y. Basic Breathing; Modern Aesthetics Press: Seoul, Republic of Korea, 1997.
5. Lee, E.-J. A study on Korean dance terminology. Incheon Coll. J. 1996, 24, 315–333.
6. Lee, E.-J. A study on standardization of dance terminology in Korean dance. Korean Danc. Soc. 1997, 25, 187–219.
7. Lee, B.-R. Modular educational system for Korean court dance: Focusing on dance terminology in Korean and Chinese texts. Yeongnam Danc. J. 2002, 10, 197–223.
8. Chan, C.; Ginosar, S.; Zhou, T.; Efros, A.A. Everybody Dance Now. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 5933–5942.
9. Kishore, P.V.V.; Kumar, K.V.V.; Kumar, E.K.; Sastry, A.S.C.S.; Kiran, M.T.; Kumar, D.A.; Prasad, M.V.D. Indian classical dance action identification and classification with convolutional neural networks. Adv. Multimed. 2018, 2018, 5141402.
10. Mohanty, A.; Vaishnavi, P.; Jana, P.; Majumdar, A.; Ahmed, A.; Goswami, T.; Sahay, R.R. Nrityabodha: Towards understanding Indian classical dance using a deep learning approach. Signal Process. Image Commun. 2016, 47, 529–548.
11. Kumar, K.V.V.; Kishore, P.V.V.; Anil Kumar, D. Indian classical dance classification with adaboost multiclass classifier on multifeature fusion. Math. Probl. Eng. 2017, 2017, 6204742.
12. Lee, S. Complexity of dance movements and limitations of pose recognition technology. J. Motion Data Anal. 2022, 10, 75–85.
13. Chen; Xu, Y.; Zhang, C.; Xu, Z.; Meng, X.; Wang, J. An improved two-stream 3D convolutional neural network for human action recognition. In Proceedings of the 2019 25th International Conference on Automation and Computing (ICAC), Lancaster, UK, 5–7 September 2019; pp. 1–6.
14. Crnkovic-Friis, L.; Friis, L. Generative choreography using deep learning. arXiv 2016, arXiv:1605.06921.
15. Seo, H.-J. Explanation of Breathing Techniques and Terminology in Korean Dance; Iljisa: Seoul, Republic of Korea, 2003.
16. Cha, S.-J. Development of Basic Curriculum Programs for Korean Traditional Dance. Ph.D. Dissertation, Sookmyung Women’s University, Seoul, Republic of Korea, 2005.
17. Heo, S.-S. Traditional Dance Movements of Korea; Hyungseol Publishing: Seoul, Republic of Korea, 1996.
18. Heo, S.-S. A study on the establishment of terminology for Park Geum-Seul’s dance movements. Korean Future Dance Soc. Res. J. 1997, 4, 223–254.
19. Heo, S.-S. Korean Dance Movements and Notation; Hyungseul Publishing: Seoul, Republic of Korea, 2005.
20. Choi, S.-H.; Kim, B.-B.; Jang, G.-D. A study on types and characteristics of 20th-century fan dance. J. Korean Soc. Sports Sci. 2021.
21. Jang, S.-J. A Study on the Transmission Status and Preservation Value of Hanjin-ok’s Honam Sword Dance. Master’s Thesis, Chosun University, Gwangju, Republic of Korea, 2020.
22. Jeon, S.-H. Comparative study of fan dance between Koreans and Korean-Chinese: Focused on dance movements and aesthetic orientation. J. Folklore Stud. 2019, 38, 201–238.
23. Park, J.-E. A Study on the Characteristics and Heritage Value of Hanjin-ok Style Sword Dance Movements. Master’s Thesis, Chonnam National University, Gwangju, Republic of Korea, 2021.
24. Kang, S.-B. Analysis of movement characteristics of Korean traditional dance through LMA: Focused on Lee Mae-Bang’s Seungmu. J. Dance Hist. Doc. 2004, 6, 1–29.
25. Choi, W.-S. Characteristics of Jin-Do drum dance movements by Park Byung-Cheon through LMA analysis. J. Dance Hist. Doc. 2016, 43, 113–141.
26. Choi, W.-S. Utilization of LMA for movement analysis and re-evaluation of cultural archetypes in Korean traditional dance. Our Dance Sci. Technol. 2018, 14, 79–108.
27. Lee, B.-R. Establishing a classification system based on body parts for Korean court dance education. J. Korean Dance Stud. 2022, 30, 123–145.
28. Heo, S.-S.; Oh, R.; Kim, O.-H.; Yang, S.-J.; Lee, Y.-J. The structure and terminology of basic dance movements in Korean dance. J. Korean Dance Educ. 2013, 24, 137–149.
29. Yang, J.S.; Han, Y.M. Folk Dance and Art: Discussions on Korean Traditional Dance and New Dance. Comparative Folklore 2002, 23, 83–102.
30. Heo, S.-S. Characteristics of notation for Park Eun-Ha’s Namdo Gipsugeon Dance. Korean Dance Stud. 2012, 30, 71–98.
31. Jo, B.-M. A Study on the Constituent Principle of Basic Dance of Lower Body Movement in Traditional Korean Dance. Ph.D. Dissertation, Catholic University of Daegu, Daegu, Republic of Korea, 2015.
32. Zhang, W.; Liu, Z.; Zhou, L.; Leung, H.; Chan, A.B. Martial arts, dancing and sports dataset: A challenging stereo and multi-view dataset for 3D human pose estimation. Image Vis. Comput. 2017, 61, 22–39.
33. Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context dataset for object detection, segmentation, and human pose estimation. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 740–755.
34. Andriluka, M.; Pishchulin, L.; Gehler, P.; Schiele, B. MPII Human Pose: A dataset for human pose estimation with joint coordinates and activity labeling. In Proceedings of the Conference on Computer Vision Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 466–473.
35. Ionescu, C.; Papava, D.; Olaru, V.; Sminchisescu, C. Human3.6M: Large scale dataset for 3D human pose estimation. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 1325–1339.
36. Chen, M.; Yang, X.; Feng, J. Enhanced 3D convolutional neural networks for action recognition. IEEE Access 2020, 8, 123456–123465.
37. Choi, J.-Y. Development of an Exercise Program Capable of Posture and Motion Feedback Using Deep Learning CNN. Master’s Thesis, Sungkyunkwan University, Seoul, Republic of Korea, 2021.
38. Guangxu, G.H.; Yao, T.; Jian, K. LSTM recurrent neural network speech recognition system based on I-vector feature under low resource conditions. Comput. Appl. Res. 2017, 34, 392–396.
39. Ji, S.; Xu, W.; Yang, M.; Yu, K. 3D convolutional neural networks for human action recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 221–231.
40. Shrestha, L.; Dubey, S.; Olimov, F.; Rafique, M.A.; Jeon, M. 3D Convolutional with Attention for Action Recognition. arXiv 2022, arXiv:2206.02203.
41. Yuan, X.; Pan, P. Research on the evaluation model of dance movement recognition and automatic generation based on long short-term memory. Math. Probl. Eng. 2022, 2022, 6405903.
42. Kang, Y. GeoAI application areas and research trends. J. Korean Geogr. Soc. 2023, 58, 395–418.
43. Li, R.; Yang, S.; Ross, D.A.; Kanazawa, A. AI choreographer: Music-conditioned 3D dance generation with AIST++. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 13401–13412.
44. Li, H.; Guo, H.; Huang, H. Analytical model of action fusion in sports tennis teaching by convolutional neural networks. Comput. Intell. Neurosci. 2022.
45. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.
46. Yang, C.; An, Z.; Zhu, H.; Hu, X.; Zhang, K.; Xu, K.; Li, C.; Xu, Y. Gated convolutional networks with hybrid connectivity for image classification. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 12581–12588.
47. Lin, C.-B.; Dong, Z.; Kuan, W.-K.; Huang, Y.-F. A framework for fall detection based on OpenPose skeleton and LSTM/GRU models. Appl. Sci. 2020, 11, 10329.
48. Simonyan, K.; Zisserman, A. Two-stream convolutional networks for action recognition in videos. In Proceedings of the IEEE Conference on Computer Vision Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 568–576.
49. Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016.
50. Zhang, Y.; Yang, Q. A survey on multi-task learning. IEEE Trans. Knowl. Data Eng. 2022, 34, 5586–5609.
51. National Intangible Heritage Center. Available online: https://www.nihc.go.kr/ (accessed on 12 July 2021).
52. Traditional Dance Research and Preservation Association. Available online: https://tartlab.co.kr/ (accessed on 10 August 2021).
53. National Gugak Center. Available online: https://www.gugak.go.kr/ (accessed on 17 August 2021).
54. Korea Heritage Service. Available online: https://english.khs.go.kr/ (accessed on 2 September 2021).
55. Jindo-gun Tourism and Culture. Available online: https://www.jindo.go.kr/tour/tour/info/history/003.cs?m=65 (accessed on 8 February 2022).
56. Feichtenhofer, C.; Fan, H.; Malik, J.; He, K. SlowFast networks for video recognition. In Proceedings of the IEEE Conference on Computer Vision Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 6202–6211.
57. Zhang, B.; Wang, L.; Wang, Z.; Qiao, Y.; Wang, H. Real-time action recognition with two-stream neural networks. J. Neural Netw. 2021, 34, 220–230.
58. Zhang, F.; Bazarevsky, V.; Vakunov, A. BlazePose: Real-Time 3D Pose Estimation; Google Research Report; Google: Mountain View, CA, USA, 2020.
59. Lugaresi, C.; Tang, J.; Nash, H.; McClanahan, C.; Uboweja, E.; Hays, M.; Zhang, F.; Chang, C.L.; Yong, M.G.; Lee, J.; et al. MediaPipe: A framework for building perception pipelines. In Proceedings of the IEEE Conference on Computer Vision Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 1235–1247.
60. Bazarevsky, V.; Grishchenko, I.; Raveendran, K.; Zhu, T.; Zhang, F.; Grundmann, M. BlazePose: On-device real-time body pose tracking. arXiv 2020, arXiv:2006.10204.

| No. | Upper body dance movement | Description |
|---|---|---|
| 1 | In-Sawi (인사위) | Greeting Gesture: A gesture of placing the hands in front, to the sides, behind, above, or below close to the body. |
| 2 | Palppeodeum-Sawi (팔뻗음사위) | Arm Stretch Gesture: A movement of stretching the arm forward, backward, to the side, up, or down. |
| 3 | Sonmoyang-Sawi (손모양사위) | Hand Shape Gesture: A motion in which the fingertips are directed up, down, or back and forth. |
| 4 | Sonbadakmodeum-Sawi (손바닥모듬사위) | Palm Combination Gesture: A movement of bringing the palms of both hands together. |
| 5 | Aneum-Sawi (안음사위) | Hug Gesture: An action of placing one’s arm across the chest, with fingertips reaching to the opposite shoulder. |
| 6 | Seongju-Sawi (성주사위) | Guardian Spirit Gesture: A movement representing the shape of a house, with arms forming an “L” or “D” shape in front. |
| 7 | Mem-Sawi (멤사위) | Carrying Gesture: An action of carrying an object on one’s shoulder. |
| 8 | Hwal-Sawi (활사위) | Bow Gesture: A movement where the arm is rounded, symbolizing a bow. |
| 9 | Palkkeokkeum-Sawi (팔꺽음(께끼)사위) | Arm Bending Gesture: A movement of bending the arm up, down, forward, sideways, or backward. |
| 10 | Momguphim-Sawi (몸굽힘사위) | Body Bending Gesture: A movement of bending the body forward, sideways, or backward, with emphasis on bending forward. |
| 11 | Heumshin-Sawi (흠신사위) | Body Bowing Gesture: Heumshin refers to a movement of bending the body forward as a sign of respect. |
| 12 | Palollim-Sawi (팔올림사위) | Arm Raising Gesture: A motion of raising the arm. |
| 13 | Palpyeoollim-Sawi (팔펴올림사위) | Extended Arm Raising Gesture: A movement of lifting the arm while bending and straightening it. |
| 14 | Palnaerim-Sawi (팔내림사위) | Arm Lowering Gesture: A motion of lowering the arm. |
| 15 | Eoreum-Sawi (어름사위) | Affectionate Gesture: A movement of pushing, pulling, or shaking the body, as if treating a child or animal fondly. |
| 16 | Palgeondeuri-Sawi (팔건들이사위) | Arm Shaking Gesture: A movement of shaking the arm. |
| 17 | Paldolm-Sawi (팔돎사위) | Arm Rotating Gesture: A movement that rotates the arm using the shoulder, elbow, and wrist joints. |
| 18 | Yeodadi-Sawi (여닫이사위) | Opening and Closing Gesture: A movement that rotates the arm using the shoulder, elbow, and wrist joints. |

| No. | Lower body dance movement | Description |
|---|---|---|
| 1 | Baldidim-Sawi (발디딤사위) | Foot Pressing Gesture: “Didim” refers to standing firmly with one foot and pressing down, derived from the term “Didida”. |
| 2 | Baldodum-Sawi (발돋움사위) | Toe-Raising Gesture: “Dodum” refers to the act of standing on tiptoe with heels raised, focusing balance on the toes. |
| 3 | Eutteum-Sawi (으뜸사위) | Primary Gesture: This primary leg movement combines stepping with one foot and rising with the other, creating a sweeping motion in the lower body. |
| 4 | Balbatchim-Sawi (발받침사위) | Foot Support Gesture: A movement of supporting an object with the feet, lifting the feet and knees in response. |
| 5 | Balmoyang-Sawi (발모양사위) | Foot Shape Gesture: Movements where the feet point up, down, inward, outward, or where the soles face up or down. |
| 6 | Balmodum & Balbeollim-Sawi (발모둠, 발벌림사위) | Feet Together and Apart Gesture: Movements in which the feet come closer together or move farther apart. |
| 7 | Mureupguphim & Mureuppyeom-Sawi (무릎굽힘, 무릎폄사위) | Knee Bending and Extending Gesture: Movements involving bending and extending the knees. |
| 8 | Mureupmilm & Ttem-Sawi (무릎밂, 뗌사위) | Knee Pushing and Lifting Gesture: A motion of pushing against the floor with the knee and then lifting the knee from the floor. |
| 9 | Muleupkkulheoanjeum-Sawi (무릎꿇어앉음사위) | Kneeling Gesture: The action of kneeling down on the floor. |
| 10 | Maem-Sawi (맴사위) | Spinning Gesture: A movement in which the body spins in place. |
| 11 | Balgeonneom-Sawi (발건넘사위) | Foot Crossing Gesture: A movement representing the act of crossing over, with one foot leaping forward and landing. |
| 12 | Baljjigeum-Sawi (발찍음사위) | Stamping Gesture: A stamping motion using the heel or toe. |
| 13 | Gyeopdari-Sawi (겹다리사위) | Leg Overlapping Gesture: A movement of overlapping the legs. |
| 14 | Georeum-Sawi (걸음사위) | Walking Gesture: Dance movements that depict walking. |
| 15 | Balgureum-Sawi (발구름사위) | Foot Stamping Gesture: A movement of stepping firmly on the floor with the foot so that the ground resounds. |
| 16 | Jiseum-Sawi (짓음사위) | Excitement Gesture: A movement that signifies playfully showing off in delight, with the center of gravity shifting charmingly. |
| 17 | Momdolm-Sawi (몸돎사위) | Torso Turning Gesture: A movement of rotating the torso. |
| 18 | Momteulm-Sawi (몸틈사위) | Body Twisting Gesture: A twisting motion of the body. |

| Category | No. | Dance movement | Quantity |
|---|---|---|---|
| Upper body dance movements | 1 | In-Sawi (인사위) | 48 |
| | 2 | Palppeodeum-Sawi (팔뻗음사위) | 44 |
| | 3 | Sonbadakmodeum-Sawi (손바닥모듬사위) | 45 |
| | 4 | Momguphim-Sawi (몸굽힘사위) | 36 |
| | 5 | Eoreum-Sawi (어름사위) | 45 |
| | 6 | Yeodadi-Sawi (여닫이사위) | 46 |
| Lower body dance movements | 1 | Baldidim-Sawi (발디딤사위) | 36 |
| | 2 | Baldodum-Sawi (발돋움사위) | 38 |
| | 3 | Balbatchim-Sawi (발받침사위) | 36 |
| | 4 | Balmodum & Balbeollim-Sawi (발모둠, 발벌림사위) | 40 |
| | 5 | Mureupguphim & Mureuppyeom-Sawi (무릎굽힘, 무릎폄사위) | 36 |
| | 6 | Balgeonneom-Sawi (발건넘사위) | 35 |
| | 7 | Muleupkkulheoanjeum-Sawi (무릎꿇어앉음사위) | 36 |
| Total | | | 521 |
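As a quick consistency check on the table above, the per-movement quantities can be tallied in a few lines. This is only a sketch: the dictionary names are illustrative, the keys use shortened romanizations, and the interpretation of "Quantity" as a per-class sample count is an assumption, since the table does not state the unit.

```python
# Quantities copied from the table above; variable names are illustrative.
UPPER_BODY = {
    "In-Sawi": 48,
    "Palppeodeum-Sawi": 44,
    "Sonbadakmodeum-Sawi": 45,
    "Momguphim-Sawi": 36,
    "Eoreum-Sawi": 45,
    "Yeodadi-Sawi": 46,
}
LOWER_BODY = {
    "Baldidim-Sawi": 36,
    "Baldodum-Sawi": 38,
    "Balbatchim-Sawi": 36,
    "Balmodum & Balbeollim-Sawi": 40,
    "Mureupguphim & Mureuppyeom-Sawi": 36,
    "Balgeonneom-Sawi": 35,
    "Muleupkkulheoanjeum-Sawi": 36,
}

upper_total = sum(UPPER_BODY.values())
lower_total = sum(LOWER_BODY.values())
grand_total = upper_total + lower_total

# Ratio of the largest to the smallest class, a rough imbalance indicator.
all_counts = list(UPPER_BODY.values()) + list(LOWER_BODY.values())
imbalance_ratio = max(all_counts) / min(all_counts)

print(upper_total, lower_total, grand_total, round(imbalance_ratio, 2))
```

The upper and lower body subtotals add up to the reported total of 521, and the largest class is only about 1.4 times the size of the smallest, so the 13 classes are fairly balanced.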

| Model/Methodology | Key Achievements | Limitations/Identified Issues |
|---|---|---|
| LRCN Model (combining CNN and LSTM) | Learned motion patterns from traditional dance video data. Enabled early motion recognition. | High error rate due to datasets with diverse backgrounds. Requires standardized datasets. |
| SlowFast Network [56] | Parallel analysis of static and dynamic content. Improved accuracy in recognizing upper body and arm movements. | Additional data needed for recognizing specific detailed movements. |
| Two-stream Action Recognition Network [57] | Simultaneously analyzed appearance and motion information using 2D and 3D CNN. Improved accuracy for upper-body-centric movements. | Poor recognition rate for lower body movements, necessitating further training. |
| Keypoint R-CNN (based on COCO dataset) | Achieved high recognition accuracy (over 95%) even for various angles and rapid movements. Delivered stable performance for both individual and group movements. | Not capable of real-time processing. |

| Division | Type of dance movement | Body division | Key points |
|---|---|---|---|
| Upper body movement | In-Sawi | Arm key points | 11–14 |
| Upper body movement | Sonmoeum-Sawi | Hand key points | 15–22 |
| Lower body movement | Muleupkkulheoanjeum-Sawi | Leg key points | 25–26 |
| Lower body movement | Balbatchim-Sawi | Foot key points | 27–31 |
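To make the key point ranges in the table above concrete, the sketch below maps them onto the standard 33-landmark BlazePose (MediaPipe Pose) ordering. The landmark names follow the MediaPipe convention; the dictionary `KEYPOINT_GROUPS` and the helper functions are illustrative names, not part of the paper's code.

```python
# The 33 BlazePose / MediaPipe Pose landmarks in index order (0..32).
BLAZEPOSE_LANDMARKS = [
    "nose", "left_eye_inner", "left_eye", "left_eye_outer",
    "right_eye_inner", "right_eye", "right_eye_outer",
    "left_ear", "right_ear", "mouth_left", "mouth_right",
    "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
    "left_wrist", "right_wrist", "left_pinky", "right_pinky",
    "left_index", "right_index", "left_thumb", "right_thumb",
    "left_hip", "right_hip", "left_knee", "right_knee",
    "left_ankle", "right_ankle", "left_heel", "right_heel",
    "left_foot_index", "right_foot_index",
]

# Inclusive index ranges taken from the table (hypothetical dictionary name).
KEYPOINT_GROUPS = {
    "arm": (11, 14),
    "hand": (15, 22),
    "leg": (25, 26),
    "foot": (27, 31),
}


def group_indices(group):
    """Return the landmark indices covered by one body division."""
    first, last = KEYPOINT_GROUPS[group]
    return list(range(first, last + 1))


def group_names(group):
    """Return the landmark names covered by one body division."""
    return [BLAZEPOSE_LANDMARKS[i] for i in group_indices(group)]


for group in KEYPOINT_GROUPS:
    print(group, group_names(group))
```

Under this mapping, the arm group covers the shoulders and elbows, the hand group covers the wrists and finger landmarks, the leg group covers the knees, and the foot group covers the ankles, heels, and the left foot index.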

| Item | Description | Key Roles/Actions | Related Libraries/Modules |
|---|---|---|---|
| Initialization | Initialization of MediaPipe and TensorFlow models | Initialize the BlazePose model (`mp_pose.Pose`) and load the pre-trained LSTM model (`model_4.h5`). | mediapipe, tensorflow |
| Landmark Extraction | Extract landmarks from video frames using BlazePose | Use `pose.process(img)` to extract relative position data (landmarks): 33 key points with their (x, y, z, visibility) values. | mediapipe |
| Feature Calculation | Calculate relative vectors and angles using landmark data | Compute relative vectors (v) between landmarks. Calculate angles between vectors (using `np.arccos` and `np.einsum`). Generate feature vectors including angles (in radians). | numpy |
| Preparing LSTM Input | Store specific vector sequences (seq) and input them to the LSTM model | Create sequences composed of 40 frames. Adjust input dimensions for the model using `np.expand_dims`. | tensorflow.keras.models |
| Comparison Analysis | Compare BlazePose results with traditional classifications and templates | Use a Bi-LSTM model to analyze real-time data sequences and compare them with pre-trained datasets and templates. | train.ipynb |
| Motion Prediction | Predict motion classes for input sequences using the LSTM model | Identify the class with the highest probability from model output (y_pred). Exclude results with low confidence based on the confidence threshold. | tensorflow |
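The feature calculation, sequence preparation, and thresholded prediction steps listed in the table can be sketched with NumPy alone. This is a minimal illustration under stated assumptions, not the author's implementation: the bone list `BONES`, the angle pairs `ANGLE_PAIRS`, and the threshold value `CONF_THRESHOLD` are hypothetical, and `predict_fn` stands in for the trained model's prediction call so that the sketch runs without MediaPipe or TensorFlow.

```python
from collections import deque

import numpy as np

SEQ_LENGTH = 40        # frames per sequence, as stated in the table
CONF_THRESHOLD = 0.9   # hypothetical value; the source does not give one

# Hypothetical bones as (parent, child) BlazePose landmark indices:
# shoulder->elbow and elbow->wrist, hip->knee and knee->ankle, both sides.
BONES = np.array([[11, 13], [13, 15], [12, 14], [14, 16],
                  [23, 25], [25, 27], [24, 26], [26, 28]])
# Hypothetical pairs of bones whose relative angle is measured
# (left elbow, right elbow, left knee, right knee).
ANGLE_PAIRS = np.array([[0, 1], [2, 3], [4, 5], [6, 7]])


def frame_features(landmarks):
    """Convert one (33, 4) array of (x, y, z, visibility) into a 1-D feature vector."""
    xyz = landmarks[:, :3]
    v = xyz[BONES[:, 1]] - xyz[BONES[:, 0]]                     # relative vectors
    v = v / (np.linalg.norm(v, axis=1, keepdims=True) + 1e-8)   # unit length
    # Row-wise dot products of paired bone directions.
    cos = np.einsum("nt,nt->n", v[ANGLE_PAIRS[:, 0]], v[ANGLE_PAIRS[:, 1]])
    # Angle between consecutive bone directions in radians (0 for a straight limb).
    angles = np.arccos(np.clip(cos, -1.0, 1.0))
    return np.concatenate([landmarks.flatten(), angles])


def predict_motion(seq, predict_fn, class_names, threshold=CONF_THRESHOLD):
    """Classify one window of SEQ_LENGTH frames; return None below the threshold."""
    x = np.expand_dims(np.stack(list(seq)).astype(np.float32), axis=0)  # (1, 40, F)
    y_pred = np.asarray(predict_fn(x))[0]
    best = int(np.argmax(y_pred))
    if y_pred[best] < threshold:
        return None
    return class_names[best]


# Usage with random landmarks and a stand-in for the trained model.
rng = np.random.default_rng(0)
window = deque(maxlen=SEQ_LENGTH)
for _ in range(SEQ_LENGTH):
    window.append(frame_features(rng.random((33, 4))))


def dummy_predict(x):
    """Stand-in for the model's prediction call; always favors class 1."""
    return np.array([[0.05, 0.95]])


print(predict_motion(window, dummy_predict, ["In-Sawi", "Balbatchim-Sawi"]))
```

In a real pipeline the landmark arrays would come from BlazePose output rather than random numbers, and `predict_fn` would be the `predict` method of the loaded Keras model. Using a fixed-length `deque` gives a sliding window, so a prediction can be issued on every new frame once 40 frames have accumulated.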

| 1. In-Sawi | 2. Sonbadakmodeum-Sawi | 3. Palppeodeum-Sawi | 4. Muleupkkulheoanjeum-Sawi | 5. Balbatchim-Sawi |
|---|---|---|---|---|

| Model | Accuracy (%) | Inference Time (ms) | FPS |
|---|---|---|---|
| Bi-LSTM (Proposed) | 99.35 | 0.0804 | 12,440 |
| DD-Net | 100 | 0.5417 | 1846 |
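The FPS column appears to follow from the inference time column. A short sketch, assuming FPS is simply the reciprocal of the per-inference latency (the function name is illustrative):

```python
def fps_from_latency_ms(latency_ms: float) -> float:
    """Frames per second implied by a per-inference latency in milliseconds."""
    return 1000.0 / latency_ms


# Inference times copied from the table above.
for name, latency_ms in {"Bi-LSTM (Proposed)": 0.0804, "DD-Net": 0.5417}.items():
    print(f"{name}: {fps_from_latency_ms(latency_ms):,.0f} FPS")
```

Under that assumption the DD-Net latency gives about 1846 FPS, matching the table, and the Bi-LSTM latency gives about 12,438 FPS, which agrees with the reported 12,440 to within rounding.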

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kim, H.S. Real-Time Recognition of Korean Traditional Dance Movements Using BlazePose and a Metadata-Enhanced Framework. Appl. Sci. 2025, 15, 409. https://doi.org/10.3390/app15010409
