Abstract

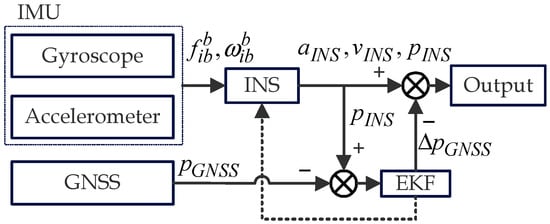

In the field of navigation and positioning, inertial navigation system (INS)/global navigation satellite system (GNSS) integrated navigation systems are known for providing stable, high-precision navigation services for vehicles. However, in extreme scenarios where GNSS navigation data are completely interrupted, the positioning accuracy of these integrated systems declines sharply. Although considerable research has explored using neural networks to replace the GNSS output during such interruptions, these approaches often lack targeted modeling of sensor information, resulting in poor navigation stability. In this study, we propose an integrated navigation system assisted by a novel neural network that combines an inverted Transformer (iTransformer) with a frequency-enhanced channel attention mechanism (FECAM) to improve its performance; we call it the INS/FECAM-iTransformer integrated navigation system. Its key advantage lies in its ability to simultaneously extract features from both the time and frequency domains and to capture the correlations among multi-channel measurements, thereby enhancing the modeling of sensor data. In the experimental part, a public dataset and a private dataset are used for testing. In the best case, the position error of the INS/FECAM-iTransformer integrated navigation system is reduced by up to 99.9% compared with a pure INS. Compared with the INS/LSTM (long short-term memory) and INS/GRU (gated recurrent unit) integrated navigation systems, the position error of the proposed method decreases by up to 82.4% and 78.2%, respectively. The proposed approach offers significantly higher navigation accuracy and stability.

1. Introduction

Highly accurate and reliable positioning, navigation, and timing technologies are essential for the safe operation of mobile smart devices [1]. Inertial navigation systems (INS) offer high short-term accuracy and are independent of the external environment. However, standalone INS solutions degrade over time because of the significant errors inherent in the raw measurements of inertial measurement units (IMUs) [2]. This degradation is especially drastic in INS built on small, low-cost IMUs, which many mobile devices must use because of space constraints. The integration of global navigation satellite systems (GNSSs) and INS has been widely used to improve positioning accuracy and reliability [3,4], because GNSSs can provide accurate, continuous positioning over long periods under good visibility conditions [5], compensating for the shortcomings of low-cost INS. However, GNSS has one almost inevitable drawback: it relies heavily on stable signals for high-precision positioning. In areas with obstructed or degraded signals, such as cities, forests, and tunnels, GNSS signals can become unstable or even lost [6,7]. If the GNSS signal is interrupted for a prolonged period, the GNSS/INS integrated navigation system reverts to a pure INS, leading to rapid accumulation of positioning errors and compromising the safety and efficiency of vehicle operation [8].
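To make the drift mechanism concrete, the sketch below double-integrates a constant accelerometer bias for a stationary body along one axis. The bias, sampling interval, and function name are illustrative assumptions, not parameters of any IMU used in this paper, and the sketch ignores gyroscope errors and attitude coupling, which make real INS drift grow even faster.

```python
import numpy as np

def position_drift_from_bias(bias_mps2: float, duration_s: float, dt_s: float) -> np.ndarray:
    """Position error of a stationary 1-D body whose accelerometer has a constant bias.

    The true acceleration is zero, so everything integrated here is error.
    Returns the position error [m] after each time step.
    """
    n_steps = int(round(duration_s / dt_s))
    accel_meas = np.full(n_steps, bias_mps2)   # measured = true (0) + bias
    vel_err = np.cumsum(accel_meas) * dt_s     # 1st integration -> velocity error
    pos_err = np.cumsum(vel_err) * dt_s        # 2nd integration -> position error
    return pos_err

# Hypothetical bias of 0.01 m/s^2 sampled at 100 Hz for 100 s.
drift = position_drift_from_bias(bias_mps2=0.01, duration_s=100.0, dt_s=0.01)
print(f"error after 50 s: {drift[4999]:.1f} m, after 100 s: {drift[-1]:.1f} m")
```

Doubling the outage duration roughly quadruples the error, in line with the closed form 0.5 b t^2 for a constant bias b.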

There are many solutions to this problem, typically involving the addition of extra sensors such as barometers, odometers, cameras, LIDAR, etc., to collect richer environmental data [9,10,11]. However, this approach results in higher hardware costs and a more complicated data fusion process, making it less economical for general application scenarios [12]. In recent years, machine learning algorithms have been shown to improve the performance of GNSS/INS integrated navigation systems by modeling the mathematical relationship between navigation parameters and vehicle dynamic data during GNSS outages [13].

Sharaf et al. used a radial basis function neural network to model INS positions and the mathematical relationship between INS and GNSS, achieving stable and reliable navigation performance [14,15]. Tan et al. employed support vector machines (SVMs) optimized by genetic algorithms (GAs) to address neural network overfitting and local minima [16]. Static neural networks offer limited navigation performance because they cannot retain long-term temporal features, which has led to the application of more advanced deep learning models. Long short-term memory (LSTM), a dynamic neural network that uses past and present information to predict future states, was applied to provide stable substitute GNSS navigation data during outages [17]. Meng et al. proposed a convolutional neural network-gated recurrent unit (CNN-GRU) hybrid model combined with an improved robust adaptive Kalman filter to enhance navigation accuracy during GNSS failures [18]. Although they address the basic degradation problem, these deep neural networks still face accuracy and long-term stability issues. To further explore artificial intelligence in this field, large-scale deep learning models have been integrated into INS/GNSS systems. For example, the Transformer [19] was used to improve navigation reliability during GNSS outages [20], and the temporal convolutional network (TCN) was applied for its feature extraction and temporal modeling capabilities, achieving multi-task learning when combined with other neural networks such as GRU [21].

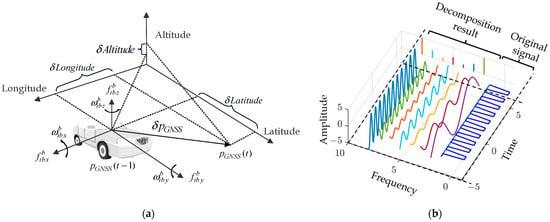

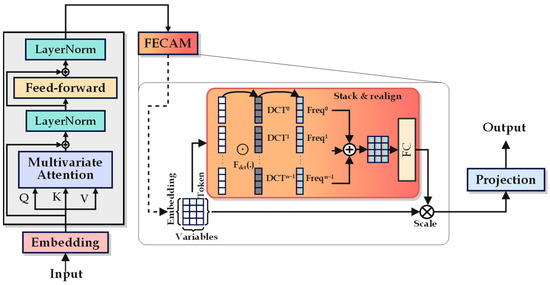

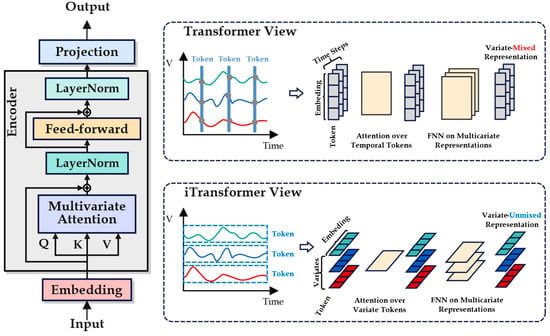

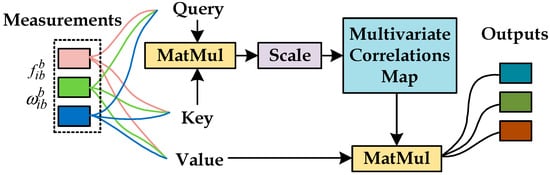

It is worth noting that the models mentioned above are significant advances in time-series prediction, which raises the question of whether other time-series models could also improve navigation stability. In time-series prediction, recurrent neural networks such as LSTM [22] and GRU [23] are well suited to capturing long-term dependencies owing to their gating mechanisms and memory units. However, they lack specialized feature extraction units, so for time-series data rich in motion and noise features, such as IMU data, LSTM and GRU may struggle to learn effective features. The attention-based Transformer [19] has become a popular research direction in time-series prediction; its attention mechanism can effectively learn both temporal and spatial characteristics. However, like most time-series prediction models, the Transformer is sensitive to inconsistencies in the timing of events. Because it embeds the variables at the same timestamp together as one token, it can introduce noise if the timestamps are misaligned or if the statistical distribution of the data shifts [24]. The iTransformer [25] addresses this issue by embedding the entire time series of each input channel as a single token, thereby avoiding the noise introduced by misaligned timestamps or non-stationary information. Because attention is then computed across these channel (variate) tokens, the iTransformer is more effective at capturing the relationships between variables. This makes it particularly suitable for modeling multi-channel IMU data in INS/GNSS integrated systems.
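The difference between the two tokenization schemes can be sketched in a few lines of NumPy. This is a minimal illustration, not the iTransformer implementation: the embeddings are random and untrained, attention is single-head without learned query/key/value projections, and the window length, channel count, and embedding size are assumptions rather than the configuration in Table 1.

```python
import numpy as np

rng = np.random.default_rng(0)

T, N, D = 100, 6, 32              # look-back length, IMU channels (3 accel + 3 gyro), embedding size (assumed)
x = rng.standard_normal((T, N))   # one window of multi-channel IMU data: time x variates

# Vanilla Transformer: one token per time step, mixing all N variates of that instant.
W_time = rng.standard_normal((N, D)) / np.sqrt(N)
time_tokens = x @ W_time          # shape (T, D): T tokens

# iTransformer-style inversion: one token per variate, embedding that channel's whole series.
W_var = rng.standard_normal((T, D)) / np.sqrt(T)
variate_tokens = x.T @ W_var      # shape (N, D): N tokens

def self_attention(tokens: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Single-head scaled dot-product self-attention with identity projections."""
    d = tokens.shape[-1]
    scores = tokens @ tokens.T / np.sqrt(d)
    scores -= scores.max(axis=-1, keepdims=True)     # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)   # softmax over keys
    return weights @ tokens, weights

out, attn = self_attention(variate_tokens)
print(time_tokens.shape, variate_tokens.shape, attn.shape)  # (100, 32) (6, 32) (6, 6)
```

With inverted tokens, the 6 x 6 attention map relates IMU channels to one another (for example, a gyroscope axis to an accelerometer axis), which is the variable-correlation modeling described above.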

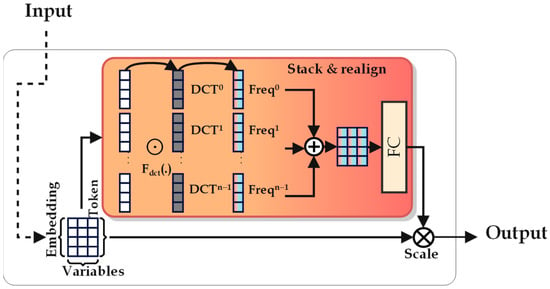

Navigation data have unique characteristics. In addition to rich kinematic features, they contain a significant amount of noise, which can mislead AI algorithms into learning invalid features. A critical challenge is therefore to enable AI models to correctly capture and analyze the features of navigation data to achieve optimal prediction performance. The core sensor of an INS is the IMU, which comprises gyroscopes and accelerometers. Numerous studies have demonstrated that frequency analysis is an effective method for analyzing data from these devices. Wang et al. analyzed the relationship between IMU measurement noise and inertial guidance system noise from a frequency-domain perspective to improve the positioning accuracy of an INS/DVL integrated navigation system [26]. Zihajehzadeh et al. used Gaussian process regression, combining both time-domain and frequency-domain IMU features, to estimate human walking speed [27]. Bolotin et al. proposed a low-cost calibration method for IMUs that uses the fast Fourier transform (FFT) to obtain frequency-domain data and find the frequency, amplitude, and phase at the spectral peak [28]. Frequency analysis methods are also widely used in time-series forecasting to extract compact and rich temporal features, for example in FNet, the frequency-enhanced decomposed Transformer (FEDformer), and the frequency interpolation time-series analysis baseline (FITS) [29,30,31].
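As an illustration of the FFT-based analysis mentioned above, the sketch below recovers the frequency, amplitude, and phase of the dominant spectral peak of a synthetic gyroscope signal. It is a generic NumPy example under assumed signal parameters, not a reproduction of the calibration procedure in [28].

```python
import numpy as np

def spectral_peak(signal: np.ndarray, fs_hz: float) -> tuple[float, float, float]:
    """Frequency [Hz], amplitude, and phase [rad] of the largest non-DC FFT bin."""
    n = len(signal)
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(n, d=1.0 / fs_hz)
    k = 1 + int(np.argmax(np.abs(spectrum[1:])))   # skip the DC (bias) bin
    amplitude = 2.0 * np.abs(spectrum[k]) / n      # single-sided amplitude
    phase = float(np.angle(spectrum[k]))           # phase of a cosine component
    return float(freqs[k]), float(amplitude), phase

# Synthetic gyroscope trace (assumed values): constant bias + 2 Hz vibration + noise.
fs = 100.0
t = np.arange(1000) / fs                           # 10 s at 100 Hz
rng = np.random.default_rng(1)
gyro = 0.5 + 0.2 * np.cos(2 * np.pi * 2.0 * t + 0.3) + 0.02 * rng.standard_normal(t.size)
freq, amp, phase = spectral_peak(gyro, fs)
```

Because the 10 s window holds an integer number of 2 Hz cycles, the peak falls exactly on one bin; with real IMU data, windowing (for example, a Hann window) would be needed to limit spectral leakage.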

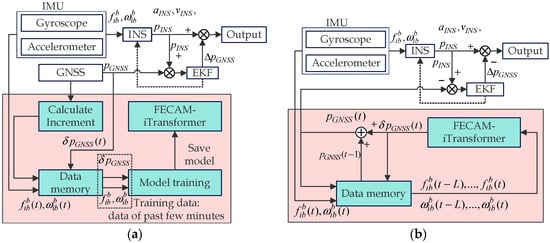

In this paper, a novel AI-assisted INS/GNSS integrated navigation system is proposed to address the rapid accuracy degradation of the INS/GNSS integrated navigation system during a GNSS outage. The iTransformer is introduced and optimized based on considerations of computational accuracy and data characteristics to make it more suitable for navigation.

The main contributions of this paper are as follows:

- Proposing FECAM-iTransformer: To extract more comprehensive information from IMU data, we introduce the frequency-enhanced channel attention mechanism (FECAM) [32] into the iTransformer. This mechanism applies attention across multiple frequency-domain channels to capture more compact, richer, and more comprehensive time features in navigation data. This enhancement broadens the iTransformer’s perspective and improves its ability to model multi-channel IMU data effectively.

- Proposing INS/FECAM-iTransformer Integrated Navigation System: This system provides pseudo-GNSS signals to the extended Kalman filter in case of a GNSS outage.

- Conducting Extensive Experiments: We conduct experiments on a high-precision public dataset and a low-precision private dataset to evaluate the model in two scenarios, professional integrated navigation sensors and small low-cost sensors, and verify the effectiveness of the proposed method.
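To show where the network output can enter the filter, the following is a minimal sketch of a generic loosely coupled Kalman measurement update in which a position fix, from the GNSS receiver or, during an outage, from the network (the pseudo-GNSS signal), corrects the INS solution. The six-state error vector, sign convention, and noise values are assumptions for illustration; the extended Kalman filter in Section 2 uses its own state definition, although with a linear position measurement its update step takes this same form.

```python
import numpy as np

def kf_position_update(x, P, z_pos, ins_pos, R):
    """One Kalman measurement update driven by a (pseudo-)GNSS position fix.

    x       : (6,) error-state estimate [dp(3), dv(3)], dp = INS - true (assumed layout)
    P       : (6, 6) error-state covariance
    z_pos   : (3,) position from GNSS, or from the network during an outage
    ins_pos : (3,) INS-propagated position at the same epoch
    R       : (3, 3) measurement noise covariance assigned to z_pos
    """
    H = np.hstack([np.eye(3), np.zeros((3, 3))])   # observe the position error only
    innovation = (ins_pos - z_pos) - H @ x
    S = H @ P @ H.T + R                            # innovation covariance
    K = P @ H.T @ np.linalg.inv(S)                 # Kalman gain
    x_new = x + K @ innovation
    P_new = (np.eye(6) - K @ H) @ P
    return x_new, P_new

# Example epoch during an outage (made-up numbers in a local frame, metres).
ins_pos = np.array([14.0, 5.0, 0.0])         # INS-propagated position
pseudo_gnss = np.array([10.0, 5.0, 0.0])     # network-predicted position
P0 = np.diag([25.0] * 3 + [1.0] * 3)
x1, P1 = kf_position_update(np.zeros(6), P0, pseudo_gnss, ins_pos, R=4.0 * np.eye(3))
corrected_pos = ins_pos - x1[:3]             # feed back the estimated position error
```

The corrected position lands between the INS and pseudo-GNSS positions, weighted by their assumed uncertainties; a larger R for the network output would make the filter trust it less.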

The remainder of this paper is structured as follows: Section 2 describes the theory of the INS/GNSS integrated navigation system in detail, including its mathematical model [17,33,34]. Section 3 introduces the design of the proposed FECAM-iTransformer and the INS/FECAM-iTransformer integrated navigation system. Section 4 presents the experiments along with a comprehensive comparative analysis. Section 5 discusses the results and significance of this research. Finally, Section 6 concludes the paper.

4. Results and Analyses

The effectiveness of the proposed model is evaluated using both public and private datasets in the experimental section of this paper. Public datasets, obtained with high-precision equipment, are used to assess the model’s performance on higher-quality data. In contrast, private datasets, collected using low-cost sensors and mobile robot platforms, are employed to test the model’s performance on lower-quality, interference-prone devices.

4.1. Configuration of Model

In addition to INS, the original iTransformer, and the FECAM-iTransformer, two existing deep neural networks, LSTM and GRU, commonly used to address the GNSS loss-of-lock problem, are selected for comparison. The configurations and training parameters of each model are determined using the grid search method and are summarized in Table 1.
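A minimal sketch of exhaustive grid search is shown below. The search space, the function names, and the toy scoring function are hypothetical stand-ins; in practice `evaluate` would train a model with the given hyperparameters and return its validation error, and the configurations actually selected are those reported in Table 1.

```python
from itertools import product

# Hypothetical search space (not the one used in this paper).
search_space = {
    "hidden_size": [32, 64, 128],
    "learning_rate": [1e-3, 5e-4],
    "window_length": [50, 100],
}

def grid_search(space, evaluate):
    """Evaluate every combination in `space`; return (best_params, best_score).

    `evaluate` maps a parameter dict to a validation error (lower is better).
    """
    names = list(space)
    best_params, best_score = None, float("inf")
    for values in product(*(space[name] for name in names)):
        params = dict(zip(names, values))
        score = evaluate(params)
        if score < best_score:
            best_params, best_score = params, score
    return best_params, best_score

# Toy stand-in for "train, then measure validation error" so the sketch runs alone.
def toy_validation_error(p):
    return (abs(p["hidden_size"] - 64) / 64
            + abs(p["learning_rate"] - 5e-4) * 1e3
            + abs(p["window_length"] - 100) / 100)

best_params, best_score = grid_search(search_space, toy_validation_error)
print(best_params, best_score)
```

Grid search cost grows multiplicatively with each added hyperparameter (12 trainings here), which is why the grids for deep models are usually kept coarse.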

Table 1.

The configurations and training parameters of each model.

The computer used for training and testing is configured with a Windows 11 operating system, an Intel i5-12500H CPU, and 16 GB of memory.

Additionally, because the INS error is so large, displaying it graphically alongside the other methods is neither practical nor informative. Therefore, when presenting the test results, the INS trajectory is plotted where the scale permits, but the INS is excluded from the line charts, box plots, and bar charts; instead, comparisons between the INS and the other methods are presented in tabular form.

4.2. Experiment on Public Datasets

4.2.1. Description of Public Datasets

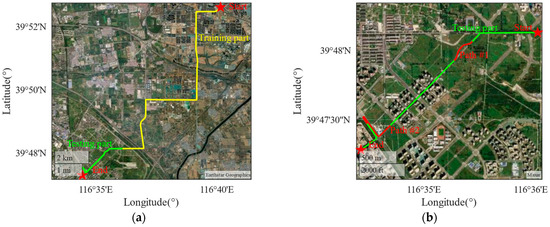

The public dataset provided by Dalian University of Technology is available at http://www.psins.org.cn/newsinfo/1508278.html (accessed on 29 February 2024); it was uploaded to the open-source website on 24 May 2021. The data were collected on an open road with strong GNSS signal reception in Tongzhou District, Beijing, China, using the MTI710, a high-precision INS/GNSS integrated navigation device, and a vehicle traveling at an average speed of 3.5 m/s. The parameters of the MTI710 are detailed in Table 2. To avoid repeated segments of the experimental route, half of the dataset, with a duration of 6000 s, is used in this paper. The trajectory of the dataset is shown in Figure 8a, where the yellow segment represents the training set (4800 s, 80% of the data) and the green segment represents the test set (1200 s, 20% of the data). Within the test set, two representative sections with durations of 100 s and 200 s, called Path #1 and Path #2, respectively, are selected as test tracks and marked in red, as shown in Figure 8b. The longitude, latitude, and altitude measurements obtained from the GNSS receiver are used as ground truth for testing.
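Because the errors in Tables 3, 4, and 6 are reported in metres along the east and north axes, the latitude/longitude ground truth has to be compared in a local metric frame. One common way to do this for short trajectories is sketched below, assuming the WGS-84 ellipsoid and a small-offset (local tangent plane) approximation; the conversion, function name, and coordinates are assumptions for illustration, not the procedure documented for this dataset.

```python
import numpy as np

WGS84_A = 6378137.0          # WGS-84 semi-major axis [m]
WGS84_E2 = 6.69437999014e-3  # WGS-84 first eccentricity squared

def lla_to_local_ne(lat_deg, lon_deg, ref_lat_deg, ref_lon_deg, ref_h_m=0.0):
    """Approximate north/east offsets [m] of (lat, lon) from a reference point.

    Small-offset approximation using the meridian and prime-vertical radii of
    curvature at the reference latitude; adequate for offsets of a few km.
    """
    lat0 = np.radians(ref_lat_deg)
    s2 = np.sin(lat0) ** 2
    r_meridian = WGS84_A * (1.0 - WGS84_E2) / (1.0 - WGS84_E2 * s2) ** 1.5
    r_prime_vertical = WGS84_A / np.sqrt(1.0 - WGS84_E2 * s2)
    d_lat = np.radians(np.asarray(lat_deg) - ref_lat_deg)
    d_lon = np.radians(np.asarray(lon_deg) - ref_lon_deg)
    north = d_lat * (r_meridian + ref_h_m)
    east = d_lon * (r_prime_vertical + ref_h_m) * np.cos(lat0)
    return north, east

# Error of a predicted fix relative to the GNSS ground truth (made-up coordinates).
north_err, east_err = lla_to_local_ne(40.001, 116.701, ref_lat_deg=40.0, ref_lon_deg=116.7)
```

At 40 degrees latitude, 0.001 degrees corresponds to about 111 m northward but only about 85 m eastward, which is why latitude and longitude differences cannot be scaled by a single factor.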

Table 2.

The parameters of MTI710.

Figure 8.

The trajectory of public datasets: (a) the trajectory of training part and testing part of public datasets; (b) the trajectory of Path #1 and Path #2.

4.2.2. The Results of Experiment on Public Dataset

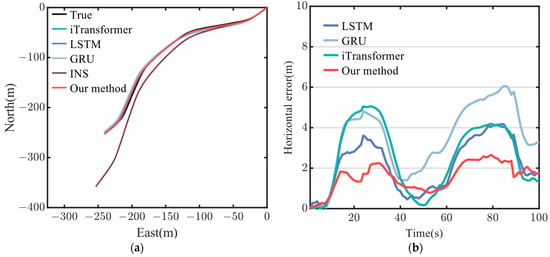

Path #1 has a duration of 100 s and follows a gentle curve, with relatively stable and smooth vehicle motion. Figure 9a compares the test trajectories of each method on Path #1. The trajectory diagram shows that all neural-network-based integrated navigation methods closely fit the true path, with minimal differences among them. In contrast, the INS solution deviates significantly from the true path, particularly when the vehicle changes direction. Figure 9b compares the horizontal position errors of the different methods, excluding pure INS (its error is too large to display meaningfully alongside the other methods; a quantitative comparison is provided in tabular form later). From the 8th second onward, the positioning errors of each method begin to change significantly, although the differences between the methods remain relatively small; the proposed method has the smallest error. Figure 10 shows the errors of each method in three directions, with small differences along this stable route. Since the vehicle moves essentially horizontally, the altitude error is very small.

Figure 9.

Trajectory diagram and horizontal position errors diagram: (a) the trajectory of each method on Path #1; (b) the horizontal position errors of each method on Path #1.

Figure 10.

Position errors of north, east, and altitude on Path #1.

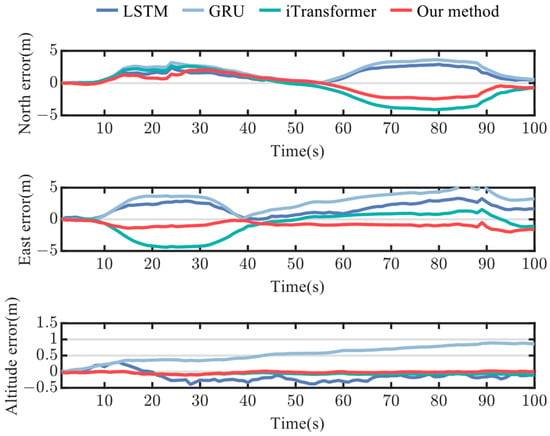

Figure 11a presents the horizontal position error distribution of each method as a box plot. The error distribution of the proposed method is more concentrated, with most errors and the median below 3 m, indicating greater stability than the other methods. Figure 11b presents the root mean square error (RMSE) and maximum error (MAX Error) of the horizontal position for each method as a bar chart. Our method performs well, with an RMSE of 1.7 m and a MAX Error of 2.7 m after 100 s of GNSS outage. Although the iTransformer's error is slightly higher than that of the LSTM, the difference is minimal. On this gentler route, both the LSTM and GRU perform well; their effectiveness on routes with more drastic changes in motion state needs further verification, which is explored in the experiments on Path #2.
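The two summary statistics can be computed from the per-epoch east and north errors as in the sketch below. The function name and the toy error series are illustrative, since the evaluation script is not given. Defined this way, the horizontal RMSE equals the square root of the sum of the squared east and north RMSEs, which is consistent with the values reported in Table 3 (for example, sqrt(0.9880^2 + 1.4085^2) is approximately 1.7205).

```python
import numpy as np

def horizontal_metrics(east_err, north_err) -> tuple[float, float]:
    """RMSE and maximum of the horizontal error sqrt(dE^2 + dN^2) over an outage."""
    horiz = np.hypot(np.asarray(east_err, dtype=float), np.asarray(north_err, dtype=float))
    rmse = float(np.sqrt(np.mean(horiz ** 2)))
    max_err = float(np.max(horiz))
    return rmse, max_err

# Hypothetical 4-epoch error series in metres (not data from this paper).
east = [0.0, 3.0, 0.0, 6.0]
north = [1.0, 4.0, 2.0, 8.0]
rmse, max_err = horizontal_metrics(east, north)   # horizontal errors: 1, 5, 2, 10 m
```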

Figure 11.

The box plot and bar chart of position error on Path #1: (a) The box plot shows the horizontal position error distribution of each method; (b) The bar chart shows the RMSE and MAX Error of each method.

Table 3 provides a detailed comparison of the RMSE and MAX Error for the east, north, and horizontal position errors of each method on Path #1. The proposed method, the FECAM-iTransformer, demonstrates superior performance across all directions, with significant reductions in absolute position errors in the east, north, and horizontal directions:

- Compared to INS: the RMSE decreased by 98.0%, 80.3%, and 96.5%, and the MAX Error decreased by 98.1%, 81.9%, and 97.5%, respectively;

- Compared to iTransformer: the RMSE decreased by 51.4%, 39.0%, and 44.1%, and the MAX Error decreased by 54.7%, 40.5%, and 47.5%, respectively;

- Compared to LSTM: the RMSE decreased by 49.3%, 11.0%, and 31.5%, and the MAX Error decreased by 31.2%, 26.3%, and 36.5%, respectively;

- Compared to GRU: the RMSE decreased by 69.0%, 35.6%, and 55.5%, and the MAX Error decreased by 44.8%, 52.9%, and 56.1%, respectively.

Table 3.

MAX Error and RMSE of different methods on Path #1.


| Metric | Method | East Error (m) | North Error (m) | Horizontal Error (m) |
|---|---|---|---|---|
| MAX Error | Our method | 1.9959 | 2.4495 | 2.6581 |
| | iTransformer | 4.4082 | 4.1171 | 5.0657 |
| | LSTM | 2.9022 | 3.3230 | 4.1863 |
| | GRU | 3.6161 | 5.1970 | 6.0572 |
| | INS | 106.6452 | 13.5499 | 107.5026 |
| RMSE | Our method | 0.9880 | 1.4085 | 1.7205 |
| | iTransformer | 2.0331 | 2.3087 | 3.0763 |
| | LSTM | 1.9485 | 1.5821 | 2.5099 |
| | GRU | 3.1849 | 2.1873 | 3.8636 |
| | INS | 48.9940 | 7.1497 | 49.5129 |
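The percentage reductions listed above follow from Table 3 as (baseline − ours) / baseline. The short script below recomputes the horizontal-direction figures from the tabulated values; it is a convenience check with hypothetical names, not part of the method.

```python
# Horizontal-error values transcribed from Table 3 (Path #1), in metres.
TABLE3_HORIZONTAL = {
    "Our method":   {"RMSE": 1.7205, "MAX": 2.6581},
    "iTransformer": {"RMSE": 3.0763, "MAX": 5.0657},
    "LSTM":         {"RMSE": 2.5099, "MAX": 4.1863},
    "GRU":          {"RMSE": 3.8636, "MAX": 6.0572},
    "INS":          {"RMSE": 49.5129, "MAX": 107.5026},
}

def reduction_pct(baseline: float, ours: float) -> float:
    """Relative error reduction with respect to the baseline, in percent."""
    return 100.0 * (baseline - ours) / baseline

ours = TABLE3_HORIZONTAL["Our method"]
for name, vals in TABLE3_HORIZONTAL.items():
    if name == "Our method":
        continue
    rmse_red = reduction_pct(vals["RMSE"], ours["RMSE"])
    max_red = reduction_pct(vals["MAX"], ours["MAX"])
    print(f"vs {name:<12} RMSE reduced by {rmse_red:.1f}%, MAX Error reduced by {max_red:.1f}%")
```

A negative result from `reduction_pct` means the proposed method has the larger error for that baseline and direction.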

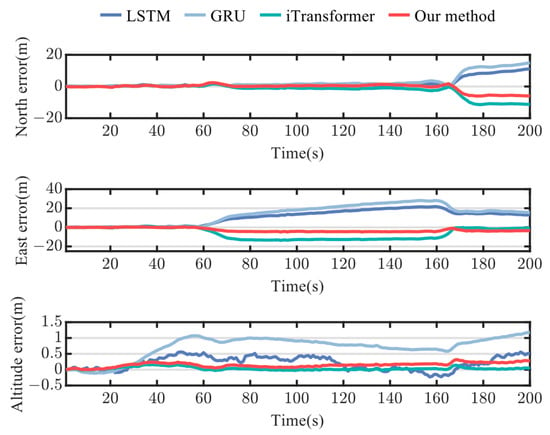

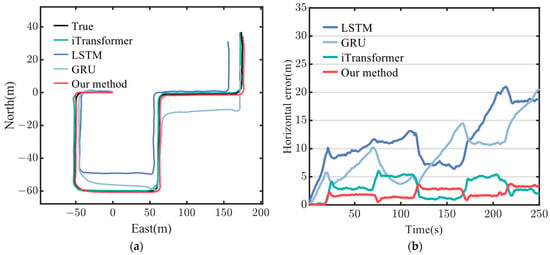

Path #2 has a duration of 200 s and includes straight segments, right-angle turns, and U-shaped bends, making the vehicle's motion more complex than on Path #1. Figure 12 compares the test trajectories and horizontal errors of each method on Path #2. As shown in Figure 12a, the INS trajectory deviates completely from the true path, with an error exceeding 400 m, whereas the other methods remain much closer to the true trajectory. The horizontal errors are plotted in Figure 12b (excluding the INS error, which is too large and would obscure the other methods). After the first right-angle turn at 60 s, all methods show some error amplification. However, the iTransformer and the proposed method do not continue to accumulate error, whereas the errors of the LSTM and GRU keep increasing, likely because the maneuver strongly affects the vehicle's state. A second change in positioning error occurs at 165 s, when the vehicle makes a U-turn. Among all the methods, the proposed method exhibits the smallest horizontal error, demonstrating its ability to maintain high positioning accuracy during complex vehicle maneuvers. Figure 13 compares the positioning errors in the east, north, and vertical directions; our method consistently outperforms the others in all directions. Notably, at the U-turn the horizontal positioning error briefly decreases before increasing again. This occurs because the network's north and east predictions fluctuate at the turning point: as shown in Figure 13, around 165 s the north error increases while the east error decreases, which temporarily reduces the horizontal error. Subsequently, both the north and east errors stabilize, and the horizontal error increases again. In addition, the vehicle typically travels at low speed during a U-turn, which makes the prediction task easier for the model; this is another reason for the decrease in horizontal positioning error.
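A small numerical example, with hypothetical values not read from Figure 13, shows why the horizontal error can shrink even while one component grows: the horizontal error is the Euclidean norm of the east and north errors, so a sufficiently large decrease in one component outweighs a smaller increase in the other.

```python
import math

def horizontal_error(east_err_m: float, north_err_m: float) -> float:
    """Horizontal error is the Euclidean norm of the east and north components."""
    return math.hypot(east_err_m, north_err_m)

# Hypothetical component errors (m) just before and just after a turn.
before = horizontal_error(east_err_m=6.0, north_err_m=2.0)  # sqrt(36 + 4) ~ 6.32 m
after = horizontal_error(east_err_m=4.0, north_err_m=3.0)   # sqrt(16 + 9) = 5.00 m
# North error grew by 1 m, east error shrank by 2 m, so the horizontal error fell.
print(f"{before:.2f} m -> {after:.2f} m")
```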

Figure 12.

Trajectory diagram and horizontal position errors diagram on Path #2: (a) the trajectory of each method on Path #2; (b) the horizontal position errors of each method on Path #2.

Figure 13.

Position errors of north, east, and altitude on Path #2.

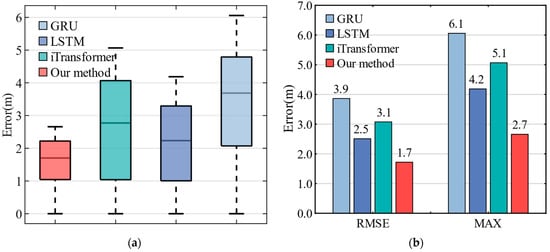

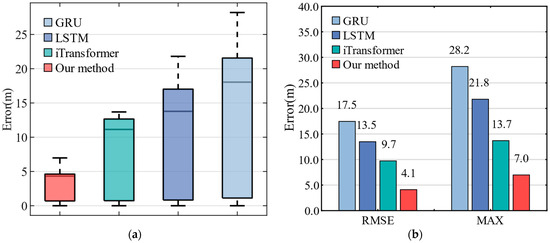

Figure 14a shows the horizontal position error distribution for each method in the form of a box plot. The error distribution of the method proposed in this paper is more concentrated, with most errors and the median falling below 5 m, indicating greater stability and accuracy compared to other methods. Figure 14b presents the RMSE and MAX Error of the horizontal absolute position for each method in the form of a bar chart. The proposed method demonstrates strong performance, achieving an RMSE of 4.1 m and a MAX Error of 7.0 m after 200 s of GNSS outage.

Figure 14.

The box plot and bar chart of position error on Path #2: (a) The box plot shows the horizontal position error distribution of each method; (b) The bar chart shows the RMSE and MAX Error of each method.

Table 4 provides a detailed comparison of the RMSE and MAX Error for the east, north, and horizontal position errors of each method on Path #2. The reductions in position errors in the east, north, and horizontal directions are as follows:

- Compared to INS: the RMSE decreased by 98.5%, 97.2%, and 98.3%, and the MAX Error decreased by 98.9%, 97.1%, and 98.6%, respectively;

- Compared to iTransformer: the RMSE decreased by 61.1%, 46.4%, and 57.8%, and the MAX Error decreased by 64.2%, 47.1%, and 49.0%, respectively;

- Compared to LSTM: the RMSE decreased by 73.8%, 36.2%, and 69.6%, and the MAX Error decreased by 77.5%, 46.7%, and 68.0%, respectively;

- Compared to GRU: the RMSE decreased by 79.6%, 55.6%, and 76.5%, and the MAX Error decreased by 82.6%, 59.7%, and 75.3%, respectively.

Table 4.

MAX Error and RMSE of different methods on Path #2.


| Metric | Method | East Error (m) | North Error (m) | Horizontal Error (m) |
|---|---|---|---|---|
| MAX Error | Our method | 4.8879 | 6.0308 | 6.9790 |
| | iTransformer | 13.6634 | 11.3954 | 13.6902 |
| | LSTM | 21.7570 | 11.3180 | 21.8038 |
| | GRU | 28.0816 | 14.9659 | 28.2057 |
| | INS | 444.8387 | 207.2744 | 490.7587 |
| RMSE | Our method | 3.3980 | 2.3024 | 4.1046 |
| | iTransformer | 8.7338 | 4.2948 | 9.7326 |
| | LSTM | 12.9937 | 3.6071 | 13.4851 |
| | GRU | 16.6707 | 5.1831 | 17.4579 |
| | INS | 226.1042 | 83.2790 | 240.9533 |

Experiments on public datasets demonstrate that the proposed model effectively addresses the rapid accumulation of positioning errors caused by the GNSS outage in professional strapdown inertial navigation systems, outperforming existing methods such as the LSTM and GRU. The model will also be further tested on a low-cost INS/GNSS integrated navigation system and a mobile robot.

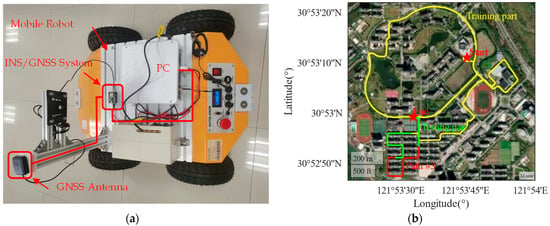

4.3. Experiment on Private Dataset

A low-cost INS/GNSS integrated navigation system and a small mobile robot platform were used to collect the private dataset at 13:03 on 11 July 2024 at a university in Pudong New Area, Shanghai, China. The robot moved at approximately 0 to 1.5 m/s, compared with an average speed of 3.5 m/s for the public dataset. Furthermore, the robot’s size is , and its net weight is . The IMU sensor is the JY901B, and the GNSS module is the ATGM336H-5N-71. The parameters of the sensors and the mobile robot are detailed in Table 5. The total duration of the dataset is 2800 s, with 80% (2240 s) used as training data and the remaining 20% (560 s) as test data. Within the test data, a 250 s route is selected as the test track, as shown in Figure 15, where yellow represents the training part, green the testing part, and red the test path, named Path #3. The longitude, latitude, and altitude measurements obtained from the GNSS receiver are used as ground truth for testing.
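The time-ordered 80/20 split and the windowing of IMU data into model inputs can be sketched as follows. The sampling rate, window length, and function names are hypothetical, since this section does not specify them; the per-sample position change used as the target follows the Conclusions' description of the model predicting changes in vehicle position from triaxial acceleration and angular velocity. Splitting in time order, rather than shuffling, keeps test samples out of the training windows.

```python
import numpy as np

def chronological_split(n_samples: int, train_frac: float = 0.8) -> tuple[slice, slice]:
    """Split sample indices in time order (no shuffling): training first, test after."""
    n_train = int(round(n_samples * train_frac))
    return slice(0, n_train), slice(n_train, n_samples)

def make_windows(imu: np.ndarray, targets: np.ndarray, window: int) -> tuple[np.ndarray, np.ndarray]:
    """Pair each length-`window` slice of IMU samples with the target at its last step."""
    n = len(imu) - window + 1
    X = np.stack([imu[i:i + window] for i in range(n)])   # (n, window, channels)
    y = targets[window - 1:window - 1 + n]                 # (n, target_dim)
    return X, y

fs_hz = 100.0                                              # hypothetical IMU sampling rate
train, test = chronological_split(int(2800 * fs_hz))      # 2800 s private dataset
print(train.stop / fs_hz, (test.stop - test.start) / fs_hz)   # 2240.0 560.0

# Toy data: 10 samples of 6-channel IMU (3-axis accel + 3-axis gyro) and
# a hypothetical 3-D position-change target per sample.
imu = np.arange(60, dtype=float).reshape(10, 6)
pos_increment = np.arange(30, dtype=float).reshape(10, 3)
X, y = make_windows(imu, pos_increment, window=4)
print(X.shape, y.shape)                                    # (7, 4, 6) (7, 3)
```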

Table 5.

The parameters of JY901B, ATGM336H-5N-71, and mobile robot.

Figure 15.

Data acquisition platform and trajectory of datasets: (a) appearance and electrical connection diagram of the data acquisition platform; (b) data acquisition path, which has strong GNSS signal reception along the entire route and lasts 2800 s in total, of which 2240 s are used for the training set and 560 s for the test set; a 250 s segment is designated as the test path, called Path #3.

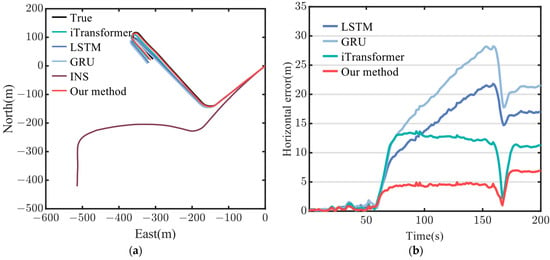

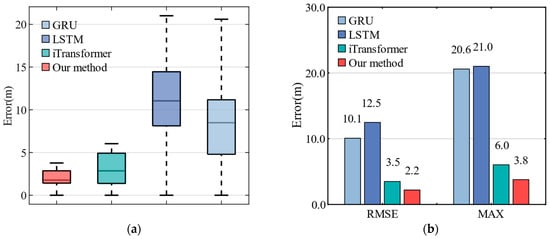

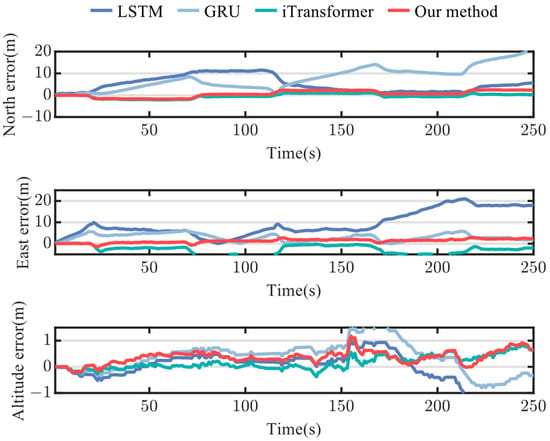

For the private dataset, we first compare the RMSE and MAX Error of each method, which are visualized in Figure 16. Table 6 provides a detailed comparison of the RMSE and MAX Error for each method in the east, north, and horizontal directions. The proposed method achieves the best horizontal accuracy, with the following changes in absolute position error in the east, north, and horizontal directions:

- Compared to INS: the RMSE and MAX Error decreased by 99.9% in all directions;

- Compared to iTransformer: the RMSE decreased by 54.0% in the east and 37.2% in the horizontal direction but increased by 47.9% in the north, and the MAX Error decreased by 51.6% in the east and 37.4% in the horizontal direction but increased by 15.7% in the north;

- Compared to LSTM: the RMSE decreased by 85.9%, 74.3%, and 82.4%, and the MAX Error decreased by 86.1%, 78.4%, and 82.0%, respectively;

- Compared to GRU: the RMSE decreased by 55.5%, 83.4%, and 78.2%, and the MAX Error decreased by 53.3%, 87.9%, and 81.7%, respectively.

Figure 16.

The box plot and bar chart of position error on Path #3: (a) The box plot shows the horizontal position error distribution of each method; (b) The bar chart shows the RMSE and MAX Error of each method.

Table 6.

MAX Error and RMSE of different methods on Path #3.


| Metric | Method | East Error (m) | North Error (m) | Horizontal Error (m) |
|---|---|---|---|---|
| MAX Error | Our method | 2.9107 | 2.4888 | 3.7739 |
| | iTransformer | 6.0123 | 2.1510 | 6.0318 |
| | LSTM | 20.9941 | 11.4996 | 21.0050 |
| | GRU | 6.2305 | 20.5142 | 20.5985 |
| | INS | 200,340 | 1,493,000 | 1,495,300 |
| RMSE | Our method | 1.5307 | 1.5734 | 2.1951 |
| | iTransformer | 3.3269 | 1.0635 | 3.4928 |
| | LSTM | 10.8684 | 6.1222 | 12.4741 |
| | GRU | 3.4413 | 9.4653 | 10.0715 |
| | INS | 4959 | 2684 | 5639 |

Among these methods, the iTransformer achieved the best performance in the north direction, slightly outperforming the proposed method. However, its weaker performance in the east direction led to a larger horizontal error, and our method achieved the best overall performance. Additionally, owing to the high noise and low stability of the low-cost sensors, the pure INS error was so large that its trajectory and error values could not be displayed alongside those of the other methods; therefore, the INS is excluded from the subsequent graphical comparisons.

Notably, the neural networks perform better on Path #3 than on Path #2, owing to differences in the operating environments of the public and private datasets and in the speeds of the acquisition vehicle and robot. The faster the vehicle and the more complex the operating environment, the more pronounced the changes in motion during acceleration, deceleration, and turning. The neural network must adapt to these rapid changes, which can introduce fluctuations. The vehicle used for the public dataset operates in a more complex environment and at higher speeds, whereas the robot used for the private dataset moves at a lower, steadier speed, resulting in relatively smaller error fluctuations.

Nevertheless, noise in the low-cost sensor measurements persisted and degraded the performance of the LSTM and GRU models. The iTransformer and FECAM-iTransformer, with their attention mechanisms, mitigated the impact of this noise and achieved positioning accuracy comparable to that on the smooth Path #1.

Figure 17 compares the test trajectories and horizontal errors of each method on Path #3. The test route includes multiple right-angle turns, and because the mobile robot has no shock absorbers, its measurements contain relatively high noise, posing a significant challenge for the neural network models. As seen in Figure 17a, the LSTM and GRU are strongly affected and deviate considerably from the actual trajectory, whereas the iTransformer and FECAM-iTransformer align more closely with it. Figure 17b shows that the fluctuations in the error curves are caused by the robot's turns. Horizontal errors accumulate more noticeably with the LSTM and GRU, whereas the FECAM-iTransformer has a smaller overall error than the iTransformer and keeps its horizontal positioning error below 5 m throughout. We attribute this to the attention mechanism of the iTransformer and FECAM-iTransformer, which down-weights uninformative parts of the time series, so that some of the noise that would otherwise cause error accumulation has less influence. The FECAM-iTransformer additionally strengthens informative frequency channels and suppresses the adverse effects of uninformative frequencies, so it outperforms the iTransformer. Figure 18 shows the errors of each method in three directions on Path #3.

Figure 17.

Trajectory diagram and horizontal position errors diagram on Path #3: (a) the trajectory of each method on Path #3; (b) the horizontal position errors of each method on Path #3.

Figure 18.

Position errors of north, east, and altitude on Path #3.

5. Discussion

In this paper, an INS/FECAM-iTransformer integrated navigation system is proposed to address the rapid accumulation of INS errors during GNSS outages. Experimental results demonstrate that the FECAM-iTransformer offers higher accuracy and stability compared to existing neural network solutions. The main advantages of the FECAM-iTransformer are as follows:

- (1) The iTransformer embeds the entire input sequence of each channel as a separate token, so attention across these tokens effectively captures the correlations among the IMU's multi-channel data.

- (2) FECAM enables the FECAM-iTransformer to model in the frequency domain. Using a squeeze-and-excitation (SE) block, FECAM enhances the informative frequency components of the IMU data, allowing the model to capture rich frequency-domain features.
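The frequency-domain channel attention in item (2) can be sketched as follows. This is a simplified NumPy illustration in the spirit of FECAM [32], not the authors' implementation: it assumes an orthonormal DCT-II as the squeeze step, an untrained two-layer MLP with random weights plus a sigmoid as the excitation step, and element-wise reweighting of each IMU channel. The channel count, window length, hidden width, and function names are assumptions.

```python
import numpy as np

def dct2_matrix(n: int) -> np.ndarray:
    """Orthonormal DCT-II basis: row k is the k-th cosine basis vector of length n."""
    k = np.arange(n)[:, None]
    t = np.arange(n)[None, :]
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * t + 1) * k / (2 * n))
    c[0] /= np.sqrt(2.0)
    return c

def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))

def frequency_channel_attention(x, w1, w2):
    """Reweight each channel of x (channels, length) using its DCT spectrum.

    Squeeze: the full DCT spectrum of every channel, instead of global average
    pooling, which keeps only a quantity proportional to the k = 0 coefficient.
    Excite: two-layer MLP + sigmoid -> weights in (0, 1), one per channel and step.
    """
    length = x.shape[1]
    spectrum = x @ dct2_matrix(length).T      # (channels, length) DCT coefficients
    hidden = np.maximum(0.0, spectrum @ w1)   # ReLU
    weights = sigmoid(hidden @ w2)            # (channels, length)
    return x * weights, weights

rng = np.random.default_rng(0)
C, L, H = 6, 64, 128                           # IMU channels, window length, hidden width (assumed)
x = rng.standard_normal((C, L))
w1 = rng.standard_normal((L, H)) / np.sqrt(L)  # untrained random weights, illustration only
w2 = rng.standard_normal((H, L)) / np.sqrt(H)
y, weights = frequency_channel_attention(x, w1, w2)
print(y.shape, weights.shape)
```

The design point the sketch illustrates is that global average pooling in a standard SE block sees only the lowest-frequency (k = 0) information, whereas a DCT-based squeeze exposes the whole spectrum to the excitation network.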

In this study, two datasets were used: a public dataset and a private dataset. Three representative routes were selected for testing: Path #1 and Path #2 from the public dataset and Path #3 from the private dataset.

The experiments on Path #1 demonstrate that neural networks can effectively mitigate error growth during GNSS outages. On this stable path, however, the performance gap among the models is small; the proposed method reduces the horizontal MAX Error by 97.5% and the horizontal RMSE by 96.5% compared with the INS.

Experiments on Path #2 show that during sharp turns, the attention mechanism plays a significant role in modeling IMU and GNSS signals. The LSTM and GRU perform notably worse on this route than the iTransformer and FECAM-iTransformer. The FECAM-iTransformer, which additionally emphasizes multi-channel frequency-domain features, achieved higher positioning accuracy than the iTransformer. Compared with the INS, the horizontal RMSE of the FECAM-iTransformer is reduced by 98.3%.

Experiments on Path #3 demonstrate that neural networks with attention mechanisms perform better on low-cost sensor platforms, regardless of whether the vehicle is turning or traveling in a straight line. The FECAM-iTransformer reduced the positioning error by 99.9% compared to the INS.

Compared with traditional deep learning models, the FECAM-iTransformer demonstrates superior navigation accuracy and stability. Existing neural network solutions generally do not explicitly extract the time-domain and frequency-domain characteristics of IMU data, because they typically model the entire sequence in the time domain only. Further research focusing on detailed feature analysis and extraction of sensor data therefore represents a promising direction for improvement.
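As an illustration of frequency-domain channel modeling, the sketch below follows the general FECAM pattern: transform each IMU channel with a discrete cosine transform (DCT), normalize the spectrum, pass it through an SE-style fully connected bottleneck ending in a sigmoid, and use the result to rescale the input. It is a simplified, hypothetical NumPy version with random weights; the orthonormal DCT-II, the hidden width and the element-wise (per channel, per sample) gate are assumptions loosely based on the FECAM design, not its reference implementation.

```python
import numpy as np


def dct2_matrix(L):
    # Orthonormal DCT-II basis: row k holds cos(pi / L * (n + 0.5) * k)
    n = np.arange(L)
    k = n[:, None]
    basis = np.cos(np.pi / L * (n + 0.5) * k)
    basis[0] *= 1 / np.sqrt(2)
    return basis * np.sqrt(2 / L)


def frequency_channel_gate(x, hidden=None, seed=0, eps=1e-5):
    """FECAM-style gating sketch.

    x: (N, L) array, N IMU channels with L samples each.
    Returns (y, gate) where y = x * gate and every gate value lies in (0, 1).
    Weights are random placeholders; a trained model would learn them.
    """
    N, L = x.shape
    hidden = hidden or 2 * L
    rng = np.random.default_rng(seed)
    spec = x @ dct2_matrix(L).T                      # DCT-II of every channel, (N, L)
    # Layer-normalize each channel's spectrum
    spec = (spec - spec.mean(axis=1, keepdims=True)) / np.sqrt(spec.var(axis=1, keepdims=True) + eps)
    W1 = rng.normal(scale=1 / np.sqrt(L), size=(L, hidden))
    W2 = rng.normal(scale=1 / np.sqrt(hidden), size=(hidden, L))
    h = np.maximum(spec @ W1, 0.0)                   # excitation: FC + ReLU
    gate = 1.0 / (1.0 + np.exp(-(h @ W2)))           # FC + sigmoid -> weights in (0, 1)
    return x * gate, gate


x = np.random.default_rng(2).normal(size=(6, 64))   # 6 hypothetical IMU channels, 64 samples
y, gate = frequency_channel_gate(x)
print(y.shape)  # (6, 64)
```

Using the DCT spectrum as the "squeeze" input, instead of a plain average, lets the gate respond to which frequency components are present in each channel.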

This work nevertheless has limitations: the training and test datasets do not cover all possible application scenarios. Although the experiments show that the method is effective on both professional-grade and low-cost equipment platforms for land vehicles, future studies could extend the experiments to surface, underwater, aerial, and other vehicles. With larger training datasets, the proposed method is expected to adapt even better.

6. Conclusions

In this paper, a novel method that uses the FECAM-iTransformer algorithm to estimate GNSS position measurements is proposed to mitigate the accumulated INS errors during GNSS signal interruptions. The iTransformer and FECAM extract time-domain and frequency-domain features, respectively, from multi-channel IMU sensor data, enabling the system to output stable and reliable pseudo-GNSS positions even during a complete GNSS outage. This approach significantly reduces vehicle position errors. Additionally, this study provides a detailed analysis of the model’s input features and outputs: the model dynamically learns and predicts changes in vehicle position from IMU inputs, specifically triaxial acceleration and triaxial angular velocity. Compared with existing LSTM and GRU models, the FECAM-iTransformer produces more accurate and stable navigation predictions.
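The input/output arrangement described above (six-axis IMU data in, a change in vehicle position out) can be sketched as a sliding-window dataset builder. The window length, stride, sample alignment between IMU and reference positions, and the definition of the target as the displacement between the first and last sample of each window are illustrative assumptions; `make_windows` is a hypothetical helper, not part of the authors' code.

```python
import numpy as np


def make_windows(imu, pos, window=100, stride=10):
    """Build (input, target) pairs for a position-change regressor.

    imu: (T, 6) array of [ax, ay, az, wx, wy, wz] samples.
    pos: (T, D) array of reference positions sampled at the same epochs.
    Each input is a window of IMU samples; each target is the position
    change between the first and last epoch of that window.
    """
    imu, pos = np.asarray(imu, dtype=float), np.asarray(pos, dtype=float)
    if len(imu) != len(pos):
        raise ValueError("imu and pos must have the same number of samples")
    if len(imu) < window:
        raise ValueError("sequence shorter than one window")
    starts = range(0, len(imu) - window + 1, stride)
    X = np.stack([imu[s:s + window] for s in starts])              # (M, window, 6)
    y = np.stack([pos[s + window - 1] - pos[s] for s in starts])   # (M, D)
    return X, y


# Synthetic example: vehicle moving 0.1 m per epoch along the first axis
T = 300
imu = np.zeros((T, 6))
pos = np.stack([np.arange(T) * 0.1, np.zeros(T)], axis=1)
X, y = make_windows(imu, pos, window=100, stride=10)
print(X.shape, y.shape)  # (21, 100, 6) (21, 2)
```

During an outage, predicted position changes of this kind would be accumulated from the last valid fix to form pseudo-GNSS positions; how the paper feeds them to the filter is not described in this section.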

Author Contributions

Conceptualization, X.K. and B.Y.; methodology, B.Y.; software, B.Y.; validation, X.K. and B.Y.; formal analysis, B.Y.; investigation, B.Y.; resources, X.K.; data curation, B.Y.; writing—original draft preparation, B.Y.; writing—review and editing, X.K.; visualization, B.Y.; supervision, X.K.; project administration, X.K.; funding acquisition, X.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key Research and Development Program of China: Design and Development of High Performance Marine Electric Field Sensor, grant number 2022YFC3104001.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are available from the corresponding authors.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| INS | Inertial navigation system |
| GNSS | Global navigation satellite system |
| IMUs | Inertial measurement units |
| EKF | Extended Kalman filter |
| iTransformers | Inverted Transformers |
| FECAM | Frequency-enhanced channel attention mechanism |
| FC | Fully connected |
| LSTM | Long short-term memory |
| GRU | Gated recurrent unit |
| SE-Block | Squeeze-and-excitation block |
| RMSE | Root mean square error |
| MAX Error | Maximum error |

References

- Yuanxi, Y.; Cheng, Y.; Xia, R. PNT intelligent services. Acta Geod. Et Cartogr. Sin. 2021, 50, 1006–1012.

- Groves, P.D.; Wang, L.; Walter, D.; Martin, H.; Voutsis, K.; Jiang, Z. The four key challenges of advanced multisensor navigation and positioning. In Proceedings of the 2014 IEEE/ION Position, Location and Navigation Symposium—PLANS 2014, Monterey, CA, USA, 5–8 May 2014; pp. 773–792.

- Xu, X.; Sun, Y.; Yao, Y.; Zhang, T. A Robust In-Motion Optimization-Based Alignment for SINS/GPS Integration. IEEE Trans. Intell. Transp. Syst. 2022, 23, 4362–4372.

- Zaidi, B.H.; Manzoor, S.; Ali, M.; Khan, U.; Nisar, S.; Ullah, I. An inertial and global positioning system based algorithm for ownship navigation. Int. J. Sens. Netw. 2021, 37, 209.

- Zhou, J.; Zhao, X.; Cheng, X.; Xu, Z.; Zhao, H. Vehicle Ego-Localization Based on Streetscape Image Database Under Blind Area of Global Positioning System. J. Shanghai Jiaotong Univ. (Sci.) 2019, 24, 122–129.

- Li, X.; Chen, W.; Chan, C.; Li, B.; Song, X. Multi-sensor fusion methodology for enhanced land vehicle positioning. Inf. Fusion 2019, 46, 51–62.

- Havyarimana, V.; Hanyurwimfura, D.; Nsengiyumva, P.; Xiao, Z. A novel hybrid approach based-SRG model for vehicle position prediction in multi-GPS outage conditions. Inf. Fusion 2018, 41, 1–8.

- Abdel-Hamid, W.; Noureldin, A.; El-Sheimy, N. Adaptive Fuzzy Prediction of Low-Cost Inertial-Based Positioning Errors. IEEE Trans. Fuzzy Syst. 2007, 15, 519–529.

- Gao, J.; Li, K.; Chen, J. Research on the Integrated Navigation Technology of SINS with Couple Odometers for Land Vehicles. Sensors 2020, 20, 546.

- Du, X.; Hu, X.; Hu, J.; Sun, Z. An adaptive interactive multi-model navigation method based on UUV. Ocean Eng. 2023, 267, 113217.

- Wang, D.; Wang, B.; Huang, H.; Yao, Y. Online Calibration Method of DVL Error Based on Improved Integrated Navigation Model. IEEE Sens. J. 2022, 22, 21082–21092.

- Zhu, Y.; Zhang, M.; Yang, Y.; Ran, C.; Zhou, L. Improved Gaussian process regression-based method to bridge GPS outages in INS/GPS integrated navigation systems. Measurement 2024, 229, 114432.

- Jwo, D.-J.; Biswal, A.; Mir, I.A. Artificial Neural Networks for Navigation Systems: A Review of Recent Research. Appl. Sci. 2023, 13, 4475.

- Sharaf, R.; Noureldin, A.; Osman, A.; El-Sheimy, N. Online INS/GPS integration with a radial basis function neural network. IEEE Aerosp. Electron. Syst. Mag. 2005, 20, 8–14.

- Sharaf, R.; Noureldin, A. Sensor Integration for Satellite-Based Vehicular Navigation Using Neural Networks. IEEE Trans. Neural Netw. 2007, 18, 589–594.

- Tan, X.; Wang, J.; Jin, S.; Meng, X. GA-SVR and Pseudo-position-aided GPS/INS Integration during GPS Outage. J. Navig. 2015, 68, 678–696.

- Fang, W.; Jiang, J.; Lu, S.; Gong, Y.; Tao, Y.; Tang, Y.; Yan, P.; Luo, H.; Liu, J. A LSTM Algorithm Estimating Pseudo Measurements for Aiding INS during GNSS Signal Outages. Remote Sens. 2020, 12, 256.

- Meng, X.; Tan, H.; Yan, P.; Zheng, Q.; Chen, G.; Jiang, J. A GNSS/INS Integrated Navigation Compensation Method Based on CNN–GRU + IRAKF Hybrid Model During GNSS Outages. IEEE Trans. Instrum. Meas. 2024, 73, 1–15.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

- Wang, H.; Tang, F.; Wei, J.; Zhu, B.; Wang, Y.; Zhang, K. Online Semi-supervised Transformer for Resilient Vehicle GNSS/INS Navigation. IEEE Trans. Veh. Technol. 2024, 1–16, early access.

- Cheng, J.; Gao, Y.; Wu, J. An Adaptive Integrated Positioning Method for Urban Vehicles Based on Multitask Heterogeneous Deep Learning During GNSS Outages. IEEE Sens. J. 2023, 23, 22080–22092.

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

- Chung, J.; Gulcehre, C.; Cho, K.; Bengio, Y. Empirical Evaluation of Gated Recurrent Neural Networks on Sequence Modeling. arXiv 2014, arXiv:1412.3555.

- Kim, T.; Kim, J.; Tae, Y.; Park, C.; Choi, J.; Choo, J. Reversible Instance Normalization for Accurate Time-Series Forecasting against Distribution Shift. In Proceedings of the International Conference on Learning Representations, Online, 25–29 April 2022; Available online: https://api.semanticscholar.org/CorpusID:251647808 (accessed on 1 January 2024).

- Liu, Y.; Hu, T.; Zhang, H.; Wu, H.; Wang, S.; Ma, L.; Long, M. iTransformer: Inverted Transformers Are Effective for Time Series Forecasting. In Proceedings of the Twelfth International Conference on Learning Representations (ICLR 2024), Vienna, Austria, 7–11 May 2024.

- Wang, Q.; Liu, K.; Cao, Z. System noise variance matrix adaptive Kalman filter method for AUV INS/DVL navigation system. Ocean Eng. 2023, 267, 113269.

- Zihajehzadeh, S.; Park, E.J. A Gaussian process regression model for walking speed estimation using a head-worn IMU. In Proceedings of the 2017 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), 11–15 July 2017; pp. 2345–2348.

- Bolotin, Y.; Savin, V. Turntable IMU Calibration Algorithm Based on the Fourier Transform Technique. Sensors 2023, 23, 1045.

- Lee-Thorp, J.; Ainslie, J.; Eckstein, I.; Ontañón, S. FNet: Mixing Tokens with Fourier Transforms. In Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL 2022), Seattle, WA, USA, 10–15 July 2022.

- Zhou, T.; Ma, Z.; Wen, Q.; Wang, X.; Sun, L.; Jin, R. FEDformer: Frequency Enhanced Decomposed Transformer for Long-term Series Forecasting. In Proceedings of the 39th International Conference on Machine Learning (ICML 2022), Baltimore, MD, USA, 17–23 July 2022.

- Xu, Z.; Zeng, A.; Xu, Q. FITS: Modeling Time Series with 10k Parameters. In Proceedings of the Twelfth International Conference on Learning Representations (ICLR 2024), Vienna, Austria, 7–11 May 2024.

- Jiang, M.; Zeng, P.; Wang, K.; Liu, H.; Chen, W.; Liu, H. FECAM: Frequency enhanced channel attention mechanism for time series forecasting. Adv. Eng. Inform. 2023, 58, 102158.

- Zhao, S.; Zhou, Y.; Huang, T. A Novel Method for AI-Assisted INS/GNSS Navigation System Based on CNN-GRU and CKF during GNSS Outage. Remote Sens. 2022, 14, 4494.

- Kalman, R.E. A New Approach to Linear Filtering and Prediction Problems. In Control Theory: Twenty-Five Seminal Papers (1932–1981); Başar, T., Ed.; IEEE: Piscataway, NJ, USA, 2001; pp. 167–179.

- Trawny, N.; Mourikis, A.I.; Roumeliotis, S.I.; Johnson, A.E.; Montgomery, J.F. Vision-aided inertial navigation for pin-point landing using observations of mapped landmarks. J. Field Robot. 2007, 24, 357–378.

- Shen, C.; Zhang, Y.; Tang, J.; Cao, H.; Liu, J. Dual-optimization for a MEMS-INS/GPS system during GPS outages based on the cubature Kalman filter and neural networks. Mech. Syst. Signal Process. 2019, 133, 106222.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).