Abstract

According to the World Health Organization (WHO), 5% of people around the world have hearing disabilities, which limits their capacity to communicate with others. Recently, researchers have proposed systems based on deep learning techniques to translate sign language into text, expecting this to help deaf people communicate; however, the performance of such systems is still too low for practical scenarios. Furthermore, the proposed systems are language-oriented, which leads to problems specific to the signs of each language. To address these problems, in this paper, we propose a system based on a Recurrent Neural Network (RNN) focused on Mexican Sign Language (MSL) that uses the spatial tracking of hands and facial expressions to predict the word that a person intends to communicate. To achieve this, we trained four RNN-based models using a dataset of 600 clips of 30 frames each (1 s at 30 frames per second), with 30 clips per word. We conducted two experiments: the first was tailored to determine the model best suited to the target application and to measure the accuracy of the resulting system in offline mode; the second measured the accuracy of the system in online mode. We assessed the system's performance using the precision, recall, F1-score, and the number of errors during online scenarios; the results indicate an accuracy of 0.93 in offline mode and a higher performance in online mode compared to previously proposed approaches. These results underscore the potential of the proposed scheme in scenarios such as teaching, learning, commercial transactions, and daily communication between deaf and hearing people.

1. Introduction

According to the National Institute of Statistics and Geography of Mexico (INEGI) [1], there are 1,350,802 people with hearing disabilities in Mexico. Many of them communicate fluently in sign language; however, most hearing people are unfamiliar with Mexican Sign Language (MSL), so communication between the two groups can be difficult when there is no interpreter or shared means of communication, such as a written language in which both parties are proficient.

On the other hand, artificial intelligence has advanced to rival human decisions in some areas [2], particularly through the discipline called deep learning. Deep learning includes Recurrent Neural Networks (RNNs), among which Long Short-Term Memory (LSTM) networks are a prominent variant. These techniques process data sequences and are widely applied in research on automatic speech recognition and natural language processing [3].

For example, Ref. [4] proposed a neural network for human action recognition from videos. The authors used an attention mechanism to focus on the most important features of each frame and proposed a new neural network architecture to capture the temporal information across frames. The network combines two streams:

- An LSTM stream that analyzes the sequence of frames in the video.

- A CLSTM (Convolutional LSTM) stream that captures spatial and temporal information.

As a result, the authors report that their method is more accurate than previously existing methods.

On the other hand, Ref. [5] created a model for generating video descriptions. The model uses recurrent neural networks, specifically LSTMs, to capture the temporal structure of videos and generate descriptions. It was trained on a dataset of YouTube videos, and the authors claim that it outperforms previous models in accuracy.

From a different perspective, Ref. [6] proposed a method to generate Indian Sign Language (ISL) videos from sentences using sentence preprocessing and generative adversarial networks (GAN). Their method focuses on converting sentences into glosses (a way to represent signs using written language) and generating synthetic video frames for each gloss using a GAN architecture. The generated videos use animated avatars and 2D/3D graphics.

Despite the difficulties of this research field, communication is fundamental to connecting people and communities, and this motivates our work: for the deaf community in Mexico, MSL is more than a means of communication; it is a pillar of both their culture and their identity. Despite progress in public awareness of the importance of MSL, this form of communication still faces significant challenges to its adoption by society. For this reason, this work is more than a technological exercise; it is a concrete manifestation of a commitment to including deaf people in our society. We believe that this can be achieved by empowering the deaf community with technological tools that facilitate their communication, and we expect this work to contribute to a more inclusive and equitable society.

The contributions of this paper are:

- We designed four multimodal models based on spatial tracking, gesture recognition, and an RNN (Section 3).

- We tuned the proposed systems and identified the model best suited to the target application (Section 5).

- We tested the proposed system in both offline and online scenarios (Section 5).

- We evaluated the accuracy of the system in the offline and online scenarios (Section 5).

We expect our work to impact different social areas, such as education, health care, transportation, finance, and daily life in general, helping deaf people interact with hearing people on equal terms.

The remainder of this paper is organized as follows: Section 2 discusses works related to our proposed model; Section 3 describes the operation of the proposed system; Section 4 details the materials and the creation process of our model, as well as the characteristics of the dataset and the evaluations carried out on the model; Section 5 presents the results; Section 6 presents our conclusions; finally, the references and Appendices A and B follow.

2. Related Works

The research detailed in [7] focused on developing a sign language detection system to facilitate communication between the deaf community and banks. The main difficulty lies in the complexity of Indian Sign Language (ISL) gestures related to the banking domain. The lack of standardized datasets and the variability in gesture length were significant challenges. To address this, the proposed approach combines feature extraction using a Convolutional Neural Network (CNN) with an LSTM Recurrent Neural Network. This system was trained and tested on a customized ISL dataset.

On the other hand, Ref. [8] focused on developing an American Sign Language (ASL) recognition system based on computer vision and deep learning. It also presents a publicly available motion-based ASL dataset for future research, which contains thirty phrases obtained from the book American Sign Language for Dummies along with phrases considered basic for communication. The authors compared three approaches, ConvLSTM, a Convolutional Neural Network (CNN) combined with LSTM, and MediaPipe combined with LSTM, observing two main parameters: the number of sentences in the model and the precision for each sentence. They used the MediaPipe (an open-source framework by Google for tasks such as face detection and hand tracking) and LSTM model, which reached 97% accuracy in training and 79% accuracy in testing. Finally, they propose using the Transformer architecture to improve the performance of the system in future work.

In the field of video interpretation, the authors of [9] implemented a deep learning methodology, specifically an LSTM network, to evaluate expressiveness in facial videos of people responding to advertisements. They detailed the structure of the LSTM model, which includes a dropout layer that randomly turns off neurons to avoid overfitting. The model was trained to determine expressiveness from facial cues, and the results were displayed through a web-based user interface fed by a camera connected to an IoT board.

In turn, Ref. [10] proposed a sign language-to-text translation system using deep learning models, specifically CNN and LSTM. The system was trained on an enriched dataset that contains common phrases used in professional meetings. The results show that the LSTM model outperforms the CNN model.

The authors of [11] proposed a video recognition model for Chinese sign language based on computer vision techniques, achieving an accuracy of 85%. Their model is based on an LSTM neural network that captures skeletal tracking information. They concluded by suggesting that the model's accuracy could be improved by retraining on an expanded dataset and incorporating facial expression recognition.

Also, in the research detailed in [12], a system focused on translating MSL using RGB-D information was introduced. The authors built a semantic corpus of 53 words belonging to Mexican Sign Language (MSL) from scratch. From this corpus, they captured the trajectory of hand movements using a Kinect sensor; then, a KNN-based algorithm eliminated inconsistencies and noise in the extracted patterns. They used a Multilayer Perceptron Neural Network for word classification and implemented the Backpropagation algorithm for training. Finally, they validated their system using the K-Fold Cross Validation method, obtaining an average accuracy percentage of 93.46%.

Ortiz [13] created a database of Colombian Sign Language (CSL) signs and used it to train a CNN to recognize CSL gestures. They found that several activation functions failed to respond adequately; however, Dropout improved the model's accuracy and loss. Additionally, they observed that the number of layers is unrelated to the model's accuracy.

Natarajan [14] proposed deep learning-based techniques to enhance sign language video recognition, translation, and generation. The researchers used the MediaPipe library, a hybrid CNN, and a bidirectional long short-term memory (BLSTM) model for pose detail extraction and text generation. To generate sign language gestures, they combined a hybrid neural machine translation network with MediaPipe and a dynamic generative adversarial network (GAN). The proposed model achieved over 95% classification accuracy and significantly improved visual quality. The dynamic GAN was evaluated on several multilingual datasets, with excellent results.

In contrast, Ref. [15] presented a sign language recognition system called SignGest that uses acoustic signals from a smartphone to capture sign language gestures and a CNN model to distinguish between different gestures. In addition, it used a deep convolutional GAN to generate training data. The authors implemented the system on a server and an Android smartphone without additional hardware. The experimental results showed that SignGest achieved its target performance, which the authors assessed using the Structural Similarity Index Measure (SSIM).

Additionally, in [16], a method for recognizing words in Japanese Sign Language (JSL) based on the abstraction of hand signals was implemented by measuring the hand shape, position, and movement. The proposed system uses a contour-based method and template matching for hand shape recognition. The authors also explored a second method based on the Hidden Markov Model. The system was evaluated using a dataset of 400 JSL words, and the results showed a recognition rate of 33.8%.

In turn, Ref. [17] introduced an interactive Taiwanese sign language learning system based on Microsoft Kinect. They processed the color image, depth image, and skeleton points of the hands and body to extract relevant information. Then, they trained a support vector machine (SVM) classifier to identify the intended sign language word; the experimental results showed a recognition rate of 90.2% and a hand motion detection rate of 98%.

In [18], the authors presented research on the automatic recognition of Mexican Sign Language (MSL) by analyzing body movement and facial gestures. The proposed system combines information from the hands, body, and face, using a dataset of 3000 samples of 30 different MSL signs. They used a depth camera to capture the coordinates of the movements and an RNN for classification. The system reached an accuracy of 97% on clean test data and 90% on data with a high level of noise, highlighting the robustness and effectiveness of the proposed approach.

Another relevant work in MSL recognition is [19], in which the authors presented a system to recognize static signs of the dactylological (fingerspelling) alphabet. They used the MediaPipe framework and a CNN model to interpret the letters represented by the hand signals captured by a camera. Using a database of 336 test images, their system achieved an accuracy of 83.63% with the CNN model. In addition, they evaluated samples of each letter and achieved an accuracy of 84.57%, a sensitivity of 83.33%, and a specificity of 99.17%. The advantage of their proposal lies in its ability to run on low-power equipment, allowing real-time classification and improving the accessibility and usability of the system.

The authors of [20] proposed a method for recognizing the static alphabet of MSL that learns the shape of each of the 21 letters from examples. Their method first normalizes each example of the training set into a 3D pose using principal component analysis (PCA). The input data were captured using a 3D sensor, and the method generates three types of features representing each shape. Using a dataset acquired in a laboratory, their approach achieved 100% accuracy. In addition, the features used in this method have a clear and intuitive geometric interpretation.

Another interesting work is [21], in which the authors introduced a new lexical video dataset of MSL that includes the most frequently used dynamic gestures. Each gesture class comprises several versions of videos recorded under uncontrolled conditions. This dataset, named MX-ITESO-100, contains a lexicon of 100 gestures and 5000 videos made by three participants, showing various grammatical elements. Furthermore, they evaluated the dataset using a two-step neural network model, reaching an accuracy of 99%, which makes it a useful benchmark for training future machine learning models in computer vision systems.

Another work related to the project we propose is [22]. The authors designed a bidirectional sign language translation system to bridge the communication gap between hearing and deaf people. They evaluated several deep learning models, including RNN, bidirectional RNN (BRNN), LSTM, GRU, and Transformers, to identify the most accurate model for sign language recognition and translation. They also used a keypoint detection approach (keypoints being the spatial locations of important landmarks in the image) through MediaPipe to track and interpret gestures. The system incorporates an intuitive graphical interface that allows translation between MSL and Spanish in both directions, facilitating the entry of signs or text and returning the corresponding translations. The evaluation results demonstrated high accuracy, with the BRNN model standing out at 98.8%. The authors underlined the importance of hand characteristics in sign language recognition and offered a promising solution to improve communicative accessibility and promote the inclusion of people with hearing disabilities.

In [23], the authors presented a sign language recognition model that combines spatial and temporal attention with a general neural network. The model uses self-attention mechanisms to extract relevant information from node and edge features in both the spatial and temporal domains. The architecture is divided into three branches: a graph-based spatial branch that identifies spatial dependencies, a graph-based temporal branch that reinforces temporal dependencies in sequential hand skeleton data, and a general neural network branch that improves the generalization capability of the model, increasing its robustness. They evaluated the system on the MSL and Pakistani Sign Language (PSL) datasets and on the American Sign Language Large Video Dataset (ASLLVD), which include 3D joint coordinates for the face, body, and hands; the authors conducted experiments on both individual gestures and their combinations. The model achieved 99.96% accuracy on the MSL dataset, 92.00% on PSL, and 26.00% on the ASLLVD dataset, which covers over 2700 classes.

On the other hand, the research presented in [24] focused on identifying lexical signs. This study proposed an unsupervised method to identify phonetic units in MSL videos; to achieve this, the authors used an image thresholding approach based on the Movement-Hold model of Liddell and Johnson. The goal was to identify the smallest linguistic units containing relevant information, avoiding the loss of sublexical data that might go unnoticed by most natural language processing (NLP) algorithms. Furthermore, the method allows noisy or redundant video frames to be removed, thus reducing computational costs. The authors tested their algorithm on a collection of MSL videos, and human judges assessed the relevance of the extracted segments. The results indicated that the frames selected by the algorithm contained enough information to be comprehensible for signers. In some cases, up to 80% of the available frames could be discarded without loss of comprehensibility, which has direct implications for the future electronic representation, transmission, and processing of sign language.

The authors of [25] introduced an interactive platform for teaching MSL based on artificial intelligence (AI) models, which they called DAKTILOS. This platform natively integrates real-time tracking of hand movements from 2D images. DAKTILOS recognizes and evaluates both static and dynamic signs of the MSL alphabet performed by the user. First, the system identifies 21 critical points of the hand using the MediaPipe model; next, these are dynamically compared to the target hand configurations (letters) using the FingerPose classifier. Finally, the system computes a score and determines whether the sign was performed correctly, providing visual feedback. The platform allows users to explore 27 letters, showing the correct configuration with a 3D hand. Preliminary tests were conducted with eight subjects to evaluate the functionality of DAKTILOS.

These works support the idea of using RNNs to build a common means of communication between people with hearing disabilities who use sign language and people who can hear; however, despite previous efforts, the following issues remain unaddressed:

- Previous systems depend on the specific signs of each language; that is, they are language-oriented and, as a consequence, subject to particular design constraints.

- The accuracy of most of those approaches is still low for practical scenarios.

- Most authors have limited their experiments to offline tests.

3. The Proposed System

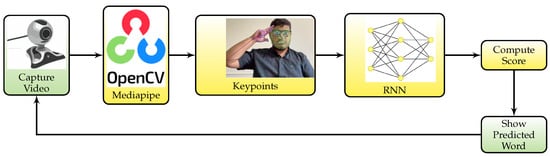

The main idea is to use the spatial coordinates of the body parts involved in MSL, specifically the hands, torso, and face, because gestures play an important role in MSL, and then to use these coordinates as patterns for training an RNN to predict MSL words in an online scenario. We designed the proposed system to receive live video from a camera, compute the necessary data from it, feed these data into the RNN to make predictions, and finally loop again continuously. Figure 1 illustrates this process.

Figure 1.

Block diagram of the proposed system.
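The capture-predict loop of Figure 1 can be sketched as a sliding window over per-frame keypoint vectors. This is a minimal sketch under stated assumptions, not the authors' implementation: the window length of 30 frames is taken from the clip length in Section 4.1, and `frame_features` and `predict` are hypothetical placeholders for the camera plus keypoint-extraction stage and for the trained RNN, respectively.

```python
from collections import deque
from typing import Callable, Deque, Iterable, List, Optional, Sequence

SEQUENCE_LENGTH = 30  # frames per training clip (Section 4.1)


def run_online(
    frame_features: Iterable[Sequence[float]],
    predict: Callable[[List[Sequence[float]]], Optional[str]],
    window: int = SEQUENCE_LENGTH,
) -> List[str]:
    """Feed per-frame keypoint vectors through a sliding window and collect predictions.

    `frame_features` stands in for the live camera + keypoint extraction, and
    `predict` stands in for the trained RNN; both are hypothetical placeholders.
    """
    buffer: Deque[Sequence[float]] = deque(maxlen=window)  # oldest frame drops out automatically
    outputs: List[str] = []
    for features in frame_features:
        buffer.append(features)
        if len(buffer) < window:
            continue  # not enough history yet to form a full sequence
        word = predict(list(buffer))  # model sees the most recent `window` frames
        if word is not None:
            outputs.append(word)
    return outputs


if __name__ == "__main__":
    always_hola = lambda seq: "hola"  # dummy model for illustration
    print(len(run_online([[0.0]] * 40, always_hola)))  # 11: one prediction per frame from frame 30 on
```

Using a `deque` with `maxlen` keeps exactly the last 30 frames without manual index bookkeeping; querying the model on every new frame once the buffer is full is one design choice among several (predicting every k frames would reduce computation).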

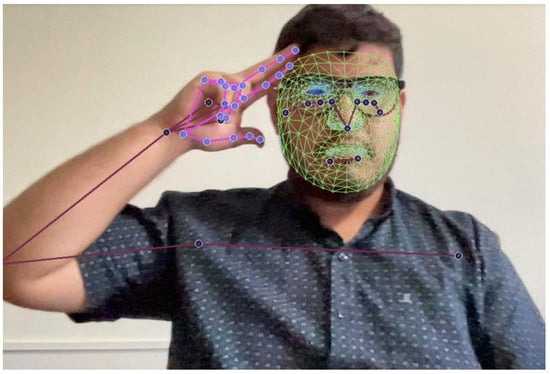

We believe this distinguishes our work from many deep learning-based approaches to video interpretation, which use frame changes to identify content; unlike most of them, we tailored a system based on spatial coordinates, as explained above. We also considered positions relative to the torso to provide further information about spatial position. We used the MediaPipe model to compute the spatial coordinates of the body parts of interest (Figure 2).

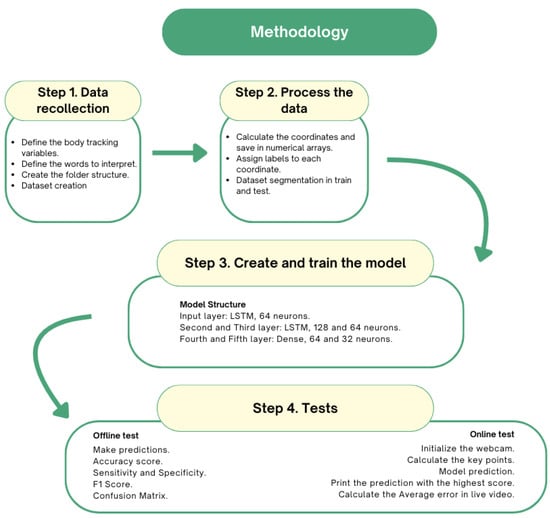

Figure 2.

Workflow to develop the proposed system.
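One possible way to express positions relative to the torso is to subtract a torso origin from every landmark. The sketch below assumes, as a hypothetical choice not stated in the text, that the origin is the midpoint between the shoulders, which are landmarks 11 (left shoulder) and 12 (right shoulder) in MediaPipe's pose model.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]

# MediaPipe Pose landmark indices for the shoulders.
LEFT_SHOULDER, RIGHT_SHOULDER = 11, 12


def torso_origin(pose: Sequence[Point]) -> Point:
    """Hypothetical torso origin: the midpoint between both shoulders."""
    lx, ly, lz = pose[LEFT_SHOULDER]
    rx, ry, rz = pose[RIGHT_SHOULDER]
    return ((lx + rx) / 2, (ly + ry) / 2, (lz + rz) / 2)


def relative_to_torso(points: Sequence[Point], origin: Point) -> List[Point]:
    """Translate landmarks so that the torso origin becomes (0, 0, 0)."""
    ox, oy, oz = origin
    return [(x - ox, y - oy, z - oz) for x, y, z in points]


if __name__ == "__main__":
    pose = [(0.0, 0.0, 0.0)] * 33  # 33 pose landmarks with placeholder values
    pose[LEFT_SHOULDER] = (0.4, 0.5, 0.0)
    pose[RIGHT_SHOULDER] = (0.6, 0.5, 0.0)
    origin = torso_origin(pose)
    print(relative_to_torso([(0.5, 0.7, 0.1)], origin))  # a hand point expressed relative to the torso
```

Because the reference point moves with the signer, the resulting hand trajectories become less sensitive to where the person stands in the camera frame.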

In the remainder of this section, we explain the procedure to construct the dataset, and then, we discuss the proposed model of the RNN.

3.1. Dataset Construction

As discussed above, we use a custom dataset consisting of the spatial coordinates of the body parts to train the model. To achieve this, we utilize the Mediapipe model to compute the landmarks of the involved body parts; thus, in this section, we detail the general procedure to construct the patterns of this dataset.

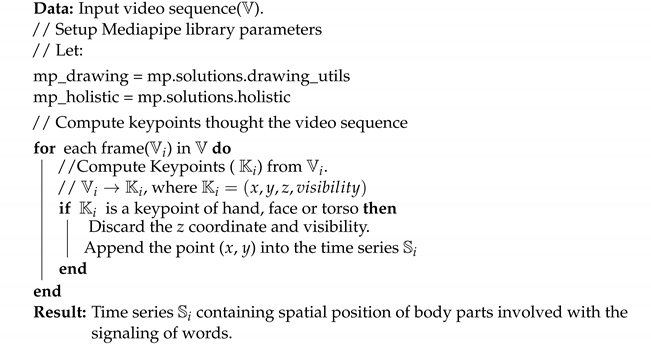

To describe how keypoints are computed with the MediaPipe model, let $V = \{f_1, f_2, \ldots, f_T\}$ be the set of frames that make up a video sequence. Each frame $f_t$ ($t = 1, \ldots, T$) of $V$ is fed into the MediaPipe model, which outputs, for each landmark, a tuple $k = (x, y, z, v)$ whose first three members are the x, y, and z spatial coordinates of the main landmarks of the hands, face, and torso. Examples of these landmarks include knuckles, wrists, eyes, mouth, shoulders, and other important landmarks. Besides the spatial coordinates, the MediaPipe model outputs a score called visibility, $v$, which the proposed model does not need, so we discard it; that is, for each landmark we assemble the tuple $p = (x, y, z)$. Next, we append these tuples to a time series; the resulting time series of spatial coordinates of body landmarks serves as the patterns for the proposed NN model.

We detail the entire process in Algorithm 1 and Figure 3, which explains the use of the keypoints computed by the Mediapipe model.

Algorithm 1: Procedure for computing the coordinates of landmarks using the MediaPipe model.

Figure 3.

Tracking the spatial position of keypoints leads to a pattern related to the word signaled.
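The extraction procedure described above (keep x, y, z, drop visibility, append one vector per frame) can be sketched in plain Python. In the legacy `mp.solutions.holistic` API, for example, `results.pose_landmarks`, `results.face_landmarks`, `results.left_hand_landmarks`, and `results.right_hand_landmarks` are `None` when a part is not detected and otherwise expose a `.landmark` list; the sketch does not call MediaPipe itself, so a small `Landmark` dataclass stands in for those objects, and zero-filling undetected parts is our assumption. With this layout each frame yields 543 × 3 = 1629 values; keeping the visibility score of the 33 pose landmarks as well gives 33 × 4 + 468 × 3 + 2 × 21 × 3 = 1662, the per-frame count reported in Section 4.1.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple


@dataclass
class Landmark:
    """Stand-in for a MediaPipe landmark: normalized x, y, z plus a visibility score."""
    x: float
    y: float
    z: float
    visibility: float = 0.0


# Landmark counts of MediaPipe Holistic: pose, face mesh, and each hand.
N_POSE, N_FACE, N_HAND = 33, 468, 21

Parts = Tuple[Optional[Sequence[Landmark]], ...]


def flatten_xyz(landmarks: Optional[Sequence[Landmark]], count: int) -> List[float]:
    """Keep (x, y, z) for each landmark and discard visibility.

    A body part that was not detected (None) is zero-filled so that every
    frame vector has the same length (an assumption, not stated in the text).
    """
    if landmarks is None:
        return [0.0] * (3 * count)
    return [value for lm in landmarks for value in (lm.x, lm.y, lm.z)]


def frame_vector(pose, face, left_hand, right_hand) -> List[float]:
    """Concatenate the coordinates of all body parts for one frame f_t."""
    return (flatten_xyz(pose, N_POSE) + flatten_xyz(face, N_FACE)
            + flatten_xyz(left_hand, N_HAND) + flatten_xyz(right_hand, N_HAND))


def video_time_series(frames: Sequence[Parts]) -> List[List[float]]:
    """Append one frame vector per frame to build the time series of a clip."""
    return [frame_vector(*parts) for parts in frames]


if __name__ == "__main__":
    hand = [Landmark(0.1, 0.2, 0.3, visibility=0.9)] * N_HAND
    series = video_time_series([(None, None, hand, None)] * 30)
    print(len(series), len(series[0]))  # 30 1629
```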

3.2. Proposed Neural Network Structures

The next building block of the proposed system is the RNN. Since several RNN types have proven effective in this kind of application, we designed variations of the proposed system using the following types of RNN: the LSTM, the Bidirectional LSTM, and the Gated Recurrent Unit (GRU).

We implemented four configurations: two based on LSTM, one based on Bidirectional LSTM, and one based on the GRU. Table 1 details the layered architecture for each case.

Table 1.

Model architecture for each of the proposed models.

We summarize the main hyperparameters of the proposed structures in two sets. The first, in Table 2, contains the hyperparameters common to all the models we explored, such as the learning rate, the number of batches, and other relevant hyperparameters. The second, in Table 3, summarizes the hyperparameters specific to each model, for example, the loss function. Given the nature of the model training, we use categorical cross-entropy and the mean squared error (MSE) as loss functions, defined in Equations (1) and (2), respectively. Finally, we use the adaptive moment estimation (Adam) optimizer defined in Equation (3).

Table 2.

Hyperparameters common to the proposed NN models.

Table 3.

Specific hyperparameters for each of the proposed models.

$$CCE = -\sum_{i=1}^{C} y_i \log(\hat{y}_i) \tag{1}$$

$$MSE = \frac{1}{N}\sum_{i=1}^{N} \left(y_i - \hat{y}_i\right)^2 \tag{2}$$

$$\begin{aligned}
m_n &= \beta_1 m_{n-1} + (1 - \beta_1)\, g_n \\
v_n &= \beta_2 v_{n-1} + (1 - \beta_2)\, g_n^2 \\
\hat{m}_n &= \frac{m_n}{1 - \beta_1^{\,n}}, \qquad \hat{v}_n = \frac{v_n}{1 - \beta_2^{\,n}} \\
\theta_n &= \theta_{n-1} - \eta\, \frac{\hat{m}_n}{\sqrt{\hat{v}_n} + \epsilon}
\end{aligned} \tag{3}$$

where:

- $CCE$: Categorical cross-entropy.

- $C$: Number of classes.

- $y_i$ (Equation (1)): Ground truth value (0 or 1) for the i-th class.

- $\hat{y}_i$ (Equation (1)): Predicted value for the i-th class.

- $MSE$: Mean squared error.

- $N$: Total number of observations.

- $y_i$ (Equation (2)): True value of the i-th observation.

- $\hat{y}_i$ (Equation (2)): Estimated value for the i-th observation.

- $\theta_n$: Parameter being optimized at time step n.

- $\eta$: Learning rate.

- $\sqrt{\hat{v}_n}$: Estimated magnitude of the gradient.

- $\epsilon$: A small value to avoid division by zero.

- $m_n$: First-order moment (momentum) at time step n.

- $\beta_1$: Hyperparameter between 0 and 1 that controls the exponential decay rate of the first-order moment.

- $g_n$: Gradient calculated at time step n.

- $v_n$: Second-order moment at time step n.

- $\beta_2$: Exponential decay rate of the second-order moment.

- $g_n^2$: Squared gradient at time step n.

- $\hat{m}_n$, $\hat{v}_n$: Bias-corrected first- and second-order moments.
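Equations (1) to (3) can be checked numerically with a dependency-free sketch. The default Adam values below (learning rate 0.001, β1 = 0.9, β2 = 0.999, ε = 1e-7) are the Keras defaults and are used only as an assumption, since the values in Tables 2 and 3 are not reproduced here; clipping predictions away from zero in the cross-entropy is an added safeguard against log(0).

```python
import math
from typing import List, Sequence, Tuple


def categorical_cross_entropy(y_true: Sequence[float], y_pred: Sequence[float],
                              eps: float = 1e-12) -> float:
    """Equation (1): -sum_i y_i * log(y_hat_i); predictions are clipped to avoid log(0)."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(y_true, y_pred))


def mean_squared_error(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Equation (2): average of the squared errors over the N observations."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def adam_step(theta: List[float], grad: List[float], m: List[float], v: List[float],
              n: int, lr: float = 0.001, beta1: float = 0.9, beta2: float = 0.999,
              eps: float = 1e-7) -> Tuple[List[float], List[float], List[float]]:
    """One Adam update, Equation (3); n is the 1-based time step."""
    m = [beta1 * mi + (1 - beta1) * g for mi, g in zip(m, grad)]      # first moment
    v = [beta2 * vi + (1 - beta2) * g * g for vi, g in zip(v, grad)]  # second moment
    m_hat = [mi / (1 - beta1 ** n) for mi in m]                       # bias correction
    v_hat = [vi / (1 - beta2 ** n) for vi in v]
    theta = [t - lr * mh / (math.sqrt(vh) + eps)
             for t, mh, vh in zip(theta, m_hat, v_hat)]
    return theta, m, v


if __name__ == "__main__":
    print(categorical_cross_entropy([0, 1, 0], [0.2, 0.7, 0.1]))  # equals -log(0.7)
    # Minimize f(theta) = theta^2, whose gradient is 2 * theta.
    theta, m, v = [1.0], [0.0], [0.0]
    for n in range(1, 201):
        theta, m, v = adam_step(theta, [2 * theta[0]], m, v, n, lr=0.05)
    print(theta)
```

Note that on the first step the ratio of bias-corrected moments equals g/|g|, so each parameter moves by roughly the learning rate regardless of the gradient's scale; this is the normalizing effect of the $\sqrt{\hat{v}_n}$ term.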

In this study, we call the structures in Table 1 the "base models", which we used as a reference for exploration. This exploration involved evaluating the performance of the proposed system for different numbers of neurons in the layers of each model; we discuss the details in the next section, where we explain the experiments we conducted.

4. Materials and Methods

As discussed in the previous section, the core of the proposed system is an RNN; we built the network using Keras 2.15.0 and TensorFlow 2.15.0. We also used MediaPipe version 0.10.8 together with the OpenCV library (version 4.9.0.80) to compute the spatial coordinates of the hands and facial expressions. The computer used to create the proposed system was a MacBook Air with a 1270 MHz Apple M1 processor, 8 GB of RAM, 500 GB of storage, and the macOS Sonoma operating system.

4.1. Dataset Characteristics

We created a custom dataset to train the neural networks. We used the OpenCV library (version 4.9.0.80) to process the videos and the MediaPipe model to compute the keypoints of the body parts involved in making MSL signs, namely the torso, the face, and the left and right hands, as shown in Figure 3. First, we recorded a set of videos with the following characteristics:

- A total of 600 videos were generated, 30 per word. Appendix A contains the complete list of words used to train the neural network.

- The frame rate of all videos is 30 frames per second.

- The size of each video is 720 × 720 pixels.

- A total of 1662 keypoint values were extracted for each frame.

- The system learned to identify a total of 20 words.

- A total of 18,000 frames were obtained, considering 30 frames per video.

Second, we calculated the keypoints of body parts from those videos; then, we saved the related coordinates of the body parts over time and used those coordinates for training.
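The dataset figures above fit together as a three-dimensional training tensor: 20 words × 30 clips = 600 sequences, each of 30 frames with 1662 values, that is, an input of shape (600, 30, 1662) and 18,000 frames in total. The sketch below shows one way to pair each stored sequence with the one-hot label that categorical cross-entropy expects; the dictionary layout and the alphabetical label order are hypothetical, and a real pipeline would typically convert `X` and `y` to arrays before training.

```python
from typing import Dict, List, Sequence, Tuple

N_WORDS = 20          # vocabulary size (Appendix A)
CLIPS_PER_WORD = 30   # recorded clips per word
FRAMES_PER_CLIP = 30  # frames per clip (30 fps)
FEATURES = 1662       # keypoint values per frame (Section 4.1)

Clip = List[Sequence[float]]


def one_hot(index: int, n_classes: int) -> List[int]:
    """Target vector with a single 1, as expected by categorical cross-entropy."""
    vec = [0] * n_classes
    vec[index] = 1
    return vec


def build_dataset(samples: Dict[str, List[Clip]]) -> Tuple[List[Clip], List[List[int]], List[str]]:
    """Turn {word: [clip, ...]} into parallel lists of sequences X and labels y."""
    words = sorted(samples)  # hypothetical choice: alphabetical label order
    X: List[Clip] = []
    y: List[List[int]] = []
    for label, word in enumerate(words):
        for clip in samples[word]:
            if len(clip) != FRAMES_PER_CLIP:
                raise ValueError(f"clip for {word!r} has {len(clip)} frames")
            X.append(clip)
            y.append(one_hot(label, len(words)))
    return X, y, words


if __name__ == "__main__":
    total_clips = N_WORDS * CLIPS_PER_WORD        # 600 clips
    total_frames = total_clips * FRAMES_PER_CLIP  # 18,000 frames
    print(total_clips, total_frames, (total_clips, FRAMES_PER_CLIP, FEATURES))  # 600 18000 (600, 30, 1662)
```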

4.2. Experimental Setup

We conducted two experiments. The first was tailored to explore different configurations of the base models to identify the best setup for each model; to achieve this goal, we trained the base models with different layer sizes and evaluated the resulting trained models in an offline scenario. The layer sizes we evaluated are listed in Table 4, which should be read together with Table 1. We also varied the fraction of neurons turned off during training (the Dropout rate) to assess its influence on accuracy on the test data; we tested Dropout rates of 0.3, 0.4, 0.5, and 0.6.

Table 4.

Layer sizes for each model listed in Table 1. The rows in each column specify the number of neurons in each layer of the corresponding model.
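To make the Dropout rates concrete: during training, a Dropout layer sets each unit's output to zero with probability equal to the rate, and Keras's `Dropout` layer scales the remaining outputs by 1 / (1 - rate) so that the expected activation is unchanged. The sketch below reproduces that behavior in plain Python as an illustration, not as the layer's actual implementation.

```python
import random
from typing import List, Optional


def inverted_dropout(activations: List[float], rate: float, training: bool = True,
                     rng: Optional[random.Random] = None) -> List[float]:
    """Zero each activation with probability `rate` and rescale the survivors.

    Dividing the kept values by (1 - rate) preserves the expected activation,
    so at inference time (training=False) the layer simply passes inputs through.
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training:
        return list(activations)
    rng = rng or random.Random()
    keep = 1.0 - rate
    return [a / keep if rng.random() >= rate else 0.0 for a in activations]


if __name__ == "__main__":
    rng = random.Random(0)
    for rate in (0.3, 0.4, 0.5, 0.6):  # rates explored in the first experiment
        out = inverted_dropout([1.0] * 10_000, rate, rng=rng)
        dropped = sum(v == 0.0 for v in out) / len(out)
        print(f"rate={rate}: dropped {dropped:.3f}, mean {sum(out) / len(out):.3f}")
```

Higher rates remove more units per step, which regularizes more strongly but also discards more of the information available to the recurrent layers during training.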

The second experiment was designed to evaluate the performance of the tuned models in an online scenario; for this purpose, we used a web camera to capture live video, fed it into the network, and compared the words the system predicted with the ground truth.

4.3. Performance Assessment

We assessed the performance using accuracy, precision, sensitivity (recall), specificity, and F1 score, since these metrics are typical for evaluating neural network-based systems.
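These metrics can be computed per class in a one-vs-rest fashion from the true and predicted words. The sketch below is an illustration; the text does not state how per-class values are combined, so macro-averaging (an unweighted mean over classes) is an assumption.

```python
from typing import Dict, Sequence


def per_class_metrics(y_true: Sequence[str], y_pred: Sequence[str]) -> Dict[str, Dict[str, float]]:
    """One-vs-rest precision, recall (sensitivity), specificity, and F1 per class."""
    pairs = list(zip(y_true, y_pred))
    report: Dict[str, Dict[str, float]] = {}
    for c in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == c and p == c for t, p in pairs)
        fp = sum(t != c and p == c for t, p in pairs)
        fn = sum(t == c and p != c for t, p in pairs)
        tn = len(pairs) - tp - fp - fn
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        specificity = tn / (tn + fp) if tn + fp else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        report[c] = {"precision": precision, "recall": recall,
                     "specificity": specificity, "f1": f1}
    return report


def accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Fraction of samples whose predicted word matches the ground truth."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_average(report: Dict[str, Dict[str, float]], metric: str) -> float:
    """Unweighted mean of one metric over all classes (assumed averaging scheme)."""
    return sum(r[metric] for r in report.values()) / len(report)


if __name__ == "__main__":
    truth = ["yes", "yes", "eat", "eat"]
    preds = ["yes", "eat", "eat", "eat"]
    print(accuracy(truth, preds))  # 0.75
    print(per_class_metrics(truth, preds)["yes"])
```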

We consider it necessary to evaluate the proposed system in an online scenario by counting the errors it makes while the signer is performing a sign, because each error produces a nonsensical word on screen, which is likely to confuse users. To the best of our knowledge, no existing metric captures this behavior, so we propose one, which we call the "Average error in live video during translation" (AELVT); it measures the average number of times the system mistranslates a sign before it translates that sign correctly. We define the AELVT as follows:

$$AELVT = \frac{1}{W}\sum_{i=1}^{W} e_i$$

where:

- $AELVT$: Average error in live video translations.

- $W$: Number of words that the system can translate.

- $e_i$: Number of bad translations of the i-th word before reaching the correct one.

Next, we calculate the Average Error in the entire Live Video using the following formula:

The above formula measures the AELVT for an entire class.
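Under the definition above, AELVT is the mean, over the W words tested live, of e_i, the number of wrong outputs the system emits before it first outputs the correct word i. A minimal sketch follows; the log format (one list of emitted words per target word) is hypothetical, and counting every output as an error when the correct word never appears is our assumption, since the text does not cover that case.

```python
from typing import Dict, List


def errors_before_correct(predictions: List[str], target: str) -> int:
    """e_i: number of wrong words emitted before the first correct one.

    If the target never appears, every emitted word counts as an error
    (a hypothetical convention; the text does not cover this case).
    """
    for count, word in enumerate(predictions):
        if word == target:
            return count
    return len(predictions)


def aelvt(live_log: Dict[str, List[str]]) -> float:
    """Mean of e_i over the W words in the live test."""
    errors = [errors_before_correct(preds, word) for word, preds in live_log.items()]
    return sum(errors) / len(errors)


if __name__ == "__main__":
    log = {
        "yes": ["eat", "yes"],               # one error before the correct word
        "eat": ["eat"],                      # correct immediately
        "hello": ["yes", "eat", "hello"],    # two errors before the correct word
    }
    print(aelvt(log))  # 1.0
```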

5. Results and Discussion

We conducted the first experiment described in Section 4. This offline setup consisted of training the base models with different layer sizes and comparing the resulting models to determine the best-performing one based on its predictions on test data. We found that the metrics of Model 2 were too low, so we discarded it, whereas Models 3 and 4 already performed well, so we considered no further exploration of them necessary.

On the basis of these criteria, we trained the different setups listed in Table 4 and found that Model 1-A outperformed Models 1-B and 1-C; consequently, we discarded Models 1-B and 1-C, and in the remainder of this paper, we refer to Model 1-A as "Model 1".

We explored the influence of adding a Dropout stage, considering Dropout rates of 30%, 40%, 50%, and 60%. Table 5 shows the results for the model we identified as the highest-performing one. We found that adding a Dropout layer does not improve the performance of the proposed models; moreover, the resulting models perform worse than their counterparts without a Dropout stage. The corresponding results for the other models we explored are similar and are reported in Appendix B.

Table 5.

Example of the influence of varying the Dropout rate. This example illustrates the metrics for Model 1-A.

Next, we evaluated the metrics discussed in Section 4.3 and present them in Table 6. The tuned Model 1 achieved an accuracy of 0.93, indicating that it correctly predicted 93% of the data during the evaluation; it also achieved a precision of 0.95 (95%) and an F1 score of 0.93 (93%), and the remaining metrics are reported in Table 6. Model 4 apparently achieved a perfect score; however, we emphasize that this score was achieved in an offline scenario, and its performance in the online test tells a different story, as this model triggers many false positives before making its "final prediction".

Table 6.

Comparison of metrics for all the proposed models.

We can compare our system to the one proposed by Natarajan [14], which reached an accuracy of 95%, whereas our system achieved an accuracy of 93% in the tests we carried out. The work of Garcia [12] exhibited an accuracy of 93.46%, a result similar to that obtained by our system.

Next, we conducted an online test to evaluate the system's accuracy when processing live video, using the setup illustrated in Figure 1. In this case, we measured how well the proposed system performs through the AELVT metric defined in Section 4.3; the results are reported in Table 6.

We prioritized the model with the lowest AELVT, since mistranslations during live signing might confuse the user of the system. Under this criterion, we consider Model 1 the best approach among the proposed models.

Among the approaches discussed earlier, the method proposed by Awata et al. [16] achieved a recognition rate of 33.8%, whereas our system reached an AELVT error of 11%. The proposed system therefore performs better.

Figure 4 illustrates examples of the online tests, showing the system recognizing several words.

Figure 4.

Examples of online operation using live video feeds for different words.

6. Conclusions

In this paper, we proposed an MSL translator based on an RNN. We explored four main structures: two models built with LSTM networks, one model built with a Bidirectional LSTM, and one model built with a GRU. We used the spatial positions of the body parts involved in signing to predict words, and we built a custom dataset to evaluate the performance of the four models.
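The sketch below illustrates how per-frame spatial positions can be turned into the fixed-length sequences that an RNN consumes. It flattens (x, y, z) landmarks for the face and both hands into one vector per frame, zero-fills a hand that is not detected, and pads or truncates each clip to a fixed number of frames. The landmark counts (21 per hand, as in MediaPipe's hand model, plus a hypothetical 10-point facial subset) and the sequence length of 30 frames are assumptions chosen for illustration. They are not the authors' configuration, and the helper names are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]

# Assumed configuration, for illustration only (not the authors' settings).
HAND_POINTS = 21  # MediaPipe's hand model reports 21 landmarks per hand
FACE_POINTS = 10  # hypothetical subset of facial landmarks
SEQ_LEN = 30      # hypothetical number of frames fed to the RNN
FRAME_WIDTH = 3 * (FACE_POINTS + 2 * HAND_POINTS)


def flatten(points: Optional[Sequence[Point]], expected: int) -> List[float]:
    """Flatten (x, y, z) points; zero-fill when the body part is not detected."""
    if points is None:
        return [0.0] * (3 * expected)
    if len(points) != expected:
        raise ValueError(f"expected {expected} points, got {len(points)}")
    return [coord for point in points for coord in point]


def frame_vector(face: Optional[Sequence[Point]],
                 left_hand: Optional[Sequence[Point]],
                 right_hand: Optional[Sequence[Point]]) -> List[float]:
    """Concatenate face and hand coordinates into one per-frame feature vector."""
    return (flatten(face, FACE_POINTS)
            + flatten(left_hand, HAND_POINTS)
            + flatten(right_hand, HAND_POINTS))


def to_sequence(frames: List[List[float]]) -> List[List[float]]:
    """Truncate or zero-pad a clip to exactly SEQ_LEN frames of FRAME_WIDTH values."""
    clip = [list(f) for f in frames[:SEQ_LEN]]
    clip += [[0.0] * FRAME_WIDTH for _ in range(SEQ_LEN - len(clip))]
    return clip


# Usage with synthetic coordinates; the left hand is missing in this frame.
face = [(0.5, 0.4, 0.0)] * FACE_POINTS
right = [(0.6, 0.7, -0.1)] * HAND_POINTS
frame = frame_vector(face, None, right)
clip = to_sequence([frame] * 12)
print(len(frame), len(clip))  # prints: 156 30
```

Zero-filling keeps every frame the same width, so a recurrent layer can process clips in which a hand leaves the camera view.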

The experiments show that Model 1 achieved the highest performance among the proposed models. In offline scenarios, it performs almost on par with similar approaches previously proposed by other authors.

In the online scenario, the system achieved higher performance for MSL translation. Its AELVT error is lower than the corresponding error levels achieved by similar approaches (Table 7), a reduction of 67.47%.
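The reduction quoted above is relative: (baseline error - our error) / baseline error. The minimal sketch below shows that arithmetic. It assumes that the baseline error level in Table 7 is the 33.8% figure attributed to Awata et al. [16], and it uses the rounded 11% AELVT reported earlier. Under those assumptions, the result is about 67.46%, close to the reported 67.47%. The helper name `relative_reduction` is hypothetical.

```python
def relative_reduction(baseline_error: float, new_error: float) -> float:
    """Percentage by which new_error improves on baseline_error (both in %)."""
    if baseline_error <= 0:
        raise ValueError("baseline_error must be positive")
    return 100.0 * (baseline_error - new_error) / baseline_error


# Assumed inputs: a 33.8% baseline error level and an 11% AELVT for the proposed system.
print(round(relative_reduction(33.8, 11.0), 2))  # 67.46
```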

Table 7.

Average error in live video translation.

We observed that the bidirectional model failed to distinguish words whose signs look alike and share the same spatial positions; for example, it confuses the words “yes” and “eat”. The GRU-based model performs similarly to Model 1, but it triggers false positives at the early stages of signing a word. It needs to collect several coordinates before it can properly predict the corresponding word.
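Early false positives of this kind can be mitigated by post-processing the classifier's per-window outputs. The following hypothetical sketch shows one such strategy, which is not the method used in this work. A word is emitted only after it has been the top prediction, above a probability threshold, for several consecutive windows. The class name `StablePredictionFilter`, the threshold of 0.8, and the patience of 3 windows are illustrative assumptions.

```python
from typing import Dict, List, Optional


class StablePredictionFilter:
    """Hypothetical post-processing filter (not part of the proposed system).

    A word is emitted only after the same label is the top prediction, with
    probability >= threshold, for `patience` consecutive windows.
    """

    def __init__(self, threshold: float = 0.8, patience: int = 3) -> None:
        self.threshold = threshold
        self.patience = patience
        self._candidate: Optional[str] = None
        self._streak = 0
        self._last_emitted: Optional[str] = None

    def update(self, probs: Dict[str, float]) -> Optional[str]:
        """Feed one window's class probabilities; return a word once it is stable."""
        label, p = max(probs.items(), key=lambda item: item[1])
        if p < self.threshold:
            # Low confidence (e.g., mid-sign): reset, and allow the next word
            # to repeat the previously emitted one.
            self._candidate, self._streak, self._last_emitted = None, 0, None
            return None
        if label == self._candidate:
            self._streak += 1
        else:
            self._candidate, self._streak = label, 1
        if self._streak >= self.patience and label != self._last_emitted:
            self._last_emitted = label  # do not re-emit while the sign is held
            return label
        return None


# Usage: an early, transient "si" guess is suppressed; "comer" is emitted once stable.
stream: List[Dict[str, float]] = [
    {"si": 0.85, "comer": 0.15},
    {"si": 0.30, "comer": 0.70},
    {"si": 0.10, "comer": 0.90},
    {"si": 0.05, "comer": 0.95},
    {"si": 0.05, "comer": 0.95},
]
f = StablePredictionFilter()
print([f.update(p) for p in stream])  # [None, None, None, None, 'comer']
```

The trade-off is latency: a larger patience suppresses more early errors but delays every emitted word by that many windows.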

The main limitation of the system is that it can only translate the MSL signs included in the training dataset, which restricts its widespread application.

In future work, we plan to train the network on more words, especially those essential for basic communication (asking for help, directions, etc.), to verify whether the system scales. Another important research direction is reducing the errors that occur before the signing of a word is complete. A further extension is to translate signs into audio as well as text, which would make interactions between hearing and deaf people simpler and more natural.

These results demonstrate the potential of the proposed models in scenarios such as teaching, learning, business transactions, and daily communication between deaf and non-deaf people.

Author Contributions

Conceptualization, E.A.B.-G. and N.M.-R.; methodology, E.A.B.-G.; software, N.M.-R.; validation, M.G.-L., J.R.G.-M. and M.N.-M.; formal analysis, H.P.-M.; investigation, M.G.-L.; resources, J.R.G.-M.; data curation, M.N.-M.; writing—original draft preparation, E.A.B.-G.; writing—review and editing, M.G.-L. and J.R.G.-M.; visualization, J.R.G.-M.; supervision, M.N.-M.; project administration, M.G.-L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Acknowledgments

The authors thank the Universidad Veracruzana for its support of this work, and Erik A. Borges-Galindo thanks Conahcyt for its support.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| LSTM | Long Short-Term Memory |
| CNN | Convolutional Neural Network |
| ASL | American Sign Language |
| MSL | Mexican Sign Language |
| CSL | Colombian Sign Language |
| ISL | Indian Sign Language |
| JSL | Japanese Sign Language |
| GAN | Generative Adversarial Network |

Appendix A. List of Words in the Dataset

| Word (Spanish) | Translation to English |
|---|---|
| Hola | Hello |
| Perdón | Sorry |
| De nada | You’re welcome |
| Sí | Yes |
| No | No |
| Gracias | Thank you |
| Bien | Good |
| Mal | Bad |
| Educación | Education |
| Lápiz | Pencil |
| Casa | House |
| Mesa | Table |
| Hoy | Today |
| Mamá | Mom |
| Papá | Dad |
| Por favor | Please |
| Ayudar | Help |
| Comer | To eat |
| Tener | To have |
| Tolerar | To tolerate |

Appendix B. Influence of Dropout Rate on the Metrics Achieved by the Proposed Models

Table A1.

Influence of the Dropout rate on the metrics achieved by Model 1-B.


| Dropout rate | 0.3 | 0.4 | 0.5 | 0.6 |
|---|---|---|---|---|
| Precision per class | 0.46666 | 0.13333 | 0.63333 | 0.06666 |
| Recall per class | 0.46666 | 0.13333 | 0.63333 | 0.06666 |
| F1 score | 0.49238 | 0.03137 | 0.61666 | 0.00833 |
| Accuracy mean | 0.46666 | 0.13333 | 0.63333 | 0.06666 |
| Recall mean | 0.36111 | 0.0625 | 0.5 | 0.06666 |
| F1 score mean | 0.38280 | 0.01470 | 0.46875 | 0.00833 |
| Error rate | 0.53333 | 0.86666 | 0.36666 | 0.93333 |

Table A2.

Influence of the Dropout rate on the metrics achieved by Model 1-C.


| Dropout rate | 0.3 | 0.4 | 0.5 | 0.6 |
|---|---|---|---|---|
| Precision per class | 0.1 | 0.73333 | 0.13333 | 0.0 |
| Recall per class | 0.1 | 0.73333 | 0.13333 | 0.0 |
| F1 score | 0.01818 | 0.73333 | 0.03137 | 0.0 |
| Accuracy mean | 0.01 | 0.73333 | 0.13333 | 0.0 |
| Recall mean | 0.07692 | 0.63235 | 0.07142 | 0.0 |
| F1 score mean | 0.01398 | 0.62254 | 0.01680 | 0.0 |
| Error rate | 0.9 | 0.26666 | 0.86666 | 0.1 |

Table A3.

Influence of the Dropout rate on the metrics achieved by Model 2.


| Dropout rate | 0.3 | 0.4 | 0.5 | 0.6 |
|---|---|---|---|---|
| Precision per class | 0.16666 | 0.03333 | 0.1 | 0.66666 |
| Recall per class | 0.16666 | 0.03333 | 0.1 | 0.66666 |
| F1 score | 0.47619 | 0.00215 | 0.01818 | 0.59365 |
| Accuracy mean | 0.16666 | 0.03333 | 0.1 | 0.66666 |
| Recall mean | 0.0625 | 0.0625 | 0.0625 | 0.61764 |
| F1 score mean | 0.00403 | 0.01470 | 0.01136 | 0.52380 |
| Error rate | 0.83333 | 0.96666 | 0.9 | 0.33333 |

Table A4.

Influence of the Dropout rate on the metrics achieved by Model 3.


| Dropout rate | 0.3 | 0.4 | 0.5 | 0.6 |
|---|---|---|---|---|
| Precision per class | 0.5 | 0.53333 | 0.00833 | 0.1 |
| Recall per class | 0.5 | 0.53333 | 0.06666 | 0.1 |
| F1 score | 0.48185 | 0.45 | 0.28619 | 0.01818 |
| Accuracy mean | 0.5 | 0.53333 | 0.06666 | 0.1 |
| Recall mean | 0.48039 | 0.47777 | 0.06666 | 0.05882 |
| F1 score mean | 0.44084 | 0.39111 | 0.00833 | 0.01069 |
| Error rate | 0.5 | 0.46666 | 0.93333 | 0.9 |

Table A5.

Influence of the Dropout rate on the metrics achieved by Model 4.


| Dropout rate | 0.3 | 0.4 | 0.5 | 0.6 |
|---|---|---|---|---|
| Precision per class | 1 | 0.53333 | 0.9 | 0.73333 |
| Recall per class | 1 | 0.53333 | 0.9 | 0.73333 |
| F1 score | 1 | 0.46666 | 0.87142 | 0.69141 |
| Accuracy mean | 1 | 0.53333 | 0.9 | 0.73333 |
| Recall mean | 1 | 0.54166 | 0.92307 | 0.66666 |
| F1 score mean | 1 | 0.5 | 0.89010 | 0.64081 |
| Error rate | 0 | 0.46666 | 0.09999 | 0.26666 |

References

1. INEGI. Estadísticas a propósito del día internacional de las personas con discapacidad (Datos Nacionales). In Comunicación Social; Comunicado de Prensa Num. 713/21; INEGI: Aguascalientes, Mexico, 2021; pp. 1–5.

2. Chollet, F. Deep Learning with Python, 2nd ed.; Manning Publications Co.: Shelter Island, NY, USA, 2021; pp. 1–20.

3. Ma, Z.; Ma, J.; Liu, X.; Hou, F. Large Margin Training for Long Short-Term Memory Neural Networks in Neural Language Modeling. In Proceedings of the 2022 5th International Conference on Pattern Recognition and Artificial Intelligence (PRAI), Chengdu, China, 19 August 2022; Volume 5, pp. 673–677.

4. Dai, C.; Liu, X.; Lai, J. Human action recognition using two-stream attention based LSTM networks. Appl. Soft Comput. 2020, 86, 105820.

5. Agarwal, A.; Garg, S.; Bansal, P. A Deep Learning Framework for Visual to Caption Translation. In Proceedings of the 2021 3rd International Conference on Advances in Computing, Communication Control and Networking (ICAC3N), Greater Noida, India, 17 December 2021; Volume 3, pp. 304–307.

6. Vasani, N.; Autee, P.; Kalyani, S.; Karani, R. Generation of Indian sign language by sentence processing and generative adversarial networks. In Proceedings of the International Conference on Intelligent Sustainable Systems (ICISS), Thoothukudi, India, 5 December 2020; Volume 3, pp. 1250–1255.

7. Jayadeep, G.; Vishnupriya, N.V.; Venugopal, V.; Vishnu, S.; Geetha, M. Mudra: Convolutional Neural Network based Indian Sign Language Translator for Banks. In Proceedings of the 2020 4th International Conference on Intelligent Computing and Control Systems (ICICCS), Madurai, India, 13 May 2020; Volume 4, pp. 1–5.

8. Ru, T.S.; Sebastian, P. Real-Time American Sign Language (ASL) Interpretation. In Proceedings of the 2023 2nd International Conference on Vision Towards Emerging Trends in Communication and Networking Technologies (ViTECoN), Vellore, India, 5 May 2023; Volume 2, pp. 1–6.

9. Srinivasa, K.G.; Anupindi, S.; Sharath, R.; Chaitanya, S.K. Analysis of Facial Expressiveness Captured in Reaction to Videos. In Proceedings of the 2017 IEEE 7th International Advance Computing Conference (IACC), Hyderabad, India, 7 January 2017; Volume 7, pp. 664–670.

10. Rahman, A.I.; Akhand, Z.; Nahian, K.; Tasin, A.; Sarda, A.; Bhuiyan, S.; Rakib, M.; Ahmed Fahim, Z.; Kundu, I. Continuous Sign Language Interpretation to Text Using Deep Learning Models. In Proceedings of the 2022 25th International Conference on Computer and Information Technology (ICCIT), Cox’s Bazar, Bangladesh, 19 December 2022; Volume 25, pp. 745–750.

11. Cheng, S.; Huang, C.; Wang, Z.; Wang, J.; Zeng, Z.; Wang, F.; Ding, Q. Real-Time Vision-Based Chinese Sign Language Recognition with Pose Estimation and Attention Network. In Proceedings of the 2021 IEEE International Conference on Robotics and Biomimetics (ROBIO), Sanya, China, 9 December 2021; pp. 1210–1215.

12. García-Bautista, G.; Trujillo-Romero, F.; Caballero-Morales, S.O. Mexican Sign Language word recognition using RGB-D information. Rev. Electron. Comput. Inform. Biomed. Electron. 2021, 10, 1–23.

13. Ortiz-Farfán, N.; Camargo-Mendoza, J.E. Computational Model for Sign Language Recognition in a Colombian Context. Tech. Lógicas 2020, 23, 191–226.

14. Natarajan, B.; Rajalakshmi, E.; Elakkiya, R.; Kotecha, K.; Abraham, A.; Gabralla, L.A.; Subramaniyaswamy, V. Development of an End-to-End Deep Learning Framework for Sign Language Recognition, Translation, and Video Generation. IEEE Access 2022, 10, 104358–104374.

15. Wang, H.; Zhang, J.; Li, Y.; Wang, L. SignGest: Sign Language Recognition Using Acoustic Signals on Smartphones. In Proceedings of the IEEE 20th International Conference on Embedded and Ubiquitous Computing (EUC), Wuhan, China, 30 October 2022; Volume 20, pp. 60–65.

16. Awata, S.; Sako, S.; Kitamura, T. Japanese Sign Language Recognition Based on Three Elements of Sign Using Kinect v2 Sensor. Commun. Comput. Inf. Sci. 2017, 713, 95–102.

17. Yang, S.-H.; Gan, J.-Z. An interactive Taiwan sign language learning system based on depth and color images. In Proceedings of the 2015 IEEE International Conference on Consumer Electronics–Taiwan, Taipei, Taiwan, 14 June 2017; pp. 112–113.

18. Mejía-Peréz, K.; Córdova-Esparza, D.-M.; Terven, J.; Herrera-Navarro, A.-M.; García-Ramírez, T.; Ramírez-Pedraza, A. Automatic Recognition of Mexican Sign Language Using a Depth Camera and Recurrent Neural Networks. Appl. Sci. 2022, 12, 5523.

19. Sánchez-Vicinaiz, T.J.; Camacho-Pérez, E.; Castillo-Atoche, A.A.; Cruz-Fernandez, M.; García-Martínez, J.R.; Rodríguez-Reséndiz, J. MediaPipe Frame and Convolutional Neural Networks-Based Fingerspelling Detection in Mexican Sign Language Alphabet. Technologies 2024, 12, 124.

20. Rios-Figueroa, H.V.; Sánchez-García, A.J.; Sosa-Jiménez, C.O.; Solís-González-Cosío, A.L. Use of Spherical and Cartesian Features for Learning and Recognition of the Static Mexican Sign Language Alphabet. Mathematics 2022, 10, 2904.

21. Martínez-Sánchez, V.; Villalón-Turrubiates, I.; Cervantes-Álvarez, F.; Hernández-Mejía, C. Exploring a Novel Mexican Sign Language Lexicon Video Dataset. Multimodal Technol. Interact. 2023, 7, 83.

22. González-Rodríguez, J.-R.; Córdova-Esparza, D.-M.; Terven, J.; Romero-González, J.-A. Towards a Bidirectional Mexican Sign Language–Spanish Translation System: A Deep Learning Approach. Technologies 2024, 12, 7.

23. Miah, A.S.M.; Hasan, M.A.M.; Okuyama, Y.; Tomioka, Y.; Shin, J. Spatial–temporal attention with graph and general neural network-based sign language recognition. Pattern Anal. Appl. 2024, 27, 37.

24. Martínez-Guevara, N.; Rojano-Cáceres, J.R.; Curiel, A. Unsupervised extraction of phonetic units in sign language videos for natural language processing. Univ. Access Inf. Soc. 2023, 22, 1143–1151.

25. Gortarez-Pelayo, J.J.; Morfín-Chávez, R.F.; Lopez-Nava, I.H. DAKTILOS: An Interactive Platform for Teaching Mexican Sign Language (LSM). In Proceedings of the 15th International Conference on Ubiquitous Computing & Ambient Intelligence (UCAmI 2023), Riviera Maya, Mexico, 30 November 2023; Lecture Notes in Networks and Systems. Bravo, J., Urzáiz, G., Eds.; Springer: Cham, Switzerland, 2023; Volume 842.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).