Abstract

In this paper, we propose a Multi-objective Five-element Cycle Optimization algorithm based on Multi-strategy fusion (MOFECO-MS) to address the Bi-objective Traveling Thief Problem (BITTP), an extension of the Traveling Thief Problem that incorporates two conflicting objectives. The novelty of our approach lies in a new individual selection strategy coupled with an element update mechanism rooted in the Five-element Cycle Model. To balance global exploration and local exploitation, the algorithm divides the population into groups (cycles) and applies crossover operations both within and between these groups, while a mutation operator performs local search around the best individuals. This coordinated approach, together with carefully chosen parameter settings, enhances the search capability of the algorithm. Extensive experiments were conducted on nine BITTP instances, comparing MOFECO-MS against eight state-of-the-art multi-objective optimization algorithms. The results show that MOFECO-MS performs well on both the Hypervolume (HV) and Spacing (SP) indicators while maintaining a high level of Pure Diversity (PD). Overall, MOFECO-MS outperformed the other algorithms on most instances, demonstrating its effectiveness and robustness on this complex multi-objective optimization problem.

1. Introduction

Real-world optimization problems are inherently complex and often comprise multiple interdependent sub-problems. Traditionally, researchers address such problems by decomposing them into independent sub-problems and solving each separately, without considering their interactions. However, this approach overlooks the interdependencies among the sub-problems: solutions that are optimal for each sub-problem do not necessarily combine into an optimal solution for the entire problem [1].

To address this issue and better capture real-world complexity, the Traveling Thief Problem (TTP) was introduced as a benchmark problem [2]. TTP is a combinatorial optimization problem that integrates the Traveling Salesman Problem (TSP) and the Knapsack Problem (KP). Its distinguishing feature is that it models the interdependence between these two sub-problems, reflecting real-world scenarios in which decisions made in one part of a problem influence outcomes in another. By modeling such interactions, TTP serves as a valuable benchmark for researchers developing optimization algorithms that are more applicable to real-world situations.

In the context of TTP, two variants are commonly studied: the single-objective TTP and the bi-objective TTP (BITTP), which is the focus of this paper. The BITTP not only seeks to optimize the route and item selection to minimize travel time and maximize profit but also offers a framework for studying trade-offs between conflicting objectives, which is crucial for real-world decision-making processes.

Numerous scholars have explored diverse methodologies to tackle the single-objective TTP, leveraging a spectrum of approaches ranging from heuristic algorithms [3,4,5,6] to swarm intelligence optimization techniques [7,8,9,10].

In recent years, the Bi-objective Traveling Thief Problem (BITTP) has garnered significant attention, and various approaches have been developed to address its challenges. Its complexity arises from the interdependence between the TSP and KP components, and because its two objectives conflict, traditional single-objective methods are insufficient. To tackle these challenges, researchers have proposed multi-objective evolutionary algorithms, hybrid approaches incorporating dynamic programming, and specialized metaheuristics. These methods aim to optimize both the journey time and the total value of the collected items while accounting for the interrelated nature of the sub-problems.

For instance, several studies have focused on multi-objective evolutionary algorithms, such as NSGA-II and its variants, which demonstrate robust performance across various TTP instances [11]. Others have integrated dynamic programming within evolutionary frameworks, showing significant improvements in solution quality, particularly for large instances [12]. Additionally, adaptive and specialized algorithms tailored to the unique facets of TTP instances have been proposed [13], further enhancing the effectiveness of these methods. Metaheuristic approaches like Variable Neighbourhood Search [14] and weighted-sum methods [15] have also been explored, offering competitive results compared to traditional optimization techniques. Moreover, the application of Ant Colony Optimization (ACO) [16] and the Imperialist Competitive Algorithm (ICA) has shown promise in improving best-known solutions across different TTP instances [17].

The following Table 1 summarizes these recent advancements in solving the Bi-Objective TTP, categorizing the studies based on their research focus, methods, and key contributions.

Table 1.

Summary of Recent Studies on BITTP.

This paper introduces a novel heuristic multi-objective optimization algorithm for solving BITTP, building on the Five-element Cycle Optimization algorithm (FECO). FECO, grounded in the Five-element Cycle Model (FECM), has been applied effectively to combinatorial optimization problems [22]. Building upon this framework, we devise a new individual evaluation criterion and integrate it with FECO’s distinctive optimization mechanism to formulate a new individual update strategy. This strategy aims to balance global exploration and local exploitation, enhancing the search capability of the algorithm and yielding more effective solutions to BITTP.

The remaining sections of the paper are organized as follows: Section 2 provides a comprehensive explanation of BITTP and FECO. The methodology for solving BITTP using the proposed algorithm is delineated in Section 3. Section 4 introduces the experimental conditions, including the experimental operating environment, benchmark problems, and algorithm performance metrics. Section 5 details the algorithm’s parameter settings, experimental results, and analysis. Finally, Section 6 offers concluding remarks and outlines avenues for future research.

2. Preliminaries

2.1. Bi-Objective Traveling Thief Problem

The TTP is a combinatorial optimization problem that combines two classical problems, the TSP and the KP. The BITTP, as adopted in this paper following the definition from [23], is a variant of TTP with two objective functions. A thief travels through n cities, selectively collects items from a pool of m items and places them in a knapsack, and finally returns to the departure city. The departure city contains no items, while each of the other cities may contain one or more items. The goal is to find a route plan and an item plan that minimize the thief’s travel time while maximizing the total profit of the collected items. The two objectives are interdependent: selecting heavier items slows the thief down and therefore lengthens the travel time. The objective functions and constraints of BITTP are formulated below.

The route plan in the BITTP is represented by the permutation vector $\pi = (\pi_1, \pi_2, \ldots, \pi_n)$, which gives the sequence of cities visited by the thief. Here, $\pi_i$ represents the ith city visited by the thief, with i ranging from 1 to n.

The item plan is represented by the binary vector $z = (z_1, z_2, \ldots, z_m)$, where $z_j$ corresponds to the jth item: $z_j = 1$ signifies that the jth item is selected, while $z_j = 0$ indicates its exclusion. The index j ranges from 1 to m.

The speed of the thief decreases linearly as the cumulative weight of the selected items increases [21]. Hence, the velocity at which the thief departs from city $\pi_i$, the ith city on the route, can be expressed as follows:

$$v(\pi_i) = v_{\max} - \left(v_{\max} - v_{\min}\right)\frac{W(\pi_i)}{Q} \tag{1}$$

where $v_{\max}$ and $v_{\min}$ represent the thief’s speed when the knapsack is empty and when it is full, respectively; in this study, both are set to fixed constants. $W(\pi_i)$ denotes the total weight of the items carried as the thief departs from city $\pi_i$, while Q denotes the knapsack capacity:

$$W(\pi_i) = \sum_{k=1}^{i} \sum_{j=1}^{m} w_j\, a_{j,\pi_k} \tag{2}$$

where m is the number of items, $a_{j,\pi_k} = 1$ indicates that item j, located at city $\pi_k$, is stolen, and $a_{j,\pi_k} = 0$ if it has not been stolen. The weight of item j is denoted by $w_j$.

The distance matrix between the n cities is known and symmetric, i.e., $d_{ij} = d_{ji}$, where $d_{ij}$ is the distance between cities i and j. The travel time, which is the first objective function of BITTP, is computed as shown in (3):

$$f_1(\pi, z) = \sum_{i=1}^{n-1} \frac{d_{\pi_i \pi_{i+1}}}{v(\pi_i)} + \frac{d_{\pi_n \pi_1}}{v(\pi_n)} \tag{3}$$

The second objective function of the BITTP, representing the thief’s profit, is expressed by (4). So that both objectives are treated uniformly as minimization, profit maximization is transformed into minimization by negating the total profit:

$$f_2(z) = -\sum_{j=1}^{m} p_j z_j \tag{4}$$

where $p_j$ denotes the value of the jth item.

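Subject to:

$$\sum_{j=1}^{m} w_j z_j \le Q, \qquad z_j \in \{0, 1\},\ j = 1, \ldots, m, \qquad \pi \text{ is a permutation of the } n \text{ cities with } \pi_1 = 1.$$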
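To make (1) to (4) concrete, the following Python sketch evaluates a single BITTP solution. It is an illustrative reimplementation, not the authors’ code (the experiments in this paper used MATLAB). Cities are numbered from 0 with city 0 as the departure city; the function name `bittp_objectives` and the item-to-city list `item_city` are hypothetical, and the default speeds `v_max = 1.0` and `v_min = 0.1` are placeholder assumptions rather than values stated in this paper.

```python
def bittp_objectives(route, picked, item_city, weights, profits, dist, capacity,
                     v_max=1.0, v_min=0.1):
    """Evaluate (f1, f2) of one BITTP solution following Eqs. (1)-(4).

    route     -- permutation of city indices 0..n-1; route[0] is the departure city
    picked    -- 0/1 flag per item (the item plan z)
    item_city -- index of the city where each item is located (hypothetical layout)
    v_max, v_min -- assumed placeholder speeds for this sketch
    """
    n = len(route)
    if sum(w for w, z in zip(weights, picked) if z) > capacity:
        raise ValueError("item plan violates the knapsack capacity")
    # Weight picked up at each city under the current item plan.
    weight_at_city = [0.0] * n
    for j, z in enumerate(picked):
        if z:
            weight_at_city[item_city[j]] += weights[j]
    travel_time = 0.0
    carried = 0.0  # W(pi_i): total weight carried when leaving pi_i, Eq. (2)
    for i in range(n):
        city = route[i]
        carried += weight_at_city[city]
        speed = v_max - (v_max - v_min) * carried / capacity  # Eq. (1)
        next_city = route[(i + 1) % n]  # the last leg returns to route[0]
        travel_time += dist[city][next_city] / speed  # Eq. (3)
    profit = sum(p for p, z in zip(profits, picked) if z)
    return travel_time, -profit  # Eq. (4): negated profit, to be minimized
```

For a three-city example in which one item of weight 2 is picked at the second city and the capacity is 4, the speed drops from 1 to 0.55 for the remaining legs under these defaults, which is how the item plan feeds into the travel time.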

2.2. Five-Element Cycle Model

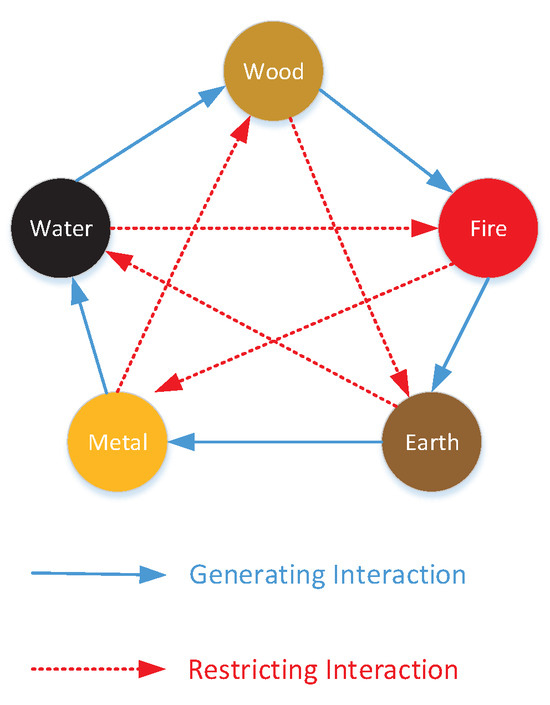

Chinese philosophers have long employed the five-element theory to explain the origin of all phenomena in the universe. The theory describes how metal, wood, water, fire, and earth are interconnected through generative and restrictive interactions. In a generative interaction, one element promotes the growth and strength of another: wood fuels fire, fire fosters earth, earth fosters metal, metal nurtures water, and water nourishes wood. In a restrictive interaction, one element curbs another: wood restrains earth, earth confines water, water quells fire, fire tempers metal, and metal restrains wood. Together, these interactions maintain a dynamic equilibrium in nature (as detailed in Reference [22]). This dynamic is depicted in Figure 1, where the outer blue arrows represent the generative cycle and the inner red arrows represent the restrictive cycle. Generative interactions can be likened to the relationship between parents and offspring, in which the former supports the latter, while restrictive interactions resemble the relationship between grandparents and grandchildren, in which the former restrains the latter’s development; together, they establish a harmonious equilibrium.

Figure 1.

The generating and restricting interaction among five elements.

The Five-element Cycle Model (FECM) is founded upon the principles of the Five-element theory [22]. Consider a dynamic system comprising L elements. At time k, the force exerted on element by other elements is denoted as . Each element’s mass is represented by . The FECM is delineated as follows:

where . When , is replaced by L; when , is replaced by L; when , is replaced by 1; when , is replaced by 1. , , , and are weight coefficients, typically set to 1.

2.3. Multi-Objective Five-Element Cycle Algorithm

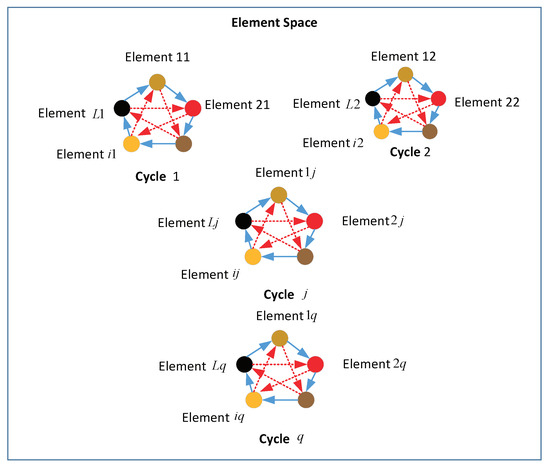

Based on the FECM, the Five-element Cycle Optimization algorithm (FECO) was introduced [22]. FECO is an iterative algorithm in which each element represents a feasible solution. The population is divided into q cycles, each comprising L elements, as depicted in Figure 2. Typically, the objective function value of an element is taken as its mass. In the FECM, larger forces correspond to smaller masses. Consequently, in optimization problems that minimize the objective function, elements with greater forces are regarded as better solutions: as forces increase, element masses decrease, and the elements gradually converge towards the optimal solution. Hence, the force of each element determines whether its solution is updated: elements with lower forces, and therefore larger masses, are updated to explore better solutions. The optimal solution is obtained through this iterative refinement.

Figure 2.

The structure of the element space.

The Multi-objective Five-element Cycle Optimization algorithm (MOFECO) extends FECO to Multi-objective Optimization Problems (MOPs) [24]. Because an MOP has multiple objective functions, each element is subject to multiple forces, one per objective. In [24], an element is retained in the iteration if at least two of its forces are positive; otherwise, it is updated. By contrast, in [25], an element is updated if the sum of its forces across all objective functions is negative.

3. Multi-Objective Five-Element Cycle Optimization Algorithm Based on Multi-Strategy Fusion for BITTP

3.1. Expression of Solution and Objective Function

The proposed Multi-objective Five-element Cycle Optimization algorithm based on Multi-strategy Fusion (MOFECO-MS) is used in this study to tackle the BITTP. When MOFECO-MS is applied to BITTP, each solution corresponds to an element of the FECM. The population of N individuals is divided into q cycles, each containing L elements, so that N = qL. The element $x_{i,j}(k)$ denotes the ith element in the jth cycle at the kth iteration and constitutes a feasible solution of BITTP. Its decision variables consist of two components, the route plan π and the item plan z, captured by the expression $x_{i,j}(k) = (\pi, z)$.

The route plan comprises n decision variables, one for each city:

$$\pi = (\pi_1, \pi_2, \ldots, \pi_n)$$

The item plan comprises m decision variables, one for each item:

$$z = (z_1, z_2, \ldots, z_m), \quad z_j \in \{0, 1\}$$

In MOFECO, each element has as many masses as there are objective functions. The rth mass of an element x is its rth objective function value:

$$M_r(x) = f_r(x)$$

For BITTP, r takes the values 1 and 2.

MOFECO-MS is designed to solve BITTP based on the correspondence summarized in Table 2, which relates the Five-element Cycle Model (FECM), the Multi-objective Five-element Cycle Optimization algorithm based on Multi-strategy Fusion (MOFECO-MS), and the Bi-objective Traveling Thief Problem (BITTP). In FECM, elements represent entities within the cycle, mass indicates their state, and force reflects the interactions that drive the evolution of the system.

Table 2.

Relationship among FECM, MOFECO-MS and BITTP.

In MOFECO-MS, these elements correspond to potential solutions within the algorithm’s population, where each element’s mass is linked to the objective function values in BITTP, indicating solution quality. The force applied to these elements represents the evolutionary pressure guiding the optimization process. The BITTP context translates these elements into concrete solutions (tour plans and picking plans), with their mass reflecting objective function values like travel time and total profit.

This table clarifies how MOFECO-MS leverages the principles of FECM, with each component in the optimization algorithm corresponding directly to the theoretical constructs in FECM, thereby providing a strong foundation for solving BITTP.

3.2. Initializing the Population

Since a BITTP solution comprises two distinct components, each component is constructed separately during population initialization. For the route plan, a random permutation of the n cities is generated, with the first position fixed to the starting city, denoted as city 1. The item plan is binary encoded: a value of 1 indicates that an item is selected, and 0 indicates that it is not. During initialization, all items are first assumed to be selected, and the plan is then adjusted by a repair operator. The repair operator enforces the knapsack capacity constraint by repeatedly removing a randomly chosen selected item until the total weight of the chosen items no longer exceeds the knapsack capacity. The pseudocode for the repair operator is presented in Algorithm 1.

| Algorithm 1 Repair operator |

|
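The initialization and repair steps described above can be sketched in Python as follows. This is a minimal sketch, not a transcription of Algorithm 1: it assumes cities are numbered from 0 (so the text’s city 1 becomes city 0), that all randomness comes from a `random.Random` instance, and the helper names are hypothetical.

```python
import random

def random_route(n, rng):
    """Random route over cities 0..n-1 with city 0 (the departure city) first."""
    rest = list(range(1, n))
    rng.shuffle(rest)
    return [0] + rest

def repair(picked, weights, capacity, rng):
    """Remove randomly chosen selected items until the knapsack constraint holds."""
    picked = list(picked)
    selected = [j for j, z in enumerate(picked) if z]
    total = sum(weights[j] for j in selected)
    while total > capacity:
        j = selected.pop(rng.randrange(len(selected)))  # a random selected item
        picked[j] = 0
        total -= weights[j]
    return picked

def init_element(n, weights, capacity, rng):
    """Build one element: a random route plus an all-items plan repaired to fit Q."""
    route = random_route(n, rng)
    picked = repair([1] * len(weights), weights, capacity, rng)
    return route, picked
```

Starting from the all-items plan and removing items at random keeps the initial item plans close to full capacity while still varying them across elements.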

After the route and item plans are established, the objective functions are computed as described in Section 2.1, and the objective function values of each element are assigned as its masses according to (10).

3.3. Force Calculation

Each element in the jth cycle is subject to as many forces from the other elements as there are objective functions. The rth force acting on an element is computed using (11).

As (11) shows, the force acting on an element is closely related to its mass: the smaller the mass of an element, the greater its force. Therefore, each element can be evaluated by its forces.

3.4. Elements Sorting Strategy

In each iteration, it is essential to rank the elements for evaluation. Subsequently, we design the update strategy based on this ranking. Following the evaluation, we retain the elite elements while discarding the non-elite ones, ultimately identifying the optimal solutions.

In MOFECO-MS, we employ two sorting methods for these scenarios. Firstly, we sort the elements within each cycle based on their forces when determining the update strategy. Secondly, we utilize fast non-dominated sorting [26] to rank the elements according to their dominance relationship after each evolutionary iteration.

Since this paper involves two objective functions, each element is subject to two distinct forces, one per objective. Consequently, unlike the single-objective case, the element with the greatest force cannot simply be deemed the best element in the cycle. Instead, for each objective function separately, we first sort the forces of the elements in the jth cycle in ascending order, which assigns each element one sequence number per objective. We then sum the two sequence numbers of each element and sort the elements by these sums; the element with the highest sum is the best-ranked element of cycle j. The best-ranked elements of all q cycles are then collected into a single set. Relative to an element of cycle j, this set contains two types of elements: the best element of the same cycle j, and the best elements of the other cycles. The complete sorting process is described in Algorithm 2.

| Algorithm 2 The sorting process of the elements in each cycle |

|
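The rank-sum ordering can be sketched as follows. The force values would come from (11), which is not reproduced here, so they are simply passed in; the function names `sort_cycle` and `cycle_bests` are hypothetical, and breaking ties by element index is an assumption the text does not specify.

```python
def sort_cycle(forces):
    """Return the element indices of one cycle ordered from worst to best.

    forces -- one tuple per element holding its force for each objective;
              a larger force is treated as better.
    """
    L = len(forces)
    rank_sum = [0] * L
    for r in range(len(forces[0])):
        # Ascending order: the smallest force gets sequence number 1.
        order = sorted(range(L), key=lambda i: forces[i][r])
        for seq, i in enumerate(order, start=1):
            rank_sum[i] += seq
    # Smallest rank sum first (worst), largest last (best); ties keep index order.
    return sorted(range(L), key=lambda i: rank_sum[i])

def cycle_bests(cycles):
    """Index of the best-ranked element in every cycle (forces given per cycle)."""
    return [sort_cycle(forces)[-1] for forces in cycles]
```

For forces `[(1.0, 5.0), (3.0, 4.0), (2.0, 1.0)]`, the rank sums are 4, 5, and 3, so the order from worst to best is `[2, 0, 1]`.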

Once the elements are sorted, the best element within each cycle can be identified from the force-based order. In addition, non-dominated sorting identifies the Pareto optimal solutions, i.e., the solutions with a non-dominated rank of 1 [26], which are taken as the current optimal solutions.
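For reference, the following is a compact Python version of the fast non-dominated sorting procedure of [26], applied to the minimization of (f1, f2). It is a generic sketch rather than the authors’ implementation; `fronts[0]` holds the rank-1 (Pareto optimal) solutions.

```python
def dominates(a, b):
    """True if objective vector a Pareto-dominates b under minimization."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

def fast_non_dominated_sort(objs):
    """Return the list of fronts (lists of indices); fronts[0] is rank 1."""
    n = len(objs)
    dominated_by = [[] for _ in range(n)]  # solutions that p dominates
    dom_count = [0] * n                     # number of solutions dominating p
    fronts = [[]]
    for p in range(n):
        for q in range(n):
            if dominates(objs[p], objs[q]):
                dominated_by[p].append(q)
            elif dominates(objs[q], objs[p]):
                dom_count[p] += 1
        if dom_count[p] == 0:
            fronts[0].append(p)
    i = 0
    while fronts[i]:
        nxt = []
        for p in fronts[i]:
            for q in dominated_by[p]:
                dom_count[q] -= 1
                if dom_count[q] == 0:  # all dominators lie in earlier fronts
                    nxt.append(q)
        i += 1
        fronts.append(nxt)
    return fronts[:-1]  # drop the trailing empty front
```

With objective vectors `[(1, 5), (2, 3), (3, 4), (4, 1), (5, 5)]`, the fronts are `[[0, 1, 3], [2], [4]]`.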

Hence, elements can be categorized into different types, as illustrated in Table 3.

Table 3.

Symbols and description of the elements.

3.5. Exploration Strategy

The exploration rate represents the likelihood of an intelligent optimization algorithm selecting new solutions for exploration. A higher exploration rate implies a greater inclination of the algorithm towards exploring uncharted solution spaces, thereby increasing the chances of discovering optimal solutions. MOFECO-MS employs the crossover operator to enhance the algorithm’s exploration capability. Since the decision variables of BITTP consist of two parts, the route plan and the item plan, these two components are separately updated during element updates.

For the route plan, the partially mapped crossover operator (PMX) [27] is employed because it helps maintain population diversity and reduces the risk of premature convergence to local optima. PMX exchanges a segment between two parent routes and uses the mapping defined by that segment to replace duplicated cities, so every offspring remains a valid permutation that inherits ordering information from both parents. This supports both the convergence and the exploration capabilities of the algorithm.
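A sketch of PMX on route plans, assuming 0-indexed cities and that both parents start with the departure city. The cut points are drawn from positions 1 to n so that position 0 never falls inside the exchanged segment; this detail is an assumption made for the illustration, not something the text specifies.

```python
import random

def pmx(p1, p2, a, b):
    """Partially mapped crossover: one child that keeps p1[a:b] in place."""
    n = len(p1)
    child = [None] * n
    child[a:b] = p1[a:b]
    # Map each gene of p1's segment to the gene of p2 at the same position.
    mapping = {p1[i]: p2[i] for i in range(a, b)}
    for i in list(range(a)) + list(range(b, n)):
        gene = p2[i]
        # Follow the mapping until the gene no longer clashes with the segment.
        while gene in mapping:
            gene = mapping[gene]
        child[i] = gene
    return child

def pmx_random(p1, p2, rng):
    """PMX with random cuts in 1..n; position 0 stays fixed if both parents share it."""
    a, b = sorted(rng.sample(range(1, len(p1) + 1), 2))
    return pmx(p1, p2, a, b)
```

For `p1 = [0, 1, 2, 3, 4, 5, 6]`, `p2 = [0, 4, 6, 2, 5, 1, 3]`, and the segment `[2, 5)`, city 4 from `p2` maps to 5, and city 3 maps to 2 and then to 6, giving the child `[0, 5, 2, 3, 4, 1, 6]`.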

As the item plan is binary encoded, the uniform crossover operator [28] is used. For each pair of parents, this operator independently exchanges the genes at each locus with the same crossover probability, generating two new individuals. Uniform crossover promotes population diversity and the recombination of genetic material, which can improve the search efficiency and convergence rate of the algorithm.
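A sketch of uniform crossover on binary item plans. The swap probability of 0.5 is an assumed default. Offspring can exceed the knapsack capacity; one option, which the text does not state explicitly, is to pass them through the repair operator of Algorithm 1.

```python
import random

def uniform_crossover(z1, z2, rng, swap_prob=0.5):
    """Uniform crossover on two binary item plans; returns two offspring."""
    c1, c2 = list(z1), list(z2)
    for j in range(len(c1)):
        # Each locus is exchanged independently with the same probability.
        if rng.random() < swap_prob:
            c1[j], c2[j] = c2[j], c1[j]
    return c1, c2
```

With `swap_prob=0.0`, the parents are returned unchanged, and with `swap_prob=1.0` they are swapped wholesale; intermediate values mix the two plans locus by locus.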

During the exploration phase, expanded and narrowed exploration strategies are employed to expedite and enhance the search process. Expanded exploration involves crossing element with the best element in each cycle, comprising and . On the other hand, narrowed exploration entails crossing with , with the frequency of these methods adjusted by probability . The pseudocode for the exploration phase is depicted in Algorithm 3. Notably, the probability of expanded exploration is , while the probability of narrowed exploration is .

| Algorithm 3 Exploration phase |

|

3.6. Exploitation Strategy

In intelligent optimization algorithms, the exploitation rate denotes the likelihood or frequency with which known high-quality solutions are used. A higher exploitation rate means a greater tendency to search around established good solutions in anticipation of further improvement. Exploitation is typically implemented by selecting the best solutions or by weighting selection according to solution quality. A higher exploitation rate speeds up convergence, but it also raises the risk of becoming trapped in local optima and overlooking the global optimum. Hence, selecting an appropriate exploitation strategy is crucial.

MOFECO-MS uses a mutation operator to enhance its exploitation capability. As with the crossover operator, mutation is applied separately to the route plan and the item plan. For the route plan, we employ the swap operator [29]; for the item plan, we introduce a new heuristic operator proposed in this paper.
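The swap operator for route plans can be sketched as follows. Keeping position 0 fixed so that the mutated route still starts at the departure city is an assumption made for this illustration.

```python
import random

def swap_mutation(route, rng):
    """Swap the cities at two distinct random positions, never moving position 0."""
    child = list(route)
    i, j = rng.sample(range(1, len(child)), 2)
    child[i], child[j] = child[j], child[i]
    return child
```

Because exactly two positions change, the mutated route stays close to its parent, which matches the local-search role of mutation in the exploitation phase.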

The heuristic operator first computes the remaining knapsack capacity. It then identifies, among the items not yet taken, those whose weights are lower than the remaining capacity, randomly selects one of these items, and places it into the knapsack to increase the profit. The pseudocode of the heuristic operator is given in Algorithm 4.

| Algorithm 4 Heuristic operator |

|
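The following is a minimal sketch of the heuristic operator as described in the text. It computes the remaining capacity, collects the untaken items whose weight is strictly lower than that capacity, and adds one of them at random. The function name is hypothetical, and returning the plan unchanged when no item qualifies is an assumption.

```python
import random

def heuristic_add(picked, weights, capacity, rng):
    """Add one random untaken item whose weight is lower than the remaining capacity."""
    picked = list(picked)
    remaining = capacity - sum(w for w, z in zip(weights, picked) if z)
    candidates = [j for j, z in enumerate(picked)
                  if not z and weights[j] < remaining]
    if candidates:  # if nothing fits, the item plan is returned unchanged
        picked[rng.choice(candidates)] = 1
    return picked
```

Since only items lighter than the remaining capacity are considered, the result always satisfies the knapsack constraint, so no repair step is needed after this operator.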

Indeed, the exploitation phase is also segregated into expanded and narrowed exploitation. Expanded exploitation involves mutating any of the current optimal elements , while narrowed exploitation entails mutating an element that is potentially a good solution in the exploitation phase, represented by . The frequency of expanded and narrowed exploitation is determined by the probability . The pseudocode outlining the exploitation phase is depicted in Algorithm 5. Notably, the probability of expanded exploitation is , while the probability of narrowed exploitation is .

| Algorithm 5 Exploitation phase |

|

3.7. Elite Retention Strategy

Elite retention is a commonly used technique for controlling the convergence speed of an algorithm. In the proposed algorithm, individuals with superior performance are preserved throughout the iterative process so that they are not eliminated, which accelerates convergence. After each iteration, the parent and offspring populations are merged and reorganized so that exceptional individuals survive, thereby realizing elite retention.

3.8. Update Strategy for the Element

The element update strategy significantly influences the algorithm’s performance. Therefore, the crucial issues we need to address revolve around which elements necessitate updating and how to enact those updates.

Because the elitist strategy preserves the entire parent population, every element can be updated without the risk of losing good solutions. This lets the algorithm explore as many new individuals as possible, which helps it approach the global optimum more quickly.

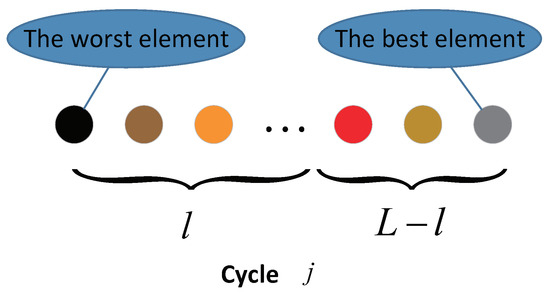

In the proposed algorithm, we first apply the element sorting strategy described above so that the elements within each cycle are arranged from worst to best, as illustrated in Figure 3. We then apply exploration to the first l elements and exploitation to the last L − l elements of each cycle. The pseudocode for this process is provided in Algorithm 6.

| Algorithm 6 The update process for elements |

|

Figure 3.

The L elements sorted from the worst to the best in the cycle j.

The first l elements of each cycle, which are the worst-ranked ones, are updated using the exploration strategy.

During the exploration phase, denotes the best element in the cycle, encompassing both within the same cycle j as , as well as , which represents the best element among the remaining cycles.

Conversely, the last L − l elements of each cycle are updated with the exploitation strategy, because after sorting they are deemed the better solutions. Thus, in the exploitation phase, comprises the optimal elements within cycle , , and the updated elements .
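The split of a sorted cycle into the two update groups can be illustrated with a small helper. It assumes the cycle has already been ordered from worst to best (for instance, by the rank-sum procedure of Algorithm 2), and the name `assign_strategies` is hypothetical. The choice between the expanded and narrowed variants within each group is not modeled here.

```python
def assign_strategies(order_worst_to_best, l):
    """Label each element of a sorted cycle: the first l explore, the last L - l exploit."""
    return {idx: ("explore" if pos < l else "exploit")
            for pos, idx in enumerate(order_worst_to_best)}
```

For a cycle ordered as `[2, 0, 1, 3]` with `l = 2`, elements 2 and 0 are explored, and elements 1 and 3 are exploited.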

To determine the most effective search method for updating elements, we devised four distinct combinations, denoted as SM1–SM4, outlined in Table 4.

Table 4.

Search methods in updating.

In Table 4, the variations among the three sets of methods, SM1, SM2, and SM3, lie in the selection of elements during narrowed exploitation. Specifically, they are , , and , respectively. The distinction between SM3 and SM4 pertains to the choice of for exploration during expanded exploration, which are and , respectively.

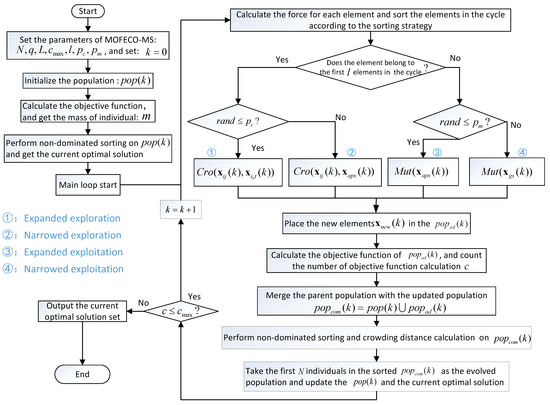

3.9. Flowchart of MOFECO-MS

The flowchart of the MOFECO-MS algorithm is presented in Figure 4. The parameter set in the initialization phase specifies the maximum number of objective function evaluations and serves as the termination criterion of the algorithm. As the flowchart shows, the operators used in the proposed algorithm are genetic operators, such as crossover and mutation.

Figure 4.

The flowchart of Multi-objective Five-element Cycle Optimization algorithm based on Multi-strategy Fusion (MOFECO-MS).

While MOFECO-MS shares several similarities with traditional genetic algorithms (GAs) in terms of using these genetic operators and following an iterative process, there are key differences that distinguish it. In MOFECO-MS, the population is organized into cycles (or rings) as defined by the Five-element Cycle Model, where each element corresponds to an individual in a GA. The entire set of cycles represents the population, and genetic operators are applied based on the sorting of elements within each cycle, rather than through the selection mechanisms typical in GAs. This cyclic ordering, driven by the Five-element Cycle, governs how elements are updated, ensuring that the process aligns with the model’s inherent dynamics. This approach not only maintains a balance between exploration and exploitation but also introduces a novel mechanism that differentiates MOFECO-MS from conventional GAs.

4. Experimental Setup

4.1. Operating Environment

In this study, we conducted a series of computational experiments to assess the effectiveness of the proposed algorithm. The experiments were implemented in MATLAB R2018a and executed on a system equipped with a 2.4 GHz Intel Xeon E5645 processor (Intel, Santa Clara, CA, USA) and 32 GB of RAM, running Windows 10.

4.2. BITTP Instances

The test instances used in this paper are drawn from the BITTP benchmark developed by Polyakovskiy et al. [23]. We selected nine instances, detailed in Table 5, that differ in their numbers of cities and items, so that the performance of the proposed algorithm is assessed across different problem scales and complexities.

Table 5.

The Bi-objective Traveling Thief Problem (BITTP) test instances.

Table 5 outlines the key characteristics of the selected instances. Each instance is defined by the number of cities (n), the number of items (m), and the knapsack capacity (Q). The knapsack component of each instance falls into one of three types according to the correlation between item profits and weights: bounded strongly correlated (bsc), uncorrelated with similar weights (usw), and uncorrelated (unc). The column labeled R gives the number of items in each city, excluding the departure city, which holds no items.

4.3. The Statistical Analysis Methods

The statistical analysis is based on the results of 15 independent runs of each algorithm. For each metric, we report the best value (Best), worst value (Worst), median (Median), mean (Average), and standard deviation (StdDev) over these 15 runs. Each statistical indicator is explained below:

- Best: This is the best value of the metric achieved by the algorithm across the 15 runs, i.e., the largest value for metrics where larger is better (HV and PD) and the smallest value for SP. It indicates the highest level of performance the algorithm can reach under the given experimental conditions. A good best value suggests that the algorithm is capable of finding very high-quality solutions, even if it does not do so consistently.

- Worst: This is the worst value observed among the 15 runs. It provides insight into the least favorable outcome the algorithm might produce. A better worst value is preferable, as it indicates that even in its least favorable run, the algorithm still performs relatively well.

- Median: This is the middle value of the results when they are sorted in ascending order. It indicates the typical performance of the algorithm and is less sensitive to outliers than the mean, which makes it particularly useful for understanding the central tendency of the algorithm’s performance.

- Mean (Average): This represents the mean performance across all 15 runs. It is calculated by summing all the performance values and dividing by the number of runs. The average provides a general idea of the algorithm’s overall performance.

- Standard Deviation (StdDev): This measures the amount of variation or dispersion in the performance results. A lower standard deviation is preferable as it indicates that the algorithm’s performance is more consistent across different runs. This means that the algorithm is reliably achieving similar results, which is a desirable characteristic in optimization algorithms.
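The five statistics can be computed per metric as sketched below. The `larger_is_better` flag reflects that Best and Worst depend on the metric’s direction (larger is better for HV and PD, smaller for SP). Using the sample standard deviation is an assumption, since the text does not say which estimator was used.

```python
import statistics

def summarize(values, larger_is_better=True):
    """Best/Worst/Median/Average/StdDev of one metric over independent runs."""
    best = max(values) if larger_is_better else min(values)
    worst = min(values) if larger_is_better else max(values)
    return {
        "Best": best,
        "Worst": worst,
        "Median": statistics.median(values),
        "Average": statistics.mean(values),
        "StdDev": statistics.stdev(values),  # sample standard deviation (n - 1)
    }
```

For SP values, one would call `summarize(sp_values, larger_is_better=False)` so that Best is the smallest spacing observed.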

Each of these statistical indicators provides a different perspective on the algorithm’s performance. By considering them together, we can gain a comprehensive understanding of the strengths and limitations of each algorithm under different conditions. The performance metrics are chosen to ensure that the analysis is thorough and that the best algorithm can be identified based on a balanced consideration of all relevant factors.

4.4. Performance Metrics

Multi-objective optimization (MOO) algorithms are designed to solve problems with multiple conflicting objectives, where the goal is to find a set of solutions that optimally balance these objectives. Evaluating the performance of MOO algorithms is crucial for assessing their effectiveness in finding high-quality solutions across the entire Pareto front, the set of non-dominated solutions.

In this paper, we take the Hypervolume (HV), Spacing (SP), and Pure Diversity (PD) metrics together as evaluation criteria to evaluate the performance of algorithms. Choosing the HV, SP, and PD metrics together provides a comprehensive assessment of the algorithm’s performance across different aspects:

HV reflects both convergence and overall spread, SP measures how evenly the solutions are distributed, and PD measures how diverse the obtained solution set is. Taken together, they indicate whether an algorithm converges toward the Pareto front while also maintaining a diverse set of solutions. This balance is critical in multi-objective optimization because it gives decision-makers a set of well-distributed, high-quality solutions.

By considering all three metrics, we gain a more complete understanding of each algorithm’s strengths and weaknesses in solving multi-objective optimization problems, which supports more informed decisions in algorithm selection and development. Each metric is described in detail below.

4.4.1. Hypervolume (HV)

Hypervolume (HV) serves as a comprehensive indicator for assessing multi-objective evolutionary algorithms, capable of concurrently reflecting the convergence and distribution characteristics of the algorithm [30]. It is computed as the hypervolume of the region bounded by the Pareto front and the reference point:

$$HV = \delta\left(\bigcup_{i=1}^{|S|} v_i\right)$$

where $\delta$ denotes the Lebesgue measure, $v_i$ denotes the hypervolume formed by the reference point and the $i$-th point on the Pareto front, and $S$ denotes the Pareto front generated by the algorithm.

For the reference point $r$, we use the worst value of each objective function observed on the Pareto front. A larger HV corresponds to better algorithm performance.
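For two objectives, the Lebesgue measure in the HV definition is simply an area, which can be computed by sweeping the points in increasing order of the first objective. The sketch below assumes both objectives are minimized and that raw (unnormalized) objective values are used. The names `hypervolume_2d` and `reference_from_front` are hypothetical. Note that when the reference point is the worst observed value in each objective, the extreme points of the front contribute zero area.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]


def reference_from_front(front: Sequence[Point]) -> Point:
    """Reference point = worst (largest) value of each objective on the front."""
    return (max(p[0] for p in front), max(p[1] for p in front))


def hypervolume_2d(front: Sequence[Point], ref: Point) -> float:
    """Area dominated by `front` and bounded by `ref`; both objectives minimized."""
    # Points on or beyond the reference point add no area, so drop them.
    pts = sorted(p for p in front if p[0] < ref[0] and p[1] < ref[1])
    area = 0.0
    prev_f2 = ref[1]  # lowest second-objective value seen so far in the sweep
    for f1, f2 in pts:  # sweep in increasing f1
        if f2 < prev_f2:  # points not improving f2 are dominated by an earlier point
            area += (ref[0] - f1) * (prev_f2 - f2)  # horizontal slab between f2 and prev_f2
            prev_f2 = f2
    return area


if __name__ == "__main__":
    front = [(1.0, 3.0), (2.0, 2.0), (3.0, 1.0)]
    print(hypervolume_2d(front, (4.0, 4.0)))  # 6.0
```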

4.4.2. Spacing (SP)

The spacing (SP) metric is a widely used measure in MOO to assess the uniformity of the distribution of solutions in the Pareto front. It evaluates how evenly the solutions are spread along the Pareto front. A lower value of SP indicates a more uniformly distributed set of solutions, which is desirable in MOO as it ensures better coverage and diversity [31]. The SP metric is calculated using the following steps and formula:

- Calculate the distance: For each solution $i$ in the obtained Pareto front, compute the distance $d_i$ to its nearest neighbor solution in the objective space. This distance is typically calculated using the Euclidean distance:

  $$d_i = \min_{j \neq i} \sqrt{\sum_{k=1}^{M} \left(f_k^{i} - f_k^{j}\right)^2}$$

  where $M$ is the number of objectives, $f_k^{i}$ is the value of the $k$-th objective for solution $i$, and $f_k^{j}$ is the value of the $k$-th objective for solution $j$.

- Compute the average distance: Calculate the average of these minimum distances:

  $$\bar{d} = \frac{1}{|A|} \sum_{i=1}^{|A|} d_i$$

  where $A$ is the Pareto front and $|A|$ is the number of solutions in the Pareto front.

- Calculate the SP metric: Finally, compute the SP metric as the standard deviation of the distances $d_i$ from their mean $\bar{d}$:

  $$SP = \sqrt{\frac{1}{|A| - 1} \sum_{i=1}^{|A|} \left(d_i - \bar{d}\right)^2}$$

By using the SP metric, we can quantitatively evaluate and compare the distribution quality of solution sets obtained by different multi-objective optimization algorithms. Lower SP indicates that the solutions are more evenly distributed along the Pareto front, which is desirable. Higher SP indicates uneven distribution of solutions, suggesting gaps or clusters in the Pareto front.
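The three SP steps translate directly into code. This is a minimal sketch that assumes the Euclidean distance stated above and an |A| - 1 denominator in the standard deviation; the text does not specify the normalization, and `spacing` is a hypothetical name.

```python
import math
from typing import Sequence


def spacing(front: Sequence[Sequence[float]]) -> float:
    """Spacing (SP) of a set of objective vectors; lower means more uniform."""
    n = len(front)
    if n < 2:
        raise ValueError("SP needs at least two solutions")
    # Step 1: d_i = Euclidean distance from solution i to its nearest neighbor
    d = [min(math.dist(front[i], front[j]) for j in range(n) if j != i) for i in range(n)]
    # Step 2: mean of the nearest-neighbor distances
    d_bar = sum(d) / n
    # Step 3: standard deviation of the d_i around d_bar (|A| - 1 denominator)
    return math.sqrt(sum((di - d_bar) ** 2 for di in d) / (n - 1))


if __name__ == "__main__":
    print(spacing([(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]))  # 0.0 (evenly spaced)
    print(spacing([(0.0, 0.0), (1.0, 0.0), (3.0, 0.0)]))  # positive (uneven gaps)
```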

4.4.3. Pure Diversity (PD)

In multi-objective optimization algorithms, the PD metric is used to assess the diversity of the solution set generated by the algorithm, focusing on the differences between the solutions [32]. This metric is important for understanding how comprehensively the algorithm explores the solution space. A common formula for calculating PD is:

$$PD = \frac{2}{|S|\,(|S|-1)} \sum_{i=1}^{|S|-1} \sum_{j=i+1}^{|S|} d(x_i, x_j)$$

where

- $S$ is the obtained solution set and $|S|$ is the number of solutions in it,

- $x_i$ and $x_j$ are two different solutions from the set,

- $d(x_i, x_j)$ is the distance between these two solutions, which can be calculated using the Euclidean distance or another suitable metric.

This formula calculates the average distance between all possible pairs of solutions in the set, providing a measure of the set’s diversity. A higher PD value indicates better diversity, meaning greater differences between the solutions.
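The average-pairwise-distance formula can be sketched directly, assuming Euclidean distance in objective space. The name `pure_diversity` is hypothetical. PD implementations in other toolkits may use a different definition, so values from this sketch are not guaranteed to match the reported tables.

```python
import itertools
import math
from typing import Sequence


def pure_diversity(solutions: Sequence[Sequence[float]]) -> float:
    """Average Euclidean distance over all unordered pairs of solutions."""
    pairs = list(itertools.combinations(solutions, 2))  # |S|(|S|-1)/2 pairs
    if not pairs:
        raise ValueError("PD needs at least two solutions")
    return sum(math.dist(a, b) for a, b in pairs) / len(pairs)


if __name__ == "__main__":
    print(pure_diversity([(0.0, 0.0), (3.0, 4.0), (0.0, 8.0)]))  # 6.0
```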

5. Experimental Results and Analysis

5.1. Parameter Setting of MOFECO-MS

In the MOFECO-MS algorithm, several parameters must be tuned: the population size N, the termination condition of the algorithm, the crossover probability, the mutation probability for updating elements, the number of cycles q, the number of elements L allocated to each cycle, and the parameter l that determines the ratio of exploration to exploitation within each cycle. To identify suitable values for these parameters, we varied each parameter individually. All experiments were performed on the same instance, and each set of experiments was run 15 times independently.

5.1.1. Determining the Parameter N and the Termination Condition

To allow a more equitable comparison with other optimization algorithms, we define the termination criterion of MOFECO-MS by the number of objective function evaluations rather than by the number of iterations. Different optimization algorithms employ different update strategies, so they may perform different numbers of objective function evaluations per iteration. Using the number of evaluations as the termination criterion therefore ensures a fairer comparison.

Under this termination condition, we set the maximum number of iterations to a sufficiently large value, so that each run is stopped by the evaluation budget rather than by the iteration limit. The evaluation budget is defined as a multiple of the population size. The population size settings follow [22], and we tested different values of N together with corresponding evaluation budgets, as shown in Table 6.

Table 6.

Evaluation of N and on performance metrics.

Table 6 presents the results of experiments conducted with different combinations of N and the evaluation budget. The performance metrics considered are the average HV and the computation time t.

The combination of , = 1000 × N was selected in Table 6 due to its superior performance across the metrics. Specifically:

- Hypervolume (HV): The average hypervolume value of 2.98 × indicates a high-quality solution set with good convergence and diversity.

- Computation time (t): With a computation time of 155.75, this configuration offers a reasonable balance between solution quality and computational efficiency. Some configurations achieve slightly higher hypervolume values, but they require significantly more computation time, which makes , = 1000 × N the most efficient choice overall.

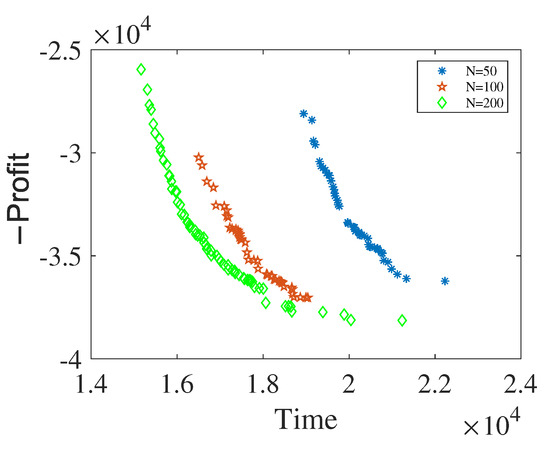

In addition, Figure 5 shows the Pareto fronts obtained with different N while keeping = 500 × N. For each setting, the algorithm was run 15 times, and the front shown is the one from the run with the median HV. Among the tested values of , is chosen because it appears to provide a good balance between convergence speed and solution quality, as indicated by the Pareto fronts.

Figure 5.

Pareto fronts under different N.
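Selecting which run to plot can be sketched as follows. With 15 runs (an odd number), the median HV is attained by an actual run. The helper name `median_run_index` is hypothetical. For an even number of runs, it falls back to the run whose HV is closest to the median; this is an assumption, not something the paper specifies.

```python
import statistics
from typing import Sequence


def median_run_index(hv_values: Sequence[float]) -> int:
    """Return the index of the run whose HV is (closest to) the median HV."""
    if not hv_values:
        raise ValueError("need at least one run")
    med = statistics.median(hv_values)
    # For an odd number of runs, the median equals one run's HV exactly.
    return min(range(len(hv_values)), key=lambda i: abs(hv_values[i] - med))


if __name__ == "__main__":
    hv_per_run = [0.62, 0.58, 0.65, 0.60, 0.63]  # made-up values for illustration
    print(median_run_index(hv_per_run))  # 0
```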

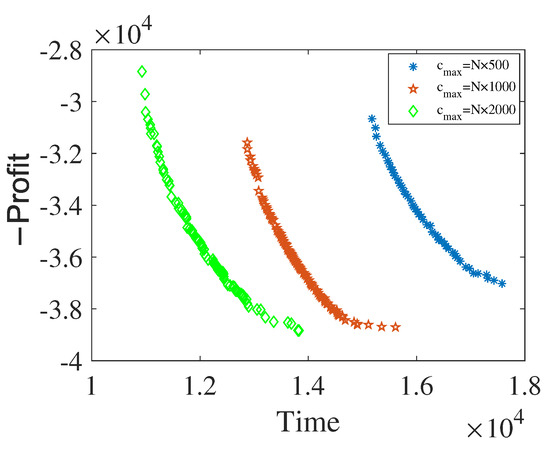

Figure 6 shows the Pareto fronts obtained under different with . Based on these fronts, = 1000 × N is selected because it gives a better trade-off between the two objectives (in this case, profit and time). This value allows the algorithm to explore the solution space effectively and to find high-quality solutions.

Figure 6.

Pareto fronts under different .

Therefore, by selecting this combination, we ensure that our algorithm achieves high-quality solutions within a manageable computational budget, thereby providing a robust and efficient optimization framework.
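The evaluation-based termination described in this subsection can be sketched as a counter wrapped around the objective function. The iteration cap is deliberately huge, so that the budget (for example 1000 × N evaluations) is what ends the run. All names here (`CountingEvaluator`, `run_with_budget`, `next_population`) are hypothetical and not taken from the MOFECO-MS implementation. The variation and selection step is reduced to a placeholder callback.

```python
from typing import Callable, List, Tuple

Solution = List[int]            # illustrative encoding only
Objectives = Tuple[float, float]


class CountingEvaluator:
    """Wraps an objective function and counts every evaluation."""

    def __init__(self, objective: Callable[[Solution], Objectives], max_evals: int) -> None:
        self.objective = objective
        self.max_evals = max_evals
        self.evals = 0

    def budget_left(self) -> bool:
        return self.evals < self.max_evals

    def __call__(self, solution: Solution) -> Objectives:
        self.evals += 1
        return self.objective(solution)


def run_with_budget(
    evaluator: CountingEvaluator,
    population: List[Solution],
    next_population: Callable[[List[Solution]], List[Solution]],
    max_iterations: int = 10**9,  # deliberately large: the budget, not this cap, ends the run
) -> List[Tuple[Solution, Objectives]]:
    """Evaluate successive populations until the evaluation budget is spent."""
    history: List[Tuple[Solution, Objectives]] = []
    for _ in range(max_iterations):
        for solution in population:
            if not evaluator.budget_left():
                return history  # stop as soon as the budget is exhausted, even mid-iteration
            history.append((solution, evaluator(solution)))
        population = next_population(population)  # placeholder for variation/selection
    return history


if __name__ == "__main__":
    N = 20  # illustrative population size
    ev = CountingEvaluator(lambda s: (float(sum(s)), float(len(s))), max_evals=1000 * N)
    run_with_budget(ev, [[i] for i in range(N)], lambda pop: [s[::-1] for s in pop])
    print(ev.evals)  # 20000
```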

5.1.2. Determining the Crossover and Mutation Probabilities

Table 7 provides a concise nomenclature for the MOFECO-MS algorithm corresponding to different combinations of and . For instance, denotes the algorithm with and .

Table 7.

Algorithm names with different and .

Table 8 presents the experimental outcomes for various and values, with the best result for each statistical measure highlighted. Notably, algorithm demonstrates superior performance on almost all measures. Consequently, we conclude that and yield the best results among the tested settings. We further conduct verification tests for specific and values, as shown in Table 9 and Table 10; the results again confirm the superiority of and .

Table 8.

Statistical results of HV indicator of the algorithms for different , .

Table 9.

Algorithm names with special and .

Table 10.

Statistical results of HV indicator of the algorithm using special , .

5.1.3. Determining the Parameters L and q

Since this paper employs element sorting within cycles, the values of q and L significantly influence the algorithm’s performance. Table 11 presents the results for four combinations of q and L. The analysis indicates superior performance when and ; consequently, we set and accordingly.

Table 11.

Statistical results of HV indicator of the algorithms with different L and q.

5.1.4. Determining the Parameter l

After determining , we ascertain the optimal value for l. Based on the experimental findings showcased in Table 12, it becomes evident that the algorithm yields superior results when .

Table 12.

Statistical results of HV indicator of the algorithms with different l.

5.2. Selection of Search Methods

Section 3.8 describes four distinct search methods for selecting the elements used in element updates. Here, we compare the performance of these four methods to identify the most effective one for the final MOFECO-MS.

For experimentation purposes, we fix the parameters as follows: , , , , , and . We select the instance for testing to identify the optimal method for updating elements. The experimental findings are summarized in Table 13.

Table 13.

Statistical results of HV indicator obtained by four different search methods.

From Table 4 and Table 13, we can see that SM4 obtains the best results, which indicates that when expanding the exploration, the adopts for the best search effect. Because in expanded exploration using the can explore more solutions and improve the diversity of the algorithm, in narrowed exploitation the is also best to use , and in narrowed exploitation, is used because it may be better than and has more possibilities than , so using can make the comprehensive performance of the algorithm better. Thus, in the MOFECO-MS algorithm, we choose SM4 to update the elements.

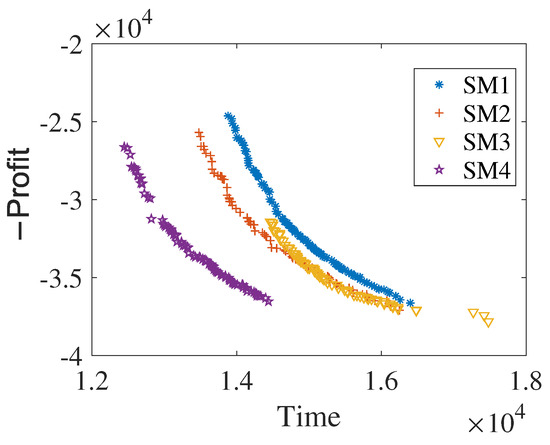

Figure 7 shows, for each of the four search methods, the Pareto front corresponding to the median HV value over 15 runs of the MOFECO-MS algorithm.

Figure 7.

The Pareto front corresponding to the median HV values obtained by the MOFECO-MS algorithm with four search methods.

The results in Figure 7 are consistent with those in Table 13. Since the objectives are minimized in this paper, the closer a front lies to the lower left, the better the algorithm performs. Figure 7 also shows the accuracy and distribution of each search method, and SM4 is clearly better than the other three methods.
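Because the objectives are minimized, "closer to the lower left" corresponds to Pareto dominance. The same dominance test determines which points count as non-dominated in plots such as Figures 8–16. The following is a minimal sketch of the dominance test and an O(n²) non-dominated filter. It assumes each solution is represented by its vector of minimized objective values, and the function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Vector = Tuple[float, ...]


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if a Pareto-dominates b when every objective is minimized."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def non_dominated(points: Sequence[Vector]) -> List[Vector]:
    """Keep the points that no other point dominates (simple O(n^2) filter)."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


if __name__ == "__main__":
    pts = [(1.0, 3.0), (2.0, 2.0), (3.0, 1.0), (2.0, 3.0), (3.0, 3.0)]
    print(non_dominated(pts))  # [(1.0, 3.0), (2.0, 2.0), (3.0, 1.0)]
```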

5.3. Experimental Results and Discussion on Algorithms Comparison

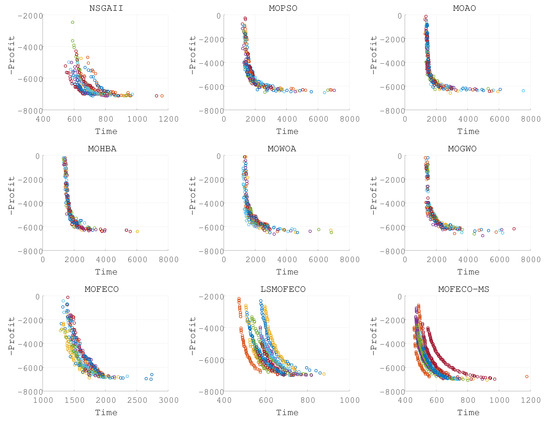

To evaluate the performance of our proposed MOFECO-MS algorithm, we compare it with eight other intelligent optimization algorithms based on different mechanisms. These include two classic algorithms, the Non-Dominated Sorting Genetic Algorithm II (NSGAII) [26] and Multi-objective Particle Swarm Optimization (MOPSO) [33], and four more recently proposed ones: the Multi-Objective Whale Optimization Algorithm (MOWOA) [34], the Multi-objective Grey Wolf Optimization (MOGWO) [35], the Multi-objective Aquila Optimizer (MOAO) [36], and the Multi-Objective Honey Badger Algorithm (MOHBA) [37]. Additionally, we include the basic Multi-Objective Five-element Cycle Optimization algorithm (MOFECO) [24] and a Local Search Many-Objective Five-element Cycle optimization algorithm (LSMOFECO) [25].

MOWOA mimics the hunting behavior of humpback whales, while MOGWO simulates the group predation behavior of grey wolves. MOAO and MOHBA, proposed in the last two years, are inspired by the hunting behavior of eagles and the foraging behavior of honey badgers, respectively. The parameters for MOWOA, MOGWO, MOAO, and MOHBA are the same as in their original publications. NSGAII employs the same crossover and mutation operators as MOFECO-MS, with crossover and mutation probabilities set to and , respectively. MOFECO and LSMOFECO use the same parameters as MOFECO-MS. All eight algorithms share a population size of . Each algorithm terminates when the number of objective function evaluations reaches 100,000.

We conduct 15 independent runs for each algorithm on the BITTP instances in Table 5. The experimental results are presented in Tables 14–40 and Figures 8–16.

Table 14.

Statistical results of HV indicator of the eight comparison algorithms for .

Table 15.

Statistical results of SP indicator of the eight comparison algorithms for .

Table 16.

Statistical results of PD indicator of the eight comparison algorithms for .

Table 17.

Statistical results of HV indicator of the eight comparison algorithms for .

Table 18.

Statistical results of SP indicator of the eight comparison algorithms for .

Table 19.

Statistical results of PD indicator of the eight comparison algorithms for .

Table 20.

Statistical results of HV indicator of the eight comparison algorithms for .

Table 21.

Statistical results of SP indicator of the eight comparison algorithms for .

Table 22.

Statistical results of PD indicator of the eight comparison algorithms for .

Table 23.

Statistical results of HV indicator of the eight comparison algorithms for .

Table 24.

Statistical results of SP indicator of the eight comparison algorithms for .

Table 25.

Statistical results of PD indicator of the eight comparison algorithms for .

Table 26.

Statistical results of HV indicator of the eight comparison algorithms for .

Table 27.

Statistical results of SP indicator of the eight comparison algorithms for .

Table 28.

Statistical results of PD indicator of the eight comparison algorithms for .

Table 29.

Statistical results of HV indicator of the eight comparison algorithms for .

Table 30.

Statistical results of SP indicator of the eight comparison algorithms for .

Table 31.

Statistical results of PD indicator of the eight comparison algorithms for .

Table 32.

Statistical results of HV indicator of the eight comparison algorithms for .

Table 33.

Statistical results of SP indicator of the eight comparison algorithms for .

Table 34.

Statistical results of PD indicator of the eight comparison algorithms for .

Table 35.

Statistical results of HV indicator of the eight comparison algorithms for .

Table 36.

Statistical results of SP indicator of the eight comparison algorithms for .

Table 37.

Statistical results of PD indicator of the eight comparison algorithms for .

Table 38.

Statistical results of HV indicator of the eight comparison algorithms for .

Table 39.

Statistical results of SP indicator of the eight comparison algorithms for .

Table 40.

Statistical results of PD indicator of the eight comparison algorithms for .

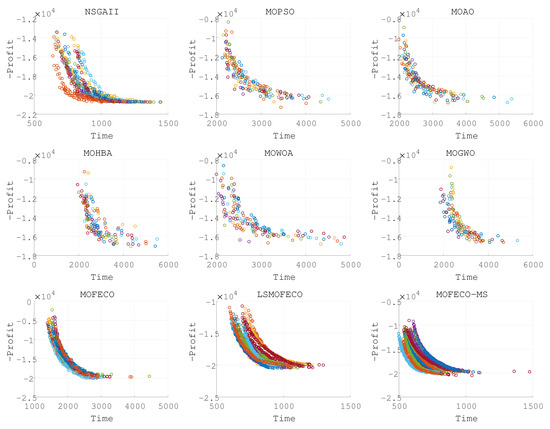

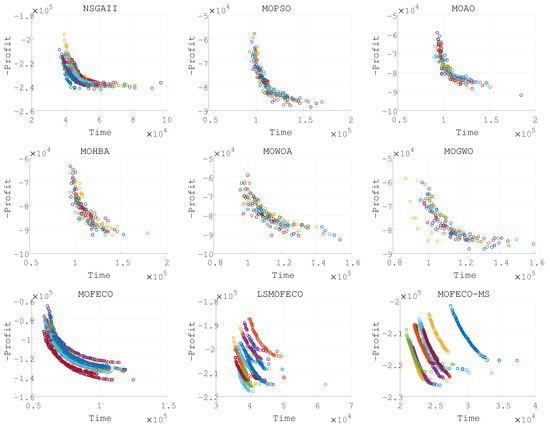

Figure 8.

Plots of the non-dominated solutions in the objective space of obtained by nine algorithms in 15 independent runs (each color denotes the Pareto front obtained in one run).

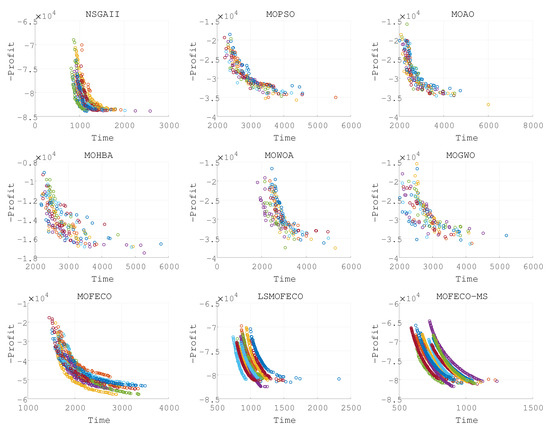

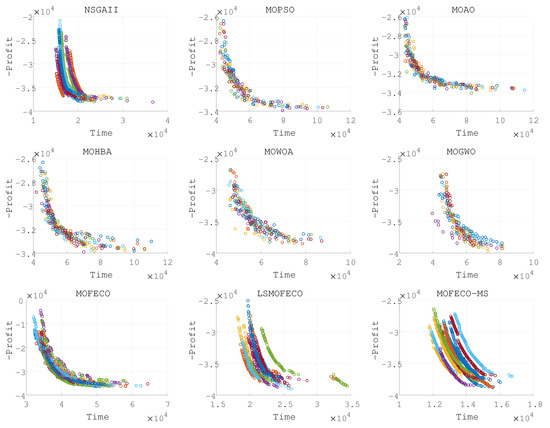

Figure 9.

Plots of the non-dominated solutions in the objective space of obtained by nine algorithms in 15 independent runs (each color denotes the Pareto front obtained in one run).

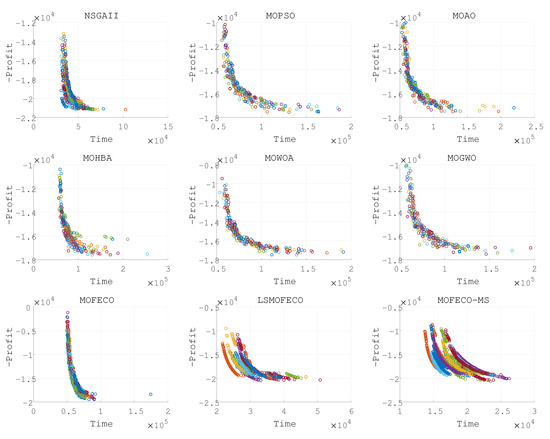

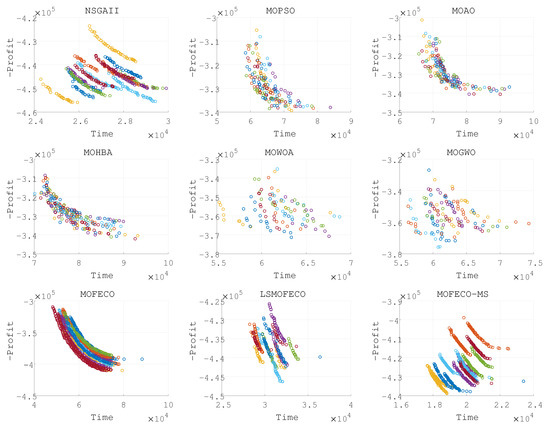

Figure 10.

Plots of the non-dominated solutions in the objective space of obtained by nine algorithms in 15 independent runs (each color denotes the Pareto front obtained in one run).

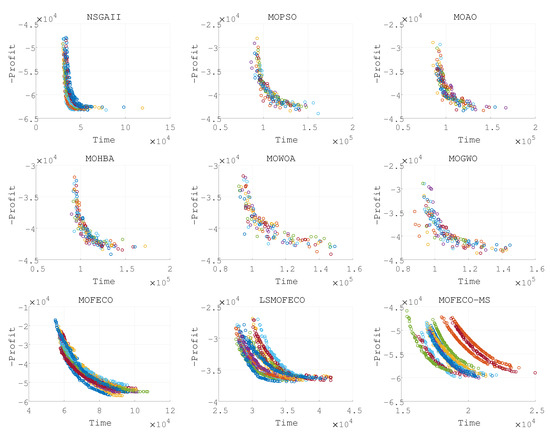

Figure 11.

Plots of the non-dominated solutions in the objective space of obtained by nine algorithms in 15 independent runs (each color denotes the Pareto front obtained in one run).

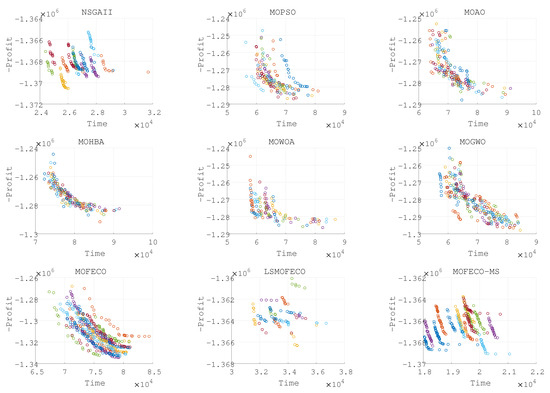

Figure 12.

Plots of the non-dominated solutions in the objective space of obtained by nine algorithms in 15 independent runs (each color denotes the Pareto front obtained in one run).

Figure 13.

Plots of the non-dominated solutions in the objective space of obtained by nine algorithms in 15 independent runs (each color denotes the Pareto front obtained in one run).

Figure 14.

Plots of the non-dominated solutions in the objective space of obtained by nine algorithms in 15 independent runs (each color denotes the Pareto front obtained in one run).

Figure 15.

Plots of the non-dominated solutions in the objective space of obtained by nine algorithms in 15 independent runs (each color denotes the solutions obtained in one run).

Figure 16.

Plots of the non-dominated solutions in the objective space of obtained by nine algorithms in 15 independent runs (each color denotes the solutions obtained in one run).

Based on the experimental results in Figures 8–16 and the metric statistics in Tables 14–40, we derive the following insights into the performance of MOFECO-MS relative to the competing algorithms across the BITTP instances:

- Overall summary:
  - MOFECO-MS demonstrated outstanding performance across multiple instances, particularly in the HV (Hypervolume) and SP (Spacing) indicators, showing its overall advantage on these multi-objective optimization problems.
  - While MOFECO-MS did not achieve the highest PD (Pure Diversity) value in every instance, its performance remained stable and close to the best.
  - The comparison charts show that MOFECO-MS consistently outperformed the other algorithms in solution distribution and quality across instances, highlighting its ability to generate diverse and stable solutions.

- Specific instance analysis:
  - :
    - HV indicator: Over the 15 runs, MOFECO-MS achieved the best HV values on all statistical measures except the standard deviation, which was not the best; its overall performance remained excellent.
    - SP indicator: MOFECO-MS was slightly behind LSMOFECO, but the values were very close, indicating a similarly uniform distribution of solutions.
    - PD indicator: Although not the highest, MOFECO-MS still performed well, indicating strong competitiveness in diversity.
  - :
    - HV and SP indicators: MOFECO-MS excelled in both, showing outstanding overall quality (HV) and uniformity of distribution (SP).
    - PD indicator: Second only to MOFECO, indicating strong diversity performance.
  - :
    - HV indicator: MOFECO-MS achieved the best HV values, confirming its overall performance advantage.
    - SP indicator: Its performance was close to that of LSMOFECO, indicating a similarly uniform distribution.
    - PD indicator: Second only to MOFECO, but still ranked highly.
  - :
    - HV and SP indicators: MOFECO-MS achieved the best performance in both indicators, showing strong performance on medium-scale instances.
    - PD indicator: While not the highest, it remained at a high level.
  - , :
    - HV and SP indicators: MOFECO-MS again performed best in these two indicators, showing excellent performance on more complex instances.
    - PD indicator: Slightly behind MOFECO but close to the best, indicating a good balance in diversity.
  - ,, :
    - Performance summary: MOFECO-MS maintained its outstanding performance on these instances, continuing to perform best in the HV and SP indicators, with PD values close to the best.
    - The charts show that MOFECO-MS continues to deliver well-distributed, high-quality solutions on these instances, demonstrating the algorithm’s robustness on large-scale problems.

- Analysis and discussion:
  - The good performance of MOFECO-MS across multiple runs can be attributed to its balance between global exploration and local exploitation, which allows it to find high-quality solutions on TTP instances of different scales and complexities.
  - Distribution: MOFECO-MS’s optimization strategy effectively reduces gaps between solutions, yielding a uniform distribution along the Pareto front.
  - Diversity: Although MOFECO-MS did not achieve the highest PD values in some instances, it still maintained a high level of diversity, exploring a broad solution space without sacrificing quality.
  - Stability: MOFECO-MS behaved consistently across independent runs, indicating that its design reliably delivers high-quality outputs on complex problems.
  - Overall, MOFECO-MS performed very well across a wide range of BITTP instances, showing its potential as a powerful tool for complex multi-objective optimization problems. Its strong HV and SP results, combined with competitive PD values, place it among the leading algorithms tested here for problems of various scales.

The results in Figures 8–16 show that MOFECO-MS outperforms the other algorithms on most BITTP instances, particularly in terms of the Hypervolume (HV) and Spacing (SP) indicators. Its strong performance can be attributed to its effective balance of exploration and exploitation, which yields both high-quality solutions and good coverage of the Pareto front. While it does not always achieve the highest Pure Diversity (PD) values, its PD performance remains competitive, further demonstrating its robustness across different problem scales.

6. Conclusions and Future Work

This paper presents a Multi-objective Five-element Cycle Optimization algorithm based on Multi-strategy fusion (MOFECO-MS) for solving instances of the Bi-objective Traveling Thief Problem (BITTP). Building on the principles of the Five-element Cycle Optimization algorithm, we introduced a novel selection and update strategy that enhances the algorithm’s ability to balance global exploration and local exploitation. The algorithm’s effectiveness was demonstrated through extensive comparative evaluations on nine BITTP instances, where MOFECO-MS outperformed eight leading multi-objective optimization algorithms on most instances in terms of solution quality, distribution, and convergence. The results indicate that MOFECO-MS is a robust and effective approach for complex multi-objective optimization problems, particularly in scenarios requiring both precision and diversity.

For future work, we aim to explore adaptive parameter adjustment mechanisms to further improve the algorithm’s performance, particularly in terms of crossover and mutation probabilities. Additionally, we plan to investigate the incorporation of dynamic update strategies that adjust based on the evolutionary stage, which could enhance the algorithm’s ability to adapt to different problem landscapes. Another promising direction includes integrating other evolutionary operators into the update strategy and exploring the potential of co-evolution with complementary algorithms to further advance BITTP solution methodologies.

Author Contributions

Y.X.—conceptualization, methodology, software, validation, investigation, writing—original draft preparation; J.G.—data curation and analysis, writing—review and editing; C.J.—data interpretation, writing—review and editing; H.M.—data interpretation, writing—review and editing; M.L.—conceptualization, supervision, writing—review and editing. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Fundamental Research Funds for the Central Universities, Grant No. 222201917006, under the affiliation of the Key Laboratory of Smart Manufacturing in Energy Chemical Process (East China University of Science and Technology), Ministry of Education.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Publicly available datasets were analyzed in this study. This data can be found here: https://sites.google.com/view/ttp-gecco2023/home.

Acknowledgments

The authors would like to thank Mandan Liu for her critical review and insightful comments on the manuscript. Special thanks are extended to Jingjing Guo, Chao Jiang, and Haibao Ma for their assistance in revising and editing the paper.

Conflicts of Interest

Author Haibao Ma was employed by the company Technology Center, Vanderlande Industries Logistics Automated Systems Shanghai Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Mei, Y.; Li, X.; Yao, X. On investigation of interdependence between sub-problems of the Travelling Thief Problem. Soft Comput. 2016, 20, 157–172.

- Bonyadi, M.R.; Michalewicz, Z.; Barone, L. The travelling thief problem: The first step in the transition from theoretical problems to realistic problems. In Proceedings of the 2013 IEEE Congress on Evolutionary Computation, Cancun, Mexico, 20–23 June 2013.

- El Yafrani, M.; Ahiod, B. A local search based approach for solving the Travelling Thief Problem: The pros and cons. Appl. Soft Comput. 2017, 52, 795–804.

- Maity, A.; Das, S. Efficient hybrid local search heuristics for solving the travelling thief problem. Appl. Soft Comput. 2020, 93, 106284.

- El Yafrani, M.; Ahiod, B. Cosolver2B: An efficient local search heuristic for the travelling thief problem. In Proceedings of the 2015 IEEE/ACS 12th International Conference of Computer Systems and Applications (AICCSA), Marrakech, Morocco, 17–20 November 2015; pp. 1–5.

- Yafrani, M.E.; Martins, M.S.; Krari, M.E.; Wagner, M.; Delgado, M.R.; Ahiod, B.; Lüders, R. A fitness landscape analysis of the travelling thief problem. In Proceedings of the Genetic and Evolutionary Computation Conference, Kyoto, Japan, 15–19 July 2018; pp. 277–284.

- El Yafrani, M.; Ahiod, B. Efficiently solving the Traveling Thief Problem using hill climbing and simulated annealing. Inf. Sci. 2018, 432, 231–244.

- Moeini, M.; Schermer, D.; Wendt, O. A hybrid evolutionary approach for solving the traveling thief problem. In Proceedings of the International Conference on Computational Science and Its Applications; Springer: New York, NY, USA, 2017; pp. 652–668.

- Wagner, M. Stealing items more efficiently with ants: A swarm intelligence approach to the travelling thief problem. In Proceedings of the International Conference on Swarm Intelligence; Springer: New York, NY, USA, 2016; pp. 273–281.

- Zouari, W.; Alaya, I.; Tagina, M. A new hybrid ant colony algorithms for the traveling thief problem. In Proceedings of the Genetic and Evolutionary Computation Conference Companion, Prague, Czech Republic, 13–17 July 2019; pp. 95–96.

- Laszczyk, M.; Myszkowski, P.B. A Specialized Evolutionary Approach to the bi-objective Travelling Thief Problem. In Proceedings of the 2019 Federated Conference on Computer Science and Information Systems, Leipzig, Germany, 1–4 September 2019; pp. 47–56.

- Wu, J.; Polyakovskiy, S.; Wagner, M.; Neumann, F. Evolutionary Computation plus Dynamic Programming for the Bi-Objective Travelling Thief Problem. In Proceedings of the Genetic and Evolutionary Computation Conference, Kyoto, Japan, 15–19 July 2018; pp. 777–784.

- Santana, R.; Shakya, S. Evolutionary approaches with adaptive operators for the bi-objective TTP. In Proceedings of the 2022 IEEE Symposium Series on Computational Intelligence (SSCI), Singapore, 4–7 December 2022; pp. 1202–1209.

- Kumari, R.; Srivastava, K. Variable Neighbourhood Search for Bi-Objective Travelling Thief Problem. In Proceedings of the 2020 8th International Conference on Reliability, Infocom Technologies and Optimization (Trends and Future Directions) (ICRITO), Noida, India, 4–5 June 2020; pp. 47–51.

- Chagas, J.B.C.; Wagner, M. A weighted-sum method for solving the bi-objective traveling thief problem. Comput. Oper. Res. 2022, 138, 105560.

- Yang, L.; Jia, X.; Xu, R.; Cao, J. An MOEA/D-ACO Algorithm with Finite Pheromone Weights for Bi-objective TTP. In Proceedings of the Data Mining and Big Data: 6th International Conference, DMBD 2021, Guangzhou, China, 20–22 October 2021; Proceedings, Part I 6. Springer: New York, NY, USA, 2021; pp. 468–482.

- Rabiei, P.; Mirghaderi, S.H.; Arias-Aranda, D. Efficiently Solving the Bi-Objective Traveling Thief Problem Using Imperialist Competitive Algorithm: Case of Small Instances. SSRN 2023.

- Blank, J.; Deb, K.; Mostaghim, S. Solving the Bi-objective Traveling Thief Problem with Multi-objective Evolutionary Algorithms. In Proceedings of the International Conference on Evolutionary Multi-Criterion Optimization, Münster, Germany, 17–19 March 2017; pp. 46–60.

- Santana, R.; Shakya, S. Dynamic programming operators for the bi-objective Traveling Thief Problem. In Proceedings of the 2020 IEEE Congress on Evolutionary Computation (CEC), Glasgow, UK, 19–24 July 2020; pp. 1–8.

- Kumari, R.; Srivastava, K. NSGAII for Travelling Thief Problem with Dropping Rate. In Proceedings of the 2023 7th International Conference on Intelligent Computing and Control Systems (ICICCS), Madurai, India, 17–19 May 2023; pp. 1647–1653.

- Chagas, J.B.C.; Blank, J.; Wagner, M.; Souza, M.J.F.; Deb, K. A non-dominated sorting based customized random-key genetic algorithm for the bi-objective traveling thief problem. J. Heuristics 2021, 27, 267–301.

- Liu, M. Five-elements cycle optimization algorithm for the travelling salesman problem. In Proceedings of the International Conference on Advanced Robotics, Hong Kong, China, 10–12 July 2017; pp. 595–601.

- Polyakovskiy, S.; Bonyadi, M.R.; Wagner, M.; Michalewicz, Z.; Neumann, F. A Comprehensive Benchmark Set and Heuristics for the Traveling Thief Problem. In Proceedings of the 2014 Annual Conference on Genetic and Evolutionary Computation, Vancouver, BC, Canada, 12–16 July 2014; pp. 477–484.

- Ye, C.; Mao, Z.; Liu, M. A Novel Multi-Objective Five-Elements Cycle Optimization Algorithm. Algorithms 2019, 12, 244.

- Mao, Z.; Liu, M. A local search-based many-objective five-element cycle optimization algorithm. Swarm Evol. Comput. 2022, 68, 101009.

- Deb, K.; Agrawal, S.; Pratap, A.; Meyarivan, T. A Fast Elitist Non-dominated Sorting Genetic Algorithm for Multi-objective Optimization: NSGA-II. In Proceedings of the International Conference on Parallel Problem Solving from Nature, Paris, France, 18–20 September 2000; pp. 849–858.

- Goldberg, D.E.; Lingle, R. Alleles, Loci, and the Traveling Salesman Problem. In Proceedings of the 1st International Conference on Genetic Algorithms, Pittsburgh, PA, USA, 24–26 July 1985.

- Syswerda, G. Uniform Crossover in Genetic Algorithms. In Proceedings of the 3rd International Conference on Genetic Algorithms, Fairfax, VA, USA, 2–9 June 1989.

- Wang, K.P.; Huang, L.; Zhou, C.G.; Pang, W. Particle swarm optimization for traveling salesman problem. In Proceedings of the International Conference on Machine Learning and Cybernetics, Xi’an, China, 5 November 2003; pp. 1583–1585.

- Zitzler, E.; Thiele, L. Multiobjective Optimization Using Evolutionary Algorithms—A Comparative Case Study; Springer: Berlin/Heidelberg, Germany, 1998.

- Tian, Y.; Cheng, R.; Zhang, X.; Jin, Y. PlatEMO: A MATLAB platform for evolutionary multi-objective optimization [educational forum]. IEEE Comput. Intell. Mag. 2017, 12, 73–87.

- Solow, A.; Polasky, S.; Broadus, J. On the measurement of biological diversity. J. Environ. Econ. Manag. 1993, 24, 60–68.

- Coello, C.C.; Lechuga, M.S. MOPSO: A proposal for multiple objective particle swarm optimization. In Proceedings of the 2002 Congress on Evolutionary Computation, CEC’02 (Cat. No. 02TH8600), Honolulu, HI, USA, 12–17 May 2002; Volume 2, pp. 1051–1056.

- Kumawat, I.R.; Nanda, S.J.; Maddila, R.K. Multi-objective whale optimization. In Proceedings of the TENCON 2017—2017 IEEE Region 10 Conference, Penang, Malaysia, 5–8 November 2017; pp. 2747–2752.

- Lu, C.; Xiao, S.; Li, X.; Gao, L. An effective multi-objective discrete grey wolf optimizer for a real-world scheduling problem in welding production. Adv. Eng. Softw. 2016, 99, 161–176.

- Mohammed Hamouda, A.; Ahmed Tijani, S.; Salah, K.; Habeeb Bello, S.; Monier, H.; Mokhtar, S. Single- and multi-objective modified aquila optimizer for optimal multiple renewable energy resources in distribution network. Mathematics 2022, 10, 2129.

- Papasani, A.; Devarakonda, N. A novel feature selection algorithm using multi-objective improved honey badger algorithm and strength pareto evolutionary algorithm-II. J. Eng. Res. 2022, 11, 71–83.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).