Abstract

Automatic medical diagnosis services based on deep learning have been introduced in e-healthcare, bringing great convenience to human life. However, due to privacy regulations, insufficient data sharing among medical centers poses severe challenges for automated medical diagnostic services, notably reduced diagnostic accuracy. To solve such problems, swarm learning (SL), a form of blockchain-based federated learning (BCFL), has been proposed. Although SL avoids single points of failure and offers an incentive mechanism, it still faces privacy breaches and poisoning attacks. In this paper, we propose a new privacy-preserving Byzantine-resilient swarm learning (PBSL) scheme that is resistant to poisoning attacks while protecting data privacy. Specifically, we adopt threshold fully homomorphic encryption (TFHE) to protect data privacy and provide secure aggregation, and we use cosine similarity to detect malicious gradients uploaded by malicious medical centers. Security analysis shows that PBSL is able to defend against a variety of known security attacks. Finally, PBSL is implemented by combining deep learning with a blockchain-based smart contract platform. Experiments on different datasets show that PBSL is practical and efficient.

1. Introduction

In recent years, online medical diagnosis services [1] have attracted considerable interest due to their ability to overcome geographical restrictions and reduce the waiting time for seeing doctors [2,3]. To discover hidden diseases from collected medical data, deep learning (DL) has been applied to e-healthcare systems [4,5,6]. DL can process huge amounts of medical data and automatically find valid patterns that are too complex for human-led analysis. However, DL intrinsically relies on large amounts of training data. Unfortunately, in traditional healthcare systems, medical data tend to be distributed among different hospitals, and the small amount of medical data collected by an individual hospital is often insufficient to train reliable models [7,8]. Data centralization is therefore an effective way to address the lack of training data [9]. Although the centralized model has brought many benefits, it still faces many challenges, including surges in data traffic, disclosure of local data, confidentiality of global models, and data monopolies [10].

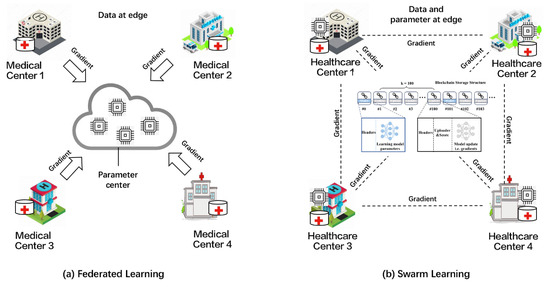

As shown in Figure 1a, Google’s federated learning (FL) [11,12] is implemented with the help of a centralized server that aggregates all local model gradients and averages them to produce a global model update. Although the problems of local data storage and local data confidentiality have been solved, the global model parameters are still processed and stored by the centralized server. Furthermore, such centralized architectures decrease fault tolerance. To solve these problems, Warnat-Herresthal et al. [13] proposed swarm learning (SL), in which multiple medical centers share their local gradients through blockchain peer-to-peer networks, and the medical center that wins the consensus mechanism replaces the centralized parameter server and updates the global parameters according to the collected gradients.

Figure 1. (a) Traditional federated learning (FL) architecture and (b) swarm learning (SL) architecture.

As shown in Figure 1b, despite the move away from centralized servers, SL still faces data security and privacy threats. First, SL neither protects the privacy of hospital data nor guarantees the confidentiality of the global model. Although local model updates and global models are recorded on the blockchain, the blockchain itself does not guarantee the privacy of local data or the confidentiality of the global model. For example, any curious medical center participating in swarm learning can infer the original training data of other medical centers from the gradients and model parameters stored in the blockchain [14,15]. Second, during gradient collection and parameter updating, the malicious behavior of dishonest medical centers can disrupt the swarm learning process and poison the federated model. In particular, malicious medical centers (e.g., Byzantine attackers) can easily disrupt swarm learning systems by spreading false information over the network [16]. For example, Bagdasaryan et al. demonstrated that dishonest edge devices can poison collaborative models by replacing updated models with carefully crafted ones [17].

Therefore, we propose privacy-preserving Byzantine-robust swarm learning (PBSL), which ensures the confidentiality of the local gradients, the privacy of the global model, and robustness against Byzantine attacks. Specifically, after the blockchain-based peer-to-peer network is established, each medical center calculates its local gradients from its local training data. Then, the local gradients, encrypted with the fully homomorphic encryption (FHE) scheme CKKS, are broadcast to all other medical centers on the blockchain-based network. Once the medical centers on the blockchain reach a consensus, the winning medical center applies the proposed Byzantine-tolerant aggregation scheme based on secure cosine similarity, which supports lossless collaborative model learning while protecting the privacy of the medical centers.

In short, the main contributions of this paper include the following three aspects:

- PBSL not only protects the privacy of local sensitive data, but also ensures the confidentiality of the global collaborative model. Using the fully homomorphic encryption (FHE) scheme CKKS, PBSL encrypts the local gradients submitted by the medical centers and aggregates them into a global collaborative model without decryption.

- PBSL achieves swarm learning (SL) that resists Byzantine attacks. To this end, we propose a privacy-preserving Byzantine-robust aggregation method, which uses secure cosine similarity to punish malicious gradients.

- A PBSL prototype is implemented by integrating deep learning with blockchain-based smart contracts. Moreover, PBSL is tested using real medical datasets to evaluate its effectiveness. Experimental results show that PBSL is effective.

The remainder of this paper is organized as follows. We review related work in Section 2. We review some primitives in Section 3, and introduce our system model and design goals in Section 4. Then, we present our scheme in Section 5. Next, we give the security and performance analyses in Section 6 and Section 7, respectively. Finally, we summarize our work in Section 8.

2. Related Work

In this section, we focus on related works about privacy-preserving FL, Byzantine-robust FL, blockchain-based FL, and swarm learning.

2.1. Privacy-Preserving Federated Learning

Although the training dataset is divided and stored separately, federated learning (FL) alone cannot protect training data privacy because the exchanged global model and local gradients still contain a large amount of sensitive information. Three popular approaches to privacy-preserving federated learning (PPFL), i.e., differential privacy (DP), homomorphic encryption (HE), and secure multi-party computation (SMC), have been proposed to address this problem.

DP-based PPFL protects local updates against inference attacks by adding random noise [18,19,20]. However, it is challenging to balance privacy against the resulting accuracy loss. SMC-based PPFL collaboratively computes aggregated values over the inputs of multiple parties through secure multi-party computation [21,22,23,24,25,26]. SMC-based PPFL has been constructed with one [27], two [28], three [29], and four [30] servers. However, although SMC, as a cryptographic primitive, provides strong privacy, it is inefficient. HE-based PPFL uses homomorphic encryption to support aggregation operations on cipher-texts, and protects training data privacy from curious parameter servers without additional loss of learning accuracy [31]. EaSTFLy [32] uses Shamir’s secret sharing and Paillier partially homomorphic encryption (PHE). BatchCrypt [33] encodes quantized gradients into signed integers and then encrypts them, achieving a training speedup compared with full-precision encryption. However, most of these schemes involve massive, complicated arithmetic operations, which bring considerable computation overhead.

In this paper, threshold fully homomorphic encryption (TFHE), which combines Shamir’s secret sharing and the CKKS fully homomorphic encryption (FHE) scheme [34], is used as the privacy protection mechanism, which greatly reduces the computing and communication overheads.

2.2. Byzantine-Robust Federated Learning

2.2.1. Byzantine Attack

It is well known that federated learning is vulnerable to Byzantine attacks [35,36,37]. To map a specified feature to a target class, the Byzantine attacker tries to modify the classification boundary of the trained model. It has been shown that an attacker can control the entire training process and steal user privacy [16].

According to the goals of the attackers, Byzantine attacks can be divided into targeted attacks [17] and untargeted attacks [35]. Targeted attacks target only one or a few data categories in the dataset, while maintaining the accuracy of other categories. Untargeted attacks are undifferentiated attacks that aim to reduce the accuracy of all data categories. And according to the abilities of the adversaries, Byzantine attacks can also be divided into data attacks [37] and model attacks [35]. In data attacks, the attackers indirectly poison the global model by poisoning the client’s local data. For example, the label-flipping attack flips the label of the normal features to the target class. And the backdoor attack is an attempt to seek a set of parameters to establish a strong link between the trigger and the target label while minimizing the impact on benign input classification. In model attacks, the attackers can directly manipulate and control the model updates of communication between the clients and the server, which directly affects the accuracy of the global model.

2.2.2. Byzantine-Robust Federated Learning

Typical FL algorithms, such as FedSGD [38] and FedAvg, are not resistant to poisoning attacks, resulting in untrustworthy and inaccurate global models. By comparing the local gradients received from different parties, Byzantine-robust federated learning (BRFL) [16,39,40,41] excludes abnormal gradients to protect the training model from poisoning attacks. Based on the Euclidean distance between any two gradients, Krum [16] excludes outlier gradients. Specifically, in each training round, a candidate model is selected. Similarly, Trimmed mean [39] first sorts the received local gradients, then removes the largest and smallest values among them, and finally takes the mean of the remaining gradients as the global gradient. GeoMed [42] updates the FL model by selecting a gradient based on the geometric median to realize gradient aggregation. Bulyan [43] first applies Krum-based aggregation and then updates the global model by averaging the gradients closest to the median value. Meanwhile, based on the cosine similarity between the parties’ historical gradients, FoolsGold [35] sets the weights of parties to ward off Sybil attacks in FL.
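To make the coordinate-wise filtering idea concrete, the following is a minimal plaintext sketch of a trimmed-mean aggregation in Python. The function name `trimmed_mean` and the parameter `trim` (the number of values discarded at each end of every coordinate) are illustrative assumptions and are not taken from [39].

```python
from typing import List


def trimmed_mean(gradients: List[List[float]], trim: int) -> List[float]:
    """Coordinate-wise trimmed mean: for every coordinate, sort the values
    reported by all parties, drop the `trim` smallest and `trim` largest,
    and average the rest."""
    if not gradients:
        raise ValueError("need at least one gradient")
    n = len(gradients)
    if 2 * trim >= n:
        raise ValueError("trim removes every value")
    dim = len(gradients[0])
    aggregated = []
    for j in range(dim):
        column = sorted(g[j] for g in gradients)
        kept = column[trim:n - trim]
        aggregated.append(sum(kept) / len(kept))
    return aggregated


honest = [[0.1, -0.2], [0.2, -0.1], [0.0, -0.3]]
poisoned = [[100.0, 100.0]]  # one party submits an extreme gradient
print(trimmed_mean(honest + poisoned, trim=1))
```

With `trim=1`, the extreme value is discarded in every coordinate, so the aggregate stays close to the honest gradients.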

However, these schemes do not consider privacy threats, since they aggregate the global model directly in plaintext. To solve this problem, the FL scheme in [40] uses DP and SMC, which not only prevents inference attacks during training but also improves model accuracy. However, because it is difficult to calculate the similarity between encrypted gradients, the scheme in [40] is vulnerable to malicious gradients. To this end, privacy-enhanced federated learning (PEFL), which employs HE as the underlying technology, punishes malicious parties via the logarithmic function of gradient data. However, PEFL still does not prevent malicious behavior by the servers or inference attacks on clients.

To develop a Byzantine-resilient, and at the same time, privacy-preserving federated learning framework, we use TFHE to protect user privacy, cosine similarity to remove malicious gradients, and blockchain to avoid malicious behavior of servers.

2.3. Blockchain-Based Federated Learning and Swarm Learning

Because it relies heavily on a single server, traditional FL cannot protect against a single point of failure or resist malicious attacks. As the infrastructure of Bitcoin, blockchain has attracted a lot of attention because of its decentralization and reliability. Several blockchain-based FL protocols [44,45,46,47] replace servers with blockchain, enabling privacy protection and enhancing trust among parties. BAFFLE [44] uses blockchain to avoid single points of failure and aggregates local models using smart contracts (SCs). Similarly, BlockFL [46] exchanges and validates local model updates through blockchain smart contracts. By providing incentives associated with training samples, BlockFL can integrate more devices and more training samples. Meanwhile, as a state-of-the-art BCFL, swarm learning (SL) [13,48] combines edge computing, blockchain networking, and federated learning while maintaining confidentiality without a central coordinator. In the e-health field, SL has been successfully applied to the diagnosis of COVID-19, tuberculosis, leukemia and lung diseases [13], skin lesion classification fairness [49], genomics data sharing [50], and risk prediction of cardiovascular events [51].

However, the above schemes impose heavy computation and communication costs on the blockchain nodes and cannot distinguish the malicious gradients. To address these issues, the blockchain-based federated learning framework with committee consensus (BFLC) [45] can effectively reduce consensus computing and prevent malicious attacks through innovative committee consensus mechanisms.

Finally, Table 1 compares our PBSL scheme with the FL schemes described above. The comparison shows that PBSL can not only resist Byzantine (poisoning) attacks and remain robust to parties dropping out, but also protect privacy and improve computational efficiency.

Table 1. A comparative summary between our scheme and previous schemes.

3. Preliminaries

In this section, we review the building blocks of PBSL, namely swarm learning and fully homomorphic encryption.

3.1. Swarm Learning

As a state-of-the-art BCFL that discards the centralized server, swarm learning (SL) shares local gradients through the swarm network and independently builds models on local data at the swarm edge nodes. SL ensures the confidentiality, privacy, and security of sensitive data with the help of blockchain. Unlike clients in traditional FL, edge nodes not only perform training tasks, but also execute transactions. The workflow of SL is as follows. A new edge node is first registered with the smart contract (SC) pre-deployed on the blockchain. Then, the swarm edge nodes perform a new training round, which includes downloading the global model and performing local training. Next, all swarm edge nodes commit their local gradients to the SC through the swarm network. Finally, the SC aggregates all local gradients to create an updated model.

Suppose there are $K$ parties $\{P_1, \dots, P_K\}$ that constitute a collaborative group, in which each party $P_i$ holds its local dataset $D_i$ ($i = 1, \dots, K$), and $D = \bigcup_{i=1}^{K} D_i$ denotes the joint dataset. Without disclosing their local sensitive data, the $K$ parties aim to cooperatively train a global classifier from the complete dataset $D$. As illustrated in Figure 1b, in the $t$th iteration, party $P_i$ downloads the global model $w_t$ from the blockchain and trains the local model on its local dataset $D_i$. Equation (1) describes the objective function optimized by training:

$$\min_{w} \; \mathbb{E}_{(x, y) \sim \mathcal{D}} \left[ L(w; x, y) \right] \quad (1)$$

where $(x, y)$ is the training sample, $L$ is the empirical error function, and $\mathcal{D}$ is the training sample distribution. We adopt stochastic gradient descent (SGD) to solve this minimization problem. Using $w_t$ and $D_i$, each party $P_i$ computes its local gradient $g_i^t$ via Equation (2):

$$g_i^t = \nabla L(w_t; D_i) \quad (2)$$

Through the consensus mechanism, the winning party performs the model update operation with Equation (3):

$$w_{t+1} = w_t - \eta \, g^t \quad (3)$$

where $g^t = \sum_{i=1}^{K} \frac{|D_i|}{|D|} g_i^t$, $|D| = \sum_{i=1}^{K} |D_i|$, and $\eta$ is the local learning rate. Once the update operation is completed, the winning party sends the updated results through transactions to all parties.
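The round structure described above can be sketched in plaintext Python. This is a minimal illustration under stated assumptions, not the paper's implementation: the model is a one-dimensional linear model, the loss is the mean squared error, and the aggregation weights each local gradient by its dataset size (a FedSGD-style choice assumed here). The names `local_gradient` and `swarm_round` are hypothetical.

```python
from typing import List, Tuple


def local_gradient(w: float, data: List[Tuple[float, float]]) -> float:
    """Gradient of the mean squared error of the 1-D model y = w * x."""
    return sum(2 * (w * x - y) * x for x, y in data) / len(data)


def swarm_round(w: float, datasets: List[List[Tuple[float, float]]], lr: float) -> float:
    """One round: every party computes a local gradient; the consensus winner
    averages them weighted by dataset size (assumed) and takes one SGD step."""
    total = sum(len(d) for d in datasets)
    aggregated = sum(len(d) / total * local_gradient(w, d) for d in datasets)
    return w - lr * aggregated


# Three parties hold disjoint samples of y = 3x.
datasets = [[(1.0, 3.0)], [(2.0, 6.0), (0.5, 1.5)], [(1.5, 4.5)]]
w = 0.0
for _ in range(200):
    w = swarm_round(w, datasets, lr=0.1)
print(round(w, 4))
```

No party ever sends its samples; only the scalar gradients leave the local loop, which is the property that SL relies on.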

3.2. The CKKS Scheme

As a typical fully homomorphic encryption (FHE) scheme, the Cheon–Kim–Kim–Song (CKKS) scheme [34] is a cryptographic technique in which addition and multiplication operations on plaintexts correspond to operations on cipher-texts. With its encoding, decoding, and rescaling mechanisms, CKKS can encrypt floating-point numbers and vectors. CKKS consists of the following functions:

- (1) Key Generation: $\mathsf{KeyGen}(1^{\lambda}) \rightarrow (pk, sk, evk)$. This procedure generates a public key $pk$ for encryption, a corresponding secret key $sk$ for decryption, and a key $evk$ for evaluation.

- (2) Encoding: $\mathsf{Encode}(z, s) \rightarrow m$. Taking an $(N/2)$-dimensional vector $z$ and a scaling factor $s$ as inputs, the procedure transforms $z$ to a polynomial $m$ by encoding, i.e., $m = \sigma^{-1}(\lfloor s \cdot \pi^{-1}(z) \rceil_{\sigma(\mathcal{R})})$, where $\sigma^{-1}$ and $\pi^{-1}$ are the inverses of $\sigma$ and $\pi$, respectively.

- (3) Encryption: $\mathsf{Enc}(m, pk) \rightarrow c$. Taking the given polynomial $m$ and public key $pk$ as inputs, the procedure outputs the cipher-text $c$.

- (4) Addition: $\mathsf{Add}(c_1, c_2) \rightarrow c_{add}$. Taking the cipher-texts $c_1$ and $c_2$ as inputs, the procedure outputs $c_{add} = c_1 + c_2$.

- (5) Multiplication: $\mathsf{Mult}(c_1, c_2, evk) \rightarrow c_{mult}$. Taking the cipher-texts $c_1$ and $c_2$ and the evaluation key $evk$ as inputs, the procedure outputs $c_{mult}$.

- (6) Rescaling: $\mathsf{RS}_{l \rightarrow l'}(c) \rightarrow c'$. Taking a cipher-text $c$ at level $l$ as input, the procedure outputs the cipher-text $c' = \lfloor \frac{q_{l'}}{q_l} c \rceil$ in $\mathcal{R}_{q_{l'}}^2$, where $\lfloor x \rceil$ represents the integer closest to the real number $x$.

- (7) Decryption: $\mathsf{Dec}(c, sk) \rightarrow m$. Taking the cipher-text $c$ and the secret key $sk$ as inputs, the procedure outputs the polynomial $m$.

- (8) Decoding: $\mathsf{Decode}(m, s) \rightarrow z$. Taking the plaintext polynomial $m$ as input, the procedure transforms $m$ into a vector $z$ using $z = \pi(\sigma(s^{-1} \cdot m))$.
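The role of the scaling factor and of rescaling can be illustrated with a deliberately simplified fixed-point analogy in Python. This sketch performs no encryption and uses plain integers instead of polynomials; it only shows why a product of two encoded values sits at the squared scale and must be rescaled. The constant `SCALE` and all function names are illustrative assumptions.

```python
SCALE = 2 ** 20  # scaling factor s (illustrative value)


def encode(x: float, scale: int = SCALE) -> int:
    """Fixed-point encoding: the nearest integer to x * s."""
    return round(x * scale)


def decode(m: int, scale: int = SCALE) -> float:
    """Inverse of encode, up to rounding error."""
    return m / scale


def add(m1: int, m2: int) -> int:
    """Addition keeps the scale unchanged."""
    return m1 + m2


def mul(m1: int, m2: int) -> int:
    """Multiplication yields a value at scale s * s."""
    return m1 * m2


def rescale(m: int, scale: int = SCALE) -> int:
    """Divide by s and round to the nearest integer, returning to scale s."""
    return round(m / scale)


a, b = encode(1.5), encode(-2.25)
print(decode(add(a, b)))           # sum of the two encoded values
print(decode(rescale(mul(a, b))))  # product after rescaling
```

In the real scheme, rescaling also consumes one level of the modulus chain, which is why the multiplicative depth of a CKKS computation is bounded.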

3.3. Threshold Fully Homomorphic Encryption

To construct distributed FHE, threshold fully homomorphic encryption (TFHE) [52] has been proposed, in which each participant has a share of a secret key corresponding to an FHE public key.

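Let $P = \{P_1, \dots, P_N\}$ be a set of parties. A threshold fully homomorphic encryption (TFHE) scheme is a tuple of PPT algorithms $(\mathsf{TFHE.Setup}, \mathsf{TFHE.Encrypt}, \mathsf{TFHE.Eval}, \mathsf{TFHE.PartDec}, \mathsf{TFHE.FinDec})$ satisfying the following specifications:

- $\mathsf{TFHE.Setup}(1^{\lambda}, 1^{d}, \mathbb{A}) \rightarrow (pk, sk_1, \dots, sk_N)$: The algorithm inputs a security parameter $\lambda$, a circuit depth $d$, and an access structure $\mathbb{A}$, and outputs a public key $pk$ and secret key shares $sk_1, \dots, sk_N$.

- $\mathsf{TFHE.Encrypt}(pk, \mu) \rightarrow ct$: The algorithm inputs a public key $pk$ and a single-bit plaintext $\mu \in \{0, 1\}$, and outputs a cipher-text $ct$.

- $\mathsf{TFHE.Eval}(pk, C, ct_1, \dots, ct_k) \rightarrow \hat{ct}$: The algorithm inputs a public key $pk$, a boolean circuit $C$ of depth at most $d$, and cipher-texts $ct_1, \dots, ct_k$ encrypted under the same public key, and outputs an evaluation cipher-text $\hat{ct}$.

- $\mathsf{TFHE.PartDec}(pk, ct, sk_i) \rightarrow p_i$: The algorithm inputs a public key $pk$, a cipher-text $ct$, and a secret key share $sk_i$, and outputs a partial decryption $p_i$ related to the party $P_i$.

- $\mathsf{TFHE.FinDec}(pk, B) \rightarrow \hat{\mu}$: The algorithm inputs a public key $pk$ and a set $B = \{p_i\}_{i \in S}$ for some $S \subseteq \{P_1, \dots, P_N\}$, where we recall that we identify a party $P_i$ with its index $i$, and deterministically outputs a plaintext $\hat{\mu} \in \{0, 1, \bot\}$.

We construct $\mathsf{TFHE}$ from an FHE scheme $\mathsf{FHE}$ and Shamir’s secret sharing scheme $\mathsf{SS}$ as follows:

- $\mathsf{TFHE.Setup}$: The algorithm inputs $\lambda$, $d$, and $\mathbb{A}$, and generates $(pk, sk) \leftarrow \mathsf{FHE.Setup}(1^{\lambda}, 1^{d})$. Then, $sk$ is divided into shares $(sk_1, \dots, sk_N)$ using $\mathsf{SS.Share}(sk, \mathbb{A})$.

- $\mathsf{TFHE.Encrypt}$: The algorithm inputs $pk$ and $\mu$, computes $ct \leftarrow \mathsf{FHE.Encrypt}(pk, \mu)$, and outputs $ct$.

- $\mathsf{TFHE.Eval}$: The algorithm inputs $pk$, $C$, and $ct_1, \dots, ct_k$, computes $\hat{ct} \leftarrow \mathsf{FHE.Eval}(pk, C, ct_1, \dots, ct_k)$, and outputs $\hat{ct}$.

- $\mathsf{TFHE.PartDec}$: The algorithm inputs $pk$, $ct$, and $sk_i$, computes the partial decryption $p_i$ of $ct$ under the share $sk_i$, and outputs $p_i$.

- $\mathsf{TFHE.FinDec}$: The algorithm inputs $pk$ and $B = \{p_i\}_{i \in S}$, and checks if $S \in \mathbb{A}$. If this is not the case, then it outputs ⊥. Otherwise, it arbitrarily chooses a satisfying set $S' \subseteq S$ of size $t$ and computes the Lagrange coefficients $\lambda_i^{S'}$ for all $i \in S'$. Then, it combines the partial decryptions as $\sum_{i \in S'} \lambda_i^{S'} \cdot p_i$, decodes the result to $\hat{\mu}$, and outputs $\hat{\mu}$.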
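The secret-sharing and Lagrange-interpolation steps that underlie the threshold construction can be sketched as follows. This is a minimal illustration of Shamir's $(t, n)$ sharing over a prime field, not the TFHE scheme itself: the modulus `PRIME`, the function names, and the use of a seeded `random.Random` (chosen only for reproducibility; a real deployment would need a cryptographically secure source) are all assumptions.

```python
import random
from typing import Dict, List, Tuple

PRIME = 2 ** 61 - 1  # a Mersenne prime used as the field modulus (illustrative)


def share(secret: int, t: int, n: int, rng: random.Random) -> List[Tuple[int, int]]:
    """Split `secret` into n shares; any t of them reconstruct it."""
    coeffs = [secret % PRIME] + [rng.randrange(PRIME) for _ in range(t - 1)]
    shares = []
    for i in range(1, n + 1):
        y = 0
        for c in reversed(coeffs):  # Horner evaluation of the polynomial at i
            y = (y * i + c) % PRIME
        shares.append((i, y))
    return shares


def lagrange_at_zero(indices: List[int]) -> Dict[int, int]:
    """Lagrange coefficients lambda_i for interpolating the polynomial at 0."""
    coeffs = {}
    for i in indices:
        num, den = 1, 1
        for j in indices:
            if j != i:
                num = num * (-j) % PRIME
                den = den * (i - j) % PRIME
        coeffs[i] = num * pow(den, -1, PRIME) % PRIME
    return coeffs


def reconstruct(shares: List[Tuple[int, int]]) -> int:
    """Recover the secret as sum(lambda_i * share_i) mod PRIME."""
    lam = lagrange_at_zero([i for i, _ in shares])
    return sum(lam[i] * y for i, y in shares) % PRIME


shares = share(123456789, t=3, n=5, rng=random.Random(0))
print(reconstruct(shares[:3]))
print(reconstruct([shares[0], shares[2], shares[4]]))
```

Any subset of $t$ shares yields the same secret, which mirrors the final decryption step choosing an arbitrary satisfying set of size $t$.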

4. Problem Statement

In this section, we formulate our system model, threat model, and design goals.

4.1. System Model

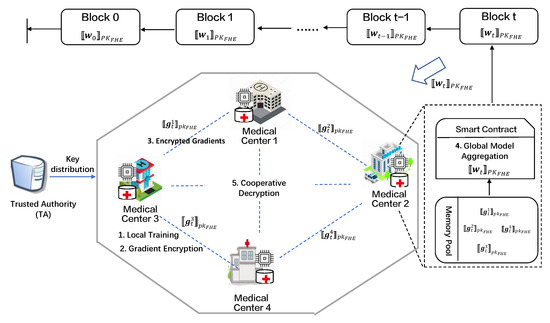

As shown in Figure 2, PBSL includes three entities: a trusted authority (TA), medical centers (MCs), and a blockchain (BC)-based peer-to-peer network. Each MC is equipped with a PC server, and the MCs are interconnected through the BC-based peer-to-peer network. The three entities involved in the system are described below.

Figure 2. System model of PBSL.

- TA stands for Trusted Authority and is responsible for generating the initial parameters and public/secret key pairs and distributing them to the MCs.

- MCs represents the $K$ medical centers participating in swarm learning. In our system, each MC trains the model using its local medical dataset, encrypts the gradient, shares the encrypted gradient on the blockchain, and cooperatively trains with the other MCs to achieve a more accurate global model.

- BC represents a blockchain platform made up of a self-organizing network of interconnected medical centers (MCs). Every node (i.e., MC) on the blockchain has the opportunity to aggregate the global model, so the BC can replace a central aggregator. The smart contract (SC) deployed on the blockchain homomorphically aggregates the encrypted gradients to obtain the global collaborative model.

4.2. Threat Model

TA is a trusted third party. Considering the autonomy of MCs in swarm learning, we adopt the “curious-and-malicious” threat model, which relies on weaker trust assumptions than the “honest-and-curious” threat model. Specifically, curious MCs honestly upload the true gradients trained on their local datasets, but attempt to obtain more information from the gradients shared by other MCs and stored on the blockchain, thus compromising users’ data privacy. To reduce the accuracy of the global model, malicious MCs can perform malicious operations or deliberately abandon local training. The potential threats to PBSL are as follows:

- Privacy: Since local gradients contain the MCs’ private information, if the MCs directly upload their local gradients in plaintext, adversaries can infer the private information of honest MCs, which leads to data leakage.

- Byzantine Attacks: A Byzantine attack refers to the arbitrary behavior of a system participant who does not follow the designed protocol. For example, a Byzantine MC randomly sends a vector in place of its local gradient, or incorrectly aggregates the local gradients of all MCs.

- Poisoning Attacks: A poisoning attack refers to various malicious behaviors launched by an MC. For example, an MC flips the labels of its samples and uploads the poisoned gradient.
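The two example behaviors named above can be simulated in a few lines of Python. This is a hedged sketch for experimentation only: the mapping $l \mapsto 9 - l$ is one common label-flipping convention for ten-class data and is assumed here, as are the function names and the Gaussian distribution of the random vector.

```python
import random
from typing import List


def flip_labels(labels: List[int], num_classes: int = 10) -> List[int]:
    """Label-flipping poisoning: map every label l to (num_classes - 1 - l)."""
    return [num_classes - 1 - l for l in labels]


def random_gradient(dim: int, rng: random.Random) -> List[float]:
    """Byzantine behavior: replace the local gradient with a random vector."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


clean = [0, 1, 7, 9]
print(flip_labels(clean))  # → [9, 8, 2, 0]
print(len(random_gradient(4, random.Random(1))))
```

A gradient trained on the flipped labels is what a poisoning MC would upload in place of an honest one.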

4.3. Design Goals

Under the system and threat models above, we aim to design a privacy-preserving Byzantine-resilient swarm learning (PBSL) scheme that provides confidentiality and privacy guarantees, resists Byzantine attacks, and reduces computational overhead, while achieving the same accuracy as classical swarm learning (SL). Specifically, the following goals should be achieved:

- Confidentiality: Guaranteeing the confidentiality of the global model stored in the blockchain. Specifically, after swarm learning, only the participating MCs can access the global model stored in the blockchain.

- Privacy: Guaranteeing the privacy of each MC’s local sensitive information. Specifically, during swarm learning, no MC’s local training data can be leaked to other MCs.

- Robustness: Detecting malicious MCs and discarding their local gradients during swarm learning. Specifically, a mechanism is constructed to identify malicious medical centers and discard their submitted local model gradients.

- Accuracy: While protecting data privacy and resisting malicious attacks, the accuracy of a model trained with PBSL should be the same as that of a model trained with the original SL.

- Efficiency: Reducing the high computational and communication cost of encryption operations in a privacy-preserving SL scheme.

- Tolerance to drop-out: Guaranteeing privacy and convergence even if up to D MCs are delayed or dropped.

5. Our Privacy-Preserving Swarm Learning

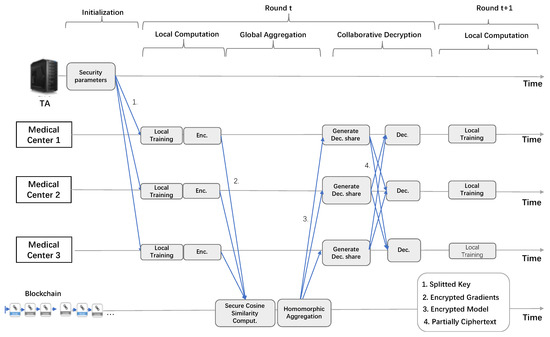

As shown in Figure 3, our PBSL described in this section mainly includes four stages: (I) system initialization, (II) local computation, (III) model aggregation, and (IV) global model decryption and updating.

Figure 3. An overview of the proposed PBSL.

First, TA sets the initialization parameters, generates a key pair $(pk, sk)$, divides $sk$ into $K$ shares through Shamir’s secret sharing, and distributes the $K$ shares to the $K$ MCs, respectively.

Then, the MCs train their models on their local datasets and encrypt their gradients with the fully homomorphic encryption scheme CKKS [34]; the encrypted gradients are broadcast to the blockchain-based network.

Next, the MC that wins the consensus mechanism uses a cosine-similarity-based strategy to punish malicious gradient vectors, obtains the encrypted global model by homomorphically aggregating the encrypted local gradients, and stores the encrypted collaborative learning results on the blockchain.

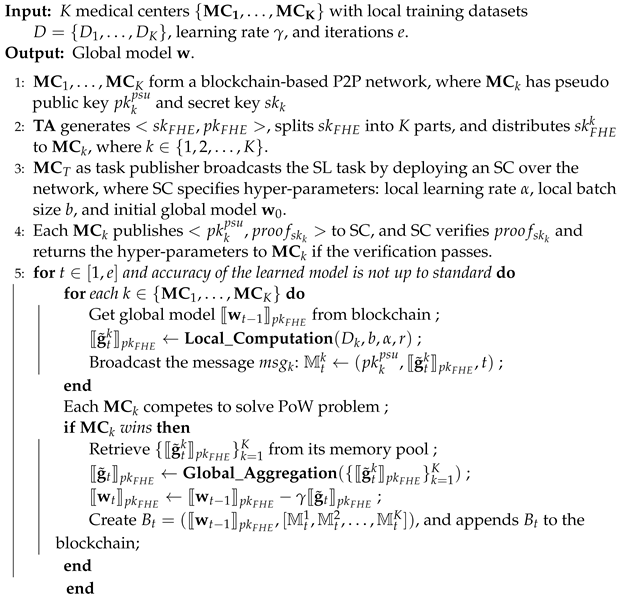

Finally, the MCs obtain the encrypted global model from the blockchain and cooperatively decrypt it to recover the global model. We summarize PBSL in Algorithm 1 and describe each process in detail below. Table 2 lists the notations used in PBSL.

Algorithm 1: PBSL

Table 2. Summary of notations in PBSL.

5.1. System Initialization

5.1.1. Blockchain P2P Network Construction

The MCs form a blockchain-based peer-to-peer network. Each MC is pseudonymous, i.e., it is identified by a pseudonymous public key and keeps the corresponding secret key private.

5.1.2. Cryptosystem Initialization

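First, TA generates a key pair $(pk, sk)$. TA sets a security parameter $\lambda$, and chooses a power-of-two $N$, an integer $h$, an integer $P$, and a real value $\sigma$. Then, TA samples $s \leftarrow \mathcal{HWT}(h)$, $a \leftarrow \mathcal{R}_{q_L}$, and $e \leftarrow \mathcal{DG}(\sigma^2)$, where $\mathcal{HWT}(h)$ is the set of signed binary vectors in $\{0, \pm 1\}^N$ whose Hamming weight is exactly $h$ and $\mathcal{DG}(\sigma^2)$ samples a vector in $\mathbb{Z}^N$ by drawing its coefficients independently from the discrete Gaussian distribution of variance $\sigma^2$. The private key is set as $sk = (1, s)$ and the public key as $pk = (b, a) \in \mathcal{R}_{q_L}^2$, where $b = -a \cdot s + e \pmod{q_L}$.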

Secondly, randomly divides into K parts. computes , s.t., , sets a threshold m, s.t., , and defines a polynomial function of secret sharing protocol , where . divides into K parts through calculating . broadcasts to all through blockchain-based network.

Finally, each MC requests its own private key share from TA.

5.1.3. Registration

To participate in swarm learning, need to register to smart contract (SC) published on the blockchain, which specifies the entire deep learning task. Each constructs a zero-knowledge proof of its private key . The is defined as , where , , , and . Then, submits “Registration” transaction to the blockchain. And then, each on the blockchain verifies , i.e., to prevent an adversary from registering itself with copied from an honest . If the verification is passed, is registered as a member of the learning group and stores its to the blockchain.

In addition, to protect the public broadcast channels, a symmetric encryption key can be established between each pair of MCs and used with a symmetric-key encryption algorithm to encrypt the traffic between them.

5.1.4. Parameter Initialization

To initialize the system parameters, a randomly selected $MC_i$ sets the initial parameters $(\eta, b, w_0)$, where $\eta$ is the learning rate, $b$ is the batch size, and $w_0$ is the initial model parameters. Then, $MC_i$ broadcasts the encrypted parameters to all other $MC_j$ ($j \neq i$). Upon receiving the encrypted parameters from $MC_i$, $MC_j$ decrypts them to obtain $(\eta, b, w_0)$. Finally, all MCs hold the same initial parameters $(\eta, b, w_0)$.

5.2. Local Computation

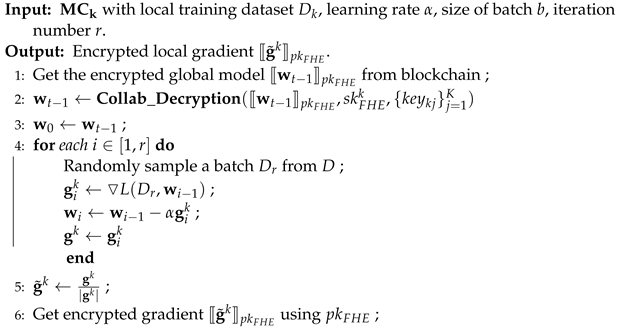

Algorithm 2 describes the Local_Computation procedure in detail. The local computation procedure has four parts: local model training, normalization, encryption, and sharing. The four parts are described in detail below.

5.2.1. Local Model Training

In the $t$th iteration, each MC obtains the encrypted global model from the latest block on the blockchain. Then, each MC calls the collaborative decryption procedure (Section 5.4) to obtain the local model $w_t$. Finally, using $w_t$ and its local dataset $D_i$, each MC computes the local gradient $g_i^t$, as shown in Equation (4):

$$g_i^t = \nabla L(w_t, D_i) \quad (4)$$

where $L$ represents the empirical loss function and $\nabla$ represents the gradient operator.

Algorithm 2: Local_Computation

5.2.2. Normalization

To apply our cosine-similarity-based aggregation rule directly to cipher-texts and to mitigate the effect of malicious gradients with large magnitudes, the local gradients need to be normalized. For each MC, we use Equation (5) to normalize the local gradient $g_i^t$:

$$\tilde{g}_i^t = \frac{g_i^t}{\lVert g_i^t \rVert} \quad (5)$$

where $\tilde{g}_i^t$ is the unit vector.
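The effect of normalization can be checked with a short plaintext sketch. It shows that a gradient scaled up by a malicious party normalizes to the same unit vector, and that for unit vectors the cosine similarity reduces to an inner product, which is the operation that can later be evaluated on cipher-texts. The function names are illustrative assumptions.

```python
import math
from typing import List


def normalize(gradient: List[float]) -> List[float]:
    """Scale a gradient to unit Euclidean norm; a zero gradient is returned unchanged."""
    norm = math.sqrt(sum(g * g for g in gradient))
    if norm == 0.0:
        return list(gradient)
    return [g / norm for g in gradient]


def inner(u: List[float], v: List[float]) -> float:
    """Inner product; equals the cosine similarity when u and v are unit vectors."""
    return sum(a * b for a, b in zip(u, v))


small = normalize([3.0, 4.0])
large = normalize([300.0, 400.0])  # same direction, 100 times the magnitude
print(small, large)
print(inner(small, normalize([4.0, 3.0])))
```

Because both inputs map to the same unit vector, magnitude alone gives an attacker no extra influence on the aggregate.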

5.2.3. Encryption

To ensure the confidentiality of local model updates, as shown in Algorithm 3, each MC encrypts its normalized gradient using the public key. Because gradients are signed floating-point vectors, they are encrypted using the CKKS scheme [34] instead of the Paillier scheme [53], which incurs high computation overheads. The normalized gradient is treated as a vector and encrypted using the CKKS encryption function.

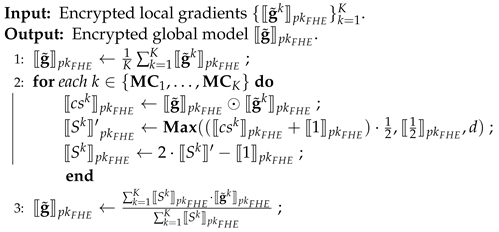

Algorithm 3: Global_Aggregation

5.2.4. Message Encapsulation and Sharing

Each encapsulates , , and timestamp t into the following message:

and broadcasts to other .

5.3. Global Aggregation

5.3.1. PoW Competition

To compete for the right to append a block to the blockchain, all MCs that make up the blockchain execute a consensus algorithm (e.g., PoW). The single winner has the right to construct a block and add it to the blockchain.
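As a toy illustration of the PoW competition, the following Python sketch searches for a nonce whose SHA-256 digest has a required number of leading zero hex digits. It is not Ethereum's consensus algorithm; the block encoding, the `difficulty` parameter, and the function names are assumptions made only for this example.

```python
import hashlib


def mine(block_data: bytes, difficulty: int) -> int:
    """Return the first nonce whose SHA-256 digest of data + nonce starts with
    `difficulty` zero hex digits."""
    prefix = "0" * difficulty
    nonce = 0
    while True:
        digest = hashlib.sha256(block_data + str(nonce).encode()).hexdigest()
        if digest.startswith(prefix):
            return nonce
        nonce += 1


def verify(block_data: bytes, nonce: int, difficulty: int) -> bool:
    """Any other node can check the winner's claim with a single hash."""
    digest = hashlib.sha256(block_data + str(nonce).encode()).hexdigest()
    return digest.startswith("0" * difficulty)


nonce = mine(b"round-1-encrypted-gradients", difficulty=3)
print(nonce, verify(b"round-1-encrypted-gradients", nonce, 3))
```

Finding the nonce is expensive while checking it is cheap, which is what lets the other MCs accept the winner without trusting it.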

5.3.2. Homomorphic Aggregation

In the tth iteration, the winner is responsible for aggregating all the encrypted gradients stored in the memory pool. decodes and homomorphically aggregates encrypted gradients to generate the encrypted global model without the need for decryption by the following equation:

5.3.3. Secure Cosine Similarity Computation

Then, we compute the cosine similarity between two gradients and . And the encrypted gradients and are obtained as and . The encrypted cosine similarity between and can be transformed to the inner product, which is described as follows:

If the cosine similarity is negative, the angle between the two gradients is greater than $90^{\circ}$, that is, the local gradient has a negative impact on the aggregated result. The trust score of each MC is defined by Equation (7) to punish the malicious gradients

Equation (8) gives the definition of the function.

According to the previous work [54], the cipher-text homomorphism operation of function is given below:

To compare the cipher-text of and , the numerical method for the comparison of homomorphic ciphers in the range of is called [54]. The encrypted score is set by
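To show how a clipping function can be evaluated with additions and multiplications only, which is what a homomorphic scheme supports, the sketch below approximates $\max(x, 0) = (x + \sqrt{x^2})/2$ using an iterative square root in the style of the numerical methods of [54]. It runs on plaintext floats; whether PBSL uses this exact iteration, and the iteration count of 8, are assumptions.

```python
def approx_sqrt(x: float, iterations: int = 8) -> float:
    """Approximate sqrt(x) for x in [0, 1] with additions and multiplications only."""
    a, b = x, x - 1.0
    for _ in range(iterations):
        a = a * (1.0 - b / 2.0)          # uses the previous value of b
        b = b * b * (b - 3.0) / 4.0
    return a


def approx_relu(x: float, iterations: int = 8) -> float:
    """max(x, 0) = (x + sqrt(x^2)) / 2, valid here for x in [-1, 1]."""
    return (x + approx_sqrt(x * x, iterations)) / 2.0


for value in (0.5, -0.5, 1.0, 0.0):
    print(value, approx_relu(value))
```

The iteration converges slowly for inputs close to zero, so more iterations (and therefore more multiplicative depth) are needed when similarities near zero must be resolved accurately.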

5.3.4. Model Aggregation and Updating

In the tth epoch, the winner homomorphically aggregates the local gradients weighted by their trust score according to the following equation:

and subsequently updates the global model:
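The trust-weighted aggregation and the model update can be sketched in plaintext as follows. This is an illustration under assumptions rather than the scheme's exact rule: the reference direction is taken as a given unit vector, the weighted sum is divided by the total trust score in the manner of FLTrust [55], and the names `aggregate` and `update` are hypothetical. In PBSL the same arithmetic would be carried out on cipher-texts.

```python
from typing import List


def inner(u: List[float], v: List[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def aggregate(normalized: List[List[float]], reference: List[float]) -> List[float]:
    """Weight each unit-norm gradient by max(cosine similarity to reference, 0)."""
    scores = [max(inner(g, reference), 0.0) for g in normalized]
    total = sum(scores)
    dim = len(reference)
    if total == 0.0:
        return [0.0] * dim  # no gradient is trusted in this round
    return [sum(s * g[j] for s, g in zip(scores, normalized)) / total
            for j in range(dim)]


def update(w: List[float], g: List[float], lr: float) -> List[float]:
    """One gradient-descent step with the aggregated gradient."""
    return [wi - lr * gi for wi, gi in zip(w, g)]


gradients = [[1.0, 0.0], [0.6, 0.8], [-1.0, 0.0]]  # the last one points the wrong way
agg = aggregate(gradients, reference=[1.0, 0.0])
print(agg)
print(update([0.0, 0.0], agg, lr=0.1))
```

The third gradient receives a trust score of zero and therefore contributes nothing to the aggregate.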

5.3.5. Construction of New Block

constructs the new block that contains the following:

and appends to the blockchain.

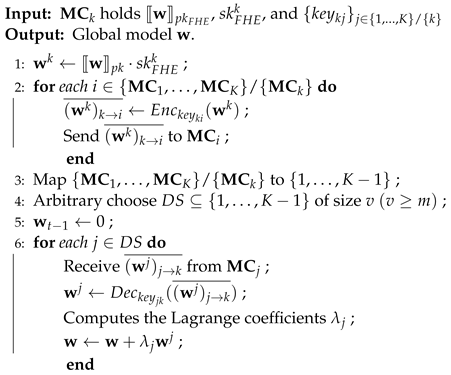

5.4. Collaborative Global Model Decryption

As described in Algorithm 4, each downloads the generated block from the blockchain. executes the partial decryption algorithm to obtain , i.e.,

Then, each device broadcasts the encrypted

for other (). And then, upon receiving from , computes . Furthermore, randomly selects v numbers from the set to form a decryption set . Finally, executes the final decryption algorithm to obtain , i.e.,

where () is the Lagrange coefficient.

Algorithm 4: Collab_Decryption

6. Security Analysis of PBSL

In this section, we revisit the security requirements of PBSL described in Section 4 and analyze the security of PBSL.

6.1. Privacy and Confidentiality

Theorem 1.

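PBSL can achieve the privacy of the local model gradients and the confidentiality of the global model.

Proof.

We show that the privacy of the local gradients and the confidentiality of the global model are guaranteed at the different stages of swarm learning.

- In the local computation stage, the encoded normalized gradient $m$ is encrypted with $pk$, i.e., $c = v \cdot pk + (m + e_0, e_1) \pmod{q_L}$, where $v \leftarrow \mathcal{ZO}(0.5)$ and $e_0, e_1 \leftarrow \mathcal{DG}(\sigma^2)$, and TA splits $sk$ into $K$ distributed secret key shares for the MCs. Because any MC holding only a single share cannot decrypt the encrypted gradients, PBSL protects the privacy of the local gradients at this stage.

- In the global model aggregation stage, the winning MC homomorphically aggregates the encrypted local gradients, then homomorphically calculates the trust scores from the encrypted cosine similarities, and updates the encrypted global model. The whole process is performed on cipher-texts, and an MC holding only a single share cannot decrypt them. Therefore, PBSL protects the confidentiality of the global model at this stage.

- In the collaborative global model decryption stage, all MCs decrypt the encrypted global model in a distributed manner, and each MC shares its partial decryption result. Because any MC holding only a single share cannot decrypt the encrypted global model, PBSL protects the privacy of the global model at this stage.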

□

6.2. Byzantine-Robust

Theorem 2.

For an arbitrary number of malicious , in the iteration, the loss between the learned model and the optimal model under no attacks is bounded, i.e.,

with probability at least , where is caused by CKKS.

Proof.

Our PBSL improves the encryption algorithm of FLTrust [55] and extends FLTrust to blockchain, so we directly apply FLTrust’s security analysis to PBSL. According to FLTrust, in our PBSL, the loss between the learned model in the iteration and the optimal model is

with probability at least , where is caused by CKKS. As described in CKKS [34], Equation (19) gives the bound of the encoding and encryption errors.

Therefore, generated by the cipher-text operation of our PBSL can be expressed as follows:

where from ⊕ is , from ⊗ is , , , , and . Then, when , we have . □

Therefore, the loss between the learned model and the optimal model is bounded.
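Since the robustness argument builds on FLTrust, a plaintext sketch of FLTrust-style trust weighting may help make the mechanism concrete. This is a minimal illustration under stated assumptions, not the PBSL implementation: PBSL evaluates these steps on cipher-text, and the `reference` gradient, the function names, and the zero-vector fallback are hypothetical choices made for this example.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two gradient vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def trust_weighted_aggregate(local_grads, reference):
    """Aggregate local gradients, weighting each by its clipped cosine similarity."""
    ref_norm = math.sqrt(sum(r * r for r in reference))
    scores, rescaled = [], []
    for g in local_grads:
        # Negative similarity is clipped to zero, so opposing gradients get no weight.
        score = max(0.0, cosine_similarity(g, reference))
        # Rescale each gradient to the reference magnitude to limit scaling attacks.
        g_norm = math.sqrt(sum(x * x for x in g))
        scale = ref_norm / g_norm if g_norm > 0.0 else 0.0
        scores.append(score)
        rescaled.append([scale * x for x in g])
    total = sum(scores)
    if total == 0.0:
        # Assumed fallback: no trusted gradient, so return a zero update.
        return [0.0] * len(reference), scores
    dim = len(reference)
    aggregated = [
        sum(s * g[d] for s, g in zip(scores, rescaled)) / total for d in range(dim)
    ]
    return aggregated, scores


# Toy run: one aligned, one orthogonal, and one reversed (malicious) gradient.
reference = [1.0, 0.0]
local_grads = [[2.0, 0.0], [0.0, 3.0], [-5.0, 0.0]]
aggregated, scores = trust_weighted_aggregate(local_grads, reference)
```

In this toy run only the aligned gradient receives a nonzero trust score, so the reversed gradient does not influence the aggregate even though it has the largest magnitude.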

7. Evaluation

In this section, we evaluate our scheme and present the experimental results to analyze the performance of our PBSL from two perspectives: defense effectiveness against attacks, and resource overhead in terms of computation.

7.1. Experimental Setup

7.1.1. Experimental Environment

Implementation Settings: To evaluate the integrated performance, we implement the PBSL scheme on a private Ethereum blockchain with publicly available benchmark datasets. In our experiments, a smart contract performs the secure global gradient aggregation algorithm in place of a centralized server.

- The blockchain system uses Ethereum, a blockchain-based smart contract platform [56,57,58]. Smart contracts written in the Solidity programming language are deployed to the private blockchain using Truffle v5.10. Ethereum is deployed on a desktop computer with a 3.3 GHz Intel(R) Xeon(R) CPU, 16 GB of memory, and Windows 10.

- The CKKS scheme is deployed using the TenSEAL library, a library for carrying out homomorphic encryption operations on tensors; its code is available on GitHub (https://github.com/OpenMined/TenSEAL, accessed on 9 June 2024).

Dataset: Our PBSL scheme was evaluated using two widely used datasets, the MNIST dataset and the FashionMNIST dataset.

- The MNIST dataset consists of handwritten digit images written by 250 different people. Every sample is a grayscale image of 28 × 28 pixels. The dataset is divided into a training set with 60,000 samples and a test set with 10,000 samples. We distributed the training set evenly across 10 MCs.

- The FashionMNIST dataset consists of images of fashion products in 10 categories. The whole dataset contains 70,000 samples (60,000 for training and 10,000 for testing). The training set was also evenly distributed across 10 MCs.

7.1.2. Byzantine Attacks

In our experiments, the following Byzantine attacks were considered:

- Label Flipping Attack: By changing the label of a training sample to another category, the label flipping attacker misleads the classifier. To simulate this attack, the labels of the samples on each malicious MC are modified from l to N − l − 1, where N is the total number of labels and l ∈ {0, 1, …, N − 1}.

- Backdoor Attack: Backdoor attackers force classifiers to make wrong decisions about samples with specific characteristics. To simulate the attack, we choose pixels as the trigger, randomly select a number of training images on each malicious MC, and overwrite the pixels at the top left of each selected image. Then, we reset the labels of all the selected images to the target class.

- Arbitrary Model Attack: The model attacker arbitrarily tampers with some of the local model parameters. To reproduce the arbitrary model attack, each malicious MC selects a random number as its jth local model parameter.

- Untargeted Model Poisoning: The model attacker constructs a poisoned local model that is similar to benign local models but reverses the direction of the correct global gradient, or is much larger (or smaller) than the maximum (or minimum) of the benign local models.
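The first three attacks above can be simulated with a few lines of code. The sketch below is illustrative only and rests on several assumptions that are not taken from the experiments: the flipping rule l → N − l − 1 is a commonly used convention, while the 3 × 3 trigger size, the trigger value, the target class, the poisoning fraction, and the Gaussian noise in `arbitrary_model_attack` are hypothetical placeholders.

```python
import random


def flip_label(label, num_classes):
    """Label flipping: map l to N - l - 1 (assumed convention)."""
    return num_classes - label - 1


def add_backdoor_trigger(image, patch_size=3, trigger_value=1.0):
    """Overwrite a square patch at the top left of a 2-D image (list of rows)."""
    poisoned = [row[:] for row in image]  # copy so the clean image is untouched
    for r in range(patch_size):
        for c in range(patch_size):
            poisoned[r][c] = trigger_value
    return poisoned


def poison_dataset(images, labels, fraction, target_class, rng):
    """Stamp the trigger on a random fraction of samples and relabel them."""
    n_poison = int(len(images) * fraction)
    chosen = set(rng.sample(range(len(images)), n_poison))
    new_images, new_labels = [], []
    for idx, (img, lab) in enumerate(zip(images, labels)):
        if idx in chosen:
            new_images.append(add_backdoor_trigger(img))
            new_labels.append(target_class)
        else:
            new_images.append(img)
            new_labels.append(lab)
    return new_images, new_labels, chosen


def arbitrary_model_attack(params, rng, scale=1.0):
    """Replace each local model parameter with a random number (Gaussian assumed)."""
    return [rng.gauss(0.0, scale) for _ in params]


# Toy run on ten blank 28 x 28 images that all carry label 5.
rng = random.Random(0)
images = [[[0.0] * 28 for _ in range(28)] for _ in range(10)]
labels = [5] * 10
bd_images, bd_labels, chosen = poison_dataset(images, labels, 0.3, 0, rng)
flipped = [flip_label(l, 10) for l in labels]
noisy_params = arbitrary_model_attack([0.1, 0.2, 0.3], rng)
```

Keeping the clean images untouched makes it easy to evaluate the same model on both clean and triggered inputs.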

7.1.3. Evaluation Metrics

To verify that our PBSL scheme improves model accuracy, we use test accuracy and test error rate as evaluation metrics on the different training datasets. For different numbers of malicious MCs, we compare the results of PBSL against the no-attack FedSGD scheme [38] as the baseline and against Krum [16], Bulyan [43], and Trim-mean [39] as controls.

7.1.4. SL Settings

In our experiments, the total number K of MCs is set to 10, and all MCs are selected in each iteration during training. We selected models derived from convolutional neural networks (CNNs) with structure: , to train on the two datasets using Python, NumPy, and TensorFlow. The training data are evenly distributed among the MCs, i.e., for both the MNIST and FashionMNIST datasets, the size of each local dataset is 6000. We considered the proportion of different malicious MCs from to . Our PBSL scheme is compared with SL and Krum, and the batch size b is set to 128.
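The data split described above can be checked with a short calculation. This is a minimal sketch assuming a contiguous, equal-sized split of the 60,000 training samples; the function name `partition_evenly` is hypothetical, and the source does not state whether samples are shuffled before being distributed.

```python
import math


def partition_evenly(num_samples, num_parties):
    """Return one (start, end) index range per party, sizes differing by at most 1."""
    base, extra = divmod(num_samples, num_parties)
    ranges, start = [], 0
    for k in range(num_parties):
        size = base + (1 if k < extra else 0)
        ranges.append((start, start + size))
        start += size
    return ranges


# K = 10 parties, 60,000 training samples, batch size b = 128.
ranges = partition_evenly(60_000, 10)
local_size = ranges[0][1] - ranges[0][0]
batches_per_epoch = math.ceil(local_size / 128)
```

With these settings each local dataset holds 6000 samples, which corresponds to 47 mini-batches per local epoch when the last, smaller batch is kept.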

7.2. Experimental Results

To demonstrate that the PBSL scheme meets our goals of accuracy, robustness, privacy, efficiency, and reliability, we conduct the following experiments. The experimental results show that PBSL can achieve the expected goals, which are discussed below.

7.2.1. Experimental Results on Defense Performance

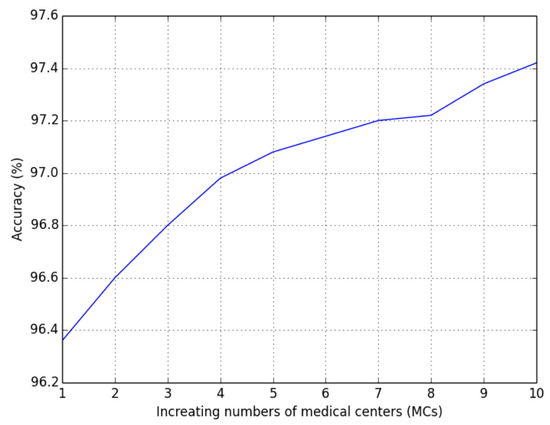

Impact of Number of MCs: The test accuracy was measured while increasing the number of MCs involved in collaborative training. The experiments were run with the number of MCs ranging from 1 to 10. Figure 4 shows that the more MCs participate, the higher the accuracy.

Figure 4. The impact of the number of MCs.

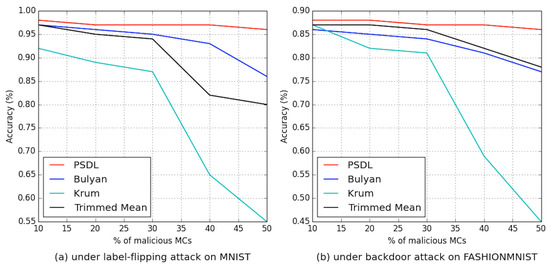

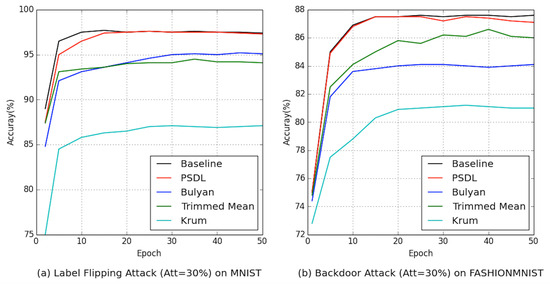

Impact of Different Proportions of Malicious MCs: To check the robustness of our PBSL scheme, we evaluate the accuracy loss caused by changes in the ratio of malicious MCs. Figures 5 and 6 show the results of PBSL training on the two datasets in the presence of different proportions of malicious MCs. The experiments show that the proposed scheme remains robust as this proportion changes.

Figure 5. Comparison of accuracy with different numbers of malicious MCs under different attacks on different datasets.

Figure 6. Comparison of accuracy with different epochs under different attacks on different datasets.

Impact of different attacks: To evaluate Byzantine robustness against typical Byzantine attacks, we launched targeted and untargeted attacks against our PBSL scheme. Table 3 shows the training results on the MNIST and FashionMNIST datasets in the presence of different proportions of malicious MCs under targeted and untargeted attacks. The results show that PBSL maintains the same accuracy as the baseline under these attacks, achieving the goals of robustness and accuracy.

Table 3. Accuracy of PBSL and Bulyan with different numbers of malicious MCs under untargeted attack and targeted attack on different datasets.

7.2.2. Efficiency Comparison with Related FL Approaches

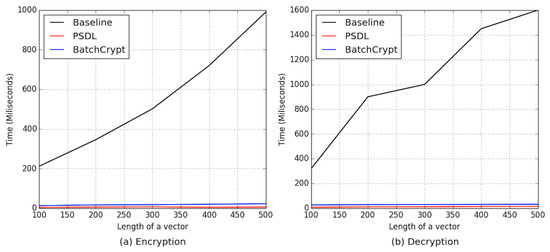

Comparison of PBSL with alternative privacy protection schemes. In the PBSL scheme, all operations, including similarity calculations and model aggregation, are computed on cipher-text. No participant can recover the MCs' original plaintext from the cipher-text, so the PBSL scheme meets the MCs' requirements for data privacy. To verify the efficiency of PBSL, we use the Paillier-based federated learning scheme PEFL [41] as the baseline and compare it with PBSL and BatchCrypt [33], a federated learning scheme based on HE. For each MC, we use the time required for encryption and decryption in each iteration as the criterion. As shown in Figure 7, PBSL requires less encryption and decryption time than both the baseline and BatchCrypt. This is because PBSL adopts the CKKS encryption scheme [34], which is well suited to encrypting large floating-point vectors and network models with many parameters.

Figure 7. Comparison of the time cost of encryption and decryption in the baseline, BatchCrypt, and PBSL.

Comparison of PBSL with alternative blockchain-based schemes. Table 4 compares the model accuracy and mining overhead on the FashionMNIST dataset for LearningChain [59], BCFL [45], and PBSL, reporting the training time per iteration and the mining time per block. First, we observe that PBSL achieves the highest model accuracy of the three schemes. This is because PBSL protects privacy with fully homomorphic encryption (FHE) instead of differential privacy, and therefore does not inject differential-privacy noise into the model. Second, the mining overhead of PBSL is lower than that of LearningChain but higher than that of BCFL. This is because LearningChain defends against Byzantine attacks at the cost of resource consumption for mining, while BCFL reduces the mining overhead at the expense of model robustness.

Table 4. Comparison of accuracy and mining overhead on the FashionMNIST dataset.

Model aggregation is implemented as functions of a smart contract (SC), and the computational cost of a smart contract function is measured in gas units. Therefore, we measure the computational consumption of PBSL in gas. Using the CNN model, gas costs are tested in each iteration with 10 MCs. After all the MCs have submitted their local updates in cipher-text, SC obtains the global gradient at gas per transaction by homomorphically aggregating all local updates.

8. Conclusions

In this article, we review the shortcomings of swarm learning (SL) and propose a new privacy-preserving Byzantine-resilient swarm learning scheme for e-health, called PBSL, which ensures the privacy of local gradients and tolerance toward Byzantine attacks. With the CKKS cryptosystem based on threshold decryption and decentralized computing based on blockchain, each MC can safely aggregate the other MCs' gradients to generate a more accurate global model, while the confidentiality of the final global model is ensured. In the process of global model aggregation, our proposed Byzantine-robust aggregation method uses secure cosine similarity to penalize malicious MCs. Detailed security analysis and extensive experiments show that PBSL provides privacy protection and is efficient.

In this paper, our attack detection strategy is based on the cosine similarity between the local model gradient and the global model gradient. In future work, we will study new attack detection strategies that are suitable for blockchain platforms and preserve privacy, so that the system can withstand new attack vectors and unforeseen threats.

Author Contributions

Conceptualization, X.Z.; Methodology, X.Z.; Software, T.L.; Writing—original draft, X.Z.; Writing—review & editing, T.L.; Supervision, H.L.; Project administration, H.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Publicly available datasets were analyzed in this study. This data can be found here: [http://yann.lecun.com/exdb/mnist/, https://github.com/zalandoresearch/fashion-mnist].

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Meier, C.; Fitzgerald, M.C.; Smith, J.M. Ehealth: Extending, enhancing, and evolving health care. Annu. Rev. Biomed. Eng. 2013, 15, 359–382.

2. Liu, X.; Lu, R.; Ma, J.; Chen, L.; Qin, B. Privacy-preserving patient-centric clinical decision support system on naive bayesian classification. IEEE J. Biomed. Health Inform. 2016, 20, 655–668.

3. Rahulamathavan, Y.; Veluru, S.; Phan, C.W.; Chambers, J.A.; Rajarajan, M. Privacy-preserving clinical decision support system using gaussian kernel-based classification. IEEE J. Biomed. Health Inform. 2014, 18, 56–66.

4. Wiens, J.; Saria, S.; Sendak, M.; Ghassemi, M.; Liu, V.X.; Doshi-Velez, F.; Jung, K.; Heller, K.; Kale, D.; Saeed, M.; et al. Do no harm: A roadmap for responsible machine learning for healthcare. Nat. Med. 2019, 25, 1337–1340.

5. Courtiol, P.; Maussion, C.; Moarii, M.; Pronier, E.; Pilcer, S.; Sefta, M.; Manceron, P.; Toldo, S.; Zaslavskiy, M.; Le Stang, N.; et al. Deep learning-based classification of mesothelioma improves prediction of patient outcome. Nat. Med. 2019, 25, 1519–1525.

6. Warnat-Herresthal, S.; Perrakis, K.; Taschler, B.; Becker, M.; Baßler, K.; Beyer, M.; Günther, P.; Schulte-Schrepping, J.; Seep, L.; Klee, K.; et al. Scalable Prediction of Acute Myeloid Leukemia Using High-Dimensional Machine Learning and Blood Transcriptomics. iScience 2020, 23, 100780.

7. Rajkomar, A.; Dean, J.; Kohane, I. Machine learning in medicine. N. Engl. J. Med. 2019, 380, 1347–1358.

8. Savage, N. Calculating disease. Nature 2017, 550, 115–117.

9. Ping, P.; Hermjakob, H.; Polson, J.S.; Benos, P.V.; Wang, W. Biomedical informatics on the cloud: A treasure hunt for advancing cardiovascular medicine. Circ. Res. 2018, 122, 1290–1301.

10. Kaissis, G.A.; Makowski, M.R.; Ruckert, D.; Braren, R.F. Secure privacy-preserving and federated machine learning in medical imaging. Nat. Mach. Intell. 2020, 2, 305–311.

11. Konecny, J.; McMahan, H.B.; Yu, F.X.; Richtárik, P.; Suresh, A.T.; Bacon, D. Federated learning: Strategies for improving communication efficiency. arXiv 2016, arXiv:1610.05492.

12. McMahan, H.B.; Moore, E.; Ramage, D.; Arcas, B. Federated learning of deep networks using model averaging. arXiv 2016, arXiv:1602.05629v1.

13. Warnat-Herresthal, S.; Schultze, H.; Shastry, K.L.; Manamohan, S.; Mukherjee, S.; Garg, V.; Sarveswara, R.; Händler, K.; Pickkers, P.; Aziz, N.A.; et al. Swarm Learning for decentralized and confidential clinical machine learning. Nature 2021, 594, 265–270.

14. Song, C.; Ristenpart, T.; Shmatikov, V. Machine learning models that remember too much. In Proceedings of the 2017 ACM SIGSAC Conference on Computer and Communications Security, Dallas, TX, USA, 30 October–3 November 2017; ACM: New York, NY, USA, 2017; pp. 587–601.

15. Melis, L.; Song, C.; De Cristofaro, E.; Shmatikov, V. Inference attacks against collaborative learning. arXiv 2018, arXiv:1805.04049.

16. Blanchard, P.; El Mhamdi, E.M.; Guerraoui, R.; Stainer, J. Machine learning with adversaries: Byzantine tolerant gradient descent. Adv. Neural Inf. Process. Syst. 2017, 30, 119–129.

17. Bagdasaryan, E.; Veit, A.; Hua, Y.; Estrin, D.; Shmatikov, V. How to backdoor federated learning. arXiv 2018, arXiv:1807.00459.

18. Shokri, R.; Shmatikov, V. Privacy-preserving deep learning. In Proceedings of the ACM Conference on Computer and Communications Security, Denver, CO, USA, 12–16 October 2015; pp. 1310–1321.

19. Heikkila, M.A.; Koskela, A.; Shimizu, K.; Kaski, S.; Honkela, A. Differentially private cross-silo federated learning. arXiv 2020, arXiv:2007.05553.

20. Zhao, L.; Wang, Q.; Zou, Q.; Zhang, Y.; Chen, Y. Privacy-preserving collaborative deep learning with unreliable participants. IEEE Trans. Inf. Forensics Secur. 2020, 15, 1486–1500.

21. Xu, R.; Baracaldo, N.; Zhou, Y.; Anwar, A.; Ludwig, H. HybridAlpha: An efficient approach for privacy-preserving federated learning. In Proceedings of the 12th ACM Workshop on Artificial Intelligence and Security, London, UK, 15 November 2019; pp. 13–23.

22. Hinton, G.; Deng, L.; Yu, D.; Dahl, G.E.; Mohamed, A.R.; Jaitly, N.; Senior, A.; Vanhoucke, V.; Nguyen, P.; Sainath, T.N.; et al. Deep neural networks for acoustic modeling in speech recognition: The shared views of four research groups. IEEE Signal Process. Mag. 2012, 29, 82–97.

23. Chan, T.-H.; Jia, K.; Gao, S.; Lu, J.; Zeng, Z.; Ma, Y. PCANet: A simple deep learning baseline for image classification? IEEE Trans. Image Process. 2015, 24, 5017–5032.

24. Aliper, A.; Plis, S.; Artemov, A.; Ulloa, A.; Mamoshina, P.; Zhavoronkov, A. Deep learning applications for predicting pharmacological properties of drugs and drug repurposing using transcriptomic data. Mol. Pharm. 2016, 13, 2524–2530.

25. Budaher, J.; Almasri, M.; Goeuriot, L. Comparison of several word embedding sources for medical information retrieval. In Proceedings of the Working Notes of CLEF 2016 - Conference and Labs of the Evaluation Forum, Évora, Portugal, 5–8 September 2016; pp. 43–46.

26. Rav, D.; Wong, C.; Deligianni, F.; Berthelot, M.; Andreu-Perez, J.; Lo, B.; Yang, G.Z. Deep learning for health informatics. IEEE J. Biomed. Health Inf. 2017, 21, 4–21.

27. Bonawitz, K.; Ivanov, V.; Kreuter, B.; Marcedone, A.; McMahan, H.B.; Patel, S.; Ramage, D.; Segal, A.; Seth, K. Practical secure aggregation for privacy-preserving machine learning. In Proceedings of the ACM SIGSAC Conference on Computer and Communications Security, Dallas, TX, USA, 30 October–3 November 2017; pp. 1175–1191.

28. Mohassel, P.; Zhang, Y. SecureML: A system for scalable privacy-preserving machine learning. In Proceedings of the 2017 IEEE Symposium on Security and Privacy (SP), San Jose, CA, USA, 25 May 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 19–38.

29. Wagh, S.; Gupta, D.; Chandran, N. SecureNN: 3-party secure computation for neural network training. Proc. Priv. Enhanc. Technol. 2019, 3, 26–49.

30. Chaudhari, H.; Rachuri, R.; Suresh, A. Trident: Efficient 4pc framework for privacy preserving machine learning. In Proceedings of the 27th Annual Network and Distributed System Security Symposium, NDSS, San Diego, CA, USA, 23–26 February 2020.

31. Phong, L.T.; Aono, Y.; Hayashi, T.; Wang, L.; Moriai, S. Privacy-preserving deep learning via additively homomorphic encryption. IEEE Trans. Inf. Forensics Secur. 2018, 13, 1333–1345.

32. Dong, Y.; Chen, X.; Shen, L.; Wang, D. EaSTFLy: Efficient and secure ternary federated learning. Comput. Secur. 2020, 94, 101824.

33. Zhang, C.; Li, S.; Xia, J.; Wang, W. BatchCrypt: Efficient homomorphic encryption for Cross-Silo federated learning. In Proceedings of the USENIX Annual Technical Conference (USENIX ATC 20), Online, 15–17 July 2020; pp. 493–506.

34. Cheon, J.; Kim, A.; Kim, M.; Song, Y. Homomorphic encryption for arithmetic of approximate numbers. In Advances in Cryptology, Proceedings of the ASIACRYPT 2017: 23rd International Conference on the Theory and Applications of Cryptology and Information Security, Hong Kong, China, 3–7 December 2017; Springer: Cham, Switzerland, 2017; pp. 409–437.

35. Fung, C.; Yoon, C.J.M.; Beschastnikh, I. Mitigating sybils in federated learning poisoning. arXiv 2018, arXiv:1808.04866.

36. Fang, M.; Cao, X.; Jia, J.; Gong, N. Local model poisoning attacks to byzantine-robust federated learning. In Proceedings of the 29th USENIX Security Symposium (USENIX Security), Boston, MA, USA, 12–14 August 2020; pp. 1605–1622.

37. Tolpegin, V.; Truex, S.; Gursoy, M.E.; Liu, L. Data poisoning attacks against federated learning systems. In Proceedings of the 25th European Symposium on Research in Computer Security, ESORICS 2020, Guildford, UK, 14–18 September 2020; Springer: Cham, Switzerland, 2020; pp. 480–501.

38. McMahan, B.; Moore, E.; Ramage, D.; Hampson, S.; Arcas, B.A.Y. Communication-efficient learning of deep networks from decentralized data. In Proceedings of the International Conference on Artificial Intelligence and Statistics, Fort Lauderdale, FL, USA, 20–22 April 2017; pp. 1273–1282.

39. Yin, D.; Chen, Y.; Ramchandran, K.; Bartlett, P.L. Byzantine-robust distributed learning: Towards optimal statistical rates. In Proceedings of the International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; pp. 5636–5645.

40. Truex, S.; Baracaldo, N.; Anwar, A.; Steinke, T.; Ludwig, H.; Zhang, R.; Zhou, Y. A hybrid approach to privacy-preserving federated learning. In Proceedings of the 12th ACM Workshop on Artificial Intelligence and Security, London, UK, 15 November 2019; pp. 1–11.

41. Liu, X.; Li, H.; Xu, G.; Chen, Z.; Huang, X.; Lu, R. Privacy-enhanced federated learning against poisoning adversaries. IEEE Trans. Inf. Forensics Secur. 2021, 16, 4574–4588.

42. Chen, Y.; Su, L.; Xu, J. Distributed statistical machine learning in adversarial settings: Byzantine gradient descent. Proc. ACM Meas. Anal. Comput. Syst. 2017, 1, 1–25.

43. Guerraoui, R.; Rouault, S. The hidden vulnerability of distributed learning in byzantium. In Proceedings of the International Conference on Machine Learning (ICML 2018), Stockholm, Sweden, 10–15 July 2018; pp. 3521–3530.

44. Ramanan, P.; Nakayama, K. BAFFLE: Blockchain based aggregator free federated learning. In Proceedings of the International Conference on Blockchain (Blockchain), Virtual Event, 2–6 November 2020; pp. 72–81.

45. Li, Y.; Chen, C.; Liu, N.; Huang, H.; Zheng, Z.; Yan, Q. A blockchain-based decentralized federated learning framework with committee consensus. IEEE Netw. 2021, 35, 234–241.

46. Kim, H.; Park, J.; Bennis, M.; Kim, S.L. Blockchained on-device federated learning. IEEE Commun. Lett. 2020, 24, 1279–1283.

47. Weng, J.; Zhang, J.; Li, M.; Zhang, Y.; Luo, W. DeepChain: Auditable and Privacy-Preserving Deep Learning with Blockchain-Based Incentive. IEEE Trans. Dependable Secur. Comput. 2021, 18, 2438–2455.

48. Warnat-Herresthal, S.; Schultze, H.; Shastry, K.; Manamohan, S.; Mukherjee, S.; Garg, V.; Sarveswara, R.; Händler, K.; Pickkers, P.; Aziz, N.A.; et al. Swarm Learning as a privacy-preserving machine learning approach for disease classification. bioRxiv 2020.

49. Fan, D.; Wu, Y.; Li, X. On the Fairness of Swarm Learning in Skin Lesion Classification. In Clinical Image-Based Procedures, Distributed and Collaborative Learning, Artificial Intelligence for Combating COVID-19 and Secure and Privacy-Preserving Machine Learning, Proceedings of the 10th Workshop, CLIP 2021, Second Workshop, DCL 2021, First Workshop, LL-COVID19 2021, and First Workshop and Tutorial, PPML 2021, Strasbourg, France, 27 September–1 October 2021; CoRR Abs/2109.12176; Springer: Berlin/Heidelberg, Germany, 2021.

50. Oestreich, M.; Chen, D.; Schultze, J.; Fritz, M.; Becker, M. Privacy considerations for sharing genomics data. EXCLI J. 2021, 20, 1243.

51. Westerlund, A.; Hawe, J.; Heinig, M.; Schunkert, H. Risk Prediction of Cardiovascular Events by Exploration of Molecular Data with Explainable Artificial Intelligence. Int. J. Mol. Sci. 2021, 22, 10291.

52. Jain, A.; Rasmussen, P.M.R.; Sahai, A. Threshold Fully Homomorphic Encryption. Cryptology ePrint Archive, Report 2017/257. 2017. Available online: http://eprint.iacr.org/2017/257 (accessed on 9 June 2024).

53. Paillier, P. Public-key crypto-systems based on composite degree residuosity classes. In Proceedings of the International Conference on the Theory and Application of Cryptographic Techniques, Prague, Czech Republic, 1–2 May 1999; pp. 223–238.

54. Cheon, J.; Kim, D.; Lee, H.; Lee, K. Numerical method for comparison on homomorphically encrypted numbers. In Proceedings of the ASIACRYPT 2019 25th International Conference on the Theory and Application of Cryptology and Information Security, Kobe, Japan, 8–12 December 2019; pp. 415–445.

55. Cao, X.; Fang, M.; Liu, J.; Gong, N.Z. FLTrust: Byzantine-robust federated learning via trust bootstrapping. In Proceedings of the Network and Distributed System Security Symposium, Virtual, 21–25 February 2021; pp. 1–18.

56. Li, Z.; Kang, J.; Yu, R.; Ye, D.; Deng, Q.; Zhang, Y. Consortium blockchain for secure energy trading in industrial internet of things. IEEE Trans. Ind. Inform. 2018, 14, 3690–3700.

57. Hu, S.; Cai, C.; Wang, Q.; Wang, C.; Luo, X.; Ren, K. Searching an encrypted cloud meets blockchain: A decentralized, reliable and fair realization. In Proceedings of the IEEE INFOCOM 2018-IEEE Conference on Computer Communications, Honolulu, HI, USA, 16–19 April 2018.

58. Wang, S.; Zhang, Y.; Zhang, Y. A blockchain-based framework for data sharing with fine-grained access control in decentralized storage systems. IEEE Access 2018, 6, 437–450.

59. Chen, X.; Luo, J.J.; Liao, C.W.; Li, P. When machine learning meets blockchain: A decentralized, privacy-preserving and secure design. In Proceedings of the 2018 IEEE International Conference on Big Data (Big Data), Seattle, WA, USA, 10–13 December 2018; pp. 1178–1187.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).