Abstract

Multi-factor authentication (MFA) is a system for authenticating an individual’s identity using two or more pieces of data (known as factors). The reason for using more than two factors is to further strengthen security through the use of additional data for identity authentication. Sequential MFA requires a number of steps to be followed in sequence for authentication; for example, with three factors, the system requires three authentication steps. In this case, to proceed with MFA using a deep learning approach, three artificial neural networks (ANNs) are needed. In contrast, in parallel MFA, the authentication steps are processed simultaneously. This means that processing is possible with only one ANN. A convolutional neural network (CNN) is a method for learning images through the use of convolutional layers, and researchers have proposed several systems for MFA using CNNs in which various modalities have been employed, such as images, handwritten text for authentication, and multi-image data for machine learning of facial emotion. This study proposes a CNN-based parallel MFA system that uses concatenation. The three factors used for learning are a face image, an image converted from a password, and a specific image designated by the user. In addition, a secure password image is created at different bit-positions, enabling the user to securely hide their password information. Furthermore, users designate a specific image other than their face as an auxiliary image, which could be a photo of their pet dog or favorite fruit, or an image of one of their possessions, such as a car. In this way, authentication is rendered possible through learning the three factors—that is, the face, password, and specific auxiliary image—using the CNN. 
The contribution of this study is to demonstrate that an MFA system can be built with a lightweight mobile multi-factor CNN (MMCNN), whose low number of parameters makes it usable even in mobile devices. Furthermore, an algorithm that can securely transform a text password into an image is proposed, and it is demonstrated that the three considered factors carry the same weight of information for authentication, based on the false acceptance rate (FAR) values obtained experimentally with the proposed system.

1. Introduction

Researchers have paid close attention to MFA, as it makes tasks requiring identity verification more secure and leads to improved performance through the use of additional data for authentication. Rattani and Tistarelli [1] reported that the classification performance was improved when using a method that fused face and iris images. Van Goethem et al. [2] proposed a multi-factor system using a password, fingerprint, and SMS (or email) to increase the security of authentication, and they explained that it was lightweight and could be used in mobile devices. To improve security, Shao et al. [3] proposed a fuzzy graph model that can maintain privacy for MFA system users. Ometov et al. [4] stated that highly secure MFA is necessary for the safe use of Advanced Internet of Things (IoT) in smart cities. He et al. [5] suggested that the judgment accuracy of emotion recognition could be increased by combining two factors (an electroencephalogram (EEG) signal and eye movement, or an EEG signal and peripheral physiology) based on brain–computer interfaces. Deebak and Hwang [6] proposed a lightweight two-factor authentication system that can be employed in a social-IoMT (Internet of Medical Things) environment for a smart e-health system that guarantees privacy. Several researchers have surveyed research trends regarding MFA. In 2018, Ometov et al. [7] surveyed papers which studied MFA as a means of improving security. In 2021, Mujeye [8] conducted a user survey on whether MFA was used by people with mobile devices and found that 50% of users were using MFA in a mobile environment. From a bibliometrics perspective, Saqib et al. [9] conducted a recent MFA-related survey in 2022. They reported that most papers were published in 2019 and, by country, the US, India, and China produced the largest number of MFA-related papers. Additionally, in 2023, Otta et al. [10] conducted a survey on various factors that can be used for authentication to avoid threatening cloud infrastructure and proposed a method for configuring safe and efficient MFA.

A convolutional neural network (CNN) is a deep learning model that imitates the human visual processing system using convolutional, pooling, and fully connected layers, making it suitable for image classification. The convolutional layer is used to find the image’s feature points, the pooling layer reduces the weights and number of calculations required for image processing, and the fully connected layer classifies the image. Applying CNNs in the context of MFA improves its accuracy and security, aspects that have fueled the consistent publication of related research. For instance, Ying and Chuah [11] attempted to solve the anti-spoofing problem through simultaneously testing the user’s liveness along with a face image and proposed a multi-task CNN. Hammad et al. [12] implemented multimodal biometric authentication using two steps to pass both ECG and fingerprint tests and then fused the results. Kwon et al. [13] proposed a secure authentication system which compares face images captured by CCTV with face images captured using a smartphone. Zhou et al. [14] proposed a secure authentication system using a CNN for face and acoustic features, while Jena et al. [15] learned fingerprint and facial features using Google Colaboratory’s CNN model. Sivakumar et al. [16] learned fingerprint and finger-vein images using VGG-19 and proposed adding a hashing method for multimodal biometric templates to increase security. Liu et al. [17] used passwords, human hand impedance, and geometry data as factors, and they developed a CNN to enable user recognition. Zhang et al. [18] proposed electroencephalogram (EEG) identity authentication through transforming EEG signals into three-dimensional data that were then learned using a multi-scale convolutional structure. There have also been cases where CNNs have been applied in a multi-input system for continuous authentication, with the aim being to monitor login sessions. To identify smartphone users, Hu et al. [19] and Li et al. [20,21] applied a CNN through transforming frequency domain data created by an accelerometer and gyroscope using the fast Fourier transform method. Xiong et al. [22] used a CNN for ECG classification, while Tu et al. [23], Yaguchi et al. [24], and Dua et al. [25] proposed the use of multi-input CNNs for human activity recognition.

CNN-based multi-factor research is also being conducted in the medical field, where precise judgment is required. Some papers have proposed increasing medical precision through using CNNs with a multi-factor, multi-input structure. For example, Oktay et al. [26] reported a cardiac image approach that creates a high-resolution image through inputting several low-resolution images, which is helpful in medical treatment. Abbood and Al-Assadi [27] revealed that CT scans and X-Ray COVID-19 images have been used for multi-input CNN learning to diagnose COVID-19, increasing the accuracy to 98%. Elmoufidi et al. [28] found that the accuracy of glaucoma assessment can be improved to 99.1% using fundus images in a multiple input system that employs VGG19, a CNN architecture. Additionally, to ensure safe healthcare services, Gupta et al. [29] proposed a telecare medical information system (TMIS) based on machine learning to maintain anonymity in telemedicine. Systems demonstrating that a CNN-based multi-input system can be applied to environmental management have also been proposed. For instance, Wang et al. [30] proposed a 4D fractal CNN model that can predict water quality using lake images. Huang and Kuo [31] recommended applying such an approach for short-term photovoltaic power prediction through multi-input CNN learning of temperature, solar radiation, and PV system output data.

The objective of this study is to propose an MFA system that satisfies the following:

- (1) A mobile-applicable lightweight MFA system;
- (2) A multi-modal system that can use both image and text simultaneously, in order to use a text password as a factor;
- (3) A system where passwords can be used safely;
- (4) A system in which the weights of three or more factors are applied equally.

To achieve these objectives, a CNN-based system was developed. A CNN-based design was chosen as people often look at other people’s faces and appearances to determine who they are. In addition, there are many studies on CNN-based authentication systems, allowing the results of this paper to be comparatively evaluated. This study proposes a CNN-based MFA system using feature-level concatenation, and it is shown that the objectives were achieved, according to the experimental results. The three factors used for learning were a face image, a securely converted password image, and a user-specified auxiliary image. The contributions of this study are as follows:

- (1) This study presents a mobile multi-factor CNN (MMCNN) with three inputs, containing only two convolutional layers and two FC layers. With the MMCNN, the proposed CNN-based multi-modal MFA system exhibited an accuracy of 99.6% in the experiment. The three factors used for learning were a face image, an image securely converted from a password, and a specific image designated by the user. These contributions are introduced in Section 3.1, Section 3.2, and Section 4.1.
- (2) This study presents an algorithm to create a secure transformed password image, called the Secure Password Transformation (SPT) algorithm. To ensure that a password image is safe even if it is exposed, the pixel location of the password bit among the image pixels is configured differently for each user. This algorithm is introduced in Section 3.3.
- (3) This study also presents a method for demonstrating that the three considered factors have the same weight regarding the accuracy of authentication, which is confirmed by assuming that one or two factors include fake information. It is found that the weight value of each factor on the authentication system can be determined by judging their FAR values. In this research, the three factors were adjusted to equal weights which were then shown through the FAR values. The experimental results of the system are described in Section 4.2.

2. Related Works

For the dual purpose of increasing accuracy and security, researchers have continually proposed novel recognition and authentication systems using multiple inputs and factors based on CNN architectures. Xing and Qiao [32] proposed the DeepWriter model, with a CNN structure containing seven layers, in order to identify writers from their handwriting. Through testing, they distinguished 300 writers with an accuracy of 99.01%. A method was then employed to input two handwritten images simultaneously, and the two input images were combined with an element-wise sum before the softmax classifier after passing through the seven layers. Utilizing feature-level concatenation, Sun et al. [33] developed a CNN with a seven-layer structure and simultaneously input three images of the same flower to classify flowers into three classes. The CNN displayed an accuracy of 89.6%. Delgado-Escano et al. [34] proposed a system that detects pedestrian movement and identifies pedestrians. The researchers reported that gait recognition can be performed using accelerometer signals and signals generated from a gyroscope. Data from 744 people were classified, and an accuracy of 96.2% was achieved using a CNN structure with five layers. Two input factors were learned in parallel and a feature-level fusion method was used. Attempts have also been made to improve the accuracy of medical diagnoses with CNN-based multi-input systems. For instance, Naglah et al. [35] proposed a system to detect thyroid cancer using T2-weighted magnetic resonance imaging (MRI) images and apparent diffusion coefficient (ADC) map images. A multi-input CNN called the computer-aided diagnosis (CAD) system was utilized, and two factors of the data were learned using a parallel structure and then combined through feature-level concatenation. In their experiment, 49 cases were distinguished and an accuracy of 87% was recorded when using a CNN structure with four layers. 
Through experiments, the researchers revealed that their proposed multi-input system was suitable for mobile devices, as the CNN had relatively high accuracy compared with the existing AlexNet and ResNet18 while having a lightweight structure consisting of only four layers. Additionally, Mazumder et al. [36] reported that CNN learning can be used to diagnose respiratory symptoms through two inputs: respiratory audio sound and demographic vector. These two factors utilized feature-level concatenation through parallel learning and exhibited an accuracy of 87.3% in distinguishing 126 subjects. The CNN used had a deep CNN structure with nine layers. Multi-modality was implemented using sound and vector data.

Other researchers have strived to use multiple signal inputs. They found that the signals can be factors in the convolutional layers. For instance, Chen et al. [37] used a CNN to learn three signals—electrocardiography (ECG), electrodermal activity (EDA), and respiratory activity (RSP)—for emotion recognition. These three factors underwent parallel learning to achieve feature-level concatenation. The experiment was conducted with a CNN structure containing eight layers, and five types of emotions were classified with an accuracy of 83.8%. Ahamed et al. [38] proposed a system using ECG and photoplethysmography (PPG) data in a CNN structure for two-factor authentication. The two signals were fused during the parallel learning process to increase security. The CNN had 17 layers and classified 66 data samples with 99.8% accuracy. Ammour et al. [39] proposed a biometric authentication system utilizing Resnet50 for learning using two factors: ECG signals and fingerprint images. A multi-modal system was implemented using signal and image data as factors, and data samples from 70 people were classified with an accuracy of 98.6%. The two factors were concatenated in a feature-level manner. Sing and Tiwari [40] proposed biometric authentication using a CNN structure called the parallel Sparrow Search Algorithm-Deep Belief Network (SSA-DBN), consisting of seven layers, in which the three factors are ECG signals, fingerprints, and sclera images. Multimodality was achieved through inputting signals and images simultaneously, and SSA-DBN classified data from 80 people with an accuracy of 97.13%. For these factors, score-level fusion was performed after learning was complete. Rajasekar et al. [41] proposed an MFA system using a parallel CNN with three factors: face images, fingerprints, and iris images. Data from 106 people were classified with 99.92% accuracy using four layers in the CNN. 
As this comprises a lightweight structure with only four layers, it can be applied in mobile devices. However, as only images were learned, it is difficult to assert that a multi-modal approach was implemented.

The MMCNN proposed in this paper is a parallel multi-input CNN for MFA with three factors: face images, auxiliary images, and password text. It has a multi-modal structure that can learn text and images simultaneously. It has only four layers and, thus, can be applied in mobile devices. In addition, data security is improved through the use of a secure password image production method for text passwords. A review of existing CNN-based MFA research papers revealed that none of them provides all of the functions implemented in the proposed MFA system. These points are summarized in Table 1.

Table 1.

Comparison of the proposed MFA system with other MFA systems.

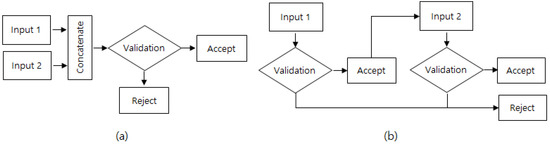

Researchers have also proposed MFA systems that can increase accuracy and security levels through mixing a CNN with another method. Table 1 also lists these kinds of MFA systems. For example, Zhou et al. [42] proposed two-factor authentication using echo signals reflected from facial landmarks and a 3D facial contour, which they named EchoPrint. They explained that this is a secure method, as the echoed acoustic features are not easily spoofed. The two factors were subjected to parallel feature-level concatenation, and a CNN with six layers was used to extract the features of echo signals. The support vector machine (SVM) classifier was then applied to classify the data of 50 people with an accuracy of 93.75%. Aleuya and Vicente [43] implemented facetureID, an MFA system, using face images, OTP text, and handwriting gesture images. Face and gesture images were learned using a CNN containing seven layers, following which the one-time password (OTP) confirmation procedure in text format was used to classify 20 people with an accuracy of 96%. The method for checking the three factors was sequential authentication, where the face image was checked first, followed by the OTP text and, finally, the gesture. When the authentication process of the MFA system is performed sequentially, a separate CNN is needed for each factor. The difference between the sequential authentication process and the parallel structure is depicted in Figure 1.

Figure 1.

Two types of authentication process: (a) parallel multi-input validation and (b) sequential multi-input validation.
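The difference between the two processes in Figure 1 can be sketched in a few lines of Python. This is only an illustration under simple assumptions: the function names `sequential_mfa` and `parallel_mfa`, the toy equality checks, and the enrolled values are all hypothetical stand-ins for trained networks and real factor data.

```python
def sequential_mfa(factors, verifiers):
    """Check the factors one after another; one verifier (e.g., one CNN) per factor."""
    for name, check in verifiers:
        if not check(factors[name]):
            return False          # stop at the first factor that fails
    return True


def parallel_mfa(factors, joint_model):
    """Pass all factors to a single model that decides in one step."""
    return joint_model(factors["face"], factors["password"], factors["auxiliary"])


# Toy stand-ins for trained models: plain equality against enrolled values.
enrolled = {"face": "face-A", "password": "pw-A", "auxiliary": "dog-A"}
verifiers = [(name, lambda value, ref=ref: value == ref) for name, ref in enrolled.items()]
joint_model = lambda face, pw, aux: (face, pw, aux) == tuple(enrolled.values())

genuine = dict(enrolled)
imposter = dict(enrolled, password="pw-B")

seq_results = (sequential_mfa(genuine, verifiers), sequential_mfa(imposter, verifiers))
par_results = (parallel_mfa(genuine, joint_model), parallel_mfa(imposter, joint_model))
```

In the sequential case three separate verifiers are needed, whereas the parallel case uses a single joint model, which is the structural point made in the text.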

Priya and Sumalatha [44] proposed an MFA system using password text and a signature image. Employing the sequential authentication system, they first confirmed the password and then the signature image. The signature image was learned using a CNN with seven layers, and the identity was authenticated using a hashed password to strengthen the security. This two-factor authentication system exhibited an accuracy of 99% when used in a sample of 200 people. Sajjad et al. [45] implemented a multi-input two-tier structure for anti-spoofing. In the first tier, each image was hashed to increase the security level and enable anti-spoofing, and the hashed images were learned with a CNN. The sequential authentication system was applied in the order of fingerprint, finger-vein, and face images. Using GoogLeNet with 22 layers as a CNN, data from 50 people were classified with an accuracy of 99.75%. Mehdi Cherrat et al. [46] employed a five-layer CNN in parallel to learn fingerprint, finger-vein, and face images. For these three factors, score level fusion was performed after the CNN evaluation results, following which the RF classifier was applied to classify the data of 106 people with an accuracy of 99.49%. Shalaby et al. [47] proposed the use of OTP and iris images to strengthen security when using bank automated teller machines (ATMs), and devised a system using a CNN with a five-layer structure to learn iris images. This is a sequential authentication system, which performs final authentication using OTP after checking the iris images learned with the CNN. The image itself was encrypted and transmitted to the server to increase the security level, and the data of 60 people were classified with 99.33% accuracy. Utilizing a CNN–LSTM (long short-term memory) structure for fear level classification, Masuda and Yairi [48] learned electroencephalogram (EEG) and peripheral physiological signal (PPS) data. 
Feature-level concatenation with a parallel structure was employed, and four fear levels were distinguished with an accuracy of 98.79% using CNN–LSTM with 11 layers.

Table 2 presents a list of recent CNN-based MFA systems which have obtained more than 97% accuracy. Ahamed et al. [38] and Ammour et al. [39] suggested systems with 17 layers and 50 layers, respectively, which are not suitable for use in mobile devices. Sing and Tiwari [40] proposed a relatively lightweight system with seven layers, but did not consider the safety of the data. The system of Rajasekar et al. [41] recognizes only the image as a factor and, so, it cannot implement multi-modality. The system of Masuda and Yairi [48] has 11 layers, making it unsuitable for mobile devices, and does not consider data safety. Sasikala [49] proposed a relatively lightweight system with six layers, but it is a complex system with a convolutional layer, gated recurrent unit (GRU) layer, and attention layer; furthermore, it implements only image data, so it cannot implement multi-modality. On the other hand, the MFA System with MMCNN proposed in this study has a four-layer CNN structure which is suitable for use in mobile devices, and is implemented as a multi-modality system such that both image and text can be used as factors. In addition, as it converts text passwords into secure images using the secure password transformation (SPT) algorithm, it takes data security into account. Therefore, the proposed system can be considered as a system with superior functions to those of existing CNN-based MFA systems.

Table 2.

List of recent CNN-based MFA systems with high accuracy.

In this study, a lightweight CNN that can be used in mobile devices is proposed. MobileNet [50] and ShuffleNet [51] have been proposed for use in mobile devices; however, MobileNet has a 28-layer structure and ShuffleNet has an 11-layer structure; thus, they are considered to have too many layers. AlexNet [52], which was proposed in the early stages of CNN development, has a simple structure of eight layers; therefore, based on this structure, a four-layer structure suitable for mobile devices was further proposed. Meanwhile, research on capturing text information through images has been conducted. Piugie et al. [53] proposed a safe user authentication system which converts keystroke dynamics behavioral data into a 3D image, which is learned with a CNN and utilized as an authentication factor along with the password. Tkachenko et al. [54] suggested a two-level quick-response (2LQR) code that has public and private levels for private messages or authentication. Christiana et al. [55] proposed a visual cryptographic scheme (VCS) technique that represents the one-time password (OTP) text as an image, then creates three encrypted shares with the image. When decrypting the shares, XOR operations are performed on the three shares to restore the image with the original OTP text. In this study, a safe text-to-image conversion algorithm is proposed, called the Secure Password Transformation algorithm, which, unlike existing methods, hides the location of pixels containing text information.

3. Proposed System

The proposed system is a CNN-based MFA system consisting of three methods. The three methods are an eight-layer multi-input CNN, a four-layer MMCNN, and the SPT (Secure Password Transformation) algorithm, which safely converts text passwords into images.

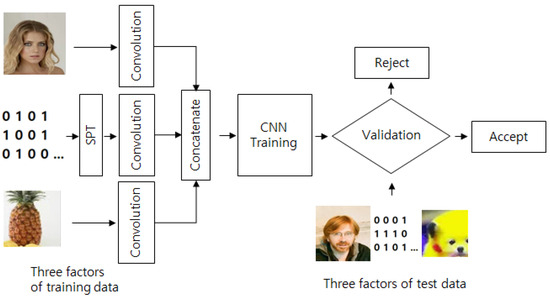

3.1. Overall Structure of Proposed MFA System

The proposed MFA system uses a face image, a text password, and a photo designated by the user as input factors. The designated photo, which shows something that the user will always have (e.g., their pet dog, favorite fruit, house, or car), is used as the auxiliary image. The text password is converted into a secure password image using the SPT algorithm before being input to the first convolutional layer. Learning proceeds after the three input factors are concatenated in the CNN. Subsequently, the entered test images are validated to determine whether the user is accepted as the registrant. Figure 2 depicts the overall structure of the proposed CNN-based MFA system.

Figure 2.

Overall structure of the proposed MFA system.

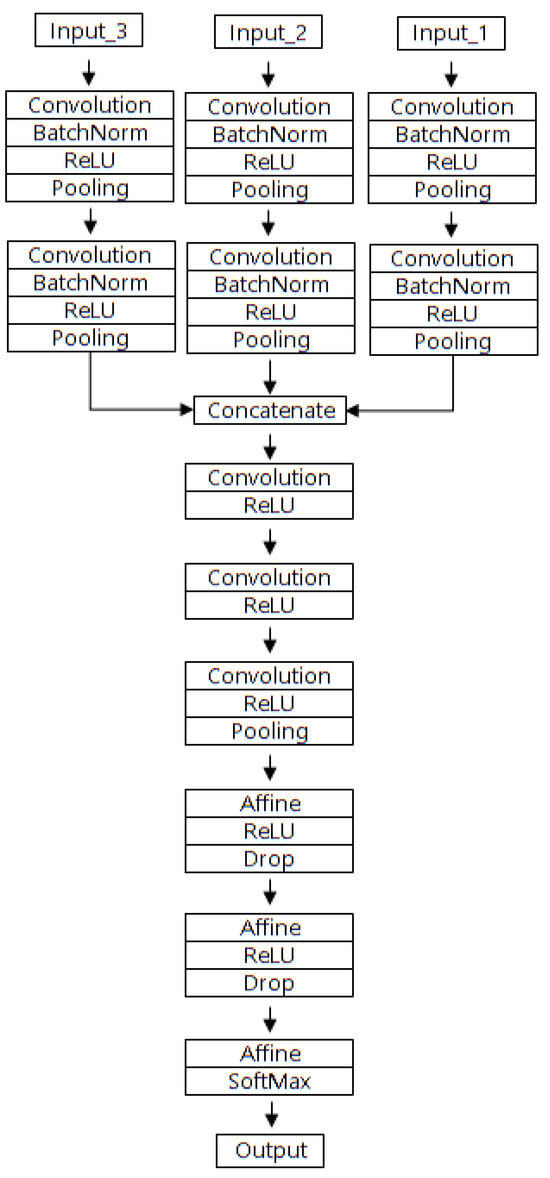

Figure 3 displays the structure of the eight-layer multi-input CNN, which is trained on data with a three-factor input structure. The three factors are each learned with two convolutional layers, undergo a concatenation process, and then pass through three convolutional layers and three fully connected (FC) layers (Affine layers) to output the final class, determined by SoftMax classification. Using this eight-layer multi-input CNN, the results of the experiment exhibited 99.8% accuracy.

Figure 3.

Structure of the proposed eight-layer multi-input CNN.

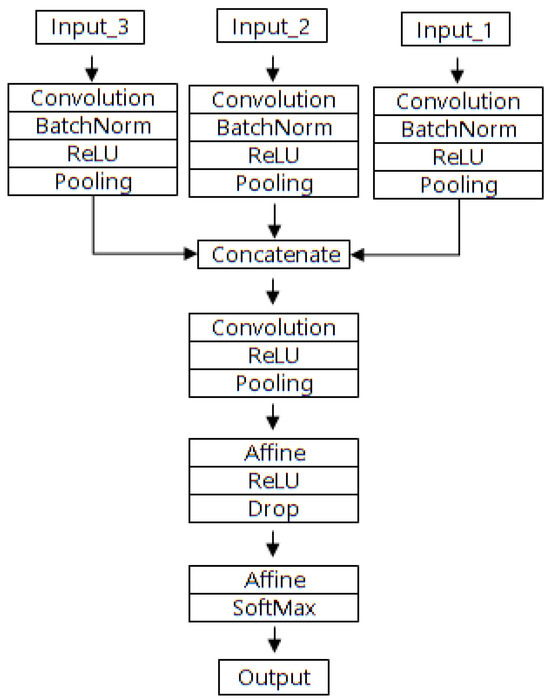

3.2. Mobile Multi-Factor CNN (MMCNN)

In addition, this study presents a mobile multi-factor CNN (MMCNN) with a lightweight structure of only four layers for use in mobile devices, the experimental results for which revealed an accuracy rate of 99.6%. The MMCNN passes through one convolutional layer for each input factor, then concatenates the three factors. Subsequently, it passes through one convolutional layer and two FC layers (Affine layers), the result of which is output through SoftMax classification. Figure 4 displays the schematic structure of the MMCNN, and Table 3 provides detailed specifications for each layer.

Figure 4.

Structure of the MMCNN.

Table 3.

Detailed specifications of the MMCNN.

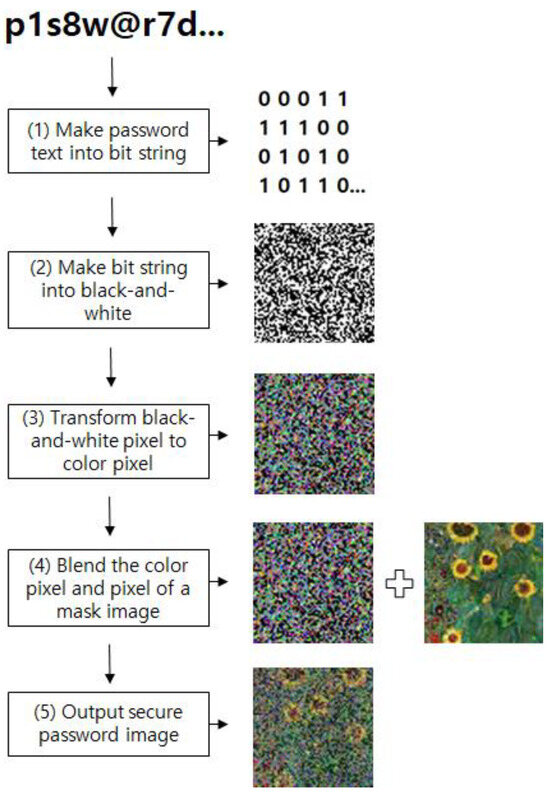
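The data flow of the MMCNN (one convolutional layer per factor, concatenation, one shared convolutional layer, two FC layers, and SoftMax) can be traced with a small forward-pass sketch. It is not the network specified in Table 3: the input size, kernel size, hidden width, class count, and random weights below are toy assumptions chosen so that the example runs with the standard library only, and all three inputs are given the same size for simplicity.

```python
import math
import random


def conv2d(channels, kernels):
    """'Valid' convolution over square 2D channels, summed across channels, then ReLU."""
    k = len(kernels[0])
    size = len(channels[0]) - k + 1
    out = [[0.0] * size for _ in range(size)]
    for r in range(size):
        for c in range(size):
            acc = 0.0
            for ch, ker in zip(channels, kernels):
                for i in range(k):
                    for j in range(k):
                        acc += ch[r + i][c + j] * ker[i][j]
            out[r][c] = max(0.0, acc)
    return out


def dense(vector, weights):
    """Fully connected layer without bias: one weight row per output unit."""
    return [sum(w * v for w, v in zip(row, vector)) for row in weights]


def softmax(logits):
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def mmcnn_forward(face, password, auxiliary, params):
    # Layer 1: one convolutional layer per input factor (three parallel branches).
    branches = [conv2d([img], [ker])
                for img, ker in zip((face, password, auxiliary), params["branch"])]
    # Concatenation: the three feature maps are stacked as three channels.
    merged = conv2d(branches, params["merged"])                 # layer 2: shared convolution
    flat = [v for row in merged for v in row]
    hidden = [max(0.0, h) for h in dense(flat, params["fc1"])]  # layer 3: FC + ReLU
    return softmax(dense(hidden, params["fc2"]))                # layer 4: FC + SoftMax


rng = random.Random(0)
SIZE, K, HIDDEN, CLASSES = 6, 3, 8, 4    # toy values, not the paper's
params = {
    "branch": [rand_matrix(K, K, rng) for _ in range(3)],
    "merged": [rand_matrix(K, K, rng) for _ in range(3)],
    "fc1": rand_matrix(HIDDEN, (SIZE - 2 * (K - 1)) ** 2, rng),
    "fc2": rand_matrix(CLASSES, HIDDEN, rng),
}
face, password, auxiliary = (rand_matrix(SIZE, SIZE, rng) for _ in range(3))
probs = mmcnn_forward(face, password, auxiliary, params)
```

The output `probs` holds one probability per class. In the actual system the face image is larger than the other two inputs, so the real layer specifications must reconcile the feature-map sizes before concatenation; the sketch sidesteps this by using equal input sizes.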

3.3. Secure Password Transformation (SPT) Algorithm

SPT is an algorithm that transforms a text password into a secure password image. There are five steps, and the function of each is described as follows.

- (1) Convert the password text into a bit string: This step converts the password entered as text into a binary bit string. It is written as a bit string of a specified length, taking into account the number of pixels to be used in the image. In this experiment, the length was set to 2080 bits.
- (2) Convert the bit string into a black-and-white image: The password bit string is composed into a grayscale image, where 0 is black and 1 is white. In this experiment, the image size was set to 65 × 65 = 4225 pixels, of which 2080 pixels were created from the password bit string and the remaining 2145 pixels were filled with random bits. The locations of the 2080 password pixels were fixed, and security was implemented by specifying different locations for each user.
- (3) Transform black-and-white pixels to color pixels: This step transforms the grayscale image into an RGB color image. In this experiment, the 2080 password pixels were transformed into color pixels through multiplying by a specified value, while the remaining 2145 pixels were assigned random colors.
- (4) Blend the color pixels and pixels of a mask image: The password RGB color image created in (3) and a mask image designated for each user are blended. The mask image was designated separately for each user, in order to further enhance security. Pixel blending is achieved through applying the following equation, in which the α value was set to 0.5, Image_mask denotes the RGB pixel values of the mask image, and Image_RGB_color denotes the RGB pixel values of the password RGB color image:

  $$\mathrm{Image}_{pw} = \alpha \times \mathrm{Image}_{mask} + (1 - \alpha) \times \mathrm{Image}_{RGB\_color}$$

- (5) Output the secure password image: The final secure password image that is created is saved.

The step-by-step generated images are displayed in Figure 5.

Figure 5.

Steps involved in transforming a text password into a secure password image.
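As a worked example of the blending in step (4), consider a single pixel. The pixel values here are hypothetical and chosen only to make the arithmetic easy to follow: a mask pixel of (10, 20, 30) and a password color pixel of (200, 120, 40), with α = 0.5 as in the experiment.

$$
\mathrm{Image}_{pw} = 0.5 \times (10, 20, 30) + (1 - 0.5) \times (200, 120, 40) = (105, 70, 35)
$$

Each blended channel is the average of the two inputs, so neither the mask value nor the password color can be read off the output pixel without knowing the other.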

The SPT algorithm takes the password text and a mask image as input and outputs a secure password image. Password image security can be explained from two perspectives. First, if the password text is converted into an image with a width of 65 pixels and a height of 65 pixels, the image has 65 × 65 = 4225 pixels. If the password bit string length is fixed to 2080, the number of ways to choose 2080 positions among 4225 pixels is $\binom{4225}{2080} \gg \binom{4225}{200} \gg 2^{1024}$, a search space large enough to be considered safe. Second, the mask image designated for each user has a size of 65 × 65 pixels, and the password image is safe, as blending is performed while the mask image is hidden within the system rather than exposed to the outside. Algorithm 1 presents the SPT algorithm.
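The size of this search space can be checked numerically. The short sketch below uses Python's `math.comb` to express both binomial coefficients in bits; the variable names are illustrative.

```python
import math

TOTAL_PIXELS = 65 * 65          # 4225 pixels in the password image
PW_BITS = 2080                  # number of pixels that carry password bits

# Number of possible position sets, expressed as a power of two.
bits_full = math.log2(math.comb(TOTAL_PIXELS, PW_BITS))
bits_200 = math.log2(math.comb(TOTAL_PIXELS, 200))

print(f"log2 C(4225, 2080) = {bits_full:.1f}")
print(f"log2 C(4225, 200)  = {bits_200:.1f}")
```

Both values exceed 1024, which is the comparison made in the text.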

Algorithm 1. The Secure Password Transformation (SPT) algorithm.

    # Input:  password text pw_text, mask image image_mask (65 x 65 RGB array)
    # Output: secure password image secure_pw_image
    # Note: stringtobinary, RGB_generater, image_path, and file_name are not
    # defined in this listing and must be supplied by the implementation.
    import numpy as np
    import cv2
    from PIL import Image

    # initialize variables
    img_width = 65      # width and height
    img_pixel = 4225    # 65 x 65 = 4225
    pw_length = 2080    # password length = 2080 bits
    alpha = 0.5         # alpha value used for blending images

    # (1) Make password text into a bit string
    password_bits = stringtobinary(pw_text, pw_length)

    # (2) Make bit string into a black-and-white image
    # Select the 2080 password pixel locations among the 4225 pixels
    pw_place = np.random.choice(img_pixel, size=pw_length, replace=False)
    # Random 0/1 bits fill every location; the password bits then
    # overwrite the 2080 selected positions.
    image_bits = np.random.choice(2, size=img_pixel, replace=True)
    image_bits[pw_place] = password_bits
    # Convert the 4225-bit array into a 65 x 65 gray image: 0 -> black, 1 -> white
    pw_array = image_bits.reshape(img_width, img_width)
    # If necessary, check the black-and-white output using pw_array
    img_bw = pw_array * 16581375    # RGB black and white
    img_black_white = Image.fromarray(img_bw)

    # (3) Transform black-and-white pixels to color pixels
    # Random choice of RGB values in the range 0-255
    RGB_image_bits = np.random.choice(256, size=img_pixel, replace=True)
    # RGB data generation
    RGB_data = np.zeros((img_width, img_width, 3), dtype=np.uint8)
    for i in range(img_width):          # 65
        for j in range(img_width):      # 65
            for k in range(3):          # RGB
                RGB_data[i][j][k] = RGB_generater(pw_array, RGB_image_bits)
    img_RGB_color = Image.fromarray(RGB_data, 'RGB')

    # (4) Blend the color pixels and the pixels of the mask image
    # 0.5 * image_mask + (1 - 0.5) * img_RGB_color
    secure_pw_image = cv2.addWeighted(image_mask, alpha, np.asarray(img_RGB_color), 1 - alpha, 0)

    # (5) Output the secure password image
    cv2.imwrite(image_path + file_name, secure_pw_image)
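Because Algorithm 1 relies on helpers that are not listed, a self-contained sketch of the same five steps may help. It uses only the Python standard library and makes several assumptions that are not taken from the paper: the password is encoded as zero-padded UTF-8 bits (`string_to_bits`), the per-user pixel positions are derived from a hypothetical `user_seed`, a password bit is colored by multiplying it by a hypothetical `color` triple, and images are flat lists of RGB tuples rather than image files.

```python
import random

IMG_WIDTH = 65
IMG_PIXELS = IMG_WIDTH * IMG_WIDTH   # 4225
PW_LENGTH = 2080                     # password length in bits
ALPHA = 0.5                          # blending weight of the mask image


def string_to_bits(text, length):
    """Assumed encoding: UTF-8 bits, zero-padded (or truncated) to `length`."""
    bits = [int(b) for byte in text.encode("utf-8") for b in format(byte, "08b")]
    return (bits + [0] * length)[:length]


def spt(pw_text, mask, user_seed, color=(200, 120, 40)):
    """Return (secure image, password positions); `mask` is a list of 4225 RGB tuples."""
    user_rng = random.Random(user_seed)   # per-user secret fixing the bit positions
    noise = random.Random()               # fresh randomness for the decoy pixels

    # (1) Password text -> fixed-length bit string
    pw_bits = string_to_bits(pw_text, PW_LENGTH)

    # (2) Place the password bits at user-specific positions; random bits elsewhere
    places = user_rng.sample(range(IMG_PIXELS), PW_LENGTH)
    bits = [noise.randint(0, 1) for _ in range(IMG_PIXELS)]
    for pos, bit in zip(places, pw_bits):
        bits[pos] = bit

    # (3) Password pixels: bit multiplied by a color value; other pixels: random colors
    is_pw = set(places)
    rgb = [tuple(bits[p] * c for c in color) if p in is_pw
           else tuple(noise.randrange(256) for _ in range(3))
           for p in range(IMG_PIXELS)]

    # (4) Blend with the mask: alpha * mask + (1 - alpha) * color image
    blended = [tuple(round(ALPHA * m + (1 - ALPHA) * v) for m, v in zip(mask[p], rgb[p]))
               for p in range(IMG_PIXELS)]

    # (5) Output the secure password image (returned here instead of saved to disk)
    return blended, places


mask = [(10, 20, 30)] * IMG_PIXELS        # toy uniform mask image
image, places = spt("hunter2", mask, user_seed=42)
_, places_again = spt("hunter2", mask, user_seed=42)
```

With the same `user_seed`, the password positions are identical across calls, while the decoy pixels differ each time, so repeated conversions of one password yield different images.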

4. Experimental Results and Discussion

Two types of CNN were used in the experiment: a three-input CNN with eight layers and an MMCNN with four layers. The MMCNN has a lightweight structure that can be used in mobile devices. The data set of three factors comprised face, password, and auxiliary images. The password images were the result of text conversion using the SPT algorithm. For the experiment, 35 samples were created for each of the 100 classes. Of these, 30 samples per class were used for learning, and 5 were used for testing; thus, out of 3500 data samples, 3000 were used for training and 500 were used for testing. Table 4 summarizes the details of the experiment. The source of the data set is available on GitHub [56].

Table 4.

Experimental details.

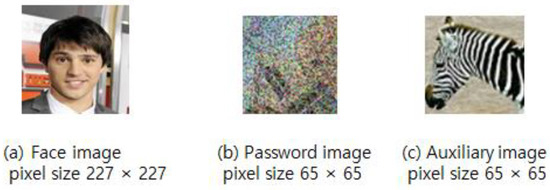
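The split described above can be reproduced with a short per-class partition. The paper does not state how the five test samples per class were selected, so the random selection and the `seed` parameter below are assumptions.

```python
import random

NUM_CLASSES = 100
SAMPLES_PER_CLASS = 35
TRAIN_PER_CLASS = 30


def split_per_class(seed=0):
    """Split each class into 30 training and 5 test samples (selection is assumed random)."""
    rng = random.Random(seed)
    train, test = [], []
    for label in range(NUM_CLASSES):
        indices = list(range(SAMPLES_PER_CLASS))
        rng.shuffle(indices)
        train += [(label, i) for i in indices[:TRAIN_PER_CLASS]]
        test += [(label, i) for i in indices[TRAIN_PER_CLASS:]]
    return train, test


train, test = split_per_class()
print(len(train), len(test))
```

Splitting within each class keeps every class represented in both sets, which matches the 3000/500 totals given in the text.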

4.1. Experimental Data Set and the Results

The face images were 227 × 227 pixels in size, while the password and auxiliary images were each 65 × 65 pixels. Figure 6 displays a sample of the data used in the experiment.

Figure 6.

Sample data.

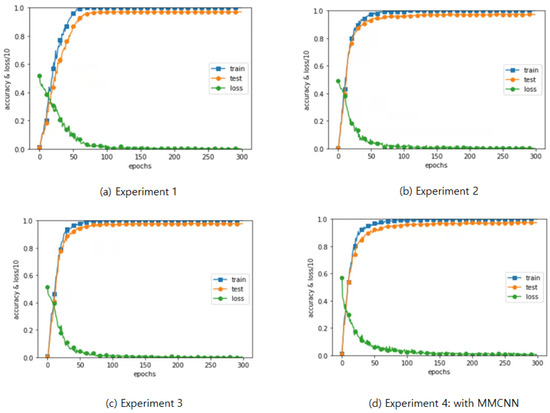

The experiment was conducted in four ways. The first experiment used two factors for authentication: face and password images. An accuracy of 98.8% was achieved using the eight-layer CNN. The second experiment used face and auxiliary images and achieved an accuracy of 98.6% using the eight-layer CNN. The third experiment involved three-factor authentication using face, password, and auxiliary images, and achieved an accuracy of 99.8% using the eight-layer CNN. The fourth experiment consisted of three-factor authentication using the four-layer MMCNN and achieved an accuracy of 99.6%. Table 5 summarizes the four experiments. Figure 7 graphically illustrates the results of the four experiments, displaying the training accuracy, test accuracy, and loss values for 300 epochs.

Table 5.

Results of the experiments.

Figure 7.

Results of the experiments for 300 epochs.

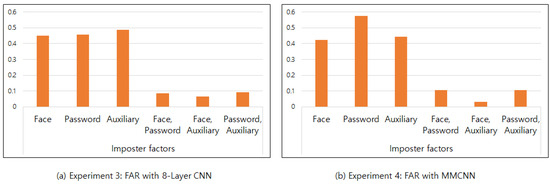

4.2. Experimental Results: FRR, FAR

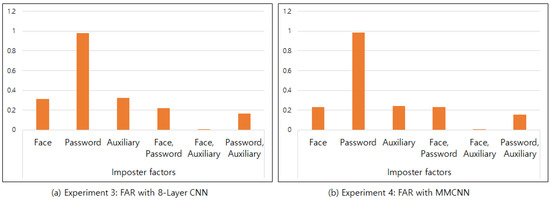

The false rejection rate (FRR) and false acceptance rate (FAR) were obtained for each of the four experiments. The FAR was measured by replacing one or more factors with imposter images. In the two-factor experiments (Experiment 1 and Experiment 2), one factor was replaced with an imposter image. In the three-factor experiments (Experiment 3 and Experiment 4), the FAR was determined in six cases: three cases in which one factor was an imposter image and three cases in which two factors were imposter images. Table 6 presents the FRR for the four experiments and the FAR for all cases.
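The six three-factor imposter cases and the two error rates can be sketched as follows. This is a minimal illustration using the standard definitions (FAR as the fraction of imposter attempts that are accepted, FRR as the fraction of genuine attempts that are rejected). The function names and the outcome lists are hypothetical and are not the paper's measurements.

```python
from itertools import combinations

FACTORS = ("face", "password", "auxiliary")


def imposter_cases(factors):
    """All ways to replace at least one, but not all, factors with imposters."""
    cases = []
    for k in range(1, len(factors)):
        cases.extend(combinations(factors, k))
    return cases


def false_acceptance_rate(imposter_accepted):
    """Fraction of imposter attempts that the system wrongly accepted."""
    return sum(1 for a in imposter_accepted if a) / len(imposter_accepted)


def false_rejection_rate(genuine_accepted):
    """Fraction of genuine attempts that the system wrongly rejected."""
    return sum(1 for a in genuine_accepted if not a) / len(genuine_accepted)


cases = imposter_cases(FACTORS)
print(len(cases))  # 6: three one-imposter cases and three two-imposter cases

# Made-up outcomes: True means the attempt was accepted.
imposter_outcomes = [False] * 199 + [True]
genuine_outcomes = [True] * 499 + [False]
print(false_acceptance_rate(imposter_outcomes))
print(false_rejection_rate(genuine_outcomes))
```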

Table 6.

Results of the FRR and FAR experiments.

Three-factor authentication was conducted both when one factor was the imposter image and when two factors were imposters. For one imposter, the average FAR was 0.465, while for two imposters, the average FAR was 0.08. Even when the imposter factors were different, their FARs were similar. Therefore, the three considered factors affected the authentication accuracy in a uniform manner. The MMCNN yielded similar results, and Figure 8 displays each FAR graph.

Figure 8.

Comparison of FAR according to the number of imposter factors.

4.3. Discussion

Equal information content of the three factors: The scientific importance of this study lies in the fact that, while the authentication accuracy increased to over 99% when using three factors, each factor also carried equal weight.

Because fake face images can be used to defeat a face authentication system, a higher level of security can be achieved by using three or more factors. To show that the security level has actually increased, it must be confirmed that each element of the multi-factor system has an equal influence on the accuracy of authentication. In this study, the equal influence of each element was demonstrated through FAR testing with fake information. When a text password was transformed into a black-and-white image for learning, the FAR value was 0.98 if the password was fake. This indicates that the amount of password information was small enough to be ignored, and identity was confirmed using only the face and auxiliary image information. In the case of fake faces and fake auxiliary images, the FAR value ranged from 0.2 to 0.3, as shown in Figure 9. The problem was solved by converting the black-and-white password image into a color image and then blending the color password image with a mask image that differs for each user, using a weight of 0.5. Figure 8 shows nearly equal FAR values, indicating that the password image, face image, and auxiliary image carry similar weights of information for identification. This step completes the SPT algorithm and allows a secure password image to be created.

Figure 9.

Comparison of FAR according to the number of imposter factors with black and white password images.

Applicability for mobile devices: The CNN structures studied for use in mobile devices include MobileNet [50] and ShuffleNet [51]. However, MobileNet has a 28-layer structure in which parallel processing is performed through depthwise convolution, while ShuffleNet has an 11-layer structure and uses the channel shuffle method. These two structures were considered somewhat complicated for testing multi-input multi-factor authentication. In order to test the influence of each factor, AlexNet [52], which has a relatively simple structure and a low number of layers (i.e., eight layers), was selected as a standard architecture; therefore, the first experiment used a CNN with an eight-layer structure. Afterward, the MMCNN with a lighter (four-layer) structure was proposed, which was experimentally confirmed to have an accuracy of 99.6%.

The number of parameters in the proposed CNN structure was 86,067,556 with eight layers and 13,717,220 with four layers, and the memory capacity occupied when run on a 64-bit computer was 657 megabytes with eight layers and 105 megabytes with four layers.
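As a sanity check on these figures, the reported memory sizes are consistent with storing each parameter as an 8-byte (64-bit) value and counting megabytes as 2^20 bytes. Both of these are assumptions inferred from the numbers, not details stated in the paper.

```python
BYTES_PER_PARAM = 8      # assumption: one 64-bit value per parameter
BYTES_PER_MB = 2 ** 20   # assumption: binary megabytes (1,048,576 bytes)


def parameter_memory_mb(num_params):
    """Approximate memory needed to hold the parameters, in megabytes."""
    return num_params * BYTES_PER_PARAM / BYTES_PER_MB


models = (
    ("eight-layer CNN", 86_067_556),
    ("four-layer MMCNN", 13_717_220),
)
for name, num_params in models:
    print(f"{name}: {parameter_memory_mb(num_params):.1f} MB")
# eight-layer CNN: 656.6 MB
# four-layer MMCNN: 104.7 MB
```

Rounded to whole megabytes, these values match the 657 and 105 megabytes reported above, and the four-layer model needs roughly one sixth of the memory of the eight-layer model.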

5. Conclusions

This study proposed a CNN-based MFA system that uses biometric data and password text and is characterized by its mobility and data security. When three factors were used in the experiments, the eight-layer CNN exhibited an accuracy of 99.8%, while the four-layer MMCNN had an accuracy of 99.6%. The MMCNN has a lightweight structure, making it suitable for use in mobile devices, and the proposed SPT algorithm creates a secure image from a text password. Using the FAR and imposter images, the effect of each factor on CNN training in the MFA system was experimentally measured. The three factors proposed in this paper were compared according to the FAR values obtained when one or two factors were imposter images, which revealed that the three factors were learned with a uniform amount of information.

While the previously proposed MobileNet and ShuffleNet are large-capacity CNN structures that can distinguish 1000 classes, the proposed system can be used as an application for small groups (about 100 people) that require a high level of security. Because it is applicable to mobile devices, the system can run on small, independent devices even in environments where access to the Internet cloud is restricted due to the risk of hacking. Future work will aim to further reduce the number of parameters in the models and extend the modality to multiple factors in order to increase the mobility and safety of usage.

Funding

This research was funded by the 2022 Korean Bible University Academic Research Fund.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The source of the data set is available on GitHub. Available online: https://github.com/hjinob/CNN-Based-Multi-Factor-Authentication-System-for-Mobile-Devices (accessed on 20 April 2024).

Conflicts of Interest

The author declares no conflicts of interest.

References

- Rattani, A.; Tistarelli, M. Robust Multi-Modal and Multi-Unit Feature Level Fusion of Face and Iris Biometrics. In Proceedings of the Advances in Biometrics: Third International Conference (ICB 2009), Alghero, Italy, 2–5 June 2009; Proceedings 3. pp. 960–969.

- Van Goethem, T.; Scheepers, W.; Preuveneers, D.; Joosen, W. Accelerometer-Based Device Fingerprinting for Multi-Factor Mobile Authentication. In Proceedings of the Engineering Secure Software and Systems: 8th International Symposium (ESSoS 2016), London, UK, 6–8 April 2016; Proceedings 8. pp. 106–121.

- Shao, Z.; Li, Z.; Wu, P.; Chen, L.; Zhang, X. Multi-factor combination authentication using fuzzy graph domination model. J. Intell. Fuzzy Syst. 2019, 37, 4979–4985.

- Ometov, A.; Petrov, V.; Bezzateev, S.; Andreev, S.; Koucheryavy, Y.; Gerla, M. Challenges of multi-factor authentication for securing advanced IoT applications. IEEE Netw. 2019, 33, 82–88.

- He, Z.; Li, Z.; Yang, F.; Wang, L.; Li, J.; Zhou, C.; Pan, J. Advances in multimodal emotion recognition based on brain-computer interfaces. Brain Sci. 2020, 10, 687.

- Deebak, B.D.; Hwang, S.O. Federated Learning-Based Lightweight Two-Factor Authentication Framework with Privacy Preservation for Mobile Sink in the Social IoMT. Electronics 2023, 12, 1250.

- Ometov, A.; Bezzateev, S.; Mäkitalo, N.; Andreev, S.; Mikkonen, T.; Koucheryavy, Y. Multi-factor authentication: A survey. Cryptography 2018, 2, 1.

- Mujeye, S. A Survey on Multi-Factor Authentication Methods for Mobile Devices. In Proceedings of the 2021 The 4th International Conference on Software Engineering and Information Management, New York, NY, USA, 16–18 January 2021; pp. 199–205.

- Saqib, R.M.; Khan, A.S.; Javed, Y.; Ahmad, S.; Nisar, K.; Abbasi, I.A.; Julaihi, A.A. Analysis and Intellectual Structure of the Multi-Factor Authentication in Information Security. Intell. Autom. Soft Comput. 2022, 32, 1633–1647.

- Otta, S.P.; Panda, S.; Gupta, M.; Hota, C. A Systematic Survey of Multi-Factor Authentication for Cloud Infrastructure. Future Internet 2023, 15, 146.

- Ying, X.; Li, X.; Chuah, M.C. Liveface: A Multi-Task CNN for Fast Face-Authentication. In Proceedings of the 2018 17th IEEE International Conference on Machine Learning and Applications (ICMLA), Orlando, FL, USA, 17–20 December 2018; pp. 955–960.

- Hammad, M.; Liu, Y.; Wang, K. Multimodal biometric authentication systems using convolution neural network based on different level fusion of ECG and fingerprint. IEEE Access 2018, 7, 26527–26542.

- Kwon, B.W.; Sharma, P.K.; Park, J.H. CCTV-Based Multi-Factor Authentication System. J. Inf. Process. Syst. 2019, 15, 904–919.

- Zhou, B.; Xie, Z.; Ye, F. Multi-Modal Face Authentication Using Deep Visual and Acoustic Features. In Proceedings of the ICC 2019-2019 IEEE International Conference on Communications (ICC), Shanghai, China, 20–24 May 2019; pp. 1–6.

- Jena, P.P.; Kattigenahally, K.N.; Nikitha, S.; Sarda, S.; Harshalatha, Y. Multimodal Biometric Authentication: Deep Learning Approach. In Proceedings of the 2021 International Conference on Circuits, Controls and Communications (CCUBE), Bangalore, India, 23–24 December 2021; pp. 1–5.

- Sivakumar, P.; Rathnam, B.R.; Divakar, S.; Teja, M.A.; Prasad, R.R. A Secure and Compact Multimodal Biometric Authentication Scheme using Deep Hashing. In Proceedings of the 2021 IEEE International Conference on Intelligent Systems, Smart and Green Technologies (ICISSGT), Visakhapatnam, India, 13–14 November 2021; pp. 27–31.

- Liu, J.; Zou, X.; Han, J.; Lin, F.; Ren, K. BioDraw: Reliable Multi-Factor User Authentication with one Single Finger Swipe. In Proceedings of the 2020 IEEE/ACM 28th International Symposium on Quality of Service (IWQoS), Hangzhou, China, 15–17 June 2020; pp. 1–10.

- Zhang, R.; Zeng, Y.; Tong, L.; Shu, J.; Lu, R.; Li, Z.; Yang, K.; Yan, B. EEG Identity Authentication in Multi-Domain Features: A Multi-Scale 3D-CNN Approach. Front. Neurorobot. 2022, 16, 901765.

- Hu, H.; Li, Y.; Zhu, Z.; Zhou, G. CNNAuth: Continuous Authentication via Two-Stream Convolutional Neural Networks. In Proceedings of the 2018 IEEE International Conference on Networking, Architecture and Storage (NAS), Chongqing, China, 11–14 October 2018; pp. 1–9.

- Li, Y.; Hu, H.; Zhu, Z.; Zhou, G. SCANet: Sensor-based continuous authentication with two-stream convolutional neural networks. ACM Trans. Sens. Netw. 2020, 16, 1–27.

- Li, Y.; Tao, P.; Deng, S.; Zhou, G. DeFFusion: CNN-based continuous authentication using deep feature fusion. ACM Trans. Sens. Netw. 2021, 18, 1–20.

- Xiong, Y.; Wang, L.; Wang, Q.; Liu, S.; Kou, B. Improved convolutional neural network with feature selection for imbalanced ECG Multi-Factor classification. Measurement 2022, 189, 110471.

- Tu, Z.; Xie, W.; Qin, Q.; Poppe, R.; Veltkamp, R.C.; Li, B.; Yuan, J. Multi-stream CNN: Learning representations based on human-related regions for action recognition. Pattern Recognit. 2018, 79, 32–43.

- Yaguchi, K.; Ikarigawa, K.; Kawasaki, R.; Miyazaki, W.; Morikawa, Y.; Ito, C.; Shuzo, M.; Maeda, E. Human Activity Recognition Using Multi-Input CNN Model with FFT Spectrograms. In Proceedings of the Adjunct Proceedings of the 2020 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proceedings of the 2020 ACM International Symposium on Wearable Computers, New York, NY, USA, 12–17 September 2020; pp. 364–367.

- Dua, N.; Singh, S.N.; Semwal, V.B. Multi-input CNN-GRU based human activity recognition using wearable sensors. Computing 2021, 103, 1461–1478.

- Oktay, O.; Bai, W.; Lee, M.; Guerrero, R.; Kamnitsas, K.; Caballero, J.; de Marvao, A.; Cook, S.; O’Regan, D.; Rueckert, D. Multi-Input Cardiac Image Super-Resolution Using Convolutional Neural Networks. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Athens, Greece, 17–21 October 2016; pp. 246–254, Proceedings, Part III 19.

- Abbood, E.A.; Al-Assadi, T.A. GLCMs Based multi-inputs 1D CNN deep learning neural network for COVID-19 texture feature extraction and classification. Karbala Int. J. Mod. Sci. 2022, 8, 28–39.

- Elmoufidi, A.; Skouta, A.; Jai-Andaloussi, S.; Ouchetto, O. CNN with multiple inputs for automatic glaucoma assessment using fundus images. Int. J. Image Graph. 2023, 23, 2350012.

- Gupta, B.B.; Prajapati, V.; Nedjah, N.; Vijayakumar, P.; El-Latif, A.A.A.; Chang, X. Machine learning and smart card based two-factor authentication scheme for preserving anonymity in telecare medical information system (TMIS). Neural Comput. Appl. 2023, 35, 5055–5080.

- Wang, L.; Wang, X.; Zhao, Z.; Wu, Y.; Xu, J.; Zhang, H.; Yu, J.; Sun, Q.; Bai, Y. Multi factor status prediction by 4d fractal CNN based on remote sensing images. Fractals 2022, 30, 2240101.

- Huang, C.J.; Kuo, P.H. Multiple-input deep convolutional neural network model for short-term photovoltaic power forecasting. IEEE Access 2019, 7, 74822–74834.

- Xing, L.; Qiao, Y. Deepwriter: A Multi-Stream Deep CNN for Text-Independent Writer Identification. In Proceedings of the 2016 15th International Conference on Frontiers in Handwriting Recognition (ICFHR), Shenzhen, China, 23–26 October 2016; pp. 584–589.

- Sun, Y.; Zhu, L.; Wang, G.; Zhao, F. Multi-input convolutional neural network for flower grading. J. Electr. Comput. Eng. 2017, 2017.

- Delgado-Escano, R.; Castro, F.M.; Cózar, J.R.; Marín-Jiménez, M.J.; Guil, N. An end-to-end multi-task and fusion CNN for inertial-based gait recognition. IEEE Access 2018, 7, 1897–1908.

- Naglah, A.; Khalifa, F.; Khaled, R.; Abdel Razek, A.A.K.; Ghazal, M.; Giridharan, G.; El-Baz, A. Novel MRI-Based CAD System for Early Detection of Thyroid Cancer Using Multi-Input CNN. Sensors 2021, 21, 3878.

- Mazumder, A.N.; Ren, H.; Rashid, H.A.; Hosseini, M.; Chandrareddy, V.; Homayoun, H.; Mohsenin, T. Automatic detection of respiratory symptoms using a low-power multi-input CNN processor. IEEE Des. Test 2021, 39, 82–90.

- Chen, P.; Zou, B.; Belkacem, A.N.; Lyu, X.; Zhao, X.; Yi, W.; Huang, Z.; Liang, J.; Chen, C. An improved multi-input deep convolutional neural network for automatic emotion recognition. Front. Neurosci. 2022, 16, 965871.

- Ahamed, F.; Farid, F.; Suleiman, B.; Jan, Z.; Wahsheh, L.A.; Shahrestani, S. An Intelligent Multimodal Biometric Authentication Model for Personalised Healthcare Services. Future Internet 2022, 14, 222.

- Ammour, N.; Bazi, Y.; Alajlan, N. Multimodal Approach for Enhancing Biometric Authentication. J. Imaging 2023, 9, 168.

- Singh, S.P.; Tiwari, S. A Dual Multimodal Biometric Authentication System Based on WOA-ANN and SSA-DBN Techniques. Sci 2023, 5, 10.

- Rajasekar, V.; Saracevic, M.; Hassaballah, M.; Karabasevic, D.; Stanujkic, D.; Zajmovic, M.; Tariq, U.; Jayapaul, P. Efficient Multimodal Biometric Recognition for Secure Authentication Based on Deep Learning Approach. Int. J. Artif. Intell. Tools 2023, 32, 2340017.

- Zhou, B.; Lohokare, J.; Gao, R.; Ye, F. EchoPrint: Two-Factor Authentication Using Acoustics and Vision on Smartphones. In Proceedings of the 24th Annual International Conference on Mobile Computing and Networking, New Delhi, India, 15 October 2018; pp. 321–336.

- Aleluya, E.R.M.; Vicente, C.T. Faceture ID: Face and hand gesture multi-factor authentication using deep learning. Procedia Comput. Sci. 2018, 135, 147–154.

- Priya, K.D.; Sumalatha, L. Offline Handwritten Signatures Based Multifactor Authentication in Cloud Computing Using Deep CNN Model. I-Manag. J. Cloud Comput. 2019, 6, 13–25.

- Sajjad, M.; Khan, S.; Hussain, T.; Muhammad, K.; Sangaiah, A.K.; Castiglione, A.; Esposito, C.; Baik, S.W. CNN-based anti-spoofing two-tier multi-factor authentication system. Pattern Recognit. Lett. 2019, 126, 123–131.

- Mehdi Cherrat, E.; Alaoui, R.; Bouzahir, H. Convolutional neural networks approach for multimodal biometric identification system using the fusion of fingerprint, finger-vein and face images. PeerJ Comput. Sci. 2020, 6, e248.

- Shalaby, A.; Gad, R.; Hemdan, E.E.; El-Fishawy, N. An efficient multi-factor authentication scheme based CNNs for securing ATMs over cognitive-IoT. PeerJ Comput. Sci. 2021, 7, e381.

- Masuda, N.; Yairi, I.E. Multi-Input CNN-LSTM deep learning model for fear level classification based on EEG and peripheral physiological signals. Front. Psychol. 2023, 14, 1141801.

- Sasikala, T.S. A secure multi-modal biometrics using deep ConvGRU neural networks based hashing. Expert Syst. Appl. 2024, 235, 121096.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. Mobilenets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861. Available online: https://arxiv.org/abs/1704.04861 (accessed on 10 May 2024).

- Zhang, X.; Zhou, X.; Lin, M.; Sun, J. Shufflenet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6848–6856. Available online: https://arxiv.org/abs/1707.01083 (accessed on 10 May 2024).

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet Classification with Deep Convolutional Neural Networks. Adv. Neural Inf. Process. Syst. 2012, 25, 84–90. Available online: https://proceedings.neurips.cc/paper/2012/hash/c399862d3b9d6b76c8436e924a68c45b-Abstract.html (accessed on 10 May 2024).

- Piugie, Y.B.W.; Di Manno, J.; Rosenberger, C.; Charrier, C. Keystroke Dynamics Based User Authentication Using Deep Learning Neural Networks. In Proceedings of the 2022 International Conference on Cyberworlds (CW), Kanazawa, Japan, 27–29 September 2022; pp. 220–227.

- Tkachenko, I.; Puech, W.; Destruel, C.; Strauss, O.; Gaudin, J.M.; Guichard, C. Two-level QR code for private message sharing and document authentication. IEEE Trans. Inf. Forensics Secur. 2015, 11, 571–583.

- Christiana, A.O.; Oluwatobi, A.N.; Victory, G.A.; Oluwaseun, O.R. A Secured One Time Password Authentication Technique Using (3, 3) Visual Cryptography Scheme. In Journal of Physics: Conference Series; IOP Publishing: Bristol, UK, 2019; Volume 1299, p. 012059.

- GitHub. Available online: https://github.com/hjinob/CNN-Based-Multi-Factor-Authentication-System-for-Mobile-Devices (accessed on 20 April 2024).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).