Abstract

It is important for chatbots to communicate with users emotionally. However, most emotional response generation models generate responses based solely on a specified emotion, neglecting the impact of the speaker’s personality on emotional expression. In this work, we propose a novel model named GERP that generates emotional responses based on a pre-defined personality. GERP simulates the emotion transition process of humans during conversation to make the chatbot more anthropomorphic. GERP adopts the OCEAN model to precisely define the chatbot’s personality, and it generates a response containing the emotion predicted from that personality. In addition, a proposed beam evaluator is integrated into GERP to select the most appropriate response. A Chinese sentiment vocabulary and a Chinese emotional response dataset were constructed to facilitate the emotional response generation task. Experiments verified the effectiveness of the proposed model and its superiority over five baseline models.

1. Introduction

With the development of deep learning techniques, the performance of dialogue systems has improved considerably. They can generate replies to users’ queries that are not only fluent but also diverse, and they respond to users anytime and anywhere, giving users a greater sense of satisfaction and security. Early response generation algorithms achieved satisfactory results in semantic consistency and content richness. However, how to engage users’ interest and create human–computer resonance has received little attention. Emotions can improve the interactivity of a dialogue system and make it more anthropomorphic. Therefore, it is essential for chatbots to communicate with people emotionally [1,2].

Many attempts have been made to embed emotions effectively into the responses generated by chatbots. There are three typical ways to integrate emotion into the response generation process. In the first, an emotion-related energy term is used to control emotional expression. For example, Affect-LM [3] embeds emotions into the response by adding an emotion-related energy term to an LSTM-based model. In the second, emotion category embeddings are used to represent emotions. Yuan et al. [4] established a model that maps five emotion categories into low-dimensional, dense, real-valued vector representations, which directly guide the selection of contextual information and transfer emotional tendencies from source sequences to user-specific sequences. ECM [5] also uses emotion category embeddings to compute emotion states. In its internal memory module, the emotion state decays during decoding, and the emotion is fully expressed when the emotion state decays to 0. In its external memory module, ECM selects words from either a sentiment vocabulary or a general vocabulary to generate responses. The third way maps emotion words into embeddings. The model built by Asghar et al. [6] uses emotional word embeddings to incorporate emotions into the LSTM encoder and a standard cross-entropy loss function to learn to generate emotionally expressive responses; beam search is also used for diverse decoding.

Deep learning techniques have been widely adopted in dialogue systems to better express emotions. In adversarial learning approaches [7], a generator produces emotional responses given a dialogue history and a sentiment label, while an adversarial discriminator enforces response quality by trying to determine whether an item (a dialogue history and its response) comes from the real data distribution. Shen and Yang [8] proposed the CDL framework, which uses reinforcement learning to alternately generate emotional responses and emotional queries. The model consists of two ECMs with independent parameters and a sentiment classifier, which jointly solve a dual problem; the two ECMs are trained alternately by reinforcement learning to generate content-coherent emotional responses.

To generate smoother and richer emotional responses, other features such as the dialogue topic have been introduced into dialogue systems. TE-ECG [9] uses a topic module to obtain the topics of the conversation, ensuring coherent interaction and high-quality responses, while a dynamic emotional attention mechanism incorporates emotions into the responses. ESCBA [10] is a syntactically constrained bidirectional asynchronous method in which pre-generated sentiment keywords and topic keywords asynchronously participate in the decoding process.

However, most of these studies focus on generating emotional responses based on specified, fixed emotions. In daily communication, by contrast, the personality of the speaker plays an important role in emotional expression. Generally speaking, there are two ways to define the personality of a dialogue system: implicitly and explicitly. Implicit methods usually extract the personality from the dialogue history, whereas explicit methods define the personality of the dialogue system in advance using documents, vectors, or codes.

Implicit methods extract the embedded personalities from readily available datasets. For example, Ma et al. [11] designed a personalized language model that constructs a general user portrait from the user’s historical replies. The work introduced a key–value memory neural network to store the user’s historical input–reply pairs and generate a dynamic user portrait, which mainly reflects what the user said and how the user responded to similar inputs in the past. However, such a portrait is implicit and cannot be obtained directly, so it is inconvenient for others to observe and modify the extracted personality.

In contrast, explicit methods describe the personality of the dialogue system more directly, for example as documents or embeddings. Inspired by word embeddings, the personality can be expressed as a persona embedding, which is added into the encoding process to generate responses. Li et al. [12] proposed a model based on persona embeddings; it was the first to introduce personality into a chatbot model. In addition to persona embeddings, profiles are also commonly used to describe the chatbot’s personality. Qian et al. [13] used a key–value profile to define the personality of the chatbot, such as: “Name: Wangzai; Age: 18; Gender: Male; Hobbies: Anime; Specially: Piano”. According to the user’s input, a keyword in the profile is selected, and a reply is then generated based on this word. Another common form is the sentence profile. Zhang et al. [14] described the chatbot’s personality using a five-sentence profile. A profile in the sentence format can be: “I like to ski. My wife does not like me anymore. I have went to Mexico 4 times this year. I hate Mexican food. I like to eat cheetos.” In a memory-enhanced neural network, such a profile is used to produce responses that are more personal, specific, consistent, and engaging than those of a persona-free model. However, constructing a dataset that meets these requirements is expensive [13]. Moreover, these profiles focus on personal information about the chatbot, such as its name, gender, age, and hobbies, which helps maintain consistency between the input and the responses. Unfortunately, in these works, the profiles are unrelated to the emotions to be expressed in the responses.

For the above reasons, our work employs an explicit method that balances simplicity and ease of emotional expression: the “Big Five” personality vectors (described in Section 2.1.1) are used to describe the personality of the chatbot. “Big Five” personality vectors have been employed in chatbots before. For example, some studies incorporated the “Big Five” personality traits directly into the decoding process of an LSTM [15] or classified chatbots based on personality [16]. Bauerhenne et al. [17] combined personality with emotion, but they considered only a single factor of the “Big Five” personality traits. To sum up, existing studies primarily integrate the “Big Five” personality vector into chatbot design directly and in isolation, without fully combining it with other characteristics such as emotions.

As discussed above, very few works have fully considered the impact of the chatbot’s personality on the emotional response generation. How to generate suitable emotional responses for the chatbot based on its personality is a problem worthy of exploration. In this work, we propose a new model, namely Generating Emotional Responses based on the chatbot’s Personality (GERP), which generates emotional responses based on a pre-defined personality of the chatbot in the form of the “Big Five” personality vectors (which are described in Section 2.1.1). The responses generated by GERP make the chatbot more anthropomorphic. The main contributions of our work are summarized as follows:

- A new personality-based emotional response generation model, GERP, is proposed, which automatically selects and expresses emotions according to the personality of the chatbot. It predicts the emotion to be expressed in the response from the chatbot’s personality and generates the corresponding emotional response.

- The five OCEAN characteristics, namely Openness, Conscientiousness, Extroversion, Agreeableness, and Neuroticism [18], are adopted to explicitly define the chatbot’s personality. Unlike previous work [12,13,14], which used ambiguous descriptions such as personal profiles or physical appearance to illustrate the chatbot’s personality, this is the first time the OCEAN model has been used to precisely define the personality in the emotional response generation task.

- An improved emotional response generation model is proposed, which generates the emotional response word by word using an LSTM-based decoder constrained by the predicted emotion. The best emotional response is selected by a newly proposed beam evaluator. The generated emotional response conforms to both the dialogue context and the chatbot’s personality.

- A new Chinese sentiment vocabulary was constructed to provide suitable emotion words during decoding in the LSTM decoder. In addition, a Chinese Personality Emotion Lines Dataset was constructed as a benchmark for validating the performance of emotional response generation models.

The experimental results corroborated the effectiveness and computational efficiency of the proposed GERP model. The source code and the datasets are available at https://github.com/slptongji/GERP (accessed on 21 February 2023).

2. Methodology

2.1. Prior Knowledge

2.1.1. “Big Five” Personality Traits

The “Big Five” personality traits [18] can be used to explicitly describe the personality of the chatbot. They are Openness (O), Conscientiousness (C), Extroversion (E), Agreeableness (A), and Neuroticism (N). A person’s personality can be represented by the values of these five factors, which together constitute the OCEAN vector; consequently, a personality can be uniquely represented by an OCEAN vector. Each factor reveals the strength of the corresponding trait: Openness ranges from extreme openness to extreme closedness; Conscientiousness ranges from extreme responsibility to an extreme lack of planning; Extroversion ranges from extreme extroversion to extreme introversion; Agreeableness ranges from extreme kindness to extreme self-interest; and Neuroticism ranges from extreme emotional instability to extreme emotional stability. Many researchers have confirmed that the five personality traits are highly universal. An experimental study of people from more than 50 cultures showed that these five dimensions accurately describe personality [18]. Meanwhile, the psychologist Buss [19] explained these five traits in detail, showing that they represent the most important qualities that shape our social landscape.

2.2. PAD Emotional Space

In order to visualize the emotions and integrate them with the response generation model, our work adopted the three-element theory of emotion proposed by Russell et al. [20] to project different emotions into three-dimensional emotion vectors. The three values of an emotion vector reflect the emotion intensity in Pleasure (P), Arousal (A), and Dominance (D). The space where emotion vectors are located is called the PAD emotional space. Russell et al. [20] demonstrated that these independent bipolar dimensions are necessary and sufficient for defining emotional states. Their experimental results provided 151 emotion categories and the corresponding emotion vectors. We selected six emotions, i.e., anger, disgust, joy, surprise, sadness, and fear, from 151 emotion categories because the selected ones correspond to the most basic human emotion categories that meet people’s daily emotional communication needs [21]. The six selected emotion categories and their PAD emotion vectors are shown in Table 1. In addition, the neutral emotion vector was manually set as [0.00, 0.00, 0.00].

Table 1.

Seven PAD emotion vectors.

Personality is an important factor affecting emotions. Mehrabian et al. [22] carried out an experiment in which the PAD vector was derived from the OCEAN personality vector. Seventy-two participants (28 men and 44 women) were invited, and each completed the Big Five personality scale and the PAD temperament scale. From the experimental analysis, Mehrabian derived a stable relationship between the PAD emotion vector and the OCEAN personality vector [22,23]:

where Pl represents the value of pleasure, Ar represents the value of arousal, and Do represents the value of dominance; together, these three values form an emotion vector in the PAD space.
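The mapping from personality to PAD space can be sketched in Python as follows. The coefficients below are the ones commonly cited for Mehrabian’s OCEAN-to-PAD regression; they are an assumption here and should be checked against Equation (1), since some sources write the N term as emotional stability rather than neuroticism, which flips its sign. The `OCEAN` type and the `ocean_to_pad` function are hypothetical names.

```python
from typing import NamedTuple


class OCEAN(NamedTuple):
    """Big Five trait scores of a chatbot (the value range is an assumption)."""
    openness: float
    conscientiousness: float
    extroversion: float
    agreeableness: float
    neuroticism: float


def ocean_to_pad(p: OCEAN) -> tuple:
    """Map an OCEAN personality vector to a (Pl, Ar, Do) point in PAD space.

    ASSUMPTION: coefficients as commonly cited for Mehrabian's regression;
    verify them against Equation (1) before relying on them.
    """
    pl = 0.21 * p.extroversion + 0.59 * p.agreeableness + 0.19 * p.neuroticism
    ar = 0.15 * p.openness + 0.30 * p.agreeableness - 0.57 * p.neuroticism
    do = (0.25 * p.openness + 0.17 * p.conscientiousness
          + 0.60 * p.extroversion - 0.32 * p.agreeableness)
    return (pl, ar, do)
```

For example, a purely extroverted profile, `OCEAN(0.0, 0.0, 1.0, 0.0, 0.0)`, maps to approximately (0.21, 0.0, 0.60) under these assumed coefficients.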

2.3. Overview of GERP

GERP operates over a fixed set of emotion categories and a set of personality traits. Its input is the dialogue history, consisting of the preceding utterances from the chatbot and the user, respectively, each associated with an emotion drawn from the emotion set. The personality of the chatbot is given as a vector whose i-th entry is the probability that the chatbot has the i-th personality trait. The objective of GERP is to generate an emotional response for the chatbot that conforms to both the contents of the two history utterances and the chatbot’s personality.

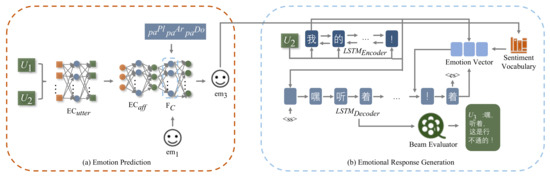

As shown in Figure 1, GERP consists of two modules, namely Emotion Prediction and Emotional Response Generation. In Emotion Prediction, the emotion to be expressed in the response is predicted from the dialogue history, the initial emotion, and the chatbot’s personality. The response emotion is assumed to be affected by both the personality of the respondent and the dialogue history, so all of these inputs are considered in emotion prediction. In Emotional Response Generation, the response is generated from the predicted emotion using an LSTM-based decoder, so that the chatbot responds with a suitable emotion. In particular, a new Chinese sentiment dictionary is constructed to provide emotion words to the Emotional Response Generation module; these words explicitly express the emotion in the generated response.

Figure 1.

Structure of GERP. The Emotion Prediction module (a) predicts an emotion based on the two history utterances, the initial emotion, and the chatbot’s personality. In the Emotional Response Generation module (b), the emotional response is generated from the user input under the supervision of the emotion vector obtained from the predicted emotion. GERP is a Chinese personalized emotional dialogue system that receives its inputs and generates its responses in Chinese.

2.4. Emotion Prediction

To obtain the response emotion, the initial emotion and the chatbot’s personality are mapped into the PAD emotion space. According to the definition in [20], the initial emotion is mapped to its PAD representation vector listed in Table 1, and the representation vector of the personality in the PAD space is computed using Equation (1). Then, the representation vector of the emotion to be expressed in the response in Emotional Response Generation can be computed as [20]

where the emotional variation term is the direction of the emotional change according to the dialogue context, defined as the difference between two points in the PAD emotion space that represent the previous and subsequent emotions. It can be computed as [24]

where the computation relies on an utterance encoder, an affective encoder, and the context semantic coding of the two history utterances. The utterance encoder extracts the meaning of the two utterances and outputs their semantic representation. Finally, the PAD representation vector of the response emotion is mapped by a fully connected layer to an output that corresponds to the emotion category of the response.
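A minimal sketch of this prediction step follows. It assumes the simplest additive update (response PAD vector = initial emotion PAD vector + predicted emotional variation; the equation above may also weight the variation by the personality vector), and it replaces the fully connected classifier with nearest-neighbour matching against reference PAD vectors. Only the neutral vector (0, 0, 0) comes from the text; the other reference values are placeholders, not the entries of Table 1, and all names are hypothetical.

```python
import math

# Placeholder reference points: only "neutral" = (0, 0, 0) is taken from the text.
REFERENCE_PAD = {
    "neutral": (0.0, 0.0, 0.0),
    "joy": (0.8, 0.5, 0.4),         # hypothetical value, not from Table 1
    "sadness": (-0.6, -0.3, -0.3),  # hypothetical value, not from Table 1
}


def predict_response_emotion(initial_pad, variation, reference=REFERENCE_PAD):
    """Shift the initial emotion by the predicted variation in PAD space and
    return the shifted point together with the nearest reference category
    (a nearest-neighbour stand-in for GERP's fully connected layer)."""
    target = tuple(e + d for e, d in zip(initial_pad, variation))
    label = min(reference, key=lambda name: math.dist(target, reference[name]))
    return target, label
```

Nearest-neighbour matching keeps the sketch free of trained weights; in GERP itself the category comes from a learned layer.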

2.5. Emotional Response Generation

In this module, the response is generated based on the user input and the emotion category predicted in Section 2.4. The module is implemented based on the EmoDS model [25], which expresses a specific emotion in the response with the help of an emotion vector. Given an emotion category (e.g., one of the categories in Table 1), EmoDS first adopts an LSTM encoder to encode the user input and feeds the encoder output into an LSTM decoder as the context vector. Then, EmoDS computes the emotion vector based on the context vector and the emotion category, and generates the emotional response during decoding based on the emotion vector. The decoder can insert emotion words from a sentiment vocabulary into the response at appropriate time steps. Compared with EmoDS, the Emotional Response Generation module of GERP has two main improvements. First, GERP introduces a beam evaluator to retrieve the most appropriate emotional response from the candidate responses generated by the decoder. Second, GERP adopts a new Chinese sentiment vocabulary to provide more suitable emotion words. The details are as follows.

2.5.1. User Input Encoding

As mentioned above, the user input, a sequence of words whose i-th element is the i-th word of the input, is converted into a context vector by the LSTM encoder:

where the forward and backward processes of the encoder produce the forward and backward hidden states, respectively, and the i-th hidden state of the encoder combines the two. The last hidden state is the output of the encoder and is fed into the decoder as the context vector.
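The bidirectional encoding can be illustrated with a dependency-free sketch. To stay short, it uses a plain tanh recurrent cell in place of the LSTM cell, and it takes the concatenation of the final forward state and the final backward state as the context vector, which is one common convention; the weights and function names are hypothetical.

```python
import math


def rnn_pass(inputs, w_in, w_rec):
    """Run a tanh RNN over a list of input vectors and return every hidden state.

    w_in: hidden_size x input_size matrix; w_rec: hidden_size x hidden_size matrix.
    """
    hidden = [0.0] * len(w_rec)
    states = []
    for x in inputs:
        # The comprehension reads the previous `hidden` before it is replaced.
        hidden = [
            math.tanh(sum(w * xi for w, xi in zip(w_in[r], x))
                      + sum(u * hj for u, hj in zip(w_rec[r], hidden)))
            for r in range(len(w_rec))
        ]
        states.append(hidden)
    return states


def bidirectional_encode(inputs, forward_weights, backward_weights):
    """Return per-position states [h_fwd; h_bwd] and a context vector."""
    forward = rnn_pass(inputs, *forward_weights)
    # Read the sequence right to left, then re-align states to word positions.
    backward = rnn_pass(inputs[::-1], *backward_weights)[::-1]
    states = [f + b for f, b in zip(forward, backward)]  # list concatenation
    # Final forward state (after the last word) + final backward state (after the first word).
    context = forward[-1] + backward[0]
    return states, context
```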

2.5.2. Emotion Vector Computation and Emotional Response Generation

To insert suitable emotion words into the response, an emotion vector is added into the decoding process of the decoder. Given the emotion category, the corresponding emotion vector is computed as [24]

where the emotion vector is generated by an attention-based function [26] from the hidden state of the decoder at the current time step and the word embeddings of the emotion words under the given emotion category in the sentiment vocabulary (the k-th embedding corresponding to the k-th emotion word). The emotion vector participates in the decoding step of the decoder at each time step j [24]:
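The attention step can be sketched as below, assuming dot-product scoring between the decoder state and each emotion-word embedding (the attention function in [26] may use a different, learned scoring function). The resulting emotion vector is the attention-weighted average of the emotion-word embeddings of the chosen category; names and values are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def emotion_vector(decoder_state, emotion_word_embeddings):
    """Attention-weighted sum of the embeddings of one category's emotion words."""
    scores = [sum(h * e for h, e in zip(decoder_state, emb))
              for emb in emotion_word_embeddings]
    weights = softmax(scores)
    dim = len(emotion_word_embeddings[0])
    return [sum(w * emb[d] for w, emb in zip(weights, emotion_word_embeddings))
            for d in range(dim)]
```

With a zero decoder state all emotion words receive equal weight, so the emotion vector is simply their mean embedding.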

where the decoder state at time step j is computed using the embedding of the word generated at the previous time step, j − 1.

The state at each time step is used to generate the reply as [24]

where the first term ranges over words in the sentiment vocabulary and the second over words in the general dictionary. The weight matrices and v are trainable parameters, and the weight distribution coefficient determines whether an emotion word or a generic word is generated.
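The final word distribution can be sketched as a gated mixture of two distributions. Here the gate is `sigmoid(gate_logit)`, where `gate_logit` is a hypothetical stand-in for the learned projection of the decoder state (the part involving v); the dictionaries are toy distributions over the sentiment vocabulary and the general dictionary.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def mix_distributions(p_emotion, p_generic, gate_logit):
    """Combine emotion-word and generic-word distributions with a scalar gate.

    alpha is the probability of emitting an emotion word at this time step.
    """
    alpha = sigmoid(gate_logit)
    vocabulary = set(p_emotion) | set(p_generic)
    return {w: alpha * p_emotion.get(w, 0.0) + (1.0 - alpha) * p_generic.get(w, 0.0)
            for w in vocabulary}
```

Because each input distribution sums to 1 and the weights are alpha and 1 − alpha, the mixture is again a valid distribution.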

Finally, the response is generated word by word by the well-trained decoder as described above. The decoder’s generation loss represents the generation error of the model and is used as one of the metrics by which the beam evaluator retrieves the best response. The encoder and the decoder can be trained as described in [25].

2.5.3. Beam Evaluator Construction

In the previous step, each word of the response is retrieved using beam search [25]. Beam search is commonly used in machine translation to generate translations whose meanings are closest to those of the source sentences. In response generation, beam search is often used to generate diverse and fluent responses. However, for emotional response generation, the fluency of the response and the correctness of the emotional expression should be considered simultaneously. Therefore, we improved EmoDS by adding a beam evaluator that chooses the most appropriate sentence from the candidate responses obtained by beam search, based on scores for sentence fluency and emotional expression.
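For reference, a generic beam search that keeps several finished candidates (which a beam evaluator can then rerank) might look like the following. `step` is a hypothetical callback standing in for the decoder: it maps a prefix to the log-probabilities of possible next tokens, and `toy_step` is an illustrative toy decoder.

```python
import math
from typing import Callable, Dict, List, Tuple

Beam = Tuple[Tuple[str, ...], float]


def beam_search(step: Callable[[Tuple[str, ...]], Dict[str, float]],
                beam_width: int, max_len: int, eos: str = "</s>") -> List[Beam]:
    """Return finished (and, at max_len, unfinished) candidates, best first."""
    beams: List[Beam] = [((), 0.0)]
    finished: List[Beam] = []
    for _ in range(max_len):
        candidates = [(prefix + (token,), score + logp)
                      for prefix, score in beams
                      for token, logp in step(prefix).items()]
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for prefix, score in candidates[:beam_width]:
            if prefix[-1] == eos:
                finished.append((prefix, score))
            else:
                beams.append((prefix, score))
        if not beams:
            break
    finished.extend(beams)
    return sorted(finished, key=lambda c: c[1], reverse=True)


def toy_step(prefix):
    """Toy decoder: a greeting word, then end-of-sentence or '!'."""
    if not prefix:
        return {"hi": math.log(0.6), "hey": math.log(0.4)}
    return {"</s>": math.log(0.9), "!": math.log(0.1)}
```

With `beam_search(toy_step, beam_width=2, max_len=3)`, the two finished candidates are ("hi", "</s>") with probability 0.54 and ("hey", "</s>") with probability 0.36.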

The key element of the beam evaluator is a BiLSTM-based emotion classifier that predicts the category of the emotion expressed in a candidate response. It consists of two LSTM layers and a softmax layer. Given training responses and their emotion categories, the classifier learns the relationship between them; it is trained on the same training set as GERP.

During testing, for each candidate response generated by beam search, the classifier outputs an emotion score, i.e., the score of the candidate under the predicted emotion category. The larger the score, the more accurately the candidate response expresses that emotion.

Based on the generation score from the decoder and the emotion score from the classifier, the overall score of each candidate response is calculated as

The candidate response with the highest overall score is selected as the best emotional response.
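The reranking step can be sketched as follows. The combination formula is not given in this text, so `-generation_loss + weight * emotion_score` is a hypothetical choice, and `emotion_scorer` is a stand-in for the BiLSTM emotion classifier.

```python
from typing import Callable, List, Tuple


def select_best_response(candidates: List[Tuple[str, float]],
                         emotion_scorer: Callable[[str, str], float],
                         target_emotion: str,
                         weight: float = 1.0) -> str:
    """Pick the candidate with the highest (hypothetical) overall score.

    candidates: (response, generation_loss) pairs from beam search; lower loss is better.
    emotion_scorer(response, emotion): probability that `response` expresses `emotion`.
    """
    def overall(item):
        response, generation_loss = item
        return -generation_loss + weight * emotion_scorer(response, target_emotion)

    return max(candidates, key=overall)[0]
```

Setting `weight=0.0` recovers plain selection by generation loss, which makes the effect of the emotion score easy to isolate.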

2.6. Sentiment Vocabulary Construction

The sentiment vocabulary is essential because it provides suitable emotion words during emotional response generation. A Chinese Emotional Vocabulary Ontology Database (CEVOD) [27], which contains a set of Chinese emotion words, has already been proposed for this purpose. However, the words in CEVOD are too formal for conversational settings. Therefore, a new Chinese sentiment vocabulary, namely CSVocab, was constructed to provide more suitable emotion words.

To construct CSVocab, firstly, 5152 emotion words were collected from the Chinese version of the Personality Emotion Lines Dataset (CPELD) (the details of CPELD’s construction are introduced in Section 3.1). Then, the pseudo-emotion distributions for the words in CSVocab were calculated. After that, a neural network was adopted to learn the emotional representations of the words in CSVocab. The details of CSVocab’s construction are illustrated as follows.

2.6.1. Pseudo-Emotion Distribution Calculation

The pseudo-emotion distribution of each word w in CSVocab is calculated based on CEVOD using the improved SO-PMI method [28]. Given the i-th emotion category, the occurrence probability of w in that category is defined as

where the formula involves the number of words in CSVocab, the Euclidean distance between two words, and the size of the i-th emotion category; the probabilities over all categories form the pseudo-emotion distribution of w in CSVocab. Words whose pseudo-emotion distribution meets the neutrality criterion are re-classified as neutral words. Finally, the emotion words were categorized into seven emotion categories based on their pseudo-emotion distributions.
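The following is not the improved SO-PMI formula of [28] but a distance-based stand-in that illustrates the shape of the computation: a word is scored against seed words of each emotion category by inverse Euclidean distance, the scores are normalized into a pseudo-emotion distribution, and a hypothetical `neutral_threshold` decides when the word is re-classified as neutral.

```python
import math


def pseudo_emotion_distribution(word_vec, seed_vectors, neutral_threshold=0.6):
    """Score a word against seed words of each category (hypothetical stand-in).

    seed_vectors: {category: [embedding, ...]} for seed words, e.g., from a lexicon.
    Returns the normalized distribution and the assigned category.
    """
    scores = {}
    for category, seeds in seed_vectors.items():
        similarities = [1.0 / (1.0 + math.dist(word_vec, s)) for s in seeds]
        scores[category] = sum(similarities) / len(similarities)
    total = sum(scores.values())
    distribution = {c: s / total for c, s in scores.items()}
    best = max(distribution, key=distribution.get)
    # Hypothetical rule: a flat distribution means the word is re-labelled neutral.
    label = best if distribution[best] >= neutral_threshold else "neutral"
    return distribution, label
```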

2.6.2. Learning Emotional Word Representations

A two-layer neural network was used to learn the emotional representation vector for the word w at the word and the sentence levels successively according to [29]. It functions as a classifier, which trains the emotion word vectors while predicting the emotion categories of words and sentences.

The sentiment vector of each word w was randomly initialized. Training was first performed at the word level, where a softmax layer computes the word’s sentiment distribution [29]:

where the weight matrix and the bias are trainable parameters, and the learned output represents the sentiment distribution of the word w.

The average cross-entropy loss function was used to measure the difference between the sentiment distribution predicted by the model and the sentiment annotations at the word level [29]:

where the index k refers to the k-th sentence in the dataset.
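The word-level classifier and its loss can be sketched as below, with a softmax over `W x + b` (one row of `W` per emotion category) and the average negative log-likelihood of the gold labels. Plain lists are used instead of a tensor library, and the names are hypothetical.

```python
import math


def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def word_sentiment_distribution(word_vec, weight, bias):
    """softmax(W x + b) for one word vector x."""
    logits = [sum(w * x for w, x in zip(row, word_vec)) + b
              for row, b in zip(weight, bias)]
    return softmax(logits)


def average_cross_entropy(distributions, gold_labels):
    """Mean of -log p(gold label) over the annotated words."""
    return -sum(math.log(p[g]) for p, g in zip(distributions, gold_labels)) / len(gold_labels)
```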

The word vectors can be further trained at the sentence level using the sentiment labels of the sentences in the dataset. The embedding of a sentence is first computed as the average of the embeddings of its words [29]:

The sentence embedding layer predicts the sentiment category expressed by the sentence [29]:

where the weight matrix and the bias are trainable parameters, and the learned output represents the sentiment distribution of the sentence.

The sentence-level loss is computed from the predicted emotion distribution and the standard emotion label of each sentence in the dataset [29]:

where the target is the standard emotion label in the dataset.

Finally, word-level and sentence-level loss functions jointly train the word vectors [29] as

where a weight coefficient balances the word-level and sentence-level losses.
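At the sentence level, the sketch below averages word vectors, classifies the result, and combines the two losses. The convex combination `alpha * word_loss + (1 - alpha) * sent_loss` is an assumption about how the weight coefficient enters the joint loss; all names are hypothetical.

```python
import math


def sentence_embedding(word_vectors):
    """Average of the word vectors of a sentence."""
    n = len(word_vectors)
    return [sum(v[d] for v in word_vectors) / n for d in range(len(word_vectors[0]))]


def sentence_loss(word_vectors, weight, bias, gold_label):
    """Negative log-likelihood of the gold label under softmax(W s + b)."""
    s = sentence_embedding(word_vectors)
    logits = [sum(w * x for w, x in zip(row, s)) + b for row, b in zip(weight, bias)]
    m = max(logits)
    log_norm = m + math.log(sum(math.exp(z - m) for z in logits))  # log-sum-exp
    return log_norm - logits[gold_label]


def joint_loss(word_loss, sent_loss, alpha=0.5):
    """Hypothetical weighting of the word-level and sentence-level losses."""
    return alpha * word_loss + (1.0 - alpha) * sent_loss
```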

2.6.3. Sentiment Vocabulary Construction

Finally, a single-layer softmax classifier was adopted to build the sentiment vocabulary, with part of the emotion words used as training data [29]. The emotional embedding of a word is fed into the classifier, which outputs the word’s predicted emotion category. Based on these predictions, the emotion words in CSVocab are classified into seven emotion categories to construct the final sentiment vocabulary. For each emotion category, 114 emotion words were selected, so the CSVocab sentiment vocabulary consists of 798 (7 × 114) emotion words.
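The final selection step can be sketched as keeping the top-scoring words per predicted category. Ranking by classifier confidence is an assumption (the text states only that 114 words were selected per category), and `build_vocabulary` is a hypothetical name.

```python
from collections import defaultdict


def build_vocabulary(predictions, per_category=114):
    """predictions: iterable of (word, predicted_category, confidence) triples.

    Returns {category: [word, ...]} with at most `per_category` words each,
    ordered by decreasing confidence.
    """
    buckets = defaultdict(list)
    for word, category, confidence in predictions:
        buckets[category].append((confidence, word))
    return {category: [word for _, word in sorted(items, reverse=True)[:per_category]]
            for category, items in buckets.items()}
```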

3. Experiments and Results

3.1. Experiment Setup

GERP was implemented in Python on Ubuntu. The size of the LSTM hidden states was 256, the word embedding dimension was 50, and the word embeddings were initialized with GloVe [30].

3.1.1. Dataset

The dataset used in the experiments was CPELD, the Chinese version of the Personality Emotion Lines Dataset (PELD) [24]. PELD is derived from the scripts of the TV series “Friends”, and the personalities of the six main characters (i.e., Joey, Phoebe, Chandler, Monica, Ross, and Rachel) were explicitly given by Wen et al. [24] in the form of OCEAN characteristics, as shown in Table 2.

Table 2.

Six OCEAN personality vectors in CPELD.

We translated the scripts in PELD into Chinese using DeepL and Google Translate. In total, 10,648 utterances, all spoken by the six main characters, were collected to construct CPELD. CPELD was then randomly divided into training, validation, and test sets in the ratio 8:1:1.
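A reproducible 8:1:1 random split can be sketched as follows; the seed and the floor-based rounding are assumptions, so the exact subset sizes used for CPELD may differ by a few utterances.

```python
import random


def split_dataset(items, ratios=(8, 1, 1), seed=0):
    """Shuffle `items` and split them into train/validation/test by integer ratios.

    Train and validation sizes are rounded down; the remainder goes to test.
    """
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    total = sum(ratios)
    n_train = len(shuffled) * ratios[0] // total
    n_val = len(shuffled) * ratios[1] // total
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])
```

For 10,648 utterances, this rounding rule gives 8518 training, 1064 validation, and 1066 test utterances.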

3.1.2. Comparative Experiment

To further demonstrate the superiority of GERP, baseline and ablation experiments were performed. In addition to GERP, four baseline models, i.e., Seq2seq-Noatt [31] (S2S-N), Seq2seq [31] (S2S), ECM [5], and SeqGAN [32] (SGAN), were evaluated for comparison. A Random model (Ran), which randomly selects the emotion to be expressed in the response, was also evaluated. The ablation models were −Emotion Vector (−EVec, GERP without the emotion vector), −Beam Search (−BSear, GERP without beam search), −Beam Evaluator (−BEval, GERP without the beam evaluator), and +External Vocabulary (+ExV, GERP trained using CEVOD instead of CSVocab).

Three kinds of performance metrics were used: (1) the emotion word ratio (Ratio), i.e., the percentage of responses that contain emotion words; (2) the BLEU score [33], i.e., the word overlap between the generated responses and the reference responses in CPELD; and (3) the correctness of emotional expression [25], which measures whether the target emotion is correctly expressed in the generated responses and is evaluated by Macro F1 (F1-M) and Weighted F1 (F1-W).
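The automatic emotion metrics can be computed as sketched below. Responses are assumed to be already tokenized (Chinese text would first need word segmentation), and macro and weighted F1 are taken over the labels that occur in the gold data; libraries such as scikit-learn may also count labels that appear only in the predictions. The function names are hypothetical.

```python
from collections import Counter


def emotion_word_ratio(responses, emotion_words):
    """Fraction of tokenized responses containing at least one emotion word."""
    hits = sum(any(tok in emotion_words for tok in r) for r in responses)
    return hits / len(responses)


def f1_scores(gold, pred):
    """Return (macro F1, weighted F1) over the labels present in `gold`."""
    labels = sorted(set(gold))
    support = Counter(gold)
    per_label = {}
    for c in labels:
        tp = sum(g == c and p == c for g, p in zip(gold, pred))
        fp = sum(g != c and p == c for g, p in zip(gold, pred))
        fn = sum(g == c and p != c for g, p in zip(gold, pred))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        per_label[c] = (2 * precision * recall / (precision + recall)
                        if precision + recall else 0.0)
    macro = sum(per_label.values()) / len(labels)
    weighted = sum(per_label[c] * support[c] for c in labels) / len(gold)
    return macro, weighted
```

Macro F1 weights every emotion category equally, while weighted F1 weights each category by its frequency in the gold labels, so the two diverge when categories are imbalanced.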

3.1.3. Human Evaluation

In order to further evaluate the appropriateness of the responses generated by the comparative models, we randomly selected 10 responses from the test results and invited 30 volunteers to evaluate the responses using a questionnaire designed by us. The volunteers had to evaluate the responses listed in the questionnaire from the following four aspects: (1) sentence Fluency and Consistency (FC); (2) the Emotion is clearly Expressed or not (EE); (3) the Emotion is Correctly expressed or not (EC); (4) given the responses, infer the three Best-Matching characters (personalities) (BM).

The scoring criteria for the questionnaire indicators were as follows:

- FC: 0 means the response is not fluent; 1 means it is fluent but not consistent with the context; 2 means it is fluent and consistent with the context;

- EE: 0 means no obvious emotion is expressed in the response; 1 means the expressed emotion is fairly obvious; 2 means a clear emotion is expressed in the response;

- EC: 0 means the expressed emotion is incorrect; 1 means it is correct (the target emotion category is given when scoring this item);

- BM: Given the personality descriptions of the six characters and five sample dialogues for each character, the volunteers select the role that the chatbot is most likely set to, based on the chatbot’s response (at least one and at most three roles). If the volunteer selects only one role and it is the correct answer, three points are awarded. If the volunteer selects two roles including the correct answer, two points are awarded. If the volunteer selects three roles including the correct answer, one point is awarded; otherwise, zero points are awarded.
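The BM scoring rule reduces to a small function (the name `bm_score` is hypothetical): the fewer roles a volunteer selects while still including the correct one, the higher the score.

```python
def bm_score(selected_roles, correct_role):
    """Score one BM answer: 3, 2, or 1 point(s) when the correct role is among
    one, two, or three selected roles, respectively; otherwise 0."""
    roles = set(selected_roles)
    if not 1 <= len(roles) <= 3:
        raise ValueError("a volunteer must select between one and three roles")
    if correct_role not in roles:
        return 0
    return 4 - len(roles)
```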

The preview of the questionnaire is shown in Figure 2.

Figure 2.

Preview of three sets of questions from the human evaluation questionnaire. All volunteers were native Chinese speakers, so all questions in the questionnaire were in Chinese.

3.2. Experimental Results

3.2.1. Case Study

Table 3 shows seven emotional responses generated by GERP for different personalities. The dialogue history (the preceding utterances of the chatbot and the user) is also shown to present the dialogue context. The last two columns list the character corresponding to each personality and his or her possible emotion, and the third column lists the generated responses. Emotion words, which include both words and punctuation marks, are highlighted. Take Phoebe as an example. She likes to express her feelings freely; therefore, to simulate her way of communicating, the generated responses contain strong emotion words such as “have fun”, “doesn’t work”, or “sorry”. As another example, Chandler is funny and affable; when he expresses happiness, the phrase “that’s right!” is inserted to convey joy. By contrast, Rachel cares about her friends’ feelings and prefers to express her emotions implicitly; therefore, a neutral emotion is selected and expressed in the generated response with a simple period “.”. These examples show that the generated emotional responses conform to the chatbot’s different personalities.

Table 3.

Emotional responses generated by GERP. The emotion words, which include words and punctuation marks, are highlighted.

3.2.2. Evaluation Results

The experimental results on the eight evaluation metrics are shown in Table 4. Not every metric applies to every model. Seq2seq with and without attention, SeqGAN, and −EVec do not introduce emotion factors; they cannot express a specified emotion in the response, so the emotion-related metrics (the F1-scores and EC) do not apply to them. None of the comparative models except Ran involves personality, so BM in the human evaluation does not apply to them. Since Ran and GERP differ only in how the target emotion is chosen, FC, EE, and EC in the human evaluation do not distinguish them; we therefore focus on BM, which reflects their difference.

Table 4.

Experimental results of GERP and the comparison models. The results of GERP are in bold.

Baseline Study

GERP achieved the best performance on nearly all evaluated metrics except the BLEU score, which demonstrates its effectiveness in personality-based emotional response generation.

First, considering the Ratio scores of the six models, the responses generated by GERP contained the most emotion words, which indicates that these responses express emotions prominently. Second, the F1-M and F1-W scores indicated that, compared with the other five models, GERP’s emotional responses faithfully expressed the specified emotion in more cases. Third, in the human evaluation, GERP achieved the best FC, EE, EC, and BM scores, far better than the other five models. This indicated that the responses generated by GERP were the most fluent and appropriate given the dialogue context and the speakers’ personalities. The BM scores in the last column reflect how accurately the volunteers inferred the character from the given emotional responses. Compared with the random model, GERP’s higher BM score indicated that the emotional expression in its responses gave the volunteers clear clues for identifying the chatbot’s personality. Because the other four models generated responses based only on given emotions rather than on personality, BM scores are not available for them.

GERP did not achieve the best BLEU score, but its score (0.082) remained competitive with those of the other five models. We attribute its third-place ranking on BLEU to the beam evaluator. GERP must balance the correctness of emotional expression against generation accuracy. As a result, its responses contained more emotion words that differ from those in the reference responses, which lowered its BLEU score.
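The BLEU argument above can be illustrated with a minimal sentence-level BLEU. It follows Papineni et al. [33]: the geometric mean of clipped n-gram precisions, multiplied by a brevity penalty. Several choices here are assumptions, because the BLEU configuration used for Table 4 is not given here:

- the score uses n-grams only up to bigrams;
- each precision gets add-one smoothing;
- the sentences are hypothetical English stand-ins.

```python
import math
from collections import Counter


def ngram_counts(tokens, n):
    """Count the n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(reference, candidate, max_n=2):
    """Geometric mean of clipped n-gram precisions times a brevity penalty.

    Add-one smoothing (an assumption) keeps short sentences from scoring zero.
    """
    log_sum = 0.0
    for n in range(1, max_n + 1):
        cand = ngram_counts(candidate, n)
        ref = ngram_counts(reference, n)
        # Clip each candidate n-gram count by how often it occurs in the reference.
        overlap = sum(min(count, ref[gram]) for gram, count in cand.items())
        total = sum(cand.values())
        log_sum += math.log((overlap + 1) / (total + 1))
    c, r = len(candidate), len(reference)
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    return brevity_penalty * math.exp(log_sum / max_n)


reference = "i am glad you came today".split()
neutral = "i am glad you came here".split()      # one word differs from the reference
emotional = "i am so thrilled you came".split()  # adds emotion words absent from the reference
print(round(sentence_bleu(reference, neutral), 3))    # 0.845
print(round(sentence_bleu(reference, emotional), 3))  # 0.598
```

Both candidates have the same length as the reference, so the brevity penalty is 1 for both. The entire gap therefore comes from the emotion words that the reference lacks. Such words can make a response more emotional while lowering its n-gram overlap.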

Ablation Study

For the emotion word percentage (Ratio metric), the proposed sentiment dictionary CSVocab made the main contribution. When CSVocab was replaced by CEVOD, the Ratio score dropped sharply from 0.040 to 0.008. Removing each of the other components also lowered the Ratio score. This shows that the emotion vector, the beam search, and the beam evaluator all raise the rate at which emotion words are inserted. When the beam evaluator was removed, the BLEU score, which reflects fluency, changed from 0.082 to 0.137. The emotion vector and the beam search contributed to fluency. The ablated models likewise scored lower on both F1-M and F1-W. In particular, after the beam evaluator was removed, F1-M dropped to 0.137 and F1-W dropped to 0.279. These drops indicate that the components help the model express emotions more accurately. In the human evaluation, the full GERP model again stood out. Models lacking any of the components scored lower in fluency, emotional expression, accuracy of emotional expression, and personality fit. Overall, integrating the emotion vector, beam search, the proposed beam evaluator, and CSVocab improves the overall performance of GERP.
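Ratio is described here only as the percentage of emotion words. The minimal sketch below assumes it is the share of all response tokens that appear in a sentiment vocabulary. The vocabulary and responses are hypothetical toy data, not CSVocab, CEVOD, or CPELD content. Chinese responses would first need word segmentation.

```python
def emotion_word_ratio(responses, vocabulary):
    """Fraction of all response tokens that occur in the sentiment vocabulary.

    `responses` is a list of pre-tokenized responses; `vocabulary` is a set of words.
    """
    total = sum(len(tokens) for tokens in responses)
    if total == 0:
        return 0.0
    hits = sum(1 for tokens in responses for token in tokens if token in vocabulary)
    return hits / total


# Hypothetical toy vocabulary and responses.
vocab = {"glad", "thrilled", "great", "worried"}
more_emotional = [["i", "am", "glad", "you", "came"], ["that", "is", "great", "news"]]
less_emotional = [["i", "see", "you", "came"], ["that", "is", "great", "news"]]
print(emotion_word_ratio(more_emotional, vocab))  # 2 / 9, about 0.222
print(emotion_word_ratio(less_emotional, vocab))  # 0.125
```

Counting over the whole corpus, rather than averaging per-response ratios, is also an assumption. The two variants differ when response lengths vary.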

4. Discussion

Deep learning techniques have been shown to improve both the fluency and the emotional expression of dialogue systems. Methods such as Seq2seq [31], Generative Adversarial Networks (GANs) [34], transfer learning [35], and reinforcement learning [36] have been applied to enable dialogue systems to generate fluent emotional responses. However, systems built on these methods focus primarily on two goals: expressing specified emotions, and learning and optimizing themselves through extensive data analysis. Chatbot personalities have been introduced into dialogue systems so that appropriate emotions can be selected automatically. However, how to predict emotions more accurately from personality remains an open question. The proposed GERP model integrates Natural Language Generation technology with psychological knowledge to predict emotions from predefined personalities. It also incorporates emotion vectors into the Seq2seq architecture so that the generated responses express these emotions appropriately.

5. Conclusions

Emotions and personalities have both been used in chatbots for open-domain dialogue. However, existing emotional dialogue systems focus on how to better express a specified emotion in the response. Meanwhile, most existing personality-based dialogue systems treat personality as an independent factor and do not adequately connect it with emotion. By contrast, we proposed GERP, a model that generates emotional responses based on both the dialog context and the chatbot's personality. This is the first attempt to generate emotional responses based on personalities defined by the OCEAN model. GERP generates emotional responses with an LSTM-based decoder, which is equipped with the newly proposed beam evaluator and supervised by an emotion derived from the chatbot's personality. To express emotions more appropriately, we constructed the sentiment vocabulary CSVocab. The experimental results demonstrated the effectiveness of GERP in generating responses that are both fluent and emotional. To evaluate emotional response generation models, we also constructed a Chinese emotional response dataset, CPELD, which may benefit related research in the future.
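The internals of the beam evaluator are not described in this section. The sketch below is a purely hypothetical illustration of the trade-off it manages. It rescores finished beam candidates by adding two terms:

- the decoder's length-normalized log-probability;
- a bonus when the candidate's emotion matches the emotion predicted from the personality.

The weight `lam`, the `toy_emotion_of` detector, and the candidate scores are all assumptions, not GERP's actual scoring function.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Candidate:
    tokens: List[str]
    log_prob: float  # summed token log-probabilities from the decoder


def rescore(candidates: List[Candidate], target_emotion: str,
            emotion_of: Callable[[List[str]], str], lam: float = 1.0) -> Candidate:
    """Return the candidate with the best fluency + lam * emotion-match score."""
    def score(c: Candidate) -> float:
        fluency = c.log_prob / max(len(c.tokens), 1)  # length-normalized log-probability
        match = 1.0 if emotion_of(c.tokens) == target_emotion else 0.0
        return fluency + lam * match
    return max(candidates, key=score)


def toy_emotion_of(tokens: List[str]) -> str:
    """Hypothetical lexicon-based emotion detector used only for this example."""
    return "joy" if any(t in {"glad", "thrilled"} for t in tokens) else "neutral"


plain = Candidate(["i", "see", "you", "came"], log_prob=-2.0)
joyful = Candidate(["i", "am", "thrilled", "you", "came"], log_prob=-4.0)
print(rescore([plain, joyful], "joy", toy_emotion_of, lam=0.0).tokens)  # likelihood only: plain
print(rescore([plain, joyful], "joy", toy_emotion_of, lam=1.0).tokens)  # emotion-aware: joyful
```

Raising `lam` trades likelihood for agreement with the target emotion. This is the same tension the baseline study uses to explain GERP's BLEU ranking.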

Author Contributions

Supervision, Y.S.; writing – original draft, Z.Z.; writing – review and editing, Y.S., D.W., and X.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Natural Science Foundation of China under Grant 61972285, and in part by the Fundamental Research Funds for the Central Universities.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Publicly available datasets were analyzed in this study. The original version of PELD can be found here: https://github.com/preke/PELD (accessed on 21 February 2023). The CEVOD dataset can be found here: http://ir.dlut.edu.cn/info/1013/1142.htm (accessed on 21 February 2023).

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Chen, C.; Qingqing, Z.; Rui, Y.; Junfei, L. A Survey of Open Domain Dialogue Systems Based on Deep Learning. Chin. J. Comput. 2019, 42, 1439–1466.

2. Yin, Z.; Zhen, L.; Tingting, L.; Yuanyi, W.; Cuijuan, L.; Yanjie, C. A Survey of Research on Text Emotional Dialogue Systems. Comput. Sci. Explor. 2021, 15, 825.

3. Ghosh, S.; Chollet, M.; Laksana, E.; Morency, L.P.; Scherer, S. Affect-LM: A neural language model for customizable affective text generation. arXiv 2017, arXiv:1704.06851.

4. Yuan, J.; Zhao, H.; Zhao, Y.; Cong, D.; Qin, B.; Liu, T. Babbling - The HIT-SCIR system for emotional conversation generation. In Proceedings of the Natural Language Processing and Chinese Computing: 6th CCF International Conference, NLPCC 2017, Dalian, China, 8–12 November 2017; pp. 632–641.

5. Zhou, H.; Huang, M.; Zhang, T.; Zhu, X.; Liu, B. Emotional chatting machine: Emotional conversation generation with internal and external memory. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

6. Asghar, N.; Poupart, P.; Hoey, J.; Jiang, X.; Mou, L. Affective neural response generation. In Proceedings of the Advances in Information Retrieval: 40th European Conference on IR Research, ECIR 2018, Grenoble, France, 26–29 March 2018; pp. 154–166.

7. Kong, X.; Li, B.; Neubig, G.; Hovy, E.; Yang, Y. An adversarial approach to high-quality, sentiment-controlled neural dialogue generation. arXiv 2019, arXiv:1901.07129.

8. Shen, L.; Feng, Y. CDL: Curriculum dual learning for emotion-controllable response generation. arXiv 2020, arXiv:2005.00329.

9. Peng, Y.; Fang, Y.; Xie, Z.; Zhou, G. Topic-enhanced emotional conversation generation with attention mechanism. Knowl.-Based Syst. 2019, 163, 429–437.

10. Li, J.; Sun, X. A syntactically constrained bidirectional-asynchronous approach for emotional conversation generation. arXiv 2018, arXiv:1806.07000.

11. Ma, Z.; Dou, Z.; Zhu, Y.; Zhong, H.; Wen, J.R. One chatbot per person: Creating personalized chatbots based on implicit user profiles. In Proceedings of the 44th International ACM SIGIR Conference on Research and Development in Information Retrieval, Virtual Event, Canada, 11–15 July 2021; pp. 555–564.

12. Li, J.; Galley, M.; Brockett, C.; Spithourakis, G.P.; Gao, J.; Dolan, B. A persona-based neural conversation model. arXiv 2016, arXiv:1603.06155.

13. Qian, Q.; Huang, M.; Zhao, H.; Xu, J.; Zhu, X. Assigning personality/identity to a chatting machine for coherent conversation generation. arXiv 2017, arXiv:1706.02861.

14. Zhang, S.; Dinan, E.; Urbanek, J.; Szlam, A.; Kiela, D.; Weston, J. Personalizing dialogue agents: I have a dog, do you have pets too? arXiv 2018, arXiv:1801.07243.

15. Xing, Y.; Fernández, R. Automatic evaluation of neural personality-based chatbots. arXiv 2018, arXiv:1810.00472.

16. Lessio, N.; Morris, A. Toward Design Archetypes for Conversational Agent Personality. In Proceedings of the 2020 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Toronto, ON, Canada, 11–14 October 2020; pp. 3221–3228.

17. Fernández-Martínez, M.; Martínez-Mirón, E.A.; Martínez-López, R.; Castro-González, Á. Emotional States and Personality Profiles in Conversational AI. IEEE Access 2021, 9, 56455–56468.

18. Fiske, D.W. Consistency of the factorial structures of personality ratings from different sources. J. Abnorm. Soc. Psychol. 1949, 44, 329.

19. McCrae, R.R.; Costa, P.T., Jr. Social adaptation and five major factors of personality. J. Personal. 1992, 60, 303–322.

20. Russell, J.A.; Mehrabian, A. Evidence for a three-factor theory of emotions. J. Res. Personal. 1977, 11, 273–294.

21. Ekman, P.E.; Davidson, R.J. The Nature of Emotion: Fundamental Questions; Oxford University Press: Oxford, UK, 1994.

22. Mehrabian, A. Analysis of the big-five personality factors in terms of the PAD temperament model. Aust. J. Psychol. 1996, 48, 86–92.

23. Mehrabian, A. Relationships among three general approaches to personality description. J. Psychol. 1995, 129, 565–581.

24. Wen, Z.; Cao, J.; Yang, R.; Liu, S.; Shen, J. Automatically Select Emotion for Response via Personality-affected Emotion Transition. arXiv 2021, arXiv:2106.15846.

25. Song, Z.; Zheng, X.; Liu, L.; Xu, M.; Huang, X.J. Generating responses with a specific emotion in dialog. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 3685–3695.

26. Bahdanau, D.; Cho, K.; Bengio, Y. Neural machine translation by jointly learning to align and translate. arXiv 2014, arXiv:1409.0473.

27. Linhong, X.; Hongfei, L.; Yu, P.; Hui, R.; Jianmei, C. Construction of emotional vocabulary ontology. J. Inf. 2008, 27, 180–185.

28. Turney, P.D. Thumbs up or thumbs down? Semantic orientation applied to unsupervised classification of reviews. arXiv 2002, arXiv:cs/0212032.

29. Wang, L.; Xia, R. Sentiment lexicon construction with representation learning based on hierarchical sentiment supervision. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, Copenhagen, Denmark, 7–11 September 2017; pp. 502–510.

30. Pennington, J.; Socher, R.; Manning, C.D. GloVe: Global vectors for word representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 1532–1543.

31. Vinyals, O.; Le, Q. A neural conversational model. arXiv 2015, arXiv:1506.05869.

32. Yu, L.; Zhang, W.; Wang, J.; Yu, Y. SeqGAN: Sequence generative adversarial nets with policy gradient. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; Volume 31.

33. Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.J. BLEU: A method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, Philadelphia, PA, USA, 6–12 July 2002; pp. 311–318.

34. Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Networks. arXiv 2014, arXiv:1406.2661.

35. Baxter, J. A model of inductive bias learning. J. Artif. Intell. Res. 2000, 12, 149–198.

36. Kaelbling, L.P.; Littman, M.L.; Moore, A.W. Reinforcement learning: A survey. J. Artif. Intell. Res. 1996, 4, 237–285.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).