Abstract

Image captioning is the task of automatically generating a description of an image. Traditional image captioning models tend to generate a sentence describing the most conspicuous objects, but fail to describe a desired region or object as humans can. To generate sentences based on a given target, it is central to understand the relationships between particular objects and to describe them accurately. This paper proposes an information-augmented and node-relation estimation network (IANR) for controllable image captioning. Specifically, information-augmented embedding adds prior information to each object, and a new Multi-Relational Weighted Graph Convolutional Network (MR-WGCN) fuses the information of adjacent objects. Then, a dynamic attention decoder module selectively focuses on particular objects or semantic contents. Finally, the model is optimized with a similarity loss in addition to the cross-entropy loss. Experiments on MSCOCO Entities show that IANR obtains, to date, the best published CIDEr score of 124.52% on the Karpathy test split. Extensive experiments and ablations on both MSCOCO Entities and Flickr30k Entities demonstrate the effectiveness of each module. Moreover, IANR achieves better accuracy and controllability than state-of-the-art models under widely used evaluation metrics.

1. Introduction

Image captioning is a complex task of automatically producing natural language sentences that describe the content of a given image. It requires not only understanding the relationships between objects, but also generating sentences that describe the most conspicuous objects.

Early image captioning methods were based on templates [1,2] or retrieval [3,4]. In recent years, with the rapid development of deep learning [5,6], most image captioning methods have adopted deep-learning-based encoder–decoder structures, in which the encoder extracts features to represent the content of the image and the decoder uses the extracted features to generate a description. The latest captioning models even outperform humans on all accuracy-based metrics. However, these established models tend to describe the most conspicuous objects and fail to describe a desired region or object as humans can, an ability that is essential for practical applications. For example, when assisting visually impaired people while walking, the generated caption should describe what is on the road or the color of the traffic lights. Moreover, many studies have indicated that traditional models tend to produce generic descriptions that capture frequent descriptive patterns but fail to describe particular objects. To endow captioning models with controllability, several models introduce extra control signals to generate captions, an approach known as controllable image captioning (CIC).

A CIC model can easily generate diverse captions for the same image by feeding it different control signals. One type of CIC [7,8,9] controls the description style, such as factual, sad, or happy; the other type controls the content, such as region [10], object [11,12], part-of-speech tags [13,14], and length level [15].

For controllable object-based image captioning, Cornia et al. [11] proposed a model that explicitly controls the content and order of the caption through a sequence of grounded image regions. Chen et al. [16] proposed a control signal that represents a targeted activity as a verb and the entities involved in this activity as semantic roles. Other researchers [12,14,15] have also worked in this direction.

In current models, the accuracy of the generated sentences depends on how well the model understands the role of each object. However, the object features obtained by the detection model lack prior information. For example, given only the features of a man and a baseball, it would be difficult to infer their relationship or the concept of a player. In addition, most models adopt the cross-entropy loss, which tends to limit the diversity of the generated sentences.

To address these problems, this paper introduces an information-augmented and node-relation estimation network (IANR) to improve the performance of controllable image captioning. IANR follows an encoder–decoder structure. Its information-augmented graph encoder consists of an information-augmented embedding module and a multi-relational weighted graph convolutional network (MR-WGCN). The information-augmented embedding module adds prior information to objects and relationships, and the MR-WGCN emulates message passing between nodes differently for each type of edge. For the decoder, this paper designs a dynamic attention mechanism that attends to either the control signals or the node features with prior information. To further increase the diversity of descriptions, an additional similarity loss is added to the traditional cross-entropy loss.

The main contributions are summarized as follows:

- The proposed information-augmented embedding module adds prior information for each object and relation node.

- A Multi-Relational Weighted Graph Convolutional Network (MR-WGCN) is proposed to aggregate messages from related nodes differently for each type of edge.

- A dynamic attention decoder is designed to selectively focus on the control signals or on node features with prior information, so that the generated sentences satisfy the control signal.

- A novel similarity loss cooperates with the traditional cross-entropy loss to use information effectively and generate diverse captions.

- This paper performs an extensive comparative study on two commonly used datasets, namely MSCOCO Entities and Flickr30k Entities, to evaluate IANR. The experimental results show that the proposed method achieves significantly higher accuracy and diversity on all evaluation metrics than the baseline method, ASG2Caption. In addition, IANR achieves state-of-the-art controllability and accuracy on both datasets.

The rest of the paper is structured as follows. Section 2 briefly introduces and discusses related work. Section 3 introduces the proposed method for controllable image captioning (CIC). Section 4 presents the experimental evaluation of the proposed method in comparison with other methods. Finally, Section 5 concludes the paper and discusses future research directions.

2. Related Work

Image Captioning

At present, most image captioning models achieve significant improvements by building on the encoder–decoder framework and reinforcement learning. Inspired by neural machine translation, the encoder–decoder structure learns image content with an encoder and transforms it into sentences with a decoder. NIC [17] uses a convolutional neural network to obtain a fixed-length vector representing the content of the whole image and a recurrent neural network to generate words sequentially. Traditional image captioning methods are trained by maximizing the likelihood of ground-truth captions, which cannot directly optimize quality metrics such as CIDEr; self-critical methods [18,19,20] optimize such non-differentiable metrics using reinforcement learning. To reduce the impact of redundant regions in the image, Refs. [21,22] encode the features of detected object regions [23] and then dynamically ground words in relevant image regions during generation. Beyond detected regions, some researchers regard sentences as descriptions of the relationships between objects in the image: Refs. [24,25,26] adopt the scene graph [27] to exploit the detected objects and their relationships, and ASG2Caption [12] proposes an abstract scene graph (ASG) in place of the detected scene graph to generate the desired caption. This work proposes a novel information-augmented graph encoder, composed of an information-augmented embedding module and a multi-relational weighted graph convolutional network, which incorporates prior information into object and relation nodes to improve the accuracy and diversity of the generated sentences.

Controllable Image Captioning

Controllable image captioning is a more challenging task that aims to generate sentences according to extra control signals, such as style or semantics. Style control research [7,8,9,28,29] aims to control emotions or linguistic styles, such as factual, sad, happy, or humorous. Most of these works [28,30,31,32] train on datasets with stylized labels. A few studies [7,33] use a monolingual stylized language corpus without paired images to disentangle style from factual content.

Semantic control aims to control the described contents or structures of the image, such as region [10], object [11,12,34,35], part-of-speech tags [13,14], and length level [15]. DenseCap [10] detects and describes diverse regions in the image. CGO [34] combines two LSTMs running in opposite directions to generate image captions containing desired objects. SCT [11] controls the described objects and their order in the generated sentences. ASG2Caption [12] proposes an abstract scene graph to control the described objects and relationships. Sub-GC [36] describes sub-graphs of image scene graphs. POS [13] uses a part-of-speech tag sequence to guide caption generation. MTTSNet [14] generates sentences with the assistance of POS information for each relationship between object combinations in a scene graph. LaBERT [15] uses a length signal to describe the image either roughly or in detail. Other semantic control methods include DUDA [37], which describes semantic differences between two images, and SCAN [38], which introduces a signal controlling sentence quality, sentence length, and the number of nouns.

The above works mainly concentrate on the control process. They usually take region features as one of the inputs, ignoring prior information about the objects and their relationships. This paper not only proposes an information-augmented graph encoder to add prior information to each node, but also proposes an improved dynamic attention decoder that selectively focuses on the control signals or node features. Finally, the proposed similarity loss helps IANR learn more diverse information.

3. Proposed Model

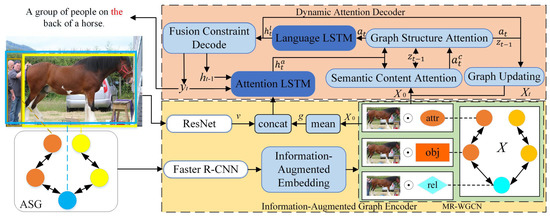

Given an image I, the goal of IANR is to generate a fluent caption based on the control signal of the Abstract Scene Graph (ASG) [12]. The ASG reflects the user’s intention through its nodes and their relationships. Since an image can be described by multiple sentences, it can also have multiple ASGs, and IANR generates a different sentence for each ASG. The structure of IANR, shown in Figure 1, consists of an information-augmented graph encoder and a dynamic attention decoder. Section 3.1 describes how the proposed information-augmented graph encoder adds prior information and uses the proposed MR-WGCN to enhance features with information from surrounding nodes. Section 3.2 describes how sentences are generated and how node information is updated. Finally, IANR is trained with the cross-entropy loss and the similarity loss.

Figure 1.

IANR includes an Information-Augmented Graph Encoder and a Dynamic Attention Decoder. Given an image I and an ASG control signal whose node and edge sets are X and E, the information-augmented embedding adds prior information to all nodes, and the proposed MR-WGCN enhances node features with information from surrounding nodes. The Dynamic Attention Decoder then combines the results of Semantic Content Attention and Graph Structure Attention to select node information. Finally, sentences are generated by the language LSTM and the proposed Fusion Constraint Decode module. After each word is generated, the node features of the graph are updated for the next step.

3.1. Information-Augmented Graph Encoder

The encoder encodes the ASG as a set of N node features, where N is the number of nodes in the ASG. The ASG of an image consists of a set of nodes and a set of edges. There are three node types: object nodes, attribute nodes, and relationship nodes. The six edge types are the two directions of three connections: between a subject node and a relationship node, between an object node and an attribute node, and between a relationship node and an object node. The feature of each node is extracted from its grounded box in the image; the box of a relationship node is the union bounding box of the two objects involved.

The ASG contains information about each node region, but the region features extracted by the detection network contain no prior knowledge. For example, given only the features of a man and a baseball, it would be difficult to infer their relationship or the concept of a player. To overcome this problem, the proposed information-augmented graph encoder combines an information-augmented embedding, which adds prior information, with a multi-relational weighted graph convolutional network (MR-WGCN), which fuses information from adjacent nodes.

Information-Augmented Embedding. In this encoder, the memory-augmented attention (MA) operator [39] adds prior information to each node. The operator is defined as:

where two sets of matrices are learned, namely embedding matrices for the node features and matrices that store a priori information; d is a scaling factor, and the remaining operator indicates concatenation.
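The memory-augmented attention operator described above can be sketched in plain Python. This is a minimal single-head sketch, assuming the formulation of [39] as we understand it: queries come from the node features, while keys and values are the projected node features concatenated with learnable memory slots that act as prior information. The names `W_q`, `W_k`, `W_v`, `M_k`, and `M_v` are illustrative assumptions rather than the paper's notation, and multi-head attention is omitted.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(W: Matrix, x: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def memory_augmented_attention(X: List[Vector], W_q: Matrix, W_k: Matrix,
                               W_v: Matrix, M_k: List[Vector],
                               M_v: List[Vector]) -> List[Vector]:
    """Single-head memory-augmented attention over N node features."""
    queries = [matvec(W_q, x) for x in X]
    # Keys and values: projected nodes followed by the learnable memory slots.
    keys = [matvec(W_k, x) for x in X] + M_k
    values = [matvec(W_v, x) for x in X] + M_v
    d = len(queries[0])  # scaling factor taken as the query dimension
    dim = len(values[0])
    outputs = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Weighted sum over node values and memory values.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(dim)])
    return outputs
```

Because the memory slots are part of every softmax, a node can draw information from them even when no other node is relevant, which is how the operator injects prior information.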

MA adds prior information to nodes and assigns higher attention to the focal node based on the interrelationships between nodes. However, a node may have no relationships or relevant priors. Because the last step of memory-augmented attention is a weighted summation of node features, such a node may receive misleading information. Therefore, a relational discriminator is designed to remove or modify incorrect and unnecessary features.

In this discriminator, the sentinel value is first calculated:

where the three parameters are learnable weights, and the sigmoid logistic function maps the result into (0, 1). A higher sentinel value means that the feature should be retained.

Next, the original node feature is mixed with the memory-augmented feature as follows:

where the mixing parameters are learnable weights.

Finally, the proposed information-augmented embedding module produces its output feature by rescaling the mixed feature with the sentinel value:

where ⊙ represents element-wise multiplication. The resulting vectors form the node features. By filtering out incorrect or unnecessary information, the relational discriminator improves the effectiveness and accuracy of region features augmented with prior information.
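The exact equations of the relational discriminator are not recoverable from this text, so the following is only a hypothetical sketch of the three described steps: a sigmoid sentinel computed from the original and memory-augmented features, a learned mix of the two features, and element-wise rescaling of the mix by the sentinel. The parameter names `W1` to `W4` and `b`, and the additive form of each step, are assumptions.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(W: Matrix, x: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def relational_discriminator(x: Vector, m: Vector, W1: Matrix, W2: Matrix,
                             b: Vector, W3: Matrix, W4: Matrix) -> Vector:
    """Hypothetical gate-mix-rescale step for one node.

    x: original region feature; m: memory-augmented feature from MA.
    """
    # Sentinel value per dimension in (0, 1); a high value keeps the feature.
    sentinel = [sigmoid(p + q + c)
                for p, q, c in zip(matvec(W1, x), matvec(W2, m), b)]
    # Mix the original feature with the memory-augmented feature.
    mixed = [p + q for p, q in zip(matvec(W3, x), matvec(W4, m))]
    # Rescale the mix element-wise by the sentinel value.
    return [s * v for s, v in zip(sentinel, mixed)]
```

A sentinel near 1 passes the mixed feature through unchanged, while a sentinel near 0 suppresses it, which matches the stated purpose of removing unnecessary features.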

Multi-Relational Weighted Graph Convolutional Network. The nodes in the ASG are of three types: object, attribute, and relationship. Since node types cannot be distinguished by visual appearance alone, the node features are enhanced with a type embedding as follows [12]:

where the node-type embedding distinguishes the three types of nodes, and a positional embedding matrix distinguishes different attribute nodes connected to the same object.

The above formula adds node-type and positional information to the node features. Furthermore, the ASG has six types of edges and three types of nodes, so messages should pass differently from one type of node to another along different edges. Therefore, the designed multi-relational weighted graph convolutional network (MR-WGCN) extends MR-GCN [40] with edge weights as follows:

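where the neighbour set contains the neighbours of the i-th node under a given edge type, and each edge weight scales the message sent from a neighbouring node to the i-th node. The activation is the ReLU function, and the learnable matrices of the l-th layer include one for the self-loop. The edge weight is computed as: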

Each such layer brings context information from neighbouring nodes to the center node, and stacking multiple MR-WGCN layers gathers broader contextual information. Finally, the average of the output node features is used as the global graph representation.
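The weighted message passing and mean pooling described above can be sketched as follows. In this hypothetical sketch, each node keeps a self-loop term, receives messages transformed by an edge-type-specific matrix and scaled by a per-edge weight, and passes the sum through ReLU; the global graph representation is the mean of the node features. The edge weights are given as inputs because their formula is not reproduced here, and the edge-type labels and function names are illustrative.

```python
from typing import Dict, List, Tuple

Vector = List[float]
Matrix = List[List[float]]
Edge = Tuple[int, int, str, float]  # (source, target, edge type, weight)


def matvec(W: Matrix, x: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def mr_wgcn_layer(H: List[Vector], edges: List[Edge], W_self: Matrix,
                  W_rel: Dict[str, Matrix]) -> List[Vector]:
    """One multi-relational weighted graph convolution layer (sketch)."""
    # Self-loop term for every node.
    out = [matvec(W_self, h) for h in H]
    # Weighted, edge-type-specific messages from source to target nodes.
    for src, dst, rel, weight in edges:
        msg = matvec(W_rel[rel], H[src])
        out[dst] = [o + weight * m for o, m in zip(out[dst], msg)]
    # ReLU activation.
    return [[max(0.0, v) for v in node] for node in out]


def global_graph_representation(H: List[Vector]) -> Vector:
    """Mean of node features, used as the global graph representation."""
    n = len(H)
    return [sum(column) / n for column in zip(*H)]
```

Stacking two calls of `mr_wgcn_layer` lets information travel two hops, for example from a subject node through a relationship node to the object node.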

3.2. Dynamic Attention Decoder

The language decoder consists of a two-layer LSTM structure [21], Semantic Content Attention, Graph Structure Attention, and a Fusion Constraint Decoder. The two-layer LSTM comprises an attention LSTM and a language LSTM. The attention LSTM computes its hidden state as follows:

where the input concatenates a global image representation extracted from ResNet152, the previous word embedding, and the previous hidden state of the language LSTM, and a learnable matrix performs dimension reduction.

Since the ASG is a graph-based structure, two types of attention are used: one based on semantic content and one based on graph structure.

Semantic Content Attention. Semantic content attention mainly takes semantic content into account. In the following formulas, the node features are first initialized and then adjusted through Formula (9), which resembles a shortcut connection. Finally, the importance of each node is normalized by a softmax function.

where the two parameter sets are learnable parameters of semantic content attention.
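Semantic content attention can be sketched as additive (Bahdanau-style) attention, which is an assumption since the formulas are not reproduced here: each node is scored against the decoder hidden state through a tanh layer, and the scores are normalized by softmax. The shortcut-style adjustment of Formula (9) is omitted, and `W_x`, `W_h`, and `w` are hypothetical parameter names.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(W: Matrix, x: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def semantic_content_attention(nodes: List[Vector], h: Vector, W_x: Matrix,
                               W_h: Matrix, w: Vector) -> Vector:
    """Additive attention: score_i = w . tanh(W_x x_i + W_h h), then softmax."""
    query = matvec(W_h, h)
    scores = []
    for x in nodes:
        hidden = [math.tanh(a + b) for a, b in zip(matvec(W_x, x), query)]
        scores.append(sum(wi * zi for wi, zi in zip(w, hidden)))
    return softmax(scores)
```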

Graph Structure Attention. Graph structure attention takes into account the graph structure around each node. The ASG reflects the user’s intended order. According to the structure of the ASG, if the current node is an object node, the next node to be described is likely a relationship node or an attribute node adjacent to it; with lower probability, it is another object node that shares a relationship with the current object node. Thus, there are three types of attention transfer: (1) stay at the same node to describe the object with several words; (2) move to the next node to describe a relationship or attribute; and (3) move to another object node connected through the same relationship node. Each type of transfer is applied to the attention of the previous step.

To calculate the probability of each attention transfer, the current decoder hidden state is combined with the previous attention feature to form the state feature.

The weights of the three attention transfers are then calculated from the state feature as follows:

where the two parameter sets are learnable, and the output gives the weight of each attention transfer.
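The three attention transfers can be sketched as repeated application of a node-to-node transition matrix to the previous attention, in the spirit of the graph flow attention of ASG2Caption [12]. This is a hypothetical sketch: we assume the transition matrix `M` is column-normalized so that attention mass is preserved, and `W_s` is an illustrative matrix mapping the state feature to three transfer scores.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(W: Matrix, x: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def graph_structure_attention(prev_alpha: Vector, M: Matrix, state: Vector,
                              W_s: Matrix) -> Vector:
    """Mix three attention transfers: stay, one hop, and two hops.

    M[i][j] is the share of attention that flows from node j to node i.
    """
    stay = prev_alpha                # (1) keep describing the same node
    one_hop = matvec(M, prev_alpha)  # (2) move to an adjacent node
    two_hop = matvec(M, one_hop)     # (3) move on through the relationship node
    weights = softmax(matvec(W_s, state))  # weight of each transfer
    return [weights[0] * a + weights[1] * b + weights[2] * c
            for a, b, c in zip(stay, one_hop, two_hop)]
```

For a chain object, relationship, object, attention that starts on the first object reaches the relationship node after one hop and the second object after two hops.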

The final step is to fuse the results of semantic content attention and graph structure attention, as follows:

where the two parameter sets are learnable, and the resulting sentinel value decides whether to pay more attention to the result of semantic content attention or to that of graph structure attention.

The fused attention indicates which nodes should be focused on; the current attention feature obtained from these nodes is then used to generate the next word.
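The fusion step and the resulting attention feature can be sketched as a convex combination of the two attention distributions followed by an attention-weighted sum of node features. This is a minimal sketch under the assumptions that the sentinel `beta` (computed elsewhere, for example by a sigmoid) weights semantic content attention and that the attention feature is a weighted sum; the function names are hypothetical.

```python
from typing import List

Vector = List[float]


def fuse_attention(alpha_c: Vector, alpha_g: Vector, beta: float) -> Vector:
    """Blend content (alpha_c) and structure (alpha_g) attention, beta in [0, 1]."""
    return [beta * c + (1.0 - beta) * g for c, g in zip(alpha_c, alpha_g)]


def attention_feature(alpha: Vector, nodes: List[Vector]) -> Vector:
    """Attention-weighted sum of node features (the current attention feature)."""
    dim = len(nodes[0])
    return [sum(a * x[j] for a, x in zip(alpha, nodes)) for j in range(dim)]
```

With `beta = 0.25`, the structure-based attention dominates, which is the behaviour one would want when the ASG order strongly predicts the next node.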

Fusion Constraint Decoder. The fusion constraint decoder generates the next word from the current attention feature, the hidden state of the attention LSTM, and the previous word. First, the hidden feature is generated by the language LSTM of the two-layer LSTM structure [21].

In the standard method, the next word is generated as follows:

To generate more accurate sentences, the following formula was used instead of the standard method.

where the vocabulary size is the total number of words. Formula (19) generates two word probability distributions from the hidden feature. The output word is the one with the highest probability across the two distributions. This design yields more accurate results.
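The two-head decoding rule can be sketched as two fully connected layers followed by softmax, choosing the word whose larger probability across the two heads is highest. Taking the element-wise maximum is our reading of "the highest probability according to" both distributions and is an assumption; `W1` and `W2` are hypothetical names for the two layers, and biases are omitted.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(W: Matrix, x: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fusion_constraint_decode(h: Vector, W1: Matrix,
                             W2: Matrix) -> Tuple[int, Vector, Vector]:
    """Return the chosen word index and the two word distributions."""
    p1 = softmax(matvec(W1, h))  # first fully connected head
    p2 = softmax(matvec(W2, h))  # second fully connected head
    # Pick the word whose larger probability across the two heads is highest.
    word = max(range(len(p1)), key=lambda i: max(p1[i], p2[i]))
    return word, p1, p2
```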

Meanwhile, to make the two fully connected layers learn different emphases of the hidden feature, a similarity loss encourages them to generate different vectors.

A smaller similarity value indicates that the two vectors are less similar. A similarity threshold is also set: if the similarity is below the threshold, the two vectors are considered sufficiently dissimilar and are excluded from the loss. Through this loss, the probability vectors over the incorrect words are made as orthogonal as possible.
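The thresholded similarity loss can be sketched as a hinge on cosine similarity. This is a hypothetical sketch: we assume cosine similarity is computed between the two heads' probability vectors with the ground-truth word removed (our reading of "non-correct words"), and that pairs below the threshold `tau` contribute zero loss.

```python
import math
from typing import List

Vector = List[float]


def similarity_loss(p1: Vector, p2: Vector, target: int, tau: float) -> float:
    """Hinge on the cosine similarity of two heads, ignoring the target word."""
    a = [v for i, v in enumerate(p1) if i != target]
    b = [v for i, v in enumerate(p2) if i != target]
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    cosine = sum(x * y for x, y in zip(a, b)) / norm if norm > 0 else 0.0
    # Pairs already less similar than tau contribute nothing.
    return max(0.0, cosine - tau)
```

Orthogonal non-target distributions give zero loss, while identical ones give `1 - tau`.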

Hence, IANR is trained with the standard cross-entropy loss and the similarity loss:

where a weighting coefficient balances the cross-entropy loss and the similarity loss.
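The combined objective can be illustrated with a short sketch that sums the per-step cross-entropy of one caption and adds the weighted similarity losses. The coefficient name `lam` and the choice of a single distribution per step for the cross-entropy term are assumptions made for illustration.

```python
import math
from typing import List


def cross_entropy(probs: List[float], target: int) -> float:
    """Negative log-likelihood of the target word."""
    return -math.log(probs[target])


def total_loss(step_probs: List[List[float]], targets: List[int],
               sim_losses: List[float], lam: float) -> float:
    """Cross-entropy over all decoding steps plus lam times the similarity loss."""
    ce = sum(cross_entropy(p, t) for p, t in zip(step_probs, targets))
    return ce + lam * sum(sim_losses)
```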

Graph Updating. A sentence contains not only visual words but also non-visual words, such as “a”, “the”, and “some”. When a non-visual word is generated, it does not express the accessed graph nodes, so the graph should not be updated. Therefore, a sentinel gate is proposed to dynamically adjust the attention weight based on the output of the language LSTM and the currently accessed node vector as follows:

where the gate output is a vector indicating whether or not the generated word expresses the meaning of the accessed node.

As in NMT [6], each node is updated by an erase operation followed by an add operation:

Therefore, a node can be erased (set to 0) once it no longer needs to be accessed, while a node that needs to be described by more than one word can be updated through the add operation. In this way, the node features are updated at every step before the next word is generated.
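The gated erase-then-add update can be sketched as follows, loosely following the memory-update style used in ASG2Caption [12]. In this hypothetical sketch, a scalar gate from `visual_gate` (an illustrative name) is multiplied by each node's attention weight, and that product scales how much of the node is erased and how much new content is added; the erase and add vectors are taken as given inputs rather than computed.

```python
import math
from typing import List

Vector = List[float]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def visual_gate(h: Vector, node: Vector, w_h: Vector, w_x: Vector,
                b: float) -> float:
    """Hypothetical sentinel gate: near 1 if the word expresses the node."""
    z = (sum(a * c for a, c in zip(w_h, h))
         + sum(a * c for a, c in zip(w_x, node)) + b)
    return sigmoid(z)


def update_nodes(nodes: List[Vector], alpha: Vector, gate: float,
                 erase: Vector, add: Vector) -> List[Vector]:
    """Erase-then-add update of every node, scaled by gate * attention."""
    updated = []
    for x, a in zip(nodes, alpha):
        u = gate * a  # how strongly this node was just expressed
        updated.append([xj * (1.0 - u * ej) + u * aj
                        for xj, ej, aj in zip(x, erase, add)])
    return updated
```

A gate of 0 (a non-visual word such as “the”) leaves every node unchanged, while a gate of 1 with a full erase vector resets the attended node to zero.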

4. Experiments

4.1. Experimental Datasets

An extensive set of experiments was performed on two widely used datasets, MSCOCO Entities [11] and Flickr30k Entities [41], to evaluate the effectiveness of the proposed model. Both datasets contain images with corresponding English descriptions and correspondences between nouns and image regions. The ASG control signals were automatically constructed from the annotations of the two datasets, as in Ref. [12]. For a fair comparison with other methods, we followed the Karpathy split for MSCOCO Entities, using 112,742 images for training, 4790 for validation, and 4979 for testing. Each image has about five sentences, each with a corresponding ASG control signal. Flickr30k Entities, which is smaller than MSCOCO Entities, has 29,000 images for training, 1014 for validation, and 1000 for testing; each image likewise has about five sentences with corresponding ASG control signals.

4.2. Experimental Evaluation Metrics

We evaluated the quality of the generated sentences in two aspects: accuracy and diversity. For accuracy, six evaluation metrics are employed: BLEU@4 (B@4) [42], METEOR (M) [43], ROUGE (R) [44], CIDEr (C) [45], SPICE (S) [46], and the alignment score (NW) [11]. B@4 computes the n-gram precision of the generated words but does not consider synonyms or part-of-speech information. METEOR accounts for synonyms through WordNet and combines unigram precision and recall in a harmonic mean. ROUGE measures similarity based on the longest common subsequence between the generated and reference sentences. CIDEr assigns a lower weight to common words and a higher weight to novel words; since most novel words are objects, attributes, and relations rather than prepositions or adverbs, CIDEr better reflects how well these content words are matched. SPICE evaluates the semantic similarity between the generated sentences and the ground truth. The alignment score (NW) evaluates the consistency between the generated caption and the region sequence. For diversity, we followed Ref. [12] and used two metrics: n-gram diversity (D-n) [13,47] and Self-CIDEr (s-C) [48]. D-n is the ratio of distinct n-grams to the total number of words in the best five captions. Self-CIDEr is a recent metric that uses CIDEr scores as the kernel matrix K in latent semantic analysis (LSA) to evaluate semantic diversity. The range of BLEU@4, METEOR, ROUGE, SPICE, NW, D-n, and s-C is . The range of CIDEr is . Note that all the scores are reported as percentages; higher scores indicate more accurate or more diverse captions.
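The D-n diversity metric defined above is simple enough to sketch directly: collect the distinct n-grams over a set of captions and divide by the total number of words. This minimal sketch assumes lowercased whitespace tokenization, which may differ from the tokenizer used in the reported experiments; `distinct_n` is an illustrative name.

```python
from typing import List


def distinct_n(captions: List[str], n: int) -> float:
    """Ratio of distinct n-grams to total words over a set of captions."""
    ngrams = set()
    total_words = 0
    for caption in captions:
        tokens = caption.lower().split()
        total_words += len(tokens)
        # n-grams are collected within each caption, never across captions.
        for i in range(len(tokens) - n + 1):
            ngrams.add(tuple(tokens[i:i + n]))
    return len(ngrams) / total_words if total_words else 0.0
```

For the two captions “a man rides a horse” and “a man rides a bike”, there are 10 words, 5 distinct unigrams, and 5 distinct bigrams, so both D-1 and D-2 equal 0.5.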

4.3. Experimental Details

Visual features for grounded regions are extracted with a standard Faster R-CNN [23] pretrained on Visual Genome, and global image features are extracted with ResNet152 [5] pretrained on ImageNet. For the information-augmented graph encoder, the dimension of , and , using two layers of MR-WGCN. For the language decoder, the global feature dimension , the word embedding size and the hidden size of both LSTMs were set to 512. The network was trained with the cross-entropy loss and the designed similarity loss for 25 epochs, using the Adam optimizer with a learning rate of 0.0002 and a batch size of 128. For language decoding, beam search with a beam size of 5 was used in all experiments. All experiments were conducted on an NVIDIA GTX 1080 Ti GPU. IANR builds on ASG2Caption [12].
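Beam search, used above with a beam size of 5, can be sketched generically. The callback `step_log_probs` is a hypothetical stand-in for the decoder that returns one log-probability per vocabulary token given a prefix; the sketch sums log-probabilities without length normalization, which is a simplifying assumption, and it does not reproduce the actual IANR decoding code. The toy model shows a case where a wider beam recovers a sequence that greedy decoding (beam size 1) misses.

```python
import math
from typing import Callable, List, Tuple

Scorer = Callable[[List[int]], List[float]]


def beam_search(step_log_probs: Scorer, bos: int, eos: int,
                beam_size: int = 5, max_len: int = 20) -> List[int]:
    """Return the highest-scoring token sequence found by beam search."""
    beams: List[Tuple[float, List[int]]] = [(0.0, [bos])]
    finished: List[Tuple[float, List[int]]] = []
    for _ in range(max_len):
        candidates = []
        for score, seq in beams:
            for token, lp in enumerate(step_log_probs(seq)):
                if lp == -math.inf:
                    continue  # skip impossible continuations
                candidates.append((score + lp, seq + [token]))
        # Keep the beam_size best expansions across all beams.
        candidates.sort(key=lambda c: c[0], reverse=True)
        beams = []
        for score, seq in candidates[:beam_size]:
            if seq[-1] == eos:
                finished.append((score, seq))
            else:
                beams.append((score, seq))
        if not beams:
            break
    finished.extend(beams)  # unfinished hypotheses if max_len was reached
    return max(finished, key=lambda c: c[0])[1]


# Toy decoder: token 0 = BOS, 1 = EOS, 2 and 3 are words.
NEG = -math.inf


def toy_model(prefix: List[int]) -> List[float]:
    last = prefix[-1]
    if last == 0:  # after BOS, word 2 is likelier than word 3
        return [NEG, NEG, math.log(0.6), math.log(0.4)]
    if last == 2:  # word 2 ends the caption with probability 0.5
        return [NEG, math.log(0.5), NEG, NEG]
    return [NEG, math.log(0.9), NEG, NEG]  # word 3 ends it with probability 0.9
```

With `beam_size=5`, the search keeps both first words and returns `[0, 3, 1]` (probability 0.36); with `beam_size=1`, it commits to word 2 and returns `[0, 2, 1]` (probability 0.30).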

4.4. Ablation Experiment

To quantify the impact of each proposed module, we compared IANR with a series of ablation models under various settings. To ensure fairness, all of the following experiments use the same initialization parameters for the network.

4.4.1. Impact of Each Module on Encoder

To study the effects of the proposed information-augmented graph modules (memory-augmented attention (MA) [39], relational discriminator (RD), and multi-relational weighted graph convolutional network (MR-WGCN)) on the encoder, we started from a baseline model [12] that has a multi-relational graph encoder (MR-GCN) [40]. We then replaced MR-GCN in the baseline model with the proposed MR-WGCN, which takes into account how messages pass from one type of node to another along different edges. After that, we successively added MA and RD to the new model. Table 1 shows the results of each model on the two datasets.

Table 1.

Settings and results of ablation studies. (Baseline: ASG2Caption [12]; MR-WGCN: replace MR-GCN in the baseline model with the proposed MR-WGCN; MR-WGCN+MA: replace MR-GCN with MR-WGCN and insert memory-augmented attention (MA); MR-WGCN+MA+RD: replace MR-GCN with MR-WGCN and insert memory-augmented attention (MA) and the relational discriminator (RD) into the baseline model). Bold for the best.

The model with MR-WGCN outperforms the baseline model on all accuracy metrics except B@4, whose score on MSCOCO Entities is 0.02 lower than that of the baseline. In terms of diversity, it is better than the baseline on MSCOCO Entities but worse on Flickr30k Entities. This suggests that MR-WGCN improves the accuracy of visual words. MR-WGCN may tend to generate similar sentences on the smaller dataset because the insufficient training data prevent it from learning the differences between sentences. Adding the MA module to inject prior information (“MR-WGCN+MA”) improves all evaluation metrics, indicating that MA consistently improves both the accuracy and the diversity of the generated sentences. The final model, which additionally adopts the proposed RD module to evaluate the prior information, outperforms “MR-WGCN+MA” on all accuracy metrics. In terms of diversity, it outperforms “MR-WGCN” on the larger dataset but not on the smaller one. Compared with the baseline model, it achieves better results on all metrics.

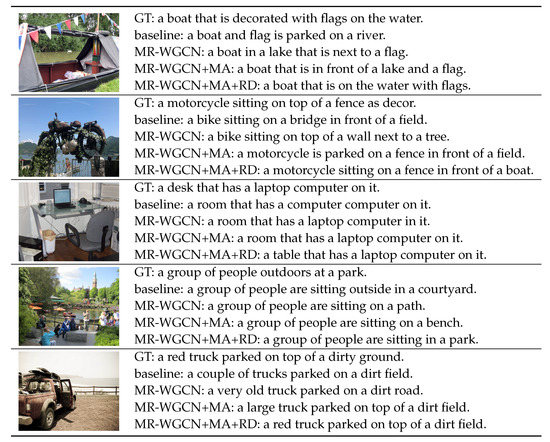

Figure 2 shows examples of images, the captions generated by the ablated models under various settings, and human-annotated ground-truth sentences (GT). In these examples, the baseline model generates captions that are plausible but inaccurate, whereas the proposed modules yield more accurate captions. Specifically, the designed modules have three advantages. (1) IANR correctly identifies the relationships between objects. The first, second, and third examples show a boat on the water with flags, a motorcycle sitting on a fence, and a laptop computer on a table, respectively. The baseline model instead describes the flag as parked on a river, a bike sitting on a bridge, and a laptop computer in a room, while IANR describes these scenes correctly. (2) IANR describes the objects in the control signals more accurately. For example, IANR describes a motorcycle and a fence rather than a bike and a bridge in the second example, a laptop computer rather than a desktop computer in the third example, and people sitting in a park rather than in a courtyard in the fourth example. (3) IANR counts objects more accurately: the fifth example contains one truck, not a couple of trucks. IANR has these advantages because it can add prior information about objects and remove useless information.

Figure 2.

Examples of captions generated by the baseline model and the various ablation models described in Section 4.4.1, together with the corresponding ground truths (GT).

4.4.2. Impact of Each Module on Decoder

To quantify the impact of each proposed module in the decoder, Table 2 reports an ablation study. All models use the MR-WGCN, MA, and RD modules in the encoder. In the base model (Rows 1 and 5), the decoder has only semantic content attention (SCA). In Rows 2 and 6, a fusion constraint decoder (FCD) is added, which improves the accuracy and diversity metrics except for a 0.01 drop in B@4 on Flickr30k Entities. In particular, CIDEr/SPICE/Self-CIDEr improve by 2.1/0.31/0.11 and 2.57/0.04/1.13 on the two datasets, respectively. Comparing Row 2 with Row 3, in which graph updating (gupda) is employed to update node features based on the generated words and the currently accessed nodes, shows an improvement in all metrics except s-C. On Flickr30k Entities, B@4, R, NW, and s-C slightly decrease, while C, S, and M increase markedly. This suggests that the network with gupda tends to generate similar prepositions when training data are insufficient, while the increases in M, C, and S indicate that nouns, relations, and so forth are described correctly. Rows 4 and 8 enhance the decoder with graph structure attention (GSA), which captures structural information in the graph to complement semantic content attention. Hence, this model outperforms the other variants on most metrics on both datasets. METEOR/CIDEr/SPICE/NW/D-1/D-2/Self-CIDEr improve by 0.1/0.98/0.27/0.03/0.52/0.51/2.54 and 0.04/0.42/2.78/0.7/0.63/0.55/0.63/0.11, respectively. The improvement on the small dataset is greater than that on the large dataset, and the improvement for visual words is more pronounced. Hence, the designed GSA helps generate more diverse and accurate sentences.

Table 2.

Comparison of variants of the proposed decoder modules on MSCOCO Entities. (✓) indicates “used” (SCA: semantic content attention; GSA: graph structure attention; gupda: graph updating; FCD: Fusion Constraint Decode). Bold for the best.

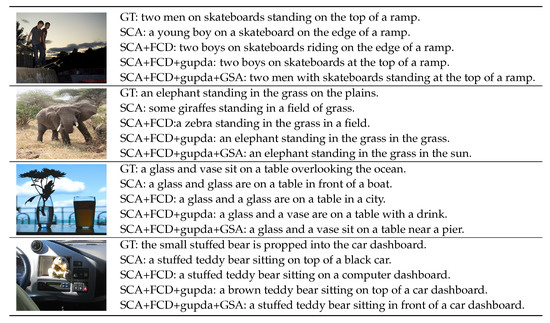

Figure 3 shows examples of images and the generated captions. These examples show two advantages of the proposed modules. (1) IANR counts objects more accurately: the first and second examples contain two men and an elephant, respectively, not a young boy and some giraffes. (2) IANR describes objects more accurately: for example, an elephant, not some giraffes, standing in the grass in the second example; a glass and a vase, not a glass and a glass, on a table in the third example; and a teddy bear in front of a car dashboard, not on top of a black car, in the fourth example. IANR has these advantages because it makes fuller use of the control signal to generate correct sentences.

Figure 3.

Examples of captions generated by various ablation models. GT represents one of the corresponding ground-truth sentences. SCA denotes the model with the MR-WGCN, MA, and RD modules whose decoder has only semantic content attention (SCA). SCA+FCD, SCA+FCD+gupda, and SCA+FCD+gupda+GSA denote adding the FCD, gupda, and GSA modules to SCA step by step.

4.5. Comparative Experiment

Table 3 compares the proposed method with current state-of-the-art controllable and uncontrollable models. In this comparison, we use the same MSCOCO Entities dataset split as Refs. [11,16,35]. Compared with other controllable image captioning models, IANR exceeds the state of the art (SOTA) in METEOR, CIDEr, and SPICE by 0.64, 50.58, and 4.91, respectively. These gains show that IANR substantially improves the ability to describe objects and relationships.

Table 3.

Comparisons with the state of the art on the MSCOCO Entities dataset. B@4, M, R, C, and S stand for BLEU@4, METEOR, ROUGE-L, CIDEr, and SPICE, respectively. Bold for the best.

4.5.1. Comparison on the Same Test Data

A well-known advantage of controllable image captioning is the ability to generate diverse image captions from different control signals. Because each model constructs its control signals differently, some images or sentences are removed from each model's test data. Hence, we compared IANR with the two latest controllable models, VSR [16] and SCT [11], both of which have released their code, extracted features, and pretrained models.

For a fair comparison, the models were evaluated on the common part of the VSR and SCT test sets. The common part of MSCOCO Entities has 4678 images and 14,179 sentences; that of Flickr30k Entities has 1000 images and 4982 sentences. The input feature sequence of “SCT” consists of the region features corresponding to the words in the sentence. “SCT w/o sequence” generates sentences by predicting the sequence of the selected regions. “VSR” achieves a better score by specifying the ground-truth verb and the associated object node features. “VSR w/o verb” removes the ground-truth verb information and uses only the features of the ground-truth regions and relations.
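The common-part evaluation described above amounts to intersecting the test samples that survive each model's control-signal construction and then counting the remaining images and sentences. The minimal sketch below assumes each sample is keyed by a hypothetical `(image_id, sentence_id)` pair; the function names are illustrative and are not taken from the VSR or SCT releases.

```python
from typing import Iterable, Set, Tuple

# Hypothetical sample key: (image_id, sentence_id). This is an assumption for
# illustration, not the file format used by the VSR or SCT releases.
Sample = Tuple[int, int]


def common_test_split(*splits: Iterable[Sample]) -> Set[Sample]:
    """Return the samples that are present in every model's test split."""
    sets = [set(s) for s in splits]
    if not sets:
        return set()
    common = sets[0]
    for s in sets[1:]:
        common &= s  # keep only samples every model can be evaluated on
    return common


def split_stats(samples: Set[Sample]) -> Tuple[int, int]:
    """Count (distinct images, sentences) in a split."""
    images = {img for img, _ in samples}
    return len(images), len(samples)


if __name__ == "__main__":
    vsr_test = [(1, 10), (1, 11), (2, 20), (3, 30)]
    sct_test = [(1, 10), (2, 20), (2, 21), (4, 40)]
    common = common_test_split(vsr_test, sct_test)
    print(sorted(common))        # [(1, 10), (2, 20)]
    print(split_stats(common))   # (2, 2)
```

Keying on sentences as well as images matters because a model may drop individual sentences of an image whose control signal cannot be built, so an image-level intersection alone would overstate the shared set.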

The quantitative results are shown in Table 4. The captions generated by IANR on both datasets have considerably higher accuracy and diversity (CIDEr 224 vs. 165.66 for VSR; Self-CIDEr 50.05 vs. 46.29 for VSR). “SCT w/o sequence” obtains the worst results because it lacks the ground-truth region sequences. Unlike VSR, IANR does not need to know in advance what the relationship (verb) is, which is more consistent with practical applications of image captioning. Even though its control signal carries less information, IANR still scores higher than VSR on both the accuracy and the diversity metrics.
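Self-CIDEr, the diversity measure used above, scores the set of captions for one image through the eigenvalues of their pairwise similarity matrix: with m captions and eigenvalues λ_i, r = sqrt(λ_max) / Σ_i sqrt(λ_i), and the diversity is -log_m(r), which is 0 for identical captions and 1 for mutually unrelated ones. The minimal sketch below follows that recipe but, as an assumption to keep it self-contained, replaces the CIDEr kernel with cosine similarity over unigram counts, so its values are not comparable to the numbers in Table 4.

```python
import math
from collections import Counter

import numpy as np


def bow_cosine_kernel(captions):
    """Pairwise cosine similarity of unigram-count vectors.

    Assumed stand-in for the CIDEr kernel; real CIDEr uses 1- to 4-grams
    weighted by corpus-level TF-IDF statistics.
    """
    counts = [Counter(c.lower().split()) for c in captions]
    vocab = sorted(set().union(*counts))
    vecs = np.array([[cnt[w] for w in vocab] for cnt in counts], dtype=float)
    norms = np.linalg.norm(vecs, axis=1, keepdims=True)
    vecs = vecs / np.maximum(norms, 1e-12)  # unit-length rows; empty captions stay zero
    return vecs @ vecs.T


def self_sim_diversity(captions):
    """Eigenvalue-based diversity in [0, 1], following the Self-CIDEr recipe."""
    m = len(captions)
    if m < 2:
        return 0.0
    eigvals = np.linalg.eigvalsh(bow_cosine_kernel(captions))
    roots = np.sqrt(np.clip(eigvals, 0.0, None))  # clip tiny negative round-off
    if roots.sum() == 0.0:
        return 0.0
    r = roots.max() / roots.sum()   # weight of the dominant "mode" of the set
    return float(-math.log(r) / math.log(m))  # -log_m(r)
```

Multiplying the result by 100 gives the scale on which Self-CIDEr values are usually reported.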

Table 4.

Performance comparisons on the MSCOCO Entities and Flickr30k Entities datasets. All tests were performed on the common part of the datasets, and each model used region features. Bold for the best.

4.5.2. Comparison on the Same Training Data

In Section 4.5.1, we compared the latest models on the same test data, but those models used different control signals and feature sequences. Hence, we also compare the proposed model with several carefully designed baselines trained on the same data. These baselines are: (1) AoANet, which employs self-attention in both the encoder and the decoder; (2) BUTD, which dynamically attends over relevant object regions when generating different words; (3) SCT, which uses a set of visual regions as the control signal; and (4) ASG2Caption, which uses an abstract scene graph (ASG) as the control signal.

Table 5 shows the comparison with the aforementioned models on MSCOCO Entities. IANR achieves state-of-the-art results on the automatic evaluation metrics and outperforms all baselines in alignment with the control signal, as measured by NW. IANR outperforms the controllable AoANet and controllable BUTD by 46.72 on CIDEr, 2.24 on NW, and 7.98 on Self-CIDEr. Compared with SCT trained with the same visual features, our model improves by 93.48 on CIDEr, 9.42 on NW, and 17.17 on Self-CIDEr. Finally, IANR also outperforms ASG2Caption on all metrics, for example, by 17.7 on CIDEr, 0.91 on NW, and 0.62 on Self-CIDEr.
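NW presumably refers, as in SCT [11], to a Needleman–Wunsch alignment between the sequence of control regions and the sequence of noun chunks in the generated caption. The sketch below shows the global-alignment dynamic program over token sequences; the match, mismatch, and gap scores and the mapping of the raw score to [0, 1] are assumptions for illustration, not the exact evaluation code behind Table 5.

```python
from typing import Sequence


def needleman_wunsch(a: Sequence[str], b: Sequence[str],
                     match: int = 1, mismatch: int = -1, gap: int = -1) -> int:
    """Global alignment score between two token sequences."""
    n, m = len(a), len(b)
    # score[i][j] = best score for aligning a[:i] with b[:j]
    score = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        score[i][0] = i * gap
    for j in range(1, m + 1):
        score[0][j] = j * gap
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            diag = score[i - 1][j - 1] + (match if a[i - 1] == b[j - 1] else mismatch)
            score[i][j] = max(diag, score[i - 1][j] + gap, score[i][j - 1] + gap)
    return score[n][m]


def normalized_nw(control: Sequence[str], generated: Sequence[str]) -> float:
    """Map the raw score from [-L, L] to [0, 1], L = longer length (assumed scheme)."""
    longest = max(len(control), len(generated))
    if longest == 0:
        return 1.0
    raw = needleman_wunsch(control, generated)
    return (raw + longest) / (2 * longest)


if __name__ == "__main__":
    print(normalized_nw(["man", "horse"], ["man", "horse"]))  # 1.0
    print(normalized_nw(["horse", "man"], ["man", "horse"]))  # 0.25
```

Because the alignment is order-sensitive, a caption that mentions the right objects in the wrong order (the second call) is penalized, which is why NW is used as a measure of how closely a caption follows the control signal.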

Table 5.

The performance comparisons on the MSCOCO Entities dataset for controllable image captioning. All models were re-implemented and trained on the same region feature. Bold for the best.

4.6. Results and Discussion

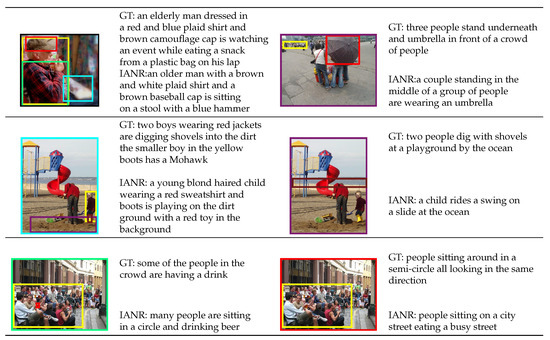

In the experiments, some generated captions were incorrect. This section visualizes several failure cases on Flickr30k Entities, as shown in Figure 4. In the left example of the first row, there are too many boxes, so the captioning model fails to describe the relationships between them. In the right example of the first row, the control signal requires the model to describe people and umbrellas that have no relationship with each other; hence, IANR generates an incorrect description. In the last two rows, the left side shows the correct sentence and control signal, and the right side shows the incorrect result. In the second row, it is difficult to describe the relationship between the child and the ocean. On the right side of the third row, the red box contains two boxes indicating a semicircle and the same direction, respectively; these boxes are too close together, which makes them difficult for the model to describe.

Figure 4.

Examples of ground truth and a failed case generated by the proposed model.

As Figure 4 shows, a proper control signal is the key issue. In future work, we plan to design appropriate control signals and corresponding captioning models for specific practical applications.

5. Conclusions

All currently available object-controllable image captioning methods overlook the prior information of detected objects and their relationships. To address this, this paper proposed a novel information-augmented graph encoder, composed of an information-augmented embedding module and a multi-relational weighted graph encoder. A dynamic attention model was designed to fuse the control signal with node features augmented with prior information. In addition, we designed a similarity loss for generating diverse captions. Extensive experiments on MSCOCO Entities and Flickr30k Entities show that IANR achieves state-of-the-art performance among controllable image captioning models. More remarkably, IANR exceeds the best published CIDEr score to date by 6.7%/5.6% on the MSCOCO Entities/Flickr30k Entities test splits. It also significantly improves the diversity of captions.
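The internals of the multi-relational weighted graph encoder are not restated in this section. As a hypothetical sketch only, the layer below extends the relational GCN of Schlichtkrull et al. with scalar edge weights: every node keeps a self-loop projection of its own feature and, for each relation type r, adds the projections of its incoming neighbors under a relation-specific matrix W_r, each scaled by its edge weight divided by the summed incoming weight for that relation. The function name, the edge-tuple format, and this normalization are all assumptions, not the authors' MR-WGCN.

```python
import numpy as np


def mr_weighted_gcn_layer(h, edges, w_self, w_rel):
    """One relational graph-convolution layer with scalar edge weights.

    Hypothetical sketch, not the authors' MR-WGCN.
    h:      (N, D_in) node features
    edges:  iterable of (src, dst, relation, weight); messages flow src -> dst
    w_self: (D_in, D_out) self-loop projection
    w_rel:  (R, D_in, D_out) one projection per relation type
    """
    h = np.asarray(h, dtype=float)
    edges = list(edges)
    out = h @ w_self  # self-loop term for every node
    # Normalizer per (destination node, relation): sum of incoming edge weights.
    norm = np.zeros((h.shape[0], w_rel.shape[0]))
    for _, dst, rel, wt in edges:
        norm[dst, rel] += wt
    for src, dst, rel, wt in edges:
        scale = wt / max(norm[dst, rel], 1e-12)
        out[dst] += scale * (h[src] @ w_rel[rel])  # weighted, relation-specific message
    return np.maximum(out, 0.0)  # ReLU activation
```

Normalizing by the summed weights (rather than by the neighbor count, as in the original R-GCN) keeps the aggregated message on the same scale regardless of how many objects share a relation, while still letting strongly weighted neighbors dominate.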

The main limitation of this study is the difficulty of constructing a control signal that specifies what needs to be described in a given image. However, in some specific applications, it is possible to know approximately what needs to be described: for example, describing the road or surrounding items when assisting a visually impaired person to walk, or constructing a control signal with a detection model [55] that finds salient objects in remote sensing images. Compared with other image captioning models, IANR is significantly faster, running in real time at up to 1.15 ms per image on a GPU-enabled device. Hence, IANR is well suited to being combined with detection models [55] to describe salient objects. In future work, we plan to simplify the control signals, compress the model, or combine it with detection methods to make IANR available for mobile devices and real-time tasks.
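Per-image latency figures such as the 1.15 ms quoted above depend on how timing is done. A minimal timing sketch is shown below; `model` is any hypothetical callable that captions one image, and the warm-up count is an arbitrary choice. On a GPU, kernels run asynchronously, so a real measurement would also need a device synchronization (for example, `torch.cuda.synchronize()` in PyTorch) before each timer read; that step is omitted here to keep the sketch dependency-free.

```python
import statistics
import time
from typing import Any, Callable, Sequence


def per_image_latency_ms(model: Callable[[Any], Any], images: Sequence[Any],
                         warmup: int = 10) -> float:
    """Median wall-clock latency per image, in milliseconds."""
    for img in images[:warmup]:
        model(img)  # warm-up: caches, lazy initialization, JIT compilation
    timings = []
    for img in images:
        start = time.perf_counter()
        model(img)
        timings.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(timings)


def throughput_per_second(latency_ms: float) -> float:
    """Images per second implied by a sequential per-image latency."""
    return 1000.0 / latency_ms
```

If 1.15 ms is a sequential single-image latency, it corresponds to roughly 1000 / 1.15 ≈ 870 images per second; batched inference can change this figure in either direction, so the batch size should be reported alongside the latency.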

Author Contributions

S.D.: conceived, designed the whole experiment, and wrote the original draft. Y.Z.: designed and performed the experiment. H.Z. (Hong Zhu): contributed to the review of this paper. J.S.: participated in the design of the experiments. D.W.: participated in the verification of the experimental results. N.X.: participated in the review and revision of the paper. G.L.: provided funding support. H.Z. (Huiyu Zhou): contributed to the review of this paper and provided funding support. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the NSFC No. 61771386, and by the Key Research and Development Program of Shaanxi No. 2020SF-359, and by the Research and development of manufacturing information system platform supporting product lifecycle management No. 2018GY-030, Doctoral Research Fund of Xi’an University of Technology, China under Grant Program No. 103-451119003, and by the Natural Science Foundation of Shaanxi Province No. 2021JQ-487, and by the Xi’an Science and Technology Foundation No. 2019217814GXRC014CG015-GXYD14.11, and by Natural Science Foundation of Shaanxi Province No. 2023-JC-YB-550.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used to support the findings of this study are included within the paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Mitchell, M.; Dodge, J.; Goyal, A.; Yamaguchi, K.; Stratos, K.; Han, X.; Mensch, A.; Berg, A.; Berg, T.; Daumé, H., III. Midge: Generating image descriptions from computer vision detections. In Proceedings of the 13th Conference of the European Chapter of the Association for Computational Linguistics, Avignon, France, 23–27 April 2012; pp. 747–756.

- Ushiku, Y.; Harada, T.; Kuniyoshi, Y. Efficient image annotation for automatic sentence generation. In Proceedings of the 20th ACM international conference on Multimedia, Nara, Japan, 2 November 2012; pp. 549–558.

- Kuznetsova, P.; Ordonez, V.; Berg, T.L.; Choi, Y. Treetalk: Composition and compression of trees for image descriptions. Trans. Assoc. Comput. Linguist. 2014, 2, 351–362.

- Liu, X.; Li, H.; Shao, J.; Chen, D.; Wang, X. Show, tell and discriminate: Image captioning by self-retrieval with partially labeled data. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 338–354.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Graves, A.; Wayne, G.; Danihelka, I. Neural turing machines. arXiv 2014, arXiv:1410.5401. Available online: https://arxiv.53yu.com/abs/1410.5401 (accessed on 7 March 2023).

- Gan, C.; Gan, Z.; He, X.; Gao, J.; Deng, L. Stylenet: Generating attractive visual captions with styles. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3137–3146.

- Guo, L.; Liu, J.; Yao, P.; Li, J.; Lu, H. Mscap: Multi-style image captioning with unpaired stylized text. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 4204–4213.

- Shetty, R.; Rohrbach, M.; Anne Hendricks, L.; Fritz, M.; Schiele, B. Speaking the same language: Matching machine to human captions by adversarial training. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 4135–4144.

- Johnson, J.; Karpathy, A.; Li, F.-F. Densecap: Fully convolutional localization networks for dense captioning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4565–4574.

- Cornia, M.; Baraldi, L.; Cucchiara, R. Show, control and tell: A framework for generating controllable and grounded captions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 8307–8316.

- Chen, S.; Jin, Q.; Wang, P.; Wu, Q. Say as you wish: Fine-grained control of image caption generation with abstract scene graphs. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 9962–9971.

- Deshpande, A.; Aneja, J.; Wang, L.; Schwing, A.G.; Forsyth, D. Fast, diverse and accurate image captioning guided by part-of-speech. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 10695–10704.

- Kim, D.J.; Oh, T.H.; Choi, J.; Kweon, I.S. Dense relational image captioning via multi-task triple-stream networks. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 7348–7362.

- Deng, C.; Ding, N.; Tan, M.; Wu, Q. Length-controllable image captioning. In Proceedings of the European Conference on Computer Vision. Springer, Glasgow, UK, 23–28 August 2020; pp. 712–729.

- Chen, L.; Jiang, Z.; Xiao, J.; Liu, W. Human-like controllable image captioning with verb-specific semantic roles. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 16846–16856.

- Vinyals, O.; Toshev, A.; Bengio, S.; Erhan, D. Show and tell: A neural image caption generator. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3156–3164.

- Rennie, S.J.; Marcheret, E.; Mroueh, Y.; Ross, J.; Goel, V. Self-critical sequence training for image captioning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7008–7024.

- Liu, S.; Zhu, Z.; Ye, N.; Guadarrama, S.; Murphy, K. Improved image captioning via policy gradient optimization of spider. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 873–881.

- Zhang, L.; Sung, F.; Liu, F.; Xiang, T.; Gong, S.; Yang, Y.; Hospedales, T.M. Actor-critic sequence training for image captioning. arXiv 2017, arXiv:1706.09601. Available online: https://arxiv.53yu.com/abs/1706.09601 (accessed on 7 March 2023).

- Anderson, P.; He, X.; Buehler, C.; Teney, D.; Johnson, M.; Gould, S.; Zhang, L. Bottom-up and top-down attention for image captioning and visual question answering. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6077–6086.

- Huang, L.; Wang, W.; Chen, J.; Wei, X.Y. Attention on attention for image captioning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 4634–4643.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28. Available online: https://proceedings.neurips.cc/paper/2015/hash/14bfa6bb14875e45bba028a21ed38046-Abstract.html (accessed on 7 March 2023).

- Yang, X.; Tang, K.; Zhang, H.; Cai, J. Auto-encoding scene graphs for image captioning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 10685–10694.

- Guo, L.; Liu, J.; Tang, J.; Li, J.; Luo, W.; Lu, H. Aligning linguistic words and visual semantic units for image captioning. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; pp. 765–773.

- Yao, T.; Pan, Y.; Li, Y.; Mei, T. Exploring visual relationship for image captioning. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 684–699.

- Zellers, R.; Yatskar, M.; Thomson, S.; Choi, Y. Neural motifs: Scene graph parsing with global context. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 5831–5840.

- Chen, T.; Zhang, Z.; You, Q.; Fang, C.; Wang, Z.; Jin, H.; Luo, J. “Factual” or “Emotional”: Stylized Image Captioning with Adaptive Learning and Attention. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 519–535.

- Gurari, D.; Zhao, Y.; Zhang, M.; Bhattacharya, N. Captioning images taken by people who are blind. In Proceedings of the European Conference on Computer Vision. Springer, Glasgow, UK, 23–28 August 2020; pp. 417–434.

- Mathews, A.; Xie, L.; He, X. Senticap: Generating image descriptions with sentiments. In Proceedings of the AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; Volume 30.

- Alikhani, M.; Sharma, P.; Li, S.; Soricut, R.; Stone, M. Clue: Cross-modal coherence modeling for caption generation. arXiv 2020, arXiv:2005.00908. Available online: https://arxiv.53yu.com/abs/2005.00908 (accessed on 7 March 2023).

- Shuster, K.; Humeau, S.; Hu, H.; Bordes, A.; Weston, J. Engaging image captioning via personality. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 12516–12526.

- Mathews, A.; Xie, L.; He, X. Semstyle: Learning to generate stylised image captions using unaligned text. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8591–8600.

- Zheng, Y.; Li, Y.; Wang, S. Intention oriented image captions with guiding objects. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 8395–8404.

- Shao, J.; Yang, R. Controllable image caption with an encoder–decoder optimization structure. Appl. Intell. 2022, 52, 11382–11393.

- Zhong, Y.; Wang, L.; Chen, J.; Yu, D.; Li, Y. Comprehensive image captioning via scene graph decomposition. In Proceedings of the European Conference on Computer Vision. Springer, Glasgow, UK, 23–28 August 2020; pp. 211–229.

- Park, D.H.; Darrell, T.; Rohrbach, A. Robust change captioning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 4624–4633.

- Zhu, Z.; Wang, T.; Qu, H. Macroscopic control of text generation for image captioning. arXiv 2021, arXiv:2101.08000.

- Cornia, M.; Stefanini, M.; Baraldi, L.; Cucchiara, R. Meshed-memory transformer for image captioning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 10578–10587.

- Schlichtkrull, M.; Kipf, T.N.; Bloem, P.; Berg, R.v.d.; Titov, I.; Welling, M. Modeling relational data with graph convolutional networks. In Proceedings of the European Semantic Web Conference, Crete, Greece, 3–7 June 2018; pp. 593–607.

- Plummer, B.A.; Wang, L.; Cervantes, C.M.; Caicedo, J.C.; Hockenmaier, J.; Lazebnik, S. Flickr30k entities: Collecting region-to-phrase correspondences for richer image-to-sentence models. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 2641–2649.

- Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.J. Bleu: A method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, Philadelphia, PA, USA, 7–12 July 2002; pp. 311–318.

- Banerjee, S.; Lavie, A. METEOR: An automatic metric for MT evaluation with improved correlation with human judgments. In Proceedings of the 43rd Annual Meeting of the Association for Computational Linguistics, Ann Arbor, MI, USA, 25–30 June 2005; pp. 228–231.

- Lin, C.Y. Rouge: A package for automatic evaluation of summaries. In Text Summarization Branches Out; Association for Computational Linguistics: Barcelona, Spain, 2004; pp. 74–81.

- Vedantam, R.; Lawrence Zitnick, C.; Parikh, D. Cider: Consensus-based image description evaluation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 4566–4575.

- Anderson, P.; Fernando, B.; Johnson, M.; Gould, S. Spice: Semantic propositional image caption evaluation. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; pp. 382–398.

- Aneja, J.; Agrawal, H.; Batra, D.; Schwing, A. Sequential latent spaces for modeling the intention during diverse image captioning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 4261–4270.

- Wang, Q.; Chan, A.B. Describing like humans: On diversity in image captioning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 4195–4203.

- Wang, N.; Xie, J.; Wu, J.; Jia, M.; Li, L. Controllable Image Captioning via Prompting. arXiv 2022, arXiv:2212.01803. Available online: https://arxiv.53yu.com/abs/2212.01803 (accessed on 7 March 2023).

- Zhang, M.; Chen, J.; Li, P.; Jiang, M.; Zhou, Z. Topic scene graphs for image captioning. IET Comput. Vis. 2022, 16, 364–375.

- Huo, D.; Kastner, M.A.; Komamizu, T.; Ide, I. Action Semantic Alignment for Image Captioning. In Proceedings of the 2022 IEEE 5th International Conference on Multimedia Information Processing and Retrieval (MIPR), Online, 2–4 August 2022; pp. 194–197. Available online: https://ieeexplore.ieee.org/abstract/document/9874541 (accessed on 7 March 2023).

- Liu, W.; Chen, S.; Guo, L.; Zhu, X.; Liu, J. Cptr: Full transformer network for image captioning. arXiv 2021, arXiv:2101.10804. Available online: https://arxiv.53yu.com/abs/2101.10804 (accessed on 7 March 2023).

- Shi, Z.; Zhou, X.; Qiu, X.; Zhu, X. Improving image captioning with better use of captions. arXiv 2020, arXiv:2006.11807. Available online: https://arxiv.53yu.com/abs/2006.11807 (accessed on 7 March 2023).

- Chen, L.; Zhang, H.; Xiao, J.; Nie, L.; Shao, J.; Liu, W.; Chua, T.S. Sca-cnn: Spatial and channel-wise attention in convolutional networks for image captioning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5659–5667.

- Gadamsetty, S.; Ch, R.; Ch, A.; Iwendi, C.; Gadekallu, T.R. Hash-Based Deep Learning Approach for Remote Sensing Satellite Imagery Detection. Water 2022, 14, 707.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).