Abstract

Chinese pesticide named-entity recognition (NER) aims to identify named entities related to pesticide properties in unstructured Chinese pesticide information texts. Because pesticide information data are massive, fragmented, and specialized and carry complex semantic relationships, a deep learning method based on multi-feature fusion is applied to improve the accuracy of pesticide NER. In this study, the pesticide data set is manually annotated with the begin-inside-outside (BIO) sequence annotation scheme. Bi-directional long short-term memory (BiLSTM) and iterated dilated convolutional neural networks (IDCNN) are combined with a conditional random field (CRF) to form the BiLSTM-IDCNN-CRF model, which is applied to named-entity recognition on Chinese pesticide data sets. IDCNN is introduced to enhance the semantic representation and local feature capture of the text. The BiLSTM and IDCNN networks are combined to obtain long-distance dependencies and context features of different granularity from pesticide text. Finally, CRF performs the sequence labeling task. In the experiments, the precision, recall, and F1 score of the BiLSTM-IDCNN-CRF model on the Chinese pesticide data set were 78.59%, 68.71%, and 73.32%, respectively, which are significantly better than those of the other compared models. The experiments show that the BiLSTM-IDCNN-CRF model can effectively identify and extract entities from Chinese pesticide information text, which is helpful for constructing a pesticide information knowledge graph and intelligent question answering.

1. Introduction

With the popularization of modern information technology, agricultural informatization is developing continuously. A mass of information exists in the form of electronic text, and pesticide text is growing at an exponential rate. Given the massive and fragmented pesticide information data and the demand for intelligent services in the field of pesticides, one important problem is how to identify and extract the relevant entities automatically, accurately, and efficiently from a large quantity of Chinese pesticide information texts in order to build a knowledge base of pesticide information.

Pesticides are widely used in agricultural production, and a large body of literature data needs to be fully mined, developed, and utilized. Chinese pesticide named entity is a general term for the various pesticide-related named entities in Chinese pesticide texts, mainly including pesticide names, active ingredients and contents, indications, usage, and other information. In the vertical field of pesticides, the extracted entities are applied to subsequent natural language processing tasks such as relation extraction, event extraction, and domain knowledge graph construction. Neural network technology can automatically, accurately, and quickly identify named entities in the field of pesticide technology from a large amount of literature, which helps pesticide researchers carry out related scientific work, helps farmers use pesticides scientifically, reasonably, and safely, and promotes the application of text extraction technology in agricultural data analysis. Research on named-entity recognition (NER) in the general field is relatively mature: named entities in the general field are relatively stable in number, standardized in structure, and unified in naming rules. In the field of agricultural information, NER for agricultural planting and for agricultural pests and diseases [1,2,3,4,5] has developed gradually in recent years. In the field of Chinese pesticides, there are many kinds of named entities with distinct domain particularity; a pesticide may have many aliases, and the same pesticide name may have completely different semantics in different texts [6]. Pesticide NER is therefore challenging because of the complex structure of Chinese pesticide information text.

Aiming at the Chinese pesticide NER, a BiLSTM-IDCNN-CRF model with multi-feature fusion is applied in this study, which combines the BiLSTM model and IDCNN model to extract features from texts with different granularities, and then integrates the features obtained by these two models and introduces the CRF layer for classification. Compared with a single model, the model applied in this paper can make full use of text information for Chinese pesticide entity recognition. Experiments show that the applied method in this paper achieves better results than BERT, RoBERTa, BiLSTM, IDCNN, CRF, GlobalPointer, and other models on pesticide data sets.

The main contributions of this study are as follows. Firstly, a data set for the Chinese pesticide technical field was constructed through preprocessing and annotated with BIO sequence labels. Secondly, a multi-model fusion model, BiLSTM-IDCNN-CRF, is applied to Chinese pesticide named-entity recognition: the BiLSTM makes the model attend to global context features, which makes the text features more accurate, while the IDCNN has the advantage of taking local features into account. The text features of different granularity extracted by the BiLSTM and IDCNN models are fused and then combined with the CRF layer to mine hidden information in pesticide text labels, which improves the accuracy of Chinese pesticide named-entity recognition. Thirdly, the pesticide named entities identified in this study were also used in the subsequent relation extraction task, laying a foundation for the construction of the pesticide knowledge graph.

The structure of this paper is as follows. Section 1 briefly describes the application background of pesticide NER and the main contributions and structure of this paper. Section 2 analyzes the research status of NER in different fields. Section 3 introduces the preprocessing method for pesticide text data. Section 4 presents the research contents and methods of this study and introduces the related technical theories and models. Section 5 compares and discusses the experimental results of several groups of models and concludes that the BiLSTM-IDCNN-CRF model has the best effect on the identification of pesticide named entities. Section 6 summarizes the main content and gives future research directions.

2. Related Work

The methods of NER for agricultural pests and diseases can be divided into rule-based methods, dictionary matching-based methods, traditional machine learning methods, and deep learning methods. Among these, the rule-based and dictionary matching-based methods suffer from poor universality and time-consuming manual operation. As a traditional machine learning method, the conditional random field (CRF) is commonly used for NER because of its high accuracy in jointly predicting entity categories; NER for entities such as crops, pests, and diseases in the agricultural field has been based on CRF. Traditional machine learning methods transform NER into a sequence labeling problem, but they rely heavily on manual feature extraction, which requires considerable engineering skill and domain knowledge and costs a lot of manpower and time. In addition, because of the limitations of manually designed features, traditional machine learning is difficult to apply to large-scale data sets and often results in over-fitting. Deep neural networks, widely adopted in recent years, can automatically extract data features and classify them directly. Such models do not require costly manual feature design and can automatically learn the features of each word or phrase. Since deep learning is a data-driven method, the larger the data scale, the more accurate the learned model and the better the classification performance. Thus, deep learning models based on neural networks can better solve the above problems of traditional machine learning in agricultural NER.

In the field of natural language processing, different orders of words may cause a huge difference in the meaning of sentences, so it is often necessary to map words into word vectors and then input them into neural networks. For the task of NER, the neural network framework used commonly is the encoder-decoder architecture; that is, the given text sequence is encoded into the corresponding semantic code by the encoder model, and then the semantic code is sent into the decoder model to obtain the label sequence of the given text sequence, to train the end-to-end model. The end-to-end model can avoid the cumulative propagation of errors among the modules and improve recognition accuracy.

A recurrent neural network (RNN) [7] feeds data into the model step by step in time order and passes the previous output to the next hidden layer, which gives it good performance on sequence data. Bidirectional recurrent neural networks (bidirectional RNNs) combine forward and backward propagation, so that a prediction draws on past, present, and future information; they can therefore connect fully with the context and, to a certain extent, use the information of the entire sequence. However, the semantics of the RNN output are biased towards the later inputs, and because the weight matrix is reused at every step, vanishing or exploding gradients occur when the sequence is too long.

Built on RNN units, the long short-term memory (LSTM) model [8,9,10] selectively uses historical information through input, output, and forget gates and can effectively capture information in longer sequences, which alleviates the above problem. Bidirectional LSTM (BiLSTM) [11] combines the outputs of forward and backward sequence processing and can therefore better capture long-distance dependencies in text; it is a bidirectional recurrent neural network combined with long-term and short-term memory. In this model, three gating units are introduced to address the vanishing and exploding gradient problems of RNN [12,13].

Iterated dilated convolutional neural network (IDCNN) [14,15] is composed of multiple layers of different dilated convolutional neural networks (DCNN) [16]. Since each word in the input sentence may have an impact on the labeling of the current position, the last layer of neurons may not be able to capture the information of the entire input sentence, and only part of the raw data can be obtained. IDCNN can extract local features while paying attention to long-distance information; hence, it solves the long-distance dependence problem that the convolutional neural network CNN [17,18,19] cannot solve.

Huang et al. [20] introduced the LSTM model into sequence labeling and were the first to combine a bidirectional long short-term memory network with a CRF layer for the task of NER. In the BiLSTM-CRF model, the bidirectional LSTM component can effectively use past and future input features, while the CRF layer can use sentence-level tag information. Experiments show that the BiLSTM-CRF model can make full use of future input features without the help of word embedding and can produce accurate and robust labeling performance. Ma et al. [21] introduced a neural network framework combining BiLSTM, CNN, and CRF, which can extract deep features from text at the word and character level. The model needs no feature engineering or data preprocessing, whereas traditional sequence labeling systems require a lot of task-specific knowledge in the form of manual features and data preprocessing. Guo et al. [22] proposed a Chinese NER model combining multi-scale local context features and a self-attention mechanism for agricultural pest and disease texts, constructed the JMCA-ADP model based on BiLSTM-CRF, and established AgCNER, an available corpus of agricultural pests and diseases. Compared with similar corpora, this corpus has more categories and a larger sample size, and the model addresses the problem of ignoring the potential local context features of the text.

Jiang Xiang et al. [23] proposed a BiLSTM-IDCNN-CRF model based on word embedding, which combines the features of different granularity obtained by the BiLSTM and IDCNN networks; each output of the dilated convolutional layers in IDCNN covers a wide range of information without the information loss caused by pooling. The model obtained good results for NER on an ecological governance technology data set. X. Yang et al. [24] compared and analyzed the experimental effects of BERT, RoBERTa, ALBERT, and ELECTRA. Their experiments show that the RoBERTa-MIMIC model achieves state-of-the-art performance on three public clinical concept extraction datasets, which verifies the excellent performance of the RoBERTa model in medical named-entity recognition tasks. Liu Liu et al. [25] compared the NER performance of CRF, LSTM, LSTM-CRF, and BERT on intangible cultural heritage texts about traditional music. Their experiments show that BERT has the best semantic representation ability among the four models and can better complete the automatic recognition of traditional music terms, but the work is limited by recognizing only some unique terms and by a small training set.

Compared with a general corpus, Chinese pesticide text has a higher information density and contains a large number of specific terms from zoology, botany, and chemistry. Phenomena such as long-distance dependence, bidirectional dependence, the coexistence of normative expressions with abbreviated colloquial expressions, and nested terminology are widespread. Some existing models that integrate multiple neural networks have complex structures, and some easily make errors in identifying the boundaries and categories of pesticide entities. The granularity of the extracted entities is relatively coarse, and the semantic features obtained from the text are insufficient. As a result, the extracted knowledge structure is not comprehensive enough, and it lacks precise attribute information on pesticides as well as fine-grained knowledge about usage, storage, and transportation methods.

Pre-training language models in different tasks can acquire some features based on a good deal of empirical knowledge, which has stronger semantic representation and feature extraction ability than traditional deep learning methods. In terms of vectorial representation of pesticide information, deep learning can fully extract semantic features from text statements and provide ideas and references for pesticide NER.

3. Preprocessing

The data set of this study comes from the China pesticide information network (http://www.chinapesticide.org.cn/, accessed on 8 June 2022): important pesticide text information was crawled from the pesticide registration data labels in the industry data, and nearly 40,000 records were retained after de-duplication and data cleaning. Other pesticide texts mainly include pesticide news texts, pesticide question-and-answer texts, and other texts from the network. Compared with the official website data, the network texts are colloquial and informal, their sentence patterns are less rigorous, their semantic expression is less clear, and their composition is more complex.

3.1. Important Format Information Extraction

Pesticide text preprocessing can not only extract important format information directly but also eliminate noise and reduce interference. Preprocessing is mainly used to clean the original data, which is conducive to the deep learning framework to extract text features and improve the efficiency of NER.

Pesticide instructions have a unified format and writing method, including pesticide registration certificate number, registration certificate holder, pesticide name, active ingredients and content, dosage form, scope and method of use, technical requirements for use, poisoning salvage guide, storage and transportation methods, and other format information. The format information extracted from the pesticide instructions can be regarded directly as an important pesticide named entity and can also contain an important pesticide named entity.

3.2. Text Normalization

Chinese pesticide instructions contain many non-standard words or symbols, which have no practical significance for the recognition of named entities. Text normalization mainly includes simplification, elimination of interference items, and data collation. Some pesticides are labeled with traditional Chinese characters in the instructions. Simplification means converting the traditional characters in the text into simplified characters, such as “中崋” (traditional Chinese) into “中华” (simplified Chinese). The interfering items mainly include bullets, special characters, noise words, and meaningless sentences. Bullets in the text, such as “★”, “●”, and “■”, mark an order in the specification and usually have no meaning for the named entity. Meaningless special characters, spaces, and punctuation marks should also be removed. Distracting words or meaningless sentences such as “is one of the household supplies”, “convenient”, and “simple” carry no practical meaning in the pesticide instructions and are removed as well. Data collation includes the handling of missing data and the division of long sentences. The format of each pesticide instruction is not completely fixed, so the missing items must be dealt with and the data organized into text in a unified format. Long sentences are split at punctuation marks so that the length of each sentence does not exceed 256 words.
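The bullet removal and long-sentence splitting described above can be sketched as follows. This is a minimal illustration rather than the preprocessing code used in this study: the function names, the bullet set, and the choice of sentence-ending punctuation are assumptions, and traditional-to-simplified conversion is omitted because it requires a dedicated conversion table.

```python
import re

# Bullet symbols named in the text; this set is illustrative, not exhaustive.
BULLETS = "★●■"

def normalize(text):
    """Remove bullets and all whitespace from a pesticide instruction string."""
    text = re.sub(f"[{re.escape(BULLETS)}]", "", text)
    return re.sub(r"\s+", "", text)

def split_long(text, max_len=256):
    """Split text at sentence-ending punctuation so no piece exceeds max_len."""
    # Split right after each sentence-ending mark, keeping the mark attached.
    sentences = [s for s in re.split(r"(?<=[。！？；;!?])", text) if s]
    pieces, current = [], ""
    for s in sentences:
        # A single sentence longer than max_len is cut at a hard boundary.
        while len(s) > max_len:
            if current:
                pieces.append(current)
                current = ""
            pieces.append(s[:max_len])
            s = s[max_len:]
        if len(current) + len(s) > max_len:
            pieces.append(current)
            current = s
        else:
            current += s
    if current:
        pieces.append(current)
    return pieces
```

Sentence-ending punctuation is kept in this sketch because the splitter depends on it; removing other meaningless punctuation would be a separate step.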

4. Method

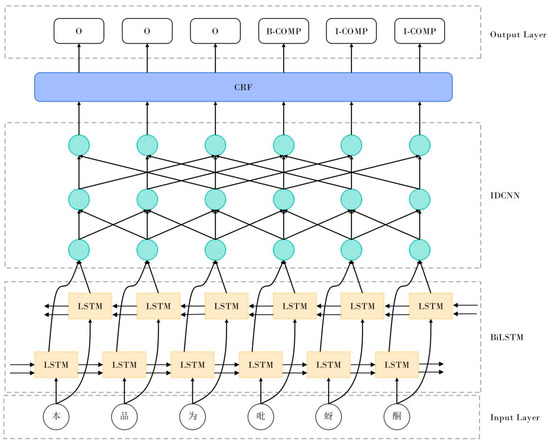

The Chinese pesticide NER system first uses BiLSTM to model the whole sentence of the text, and then the features containing context information are learned through the context of the sentence, and the obtained features are sent to the IDCNN model. Since the output parameters of the BiLSTM model and the input parameters of the IDCNN model may be different, in order to reduce the resolution loss caused by the change in the number of features, 1 × 1 convolution is used to adjust the number of context features learned by the BiLSTM model, so that the output features of BiLSTM are consistent with the input features of IDCNN. In the IDCNN model, the dilated convolution is used to further learn a wider range of context features, and then the learned features are spliced to obtain the features learned by the IDCNN. Finally, label inferences are derived from the output features of the IDCNN through a fully connected layer CRF. The framework of the Chinese pesticide named-entity recognition system is shown in Figure 1.

Figure 1. Framework of Chinese pesticide named-entity recognition system. Notes: The English translation of the Chinese character is “This product is pyrazone”.
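A 1 × 1 convolution applies the same linear map to the feature vector at every position, which is how it can change the number of features between the BiLSTM output and the IDCNN input without mixing neighboring tokens. The sketch below illustrates this in plain Python; the function name and the example dimensions are assumptions and are not taken from the model in this study.

```python
def conv1x1(features, weight, bias):
    """Kernel-size-1 convolution: one shared linear map applied per position.

    features : list of per-token vectors, each of length in_dim
    weight   : out_dim rows, each of length in_dim
    bias     : list of length out_dim
    """
    return [
        [sum(w * x for w, x in zip(row, vec)) + b for row, b in zip(weight, bias)]
        for vec in features
    ]

# Two tokens with 4 features each are projected to 2 features each.
tokens = [[1.0, 2.0, 3.0, 4.0], [0.0, 1.0, 0.0, 1.0]]
projected = conv1x1(tokens, [[1, 0, 0, 0], [0, 1, 0, 1]], [0.0, 0.5])
```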

4.1. BiLSTM Layer

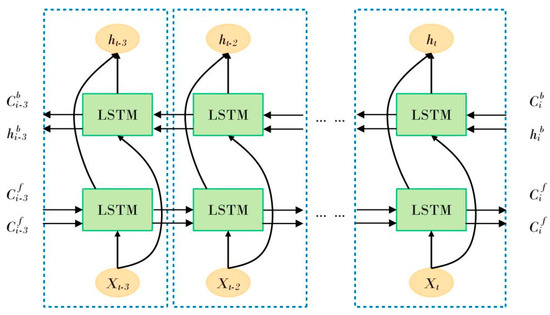

In BiLSTM, the input is the embedding vector of each word, which contains character embedding and word embedding, and the output is the score of each word prediction label, which will be the input of the IDCNN layer. Its structure is shown in Figure 2. BiLSTM learns the sequence data from left to right as well as from right to left through two layers of LSTM neurons, and the feature output is the future feature of the forward word and the historical feature of the backward word.

Figure 2. BiLSTM model diagram.

Given the input sequence $X = (x_1, x_2, \ldots, x_n)$, where $x_t$ represents the input variable corresponding to time $t$ and $n$ is the length of the sequence, let $h_t$ be the output of BiLSTM, and let $\overrightarrow{h_t}$ and $\overleftarrow{h_t}$ represent the output vectors of the forward and backward LSTM, respectively. Then:

$$h_t = \overrightarrow{h_t} \oplus \overleftarrow{h_t} \tag{1}$$

where ⊕ represents the vector splicing operation; that is, two feature vectors are spliced according to a certain feature dimension. For a given input $X$, the output from the BiLSTM model is $H = (h_1, h_2, \ldots, h_n)$.

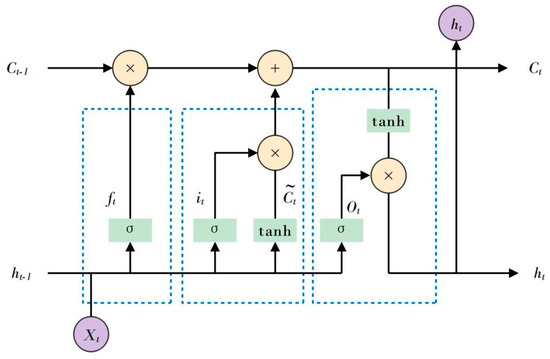

Forward module output and backward module output can be implemented by basic LSTM modular units. The internal structure of the LSTM [9] model is shown in Figure 3:

Figure 3. Internal structure of LSTM model.

The LSTM model consists of three gate units: forget gate, input gate, and output gate, which determine the memory and forgetting of information at each moment.

The forget gate controls whether the information will be forgotten at each moment. $f_t$ is the output of the forget gate, which represents the feature information retained by the model that is important to the current sequence. According to the input of the current time step $x_t$ and the hidden state of the previous time step $h_{t-1}$, the forget gate determines how much of the past information carried by the previous cell state $c_{t-1}$ is forgotten; we have:

$$f_t = \sigma\left(W_f \cdot [h_{t-1}, x_t] + b_f\right) \tag{2}$$

The input gate determines how much new information is added to the model. As the output of the input gate, $i_t$ represents how much of the input information needs to be filtered; $\tilde{c}_t$ represents the candidate value of the current cell state, then:

$$i_t = \sigma\left(W_i \cdot [h_{t-1}, x_t] + b_i\right) \tag{3}$$

$$\tilde{c}_t = \tanh\left(W_c \cdot [h_{t-1}, x_t] + b_c\right) \tag{4}$$

The updated cell state $c_t$ serves as part of the input for the next time step; we have:

$$c_t = f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \tag{5}$$

The output gate determines whether there is information output at each moment. As the output of the output gate, $o_t$ indicates the output features selected according to their relevance in the modeling. $h_t$ represents the hidden state generated by the gate value $o_t$ acting on the updated cell state $c_t$, with $\tanh$ used as the activation function, and serves as part of the input for the next time step. The whole process of the output gate is to generate the hidden state $h_t$, then there are:

$$o_t = \sigma\left(W_o \cdot [h_{t-1}, x_t] + b_o\right) \tag{6}$$

$$h_t = o_t \odot \tanh(c_t) \tag{7}$$

where $h_{t-1}$ denotes the hidden state at time $t-1$; $c_{t-1}$ represents the state of the cell at time $t-1$; $\sigma$ is the sigmoid function and $\odot$ denotes element-wise multiplication; $W_f$, $W_i$, $W_c$, and $W_o$ are the corresponding trainable parameters obtained by random initialization at the beginning of training and optimized continuously; $b_f$, $b_i$, $b_c$, and $b_o$ are the corresponding bias parameters that do not participate in the training and are obtained by random initialization.
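The gate computations of a single LSTM step, and the forward/backward splicing that turns two LSTMs into a BiLSTM, can be sketched in plain Python as below. The sketch assumes the standard LSTM formulation; the function names and the parameter layout (a dictionary keyed by gate) are illustrative assumptions, not the implementation used in this study.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, b, v):
    """Return W @ v + b for a list-of-rows matrix W."""
    return [sum(w * x for w, x in zip(row, v)) + bi for row, bi in zip(W, b)]

def lstm_step(x_t, h_prev, c_prev, params):
    """One LSTM time step; params maps 'f', 'i', 'c', 'o' to (W, b)."""
    z = h_prev + x_t  # list concatenation: [h_{t-1}, x_t]
    f = [sigmoid(a) for a in affine(*params["f"], z)]    # forget gate
    i = [sigmoid(a) for a in affine(*params["i"], z)]    # input gate
    g = [math.tanh(a) for a in affine(*params["c"], z)]  # candidate cell state
    o = [sigmoid(a) for a in affine(*params["o"], z)]    # output gate
    c = [ft * cp + it * gt for ft, cp, it, gt in zip(f, c_prev, i, g)]
    h = [ot * math.tanh(ct) for ot, ct in zip(o, c)]
    return h, c

def run_lstm(xs, params, hidden):
    """Run an LSTM over a sequence, starting from zero states."""
    h, c = [0.0] * hidden, [0.0] * hidden
    outputs = []
    for x in xs:
        h, c = lstm_step(x, h, c, params)
        outputs.append(h)
    return outputs

def bilstm(xs, fwd_params, bwd_params, hidden):
    """Splice forward and backward hidden states at every position."""
    forward = run_lstm(xs, fwd_params, hidden)
    backward = run_lstm(xs[::-1], bwd_params, hidden)[::-1]
    return [f + b for f, b in zip(forward, backward)]
```

Each output vector has twice the hidden size, because the forward and backward states are concatenated rather than summed.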

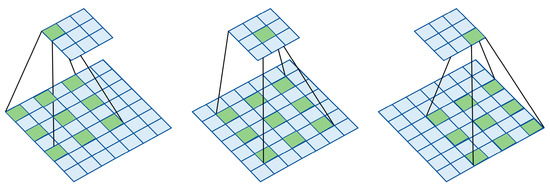

4.2. IDCNN Layer

The IDCNN model is a connected layer constructed from dilated convolutions, as shown in Figure 4. The feature vector H obtained in the BiLSTM layer is sent into the IDCNN to obtain the dependency of the spatial features on a given text sequence.

Figure 4. Dilated convolution IDCNN model.

Let the parameters of the IDCNN be , is the parameter of the i-th dilated convolution block, denotes its output, where is the feature transformation function. is the dilated convolution of the first layer, and the dilated distance is 1, is the feature vector output by the -th layer dilated convolution. In general, . indicates the -th layer dilated convolution and the dilated distance is . In general, denotes the second and thereafter dilated convolutional layer, and denotes the activation function ReLU. According to the defined parameters, there are:

Finally, the output can be obtained by splicing the results of the convolution blocks, as is shown in Formula (11):

In this study, RoBERTa, BiLSTM, BERT, and CRF models are selected for various combinations with IDCNN to capture the long-term dependency features and local features of the Chinese pesticide text. During the convolution operation, because a dilation width is added to the convolution kernel, the effective convolution width (the receptive field) increases exponentially with the number of layers and quickly covers all of the input data. For each word of the input text, IDCNN finally outputs a vector, which is the probability of each tag corresponding to that word as calculated by IDCNN.
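The growth of the receptive field with depth can be checked with a short calculation. The sketch below is illustrative only: the kernel size of 3 and the dilation widths 1, 2, 4 are assumptions chosen for the example and are not the settings reported for the model in this study.

```python
def receptive_field(dilations, kernel_size=3):
    """Receptive field width (in tokens) of stacked 1-D dilated convolutions."""
    width = 1
    for d in dilations:
        width += (kernel_size - 1) * d
    return width

def dilated_conv1d(seq, kernel, dilation):
    """Zero-padded, same-length 1-D dilated convolution over a list of numbers."""
    half = (len(kernel) - 1) // 2
    out = []
    for t in range(len(seq)):
        acc = 0.0
        for j, w in enumerate(kernel):
            idx = t + (j - half) * dilation  # taps are `dilation` tokens apart
            if 0 <= idx < len(seq):
                acc += w * seq[idx]
        out.append(acc)
    return out

plain = receptive_field([1, 1, 1])    # three ordinary convolution layers
dilated = receptive_field([1, 2, 4])  # three layers with doubling dilation
```

With these assumed settings, three ordinary layers see 7 tokens while three dilated layers see 15, and doubling the dilation at each added layer roughly doubles the width again.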

4.3. CRF Layer

Conditional random fields (CRF) [26] is a discriminative probabilistic model based on an undirected graphical model. CRF generally assumes that the random output variables form a Markov random field. In order to make the classifier perform better, the adjacent data information is considered when labeling the data. Linear chain CRFs are often used to solve the sequence labeling problem. The observation sequence is the input, and the output is the annotation sequence. The conditional probability model is obtained by regularized maximum likelihood estimation.

The output vector of the IDCNN model is sent to CRF [27] for decoding, and the specific calculation is as shown in Formula (12):

where is the corresponding prediction tag for , is a trainable parameter, which is obtained by random initialization at the beginning of the training procedure and is optimized in training. The bias parameter is a bias parameter and is not involved in training.

The feature of dependence distribution among words in the labeled sequence is conducive to CRF fitting training data and reducing the probability of illegal sequences in labeled sequence prediction. The prediction of the tag for each word can be regarded as a classification task. For classification, the contextual features and long-distance dependence of the text should be taken into account. CRF obtains the score of a labeled sequence through the state transition matrix and obtains the global optimal sequence based on the comprehensive score of the labeled sequence.
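How a transition matrix steers decoding towards a globally optimal, legal tag sequence can be illustrated with Viterbi decoding, the usual inference procedure for a linear-chain CRF. The sketch below is a minimal plain-Python version under assumed scores; the tag set, the emission values, and the penalty of -1e4 for an illegal transition are all hypothetical.

```python
def viterbi(emissions, transitions):
    """Return the highest-scoring tag index sequence.

    emissions[t][k]   : score of tag k at position t (e.g. from the IDCNN layer)
    transitions[j][k] : score of moving from tag j to tag k
    """
    n_tags = len(emissions[0])
    score = list(emissions[0])
    backptr = []
    for emit in emissions[1:]:
        new_score, ptr = [], []
        for k in range(n_tags):
            # Best previous tag for arriving at tag k.
            best_j = max(range(n_tags), key=lambda j: score[j] + transitions[j][k])
            ptr.append(best_j)
            new_score.append(score[best_j] + transitions[best_j][k] + emit[k])
        score = new_score
        backptr.append(ptr)
    # Follow back-pointers from the best final tag.
    path = [max(range(n_tags), key=lambda k: score[k])]
    for ptr in reversed(backptr):
        path.append(ptr[path[-1]])
    return path[::-1]

# Tags: 0 = O, 1 = B-X, 2 = I-X. The transition O -> I-X is illegal under BIO.
transitions = [[0.0, 0.0, -1e4], [0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
emissions = [[1.0, 0.8, 0.0], [0.0, 0.0, 2.0]]
best_path = viterbi(emissions, transitions)
```

Choosing the best tag independently at each position would give O followed by I-X, which is not a valid BIO sequence; the transition penalty makes B-X followed by I-X the best-scoring path instead.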

There are many long-distance dependencies and bidirectional semantic dependencies in Chinese pesticide texts, e.g., “Pentafluorosulfochlor has 25 g/L of the content of effective elements. The dosage form is a dispersible oil suspension agent. It is used to control annual weeds in rice seedling fields. The dosage is 35–45 mL/mu. This product is sulfonamide herbicide.” These sentences are all about the properties of pentafluorosulfochlor and exhibit both long-distance and bidirectional semantic dependence. BiLSTM is introduced to solve the bidirectional semantic dependency problem. Two LSTMs are combined into a BiLSTM, one processing the input sequence forward and the other processing it in reverse, and their outputs are combined to obtain the final output of BiLSTM. Long dependencies are handled by the gating mechanisms in LSTM, which allow information to pass through selectively: the forget gate determines what information is discarded from the cell state, the input gate determines what information is stored in the cell state, and the output gate determines the output value, while the cell state remembers long-distance information so that past cell states can be carried to the current time step. In this way, long-span dependencies in the sequence can be captured, and what to remember and what to forget is learned through training.

On the other hand, although BiLSTM can extract forward and backward features from the whole sequence and take the global context into account, it is derived from a recurrent neural network and cannot capture local features well. The IDCNN model can capture longer context information than a traditional CNN by enlarging the receptive field through dilated convolutions, which improves the handling of long text. While taking some global features into account, IDCNN can extract local features more accurately. Therefore, the vector features output by IDCNN can supplement some local features. The BiLSTM-IDCNN-CRF model applied in this study addresses the long-distance dependency and bidirectional semantic dependency problems through BiLSTM and takes both global and local features into account through IDCNN.

5. Experimental Results and Analysis

The data set of Chinese pesticide instructions contains 10 entity categories. In order to train the parameters better, the data set is processed in this experiment. The NER model is based on word vector features, and the BIO tagging method [28] is used to label pesticide entities, that is, B-begin, I-inside, O-outside, where B-X marks the beginning of entity X, I-X marks the middle or end of entity X, and O marks tokens that do not belong to any entity. Label Studio [29] is used as the annotation tool. Some BIO tags are shown in Table 1. In this experiment, the pesticide data set was divided into a training set, a validation set, and a test set at a ratio of 8:1:1. Pesticide entity categories and examples are shown in Table 2.

Table 1. BIO labeled samples.

Table 2. Category and samples of pesticide entities.
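The BIO scheme and the 8:1:1 split can be sketched as follows. The label names `NAME` and `FORM`, the example string, and the helper names are hypothetical and serve only to illustrate the tagging; they are not the categories or tooling of this study.

```python
import random

def spans_to_bio(text, spans):
    """Convert character-level entity spans to BIO tags.

    spans is a list of (start, end, label) with end exclusive.
    """
    tags = ["O"] * len(text)
    for start, end, label in spans:
        tags[start] = f"B-{label}"
        for i in range(start + 1, end):
            tags[i] = f"I-{label}"
    return tags

def split_811(samples, seed=0):
    """Shuffle samples and split them into train/validation/test at 8:1:1."""
    items = list(samples)
    random.Random(seed).shuffle(items)
    n_train = len(items) * 8 // 10
    n_val = len(items) // 10
    return items[:n_train], items[n_train:n_train + n_val], items[n_train + n_val:]

# Hypothetical example: a pesticide name followed by a dosage form.
tags = spans_to_bio("草甘膦水剂", [(0, 3, "NAME"), (3, 5, "FORM")])
```

Here `tags` is `["B-NAME", "I-NAME", "I-NAME", "B-FORM", "I-FORM"]`, one tag per character.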

5.1. Evaluation Indicators

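In this experiment, precision, recall, accuracy, and F1-score are used to evaluate the methods in this study, since the entity categories are not balanced. The specific formulas are as follows:

$$\mathrm{Precision} = \frac{TP}{TP + FP} \tag{13}$$

$$\mathrm{Recall} = \frac{TP}{TP + FN} \tag{14}$$

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{15}$$

$$F_1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \tag{16}$$

TP is a true positive, an example that is predicted to be positive and is actually positive; FP is a false positive, an example that is predicted to be positive but is actually negative; FN is a false negative, an example that is predicted to be negative but is actually positive; TN is a true negative, an example that is predicted to be negative and is actually negative.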
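As a quick consistency check, the F1 score can be recomputed from precision and recall. The sketch below uses the standard definitions of these metrics; the function names are illustrative, and the counts passed to `prf` are made-up numbers.

```python
def prf(tp, fp, fn):
    """Precision, recall, and F1 from true-positive, false-positive, false-negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1

def f1_from(precision, recall):
    """Harmonic mean of precision and recall (same units as the inputs)."""
    return 2 * precision * recall / (precision + recall)

# Reported BiLSTM-IDCNN-CRF precision and recall, in percent.
reported_f1 = round(f1_from(78.59, 68.71), 2)
```

The recomputed value matches the reported F1 score of 73.32%.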

5.2. Analysis of Experimental Results

In order to verify the applied BiLSTM-IDCNN-CRF model on the pesticide data set, BERT-CRF, BiLSTM-CRF, GlobalPointer, RoBERTa-BiLSTM-IDCNN-CRF, and BERT-BiLSTM-IDCNN-CRF are introduced in this study for comparison.

Although the existing research has used the BiLSTM-IDCNN-CRF to perform the named-entity recognition task, the research on the named-entity recognition of Chinese pesticide data is still blank. In our study, the hyperparameters are adaptively tuned based on the performance of the model on the dataset in the process of modeling. The training framework of model BiLSTM-IDCNN-CRF uses tensorflow-gpu 2.3.0, tensorflow-addons 0.15.0, and transformers 4.6.1. The embedding dim is set to 300, hidden dim is 200, filter nums is 64, IDCNN nums is 2, maxlen is 512, and dropout is 0.3. The learning rate is .

The training framework of model BiLSTM-CRF uses torch 1.8. The maxlen is set to 512, dropout is 0.5, embed size is 256, and hidden size is 256. BERT-CRF is trained with the tensorflow 1.14.0, bert4keras 0.11.4, and keras 2.3.1 frameworks. The maxlen is set to 512, the number of BERT layers is 12, the learning rate is , and the batch size is 16. GlobalPointer uses the same training framework and parameters as BERT-CRF. RoBERTa-BiLSTM-IDCNN-CRF and BERT-BiLSTM-IDCNN-CRF use the same training framework and parameters as BiLSTM-IDCNN-CRF.
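The hyperparameters listed above for BiLSTM-IDCNN-CRF can be collected in a small configuration object. This is only a sketch: the class and field names are hypothetical, and the learning rate has no default here and must be supplied by the caller.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class BiLstmIdcnnCrfConfig:
    """Hyperparameters reported for BiLSTM-IDCNN-CRF (field names are illustrative)."""
    embedding_dim: int = 300
    hidden_dim: int = 200
    filter_nums: int = 64
    idcnn_nums: int = 2
    maxlen: int = 512
    dropout: float = 0.3
    learning_rate: Optional[float] = None  # no default; must be set explicitly

    def validate(self):
        """Raise ValueError if the configuration cannot be used for training."""
        if not 0.0 <= self.dropout < 1.0:
            raise ValueError("dropout must be in [0, 1)")
        if self.learning_rate is None or self.learning_rate <= 0:
            raise ValueError("learning_rate must be set to a positive value")

config = BiLstmIdcnnCrfConfig()
```

Keeping the configuration frozen makes it safe to share one instance across the RoBERTa- and BERT-based variants, which use the same parameters.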

Experiment results of named-entity recognition based on these different models are shown in Table 3.

Table 3. Experiment results of named-entity recognition based on different models.

For the combination of the pre-trained BERT and CRF, the precision, recall, accuracy, and F1 score of the BERT-CRF model on the pesticide data set are only 75.56%, 41.58%, 79.98%, and 53.64%, respectively. BiLSTM-CRF avoids the problem of introducing noise into the data set; as such, it can effectively learn the features of the text and implement NER by combining context information and word segmentation information. Its recall and accuracy are clearly improved compared with the BERT-CRF model, and the comprehensive evaluation index, the F1 score, is improved by 5.75%. GlobalPointer globally normalizes the pesticide data set, distinguishes nested entities from non-nested entities, and improves the F1 score by 6.61% compared with BiLSTM-CRF.

Building on the BiLSTM-CRF model, RoBERTa-BiLSTM-IDCNN-CRF introduces a robustly optimized BERT (RoBERTa) and IDCNN, addresses polysemy and long-distance dependency by learning dynamic word vector representations, and improves the word representation ability. Compared with BiLSTM-CRF, the precision, recall, accuracy, and F1 score of BiLSTM-IDCNN-CRF are improved by 9.43%, 14.64%, 2.22%, and 12.31%, respectively. The F1 score of BERT-BiLSTM-IDCNN-CRF is 1.2% higher than that of RoBERTa-BiLSTM-IDCNN-CRF. The experimental results show that BERT is better than RoBERTa on this task. With its strong semantic representation ability, BERT combined with BiLSTM and IDCNN can capture context features while taking local features into account, and multi-semantic feature fusion greatly improves pesticide entity recognition.

Compared with BiLSTM-CNN-CRF, BiLSTM-IDCNN-CRF performs better; specifically, its F1 score is nearly 10% higher, which indicates that the local feature modeling ability of IDCNN is better than that of the traditional CNN model on Chinese pesticide data. This verifies that the local feature capture capability of IDCNN helps the model make labeling decisions.

According to the experiment results in Table 3, BiLSTM-IDCNN-CRF obtains the highest performance among the compared models: its precision, recall, accuracy, and F1 score reach 78.59%, 68.71%, 91.68%, and 73.32%, respectively, which means it can obtain richer features. When predicting whether a word is part of an entity, the BiLSTM-IDCNN-CRF model not only uses the semantic features of the whole sentence but also focuses on the semantic features of the characters around the word, so it obtains better overall results.
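As a quick consistency check, the reported F1 score can be recomputed from the reported precision and recall. The sketch below assumes the standard definition of F1 as the harmonic mean of precision and recall, which this section does not restate; the function name is illustrative.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (both on the same scale)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall (in percent) reported for BiLSTM-IDCNN-CRF.
f1 = f1_score(78.59, 68.71)
print(round(f1, 2))  # prints 73.32
```

The result agrees with the F1 score of 73.32% reported for BiLSTM-IDCNN-CRF.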

5.3. Discussion

BiLSTM-CRF consists of an embedding layer, a BiLSTM layer, and a CRF layer, which together can extract high-level features of the text. The embedding layer encodes Chinese characters or words and converts the text into numerical vectors. The output of BiLSTM can also be used directly as the predicted label, but some problems remain. For example, labels that cannot be adjacent may be predicted to be adjacent. CRF is a sequence labeling model that considers the order of, and correlation between, labels. The association between states can be learned through the feature function, so the CRF layer integrates the labels of the surrounding context. Introducing CRF after the output of the BiLSTM layer can avoid invalid tag sequences and make the output more accurate and reasonable.
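The effect of adding a label-aware decoding step after BiLSTM can be illustrated with a minimal sketch. It is not a learned CRF: hard BIO constraints stand in for the learned transition scores, and the emission scores are made-up numbers rather than model outputs. The function names are hypothetical; the NAME tag follows the entity labels used in this paper.

```python
from typing import Dict, List

NEG_INF = float("-inf")


def allowed(prev: str, curr: str) -> bool:
    """BIO constraint: I-X may only follow B-X or I-X."""
    if curr.startswith("I-"):
        return prev in ("B-" + curr[2:], "I-" + curr[2:])
    return True


def viterbi(emissions: List[Dict[str, float]], tags: List[str]) -> List[str]:
    """Return the highest-scoring tag path that respects the BIO constraint."""
    # A sequence cannot start with an I- tag.
    score = {t: (NEG_INF if t.startswith("I-") else emissions[0][t]) for t in tags}
    back: List[Dict[str, str]] = []
    for emit in emissions[1:]:
        new_score, pointers = {}, {}
        for curr in tags:
            best_prev = max(
                tags, key=lambda p: score[p] if allowed(p, curr) else NEG_INF
            )
            ok = allowed(best_prev, curr)
            new_score[curr] = score[best_prev] + emit[curr] if ok else NEG_INF
            pointers[curr] = best_prev
        score = new_score
        back.append(pointers)
    # Follow back-pointers from the best final tag.
    path = [max(tags, key=lambda t: score[t])]
    for pointers in reversed(back):
        path.append(pointers[path[-1]])
    return path[::-1]


tags = ["O", "B-NAME", "I-NAME"]
# Made-up per-character scores, standing in for BiLSTM outputs.
emissions = [
    {"O": 2.0, "B-NAME": 1.0, "I-NAME": 0.5},
    {"O": 0.1, "B-NAME": 0.2, "I-NAME": 3.0},
    {"O": 1.0, "B-NAME": 0.0, "I-NAME": 0.5},
]

# Picking the best tag per position independently gives an invalid sequence.
greedy = [max(tags, key=lambda t: e[t]) for e in emissions]
constrained = viterbi(emissions, tags)
```

Here `greedy` is `["O", "I-NAME", "O"]`, where an inside tag follows an outside tag, while `constrained` is `["B-NAME", "I-NAME", "O"]`. A trained CRF reaches the same kind of correction through learned transition scores instead of fixed rules.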

The BERT-CRF model includes a BERT layer and a CRF layer. Based on a multi-layer bidirectional transformer encoder, BERT [30] is a pre-trained unsupervised natural language processing model, which solves the problem of word polysemy. The transformer uses a two-way self-attention mechanism instead of one-way integration of context information. BERT uses a pre-trained masked language model (MLM) and the deep bidirectional transformer component, which generates a deep bidirectional linguistic representation that integrates context information. BERT converts the input text into a one-dimensional vector containing a word vector, a text vector, and a position vector as the model input and outputs the one-dimensional word vector corresponding to each character as the semantic representation of the text. The pre-trained BERT can encode the annotated data and be trained to obtain an accurate semantic representation of characters. CRF then applies state transition constraints to the output of the upper layer.

GlobalPointer treats entity recognition as multi-label classification. It uses global normalization to identify named entities, handling nested and non-nested entities in the same way. If there are m entity types to be identified, then there are m multi-label classification problems. GlobalPointer is simple and efficient: it requires neither recursive calculation of the denominator during training nor dynamic programming during prediction. Ideally, the time complexity is O(1). To solve the inconsistency between training and prediction caused by recognizing the head and tail of an entity separately, GlobalPointer regards the head and tail as a whole.
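The idea of treating the head and tail of an entity as a single unit can be sketched as span-level decoding: for each entity type there is one score per (start, end) pair, and every pair whose score passes a threshold is emitted, so nested spans need no special handling. In the sketch below the score matrices are hand-written placeholders for model outputs, the threshold of 0 is an assumption, and the function name is hypothetical.

```python
from typing import Dict, List, Tuple

Span = Tuple[str, int, int]  # (entity type, start index, end index inclusive)


def decode_spans(
    scores: Dict[str, List[List[float]]], threshold: float = 0.0
) -> List[Span]:
    """Collect every (type, start, end) whose span score exceeds the threshold."""
    spans = []
    for etype, matrix in scores.items():
        for start, row in enumerate(matrix):
            for end in range(start, len(row)):  # only start <= end is a valid span
                if row[end] > threshold:
                    spans.append((etype, start, end))
    return spans


n_tokens = 4
# One n x n score matrix per entity type; all spans start out as negative.
scores = {
    etype: [[-1.0] * n_tokens for _ in range(n_tokens)]
    for etype in ("COMP", "NAME")
}
scores["COMP"][0][1] = 2.3  # tokens 0..1 scored as an active ingredient
scores["NAME"][0][0] = 1.1  # token 0 alone scored as a pesticide name (nested)

found = decode_spans(scores)
```

`found` contains both `("COMP", 0, 1)` and the nested `("NAME", 0, 0)`, since each entity type is decoded independently.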

BERT-BiLSTM-IDCNN-CRF is obtained by introducing IDCNN into the combination of BERT-CRF and BiLSTM-CRF. The BERT layer converts the sentence into a vector representation. The BiLSTM layer obtains the information of the whole sequence by reading it in the forward and backward directions. The IDCNN layer extracts the features of words and sentences, and the CRF layer produces the final prediction results.

The RoBERTa-BiLSTM-IDCNN-CRF model is obtained by replacing the pre-trained model in BERT-BiLSTM-IDCNN-CRF with RoBERTa. RoBERTa [31] contains a transformer encoder, which has powerful feature extraction capabilities. Its core part, the self-attention mechanism, can model the relationship between words at any distance and capture the grammatical structure within a sentence and the dependencies between words. RoBERTa learns dynamic word vector representations by referring to the context of words, which solves the problem that static word vectors cannot represent polysemous words and improves the word representation ability.

Based on BiLSTM-CRF, IDCNN is introduced to obtain the BiLSTM-IDCNN-CRF model. The model first uses BiLSTM-IDCNN to extract features from text information and then uses the CRF layer to classify each character or word.
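To make the local feature capture of IDCNN more concrete, the sketch below implements a plain one-dimensional dilated convolution and computes the receptive field of a stack of such layers. The kernel size of 3 and the dilation schedule [1, 1, 2] are illustrative assumptions, not settings stated in this section, and the function names are hypothetical.

```python
from typing import List


def dilated_conv1d(x: List[float], kernel: List[float], dilation: int) -> List[float]:
    """1-D dilated convolution with zero padding so the output keeps len(x)."""
    half = len(kernel) // 2
    out = []
    for i in range(len(x)):
        total = 0.0
        for k, w in enumerate(kernel):
            j = i + (k - half) * dilation  # taps are spaced `dilation` apart
            if 0 <= j < len(x):
                total += w * x[j]
        out.append(total)
    return out


def receptive_field(kernel_size: int, dilations: List[int]) -> int:
    """Number of input positions seen by one output after stacking the layers."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)


# A single impulse shows which positions a dilation-2 kernel touches.
impulse = [0.0] * 9
impulse[4] = 1.0
response = dilated_conv1d(impulse, [1.0, 1.0, 1.0], dilation=2)

rf_dilated = receptive_field(3, [1, 1, 2])  # assumed dilated stack
rf_plain = receptive_field(3, [1, 1, 1])    # same depth without dilation
```

With these assumed settings the dilated stack covers 9 input positions against 7 for the undilated stack of the same depth, which is why dilation widens the context without adding layers or parameters.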

In order to further understand the task of the Chinese pesticide NER, recognition errors were analyzed. Table 4 shows the extraction results of BiLSTM-IDCNN-CRF for 10 types of entities.

Table 4. Experimental results of BiLSTM-IDCNN-CRF entities.

The F1 score of BiLSTM-IDCNN-CRF varies considerably across entity types. The F1 score for the pesticide control object (OBJE) is low (0.3771), far below that of entity categories such as pesticide name (0.9284), pesticide manufacturer (0.9247), and pesticide use classification (0.9337). The reason for the low F1 score is that the description of control objects in pesticide instructions is not standardized, so a large amount of noise is introduced, and in most cases the model fails to segment control object entities effectively and accurately.

According to the analysis of the entities with poor recognition results in Table 4, the errors can be roughly divided into two types: entity boundary recognition errors and entity type recognition errors. An entity boundary recognition error means that the entity type is predicted correctly, but the entity boundary is not recognized accurately; that is, the modifiers before and after the entity may be omitted. An entity type recognition error covers two problems: splitting a single entity into multiple entities, and overlapping entities. Splitting occurs because some entities are long and complex. For example, “acetamiprid technical” should be predicted as an active ingredient (COMP), but sometimes “acetamiprid” is predicted as a pesticide name (NAME) and “technical” as a pesticide formulation (FORM), resulting in a split error. Entity overlap refers to the fact that an entity may belong to multiple categories and must be judged according to the context semantics. For example, in the sentence “emamectin benzoate is an antibiotic insecticide”, “emamectin benzoate” can be either the name of the pesticide (NAME) or the active ingredient (COMP). In the practical context, “emamectin benzoate” is more likely to be the active ingredient; however, the model predicted it as the pesticide name (NAME).
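The two error types can be separated automatically by comparing gold and predicted spans. The sketch below is a rough illustration, not the authors' analysis procedure: the function names are hypothetical, the "missed" category is added here for completeness, and the example treats the two English words of “acetamiprid technical” as two tokens, whereas the real data is tagged on Chinese text.

```python
from typing import List, Tuple

Span = Tuple[str, int, int]  # (entity type, start, end inclusive)


def bio_to_spans(tags: List[str]) -> List[Span]:
    """Convert a BIO tag sequence into spans; stray I- tags are ignored."""
    spans, start, etype = [], None, None
    for i, tag in enumerate(tags + ["O"]):  # sentinel closes a trailing entity
        continues = tag.startswith("I-") and tag[2:] == etype
        if start is not None and not continues:
            spans.append((etype, start, i - 1))
            start, etype = None, None
        if tag.startswith("B-"):
            start, etype = i, tag[2:]
    return spans


def classify_errors(gold: List[Span], pred: List[Span]) -> List[Tuple[Span, str]]:
    """Label each gold span that was not predicted exactly."""
    report = []
    for g in gold:
        if g in pred:
            continue
        overlapping = [p for p in pred if p[1] <= g[2] and g[1] <= p[2]]
        if any(p[0] == g[0] for p in overlapping):
            report.append((g, "boundary"))  # right type, wrong extent
        elif overlapping:
            report.append((g, "type"))      # split or relabelled entity
        else:
            report.append((g, "missed"))
    return report


# "acetamiprid technical": gold is one COMP entity, prediction splits it.
gold_spans = bio_to_spans(["B-COMP", "I-COMP"])
pred_spans = bio_to_spans(["B-NAME", "B-FORM"])
errors = classify_errors(gold_spans, pred_spans)
```

For this example `errors` is `[(("COMP", 0, 1), "type")]`, matching the split error described above; a prediction of the right type with a shifted start or end would be reported as "boundary" instead.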

6. Conclusions

In this paper, the BiLSTM-IDCNN-CRF model was applied to Chinese pesticide named-entity recognition for the first time. Comparative experiments with combinations of BERT, BiLSTM, IDCNN, CRF, GlobalPointer, and other deep learning architectures show that BiLSTM-IDCNN-CRF can make better use of the relevant knowledge in pesticides to mine the deep features and long-distance dependencies of Chinese pesticide texts. As a result, both local and global semantic features can be captured, and the complementary features of context information and local information can be fully learned to improve the accuracy of entity feature extraction and entity recognition. Compared with the other models in this paper, BiLSTM-IDCNN-CRF achieves the best values on most indicators of pesticide NER. To further improve the accuracy of Chinese pesticide NER, mine pesticide knowledge, and construct knowledge graphs in related fields, future research will continue to enrich and expand the pesticide database, apply more models in this field, and optimize the existing models based on the characteristics of pesticide data, in order to enhance the practical applicability of the models and promote research on pesticide entity recognition.

Author Contributions

Conceptualization, H.Z.; methodology, H.Z.; software, W.J.; validation, W.J.; formal analysis, W.J.; data curation, W.J.; writing—original draft preparation, W.J.; writing—review and editing, W.J., Y.F. and H.Z.; visualization, W.J.; project administration, W.J.; funding acquisition, H.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by a special intergovernmental project for international cooperation in Science, Technology, and Innovation, by the National Key R&D Program (No. 2019YFE0103800), Natural Science Foundation of Shandong Province of China (No. ZR2022MG070) and Shandong Province College Student Innovation and Entrepreneurship Training Program (No. 2012).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Li, S.Q.; Zhang, M.M.; Liu, F. Kiwi fruit planting entity recognition based on character and word information fusion. Trans. Chin. Soc. Agric. Mach. 2022, 53, 323–331.

- Guo, X.; Tang, Z.; Diao, L.; Zhou, H.; Li, L. Recognition of Chinese agricultural diseases and pests named entity with joint radical-embedding and self-attention mechanism. Trans. Chin. Soc. Agric. Mach. 2020, 51, 335–343.

- Wu, S.S.; Zhou, A.L.; Xie, N.F.; Liang, X.H.; Wang, H.H.; Li, X.Y.; Chen, G.P. Construction of visualization domain-specific knowledge graph of crop diseases and pests based on deep learning. Trans. Chin. Soc. Agric. Eng. 2020, 36, 177–185.

- Wang, C.; Gao, J.; Rao, H.; Chen, A.; He, J.; Jiao, J.; Zou, N.; Gu, L. Named entity recognition (NER) for Chinese agricultural diseases and pests based on discourse topic and attention mechanism. Evol. Intell. 2022.

- Zhang, J.Y.; Guo, M.; Geng, Y.J.; Li, M.; Zhang, Y.L.; Geng, N. Chinese named entity recognition for apple diseases and pests based on character augmentation. Comput. Electron. Agric. 2021, 190, 106464.

- Li, X.J. The Research of Named Entity Recognition in Agricultural Field. Master’s Thesis, Anhui Agricultural University, Hefei, China, 2019.

- Elman, J.L. Finding Structure in Time. Cogn. Sci. 1990, 14, 179–211.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Tai, K.S.; Socher, R.; Manning, C.D. Improved Semantic Representations from Tree-Structured Long Short-Term Memory Networks. arXiv 2015, arXiv:1503.00075.

- Zhu, X.D.; Sobhani, P.; Guo, H.Y. Long short-term memory over recursive structures. In Proceedings of the 32nd International Conference on Machine Learning (ICML 2015), Lille, France, 6–11 July 2015; pp. 1604–1612.

- Wang, S.; Wang, X.; Wang, S.; Wang, D. Bi-directional long short-term memory method based on attention mechanism and rolling update for short-term load forecasting. Int. J. Electr. Power Energy Syst. 2019, 109, 470–479.

- Zhang, L. Word Sense Disambiguation Model based on Bi-LSTM. In Proceedings of the 2022 14th International Conference on Measuring Technology and Mechatronics Automation (ICMTMA), Changsha, China, 15–16 January 2022; pp. 848–851.

- Medsker, L.R.; Jain, L.C. Recurrent Neural Networks: Design and Applications; CRC Press: Boca Raton, FL, USA, 2001; pp. 64–67.

- Strubell, E.; Verga, P.; Belanger, D.; McCallum, A. Fast and Accurate Entity Recognition with Iterated Dilated Convolutions. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, Copenhagen, Denmark, 9–11 September 2017.

- Kalchbrenner, N.; Grefenstette, E.; Blunsom, P. A Convolutional Neural Network for Modelling Sentences. In Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics, Baltimore, MD, USA, 23–24 June 2014.

- Yu, F.; Koltun, V. Multi-Scale Context Aggregation by Dilated Convolutions. arXiv 2016, arXiv:1511.07122.

- Gu, J.X.; Wang, Z.H.; Kuen, J.; Ma, L.Y.; Shahroudy, A.; Shuai, B.; Liu, T.; Wang, X.X.; Wang, G.; Cai, J.F.; et al. Recent advances in convolutional neural networks. Pattern Recognit. 2018, 77, 354–377.

- He, H.; Yang, X.; Wu, L.; Wang, G. Iterated dilated convolutional neural networks for word segmentation. Neural Netw. World 2020, 30, 333–346.

- Sainath, T.N.; Vinyals, O.; Senior, A.; Sak, H. Convolutional, Long Short-Term Memory, Fully Connected Deep Neural Networks. In Proceedings of the 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), South Brisbane, QLD, Australia, 19–24 April 2015.

- Huang, Z.H.; Xu, W.; Yu, K. Bidirectional LSTM-CRF models for sequence tagging. arXiv 2015, arXiv:1508.01991.

- Ma, X.Z.; Hovy, E. End-to-end sequence labeling via Bi-directional LSTM-CNNs-CRF. arXiv 2016, arXiv:1603.01354.

- Guo, X.C.; Zhou, H.; Su, J.; Hao, X.; Tang, Z.; Diao, L.; Li, L. Chinese agricultural diseases and pests named entity recognition with multi-scale local context features and self-attention mechanism. Comput. Electron. Agric. 2020, 179, 105830.

- Jiang, X.; Ma, J.X.; Yuan, H. Named entity recognition in the field of ecological management technology based on BiLSTM-IDCNN-CRF model. Comput. Appl. Softw. 2021, 38, 134–141.

- Yang, X.; Bian, J.; Hogan, W.R.; Wu, Y.H. Clinical concept extraction using transformers. J. Am. Med. Inform. Assoc. 2020, 27, 1935–1942.

- Liu, L.; Qin, T.Y.; Wang, D.B. Automatic extraction of traditional music terms of intangible cultural heritage. Data Anal. Knowl. Discov. 2020, 4, 68–75.

- Lafferty, J.; McCallum, A.; Pereira, F. Conditional Random Fields: Probabilistic Models for Segmenting and Labeling Sequence Data. In Proceedings of the Eighteenth International Conference on Machine Learning (ICML-2001), San Francisco, CA, USA, 28 June–1 July 2001.

- Sutton, C.; McCallum, A. An Introduction to Conditional Random Fields for Relational Learning. In Introduction to Statistical Relational Learning; MIT Press: Cambridge, MA, USA, 2006; pp. 93–128.

- Tjong Kim Sang, E.F.; Veenstra, J. Representing Text Chunks. In Proceedings of the EACL’99 Ninth Conference of the European Chapter of the Association for Computational Linguistics, Bergen, Norway, 8–12 June 1999; pp. 173–179.

- Available online: https://labelstud.io/ (accessed on 8 June 2022).

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019.

- Liu, Y.H.; Ott, M.; Goyal, N.; Du, J.F.; Joshi, M.; Chen, D.Q.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. RoBERTa: A robustly optimized BERT pretraining approach. arXiv 2019, arXiv:1907.11692.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).