Real-Time, Model-Agnostic and User-Driven Counterfactual Explanations Using Autoencoders

Abstract

1. Introduction

- Data closeness: Given an input, the counterfactual explanation has to be a minimal perturbation of that input. Related to data closeness, [7] also mentions sparsity. However, this property can be a challenge when working with continuous variables because it may not be possible to find a parsimonious explanation by only modifying a few features.

- User-driven: The counterfactual explanation has to be user-driven, meaning that the user can specify the class to which the counterfactual belongs. Otherwise, in multiclass problems, for example, the changes would only be directed to the closest class, excluding explanations for other classes. Note that this property also ensures the validity property of [7].

- Amortized inference: Counterfactual explanations have to be produced directly, without solving an optimization problem for each input to be changed. To this end, the explanation model should learn to predict the counterfactual, so that the algorithm can quickly compute a counterfactual for any new input. Otherwise, the process of generating explanations is time-consuming.

- Data manifold closeness: In addition to satisfying the property of data closeness, a counterfactual instance has to be close to the distribution of the data, i.e., the changes have to be realistic.

- Agnosticity: The generation of counterfactuals can be applied to any machine learning model without relying on any prior knowledge or assumptions about the model.

- Black-box access: The generation of counterfactuals can be achieved with access to only the predict function of the black-box model.
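As a rough illustration of the data closeness, sparsity, and validity properties listed above, the following sketch evaluates a candidate counterfactual using only a predict function, in line with the black-box access property. The names `evaluate_counterfactual`, `predict_fn`, `toy_predict`, and the tolerance `tol` are hypothetical and do not come from the paper; the toy classifier is only a stand-in for an arbitrary black-box model.

```python
import numpy as np


def evaluate_counterfactual(predict_fn, x, x_cf, target_class, tol=1e-6):
    """Summarize how well a candidate counterfactual meets the listed properties.

    predict_fn is assumed to map a batch of shape (n, d) to class ids of shape (n,).
    """
    x = np.asarray(x, dtype=float)
    x_cf = np.asarray(x_cf, dtype=float)
    delta = x_cf - x
    # Only the predict function of the black box is queried (black-box access).
    predicted = int(predict_fn(x_cf[None, :])[0])
    return {
        # Data closeness: size of the perturbation (L2 norm, an assumed choice).
        "closeness_l2": float(np.linalg.norm(delta)),
        # Sparsity: number of features that were actually modified.
        "n_changed": int(np.sum(np.abs(delta) > tol)),
        # Validity / user-driven: prediction matches the class chosen by the user.
        "valid": predicted == target_class,
    }


def toy_predict(batch):
    # Hypothetical black box: class 1 when the feature sum exceeds 1, else class 0.
    return (batch.sum(axis=1) > 1.0).astype(int)


x = np.array([0.2, 0.3, 0.1])
x_cf = np.array([0.2, 0.9, 0.1])
report = evaluate_counterfactual(toy_predict, x, x_cf, target_class=1)
print(report)
```

Data manifold closeness is not covered by this check, since it requires a model of the data distribution such as an autoencoder.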

2. Related Work

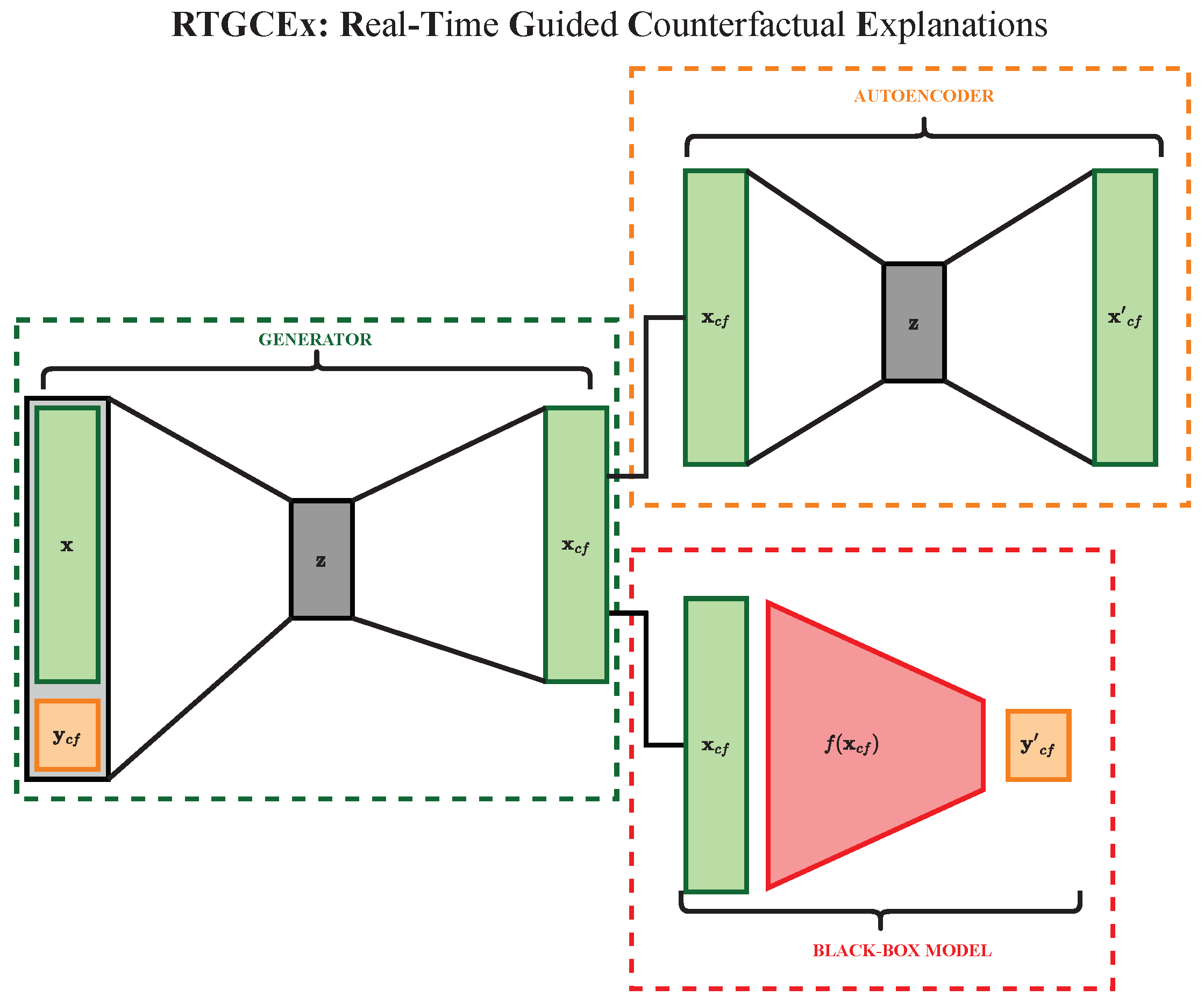

3. Real-Time Guided Counterfactual Explanations

- Autoencoding phase:

- Counterfactual generation phase:

4. Experimental Framework

4.1. Datasets

- MNIST.

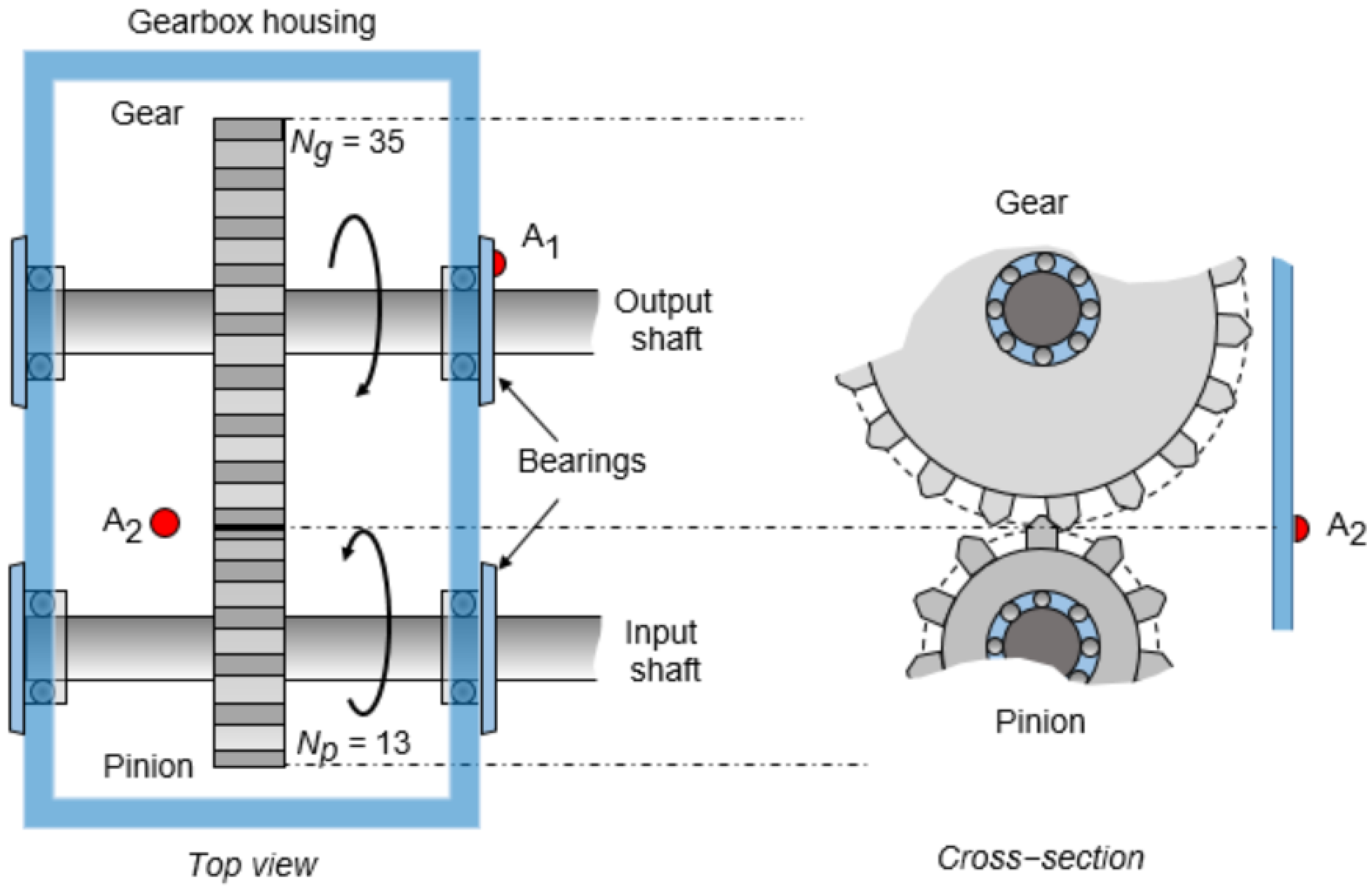

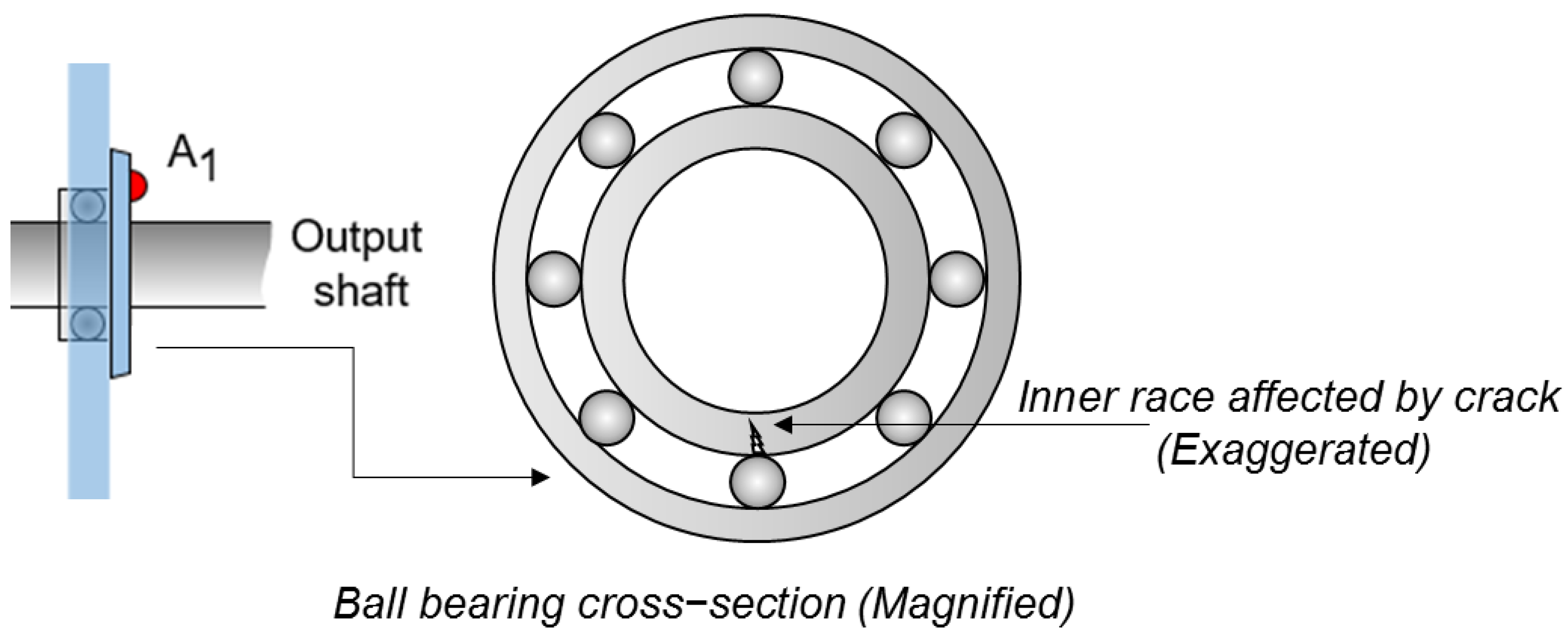

- Gearbox.

4.2. Employed Models

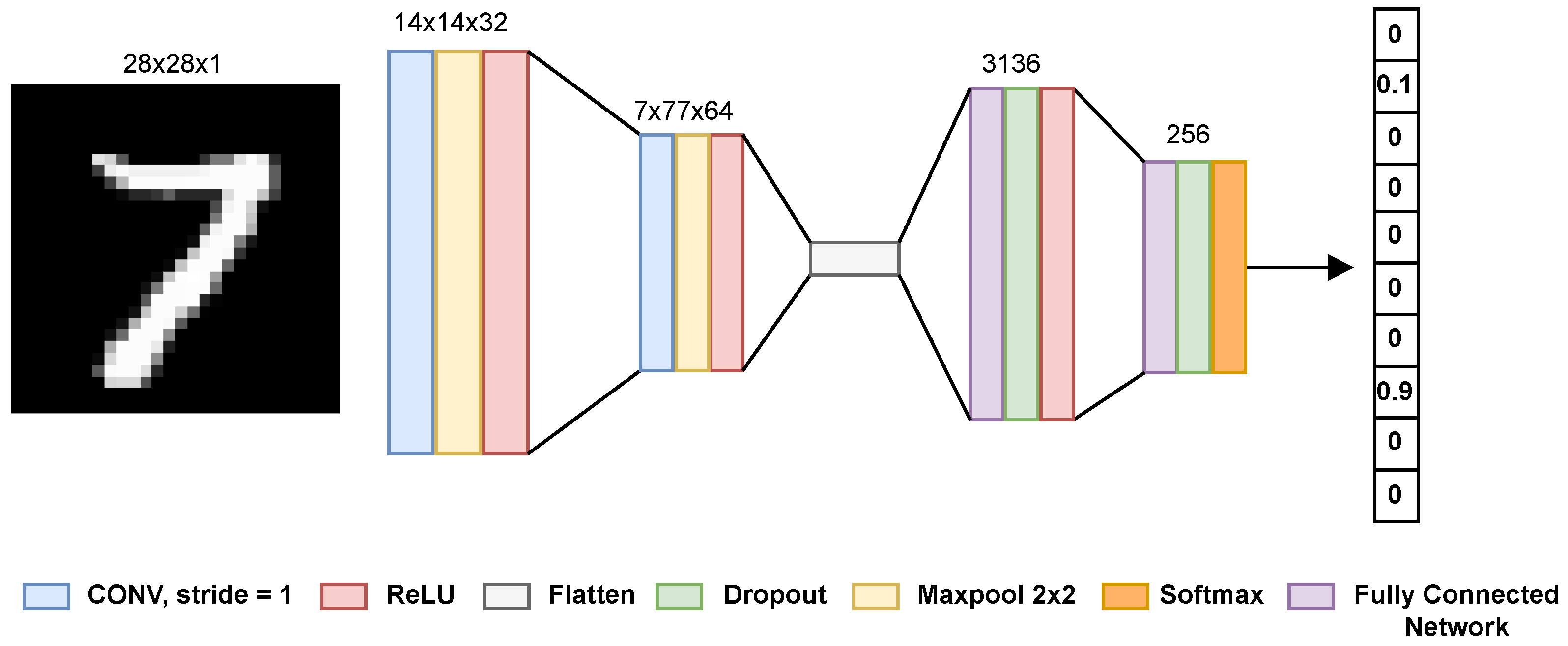

4.2.1. MNIST

- Black-box model.

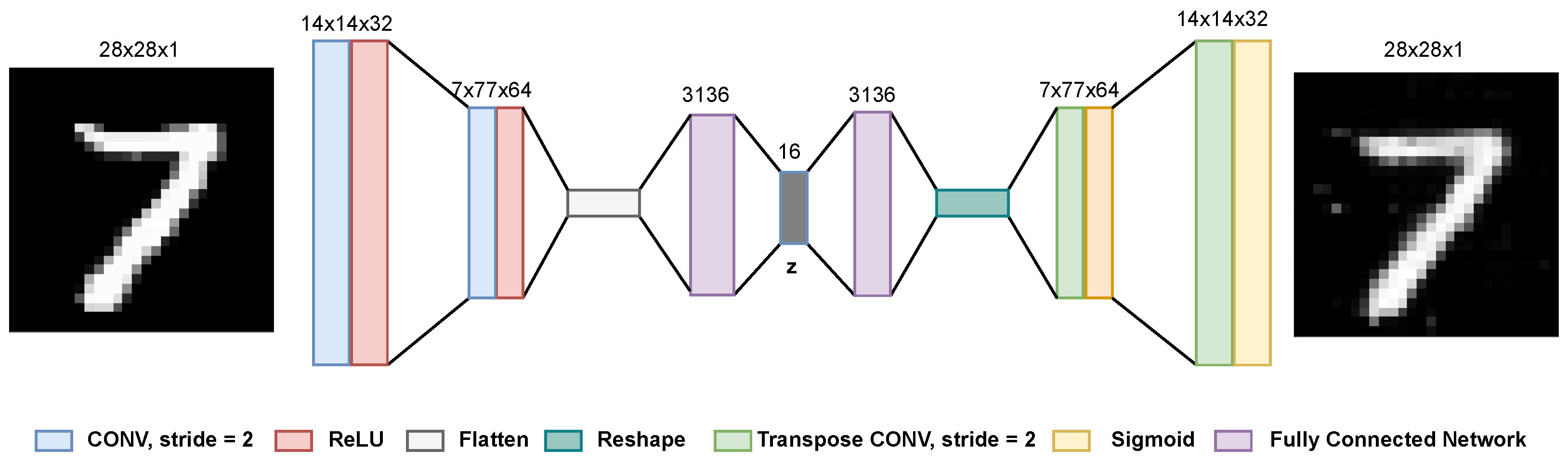

- Autoencoder.

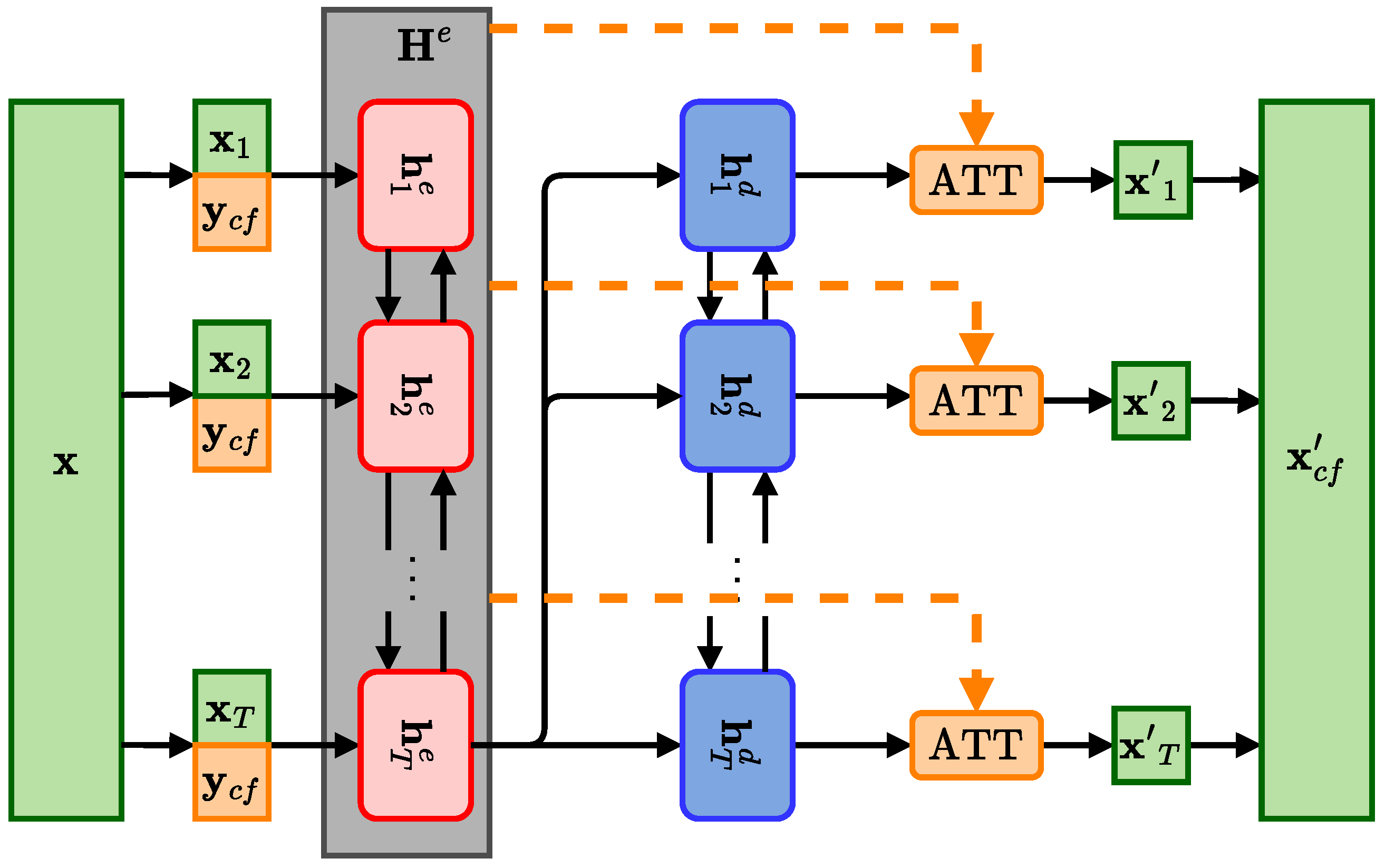

- Generator.

4.2.2. Gearbox

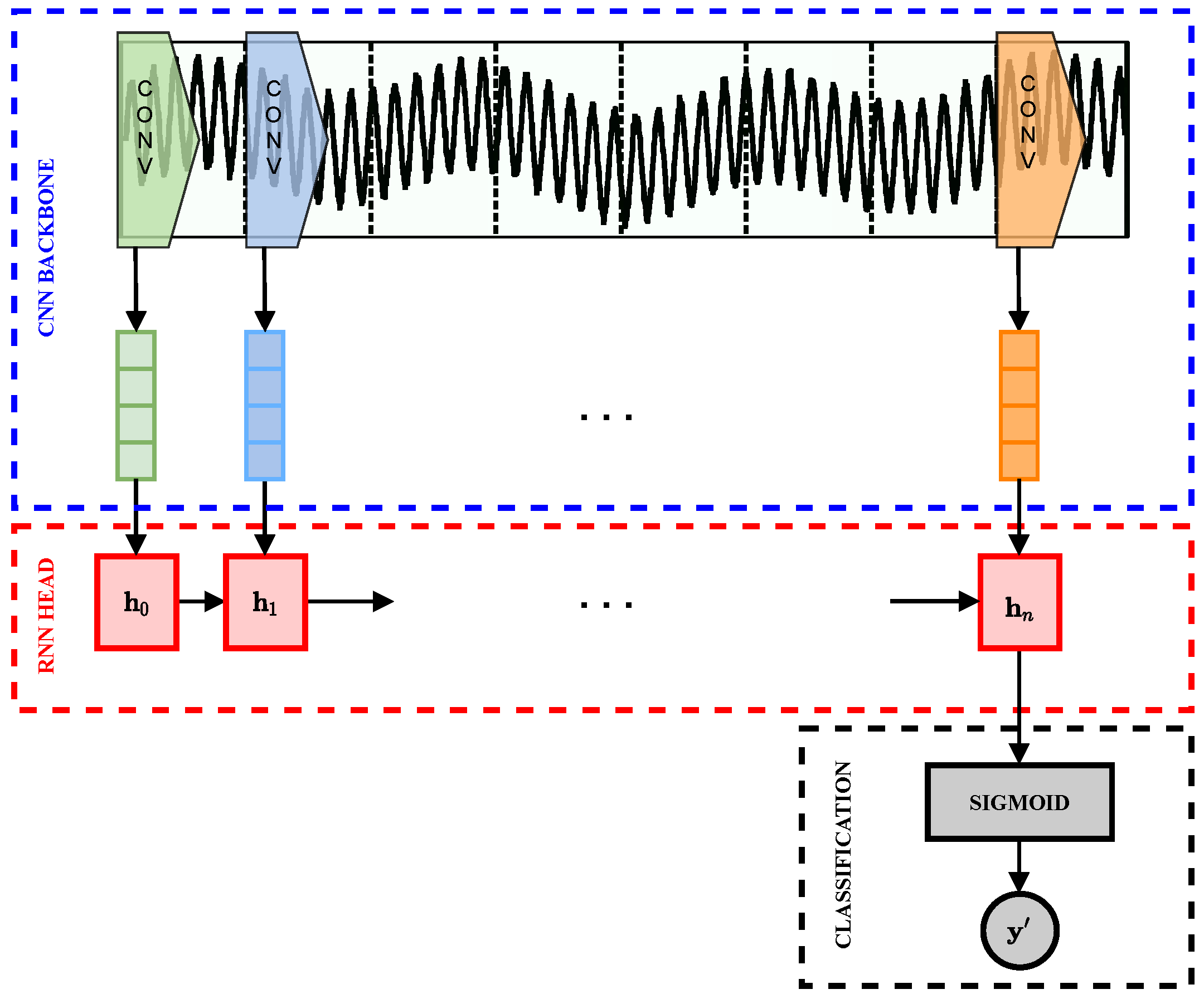

- Black-box model.

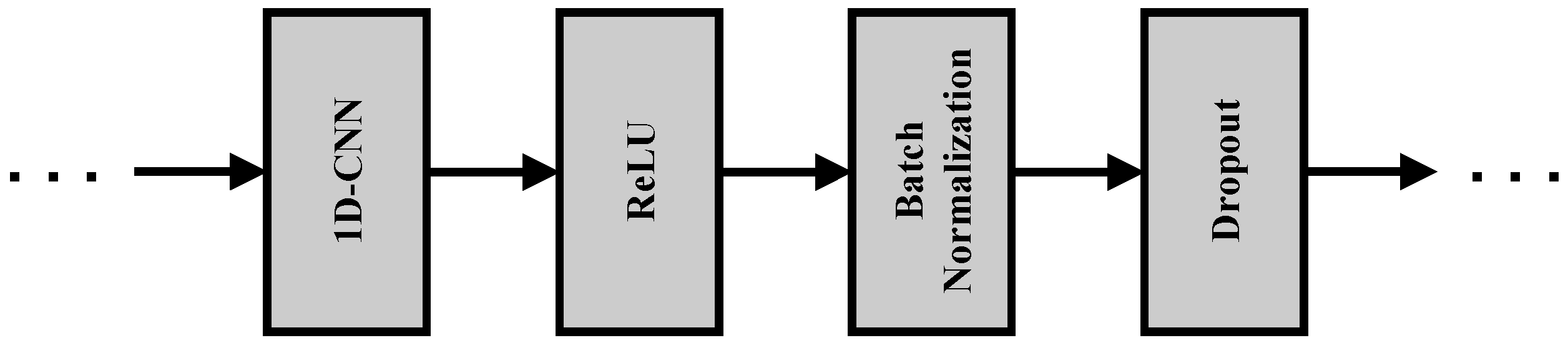

- Autoencoder.

- Generator.

4.3. Loss Functions

- Autoencoding phase: In this stage, we optimize a loss function with the aim of learning to reconstruct the inputs from the training dataset. The loss function employed for this purpose is the mean squared error (see Equation (11)), where N denotes the number of training samples.

- Counterfactual generation phase: During this phase, the weights of the autoencoder and the black-box model are maintained constant, and the generator is optimized to minimize a loss function that incorporates three distinct terms (as shown in Equation (5)).


- Data closeness loss: This loss function has to minimize the distance between the original samples and their counterfactuals. Thus, we employ the mean squared error in both use cases.


- Validity loss: This loss function has to ensure that the class given by the black-box model for the counterfactual matches the counterfactual class defined by the user. As the MNIST use case is a multiclass problem and the Gearbox use case is a binary classification problem, we use different loss functions here. On the one hand, for MNIST, we used the categorical cross-entropy loss, where the ground truth label is one-hot encoded and C is the number of classes. On the other hand, for the Gearbox use case, we used the binary cross-entropy loss, where the label is 0 for normal data and 1 for anomalous data.


- Data manifold closeness loss: This loss function ensures that the generated counterfactuals are close to the data manifold. Thus, it has to minimize the distance between the generated counterfactuals and the reconstruction given by the AE for those counterfactuals. Therefore, the loss used for this was the mean squared error.
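The three terms described above can be sketched numerically as follows. This is a minimal NumPy illustration, not the authors' implementation: the function names, the variable names (`x`, `x_cf`, `x_cf_rec`, `target`, `probs`), the use of a weighted sum with `weights`, and the element-wise averaging in `mse` are all assumptions made for the example. The standard definitions of mean squared error, categorical cross-entropy, and binary cross-entropy are used.

```python
import numpy as np

EPS = 1e-12  # small constant to avoid log(0)


def mse(a, b):
    # Mean squared error averaged over all elements (assumed normalization).
    return float(np.mean((a - b) ** 2))


def categorical_cross_entropy(y_onehot, probs):
    # Mean over samples of -sum_c y_c * log(p_c), with C classes on axis 1.
    return float(-np.mean(np.sum(y_onehot * np.log(probs + EPS), axis=1)))


def binary_cross_entropy(y, p):
    # y is 0 (normal) or 1 (anomalous); p is the predicted probability of class 1.
    return float(-np.mean(y * np.log(p + EPS) + (1 - y) * np.log(1 - p + EPS)))


def generator_loss(x, x_cf, x_cf_rec, target, probs, multiclass=True,
                   weights=(1.0, 1.0, 1.0)):
    """Combine closeness, validity, and manifold terms (weighted sum is assumed)."""
    closeness = mse(x, x_cf)            # originals vs. counterfactuals
    if multiclass:
        validity = categorical_cross_entropy(target, probs)
    else:
        validity = binary_cross_entropy(target, probs)
    manifold = mse(x_cf, x_cf_rec)      # counterfactuals vs. their AE reconstruction
    w_c, w_v, w_m = weights
    total = w_c * closeness + w_v * validity + w_m * manifold
    return total, (closeness, validity, manifold)


# Toy batch of two samples with two features and two classes.
x = np.array([[0.0, 0.0], [1.0, 1.0]])
x_cf = np.array([[0.0, 1.0], [1.0, 1.0]])
x_cf_rec = x_cf.copy()                        # pretend the AE reconstructs perfectly
target = np.array([[0.0, 1.0], [1.0, 0.0]])   # user-chosen classes, one-hot
probs = np.array([[0.5, 0.5], [0.5, 0.5]])    # black-box output on x_cf

total, terms = generator_loss(x, x_cf, x_cf_rec, target, probs)
print(total, terms)
```

In an actual training loop these terms would be computed in a differentiable framework so that gradients reach the generator while the autoencoder and black-box weights stay fixed.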

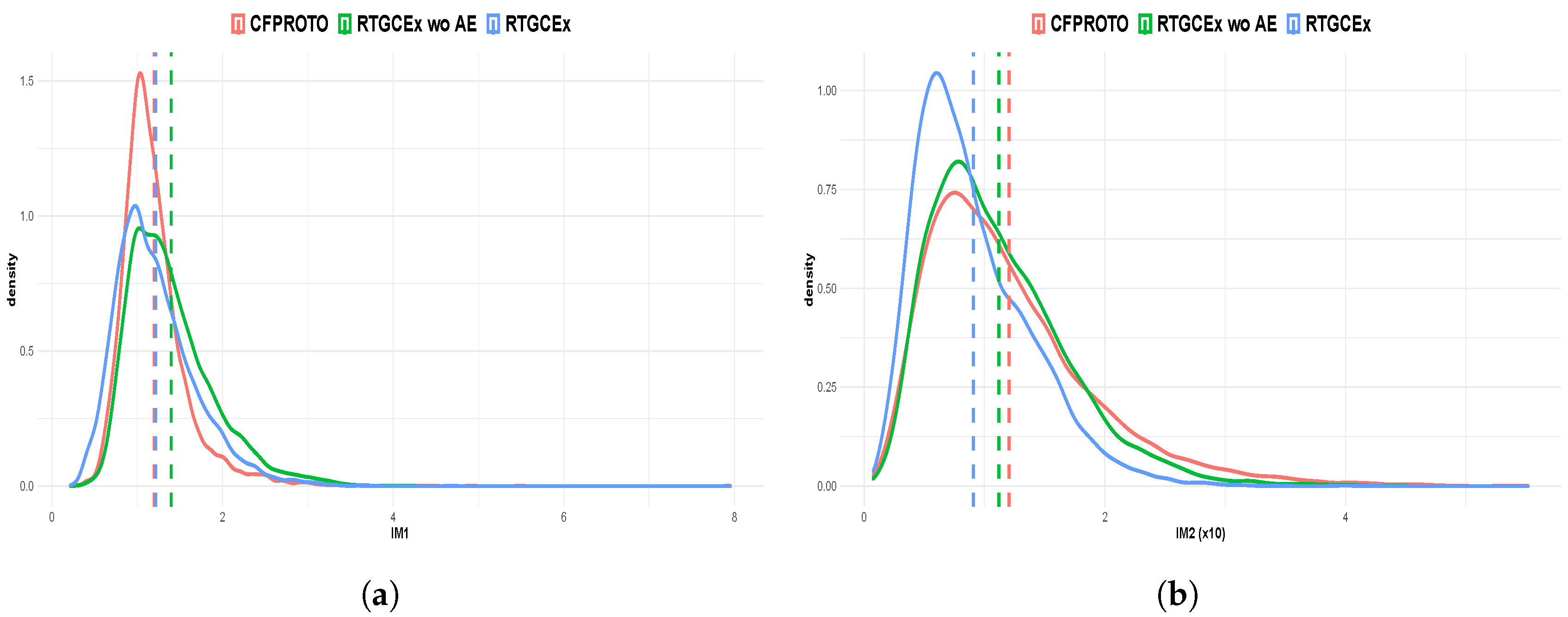

4.4. IM1 and IM2 Metrics

5. Results and Discussion

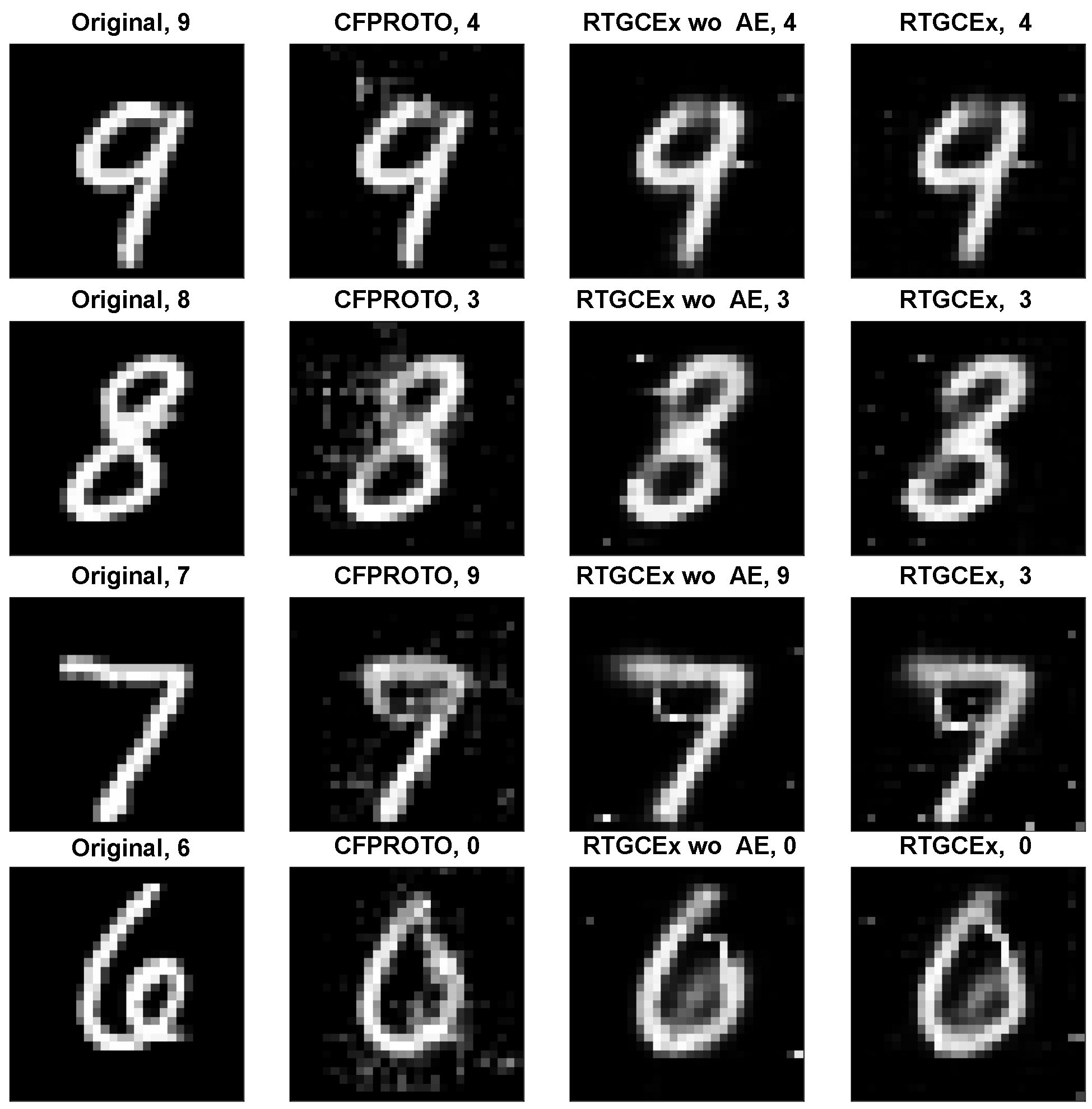

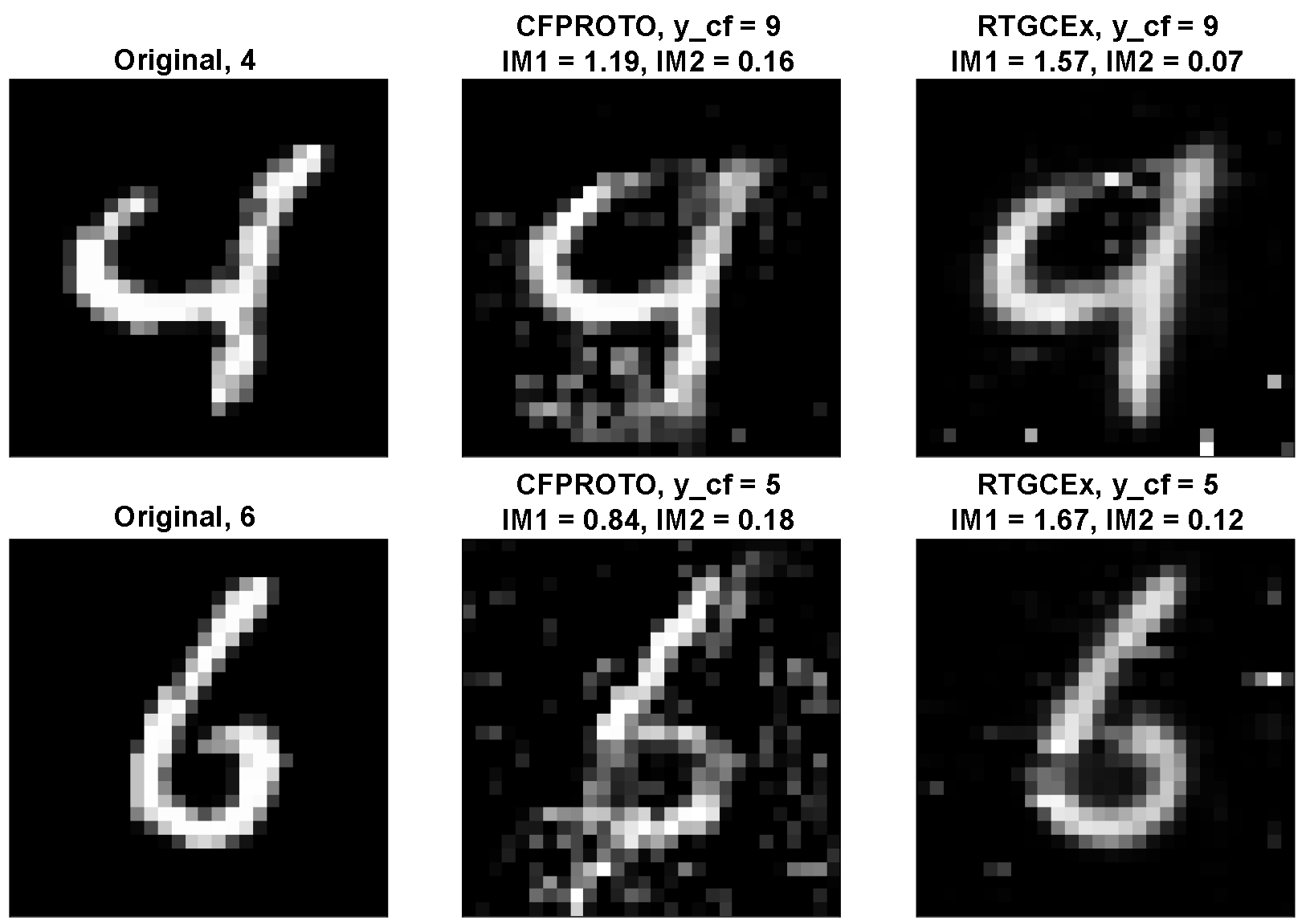

5.1. MNIST

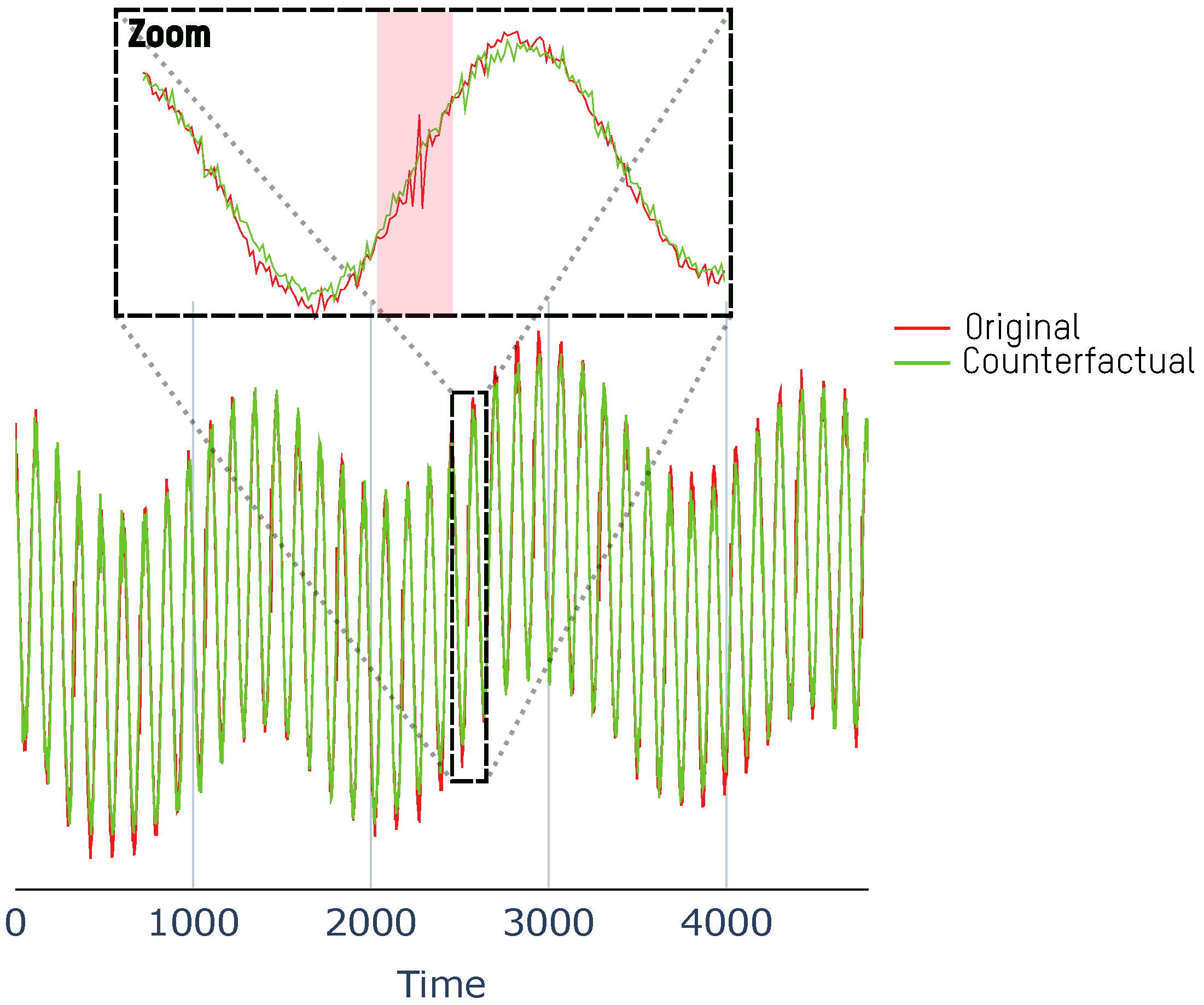

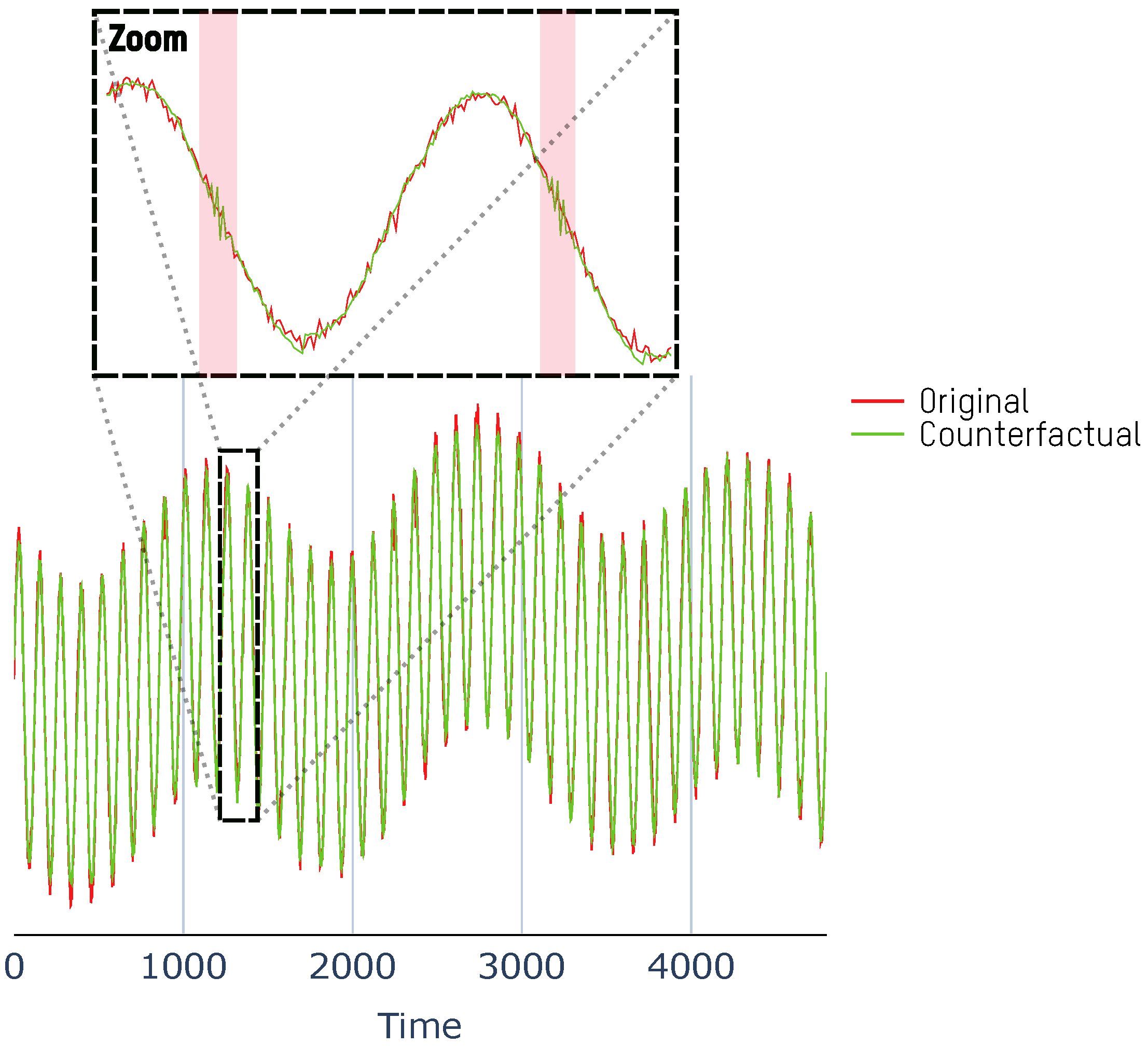

5.2. Gearbox

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Ribeiro, M.T.; Singh, S.; Guestrin, C. Anchors: High-precision model-agnostic explanations. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018.

2. Friedman, J.H.; Popescu, B.E. Predictive learning via rule ensembles. Ann. Appl. Stat. 2008, 2, 916–954.

3. Lundberg, S.M.; Lee, S.I. A unified approach to interpreting model predictions. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 4765–4774.

4. Ribeiro, M.T.; Singh, S.; Guestrin, C. Why should I trust you? Explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 1135–1144.

5. Sundararajan, M.; Taly, A.; Yan, Q. Axiomatic attribution for deep networks. In Proceedings of the 34th International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; Volume 70, pp. 3319–3328.

6. Stepin, I.; Alonso, J.M.; Catala, A.; Pereira-Fariña, M. A Survey of Contrastive and Counterfactual Explanation Generation Methods for Explainable Artificial Intelligence. IEEE Access 2021, 9, 11974–12001.

7. Verma, S.; Dickerson, J.; Hines, K. Counterfactual Explanations for Machine Learning: A Review. arXiv 2020, arXiv:2010.10596.

8. Artelt, A.; Hammer, B. On the computation of counterfactual explanations—A survey. arXiv 2019, arXiv:1911.07749.

9. Dhurandhar, A.; Chen, P.Y.; Luss, R.; Tu, C.C.; Ting, P.; Shanmugam, K.; Das, P. Explanations based on the missing: Towards contrastive explanations with pertinent negatives. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 3–8 December 2018; pp. 592–603.

10. Van Looveren, A.; Klaise, J. Interpretable counterfactual explanations guided by prototypes. arXiv 2019, arXiv:1907.02584.

11. Kenny, E.M.; Keane, M.T. On generating plausible counterfactual and semi-factual explanations for deep learning. arXiv 2020, arXiv:2009.06399.

12. Nugent, C.; Doyle, D.; Cunningham, P. Gaining insight through case-based explanation. J. Intell. Inf. Syst. 2009, 32, 267–295.

13. Mothilal, R.K.; Sharma, A.; Tan, C. Explaining machine learning classifiers through diverse counterfactual explanations. In Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency, Barcelona, Spain, 27–30 January 2020; pp. 607–617.

14. Nemirovsky, D.; Thiebaut, N.; Xu, Y.; Gupta, A. CounteRGAN: Generating Realistic Counterfactuals with Residual Generative Adversarial Nets. arXiv 2020, arXiv:2009.05199.

15. Liu, S.; Kailkhura, B.; Loveland, D.; Han, Y. Generative counterfactual introspection for explainable deep learning. arXiv 2019, arXiv:1907.03077.

16. Mahajan, D.; Tan, C.; Sharma, A. Preserving causal constraints in counterfactual explanations for machine learning classifiers. arXiv 2019, arXiv:1912.03277.

17. Saxena, D.; Cao, J. Generative Adversarial Networks (GANs) Challenges, Solutions, and Future Directions. ACM Comput. Surv. 2021, 54, 1–42.

18. Balasubramanian, R.; Sharpe, S.; Barr, B.; Wittenbach, J.; Bruss, C.B. Latent-CF: A Simple Baseline for Reverse Counterfactual Explanations. arXiv 2020, arXiv:2012.09301.

19. LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

20. Mathworks Gearbox Simulator. Available online: https://www.mathworks.com/help/signal/examples/vibration-analysis-of-rotating-machinery.html (accessed on 1 February 2023).

21. Canizo, M.; Triguero, I.; Conde, A.; Onieva, E. Multi-head CNN–RNN for multi-time series anomaly detection: An industrial case study. Neurocomputing 2019, 363, 246–260.

22. Lin, M.; Lucas, H.C., Jr.; Shmueli, G. Research commentary—Too big to fail: Large samples and the p-value problem. Inf. Syst. Res. 2013, 24, 906–917.

23. Hvilshøj, F.; Iosifidis, A.; Assent, I. On quantitative evaluations of counterfactuals. arXiv 2021, arXiv:2111.00177.

24. Schemmer, M.; Holstein, J.; Bauer, N.; Kühl, N.; Satzger, G. Towards Meaningful Anomaly Detection: The Effect of Counterfactual Explanations on the Investigation of Anomalies in Multivariate Time Series. arXiv 2023, arXiv:2302.03302.

| Model | AE | Black-Box Model |
|---|---|---|
| Metric | MSE | Accuracy |
| Value | 0.007 | 0.991 |

| Method | IM1 (Mean) | IM1 (IQR) | IM2 ×10 (Mean) | IM2 ×10 (IQR) | Speed in s/it (Mean) | Speed in s/it (IQR) |
|---|---|---|---|---|---|---|
| CFPROTO | 1.20 | 0.39 | 1.20 | 0.83 | 17.40 | 0.09 |
| RTGCEx wo AE | 1.40 | 0.63 | 1.12 | 0.74 | 0.14 | 0.01 |
| RTGCEx | 1.21 | 0.58 | 0.91 | 0.63 | 0.14 | 0.01 |

| Method | CFPROTO (IM1) | CFPROTO (IM2) | RTGCEx wo AE (IM1) | RTGCEx wo AE (IM2) | RTGCEx (IM1) | RTGCEx (IM2) |
|---|---|---|---|---|---|---|
| CFPROTO | - | - | 0.66 | 0.53 | 0.50 | 0.38 |
| RTGCEx wo AE | 0.34 | 0.47 | - | - | 0.26 | 0.24 |
| RTGCEx | 0.50 | 0.62 | 0.74 | 0.76 | - | - |

| Model | AE | Black-Box Model | Black-Box Model | Black-Box Model |
|---|---|---|---|---|
| Metric | MSE | P | R | F1 |
| Value | | 0.973 | 1 | 0.986 |

| Method | Closeness Loss | Counterfactual Loss | Data Manifold Loss | Speed in s (One Sample) | Speed in s (Test Data) |
|---|---|---|---|---|---|
| RTGCEx wo | | | | | |
| RTGCEx wo | | | | | |
| RTGCEx | | | | | |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Labaien Soto, J.; Zugasti Uriguen, E.; De Carlos Garcia, X. Real-Time, Model-Agnostic and User-Driven Counterfactual Explanations Using Autoencoders. Appl. Sci. 2023, 13, 2912. https://doi.org/10.3390/app13052912
