Abstract

This article concerns the improvement of digital image quality using mathematical tools such as nonlinear partial differential operators. To smooth digital images, we propose to use the p(x)-Laplacian operator, whose smoothing power plays a central role in the restoration process and allows certain areas of an image to be processed dynamically. We used a mathematical model of image regularisation described by a nonlinear diffusion equation, in which the diffusion is modelled by the p(x)-Laplacian operator. We implemented the continuous model in order to observe the steps of the regularisation process and to understand the effects of the different parameters of the model on an image. This allows the parameters to be chosen and adapted to obtain the proposed solution.

1. Introduction

The smoothing of digital images is an important theme in the field of image correction. It provides a way to erase unwanted effects caused by various factors, such as the quality of the acquisition equipment, human error, or the degradation introduced to reduce the storage space of an image.

There are many processing operators for smoothing digital images. Their aim is to maintain highlights in the relevant areas and smooth the rest.

Among these operators is the p(x)-Laplacian operator, which mathematically models the diffusion of a signal (in this case, a digital image) while taking into account the local information of this signal. By its formulation, this operator performs smoothing according to a criterion. In the literature, the criterion defining the degree of smoothing for the p(x)-Laplacian operator is generally computed once, at the beginning of processing, so the amount of smoothing is set to the same level throughout the image. In the present work, contrary to what is generally done, we make the processing adaptive by locally adjusting the criterion used in the definition of the p(x)-Laplacian operator. This procedure allows us to select, from the observed image, the areas that will undergo the necessary smoothing while preserving those that we consider more important.

In recent years, lossy compression has become the most common way of storing images, and it often results in image degradation. In this context, we propose the adaptation of a nonlinear mathematical model to improve such images. Currently, most image restoration methods are based on partial differential equations (see [,,,]) that involve two parameters, one of which is always considered to be fixed.

This paper is organised as follows:

- Section 1 introduces the subject matter of this work and the setting of the problem;

- In Section 2, we present the main mathematical tools associated with a continuous version of the problem and its formulation. Graph theory is then used to derive a discretised version of the initial problem by employing an adaptive algorithm. In addition, we recall some well-known properties of the p(x)-Laplacian operator;

- Section 3 discusses the simulation approaches;

- Section 4 presents some illustrations on standard reference images taken from well-known databases;

- Section 5 addresses some comparative studies between our proposed approach and others.

2. Context and Problems

2.1. Pictures

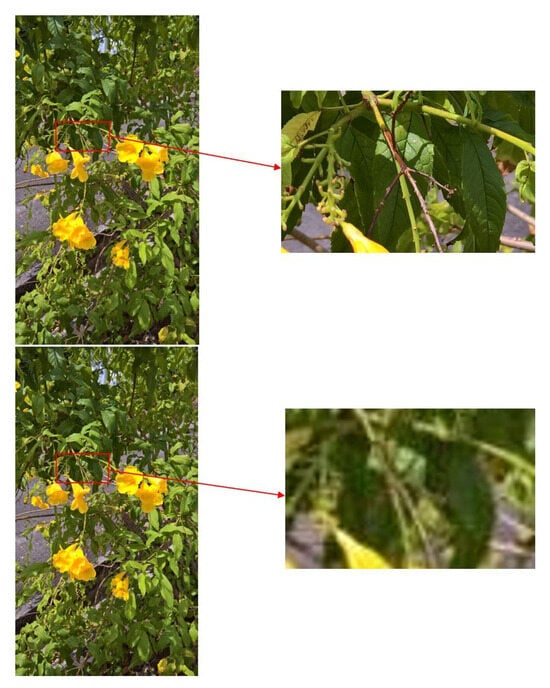

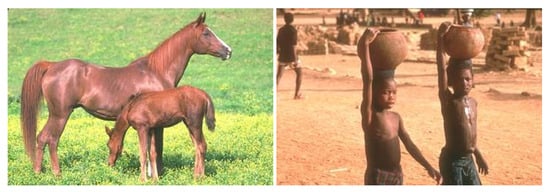

Images may contain minor defects that need to be smoothed in order to improve their readability. Figure 1 shows an example of the type of degradation that can be seen in an image.

Figure 1.

Comparison between a degraded and a non-degraded image. Above: BMP, 2160 × 3840, 23.7 MB; below: an image which has been compressed, 2160 × 3840, 4.8 MB.

Every acquisition device relies on sensors and acquisition algorithms of varying efficiency, which leads to a loss of quality. To this, one must add the human factor []: the work of an inexperienced photographer, for instance, is often of lower quality.

2.2. Adaptive Smoothing Using the p(x)-Laplacian

Mathematical modelling is used to describe phenomena from various fields, including physics, chemistry, biology and, in particular, digital image processing. In some cases, computer science makes it possible to apply some of these models to improve the quality of digital images. In this paper, we use results on a class of problems governed by a nonlinear pseudo-Laplacian-type operator with a variable exponent, in which the unknown function denotes an image (see []).

Our aim is to improve the quality of the images by homogenising areas where the gradient is low, while preserving the amount of detail in the areas where the gradient is high.

3. The Method

3.1. Presentation of Some Models with a Diffusion Term

First of all, we consider the observed digital image to be a noisy image. It can be interpreted as a real image f (the desired one) corrupted by one (or several) noise(s) or image residual(s) r (see [], p. 6, (18), where r = N(0, σ²) is a Gaussian noise with mean 0 and standard deviation σ). The effects of the degradation (caused by camera or object motion, atmospheric turbulence, optics, etc.) on the piecewise smooth image f are modelled by a linear or nonlinear operator K. The operator K is usually called the degradation operator or degradation function, and it is also known as the point spread function (PSF) or kernel.

This is modelled by the following equation, where $f_0$ denotes the observed image:

$$f_0 = K(f) + r.$$
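To make the degradation model concrete, the following Python sketch simulates an observed image $f_0 = K(f) + r$ on a small greyscale image stored as a list of rows. It assumes, purely for illustration, that K is a 3 × 3 mean (box) blur with replicated borders and that r is i.i.d. Gaussian noise of standard deviation σ; the helper names `box_blur` and `degrade` are hypothetical.

```python
import random

def box_blur(img):
    """3x3 mean filter, used here as a stand-in for the degradation operator K.
    Borders are handled by clamping coordinates (replicate padding)."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    acc += img[yy][xx]
            out[y][x] = acc / 9.0
    return out

def degrade(f, sigma, seed=0):
    """Return f0 = K(f) + r with K = box blur and r ~ N(0, sigma^2), i.i.d. per pixel."""
    rng = random.Random(seed)
    return [[v + rng.gauss(0.0, sigma) for v in row] for row in box_blur(f)]

# Usage: a 4x4 constant image degraded with noise of standard deviation 10.
clean = [[100.0] * 4 for _ in range(4)]
f0 = degrade(clean, sigma=10.0)
```

With `sigma=0`, a constant image passes through unchanged, since the box blur preserves constants.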

The smoothing process consists of approximating f from the observed image $f_0$ by means of a minimisation problem for the energy function

$$J(f) = R(f) + \lambda\, F(f, f_0),$$

where

- $J$ stands for the energy that allows one to go from $f_0$ to the desired image;

- $R(f)$ denotes the regularisation energy of the real image f;

- $F(f, f_0)$ measures the approximation between the real image f and the observed image $f_0$, and is also named the fitting term or the fidelity term;

- $\lambda > 0$ is the fidelity parameter or the penalty parameter.

The main goal is to recover an image corrupted by noise while preserving the edges of the observed image, as well as small-scale structures such as textures and other fine details.

Examples of such continuous energy functions include the following.

We can also cite the model associated with the following energy function

here, the variable exponent p is defined as follows

where the constant appearing in this definition is positive. More precisely, depending on its value, the exponent acts either as an index of preservation or as an index of degradation. For more details, the interested reader can refer to [].

A variant of Model (5) is the following

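This energy function was introduced by Chambolle and Lions []. In this model, the exponent equals 2 (quadratic, isotropic smoothing) where the gradient magnitude is below a threshold β, while it equals 1 (total-variation behaviour) where the gradient magnitude exceeds β.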
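The behaviour described for the Chambolle–Lions model can be illustrated with the Huber-type potential usually associated with it, φ_β(s) = s²/(2β) for |s| ≤ β and |s| - β/2 otherwise. The sketch below, whose function names are hypothetical, assumes this form; the two branches agree in value and slope at |s| = β, so the transition between the quadratic (p = 2) and linear (p = 1) regimes is smooth.

```python
def chambolle_lions_phi(s, beta):
    """Huber-type potential: quadratic (p = 2 behaviour) for |s| <= beta,
    linear (p = 1, total-variation behaviour) for |s| > beta.
    Both branches have value beta / 2 and slope 1 at |s| = beta."""
    a = abs(s)
    if a <= beta:
        return a * a / (2.0 * beta)
    return a - beta / 2.0

def local_exponent(grad_norm, beta):
    """Exponent that the potential mimics at a given gradient magnitude."""
    return 2 if grad_norm <= beta else 1

# Usage: small gradients are penalised quadratically, large ones linearly.
print(chambolle_lions_phi(1.0, 2.0), chambolle_lions_phi(3.0, 2.0))
```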

3.2. The Formulation of the Present Model

To date, the previous models have been considered with a degree of smoothness p independent of the location x in the domain $\Omega$. The aim of our paper is to observe the effects of a degree of smoothness that depends on the location x. Taking the spatial location x into account, smoothing becomes a dynamic process linked to the following energy function.

where

Here, $G_\sigma$ denotes the Gaussian filter function. Models (7)–(8) were suggested and investigated by Chen, Levine, and Rao in [] in the special context of BV functions. For more details and properties of BV functions, the interested reader can consult [].

The variable exponent function takes its values between 1 and 2.

It represents a degree of regularisation and improves the quality of the image resulting from the smoothing process. More precisely, this function encodes information on the regions where the gradient is near zero (the homogeneous regions), where p is close to 2 and the smoothing is isotropic, and on the edges, where p is close to 1 and the model behaves like total variation. Therefore, the model avoids the staircase effect while keeping the edges. When the exponent is constant, the restoration is called static; otherwise, it is called dynamic.
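To see how a variable exponent encodes this information, the sketch below computes the exponent used by Chen, Levine, and Rao, p(x) = 1 + 1/(1 + k|∇(G_σ * f_0)(x)|²), on a greyscale image stored as a list of rows. This form is assumed only for illustration (the exact definition in (8) is not reproduced here); the separable Gaussian smoothing with replicated borders and the central-difference gradient are our own choices. In flat regions p stays at 2, while across an edge it drops towards 1.

```python
import math

def gaussian_kernel(sigma):
    """Normalised 1-D Gaussian kernel truncated at radius ceil(3 * sigma)."""
    radius = max(1, int(math.ceil(3 * sigma)))
    k = [math.exp(-(i * i) / (2.0 * sigma * sigma)) for i in range(-radius, radius + 1)]
    total = sum(k)
    return [v / total for v in k]

def blur(img, sigma):
    """Separable Gaussian smoothing (rows, then columns) with replicated borders."""
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    h, w = len(img), len(img[0])
    rows = [[sum(k[i + r] * img[y][min(max(x + i, 0), w - 1)] for i in range(-r, r + 1))
             for x in range(w)] for y in range(h)]
    return [[sum(k[i + r] * rows[min(max(y + i, 0), h - 1)][x] for i in range(-r, r + 1))
             for x in range(w)] for y in range(h)]

def exponent_map(f0, k=1.0, sigma=1.0):
    """p(x) = 1 + 1 / (1 + k * |grad (G_sigma * f0)(x)|^2), values in (1, 2] for k >= 0."""
    g = blur(f0, sigma)
    h, w = len(g), len(g[0])
    p = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Central differences, with indices clamped at the image borders.
            gx = (g[y][min(x + 1, w - 1)] - g[y][max(x - 1, 0)]) / 2.0
            gy = (g[min(y + 1, h - 1)][x] - g[max(y - 1, 0)][x]) / 2.0
            p[y][x] = 1.0 + 1.0 / (1.0 + k * (gx * gx + gy * gy))
    return p

# Usage: a vertical step edge; p is close to 1 near the edge and equal to 2 far from it.
step = [[0.0] * 5 + [100.0] * 5 for _ in range(5)]
p = exponent_map(step)
```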

In addition, λ is the data fidelity parameter. It controls the compromise between the two competing terms and determines the amount of noise removed.

It is not difficult to see that the objective function is strictly convex; standard convex-analysis arguments then show that the optimisation problem has a unique solution.

The term $|\nabla f|$ represents the local variation of the function f over the domain $\Omega$. Note that, in Model (7), the parameters p and λ strike a balance between the regularisation and approximation energies.

3.3. Preliminaries and Results for the Minimising Problem Associated with (7)

Before stating the existence and uniqueness results for the minimisation problem, we recall some preliminary tools.

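Let $\Omega$ be a bounded open domain in $\mathbb{R}^N$ with a sufficiently regular boundary. Throughout this section, we assume that p is a measurable function on $\Omega$ with values in the interval given above. We write $L^{p(x)}(\Omega)$ for the Lebesgue space with variable exponent p(x), which is the so-called Nakano space and a special sort of Musielak–Orlicz space (see []). It is defined as follows:

$$L^{p(x)}(\Omega) = \Big\{ f : \Omega \to \mathbb{R} \ \text{measurable} \;:\; \int_\Omega |f(x)|^{p(x)}\,dx < \infty \Big\}.$$

This space is equipped with the Luxemburg-type norm

$$\|f\|_{L^{p(x)}(\Omega)} = \inf\Big\{ \mu > 0 \;:\; \int_\Omega \Big|\frac{f(x)}{\mu}\Big|^{p(x)}\,dx \le 1 \Big\}.$$

$W^{1,p(x)}(\Omega)$ designates the variable exponent Sobolev space on the bounded open domain $\Omega$. It is defined as follows:

$$W^{1,p(x)}(\Omega) = \big\{ f \in L^{p(x)}(\Omega) \;:\; |\nabla f| \in L^{p(x)}(\Omega) \big\},$$

with the norm

$$\|f\|_{W^{1,p(x)}(\Omega)} = \|f\|_{L^{p(x)}(\Omega)} + \|\nabla f\|_{L^{p(x)}(\Omega)},$$

where $\|\nabla f\|_{L^{p(x)}(\Omega)}$ denotes the norm of $|\nabla f|$ in the Lebesgue space $L^{p(x)}(\Omega)$.

Complete results on Lebesgue and Sobolev spaces with a variable exponent can be found in the monograph [].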

Now, let us consider the energy function defined in (7); the smoothing process then corresponds to the following optimisation problem. Below, we give the existence and uniqueness results.

Proposition 1.

Under the above assumptions on p and λ, the minimising problem (11) has a unique solution in $W^{1,p(x)}(\Omega)$.

Proof.

We present the main points needed to establish the desired result (11). Throughout the proof, we denote by $J$ the energy functional of Model (7), defined for any $f \in W^{1,p(x)}(\Omega)$.

The proof is divided into two steps.

- Step 1 (existence). We establish the existence of an element $f^*$ of $W^{1,p(x)}(\Omega)$ such that $J(f^*) = \inf_{f \in W^{1,p(x)}(\Omega)} J(f)$. First, $J(f) \ge 0$ for any f, so there exists a real number $m \ge 0$ such that $m = \inf_f J(f)$. We then consider a minimising sequence $(f_n)$ of J, i.e., $J(f_n) \to m$; in particular, the sequence $(J(f_n))$ is bounded. By the triangle inequality and this bound on the fidelity term, there exists a constant $C_1 > 0$ such that $\|f_n\|_{L^2(\Omega)} \le C_1$. On the other hand, since $p(x) \le 2$ and $\Omega$ is bounded, the embedding of $L^2(\Omega)$ into $L^{p(x)}(\Omega)$ is continuous, i.e., there exists a constant $C_2 > 0$ such that $\|f\|_{L^{p(x)}(\Omega)} \le C_2 \|f\|_{L^2(\Omega)}$; hence $\|f_n\|_{L^{p(x)}(\Omega)} \le C_1 C_2$. Moreover, combining the equivalence between norm and modular [] (Theorem 2.3) with the bound on the regularisation term, we deduce that there exists a positive constant $C_3$ such that $\|\nabla f_n\|_{L^{p(x)}(\Omega)} \le C_3$. Returning to the definition of the norm of $W^{1,p(x)}(\Omega)$, we obtain the desired estimate $\|f_n\|_{W^{1,p(x)}(\Omega)} \le C_1 C_2 + C_3$. Since $W^{1,p(x)}(\Omega)$ is a reflexive space, we can extract from $(f_n)$ a subsequence, still denoted $(f_n)$, that converges weakly in $W^{1,p(x)}(\Omega)$; we denote its weak limit by $f^*$. Since J is strictly convex (as the sum of two convex functions, one of which is strictly convex) and continuous for the norm topology, it is weakly lower semicontinuous by [] (Corollary 3.9), so that $J(f^*) \le \liminf_n J(f_n) = m$. On the other hand, $m \le J(f^*)$ by the definition of m. Therefore, $J(f^*) = m$, which establishes that the minimisation problem has a solution in $W^{1,p(x)}(\Omega)$.

- Step 2 (uniqueness). The uniqueness of $f^*$ follows from the strict convexity of J. Let us reason by contradiction and suppose that J reaches its minimum m at two distinct elements $f_1 \neq f_2$. Since J is strictly convex, for all $t \in (0, 1)$ we have $J(t f_1 + (1 - t) f_2) < t\,J(f_1) + (1 - t)\,J(f_2) = m$, which contradicts the fact that m is the infimum of J. Hence, the solution of the minimising problem is unique. □

Remark 1.

The solution of (11) is characterised as the solution to a partial differential equation described by the stationary equation.

Indeed, using the Euler–Lagrange equation, one can notice that the unique solution of the minimising problem (11) coincides with the solution of the partial differential equation in (24). Therefore, determining this unique solution of the optimisation problem (11) in practice is equivalent to solving the partial differential equation in (24).
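For illustration only, assume that Model (7) takes the common form below, with the exponent p(x) computed from the observed image $f_0$ (and hence independent of f); the article's functional may contain additional terms. Under this assumption, the Euler–Lagrange equation can be derived as follows, and the operator $\operatorname{div}(|\nabla f|^{p(x)-2}\nabla f)$ appearing in it is the p(x)-Laplacian of f.

```latex
J(f) = \int_\Omega \frac{1}{p(x)}\,|\nabla f|^{p(x)}\,dx + \frac{\lambda}{2}\int_\Omega (f - f_0)^2\,dx .

% First variation in an arbitrary direction \varphi:
\frac{d}{d\varepsilon} J(f + \varepsilon\varphi)\Big|_{\varepsilon = 0}
  = \int_\Omega |\nabla f|^{p(x)-2}\,\nabla f \cdot \nabla\varphi\,dx
  + \lambda \int_\Omega (f - f_0)\,\varphi\,dx = 0 \qquad \forall \varphi .

% Integrating the first term by parts:
\int_\Omega \Big[ -\operatorname{div}\big(|\nabla f|^{p(x)-2}\nabla f\big) + \lambda\,(f - f_0) \Big]\,\varphi\,dx
  + \int_{\partial\Omega} |\nabla f|^{p(x)-2}\,\frac{\partial f}{\partial n}\,\varphi\,ds = 0 .

% Hence the stationary problem with a natural (Neumann) boundary condition:
-\operatorname{div}\big(|\nabla f|^{p(x)-2}\nabla f\big) + \lambda\,(f - f_0) = 0 \ \text{in } \Omega,
\qquad |\nabla f|^{p(x)-2}\,\frac{\partial f}{\partial n} = 0 \ \text{on } \partial\Omega .
```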

This point is not the aim of this paper. In the next subsection, we propose another approach, based on the discretisation of the minimisation problem (11).

3.4. The Corresponding Discretised Model by Means of Graph Theory

After reviewing the continuous case, we now discuss how to translate the framework to the discrete setting of a graph. In contrast to the well-known numerical schemes recalled earlier, we propose to apply the theory of regularisation on weighted graphs [].

We introduce this section by recalling the main tools on graph theory.

Let $V = \{u_1, \dots, u_d\}$ designate a finite set of d vertices. Each vertex corresponds to a pixel of the image. The finite set V plays the same role as the domain $\Omega$ in the continuous case. Let u and v be two vertices; the pair (u, v) is called an edge. We write u ∼ v for two adjacent vertices. The neighbourhood of a vertex u (i.e., the set of vertices adjacent to u) is denoted by N(u). We say that an edge is incident on vertex u if the edge starts from u. An edge (u, v) is a self-loop if u = v.

Let $w : V \times V \to \mathbb{R}^+$ be a symmetric weight function (i.e., w(u, v) = w(v, u) for every (u, v) in V × V). Some examples of weight functions can be found in [,]. If (u, v) is not an edge, one has w(u, v) = 0, and thus the set of edges can be characterised by the weight function as

$$E = \{(u, v) \in V \times V : w(u, v) \neq 0\}.$$

The degree of a vertex u is defined as

$$\delta_w(u) = \sum_{v \sim u} w(u, v).$$

V, E, and w define a weighted graph G = (V, E, w). The elements of V are the vertices of the graph, and the elements of E are its edges. A path is a sequence of vertices $(u_1, u_2, \dots, u_m)$ such that $(u_i, u_{i+1})$ is an edge for all $1 \le i < m$.
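The graph construction above can be sketched for an image grid as follows. Assuming, as is common, that each pixel is joined to its 4 (or 8) nearest neighbours, the hypothetical `grid_graph` helper stores the weighted graph as a dictionary `{u: {v: w(u, v)}}`, which has no self-loops and is symmetric whenever the weight function is, and `degree` computes δ_w(u). The default unit weight is a placeholder for whatever weight function is used.

```python
def grid_graph(rows, cols, weight=lambda u, v: 1.0, eight=False):
    """Weighted graph of a rows x cols pixel grid as {u: {v: w(u, v)}}.
    Vertices are (row, col) tuples; edges join 4-neighbours (or 8-neighbours).
    The graph is symmetric as long as `weight` is symmetric."""
    offsets = [(-1, 0), (1, 0), (0, -1), (0, 1)]
    if eight:
        offsets += [(-1, -1), (-1, 1), (1, -1), (1, 1)]
    graph = {}
    for y in range(rows):
        for x in range(cols):
            u = (y, x)
            graph[u] = {}
            for dy, dx in offsets:
                v = (y + dy, x + dx)
                if 0 <= v[0] < rows and 0 <= v[1] < cols:  # stay inside the image
                    graph[u][v] = weight(u, v)
    return graph

def degree(graph, u):
    """delta_w(u): sum of the weights w(u, v) over the neighbours v of u."""
    return sum(graph[u].values())

# Usage: on a 3x3 grid, the centre pixel has 4 neighbours and a corner pixel has 2.
g = grid_graph(3, 3)
print(degree(g, (1, 1)), degree(g, (0, 0)))
```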

The following highlights some properties of graph theory.

- A graph is connected when there is a path between any two vertices.

- A graph is undirected when the set of edges is symmetric, i.e., for each edge (u, v) ∈ E, we also have (v, u) ∈ E, and the weight function satisfies the symmetry condition w(u, v) = w(v, u).

In the following, the graphs are always assumed to be connected and undirected, with no self-loops or multiple edges.

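We now denote by $\mathcal{H}(V)$ the space of real-valued functions defined on the vertices of a weighted graph G = (V, E, w); each function f in $\mathcal{H}(V)$ assigns a real value f(u) to each vertex u ∈ V. For a function f ∈ $\mathcal{H}(V)$, the norm of f is given by

Note that, when the exponent p is constant, this norm simply becomes the classical $\ell^p$-norm, that is,

$$\|f\|_p = \Big( \sum_{u \in V} |f(u)|^p \Big)^{1/p}.$$

The mapping $\langle f, g \rangle = \sum_{u \in V} f(u)\,g(u)$, with f, g ∈ $\mathcal{H}(V)$, is an inner product on $\mathcal{H}(V)$. Moreover, the mapping

$$\|f\|_2 = \sqrt{\langle f, f \rangle}$$

is a norm, so that $\mathcal{H}(V)$ has a Hilbert space structure.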

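The notation u ∼ v always denotes two adjacent vertices. The local variation of f at a vertex u, defined by

$$\|\nabla_w f(u)\| = \Big( \sum_{v \sim u} w(u, v)\,\big(f(v) - f(u)\big)^2 \Big)^{1/2},$$

is a semi-norm that measures the regularity of a function around a vertex of the graph.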

By analogy with the classical differential operators used in PDEs, the fundamental tools on graphs are the weighted partial differences. For more detailed information on these operators, the reader is referred to [,,].

Let G = (V, E, w) be a weighted graph and f be a real-valued function on the set of vertices V of G. The weighted partial difference of f at a vertex u ∈ V in the direction of a vertex v ∈ V is defined as

$$\partial_v f(u) = \sqrt{w(u, v)}\,\big(f(v) - f(u)\big).$$

Based on this definition, one can straightforwardly introduce the weighted gradient operator on graphs, defined at a vertex u by

$$\nabla_w f(u) = \big(\partial_v f(u)\big)_{v \in V}.$$

The weighted gradient at a vertex u must be understood as a vector indexed by the vertices v ∈ V, and the norm of this finite vector, which represents the local variation of f at u, is given by

$$\|\nabla_w f(u)\| = \Big( \sum_{v \in V} \big(\partial_v f(u)\big)^2 \Big)^{1/2} = \Big( \sum_{v \sim u} w(u, v)\,\big(f(v) - f(u)\big)^2 \Big)^{1/2}.$$
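The weighted partial difference and the local variation translate directly into code. The following minimal sketch assumes an adjacency-dictionary representation `{u: {v: w(u, v)}}` (our choice, not the article's) and only sums over neighbours, since w(u, v) = 0 for non-adjacent vertices; the function names are illustrative.

```python
import math

def partial_difference(f, w_uv, u, v):
    """Weighted partial difference (d_v f)(u) = sqrt(w(u, v)) * (f(v) - f(u))."""
    return math.sqrt(w_uv) * (f[v] - f[u])

def gradient_norm(graph, f, u):
    """Local variation ||grad_w f(u)||, summing only over the neighbours of u."""
    return math.sqrt(sum(partial_difference(f, w_uv, u, v) ** 2
                         for v, w_uv in graph[u].items()))

# Usage: a three-vertex path a - b - c with weights 1 and 4.
graph = {"a": {"b": 1.0}, "b": {"a": 1.0, "c": 4.0}, "c": {"b": 4.0}}
f = {"a": 0.0, "b": 1.0, "c": 3.0}
print(gradient_norm(graph, f, "b"))  # sqrt(1 * 1 + 4 * 4) = sqrt(17)
```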

Recall that the bounded domain $\Omega$ presented previously is a subset of $\mathbb{R}^N$; in the discrete setting, its role is played by the vertex set V. In the discretised formulation, the smoothing process reduces to the following optimisation problem

Working in this framework may be considered as a discrete analogue of continuous regularisation, carried out on weighted graphs. One of its advantages is that the regularisation is expressed directly in a discrete setting, and the resulting processing methods are computed by simple and efficient iterative algorithms, without solving any partial differential equations (PDEs). The idea of using functions on weighted graphs in a regularisation process has been proposed in other contexts, such as semi-supervised data learning []. However, in this paper, we adopt a digital approach, contrary to the numerical approaches used, for instance, in []. From this, the optimisation problem in (26) becomes
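As an example of such a simple iterative algorithm, the following sketch implements a Jacobi-type fixed-point scheme in the spirit of the discrete p-Laplacian regularisation of Elmoataz, Lezoray, and Bougleux, extended to a vertex-dependent exponent p(u). It rests on stated assumptions: the discrete energy is taken to be Σ_u ‖∇_w f(u)‖^{p(u)}/p(u) + (λ/2) Σ_u (f(u) - f_0(u))², a small ε regularises the local variation where it vanishes, λ > 0, and the stopping rule is ours. Setting the gradient of this energy to zero gives Σ_v γ(u, v)(f(u) - f(v)) + λ(f(u) - f_0(u)) = 0 with γ(u, v) = w(u, v)(‖∇_w f(u)‖^{p(u)-2} + ‖∇_w f(v)‖^{p(v)-2}), and each iteration solves this equation for f(u) with the coefficients frozen. The article's own update (Algorithm 1) may differ.

```python
import math

def regularise(graph, f0, p, lam, eps=0.01, tol=1e-4, max_iter=500):
    """Jacobi-type fixed-point iteration for (under the stated assumptions)
        sum_u ||grad_w f(u)||^p(u) / p(u) + lam / 2 * sum_u (f(u) - f0(u))^2.
    graph: symmetric adjacency {u: {v: w(u, v)}}; f0, p: {u: value}; lam > 0."""
    f = dict(f0)
    for _ in range(max_iter):
        # Regularised local variation sqrt(eps^2 + ||grad_w f(u)||^2) at every vertex.
        norm = {u: math.sqrt(eps * eps + sum(w * (f[v] - f[u]) ** 2 for v, w in nbrs.items()))
                for u, nbrs in graph.items()}
        new_f = {}
        for u, nbrs in graph.items():
            num, den = lam * f0[u], lam
            for v, w in nbrs.items():
                # gamma(u, v) = w(u, v) * (||grad f(u)||^(p(u)-2) + ||grad f(v)||^(p(v)-2))
                gamma = w * (norm[u] ** (p[u] - 2) + norm[v] ** (p[v] - 2))
                num += gamma * f[v]
                den += gamma
            new_f[u] = num / den  # weighted average of f0(u) and neighbouring values
        change = max(abs(new_f[u] - f[u]) for u in graph)
        f = new_f
        if change < tol:
            break
    return f

# Usage: smoothing an isolated spike on a five-vertex path graph.
path = {i: {} for i in range(5)}
for i in range(4):
    path[i][i + 1] = path[i + 1][i] = 1.0
out = regularise(path, {0: 0.0, 1: 0.0, 2: 9.0, 3: 0.0, 4: 0.0}, {i: 1.5 for i in path}, lam=1.0)
```

Because each update is a weighted average of f_0(u) and the neighbouring values, the iterates remain within the range of f_0, and a large λ keeps the result close to f_0.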

The models with p = 1 or p = 2 have been treated previously (for more details, the reader can consult []).

In our work, we treat the general case of a variable exponent, for which we have the following result.

Proposition 2.

The discretised minimising problem in (27) has a unique solution.

Proof.

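We use elementary tools of convex analysis applied to the functional of (27), in which p and λ are defined as in the previous case, and show that the problem in (27) has a unique global minimum. To this end, we split the functional into its regularisation part and its fidelity part, defined as follows

Each of these functions is convex on the space of real-valued functions defined on V, and the fidelity part, being quadratic, is strictly convex. Moreover, the functional is coercive, which means that it tends to +∞ as the norm of f tends to +∞; this fact is easily obtained using the triangle inequality.

Since the space of functions on V is finite-dimensional, a continuous, coercive, and strictly convex functional has a unique minimiser; hence, the problem described by (27) has a unique solution. □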

In our work, the weight considered is

where

and the term designates the number of vertices of the graph. This choice is favoured because we only take into account the difference between a vertex and its neighbourhood, and we also take a deviation parameter into account.
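The article's exact weight is not reproduced in the sketch below; it only illustrates a common choice from the graph-regularisation literature, the Gaussian intensity weight w(u, v) = exp(-(f_0(u) - f_0(v))²/σ²) on each edge. As a hypothetical choice, σ is set to the root mean square of the neighbour differences over all edges when it is not given; the function name `gaussian_weights` is illustrative.

```python
import math

def gaussian_weights(graph, f0, sigma=None):
    """Gaussian intensity weights w(u, v) = exp(-(f0(u) - f0(v))^2 / sigma^2) on the edges.
    graph: adjacency {u: {v: ...}} (only its keys are used); f0: {u: value}.
    If sigma is None, it is set (hypothetically) to the RMS of the edge differences."""
    if sigma is None:
        diffs = [(f0[u] - f0[v]) ** 2 for u in graph for v in graph[u]]
        sigma = math.sqrt(sum(diffs) / len(diffs)) if diffs else 1.0
    sigma = sigma or 1.0  # guard against sigma = 0 on a constant image
    return {u: {v: math.exp(-((f0[u] - f0[v]) ** 2) / sigma ** 2) for v in graph[u]}
            for u in graph}

# Usage: similar neighbours get a weight close to 1, dissimilar ones a smaller weight.
graph = {0: {1: 1.0}, 1: {0: 1.0, 2: 1.0}, 2: {1: 1.0}}
w = gaussian_weights(graph, {0: 0.0, 1: 0.0, 2: 1.0})
```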

4. Some Illustrations of the Effects of Parameters p and λ

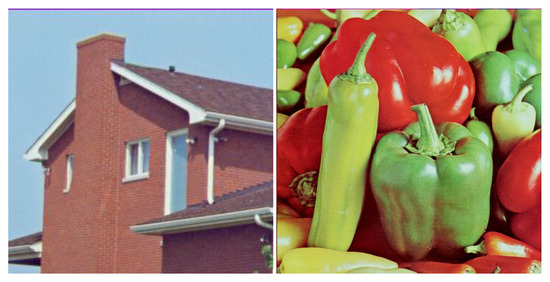

Algorithm 1 describes the process for calculating the p(x)-Laplacian regularisation of an image. The effects of the parameters p and λ of the model on an image are illustrated in Figure 2.

- In the case , the pictures are partitioned into several homogeneous areas;

- In the case , when p approaches 2, there is a smoothing effect, whereas when p tends to 1 there is no smoothing effect;

- Increasing the parameter λ limits the effects of p.

Figure 2.

Effects of the parameters p and λ on the Lena image. From left to right, the parameter p increases; from top to bottom, λ increases.

Overall, by acting on the values of these parameters, different levels of smoothing are obtained, which make the pictures either sharp or blurred.

| Algorithm 1. Algorithm regularisation |
|---|
| x and j are positions in IM; … is the map result; … is a temporary map |
| Compute the gradient map G on the image |
| Compute the P map values on the image, such that … := 0.01 and … := user value in … |
| For all x in the image: … := … |
| Do: … := …; for j in the neighbours of x: … |
| While … |

4.1. Algorithmic Formulation of the Mathematical Model

4.1.1. Implementation Procedure

As in [], an image is represented by a graph. In this structure, every vertex contains the coordinates of a pixel, and each edge encodes the difference between two pixels. The mathematical model in (26) is applied to this graph, which must satisfy the existence and uniqueness requirements given in Section 3.

4.1.2. Towards an Adaptive Model

In the literature, the parameter p is often treated as static in studies of this model. In our study, by contrast, we propose to adapt the parameter λ in order to control the smoothing effects due to the parameter p, so as to take the details of the image into account.

Evaluation process for λ to control the effects of p: the parameters p and λ play a crucial role in the dynamic smoothing process. We therefore compute the colour difference between a pixel and its neighbours by means of the image gradient, which was chosen in accordance with the objective to be achieved.

Implementation of the parameter control process: in a preliminary step, we calculate the gradient of the image in order to detect prominent areas as well as noise. The idea is to vary the parameters according to the gradient in order to preserve details (areas of interest) and smooth out the other areas. However, since the gradient is a local operator, it is sensitive to noise and also produces very large anomalous values that alter the process. For this reason, the values obtained at each pixel should be checked in order to reduce the effect of the noise.
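One way to compute such a gradient map is the Sobel operator, which the article also uses later for evaluation; assuming it here is our choice, since this paragraph does not name the gradient operator. The minimal sketch below returns the Sobel gradient magnitude of a greyscale image stored as a list of rows, with replicated borders; the function name is illustrative.

```python
import math

SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]

def sobel_magnitude(img):
    """Gradient magnitude sqrt(gx^2 + gy^2) with 3x3 Sobel kernels and replicated borders."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            gx = gy = 0.0
            for j in range(3):
                for i in range(3):
                    v = img[min(max(y + j - 1, 0), h - 1)][min(max(x + i - 1, 0), w - 1)]
                    gx += SOBEL_X[j][i] * v
                    gy += SOBEL_Y[j][i] * v
            out[y][x] = math.hypot(gx, gy)
    return out

# Usage: a vertical edge between columns 1 and 2 gives a strong response there.
edge = [[0.0, 0.0, 10.0, 10.0] for _ in range(4)]
mag = sobel_magnitude(edge)
```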

Efficient interval for the gradient: we focus on the following three types of pixels:

- Noise-affected pixels;

- Noise-unaffected pixels;

- Outline pixels.

The gradient values must be chosen so as to identify the pixels that should affect the process as little as possible (i.e., noise-affected and contour pixels, which should have the least effect on neighbouring pixels). From these values, we build intervals that allow us to select the relevant gradient values. These intervals are defined from the interquartile range of the gradient distribution, following the statistical theory of data dispersion (see []), according to the formula

$$\big[\,Q_1 - k\,(Q_3 - Q_1),\; Q_3 + k\,(Q_3 - Q_1)\,\big],$$

where $Q_1$ and $Q_3$ are the first and third quartiles, respectively. The choice of the positive constant k, either 1.5 or 3, was discussed by Frigge et al. in [].

These authors concluded that k = 1.5 is a suitable value in most situations, although there are cases where k = 3 may be an appropriate compromise.

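Thus, the following intervals were chosen:

$$I_{1.5} = \big[\,Q_1 - 1.5\,(Q_3 - Q_1),\; Q_3 + 1.5\,(Q_3 - Q_1)\,\big]$$

and

$$I_{3} = \big[\,Q_1 - 3\,(Q_3 - Q_1),\; Q_3 + 3\,(Q_3 - Q_1)\,\big].$$

For the interval $I_3$, the outer values correspond to major aberrant values (such as noise).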
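The interval construction can be sketched as follows. The code computes Tukey-type fences [Q_1 - k(Q_3 - Q_1), Q_3 + k(Q_3 - Q_1)] on the gradient values and marks each pixel as inside or outside. Quartiles are obtained with Python's `statistics.quantiles` using its default 'exclusive' method; Frigge et al. discuss several quartile definitions and the article does not state which one it uses, so this is an assumption. The function names are hypothetical.

```python
import statistics

def tukey_interval(values, k=1.5):
    """Return (Q1 - k * IQR, Q3 + k * IQR), with IQR = Q3 - Q1."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # default method='exclusive'
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr

def classify(grad_map, k=1.5):
    """True where the gradient lies inside the interval (pixel to be smoothed),
    False outside (candidate noise or contour pixel)."""
    flat = [g for row in grad_map for g in row]
    lo, hi = tukey_interval(flat, k)
    return [[lo <= g <= hi for g in row] for row in grad_map]

# Usage: the isolated large gradient value 100 falls outside the interval.
mask = classify([[1, 2, 3, 4], [5, 6, 7, 100]])
```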

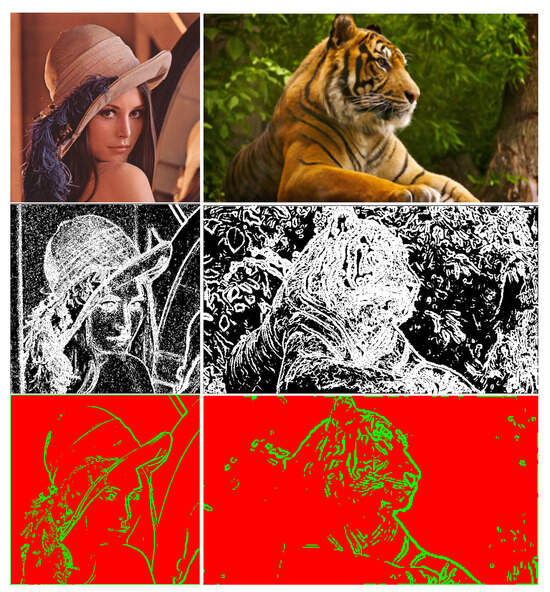

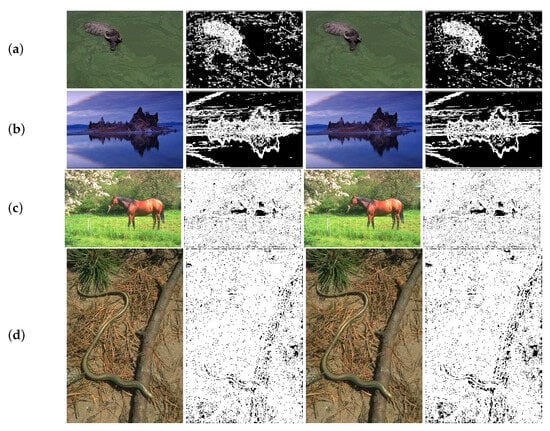

Robustness of these criteria: after a series of tests on an image database, we found that the interval is noise-sensitive and makes it possible to identify the areas to be smoothed. Algorithm 2 implements the process, and Figure 3 shows the effects of this choice on two images.

Figure 3.

Illustration of the interval effects. The first row shows the original images, the second row shows the result of edge and noise detection, and the last row shows the result of the proposed classification. More specifically, the pixels with a gradient value inside the interval are shown in red, and the pixels with a value outside this interval are shown in green.

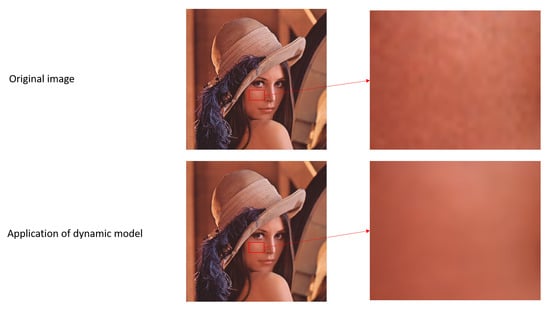

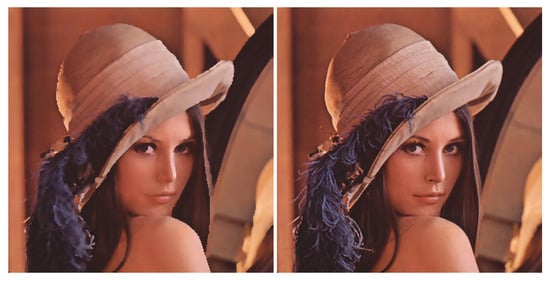

Pixels that participate in the dynamic processing are shown in red; they undergo variations in p and λ depending on their gradient value. Pixels that will not be modified are shown in green; their values lie in the highest-gradient areas, where the values of λ neutralise the smoothing process. The effects of the adaptive model are shown in Figure 4.
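To make the role of this classification concrete, the following sketch builds a per-pixel λ map. The rule is entirely hypothetical: inside the interval [lo, hi], λ grows linearly with the gradient from `lam_min` to `lam_max`, so that more detailed areas are smoothed less, and pixels outside the interval receive a very large `lam_off`, which makes the fidelity term dominate and effectively freezes them. The article's actual rule for the dynamic λ is not given in this section.

```python
def lambda_map(grad_map, lo, hi, lam_min=0.1, lam_max=10.0, lam_off=1e6):
    """Hypothetical per-pixel fidelity parameter.
    Inside [lo, hi]: linear in the gradient, from lam_min to lam_max.
    Outside [lo, hi]: lam_off, so that the fidelity term dominates and the pixel barely changes."""
    span = (hi - lo) or 1.0  # avoid division by zero for a degenerate interval
    return [[lam_min + (lam_max - lam_min) * (g - lo) / span if lo <= g <= hi else lam_off
             for g in row] for row in grad_map]

# Usage: gradient values 0, 5 and 10 lie inside [0, 10]; 50 lies outside and is frozen.
lam = lambda_map([[0.0, 5.0, 10.0, 50.0]], lo=0.0, hi=10.0)
```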

Figure 4.

Illustration of the adaptive model effects on the Lena image.

We can see that the areas that are considered to be visually homogeneous (Lena’s skin texture, for example) are smoothed in order to further standardise the colours. On the contrary, the contours with a high gradient are preserved.

| Algorithm 2. Algorithm regularisation with dynamic λ |
|---|
| x and j are positions in IM; … is the map result; … is a temporary map |
| Compute the gradient map G on the image |
| Compute the P map values on the image, such that … := 0.01 |
| Compute the quartiles of the gradient norm |
| Compute the … map on the image from the quartiles, such that: … |
| For all x in the image: … := … |
| Do: … := …; for j in the neighbours of x: … |
| While … |

5. Results and Discussion

Presentation of the database and discussion of execution times and complexity

We used two images to illustrate the treatments:

- Lena image, which is widely used in image processing;

- Tiger image, because its most significant content lies within the depth of field: the tiger is in focus, while the rest of the scene, which is less important, should be homogenised.

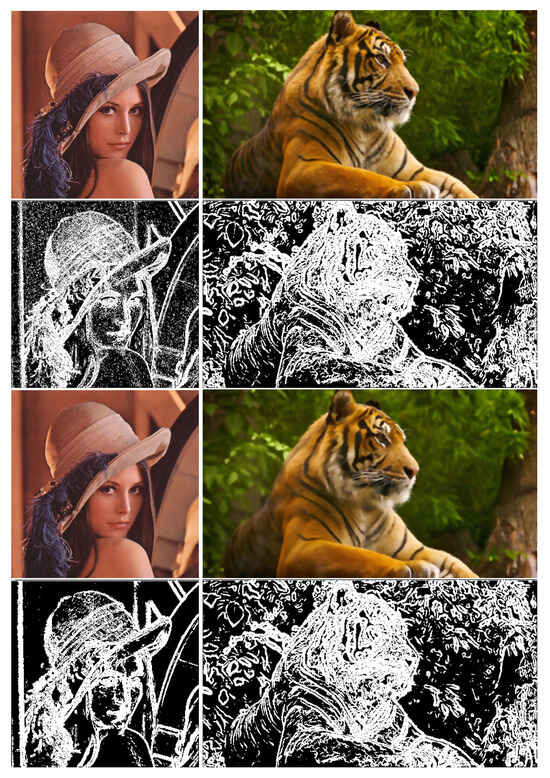

The execution time of the programme mainly depends on the smoothing factor and on the number of pixels to be smoothed: the higher the smoothing factor, the more a pixel must be modified. Processing continues until every pixel in the image is stabilised. The complexity depends on N, the number of pixels in the image, and on a constant k representing the disorder in the original image. By increasing the value of λ in detailed areas, the proposed model reduces the processing time. Figure 5 shows the results of the adaptive model on noise.

Figure 5.

Illustration of the effects of the adaptive model. From top to bottom: the original images, the result of applying the Sobel filter, the result of applying the adaptive algorithm, and finally the result of applying the Sobel filter to the processed images. The proposed adaptive method reduces the number of noise and contour points from 21,571 to 19,552 pixels for the Lena image (a reduction of 9.35%) and from 15,600 to 14,374 pixels for the Tiger image (a reduction of 7.86%).

We used the adaptive model on 100 images of the Berkeley database set []. This database contains colour photographs of various scenes. The results of these studies are presented in the following sections.

5.1. Results with the Peak Signal-to-Noise Ratio (PSNR)

First, we evaluated the quality of the filtering using the peak signal-to-noise ratio (PSNR). This measure quantifies the level of noise in an image. Its formulation is given in (31):

Since no noise-free images are available in our study, we use the formula in (33) to evaluate the quality of the images resulting from the dynamic processing.
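For reference, the usual PSNR definition, PSNR = 10 log10(MAX²/MSE), where MSE is the mean squared error between a reference image and a processed image, can be sketched as follows. MAX = 255 assumes 8-bit images, and this textbook definition is not necessarily identical to the variant in (31)–(33).

```python
import math

def psnr(ref, test, max_value=255.0):
    """PSNR in dB between two equally sized greyscale images (lists of rows)."""
    se, n = 0.0, 0
    for r_row, t_row in zip(ref, test):
        for a, b in zip(r_row, t_row):
            se += (a - b) ** 2
            n += 1
    mse = se / n
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)

# Usage: an error of 1 grey level everywhere gives 20 * log10(255), about 48.13 dB.
print(psnr([[0.0, 0.0]], [[1.0, 1.0]]))
```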

The values obtained on the Lena image for different values of p and λ are shown in Table 2 and can be compared with the reference values given in Table 1.

Table 1.

Relationship between PSNR and image quality.

Table 2.

Result of using PSNR on the Lena image (Figure 2) by varying p and λ.

The adaptive model yields a PSNR of 27.66 on the Lena image and a PSNR of 24.31 on the Tiger image. To validate the proposed filter, we applied the adaptive model to 100 images extracted from the Berkeley database [] (see Figure 6), 14 images extracted from the USC-SIPI Image Database [] (see Figure 7), and 24 images extracted from the Kodak Lossless True Color Image Suite [] (see Figure 8). Images from these databases are widely known and show various scenes with different contrasts.

Figure 6.

Illustration of the images contained in the Berkeley database.

Figure 7.

Illustration of the images contained in the USC-SIPI databases.

Figure 8.

Illustration of the images contained in the KODAK database.

From the Berkeley database, Table 3 and Table 4 show the DSSIM and PSNR results obtained, and Figure 9 shows two images that obtained the best PSNR following the application of the adaptive model as well as the two images with the worst PSNR.

Table 3.

DSSIM parameter values obtained on images of Figure 9. The Sobel columns give the number of points designating the contours as well as the noise.

Table 4.

Statistical results on the PSNR obtained with the adaptive model on the Berkeley database.

Figure 9.

Illustrations of the results obtained by testing 100 images extracted from the Berkeley database with an alteration (a degraded aspect due to, for instance, poor capture or compression) at a ratio of almost 50%. From top to bottom, (a–d) show the two best and the two worst results obtained according to the PSNR and DSSIM parameters. From left to right: the original degraded image, the result of the Sobel filter, the result of the adaptive algorithm applied to the degraded image, and finally the result of the Sobel filter applied to the processed image. In all cases, the number of pixels with a high gradient decreases.

The values presented in Table 4 are fairly homogeneous, as shown by the standard deviation of 2.19 around a mean PSNR of 31.13, which corresponds to good image quality according to this criterion. Over the sample used, the minimum PSNR is 26.36, so even the worst case remains close to a good result, while the best PSNR obtained by the adaptive model, 36.24, corresponds to an image quality considered excellent.

5.2. Results with the Structural Dissimilarity Measure (DSSIM)

In the first stage, we use the structural dissimilarity (DSSIM) [] to assess the quality of filtering; Table 5 shows the results obtained. This measure was developed to evaluate the visual quality of a compressed image with respect to the original image. It relies on the structural similarity (SSIM) index to measure the similarity of structure between the two images. Its formula is given by (34):

with

where $\mu_x$ and $\mu_y$ are the means of x and y, $\sigma_x^2$ and $\sigma_y^2$ are the variances of x and y, respectively, $\sigma_{xy}$ is their covariance, and $c_1$ and $c_2$ are constants needed to stabilise the division.
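A simplified sketch of these measures is given below. For brevity, SSIM is computed once over the whole image, whereas the standard index of Wang et al. averages it over local (typically Gaussian-weighted) windows, and DSSIM uses the common definition (1 - SSIM)/2. The constants c_1 = (0.01 L)² and c_2 = (0.03 L)², with L the dynamic range of the pixel values, are the usual defaults and an assumption here; the function names are illustrative.

```python
def ssim_global(x, y, max_value=255.0, k1=0.01, k2=0.03):
    """SSIM computed once over the whole image (no sliding window)."""
    xs = [v for row in x for v in row]
    ys = [v for row in y for v in row]
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    vx = sum((a - mx) ** 2 for a in xs) / n
    vy = sum((b - my) ** 2 for b in ys) / n
    cxy = sum((a - mx) * (b - my) for a, b in zip(xs, ys)) / n
    c1, c2 = (k1 * max_value) ** 2, (k2 * max_value) ** 2
    return ((2 * mx * my + c1) * (2 * cxy + c2)) / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2))

def dssim(x, y, **kwargs):
    """Structural dissimilarity with the common definition (1 - SSIM) / 2 (lower is better)."""
    return (1.0 - ssim_global(x, y, **kwargs)) / 2.0

# Usage: identical images give DSSIM = 0; a reversed image gives a large dissimilarity.
a = [[0.0, 50.0], [100.0, 200.0]]
print(dssim(a, a), dssim(a, [[200.0, 100.0], [50.0, 0.0]]))
```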

Table 5.

The result of using the DSSIM parameter on the Lena image (Figure 2) by varying p and λ.

To simulate images transmitted over a network, we compressed all images in the dataset. For this study, we chose JPEG compression: the images available in the database were compressed in JPEG format at quality levels ranging from 10% to 90%. We also studied the impact of a dynamic λ with respect to a static λ; Table 6 summarises this study.

Table 6.

Illustrations of DSSIM results obtained on 100 images extracted from the Berkeley database. This study was carried out according to the compression ratio in order to simulate degradation, and different static values of λ were compared with the dynamic λ method (bold values represent the best results).

The algorithm gives the best values in the dynamic case and when λ = 1.0: in terms of the DSSIM criterion, these two cases give the best results. However, λ = 1.0 leads to excessive smoothing over the whole image, as shown in Figure 10.

Figure 10.

Illustration of the effects of static and dynamic λ on the Lena image. (Left) static λ; (right) dynamic λ.

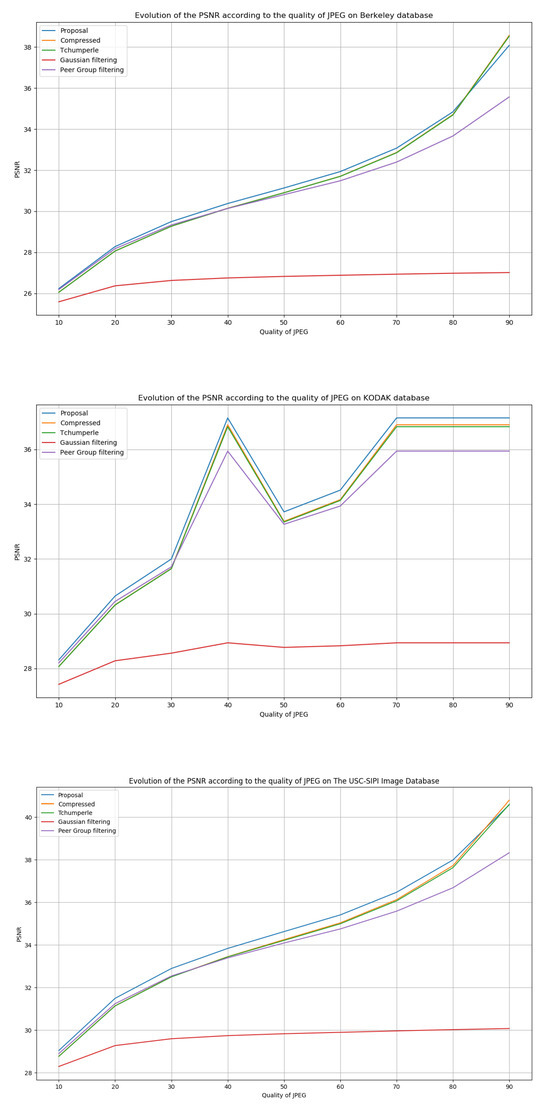

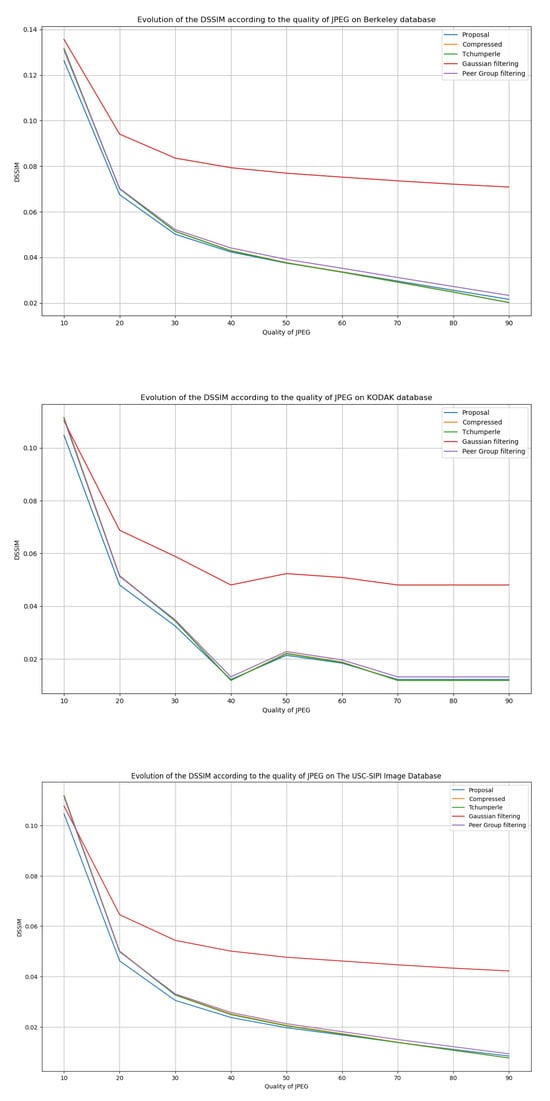

In a second step, we compare the original image with, on the one hand, the compressed image and, on the other hand, the compressed image after applying our filtering. Other filtering algorithms were also included in the evaluation. The method of D. Tschumperle [] regularises an image through an anisotropic smoothing process based on PDEs; since this model requires parameterisation, we used the standard values given in the literature. Gaussian filtering applies a Gaussian filter to the rows and then to the columns of the image. Peer group filtering smooths an image by replacing each pixel with the weighted sum of its closest neighbours in terms of colour distance. The purpose was to observe whether our proposal improves the visual rendering, especially in the case of high losses. The results obtained with PSNR and DSSIM are shown in Figure 11 and Figure 12 (for PSNR, a higher value is better, whilst for DSSIM, a lower value is better).

Figure 11.

Results on the evolution of the PSNR according to the quality of compression.

Figure 12.

Results on the evolution of the DSSIM according to the quality of the compression.

In Figures 11 and 12, the curve of the compressed image serves as the reference for judging the relevance of the models: for PSNR, a relevant model gives results above this curve, and for DSSIM, a relevant model gives results below it. Gaussian filtering does not satisfy these conditions. In the worst case, the other methods give results that almost coincide with those of the compressed image. Finally, the standard deviations reported in Table 7 show that the results are stable across all the databases used in this work. Table 8 shows the average execution times achieved by our model on the image databases used. The algorithm still needs to be optimised to reduce the time required to process an image: the average processing time observed is approximately 10 min per image.

Table 7.

Mean values and standard deviations of the DSSIM and PSNR criteria obtained after image processing by our proposed model on the Berkeley (120 images), Kodak (24 images), and USC-SIPI (13 images) databases.

Table 8.

Average execution times of the adaptive p(x)-Laplacian model on the image databases used in the experiments.

According to the PSNR criterion, our model improves the result for compression qualities between 10% and 70%. According to the DSSIM criterion, it improves the result for qualities between 10% and 30%. In both cases, for compression with high information loss, our proposal gives better PSNR and DSSIM values than the compressed image alone. As the compression becomes milder, our proposal is no longer necessary, since it gives worse values than the compressed image.
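The reading of Figures 11 and 12 given above, in which a method is useful at a given compression quality only if its PSNR is higher, or its DSSIM lower, than that of the compressed image alone, can be made systematic. The sketch below uses hypothetical function names and a hypothetical data layout (a dictionary from compression quality to per-image scores). It computes the mean and standard deviation used in Table 7 (population standard deviation, an assumption) and returns the quality levels at which the filtered images improve on the compressed ones. The numbers in the usage lines are placeholders, not results from this study.

```python
import numpy as np

def summarise(scores):
    """Mean and population standard deviation (ddof=0, assumed) of per-image scores."""
    a = np.asarray(scores, dtype=np.float64)
    return float(a.mean()), float(a.std())

def improving_qualities(compressed, filtered, higher_is_better):
    """Quality levels at which the mean filtered score beats the mean compressed score.

    compressed, filtered: dicts mapping a compression quality (in %) to a
    sequence of per-image scores. higher_is_better: True for PSNR, False for DSSIM.
    """
    better = []
    for q in sorted(compressed):
        mean_comp, _ = summarise(compressed[q])
        mean_filt, _ = summarise(filtered[q])
        improved = (mean_filt > mean_comp) if higher_is_better else (mean_filt < mean_comp)
        if improved:
            better.append(q)
    return better

# Placeholder scores for illustration only; they are not measurements from this study.
comp_psnr = {10: [22.0, 23.0], 50: [30.0, 31.0], 90: [38.0, 39.0]}
filt_psnr = {10: [23.5, 24.0], 50: [30.5, 31.2], 90: [36.0, 37.0]}
print(improving_qualities(comp_psnr, filt_psnr, higher_is_better=True))  # [10, 50]
```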

This work proposes an adaptive operator for controlling the smoothing strength of the p(x)-Laplacian method. Tests were carried out on the following three image databases: Berkeley, USC-SIPI, and Kodak. We degraded the images of these three databases by applying lossy compression at several quality levels. The results in Figure 11 and Figure 12 show that, with respect to the PSNR and DSSIM evaluation criteria, the p(x)-Laplacian method with our adaptive controller is more effective than the other smoothing methods from the literature included in the comparison. We showed that the p(x)-Laplacian operator with our control operator produces better results than the p(x)-Laplacian with a static control parameter. Our method instead produces a value of this parameter for each point of the image, adapted to the local situation, as shown by the examples in Table 6 with respect to the DSSIM evaluation criterion. Finally, we compared the p(x)-Laplacian with our control operator to other smoothing models from the literature, again applying the same degradation to all images in the datasets. Our proposal gives better results, except on the Kodak database, where the results are roughly equivalent in terms of the PSNR evaluation criterion.
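To make the contrast between a single exponent for the whole image and a per-pixel exponent map concrete, the sketch below runs an explicit finite-difference scheme for the p(x)-Laplacian flow u_t = div(|∇u|^(p(x)-2) ∇u). It is not the controller proposed in this work. As a stand-in, the adaptive map uses the variable exponent of Chen, Levine and Rao, p = 1 + 1/(1 + k|∇u|²), here without Gaussian pre-smoothing. The values of k, dt and the regularisation eps, the intensity range [0, 1] and all function names are assumptions made for illustration.

```python
import numpy as np

def forward_grad(u):
    """Forward differences; the last column/row gets 0 (Neumann boundary)."""
    ux = np.zeros_like(u)
    uy = np.zeros_like(u)
    ux[:, :-1] = u[:, 1:] - u[:, :-1]
    uy[:-1, :] = u[1:, :] - u[:-1, :]
    return ux, uy

def backward_div(fx, fy):
    """Discrete divergence, the negative adjoint of forward_grad (zero-flux borders)."""
    div = np.zeros_like(fx)
    div[:, 0] = fx[:, 0]
    div[:, 1:-1] = fx[:, 1:-1] - fx[:, :-2]
    div[:, -1] = -fx[:, -2]
    div[0, :] += fy[0, :]
    div[1:-1, :] += fy[1:-1, :] - fy[:-2, :]
    div[-1, :] -= fy[-2, :]
    return div

def exponent_map(u, k=10.0):
    """Hypothetical exponent in (1, 2], after Chen-Levine-Rao (no pre-smoothing):
    close to 1 (TV-like) on strong gradients, close to 2 (heat-like) in flat areas."""
    ux, uy = forward_grad(u)
    return 1.0 + 1.0 / (1.0 + k * (ux ** 2 + uy ** 2))

def px_laplacian_step(u, p, dt=0.01, eps=0.1):
    """One explicit step of u_t = div(|grad u|_eps^(p-2) grad u).

    |grad u|_eps = sqrt(|grad u|^2 + eps^2) avoids division by zero when p < 2.
    For 1 <= p <= 2 and eps < 1 the diffusivity is at most 1/eps,
    so dt <= eps/4 keeps the scheme stable (maximum principle).
    """
    u = np.asarray(u, dtype=np.float64)
    ux, uy = forward_grad(u)
    c = np.sqrt(ux ** 2 + uy ** 2 + eps ** 2) ** (p - 2.0)
    return u + dt * backward_div(c * ux, c * uy)

rng = np.random.default_rng(0)
clean = np.zeros((32, 32))
clean[:, 16:] = 1.0                                  # vertical step edge
noisy = clean + rng.normal(0.0, 0.1, clean.shape)
static_p = np.full_like(noisy, 1.5)                  # one exponent everywhere
u_static, u_adapt = noisy.copy(), noisy.copy()
for _ in range(50):
    u_static = px_laplacian_step(u_static, static_p)
    u_adapt = px_laplacian_step(u_adapt, exponent_map(u_adapt))
```

In the adaptive run the exponent is recomputed from the current image at each step, so smoothing is stronger where the gradient is weak and weaker near the edge.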

The use of an adaptive controller for the parameter of the p(x)-Laplacian operator has not yet been studied in the literature. Our contribution, which controls the smoothing strength according to the location in the image, improves the results obtained with this operator. In a final study, we used two noise generation methods from the literature, again on the Berkeley, Kodak, and USC-SIPI image databases. These methods add random noise to the images according to a probability distribution: additive noise following a Gaussian distribution, or signal-dependent noise following a Poisson distribution. The results of applying these methods and then correcting the images with the methods presented in this work are shown in Table 9 and Table 10. Overall, the proposed method ranks second only to the Tschumperlé method.

Table 9.

Evaluation results of the smoothing methods on the Berkeley, Kodak, and USC-SIPI image databases corrupted with Gaussian-distributed additive noise.

Table 10.

Evaluation results of the smoothing methods on the Berkeley, Kodak, and USC-SIPI image databases corrupted with Poisson-distributed noise.
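The two degradations evaluated in Tables 9 and 10 can be reproduced along the following lines with NumPy's random generator, assuming 8-bit images. The noise standard deviation and the Poisson scale are illustrative values, not those used to build the tables, and the function names are hypothetical. Gaussian noise is added independently of the signal, whereas Poisson noise is signal-dependent: each pixel is replaced by a Poisson draw whose mean is proportional to its intensity.

```python
import numpy as np

def add_gaussian_noise(img, sigma=20.0, rng=None):
    """Additive noise: img + N(0, sigma^2), rounded and clipped to the 8-bit range."""
    rng = np.random.default_rng() if rng is None else rng
    noisy = img.astype(np.float64) + rng.normal(0.0, sigma, size=img.shape)
    return np.clip(np.rint(noisy), 0, 255).astype(np.uint8)

def add_poisson_noise(img, scale=1.0, rng=None):
    """Signal-dependent noise: each pixel becomes Poisson(scale * value) / scale.

    'scale' plays the role of a photon count per grey level (assumed parameter):
    the smaller it is, the stronger the noise relative to the signal.
    """
    rng = np.random.default_rng() if rng is None else rng
    counts = rng.poisson(img.astype(np.float64) * scale)
    return np.clip(np.rint(counts / scale), 0, 255).astype(np.uint8)
```

With scale = 1, a pixel of value 100 receives noise with a standard deviation of about 10 grey levels, while a black pixel stays black.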

6. Conclusions and Prospects

In the field of image enhancement, smoothing methods are used to correct singularities. Among these methods, we focused on the p(x)-Laplacian, which is generally used in the literature with a fixed control parameter. This choice does not allow finer processing across the whole image. We therefore made this nonlinear regularisation operator adaptive in order to preserve important visual information, by proposing an adaptive control criterion that sets the smoothing strength as a function of the image gradient, analysed statistically. To evaluate this new model, we used the PSNR and DSSIM criteria. The former indicates good reconstruction quality when its value is above 24 dB, and the latter assesses the quality of the filtering. On our test databases, the average PSNR obtained is 24.80 dB, and the worst results remain close to the threshold of reasonable reconstruction. According to the DSSIM criterion, the quality of the image improves provided that it has undergone strong degradation, such as lossy compression.
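To give the 24 dB threshold a concrete meaning, assume 8-bit images (peak value 255) and the usual definition of PSNR in terms of the mean squared error (MSE), averaged over all pixels and channels. The threshold and the reported average then correspond to root-mean-square errors of roughly 16 and 15 grey levels, respectively:

```latex
\begin{aligned}
\mathrm{PSNR} &= 10\log_{10}\frac{255^2}{\mathrm{MSE}}
  \;\Longrightarrow\; \mathrm{MSE} = \frac{255^2}{10^{\mathrm{PSNR}/10}},\\
\mathrm{PSNR} = 24\ \mathrm{dB} &:\; \mathrm{MSE} = \frac{65025}{10^{2.4}} \approx 258.9,
  \quad \sqrt{\mathrm{MSE}} \approx 16.1,\\
\mathrm{PSNR} = 24.80\ \mathrm{dB} &:\; \mathrm{MSE} = \frac{65025}{10^{2.48}} \approx 215.3,
  \quad \sqrt{\mathrm{MSE}} \approx 14.7.
\end{aligned}
```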

Author Contributions

Conceptualization, J.-L.H., J.N. and J.V.; Writing—original draft, J.-L.H., J.N., J.V. and I.-P.M.; Visualization, J.-L.H., J.N. and J.V. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Bougleux, S.; Elmoataz, A.; Melkemi, M. Local and nonlocal discrete regularisation on weighted graphs for image and mesh processing. Int. J. Comput. Vis. 2009, 84, 220–236.

- Chambolle, A.; Lions, P.-L. Image recovery via total variation minimization and related problems. Numer. Math. 1997, 76, 167–188.

- Rudin, L.; Osher, S.; Fatemi, E. Nonlinear total variation based noise removal algorithms. Phys. D 1992, 60, 259–268.

- Chen, Y.; Levine, S.; Rao, M. Variable exponent, linear growth functionals in image restoration. SIAM J. Appl. Math. 2006, 66, 1383–1406.

- Coren, S.; Girgus, J.S. Principles of perceptual organization and spatial distortion: The gestalt illusions. J. Exp. Psychol. Hum. Percept. Perform. 1980, 6, 404–412.

- Ricard, J.; Baskurt, A.; Idrissi, K.; Lavoué, G. Object of interest-based visual navigation, retrieval, and semantic content identification system. Comput. Vis. Image Underst. 2004, 94, 271–294.

- Bollt, E.M.; Chartrand, R.; Esedoğlu, S.; Schultz, P.; Vixie, K.R. Graduated adaptive image denoising: Local compromise between total variation and isotropic diffusion. Adv. Comput. Math. 2009, 61–85.

- Harjulehto, P.; Hästö, P.; Latvala, V. Minimizers of the variable exponent, non-uniformly convex Dirichlet energy. J. Math. Pures Appl. 2008, 89, 174–197.

- Musielak, J. Orlicz Spaces and Modular Spaces; Springer: Berlin/Heidelberg, Germany, 2010.

- Diening, L.; Harjulehto, P.; Hästö, P.; Růžička, M. Lebesgue and Sobolev Spaces with Variable Exponents; Springer: Berlin/Heidelberg, Germany, 2017.

- Kováčik, O.; Rákosník, J. On spaces L^{p(x)} and W^{k,p(x)}. Czechoslov. Math. J. 1991, 41, 592–618.

- Brezis, H. Analyse Fonctionnelle: Théorie et Applications; Masson: Paris, France, 1983. Available online: https://cir.nii.ac.jp/crid/1571135649569314304 (accessed on 10 February 2023).

- Elmoataz, A.; Lezoray, O.; Bougleux, S. Nonlocal discrete regularisation on weighted graphs: A framework for image and manifold processing. IEEE Trans. Image Process. 2008, 17, 1047–1060.

- Elmoataz, A.; Desquesnes, X.; Lezoray, O. Non-Local Morphological PDEs and p-Laplacian Equation on Graphs With Applications in Image Processing and Machine Learning. IEEE J. Sel. Top. Signal Process. 2012, 6, 764–779.

- Ta, V.T.; Elmoataz, A.; Lezoray, O. Nonlocal PDEs-based morphology on weighted graphs for image and data processing. IEEE Trans. Image Process. 2011, 20, 1504–1516.

- Zhou, D.; Schölkopf, B. Regularization on Discrete Spaces. In Proceedings of the Pattern Recognition; Kropatsch, W.G., Sablatnig, R., Hanbury, A., Eds.; Springer: Berlin/Heidelberg, Germany, 2005; pp. 361–368.

- Albarello, L.; Bourgeois, E.; Guyot, J.L. Statistique Descriptive: Un Outil Pour Les Praticiens Chercheurs; De Boeck Supérieur: Louvain-la-Neuve, Belgium, 2010.

- Frigge, M.; Hoaglin, D.C.; Iglewicz, B. Some implementations of the boxplot. Am. Stat. 1989, 43, 50–54.

- Martin, D.; Fowlkes, C.; Tal, D.; Malik, J. A Database of Human Segmented Natural Images and its Application to Evaluating Segmentation Algorithms and Measuring Ecological Statistics. In Proceedings of the Eighth IEEE International Conference on Computer Vision, ICCV 2001, Vancouver, BC, Canada, 7–14 July 2001; Volume 2, pp. 416–423.

- Weber, A.G. The USC-SIPI Image Database Version 5. USC-SIPI Report 1997, 315. Available online: https://cir.nii.ac.jp/crid/1573950399791107200 (accessed on 27 January 2023).

- Franzen, R. Kodak Lossless True Color Image Suite. 1999, 4. Available online: http://r0k.us/graphics/kodak (accessed on 27 January 2023).

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Tschumperlé, D. Fast anisotropic smoothing of multi-valued images using curvature-preserving PDE’s. Int. J. Comput. Vis. 2006, 68, 65–82.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).