A Novel Visual SLAM Based on Multiple Deep Neural Networks

Abstract

1. Introduction

- A deep LoopClosing module was designed by combining Seq-CALC [28] and SG-Matcher.

- Compared with many existing SLAM systems, the proposed SLAM system reduces estimation error by at least 0.1 m on the KITTI odometry dataset, and it runs in real time at about 0.08 s per frame.

The rest of the paper is organized as follows. Section 2 reviews related work. Section 3 explains the system architecture of our visual SLAM and the design principles of each module in detail. Section 4 describes the experiments used to test our system and analyzes the experimental results. Section 5 concludes the paper.

2. Related Work

2.1. Feature Extractions for Visual SLAM

2.2. Deep Feature Matching

2.3. Loop Closure Detection

3. The Proposed SLAM

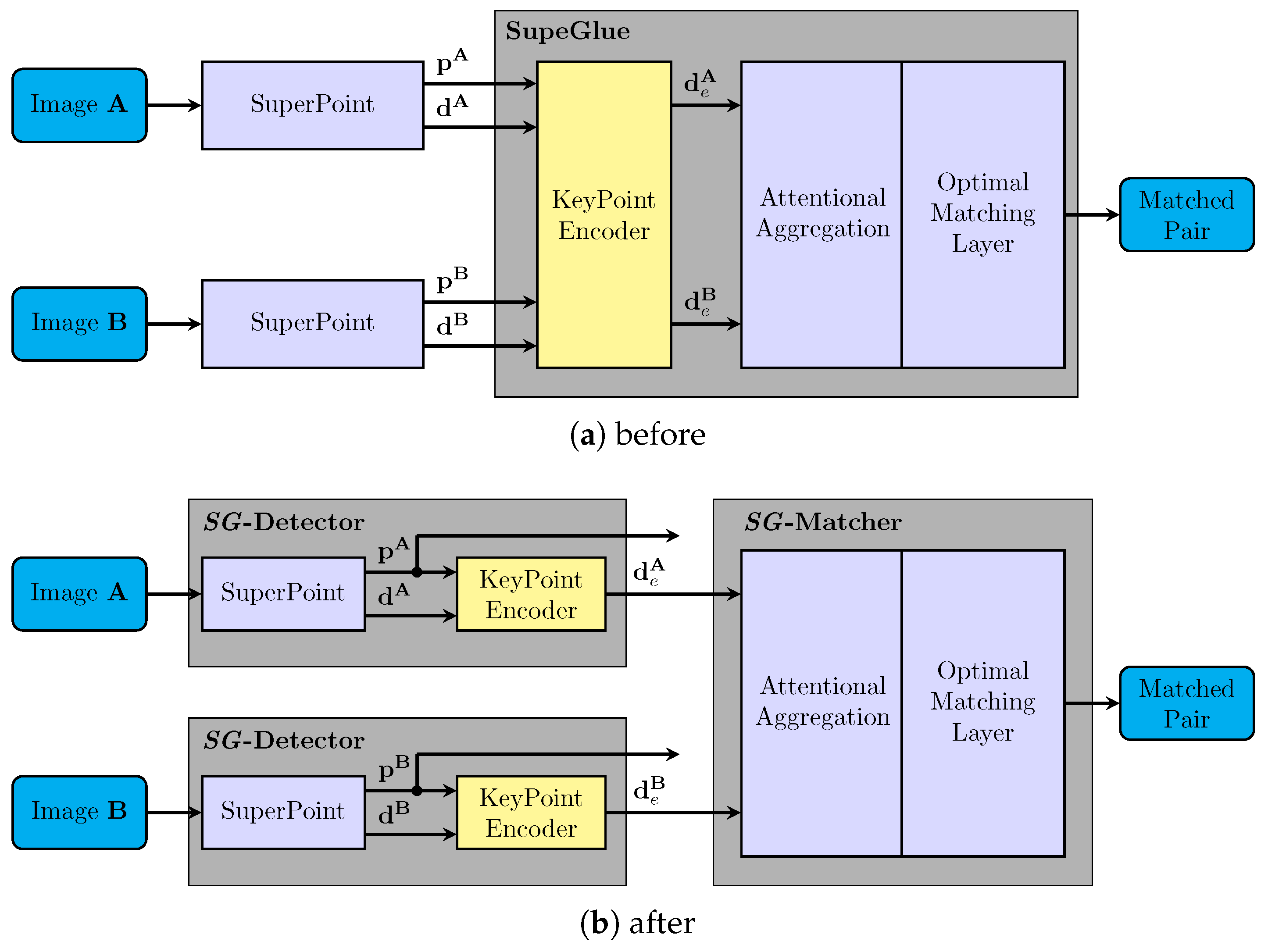

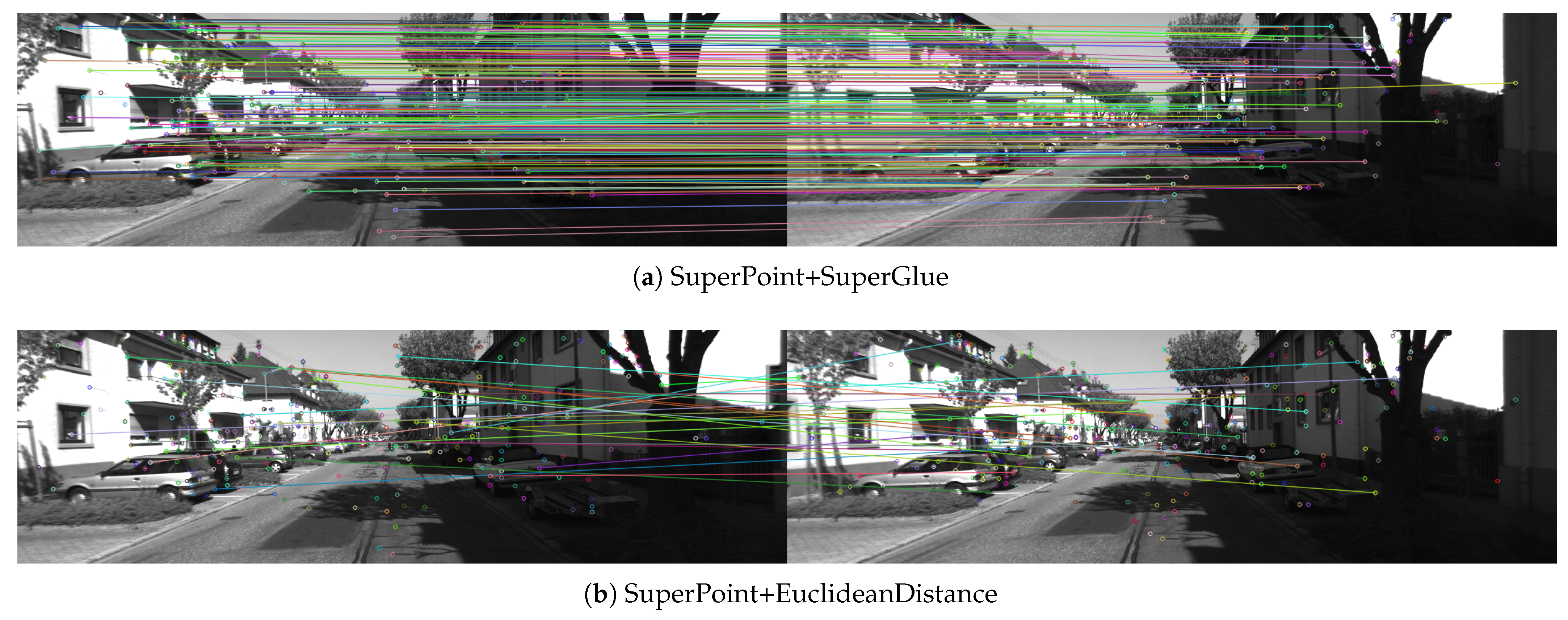

3.1. Feature Extraction and Matching

3.2. SE(3)-Based Pose Fusion Estimation

3.3. Backend Optimization

3.4. Loop Closure Correction
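
The contributions list a deep LoopClosing module that combines Seq-CALC [28] with SG-Matcher. As a rough illustration of how a place-recognition descriptor and a feature matcher can be chained, the sketch below retrieves a candidate keyframe by global-descriptor similarity and then verifies it by counting local feature matches. This is not the authors' implementation: the function names, the thresholds (`min_gap`, `sim_thresh`, `min_matches`), the cosine-similarity retrieval, and the mutual nearest-neighbour matcher are all assumptions chosen for illustration, standing in for the learned networks.

```python
import numpy as np


def cosine_similarity(query, database):
    """Cosine similarity between one vector and every row of a matrix."""
    q = query / np.linalg.norm(query)
    db = database / np.linalg.norm(database, axis=1, keepdims=True)
    return db @ q


def mutual_nn_matches(desc_a, desc_b):
    """Count mutual nearest-neighbour pairs between two local descriptor sets.

    Hypothetical stand-in for a learned matcher; it is NOT SG-Matcher.
    """
    a = desc_a / np.linalg.norm(desc_a, axis=1, keepdims=True)
    b = desc_b / np.linalg.norm(desc_b, axis=1, keepdims=True)
    sim = a @ b.T
    a_to_b = sim.argmax(axis=1)  # best match in b for each row of a
    b_to_a = sim.argmax(axis=0)  # best match in a for each row of b
    return int(np.sum(b_to_a[a_to_b] == np.arange(len(a))))


def detect_loop(query_global, query_local, keyframes,
                min_gap=50, sim_thresh=0.8, min_matches=30):
    """Return the index of a verified loop keyframe, or None.

    keyframes: list of (global_descriptor, local_descriptors), oldest first.
    All thresholds are illustrative assumptions, not values from the paper.
    """
    # Skip the most recent keyframes so neighbours are not reported as loops.
    n_candidates = len(keyframes) - min_gap
    if n_candidates <= 0:
        return None

    # Stage 1: retrieve the most similar past keyframe by global descriptor.
    database = np.stack([kf[0] for kf in keyframes[:n_candidates]])
    sims = cosine_similarity(query_global, database)
    best = int(sims.argmax())
    if sims[best] < sim_thresh:
        return None

    # Stage 2: verify the candidate with local feature matching.
    if mutual_nn_matches(query_local, keyframes[best][1]) < min_matches:
        return None
    return best
```

Splitting detection into a cheap retrieval stage and a more expensive verification stage keeps the per-frame cost low, because the matcher runs on at most one candidate per query.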

4. Experiments

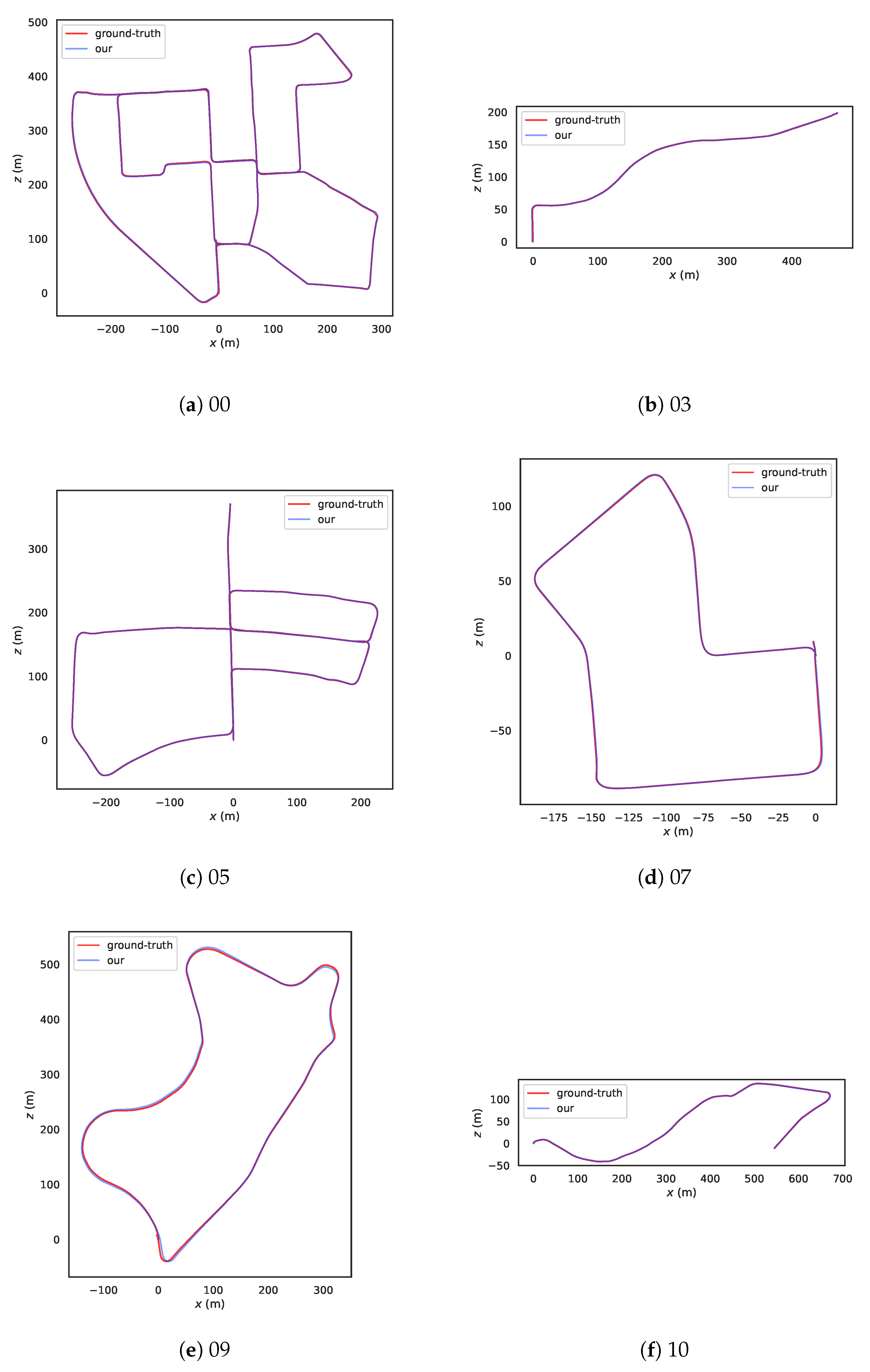

4.1. Evaluation Experiment

4.2. Ablation Experiment

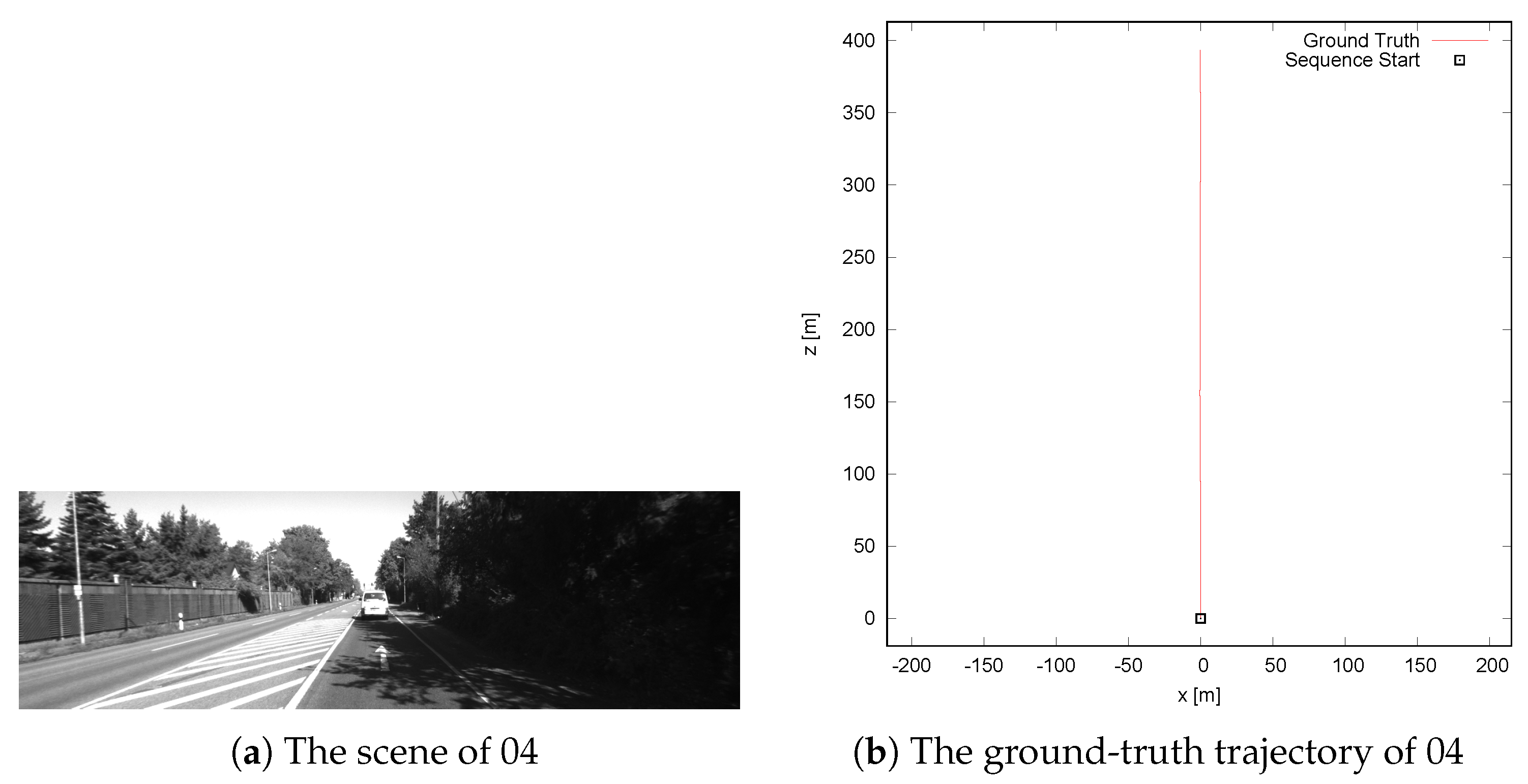

4.3. Validation Experiment

4.4. Runtime Experiment

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Klein, G.; Murray, D. Parallel tracking and mapping for small AR workspaces. In Proceedings of the 2007 6th IEEE and ACM International Symposium on Mixed and Augmented Reality, IEEE, Nara, Japan, 13–16 November 2007; pp. 225–234.

2. Dong, X.; Cheng, L.; Peng, H.; Li, T. FSD-SLAM: A fast semi-direct SLAM algorithm. Complex Intell. Syst. 2022, 8, 1823–1834.

3. Wei, S.; Wang, S.; Li, H.; Liu, G.; Yang, T.; Liu, C. A Semantic Information-Based Optimized vSLAM in Indoor Dynamic Environments. Appl. Sci. 2023, 13, 8790.

4. Wu, Z.; Li, D.; Li, C.; Chen, Y.; Li, S. Feature Point Tracking Method for Visual SLAM Based on Multi-Condition Constraints in Light Changing Environment. Appl. Sci. 2023, 13, 7027.

5. Ni, J.; Wang, L.; Wang, X.; Tang, G. An Improved Visual SLAM Based on Map Point Reliability under Dynamic Environments. Appl. Sci. 2023, 13, 2712.

6. Gao, X.; Wang, R.; Demmel, N.; Cremers, D. LDSO: Direct sparse odometry with loop closure. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), IEEE, Madrid, Spain, 1–5 October 2018; pp. 2198–2204.

7. Bavle, H.; De La Puente, P.; How, J.P.; Campoy, P. VPS-SLAM: Visual planar semantic SLAM for aerial robotic systems. IEEE Access 2020, 8, 60704–60718.

8. Gomez-Ojeda, R.; Moreno, F.A.; Zuniga-Noël, D.; Scaramuzza, D.; Gonzalez-Jimenez, J. PL-SLAM: A stereo SLAM system through the combination of points and line segments. IEEE Trans. Robot. 2019, 35, 734–746.

9. Mur-Artal, R.; Montiel, J.M.M.; Tardós, J.D. ORB-SLAM: A versatile and accurate monocular SLAM system. IEEE Trans. Robot. 2015, 31, 1147–1163.

10. Mur-Artal, R.; Tardós, J.D. ORB-SLAM2: An open-source SLAM system for monocular, stereo, and RGB-D cameras. IEEE Trans. Robot. 2017, 33, 1255–1262.

11. Engel, J.; Stückler, J.; Cremers, D. Large-scale direct SLAM with stereo cameras. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), IEEE, Hamburg, Germany, 28 September–3 October 2015; pp. 1935–1942.

12. Mo, J.; Islam, M.J.; Sattar, J. Fast direct stereo visual SLAM. IEEE Robot. Autom. Lett. 2021, 7, 778–785.

13. Pire, T.; Fischer, T.; Castro, G.; De Cristóforis, P.; Civera, J.; Berlles, J.J. S-PTAM: Stereo parallel tracking and mapping. Robot. Auton. Syst. 2017, 93, 27–42.

14. Mo, J.; Sattar, J. Extending monocular visual odometry to stereo camera systems by scale optimization. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), IEEE, The Venetian Macao, Macau, 3–8 November 2019; pp. 6921–6927.

15. Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, IEEE, Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

16. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

17. Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

18. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28.

19. Zhang, D.; Zhang, H.; Tang, J.; Wang, M.; Hua, X.; Sun, Q. Feature pyramid transformer. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 323–339.

20. Wang, X.; Girshick, R.; Gupta, A.; He, K. Non-local neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7794–7803.

21. Tang, J.; Ericson, L.; Folkesson, J.; Jensfelt, P. GCNv2: Efficient correspondence prediction for real-time SLAM. IEEE Robot. Autom. Lett. 2019, 4, 3505–3512.

22. Tang, J.; Folkesson, J.; Jensfelt, P. Geometric correspondence network for camera motion estimation. IEEE Robot. Autom. Lett. 2018, 3, 1010–1017.

23. Li, D.; Shi, X.; Long, Q.; Liu, S.; Yang, W.; Wang, F.; Wei, Q.; Qiao, F. DXSLAM: A robust and efficient visual SLAM system with deep features. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), IEEE, Las Vegas, NV, USA, 25–29 October 2020; pp. 4958–4965.

24. Sarlin, P.E.; Cadena, C.; Siegwart, R.; Dymczyk, M. From coarse to fine: Robust hierarchical localization at large scale. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 12716–12725.

25. Deng, C.; Qiu, K.; Xiong, R.; Zhou, C. Comparative study of deep learning based features in SLAM. In Proceedings of the 2019 4th Asia-Pacific Conference on Intelligent Robot Systems (ACIRS), IEEE, Nagoya, Japan, 13–15 July 2019; pp. 250–254.

26. DeTone, D.; Malisiewicz, T.; Rabinovich, A. SuperPoint: Self-supervised interest point detection and description. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 224–236.

27. Sarlin, P.E.; DeTone, D.; Malisiewicz, T.; Rabinovich, A. SuperGlue: Learning feature matching with graph neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 4938–4947.

28. Xiong, F.; Ding, Y.; Yu, M.; Zhao, W.; Zheng, N.; Ren, P. A lightweight sequence-based unsupervised loop closure detection. In Proceedings of the 2021 International Joint Conference on Neural Networks (IJCNN), IEEE, Shenzhen, China, 18–22 July 2021; pp. 1–8.

29. Han, X.; Leung, T.; Jia, Y.; Sukthankar, R.; Berg, A.C. MatchNet: Unifying feature and metric learning for patch-based matching. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3279–3286.

30. Sun, J.; Shen, Z.; Wang, Y.; Bao, H.; Zhou, X. LoFTR: Detector-free local feature matching with transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 8922–8931.

31. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30.

32. Joshi, C. Transformers are graph neural networks. Gradient 2020, 7, 5.

33. Angeli, A.; Filliat, D.; Doncieux, S.; Meyer, J.A. Fast and incremental method for loop-closure detection using bags of visual words. IEEE Trans. Robot. 2008, 24, 1027–1037.

34. Gálvez-López, D.; Tardós, J.D. Bags of binary words for fast place recognition in image sequences. IEEE Trans. Robot. 2012, 28, 1188–1197.

35. Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

36. Bay, H.; Ess, A.; Tuytelaars, T.; Van Gool, L. Speeded-up robust features (SURF). Comput. Vis. Image Underst. 2008, 110, 346–359.

37. Merrill, N.; Huang, G. Lightweight unsupervised deep loop closure. arXiv 2018, arXiv:1805.07703.

38. Stillwell, J. Naive Lie Theory; Springer: New York, NY, USA, 2008.

39. Moré, J.J. The Levenberg-Marquardt algorithm: Implementation and theory. In Proceedings of the Numerical Analysis, Dundee, UK, 28 June–1 July 1977; Watson, G.A., Ed.; Springer: Berlin/Heidelberg, Germany, 1978; pp. 105–116.

40. Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for Autonomous Driving? The KITTI Vision Benchmark Suite. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, IEEE, Providence, RI, USA, 16–21 June 2012; pp. 3354–3361.

41. Sturm, J.; Engelhard, N.; Endres, F.; Burgard, W.; Cremers, D. A benchmark for the evaluation of RGB-D SLAM systems. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, IEEE, Vilamoura, Algarve, Portugal, 7–12 October 2012; pp. 573–580.

| Sequence | Ours | ORB-SLAM2 | LSD-SLAM | DSV-SLAM |
|---|---|---|---|---|
| 00 | 1.0 | 1.3 | 1.0 | 2.6 |
| 03 | 0.5 | 0.6 | 1.2 | 2.5 |
| 04 | 0.2 | 0.2 | 0.2 | 1.1 |
| 05 | 0.6 | 0.8 | 1.5 | 3.8 |
| 06 | 0.7 | 0.8 | 1.3 | 0.8 |
| 07 | 0.4 | 0.5 | 0.5 | 4.4 |
| 09 | 3.1 | 3.2 | 5.6 | 5.3 |
| 10 | 0.8 | 1.0 | 1.5 | 1.8 |
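
To make the comparison in the table above easier to read at a glance, the following sketch computes the mean value per system and the per-sequence gap between ORB-SLAM2 and the proposed system. The numbers are copied from the table; treating them as errors in a common unit where lower is better is an assumption, since the table itself does not state the metric, and the dictionary keys are illustrative labels.

```python
# Values copied from the comparison table (one entry per KITTI sequence).
sequences = ["00", "03", "04", "05", "06", "07", "09", "10"]
errors = {
    "Proposed":  [1.0, 0.5, 0.2, 0.6, 0.7, 0.4, 3.1, 0.8],
    "ORB-SLAM2": [1.3, 0.6, 0.2, 0.8, 0.8, 0.5, 3.2, 1.0],
    "LSD-SLAM":  [1.0, 1.2, 0.2, 1.5, 1.3, 0.5, 5.6, 1.5],
    "DSV-SLAM":  [2.6, 2.5, 1.1, 3.8, 0.8, 4.4, 5.3, 1.8],
}


def mean(values):
    """Arithmetic mean of a non-empty list."""
    return sum(values) / len(values)


# Unweighted mean over the eight sequences for each system.
mean_error = {name: mean(vals) for name, vals in errors.items()}

# Per-sequence gap to ORB-SLAM2 (positive means the proposed value is lower).
gap_vs_orb = {
    seq: round(orb - ours, 1)
    for seq, ours, orb in zip(sequences, errors["Proposed"], errors["ORB-SLAM2"])
}

for name, value in mean_error.items():
    print(f"{name:10s} mean: {value:.2f}")
print("Gap to ORB-SLAM2 per sequence:", gap_vs_orb)
```

The mean is unweighted, so short sequences such as 04 count as much as long ones such as 00.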

| Sequence | Fuse Last and Reference | Reference |
|---|---|---|
| 00 | 1.0 | 1.2 |
| 03 | 0.5 | 1.5 |
| 04 | 0.2 | 0.2 |
| 05 | 0.6 | 0.9 |
| 06 | 0.7 | 0.8 |
| 07 | 0.4 | 0.5 |
| 09 | 3.1 | 3.3 |
| 10 | 0.8 | 1.3 |

| Sequence | Ours | SuperPoint-SLAM |
|---|---|---|
| 00 | 1.0 | X |
| 03 | 0.5 | 1.0 |
| 04 | 0.2 | 0.4 |
| 05 | 0.6 | 3.7 |
| 06 | 0.7 | 14.3 |
| 07 | 0.4 | 3.0 |
| 09 | 3.1 | X |
| 10 | 0.8 | 5.3 |

| Sequence | Number of Frames | Time per Frame (ms) |
|---|---|---|
| 00 | 4541 | 81.8 |
| 03 | 801 | 57.6 |
| 04 | 271 | 81.5 |
| 05 | 2761 | 72.1 |
| 06 | 1101 | 60.4 |
| 07 | 1101 | 70.8 |
| 09 | 1591 | 62.7 |
| 10 | 1201 | 59.8 |
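
The runtime table above can be condensed into a single figure to set against the per-frame time of about 0.08 s quoted in the contributions. The sketch below computes a frame-weighted average of the per-frame times and the corresponding frame rate, using the values from the table. The variable names are illustrative, and weighting by frame count is one reasonable choice rather than the aggregation the authors necessarily used.

```python
# Values copied from the runtime table: frames per sequence and ms per frame.
frames = {"00": 4541, "03": 801, "04": 271, "05": 2761,
          "06": 1101, "07": 1101, "09": 1591, "10": 1201}
ms_per_frame = {"00": 81.8, "03": 57.6, "04": 81.5, "05": 72.1,
                "06": 60.4, "07": 70.8, "09": 62.7, "10": 59.8}

total_frames = sum(frames.values())

# Frame-weighted mean: long sequences contribute in proportion to their length.
weighted_ms = sum(frames[s] * ms_per_frame[s] for s in frames) / total_frames

# Simple mean over sequences, for comparison.
simple_ms = sum(ms_per_frame.values()) / len(ms_per_frame)

# Frames per second implied by the weighted per-frame time.
fps = 1000.0 / weighted_ms

print(f"total frames:      {total_frames}")
print(f"weighted mean:     {weighted_ms:.1f} ms/frame")
print(f"unweighted mean:   {simple_ms:.1f} ms/frame")
print(f"implied frame rate: {fps:.1f} fps")
```

Every per-sequence time in the table is below 82 ms, so both averages fall within the rounded 0.08 s figure.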

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhu, B.; Yu, A.; Hou, B.; Li, G.; Zhang, Y. A Novel Visual SLAM Based on Multiple Deep Neural Networks. Appl. Sci. 2023, 13, 9630. https://doi.org/10.3390/app13179630
