Abstract

Personal semantic memory is a way of inducing subjectivity in intelligent agents. In humans, personal semantic memory holds knowledge related to personal beliefs, self-knowledge, preferences, and perspectives. Modeling this cognitive feature in an intelligent agent can help it in perception, learning, reasoning, and judgment. This paper presents a methodology for the development of personal semantic memory in response to external information. The main contribution of the work is to propose and implement a computational version of personal semantic memory. The proposed model has modules for perception, learning, sentiment analysis, knowledge representation, and personal semantic construction. These modules work in synergy for personal semantic knowledge formulation, learning, and storage. Personal semantics are added to the existing body of knowledge qualitatively and quantitatively. We performed multiple experiments in which the agent had conversations with humans. Results show an increase in personal semantic knowledge in the agent’s memory during conversations, with an F1 score of 0.86. These personal semantics evolved qualitatively and quantitatively over time during the experiments. The results demonstrate that agents with the given architecture possess personal semantics that can help them exhibit a degree of subjectivity in the future.

1. Introduction

Subjectivity is defined as the quality by which humans act, think, and react in their own way in a particular situation [1,2,3,4]. It varies with changes in personal feelings, emotions, and thoughts, and it changes as knowledge and experience evolve [4]. This knowledge is related to perception, feelings, views, and judgments. For example, an agent “X” thinks positively about an agent “Y”, and this positive thinking is based on the experience of agent “X” with agent “Y”. A third agent “Z” does not necessarily think about agent “Y” in the same way, because the thinking of agent “Z” is based on its own experience with agent “Y”. The thinking of agent “X” can differ from that of agent “Z” qualitatively and quantitatively. An individual’s emotion perception is associated with personality traits [5]. Personality traits are defined as patterns of feelings, behaviors, and thoughts. They change over the life span as thoughts, feelings, and emotions evolve [6]. An agent with personal semantics can possess views, perspectives, and preferences based on experiences.

Intelligent agents need cognitive features to improve their behavior [4,7]. Personal semantics provide a way that may help an agent possess subjectivity in its beliefs, views, perspectives, and preferences [8]. A model of personal semantic memory is proposed and implemented in this work. Personal semantic memory is the type of long-term memory responsible for personal semantic knowledge acquisition; it evolves with time based on knowledge from other cognitive memories, i.e., episodic memory and semantic memory [9]. It acquires self-knowledge, personal perspectives, repeated events, personal beliefs, and preferences [8,10,11]. Cognitive psychology and neuroscience explain the nature of personal semantic memory and distinguish it from the other long-term declarative cognitive memories (semantic memory and episodic memory) [9,10,12]. A detailed description of the different types of cognitive memories is given in Table 1 [13,14].

Table 1.

Type of cognitive memories from the literature.

Philosophical aspects of personal semantic memory are different from the state of the art memories [15,16] both in terms of its classification and neural correlates [17]. Different types of personal semantic knowledge are illustrated in Table 2.

Table 2.

Type of personal semantic knowledge.

The development of agents has already advanced to the point where all the required memories are implemented. These include semantic memory and episodic memory, which can play a vital role in perception, reasoning, and learning for the formulation of personal semantics.

The proposed personal semantic memory model used semantic knowledge from the semantic memory and event knowledge from the episodic memory for perception, learning, and the computation of cognitive values. Working memory acts as the main control unit, where the content space is accumulated from perception, semantic memory, and episodic memory. The content space plays a role in inferring personal semantics. Personal semantics are formulated and explicitly represented in the form of knowledge graphs, where nodes are predicates and links indicate versions. A chunk of personal semantics in the personal semantic memory consists of the content and the cognitive values. The proposed cognitive values indicate the robustness of the chunk in the personal semantic memory. Personal semantic memory evolves with time in the form of amendments and cognitive values, which is why its design requirements differ from those of the existing state-of-the-art memories. A change may occur by adding a new amendment as a knowledge node or by increasing the cognitive values of an existing one. The proposed model was integrated with a conversational agent to perform an experiment in which the agent had conversations with human participants. As a result of these conversations, the agent possessed a subjective point of view about the person. On arrival of a query, the chunk of personal semantic memory with the highest mass value was retrieved from the agent’s memory as the response. In the future, an increase in resources may speed up the whole process of memory formulation. This work is an effort to define and implement the procedures that run in agent memory to construct personal semantics. Inducing subjectivity in an intelligent agent is a challenging task in terms of the resources available for knowledge, learning, and computation. The basic building blocks required for personal semantic memory are now well-explained structures, but improvement is still required in terms of efficiency.

2. Related Work

There is a need to implement modules that provide a way of inducing subjectivity in intelligent agents to improve their behavior [7]. This work intends to endow intelligent agents with subjectivity through the implementation of personal semantic memory. This behavior is required for intelligent agents to possess subjective states.

The philosophical term subjectivity denotes the quality of being a subject and having one’s own versions of perspectives, beliefs, and feelings, which can only be true with respect to the subject. These perspectives, beliefs, and feelings can relate to the self and to the environment. Subjectivity changes with experience. Earlier work gave theoretical descriptions of artificial subjectivity and highlighted its necessity. According to Kiverstein, it improves an agent by giving it an inner mental life [18]. Evans highlighted the importance of subjectivity in robots in terms of emotions, passions, drives, and inclinations [19].

Renoult et al. [8,10,15,16] revealed personal semantic memory through Functional Magnetic Resonance Imaging (fMRI) experiments on the human brain and gave a theoretical description of its nature and types. In cognitive psychology, many theories exist about the nature of personal semantic memory. Grilli separated the neural correlates of personal semantic memory from those of other memories by experimenting on dementia patients. Renoult et al. subcategorized personal semantic memory into self-knowledge, autobiographical facts, and repeated events. This work focuses on building a subjective point of view as a result of the agent’s conversations with humans, which is a core of subjectivity. Endowing agents with subjectivity requires integrating certain artifacts into the agent architecture, a requirement that can be fulfilled by implementing personal semantic memory.

In the past, computational versions of many cognitive memories were designed, developed, and integrated. Each type of cognitive memory (sensory memory, perceptual memory, semantic memory, episodic memory, and procedural memory) is involved in the type of knowledge acquisition indicated by its name. The designs of these memories fulfilled their philosophical requirements. These memories play a key role in perception, planning, learning, and reasoning. There are schemas for knowledge encoding, decoding, and storage. The encoded knowledge is later used for perception, learning, and other cognitive processes, improving the overall behavior of an agent.

The structure of the state-of-the-art cognitive memories implemented in different cognitive architectures is illustrated in Table 3.

Table 3.

State-of-the-art cognitive architectures with memory comparison: Working Memory (WM), Semantic Memory (SM), Episodic Memory (EM), Procedural Memory (PROM).

Taking inspiration from the existing state of the art and considering the benefits of a computational version of personal semantic memory, this research proposes a methodology for the implementation of personal semantic memory that addresses the challenge of subjectivity [8]. The main contribution of this research is to implement personal semantic memory for cognitive agents. The model proposes and computes the quantitative measures described in the methodology section to represent the content of personal semantic memory, making the required modifications to existing knowledge representation schemes. Personal semantics provide a unique way to represent autobiographical knowledge and its variations in terms of personal beliefs, perspectives, opinions, and preferences, which are based on and influence subjectivity. Therefore, it is expected that inducing personal semantic memory, along with episodic and semantic memories, will be a small but vital step on the roadmap towards subjectivity.

3. Methodology

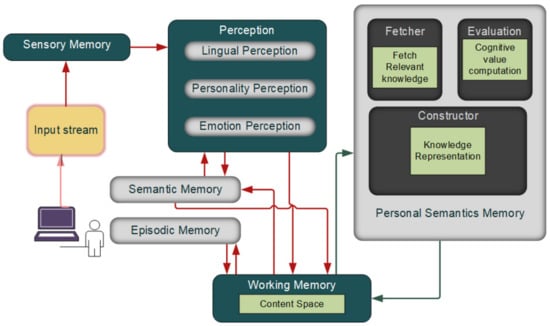

The proposed personal semantic memory model is shown in Figure 1. The model consisted of several modules, namely sensory memory, perception, working memory, semantic memory, and episodic memory, which worked in synergy to construct personal semantics. Personal semantic memory was formulated in two phases. In the first phase, perceptual semantics were formulated, and in the second phase, personal semantics were formulated. The perceptual semantic phase included sensory memory, perception, semantic memory, and episodic memory. The system architecture is explained in Section 3.1, and its implementation is given in Section 3.2. The nature of each component of the personal semantic model is the same as given in Table 1.

Figure 1.

System overview.

3.1. Proposed Architecture

Sensory memory is the short-term memory of the agent that received external information from sensors. This information came as input to the system in textual form and consisted of a single sentence or multiple sentences. A sensory memory function received the external information and tokenized it into sentences and words, which were represented as low-level percepts, as defined in Equation (1). The function then invoked the perception unit, passing the low-level percepts to it for further processing.

The perception unit received the low-level percepts and performed perception in three fragments: lingual perception, personality perception, and emotion perception. It held perception information about the agent, object, and recipient. A single perception function was responsible for all three fragments. The output of this function was represented as high-level percepts, as defined in Equation (2). A high-level percept consisted of knowledge about the agent, action, recipient, personality, emotion, and sentiments, and was represented using a language data frame in the form of name-value pairs. The action from the lingual perception acted as a cue in working memory for fetching the relevant knowledge from the semantic memory.

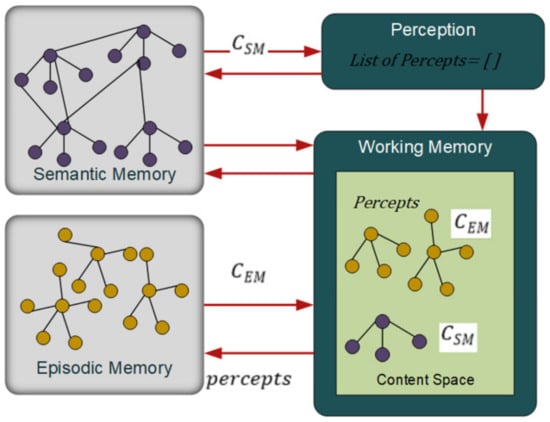

Working memory was the executive control unit in which the current knowledge temporarily resided; this knowledge is referred to as the content space. A working memory function fetched the relevant knowledge from the semantic memory and the episodic memory based on the cue from the lingual perception. The chunks it retrieved from semantic memory are denoted in Equation (4), and the chunks from episodic memory are denoted in Equation (5). At this stage, a chunk of content space consisted of the percepts together with the retrieved semantic and episodic chunks, as shown in Equation (6) and Figure 2. This content space was used by the second phase for personal semantic formulation.

Figure 2.

Content Space in working memory.

Semantic memory is the long-term memory that holds general knowledge and plays a key role in the perceptual semantic phase [10,31]. Here, semantic knowledge helped in analyzing the positivity or negativity of a performed action. For example, if the agent receives the external information “John helps Mark”, how can the agent evaluate that John has performed a positive action? Semantic knowledge about the action “help” was required to evaluate John for his action and to build personal semantics such as “John is a good person”, “I like John”, and “I always find John doing good”. Semantic memory can be constructed using conceptual graphs, semantic networks, etc. In this work, semantic memory was constructed using knowledge graphs, considering their benefits for easy extraction of complex knowledge [32].

Episodic memory is the long-term memory that accumulated event knowledge in the form of episodes. It stored seven aspects of each event as an episode. Three standard aspects were taken from the emotion framework [33], namely Agent, AgentAction, and Time, and four aspects were added: Sentiment, AgentEmotion, RecepientEmotion, and Personality. An episode therefore means that, at a certain time, an event took place in which Agent_i performed Action_i with Sentiment_i and Emotion_i, showing Personality_i. These aspects contributed to the computation of cognitive values as shown in Equation (6).
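As a minimal sketch, the seven aspects of an episode can be held in a small record. The field names follow the aspect names listed above; the `Episode` class itself and the example values are illustrative assumptions and are not taken from the implementation described in this paper, which stores episodes as Neo4j nodes.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Episode:
    """Hypothetical container for the seven aspects of one episode."""

    agent: str
    agent_action: str
    time: datetime
    sentiment: str
    agent_emotion: str
    recepient_emotion: str  # spelling follows the RecepientEmotion aspect name
    personality: str


# Example values are invented purely for illustration.
episode = Episode(
    agent="John",
    agent_action="help",
    time=datetime(2021, 9, 29, 10, 0),
    sentiment="positive",
    agent_emotion="happy",
    recepient_emotion="grateful",
    personality="ESFJ",
)
```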

The personal semantics formulation phase consisted of the evaluation module and the personal semantic construction module, as shown in Figure 1. In this phase, the content and cognitive values of personal semantic memory were formulated. Each chunk of personal semantic memory has eight aspects: Agent_i, Personality_i, Sentiment_i, AttentionObjective_i, AttentionSubjective_i, Mass_i, Confidence_i, and Support_i. This means that Agent_i has Personality_i and Sentiment_i with the cognitive values AttentionObjective_i, AttentionSubjective_i, Mass_i, Confidence_i, and Support_i. Knowledge graphs were used to represent the personal semantics, where the predicate was represented as a node and the proposed cognitive values were represented as its attributes. These values were initially tried out using the Wizard of Oz method [34]. The Mass value was proposed to capture the strength of a chunk of personal semantics, indicating its resistance to change. Details of the cognitive values are given under the evaluation module. Each chunk of personal semantics was either added as a new amendment using AmendmentLink or used to update the cognitive values of an existing chunk. Cognitive values were computed in the evaluation module, whereas the constructor module handled the representation of the chunk in personal semantic memory.
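The add-or-update behavior described above can be sketched with an in-memory list standing in for the knowledge graph. The `Chunk` class, the `add_or_update` helper, and the rule that two chunks count as the same when agent, personality, and sentiment match are assumptions made for illustration; the paper does not state the matching rule, and the actual model stores chunks in Neo4j.

```python
from dataclasses import dataclass, replace
from typing import List


@dataclass(frozen=True)
class Chunk:
    """Hypothetical record with the eight aspects of a personal semantic chunk."""

    agent: str
    personality: str
    sentiment: str
    attention_objective: int
    attention_subjective: float
    mass: int
    confidence: float
    support: float


def add_or_update(memory: List[Chunk], new: Chunk) -> List[Chunk]:
    """Return a new memory list with `new` merged in.

    Assumption: chunks with the same agent, personality, and sentiment carry
    the same content, so only their cognitive values are refreshed.
    """
    for i, old in enumerate(memory):
        if (old.agent, old.personality, old.sentiment) == (
            new.agent,
            new.personality,
            new.sentiment,
        ):
            return memory[:i] + [new] + memory[i + 1:]
    # Different content is kept as a new amendment next to the existing chunks.
    return memory + [new]


first = Chunk("John", "ESFJ", "positive", 0, 0.5, 1, 1.0, 0.5)
memory = add_or_update([], first)
memory = add_or_update(memory, replace(first, mass=3))  # update in place
memory = add_or_update(memory, replace(first, sentiment="negative"))  # amendment
```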

In the evaluation module, an evaluator function evaluated the knowledge in the content space and calculated the proposed cognitive values of a specific chunk of personal semantic memory, as shown in Equation (7), using Equations (9)–(12).

The cognitive values that were used in this work are the Mass, Attention Objective, Attention Subjective, Confidence, and Support.

Mass was the weight of each personal semantic knowledge node and indicated how many supporting episodes exist in the episodic memory of the agent. Suppose the agent had a number of supporting episodes for a given chunk of personal semantic knowledge. Mass was calculated by adding the number of supporting episodes and the direct association. The direct association was 1 if the agent had direct interaction with the individual; otherwise, it was 0. Mass increased as the number of supporting episodes increased, as expressed in Equation (8).
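The Mass computation described above reduces to a sum of two terms. The following is a minimal sketch; the function and parameter names are hypothetical and only the arithmetic (supporting episodes plus a 0/1 direct association) comes from the text.

```python
def mass(supported_episodes: int, direct_interaction: bool) -> int:
    """Mass = number of supporting episodes + direct association (1 or 0)."""
    if supported_episodes < 0:
        raise ValueError("supported_episodes must be non-negative")
    direct_association = 1 if direct_interaction else 0
    return supported_episodes + direct_association
```

For instance, four supporting episodes with direct interaction give a mass of 5, while the same episodes without direct interaction give 4.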

The Attention Objective of a chunk of personal semantics was the repetition of the same event, calculated using Equation (9). If the same event occurred repeatedly, the Attention Objective increased. A function checked the experience periodically and returned the number of consecutive occurrences. Every repeated occurrence incremented the Attention Objective by 1.
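One way to read the description above is as a count over the most recent run of identical events. The sketch below assumes that the first occurrence contributes 0 and that each consecutive repeat adds 1; the starting value and the choice of the trailing run are assumptions, since the text does not fix them, and the function name is hypothetical.

```python
from typing import Hashable, Sequence


def attention_objective(events: Sequence[Hashable]) -> int:
    """Count consecutive repeats of the most recent event.

    Assumption: the first occurrence counts as 0 and every further
    consecutive occurrence of the same event adds 1.
    """
    if not events:
        return 0
    latest = events[-1]
    repeats = 0
    # Walk backwards from the second-to-last event until the run breaks.
    for event in reversed(events[:-1]):
        if event != latest:
            break
        repeats += 1
    return repeats
```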

The Attention Subjective of a chunk of personal semantics was the similarity between interests. Suppose the agent had one series of interests and the other interacting agent had another. The Attention Subjective was computed as the similarity between the interests of the two agents using Equation (10).
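The similarity measure behind the Attention Subjective is not recoverable from the text here. As a sketch only, the example below assumes a Jaccard overlap between the two sets of interests, chosen because the Jaccard index is the similarity used elsewhere in this work; the function name and the case-insensitive matching are likewise assumptions.

```python
from typing import Iterable


def attention_subjective(
    own_interests: Iterable[str], other_interests: Iterable[str]
) -> float:
    """Jaccard overlap of two interest sets (assumed similarity measure)."""
    own = {interest.strip().lower() for interest in own_interests}
    other = {interest.strip().lower() for interest in other_interests}
    union = own | other
    if not union:
        return 0.0  # no interests on either side, so nothing is shared
    return len(own & other) / len(union)
```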

The Confidence of a chunk of personal semantics indicated how often the agent correctly thought in the same way. It was the number of similar events divided by the total number of events. Given the events with a specific personality and, among them, the events with the same personality and the same sentiments, the confidence was calculated as shown in Equation (11).

The Support of a chunk of personal semantics indicated how often the agent thought in the same way. It was the number of similar events divided by the total number of experiences, as given in Equation (12).
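Confidence and Support, as described above, are both ratios over the agent's experiences. The sketch below assumes each experience is reduced to a (personality, sentiment) pair; under that assumption, Confidence divides the matching experiences by those with the same personality, and Support divides them by all experiences. The function name and the data layout are hypothetical.

```python
from typing import Sequence, Tuple

Observation = Tuple[str, str]  # (personality, sentiment), an assumed layout


def confidence_and_support(
    observations: Sequence[Observation], personality: str, sentiment: str
) -> Tuple[float, float]:
    """Return (confidence, support) for one personality/sentiment pairing."""
    total = len(observations)
    with_personality = sum(1 for p, _ in observations if p == personality)
    matching = sum(
        1 for p, s in observations if p == personality and s == sentiment
    )
    # Guard against empty denominators instead of raising ZeroDivisionError.
    confidence = matching / with_personality if with_personality else 0.0
    support = matching / total if total else 0.0
    return confidence, support


observations = [
    ("INTJ", "positive"),
    ("INTJ", "positive"),
    ("INTJ", "negative"),
    ("ESFJ", "positive"),
]
confidence, support = confidence_and_support(observations, "INTJ", "positive")
```

With these invented observations, two of the three INTJ experiences are positive, and two of the four experiences overall match the pairing.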

The personal semantic memory constructor module represented the personal semantics in the personal semantic memory. A chunk of personal semantics was either added as an amendment to the existing personal semantic memory or used to update the cognitive values of an existing chunk. The constructor function in Equation (13) represented the chunk in Neo4j as a knowledge graph for long-term association. PSM denotes the chunk of the knowledge graph represented in Neo4j.

The following section explains the implementation of the modules of personal semantic memory. These consisted of sensory memory, perception (emotion perception, personality perception, and lingual perception), working memory (semantic and episodic similarities), semantic memory (action knowledge), episodic memory, and all the processes involved in the construction of personal semantic memory (self-knowledge and person knowledge).

3.2. Implementation of Proposed Architecture

Sensory memory received the input text. The NLTK (Natural Language Toolkit) sentence and word tokenizers split the input stream into tokens T = {T1, …, Tn} and passed these tokens to the perception unit for lingual perception, personality perception, and emotion perception.

Lingual perception processed the tokens using Natural Language Processing (NLP) techniques: part-of-speech tagging, named entity tagging, and basic dependency tagging. The NLTK Part of Speech (POS) tagger tagged the input stream as noun N = {NN, NNS, NNP, NNPS}, adjective Adj = {JJ, JJR, JJS}, and verb V = {VB, VBD, VBP, VBN, VBG, VBZ}. The NLTK named entity tagger was used to identify names and places. The spaCy dependency tagger was used for Agent, Action, and Recipient identification; it tagged tokens as Agent = {nsubj} and Recipient R = {dobj}.

Personality perception perceived the personality from the input using the 16-type MBTI personality model. The dataset for personality perception was downloaded from Kaggle and consists of 8675 samples. It was preprocessed to remove URLs and words that could leak the label to the classifier, i.e., type names such as INTJ and ESFJ. A linear SVC was used for training and reached 86% accuracy. The output of the perception module is one of the 16 MBTI personality types [35].

Emotion perception extracted the emotion from semantic memory for the given action. The tokens T were matched against the event nodes in semantic memory. The node with the highest similarity value was retrieved from the semantic memory together with all its relationships. Its AgentEmotionLink was then evaluated to extract the emotion value. The similarity was estimated using the Jaccard similarity index: the words of the node content were compared with the words of the tokens using Equation (14).

The highest value indicates the maximum similarity.
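The node matching described above can be sketched with the standard Jaccard index, the size of the intersection of two word sets divided by the size of their union. In the example below, a plain dictionary stands in for the event nodes of semantic memory; the function names, the whitespace split, and the lowercasing are assumptions for illustration and do not reflect how the Neo4j graph is actually queried.

```python
from typing import Dict, Iterable, Optional, Tuple


def jaccard(a: Iterable[str], b: Iterable[str]) -> float:
    """Jaccard index |A ∩ B| / |A ∪ B| over lowercased word sets."""
    set_a = {word.lower() for word in a}
    set_b = {word.lower() for word in b}
    union = set_a | set_b
    return len(set_a & set_b) / len(union) if union else 0.0


def best_matching_node(
    tokens: Iterable[str], nodes: Dict[str, str]
) -> Optional[Tuple[str, float]]:
    """Return (node_id, score) of the most similar node, or None if empty."""
    token_list = list(tokens)
    best: Optional[Tuple[str, float]] = None
    for node_id, content in nodes.items():
        score = jaccard(token_list, content.split())
        if best is None or score > best[1]:
            best = (node_id, score)
    return best


# Hypothetical stand-in for event nodes in semantic memory.
nodes = {"e1": "PersonX buys a new car", "e2": "PersonX helps PersonY"}
match = best_matching_node(["PersonX", "buys", "a", "car"], nodes)
```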

Working memory was the executive control unit that fetched the relevant knowledge from semantic and episodic memory, as shown in Figure 1. The action from the lingual perception acted as the cue. In semantic memory and episodic memory, knowledge was represented in the form of nodes and relationships. The working memory function compared the cue with the nodes in semantic memory and returned the node with the highest similarity score. The Jaccard similarity score from Equation (14) was used to select an event node together with all its associated links. AgentEmotionLink, RecepientEmotionLink, and RecepientSentimentLink were then evaluated to extract the agent emotion, recipient emotion, and recipient sentiment values from the corresponding nodes. In the same way, the function also collected relevant episodes from the episodic memory based on the cue. The resulting chunk of episodes is shown in Figure 2.

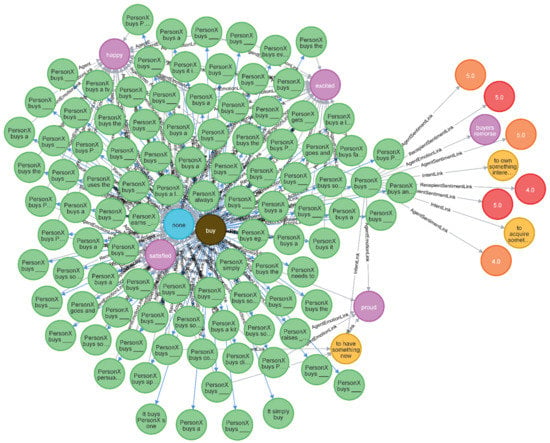

Semantic memory for action sentiment analysis was constructed using the Event2mind dataset [36], which consisted of 46,473 instances. The dataset was imported into Neo4j as a knowledge graph, with labels extracted as relationships and events as predicates, as shown in Figure 3 and Figure 4. The resulting knowledge graph consisted of six types of relationships and seven types of node labels. The relationships were AgentEmotionLink, RecepientEmotionLink, IntentLink, RecepientSentimentLink, AgentSentimentLink, and ActionLink. The nodes were XEmotion, YEmotion, Action, XSentiment, YSentiment, Event, and Intent. “X” represented the first person and “Y” represented the second person.

Figure 3.

Knowledge Graph representation against event (a) Content “It tastes better” (b) Content for action “PersonX buys a new car”.

Figure 4.

Neo4j Semantic Memory view for n matching nodes for action “Buy”.

AgentEmotionLink represented the agent emotions, IntentLink represented the agent intents, RecepientEmotionLink represented the recipient emotion, and RecepientSentimentLink represented the recipient sentiments. While importing the dataset, each event was processed using the spaCy dependency tagger, which tagged verbs as V = {VB, VBD, VBP, VBN, VBG, VBZ}. The semantic memory unit represented each verb as a node and linked it with the event node via ActionLink, as shown in Figure 3a. Each verb was stemmed with the Porter stemmer, which converts a word into its base form to avoid duplication; e.g., walk, walking, and walked had the single representation walk. After importing the dataset, the semantic memory knowledge graph consisted of 157,887 nodes and 269,415 relationships. A chunk of semantic memory is shown in Figure 4.

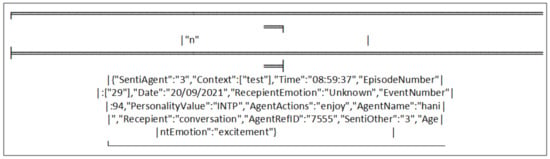

Episodic memory contained knowledge of Agent, AgentAction, Sentiment, AgentEmotion, RecepientEmotion, Personality, and Time in the form of episodes. Episodes were represented as knowledge nodes. These aspects helped the model to estimate the cognitive values of a chunk of personal semantics, as shown in Equation (9). The representation of one episode in episodic memory is given in Figure 5.

Figure 5.

SingleEpisode representation in Episodic Memory (Neo4j text view).

The agent retrieved relevant past episodes based on the cue. Episodes related to a single person were stored as a cluster, so the agent with the proposed architecture held a number of clusters in episodic memory. One cluster represented the episodes related to one person, as shown in Figure 6.

Figure 6.

n-Episodes of a person named “hani” in Episodic Memory.

Personal semantic memory consisted of the evaluation module and the constructor module, as shown in Figure 1. The evaluation module performed sentiment analysis on the recipient sentiment. If the sentiment value was in the range 0–3, the performed action was considered positive, and if it was in the range 3–5, the action was considered negative. Sentiments of recipients from semantic memory were used for this assessment. Mass, Attention Subjective, Attention Objective, Confidence, and Support values were calculated according to the equations given above for cognitive values. The cognitive values and the sentiment analysis together represented a chunk of personal semantic knowledge in the form of a language data frame.
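The polarity rule above can be sketched as a simple threshold. The two ranges in the text share the value 3, so the sketch has to pick a side: it assumes that values below 3 are positive and that 3 itself counts as negative. That boundary choice, the function name, and the `boundary` parameter are assumptions.

```python
def action_polarity(sentiment_value: float, boundary: float = 3.0) -> str:
    """Map a recipient sentiment value on the 0-5 scale to a polarity label.

    Assumption: the boundary value itself is treated as negative, because
    the text does not say which range the value 3 belongs to.
    """
    if not 0 <= sentiment_value <= 5:
        raise ValueError("sentiment_value must lie in the range 0-5")
    return "positive" if sentiment_value < boundary else "negative"
```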

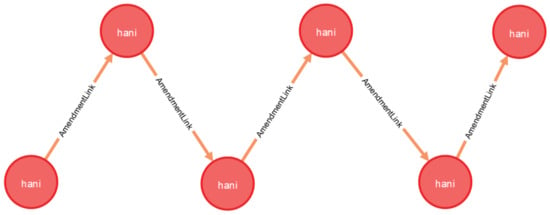

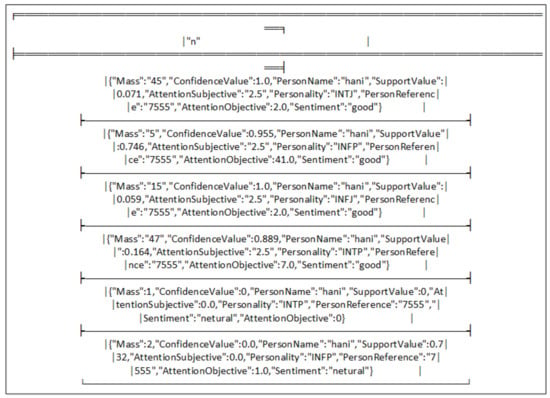

The personal semantic constructor module either represented this newly generated chunk of personal semantics as a new amendment to the existing personal semantic memory or updated the cognitive values of an existing chunk for long-term association. A graphical representation of a chunk of personal semantics in Neo4j is given in Figure 7. Figure 8 depicts the details of chunks of related personal semantics in the Neo4j text view.

Figure 7.

Knowledge Graph in personal semantic memory.

Figure 8.

Representation of knowledge in personal semantic memory (Neo4j Text View).

Personal semantics enable the agent to think about Person X in a particular way, e.g., Person X is energetic, Person X is a good person, and I like Person X. During experiments, the personal semantics with the highest mass value were retrieved. The minimum threshold of each cognitive value can be set according to the domain. During experiments, the threshold value was 0.3 for support and 0.5 for confidence. Memory learns through experience: after every fifty new interactions, the model retrains itself for knowledge generation, and all cognitive values are recalculated using the new experiences.
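Retrieval by highest mass under the stated thresholds can be sketched as a filter followed by a maximum. The dictionary layout of a chunk, the function name, and the decision to apply the thresholds inclusively at retrieval time are assumptions; only the threshold values 0.3 and 0.5 and the highest-mass rule come from the text.

```python
from typing import Dict, List, Optional

Chunk = Dict[str, object]  # assumed layout: agent, content, mass, support, confidence


def retrieve(
    chunks: List[Chunk],
    person: str,
    min_support: float = 0.3,
    min_confidence: float = 0.5,
) -> Optional[Chunk]:
    """Return the highest-mass chunk about `person` that passes both thresholds."""
    candidates = [
        chunk
        for chunk in chunks
        if chunk["agent"] == person
        and chunk["support"] >= min_support
        and chunk["confidence"] >= min_confidence
    ]
    return max(candidates, key=lambda chunk: chunk["mass"], default=None)


# Invented example chunks, for illustration only.
chunks = [
    {"agent": "hani", "content": "independent", "mass": 7, "support": 0.6, "confidence": 0.8},
    {"agent": "hani", "content": "reserved", "mass": 9, "support": 0.2, "confidence": 0.9},
    {"agent": "hani", "content": "theoretical", "mass": 4, "support": 0.5, "confidence": 0.7},
]
best = retrieve(chunks, "hani")
```

Here the chunk with mass 9 is skipped because its support falls below 0.3, so the chunk with mass 7 is returned.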

4. Experiment Setup

In this work, a chatbot with the given architecture was developed using the Python wx library. During experiments, the chatbot interacted with a random population of participants. There were 346 participants of different age groups, and all had a basic understanding of the English language. There were two modes of conversation: Question Mode and Conversation Mode.

In Conversation Mode, the chatbot asked random questions from the pool of questions available at https://github.com/hanimunir/Question/blob/main/QuestionContext.csv (accessed on 29 September 2021). Participants were instructed to answer those questions honestly with the first answer that came to mind. During the conversation, the chatbot’s personal semantic memory was constructed; it comprised self-knowledge (personal views, perspectives, and preferences). A chatbot dialogue in Conversation Mode is given in Table 4.

Table 4.

Example dialogue exchange between a personal semantic agent and human agent.

In Question Mode, the chatbot was queried for its personal semantics about the participant. The chunk of personal semantic memory with the highest mass value was retrieved in response to the query. The chatbot expressed the personal semantics using the dictionary given in Table 5. The contents of the dictionary were taken from the MBTI personality test.

Table 5.

Dictionary to express personal semantics.

The model was validated using the external validation method of unsupervised learning. An MBTI personality test was used to generate the true labels M = {C1, C2, C3, …, Cn}, whereas the labels generated by the personal semantic memory model were considered the predicted labels P = {D1, D2, D3, …, Dn}. The similarity between the two was computed by generating the confusion matrices presented in Section 5. The chatbot asked participants randomly chosen questions, alternating between the MBTI questionnaire (test) and the pool of general questions (training). Memory learns after every fifty interactions. The performance metrics recall, precision, and F1 score were measured over time, retraining the model after every fifty new interactions, and are given in Table 6 in Section 5. Precision, recall, and F1 score were calculated according to Equations (15)–(17).
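For reference, the standard definitions of precision, recall, and F1 score for one class can be sketched as follows, comparing true labels with predicted labels. The function name and the example labels are hypothetical, and how the per-class values were averaged across the MBTI types in the experiments is not specified here, so no averaging is shown.

```python
from typing import Dict, Sequence


def per_class_scores(
    true_labels: Sequence[str], predicted_labels: Sequence[str], label: str
) -> Dict[str, float]:
    """Precision, recall, and F1 for a single class `label`."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label sequences must have the same length")
    pairs = list(zip(true_labels, predicted_labels))
    tp = sum(1 for t, p in pairs if t == label and p == label)
    fp = sum(1 for t, p in pairs if t != label and p == label)
    fn = sum(1 for t, p in pairs if t == label and p != label)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = (
        2 * precision * recall / (precision + recall)
        if precision + recall
        else 0.0
    )
    return {"precision": precision, "recall": recall, "f1": f1}


# Invented labels, for illustration only.
true_labels = ["INTJ", "INTJ", "ESFJ", "ESFJ"]
predicted_labels = ["INTJ", "ESFJ", "ESFJ", "ESFJ"]
scores = per_class_scores(true_labels, predicted_labels, "ESFJ")
```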

5. Results

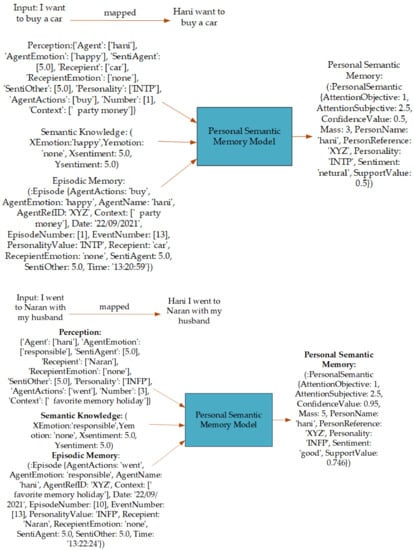

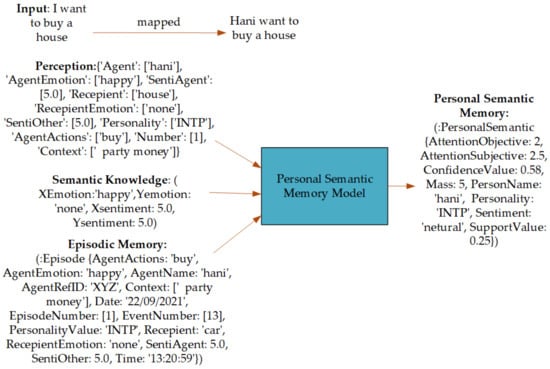

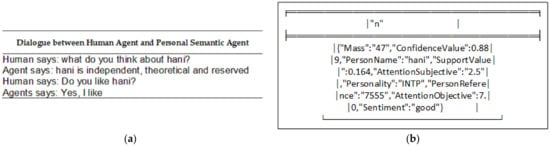

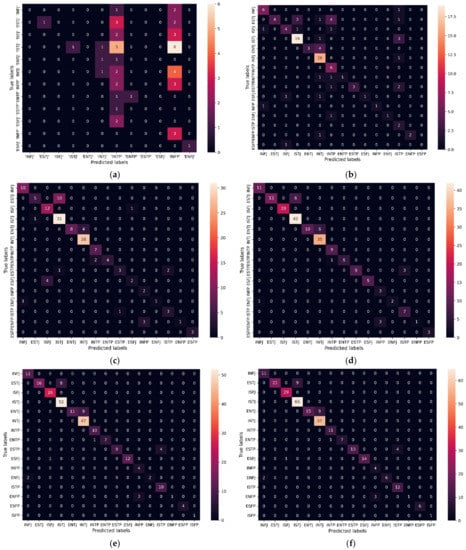

Multiple experiments were performed to verify the model in Conversation Mode. The knowledge transient during experiments is depicted in Figure 9. The chatbot received external stimuli from the participants. The percepts, semantic knowledge, episodes, and personal semantic knowledge generated while processing these stimuli are shown in Figure 9. Figure 10 depicts the personal semantics generated by the chatbot. Confusion matrices for each iteration were computed and are shown in Figure 11.

Figure 9.

Knowledge transient during experiments.

Figure 10.

Experiment Results for a personae = {‘AgentName’: [‘hani’], ‘PersonReference’: [‘XYZ’], ‘PersonInterest’: [‘Boating’], ‘Interaction’: [7], ‘Personality’: None} (a) Dialogue between chatbot and human in Question mode for the verification of personal semantic memory; (b) Knowledge retrieved from Personal Semantic Memory in response to the query.

Figure 11.

Confusion matrices. (a) After 50 interactions; (b) After 100 interactions; (c) After 150 interactions; (d) After 200 interactions; (e) After 250 interactions; (f) After 300 interactions.

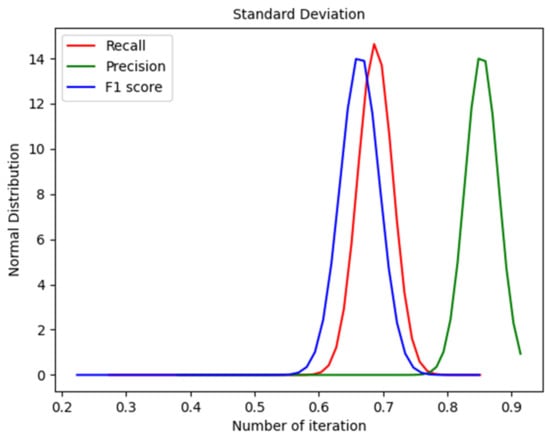

Our findings show an increase in recall and precision as the model was retrained after every few conversations, as shown in Figure 12 and Table 6. Because the philosophical aspect of personal semantic memory differs from that of the existing memories, the proposed model was compared with other state-of-the-art cognitive memory models on technical grounds, as given in Table 7. An agent with the personal semantic model is able to construct and express the personal views, perspectives, and preferences listed in Table 2, along with the other cognitive features of emotion recognition, semantic memory, and episodic memory. During experiments, for example, it expressed likeness (I like Hani) and personal views (Hani is independent, theoretical and reserved), as shown in Figure 10a.

Figure 12.

Standard deviation for Recall, Precision and F1 score.

Table 6.

Recall, Precision and F1 score for every fifty new interactions.

Table 7.

State-of-the-art comparison with proposed personal semantic memory: Working Memory (WM), Semantic Memory (SM), Episodic Memory (EM), Personal Semantic Memory (PSM).

6. Discussion

In our findings, personal semantic memory played a role in the acquisition of subjective knowledge related to personal views about a person. These views evolved as the knowledge evolved. The cognitive values supported the computation of the robustness of a particular chunk of personal semantics. Semantic memory and episodic memory helped in the formulation of personal semantics. In the proposed model, they fulfill the philosophical requirements emphasized by Renoult, who compared the main characteristics of semantic and episodic memory [12]. He described semantic memory as the content of perception and actions and found a separate region in the brain for their representation. In this work, general world knowledge in the form of semantic memory was represented as a knowledge graph, in which the predicate between person “X” and person “Y” and the impact of the predicate were represented as nodes, and the types of relationship between them as links, as shown in Figure 3. According to Renoult, semantic processing is the understanding of external stimuli [12]. In the proposed model, semantic processing of external stimuli took place at the perception level for easy understanding of the meanings, as depicted in Figure 12. In the future, further enrichment of knowledge can improve the formulated personal semantics.

In the proposed architecture, the main features of episodic memory described in [12,24,28,39] were considered. Episodic memory held knowledge of events in the form of episodes, as shown in Figure 6. Each episode consisted of the event number, episode number, time, and space information for long-term associations. The knowledge from episodic memory and semantic memory was used for inferring personal semantic knowledge. The sentiment analysis unit of the proposed model can help in the assessment of actions and personality. Inferred knowledge was represented in personal semantic memory as a chunk of personal semantics. The model followed the concept of amending a chunk through versions. Each version had mass, support, and confidence values, as shown in Figure 8. Mass represented the weight for resistance, which can be used in the future for resisting change depending on subjective importance. Renoult claimed that the preferences and perspectives of a person evolve with time [42]. The proposed model followed this philosophy for the construction of personal semantics. Personal semantics in the proposed model evolved with time in terms of weight and content, as depicted in Figure 12. The minimum thresholds for the cognitive values can be adjusted according to the domain, as the truthfulness of knowledge can vary from domain to domain. In this research, a chatbot was developed with the given architecture. Personal semantics were constructed in the personal semantic memory of the chatbot during the experiments. The chatbot expressed personal semantics in the form of perspective and likeness, as shown in Figure 10a. This was required for testing and validation purposes. The chatbot responded on receiving external stimuli in the form of a query during the experiments. The personal semantics expressed by the chatbot fall into the subcategories of personal semantics highlighted by Renoult [8]. The given results provide a qualitative description of the theories and philosophies proposed by Renoult and Grilli [9,17].
The results suggest that agents with the proposed architecture can possess personal views, perspectives, and preferences that may evolve with time. Moreover, the model suggests that agent behaviors can be improved by possessing and expressing conscious responses from personal semantic memory during interaction.
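The episode and versioned-chunk structures described above can be sketched as plain data classes. This is a minimal sketch under stated assumptions, not the implementation used in the experiments: the field names, the `amend` method, the acceptance rule (a version is kept only if its support and confidence meet adjustable minimum thresholds), and all numeric values are hypothetical. How mass, support, and confidence are actually computed is not reproduced here.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(frozen=True)
class Episode:
    """One episode: event number, episode number, time, and space information."""
    episode_number: int
    event_number: int
    time: str
    place: str
    event: str


@dataclass(frozen=True)
class ChunkVersion:
    """One version of a personal semantic chunk with its cognitive values."""
    content: str
    mass: float        # weight for resisting future change (stored, not used here)
    support: float
    confidence: float


@dataclass
class PersonalSemanticChunk:
    subject: str
    versions: List[ChunkVersion] = field(default_factory=list)

    def amend(self, version: ChunkVersion,
              min_support: float, min_confidence: float) -> bool:
        """Append a new version if it meets the (assumed) minimum thresholds."""
        if version.support < min_support or version.confidence < min_confidence:
            return False
        self.versions.append(version)
        return True

    @property
    def current(self) -> Optional[ChunkVersion]:
        """Latest accepted version, or None if no version was accepted yet."""
        return self.versions[-1] if self.versions else None


# Hypothetical usage with made-up values.
episode = Episode(episode_number=1, event_number=1, time="10:00",
                  place="lab", event="Hani solved the task alone")
chunk = PersonalSemanticChunk(subject="Hani")
accepted = chunk.amend(
    ChunkVersion("Hani is independent", mass=1.0, support=0.6, confidence=0.7),
    min_support=0.5, min_confidence=0.5)
rejected = chunk.amend(
    ChunkVersion("Hani is reserved", mass=1.0, support=0.2, confidence=0.9),
    min_support=0.5, min_confidence=0.5)
print(accepted, rejected, chunk.current.content)
```

Keeping every accepted version, rather than overwriting the chunk, preserves the history of how a view changed over time; the thresholds are passed as parameters so that they can be tuned per domain.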

This work opens up a new way for intelligent agents to formulate their views, beliefs, and thoughts, which are the foundation of subjectivity. An intelligent agent can formulate these from knowledge gained through perception, and they can differ from those of other agents in terms of content and weight. Moreover, this ability can help intelligent agents in the assessment of actions for mapping perception to their own action repertoire in the taxonomy of social learning [43]. In the future, intelligent agents can use this ability to assess collaborators in a collaborative environment during interactions where control over physical states, emotions, and feelings is required [44].

An agent with the proposed model was capable of having personal semantics based on semantic memory built from the Event2mind dataset and episodes from episodic memory. The system’s general knowledge and experiences are currently limited and can be improved further as the knowledge evolves. Considering the importance of the work for the future, more knowledge regulatory units need to be defined to improve the agent’s understanding and, in turn, the production and formulation of the other types of personal semantics mentioned in Table 2. There is also a need to devise a test that checks the level of subjectivity on a Likert scale as the personal semantics evolve and become more mature.

7. Conclusions

A personal semantic memory model is proposed in this paper for the acquisition of personal semantics. Our contribution is the implementation of personal semantic memory, which provides a way of inducing subjectivity in a cognitive agent in terms of personal views and perspectives. Personal semantics changed as knowledge evolved. The experiments were conducted on a chatbot with the proposed architecture, in which the chatbot held conversations with humans to construct its personal semantic memory. As a result of these conversations, personal semantics were formulated in the personal semantic memory of the chatbot. The results suggest that agents with the proposed architecture can acquire personal semantic knowledge by utilizing the knowledge from semantic memory and episodic memory, endowing them with subjectivity in the context of personal views, perspectives, and self-knowledge.

Author Contributions

Conceptualization, W.M.Q. and A.-e.-h.M.; methodology, A.-e.-h.M. and W.M.Q.; supervision, W.M.Q.; validation, A.-e.-h.M.; writing—original draft, A.-e.-h.M.; writing—review and editing, W.M.Q. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ratner, C. Subjectivity and Objectivity in Qualitative Methodology. Forum Qual. Soz. Forum Qual. Soc. Res. 2002, 3, 1–8.

- Rey, F.G. The Topic of Subjectivity in Psychology: Contradictions, Paths, and New Alternatives. In Theory of Subjectivity from a Cultural-Historical Standpoint; Springer: Berlin/Heidelberg, Germany, 2021; pp. 37–58.

- McCormick, C.J. “Now There’s No Difference”: Artificial Subjectivity as a Posthuman Negotiation of Hegel’s Master/Slave Dialectic. Master’s Thesis, Georgia State University, Atlanta, GA, USA, 2011.

- Su, X.; Guo, S.; Chen, F. Subjectivity Learning Theory towards Artificial General Intelligence. arXiv 2019, arXiv:1909.03798.

- Komulainen, E.; Meskanen, K.; Lipsanen, J.; Lahti, J.M.; Jylhä, P.; Melartin, T.; Wichers, M.; Isometsä, E.; Ekelund, J. The Effect of Personality on Daily Life Emotional Processes. PLoS ONE 2014, 9, e110907.

- Roberts, B.W.; Mroczek, D. Personality Trait Change in Adulthood. Curr. Dir. Psychol. Sci. 2008, 17, 31–35.

- Samsonovich, A.V. On a Roadmap for the BICA Challenge. Biol. Inspired Cogn. Archit. 2012, 1, 100–107.

- Renoult, L.; Armson, M.J.; Diamond, N.B.; Fan, C.L.; Jeyakumar, N.; Levesque, L.; Oliva, L.; McKinnon, M.; Papadopoulos, A.; Selarka, D.; et al. Classification of General and Personal Semantic Details in the Autobiographical Interview. Neuropsychologia 2020, 144, 107501.

- Renoult, L.; Davidson, P.S.; Palombo, D.J.; Moscovitch, M.; Levine, B. Personal Semantics: At the Crossroads of Semantic and Episodic Memory. Trends Cogn. Sci. 2012, 16, 550–558.

- Renoult, L.; Tanguay, A.; Beaudry, M.; Tavakoli, P.; Rabipour, S.; Campbell, K.; Moscovitch, M.; Levine, B.; Davidson, P.S. Personal Semantics: Is It Distinct from Episodic and Semantic Memory? An Electrophysiological Study of Memory for Autobiographical Facts and Repeated Events in Honor of Shlomo Bentin. Neuropsychologia 2016, 83, 242–256.

- Renoult, L.; Davidson, P.S.; Schmitz, E.; Park, L.; Campbell, K.; Moscovitch, M.; Levine, B. Autobiographically Significant Concepts: More Episodic than Semantic in Nature? An Electrophysiological Investigation of Overlapping Types of Memory. J. Cogn. Neurosci. 2014, 27, 57–72.

- Renoult, L. Semantic Memory: Behavioral, Electrophysiological and Neuroimaging Approaches. In From Neurosciences to Neuropsychology—The Study of the Human Brain; Corporación Universitaria Reformada: Barranquilla, Colombia, 2016.

- Kotseruba, I.; Tsotsos, J.K. A Review of 40 Years of Cognitive Architecture Research: Core Cognitive Abilities and Practical Applications. arXiv 2016, arXiv:1610.08602.

- Lucentini, D.F.; Gudwin, R.R. A Comparison Among Cognitive Architectures: A Theoretical Analysis. Procedia Comput. Sci. 2015, 71, 56–61.

- Coronel, J.C.; Federmeier, K.D. The N400 Reveals How Personal Semantics Is Processed: Insights into the Nature and Organization of Self-Knowledge. Neuropsychologia 2016, 84, 36–43.

- Kazui, H.; Hashimoto, M.; Hirono, N.; Mori, E. Nature of Personal Semantic Memory: Evidence from Alzheimer’s Disease. Neuropsychologia 2003, 41, 981–988.

- Grilli, M.D.; Verfaellie, M. Experience-near but not experience-far autobiographical facts depend on the medial temporal lobe for retrieval: Evidence from amnesia. Neuropsychologia 2016, 81, 180–185.

- Kiverstein, J. Could a Robot Have a Subjective Point of View? J. Conscious. Stud. 2007, 14, 127–139.

- Evans, K. The Implementation of Ethical Decision Procedures in Autonomous Systems: The Case of the Autonomous Vehicle. Ph.D. Thesis, Sorbonne Université, Paris, France, 2021.

- Albus, J.S.; Barbera, A.J. RCS: A Cognitive Architecture for Intelligent Multi-Agent Systems. Annu. Rev. Control 2005, 29, 87–99.

- Ritter, F.E.; Tehranchi, F.; Oury, J.D. ACT-R: A Cognitive Architecture for Modeling Cognition. Wiley Interdiscip. Rev. Cogn. Sci. 2019, 10, e1488.

- Rohrer, B.; Hulet, S. BECCA—A Brain Emulating Cognition and Control Architecture; Tech. Rep.; Cybernetic Systems Integration Department, Sandia National Laboratories: Albuquerque, NM, USA, 2006.

- Arrabales, R.; Ledezma Espino, A.I.; Sanchis de Miguel, M.A. CERA-CRANIUM: A Test Bed for Machine Consciousness Research. In Proceedings of the International Workshop on Machine Consciousness, Hong Kong, 12–14 June 2009.

- Sun, R. The CLARION Cognitive Architecture: Toward a Comprehensive Theory Of Mind. In The Oxford Handbook of Cognitive Science; Oxford University Press: Oxford, UK, 2016; p. 117.

- Hart, D.; Goertzel, B. OpenCog: A Software Framework for Integrative Artificial General Intelligence. In AGI; IOS Press: Amsterdam, The Netherlands, 20 June 2008; pp. 468–472.

- Evertsz, R.; Ritter, F.E.; Busetta, P.; Pedrotti, M.; Bittner, J.L. CoJACK–Achieving Principled Behaviour Variation in a Moderated Cognitive Architecture. In Proceedings of the 17th Conference on Behavior Representation in Modeling and Simulation, Providence, RI, USA, 14–17 April 2008; pp. 80–89.

- Franklin, S.; Patterson, F.G., Jr. The LIDA Architecture: Adding New Modes of Learning to an Intelligent, Autonomous, Software Agent; Society for Design and Process Science: Plano, TX, USA, 2006; Volume 703, pp. 764–1004.

- Laird, J.E. The Soar Cognitive Architecture; MIT Press: London, UK, 2012.

- Wendt, A.; Gelbard, F.; Fittner, M.; Schaat, S.; Jakubec, M.; Brandstätter, C.; Kollmann, S. Decision-Making in the Cognitive Architecture SiMA. In Proceedings of the 2015 Conference on Technologies and Applications of Artificial Intelligence (TAAI), Tainan, Taiwan, 20–22 November 2015; pp. 330–335.

- Vernon, D.; von Hofsten, C.; Fadiga, L. The ICub Cognitive Architecture. In A Roadmap for Cognitive Development in Humanoid Robots; Springer: Berlin/Heidelberg, Germany, 2010; pp. 121–153.

- Bukhari, S.T.S.; Qazi, W.M. Perceptual and Semantic Processing in Cognitive Robots. Electronics 2021, 10, 2216.

- Pujara, J.; Miao, H.; Getoor, L.; Cohen, W. Knowledge Graph Identification. In The Semantic Web – ISWC 2013, Proceedings of the International Semantic Web Conference, Sydney, NSW, Australia, 21–25 October 2013; Springer: Berlin/Heidelberg, Germany, 2013; pp. 542–557.

- Kazemifard, M.; Ghasem-Aghaee, N.; Koenig, B.L.; Tuncer, I.Ö. An emotion understanding framework for intelligent agents based on episodic and semantic memories. Auton. Agents Multi-Agent Syst. 2014, 28, 126–153.

- Riek, L.D. Wizard of Oz Studies in Hri: A Systematic Review and New Reporting Guidelines. J. Hum.-Robot. Interact. 2012, 1, 119–136.

- Keh, S.S.; Cheng, I. Myers-Briggs Personality Classification and Personality-Specific Language Generation Using Pre-Trained Language Models. arXiv 2019, arXiv:1907.06333.

- Rashkin, H.; Sap, M.; Allaway, E.; Smith, N.A.; Choi, Y. Event2mind: Commonsense Inference on Events, Intents, and Reactions. arXiv 2018, arXiv:1805.06939.

- Murphy, D.; Paula, T.S.; Staehler, W.; Vacaro, J.; Paz, G.; Marques, G.; Oliveira, B. A Proposal for Intelligent Agents with Episodic Memory. arXiv 2020, arXiv:2005.03182.

- Sarthou, G.; Clodic, A.; Alami, R. Ontologenius: A Long-Term Semantic Memory for Robotic Agents. In Proceedings of the 2019 28th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), New Delhi, India, 14–18 October 2019; pp. 1–8.

- Nuxoll, A.M.; Laird, J.E. Enhancing Intelligent Agents with Episodic Memory. Cogn. Syst. Res. 2012, 17, 34–48.

- Wang, W.; Tan, A.-H.; Teow, L.-N. Semantic Memory Modeling and Memory Interaction in Learning Agents. IEEE Trans. Syst. Man Cybern. Syst. 2016, 47, 2882–2895.

- Wojtczak, S. Endowing Artificial Intelligence with Legal Subjectivity. AI Soc. 2021, 1–9.

- Renoult, L.; Kopp, L.; Davidson, P.S.; Taler, V.; Atance, C.M. You’ll Change More than I Will: Adults’ Predictions about Their Own and Others’ Future Preferences. Q. J. Exp. Psychol. 2016, 69, 299–309.

- Breazeal, C.; Scassellati, B. Robots That Imitate Humans. Trends Cogn. Sci. 2002, 6, 481–487.

- Cook, J.L.; Murphy, J.; Bird, G. Judging the Ability of Friends and Foes. Trends Cogn. Sci. 2016, 20, 717–719.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).