Abstract

This paper proposes a novel reinforcement learning (RL)-based tracking control scheme with fixed-time prescribed performance for a reusable launch vehicle subject to parametric uncertainties, external disturbances, and input constraints. First, a fixed-time prescribed performance function is employed to restrain attitude tracking errors, and an equivalent unconstrained system is derived via an error transformation technique. Then, a hyperbolic tangent function is incorporated into the optimal performance index of the unconstrained system to tackle the input constraints. Subsequently, an actor-critic RL framework with super-twisting-like sliding mode control is constructed to establish a practical solution for the optimal control problem. Benefiting from the proposed scheme, the robustness of the RL-based controller against unknown dynamics is enhanced, and the control performance can be quantitatively prearranged by users. Theoretical analysis shows that the attitude tracking errors converge to a preset region within a preassigned fixed time, and the weight estimation errors of the actor-critic networks are uniformly ultimately bounded. Finally, comparative numerical simulation results are provided to illustrate the effectiveness and improved performance of the proposed control scheme.

1. Introduction

Recent years have witnessed an increasing demand for reliable and economical access to space. Reusable launch vehicles (RLV), as a cost-effective means of undertaking space missions, are attracting growing attention from researchers [1]. The dynamic model of RLV exhibits strong non-linearity and coupling owing to the complex flight environment of the re-entry phase. External disturbances, uncertain structural and aerodynamic parameters, and input constraints inevitably exist during real flight and have a significant impact on the attitude control system. In this context, attitude control for RLV is a challenging topic and has elicited widespread interest. Various control methodologies, such as adaptive control [2], dynamic inversion control [3], robust control [4], sliding mode control [5,6], and neural network (NN) control [7,8], have been applied over the past decades. Nevertheless, there is still scope to develop an optimal control approach for RLV subject to complicated non-linear dynamics, parametric uncertainties and limited inputs.

From a mathematical point of view, solving an optimal control problem requires formulating and solving the Hamilton–Jacobi–Bellman (HJB) equation. However, it is difficult to derive an analytical solution of the HJB equation for non-linear continuous-time systems. Given this, a reinforcement learning (RL) scheme with an actor-critic (AC) structure was initially proposed by Werbos [9], whereby a critic network was exploited to approximate the value function, and an actor network was deployed to obtain the optimal control policy. Building on Werbos’ contribution, Vamvoudakis et al. developed an online AC algorithm to solve the continuous-time infinite horizon optimal control problem [10]. He et al. proposed a novel online learning and optimization structure by incorporating a reference network into the AC structure [11]. Ma et al. devised a learning-based adaptive sliding mode control scheme for a tethered space robot with limited inputs [12]. Although the above control strategies have provided excellent results in terms of optimal control, they rely on accurate dynamic modeling [13]. Given the parametric uncertainties and unknown disturbances, it is, in practice, difficult to determine the system dynamics of RLV exactly, which limits the applicability of these methods. Therefore, their robustness must be enhanced for practical systems with unknown dynamics. Fan et al. combined integral sliding mode control (ISMC) with a reinforcement learning control scheme for non-linear systems with partially unknown dynamics [14]. However, the input constraints were not considered, and the ISMC used may lead to unexpected oscillation [15]. Zhang et al. developed a learning-based tracking control scheme in which the uncertainties and input constraints were considered [16]. Nevertheless, the control design is conservative, and the iterative algorithm is rather complicated.

Notably, previous RL-based control methods only establish asymptotic or finite-time convergence [17]. Therefore, the upper bound of the convergence time is uncontrollable, and the transient and steady-state performance, namely, the maximum overshoot and the steady-state accuracy, cannot be quantitatively prearranged by users. As a promising solution to this problem, the prescribed performance control (PPC) method created by Bechlioulis et al. has attracted widespread attention [18]. The salient feature of PPC is that users can quantitatively prearrange both the transient performance and the steady-state tracking error. In [19], a novel PPC scheme combined with a command filter was proposed for a quadrotor unmanned aerial vehicle subject to error constraints. In [20], an NN-based adaptive non-affine tracking controller was devised for an air-breathing hypersonic vehicle with guaranteed prescribed performance. In [21], a data-driven PPC scheme was developed for an unmanned surface vehicle with unknown dynamics. However, the conventional PPC approach only ensures that the system states converge to the preset region as time tends to infinity [22], which is unsatisfactory for time-limited problems such as the re-entry mission. Moreover, the input constraints and the optimality are not comprehensively considered in the conventional PPC paradigm. Therefore, the expected performance index cannot be consistently guaranteed and optimized for RLV suffering from poor aerodynamic maneuverability and limited control torques.

Motivated by the foregoing considerations, a novel RL-based tracking controller with fixed-time prescribed performance is investigated for RLV subject to parametric uncertainties, external disturbances and input constraints. The main contributions and characteristics of the proposed method can be summarized as follows.

- An online RL-based, nearly optimal, controller with limited inputs is developed by synthesizing the AC structure and the hyperbolic tangent performance index. In addition, the robustness of the learning-based controller is strengthened by incorporating a super-twisting-like sliding mode control.

- Compared with the previous learning-based controllers described in [10,11,12], in which the system dynamics are required to be known exactly, the proposed control scheme only requires the input-output data pairs of RLV, such that the system dynamics can be completely unknown.

- In contrast to existing RL-based control schemes with asymptotic or finite-time convergence [10,11,12,17,21], the proposed control scheme can ensure that the tracking errors converge to a preset region within a preassigned fixed time. Moreover, the prescribed transient and steady-state performance can be guaranteed.

- Comparative numerical simulations show that the proposed method can provide improved transient response and steady-state accuracy with less control effort.

2. Problem Statement and Preliminaries

2.1. Problem Statement

Following [2], the control-oriented model of the rigid-body RLV is given as follows:

where represents the attitude angle vector, denotes the angular rate vector, is the control input vector, and are limited to the interval , is the unknown disturbance vector, is the aerodynamic torque vector, and is the external disturbance vector. can be formulated as:

where , and represent the damping moment coefficients, , and are the static stability moment coefficients, V is the velocity, is the dynamic pressure, and and are the cross-sectional area and the reference length of RLV, respectively.

The skew-symmetric matrix , the inertia matrix , and the coordinate transformation matrix are defined by

Defining the guidance command vector , the attitude tracking error vector , and the angular rate tracking error vector , the tracking error dynamics are given as:

where is the control matrix, denotes the lumped disturbance vector.

Assumption A1

([2]). is bounded by .

Assumption A2

([2]). During the re-entry phase, , thus is always invertible.

Control Objective: According to the tracking error dynamics (4), the control objective of this paper can be summarized as developing an RL-based optimal control scheme with guaranteed fixed-time prescribed performance such that the attitude tracking errors can converge to a preset region within a preassigned fixed time.

2.2. Preliminaries

Lemma 1

([23]). Considering a non-linear function , the following equation

always holds, where is bounded by a real positive constant.

Lemma 2

([24]). Considering the following fixed-time prescribed performance function (FTPPF)

where represent the initial and terminal values of FTPPF, respectively. is the preassigned convergence time. It can be concluded that is a positive, non-increasing and continuous function with , and .
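The properties listed in Lemma 2 can be checked numerically. The exact FTPPF of [24] is not reproduced here; the following is a minimal sketch that assumes a simple polynomial form with the same properties (positive, non-increasing, continuous, equal to the terminal value from the preassigned time onward). The names `ftppf`, `exponential_ppf`, `rho0`, `rho_T`, `T`, `p` and `decay` are illustrative assumptions. The conventional exponential performance function is included only for comparison, since it approaches its terminal value asymptotically rather than at a fixed time.

```python
import math


def ftppf(t, rho0, rho_T, T, p=3):
    """Illustrative fixed-time performance function (assumed polynomial form).

    rho(0) = rho0, rho(t) = rho_T for every t >= T, and rho is
    positive, continuous and non-increasing in between.
    """
    if not (rho0 > rho_T > 0.0 and T > 0.0 and p >= 1):
        raise ValueError("require rho0 > rho_T > 0, T > 0 and p >= 1")
    if t >= T:
        return rho_T
    return (rho0 - rho_T) * (1.0 - t / T) ** p + rho_T


def exponential_ppf(t, rho0, rho_inf, decay):
    """Conventional exponential performance function, shown for comparison.

    It only tends to rho_inf as t goes to infinity.
    """
    return (rho0 - rho_inf) * math.exp(-decay * t) + rho_inf


# Sample both envelopes on a coarse grid (values are arbitrary examples).
for t in (0.0, 2.5, 5.0, 7.5):
    print(t, ftppf(t, 1.0, 0.05, 5.0), exponential_ppf(t, 1.0, 0.05, 1.0))
```

With this assumed form, the exponent `p` only shapes how quickly the envelope shrinks before the preassigned time; it does not change the terminal value or the time at which that value is reached.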

Lemma 3

([25]). For any , the following inequality holds

where satisfies (i.e., ).
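The inequality of Lemma 3 is not legible in this version of the text. Assuming it is the widely used hyperbolic tangent bound 0 ≤ |x| − x·tanh(x/ε) ≤ κε, with κ defined implicitly by κ = exp(−(κ + 1)), the sketch below solves for κ by fixed-point iteration and checks the bound on a grid. The function names and the grid are illustrative assumptions.

```python
import math


def solve_kappa(iterations=100):
    """Solve kappa = exp(-(kappa + 1)) by fixed-point iteration.

    The map is a contraction near the solution, so the iteration converges.
    """
    kappa = 0.5
    for _ in range(iterations):
        kappa = math.exp(-(kappa + 1.0))
    return kappa


def tanh_gap(x, eps):
    """Gap |x| - x * tanh(x / eps) between |x| and its smooth surrogate."""
    return abs(x) - x * math.tanh(x / eps)


kappa = solve_kappa()
eps = 0.3
worst = max(tanh_gap(0.001 * k - 5.0, eps) for k in range(10001))
print(kappa, worst, kappa * eps)
```

Under this assumption, the bound is what allows a non-smooth term of the form |x| to be replaced by a smooth one in the Lyapunov analysis at the cost of a small constant proportional to ε.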

3. Controller Design

3.1. Prescribed Performance Constraint

In this subsection, the following constraint is formulated to restrain the attitude tracking errors within the FTPPF

where is defined in Lemma 2. Subsequently, the equivalent unconstrained error variables can be derived via the error transformation method [18]

with . Taking the first and second-order time derivatives of yields

with , and . Substituting (4) into (10), one obtains

where , and , and the symbols and represent the diagonal matrix and the column vector, respectively.
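The error transformation used in this subsection can be illustrated with a short sketch. The specific transformation function is not legible in this version of the text, so the code assumes a symmetric envelope −ρ(t) < e(t) < ρ(t) and the common choice e = ρ·tanh(ε), which is smooth, strictly increasing and has limits ∓1 for the normalized error. The names `to_unconstrained` and `to_constrained` are illustrative.

```python
import math


def to_unconstrained(e, rho):
    """Map a constrained error e in (-rho, rho) to an unconstrained variable.

    Assumes the symmetric envelope and the tanh-type transformation,
    so that eps = atanh(e / rho).
    """
    z = e / rho
    if not -1.0 < z < 1.0:
        raise ValueError("tracking error is outside the performance envelope")
    return math.atanh(z)


def to_constrained(eps, rho):
    """Inverse map: any finite eps yields an error strictly inside (-rho, rho)."""
    return rho * math.tanh(eps)


rho = 0.5
for e in (-0.49, 0.0, 0.3):
    eps = to_unconstrained(e, rho)
    print(e, eps, to_constrained(eps, rho))
```

The design point is that keeping the transformed variable bounded is sufficient to keep the original error inside the envelope, which turns a constrained tracking problem into an unconstrained stabilization problem.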

3.2. Reinforcement Learning-Based Control Design

Firstly, the following sliding variable is defined as:

where is a positive diagonal matrix. Taking the first time derivative of along (11) yields

where . In order to achieve a satisfactory tracking performance, a traditional super-twisting controller based on (12) and (13) is developed as follows:

where , , and and are positive constants. Nevertheless, the feasibility of (14) may not be guaranteed with limited control inputs, and the time-fuel performance index cannot be approximately optimized. To this end, an online RL-based, nearly optimal, controller is proposed by integrating the AC structure into the super-twisting controller for a comprehensive solution to the issue mentioned above.
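To illustrate the behaviour of a super-twisting controller, the following minimal sketch simulates the standard scalar super-twisting algorithm on a first-order sliding dynamics with a Lipschitz disturbance. It is not the controller (14) of this paper: the scalar plant, the disturbance sin(t), the gains `k1` and `k2`, and the explicit Euler integration are all assumptions chosen for illustration.

```python
import math


def sign(x):
    """Signum function returning -1, 0 or 1."""
    return (x > 0) - (x < 0)


def simulate_super_twisting(s0=1.0, k1=4.0, k2=2.0, dt=1e-3, t_end=10.0):
    """Simulate s' = u + d(t) with the standard super-twisting law.

    u = -k1 * |s|^(1/2) * sign(s) + v,   v' = -k2 * sign(s)
    The disturbance d(t) = sin(t) has a derivative bounded by 1, and the
    gains are picked larger than that bound requires.
    Returns the history of |s| at every integration step.
    """
    s, v, t = s0, 0.0, 0.0
    history = []
    for _ in range(int(round(t_end / dt))):
        d = math.sin(t)
        sgn = sign(s)
        u = -k1 * math.sqrt(abs(s)) * sgn + v
        s += dt * (u + d)      # explicit Euler step of the sliding dynamics
        v += dt * (-k2 * sgn)  # integral (discontinuous) term
        t += dt
        history.append(abs(s))
    return history


history = simulate_super_twisting()
print(history[0], max(history[-1000:]))
```

The continuous control signal rejects the disturbance without knowing it, but nothing in this law limits the magnitude of `u`, which is the limitation that motivates combining it with a constrained-input optimal design.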

Before elaborating the detailed design procedure, it is assumed that there exists a group of admissible control strategies [10]

Moreover, can achieve the control objective with the following time-fuel performance index being satisfied

where , is a positive diagonal matrix, and is chosen as [26]:

where is selected as a positive diagonal matrix, represents the variable of integration, and the upper and lower bounds of are and , respectively. The Lyapunov equation of (16) can be calculated as:

where . The optimal value function is defined as:

and the corresponding HJB equation can be formulated as:

where .
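The hyperbolic-tangent-based input penalty in the performance index can be made concrete for a single input channel. The sketch below assumes the non-quadratic functional commonly attributed to [26], U(u) = 2r∫₀ᵘ λ·atanh(v/λ) dv with saturation bound λ and weight r, and compares its closed form against a midpoint-rule quadrature. The scalar setting and all names are illustrative assumptions; the multi-channel expression of this paper is not reproduced.

```python
import math


def input_penalty(u, u_max, r=1.0):
    """Closed form of U(u) = 2 r * integral_0^u u_max * atanh(v / u_max) dv.

    Uses integral of atanh(v / a) dv = u * atanh(u / a) + (a / 2) * ln(1 - u^2 / a^2).
    """
    if abs(u) >= u_max:
        raise ValueError("input must lie strictly inside the saturation bound")
    z = u / u_max
    return 2.0 * r * u_max * u * math.atanh(z) + r * u_max ** 2 * math.log(1.0 - z * z)


def input_penalty_quadrature(u, u_max, r=1.0, n=20000):
    """Midpoint-rule evaluation of the same integral, used as a cross-check."""
    h = u / n
    total = 0.0
    for i in range(n):
        v = (i + 0.5) * h
        total += u_max * math.atanh(v / u_max)
    return 2.0 * r * total * h


print(input_penalty(0.6, 1.0), input_penalty_quadrature(0.6, 1.0))
```

For small inputs this penalty behaves like the quadratic cost r·u², while it grows steeply as the input approaches the bound, which is what yields a saturated optimal policy.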

Equation (20) is equivalent to

Solving the partial derivative of (21) yields the following optimal control strategy

Substituting into (17), one obtains

where is a column vector with all elements being one, and is a row vector generated by the elements on the main diagonal of . Combining (20) and (23), it can be derived that

The nearly optimal control can be obtained by solving (24) if is available. Inspired by the NN-based control scheme, the following approximation of can be established

where is the optimal weight vector, is the base vector, and is the approximation error of NN. Subsequently, the gradient of is

where , . In light of the universal approximation property of NN for smooth functions on prescribed compact sets, the approximation errors and are bounded with a finite dimension of [27]. Moreover, it is assumed that , and are bounded [13,14,16,17,21].

Recalling (22), the NN-based nearly optimal control law can be formulated as:

Defining the Bellman error as [14]:

with , (20) can be rewritten as:

where is the bounded HJB error [10].
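The way a value-function gradient produces a bounded control can be sketched as follows. Assuming the standard bounded-input form from the constrained-input optimal control literature, u = −λ·tanh((1/(2λ))·R⁻¹·Gᵀ·∇V) with a diagonal weight R, the code below evaluates the policy channel by channel. The function name, the list-based matrices and the numerical values are illustrative assumptions, and the expression is not claimed to match the exact control law of this paper.

```python
import math


def saturated_policy(grad_value, g_matrix, r_diag, u_max):
    """Evaluate u_i = -u_max * tanh((G^T grad V)_i / (2 * u_max * r_i)).

    grad_value: gradient of the value function (length n)
    g_matrix:   control matrix G as a list of n rows with m entries each
    r_diag:     diagonal entries of the positive input weight R (length m)
    u_max:      symmetric saturation bound applied to every channel
    """
    n = len(grad_value)
    controls = []
    for i in range(len(r_diag)):
        gt_grad_i = sum(g_matrix[k][i] * grad_value[k] for k in range(n))
        controls.append(-u_max * math.tanh(gt_grad_i / (2.0 * u_max * r_diag[i])))
    return controls


G = [[1.0, 0.0], [0.0, 2.0]]
print(saturated_policy([100.0, -100.0], G, [1.0, 1.0], 0.5))  # large gradient
print(saturated_policy([1e-4, 0.0], G, [1.0, 1.0], 0.5))      # small gradient
```

For small gradients the policy reduces to the familiar unconstrained form −½·R⁻¹·Gᵀ·∇V, and for large gradients it saturates smoothly at the bound instead of being clipped.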

In this paper, the optimal weight is generated by the online RL scheme with the AC structure. In this context, the nearly optimal control policy is formulated as:

where is the weight of the actor network. Moreover, the performance index (16) can be estimated as:

where is the weight of the critic network. The adaptation laws for and are designed as:

where , ; and are positive diagonal matrices; ; , and are positive real constants. The projection operator is imposed on (33) to guarantee that is bounded [28]. It is assumed that is persistently exciting (PE) [29].
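The adaptation laws (32) and (33) are not legible in this version of the text, so they are not reproduced here. As a rough illustration of how a critic weight can be tuned online, the sketch below implements one Euler step of the normalized gradient-descent critic law in the style of [10], which drives the Bellman error δ = Wᵀσ + r toward zero. The regressor `sigma`, the running cost `running_cost`, the gain and the step size are hypothetical quantities; the projection operator and the actor law of this paper are omitted.

```python
def critic_update(weights, sigma, running_cost, gain, dt):
    """One Euler step of a normalized gradient-descent critic law.

    delta = W^T sigma + running_cost            (Bellman error)
    W'    = -gain * sigma / (1 + sigma^T sigma)^2 * delta
    Returns the updated weights and the Bellman error before the update.
    """
    delta = sum(w * s for w, s in zip(weights, sigma)) + running_cost
    norm = (1.0 + sum(s * s for s in sigma)) ** 2
    new_weights = [w - dt * gain * delta * s / norm for w, s in zip(weights, sigma)]
    return new_weights, delta


# Repeated updates on one fixed sample shrink the Bellman error.
w = [0.0, 0.0, 0.0]
sigma = [1.0, -2.0, 0.5]
for step in range(500):
    w, delta = critic_update(w, sigma, 3.0, 5.0, 0.01)
print(w, delta)
```

A single fixed sample only constrains the weights along one direction, which is why a persistence of excitation condition on the regressor is needed before the weight error itself, and not just the Bellman error, can be shown to be bounded.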

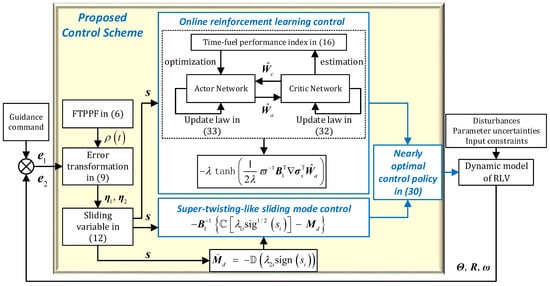

The proposed control scheme is illustrated by a block diagram in Figure 1.

Figure 1.

The block diagram of the proposed control scheme.

3.3. Stability Analysis

Theorem 1.

- the sliding variable , the weight estimation errors and are uniformly ultimately bounded (UUB);

- the attitude tracking errors uniformly obey the fixed-time performance envelopes in (8).

Proof of Theorem 1

Consider the Lyapunov function candidate as follows:

where and . Taking the first time derivative of V yields

with , , and

Furthermore, according to (17), it can be deduced that

with . Equation (38) can be rewritten as:

where . Noting that is an odd function, it can be concluded that . Moreover, it is indicated that from the definition in (17). Based on the above discussions, (40) can be simplified as:

Subsequently, incorporating (32) with yields

With the aid of Lemma 1 and Lemma 3, (43) can be further simplified as:

where , and are bounded. For ease of notation, two bounded vectors and are defined as:

then (44) can be further sorted into the following structure

With the definition of the generalized vector , the inequality (47) can be rewritten as:

where , . Given that , are bounded vectors, it can be concluded that the is a bounded vector and and . Therefore, is negative if

which implies that the sliding variable and are UUB. Furthermore, in view of the assumption that is PE, the weight estimation error is also UUB [14]. Once the sliding variable converges to the vicinity of the origin, the gradient vector together with will also be small enough. Defining , and assuming that there exists a small positive constant satisfying , the dynamics of the sliding variable can be represented as:

Following the analysis in [30], the sliding variable can reach a small neighborhood of the origin; thus, the equivalent unconstrained errors are bounded.

Recalling the error transformation method in (9), it can be derived that

Note that is a smooth, strictly increasing, function w.r.t. and satisfies , ; thus, it can be derived that and hold true if is bounded. Following the above analysis, it can be deduced that never violates the fixed-time prescribed performance constraints in (8) for all , and for .

This completes the proof of Theorem 1. □

4. Numerical Simulations

In this section, numerical simulations for the re-entry phase of RLV are carried out to illustrate the effectiveness and improved performance of the proposed control scheme. The parameters of the RLV are based on [31]. The initial states of the re-entry phase are set as , , , , and . The guidance commands are designed as , and . The uncertainties of the inertia parameters, the aerodynamic parameters and the air density are each set to +20% bias. The external disturbance is given as [32]:

The saturation bound of the control torques is set as . The attitude tracking errors are required to be less than for . Therefore, the parameters of the FTPPF are selected as and . To balance the transient and steady-state performance, the parameters of the proposed control scheme are chosen as . Inspired by [12,14], suitable basis vectors can be selected as polynomial combinations of the state variables appearing in the performance index (16). The basis vectors of the actor and critic networks are adjusted in repeated trials to balance the approximation error and the computational burden. They are identically selected as:

The initial values of and are randomly generated in . The user-defined parameters of the weight adaptation laws are designed as .
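The basis vectors actually used in the simulations are not legible in this version of the text. As an illustration of what "polynomial combinations of the state variables" can look like, the sketch below builds a basis of all second-order monomials together with its Jacobian, which is the quantity an actor-critic scheme needs when forming the gradient of the approximated value function. The choice of a purely quadratic basis and the function names are assumptions.

```python
def quadratic_basis(x):
    """Return all monomials x_i * x_j with i <= j (an assumed quadratic basis)."""
    n = len(x)
    return [x[i] * x[j] for i in range(n) for j in range(i, n)]


def quadratic_basis_jacobian(x):
    """Jacobian of quadratic_basis: one row per monomial, one column per state."""
    n = len(x)
    rows = []
    for i in range(n):
        for j in range(i, n):
            row = [0.0] * n
            row[i] += x[j]  # derivative of x_i * x_j with respect to x_i
            row[j] += x[i]  # derivative with respect to x_j (doubles when i == j)
            rows.append(row)
    return rows


state = [0.3, -1.2, 2.0]
print(quadratic_basis(state))
print(quadratic_basis_jacobian(state))
```

For three state variables this gives six basis functions; higher-order terms improve the approximation at the cost of more weights to adapt, which is the trade-off between approximation error and computational burden mentioned above.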

Furthermore, in order to demonstrate the superiority of the proposed control scheme, the RL-based finite-time control (RLFTC) with input constraints in [17] and the robust adaptive backstepping control (RABC) method in [33] are implemented for comparison. To provide a fair comparison, the time-fuel performance index of the RLFTC is identical to that of the proposed method, and the generation method for the initial values of the actor network and the critic network remains the same. The other user-defined parameters of the RLFTC are selected as . The parameters of the RABC remain the same as those in [33]. The simulation results are given in Figures 2–10.

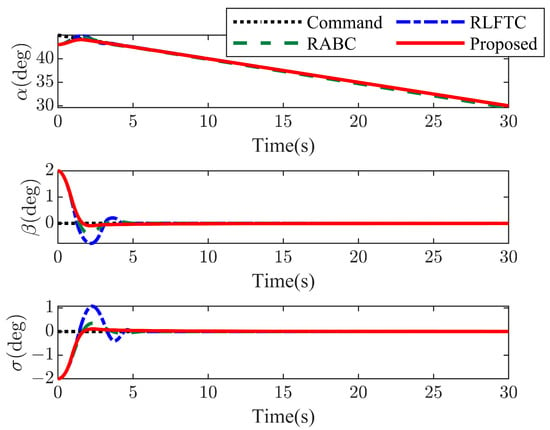

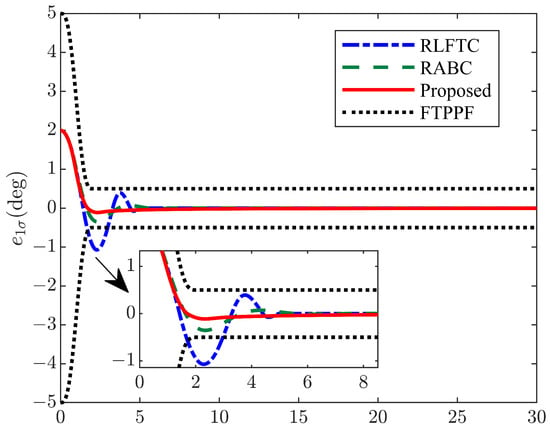

Figure 2.

Time histories of the attitude angles.

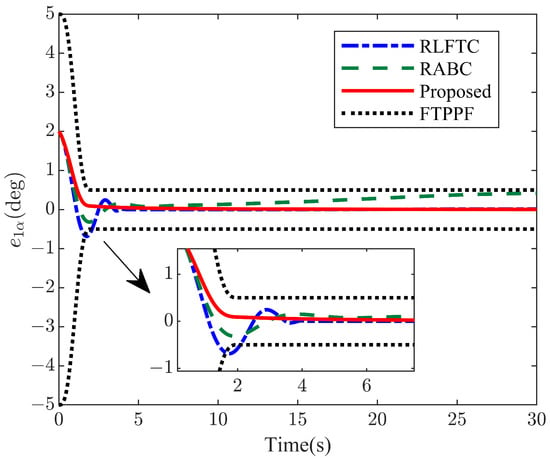

Figure 3.

Time histories of the attitude tracking error .

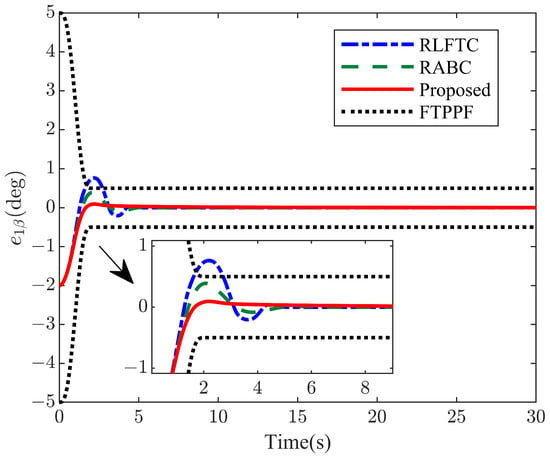

Figure 4.

Time histories of the attitude tracking error .

Figure 5.

Time histories of the attitude tracking error .

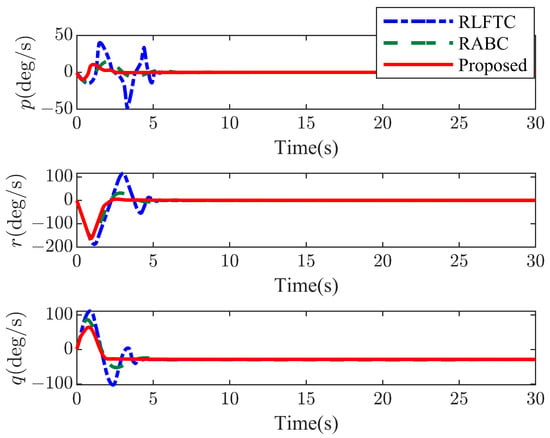

Figure 6.

Time histories of the angular rates.

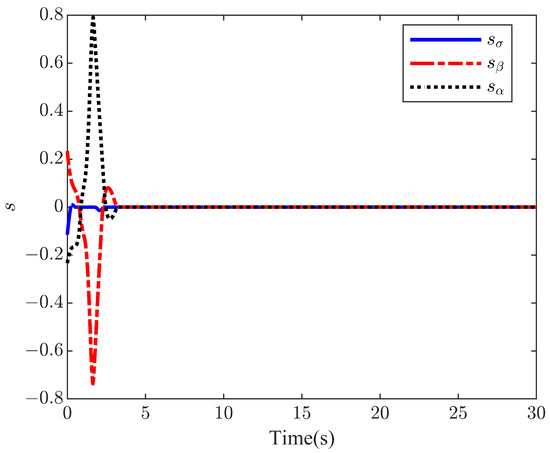

Figure 7.

Time histories of the sliding manifolds.

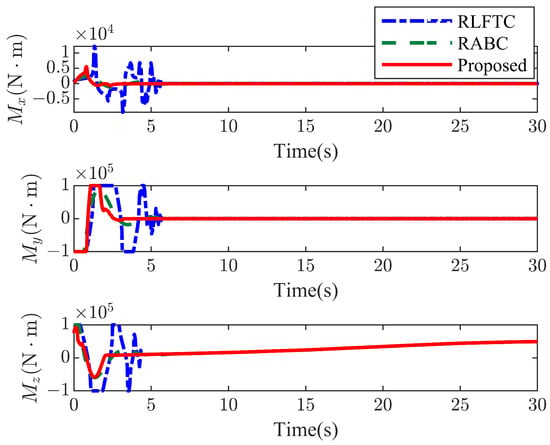

Figure 8.

Time histories of the control torques.

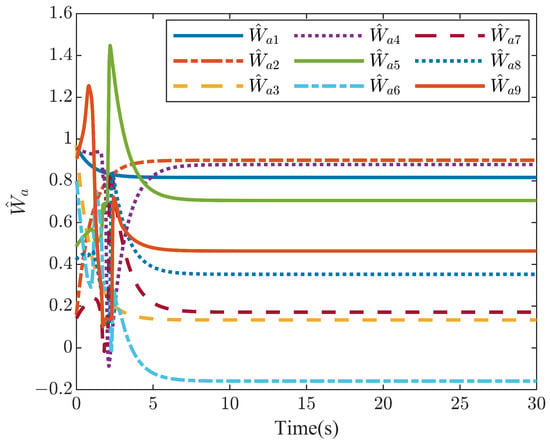

Figure 9.

Weights of the actor network.

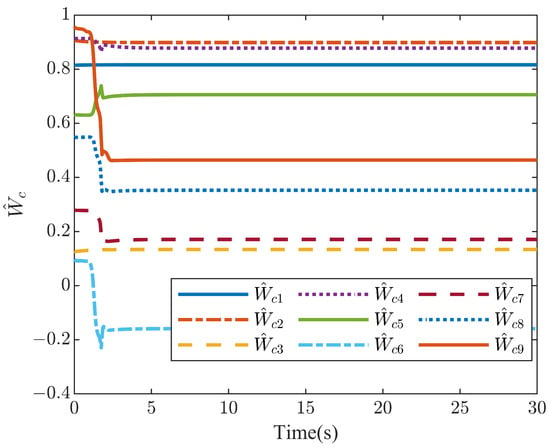

Figure 10.

Weights of the critic network.

The tracking performance of the attitude angles under the three controllers is shown in Figures 2–5. It can clearly be seen that the proposed control scheme provides faster convergence and smaller overshoot in the presence of parametric uncertainties and external disturbances. The angular rates of RLV and the sliding manifolds are shown in Figure 6 and Figure 7, which provide further evidence for the improved performance of the proposed control scheme. Moreover, by comparing the tracking performance with the control inputs illustrated in Figure 8, it can be observed that the proposed control scheme exhibits better transient and steady-state performance with limited control inputs. The evolution trajectories of and are depicted in Figure 9 and Figure 10, respectively. It can be readily found that and converge to the same values, which indicates that the ideal weight vector can be effectively estimated via the proposed adaptation law.

To make a clear comparison, the maximum overshoot and the adjustment time are introduced to evaluate the transient performance of these controllers. Moreover, the integral of time and absolute error (ITAE) index and the integral absolute control effort (IACE) index of the three control schemes are calculated to evaluate the tracking accuracy and the control effort, respectively. The performance indices of the three channels are summarized in Table 1, Table 2 and Table 3.
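The indices named above can be computed from sampled simulation records as in the following minimal sketch. It assumes the usual definitions ITAE = ∫ t·|e(t)| dt and IACE = ∫ |u(t)| dt, evaluated with the trapezoidal rule, and it interprets the adjustment time as the first sample time after which the error stays inside a chosen band; that interpretation, the band value and all names are assumptions rather than the exact definitions behind Tables 1 to 3.

```python
import math


def trapezoid(times, values):
    """Trapezoidal-rule integral of sampled values over the given time stamps."""
    total = 0.0
    for k in range(len(times) - 1):
        total += 0.5 * (values[k] + values[k + 1]) * (times[k + 1] - times[k])
    return total


def itae(times, error):
    """Integral of time multiplied by absolute error."""
    return trapezoid(times, [t * abs(e) for t, e in zip(times, error)])


def iace(times, control):
    """Integral of absolute control effort."""
    return trapezoid(times, [abs(u) for u in control])


def settling_time(times, error, band):
    """First sample time after which |e| stays within the band (None if never)."""
    last_outside = -1
    for k, e in enumerate(error):
        if abs(e) > band:
            last_outside = k
    if last_outside + 1 >= len(times):
        return None
    return times[last_outside + 1]


# Usage on a synthetic record: e(t) = exp(-t) and a constant control u = 2.
times = [k * 1e-3 for k in range(10001)]
error = [math.exp(-t) for t in times]
control = [2.0] * len(times)
print(itae(times, error), iace(times, control), settling_time(times, error, 0.05))
```

Because ITAE weights late errors more heavily than early ones, it rewards fast settling and small steady-state error at the same time, which makes it a natural companion to the overshoot and adjustment-time measures.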

Table 1.

Performance indexes of the -channel.

Table 2.

Performance indexes of the -channel.

Table 3.

Performance indexes of the -channel.

From the foregoing simulation results, it can be concluded that the proposed control scheme outperforms RLFTC and RABC in terms of transient performance, tracking accuracy and control effort. Furthermore, by synthesizing the AC structure and the fixed-time PPC paradigm, the proposed control scheme offers an online RL-based model-free solution for controlling RLV and other complex industrial systems.

5. Conclusions

In this paper, an innovative RL-based tracking control scheme with fixed-time prescribed performance has been proposed for RLV under parametric uncertainties, external disturbances, and input constraints. By resorting to the FTPPF, fixed-time performance envelopes have been imposed on the attitude tracking errors. By combining the AC-based online RL structure with the super-twisting-like sliding mode control, the optimal control policy and the performance index have been learned recursively, and the robustness of the learning process has been further enhanced. Moreover, theoretical analysis has demonstrated that the attitude tracking errors converge to a preset region within a preassigned fixed time, and that the sliding variable and the weight estimation errors of the actor and critic networks are UUB. Comparative simulation results have verified the effectiveness and improved performance of the proposed control scheme. In future work, the angular rate constraints and the optimal control problem for the underactuated RLV will be addressed, and experimental investigations, such as hardware-in-the-loop simulations, will be undertaken.

Author Contributions

Conceptualization, S.X. and Y.G.; methodology, S.X.; software, S.X.; validation, Y.G., C.W. and Y.L.; formal analysis, S.X.; investigation, S.X.; resources, C.W.; data curation, Y.G.; writing—original draft preparation, S.X.; writing—review and editing, S.X., Y.G., C.W., Y.L. and L.X.; visualization, S.X., Y.G. and L.X.; supervision, C.W.; project administration, C.W.; funding acquisition, C.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the SAST Foundation grant number SAST2021-028.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors would like to thank the editors and anonymous reviewers for their constructive comments and suggestions.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| RLV | Reusable Launch Vehicle |
| NN | Neural Network |
| RL | Reinforcement Learning |
| AC | Actor-Critic |
| UUB | Uniformly Ultimately Bounded |
| HJB | Hamilton–Jacobi–Bellman |
| PPC | Prescribed Performance Control |
| FTPPF | Fixed-Time Prescribed Performance Function |
| RLFTC | Reinforcement-Learning-Based Finite-Time Control |
| RABC | Robust Adaptive Backstepping Control |
| IACE | Integral Absolute Control Effort |
| ITAE | Integral of Time and Absolute Error |

References

- Stott, J.E.; Shtessel, Y.B. Launch vehicle attitude control using sliding mode control and observation techniques. J. Frankl. Inst. B 2012, 349, 397–412.

- Tian, B.L.; Li, Z.Y.; Zhao, X.P.; Zong, Q. Adaptive Multivariable Reentry Attitude Control of RLV With Prescribed Performance. IEEE Trans. Syst. Man Cybern. Syst. 2022, 1–5.

- Acquatella, P.; Briese, L.E.; Schnepper, K. Guidance command generation and nonlinear dynamic inversion control for reusable launch vehicles. Acta Astronaut. 2020, 174, 334–346.

- Xu, B.; Wang, X.; Shi, Z.K. Robust adaptive neural control of nonminimum phase hypersonic vehicle model. IEEE Trans. Syst. Man Cybern. Syst. 2019, 51, 1107–1115.

- Zhang, L.; Wei, C.Z.; Wu, R.; Cui, N.G. Fixed-time extended state observer based non-singular fast terminal sliding mode control for a VTVL reusable launch vehicle. Aerosp. Sci. Technol. 2018, 82, 70–79.

- Ju, X.Z.; Wei, C.Z.; Xu, H.C.; Wang, F. Fractional-order sliding mode control with a predefined-time observer for VTVL reusable launch vehicles under actuator faults and saturation constraints. ISA Trans. 2022, in press.

- Cheng, L.; Wang, Z.B.; Gong, S.P. Adaptive control of hypersonic vehicles with unknown dynamics based on dual network architecture. Acta Astronaut. 2022, 193, 197–208.

- Xu, B.; Shou, Y.X.; Shi, Z.K.; Yan, T. Predefined-Time Hierarchical Coordinated Neural Control for Hypersonic Reentry Vehicle. IEEE Trans. Neural Netw. Learn. Syst. 2022.

- Werbos, P. Approximate dynamic programming for realtime control and neural modelling. In Handbook of Intelligent Control: Neural, Fuzzy and Adaptive Approaches; Van Nostrand Reinhold: New York, NY, USA, 1992; pp. 493–525.

- Vamvoudakis, K.G.; Lewis, F.L. Online actor–critic algorithm to solve the continuous-time infinite horizon optimal control problem. Automatica 2010, 46, 878–888.

- He, H.B.; Ni, Z.; Fu, J. A three-network architecture for on-line learning and optimization based on adaptive dynamic programming. Neurocomputing 2012, 78, 3–13.

- Ma, Z.Q.; Huang, P.F.; Lin, Y.X. Learning-based Sliding Mode Control for Underactuated Deployment of Tethered Space Robot with Limited Input. IEEE Trans. Aerosp. Electron. Syst. 2021, 58, 2026–2038.

- Wang, N.; Gao, Y.; Zhao, H.; Ahn, C.K. Reinforcement learning-based optimal tracking control of an unknown unmanned surface vehicle. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 3034–3045.

- Fan, Q.Y.; Yang, G.H. Adaptive actor–critic design-based integral sliding-mode control for partially unknown nonlinear systems with input disturbances. IEEE Trans. Neural Netw. Learn. Syst. 2015, 27, 165–177.

- Kuang, Z.; Gao, H.; Tomizuka, M. Precise linear-motor synchronization control via cross-coupled second-order discrete-time fractional-order sliding mode. IEEE/ASME Trans. Mechatronics 2020, 26, 358–368.

- Zhang, H.; Cui, X.; Luo, Y.; Jiang, H. Finite-horizon H∞ tracking control for unknown nonlinear systems with saturating actuators. IEEE Trans. Neural Netw. Learn. Syst. 2017, 29, 1200–1212.

- Wang, N.; Gao, Y.; Yang, C.; Zhang, X.F. Reinforcement learning-based finite-time tracking control of an unknown unmanned surface vehicle with input constraints. Neurocomputing 2022, 484, 26–37.

- Bechlioulis, C.P.; Rovithakis, G.A. Robust adaptive control of feedback linearizable MIMO nonlinear systems with prescribed performance. IEEE Trans. Autom. Control 2008, 53, 2090–2099.

- Cui, G.Z.; Yang, W.; Yu, J.P.; Li, Z.; Tao, C.B. Fixed-time prescribed performance adaptive trajectory tracking control for a QUAV. IEEE Trans. Circuits Syst. II 2021, 69, 494–498.

- Bu, X.W. Guaranteeing prescribed performance for air-breathing hypersonic vehicles via an adaptive non-affine tracking controller. Acta Astronaut. 2018, 151, 368–379.

- Wang, N.; Gao, Y.; Zhang, X.F. Data-driven performance-prescribed reinforcement learning control of an unmanned surface vehicle. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 5456–5467.

- Luo, S.B.; Wu, X.; Wei, C.S.; Zhang, Y.L.; Yang, Z. Adaptive finite-time prescribed performance attitude tracking control for reusable launch vehicle during reentry phase: An event-triggered case. Adv. Space Res. 2022, 69, 3814–3827.

- Modares, H.; Sistani, M.B.N.; Lewis, F.L. A policy iteration approach to online optimal control of continuous-time constrained-input systems. ISA Trans. 2013, 52, 611–621.

- Tan, J.; Guo, S.J. Backstepping control with fixed-time prescribed performance for fixed wing UAV under model uncertainties and external disturbances. Int. J. Control 2022, 95, 934–951.

- Yuan, Y.; Wang, Z.; Guo, L.; Liu, H.P. Barrier Lyapunov functions-based adaptive fault tolerant control for flexible hypersonic flight vehicles with full state constraints. IEEE Trans. Syst. Man Cybern. Syst. 2018, 50, 3391–3400.

- Lyshevski, S.E. Optimal control of nonlinear continuous-time systems: Design of bounded controllers via generalized nonquadratic functionals. In Proceedings of the 1998 American Control Conference, ACC (IEEE Cat. No. 98CH36207). Philadelphia, PA, USA, 26–26 June 1998; Volume 1, pp. 205–209.

- Hornik, K.; Stinchcombe, M.; White, H. Universal approximation of an unknown mapping and its derivatives using multilayer feedforward networks. Neural Netw. 1990, 3, 551–560.

- Kamalapurkar, R.; Walters, P.; Dixon, W.E. Model-based reinforcement learning for approximate optimal regulation. Automatica 2016, 64, 94–104.

- Bhasin, S.; Kamalapurkar, R.; Johnson, M.; Vamvoudakis, K.G.; Lewis, F.L.; Dixon, W.E. A novel actor–critic–identifier architecture for approximate optimal control of uncertain nonlinear systems. Automatica 2013, 49, 82–92.

- Moreno, J.A.; Osorio, M. Strict Lyapunov functions for the super-twisting algorithm. IEEE Trans. Autom. Control 2012, 57, 1035–1040.

- Wei, C.Z.; Wang, M.Z.; Lu, B.G.; Pu, J.L. Accelerated Landweber iteration based control allocation for fault tolerant control of reusable launch vehicle. Chin. J. Aeronaut. 2022, 35, 175–184.

- Zhang, C.F.; Zhang, G.S.; Dong, Q. Fixed-time disturbance observer-based nearly optimal control for reusable launch vehicle with input constraints. ISA Trans. 2022, 122, 182–197.

- Wang, Z.; Wu, Z.; Du, Y. Robust adaptive backstepping control for reentry reusable launch vehicles. Acta Astronaut. 2016, 126, 258–264.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).