Three-Dimensional Imaging via Time-Correlated Single-Photon Counting

Abstract

1. Introduction

2. Theoretical Analysis

2.1. TCSPC

2.2. Detection Probability of Signal Photon Events

2.3. Imaging Scheme Based on TCSPC

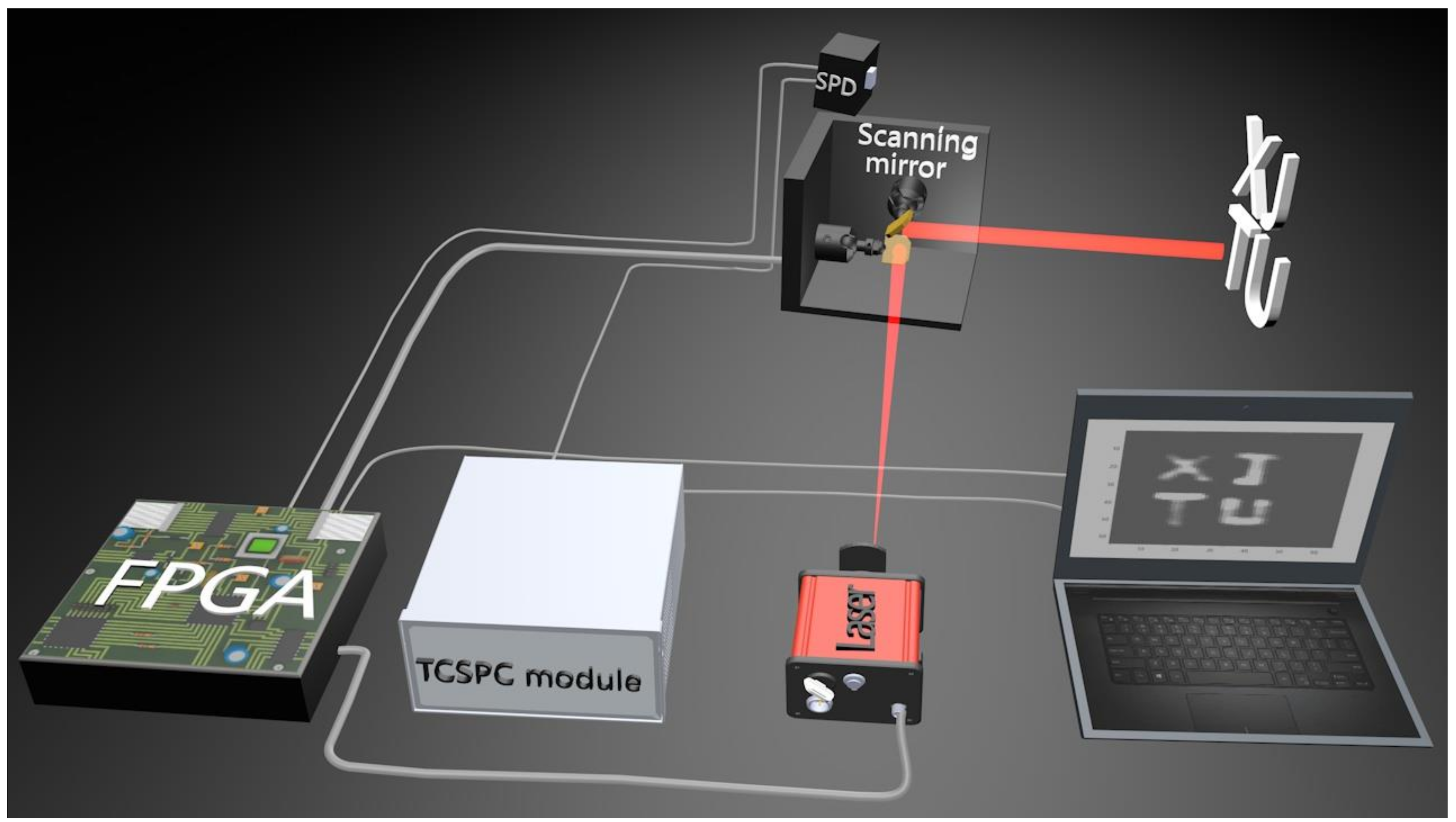

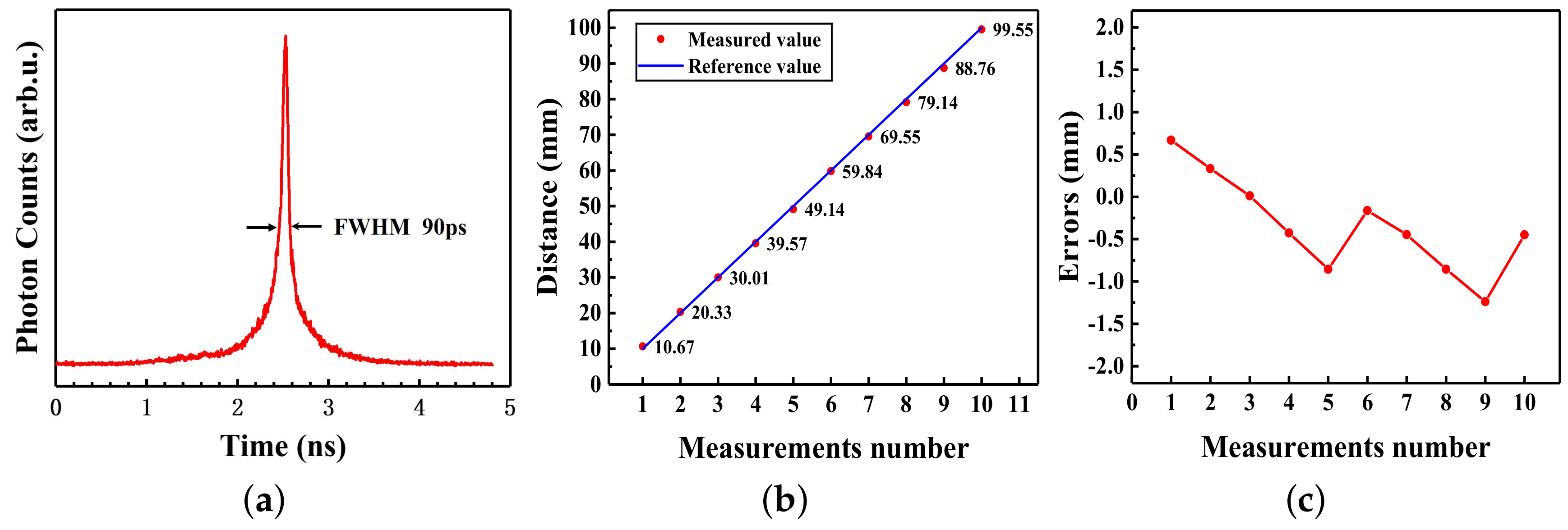

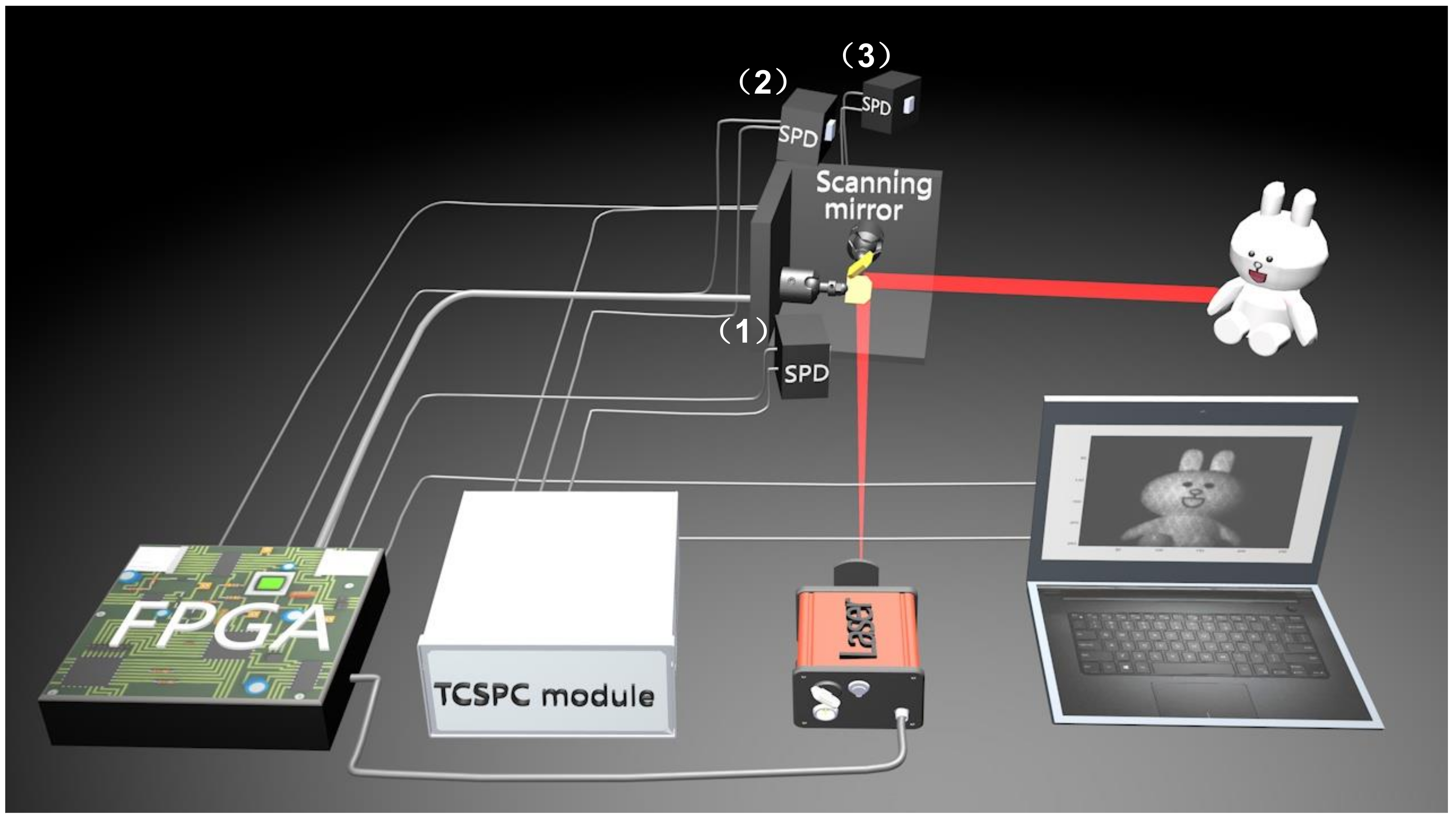

3. Experimental Setup and Results

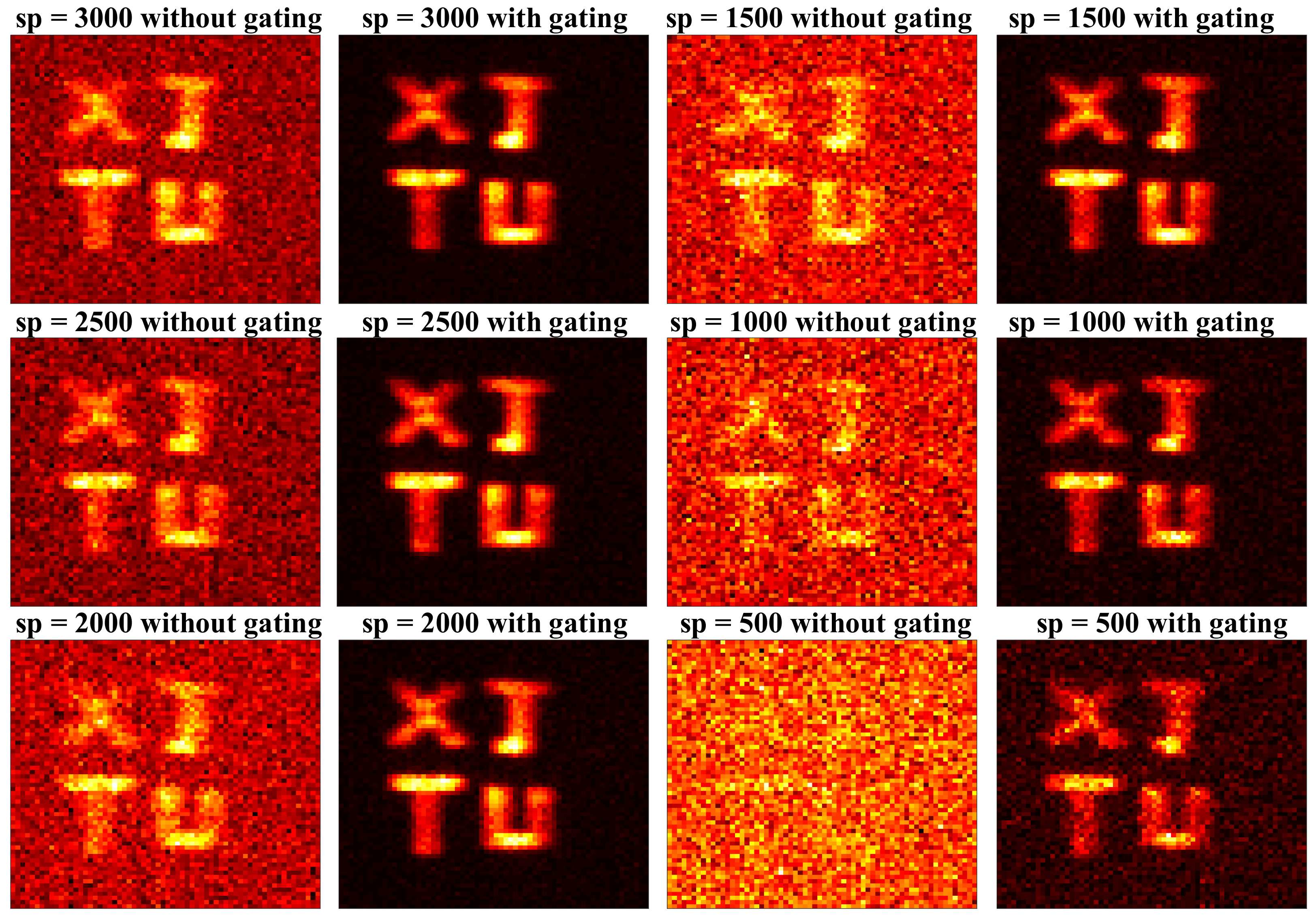

3.1. Noise Reduction Experiment Based on Range-Gated Technology

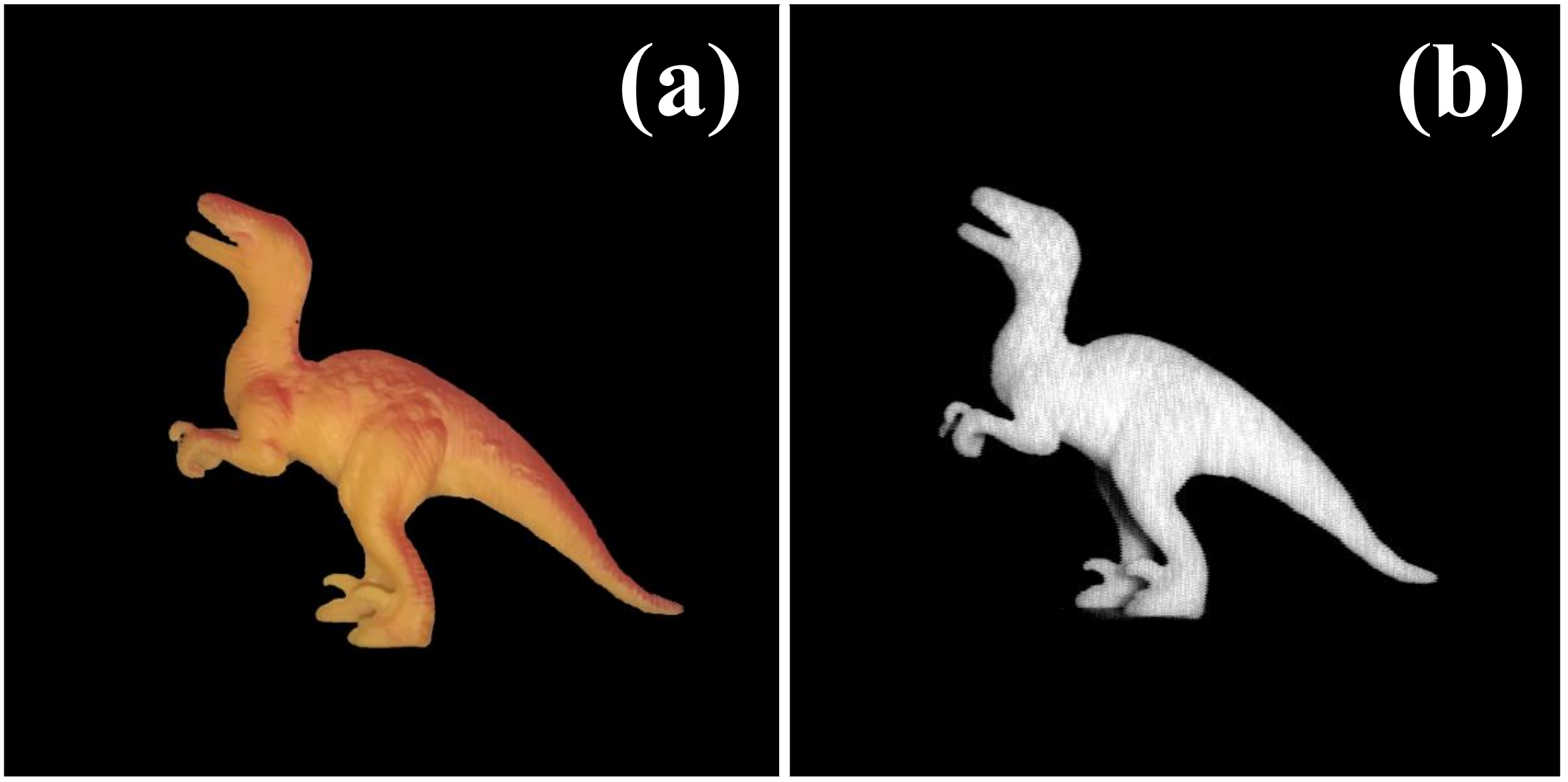

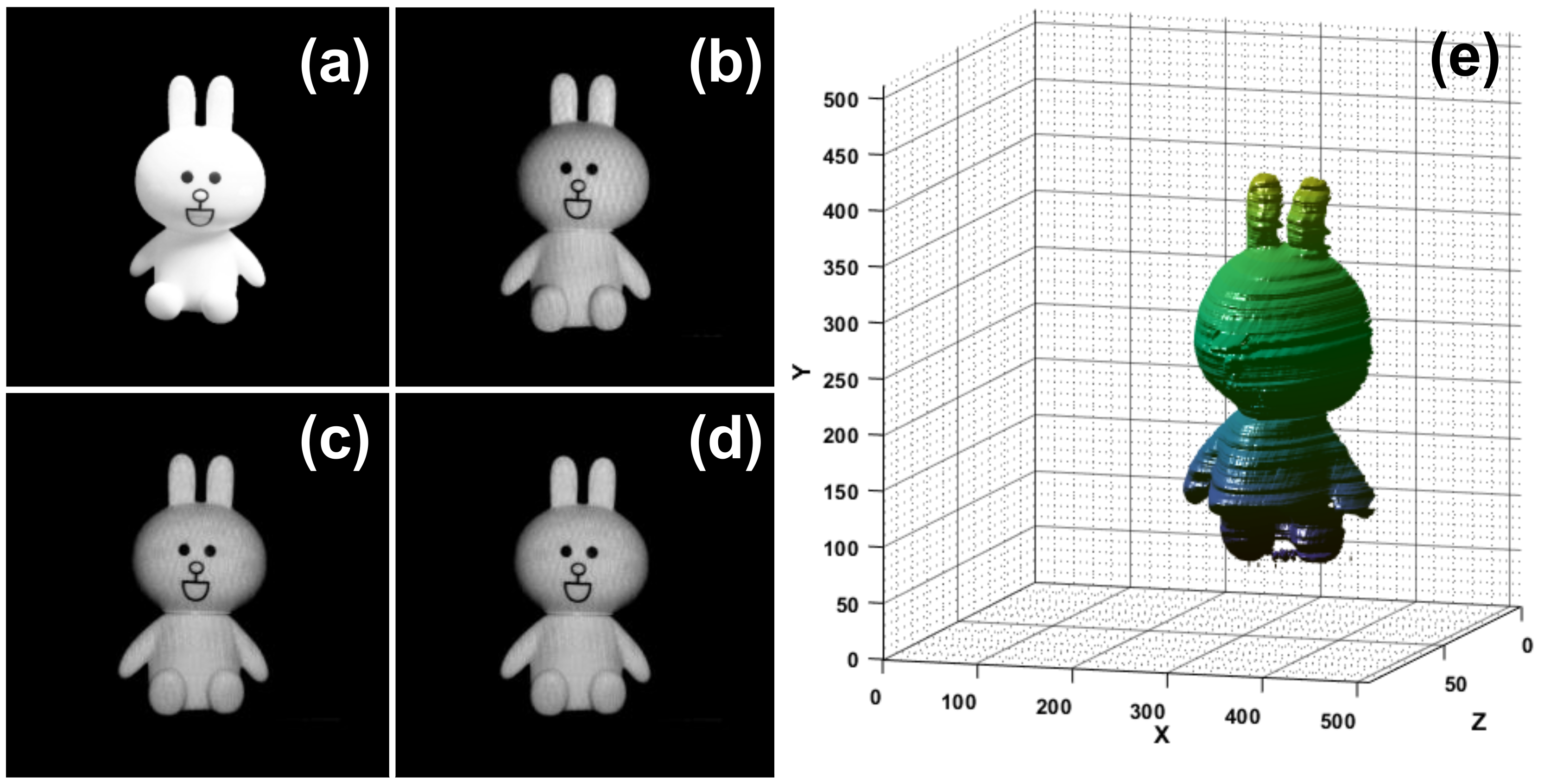

3.2. High-Resolution Imaging Experiments for Complex Objects

3.3. 3D Imaging Experiment Based on Photometric Stereo Algorithm

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Fu, C.; Zheng, H.; Wang, G.; Zhou, Y.; Chen, H.; He, Y.; Liu, J.; Sun, J.; Xu, Z. Three-Dimensional Imaging via Time-Correlated Single-Photon Counting. Appl. Sci. 2020, 10, 1930. https://doi.org/10.3390/app10061930