Abstract

People perceive the mind in two dimensions: intellectual and affective. Advances in artificial intelligence enable people to perceive the intellectual mind of a robot through their semantic interactions with it. Conversely, it is still controversial whether a robot has an affective mind of its own without any intellectual actions or semantic interactions. We investigated pain experiences when observing three different facial expressions of a virtual agent modeling affective minds (i.e., painful, unhappy, and neutral). The cold pain detection thresholds of 19 healthy subjects were measured as they watched a black screen, and changes in their cold pain detection thresholds were then evaluated as they watched the facial expressions. Subjects were asked to rate the pain intensity of each facial expression. Changes in cold pain detection thresholds were compared after adjustment for the respective pain intensities. The cold pain detection threshold increased significantly only when subjects watched the painful expression of the virtual agent. By directly evaluating intuitive pain responses when observing facial expressions of a virtual agent, we found that we ‘share’ empathic neural responses with a robot (a virtual agent), and that these responses can emerge intuitively according to the observed pain intensity.

1. Introduction

“Does a robot (a virtual agent) have a mind of its own?” This question has long been debated. Supported by remarkable advances in artificial intelligence, the answer “Yes” has recently become the dominant view, mainly in engineering. Researchers in this field have eagerly adopted neuro-computing, drawing on computational neuroscience to understand learning systems, model networks, and transform information across modalities. Such engineering applications have brought autonomous robots such as Siri (Apple Inc., Cupertino, CA, USA), Alexa (Amazon.com, Seattle, WA, USA), and Pepper (SoftBank Robotics, Tokyo, Japan) into our everyday lives, and these robots can respond reasonably to people’s requests and inquiries. Furthermore, autonomous robots have acquired the ability to understand and respond to semantic tasks, which has been framed as a robotic “theory of mind” [1]. People certainly perceive the mind of a robot through semantic interactions with it; however, such a mind simply reflects the robot’s intellectual and cognitive processes, which are autonomously intended and executed. In contrast to the intellectual mind, people perceive an affective mind in a wide variety of entities, including humans and animals, without any intellectual actions or semantic interactions (for example, when simply observing a baby giant panda playing with a toy, people intuitively perceive the panda as feeling happy). Thus, people perceive the mind in two dimensions: one intellectual and the other affective [2]. From the standpoint of the affective mind, many psychological researchers still do not agree that a robot (a virtual agent) has a mind of its own. Furthermore, with regard to the intellectual mind, some researchers argue that people merely assign semantic meanings to a robot’s actions by themselves, and that it remains undetermined whether a robot really has a mind of its own.
According to this argument, the affective mind of a robot might also be semantically created through people’s cognitive processes based on their experiences to date. Modeling multimodal expressions of affective minds in a robot has been shown to lead people to perceive it as having affective minds [3]. However, it has not yet been confirmed whether such minds are created semantically through people’s own cognitive processes or whether a robot itself has them. We therefore investigated whether pain experiences are modulated intuitively and unconsciously when observing the facial expressions of a virtual agent modeling affective minds.

2. Materials and Methods

2.1. Participants

Nineteen healthy subjects (age 31.4 ± 5.9 years; 9 male) gave their informed consent and participated in this study. The local institutional ethics committee approved this study (#2831-1).

2.2. Measurement

The cold pain detection threshold was measured using a computerized thermal tester (Pathway; Medoc, Israel) with a 32 × 32 mm Peltier thermode. The baseline temperature was set at 32 °C. Cold pain detection threshold testing was performed on the central volar part of the hand. The thermode temperature decreased gradually (1 °C/s) until the subject pressed a response button at the moment the cold sensation first became painful. Pressing the button returned the thermode to the baseline temperature (at 3 °C/s) and recorded the temperature at which the subject responded. The cold pain detection threshold was calculated as the average of three stimuli.
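The threshold computation described above can be sketched as follows. This is a minimal illustration, not the Pathway software: it assumes that the recorded temperature equals the baseline minus the cooling rate multiplied by the subject's response latency, and the 0 °C safety cut-off (`CUTOFF_C`) and the example latencies are hypothetical values not reported in this study.

```python
from statistics import mean

BASELINE_C = 32.0    # thermode baseline temperature (°C), as in the study
RAMP_C_PER_S = 1.0   # cooling rate (°C/s), as in the study
CUTOFF_C = 0.0       # hypothetical safety floor (°C); not reported in the paper


def trial_temperature(latency_s: float) -> float:
    """Thermode temperature at the moment the subject pressed the button.

    Assumes a linear ramp from baseline and no reaction-time correction.
    """
    return max(BASELINE_C - RAMP_C_PER_S * latency_s, CUTOFF_C)


def cold_pain_threshold(latencies_s: list[float]) -> float:
    """Average response temperature over the trials (three in the study)."""
    if not latencies_s:
        raise ValueError("at least one trial is required")
    return mean(trial_temperature(t) for t in latencies_s)


if __name__ == "__main__":
    # Hypothetical response latencies (s) for one subject's three trials.
    print(cold_pain_threshold([14.0, 13.0, 15.0]))  # 18.0
```

Averaging over three trials, as the study did, dampens trial-to-trial variation in reaction time and attention.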

First, the cold pain detection threshold was measured while the subjects watched a black screen (defined as the default cold pain detection threshold). Cold pain detection thresholds were then measured while the subjects watched three different facial expressions of a virtual agent (see below). The presentation order of the three facial expressions was randomized for each participant to prevent order effects. After cold pain detection thresholds had been measured for all facial expressions, the subjects were required to rate the pain intensity of each of the three facial expressions on an 11-point numerical rating scale (NRS).
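The session order described above (black screen first, then the three expressions in a per-participant random order) can be sketched as below. The paper does not say how randomization was implemented; the seeding scheme (`base_seed` plus participant ID) and the function name `presentation_order` are hypothetical.

```python
import random

EXPRESSIONS = ("painful", "unhappy", "neutral")


def presentation_order(participant_id: int, base_seed: int = 12345) -> list[str]:
    """Black screen first, then the three expressions in a random order.

    A private generator seeded with a hypothetical base seed plus the
    participant ID makes each participant's order reproducible.
    """
    rng = random.Random(base_seed + participant_id)
    shuffled = list(EXPRESSIONS)
    rng.shuffle(shuffled)
    return ["black screen"] + shuffled


if __name__ == "__main__":
    for pid in range(1, 20):  # 19 participants
        print(pid, presentation_order(pid))
```

Simple shuffling does not guarantee that each of the six possible orders appears equally often; cycling through all six permutations (full counterbalancing) would be an alternative design.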

2.3. Facial Expressions of a Virtual Agent

Each facial expression of the virtual agent was presented as a 20-s looped video clip. The video restarted continuously without pause until the cold pain detection threshold had been measured for that facial expression. The video clips contained dynamic facial expressions intended to be painful, unhappy, and neutral, all generated with three-dimensional computer graphics software for creating virtual characters (People Putty, Haptek, Santa Cruz, CA, USA). This software automatically generates moving facial expressions. Using a picture of a gender-neutral adult, the facial expressions of the virtual agent were systematically manipulated based on the Facial Action Coding System (FACS), an objective, anatomically based system that permits a full description of the basic units of facial movement associated with private experience, including pain. Forty-four different action units (AUs) have been identified. The core action units representing the facial expression of pain in adults are brow lowering (AU4), tightening of the orbital muscles surrounding the eye (AU6 and AU7), nose wrinkling/upper lip raising (AU9 and AU10), and eye closure (AU43) [4]. The pain expression can be differentiated from other facial expressions, such as neutral and unhappy expressions [5,6,7].
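The core pain action units listed above are often summarized with the Prkachin and Solomon Pain Intensity (PSPI) score, PSPI = AU4 + max(AU6, AU7) + max(AU9, AU10) + AU43. The paper does not state that PSPI was used and does not report AU intensities for the agent; the sketch below only illustrates how the listed AUs can be combined into a single FACS-based pain index, and the example intensities are hypothetical.

```python
from typing import Mapping

PAIN_AUS = ("AU4", "AU6", "AU7", "AU9", "AU10", "AU43")


def pspi(au: Mapping[str, float]) -> float:
    """PSPI = AU4 + max(AU6, AU7) + max(AU9, AU10) + AU43, ranging from 0 to 16.

    AU4 to AU10 are FACS intensities on a 0-5 scale; AU43 (eyes closed) is 0 or 1.
    Action units absent from the mapping count as 0.
    """
    def get(name: str) -> float:
        return float(au.get(name, 0.0))

    for name in PAIN_AUS:
        upper = 1.0 if name == "AU43" else 5.0
        if not 0.0 <= get(name) <= upper:
            raise ValueError(f"{name} intensity out of range: {get(name)}")
    return get("AU4") + max(get("AU6"), get("AU7")) + max(get("AU9"), get("AU10")) + get("AU43")


if __name__ == "__main__":
    # Hypothetical AU intensities for one frame of a "painful" agent expression.
    frame = {"AU4": 3, "AU6": 2, "AU7": 4, "AU9": 1, "AU10": 2, "AU43": 1}
    print(pspi(frame))  # 10.0
    print(pspi({}))     # 0.0, no pain action units present
```

Taking the maximum within the AU6/AU7 and AU9/AU10 pairs reflects that each pair describes one region (orbital tightening and levator contraction, respectively), so neither region is counted twice.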

2.4. Statistical Analysis

The cold pain detection thresholds measured while watching the black screen and each of the three facial expressions were compared with the average default cold pain detection threshold of all participants using analysis of covariance (ANCOVA), with the respective pain intensities as covariates. The pain intensity for the black screen was set to NRS = 0. Holm’s multiple comparison procedure was used for pairwise comparisons within this ANCOVA model.
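Two ingredients of the analysis above can be sketched in plain Python: covariate-adjusted condition means, as in a one-way ANCOVA with a common within-group slope, and Holm's step-down adjustment of p-values. This is a simplified illustration, not the authors' analysis code: it treats the conditions as independent groups and ignores the repeated-measures structure of the study, and the function names are hypothetical.

```python
from statistics import mean


def adjusted_means(groups: dict[str, tuple[list[float], list[float]]]) -> dict[str, float]:
    """Covariate-adjusted means for a one-way ANCOVA.

    `groups` maps a condition name to (covariate x, outcome y), e.g. pain
    intensity (NRS) and threshold change. Adjusted mean_i is
    ybar_i - b_w * (xbar_i - xbar_grand), where b_w is the pooled
    within-group slope of y on x. A group with constant x (such as the black
    screen, NRS = 0) contributes nothing to b_w but is still adjusted.
    """
    sxx = sxy = 0.0
    for x, y in groups.values():
        mx, my = mean(x), mean(y)
        sxx += sum((xi - mx) ** 2 for xi in x)
        sxy += sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    if sxx == 0:
        raise ValueError("covariate has no within-group variation")
    b_w = sxy / sxx
    grand_x = mean([xi for x, _ in groups.values() for xi in x])
    return {name: mean(y) - b_w * (mean(x) - grand_x) for name, (x, y) in groups.items()}


def holm(pvalues: list[float]) -> list[float]:
    """Holm-adjusted p-values, returned in the original order.

    The k-th smallest p-value (k = 0, 1, ...) is multiplied by (m - k), a
    running maximum enforces monotonicity, and results are capped at 1.
    """
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, i in enumerate(order):
        running_max = max(running_max, (m - rank) * pvalues[i])
        adjusted[i] = min(1.0, running_max)
    return adjusted


if __name__ == "__main__":
    # Hypothetical data: (pain ratings, threshold changes in °C) per condition.
    data = {"A": ([0, 1, 2], [1, 2, 3]), "B": ([2, 3, 4], [5, 6, 7])}
    print(adjusted_means(data))
    print(holm([0.01, 0.04, 0.03]))
```

With the hypothetical data the raw means differ by 4 °C, but after removing the part explained by the differing pain ratings the adjusted means differ by only 2 °C; this is the sense in which the comparisons above are "adjusted by the respective pain intensities".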

3. Results

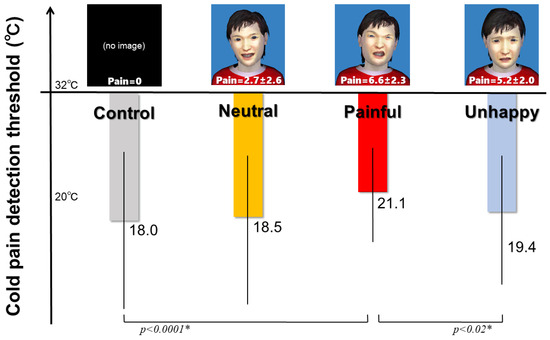

The default cold pain detection threshold of the 19 participants watching a black screen was 18.0 ± 8.2 °C. When the participants watched the virtual agent with painful, unhappy, and neutral facial expressions, cold pain detection thresholds were 21.1 ± 5.7 °C, 19.4 ± 6.9 °C, and 18.5 ± 7.7 °C, respectively (Table 1). Cold pain detection thresholds were thus quite variable. Changes in cold pain detection thresholds while watching the painful, unhappy, and neutral facial expressions were 3.1 ± 5.4 °C, −1.7 ± 4.2 °C, and −0.9 ± 2.6 °C, respectively. Pain intensities of the painful, unhappy, and neutral facial expressions were rated as 6.6 ± 2.3, 5.2 ± 2.0, and 2.7 ± 2.6, respectively. These pain ratings varied among the participants (Table 1), who ranked the facial expressions by severity as follows: painful > unhappy > neutral (n = 10); unhappy > painful > neutral (n = 3); painful = unhappy > neutral (n = 2); painful > neutral > unhappy (n = 2); unhappy > neutral > painful (n = 1); and painful = neutral > unhappy (n = 1). After adjustment for the respective pain intensities, the cold pain detection threshold increased significantly only when the participants watched the painful expression of the virtual agent (Figure 1).
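The severity patterns reported above can be derived mechanically from each participant's NRS ratings. The sketch below orders the three expressions by descending rating, joins tied expressions with "=", and tallies the patterns; the function names and the example ratings are hypothetical, not the study data.

```python
from collections import Counter
from itertools import groupby

EXPRESSIONS = ("painful", "unhappy", "neutral")  # fixed order used to list ties


def severity_pattern(ratings: dict[str, int]) -> str:
    """Order expressions by descending NRS rating; tied expressions are joined by '='."""
    # sorted() is stable, so tied expressions keep the EXPRESSIONS order.
    ranked = sorted(EXPRESSIONS, key=lambda e: -ratings[e])
    tiers = [" = ".join(group) for _, group in groupby(ranked, key=lambda e: ratings[e])]
    return " > ".join(tiers)


def tally(all_ratings: list[dict[str, int]]) -> Counter:
    """Count how many participants show each severity pattern."""
    return Counter(severity_pattern(r) for r in all_ratings)


if __name__ == "__main__":
    # Hypothetical ratings for three participants.
    ratings = [
        {"painful": 7, "unhappy": 5, "neutral": 2},
        {"painful": 6, "unhappy": 6, "neutral": 2},
        {"painful": 3, "unhappy": 1, "neutral": 3},
    ]
    for pattern, n in tally(ratings).items():
        print(f"{pattern} (n = {n})")
```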

Table 1.

Background Information and Measured Data of Individual Participants.

Figure 1.

Cold Pain Detection Thresholds while Watching Three Different Facial Expressions of a Virtual Agent and a Black Screen. Average cold pain detection thresholds (squares) of the participants while watching three different facial expressions of a virtual agent and a black screen are shown. Error bars indicate standard deviations of the respective cold pain detection thresholds. For the three facial expressions, participants were required to rate pain intensity on an 11-point numerical rating scale. The cold pain detection thresholds while watching the black screen and the three facial expressions were compared with the average default cold pain detection threshold of all participants using analysis of covariance (ANCOVA), with the respective pain intensities as covariates. Only when the participants watched the painful expression of the virtual agent did the cold pain detection threshold increase significantly. * Adjusted for the pain intensities of the facial expressions.

4. Discussion

Perception of given behaviors in another individual automatically activates one’s own representation of those behaviors, including facial expressions [8]. This phenomenon supports the recognition not only of motor contexts but also of affective minds through facial expressions and/or affective behaviors; the latter is called empathy [9]. Pain is an unpleasant sensory and emotional experience and is thus considered one embodiment of affective minds. Empathy is well documented for pain [10], and most previous studies have reported the following: (1) an observer’s rating of another individual’s pain is influenced by the extent of that individual’s facial expressions and/or behaviors; (2) the perception of pain in another individual taps into the affective component of the observer’s pain processing, notably through activation of neural substrates such as the anterior cingulate cortex and anterior insular cortex. Further, the activity of these neural substrates is correlated with the intensity of the observed pain, as rated by the observer [11]. Empathic neural responses thus involve neural circuits shared between the observer and another individual, and the observer’s circuits are activated according to the other individual’s pain intensity. Therefore, empathic neural responses have been suggested to reflect an isomorphic experience in which affective minds are “shared” between the observer and another individual. In the present study, we confirmed that the participants were healthy, but we did not collect data on their psychological conditions, such as state anxiety and depressive mood. Because psychological conditions might affect empathic behaviors when observing facial expressions, this is a limitation of the present study.

However, empathic understanding of another individual’s pain intensity does not dispel skepticism that the meanings indicated by explicit pain ratings are semantically created on the basis of the observer’s own painful experiences to date. In fact, social reasoning is most efficiently triggered in contexts where the observed behaviors of another individual are highly relevant to the observer’s own intentions and actions [12]. This suggests that empathy for the intellectual mind is reflected in conscious experience and modulated by top-down contextual factors. Apart from the intellectual mind, the present study directly evaluated intuitive pain responses when observing facial expressions of a virtual agent and found that empathic neural responses can emerge intuitively according to the observed pain intensity. This finding suggests that empathy for affective minds arises automatically and without conscious awareness of the observer’s own affective state. That is, our subjects intuitively shared pain, one embodiment of affective minds, with a virtual agent. The term “share” means to have something at the same time as someone else: if one individual has something and another individual has it at the same time, they can share it. Considering this, one can “share” an affective mind with a virtual agent that has it at the same time, regardless of one’s intellectual cognitive processes, although our sample size was limited. Consequently, we can answer the classical question “Does a robot (a virtual agent) have a mind of its own?” as follows: “Yes, a robot (a virtual agent) has a mind of its own because we intuitively share it.”

Author Contributions

Conceptualization, M.S. (Mizuho Sumitani), M.O. and M.S. (Masahiko Sumitani); study design, M.S. (Mizuho Sumitani), M.O. and M.S. (Masahiko Sumitani); data collection, M.S. (Masahiko Sumitani), H.A., K.A. and R.T.; formal analysis, M.S. (Mizuho Sumitani), M.O. and M.S. (Masahiko Sumitani); validation, M.S. (Mizuho Sumitani), M.O. and M.S. (Masahiko Sumitani); writing (original draft preparation), M.S. (Mizuho Sumitani), M.O., R.T., H.A., K.A. and M.S. (Masahiko Sumitani); writing (review and editing), all authors. All authors have read and agreed to the published version of the manuscript.

Funding

This study was funded by JSPS KAKENHI Grant Number 19H03749.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Scassellati, B. Theory of mind for a humanoid robot. Auton. Robots 2002, 12, 13–24.

- Gray, H.M.; Gray, K.; Wegner, D.M. Dimensions of mind perception. Science 2007, 315, 619.

- Pelachaud, C. Modeling multimodal expression of emotion in a virtual agent. Phil. Trans. R. Soc. B 2009, 364, 3539–3548.

- Prkachin, K.M. The consistency of facial expressions of pain: A comparison across modalities. Pain 1992, 51, 297–306.

- Hale, C.; Hadjistavropoulos, T. Emotional components of pain. Pain Res. Manag. 1997, 2, 217–225.

- LeResche, L. Facial expression in pain: A study of candid photographs. J. Nonverbal Behav. 1982, 7, 46–56.

- LeResche, L.; Dworkin, S.F. Facial expressions of pain and emotions in chronic TMD patients. Pain 1988, 35, 71–78.

- Jackson, P.L.; Decety, J. Motor cognition: A new paradigm to study self-other interactions. Curr. Opin. Neurobiol. 2004, 14, 259–263.

- Carr, L.; Iacoboni, M.; Dubeau, M.C.; Mazziotta, J.C.; Lenzi, G.L. Neural mechanisms of empathy in humans: A relay from neural systems for imitation to limbic areas. Proc. Natl. Acad. Sci. USA 2003, 100, 5497–5502.

- Goubert, L.; Craig, K.D.; Vervoort, T.; Morley, S.; Sullivan, M.J.; de C. Williams, A.C.; Cano, A.; Crombez, G. Facing others in pain: The effects of empathy. Pain 2005, 118, 285–288.

- Jackson, P.L.; Meltzoff, A.N.; Decety, J. How do we perceive the pain of others? A window into the neural processes involved in empathy. Neuroimage 2005, 24, 771–779.

- Kampe, K.K.; Frith, C.D.; Frith, U. “Hey John”: Signals conveying communicative intention toward the self activate brain regions associated with “mentalizing,” regardless of modality. J. Neurosci. 2003, 23, 5258–5263.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).