Abstract

Despite the increasing integration of government chatbots (GCs) into digital public service delivery, their real-world effectiveness remains limited. Drawing on the literature on algorithm aversion, trust-transfer theory, and perceived risk theory, this study investigates how the type of service agent (human vs. GCs) influences citizens’ trust of e-government services (TOE) and e-government service adoption intention (EGA). Furthermore, it explores whether the effect of trust of government (TOG) on TOE differs across agent types, and whether perceived risk (PR) serves as a boundary condition in this trust-transfer process. An online scenario-based experiment was conducted with a sample of 318 Chinese citizens. Data were analyzed using the Mann–Whitney U test and partial least squares structural equation modeling (PLS-SEM). The results reveal that, within the Chinese e-government context, citizens perceive higher risk (PR) and report lower adoption intention (EGA) when interacting with GCs compared to human agents—an indication of algorithm aversion. However, high levels of TOG mitigate this aversion by enhancing TOE. Importantly, PR moderates the strength of this trust-transfer effect, serving as a critical boundary condition.

1. Introduction

Integrating chatbots into digital public services has emerged as a significant global trend within artificial intelligence (AI) applications (; ). Government chatbots (GCs) have the potential to address the inherent shortcomings of traditional human-led public consultation services, such as information fragmentation, repetitive and static responses, prolonged response times, inconsistent quality, difficulties in data integration, and insufficient standardization (). Despite their considerable potential to enhance service efficiency and accessibility, the practical implementation of GCs has fallen short of expectations. For instance, compared with human customer service representatives, chatbots can significantly decrease the public’s willingness to actively contact government institutions, with a reported reduction of up to 18.1% (). Indeed, many individuals would rather endure inconveniences such as queuing than engage with government chatbots (). Thus, facilitating a smooth transition from traditional human services to AI-driven government services has become an urgent issue in digital governance practice.

A key factor underlying these challenges is the public’s skepticism regarding the capability of GCs to adequately fulfill their designated tasks (; ). Such distrust further reduces public willingness to adopt AI-driven recommendations (). More alarmingly, this skepticism could generalize to the entire digital governance framework, potentially undermining the overall effectiveness of digital government transformation strategies (). The existing literature suggests several technology-oriented strategies to enhance public trust, such as improving chatbot anthropomorphism (), enhancing technical reliability and stability (), increasing system transparency and explainability (), and strengthening commitments to security and privacy. Nevertheless, the efficacy of these strategies remains constrained by widespread public aversion to AI (; ). Empirical studies indicate that even when users recognize the technological advantages of chatbots, they frequently prefer human judgment over AI-driven solutions (; ; ).

Therefore, leveraging the public’s existing trust in government institutions offers a promising pathway to address these challenges, as this form of trust is not contingent upon individuals’ perceptions of the technology itself (). According to trust-transfer theory, individuals’ evaluations of new technologies often heavily depend on their pre-existing trust in the providing entity, such as government agencies (; ; ). For instance, a study on service channel switching found that higher satisfaction with offline services was associated with greater trust in and willingness to adopt online services ().

However, recent research has suggested that when public perceptions of government corruption increase, individuals may prefer AI-based services to avoid potential negative experiences associated with human-operated interactions (). This finding highlights a practical concern: the impact of pre-existing trust in government on citizens’ adoption of e-government services may vary depending on the type of agent (human vs. GC). Unfortunately, our understanding of this differential effect remains limited.

Building on these theoretical insights and drawing from the literature on algorithm aversion, this study integrates trust-transfer theory and perceived risk theory to examine three core research questions: (1) Does agent type (human vs. government chatbot) influence citizens’ perceptions of e-government services delivered by the agent? (2) Is the effect of trust in government on citizens’ adoption of e-government services consistent across different agent types (human vs. GC)? (3) If differences exist, are there boundary conditions that moderate the differential effect of trust in government across agent types?

To address these research questions, we conducted a scenario-based online experiment and collected data from 318 Chinese citizens via Sojump, one of China’s largest professional online survey platforms (). China was selected as the research context for two key reasons. First, previous studies have indicated that Chinese citizens tend to exhibit relatively high initial trust in AI, reflecting a cultural orientation that favors technological innovation and demonstrates openness toward automation and digital transformation (). Second, GCs have already been widely integrated into China’s e-government service infrastructure, making it a highly relevant setting for investigating the phenomena under study ().

The findings reveal that, compared with services provided by human agents, e-government services delivered by GCs are associated with higher perceived risk and lower adoption intention, indicating the presence of algorithm aversion. Furthermore, trust in government reduces perceived risk more effectively when the service agent is human. Notably, in the GC context, the trust-transfer effect from trust in government to trust in e-government services exceeds that observed for human agents only when perceived risk is low. This finding reveals a boundary condition on how agent type shapes the trust-transfer process.

From a theoretical perspective, this study systematically integrates trust-transfer theory and perceived risk theory, thereby deepening our understanding of how human–machine distinctions influence the interplay between trust in government, perceived risk, and trust in e-government services. In doing so, it extends the theoretical boundaries of the technology adoption literature. From a practical standpoint, the findings offer critical insights for government agencies, enabling them to strategically deploy chatbots under varying levels of perceived risk. Such strategies can help to mitigate public resistance toward AI-driven e-government services and enhance citizens’ acceptance and satisfaction with digital public service delivery.

2. Theoretical Framework and Hypothesis Development

2.1. Government Chatbots (GCs) in Chinese E-Government Services

E-government involves the application of information and communication technologies (ICT) by public authorities to improve the quality and efficiency of public services (). Government chatbots (GCs) serve as a digital interface through which governments interact with citizens, functioning as an integral component of e-government initiatives (). The deployment of GCs in public service delivery marks a noteworthy step forward in the ongoing development and refinement of e-government systems (; ; ).

Chatbots represent AI-driven applications utilizing natural language processing to interact with users through text or speech (; ). Government chatbots (GCs), which are specifically adapted for public administration, are customized to fulfill the unique demands of government services (). Recent advancements in AI, coupled with the COVID-19 pandemic, have accelerated the adoption of chatbots by governmental agencies, providing 24/7 interactive Q&A, transactional services, and policy interpretations (; ; ). Compared to human agents, GCs excel at delivering generalized, standardized services (; ; ; ).

In the Chinese context, GCs are not merely perceived as service-oriented technological tools but are also regarded as extensions of the national governance apparatus, bearing symbolic functions related to institutional legitimacy and social management (). With the widespread adoption of digital governance tools, public perceptions of GCs are often closely intertwined with trust in government, thereby shaping both trust in these services and citizens’ willingness to adopt them.

Moreover, existing research has highlighted that in China, the government exercises centralized control over public narratives and information dissemination. Through institutionalized propaganda mechanisms and censorship systems, a governance environment characterized by information asymmetry has been established (). This suggests that Chinese citizens may perceive the risks associated with GCs to be lower than those perceived in Western countries. Supporting this view, prior studies have reported relatively high levels of trust among Chinese citizens in AI-based tools ().

China’s large internet user base, strong state authority, and relatively underdeveloped civic consciousness have further contributed to the country’s competitive advantage in the development and implementation of GCs, positioning it ahead of many Western nations in this domain ().

2.2. The Impact of Different Agent Types

Existing research presents conflicting perspectives regarding the impact of agent type (human vs. AI) in service support contexts (). On one hand, some scholars argue that even when AI and human-provided services are functionally equivalent, individuals tend to exhibit a preference for human decision-making—a phenomenon referred to as “algorithm aversion” (; ; ). On the other hand, a growing body of research suggests that many individuals place greater trust in, and express a stronger preference for, recommendations and services provided by AI agents over those offered by humans, reflecting what is known as the “algorithm appreciation” perspective ().

In the context of e-government services, however, researchers have found that citizens’ attitudes toward AI are consistent with the “AI aversion” perspective: people prefer services provided by humans to those provided by AI ().

2.2.1. Different Agent Types and Perceived Risk (PR)

Within AI and user behavior research, perceived risk (PR) has been widely acknowledged as a critical determinant of initial trust and adoption intentions (; ). PR can be decomposed into multiple dimensions. Previous research has examined several dimensions of risk associated with the use of online services, such as performance risk, time risk, privacy risk, psychological risk, security risk, and social risk (; ). Within the context of digital government services, PR specifically encompasses concerns about the exposure of personal information, privacy violations, and potential financial losses ().

However, when considering overall risk perception, prior studies have typically treated PR as a unidimensional construct (; ; ). It refers to the subjective expectation of potential losses or failures experienced by users when pursuing desired outcomes (; ). This study adopts this particular unidimensional definition of PR.

Within public service contexts, citizens’ trust in AI agents differs notably from human agents, primarily due to AI’s perceived lack of controllability, insufficient transparency in decision-making, and absence of empathetic responsiveness and flexible judgment. Additionally, citizens express concerns about the potential of AI exacerbating social inequalities and eroding democratic accountability (; ; ).

Moreover, research has indicated that people tend to perceive greater homogeneity among AI agents than among human agents. As a result, when a service failure is attributed to an AI agent, individuals are more likely to generalize the failure to the broader category of AI agents than they would for a failure caused by a human agent (). This generalized attribution may heighten citizens’ perceived risk associated with AI-mediated services. Hence, we propose the following:

H1.

Compared to human agents, citizens perceive a higher level of perceived risk (PR) associated with services provided by government chatbots (GCs).

2.2.2. Different Agent Types and Trust of E-Government Services (TOE)

Research indicates that the successful implementation of digital government services substantially hinges upon the public’s trust in these services (). A lack of trust erodes the perceived legitimacy of public actions and diminishes citizens’ cooperative willingness toward public policies (), potentially leading digital government initiatives toward stagnation or outright failure (). In studies of information systems and public administration, trust is frequently regarded as a prerequisite for user engagement and the adoption of online services. Therefore, investigating TOE is crucial for understanding the effectiveness of GC implementation.

From the perspective of trust, variations in agent type (human vs. GCs) may significantly impact TOE (). Previous research has suggested that individuals tend to perceive artificial intelligence as an abstract and distant entity, whereas human agents are psychologically closer to users and capable of offering more emotionally resonant interactions (). Although both AI and human decision-making processes involve opaque “black box” elements, people are generally more tolerant of the opacity in human decisions due to their cultural and social familiarity (). Consequently, it has been proposed that users place greater trust in human agents, as they are perceived to be more similar to the users themselves and less associated with artificial intelligence (). Hence, we propose the following:

H2.

Compared to human agents, citizens have a lower level of trust of e-government services (TOE) associated with services provided by government chatbots (GCs).

2.2.3. Different Agent Types and E-Government Adoption Intention (EGA)

In addition to concerns regarding the service capabilities of GCs, another critical barrier to their adoption lies in the perception that AI systems lack emotional support capabilities, such as empathy (; ). This perception often leads to the rejection of services provided by AI systems. This phenomenon can be interpreted through the lens of the Stereotype Content Model (SCM), which posits that individuals typically evaluate others—including social groups—along two fundamental dimensions: competence and warmth (). Recent research in human–machine interaction has extended the applicability of this model to non-human entities, as users tend to assess AI agents, including chatbots, in ways similar to how they evaluate human agents (; ).

Within this framework, competence refers to the perceived effectiveness, efficiency, and capability of non-human agents, typically derived from evaluations of their cognitive performance (). In contrast, warmth encompasses perceptions of friendliness, kindness, and concern for others, informed by the agent’s perceived emotional responsiveness and supportiveness ().

Empirical findings consistently suggest that, compared with human agents, non-human entities such as GCs are often perceived as less warm, which in turn diminishes users’ willingness to engage with them (). This issue is especially salient in public service domains, where emotional engagement is frequently considered a fundamental expectation. Accordingly, public acceptance of GCs is markedly lower in emotionally intensive service areas, such as childcare or education, than in domains with lower emotional demands, such as waste management ().

To address this challenge, scholars have proposed various strategies. One involves enhancing the communicative style of GCs by adopting warm, socially oriented dialogue, which has been shown to improve user acceptance (). Another approach recommends hybrid service models that combine human and AI agents, thereby mitigating the negative effects of the low warmth perception stereotype associated with non-human entities in high service warmth demand contexts ().

Overall, these findings suggest that, compared to human agents, GCs’ limited capacity for emotional expression may reduce citizens’ willingness to adopt them in the context of government service delivery. Based on these insights, we propose the following hypothesis:

H3.

Compared to human agents, citizens have a lower level of e-government adoption intention (EGA) associated with services provided by government chatbots (GCs).

2.3. Trust of Government (TOG) and Perceived Risk (PR)

Prior research has demonstrated that trust has a direct impact on perceived risk (). Prior to engaging with electronic services, users typically evaluate both the entity providing the service and the underlying mechanisms through which the service is delivered (). In the context of e-government services, trust in the service-providing entity is generally conceptualized as trust in the government, while trust in the mechanism is often associated with trust in the internet (). However, as the internet has become a routine and widely accepted part of everyday life, trust in the internet is not the primary focus of the present study ().

Regarding trust of government (TOG), prior research has suggested that it can be conceptualized as a multidimensional construct encompassing three distinct dimensions: perceived competence, perceived benevolence, and perceived integrity (). Perceived competence refers to the extent to which citizens perceive government organizations as capable, efficient, skilled, and professional (). Perceived benevolence denotes the extent to which a citizen perceives a government organization to genuinely care about the public’s welfare and to be motivated by public interest (). Perceived integrity is defined as citizens’ perceptions regarding the sincerity, honesty, and reliability of a government in fulfilling its promises ().

Alternatively, other studies have viewed TOG as a unidimensional construct, defined as the extent to which the public perceives government organizations as professional, efficient, competent, genuinely concerned about citizens’ interests, and committed to fulfilling their promises (). Because this study focuses on the overall perception of trust in government, we adopt the latter approach and treat TOG as a single construct. In citizen-centered e-government service contexts, behavioral uncertainty often stems from the government agencies responsible for service delivery (). Therefore, citizens’ trust in the government can directly shape their perception of risk.

Several previous studies have investigated this relationship. For example, research on electronic tax-filing services has shown that citizens’ perceived risk of using e-filing systems is negatively influenced by their level of trust in the government (). Similarly, a study examining Chinese citizens’ use of a COVID-19 contact tracing platform found that trust in government organizations significantly reduced privacy concerns associated with using the platform (). Based on these findings, we propose the following hypothesis:

H4.

Trust of government (TOG) will directly negatively affect citizens’ perceived risk (PR) of e-government services.

2.4. Factors Affecting Trust of E-Government Services (TOE)

2.4.1. Perceived Risk Theory

Perceived risk has been widely recognized as a key antecedent influencing trust in online services (). Trust and perceived risk are generally understood to have an inverse relationship, whereby trust effectively reduces users’ perception of risk. Conversely, heightened perceptions of risk can erode trust, making it more challenging for users to develop confidence in services and products (). Previous studies have examined the relationship between perceived risk and trust across various contexts, including cross-border e-commerce transactions (), e-government services (), and chatbot-based interactions (). These investigations consistently suggest that higher levels of perceived risk are negatively associated with users’ confidence in engaging with online services.

This relationship can be partly explained by the strong link between perceived risk and negative emotions, such as anxiety and concern, which are known to directly undermine trust (). Moreover, perceived risk has been shown to exacerbate information asymmetry, reduce the perceived usefulness and ease of use of chatbots, and amplify concerns associated with interacting with such technologies. These factors collectively diminish trust in chatbot services (). Based on the aforementioned evidence, we propose the following hypothesis:

H5.

Perceived risk (PR) will directly and negatively affect citizens’ trust of e-government services (TOE).

2.4.2. Trust Transfer Theory

The principle of bounded rationality suggests that human decision-making is consciously rational but inherently limited, implying that individuals rely more on heuristics, experiences, hopes, and beliefs rather than fully rational calculations (). Trust transfer theory further elaborates that an individual’s trust in a familiar source can transfer to an unfamiliar target when the two entities are perceived to be closely related (; ). Trust transfer serves as a cognitive simplification strategy that is employed by individuals when confronting decisions involving high levels of uncertainty or perceived risk (). For instance, studies suggest that consumers with higher levels of trust in a company tend to perceive the company’s AI services more positively ().

Thus, within e-government contexts, established public trust of government (TOG) may effectively transfer to technologies and services that are directly provided or endorsed by government institutions (). For instance, public trust in judicial institutions significantly predicts citizens’ support for adopting AI-driven decision-making within legal systems (). Similarly, a study on the adoption of e-government in Jordan found that trust in the government is an important factor in predicting trust in the e-government (). Thus, we propose the following hypothesis:

H6.

Trust of government (TOG) will directly and positively affect citizens’ trust of e-government services (TOE).

2.5. Trust of E-Government Services (TOE) and E-Government Adoption Intention (EGA)

Previous studies on e-government services have defined e-government adoption intention (EGA) as the extent to which citizens are willing to engage with government services through online platforms (). As such, EGA serves as an indicator of individuals’ conscious plans to use or not to use e-government services and is frequently employed as a proxy for actual usage behavior ().

The impersonal nature of the internet and the limited control over shared information have heightened citizens’ perceived risks when using online services (). Due to these risks, citizens may resist substituting traditional face-to-face interactions with digital alternatives unless supported by a high level of trust (). Given that trust helps to reduce uncertainty, trust in e-government services can alleviate citizens’ perceived uncertainty in digital environments, thereby facilitating the formation of intentions to adopt such services (). Based on these findings, we propose the following hypothesis:

H7.

Trust of e-government services (TOE) will directly and positively affect citizens’ e-government adoption intention (EGA).

2.6. Moderating Effects of Agent Type

Although both human agents and government chatbots (GCs) represent governmental authorities in delivering public services and theoretically convey the positive effects derived from trust in government (TOG), thereby mitigating citizens’ perceived uncertainty and risk (PR) during service interactions, this study proposes that agent type moderates the relationship between TOG and PR. Specifically, it argues that the negative influence of TOG on PR is weaker when services are delivered by GCs rather than human agents.

According to attribution theory (; ; ), individuals typically form judgments about observed events along three dimensions—controllability, stability, and locus of causality. Compared to human agents, who possess clearly identifiable legal accountability, GCs lack a formal legal status, resulting in ambiguity regarding responsibility attribution (). Consequently, citizens face greater difficulty identifying specific entities accountable for potential risks or service failures.

Previous studies exploring citizens’ perceptions of service failures across different agent types (human vs. AI) found that individuals are less likely to directly attribute service failures to AI agents and are more inclined to hold governmental institutions responsible (). This differentiation in responsibility attribution further weakens the mitigating effect of TOG on citizens’ PR toward e-government services, as well as the trust-transfer effect to e-government services, when the agent type is GCs. Based on these findings, we propose the following hypothesis:

H8.

Agent type moderates the relationship between trust of government (TOG) and perceived risk (PR). Specifically, the negative effect of TOG on PR is weaker when the service provider is a government chatbot (GC) rather than a human agent.

H9.

Agent type moderates the relationship between trust of government (TOG) and trust of e-government services (TOE). Specifically, the positive effect of TOG on TOE is weaker when the service provider is a government chatbot (GC) rather than a human agent.
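One way to make H4, H5, H6, H8, and H9 concrete is to write them as regression equations with product terms. The sketch below assumes that agent type is dummy-coded (GC = 1, human = 0) and that moderation is represented by a TOG × GC interaction term, a common way of specifying moderation; the coefficient symbols are illustrative labels, not the authors' notation.

```latex
\begin{aligned}
\mathrm{PR}  &= \alpha_0 + \alpha_1\,\mathrm{TOG} + \alpha_2\,\mathrm{GC} + \alpha_3\,(\mathrm{TOG}\times\mathrm{GC}) + \varepsilon_{\mathrm{PR}} \\
\mathrm{TOE} &= \beta_0 + \beta_1\,\mathrm{TOG} + \beta_2\,\mathrm{PR} + \beta_3\,\mathrm{GC} + \beta_4\,(\mathrm{TOG}\times\mathrm{GC}) + \varepsilon_{\mathrm{TOE}} \\[4pt]
\frac{\partial\,\mathrm{PR}}{\partial\,\mathrm{TOG}} &= \alpha_1 + \alpha_3\,\mathrm{GC},
\qquad
\frac{\partial\,\mathrm{TOE}}{\partial\,\mathrm{TOG}} = \beta_1 + \beta_4\,\mathrm{GC}
\end{aligned}
```

Under these assumptions, the effect of TOG on PR is α1 for human agents and α1 + α3 for GCs, so H4 and H8 together suggest α1 < 0 and α3 > 0 (a GC slope closer to zero). Likewise, the direct effect of TOG on TOE is β1 for human agents and β1 + β4 for GCs, so H6 and H9 suggest β1 > 0 and β4 < 0, while H5 corresponds to β2 < 0.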

Furthermore, the moderating role of agent type in the effects of trust in government is likely to be context-dependent rather than static. Previous research has identified significant algorithm aversion particularly in high-risk sectors such as finance (), healthcare (), and legal services (), whereas AI solutions in low-risk contexts, such as navigation or spell checking, are more readily accepted ().

Research suggests that individuals exhibit heightened sensitivity when faced with significant potential losses (; ). Consequently, in high-risk scenarios, users become particularly cautious, closely scrutinizing the service provider’s capacity to avoid critical errors (). For instance, public trust in the accuracy and reliability of chatbot services is considerably lower in high-risk fields such as taxation and childcare support than in lower-risk activities such as waste classification (). Accordingly, PR may serve as an important moderating variable in the relationship between TOG and algorithm aversion; however, this interactive mechanism remains inadequately explored.

The results of a GC study on perceptions of government corruption indirectly support this view. The study found that when citizens perceive government institutions as lacking integrity or fairness (i.e., low-TOG scenarios) in high-risk situations such as resource allocation, they may prefer AI-based services because of concerns about potential corruption or subjective bias in human-led services (). In other words, the level of perceived risk may moderate how the effect of trust in government on trust transfer toward e-government services differs across agent types. This highlights a potentially important three-way interaction among PR, TOG, and agent type on TOE. Therefore, this study hypothesizes as follows:

H10.

Perceived risk (PR) moderates the moderating effect of agent type on the relationship between trust of government (TOG) and trust of e-government services (TOE).
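H10 describes a three-way interaction. A minimal sketch, assuming that PR is standardized (mean 0, SD 1), that agent type is dummy-coded (GC = 1, human = 0), and that TOE is regressed on TOG, GC, PR and all of their products, shows how the conditional effect of TOG on TOE would be read at low and high PR. The class name and the coefficient values are hypothetical, chosen only to mimic the qualitative pattern described in the Introduction (a GC slope that exceeds the human slope only when PR is low); they are not estimates from this study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ThreeWayModel:
    """Hypothetical TOG-related coefficients from a regression of TOE on
    TOG, GC, PR and their two- and three-way products."""
    b_tog: float        # TOG slope for human agents when PR = 0
    b_tog_gc: float     # TOG x GC interaction
    b_tog_pr: float     # TOG x PR interaction
    b_tog_gc_pr: float  # TOG x GC x PR interaction (tested by H10)

    def tog_slope(self, gc: int, pr: float) -> float:
        """Conditional effect of TOG on TOE for agent type gc
        (1 = GC, 0 = human) at standardized PR level pr."""
        return (self.b_tog
                + self.b_tog_gc * gc
                + self.b_tog_pr * pr
                + self.b_tog_gc_pr * gc * pr)

    def gc_minus_human(self, pr: float) -> float:
        """Difference between the GC and human TOG slopes at a given PR level."""
        return self.tog_slope(1, pr) - self.tog_slope(0, pr)


# Illustrative values only; they are NOT results from this study.
model = ThreeWayModel(b_tog=0.40, b_tog_gc=-0.05, b_tog_pr=-0.05, b_tog_gc_pr=-0.30)

for pr_level, label in [(-1.0, "low PR (-1 SD)"), (1.0, "high PR (+1 SD)")]:
    human = model.tog_slope(0, pr_level)
    gc = model.tog_slope(1, pr_level)
    print(f"{label}: human = {human:.2f}, GC = {gc:.2f}, "
          f"GC - human = {model.gc_minus_human(pr_level):+.2f}")
```

Under this specification, the difference between the GC and human slopes equals b_tog_gc + b_tog_gc_pr × PR, so H10 amounts to asking whether the three-way coefficient differs from zero.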

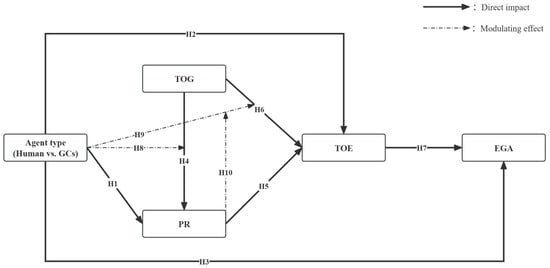

The research model of this study is shown in Figure 1.

Figure 1.

Research model of this study. Agent type: GC (government chatbot) or human. TOE: Trust of e-government services. EGA: E-government service adoption intention. TOG: Trust of government. PR: Perceived risk.

3. Methodology

3.1. Experimental Scenario Design and Measurement Instrument Development

We conducted a scenario-based online experimental study to empirically validate the proposed conceptual model. The objective was to avoid either overestimating or underestimating the impact of agent type differences on citizens’ TOE and their EGA, while also elucidating the underlying mechanisms.

Given the practical usage context of GCs, the experimental scenario was intentionally designed around a typical government consultation task, as such interactions represent one of the most common real-world applications of GCs (). Specifically, participants were asked to imagine a general information-seeking situation in which they intended to use an official online platform to inquire about a government agency’s office hours.

Within this context, we implemented a 2 (government news: positive vs. negative) × 2 (agent type: human vs. GC) between-subjects experimental design. We showed participants news reports highlighting government performance (positive or negative) and clearly describing the type of service agents (human or GC). These manipulations were designed to induce variation in TOG () and recognition of agent type ().
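The text does not state how participants were allocated to the four cells. Purely as a hedged illustration, the sketch below shows one common option, block randomization, which shuffles the four conditions within consecutive blocks of four so that cell sizes stay balanced. The helper name, the seed, and the use of 409 participant IDs are hypothetical; the survey platform may have used a different mechanism.

```python
import itertools
import random

# The four cells of the 2 (news valence) x 2 (agent type) between-subjects design.
CONDITIONS = list(itertools.product(["positive news", "negative news"], ["human", "GC"]))


def assign_conditions(participant_ids, seed=None):
    """Hypothetical block-randomization helper: shuffle the four cells within
    consecutive blocks of four so that cell sizes differ by at most one."""
    rng = random.Random(seed)
    assignment = {}
    block = []
    for pid in participant_ids:
        if not block:  # start a new shuffled block containing all four cells
            block = CONDITIONS[:]
            rng.shuffle(block)
        assignment[pid] = block.pop()
    return assignment


# Illustrative run with hypothetical participant IDs 1..409.
assignment = assign_conditions(range(1, 410), seed=42)
cell_sizes = {cell: list(assignment.values()).count(cell) for cell in CONDITIONS}
print(cell_sizes)
```

Simple (unblocked) randomization would also be valid; blocking is shown only because it guarantees near-equal cell sizes.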

After reading the scenario description, participants were asked to complete measurement items corresponding to each construct in the theoretical model, including trust of government (TOG; 3 items) (), perceived risk (PR; 2 items) (), trust of e-government services (TOE; 3 items) (), and e-government service adoption intention (EGA; 3 items) (). To ensure the accuracy and validity of the research findings, all measurement items were drawn from well-established scales in the existing literature and were appropriately adapted to fit the specific context of this study.

All measurement items for the study variables were assessed using five-point Likert scales ranging from 1 (“strongly disagree”) to 5 (“strongly agree”). The final section of the questionnaire collected demographic information, including participants’ age, gender, educational background, and frequency of using AI tools.
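For descriptive purposes, construct scores are often summarized as the mean of their items. The sketch below assumes hypothetical item identifiers (TOG1 to TOG3, PR1 and PR2, TOE1 to TOE3, EGA1 to EGA3) matching the item counts reported above. PLS-SEM estimates indicator weights from the data, so these equally weighted means are a simplification rather than the composite scores a PLS-SEM model would produce.

```python
from statistics import mean

# Hypothetical item identifiers; item counts follow the text (TOG 3, PR 2, TOE 3, EGA 3).
CONSTRUCT_ITEMS = {
    "TOG": ["TOG1", "TOG2", "TOG3"],
    "PR": ["PR1", "PR2"],
    "TOE": ["TOE1", "TOE2", "TOE3"],
    "EGA": ["EGA1", "EGA2", "EGA3"],
}


def score_constructs(response):
    """Return the unweighted mean of each construct's five-point Likert items
    (1 = strongly disagree ... 5 = strongly agree) for one respondent."""
    scores = {}
    for construct, items in CONSTRUCT_ITEMS.items():
        values = [response[item] for item in items]
        if any(not 1 <= v <= 5 for v in values):
            raise ValueError(f"{construct} has an item outside the 1-5 range")
        scores[construct] = mean(values)
    return scores


# One made-up respondent.
example = {"TOG1": 4, "TOG2": 5, "TOG3": 4, "PR1": 2, "PR2": 3,
           "TOE1": 4, "TOE2": 4, "TOE3": 3, "EGA1": 5, "EGA2": 4, "EGA3": 4}
print(score_constructs(example))
```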

In addition, to ensure data quality, one attention check item was embedded in the questionnaire, asking participants to recall who provided the service (a human or a GC). Responses that did not match the assigned condition were excluded from the analysis, helping to ensure that the retained participants had attended to the scenario and provided meaningful responses.

Appendix A lists all measurement items used in the study. Appendix B provides descriptions of all scenarios.

3.2. Data Collection

The data were collected through an online survey distributed to Chinese citizens by Sojump, one of China’s largest professional online survey companies ().

Given the shared linguistic and cultural background of all respondents, the online questionnaire was administered in Chinese to ensure accurate comprehension and response (). Prior to participation, all respondents were provided with an informed consent form and were fully briefed on the purpose of the study. The data collected were used exclusively for academic purposes and handled in strict accordance with the principles of confidentiality, voluntary participation, and anonymity. Over a five-day data collection period, a total of 409 questionnaires were returned, of which 91 were excluded because of failed attention checks, respondents being under the age of 18, or incomplete information, resulting in 318 valid samples.
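The three exclusion rules (attention check, minimum age, completeness) can be expressed as a single filter; applied to the 409 returned questionnaires, they left 318 cases (409 − 91 = 318). The sketch below is a hypothetical illustration: the record fields and function names are assumptions, not the structure of the actual survey export.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Response:
    """One returned questionnaire (hypothetical fields for illustration)."""
    assigned_agent: str            # condition shown: "human" or "GC"
    recalled_agent: Optional[str]  # answer to the attention-check item
    age: Optional[int]             # self-reported age in years
    complete: bool                 # True if all required items were answered


def is_retained(r: Response) -> bool:
    """Keep a response only if it passes all three exclusion rules."""
    passed_attention_check = r.recalled_agent == r.assigned_agent
    is_adult = r.age is not None and r.age >= 18
    return passed_attention_check and is_adult and r.complete


def apply_exclusions(responses: List[Response]) -> Tuple[List[Response], int]:
    """Return the retained responses and the number excluded."""
    kept = [r for r in responses if is_retained(r)]
    return kept, len(responses) - len(kept)


# Made-up responses: one valid case plus one case per exclusion rule.
sample = [
    Response("GC", "GC", 35, True),         # retained
    Response("GC", "human", 29, True),      # fails the attention check
    Response("human", "human", 17, True),   # under 18
    Response("human", "human", 44, False),  # incomplete
]
kept, excluded = apply_exclusions(sample)
print(f"retained {len(kept)}, excluded {excluded}")
```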

Among the respondents, 58.8% were female (n = 187) and 54.7% were between the ages of 31 and 40 (n = 147). Most held a university degree (n = 288; 90.2%), and 62.3% (n = 198) reported frequent use of AI tools. This suggests that many respondents are familiar with both human and AI-based services and represent a likely user group for future GCs. We therefore consider the sample suitable for further analysis, although its composition limits generalizability (see Section 6.3). A detailed summary of sample demographics is presented in Table 1.

Table 1.

Summary of sample demographics (N = 318).

3.3. Research Ethics Review

This study was approved by the Scientific Research Ethics Committee of the School of Economics and Management, Liaoning University of Technology.

4. Data Analysis and Results

4.1. Data Analysis

As noted in Section 3.1, participants were asked to recall whether the service agent described in the scenario was a human or a GC, and responses inconsistent with the assigned scenario were removed from the dataset. Because all retained participants correctly identified the agent type, this check supports the effectiveness of the agent type manipulation.

The data were analyzed using IBM SPSS version 29.0. The normality of variables was assessed using the Kolmogorov–Smirnov test, and the results indicated that the data did not conform to a normal distribution ().

Therefore, a nonparametric approach, specifically the Mann–Whitney U test, was applied to examine whether significant differences existed between the GC and human agent groups regarding PR (H1), TOE (H2), and EGA (H3).

Previous research has noted that a primary limitation of nonparametric tests is that they are designed to test statistical hypotheses rather than to estimate parameters (). Consequently, while the Mann–Whitney U test provides p-values, it does not directly estimate the magnitude of the relationships between variables. This limitation does not affect the interpretation of the present results, however, because the analysis is primarily concerned with whether an association exists between agent type and the investigated variables.
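The test itself can be sketched in a few lines of standard-library Python. This is a minimal illustration, not the SPSS procedure used in the study: it assumes the large-sample normal approximation with a tie correction (relevant for five-point Likert data, which contain many ties) and no continuity correction. It also returns r = |Z|/√N, a standardized effect size commonly reported alongside the test. The function names and example scores are hypothetical.

```python
import math


def rank_with_ties(values):
    """Return 1-based ranks, giving tied values the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # mean of ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    return ranks


def mann_whitney_u(group_a, group_b):
    """Two-sided Mann-Whitney U test (normal approximation, tie-corrected).

    Returns (U for group_a, z, two-sided p, effect size r = |z| / sqrt(N)).
    """
    n1, n2 = len(group_a), len(group_b)
    n = n1 + n2
    pooled = list(group_a) + list(group_b)
    ranks = rank_with_ties(pooled)
    u_a = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    mean_u = n1 * n2 / 2
    # Tie correction: sum of (t^3 - t) over each group of t tied values.
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    tie_term = sum(t ** 3 - t for t in counts.values())
    var_u = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    z = (u_a - mean_u) / math.sqrt(var_u)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p from the standard normal
    r = abs(z) / math.sqrt(n)
    return u_a, z, p, r


if __name__ == "__main__":
    gc_scores = [4, 3, 4, 5, 3, 4]     # hypothetical PR ratings, GC condition
    human_scores = [3, 2, 3, 4, 2, 3]  # hypothetical PR ratings, human condition
    u, z, p, r = mann_whitney_u(gc_scores, human_scores)
    print(f"U = {u:.1f}, Z = {z:.2f}, p = {p:.3f}, r = {r:.2f}")
```

By the usual rule of thumb, r values around 0.1, 0.3, and 0.5 are read as small, medium, and large effects, which would complement the p-values without changing the hypothesis tests.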

In addition, we employed partial least squares structural equation modeling (PLS-SEM) to test our research model. This approach was chosen because PLS-SEM is well-suited for handling non-normally distributed data and for analyzing complex model structures (). Compared to covariance-based structural equation modeling (CB-SEM), which is more appropriate for testing well-established theoretical frameworks, PLS-SEM is better suited for prediction-oriented and explanatory research objectives (). Moreover, PLS-SEM has demonstrated strong performance in testing both mediation and moderation effects, making it a suitable analytical method for our proposed model (). We followed the recommendations of Hair et al. for model testing, assessing both the measurement model and the structural model ().

4.2. Common Method Bias

To assess the potential presence of common method bias, we employed Harman’s single-factor test, a widely used diagnostic method for this purpose (). The results indicated that the first principal component accounted for less than 40% of the total variance, suggesting that common method bias was not a serious concern in this study (; ).
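Harman's test is typically run as an unrotated factor or principal component analysis of all measurement items. A minimal standard-library sketch, assuming the principal-component variant: the share of variance captured by the first component equals the largest eigenvalue of the item correlation matrix divided by the number of items, found here by power iteration. The function names and item data are hypothetical, and this is not the software routine used in the study.

```python
import math


def correlation_matrix(columns):
    """Pearson correlation matrix for a list of equally long item columns."""
    k = len(columns)
    n = len(columns[0])
    means = [sum(c) / n for c in columns]
    norms = [math.sqrt(sum((x - m) ** 2 for x in c)) for c, m in zip(columns, means)]
    corr = [[0.0] * k for _ in range(k)]
    for i in range(k):
        for j in range(k):
            cross = sum((a - means[i]) * (b - means[j]) for a, b in zip(columns[i], columns[j]))
            corr[i][j] = cross / (norms[i] * norms[j])
    return corr


def first_component_share(corr, iterations=500):
    """Share of total variance explained by the first unrotated principal component.

    Power iteration finds the largest eigenvalue of the correlation matrix; with
    standardized items, total variance equals the number of items.
    """
    k = len(corr)
    vec = [1.0] * k
    eigenvalue = 0.0
    for _ in range(iterations):
        new = [sum(corr[i][j] * vec[j] for j in range(k)) for i in range(k)]
        norm = math.sqrt(sum(x * x for x in new))
        vec = [x / norm for x in new]
        # Rayleigh quotient of the current unit vector estimates the eigenvalue.
        eigenvalue = sum(vec[i] * sum(corr[i][j] * vec[j] for j in range(k)) for i in range(k))
    return eigenvalue / k


if __name__ == "__main__":
    items = [[4, 5, 3, 4, 2], [4, 4, 3, 5, 2], [3, 5, 2, 4, 1]]  # hypothetical responses
    share = first_component_share(correlation_matrix(items))
    print(f"First component explains {share:.1%} of total variance")
```

Under the criterion used in the text, a share below 40% would be read as no serious common method bias.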

4.3. Measurement Model

Measurement models are commonly employed to evaluate the quality of measurement instruments in empirical research, with the primary goal of ensuring that the items accurately capture the underlying constructs they are intended to measure (). This objective is achieved by assessing various criteria, including factor loadings, internal consistency reliability (Cronbach’s alpha), composite reliability (CR), average variance extracted (AVE), and discriminant validity (; ). To ensure the reliability and validity of the measurement items for all constructs in this study, we evaluated the measurement model using SmartPLS 4.0 ().

As shown in Table 2, all standardized factor loadings exceeded 0.5, all AVE values were greater than 0.5, and all CR values surpassed the recommended threshold of 0.6 (). In addition, the Cronbach’s alpha values for all constructs were above the commonly accepted cutoff of 0.7 (). These results indicate that the reliability and convergent validity of the measurement items were satisfactory and met the recommended standards.
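The reliability and convergent-validity criteria above rest on standard formulas, sketched below. This is a minimal illustration assuming reflective constructs with standardized outer loadings; it uses the common composite reliability formula (ρc), and other coefficients reported by PLS software (for example ρA) are computed differently. The function names and example values are hypothetical, not the values in Table 2.

```python
def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized loadings."""
    return sum(lam * lam for lam in loadings) / len(loadings)


def composite_reliability(loadings):
    """CR (rho_c) = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances).

    Assumes standardized loadings, so each item's error variance is 1 - loading^2.
    """
    s = sum(loadings)
    error = sum(1 - lam * lam for lam in loadings)
    return s * s / (s * s + error)


def cronbach_alpha(items):
    """Cronbach's alpha from raw scores: one list per item, same respondents in each."""
    k = len(items)
    n = len(items[0])

    def variance(xs):
        m = sum(xs) / len(xs)
        return sum((x - m) ** 2 for x in xs) / (len(xs) - 1)

    totals = [sum(item[r] for item in items) for r in range(n)]
    return k / (k - 1) * (1 - sum(variance(item) for item in items) / variance(totals))


if __name__ == "__main__":
    loadings = [0.78, 0.82, 0.85]  # hypothetical standardized loadings for a 3-item construct
    print(f"AVE = {average_variance_extracted(loadings):.3f}")
    print(f"CR  = {composite_reliability(loadings):.3f}")
```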

Table 2.

Reliability and validity results for the constructs.

Discriminant validity was assessed using the heterotrait–monotrait ratio of correlations (HTMT). HTMT is an established criterion defined as the mean of the correlations between items measuring different constructs (heterotrait–heteromethod correlations) divided by the geometric mean of the average correlations among items measuring the same construct (monotrait–heteromethod correlations). When all HTMT values are below the recommended threshold of 0.85, the constructs are considered sufficiently distinct (; ). As shown in Table 3, all HTMT values in this study ranged from 0.534 to 0.847, below this cutoff, indicating that the measurement instrument demonstrates satisfactory discriminant validity.
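The HTMT ratio for a pair of constructs can be computed directly from the item correlation matrix. This minimal sketch assumes every item is positively keyed (so raw correlations are used) and that each construct has at least two items; the function names, item indices, and correlation values are hypothetical.

```python
import math
from itertools import combinations


def _mean(xs):
    return sum(xs) / len(xs)


def htmt(corr, items_i, items_j):
    """Heterotrait-monotrait ratio for constructs i and j.

    corr    -- item correlation matrix (list of lists)
    items_i -- indices of the items measuring construct i (at least two)
    items_j -- indices of the items measuring construct j (at least two)
    """
    hetero = [corr[a][b] for a in items_i for b in items_j]    # between-construct pairs
    mono_i = [corr[a][b] for a, b in combinations(items_i, 2)]  # within construct i
    mono_j = [corr[a][b] for a, b in combinations(items_j, 2)]  # within construct j
    return _mean(hetero) / math.sqrt(_mean(mono_i) * _mean(mono_j))


if __name__ == "__main__":
    # Hypothetical correlations: items 0-1 measure one construct, items 2-3 another.
    corr = [
        [1.0, 0.8, 0.5, 0.5],
        [0.8, 1.0, 0.5, 0.5],
        [0.5, 0.5, 1.0, 0.6],
        [0.5, 0.5, 0.6, 1.0],
    ]
    value = htmt(corr, [0, 1], [2, 3])
    print(f"HTMT = {value:.3f}; below 0.85: {value < 0.85}")
```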

Table 3.

Discriminant validity (HTMT).

4.4. Measurement Invariance

Because this study collected data under two distinct scenario conditions, namely human agents and GCs, it was essential to ensure that the measurement instruments functioned equivalently across both groups (). To this end, we followed prior methodological recommendations and assessed measurement invariance using the three-step Measurement Invariance of Composite Models (MICOM) procedure: (1) assessment of configural invariance, (2) assessment of compositional invariance, and (3) assessment of the equality of composite mean values and variances ().

The measurement models for the human agent group and the GC group employed identical measurement items, thereby establishing configural invariance of the proposed model structure (). Compositional invariance was also supported: as shown in Table 4, for all constructs the original correlations between composite scores computed with group-specific weights exceeded the 5.00% quantile of their permutation-based empirical distribution, indicating that the composites were formed equivalently in both groups (). Finally, all original differences in composite means and variances fell within the boundaries of their corresponding 95% confidence intervals, indicating no significant differences between the human and GC groups (see Table 5; ). Together, these results indicate that the measurement model exhibits satisfactory measurement invariance across the two conditions.
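Step 3 of MICOM compares composite means and variances across groups with a permutation test. The sketch below illustrates only the mean comparison (step 3a), assuming composite scores have already been computed for every respondent from the pooled-sample weights; the variance comparison (step 3b) follows the same logic applied to the difference in (log) composite variances. The function names, seed, and scores are hypothetical, and this is not the routine built into SmartPLS.

```python
import random


def _mean(xs):
    return sum(xs) / len(xs)


def permutation_interval(scores_a, scores_b, n_perm=5000, seed=1):
    """Observed difference in composite means plus a 95% permutation interval.

    Group labels are reshuffled n_perm times; if the groups do not differ, the
    observed difference should fall inside the 2.5%-97.5% range of the
    permuted differences.
    """
    rng = random.Random(seed)  # fixed seed so results are reproducible
    pooled = list(scores_a) + list(scores_b)
    n_a = len(scores_a)
    observed = _mean(scores_a) - _mean(scores_b)
    diffs = []
    for _ in range(n_perm):
        rng.shuffle(pooled)
        diffs.append(_mean(pooled[:n_a]) - _mean(pooled[n_a:]))
    diffs.sort()
    lower = diffs[int(0.025 * n_perm)]
    upper = diffs[int(0.975 * n_perm) - 1]
    return observed, lower, upper


if __name__ == "__main__":
    human = [0.1, -0.3, 0.4, 0.2, -0.1, 0.0, 0.3, -0.2]  # hypothetical composite scores
    gc = [0.0, -0.2, 0.3, 0.1, -0.4, 0.2, 0.1, -0.1]
    obs, lo, hi = permutation_interval(human, gc)
    print(f"difference = {obs:.3f}, 95% interval = [{lo:.3f}, {hi:.3f}]")
```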

Table 4.

Compositional invariance assessment.

Table 5.

Composite mean value and variance assessment.

4.5. Differences Between Different Types of Agents

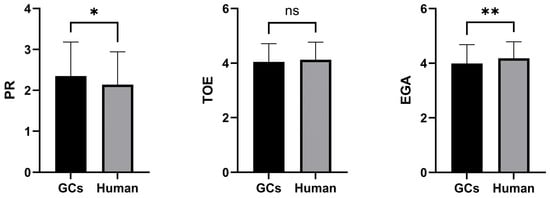

The Mann–Whitney U test revealed no significant difference between the GC group and the human group in TOE scores (Z = −1.09, p > 0.05). However, significant differences were observed in PR (Z = −2.30, p < 0.05) and EGA (Z = 2.64, p < 0.05) scores. These findings suggest that agent type may not affect citizens’ TOE but may influence their PR and EGA. The results are presented in Figure 2 and Table 6.

Figure 2.

The results of the Mann–Whitney U test. * = p < 0.05; ** = p < 0.01; ns = not significant.

Table 6.

Results of the Mann–Whitney U test.

4.6. Structural Model and Hypothesis Testing

Following the recommendations of prior research, we examined the variance inflation factor (VIF) values for all measurement items to assess potential multicollinearity (). The results indicated that all VIF values (1.000–1.276) were below the threshold of 5, suggesting that multicollinearity was not a problem in this study ().
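For a given predictor j, VIF_j = 1/(1 − R_j²), where R_j² comes from regressing that predictor on the remaining predictors. Equivalently, the VIFs are the diagonal elements of the inverse of the predictors' correlation matrix, which the minimal sketch below exploits. The helper names and example correlations are hypothetical, and the small Gauss–Jordan inverse uses partial pivoting but no check for singular matrices.

```python
def invert(matrix):
    """Gauss-Jordan inverse of a small, non-singular square matrix (partial pivoting)."""
    n = len(matrix)
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [x / p for x in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [x - factor * y for x, y in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def vifs(predictor_corr):
    """VIF for each predictor: the diagonal of the inverse correlation matrix."""
    inv = invert(predictor_corr)
    return [inv[j][j] for j in range(len(inv))]


if __name__ == "__main__":
    corr = [[1.0, 0.3, 0.2], [0.3, 1.0, 0.4], [0.2, 0.4, 1.0]]  # hypothetical predictors
    print([round(v, 3) for v in vifs(corr)])
```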

Subsequently, we evaluated the structural model by examining the coefficient of determination (R²), the standardized path coefficients, and the model’s predictive relevance (Q²) ().

R² is a standard indicator for evaluating structural models, capturing the proportion of variance in each endogenous construct explained by its predictors (). () classified R² values above 0.67 as “high,” between 0.33 and 0.67 as “medium,” and between 0.19 and 0.33 as “weak.” The R² values for all endogenous variables exceeded 0.19 (see Table 7), suggesting that the structural model exhibits acceptable explanatory power. In addition, the model’s predictive relevance was evaluated with the blindfolding procedure, using the Stone–Geisser Q² statistic. A model is considered to have predictive relevance when all Q² values are greater than zero (). As shown in Table 7, the Q² values for all endogenous constructs are greater than zero, indicating that the model possesses satisfactory predictive relevance ().
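Both criteria reduce to simple rules. The sketch below labels R² with the cut-offs cited above and computes Q² = 1 − SSE/SSO for a set of data points omitted during blindfolding. It is a simplified illustration that assumes standardized indicators (so SSO is the sum of squared omitted values) and omits the blindfolding loop itself; the function names and the "below weak" label are hypothetical.

```python
def classify_r2(r2):
    """Label an R² value with the 0.67 / 0.33 / 0.19 cut-offs cited in the text."""
    if r2 > 0.67:
        return "high"
    if r2 >= 0.33:
        return "medium"
    if r2 >= 0.19:
        return "weak"
    return "below weak"  # hypothetical label for values under the lowest cut-off


def stone_geisser_q2(omitted, predicted):
    """Q² = 1 - SSE / SSO over the data points omitted during blindfolding.

    Assumes standardized indicators, so SSO is the error of predicting every
    omitted value by the mean of zero. Q² > 0 means the model predicts better
    than that trivial benchmark.
    """
    sse = sum((y - y_hat) ** 2 for y, y_hat in zip(omitted, predicted))
    sso = sum(y ** 2 for y in omitted)
    return 1 - sse / sso


if __name__ == "__main__":
    print(classify_r2(0.42))
    print(round(stone_geisser_q2([0.9, -1.2, 0.4, 1.5], [0.6, -0.8, 0.1, 1.0]), 3))
```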

Table 7.

Coefficients of determination (R²) and predictive relevance (Q²).

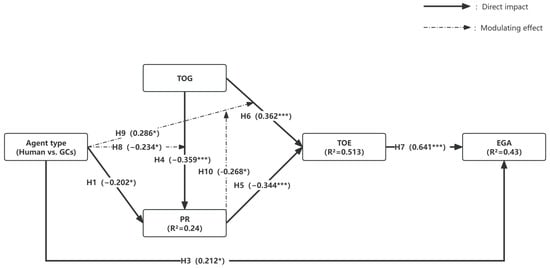

The structural model analysis produced several noteworthy findings (see Figure 3). First, agent type had a significant negative effect on PR (β = −0.202, p < 0.05), supporting hypothesis H1. Because agent type was coded as 0 for GCs and 1 for human agents, this negative coefficient indicates that interactions with human agents are associated with lower PR than interactions with GCs; in other words, citizens perceive less risk when served by human agents than by government chatbots. Conversely, the direct relationship between agent type and TOE was not statistically significant (β = −0.148, p > 0.05); thus, hypothesis H2 was not supported. Notably, agent type had a significant positive effect on EGA (β = 0.212, p < 0.05), supporting hypothesis H3 and suggesting that interactions with human agents, relative to GCs, enhance citizens’ intention to adopt e-government services.

Figure 3.

Results of structural model and hypothesis testing. Agent type: GC (government chatbot) or human. TOE: Trust of e-government services. EGA: E-government service adoption intention. TOG: Trust of government. PR: Perceived risk. Agent type was coded as a binary variable (0 = “GCs”, 1 = “human”). NS: Not significant. * = p < 0.05; *** = p < 0.001.
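The sign interpretation above follows from the 0/1 coding of agent type. With a single dummy predictor, the least-squares slope equals the difference in group means (human minus GC), so a negative coefficient means the human group scores lower. The minimal sketch below demonstrates this with hypothetical PR scores. Note that the PLS-SEM path in the study is a standardized, partial coefficient estimated alongside other predictors of PR, so it is not numerically equal to this raw mean difference; standardizing rescales the coefficient but does not change its sign.

```python
def ols_slope(x, y):
    """Unstandardized slope from a simple least-squares regression of y on x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    return sxy / sxx


# Hypothetical PR scores; agent type coded 0 = GC, 1 = human, as in the study.
gc_pr = [4, 3, 4, 5]
human_pr = [3, 2, 3, 4]
agent = [0] * len(gc_pr) + [1] * len(human_pr)
pr = gc_pr + human_pr

slope = ols_slope(agent, pr)
mean_gap = sum(human_pr) / len(human_pr) - sum(gc_pr) / len(gc_pr)
print(slope, mean_gap)  # both equal the human-minus-GC difference in mean PR
```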

Regarding institutional trust, TOG significantly reduced perceived risk (β = −0.359, p < 0.001), thus supporting hypothesis H4. Furthermore, perceived risk negatively affected TOE (β = −0.344, p < 0.001), while TOG had a positive direct effect on TOE (β = 0.362, p < 0.001); hence, hypotheses H5 and H6 were both supported. These findings provide empirical evidence of a trust-transfer mechanism, suggesting that greater institutional trust enhances trust in specific e-government services, both directly and indirectly, through the mitigation of perceived risk. In turn, TOE exhibited a robust positive relationship with EGA (β = 0.641, p < 0.001), thereby confirming hypothesis H7 and underscoring TOE’s central mediating role in facilitating e-government adoption.
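As a rough worked illustration of the two trust-transfer routes, the product-of-coefficients approach gives a point estimate of the indirect effect of TOG on TOE via PR from the path coefficients reported above. This sketch sets aside the moderation terms in the model, so it is an approximate rather than a conditional estimate, and it says nothing about significance, which in PLS-SEM is usually assessed by bootstrapping the indirect effect. The two negative paths multiply to a positive indirect effect: higher TOG lowers PR, and lower PR raises TOE.

```latex
\begin{aligned}
\text{indirect}_{\mathrm{TOG}\to\mathrm{PR}\to\mathrm{TOE}}
  &= \beta_{\mathrm{TOG}\to\mathrm{PR}} \times \beta_{\mathrm{PR}\to\mathrm{TOE}}
   = (-0.359)(-0.344) \approx 0.123 \\
\text{total}_{\mathrm{TOG}\to\mathrm{TOE}}
  &= \beta_{\mathrm{TOG}\to\mathrm{TOE}} + \text{indirect}
   \approx 0.362 + 0.123 = 0.485
\end{aligned}
```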

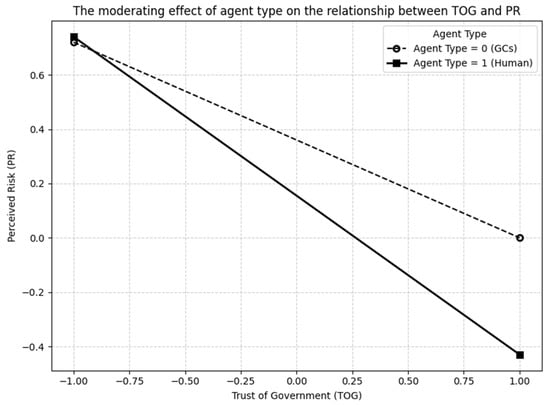

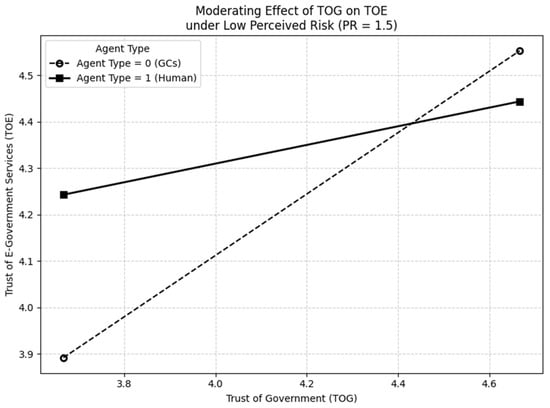

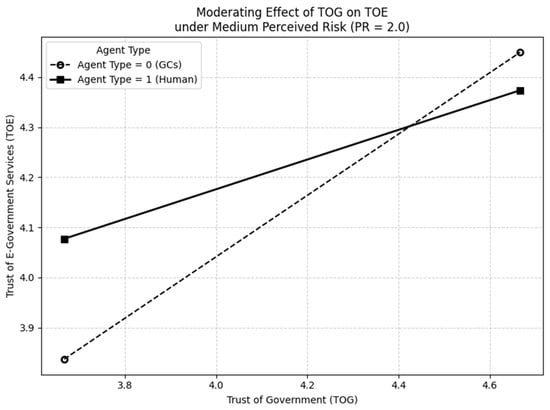

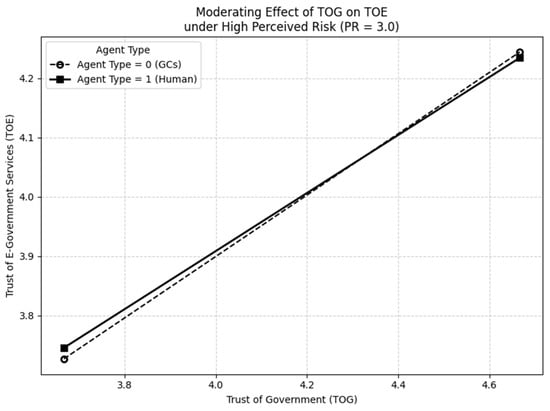

The moderation analyses provided additional insights. A significant interaction effect between agent type and TOG on PR was identified (β = −0.234, p < 0.05), supporting hypothesis H8 and indicating that government trust more strongly mitigates perceived risk when the interaction involves human agents rather than GCs. Similarly, the interaction between agent type and TOG significantly influenced TOE (β = 0.286, p < 0.05), supporting hypothesis H9; this implies that the positive relationship between government trust and TOE is stronger for interactions involving human agents than for those involving GCs. Moreover, a significant three-way interaction among agent type, TOG, and PR on TOE (β = −0.268, p < 0.05) supported hypothesis H10, highlighting the nuanced and conditional interplay among agent identity, institutional trust, and risk perceptions in shaping citizens’ trust toward e-government services. Figure 4 shows the moderating effect of agent type on the relationship between TOG and PR. Figure 5, Figure 6 and Figure 7 show the three-way interaction effects of agent type, TOG, and PR on TOE. The results are summarized in Table 8.

Figure 4.

The moderating effect of agent type on the relationship between trust of government (TOG) and perceived risk (PR).

Figure 5.

The trust-transfer effect of trust of government (TOG) on trust of e-government services (TOE) under conditions of low perceived risk (PR).

Figure 6.

The trust-transfer effect of trust of government (TOG) on trust of e-government services (TOE) under conditions of medium perceived risk (PR).

Figure 7.

The trust-transfer effect of trust of government (TOG) on trust of e-government services (TOE) under conditions of high perceived risk (PR).

Table 8.

Results of hypothesis testing.
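Plots such as those in Figure 5, Figure 6 and Figure 7 are commonly based on simple slopes: in a model with a three-way interaction, the effect of TOG on TOE is not a single number but depends on agent type and PR. The sketch below computes that conditional slope, assuming agent type is coded 0/1 and PR is standardized so that −1, 0, and +1 represent low, medium, and high PR. The coefficient names and values are hypothetical and are not the study's estimates.

```python
def tog_simple_slope(b, agent, pr):
    """Conditional effect of TOG on TOE in a model with a three-way interaction.

    With TOE = b_tog*TOG + ... + b_tog_agent*TOG*A + b_tog_pr*TOG*PR
    + b_tog_agent_pr*TOG*A*PR (other terms omitted), the slope of TOG is
    b_tog + b_tog_agent*A + b_tog_pr*PR + b_tog_agent_pr*A*PR.
    """
    return (b["tog"] + b["tog_agent"] * agent + b["tog_pr"] * pr
            + b["tog_agent_pr"] * agent * pr)


# Hypothetical coefficients for illustration only.
coefs = {"tog": 0.30, "tog_agent": 0.20, "tog_pr": -0.10, "tog_agent_pr": -0.20}

for pr_level, label in [(-1, "low PR"), (0, "medium PR"), (1, "high PR")]:
    for agent, name in [(0, "GC"), (1, "human")]:
        slope = tog_simple_slope(coefs, agent, pr_level)
        print(f"{label:>9}, {name:>5}: slope of TOG on TOE = {slope:+.2f}")
```

Comparing the GC and human slopes within each PR level shows how the strength of trust transfer can converge or diverge as PR changes, which is the pattern the three-way interaction tests.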

5. Discussion

Drawing on the theoretical foundations of algorithm aversion, trust-transfer theory, and perceived risk theory, this study aimed to explore three core research questions: (1) whether the type of agent (human vs. government chatbot) influences citizens’ perceptions of e-government services; (2) whether the effect of TOG on citizens’ trust of e-government services (TOE) is consistent across different agent types; and (3) if differences exist, whether there are boundary conditions that moderate the differential effects of TOG across agent types. The empirical findings offer novel insights into the psychological mechanisms and contextual dependencies underlying the adoption of AI-mediated public services.

5.1. The Impact of Agent Type on Citizens’ Perceptions of E-Government Services

The results provide partial support for the influence of agent type on citizens’ attitudes. Although no significant difference in TOE was found between the GC and human agent groups, significant differences emerged in PR and adoption intention (EGA). These findings suggest that while citizens perceive higher risk and report lower adoption intention when interacting with GCs, their trust in the service itself remains comparable to that in human-mediated services. This reveals a perceptual decoupling between the agent and the service, indicating that citizens’ algorithm aversion is more affective and identity-driven than rooted in a rational evaluation of service competence (; ; ).

5.2. The Moderating Role of Agent Type in Trust Transfer Mechanisms

This study further contributes to the literature by illustrating that the effects of institutional trust are contingent on agent type. Specifically, TOG was found to reduce PR and directly enhance TOE, but these effects were significantly stronger when services were delivered by human agents rather than by GCs. These findings underscore the differential efficacy of trust-transfer mechanisms depending on the perceived human-likeness and accountability of the service agent.

Two complementary explanations may account for this asymmetry. First, from a responsibility attribution perspective, citizens are more likely to assign blame or credit to identifiable human agents, whose actions are perceived as individually accountable within bureaucratic structures (). In contrast, GCs—being algorithmic tools—lack legal and moral agency (). As such, service failures involving GCs are more readily attributed to systemic factors or institutional shortcomings, weakening the buffering effect of TOG on perceived risk.

Second, from a social cognition standpoint, human agents are better equipped to engage in relational signaling—through adaptive language, emotional cues, and personalized interaction—which strengthens interpersonal trust and facilitates the extension of institutional trust to specific service experiences (). The absence of these qualities in GCs limits their ability to serve as effective trust conduits, particularly in politically sensitive or emotionally charged service contexts ().

5.3. Perceived Risk as a Boundary Condition for Human–GC Trust Gap

This study further uncovers the conditional dynamics of the human–GC trust gap within the context of China’s digital governance. Specifically, we find that when citizens’ PR is high, there is no significant difference in TOE between human agents and GCs, regardless of their level of TOG. Under low-PR conditions, however, divergent patterns emerge depending on the level of TOG. When TOG is high, citizens exhibit greater trust in GCs than in human agents, an indication of algorithm appreciation. Conversely, when TOG is low, trust in human agents surpasses that in GCs, reflecting algorithm aversion (see Figure 5, Figure 6 and Figure 7).

Interestingly, these findings appear to contradict earlier research (). Prior research has shown that in contexts of high perceived corruption and high risk, citizens may prefer GCs to avoid negative experiences with potentially corrupt bureaucrats, whereas when perceived risk is low, there tends to be no significant preference between human and AI agents. This discrepancy can, however, be explained. We argue that under high-risk conditions, citizens prioritize the agent’s perceived ability to complete the task effectively. In China, where the government maintains strict control over online discourse, citizens generally exhibit higher trust in government than citizens of countries with higher perceived corruption and greater freedom of speech (). This may limit the formation of widespread biases against government officials. Moreover, given the Chinese government’s strong promotion of AI technologies, citizens’ trust in GCs’ task performance is nearly equivalent to their trust in human agents (; ). The non-significant result for H2 further supports this interpretation.

According to prospect theory, individuals are especially sensitive to potential losses (; ). Thus, under high-PR conditions, Chinese citizens tend to evaluate agents by their perceived task competence rather than by human–machine biases, resulting in a “trust equilibrium” between human agents and GCs.

In contrast, under low-risk conditions, the experiential aspects of agent interaction become more salient. Prior research on human–computer interaction supports this view, suggesting that when service failure is less severe, anthropomorphic social cues such as friendly expressions or informal tone can enhance users’ positive emotional responses—an effect that diminishes under high-severity conditions (; ). Therefore, under low PR and high TOG, GCs—viewed as legitimate extensions of institutional authority—are more likely to benefit from trust transfer (). Users overcome biases rooted in their non-human characteristics and recognize functional advantages, such as rapid response, 24/7 availability, and standardized processing, resulting in algorithm appreciation ().

Conversely, when TOG is low, GCs are more likely to be perceived through the lens of their non-human traits—such as lack of empathy, absence of accountability, and unfamiliar communicative styles—leading to algorithm aversion ().

6. Conclusions

6.1. Theoretical Contributions

This study offers several noteworthy theoretical contributions to the literature on e-government and human–AI interaction. First, it enriches the concept of algorithm aversion by revealing a perceptual decoupling between the service agent and the service itself. While citizens interacting with GCs exhibit higher PR and lower EGA, their TOE remains comparable to that of human-mediated services. This finding suggests that algorithm aversion may be less about doubts concerning task competence and more about affective discomfort and identity misalignment with non-human agents (; ).

Second, by integrating agent type (i.e., human vs. government chatbot) as a moderator into the proposed model, this study makes a significant theoretical contribution to the literature on digital government and trust-transfer mechanisms. While prior research has primarily treated agent type as a main effect or contextual variable, our findings highlight its boundary-spanning role in shaping the strength and direction of the relationship between TOG and TOE. Specifically, the moderating effect of agent type suggests that the trust-transfer process is not uniform across service delivery modes; instead, it is contingent upon whether the interaction is mediated by a human or a digital agent. This nuanced perspective extends existing trust theories by demonstrating that trust-building in public service contexts is sensitive to the characteristics of the service interface. Thus, our revised model advances conceptual clarity by identifying agent type as a critical contextual condition under which institutional trust exerts influence on service-level trust, offering new pathways for both theory refinement and practical implementation in AI-enabled public service delivery.

To the best of our knowledge, this study is the first to empirically examine the differential effect of TOG on TOE across distinct agent types, and the boundary conditions under which these trust-transfer mechanisms operate. Our findings indicate that TOG more effectively reduces PR and enhances TOE when services are delivered by human agents as opposed to GCs. This asymmetry in trust-transfer efficacy is theoretically meaningful, as it highlights the moderating role of agent type and challenges the assumption that institutional trust translates uniformly across different service channels.

Third, we identify perceived risk as a critical boundary condition that shapes the dynamics of human–GC trust gaps. Under high-risk conditions, Chinese citizens prioritize agents’ task competence over anthropomorphic traits, resulting in a “trust equilibrium” between human agents and GCs. In contrast, under low-risk conditions, experiential and relational factors become more salient, producing either algorithm appreciation or aversion depending on citizens’ baseline trust in government. This conditional dynamic integrates insights from prospect theory, trust-transfer theory, and social cognition perspectives, offering a more nuanced model of how citizens form trust judgments in AI-mediated public services.

Finally, by situating our study in the unique context of China’s digital governance, where government trust is generally high and AI initiatives are state-endorsed, we contribute a localized yet theoretically generalizable understanding of trust formation in e-government. The results advance the cross-cultural applicability of trust-related theories and highlight the need to account for political and institutional contexts in studies of public sector AI adoption.

6.2. Practical Contributions

The findings of this study yield several important practical implications for policymakers and system designers aiming to enhance public acceptance of AI-enabled government services.

First, the observed gap in EGA between human agents and GCs under low-risk conditions underscores the importance of emotional and relational design in GC development. Government agencies should invest in humanizing interface features such as adaptive language, emotional expressiveness, and personalized responses to mitigate affective resistance and foster user engagement (; ).

Second, under high-risk service scenarios, citizens place greater weight on agents’ perceived task competence than on their human-likeness. This suggests that GC deployment in such contexts should emphasize capabilities like reliability, consistency, and accuracy, rather than focusing solely on social cues. Clear communication of performance metrics and service guarantees may help to reinforce task-based trust (; ).

Third, although TOG does promote trust transfer to TOE when services are delivered by GCs, its effect is significantly weaker than when services are delivered by human agents. To bridge this gap, governments should clearly communicate the legitimacy, authority, and accountability of GCs, for example, by improving accountability systems for GCs and developing explainable artificial intelligence (XAI) ().

Fourth, our findings suggest the need for deployment strategies differentiated by the perceived risk of the task. In low-risk contexts, GC design should prioritize user experience and relational richness (). In high-risk settings, by contrast, emphasizing procedural transparency and outcome assurance is more likely to enhance public confidence, for example, by improving the accountability system for GCs () and promoting a human–GC collaborative e-government service delivery system ().

Finally, this study provides an evidence-based foundation for designing citizen-centric e-government systems that strategically manage trust, mitigate algorithm aversion, and align with the public’s expectations under varying service conditions. As governments worldwide increasingly integrate AI into their service delivery infrastructures, understanding the conditional trust dynamics identified in this study is essential for fostering effective and equitable human–machine governance.

6.3. Research Limitations and Future Research

Despite its contributions, this study has several limitations that warrant attention in future research.

First, this study did not distinguish whether users were engaging with the service for the first time or had prior experience with repeated use. In contexts of repeated service interactions, decision-makers may adapt their strategies based on ongoing feedback from previous decisions, which may enhance or diminish the salience of task-relevant information—such as agent type—in the decision-making process. Therefore, future research would benefit from examining whether the impact of agent type on trust in e-government services persists or diminishes over the course of repeated interactions ().

Second, 91 of the 409 collected responses (22.2%) were excluded during screening (failed attention checks, respondents under the age of 18, or incomplete information), reflecting a considerable attrition rate. Additionally, our sampling approach yielded participants who predominantly had prior experience using AI tools, which may introduce sampling bias. Consequently, the generalizability of our findings may be limited to populations already familiar with AI technology. Future studies should employ broader sampling techniques to include participants from diverse backgrounds and investigate potential differences in outcomes between AI-experienced and AI-inexperienced groups, thereby enhancing the external validity and representativeness of the results.

Third, the generalizability of our findings should be interpreted within the specific cultural and political context of China. Prior cross-cultural studies based on Hofstede’s cultural dimensions have shown that Chinese citizens exhibit lower levels of uncertainty avoidance compared to populations in countries such as South Korea, which partly explains their higher intention to adopt online payment systems despite potential risks (). Notably, uncertainty avoidance was found to negatively moderate the relationship between perceived ease of use and usage intention. We argue that similar cultural dynamics may influence how Chinese citizens perceive and adopt AI-driven e-government services. Future research should draw on cultural frameworks such as Hofstede or GLOBE to compare how citizens from different countries respond to AI-enabled public services, thereby providing a more culturally grounded understanding of trust and risk perceptions in digital governance.

Fourth, while this study focused on PR and TOG as key antecedents of TOE and EGA, future research should broaden the scope to include additional psychological factors (e.g., empathy, trust propensity) and contextual influences (e.g., time pressure, task complexity). These factors may interact with existing variables or exert independent effects on users’ evaluations and decision-making processes, thereby offering a more comprehensive understanding of citizens’ responses to government chatbot services.

Moreover, although this study explored the relationships among agent type, TOG, and PR, and manipulated TOG levels by varying government performance reports (positive or negative), thereby extending the understanding of agent types in the context of e-government services, several limitations remain. Because both TOG and PR are inherently multidimensional constructs, representing them as unidimensional constructs that capture overall perceptions may oversimplify them (; ). This simplification may restrict the generalizability and interpretability of our findings. Future studies are encouraged to adopt multidimensional approaches based on realistic usage scenarios to more accurately capture the complexity of trust and risk perceptions within AI-based public services.

Finally, technological advancements such as natural language processing improvements, emotional recognition, and adaptive dialog strategies may further blur the distinctions between human and GC interactions. As such, longitudinal studies are needed to track how citizen trust in GCs evolves alongside these technological developments ().

Author Contributions

Conceptualization, Y.S., T.N. and X.Y.; methodology, Y.S.; software, Y.S.; validation, Y.S.; formal analysis, Y.S.; investigation, Y.S.; resources, Y.S., T.N. and X.Y.; data curation, Y.S.; writing—original draft preparation, Y.S.; writing—review and editing, Y.S., T.N. and X.Y.; visualization, Y.S.; supervision, T.N. and X.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Scientific Research Ethics Committee of the School of Economics and Management, Liaoning University of Technology (protocol code 20250315, approved on 15 March 2025).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Measurement Items for All Constructs

| Construct | Items |
| --- | --- |
| Trust in e-government service (TOE) () | |
| E-government service adoption intention (EGA) () | |
| Trust in the government (TOG) () | |
| Perceived risk (PR) () | |

Appendix B. Scenario Description

Scenario Description: 2 × 2 Between-Subjects Design (Agent Type × Government Performance News)

| Condition | Scenario |
| --- | --- |
| Positive × Human | You recently read a report about the positive results achieved by the government in improving the quality of public services. For example, government officials have demonstrated a high level of integrity and professionalism in handling public affairs and actively responded to the needs of citizens. In addition, the government has strengthened training for civil servants to ensure that they provide efficient services and adhere to ethical standards. In this context, you wish to use the government’s online platform to inquire about the office hours of a certain department. The system has automatically assigned a government staff member to assist you. |
| Positive × GCs | You recently read a report about the positive results achieved by the government in improving the quality of public services. For example, government officials have demonstrated a high level of integrity and professionalism in handling public affairs and actively responded to the needs of citizens. In addition, the government has strengthened training for civil servants to ensure that they provide efficient services and adhere to ethical standards. In this context, you wish to use the government’s online platform to inquire about the office hours of a certain department. The system has automatically assigned a government chatbot to assist you. |
| Negative × Human | You have recently come across a report stating that certain government departments have been criticized for inefficiency and a lack of integrity in public service delivery. Some citizens have reported experiences of bureaucratic shirking and inaccurate information, resulting in delays in service processing. In this context, you wish to use the government’s online platform to inquire about the office hours of a certain department. The system has automatically assigned a government staff member to assist you. |
| Negative × GCs | You have recently come across a report stating that certain government departments have been criticized for inefficiency and a lack of integrity in public service delivery. Some citizens have reported experiences of bureaucratic shirking and inaccurate information, resulting in delays in service processing. In this context, you wish to use the government’s online platform to inquire about the office hours of a certain department. The system has automatically assigned a government chatbot to assist you. |

References

- Abbas, N., Følstad, A., & Bjørkli, C. A. (2023). Chatbots as part of digital government service provision—A user perspective. In A. Følstad, T. Araujo, S. Papadopoulos, E. L.-C. Law, E. Luger, M. Goodwin, & P. B. Brandtzaeg (Eds.), Chatbot research and design (Vol. 13815, pp. 66–82). Springer International Publishing.

- Abdelhalim, E., Anazodo, K. S., Gali, N., & Robson, K. (2024). A framework of diversity, equity, and inclusion safeguards for chatbots. Business Horizons, 67(5), 487–498.

- Abu-Shanab, E. (2014). Antecedents of trust in e-government services: An empirical test in Jordan. Transforming Government: People, Process and Policy, 8(4), 480–499.

- Adamopoulou, E., & Moussiades, L. (2020). Chatbots: History, technology, and applications. Machine Learning with Applications, 2, 100006.

- Alagarsamy, S., & Mehrolia, S. (2023). Exploring chatbot trust: Antecedents and behavioural outcomes. Heliyon, 9(5), e16074.

- Alghamdi, S. Y., Kaur, S., Qureshi, K. M., Almuflih, A. S., Almakayeel, N., Alsulamy, S., Qureshi, M. R. N., & Nevárez-Moorillón, G. V. (2023). Antecedents for online food delivery platform leading to continuance usage intention via E-word-of-mouth review adoption. PLoS ONE, 18(8), e0290247.

- Al Shamsi, J. H., Al-Emran, M., & Shaalan, K. (2022). Understanding key drivers affecting students’ use of artificial intelligence-based voice assistants. Education and Information Technologies, 27(6), 8071–8091.

- Alzahrani, L., Al-Karaghouli, W., & Weerakkody, V. (2017). Analysing the critical factors influencing trust in e-government adoption from citizens’ perspective: A systematic review and a conceptual framework. International Business Review, 26(1), 164–175.

- Alzahrani, L., Al-Karaghouli, W., & Weerakkody, V. (2018). Investigating the impact of citizens’ trust toward the successful adoption of e-government: A multigroup analysis of gender, age, and internet experience. Information Systems Management, 35(2), 124–146.

- Androutsopoulou, A., Karacapilidis, N., Loukis, E., & Charalabidis, Y. (2019). Transforming the communication between citizens and government through AI-guided chatbots. Government Information Quarterly, 36(2), 358–367.

- Aoki, N. (2020). An experimental study of public trust in AI chatbots in the public sector. Government Information Quarterly, 37(4), 101490.

- Bayram, A. B., & Shields, T. (2021). Who trusts the WHO? Heuristics and Americans’ trust in the World Health Organization during the COVID-19 pandemic. Social Science Quarterly, 102(5), 2312–2330.

- Belanche, D., Casaló, L. V., Flavián, C., & Schepers, J. (2014). Trust transfer in the continued usage of public e-services. Information & Management, 51(6), 627–640.

- Benitez, J., Henseler, J., Castillo, A., & Schuberth, F. (2020). How to perform and report an impactful analysis using partial least squares: Guidelines for confirmatory and explanatory IS research. Information & Management, 57(2), 103168.

- Beyari, H., & Hashem, T. (2025). The role of artificial intelligence in personalizing social media marketing strategies for enhanced customer experience. Behavioral Sciences, 15(5), 700.

- Bélanger, F., & Carter, L. (2008). Trust and risk in e-government adoption. The Journal of Strategic Information Systems, 17(2), 165–176.

- Bonezzi, A., Ostinelli, M., & Melzner, J. (2022). The human black-box: The illusion of understanding human better than algorithmic decision-making. Journal of Experimental Psychology: General, 151(9), 2250–2258.

- Castelo, N. (2024). Perceived corruption reduces algorithm aversion. Journal of Consumer Psychology, 34(2), 326–333.

- Chen, C. (2024). How consumers respond to service failures caused by algorithmic mistakes: The role of algorithmic interpretability. Journal of Business Research, 176, 114610.

- Chen, J. V., Jubilado, R. J. M., Capistrano, E. P. S., & Yen, D. C. (2015). Factors affecting online tax filing—An application of the IS success model and trust theory. Computers in Human Behavior, 43, 251–262.

- Chen, T., & Gasco-Hernandez, M. (2024). Uncovering the results of AI chatbot use in the public sector: Evidence from US State Governments. Public Performance & Management Review, 1–26.

- Chen, T., Li, S., Zeng, Z., Liang, Z., Chen, Y., & Guo, W. (2024). An empirical investigation of users’ switching intention to public service robots: From the perspective of PPM framework. Government Information Quarterly, 41(2), 101933.

- Chen, Y.-S., & Chang, C.-H. (2012). Enhance green purchase intentions: The roles of green perceived value, green perceived risk, and green trust. Management Decision, 50(3), 502–520.

- Choi, J.-C., & Song, C. (2020). Factors explaining why some citizens engage in e-participation, while others do not. Government Information Quarterly, 37(4), 101524.

- Cortés-Cediel, M. E., Segura-Tinoco, A., Cantador, I., & Bolívar, M. P. R. (2023). Trends and challenges of e-government chatbots: Advances in exploring open government data and citizen participation content. Government Information Quarterly, 40(4), 101877.

- Creemers, R. (2017). Cyber China: Upgrading propaganda, public opinion work and social management for the twenty-first century. Journal of Contemporary China, 26(103), 85–100.

- Curiello, S., Iannuzzi, E., Meissner, D., & Nigro, C. (2025). Mind the gap: Unveiling the advantages and challenges of artificial intelligence in the healthcare ecosystem. European Journal of Innovation Management, ahead-of-print.

- Čerka, P., Grigienė, J., & Sirbikytė, G. (2015). Liability for damages caused by artificial intelligence. Computer Law & Security Review, 31(3), 376–389.

- Dang, J., & Liu, L. (2024). Extended artificial intelligence aversion: People deny humanness to artificial intelligence users. Journal of Personality and Social Psychology.

- Dang, Q., & Li, G. (2025). Unveiling trust in AI: The interplay of antecedents, consequences, and cultural dynamics. AI and Society.

- Dietvorst, B. J., & Bharti, S. (2020). People reject algorithms in uncertain decision domains because they have diminishing sensitivity to forecasting error. Psychological Science, 31(10), 1302–1314.

- Fahnenstich, H., Rieger, T., & Roesler, E. (2024). Trusting under risk—Comparing human to AI decision support agents. Computers in Human Behavior, 153, 108107.

- Fiske, S. T., Cuddy, A. J. C., & Glick, P. (2007). Universal dimensions of social cognition: Warmth and competence. Trends in Cognitive Sciences, 11(2), 77–83.

- Frank, D.-A., Chrysochou, P., Mitkidis, P., Otterbring, T., & Ariely, D. (2024). Navigating uncertainty: Exploring consumer acceptance of artificial intelligence under self-threats and high-stakes decisions. Technology in Society, 79, 102732.

- Frank, D.-A., Jacobsen, L. F., Søndergaard, H. A., & Otterbring, T. (2023). In companies we trust: Consumer adoption of artificial intelligence services and the role of trust in companies and AI autonomy. Information Technology & People, 36(8), 155–173.

- Fuentes, R. (2011). Efficiency of travel agencies: A case study of Alicante, Spain. Tourism Management, 32(1), 75–87.

- Gesk, T. S., & Leyer, M. (2022). Artificial intelligence in public services: When and why citizens accept its usage. Government Information Quarterly, 39(3), 101704.

- Grimmelikhuijsen, S. (2012). Linking transparency, knowledge and citizen trust in government: An experiment. International Review of Administrative Sciences, 78(1), 50–73.

- Grimmelikhuijsen, S., & Knies, E. (2017). Validating a scale for citizen trust in government organizations. International Review of Administrative Sciences, 83(3), 583–601.

- Grzymek, V., & Puntschuh, M. (2019). What Europe knows and thinks about algorithms: Results of a representative survey. Bertelsmann Stiftung eupinions, February 2019. Available online: https://aei.pitt.edu/102582/ (accessed on 26 March 2025).

- Guo, Y., & Dong, P. (2024). Factors influencing user favorability of government chatbots on digital government interaction platforms across different scenarios. Journal of Theoretical and Applied Electronic Commerce Research, 19(2), 818–845.

- Gupta, P., Hooda, A., Jeyaraj, A., Seddon, J. J., & Dwivedi, Y. K. (2024). Trust, risk, privacy and security in e-government use: Insights from a MASEM analysis. Information Systems Frontiers, 27, 1089–1105.

- Gupta, S., Kamboj, S., & Bag, S. (2023). Role of risks in the development of responsible artificial intelligence in the digital healthcare domain. Information Systems Frontiers, 25(6), 2257–2274.

- Ha, T., Kim, S., Seo, D., & Lee, S. (2020). Effects of explanation types and perceived risk on trust in autonomous vehicles. Transportation Research Part F: Traffic Psychology and Behaviour, 73, 271–280.

- Haesevoets, T., Verschuere, B., & Roets, A. (2025). AI adoption in public administration: Perspectives of public sector managers and public sector non-managerial employees. Government Information Quarterly, 42(2), 102029.

- Hair, J., & Alamer, A. (2022). Partial least squares structural equation modeling (PLS-SEM) in second language and education research: Guidelines using an applied example. Research Methods in Applied Linguistics, 1(3), 100027.

- Hair, J. F., Ringle, C. M., & Sarstedt, M. (2011). PLS-SEM: Indeed a silver bullet. Journal of Marketing Theory and Practice, 19(2), 139–152.

- Hair, J. F., Risher, J. J., Sarstedt, M., & Ringle, C. M. (2019). When to use and how to report the results of PLS-SEM. European Business Review, 31(1), 2–24.

- Han, G., & Yan, S. (2019). Does food safety risk perception affect the public’s trust in their government? An empirical study on a national survey in China. International Journal of Environmental Research and Public Health, 16(11), 1874.

- Henseler, J., Ringle, C. M., & Sarstedt, M. (2016). Testing measurement invariance of composites using partial least squares. International Marketing Review, 33(3), 405–431.

- Henseler, J., & Sarstedt, M. (2013). Goodness-of-fit indices for partial least squares path modeling. Computational Statistics, 28(2), 565–580.

- Hung, S.-Y., Chang, C.-M., & Yu, T.-J. (2006). Determinants of user acceptance of the e-government services: The case of online tax filing and payment system. Government Information Quarterly, 23(1), 97–122.

- Imran, M., Li, J., Bano, S., & Rashid, W. (2025). Impact of democratic leadership on employee innovative behavior with mediating role of psychological safety and creative potential. Sustainability, 17(5), 1879.

- Ingrams, A., Kaufmann, W., & Jacobs, D. (2022). In AI we trust? Citizen perceptions of AI in government decision making. Policy & Internet, 14(2), 390–409.

- Ireland, L. (2020). Who errs? Algorithm aversion, the source of judicial error, and public support for self-help behaviors. Journal of Crime and Justice, 43(2), 174–192.

- Ivanović, D., Radenković, S. D., Hanić, H., Simović, V., & Kojić, M. (2025). A novel approach to measuring digital entrepreneurial competencies among university students. The International Journal of Management Education, 23(2), 101180.

- Jang, J., & Kim, B. (2022). The impact of potential risks on the use of exploitable online communities: The case of South Korean cyber-security communities. Sustainability, 14(8), 4828.

- Jansen, A., & Ølnes, S. (2016). The nature of public e-services and their quality dimensions. Government Information Quarterly, 33(4), 647–657.

- Ju, J., Meng, Q., Sun, F., Liu, L., & Singh, S. (2023). Citizen preferences and government chatbot social characteristics: Evidence from a discrete choice experiment. Government Information Quarterly, 40(3), 101785.