Effects of Interoceptive Awareness on Recognition of and Sensitivity to Emotions in Masked Facial Stimuli

Abstract

1. Introduction

2. Methods

2.1. Participants

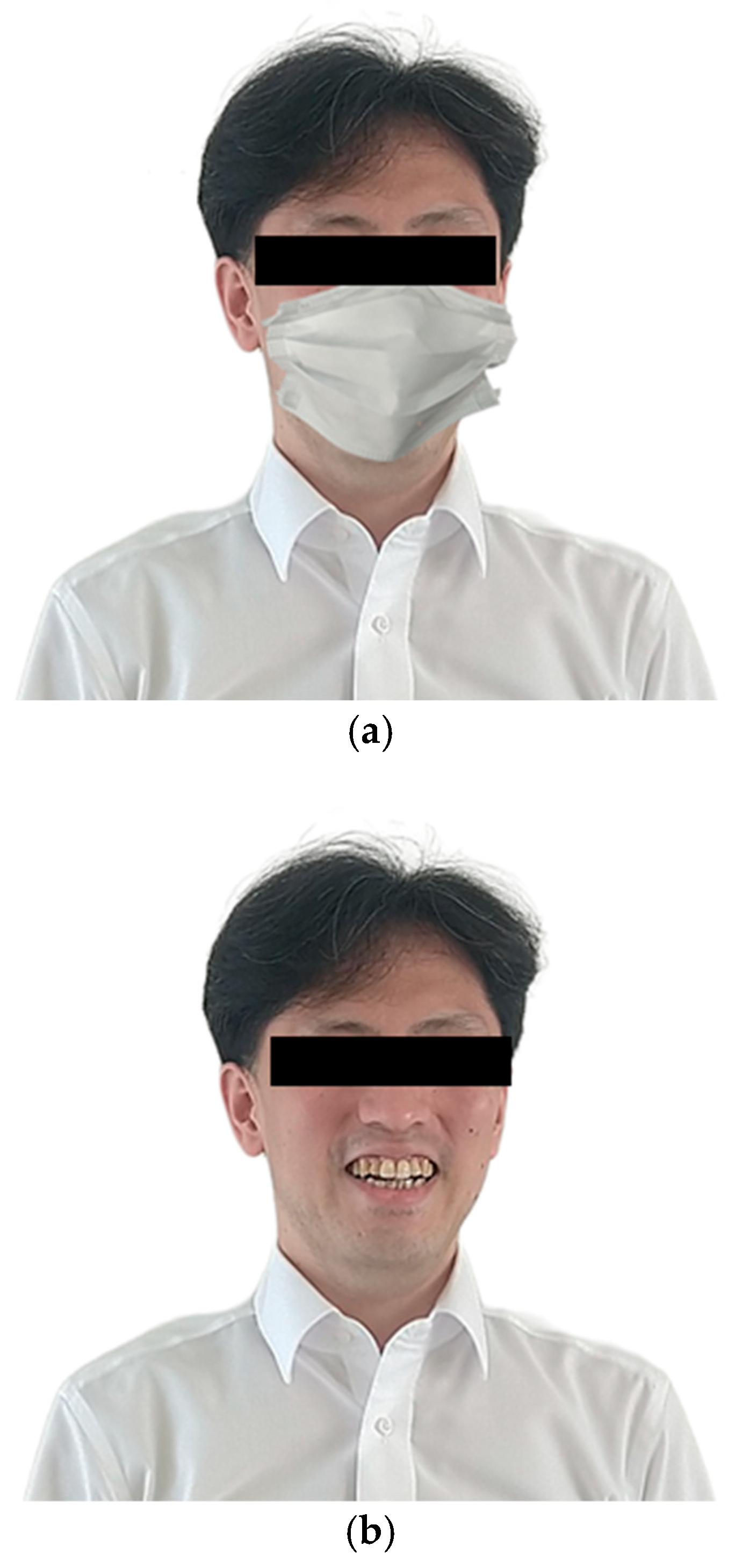

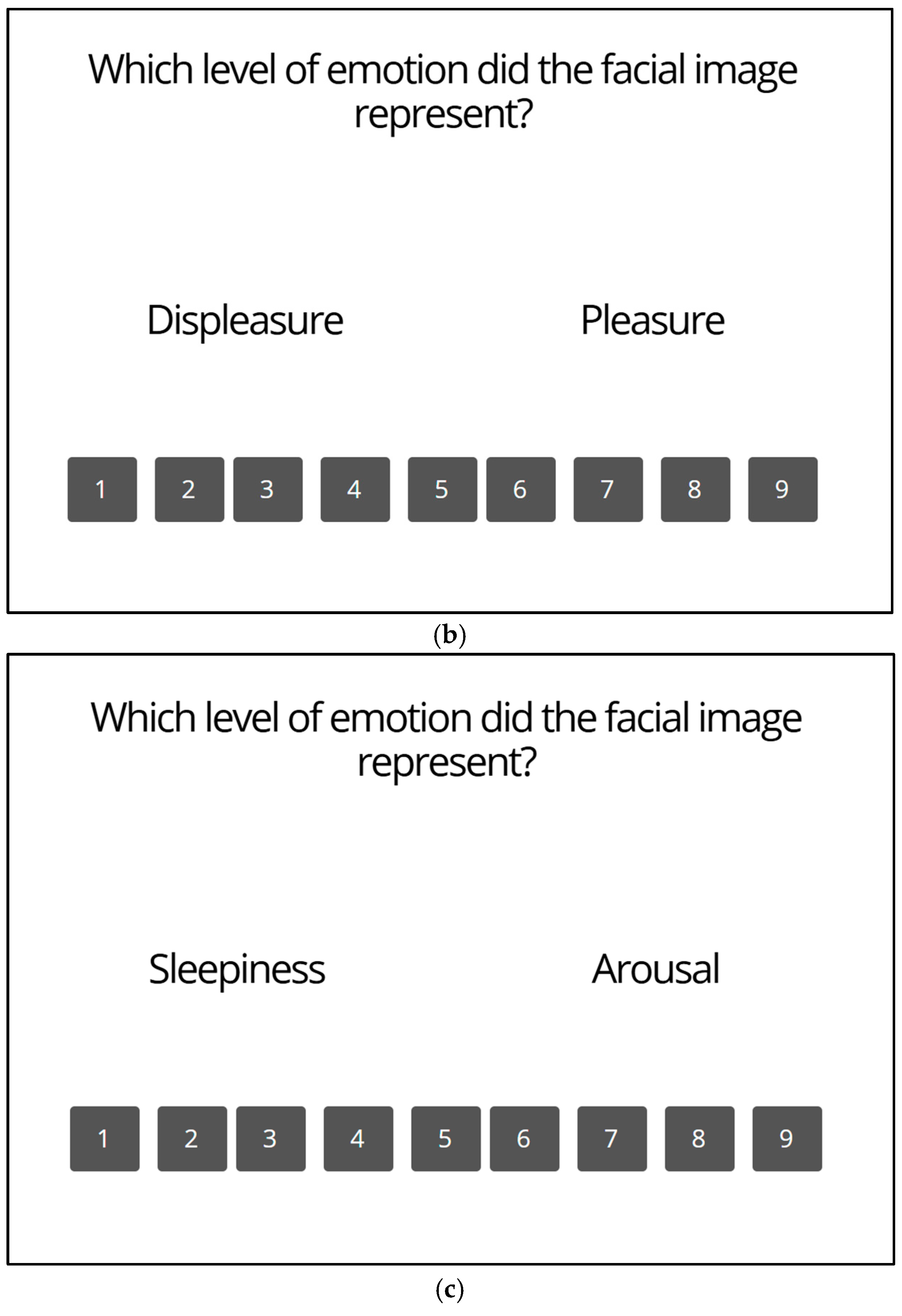

2.2. Stimuli

2.3. Psychological Scale

2.4. Procedure

2.5. Statistical Analysis Methods

3. Results

3.1. Descriptive Statistics

3.2. Results from Three-Way ANOVAs with Accuracy in Categorization of Emotions

- Three-way ANOVAs. Table 4 shows the results of three-way ANOVAs with accuracy of emotion categorization as the dependent variable. The three-way interaction (mask × emotion × interoceptive awareness) was statistically significant only in the analysis with the body-listening subscale of the MAIA as an independent variable. For the other MAIA subscales (i.e., noticing, not-distracting, attention regulation, emotional awareness, and trusting), no significant three-way interaction was observed; however, the two-way interaction between presence of a mask and emotion was statistically significant. In addition, the two-way interaction between not-distracting and presence of a mask was significant. Regarding main effects, presence of a mask and emotion were significant, whereas interoceptive awareness was not significant for any MAIA subscale.

- Post-hoc simple interaction analyses following a three-way interaction. Because the three-way interaction involving body-listening was significant, post-hoc simple interaction analyses were conducted. These analyses revealed significant simple interactions between presence of a mask and body-listening for the anger and disgust stimuli (anger: F [1, 560] = 8.385, p = 0.004, ηp² = 0.095; disgust: F [1, 560] = 4.637, p = 0.032, ηp² = 0.055). For fear, sadness, and surprise, only the main effect of mask was significant (fear: F [1, 560] = 27.239, p < 0.001, ηp² = 0.254; sadness: F [1, 560] = 55.904, p < 0.001, ηp² = 0.411; surprise: F [1, 80] = 69.153, p < 0.001, ηp² = 0.464). By contrast, no significant main effects or interactions were observed for the happiness and neutral stimuli.

- Post-hoc multiple comparisons following simple interactions. Given these significant simple interactions, post-hoc multiple comparisons were performed. These comparisons revealed that accuracy for masked anger and sadness stimuli was significantly lower in individuals with higher body-listening than in those with lower body-listening (all ps < 0.05; Figure 3). Overall, these results show that individuals with a stronger tendency to attend to interoception for insight were less accurate in categorizing masked faces expressing negative emotions than those with a weaker tendency to do so.

- Post-hoc multiple comparisons following two-way interactions. Multiple comparisons were conducted following the two-way interactions in the analyses with the noticing, not-distracting, attention regulation, emotional awareness, and trusting subscales of the MAIA as independent variables. In the analyses with noticing or attention regulation, accuracy of categorization for stimuli expressing disgust, fear, sadness, and surprise was significantly lower in the mask than in the no-mask condition (all ps < 0.001). Similarly, in the analyses with not-distracting, emotional awareness, or trusting, accuracy for stimuli expressing disgust, fear, sadness, and surprise was significantly lower in the mask than in the no-mask condition (all ps < 0.05). In these latter analyses, however, accuracy for neutral stimuli was significantly higher in the mask than in the no-mask condition (all ps < 0.05). Collectively, these results show that accuracy of categorization for disgust, fear, sadness, and surprise stimuli was lower in the mask than in the no-mask condition, whereas accuracy for neutral stimuli was higher in the mask than in the no-mask condition.
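The effect sizes in this section are partial eta squared (ηp²). Since ηp² = SS_effect / (SS_effect + SS_error) and F = (SS_effect / df_effect) / (SS_error / df_error), ηp² can be recovered from an F ratio and its two degrees of freedom as ηp² = F·df_effect / (F·df_effect + df_error). The Python sketch below implements that standard conversion; the function name is our own and is not part of any analysis software used in the study. Applied to the surprise result, F(1, 80) = 69.153, it returns 0.464, the value reported above.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Recover partial eta squared from an F ratio and its degrees of freedom.

    Uses eta_p^2 = (F * df_effect) / (F * df_effect + df_error), which is
    algebraically identical to SS_effect / (SS_effect + SS_error).
    """
    if f_value < 0 or df_effect <= 0 or df_error <= 0:
        raise ValueError("F must be non-negative and both df must be positive")
    numerator = f_value * df_effect
    return numerator / (numerator + df_error)


# Simple main effect of mask for surprise stimuli: F(1, 80) = 69.153.
print(round(partial_eta_squared(69.153, 1, 80), 3))
```

Because the conversion depends on the error degrees of freedom, it also offers a quick consistency check between a reported F, its df, and the accompanying ηp².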

3.3. Results from Three-Way ANOVAs with Valence

- Three-way ANOVAs. Results of three-way ANOVAs with valence as the dependent variable are summarized in Table 5. A statistically significant three-way interaction was observed only in the analysis with the emotional awareness subscale of the MAIA as an independent variable. In the analyses with the noticing, not-distracting, attention regulation, body-listening, or trusting subscales as independent variables, two-way interactions between presence of a mask and emotion were significant. The main effects of mask and emotion were statistically significant, whereas the main effect of interoceptive awareness was not significant for any MAIA subscale.

- Post-hoc simple interaction analyses following a three-way interaction. Because the three-way interaction involving emotional awareness was significant, post-hoc simple interaction analyses were conducted. These analyses revealed significant simple interactions between presence of a mask and emotional awareness for the happiness (F [1, 480] = 4.270, p = 0.039, ηp² = 0.051) and surprise stimuli (F [1, 480] = 10.493, p = 0.0012, ηp² = 0.116). For the disgust and fear stimuli, the simple interaction tests revealed a significant main effect of presence of a mask (disgust: F [1, 480] = 54.215, p < 0.001, ηp² = 0.404; fear: F [1, 480] = 62.670, p < 0.001, ηp² = 0.439).

- Post-hoc multiple comparisons following simple interactions. Multiple comparisons further revealed that valence scores were significantly higher in the mask than in the no-mask condition for disgust and fear stimuli in both the higher and lower emotional awareness groups (all ps < 0.001). In addition, valence scores were significantly lower in the mask than in the no-mask condition for happiness and surprise stimuli in the lower emotional awareness group, and for happiness stimuli in the higher emotional awareness group (all ps < 0.001; Figure 4). Taken together, these results show that individuals with both low and high awareness of the link between interoception and emotion rated the valence of masked disgust, fear, and happiness stimuli closer to neutral than that of the corresponding unmasked stimuli.

- Post-hoc multiple comparisons following two-way interactions. As the two-way interactions between mask and emotion were significant in the analyses with noticing, not-distracting, attention regulation, body-listening, or trusting, we performed post-hoc multiple comparisons. These revealed that valence scores for disgust and fear stimuli were significantly higher, and valence scores for happiness and surprise stimuli significantly lower, in the mask than in the no-mask condition (all ps < 0.05). These results show that valence scores for masked stimuli expressing disgust, fear, happiness, and surprise were closer to neutral than those for unmasked stimuli.
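"Closer to neutral" can be made concrete as the absolute distance of a mean rating from the neutral point of the scale. The Python sketch below assumes a 1 to 9 valence scale with 5 as neutral (as on the 9 × 9 Affect Grid of Russell, Weiss, & Mendelson, 1989); the scale range is an assumption, since this section does not state it. The example values are the higher emotional awareness means from the descriptive table at the end of the document whose columns cover six emotions and which we read as valence ratings. All names are hypothetical.

```python
# ASSUMPTION: valence was rated on a 1-9 scale on which 5 means neutral.
SCALE_MIDPOINT = 5.0

# Higher emotional-awareness means (no_mask, mask), copied from the
# descriptive table that we read as valence ratings.
higher_ea_means = {
    "disgust": (3.06, 3.63),
    "fear": (3.41, 3.97),
    "happiness": (7.75, 7.17),
}


def distance_from_neutral(mean_rating: float, midpoint: float = SCALE_MIDPOINT) -> float:
    """Absolute distance of a mean rating from the assumed neutral midpoint."""
    return abs(mean_rating - midpoint)


def closer_to_neutral_with_mask(no_mask: float, mask: float) -> bool:
    """True when the masked mean lies nearer the midpoint than the unmasked mean."""
    return distance_from_neutral(mask) < distance_from_neutral(no_mask)


for emotion, (no_mask, mask) in higher_ea_means.items():
    print(
        f"{emotion}: {distance_from_neutral(no_mask):.2f} -> "
        f"{distance_from_neutral(mask):.2f}, "
        f"closer with mask: {closer_to_neutral_with_mask(no_mask, mask)}"
    )
```

Using the absolute distance lets negative emotions (whose masked ratings rise) and happiness (whose masked ratings fall) be compared on one "toward neutral" criterion.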

3.4. Results from Three-Way ANOVAs with Arousal

- Three-way ANOVAs. Table 6 summarizes the results of three-way ANOVAs with arousal as the dependent variable. The three-way interaction among presence of a mask, emotion, and interoceptive awareness was statistically significant only in the analysis with the emotional awareness subscale of the MAIA as an independent variable. In the analyses with the noticing, not-distracting, attention regulation, body-listening, and trusting subscales as independent variables, two-way interactions between presence of a mask and emotion were significant. The main effects of mask and emotion were statistically significant. The main effect of interoceptive awareness was also significant in the analysis with attention regulation: arousal scores were higher in the higher attention regulation group than in the lower group.

- Post-hoc simple interaction analyses following a three-way interaction. Because the three-way interaction involving emotional awareness was significant, post-hoc simple interaction analyses were conducted. These analyses revealed a significant simple interaction between presence of a mask and emotional awareness for the surprise stimuli (F [1, 480] = 7.435, p = 0.007, ηp² = 0.085). For the happiness and sadness stimuli, the simple interaction tests revealed a significant main effect of presence of a mask (happiness: F [1, 480] = 15.267, p < 0.001, ηp² = 0.160; sadness: F [1, 480] = 4.937, p = 0.027, ηp² = 0.058), but no other significant main effects or interactions.

- Post-hoc multiple comparisons following simple interactions. Given these significant simple interactions, post-hoc multiple comparisons were performed. These comparisons revealed that arousal scores for sadness stimuli were significantly higher in the mask than in the no-mask condition in individuals with lower emotional awareness. Conversely, arousal scores were significantly lower in the mask than in the no-mask condition for happiness stimuli in the lower emotional awareness group and for surprise stimuli in the higher emotional awareness group (ps < 0.001). These data show that arousal scores for masked happiness and surprise stimuli were lower than those for the corresponding unmasked stimuli, regardless of the perceived connection between interoception and emotion.

- Post-hoc multiple comparisons following two-way interactions. As significant two-way interactions between mask and emotion were found in the analyses with noticing, not-distracting, attention regulation, body-listening, and trusting, we examined them further using post-hoc multiple comparisons. These analyses revealed that arousal was significantly lower for happiness and surprise stimuli, and significantly higher for sadness stimuli, in the mask than in the no-mask condition (all ps < 0.05). These data show that participants rated masked faces expressing sadness as more arousing, and masked faces expressing happiness or surprise as less arousing, than the corresponding unmasked faces.

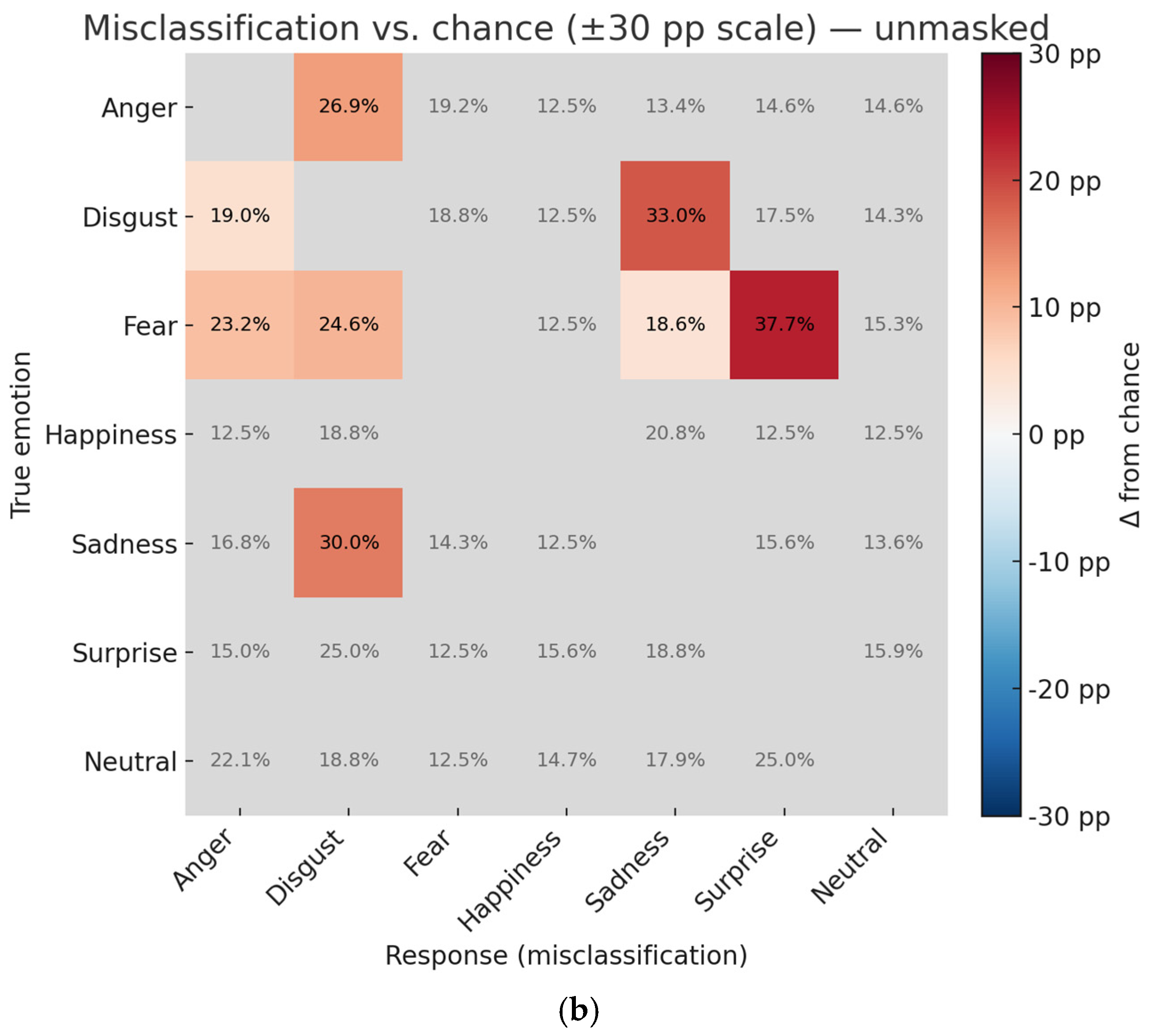

3.5. Error Patterns in Categorization of Emotions

4. Discussion

4.1. Effects of a Mask on Recognition of and Sensitivity to Emotions

4.2. Effects of Interoceptive Awareness

4.3. Limitations and Future Perspectives

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Anwyl-Irvine, A. L., Massonnié, J., Flitton, A., Kirkham, N., & Evershed, J. K. (2020). Gorilla in our midst: An online behavioral experiment builder. Behavior Research Methods, 52, 388–407.

- Anzellotti, S., & Caramazza, A. (2016). From parts to identity: Invariance and sensitivity of face representations to different face halves. Cerebral Cortex, 26(5), 1900–1909.

- Atanasova, K., Lotter, T., Reindl, W., & Lis, S. (2021). Multidimensional assessment of interoceptive abilities, emotion processing and the role of early life stress in inflammatory bowel diseases. Frontiers in Psychiatry, 12, 680878.

- Barrett, L. F. (2006). Solving the emotion paradox: Categorization and the experience of emotion. Personality and Social Psychology Review, 10(1), 20–46.

- Barrett, L. F., Quigley, K. S., Bliss-Moreau, E., & Aronson, K. R. (2004). Interoceptive sensitivity and self-reports of emotional experience. Journal of Personality and Social Psychology, 87(5), 684–697.

- Barrett, L. F., & Simmons, W. K. (2015). Interoceptive predictions in the brain. Nature Reviews Neuroscience, 16(7), 419–429.

- Beaudry, O., Roy-Charland, A., Perron, M., Cormier, I., & Tapp, R. (2014). Featural processing in recognition of emotional facial expressions. Cognition and Emotion, 28(3), 416–432.

- Bird, G., & Cook, R. (2013). Mixed emotions: The contribution of alexithymia to the emotional symptoms of autism. Translational Psychiatry, 3(7), e285.

- Blais, C., Linnell, K. J., Caparos, S., & Estéphan, A. (2021). Cultural differences in face recognition and potential underlying mechanisms. Frontiers in Psychology, 12, 627026.

- Brewer, R., Cook, R., & Bird, G. (2016). Alexithymia: A general deficit of interoception. Royal Society Open Science, 3(10), 150664.

- Bridges, D., Pitiot, A., MacAskill, M. R., & Peirce, J. W. (2020). The timing mega-study: Comparing a range of experiment generators, both lab-based and online. PeerJ, 8, e9414.

- Calì, G., Ambrosini, E., Picconi, L., Mehling, W. E., & Committeri, G. (2015). Investigating the relationship between interoceptive accuracy, interoceptive awareness, and emotional susceptibility. Frontiers in Psychology, 6, 1202.

- Calvo, M. G., Fernández-Martín, A., & Nummenmaa, L. (2012). Perceptual, categorical, and affective processing of ambiguous smiling facial expressions. Cognition, 125(3), 373–393.

- Calvo, M. G., & Nummenmaa, L. (2008). Detection of emotional faces: Salient physical features guide effective visual search. Journal of Experimental Psychology: General, 137(3), 471–494.

- Calvo, M. G., & Nummenmaa, L. (2016). Perceptual and affective mechanisms in facial expression recognition: An integrative review. Cognition and Emotion, 30(6), 1081–1106.

- Carbon, C.-C. (2020). Wearing face masks strongly confuses counterparts in reading emotions. Frontiers in Psychology, 11, 566886.

- Chentsova-Dutton, Y. E., & Dzokoto, V. (2014). Listen to your heart: The cultural shaping of interoceptive awareness and accuracy. Emotion, 14(4), 666–678.

- Craig, A. D. (2009). How do you feel—Now? The anterior insula and human awareness. Nature Reviews Neuroscience, 10(1), 59–70.

- Critchley, H. D., & Garfinkel, S. N. (2017). Interoception and emotion. Current Opinion in Psychology, 17, 7–14.

- de Vignemont, F., & Singer, T. (2006). The empathic brain: How, when and why? Trends in Cognitive Sciences, 10, 435–441.

- Ekman, P., & Friesen, W. V. (1971). Constants across cultures in the face and emotion. Journal of Personality and Social Psychology, 17(2), 124–129.

- Ekman, P., & Friesen, W. V. (1978). Facial action coding system (FACS): A technique for the measurement of facial action. Consulting Psychologists Press.

- Fujimura, T., & Umemura, H. (2018). Development and validation of a facial expression database based on the dimensional and categorical model of emotions. Cognition and Emotion, 32(8), 1663–1670.

- Fukushima, H., Terasawa, Y., & Umeda, S. (2011). Association between interoception and empathy: Evidence from heartbeat-evoked brain potential. International Journal of Psychophysiology, 79, 259–265.

- Garfinkel, S. N., Seth, A. K., Barrett, A. B., Suzuki, K., & Critchley, H. D. (2015). Knowing your own heart: Distinguishing interoceptive accuracy from interoceptive awareness. Biological Psychology, 104, 65–74.

- Greening, S. G., Mitchell, D. G. V., & Smith, F. W. (2018). Spatially generalizable representations of facial expressions: Decoding across partial face samples. Cortex, 101, 31–43.

- Grootswagers, T. (2020). A primer on running human behavioural experiments online. Behavior Research Methods, 52(6), 2283–2286.

- Grynberg, D., Chang, B., Corneille, O., Maurage, P., Vermeulen, N., Berthoz, S., & Luminet, O. (2012). Alexithymia and the processing of emotional facial expressions (EFEs): Systematic review, unanswered questions and further perspectives. PLoS ONE, 7(8), e42429.

- Gunnery, S. D., & Ruben, M. A. (2015). Perceptions of Duchenne and non-Duchenne smiles: A meta-analysis. Cognition and Emotion, 30(3), 501–515.

- Han, T., Alders, G. L., Greening, S. G., Neufeld, R. W. J., & Mitchell, D. G. V. (2012). Do fearful eyes activate empathy-related brain regions in individuals with callous traits? Social Cognitive and Affective Neuroscience, 7, 958–968.

- Harp, N. R., Langbehn, A., Larsen, J. T., Niedenthal, P. M., & Neta, M. (2023). Face coverings differentially alter valence judgments of emotional expressions. Basic and Applied Social Psychology, 45(4), 91–106.

- Haruki, Y., Miyahara, K., Ogawa, K., & Suzuki, K. (2025). Attentional bias towards smartphone stimuli is associated with decreased interoceptive awareness and increased physiological reactivity. Communications Psychology, 3, 42.

- Hessels, R. S., Iwabuchi, T., Niehorster, D. C., Funawatari, R., Benjamins, J. S., Kawakami, S., Nyström, M., Suda, M., Hooge, I. T., Sumiya, M., Heijnen, J. I., & Senju, A. (2025). Gaze behavior in face-to-face interaction: A cross-cultural investigation between Japan and The Netherlands. Cognition, 263, 106174.

- Hübner, A. M., Trempler, I., Gietmann, C., & Schubotz, R. I. (2021). Interoceptive sensibility predicts the ability to infer others’ emotional states. PLoS ONE, 16(10), e0258089.

- Hübner, A. M., Trempler, I., & Schubotz, R. I. (2022). Interindividual differences in interoception modulate behavior and brain responses in emotional inference. NeuroImage, 261, 119524.

- Ikeda, S. (2024). Social sensitivity predicts accurate emotion inference from facial expressions in a face mask: A study in Japan. Current Psychology, 43, 848–857.

- Imahuku, M., Fukushima, H., Nakamura, Y., Myowa, M., & Koike, S. (2020). Interoception is associated with the impact of eye contact on spontaneous facial mimicry. Scientific Reports, 10(1), 19866.

- Karmakar, S., & Das, K. (2023). Investigating the role of visual experience with face-masks in face recognition during COVID-19. Journal of Vision, 22(14), 3243.

- Kastendieck, T., Zilmer, S., & Hess, U. (2022). (Un)mask yourself! Effects of face masks on facial mimicry and emotion perception during the COVID-19 pandemic. Cognition and Emotion, 36(1), 59–69.

- Keltner, D., Sauter, D., Tracy, J., & Cowen, A. (2019). Emotional expression: Advances in basic emotion theory. Journal of Nonverbal Behavior, 43(2), 133–160.

- Koch, A., & Pollatos, O. (2014). Interoceptive sensitivity, body weight and eating behavior in children: A prospective study. Frontiers in Psychology, 5, 1003.

- Lee, E., Kang, J. I., Park, I. H., Kim, J.-J., & An, S. K. (2008). Is a neutral face really evaluated as being emotionally neutral? Psychiatry Research, 157, 77–85.

- Levitan, C. A., Rusk, I., Jonas-Delson, D., Lou, H., Kuzniar, L., Davidson, G., & Sherman, A. (2022). Mask wearing affects emotion perception. i-Perception, 13(3), 1–8.

- Maiorana, N., Dini, M., Poletti, B., Tagini, S., Rita Reitano, M., Pravettoni, G., Priori, A., & Ferrucci, R. (2022). The effect of surgical masks on the featural and configural processing of emotions. International Journal of Environmental Research and Public Health, 19, 2420.

- Maister, L., Tang, T., & Tsakiris, M. (2017). Neurobehavioral evidence of interoceptive sensitivity in early infancy. eLife, 6, e25318.

- Ma-Kellams, C. (2014). Cross-cultural differences in somatic awareness and interoceptive accuracy: A review of the literature and directions for future research. Frontiers in Psychology, 5, 1379.

- Mandler, G., Mandler, J. M., & Uviller, E. T. (1958). Autonomic perception questionnaire (APQ) [Database record]. APA PsycTests.

- Marshall, A. C., Gentsch, A., & Schütz-Bosbach, S. (2017). Interoceptive awareness and sensitivity differentially predict neural responses to emotional faces. Social Cognitive and Affective Neuroscience, 12(5), 718–727.

- McCrackin, S. D., & Ristic, J. (2022). Emotional context can reduce the negative impact of face masks on inferring emotions. Frontiers in Psychology, 13, 928524.

- Mehling, W. E., Acree, M., Stewart, A., Silas, J., & Jones, A. (2018). The multidimensional assessment of interoceptive awareness, version 2 (MAIA-2). PLoS ONE, 13(12), e0208034.

- Mehling, W. E., Price, C., Daubenmier, J. J., Acree, M., Bartmess, E., & Stewart, A. (2012). The multidimensional assessment of interoceptive awareness (MAIA). PLoS ONE, 7(11), e48230.

- Murphy, J., & Brewer, R. (2024). Interoception: A comprehensive guide. Springer.

- Murphy, J., Catmur, C., & Bird, G. (2018). Alexithymia is associated with a multidimensional failure of interoception: Evidence from novel tests. Journal of Experimental Psychology: General, 147(3), 398–408.

- Oliver, L. D., Virani, K., Finger, E. C., & Mitchell, D. G. V. (2014). Is the emotion recognition deficit associated with frontotemporal dementia caused by selective inattention to diagnostic facial features? Neuropsychologia, 60, 84–92.

- Poerio, G. L., Hagan, C. C., Chilton, S., Lush, P., & Critchley, H. D. (2024). Interoceptive attention and mood in daily life. Nature Human Behaviour, 8(6), 1060–1071.

- Porges, S. W. (1993). Body perception questionnaire. Laboratory of Developmental Assessment, University of Maryland.

- Price, C. J., & Hooven, C. (2018). Interoceptive awareness skills for emotion regulation: Theory and approach of mindful awareness in body-oriented therapy (MABT). Frontiers in Psychology, 9, 798.

- Ren, Q., Marshall, A. C., Liu, J., & Schütz-Bosbach, S. (2024). Listen to your heart: Trade-off between cardiac interoceptive processing and visual exteroceptive processing. NeuroImage, 299, 120808.

- Rinck, M., Primbs, M. A., Verpaalen, I. A. M., & Bijlstra, G. (2022). Face masks impair facial emotion recognition and induce specific emotion confusions. Cognitive Research: Principles and Implications, 7, 83.

- Ross, P., & George, E. (2022). Are face masks a problem for emotion recognition? Not when the whole body is visible. Frontiers in Neuroscience, 16, 915927.

- Russell, J. A. (2003). Core affect and the psychological construction of emotion. Psychological Review, 110, 145–172.

- Russell, J. A., & Barrett, L. F. (1999). Core affect, prototypical emotional episodes, and other things called emotion: Dissecting the elephant. Journal of Personality and Social Psychology, 76, 805–819.

- Russell, J. A., & Bullock, M. (1986). Fuzzy concepts and the perception of emotion in facial expressions. Social Cognition, 4(3), 309–341.

- Russell, J. A., & Lemay, G. (2000). A dimensional contextual perspective on facial expressions. Japanese Psychological Review, 43, 161–176. (In Japanese).

- Russell, J. A., Weiss, A., & Mendelson, G. A. (1989). Affect grid: A single-item scale of pleasure and arousal. Journal of Personality and Social Psychology, 57(3), 493–502.

- Sauter, M., Draschkow, D., & Mack, W. (2020). Building, hosting and recruiting: A brief introduction to running behavioral experiments online. Brain Sciences, 10(4), 251.

- Scherer, K. R., & Ellgring, H. (2007). Multimodal expression of emotion: Affect programs or componential appraisal patterns? Emotion, 7(1), 158–171.

- Seth, A. K., & Friston, K. J. (2016). Active interoceptive inference and the emotional brain. Philosophical Transactions of the Royal Society B: Biological Sciences, 371(1708), 20160007.

- Shepherd, J. L., & Rippon, D. (2022). The impact of briefly observing faces in opaque facial masks on emotion recognition and empathic concern. Quarterly Journal of Experimental Psychology, 76(2), 404–418.

- Shimizu, H. (2016). An introduction to the statistical free software HAD: Suggestions to improve teaching, learning and practice data analysis. Journal of Media, Information and Communication, 1, 59–73. (In Japanese).

- Shoji, M., Mehling, W. E., Hautzinger, M., & Herbert, B. M. (2018). Investigating multidimensional interoceptive awareness in a Japanese population: Validation of the Japanese MAIA-J. Frontiers in Psychology, 9, 1855.

- Sifneos, P. E. (1973). The prevalence of “alexithymic” characteristics in psychosomatic patients. Psychotherapy and Psychosomatics, 22, 255–262.

- Slessor, G., Insch, P., Donaldson, I., Sciaponaite, V., Adamowicz, M., & Phillips, L. H. (2022). Adult age differences in using information from the eyes and mouth to make decisions about others’ emotions. Journals of Gerontology: Psychological Sciences, 77(12), 2241–2251.

- Swain, R. H., O’Hare, A. J., Brandley, K., & Tye Gardner, A. (2022). Individual differences in social intelligence and perception of emotion expression of masked and unmasked faces. Cognitive Research: Principles and Implications, 7, 54.

- Tanaka, J. W., Kaiser, M. D., Butler, S., & Le Grand, R. (2012). Mixed emotions: Holistic and analytic perception of facial expressions. Cognition and Emotion, 26(6), 961–977.

- Tanaka, Y., Ito, Y., Terasawa, Y., & Umeda, S. (2023). Modulation of heartbeat-evoked potential and cardiac cycle effect by auditory stimuli. Biological Psychology, 182, 108637.

- Terasawa, Y., Moriguchi, Y., Tochizawa, S., & Umeda, S. (2014). Interoceptive sensitivity predicts sensitivity to the emotions of others. Cognition and Emotion, 28(8), 1435–1448.

- Tsantani, M., Podgajecka, V., Gray, K. L. H., & Cook, R. (2022). How does the presence of a surgical face mask impair the perceived intensity of facial emotions? PLoS ONE, 17(1), e0262344.

- van Dyck, Z., Vögele, C., Blechert, J., Lutz, A. P. C., Schulz, A., & Herbert, B. M. (2016). The water load test as a measure of gastric interoception: Development of a two-stage protocol and application to a healthy female population. PLoS ONE, 11(9), e0163574.

- Ventura-Bort, C., Wendt, J., & Weymar, M. (2021). The role of interoceptive sensibility and emotional conceptualization for the experience of emotions. Frontiers in Psychology, 12, 712418.

- Wallman-Jones, A., Lewis, J., Mackintosh, K., & Eaves, D. L. (2022). Acute physical-activity related increases in interoceptive ability are not enhanced with simultaneous interoceptive attention. Scientific Reports, 12(1), 15261.

- Weng, H. Y., Feldman, J. L., Leggio, L., Napadow, V., Park, J., & Price, C. J. (2021). Interventions and manipulations of interoception. Trends in Neurosciences, 44, 52–62.

- Wong, H. K., & Estudillo, A. J. (2022). Face masks affect emotion categorisation, age estimation, recognition, and gender classification from faces. Cognitive Research: Principles and Implications, 7, 91.

- Yuki, M., Maddux, W. W., & Masuda, T. (2007). Are the windows to the soul the same in the East and West? Cultural differences in using the eyes and mouth as cues to recognize emotions in Japan and the United States. Journal of Experimental Social Psychology, 43(2), 303–311.

| Subscale of MAIA | Interoceptive Awareness | Anger: No-Mask | Anger: Mask | Disgust: No-Mask | Disgust: Mask | Fear: No-Mask | Fear: Mask | Happiness: No-Mask | Happiness: Mask | Sadness: No-Mask | Sadness: Mask | Surprise: No-Mask | Surprise: Mask | Neutral: No-Mask | Neutral: Mask |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Noticing | Higher Group | 71.32 (22.26) | 68.63 (65.73) | 60.54 (25.17) | 51.47 (25.70) | 21.32 (22.12) | 11.28 (12.06) | 97.55 (6.62) | 95.83 (8.90) | 65.44 (23.40) | 50.25 (26.75) | 93.63 (8.79) | 75.74 (16.47) | 93.63 (8.79) | 75.74 (16.47) |
| | Lower Group | 66.13 (25.04) | 65.73 (28.86) | 56.45 (25.18) | 44.36 (25.17) | 18.55 (20.88) | 8.87 (15.26) | 95.16 (14.32) | 93.55 (16.73) | 66.13 (22.41) | 51.61 (25.36) | 91.13 (16.83) | 77.42 (21.51) | 91.13 (16.83) | 77.42 (21.51) |
| Not-distracting | Higher Group | 69.74 (25.77) | 68.42 (27.99) | 56.91 (22.27) | 53.29 (24.26) | 18.42 (21.70) | 13.16 (15.90) | 96.38 (12.97) | 94.74 (15.00) | 67.76 (19.63) | 51.97 (25.59) | 90.79 (15.57) | 72.70 (19.46) | 90.79 (15.57) | 72.70 (19.46) |
| | Lower Group | 69.03 (21.31) | 66.76 (21.39) | 60.80 (27.44) | 44.89 (26.32) | 21.88 (21.59) | 7.96 (10.12) | 96.86 (7.20) | 95.17 (9.80) | 63.92 (25.46) | 49.72 (26.75) | 94.32 (8.72) | 79.55 (17.07) | 94.32 (8.72) | 79.55 (17.07) |
| Attention Regulation | Higher Group | 66.96 (23.40) | 66.67 (25.10) | 58.93 (24.74) | 49.70 (23.67) | 21.13 (21.82) | 9.52 (9.49) | 98.21 (6.52) | 95.24 (9.94) | 65.48 (23.07) | 47.62 (27.92) | 92.56 (9.59) | 76.49 (17.51) | 92.56 (9.59) | 76.49 (17.51) |
| | Lower Group | 71.88 (23.30) | 68.44 (24.18) | 59.06 (25.79) | 47.81 (27.72) | 19.38 (21.55) | 11.25 (16.46) | 95.00 (12.91) | 94.69 (14.68) | 65.94 (22.99) | 54.06 (23.92) | 92.81 (14.95) | 76.25 (19.57) | 92.81 (14.95) | 76.25 (19.57) |
| Emotional Awareness | Higher Group | 67.86 (25.60) | 60.36 (28.52) | 58.57 (26.74) | 52.86 (24.65) | 20.36 (23.89) | 11.43 (12.99) | 98.21 (5.38) | 95.71 (7.39) | 64.29 (23.71) | 49.64 (27.20) | 93.57 (8.23) | 77.50 (16.27) | 93.57 (8.23) | 77.50 (16.27) |
| | Lower Group | 70.48 (21.72) | 72.87 (19.73) | 59.31 (24.10) | 45.75 (26.10) | 20.21 (19.94) | 9.57 (13.60) | 95.48 (12.62) | 94.42 (15.16) | 66.76 (22.46) | 51.60 (25.49) | 92.02 (14.84) | 75.53 (20.01) | 92.02 (14.84) | 75.53 (20.01) |
| Body-listening | Higher Group | 69.29 (24.96) | 62.50 (25.14) | 58.97 (26.44) | 52.45 (24.10) | 17.40 (19.27) | 17.39 (19.27) | 97.01 (7.54) | 94.57 (10.09) | 62.77 (22.90) | 45.38 (26.92) | 93.21 (9.02) | 76.36 (17.33) | 93.21 (9.02) | 76.36 (17.33) |
| | Lower Group | 69.44 (21.43) | 73.96 (22.44) | 59.03 (23.64) | 44.10 (26.97) | 23.96 (23.98) | 11.46 (16.47) | 96.18 (12.96) | 95.49 (14.99) | 69.44 (22.65) | 57.64 (23.58) | 92.01 (15.85) | 76.39 (20.00) | 92.01 (15.85) | 76.39 (20.00) |
| Trusting | Higher Group | 66.29 (21.31) | 66.29 (23.90) | 56.82 (24.43) | 51.90 (27.62) | 21.59 (23.86) | 9.09 (10.49) | 98.49 (5.19) | 95.83 (7.44) | 70.08 (20.24) | 54.92 (25.57) | 93.94 (8.91) | 76.14 (18.58) | 93.94 (8.91) | 76.14 (18.58) |
| | Lower Group | 71.43 (24.61) | 68.37 (25.14) | 60.46 (25.69) | 46.68 (24.18) | 19.39 (20.10) | 11.22 (14.93) | 95.41 (12.42) | 94.39 (14.89) | 62.76 (24.27) | 47.96 (26.31) | 91.84 (14.33) | 76.53 (18.51) | 91.84 (14.33) | 76.53 (18.51) |
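Within one emotion, the simple interaction between mask and body-listening reflects a difference of differences between cell means: the mask effect in the higher group minus the mask effect in the lower group. The Python sketch below computes that contrast from the body-listening rows of the table above for anger and sadness, the two emotions for which the text reports lower masked accuracy in the higher body-listening group. The dictionary and function names are hypothetical, and we read the values as mean percentages correct, which the table does not state explicitly.

```python
# Body-listening cell means from the accuracy table, as {group: (no_mask, mask)}.
ANGER = {"higher": (69.29, 62.50), "lower": (69.44, 73.96)}
SADNESS = {"higher": (62.77, 45.38), "lower": (69.44, 57.64)}


def mask_effect(cell: tuple[float, float]) -> float:
    """Mask minus no-mask mean; negative values mean masking lowered accuracy."""
    no_mask, mask = cell
    return mask - no_mask


def interaction_contrast(cells: dict[str, tuple[float, float]]) -> float:
    """Difference of differences: higher-group mask effect minus lower-group mask effect."""
    return mask_effect(cells["higher"]) - mask_effect(cells["lower"])


for name, cells in (("anger", ANGER), ("sadness", SADNESS)):
    print(
        f"{name}: higher {mask_effect(cells['higher']):+.2f}, "
        f"lower {mask_effect(cells['lower']):+.2f}, "
        f"contrast {interaction_contrast(cells):+.2f}"
    )
```

For anger, the mask effect is about -6.79 points in the higher group and +4.52 in the lower group. The contrast is descriptive only: it shows the direction of the pattern but does not reproduce the F tests, which also depend on within-participant variability.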

| Subscale of MAIA | Interoceptive Awareness | Anger (No-Mask) | Anger (Mask) | Disgust (No-Mask) | Disgust (Mask) | Fear (No-Mask) | Fear (Mask) | Happiness (No-Mask) | Happiness (Mask) | Sadness (No-Mask) | Sadness (Mask) | Surprise (No-Mask) | Surprise (Mask) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Noticing | Higher Group | 3.02 (0.70) | 2.87 (0.63) | 2.97 (0.70) | 3.55 (0.85) | 3.21 (0.61) | 3.83 (0.70) | 7.57 (1.10) | 7.14 (0.81) | 3.44 (0.65) | 3.35 (0.54) | 5.27 (0.71) | 5.98 (0.89) |
| | Lower Group | 3.11 (0.90) | 3.06 (0.88) | 3.09 (1.01) | 3.59 (0.90) | 3.38 (1.00) | 3.94 (0.76) | 7.32 (1.06) | 6.97 (0.93) | 3.48 (0.56) | 3.57 (0.86) | 5.18 (0.73) | 4.98 (0.87) |
| Not-distracting | Higher Group | 3.04 (0.79) | 2.95 (0.78) | 2.96 (0.90) | 3.50 (0.89) | 3.29 (0.82) | 3.78 (0.68) | 7.47 (0.91) | 7.02 (0.87) | 3.48 (0.56) | 3.45 (0.74) | 5.12 (0.74) | 4.96 (0.80) |
| | Lower Group | 3.06 (0.78) | 2.93 (0.71) | 3.07 (0.77) | 3.62 (0.85) | 3.26 (0.76) | 3.95 (0.76) | 7.48 (1.22) | 7.13 (0.85) | 3.43 (0.66) | 3.42 (0.63) | 5.33 (0.69) | 5.12 (0.95) |
| Attention Regulation | Higher Group | 3.24 (0.74) | 3.06 (0.71) | 3.13 (0.85) | 3.64 (0.84) | 3.38 (0.72) | 3.95 (0.62) | 7.29 (1.25) | 6.92 (0.95) | 3.33 (0.62) | 3.53 (0.62) | 5.17 (0.75) | 5.05 (0.88) |
| | Lower Group | 2.86 (0.78) | 2.82 (0.76) | 2.90 (0.79) | 3.49 (0.90) | 3.17 (0.84) | 3.79 (0.82) | 7.67 (0.84) | 7.24 (0.72) | 3.58 (0.58) | 3.32 (0.73) | 5.30 (0.68) | 5.04 (0.89) |
| Emotional Awareness | Higher Group | 3.11 (0.78) | 2.96 (0.76) | 3.06 (0.76) | 3.63 (1.00) | 3.41 (0.77) | 3.97 (0.72) | 7.75 (0.90) | 7.17 (0.81) | 3.45 (0.64) | 3.43 (0.67) | 5.19 (0.64) | 5.27 (0.80) |
| | Lower Group | 3.01 (0.78) | 2.92 (0.73) | 2.98 (0.89) | 3.52 (0.76) | 3.17 (0.78) | 3.80 (0.72) | 7.27 (1.17) | 7.01 (0.89) | 3.46 (0.59) | 3.43 (0.70) | 5.27 (0.77) | 4.87 (0.91) |
| Body-listening | Higher Group | 3.11 (0.74) | 2.98 (0.64) | 3.01 (0.75) | 3.57 (0.80) | 3.31 (0.69) | 3.92 (0.70) | 7.51 (1.17) | 7.04 (0.93) | 3.42 (0.62) | 3.44 (0.58) | 5.27 (0.69) | 5.20 (0.76) |
| | Lower Group | 2.98 (0.84) | 2.88 (0.85) | 3.02 (0.93) | 3.56 (0.95) | 3.23 (0.89) | 3.82 (0.75) | 7.43 (0.98) | 7.13 (0.75) | 3.50 (0.60) | 3.41 (0.80) | 5.19 (0.76) | 4.84 (0.99) |
| Trusting | Higher Group | 3.09 (0.74) | 3.02 (0.64) | 2.94 (0.75) | 3.35 (0.75) | 3.27 (0.80) | 3.76 (0.68) | 7.54 (1.12) | 7.07 (0.91) | 3.36 (0.53) | 3.38 (0.62) | 5.11 (0.73) | 4.98 (0.88) |
| | Lower Group | 3.02 (0.81) | 2.89 (0.80) | 3.07 (0.89) | 3.71 (0.91) | 3.28 (0.78) | 3.95 (0.75) | 7.43 (1.06) | 7.08 (0.82) | 3.52 (0.66) | 3.46 (0.72) | 5.32 (0.70) | 5.09 (0.89) |

| Subscale of MAIA | Interoceptive Awareness | Anger (No-Mask) | Anger (Mask) | Disgust (No-Mask) | Disgust (Mask) | Fear (No-Mask) | Fear (Mask) | Happiness (No-Mask) | Happiness (Mask) | Sadness (No-Mask) | Sadness (Mask) | Surprise (No-Mask) | Surprise (Mask) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Noticing | Higher Group | 6.80 (1.00) | 6.79 (0.94) | 6.24 (1.22) | 6.24 (1.05) | 6.80 (0.95) | 6.77 (0.95) | 6.79 (1.34) | 6.47 (1.12) | 5.23 (1.01) | 5.53 (1.19) | 5.27 (0.71) | 5.08 (0.89) |
| | Lower Group | 6.53 (1.09) | 6.38 (0.89) | 5.89 (1.34) | 5.99 (1.07) | 6.50 (1.03) | 6.21 (1.04) | 6.59 (1.12) | 6.26 (0.99) | 5.03 (1.22) | 5.11 (1.04) | 5.18 (0.73) | 4.98 (0.87) |
| Not-distracting | Higher Group | 6.74 (1.10) | 6.57 (0.98) | 6.01 (1.40) | 5.90 (1.16) | 6.67 (1.07) | 6.45 (1.13) | 6.50 (1.41) | 6.19 (1.18) | 5.05 (1.21) | 5.33 (1.15) | 5.12 (0.74) | 4.96 (0.80) |
| | Lower Group | 6.66 (0.99) | 6.69 (0.90) | 6.20 (1.15) | 6.36 (0.92) | 6.70 (0.92) | 6.65 (0.90) | 6.90 (1.10) | 6.56 (0.96) | 5.24 (0.99) | 5.41 (1.15) | 5.33 (0.69) | 5.12 (0.95) |
| Attention Regulation | Higher Group | 6.25 (1.05) | 6.41 (0.97) | 5.97 (1.26) | 5.92 (1.10) | 6.46 (1.03) | 6.40 (0.98) | 6.46 (1.39) | 6.21 (1.16) | 5.21 (1.18) | 5.24 (1.10) | 5.17 (0.75) | 5.05 (0.88) |
| | Lower Group | 6.89 (1.00) | 6.86 (0.85) | 6.27 (1.27) | 6.39 (0.97) | 6.92 (0.89) | 6.72 (1.03) | 6.98 (1.06) | 6.58 (0.96) | 5.09 (1.00) | 5.51 (1.20) | 5.30 (0.68) | 5.04 (0.89) |
| Emotional Awareness | Higher Group | 6.67 (0.92) | 6.66 (0.84) | 6.08 (1.19) | 6.11 (1.14) | 6.67 (0.87) | 6.28 (1.24) | 6.78 (1.49) | 6.28 (1.24) | 5.22 (0.96) | 5.26 (1.11) | 5.27 (0.80) | 5.27 (0.80) |
| | Lower Group | 6.72 (1.12) | 6.61 (1.01) | 6.13 (1.33) | 6.17 (1.01) | 6.71 (1.08) | 6.47 (0.94) | 6.67 (1.07) | 6.47 (0.94) | 5.10 (1.19) | 5.46 (1.17) | 4.87 (0.91) | 4.87 (0.91) |
| Body-listening | Higher Group | 6.71 (1.03) | 6.56 (0.98) | 6.07 (1.26) | 6.05 (1.05) | 6.70 (1.06) | 6.58 (1.02) | 6.72 (1.41) | 6.32 (1.19) | 5.18 (1.12) | 5.42 (1.16) | 5.27 (0.69) | 5.20 (0.76) |
| | Lower Group | 6.69 (1.06) | 6.72 (0.89) | 6.16 (1.29) | 6.27 (1.07) | 6.67 (0.91) | 6.53 (1.02) | 6.71 (1.05) | 6.48 (0.91) | 5.12 (1.07) | 5.32 (1.15) | 5.19 (0.76) | 4.84 (0.99) |
| Trusting | Higher Group | 6.64 (1.00) | 6.45 (0.91) | 5.88 (1.19) | 5.99 (1.06) | 6.55 (0.97) | 6.43 (0.98) | 6.81 (1.24) | 6.35 (0.98) | 4.99 (1.21) | 5.11 (1.02) | 5.11 (0.73) | 4.98 (0.88) |
| | Lower Group | 6.74 (1.07) | 6.75 (0.94) | 6.27 (1.30) | 6.25 (1.05) | 6.78 (1.00) | 6.65 (1.03) | 6.65 (1.28) | 6.44 (1.15) | 5.26 (1.01) | 5.55 (1.20) | 5.32 (0.70) | 5.09 (0.89) |

| Subscale of MAIA | Presence of Mask: F(1, 80) | Presence of Mask: ηp² | Emotion: F(6, 480) | Emotion: ηp² | Interoceptive Awareness: F(1, 80) | Interoceptive Awareness: ηp² | Presence of Mask × Emotion: F(6, 480) | Presence of Mask × Emotion: ηp² | Presence of Mask × Interoceptive Awareness: F(1, 80) | Presence of Mask × Interoceptive Awareness: ηp² | Emotion × Interoceptive Awareness: F(6, 480) | Emotion × Interoceptive Awareness: ηp² | Presence of Mask × Emotion × Interoceptive Awareness: F(6, 480) | Presence of Mask × Emotion × Interoceptive Awareness: ηp² |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Noticing | 96.896 *** | 0.548 | 184.085 *** | 0.697 | 0.376 | 0.005 | 13.140 *** | 0.141 | 0.028 | <0.001 | 0.809 | 0.010 | 0.406 | 0.005 |
| Not-distracting | 106.530 *** | 0.571 | 190.851 *** | 0.705 | 0.004 | <0.001 | 14.898 *** | 0.157 | 5.206 * | 0.061 | 0.538 | 0.007 | 2.131 | 0.026 |
| Attention Regulation | 103.737 *** | 0.565 | 191.863 *** | 0.706 | 0.109 | 0.0014 | 14.493 *** | 0.153 | 0.072 | <0.001 | 0.265 | 0.003 | 0.885 | 0.011 |
| Emotional Awareness | 101.440 *** | 0.559 | 189.703 *** | 0.703 | 0.0003 | <0.001 | 13.972 *** | 0.149 | 0.004 | <0.001 | 0.895 | 0.011 | 1.773 | 0.022 |
| Body-listening | 101.759 *** | 0.560 | 190.628 *** | 0.704 | 0.892 | 0.011 | 14.825 *** | 0.156 | 0.062 | <0.001 | 1.494 | 0.018 | 2.966 * | 0.036 |
| Trusting | 97.836 *** | 0.550 | 187.234 *** | 0.701 | 0.987 | 0.012 | 14.641 *** | 0.155 | 0.453 | 0.006 | 0.856 | 0.011 | 1.276 | 0.016 |
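
Because partial eta squared is defined as SS_effect / (SS_effect + SS_error), and F = (SS_effect / df_effect) / (SS_error / df_error), each ηp² in these tables can be recovered from its F ratio and degrees of freedom as ηp² = F·df_effect / (F·df_effect + df_error). The minimal Python sketch below assumes the tabled effect sizes were computed this way; the function name `partial_eta_squared` is hypothetical and not taken from the authors' analysis.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared from an F ratio and its degrees of freedom.

    Follows from eta_p^2 = SS_effect / (SS_effect + SS_error) and
    F = (SS_effect / df_effect) / (SS_error / df_error), which give
    eta_p^2 = F * df_effect / (F * df_effect + df_error).
    """
    if f_value < 0 or df_effect <= 0 or df_error <= 0:
        raise ValueError("F must be non-negative and degrees of freedom positive")
    numerator = f_value * df_effect
    return numerator / (numerator + df_error)


# Noticing row of the accuracy ANOVA: main effects of mask and emotion
print(round(partial_eta_squared(96.896, 1, 80), 3))    # 0.548
print(round(partial_eta_squared(184.085, 6, 480), 3))  # 0.697
```

Applied to the Noticing row, this reproduces the tabled effect sizes for the mask (0.548) and emotion (0.697) main effects.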

| Subscale of MAIA | Presence of Mask: F(1, 80) | Presence of Mask: ηp² | Emotion: F(5, 400) | Emotion: ηp² | Interoceptive Awareness: F(1, 80) | Interoceptive Awareness: ηp² | Presence of Mask × Emotion: F(5, 400) | Presence of Mask × Emotion: ηp² | Presence of Mask × Interoceptive Awareness: F(1, 80) | Presence of Mask × Interoceptive Awareness: ηp² | Emotion × Interoceptive Awareness: F(5, 400) | Emotion × Interoceptive Awareness: ηp² | Presence of Mask × Emotion × Interoceptive Awareness: F(5, 400) | Presence of Mask × Emotion × Interoceptive Awareness: ηp² |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Noticing | 5.487 * | 0.064 | 480.767 *** | 0.857 | 0.095 | 0.001 | 26.938 *** | 0.252 | 0.301 | 0.004 | 0.999 | 0.012 | 0.447 | 0.006 |
| Not-distracting | 4.951 * | 0.058 | 514.413 *** | 0.865 | 0.479 | 0.006 | 29.405 *** | 0.269 | 0.432 | 0.005 | 0.306 | 0.004 | 0.406 | 0.005 |
| Attention Regulation | 5.119 * | 0.060 | 535.225 *** | 0.870 | 0.204 | 0.003 | 30.585 *** | 0.277 | 1.070 | 0.013 | 2.797 | 0.034 | 2.207 | 0.027 |
| Emotional Awareness | 5.223 * | 0.061 | 513.054 *** | 0.865 | 2.265 | 0.028 | 30.231 *** | 0.274 | 0.060 | <0.001 | 0.640 | 0.008 | 3.036 * | 0.037 |
| Body-listening | 4.788 * | 0.056 | 510.822 *** | 0.865 | 0.527 | 0.007 | 29.488 *** | 0.269 | 0.361 | 0.004 | 0.425 | 0.005 | 0.990 | 0.012 |
| Trusting | 4.350 * | 0.052 | 503.189 *** | 0.863 | 0.683 | 0.008 | 27.557 *** | 0.256 | 0.609 | 0.008 | 0.784 | 0.010 | 0.918 | 0.011 |
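
The asterisks in these tables mark p-value thresholds for each F ratio. The Python sketch below, which uses only the standard library, converts an F ratio and its degrees of freedom into an upper-tail p-value through the regularized incomplete beta function, P(F > f) = I_x(df_error/2, df_effect/2) with x = df_error / (df_error + df_effect·f), evaluated with the modified Lentz continued fraction described in Numerical Recipes. The star thresholds (* p < 0.05, ** p < 0.01, *** p < 0.001) are the usual convention and are an assumption here, and the sketch uses the nominal, sphericity-assumed degrees of freedom; if a correction such as Greenhouse-Geisser was applied, p-values would differ. All function names are hypothetical.

```python
import math


def _beta_continued_fraction(a: float, b: float, x: float) -> float:
    """Continued fraction for the incomplete beta function (modified Lentz method)."""
    max_iter, eps, tiny = 300, 3e-14, 1e-300
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        for aa in (
            m * (b - m) * x / ((qam + m2) * (a + m2)),           # even term
            -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2)),  # odd term
        ):
            d = 1.0 + aa * d
            d = 1.0 / (d if abs(d) > tiny else tiny)
            c = 1.0 + aa / c
            c = c if abs(c) > tiny else tiny
            delta = d * c
            h *= delta
        if abs(delta - 1.0) < eps:  # converged on the odd step
            break
    return h


def regularized_incomplete_beta(a: float, b: float, x: float) -> float:
    """I_x(a, b) for a, b > 0 and 0 <= x <= 1."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    log_front = (math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                 + a * math.log(x) + b * math.log1p(-x))
    front = math.exp(log_front)
    # Use the symmetry I_x(a, b) = 1 - I_{1-x}(b, a) where the fraction converges faster
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _beta_continued_fraction(a, b, x) / a
    return 1.0 - front * _beta_continued_fraction(b, a, 1.0 - x) / b


def f_test_p_value(f_value: float, df_effect: float, df_error: float) -> float:
    """Upper-tail p-value P(F > f_value) for an F(df_effect, df_error) distribution."""
    if f_value <= 0.0:
        return 1.0
    x = df_error / (df_error + df_effect * f_value)
    return regularized_incomplete_beta(df_error / 2.0, df_effect / 2.0, x)


def significance_stars(p: float) -> str:
    """Asterisks under the assumed conventional thresholds .05, .01, .001."""
    if p < 0.001:
        return "***"
    if p < 0.01:
        return "**"
    if p < 0.05:
        return "*"
    return ""


print(significance_stars(f_test_p_value(96.896, 1, 80)))  # ***
print(significance_stars(f_test_p_value(5.206, 1, 80)))   # *
```

With the nominal df, the Not-distracting mask × interoceptive awareness effect in the accuracy analysis, F(1, 80) = 5.206, falls in the * band, consistent with its marking.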

| Subscale of MAIA | Presence of Mask: F(1, 80) | Presence of Mask: ηp² | Emotion: F(5, 400) | Emotion: ηp² | Interoceptive Awareness: F(1, 80) | Interoceptive Awareness: ηp² | Presence of Mask × Emotion: F(5, 400) | Presence of Mask × Emotion: ηp² | Presence of Mask × Interoceptive Awareness: F(1, 80) | Presence of Mask × Interoceptive Awareness: ηp² | Emotion × Interoceptive Awareness: F(5, 400) | Emotion × Interoceptive Awareness: ηp² | Presence of Mask × Emotion × Interoceptive Awareness: F(5, 400) | Presence of Mask × Emotion × Interoceptive Awareness: ηp² |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Noticing | 5.615 * | 0.066 | 61.638 *** | 0.435 | 3.489 | 0.042 | 4.028 *** | 0.048 | 1.440 | 0.018 | 0.435 | 0.005 | 0.566 | 0.007 |
| Not-distracting | 4.863 * | 0.057 | 66.855 *** | 0.455 | 1.727 | 0.021 | 4.486 ** | 0.053 | 1.042 | 0.003 | 0.656 | 0.008 | 0.758 | 0.009 |
| Attention Regulation | 4.463 * | 0.048 | 67.979 *** | 0.459 | 4.072 * | 0.048 | 4.675 ** | 0.055 | 0.279 | 0.003 | 1.095 | 0.014 | 1.558 | 0.019 |
| Emotional Awareness | 4.512 * | 0.053 | 64.830 *** | 0.448 | 0.001 | <0.001 | 4.319 ** | 0.051 | 0.027 | <0.001 | 0.232 | 0.003 | 2.804 * | 0.034 |
| Body-listening | 4.272 * | 0.051 | 67.390 *** | 0.457 | 0.004 | <0.001 | 4.460 ** | 0.053 | 0.115 | 0.0014 | 0.646 | 0.008 | 0.974 | 0.012 |
| Trusting | 4.987 * | 0.059 | 65.735 *** | 0.451 | 1.795 | 0.022 | 4.399 ** | 0.052 | 0.548 | 0.007 | 0.711 | 0.009 | 0.805 | 0.010 |
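
The degrees of freedom in the column headings follow from the design: presence of mask and emotion vary within participants, while the higher/lower interoceptive-awareness grouping varies between them. For a mixed ANOVA with one between-subjects factor and uncorrected df, an effect has df_effect equal to the product of (levels - 1) over the within-subjects factors it involves, multiplied by (groups - 1) if the grouping is involved, and df_error equal to that within-subjects product multiplied by (N - groups). The Python sketch below is illustrative: the function name is hypothetical, the 82 participants are inferred from an error df of 80 with two groups, and the seven versus six emotion levels are inferred from F(6, 480) in the accuracy analysis versus F(5, 400) in the other two.

```python
from math import prod


def mixed_anova_df(n_subjects, n_groups, within_levels, effect_within, includes_between):
    """Uncorrected (sphericity-assumed) df for one effect in a mixed ANOVA
    with a single between-subjects factor.

    within_levels: mapping from within-subjects factor name to its number of levels
    effect_within: names of the within-subjects factors that appear in the effect
    includes_between: whether the between-subjects grouping appears in the effect
    """
    if not effect_within and not includes_between:
        raise ValueError("effect must include at least one factor")
    # prod() of an empty sequence is 1, covering the pure between-subjects effect
    within_part = prod(within_levels[name] - 1 for name in effect_within)
    between_part = n_groups - 1 if includes_between else 1
    df_effect = within_part * between_part
    # Error term: the within-subjects part crossed with subjects nested in groups
    df_error = within_part * (n_subjects - n_groups)
    return df_effect, df_error


accuracy = {"mask": 2, "emotion": 7}  # assumed: six emotions plus neutral
ratings = {"mask": 2, "emotion": 6}   # assumed: six emotions
print(mixed_anova_df(82, 2, accuracy, ("mask",), False))           # (1, 80)
print(mixed_anova_df(82, 2, accuracy, ("mask", "emotion"), True))  # (6, 480)
print(mixed_anova_df(82, 2, ratings, ("emotion",), False))         # (5, 400)
```

Under these assumptions the same function yields F(1, 80) for every effect involving only the mask and/or the grouping, and F(6, 480) or F(5, 400) for every effect involving emotion, matching the headings.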

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yamasaki, K.; Miyata, H. Effects of Interoceptive Awareness on Recognition of and Sensitivity to Emotions in Masked Facial Stimuli. Behav. Sci. 2025, 15, 1555. https://doi.org/10.3390/bs15111555

Yamasaki K, Miyata H. Effects of Interoceptive Awareness on Recognition of and Sensitivity to Emotions in Masked Facial Stimuli. Behavioral Sciences. 2025; 15(11):1555. https://doi.org/10.3390/bs15111555

Chicago/Turabian Style: Yamasaki, Kaho, and Hiromitsu Miyata. 2025. "Effects of Interoceptive Awareness on Recognition of and Sensitivity to Emotions in Masked Facial Stimuli" Behavioral Sciences 15, no. 11: 1555. https://doi.org/10.3390/bs15111555

APA Style: Yamasaki, K., & Miyata, H. (2025). Effects of Interoceptive Awareness on Recognition of and Sensitivity to Emotions in Masked Facial Stimuli. Behavioral Sciences, 15(11), 1555. https://doi.org/10.3390/bs15111555