Abstract

Identifying consciousness in other creatures, be they animals or exotic creatures that have yet to be discovered, remains a great scientific challenge. We delineate the first three steps that we think are necessary for identifying consciousness in other creatures. Step 1 is to define the particular kind of consciousness in which one is interested. Step 2 is to identify, in humans, the key differences between the brain processes that are associated with consciousness and the brain processes that are not associated with consciousness. For Step 2, to identify these differences, we focus on passive frame theory. Step 3 concerns how the insights derived from consciousness research on humans (e.g., concerning these differences) can be generalized to other creatures. We discuss the significance of examining how consciousness was fashioned by the process of evolution, a process that could be happenstance and replete with incessant tinkering, yielding adaptations that can be suboptimal and counterintuitive, far different in nature from our efficiently designed robotic systems. We conclude that the more that is understood about the differences between conscious processing and unconscious processing in humans, the easier it will be to identify consciousness in other creatures.

1. Introduction

The nature of consciousness, and how brains produce it, remains one of the greatest mysteries in science [1,2,3,4]. A related challenge is how to identify consciousness in other creatures [5], be they animals or exotic creatures that have not yet been discovered. Here, we attempt to delineate the first three steps that, in our estimation, are necessary for identifying consciousness in other creatures.

Step 1 is to define the particular kind of consciousness in which one is interested. To this end, we are referring to basic, low-level consciousness (e.g., the subjective experience of pain, breathlessness, nausea, yellow afterimages, or ringing in one’s ears). This basic form of consciousness has been called ‘sentience’ [6], ‘subjective experience’, ‘phenomenal state’, and ‘qualia’ [7]. In this article, when we discuss consciousness, we are discussing this most basic form of consciousness, which is an experience of any kind. It might be ringing in the ears, a bright light, an afterimage, the smell of lavender, a song stuck in one’s head (an ‘ear worm’), or a phone number that one is trying to remember. A conscious content is any particular thing of which one is conscious (e.g., a percept, urge, or memory). The conscious field is composed of all that one is conscious of at one moment in time.

When researchers speak about the puzzle of consciousness, and of how the brain produces it, they are referring to this most basic form of consciousness. This basic form of consciousness is contrasted with processes that are not associated with consciousness (e.g., the control of peristalsis or of the pupillary reflex). The empirical question is as follows: What is special about the brain circuitry that leads to conscious states, and why does the circuitry for peristalsis or the pupillary reflex, for example, not cause such states?

Other forms of consciousness (e.g., the “narrative self” [8]) involve cognitive processes beyond those of basic consciousness. With this in mind, it is important to note that the mystery of basic consciousness (that is, how neural activity can give rise to an experience of any kind) would apply to conscious states that lack many of the sophisticated features that are often part of conscious states. The job of explaining basic consciousness is not the job of explaining these high-level features that are often, but not always, coupled with conscious states. Rather, the job is to explain how neural circuitry can give rise to an experience of any kind. For example, the mystery applies to conscious states that lack spatial extension, as in the case of some olfactory experiences. In addition, the mystery would apply to conscious states that are of extremely brief duration, as in the case of a hypothetical creature who lived for only a few seconds and was conscious during that span. The mystery applies to states that are utterly nonsensical, as in some hallucinations, anosognosia, and the dream world. Thus, for the mysterious phenomenon of consciousness to be instantiated, the phenomenon need not require (as far as we know) spatial extension, an extended duration, meaningfulness, or complexity regarding its contents. It might be that future research will reveal that one or more of these features is necessary for there to be a consciousness of any kind, but at present, as far as we know, it seems that these states could exist in some form, and be equally mysterious, when these features are absent.

What is the difference between conscious and unconscious brain processes? Regarding this contrast, it is important to note that, in every field of inquiry within psychology and neuroscience, the contrast is made between conscious processes and unconscious processes. For example, in the field of perception, there exists the distinction between supra- versus subliminal processing; in the field of memory, there is the distinction between ‘declarative’ (explicit) processes and ‘procedural’ (implicit) processes [9,10]. Similarly, in the field of motor control, the conscious aspects of voluntary behavior are contrasted with the unconscious aspects of motor programming [11], including the implicit learning of motor sequences [12] and the unconscious processes involved in speech production [13]. More generally, in many fields of study, there exists the contrast between ‘controlled’ processing, which tends to be associated with consciousness, and ‘automatic’ processing, which tends to be associated with unconscious mechanisms [14].

2. The Neural Correlates of Conscious Processing

Historically, consciousness has been linked to the neural processes associated with perception (the “sensorium hypothesis”). Except for olfaction, most of these processes are housed in posterior areas of the brain. The association of consciousness with the sensorium stems from the observations that percepts are a common component of the conscious field and that motor programming (which muscle fibers should contract at which time to carry out an action) is believed to be largely unconscious [11].

It seems that the conscious processing of a mental representation (e.g., a percept) involves a wider and more diverse network of brain areas than does the subliminal (unconscious) processing of the same representation [15,16]. This evidence stemmed initially from research on anesthesia (e.g., [17]), perception [16], and unresponsive states (e.g., comatose or vegetative states [18]). Research in binocular rivalry suggests that some mode of interaction between widespread brain areas is important for consciousness [19,20]. Consistent with these views, actions that are consciously mediated involve more widespread activations in the brain than do similar but unconsciously mediated actions [21]. Accordingly, when actions are decoupled from consciousness (e.g., in neurological disorders), the actions often appear impulsive or inappropriate, as if they are not adequately influenced by all the kinds of information by which they should be influenced. One challenge in isolating the neural correlates of consciousness is that it is difficult to determine whether one is also including, in the neural correlates, activities that only support consciousness or the processes associated with the self-report of conscious contents [22].

Regarding the neuroanatomy responsible for consciousness, investigators have focused on vision. In vision research, controversy remains regarding whether consciousness depends on higher-order perceptual regions [23,24] or lower-order regions [25,26]. Some theorists have proposed that, while the cortex may elaborate the contents of consciousness, consciousness is primarily a function of subcortical structures [27,28]. Regarding the frontal lobes, it has been proposed that, although they are involved in cognitive control, they may not be essential for the generation of basic consciousness [27,29]. This hypothesis is based in part on research on anencephaly [27], the disruption of cortical functions (e.g., through direct brain stimulation or ablation [28]), and the psychophysiology of dream consciousness, which involves prefrontal deactivations [30]. The surgical procedure of frontal lobotomy, once a common neurosurgical intervention, was never reported to render patients incapable of sustaining consciousness. However, research on patients with profound disorders of consciousness (e.g., vegetative state) suggests that signals from the frontal lobes may be essential for the instantiation of consciousness [31,32,33].

Consistent with the sensorium hypothesis, evidence suggests that perceptual regions are the primary site of consciousness. For example, electrical stimulation of parietal areas gives rise to the conscious urge to perform an action, with increased activation making subjects hallucinate that they executed the action, even though no action was performed [34,35]. In contrast, activating motor areas (e.g., premotor regions) leads to the expression of the actual action, but subjects believe that they did not perform any action whatsoever (see also [36]). The urge to perform a motor act is associated with activation of perceptual regions. Consistent with the sensorium hypothesis, the majority of studies involving brain stimulation and consciousness have found that stimulations of perceptual (e.g., posterior) brain areas lead to changes in consciousness (e.g., haptic hallucinations). In the literature, we found only one piece of evidence [36,37] in which brain stimulation of a frontal area led to conscious content. In the study, weak electrical stimulation of the pre-supplementary motor area led to the experience of the urge to move a body part, with stronger stimulation leading to movement of the same body part. It has been proposed that such activation led to feedback (e.g., corollary discharge) that is then ‘perceived’ by perceptual areas [38]. This finding is, thus, consistent with the sensorium hypothesis.

Consistent with the sensorium hypothesis, a key component of the control of intentional action is feedback about ongoing action plans to perceptual areas of the brain, such as the post-central cortex [34]. With this in mind, it has been proposed that consciousness is associated not with frontal or higher-order perceptual areas but with lower-order perceptual areas [1]. However, it is important to qualify that, though the sensorium hypothesis specifies that consciousness involves neural circuits that, traditionally, have been associated with perception, such circuits are widespread throughout the brain and exist within both cortical and subcortical regions [39].

Several features of the olfactory system render it a fruitful modality in which to isolate the substrates of consciousness. First, olfaction can reveal much about the role of thalamic nuclei in the generation of consciousness: Unlike most senses, afferents from the olfactory sensory system bypass the first-order relay thalamus and directly target the cortex ipsilaterally [40]. Second, one can draw some conclusions about second-order thalamic relays (e.g., the mediodorsal thalamic nucleus; MDNT). After cortical processing, the MDNT receives inputs from olfactory cortical regions [41]. The MDNT plays a significant role in olfactory discrimination [42], olfactory identification, and olfactory hedonics [43], as well as in more general cognitive processes including memory [44], learning [45], and attentional processes [46,47]. However, there is no evidence that a lack of olfactory consciousness results from lesions of any kind to the MDNT (see discussions about this possibility in [48]).

Consistent with ‘cortical’ theories of consciousness, complete anosmia (the loss of the sense of smell) was observed in a patient with a lesion to the left orbital gyrus of the frontal lobe [49]. In addition, a patient with a right orbitofrontal cortex (OFC) lesion experienced complete anosmia [50], suggesting that the OFC is necessary for olfactory consciousness. In addition, conscious aspects of odor discrimination have been attributed to the activities of the frontal and orbitofrontal cortices [51]. Keller [52] concludes, “There are reasons to assume that the phenomenal neural correlate of olfactory consciousness is found in the neocortical orbitofrontal cortex” (p. 6; see also [53]).

Step 2 is to identify, in humans, the key differences between the brain processes that are associated with consciousness and the brain processes that are not associated with consciousness. For Step 2, an acceptable answer to the question regarding the difference between conscious and unconscious processes cannot be that all physical things are conscious or that all neural activities are conscious, because it is posited in the question itself that, in humans, there exist unconscious processes. Hence, for Step 2, one must identify and explain the difference between conscious and unconscious processes as they exist in the human brain (not in a computer, plant, or robot).

Regarding the answer to the question posed in Step 2, the difference between conscious and unconscious processes is not as obvious as one might suppose. For example, unconscious processes are capable of both stimulus detection and issuing motor responses to stimuli, at least under some circumstances. Moreover, it is not the case that unconscious processes are less sophisticated than conscious ones. There are many examples that reveal the sophistication of unconscious processes, for example, as in the case of syntax, motor programming, and unconscious inter-sensory processing, each of which is, to a large extent, unconsciously mediated.

Once one has identified the difference between conscious and unconscious processes in humans, one should, ideally, explain how this difference accounts for the phenomenological data, including the peculiarities of individual conscious contents.

According to one theoretical framework, the Projective Consciousness Model [54], a potential constitutive marker of consciousness (in humans, at least) is the ability of the brain to create a first-person perspectival viewpoint situated within a three-dimensional spatial environment, a format that can be understood through the principles of projective geometry. Notably, such a viewpoint is experienced both during waking and dreaming. Some such advance in defining what constitutes consciousness is needed in order to move beyond tautological formulations such as the often quoted, “something it is like” [55], which actually presupposes “consciously like” (we thank Björn Merker for permission to quote this observation from an unpublished manuscript of his). It is interesting to consider whether the Projective Consciousness Model can be applied not only to visual consciousness but to olfactory consciousness, which lacks the spatial extension and dimensions of visual consciousness but does possess the separation between the conscious content (the odorant) and the observing agent [56,57,58].

Another framework that attempts to identify the difference between conscious and unconscious processes is passive frame theory (PFT [1]). In this article, we will focus on this framework for three reasons. First, PFT was developed in our laboratory and is the framework with which we are the most familiar. Hence, it is the framework with which we are most able to make inferences regarding the presence of consciousness in other creatures. Second, in line with the basic form of consciousness defined in Step 1, of all the proposed functions of consciousness, the function proposed by PFT happens to be the most basic, low-level, function. Third, pertinent to the aim of discovering the “behavioral correlates of consciousness” (the BCCs [5]), this low-level function involves overt behavior. This last property of the framework is necessary for the thought experiment presented below in Step 3.

PFT is a synthesis of a diverse group of action-based approaches to the study of consciousness and of perception-and-action coupling. According to PFT, the conscious field is necessary to integrate specific kinds of information for the benefit of action selection, a stage of processing that is neither perceptual nor motor. Action selection, as when one presses one button versus another button or moves leftward versus rightward, is distinct from motor control/motor programming processes [59], which are largely unconscious. In the functional architecture proposed by PFT, integrations and conflicts occurring at perceptual stages of processing (e.g., the ventriloquism effect and the McGurk effect [60]) can occur unconsciously, as can conflicts or integrations occurring at stages of processing involving motor programming (e.g., coordinating the specifications of articulatory gestures [61]) or smooth muscle processing (e.g., the pupillary reflex and peristalsis). The conscious field is unnecessary for such integrations and the resolution of such conflicts (e.g., in the McGurk effect) in these stages of processing.

In contrast, conflicts occurring at the processing stage of action selection must perturb the conscious field. For example, when one endures pain, holds one’s breath while underwater, or suppresses elimination behaviors, one is conscious of the inclinations to perform certain actions and of the inclinations to not perform those very same actions. Such a scenario is a conscious conflict. That is, mental representations that are associated with simultaneously activated, conflicting action plans are experienced as phenomenal states. In PFT, the conscious field benefits action selection by permitting the “collective influence” of these conflicting inclinations toward the skeletomotor output system, as captured by the principle of Parallel Responses into Skeletal Muscle (PRISM). Such a collective influence of conflicting inclinations, existing only in virtue of the conscious field, occurs when withstanding pain, holding one’s breath while underwater, or suppressing elimination behaviors.

When action control is decoupled from consciousness, as occurs in some neurological disorders (e.g., automatisms and in anarchic hand syndrome), action selection can no longer reflect collective influence (e.g., of conflicting inclinations). In such cases, actions appear impulsive and not influenced by all the kinds of information by which context-sensitive actions should be influenced. Although these unconsciously-mediated actions can be quite sophisticated (e.g., the manipulation of tools [62]), they are “un-integrated”, that is, they are not influenced by relevant contextual factors.

3. Encapsulation

Why is the conscious field often composed of conflicting inclinations? From the standpoint of PFT, this is because most of the contents that arise in the conscious field are “encapsulated” from other contents (including inclinations) and from voluntary processing. A conscious content is said to be encapsulated when its nature or occurrence cannot be influenced directly by voluntary processing or by higher-level knowledge, as in the case of perceptual illusions [63,64]. Sacks [65] refers to such conscious contents as “autonomous”, because one cannot voluntarily influence their nature or occurrence. There are countless cases in which one’s desires cannot override the nature of encapsulated conscious contents, as is obvious in the case of physical pain or nausea. Regarding perceptual illusions (e.g., the Müller-Lyer illusion), these illusions persist despite one’s knowledge regarding the true nature of the perceptual stimulus. The nature of encapsulated conscious contents has been investigated using the reflexive imagery task (RIT). In the RIT (see review in [66]), participants are instructed not to perform a certain mental operation in response to external stimuli. For example, before being presented with a line drawing of a cat, subjects might be instructed not to think of the name of the to-be-presented visual object. Despite the intentions of the subject, the undesired mental operations often arise, yielding, for example, “cat” (i.e., /k/, /æ/, and /t/) for the stimulus CAT. Substantive RIT effects (occurring in roughly half the trials) arise even when the visual object (e.g., CAT) is presented only in the periphery, and participants are focused on a separate task (e.g., the flanker task [67]). RIT effects arise even when the involuntary effect involves processes as sophisticated as word manipulations (as occur in the childhood game of Pig Latin), syntactic processing, and mental arithmetic (see review of evidence in [66]).

What determines what is activated in consciousness in the RIT? It seems that the strength of the association between the stimulus and a particular mental representation is determinant. In one RIT [68], participants first learned to associate pseudowords with nonsense objects (n = 6). One of the words was shown with the object in sixty trials, creating “strong associates”, while the other word was shown with the object in only ten or twenty trials, creating “weak associates”. Participants were later presented with each object and instructed not to think of any of the associated pseudowords. Strong associates (proportion of trials: M = 0.52, SD = 0.40) were more likely to enter consciousness than weak associates (M = 0.21, SD = 0.32), F (19) = 2.51, p = 0.021 (Cohen’s d = 0.56).

In PFT, the contents of consciousness (e.g., nausea, the experience of a yellow afterimage, or the smell of lavender) are independent from one another, stemming from content generators that are themselves independent of each other. This is analogous to how, at certain stages of music production, the various “tracks” from a recording console at a recording studio are independent from one another [69]. When one listens to a song on the radio, it is not obvious to one that each instrument composing the music heard in a song is actually recorded separately on an independent “track.” Once the many tracks composing a song are recorded, the mixing console is used to collate (“mix”) the tracks and generate one master recording that, when played on the radio or through some other medium, presents to the listener all the instruments playing simultaneously. In this analogy, the song one hears on the radio, which is actually the integrated, co-presentation of all the independent tracks, would be analogous to the conscious field, which presents the unified collation of all the conscious contents activated at one time.

According to PFT, most of the contents of consciousness are, and should be, encapsulated, not only from voluntary processing but from each other, such that one conscious content cannot directly influence the nature or occurrence of another conscious content. The fact that encapsulation is adaptive during ontogeny is obvious when one considers the negative consequences that would arise if a young child could, by an act of will, directly control the occurrence of pain, fear, or guilt [70].

It is only through the conscious field that these encapsulated contents can influence action selection collectively. Figuratively speaking, the conscious field is a mosaic composed of the collation of all the encapsulated contents that are activated at one moment in time. The conscious field is sampled only by the (unconscious) response codes of the skeletomotor output system. Unlike the ‘workspace’ models associated with the integration consensus (e.g., [71,72]), in which conscious representations are ‘broadcast’ to modules engaged in both stimulus interpretation and content generation, in PFT (as in [73]), the contents of the conscious field are directed only at (unconscious) response modules in the skeletomotor output system.

From the standpoint of PFT, the conscious field evolved as an adaptation that allows conscious contents, each of which is encapsulated, to influence action selection collectively. For example, the conscious content of a cupcake activates the desire to eat it. This urge cannot be easily turned off at will. However, the conscious field is simultaneously populated by the conscious content of, for example, the doctor reminding one to cut down on sweets. Because of the conscious field, action selection is influenced by both contents. The field simply permits these conscious contents to influence action selection simultaneously (“collective influence”). Figuratively speaking, each conscious content “does not know” of the nature of the other conscious contents and its nature is not influenced by the nature of the other contents. The field operates blindly, whether there is conflict between conscious contents or not. In this sense, it is passive.

Without the field, however, there would not be the collective influence of contents when conflicts arise. When the conscious field is absent or not operating properly, action selection arises but is not adaptive, revealing a lack of collective influence: Actions are not guided by all the kinds of information by which they should be guided. For example, one eats the cupcake without taking into account what the doctor recommended. The conscious field permits what has been called conditional discrimination, a kind of contextualized response in which one responds to stimulus X in light of stimulus Y [73,74,75,76].

We should add that, from this standpoint, a conflict is not necessary for the conscious field to operate, but the conscious field operates incessantly in order to deal with conflicts when they arise. In some ways this resembles other biological systems. For example, the kidneys filter the blood regardless of whether the blood needs filtering (similar to a pool filter).

The architecture proposed by PFT, one in which encapsulated conscious contents blindly activate their response codes, leads to the following question. Why is the conscious field necessary if each content could just activate its corresponding response code (R) and then the differential activation of Rs could determine action selection, without any need for a conscious field? Our first response to this question is that PFT is a descriptive account of brain function, describing these processes as they evolved to be. It is not a normative account, which describes processes as they ought to be designed. Thus, our first response is that, for some reason, Mother Nature solved the problem of collective influence by using the conscious field. Hypothetically, perhaps this problem could have been solved in other (unconscious) ways, as in the case of determining action selection by simply summing up the relative strength of the Rs. Our second response to this question is that, today, it is known that, considering the number of stimuli that are usually presented to the visual system alone, conditional discriminations (e.g., responding to stimulus X in light of other stimuli at that moment [74,75,76]) can become computationally impossible [77,78].

In other words, responding adaptively to one stimulus in the context of all the other stimuli in the conscious field is unsolvable through formal computational means. It is important to emphasize that this is so even when considering only visual stimuli. In everyday life, this problem is compounded by the fact that the conscious field contains contents from many more modalities than just vision, and the field is populated as well by non-sensory elements (e.g., urges, linguistic representations, and memories), all of which can influence action selection. In collective influence, the response to a stimulus is influenced by all the contents in the field. It is a response to the configuration of all stimuli.
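One way to appreciate the scale of the problem is simply to count. The following Python sketch is illustrative only and rests on simplifying assumptions made here for the sake of the example, not taken from the cited analyses [77,78]: each stimulus is treated as merely present or absent, and the function names are hypothetical. Under these assumptions, the number of distinct stimulus configurations doubles with every added stimulus, and the number of pairwise relations among stimuli grows quadratically.

```python
from math import comb


def configuration_count(n_stimuli: int) -> int:
    """Number of present/absent configurations of n stimuli (assumed binary encoding)."""
    return 2 ** n_stimuli


def pairwise_relation_count(n_stimuli: int) -> int:
    """Number of unordered stimulus pairs whose relation might matter for a response."""
    return comb(n_stimuli, 2)


if __name__ == "__main__":
    # Print how quickly both quantities grow as stimuli are added.
    for n in (5, 10, 20, 40):
        print(n, configuration_count(n), pairwise_relation_count(n))
```

A response rule that assigned a separate entry to every configuration would therefore need a table that quickly becomes unmanageable, and this count ignores graded stimulus properties and non-sensory contents altogether.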

To complicate matters, in some circumstances, the adaptive response to Stimulus X depends on the spatial distance between Stimulus Y and Stimulus Z, as occurs in soccer, for example. Whether a defender passes the ball back to the goalie can depend on the distance between a midfielder and a member of the rival team. Such circumstances are often unpredictable, thus requiring the conscious field to always represent the spatial relations amongst all the conscious contents that are activated at a given time. This example reveals that action selection should not be determined just by the relative strength of activation of the response codes activated by each stimulus in a stimulus array. Action selection must also be influenced by the relations among the stimuli. In ways that have yet to be identified, the conscious field achieves this while formal computational systems cannot.
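The contrast between selection by activation strength alone and selection that also depends on a relation among stimuli can be made concrete with a toy sketch. Everything in it is a hypothetical illustration: the response names, the activation values, the player positions, and the `safe_distance` threshold are assumptions, and the rule handles only one hand-picked relation. It is not a model of the conscious field, and it does not address the general, unpredictable case discussed above.

```python
import math


def strongest_response(activations):
    """Strength-only rule: pick the response code with the highest activation."""
    return max(activations, key=activations.get)


def relation_sensitive_response(activations, positions, safe_distance=5.0):
    """Toy rule in which one spatial relation can override activation strength.

    If the opponent is closer to the midfielder than `safe_distance`
    (a hypothetical threshold), the defender passes back to the goalie.
    """
    gap = math.dist(positions["midfielder"], positions["opponent"])
    if gap < safe_distance:
        return "pass_to_goalie"
    return strongest_response(activations)


if __name__ == "__main__":
    activations = {"pass_to_midfielder": 0.8, "pass_to_goalie": 0.5}
    crowded = {"midfielder": (10.0, 0.0), "opponent": (12.0, 0.0)}
    print(strongest_response(activations))
    print(relation_sensitive_response(activations, crowded))
```

With identical activations, the two rules can select different actions, because only the second takes the distance between the midfielder and the opponent into account.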

According to PFT, the problem of conditional discriminations and collective influence is solved, somehow, by the conscious field. The solution might be very different in nature from what engineers would design to solve this problem in a robotic system. A good analogy is found in an audio speaker. Because of its structure, configuration, and properties, an audio speaker can simultaneously reproduce, for example, all the notes that are played by a band. Let us say that the band consists of a guitarist, a bassist, and a flutist. The speaker can oscillate and reproduce continuously and simultaneously the different frequencies produced by the three musicians. At one moment, the nature of the vibration of the speaker reflects, for example, notes from both the bass guitar and the flute. It does so blindly and without any computation. It achieves this because of the way in which sine waves happen to blindly interact with each other. If one were to achieve this through computer programming, it would be an extremely complicated affair. But no such programming is needed because of the way in which sine waves happen to interact. The physical nature of the speaker, in a sense, solves a problem that would be difficult to solve through traditional computational means. Similarly, we propose that the conscious field is a trick by which Mother Nature solved the problem of collective influence and conditional discriminations. This trick might be different from the way an engineer might solve the problem.
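The interaction of sine waves in the speaker analogy is linear superposition: at each instant, the displacement of the cone is the sum of the component waves. The Python sketch below illustrates only that principle, for an idealized speaker. The frequencies assigned to the three instruments, the unit amplitudes, and the sample rate are hypothetical values chosen for the example. Note that the code evaluates the sum explicitly, one sample at a time, whereas the physical speaker performs no such step.

```python
import math


def mixture(frequencies_hz, t):
    """Displacement of an idealized speaker cone at time t (in seconds):
    the sum of unit-amplitude sine waves, one per source frequency."""
    return sum(math.sin(2 * math.pi * f * t) for f in frequencies_hz)


if __name__ == "__main__":
    # Hypothetical notes for the three musicians, in hertz.
    band = {"guitar": 330.0, "bass": 82.0, "flute": 880.0}
    sample_rate = 8000  # samples per second (assumed)
    samples = [mixture(band.values(), n / sample_rate) for n in range(8)]
    print(samples)
```

Each value in `samples` is a single number that reflects all three instruments at once, in the same way that the vibration of the speaker at one moment reflects all the notes being played.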

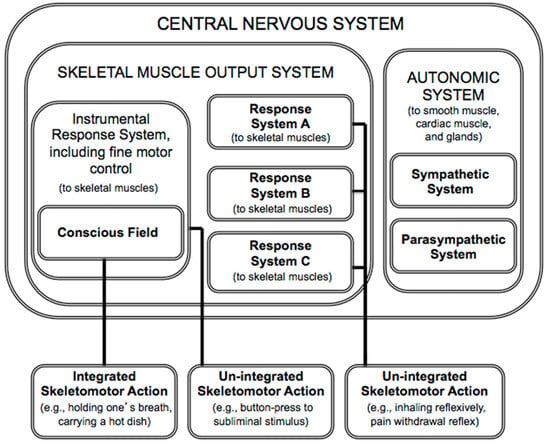

In summary, according to PFT, consciousness is for what in everyday life is called “voluntary” action. The theory proposes that consciousness serves as an information space that passively enables flexible, context-sensitive action selection, yielding integrated action. The theory also reveals that the role of consciousness is very circumscribed, serving only the skeletal muscle output system, which, in turn, subserves the somatic nervous system (Figure 1). (The somatic nervous system, which innervates skeletal muscle and relays somatic (external) sensory information, is often contrasted with the autonomic nervous system, which innervates smooth muscle, cardiac muscle, and glands, and relays visceral (internal) sensory information.)

Figure 1.

The circumscribed domain of consciousness within the nervous system (based on [1]). Response systems can influence action directly, as in the case of “un-integrated” actions. It is only through the conscious field that multiple response systems can influence action collectively, as when one holds one’s breath while underwater (a case of “integrated” action).

Step 3 concerns how the insights derived from consciousness research on humans can be generalized to other creatures. For Step 3, one must first consider that the products of evolution are not designed the way we humans may think they ought to be designed. Over eons, consciousness was fashioned by the process of evolution, a process that could be happenstance and replete with incessant modifications [79]. Hence, the products of evolution can be suboptimal and counterintuitive [79,80,81,82,83]. Such products could be far different in nature from those of robotics [84], which involve, for example, designing optimized solutions for pre-specified behavioral outcomes.

Consider two examples: the artificial heart is very different from its natural counterpart, and the difference between human locomotion and artificial locomotion is a stark one, that of legs versus wheels. Thus, when reverse engineering biological products, the roboticist cautions, “Biological systems bring a large amount of evolutionary baggage unnecessary to support intelligent behavior in their silicon-based counterparts” (p. 32, [84]). Similarly, the ethologist concludes, “To the biologist who knows the ways in which selection works and who is also aware of its limitations it is in no way surprising to find, in its constructions, some details which are unnecessary or even detrimental to survival” (p. 260, [81]). With this in mind, when reverse engineering the products of nature, the student of consciousness must abandon a normative view (which describes how things should function) and adopt instead a more humble descriptive view (which describes the products of nature as they have evolved to be; see a theoretical account of the evolution of the nervous system in [85]).

Second, one must consider that, across different creatures, the same behavioral capacity, or cognitive function, can be carried out by vastly different mechanisms. Hence, the proposal that creature X must possess consciousness because it features a certain behavioral capacity requires that several assumptions be made. In the natural world, it is not always the case that the same behavioral capacity (e.g., navigating toward a stimulus) stems from the same underlying mechanism (e.g., vision versus echolocation). Moreover, even within one species (e.g., humans), the same behavioral operation (e.g., the closing of the eyelids) could be carried out by more than one kind of mechanism. Consider the difference between an eye blink (which normally occurs automatically and does not require conscious processing) and a wink (which is a high-level action that requires conscious processing and falls under the rubric of “voluntary” action). To an observer, these two actions might seem indistinguishable, even though their underlying processes are very different; to the actor, these two actions certainly feel very different. Consider also that the constriction or dilation of the pupils is an act that an observer can see. Unlike a blink or a wink, however, pupil dilation is carried out by smooth muscle effectors, in response to various environmental and internal conditions (e.g., ambient lighting and emotional arousal). People are not normally aware of such actions involving smooth muscle, yet these actions transpire quite often. To take another example, consider that a simple smile can emerge from multiple, distinct cognitive and neural mechanisms, depending on whether it is a genuine smile or a forced smile [86]. Thus, even within the same organism and with the same effector, the same kind of behavioral capacity can be implemented via vastly different mechanisms [87], some of which may be more elegant and efficient than others. Nature does things differently, for better or worse. The job of the scientist is to describe the products of nature as they are and as they function.

Inferring that creature X must possess consciousness because it features a certain behavioral capacity that, in humans, is associated only with consciousness is most compelling when that creature shares a common ancestor with humans. For such creatures, that capacity would be homologous. If there is no such shared ancestry, then the argument would be far less compelling, for, as mentioned above, it could be that the same behavioral capacity (e.g., navigating toward a stimulus) stems from different underlying mechanisms (e.g., vision versus echolocation). Thus, if the behavioral capacity is analogous (e.g., flight in birds versus in bats), then more assumptions must be made, and additional convergent evidence must be presented, when proposing that creature X possesses consciousness.

It is important not to equate consciousness with intelligence. From the standpoint of PFT, the conscious field is one of many adaptations in the animal kingdom that lead to intelligent behavior. Many processes associated with intelligent behavior arise from neural processes and structures unassociated with consciousness (e.g., in the cerebellum). Many of the unconscious processes in the nervous system (e.g., peristalsis and the pupillary reflex) could be said to be intelligent. There are many forms of intelligence in artificial systems and in the animal kingdom (e.g., reflexes in a sea slug) that stem from structures that are not homologous to those associated with human cognition and the conscious field. It is a mistake to assume that an intelligent process (e.g., in a robotic system) must stem from a conscious process. At this stage of understanding, it is important to take into consideration whether the intelligent structure is homologous with those associated with consciousness in humans.

With the above in mind, if one subscribed to PFT and encountered an exotic creature that displayed the ability to respond to a stimulus in a contextually sensitive manner that resembled the “integrated” actions of humans, this by itself would not imply that that creature possesses a conscious field. That is, the discovery of a BCC for an exotic creature could be construed as a necessary yet not sufficient criterion by which to infer consciousness in that creature. The creature’s ability, which could be analogous to that of humans, could be due to something other than a conscious field, perhaps to mechanisms that are, as of yet, unknown to us. Additional convergent evidence, including about the physical substrates of that ability in that creature, would be necessary to warrant the conclusion that the creature possesses a form of consciousness.

However, such a behavioral capacity in the chimpanzee would strongly suggest the presence of a conscious field, because, in terms of parsimony and phylogeny, that ability is more likely to be homologous to the human ability than to be only analogous to the human ability. This is because evolutionary changes (e.g., divergent and convergent evolution) emerge, largely, from interactions among environmental factors and incremental modifications of developmental processes and regulatory mechanisms [88,89]. Moreover, fundamental structural and functional organizational principles (e.g., cochlear mechanisms [90] and auditory processing [91]) are highly conserved across the taxa comprising a monophyletic group. Thus, within a phylogenetic branch, it is more likely for a specific adaptation (e.g., echolocation) to have evolved only once rather than more than once [92]. For example, in cetaceans, it is more likely the case that the echolocation abilities of the beluga whale and the sperm whale are homologous, i.e., were inherited from a common ancestor, rather than analogous, i.e., that echolocation abilities evolved independently in each of the species.
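
The parsimony reasoning above can be illustrated with a toy calculation. The following is a minimal sketch, not a method used in this article: it applies Fitch-style parsimony counting to a hypothetical three-taxon tree. The tree shape, the binary coding of the trait, and the function name `fitch` are all assumptions made for illustration.

```python
# Illustrative sketch only: Fitch-style parsimony count on a toy tree.
# The tree shape and the trait coding below are simplifying assumptions.

def fitch(node, traits):
    """Return (candidate ancestral states, minimum number of state changes)."""
    if isinstance(node, str):
        # Leaf: the observed state, with no changes below it.
        return {traits[node]}, 0
    left, right = node
    left_states, left_cost = fitch(left, traits)
    right_states, right_cost = fitch(right, traits)
    shared = left_states & right_states
    if shared:
        # The two lineages can share a state: no extra change is needed.
        return shared, left_cost + right_cost
    # The two lineages disagree: one change must have occurred.
    return left_states | right_states, left_cost + right_cost + 1

# Hypothetical coding: 1 = echolocates, 0 = does not echolocate.
traits = {"beluga": 1, "sperm_whale": 1, "hippopotamus": 0}
tree = (("beluga", "sperm_whale"), "hippopotamus")

cetacean_states, _ = fitch(tree[0], traits)
root_states, min_changes = fitch(tree, traits)
print(cetacean_states, min_changes)
```

Under these assumptions, the observed pattern can be explained with a single state change, with echolocation already present in the common ancestor of the two whales (homology). Independent origins in each whale (analogy) would require two gains, which is why the single-origin account is the more parsimonious one.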

But even when generalizing consciousness to the chimpanzee, our close phylogenetic relative, one must remain conservative. Consider that the hippopotamus and the beluga whale, though closely related and sharing a common ancestor on the phylogenetic tree, possess different cognitive abilities and morphologies (e.g., legs versus flippers). As another example, humans and chimpanzees are genetically related, yet humans possess language and chimpanzees do not, and neither humans nor chimpanzees share the echolocation abilities of their fellow intelligent mammal, the beluga whale.

4. Conclusions

The more that is understood about the nature of consciousness in humans, including the differences between conscious processing and unconscious processing, the easier it will be to identify consciousness in other creatures, be they animals or exotic creatures yet to be discovered. Here, we introduced only what we consider to be the first three steps that are necessary for such an identification. The myriad, varied, and counterintuitive solutions provided by Mother Nature, when combined with the observations noted in Step 3, lead us to conclude that, when attempting to identify consciousness in other creatures, we will encounter many interesting surprises, ones that will challenge our assumptions about the origin(s) and evolution of intelligent beings everywhere.

Author Contributions

Each author contributed equally to this work. Conceptualization, A.H.C., D.L. and E.M.; investigation, A.H.C., D.L. and E.M.; writing, review, and editing, A.H.C., D.L. and E.M. Many of the ideas presented in this article appeared in [1,2]. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

We gratefully acknowledge Björn Merker’s suggestions and permission to quote an observation of his from an unpublished manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Morsella, E.; Godwin, C.A.; Jantz, T.K.; Krieger, S.C.; Gazzaley, A. Homing in on consciousness in the nervous system: An action-based synthesis. Behav. Brain Sci. 2016, 39, e168.

2. Morsella, E.; Velasquez, A.G.; Yankulova, J.K.; Li, Y.; Wong, C.Y.; Lambert, D. Motor cognition: The role of sentience in perception-and-action. Kinesiol. Rev. 2020, 9, 261–274.

3. Crick, F. The Astonishing Hypothesis: The Scientific Search for the Soul; Touchstone: New York, NY, USA, 1995.

4. Roach, J. Journal Ranks Top 25 Unanswered Science Questions. 2005. Available online: https://3quarksdaily.com/3quarksdaily/2005/07/journal_ranks_t.html (accessed on 30 June 2005).

5. Hunt, T.; Ericson, M.; Schooler, J. Where’s my consciousness-ometer? How to test for the presence and complexity of consciousness. Perspect. Psychol. Sci. 2022, 17, 1150–1165.

6. Pinker, S. How the Mind Works; Norton: New York, NY, USA, 1997.

7. Gray, J.A. Consciousness: Creeping up on the Hard Problem; Oxford University Press: New York, NY, USA, 2004.

8. Seth, A. Being You: A New Science of Consciousness; Dutton: New York, NY, USA, 2021.

9. Schacter, D.L. Searching for Memory: The Brain, the Mind, and the Past; Basic Books: New York, NY, USA, 1996.

10. Squire, L.R. Memory and Brain; Oxford University Press: New York, NY, USA, 1987.

11. Rosenbaum, D.A. Motor control. In Stevens’ Handbook of Experimental Psychology: Vol. 1. Sensation and Perception, 3rd ed.; Pashler, H., Yantis, S., Eds.; Wiley: New York, NY, USA, 2002; pp. 315–339.

12. Taylor, J.A.; Ivry, R.B. Implicit and explicit processes in motor learning. In Action Science; Prinz, W., Beisert, M., Herwig, A., Eds.; The MIT Press: Cambridge, MA, USA, 2013; pp. 63–87.

13. Levelt, W.J.M. Speaking: From Intention to Articulation; MIT Press: Cambridge, MA, USA, 1989.

14. Lieberman, M.D. The X- and C-systems: The neural basis of automatic and controlled social cognition. In Fundamentals of Social Neuroscience; Harmon-Jones, E., Winkielman, P., Eds.; Guilford: New York, NY, USA, 2007; pp. 290–315.

15. Singer, W. Consciousness and Neuronal Synchronization. In The Neurology of Consciousness; Laureys, S., Tononi, G., Eds.; Academic Press: Cambridge, MA, USA, 2011; pp. 43–52.

16. Uhlhaas, P.J.; Pipa, G.; Lima, B.; Melloni, L.; Neuenschwander, S.; Nikolic, D.; Singer, W. Neural synchrony in cortical networks: History, concept and current status. Front. Integr. Neurosci. 2009, 3, 17.

17. Alkire, M.; Hudetz, A.; Tononi, G. Consciousness and anesthesia. Science 2008, 322, 876–880.

18. Laureys, S. The neural correlate of (un)awareness: Lessons from the vegetative state. Trends Cogn. Sci. 2005, 9, 556–559.

19. Buzsáki, G. Rhythms of the Brain; Oxford University Press: New York, NY, USA, 2006.

20. Doesburg, S.M.; Green, J.L.; McDonald, J.J.; Ward, L.M. Rhythms of consciousness: Binocular rivalry reveals large-scale oscillatory network dynamics mediating visual perception. PLoS ONE 2009, 4, e6142.

21. Kern, M.K.; Jaradeh, S.; Arndorfer, R.C.; Shaker, R. Cerebral cortical representation of reflexive and volitional swallowing in humans. Am. J. Physiol. Gastrointest. Liver Physiol. 2001, 280, G354–G360.

22. Aru, J.; Bachmann, T.; Singer, W.; Melloni, L. Distilling the neural correlates of consciousness. Neurosci. Biobehav. Rev. 2012, 36, 737–746.

23. Crick, F.; Koch, C. Toward a neurobiological theory of consciousness. Semin. Neurosci. 1990, 2, 263–275.

24. Panagiotaropoulos, T.I.; Kapoor, V.; Logothetis, N.K. Desynchronization and rebound of beta oscillations during conscious and unconscious local neuronal processing in the macaque lateral prefrontal cortex. Front. Psychol. 2013, 4, 603.

25. Lamme, V.A. Blindsight: The role of feedback and feedforward cortical connections. Acta Psychol. 2001, 107, 209–228.

26. Tong, F. Primary visual cortex and visual awareness. Nat. Rev. Neurosci. 2003, 4, 219–229.

27. Merker, B. Consciousness without a cerebral cortex: A challenge for neuroscience and medicine. Behav. Brain Sci. 2007, 30, 63–134.

28. Penfield, W.; Jasper, H.H. Epilepsy and the Functional Anatomy of the Human Brain; Little, Brown: New York, NY, USA, 1954.

29. Ward, L.M. The thalamic dynamic core theory of conscious experience. Conscious. Cogn. 2011, 20, 464–486.

30. Muzur, A.; Pace-Schott, E.F.; Hobson, J.A. The prefrontal cortex in sleep. Trends Cogn. Sci. 2002, 6, 475–481.

31. Boly, M.; Garrido, M.I.; Gosseries, O.; Bruno, M.A.; Boveroux, P.; Schnakers, C.; Massimini, M.; Litvak, V.; Laureys, S.; Friston, K. Preserved feedforward but impaired top-down processes in the vegetative state. Science 2011, 332, 858–862.

32. Dehaene, S.; Naccache, L. Towards a cognitive neuroscience of consciousness: Basic evidence and a workspace framework. Cognition 2001, 79, 1–37.

33. Lau, H.C. A higher-order Bayesian decision theory of consciousness. Prog. Brain Res. 2008, 168, 35–48.

34. Desmurget, M.; Reilly, K.T.; Richard, N.; Szathmari, A.; Mottolese, C.; Sirigu, A. Movement intention after parietal cortex stimulation in humans. Science 2009, 324, 811–813.

35. Desmurget, M.; Sirigu, A. A parietal-premotor network for movement intention and motor awareness. Trends Cogn. Sci. 2009, 13, 411–419.

36. Fried, I.; Katz, A.; McCarthy, G.; Sass, K.J.; Williamson, P.; Spencer, S.S.; Spencer, D.D. Functional organization of human supplementary motor cortex studied by electrical stimulation. J. Neurosci. 1991, 11, 3656–3666.

37. Haggard, P. Human volition: Towards a neuroscience of will. Nat. Rev. Neurosci. 2008, 9, 934–946.

38. Scott, M. Corollary discharge provides the sensory content of inner speech. Psychol. Sci. 2013, 24, 1824–1830.

39. Merker, B. From probabilities to percepts: A subcortical “global best estimate buffer” as locus of phenomenal experience. In Being in Time: Dynamical Models of Phenomenal Experience; Shimon, E., Tomer, F., Neta, Z., Eds.; John Benjamins: Amsterdam, The Netherlands, 2012; pp. 37–80.

40. Shepherd, G.M.; Greer, C.A. Olfactory bulb. In The Synaptic Organization of the Brain, 4th ed.; Shepherd, G.M., Ed.; Oxford University Press: New York, NY, USA, 1998; pp. 159–204.

41. Haberly, L.B. Olfactory cortex. In The Synaptic Organization of the Brain, 4th ed.; Shepherd, G.M., Ed.; Oxford University Press: New York, NY, USA, 1998; pp. 377–416.

42. Eichenbaum, H.; Shedlack, K.J.; Eckmann, K.W. Thalamocortical mechanisms in odor-guided behavior. Brain Behav. Evol. 1980, 17, 255–275.

43. Sela, L.; Sacher, Y.; Serfaty, C.; Yeshurun, Y.; Soroker, N.; Sobel, N. Spared and impaired olfactory abilities after thalamic lesions. J. Neurosci. 2009, 29, 12059–12069.

44. Markowitsch, H.J. Thalamic mediodorsal nucleus and memory: A critical evaluation of studies in animals and man. Neurosci. Biobehav. Rev. 1982, 6, 351–380.

45. Mitchell, A.S.; Baxter, M.G.; Gaffan, D. Dissociable performance on scene learning and strategy implementation after lesions to magnocellular mediodorsal thalamic nucleus. J. Neurosci. 2007, 27, 11888–11895.

46. Tham, W.W.P.; Stevenson, R.J.; Miller, L.A. The functional role of the medio dorsal thalamic nucleus in olfaction. Brain Res. Rev. 2009, 62, 109–126.

47. Tham, W.W.P.; Stevenson, R.J.; Miller, L.A. The role of the mediodorsal thalamic nucleus in human olfaction. Neurocase 2011, 17, 148–159.

48. Plailly, J.; Howard, J.D.; Gitelman, D.R.; Gottfried, J.A. Attention to odor modulates thalamocortical connectivity in the human brain. J. Neurosci. 2008, 28, 5257–5267.

49. Cicerone, K.D.; Tanenbaum, L.N. Disturbance of social cognition after traumatic orbitofrontal brain injury. Arch. Clin. Neuropsychol. 1997, 12, 173–188.

50. Li, W.; Lopez, L.; Osher, J.; Howard, J.D.; Parrish, T.B.; Gottfried, J.A. Right orbitofrontal cortex mediates conscious olfactory perception. Psychol. Sci. 2010, 21, 1454–1463.

51. Buck, L.B. Smell and taste: The chemical senses. In Principles of Neural Science, 4th ed.; Kandel, E.R., Schwartz, J.H., Jessell, T.M., Eds.; McGraw-Hill: New York, NY, USA, 2000; pp. 625–647.

52. Keller, A. Attention and olfactory consciousness. Front. Psychol. 2011, 2, 380.

53. Mizobuchi, M.; Ito, N.; Tanaka, C.; Sako, K.; Sumi, Y.; Sasaki, T. Unidirectional olfactory hallucination associated with ipsilateral unruptured intracranial aneurysm. Epilepsia 1999, 40, 516–519.

54. Rudrauf, D.; Bennequin, D.; Granic, I.; Landini, G.; Friston, K.; Williford, K. A mathematical model of embodied consciousness. J. Theor. Biol. 2017, 428, 106–131.

55. Nagel, T. What is it like to be a bat? Philos. Rev. 1974, 83, 435–450.

56. Brentano, F. Psychology from an Empirical Standpoint; Oxford University Press: Oxford, UK, 1874.

57. Schopenhauer, A. The World as Will and Representation; Dover: New York, NY, USA, 1818; Volume 1.

58. Merker, B. The efference cascade, consciousness, and its self: Naturalizing the first person pivot of action control. Front. Psychol. 2013, 4, 501.

59. Proctor, R.W.; Vu, K.P.L. Action selection. In The Corsini Encyclopedia of Psychology; Weiner, I.B., Craighead, E., Eds.; John Wiley: Hoboken, NJ, USA, 2010; Volume 1, pp. 20–22.

60. McGurk, H.; MacDonald, J. Hearing lips and seeing voices. Nature 1976, 264, 746–748.

61. Kröger, B.J.; Bekolay, T.; Cao, M. On the Emergence of Phonological Knowledge and on Motor Planning and Motor Programming in a Developmental Model of Speech Production. Front. Hum. Neurosci. 2022, 16, 844529.

62. Yamadori, A. Body awareness and its disorders. In Cognition, Computation, and Consciousness; Ito, M., Miyashita, Y., Rolls, E.T., Eds.; American Psychological Association: Washington, DC, USA, 1997; pp. 169–176.

63. Firestone, C.; Scholl, B.J. Cognition does not affect perception: Evaluating the evidence for ‘top-down’ effects. Behav. Brain Sci. 2016, 39, e229.

64. Pylyshyn, Z.W. Computation and Cognition: Toward a Foundation for Cognitive Science; MIT Press: Cambridge, MA, USA, 1984.

65. Sacks, O. Hallucinations; Alfred A. Knopf: New York, NY, USA, 2012.

66. Yankulova, J.K.; Zacher, L.M.; Velazquez, A.G.; Dou, W.; Morsella, E. Insuppressible cognitions in the reflexive imagery task: Insights and future directions. Front. Psychol. Cogn. 2022, 13, 957359.

67. Bueno, J.; Morsella, E. Involuntary Cognitions: Response Conflict and Word Frequency Effects. San Francisco State University, San Francisco, CA, USA. 2023, unpublished manuscript.

68. Wright-Wilson, L.; Kong, F.; Renna, J.; Geisler, M.W.; Morsella, E. During action selection, consciousness represents selected actions, unselected actions, and involuntary memories. In Proceedings of the Annual Convention of the Society for Cognitive Neuroscience, San Francisco, CA, USA, 23–26 April 2022.

69. Morsella, E.; Velasquez, A.G.; Yankulova, J.K.; Li, Y.; Gazzaley, A. Encapsulation and subjectivity from the standpoint of viewpoint theory. Behav. Brain Sci. 2022, 45, 37–38.

70. Baumeister, R.F.; Vohs, K.D.; DeWall, N.; Zhang, L. How emotion shapes behavior: Feedback, anticipation, and reflection, rather than direct causation. Personal. Soc. Psychol. Rev. 2007, 11, 167–203.

71. Baars, B.J. A Cognitive Theory of Consciousness; Cambridge University Press: Cambridge, UK, 1988.

72. Dehaene, S. Consciousness and the Brain: Deciphering How the Brain Codes Our Thoughts; Viking: New York, NY, USA, 2014.

73. Skinner, B.F. About Behaviorism; Vintage Books: New York, NY, USA, 1974.

74. Spear, N.E.; Campbell, B.A. Ontogeny of Learning and Memory; Lawrence Erlbaum and Associates: New York, NY, USA, 1979.

75. Grafman, J.; Krueger, F. The prefrontal cortex stores structured event complexes that are the representational basis for cognitively derived actions. In Oxford Handbook of Human Action; Morsella, E., Bargh, J.A., Gollwitzer, P.M., Eds.; Oxford University Press: New York, NY, USA, 2009; pp. 197–213.

76. O’Reilly, R.C. The What and How of prefrontal cortical organization. Trends Neurosci. 2010, 33, 355–361.

77. Tsotsos, J.K. Behaviorist intelligence and the scaling problem. Artif. Intell. 1995, 75, 135–160.

78. Tsotsos, J.K. A Computational Perspective on Visual Attention; MIT Press: Cambridge, MA, USA, 2011.

79. Gould, S.J. Ever Since Darwin: Reflections in Natural History; Norton: New York, NY, USA, 1977.

80. De Waal, F.B.M. Evolutionary psychology: The wheat and the chaff. Curr. Dir. Psychol. Sci. 2002, 11, 187–191.

81. Lorenz, K. On Aggression; Harcourt, Brace, & World: New York, NY, USA, 1963.

82. Marcus, G. Kluge: The Haphazard Construction of the Mind; Houghton Mifflin Company: Boston, MA, USA, 2008.

83. Simpson, G.G. The Meaning of Evolution; Yale University Press: New Haven, CT, USA, 1949.

84. Arkin, R.C. Behavior-Based Robotics; The MIT Press: Cambridge, MA, USA, 1998.

85. Cisek, P. Resynthesizing behavior through phylogenetic refinement. Atten. Percept. Psychophys. 2019, 81, 2265–2287.

86. Wild, B.; Rodden, F.A.; Rapp, A.; Erb, M.; Grodd, W.R.; Ruch, W. Humor and smiling: Cortical regions selective for cognitive, affective, and volitional components. Neurology 2006, 66, 887–893.

87. Marr, D. Vision; Freeman: New York, NY, USA, 1982.

88. Rudel, D.; Sommer, R.J. The evolution of developmental mechanisms. Dev. Biol. 2003, 264, 15–37.

89. Vanderhaeghen, P.; Polleux, F. Developmental mechanisms underlying the evolution of human cortical circuits. Nat. Rev. Neurosci. 2023, 24, 213–232.

90. Manley, G.A. Cochlear mechanisms from a phylogenetic viewpoint. Proc. Natl. Acad. Sci. USA 2000, 97, 11736–11743.

91. Kaas, J.H.; Hackett, T.A. Subdivisions of auditory cortex and processing streams in primates. Proc. Natl. Acad. Sci. USA 2000, 97, 11793–11799.

92. Gazzaniga, M.S.; Ivry, R.B.; Mangun, G.R. Cognitive Neuroscience: The Biology of Mind, 2nd ed.; W. W. Norton & Company, Inc.: New York, NY, USA, 2002.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).