Simple Summary

Quality of life is important not only for humans but also for animals. Over the past two decades, many efforts have been made to develop questionnaire-based instruments that measure quality of life. Such instruments enable a more objective comparison of quality of life, for example, when evaluating the success of a therapy. Depending on their design, instruments can be either generic, assessing the animal as a whole, or disease-specific, assessing quality of life in the context of a particular disease. We reviewed existing instruments for assessing disease-specific quality of life in dogs and compared their development and testing processes with the standards used in human medicine. We found that the instruments were developed using different approaches and that their development processes were often inadequately described. Furthermore, the reliability and validity of these instruments (validity meaning whether they truly measure what they are intended to measure) were often tested for only a few aspects, or not at all. Users need to consider an instrument's limitations in order to interpret its results correctly. This review highlights the need for more consistent and thorough development of such instruments.

Abstract

Quality of life (QoL) assessment has become increasingly common in veterinary medicine in recent years, as have attempts to measure it. This review aimed to provide an overview of existing instruments measuring disease-specific QoL in dogs and to assess their quality. The PubMed and CAB Abstracts databases were searched in February 2023 using search terms associated with dogs, well-being, and QoL to identify relevant articles, which were then evaluated for information on their development and validation processes. For further analysis, 41 publications were selected. The instrument was available for 30 of these publications, 24 of the 41 contained information on item development, and 12 of the 41 described some form of instrument evaluation. Among these 12 instruments, 2 exhibited appropriate test–retest reliability, 7 exhibited acceptable internal consistency, 9 were checked for face or content validity, and 8 were tested against at least one hypothesis contributing to construct validity. None of the instruments had been thoroughly evaluated for all psychometric aspects necessary for their application and the interpretation of their results. Therefore, these instruments should be used with caution and within their limitations. Further research should focus on establishing guidelines for achieving high standards in instrument development and validation in veterinary medicine.

1. Introduction

Quality of life (QoL) assessment is a crucial aspect of veterinary practice. As QoL guides decisions regarding treatment as well as euthanasia, it is a key—if not the most important—component of veterinary work [].

Although the term ‘QoL’ is frequently used, no universally agreed-upon definition of it exists in veterinary medicine []. By contrast, for humans, the World Health Organization defines QoL as an ‘individual’s perception of their position in life in the context of the culture and value systems in which they live and in relation to their goals, expectations, standards and concerns’ [] (p. 1405).

Despite the lack of a formal definition, the highly individual nature of QoL emphasised in humans also applies to animals. The fulfilment of needs and good health contribute to a satisfactory QoL; however, QoL goes beyond these aspects and encompasses all conscious, subjective experiences and feelings [,].

In human medicine, QoL is most commonly assessed through patient self-report []. In veterinary medicine, however, patients cannot self-report; therefore, assessment must rely on a proxy, such as the treating veterinarian or the owner. Similar challenges of proxy assessment arise when assessing the QoL of young children or people with disabilities []. Although subjective assessment can introduce various sources of bias, incorporating subjective judgements into healthcare has become a convention, one that has been continuously refined and increasingly standardised [] (p. 12).

The approaches established in human medicine to quantify QoL differ in form. A fundamental distinction can be made according to the structure of the tools: some comprise only a single question, whereas others take the form of a questionnaire []. Questionnaires can be further divided into ‘generic instruments’, which take a holistic approach and attempt to assess the individual as a whole, and ‘specific instruments’, which focus on a particular disease, body area, or function []. Although generic tools are suitable for monitoring patients in general, they may not reflect subtle changes in specific areas. Therefore, to assess the impact of a particular chronic disease on QoL, a disease-specific questionnaire explicitly designed to capture the aspects influenced by the disease can be useful [].

Developing tools to measure constructs such as QoL is common practice in psychology, where constructs such as depression, anxiety, and happiness are assessed using psychometric instruments []. However, generating and selecting items is only one component of instrument development. Testing reliability and validity is a crucial further step, without which we cannot ensure that the instrument measures what it is supposed to measure. Item generation, selection, and validation of psychometric instruments in human medicine follow established methods [,]. In 2009, the US Food and Drug Administration (FDA) published its requirements for instruments intended to support labelling claims for pharmaceuticals in human medicine [].

Likewise, in veterinary medicine, various attempts have been made over the past two decades to measure QoL in animals. Different tools, both disease-specific and generic, have been developed that differ in their scope, development process, and level of validation. To the best of our knowledge, no official veterinary guidelines exist for the development and validation of such tools.

Incorporating QoL assessment into daily veterinary practice is not only beneficial but essential, as the patient’s QoL is the primary determinant of treatment decisions. Particularly in chronic diseases, where changes are often subtle and can be overlooked because people become accustomed to gradual changes in condition, a standardised survey can be of great value [].

Selecting a suitable instrument and assessing its quality is challenging for veterinarians owing to the variety of available tools, inconsistent terminology (e.g., ‘tool’ vs. ‘instrument’ and ‘assessment’ vs. ‘measurement’), and differences in their development and validation approaches. For practitioners, who usually have limited spare time in their daily practice and limited knowledge of psychometric methods, assessing an instrument’s quality is almost impossible.

Although some articles have reviewed existing instruments for dogs [] and generic instruments for dogs and cats [], no recent publications have addressed existing disease-specific instruments for dogs.

Therefore, this scoping review intends to provide an overview of the existing structured disease-specific QoL instruments for dogs, including an assessment of their methodology, the quality of item formulation, reliability level, and validation level.

2. Materials and Methods

2.1. Definition of Terms

As no universally agreed definition of QoL in animals exists, QoL was defined for this study on the basis of a conceptual framework (the description of this process will be published elsewhere):

Quality of life in animals is an animal’s individual perception of its current state in relation to its needs, expectations, and desires such that the resulting positive and negative affective states are reflected and observable in the animal’s behaviour and demeanour.

A QoL assessment instrument or tool was defined as a set of related questions that collectively are intended to provide an overview of the dog’s QoL or well-being. A single item such as ‘How would you rate your dog’s quality of life?’, or a QoL domain within an instrument designed for another purpose, was not considered a tool.

Some concepts, for example chronic pain or disease severity, are closely related to QoL. Measurements of these constructs frequently overlap and are occasionally used interchangeably [,]. To separate them clearly, a tool was considered a QoL instrument only if the objective of its original development was to measure QoL. Thus, a tool used to assess QoL but originally developed by its authors to measure a related construct was not considered a QoL instrument.

A tool was considered disease-specific if it was designed to be applied to individuals with a specific disease or with a disease of a specific organ system or body part.

2.2. Search Methods

This scoping literature review was conducted according to the Preferred Reporting Items for Systematic Reviews and Meta-Analyses Extension for Scoping Reviews (PRISMA-ScR) guidelines []. The search was conducted in February 2023 and covered the period 2013–2023, following on from Belshaw et al.’s (2015) review []. For consistency with that review, the Ovid interface was used to search the CAB Abstracts and Medline (PubMed) databases.

To ensure continuity, the search terms were those employed in Belshaw et al.’s (2015) review []: dog, dogs, canine, canines, canis, wellbeing, well-being, well being, QoL, quality of life, and quality-of-life, combined with the Boolean operators AND and OR as follows: (dog OR dogs OR canine OR canines OR canis) AND (wellbeing OR well-being OR well being OR quality of life OR QoL OR quality-of-life).
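The Boolean structure of the search can be illustrated with a short Python sketch. It is a hypothetical local approximation, not a reproduction of the database search: it rebuilds the query string from the two term groups and applies the same (species) AND (construct) logic to a record's title and abstract using whole-word, case-insensitive matching. Ovid's own field restrictions, phrase handling, and truncation rules are not modelled, and the function names are illustrative.

```python
import re

# The two term groups listed above (from Belshaw et al.'s 2015 search strategy).
SPECIES_TERMS = ["dog", "dogs", "canine", "canines", "canis"]
CONSTRUCT_TERMS = ["wellbeing", "well-being", "well being",
                   "quality of life", "QoL", "quality-of-life"]


def build_query(species=SPECIES_TERMS, construct=CONSTRUCT_TERMS):
    """Join each term group with OR and combine the two groups with AND."""
    return f"({' OR '.join(species)}) AND ({' OR '.join(construct)})"


def _matches_any(text, terms):
    # Whole-word, case-insensitive match of any term (illustrative only).
    return any(re.search(rf"\b{re.escape(t)}\b", text, re.IGNORECASE) for t in terms)


def record_matches(title, abstract):
    """True if the title/abstract satisfies (species terms) AND (construct terms)."""
    text = f"{title} {abstract}"
    return _matches_any(text, SPECIES_TERMS) and _matches_any(text, CONSTRUCT_TERMS)


print(build_query())
print(record_matches("Quality of life in dogs with chronic enteropathy", ""))  # True
```

A record passing this screen would still have to meet the inclusion criteria below; the Boolean step only narrows the candidate pool.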

We searched the abstract, title, original title, broad terms, and heading words. The results were exported to Citavi 6.14 (Swiss Academic Software, Wädenswil, Switzerland), and subsequently, the inclusion and exclusion criteria were applied.

2.3. Inclusion Criteria

The inclusion criteria were as follows: the publication had to (1) be in English; (2) be published as an original research article in a peer-reviewed journal; (3) address issues concerning domestic dogs; (4) mention QoL/well-being in the title or abstract; (5) describe a questionnaire-based assessment of QoL/well-being in the Materials and Methods section, which would be considered an instrument according to our definitions of terms; and (6) contain a disease-specific instrument.

2.4. Exclusion Criteria

The exclusion criteria were as follows: publications that (1) were published in a language other than English; (2) were case reports or reviews, or were published in congress proceedings or a non-peer-reviewed journal; (3) addressed species other than domestic dogs, or addressed dogs only in a broader context (e.g., the rabies situation); (4) did not mention QoL or well-being in the title or abstract, or clearly did not include them as part of the investigation (e.g., mentioned them only in sentences such as ‘whether this can improve quality of life has to be further investigated’); (5) did not mention QoL or well-being in the Materials and Methods section, or performed the evaluation without a questionnaire (e.g., using laboratory parameters), with a tool designed to measure a different construct (e.g., the Helsinki Chronic Pain Index or Canine Brief Pain Inventory), or with a single overall question; and (6) described an instrument designed for generic assessment.

2.5. Further Selection Process

A single author (FR) applied the inclusion and exclusion criteria. The remaining publications comprised both those describing an instrument for the first time and those using a previously published instrument. For the latter group, the original publications were searched manually and included if they satisfied the inclusion criteria.

Additionally, the instruments retrieved from the review by Belshaw et al. (2015) [] were checked against the inclusion criteria, and those that fulfilled the criteria were included.

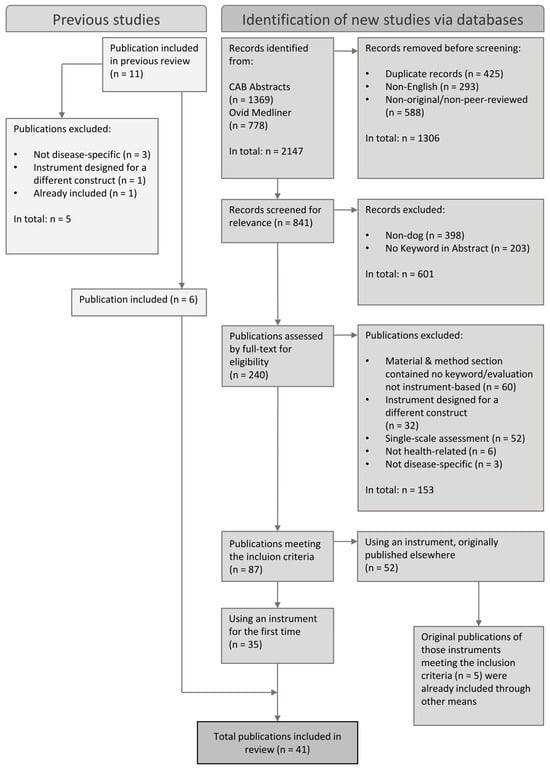

The resulting publications ultimately formed the sample for further analysis. Figure 1 presents the selection process illustrated as a PRISMA 2020 flow diagram.

Figure 1.

Selection process illustrated in a flow diagram based on the PRISMA 2020 statement.

2.6. Data Collection

The retrieved publications were screened for the following: (1) disease specification and whether the intended construct measured was QoL or well-being; (2) availability of the complete instrument; (3) information regarding the process of item generation and/or selection, based on the criteria outlined in Table 1; and (4) information regarding any testing or evaluation of the instrument, as described in Table 2.

Table 1.

Criteria for assessing the process of item generation and/or selection.

Table 2.

Criteria to assess the psychometric properties of the instruments.

Publications for which a complete instrument was available were screened for the characteristics described in Table 3.

Table 3.

Characteristics for which the fully available instruments were screened and compared.

2.7. Extraction of Disease Specification and Construct Measured

Information regarding the disease, condition, organ system, or body region for which the instrument was designed was extracted. Owing to the search terms used, instruments measuring QoL and instruments measuring well-being were retrieved. Therefore, information was also extracted based on whether the construct that was intended to be measured was QoL or well-being.

2.8. Extraction of Instrument Availability

Practitioners and clinicians in need of an instrument benefit from easy access to copies of the questionnaire. Therefore, the study assessed whether a copy of the instrument was included in the publication itself, the appendix, or the supplementary material, or whether the authors would have to be contacted to obtain a copy.

2.9. Evaluation of the Item Generation and/or Selection Process and Any Form of Evaluation and/or Testing

The criteria for assessing instrument design and validation were based on the criteria established by the US FDA [], a scientific committee report on developing disease-specific QoL instruments [], the Consensus-based Standards for the Selection of Health Status Measurement Instruments (COSMIN) risk of bias checklist, and the COSMIN guidelines for systematic reviews of patient-reported outcome measures [,,,], all of which were adapted to veterinary medicine.

3. Results

The initial search returned 2147 publications, of which 425 were duplicates and were removed. Of the 1722 remaining publications, 87 fulfilled the inclusion criteria. Figure 1 presents the application of the inclusion and exclusion criteria.

Publications that contained an instrument used for the first time (n = 35), including modifications used for the first time, were retained for further analysis. Additionally, Belshaw et al.’s (2015) review [] was checked for relevant publications (Figure 1). For publications in which a previously published tool was used (n = 52), the original publications were retrieved and checked against the inclusion criteria. The original publications that fulfilled the criteria (n = 5) had already been included by other routes. Finally, 41 publications were included for further examination.
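The record counts reported in this section can be cross-checked with simple arithmetic. The numbers below are those stated above; the variable names are illustrative only.

```python
# Record counts as reported in the Results section.
identified = 2147
duplicates = 425
first_use = 35             # instrument (or modification) used for the first time
previously_published = 52  # instrument taken from an earlier publication

screened = identified - duplicates
fulfilled_inclusion = first_use + previously_published
print(screened, fulfilled_inclusion)  # 1722 87
```

Both totals match the figures given for the screening stage and for the publications that fulfilled the inclusion criteria.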

3.1. Disease Specification and Construct Measured

The instruments addressed different types of disease, grouped by affected organ or organ system (Table 4). Because cancer is heterogeneous, the body regions and organ systems addressed by the cancer instruments are presented separately in Table 5.

Table 4.

Instruments categorised by type of disease addressed.

Table 5.

Variety of body regions and organ systems addressed by instruments developed for QoL in patients with cancer.

For two instruments, well-being was the objective of the measurement; for 39 instruments, the measurement’s objective was QoL.

3.2. Instrument Availability

For 30 publications, the questionnaire was fully available, either integrated into the publication itself or as a copy in the appendix or supplementary material. Five publications did not contain the questionnaire itself but presented the item results in a table, from which an overall impression of the instrument could be obtained. Two publications stated that copies of the questionnaire were available from the authors upon request. Four publications contained neither the questionnaire, nor a display of the items, nor an availability statement.

3.3. Information About Item Generation and/or Selection

Sixteen publications did not provide any information on the process of devising the items; they used only a set of questions without explaining why they considered them suitable or where they derived them from. Twenty-four publications contained information on the development of the items (to various extents). One publication could not be classified owing to unclear references.

Five publications explicitly reviewed the literature as part of the instrument development process. Fourteen publications derived their items from existing questionnaires, but only two commented on their selection criteria or why they modified a particular instrument rather than using the originally published version.

Eight instruments were influenced by human medicine in different ways. In these instruments, the item content, question format, or domain structure was derived from a corresponding questionnaire in human medicine; in addition, discussions were held with people affected by the disease to inform item generation.

In 10 publications, the dogs’ owners could influence the instrument’s design. In three publications, owners served as a source for item generation through qualitative interviews; in two, they could adapt the items while applying the instrument; and in five, they took part in testing in different ways, for example, through pre-testing or by evaluating readability, content validity, face validity, or the instrument in general.

3.4. Information About Instrument Testing

Of the 24 publications that described the development of items, 10 also described some form of testing. One publication mentioned that validation had occurred, but no further details were provided.

Of the 16 publications in which the process of item generation and selection was not described, two contained detailed information on validation. Another publication mentioned validation but did not present the data.

Twelve instruments described some form of instrument evaluation. Table 6 presents a detailed assessment of the aspects tested.

Table 6.

Assessment of the testing of 12 instruments where further details about the testing process were provided.

3.5. Characteristics of the Available Instruments

Of the 12 publications that reported testing, 11 made the instrument available, and six of these instruments were named. The number of items ranged from 5 to 24, and item scaling varied across the instruments: visual analogue scales (VAS), numerical rating scales (NRS), adjectival scales, and 5-point Likert scales were used. Numerical values were assigned to the adjectival response options so that an overall score could be calculated. Overall scores were calculated for 7 of the 11 instruments. In three cases, the item scores were summed; one overall score was expressed as a percentage of the maximum possible score, and another as the average item score. Only one instrument used a weighted score. The characteristics are presented in Table 7.
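The four ways of forming an overall score mentioned above (sum, percentage of the maximum possible score, average item score, and weighted score) can be sketched as follows. The responses, the 0–4 item range, and the weights are hypothetical and are not taken from any reviewed instrument; the percentage calculation also assumes that every item has a minimum score of 0.

```python
def sum_score(items):
    """Simple sum of the item scores."""
    return sum(items)


def percent_of_max(items, max_per_item):
    """Sum as a percentage of the maximum possible sum (assumes an item minimum of 0)."""
    return 100 * sum(items) / (max_per_item * len(items))


def mean_item_score(items):
    """Average item score; stays on the item scale regardless of the number of items."""
    return sum(items) / len(items)


def weighted_score(items, weights):
    """Sum of item scores multiplied by (hypothetical) item weights."""
    if len(items) != len(weights):
        raise ValueError("one weight per item is required")
    return sum(x * w for x, w in zip(items, weights))


# Hypothetical owner responses to five items scored 0-4.
responses = [4, 3, 2, 4, 1]
print(sum_score(responses))                        # 14
print(percent_of_max(responses, 4))                # 70.0
print(mean_item_score(responses))                  # 2.8
print(weighted_score(responses, [2, 1, 1, 1, 1]))  # 18
```

The choice matters when comparing results: sums depend on the number of items, whereas percentages and means can be compared across versions of different length.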

Table 7.

Characteristics of the instruments.

4. Discussion

This review provides an overview of the disease-specific QoL instruments currently available for dogs and thus updates the review by Belshaw et al. (2015) []. Of the 2147 records identified, 41 publications were included for further analysis after the inclusion and exclusion criteria had been applied. The fields of application differed greatly, and more than a quarter of the instruments were designed for use in cancer. As cancer treatment is often accompanied by severe side effects and is frequently palliative, careful monitoring of QoL is essential to continually weigh costs against benefits and to end the dog’s suffering in a timely manner. Furthermore, the European Medicines Agency recommends QoL as an endpoint in clinical field studies for the authorisation of anticancer medicinal products for dogs and cats [].

For 30 of the 41 publications, the instrument used was fully available within the publication itself or in the appendix or supplementary material; in the remaining publications, it was not. Unavailable instruments are less likely to be used, as the author must first be contacted to obtain them. Moreover, authors may be unreachable or may not respond, and the instrument, if provided, may turn out not to fit its intended purpose. It is therefore unlikely that practitioners in a busy practice, where an instrument is normally needed immediately, will take the time to contact an author. For the same reason, no authors were contacted for this study, which was intended to provide an overview that simplifies the choice of an instrument for daily practice.

Sixteen of the 41 publications did not provide any information on the development process. Additionally, for 14 of the 41 publications, questions were derived from existing questionnaires, but 12 of these did not provide any information about the item selection process. The process of devising or selecting the items ‘is far from a trivial task, since no amount of statistical manipulation after the fact can compensate for poorly chosen questions; those that are badly worded, ambiguous, irrelevant, or—even worse—not present’ [] (p. 19). Therefore, the process of devising the items provides clues about the quality of an instrument, and furthermore, the FDA recommends that the process of item generation and selection should be documented in a comprehensible way [].

The involvement of patients from the target population in item development is considered crucial in human medicine [,]. In veterinary medicine, however, the patients themselves cannot be asked; therefore, owners and veterinarians must serve as proxies. Accordingly, those who will later apply the questionnaire (usually the owners) should be involved in the process. Owners were included in item generation for only three instruments [,,], and the authors of one article emphasised owner input as the most valuable source and recommended including it in the development of every owner-reported measurement tool []. As has been reported previously, owners are well able to describe behavioural changes [].

However, carefully devising and selecting items is only part of producing a high-quality instrument. The other major part of instrument development is testing reliability and validity and, where these prove insufficient, improving the items, format, or structure of the instrument. Twelve of the 41 publications described some form of reliability and/or validity testing. The extent of testing differed widely, and none of the instruments were tested for every aspect. These findings are consistent with those of Belshaw et al. (2015) [], Doit et al. (2021) [], and Fulmer et al. (2022) [].

Various aspects of reliability can be assessed. Test–retest reliability was assessed with appropriate statistical tests in 3 of the 12 publications. Two of these [,] reported good test–retest reliability (≥0.70), the threshold generally considered sufficient []. Inter-rater reliability was assessed for only one instrument []. If inter-rater reliability has not been tested or is insufficient, it cannot be guaranteed that administrations carried out by different individuals are comparable. Therefore, when inter-rater reliability has not been established and repeated assessments of the same animal are planned, the questionnaire should be completed by the same person each time to avoid unreliable results.
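The reviewed publications are not described here in terms of the exact coefficient used. One common choice for both test–retest and inter-rater reliability is the intraclass correlation coefficient (ICC). The sketch below computes the two-way random-effects, absolute-agreement, single-measurement form (ICC(2,1) in Shrout and Fleiss's notation) from its ANOVA mean squares. The data layout (dogs as rows, occasions or raters as columns) and the example scores are hypothetical.

```python
def icc_2_1(scores):
    """ICC(2,1): scores[i][j] is the score of dog i on occasion (or rater) j."""
    n, k = len(scores), len(scores[0])
    grand = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]

    # Two-way ANOVA sums of squares: subjects, occasions, residual error.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    # Absolute agreement: systematic shifts between occasions lower the ICC.
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n)


# Hypothetical total scores for four dogs, completed twice by the same owner.
scores = [[10, 11], [14, 14], [18, 17], [12, 13]]
print(round(icc_2_1(scores), 2))  # 0.96
```

Because this form measures absolute agreement, a constant offset between the two occasions reduces the coefficient even if the dogs' rank order is unchanged; a consistency-type ICC would ignore such an offset.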

High internal consistency contributes to acceptable reliability, but internal consistency alone is insufficient evidence of reliability for clinical trial purposes when test–retest reliability has not been assessed []. Internal consistency was analysed and found to be acceptable (Cronbach’s alpha ≥ 0.70) in 7 of the 12 publications. However, one publication did not report the calculation details [], and another [] did not calculate internal consistency separately for its dog QoL instrument but included an owner QoL instrument in the calculation. Strictly speaking, internal consistency should only be assessed once the unidimensionality of a scale or subscale has been established. Therefore, values reported for instruments whose unidimensionality has not been checked, or for which two constructs have been combined, should be interpreted with caution.
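Cronbach's alpha compares the sum of the item variances with the variance of the total score: alpha = k/(k - 1) * (1 - sum of item variances / variance of total score), where k is the number of items. The minimal sketch below uses sample variances from Python's standard library. The example responses are hypothetical and, as noted above, the statistic is only meaningful for a scale whose unidimensionality has been established.

```python
from statistics import variance


def cronbach_alpha(responses):
    """responses[i][j]: answer of respondent i to item j of one (sub)scale."""
    k = len(responses[0])
    item_vars = [variance([row[j] for row in responses]) for j in range(k)]
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical answers of five owners to a four-item subscale (0-4 scale).
answers = [[3, 3, 2, 3], [1, 2, 1, 1], [4, 4, 3, 4], [2, 2, 2, 1], [0, 1, 0, 1]]
print(round(cronbach_alpha(answers), 2))  # 0.97
```

Very high values (for example, above about 0.95) can also signal redundant items rather than a better scale, which links this statistic to the structural analyses discussed next.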

Principal component analysis (PCA) or exploratory factor analysis (FA) can be performed to explore an instrument’s underlying structure. PCA was conducted in 3 and FA in 1 of the 12 publications. Assessing the underlying structure can help identify unnecessary items that do not contribute to the measurement. Omitting such items shortens the questionnaire and can therefore lower the respondent burden []. For reflective measurement models, in which the items are expected to be correlated, the underlying structure should be assessed [].
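A first step of a PCA on questionnaire data is to compute the eigenvalues of the item correlation matrix. The dependency-free sketch below does this with cyclic Jacobi rotations and then applies the Kaiser criterion (retain components with an eigenvalue above 1). The Kaiser criterion is only one common and fairly crude retention heuristic, PCA is not the same as factor analysis, and the simulated two-trait data are hypothetical.

```python
import math
import random


def correlation_matrix(data):
    """Pearson correlations between the columns (items) of data (rows = respondents)."""
    n, k = len(data), len(data[0])
    means = [sum(row[j] for row in data) / n for j in range(k)]
    centred = [[row[j] - means[j] for j in range(k)] for row in data]
    cov = [[sum(r[a] * r[b] for r in centred) / (n - 1) for b in range(k)]
           for a in range(k)]
    sd = [math.sqrt(cov[j][j]) for j in range(k)]
    return [[cov[a][b] / (sd[a] * sd[b]) for b in range(k)] for a in range(k)]


def eigenvalues_symmetric(matrix, tol=1e-12, max_sweeps=100):
    """Eigenvalues of a symmetric matrix by cyclic Jacobi rotations, largest first."""
    a = [row[:] for row in matrix]
    k = len(a)
    for _ in range(max_sweeps):
        if sum(a[p][q] ** 2 for p in range(k) for q in range(k) if p != q) < tol:
            break
        for p in range(k - 1):
            for q in range(p + 1, k):
                if a[p][q] == 0.0:
                    continue
                # Rotation angle chosen so that element (p, q) becomes zero.
                theta = (a[q][q] - a[p][p]) / (2 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1))
                c = 1 / math.sqrt(t * t + 1)
                s = t * c
                for r in range(k):  # A <- A P (columns p and q)
                    arp, arq = a[r][p], a[r][q]
                    a[r][p], a[r][q] = c * arp - s * arq, s * arp + c * arq
                for r in range(k):  # A <- P^T A (rows p and q)
                    apr, aqr = a[p][r], a[q][r]
                    a[p][r], a[q][r] = c * apr - s * aqr, s * apr + c * aqr
    return sorted((a[i][i] for i in range(k)), reverse=True)


def kaiser_count(data):
    """Number of principal components with eigenvalue > 1 (Kaiser criterion)."""
    return sum(ev > 1 for ev in eigenvalues_symmetric(correlation_matrix(data)))


# Hypothetical data: items 1-3 reflect one latent trait, items 4-6 another.
rng = random.Random(0)
data = []
for _ in range(300):
    f1, f2 = rng.gauss(0, 1), rng.gauss(0, 1)
    data.append([f1 + rng.gauss(0, 0.1) for _ in range(3)] +
                [f2 + rng.gauss(0, 0.1) for _ in range(3)])
print(kaiser_count(data))  # 2 for this two-trait design
```

In practice, a dedicated statistics package and additional criteria (for example, a scree plot or parallel analysis) would normally be used; the sketch only shows what the eigenvalue step computes.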

The validity of a test describes its ability to measure what it is intended to measure [] (p. 30). Content validity should be prioritised []. It concerns whether every relevant aspect is covered and whether no irrelevant aspects that could increase measurement error are included. ‘Face validity’, which is sometimes used interchangeably with ‘content validity’ but has a slightly different meaning, describes whether an instrument appears to measure what it is supposed to measure [].

Content validity was evaluated in 7 and face validity in 4 of the 12 publications. In two cases, both were evaluated, leaving three questionnaires untested for their content. Content and face validity were most frequently established through informal discussions or feedback on a pilot questionnaire, with some authors not even using the terms ‘content validity’ or ‘face validity’ [,,,], whereas others used a more comprehensive approach, for example, semi-structured interviews [] or focus group discussions []. As all 12 questionnaires were owner-reported QoL assessments, owners should be included not only in the development process, as discussed above, but also in content validation.

Testing for correlations with measurements of closely related constructs, such as disease severity, evaluates convergent validity and is therefore part of hypothesis testing, which contributes to establishing construct validity. This was done in 3 of the 12 publications. Correlations with related constructs should lie between 0.30 and 0.50 []. Correlations below 0.30 would indicate that either one of the measurements or the hypothesis that the constructs are related is incorrect [] (p. 240). Correlations that are too high would indicate that the same construct is being measured, so that the instrument is merely a new way of measuring what the other instrument already measures [] (p. 240). The reported correlations ranged from too low or barely acceptable [] through slightly too high [] to very high [].

A second approach to construct validity, used in 4 of the 12 publications, was to correlate the questionnaire results with an overall score. Measurements of the same construct should correlate highly, with a coefficient of at least 0.50 [], which was the case for all but one [] of the instruments.
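The two correlation-based hypotheses described above (0.30–0.50 for a related construct such as disease severity; at least 0.50 for a measurement of the same construct) can be checked with a short sketch. Spearman's rank correlation is used here as an assumption, since it suits ordinal questionnaire scores; the reviewed publications may have used other coefficients. The thresholds are applied to the absolute value of the coefficient, whereas a real hypothesis would also state the expected direction. The function names and example scores are hypothetical.

```python
import math


def average_ranks(values):
    """Ranks starting at 1; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for idx in order[i:j + 1]:
            ranks[idx] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def pearson(x, y):
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def spearman(x, y):
    """Spearman's rho as the Pearson correlation of average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def judge(r, hypothesis):
    """Compare |r| with the thresholds quoted in the text."""
    r = abs(r)
    if hypothesis == "related":   # e.g. QoL vs disease severity
        if r < 0.30:
            return "too low"
        return "as expected" if r <= 0.50 else "too high"
    if hypothesis == "same":      # e.g. QoL vs an overall QoL score
        return "as expected" if r >= 0.50 else "too low"
    raise ValueError(f"unknown hypothesis: {hypothesis}")


# Hypothetical QoL totals and disease-severity scores for eight dogs.
qol = [80, 72, 65, 60, 55, 50, 42, 30]
severity = [1, 3, 2, 4, 6, 5, 8, 7]
rho = spearman(qol, severity)
print(round(rho, 2), judge(rho, "related"))  # -0.93 too high
```

In this made-up example, the strong negative correlation would suggest that the questionnaire largely mirrors disease severity rather than capturing a distinct QoL construct.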

Comparing ‘extreme groups’, that is, groups expected to score very differently on the examined construct, is a third way of contributing to construct validity [,]. This was done in 7 of the 12 publications, and significant (p < 0.05) differences between the groups were observed for all tested instruments except that of Marchetti et al. (2021) [], which comprised five separately tested domains, only four of which showed a significant difference. However, because statistical significance also depends on sample size, and large samples can make minimal differences statistically significant, the magnitude of the correlations or differences should also be analysed []. Two studies [,] used analysis of variance (ANOVA) without first testing for normality, an assumption on which the valid use of this test depends.
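One common way to report the magnitude of an extreme-group difference, rather than only its p-value, is a standardised mean difference such as Cohen's d, shown below with a pooled sample standard deviation. Cohen's rough benchmarks of about 0.2, 0.5, and 0.8 for small, medium, and large effects are conventions rather than fixed rules. This is only one option: a rank-based effect size may be preferable for skewed or ordinal scores. The group scores are hypothetical.

```python
import math
from statistics import mean, variance


def cohens_d(group_a, group_b):
    """Standardised mean difference (a - b) using the pooled sample standard deviation."""
    na, nb = len(group_a), len(group_b)
    pooled_var = ((na - 1) * variance(group_a) + (nb - 1) * variance(group_b)) / (na + nb - 2)
    return (mean(group_a) - mean(group_b)) / math.sqrt(pooled_var)


# Hypothetical QoL scores of dogs with mild versus severe disease.
mild = [62, 70, 68, 75, 66]
severe = [40, 52, 45, 58, 49]
print(round(cohens_d(mild, severe), 2))
```

Unlike a p-value, d does not grow with sample size, so it conveys whether a statistically significant group difference is also large enough to matter.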

The instruments differed widely in the number of items (5–24). In general, as many items as necessary should be used to cover every aspect and ensure content validity, but as few as possible to minimise the respondent burden []. Schofield et al. (2019) [] were the only authors to report how long owners took to complete the questionnaire. As their 19 items took six minutes to complete, the other instruments are probably also of a reasonable length and can be completed while the owner waits.
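The single reported timing can be extrapolated to the range of instrument lengths found in this review. The sketch assumes a constant time per item, which is unlikely to hold exactly (for example, VAS items may take longer than Likert items), so the results are rough estimates only.

```python
def estimated_minutes(n_items, ref_items=19, ref_minutes=6.0):
    """Linear extrapolation from the one reported timing (19 items, 6 minutes)."""
    return n_items * ref_minutes / ref_items


for n in (5, 19, 24):
    print(n, round(estimated_minutes(n), 1))
# 5 1.6
# 19 6.0
# 24 7.6
```

Under this assumption, even the longest instrument (24 items) would take under 8 minutes.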

In summary, many publications described the development process inadequately, did not test the instrument at all or tested only a few aspects, or modified an existing questionnaire without reassessing its reliability and validity. Furthermore, the statistical methods used had some limitations. One possible explanation is that the instruments were developed by people who recognise the need for such an instrument but are unaware of the large body of psychological research on instrument development and therefore, with the best intentions, believe that ‘a few questions will do the job’. In addition, even in human medicine, uncertainties remain regarding the taxonomy and evaluation of measurement properties, which in turn lead to uncertainties when assessing the quality of a patient-reported outcome measure [,]. It is therefore not surprising that veterinary medicine faces similar challenges.

This study has several limitations. The search terms were the same as those used by Belshaw et al. (2015) []. Additional instruments might have been found if other or additional terms, such as ‘welfare’, had been included. However, this review focused specifically on instruments from the health sector, in which the terms ‘QoL’ or ‘health-related quality of life’ (HRQoL) are used most frequently, with well-being often incorporated into the definitions of QoL or HRQoL []. Of the 41 extracted publications on disease-specific evaluation, only 2 had well-being as the target construct, and all 11 instruments described in more detail measured QoL. This supports the assumption that quality of life is the most commonly used health-related term.

As the instruments were frequently modified, clearly distinguishing between them was challenging. To ensure accuracy, every modified set of questions was counted as a separate instrument. To avoid confusion, the use of the term ‘instrument’ should be reconsidered and, in future, applied only to sets of questions that have undergone testing and demonstrated adequate psychometric properties.

Future research should involve collaboration with researchers from other disciplines, so that as much existing knowledge as possible is incorporated and QoL measurement in veterinary medicine advances in the best interests of animals. Developing guidance for veterinary medicine similar to the COSMIN guidelines [,,,,] would also be beneficial.

5. Conclusions

Although research on QoL measurement is underway, we are still far from establishing rules for developing and testing psychometric instruments; consequently, sufficiently tested instruments are lacking in veterinary medicine. Nevertheless, provided that the individual shortcomings of each tool are understood, these tools can still be used to facilitate certain processes in daily practice. The overview provided in this review helps practitioners choose an instrument and highlights the untested aspects of each one, so that the instruments can be used responsibly and within their limitations.

Future research should focus on establishing common guidelines for the development and testing of psychometric instruments, such as QoL instruments, in veterinary medicine. Additionally, the instruments discussed in this review should be reassessed to properly evaluate their psychometric properties and, if necessary, refined to make them widely usable. Any such reassessment should be based on established methodologies and guidelines from human medicine and psychology, where psychometric testing has a longer tradition and well-defined standards.

Overall, the increasing attention paid to QoL is commendable. The next step must be to establish a sound and comprehensive research foundation on which to build.

Author Contributions

Conceptualization, F.F.R.; methodology, F.F.R. and R.K.; literature search, F.F.R.; data extraction, F.F.R.; writing—original draft preparation, F.F.R.; writing—review and editing, B.A. and S.K.; supervision, R.K., B.A. and S.K.; project administration, F.F.R. All authors have read and agreed to the published version of the manuscript.

Funding

The idea for this scoping review arose from a doctoral thesis that was funded by Boehringer Ingelheim Vetmedica GmbH. However, the review itself was conducted without funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No new data were created or analysed in this study. Data sharing is not applicable to this article.

Conflicts of Interest

The co-authors R.K. and B.A. are employees of Boehringer Ingelheim Vetmedica GmbH, Ingelheim am Rhein, Germany. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| QoL | Quality of Life |
| PRISMA | Preferred Reporting Items for Systematic Reviews and Meta-Analyses |
| PRISMA-ScR | Preferred Reporting Items for Systematic Reviews and Meta-Analyses Extension for Scoping Reviews |
| FDA | US Food and Drug Administration |
| COSMIN | Consensus-based Standards for the Selection of Health Status Measurement Instruments |
| ICC | Intraclass Correlation Coefficient |
| VAS | Visual Analogue Scale |
| NRS | Numerical Rating Scale |
| PCA | Principal Component Analysis |
| FA | Exploratory Factor Analysis |
| HRQoL | Health-related Quality of Life |

References

- Yeates, J.; Main, D. Assessment of companion animal quality of life in veterinary practice and research. J. Small Anim. Pract. 2009, 50, 274–281.

- Belshaw, Z.; Asher, L.; Harvey, N.D.; Dean, R.S. Quality of life assessment in domestic dogs: An evidence-based rapid review. Vet. J. 2015, 206, 203–212.

- The WHOQOL Group. The World Health Organization quality of life assessment (WHOQOL): Position paper from the World Health Organization. Soc. Sci. Med. 1995, 41, 1403–1409.

- McMillan, F.D. Quality of life in animals. J. Am. Vet. Med. Assoc. 2000, 216, 1904–1910.

- Belshaw, Z. Quality of life assessment in companion animals: What, why, who, when and how. Companion Anim. 2018, 23, 264–268.

- McDowell, I. Measuring Health: A Guide to Rating Scales and Questionnaires, 3rd ed.; Oxford University Press: Oxford, UK, 2006; ISBN 978-0-19-516567-8.

- Guyatt, G.H. Measuring Health-Related Quality of Life: General Issues. Can. Respir. J. 1997, 4, 123–130.

- Scott, E.M.; Nolan, A.M.; Reid, J.; Wiseman-Orr, M.L. Can we really measure animal quality of life? Methodologies for measuring quality of life in people and other animals. Anim. Welf. 2007, 16, 17–24.

- Streiner, D.L.; Norman, G.R. Health Measurement Scales: A Practical Guide to Their Development and Use, 4th ed.; Oxford University Press: Oxford, UK, 2008; ISBN 9780199231881.

- Kirkley, A.; Griffin, S. Development of disease-specific quality of life measurement tools. Arthroscopy 2003, 19, 1121–1128.

- U.S. Department of Health and Human Services Food and Drug Administration. Guidance for Industry Patient-Reported Outcome Measures: Use in Medical Product Development to Support Labeling Claims. 2009. Available online: https://www.fda.gov/media/77832/download (accessed on 29 January 2025).

- Fulmer, A.E.; Laven, L.J.; Hill, K.E. Quality of Life Measurement in Dogs and Cats: A Scoping Review of Generic Tools. Animals 2022, 12, 400.

- Greene, L.M.; Royal, K.D.; Bradley, J.M.; Lascelles, B.D.X.; Johnson, L.R.; Hawkins, E.C. Severity of Nasal Inflammatory Disease Questionnaire for Canine Idiopathic Rhinitis Control: Instrument Development and Initial Validity Evidence. J. Vet. Intern. Med. 2017, 31, 134–141.

- Beths, T.; Munn, R.; Bauquier, S.H.; Mitchell, P.; Whittem, T. A pilot study of 4CYTE™ Epiitalis® Forte, a novel nutraceutical, in the management of naturally occurring osteoarthritis in dogs. Aust. Vet. J. 2020, 98, 591–595.

- Tricco, A.C.; Lillie, E.; Zarin, W.; O’Brien, K.K.; Colquhoun, H.; Levac, D.; Moher, D.; Peters, M.D.J.; Horsley, T.; Weeks, L.; et al. PRISMA Extension for Scoping Reviews (PRISMA-ScR): Checklist and Explanation. Ann. Intern. Med. 2018, 169, 467–473.

- DeVon, H.A.; Block, M.E.; Moyle-Wright, P.; Ernst, D.M.; Hayden, S.J.; Lazzara, D.J.; Savoy, S.M.; Kostas-Polston, E. A psychometric toolbox for testing validity and reliability. J. Nurs. Scholarsh. 2007, 39, 155–164.

- Prinsen, C.A.C.; Mokkink, L.B.; Bouter, L.M.; Alonso, J.; Patrick, D.L.; de Vet, H.C.W.; Terwee, C.B. COSMIN guideline for systematic reviews of patient-reported outcome measures. Qual. Life Res. 2018, 27, 1147–1157.

- Terwee, C.B.; Prinsen, C.A.C.; Chiarotto, A.; Westerman, M.J.; Patrick, D.L.; Alonso, J.; Bouter, L.M.; de Vet, H.C.W.; Mokkink, L.B. COSMIN methodology for evaluating the content validity of patient-reported outcome measures: A Delphi study. Qual. Life Res. 2018, 27, 1159–1170.

- Mokkink, L.B.; de Vet, H.C.W.; Prinsen, C.A.C.; Patrick, D.L.; Alonso, J.; Bouter, L.M.; Terwee, C.B. COSMIN Risk of Bias checklist for systematic reviews of Patient-Reported Outcome Measures. Qual. Life Res. 2018, 27, 1171–1179.

- Mokkink, L.B.; Boers, M.; van der Vleuten, C.P.M.; Bouter, L.M.; Alonso, J.; Patrick, D.L.; de Vet, H.C.W.; Terwee, C.B. COSMIN Risk of Bias tool to assess the quality of studies on reliability or measurement error of outcome measurement instruments: A Delphi study. BMC Med. Res. Methodol. 2020, 20, 293.

- Budke, C.M.; Levine, J.M.; Kerwin, S.C.; Levine, G.J.; Hettlich, B.F.; Slater, M.R. Evaluation of a questionnaire for obtaining owner-perceived, weighted quality-of-life assessments for dogs with spinal cord injuries. J. Am. Vet. Med. Assoc. 2008, 233, 925–930.

- Favrot, C.; Linek, M.; Mueller, R.; Zini, E. Development of a questionnaire to assess the impact of atopic dermatitis on health-related quality of life of affected dogs and their owners. Vet. Dermatol. 2010, 21, 64–70.

- Freeman, L.M.; Rush, J.E.; Farabaugh, A.E.; Must, A. Development and evaluation of a questionnaire for assessing health-related quality of life in dogs with cardiac disease. J. Am. Vet. Med. Assoc. 2005, 226, 1864–1868.

- Giuffrida, M.A.; Brown, D.C.; Ellenberg, S.S.; Farrar, J.T. Development and psychometric testing of the Canine Owner-Reported Quality of Life questionnaire, an instrument designed to measure quality of life in dogs with cancer. J. Am. Vet. Med. Assoc. 2018, 252, 1073–1083.

- Iliopoulou, M.A.; Kitchell, B.E.; Yuzbasiyan-Gurkan, V. Development of a survey instrument to assess health-related quality of life in small animal cancer patients treated with chemotherapy. J. Am. Vet. Med. Assoc. 2013, 242, 1679–1687.

- Lynch, S.; Savary-Bataille, K.; Leeuw, B.; Argyle, D.J. Development of a questionnaire assessing health-related quality-of-life in dogs and cats with cancer. Vet. Comp. Oncol. 2011, 9, 172–182.

- Marchetti, V.; Gori, E.; Mariotti, V.; Gazzano, A.; Mariti, C. The impact of chronic inflammatory enteropathy on dogs’ quality of life and dog-owner relationship. Vet. Sci. 2021, 8, 166.

- Noli, C.; Minafò, G.; Galzerano, M. Quality of life of dogs with skin diseases and their owners. Part 1: Development and validation of a questionnaire. Vet. Dermatol. 2011, 22, 335–343.

- Schofield, I.; O’Neill, D.G.; Brodbelt, D.C.; Church, D.B.; Geddes, R.F.; Niessen, S.J.M. Development and evaluation of a health-related quality-of-life tool for dogs with Cushing’s syndrome. J. Vet. Intern. Med. 2019, 33, 2595–2604.

- Weiske, R.; Sroufe, M.; Quigley, M.; Pancotto, T.; Werre, S.; Rossmeisl, J.H. Development and evaluation of a caregiver reported quality of life assessment instrument in dogs with intracranial disease. Front. Vet. Sci. 2020, 7, 537.

- Wessmann, A.; Volk, H.A.; Parkin, T.; Ortega, M.; Anderson, T.J. Evaluation of quality of life in dogs with idiopathic epilepsy. J. Vet. Intern. Med. 2014, 28, 510–514.

- Yazbek, K.V.B.; Fantoni, D.T. Validity of a health-related quality-of-life scale for dogs with signs of pain secondary to cancer. J. Am. Vet. Med. Assoc. 2005, 226, 1354–1358.

- Coughlin, S.S. Recall bias in epidemiologic studies. J. Clin. Epidemiol. 1990, 43, 87–91.

- European Medicines Agency. Guideline on Dossier Requirements for Anticancer Medicinal Products for Dogs and Cats. 2021. Available online: https://www.ema.europa.eu/en/documents/scientific-guideline/guideline-dossier-requirements-anticancer-medicinal-products-dogs-and-cats-revision-1_en.pdf (accessed on 28 January 2025).

- Wiseman, M.L.; Nolan, A.M.; Reid, J.; Scott, E.M. Preliminary study on owner-reported behaviour changes associated with chronic pain in dogs. Vet. Rec. 2001, 149, 423–424.

- Doit, H.; Dean, R.S.; Duz, M.; Brennan, M.L. A systematic review of the quality of life assessment tools for cats in the published literature. Vet. J. 2021, 272, 105658.

- de Vet, H.C.W.; Terwee, C.B.; Mokkink, L.B.; Knol, D.L. Measurement in Medicine: A Practical Guide; Cambridge University Press: New York, NY, USA, 2011; p. 181. ISBN 978-0-521-11820-0.

- Mokkink, L.B.; Terwee, C.B.; Knol, D.L.; Stratford, P.W.; Alonso, J.; Patrick, D.L.; Bouter, L.M.; de Vet, H.C.W. The COSMIN checklist for evaluating the methodological quality of studies on measurement properties: A clarification of its content. BMC Med. Res. Methodol. 2010, 10, 22.

- Mokkink, L.B.; Terwee, C.B.; Patrick, D.L.; Alonso, J.; Stratford, P.W.; Knol, D.L.; Bouter, L.M.; de Vet, H.C.W. The COSMIN study reached international consensus on taxonomy, terminology, and definitions of measurement properties for health-related patient-reported outcomes. J. Clin. Epidemiol. 2010, 63, 737–745.

- Karimi, M.; Brazier, J. Health, Health-Related Quality of Life, and Quality of Life: What is the Difference? Pharmacoeconomics 2016, 34, 645–649.

- Terwee, C.B.; Prinsen, C.A.C.; Chiarotto, A.; de Vet, H.C.W.; Bouter, L.M.; Alonso, J.; Westerman, M.J.; Patrick, D.L.; Mokkink, L.B. COSMIN Methodology for Assessing the Content Validity of PROMs—User Manual. 2018. Available online: https://www.cosmin.nl/wp-content/uploads/COSMIN-methodology-for-content-validity-user-manual-v1.pdf (accessed on 29 January 2025).

- Mokkink, L.B.; Prinsen, C.A.C.; Patrick, D.L.; Alonso, J.; Bouter, L.M.; de Vet, H.C.W.; Terwee, C.B. COSMIN Methodology for Systematic Reviews of Patient-Reported Outcome Measures (PROMs)—User Manual. 2018. Available online: https://cosmin.nl/wp-content/uploads/COSMIN-syst-review-for-PROMs-manual_version-1_feb-2018.pdf (accessed on 29 January 2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).