1. Introduction

The first genome ever sequenced was that of Bacteriophage MS2 [

1]. Twenty years later, the first bacterial genome,

Haemophilus influenza, was sequenced, and with it came the need to computationally predict where protein-coding genes occur in prokaryotic genomes [

2]. This gave rise to the first of the gene annotations tools, GeneMark [

3], Glimmer [

4], and CRITICA [

5], a decade later Prodigal [

6], and most recently PHANOTATE [

7] for viral genomes. One thing each of these tools shares in common is the necessity for a training set of good genes—genes that are highly likely to encode proteins, and that the software can use to learn the features that segregate coding open-reading frames (ORFs) from noncoding ones. GeneMark and GLIMMER, and to an extent Prodigal and PHANOTATE, all require precomputed gene models to find similar genes within the input genome, and the better these training models, the better the predictions that each tool makes. Both GeneMark and CRITICA rely on previously annotated genomes to predict genes in the input query genome. GeneMark selects one of its precomputed general heuristic models based on the amino acid translation table and GC content of the input genome. CRITICA uses the shared homology between the known genes and the input genome, as well as non-comparative information such as contextual hexanucleotide frequency. In contrast, GLIMMER, Prodigal, and PHANOTATE create gene models from only the input query genome, which removes the dependence on reference data. Each uses a different method to select ORFs for inclusion in a training-set, on which a gene model is built. GLIMMER builds its training-set from the longest (and thus most likely to be protein-encoding) ORFs, which are predicted by its LONGORFS program. Prodigal uses the GC frame plot consensus of the ORFs, and performs the first of its two dynamic programming steps to build a training-set. PHANOTATE creates a training-set by taking all the ORFs that begin with the most common start codon ATG.

An additional method, which has subsequently been added as an option to LONGORFS, is the Multivariate Entropy Distance (MED2) algorithm [

8], which finds the entropy density profiles (EDP) of ORFs and compares them to precomputed reference EDP profiles for coding and noncoding ORFs. The EDP of an ORF is a 20-dimensional vector

S = {

si} of the entropy of the 20 amino acid frequencies

pi, and is defined by:

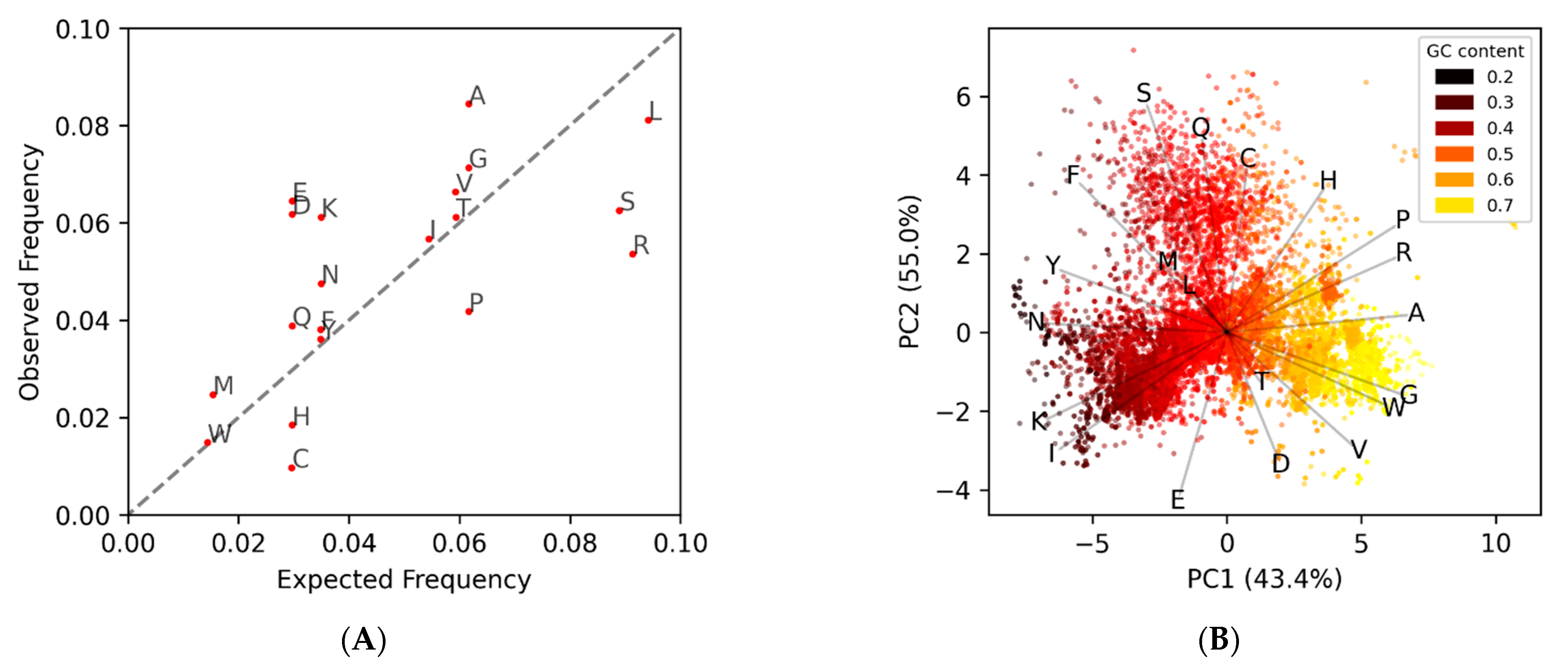

This approach relies on the coding ORFs having a conserved amino acid composition that is different from the noncoding ORFs. This differential can be seen when comparing the observed amino acid frequency of known phage protein coding genes to the expected frequencies (

Figure 1A), which are based purely on the percent AT|GC content of the genome. Since coding ORFs have a bias towards certain amino acids, and noncoding ORFs have frequencies approximately dependent on the nucleotide composition, each will cluster separately in a 20-dimensional amino acid space (

Figure 1B).

MED2 uses these observed amino acid frequency EDPs from known coding/noncoding genes, in the form of average “centers”, to classify potential ORFs in other genomes. One problem that arises is that the observed amino acid frequencies, and the EDPs derived from them, change based on the AT/GC content of a genome (

Figure 1B). This is because the codon triplets for amino acids do not change even when the probability of those codons occurring changes due to AT/GC content. The way MED2 overcomes this is by using two different sets of coding/noncoding references, one for low-GC content genomes and one for normal and high-GC content genomes. Aside from the complication of forcing a discrete scale onto continuous data, there is also the issue that the amino acid composition can change independent of GC content. A good example of this is the

Propionibacterium phages, which are the darker orange points at (4,1) in

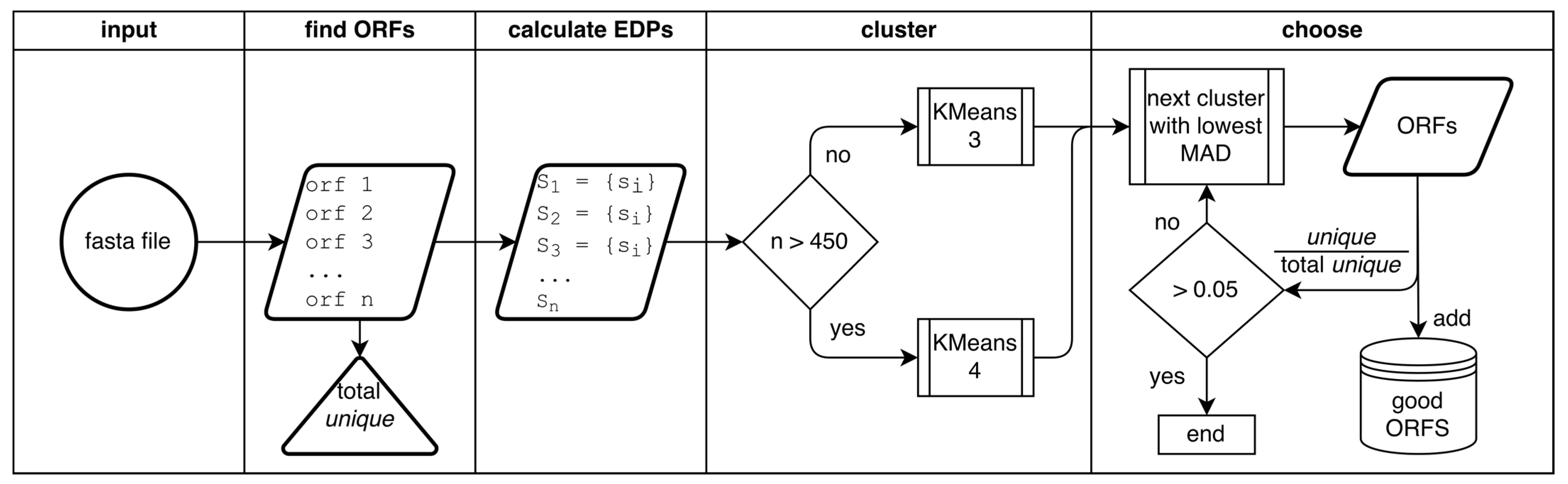

Figure 1B that cluster with the yellow high-GC content genomes despite having a normal GC content. Another problem with reference-based gene prediction, and the pitfall of all supervised learning, is that only genes similar to those already known are predicted. As such, we sought to implement a reference-free method for identifying protein-coding genes within a stretch of DNA, herein titled GOODORFS. Our approach takes the EDP metric, and expands on it by adding the three stop codons and the ORF length. However, rather than using reference EDP profiles, we use unsupervised learning (namely KMeans) to cluster the ORFs, and then denote the cluster with the lowest variation as the coding ORFs.

3. Results and Discussion

We began our work by taking the previously published EDP metric, but expanded it to also include the three stop codons, amber (+), ochre (#), and umber (*). We also appended the length of the ORF to the vector. The purpose of this was two-fold: First, the EDP metric loses informational content when converting from amino acid counts to frequencies. Certainly, a short ORF with a given frequency coding bias is less significant than a much larger ORF with the same frequency bias, since the latter maintains that bias over many more codons. Second, the ORF length bolsters the clustering step, since coding ORFs are generally longer than noncoding ones (for our dataset, the mean lengths were 595 bp and 345 bp, respectively).

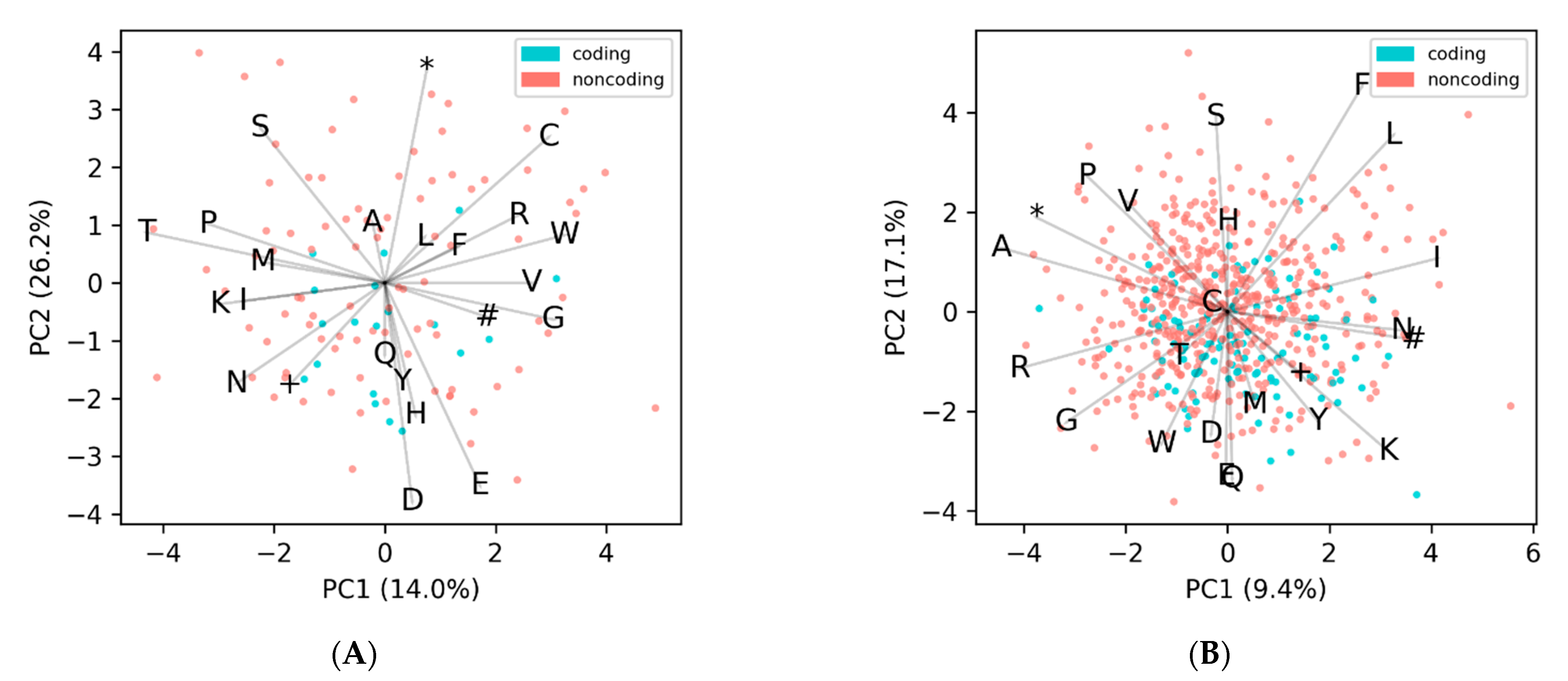

To demonstrate our approach, we took the representative genome,

Caulobacter phage CcrBL9 [

14], chosen since it is the largest genome in our dataset that has a high GC content (>60%), so it will have significantly more noncoding ORFs than coding, which allows for visualizing all of the categories in

Figure 3. We then found all the potential ORFs in the genome, calculated their 24-dimensional EDPs, and used PCA analysis to plot them in 2 dimensions (

Figure 3A). The coding ORFs (blue) and the noncoding ORFs (red) cluster separately, and this same pattern is observed across all other genomes.

Initially we began using KMeans with two clusters, coding and noncoding. This worked well for genomes with average and low GC contents, but did not work well on high-GC content genomes (mean F1-scores were 0.72 and 0.49, respectively). This is because the probability of encountering a stop codon is lower in those with a high GC content, and so they have about twice as many noncoding ORFs as coding (

Figure S1). This can be seen in

Figure 3A, where the red noncoding ORFs far outnumber the blue coding ORFs. Because the KMeans algorithm tends to distribute points into equally sized clusters, this would lead to far more noncoding ORFs being incorrectly clustered with the coding ORFs. To overcome this, we initially changed to the KMeans of three clusters, so that the coding ORFs would get their own cluster and the noncoding ORFs would be split between the two others clusters. Upon inspection of the data, it is apparent that the noncoding ORFs fall into six different clusters, one for each reading frame offset (

Figure 3B), and that not all ORFs have completely random non-conserved amino acid frequencies. The first cluster of noncoding ORFs are those in the intergenic (IG) regions, and for the most part, these have amino acid frequencies that are completely random and follow the expected frequency based on GC content alone. The other five clusters are those that overlap with a coding ORF, and are denoted by an offset, which can be thought of as how many base shifts it takes to get to the coding frame. In contrast to the IG, the offset clusters overlap with a coding ORF, and have a slightly conserved amino acid frequency. This is because even though they themselves do not encode proteins, they are not independent of the coding ORFs they overlap with; they share the same nucleotides, just offset and in different frames. These non-intergenic noncoding ORFs fall into five categories (1+, 2+, 0−, 1−, 2−), and are always in relation to the coding frame, denoted as 0+ (which is synonymous with coding). The same-strand categories (1+ and 2+) correspond to when the coding ORF is in frame

n and so the two noncoding ORFs are n + 1 and n + 2. Likewise, the three opposite-strand categories (0−, 1−, and 2−) correspond to a coding ORF at frame n and noncoding ORFs at n + 0, n + 1, and n + 2, except in the reverse direction. Thus, we would expect there to be one conserved amino acid cluster for the coding frame, five spurious semi-conserved clusters, and one cluster for intergenic regions (

Figure 3B and

Figure S2). Since we are working with phages, which can range down to only 18 unique ORFs for a genome (

Figure S3), it is not possible to cluster ORFs into these seven clusters, so we settle on clustering into three for small genomes (less than 450 unique ORFs) and four clusters for larger genomes (over 450 unique ORFs). This cutoff was chosen because when using four clusters, genomes with less than 400 unique ORFs started to fail. Likewise, when using only three clusters, genomes with more than 500 unique ORFs began failing. We could have continued this pattern of setting multiple staggered cutoffs depending on genome size, until reaching seven clusters, but did not observe significant improvements when using more clusters, even for the largest genomes. However, we still need to pick which cluster contains the coding ORFs, since unsupervised clustering does not assign labels. Since coding ORFs have a conserved amino acid frequency, they will have a higher “density” cluster when compared to noncoding ORFs—in 24-dimensional ordination the points will be highly clustered. This can be observed in

Figure 3B, where the blue coding ORFs are a dense cluster, while the noncoding ORFs are a sparse cloud. We used the mean absolute density (MAD) of each cluster to quantify the “density” in the 23-dimensional EDP space, since the MAD better accounts for outliers in the data. The cluster with the lowest sum of MADs (excluding the ORF length feature) was selected as the coding cluster.

To test the efficacy of our method, we took a set of 14,179 annotated phage genomes, and for each genome we identified all potential ORFs, calculated the amino acid EDPs, clustered them, labeled the densest cluster as coding, and then calculated the F1-score to measure the performance. The average of the F1-scores was 0.85 ± 0.13, with only 32 genomes failing (an F1-score of less than 0.1). Unsurprisingly, all the failed genomes were very small in size, with ten or less annotated protein-coding genes (and 60 or less

unique ORFs). A breakdown of the genome sizes in our dataset (both number of protein-coding genes and all potential ORFs) is shown in

Supplementary Figure S3. Additionally, all the genomes that failed belonged to the taxon

Microviridae. Whether this is due to correlation (about 62% of the genomes with less than 60 unique ORFs were

Microviridae) or causation remains undetermined. As shown in the plot of amino acid versus GC content (

Figure 1B), there is a large cloud of points that do not follow the horizontal trend, and instead cluster at the top of the figure separately along the vectors that represent the FSQC amino acids, where most of the genomes belong to

Microviridae (

Figure S4). This suggests that the

Microviridae do not use the Standard Code, but rather one that potentially substitutes one or more of the over-observed amino acid codons (FSQC) for the codons that are under-observed (VDE). This hypothesis is supported by the dozen or so phages from other taxa that group with the

Microviridae away from their expected locations in

Supplementary Figure S4. Examples of two non Microviridae phages with unusual amino acid composition are shown in

Figure 4, where no discernable separation of coding from noncoding is observed. Both of these genomes cluster away from the expected frequency with the Microviridae (

Figure S4), and the larger genome sizes reinforces the possibility that they are using a different codon translation table.

We compared the F1-scores of our analysis to those from four other similar programs that create a training set of genes from an input genome: LONGORFS (Glimmer), MED2, PHANOTATE, and Prodigal (for PHANOTATE and Prodigal, only the initial training set creation step is run, and not the entire gene-finding algorithm). The LONGORFS program had 49 genomes had an F1-score less than 0.1, and the overall mean F1-score was 0.53. For MED2, there were six genomes that caused the program to crash; of the remainder, there were 1143 genomes with an F1-score less than 0.1, and the mean F1-score was 0.55. No genomes had an F1-score less than 0.1 with PHANOTATE, since its method sacrifices precision for recall, and subsequently PHANOTATE had the lowest mean F1-score of 0.43. Prodigal performs well, with an F1-score of 0.75 and no genomes failing (an F1-score less than 0.1).

Comparing the mean F1-scores of the four alternative methods to the 0.83 obtained from GOODORFS shows just how much better our method is at recovering coding ORFs compared to other methods (

Table 1). Of the four alternative methods, Prodigal performs the best, but at this point in the complex algorithm, Prodigal has already performed one of its two rounds of dynamic programming to identify coding ORFs. Plotting the individual genome F1-scores from GOODORFS versus the four other methods shows the distribution (

Figure 5), wherein points above the diagonal identity line, of which there are many, signify that GOODORFS is outperforming the competing method. Many of the points in

Figure 5 that have low F1-scores (<0.5) with GOODORFs also happen to belong to the class of genomes that have unusual amino acid frequencies. The two representative genomes discussed above,

Ralstonia phage RSM1 and

Escherichia phage fp01, had GOODORFS F1-scores of 0.38 and 0.23, respectively. These two genomes did not fare much better with the other programs with the highest F1-scores of 0.56 and 0.41 coming from Prodigal, which is not beholden to codon translation tables, except in the form of start and stop codons.

All the previous performance results are based on simple ORF counts. If we were to instead normalize by ORF length, the F1-scores for methods that favor recall over precision (GOODORFS, Phanotate, Prodigal) would all increase because, as we have shown, the true-positives (coding ORFs) are longer than the false-positives (noncoding ORFs). This is relevant because training on a gene set usually involves iterating over the length of the genes, and so longer ORFs have more emphasis than shorter ORFs. An example of this would be in calculating synonymous codon usage bias in a set of genes, where a long ORF with many codons would contribute more than a short one with few codons. Another aspect of training on a gene model is that a high F1-scoring method might not be the best choice for a given gene prediction tool. Sometimes it is better to have a very precise training set with very few false-positives. For instance, this might be the case with Glimmer, which uses the very low F1-scoring method LONGORFS to create a training set. Perhaps having only a few true-positive genes is worth not having any false-positives contaminating the training gene model. Whether to adopt our GOODORFs method into phage gene-finding tools or pipelines is left up to the researchers to determine on a case-by-case basis.

4. Conclusions

We have demonstrated the benefits of our GOODORFS method over similar alternative methods. The more accurate the set of genes used for training a gene model, the more accurate the final gene predictions will be, and this will lead to significant improvements in current gene-finding programs. We plan on incorporating GOODORFS into the PHANOTATE code to replace its current naïve method for creating a training set of all ORFs that start with the codon ATG. Glimmer, which uses LONGORFS, which had the second lowest F1-score (after PHANOTATE), could potentially benefit from switching to using GOODORFS. Since Glimmer is quite modular in its workflow, it is quite easy to change it to use GOODORFS. To facilitate this change, we even tailored our code to mirror the command line arguments and output format of LONGORFS, and have included the code to implement this patch in the GOODORFS github repo. The one lingering shortcoming of GOODORFS is that the current version is coded in Python, which is a scripting language and is significantly slower than compiled code, as is evidenced in our runtime calculations. In order to make GOODORFS more competitive over competing methods, we have begun work on a faster compiled C version.

An area where our GOODORFS method might excel is gene prediction in metagenomes. Most of the available methods for gene prediction in metagenomic reads again rely on reference databases of known genes, which, as previously discussed, has its disadvantages. In contrast, GOODORFS is reference-free; the only prior information needed is the amino acid translation table. Depending on the sequencing technology used, generally a read only contains a fragment of a protein-coding gene, with the beginning or the end (or sometimes both) of the gene extending beyond the length of the read. Because GOODORFS allows for ORF fragments (the lack a start or stop codon) at the edges of the input sequence, it has the ability to work on metagenomic reads. All that is needed is to bin reads according to their GC content and then run the bins through GOODROFS in batches in order to predict gene fragments within the reads. We have already begun adapting and testing the GOODORFS code to work with metagenomes, and instructions are available on the github repo.