Abstract

This paper presents a model predictive control (MPC)-enabled disturbance-rejection control approach for a pneumatic manipulator system with complex nonlinear dynamics. To simplify their treatment, the complex nonlinear terms are modeled as disturbances. To estimate these disturbances, a receding-horizon-based extended state observer (RH-ESO) incorporating a decision variable is developed. The optimal disturbance estimation error is determined through a receding-horizon optimization procedure, which provides the best estimate of the disturbance. Using this optimal estimate, an MPC-enabled disturbance-rejection controller is proposed for the pneumatic manipulator system to achieve angle tracking control. Moreover, the proposed approach ensures both the recursive feasibility of the optimization problem and the uniform boundedness of the closed-loop system. Simulation results further demonstrate the effectiveness of the proposed methodology.

1. Introduction

Research on pneumatic soft robotic arms has accelerated with the recent progress in flexible actuation []. Owing to their intrinsic compliance, these arms are now prevalent in soft manipulation [], bio-inspired robotics [], and industrial automation []. Their primary actuators, pneumatic artificial muscles (PAMs), provide high power-to-weight ratios [], large deformations [], low cost [], and straightforward fabrication []. Nevertheless, severe nonlinearities, rate-dependent hysteresis, input coupling, and sensitivity to disturbances complicate precise control []. To mitigate these difficulties, several robust strategies have been investigated, including adaptive control [], sliding mode control (SMC) [], and active disturbance-rejection control (ADRC) []. An adaptive hysteresis-compensation scheme in [] achieves high-precision trajectory tracking for PAM joints, whereas a constraint-aware adaptive fuzzy controller in [] maintains prescribed tracking accuracy under time-varying motion constraints. These advances highlight the growing maturity of PAM control; however, further work is needed to balance tracking accuracy, robustness, and computational efficiency for real-world deployment.

SMC has become the preferred robust strategy for PAM-actuated systems owing to its inherent resilience to parameter variations and external disturbances []. The work in [] combines adaptive SMC with fuzzy-logic approximation on a PAM-driven humanoid arm, achieving asymptotic trajectory tracking despite unknown nonlinearities. More recently, the study in [] incorporates a disturbance observer into the SMC framework and, with rigorous stability analysis, guarantees uniformly ultimately bounded tracking errors under discontinuous friction.

As a robust anti-disturbance methodology, ADRC has been widely deployed in power systems [], industrial robots [], and multi-agent networks []. By dynamically estimating and compensating both endogenous uncertainties and exogenous disturbances, ADRC largely decouples closed-loop performance from precise modeling requirements []. Its core component—the extended state observer (ESO)—treats disturbances as augmented state variables, permitting simultaneous estimation of the original and disturbance states and thereby yielding a full description of the extended system dynamics []. Numerous ESO variants have been proposed. A reduced-order ESO for mobile robots is introduced in [], while an event-triggered Takagi–Sugeno fuzzy ESO with adaptive parameters for bandwidth-constrained nonlinear systems is reported in []. To attenuate measurement noise, a composite observer that couples an ESO with a Kalman filter is presented in []. ESO-based solutions have also been tailored to pneumatic artificial muscles: a super-twisting ESO for trajectory regulation is presented in [], and an ESO-assisted sliding mode controller is detailed in []. Despite these advances, designing an ESO that maximizes closed-loop performance remains unresolved. The composite observer in [], for example, achieves minimum-variance state estimation rather than disturbance estimation explicitly optimized for control objectives. Motivated by this gap, the present work seeks to develop a new ESO that delivers optimal control performance.

In addition to exogenous disturbances, practical systems must respect stringent operational limits, especially state bounds and actuator saturation, which directly influence performance and safety [,,]. Model predictive control (MPC) handles these limits by solving a receding-horizon optimization that enforces state and input constraints, delivering superior closed-loop behavior with explicit safety guarantees [,]. The work in [] introduces a hybrid disturbance-predictive, adaptive, event-triggered MPC that improves prediction accuracy while reducing communication demand, whereas [] develops a periodic event-triggered MPC for nonlinear systems under bounded disturbances. Despite such progress, applications of MPC to constrained pneumatic manipulators remain scarce, an observation that motivates the present study.

This work addresses constrained control synthesis for pneumatic manipulator systems operating under concurrent state and input constraints as well as external disturbances. The main contributions are as follows: (1) a novel ESO with an additive term is proposed, whose estimation error dynamics are explicitly incorporated into the MPC cost function and whose estimation gain is adjusted dynamically through real-time error feedback; (2) an MPC-enabled disturbance-rejection controller is designed for the pneumatic manipulator system to address both constraints and exogenous perturbations in soft actuator applications; and (3) sufficient stability conditions are established in terms of linear matrix inequalities (LMIs), theoretically ensuring the uniform ultimate boundedness of all closed-loop signals under the proposed MPC-based disturbance-rejection controller.

2. Problem Formulation and Preliminaries

2.1. The Model of the Pneumatic Manipulator System

In this paper, the model of the pneumatic manipulator system considered is based on []. In [], the authors have established the dynamic model of the pneumatic manipulator for the corresponding experimental platform. In the experimental setup of [], compressed air is provided by an air compressor and supplied to pressure-proportional valves as the air source. Voltage signals from the industrial computer are transmitted to twelve pressure-proportional valves, which regulate the internal pressures and pulling forces of the twelve PAMs. During the control process, the system operates in three scenes: an initial scene, a preloading scene, and a movement scene. When the pneumatic manipulator is not pressurized, the input signals of the twelve pressure-proportional valves are zero, indicating that all PAMs are in a relaxed state without air pressure, and the deflection angle of the manipulator is zero. When the manipulator is required to track a given deflection angle, its motion is driven by antagonistic PAMs: one PAM is inflated while the other is deflated, resulting in an angular deflection of the manipulator. From this process, it can be seen that, although the input signal to the system is voltage, it is ultimately converted into internal pressure and pulling force of the PAMs. Therefore, in the dynamic model, the actual control input is the force, and the control objective is angle regulation. The objective of this study is to design an MPC scheme based on a novel ESO, enabling the pneumatic manipulator to track a given deflection angle signal with optimal control cost.

The pneumatic manipulator model is given according to []

where denotes the deflection angle of the joint mechanism; u is the control input. The system configuration parameters include (moment arm distance between PAM actuators and the rotating linkage), m (equivalent total mass incorporating the lower chassis and adjacent joint components), and (effective length of the driving linkage). The pneumatic actuation characteristics are defined by (pressure–voltage conversion coefficient of the proportional valves), (preloading pressure of PAMs), and (nominal rest length of the pneumatic artificial muscles). Additional manufacturing parameters specify N (number of synthetic fiber winding turns in PAM construction) and b (total length of rayon).

Then, system (2) can be transformed into the following discrete-time state-space form

where is the sampling period; i is the time step.
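As an illustration of how a continuous-time joint model can be brought into a discrete-time form with a fixed sampling period, the following minimal sketch applies one forward-Euler step to a generic second-order system. The discretization rule, the function name `euler_step`, and the placeholder arguments `f` (lumped nonlinear term) and `b` (input gain) are assumptions made for illustration; they are not taken from the model in [].

```python
import math


def euler_step(x1, x2, u, T, f, b):
    """One forward-Euler step of the generic model
         x1' = x2,   x2' = f(x1, x2) + b * u,
    where T is the sampling period. f and b are placeholders (assumptions),
    not the pneumatic manipulator parameters of the paper.
    """
    x1_next = x1 + T * x2
    x2_next = x2 + T * (f(x1, x2) + b * u)
    return x1_next, x2_next


def simulate(x1, x2, inputs, T, f, b):
    """Roll the discrete-time model forward over a sequence of inputs."""
    trajectory = [(x1, x2)]
    for u in inputs:
        x1, x2 = euler_step(x1, x2, u, T, f, b)
        trajectory.append((x1, x2))
    return trajectory


if __name__ == "__main__":
    # Hypothetical pendulum-like nonlinearity, used only to exercise the code.
    traj = simulate(0.0, 0.0, [1.0] * 5, 0.01, lambda a, w: -math.sin(a), 1.0)
    print(traj[-1])
```

A smaller sampling period reduces the discretization error of this rule at the price of more steps per unit of simulated time.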

Considering the physical constraints on , , and of the pneumatic manipulator system, this study formulates these limitations in the following mathematical representation:

respectively, where , , and are known positive constants.

Remark 1.

Subject to the mechanical constraints of the pneumatic manipulator’s physical structure, its actual deflection angle is practically confined to ; i.e., . Consequently, the disturbance boundary is defined as .

2.2. The Receding-Horizon-Based ESO

In this paper, a receding-horizon-based ESO (RH-ESO) is proposed. Decision variables are used in the RH-ESO to simultaneously optimize observer performance, suppress high-gain phenomena, and mitigate overshoot in the disturbance estimation errors. The RH-ESO is designed as

where is the estimation of ; is the estimation of ; is the estimation of ; is the decision variable that is obtained by solving an optimization problem subsequently; are the adjustable gains; are the estimation errors that can be obtained as

where .
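To make the structure of an observer with an additive decision variable more concrete, the sketch below implements one update of a third-order linear ESO in forward-Euler form. It is a generic illustration rather than the RH-ESO itself: the update equations, the bandwidth-based gains, the nominal input gain `b0`, and the way the decision variables `c` enter are all assumptions. In the proposed scheme the decision variable is obtained from the receding-horizon optimization; here it simply defaults to zero, which recovers a plain linear ESO.

```python
def eso_step(z, y, u, T, beta, b0=1.0, c=(0.0, 0.0, 0.0)):
    """One forward-Euler update of a third-order linear ESO (assumed form).

    z    : current estimates (angle, angular velocity, lumped disturbance)
    y    : measured angle
    u    : applied control input
    T    : sampling period
    beta : observer gains (beta1, beta2, beta3)
    c    : additive decision variables; zero gives a conventional ESO
    """
    z1, z2, z3 = z
    e1 = y - z1  # the output estimation error drives all three channels
    z1_next = z1 + T * (z2 + beta[0] * e1) + c[0]
    z2_next = z2 + T * (z3 + b0 * u + beta[1] * e1) + c[1]
    z3_next = z3 + T * (beta[2] * e1) + c[2]
    return (z1_next, z2_next, z3_next)


def bandwidth_gains(omega):
    """Place all continuous-time observer poles at -omega (a common tuning rule)."""
    return (3.0 * omega, 3.0 * omega ** 2, omega ** 3)


def demo_constant_disturbance(d=2.0, T=0.001, steps=2000):
    """Estimate a constant disturbance d acting on a double integrator."""
    x1, x2 = 0.1, 0.0      # true plant states
    z = (0.0, 0.0, 0.0)    # observer starts from zero
    beta = bandwidth_gains(20.0)
    for _ in range(steps):
        u = 0.0
        z = eso_step(z, x1, u, T, beta)
        x1, x2 = x1 + T * x2, x2 + T * (d + u)  # plant update (Euler)
    return z


if __name__ == "__main__":
    print(demo_constant_disturbance())
```

With `c` fixed at zero, the only tuning handle is the observer bandwidth, so faster convergence comes with larger initial peaking. An additive term chosen by optimization gives a second handle for shaping the estimation error without raising the gains.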

From (13), we have

From (12), we have

From (18), we have

From (20), we have

Let

Denote

The dynamics of can be obtained as

where

Then, it yields

Denote

Thus, there exists

To mitigate abrupt changes in observed values induced by overshoot during observation error convergence, this study imposes constraints on the observation error to enforce smooth convergence characteristics. This restriction ensures gradual variations in both state and disturbance estimates, thereby enhancing the closed-loop system performance through stabilized estimation dynamics. The constraints on () are given as

where are given positive constants. Then, it is easy to obtain

where and are obtained from constraint (23).

Remark 2.

Constraint (24) is designed to bound the disturbance estimation error, which effectively mitigates the occurrence of high-gain phenomena in the RH-ESO and reduces the overshoot in disturbance estimation. By incorporating this constraint, the disturbance estimation error can achieve smooth convergence characteristics, thereby facilitating the subsequent derivation of control inputs with enhanced stability and reduced transient fluctuations. This systematic constraint implementation ensures coordinated performance improvements throughout the closed-loop control system.

2.3. The Tracking Differentiator

In this subsection, the tracking differentiator (TD) is used to handle the transient process of the system state. Denote as the given input, as the output tracking of , and as the differential signal of . In this paper, is the deflection angle to be tracked. The TD is designed as

where

with and as given constants.
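For readers unfamiliar with tracking differentiators, the following sketch implements a discrete-time TD built on the time-optimal synthesis function commonly denoted fhan in the ADRC literature. Whether the TD of this subsection uses exactly this form is an assumption; the names `fhan`, `td_step`, and `track_constant`, the speed factor `r`, and the step size `h` are illustrative.

```python
import math


def fhan(x1, x2, r, h):
    """Discrete time-optimal synthesis function (standard form, assumed here)."""
    d = r * h
    d0 = h * d
    y = x1 + h * x2
    a0 = math.sqrt(d * d + 8.0 * r * abs(y))
    if abs(y) > d0:
        a = x2 + 0.5 * (a0 - d) * math.copysign(1.0, y)
    else:
        a = x2 + y / h
    if abs(a) > d:
        return -r * math.copysign(1.0, a)  # saturated (bang-bang) region
    return -r * a / d                      # linear region near the target


def td_step(v1, v2, v, r, h):
    """One TD update: v1 tracks the reference v, v2 approximates its derivative."""
    v1_next = v1 + h * v2
    v2_next = v2 + h * fhan(v1 - v, v2, r, h)
    return v1_next, v2_next


def track_constant(v=1.0, r=5.0, h=0.01, steps=1000):
    """Run the TD from rest against a constant reference v."""
    v1, v2 = 0.0, 0.0
    for _ in range(steps):
        v1, v2 = td_step(v1, v2, v, r, h)
    return v1, v2


if __name__ == "__main__":
    print(track_constant())
```

A larger `r` makes the transient faster but produces a steeper derivative signal, which is the trade-off the TD is meant to arrange when a step-like reference is applied.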

2.4. The MPC-Enabled Disturbance-Rejection Controller

To design the controller, the state tracking errors are denoted as and . Then, the control input is designed as

where and are feedback gains to be designed; is the decision variable of the MPC scheme to be obtained subsequently.

According to , , and (25), there exists

Then, it is obtained that

where .

Denote . Then, it follows that

where

Then, is constrained by , where

with and being given positive constants. Then, the constraint on is obtained as

with . Denote and . Then, we have

where

The nominal system of is obtained as

where with

Accordingly, the constraints on and are obtained as

respectively, where

2.5. The MPC Scheme

In this paper, the MPC scheme is proposed to deal with the state and input constraints of the pneumatic manipulator as well as generate a part of the control input signal. To establish the MPC scheme, a cost function is designed as

where is the predictive state with ; is the predictive decision variable; is the predictive state sequence; is the predictive decision variable sequence with ; Q and R denote the positive definite weighting matrices; represents the terminal penalty matrix requiring design. The predictive horizon is denoted by .
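The finite-horizon cost described above can be illustrated with a short sketch that sums quadratic stage costs over the prediction horizon and adds a terminal penalty. The exact cost used in Prob 1 is not reproduced here; the quadratic form below, the helper names `quad_form` and `mpc_cost`, and the list-based matrix representation are assumptions chosen to keep the example free of external dependencies.

```python
def quad_form(x, M):
    """Return x^T M x for a vector x (list) and a square matrix M (list of rows)."""
    n = len(x)
    return sum(x[r] * M[r][c] * x[c] for r in range(n) for c in range(n))


def mpc_cost(states, decisions, Q, R, P):
    """Finite-horizon quadratic cost (assumed structure).

    states    : predicted states, length N + 1 (the last one is the terminal state)
    decisions : predicted decision variables, length N
    Q, R      : stage weighting matrices
    P         : terminal penalty matrix
    """
    if len(states) != len(decisions) + 1:
        raise ValueError("expected N + 1 predicted states for N decisions")
    stage = sum(
        quad_form(x, Q) + quad_form(c, R)
        for x, c in zip(states[:-1], decisions)
    )
    return stage + quad_form(states[-1], P)


if __name__ == "__main__":
    Q = [[1.0, 0.0], [0.0, 1.0]]
    R = [[0.5]]
    P = [[2.0, 0.0], [0.0, 2.0]]
    states = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]
    decisions = [[1.0], [2.0]]
    print(mpc_cost(states, decisions, Q, R, P))
```

In a receding-horizon scheme only the first element of the minimizing decision sequence is applied, and the cost is re-evaluated from the new state at the next sampling instant.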

Next, the optimization problem of the MPC scheme can be formulated as

Prob 1:

subject to

where

Remark 3.

The optimality of the RH-ESO proposed in this paper is fundamentally different from that in []. In [], the system under consideration includes a slowly time-varying disturbance in the state equations and Gaussian white noise in the output equations. It should be noted that the optimality in [] is defined in terms of a minimum-variance performance index for estimating the states and and does not address an optimal estimate of the disturbance . By contrast, the focus of this paper is on the rolling optimization of the disturbance estimation error to obtain the optimal estimate of the slowly varying disturbance at the current instant. This “optimal” estimate is understood as the one that minimizes the system control performance index—that is, the quadratic tracking error of the system state.

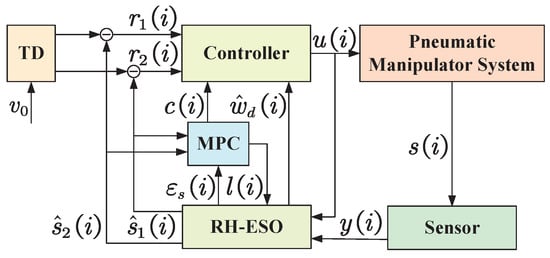

The structure diagram of the pneumatic manipulator system is presented in Figure 1.

Figure 1.

Structure diagram of the pneumatic manipulator system.

Definition 1

([]). System (3) is said to be uniformly bounded if, for all , there exists a constant such that

holds for all and .

Following the above discussion, this paper aims to achieve the following two objectives: (1) establish sufficient conditions to ensure that the proposed MPC scheme is recursively feasible, and (2) establish easily verifiable sufficient conditions to ensure that system (3) under control input (25) is uniformly bounded.

3. Results

This section establishes the recursive feasibility of Prob 1 and provides rigorous Lyapunov-based stability guarantees for the closed-loop system.

Firstly, the following lemma is given to prove the feasibility of Prob 1.

Lemma 1.

If Prob 1 is feasible at time instant t, then there exists at least one feasible solution for Prob 1 at time instant t + 1.

Proof.

Denote candidate decision variable sequences and as

where is a candidate decision variable for . can be obtained from for . Then, we have

By considering the predictive dynamics of based on , it follows that the corresponding state predictions can be derived from the system’s predictive dynamics:

where is the feasible state for .

Denote as the feasible predictive state sequence.
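Candidate sequences in recursive-feasibility arguments are typically built by shifting the previous optimal sequence one step forward and appending a terminal element. The sketch below shows this construction under that assumption; the function name `shifted_candidate` and the choice of appended value are hypothetical and may differ from the candidate defined in this proof.

```python
def shifted_candidate(prev_optimal, terminal_value):
    """Build a candidate sequence for the next time instant.

    The first element of the previous optimal sequence has already been
    applied, so it is dropped; the remaining elements are reused and one
    terminal element is appended to restore the horizon length.
    """
    if not prev_optimal:
        raise ValueError("previous optimal sequence must be non-empty")
    return list(prev_optimal[1:]) + [terminal_value]


if __name__ == "__main__":
    previous = [0.4, 0.3, 0.1]
    # Appending zero is an illustrative choice, not the paper's terminal rule.
    print(shifted_candidate(previous, 0.0))
```

Because every reused element was already feasible at the previous instant, only the appended element and the resulting terminal state need a separate feasibility check.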

To ensure that Prob 1 has at least one solution at the subsequent time step t + 1, the following conditions must be satisfied:

Conditions (33)–(35) are verified in turn, beginning with condition (33). Since Prob 1 has a feasible solution at time instant t, constraint (27) is satisfied, which in turn implies

for . Based on (32) and (36), we have , which yields

for . Thus, condition (33) holds true for all s within the interval .

Next, the condition (34) is verified for the time instant . By utilizing the sequences and , we have and . Based on (28) and (32), it follows that

which indicates

for . For , there exist and . Based on (29) and (32), and , it is easy to obtain

which means

Thus, condition (34) holds true for all s within the interval .

Finally, the satisfaction of condition (35) is established. Thus, . From (33), it follows that belongs to the set . Additionally, based on (29), it can be readily shown that also lies within .

Therefore, since both membership conditions are satisfied, we conclude that holds true, thereby ensuring the validity of condition (35). □

Theorem 1.

If there exist appropriate parameters , , , , , and a positive definite matrix P such that the linear matrix inequality (LMI)

holds, then is uniformly bounded under the control input (25) with and . Moreover, the state converges to the set with

Proof.

Considering and , it can be obtained that and . Subsequently, the proof of uniform boundedness of and is given first.

Denote the optimal cost of Prob 1 at time instant i as

Then, a Lyapunov candidate function is denoted as . Consequently, there exists

Since the optimal solution of Prob 1 is obtained at time instant i, Prob 1 has at least one feasible solution at time instant i + 1 by Lemma 1. Therefore, the feasible cost of Prob 1 at time instant i + 1 can be denoted as

Denote

Split , where

For , there exists for . Therefore, it is obtained that

where

For , there exists . Then, it follows that

where . If there exist appropriate parameters , , , , , and a positive definite matrix P such that the LMI

holds, then . In this case, it follows that

For , it yields .

Combining , , and , we have

By optimality, the cost of any feasible solution is no smaller than the optimal cost, which indicates . Then, there exists

Letting , it follows that

It indicates that will converge to the set under as with

Next, the proof that is uniformly bounded is given. It is easy to get , where

Then, as , there exists

with , which suggests that will converge to the set

as . That is, the tracking error is uniformly bounded under the control input (25). This completes the proof. □

Remark 4.

The main advantages of the proposed methodology are twofold. (1) A novel RH-ESO that enhances disturbance estimation accuracy is proposed. By reformulating the disturbance estimation error as prediction states and recursively solving a constrained optimization problem at each sampling instant, this structure guarantees optimal observer gain selection with proven convergence properties. (2) An MPC-enabled disturbance-rejection controller with disturbance compensation is proposed, which enables the closed-loop system to simultaneously achieve the prescribed optimal control performance and maintain active disturbance-rejection capability.

4. Numerical Example

4.1. Time-Invariant Reference Input Signal Tracking

In this subsection, a numerical example of a pneumatic manipulator system is employed to validate the method and demonstrate its effectiveness. The simulation specifies an initial deflection angle of and a target deflection angle of ; i.e., . In this part, system (1) is used to demonstrate the effectiveness of the proposed method.

Parameters of the pneumatic manipulator system used in this subsection are presented in Table 1.

Table 1.

Parameters used in the simulation.

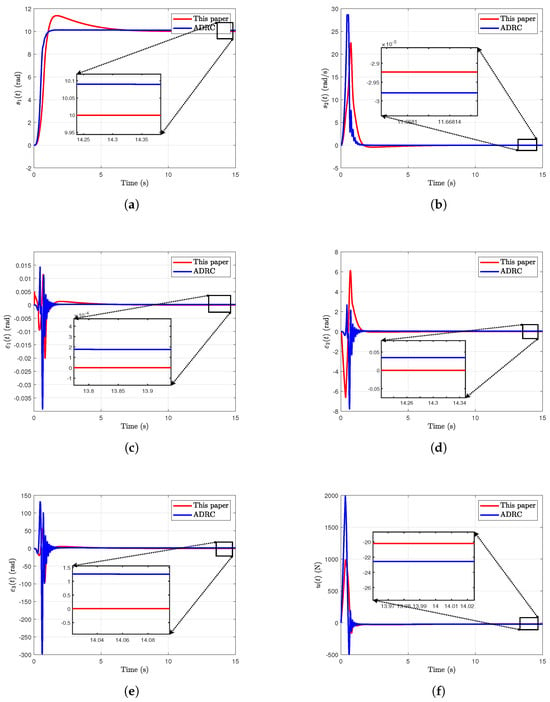

Figure 2 compares the simulation results of the pneumatic manipulator system under the proposed MPC-enabled disturbance-rejection controller and under the ADRC.

Figure 2.

Simulation results for reference signal of the pneumatic manipulator system. (a) The state under the proposed method and the ADRC. (b) The state under the proposed method and the ADRC. (c) The estimation error under the method in this paper and the ADRC. (d) The estimation error under the method in this paper and the ADRC. (e) The estimation error under the method in this paper and the ADRC. (f) The control input under the method in this paper and the ADRC.

Figure 2a plots the deflection angle of the pneumatic manipulator system under the proposed MPC-enabled disturbance-rejection controller and the ADRC, respectively. From Figure 2a, it is evident that the proposed method can make the deflection angle closer to the set . Figure 2b plots the angular velocity of the pneumatic manipulator system.

Figure 2c–e plot the estimation errors (), respectively. The estimation errors under the proposed method have smaller steady-state magnitudes than those under the traditional ADRC approach. Figure 2f plots the control input of the pneumatic manipulator system. The proposed method avoids excessive control signal magnitudes, which reduces the risk of actuator saturation and improves operational stability. Taken together, Figure 2a–f verify the effectiveness of the proposed method.

4.2. Time-Varying Reference Input Signal Tracking

In this subsection, the simulation specifies an initial deflection angle of and a time-varying target deflection angle of . Furthermore, two simulation comparison groups are considered: (i) the back-stepping control (BSC) method described in [], and (ii) a classical proportional–derivative (PD) controller. Note that system (37) is used in this subsection to demonstrate the effectiveness of the proposed method.

where is an external disturbance term and is a model uncertainty term.

The parameters of the pneumatic manipulator system and the MPC scheme are the same as in Table 1. For the given reference input signal , the parameters of the RH-ESO, ADRC, PD, and BSC are presented in Table 2, and the results are given in Figure 3.

Table 2.

Reference signal of .

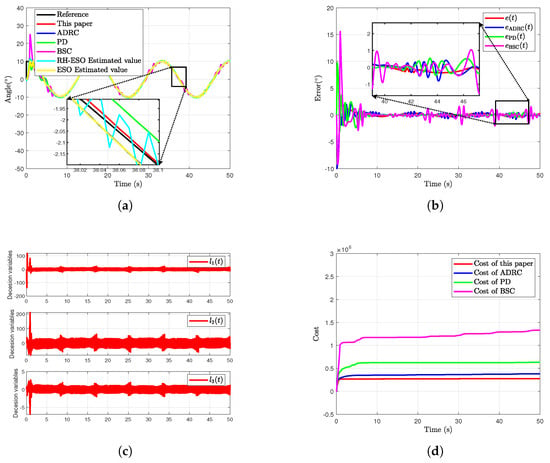

Figure 3.

Simulation results for reference signal of the pneumatic manipulator system using the parameters given in Table 2. (a) Deflection angle of the pneumatic manipulator system. (b) Deflection angle tracking error of the pneumatic manipulator system. (c) Decision variables of the RH-ESO. (d) Costs of the pneumatic manipulator system under these methods.

Figure 3a plots the deflection angle of the pneumatic manipulator system (37). Figure 3b plots the deflection angle tracking error of the pneumatic manipulator system (37). Figure 3c plots the decision variables () of the RH-ESO (9)–(11). Figure 3d plots the total costs throughout the entire simulation time of the pneumatic manipulator system (37) under the controller of this paper, ADRC, PD, and BSC, respectively. Note that the cost throughout the entire simulation time is calculated by the following formula:

where , , and .

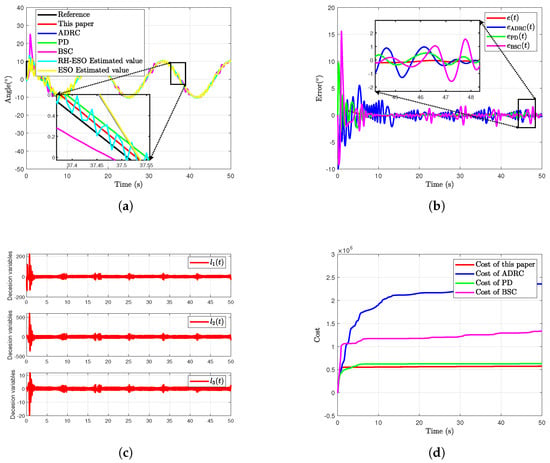

Under the same input signal, i.e., , the controller gain of the RH-ESO is increased from 70 to 130. The corresponding simulation results are shown in Figure 4.

Figure 4.

Simulation results for reference signal of the pneumatic manipulator system using the parameters given in Table 3. (a) Deflection angle of the pneumatic manipulator system. (b) Deflection angle tracking error of the pneumatic manipulator system. (c) Decision variables of the RH-ESO. (d) Costs of the pneumatic manipulator system under these methods.

Table 3.

Reference signals of .

From Figure 3a and Figure 4a, it can be clearly seen that the method in [] exhibits considerable overshoot, accompanied by minor residual oscillations, in the early stage of state convergence. The reason is that its control input fluctuates greatly. This behavior is primarily attributable to the controller structure adopted in [], which is given as

In this controller, an acceleration-level variable and a velocity-level variable are employed. On the one hand, is driven only by the reference signal, yet it exhibits large variations during the initial stage of the tracking differentiator, which seeks to follow the reference rapidly. On the other hand, the rate of change of in the control law is much higher than that of . When is large, oscillates frequently with considerable amplitude. These rapid oscillations further induce high-frequency large-magnitude oscillations in , leading to overshoot and oscillations in the state at the outset. As gradually converges, its amplitude diminishes, the amplitude of likewise decreases, and the oscillations in become weaker and smaller. Consequently, during the subsequent tracking process, no longer exhibits frequent oscillations or large excursions.

From Figure 3a and Figure 4a, it can be clearly seen that the estimated value is oscillating during the simulation. The oscillations in the angle estimate are directly caused by the decision vector . In the receding-horizon optimization problem proposed here, the variable to be optimized is defined as . Because the receding-horizon strategy minimizes a cost that explicitly anticipates the evolution of states and decision variables over a future horizon, solving the optimization at time step i immediately yields the optimal decision vector . At the next instant, however, the optimal vector is obtained by re-solving the problem with the updated initial state and therefore bears no explicit relation to . Consequently, the value of is determined solely by the optimization outcome. The oscillatory behavior of thus corroborates the role of the receding-horizon mechanism: at each sampling instant, it predicts the state trajectory over the horizon from the current initial state and returns the decision vector that is optimal for that instant.

From Figure 3 and Figure 4, it can be seen that the proposed method achieves smoother deflection angle tracking and the lowest overall control cost. Moreover, a comparison of the simulation results shows that the proposed method is insensitive to the controller gain , whereas the traditional ADRC method is more sensitive to this gain. This further demonstrates the effectiveness of the proposed method.

4.3. Analysis of Estimation Error Under Different Observer Initial Values

In this subsection, we investigate the evolution of the estimation error when the initial states of the RH-ESO differ from those of the system. Table 4 lists three initial states of the RH-ESO together with the common initial value of the system.

Table 4.

Initial states of the system and the RH-ESO.

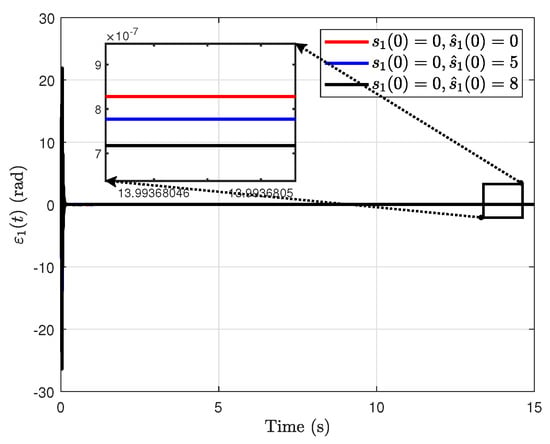

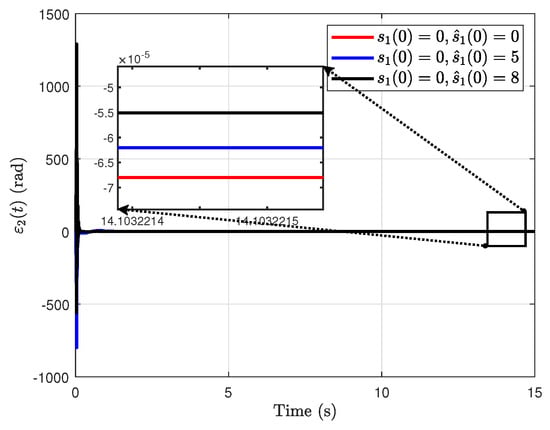

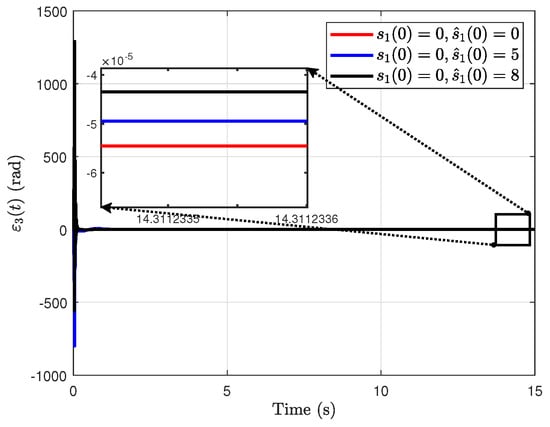

Firstly, the case in which the reference signal is is shown. The parameters of the proposed RH-ESO used in this case are those in Table 1. The corresponding results are given in Figure 5, Figure 6 and Figure 7.

Figure 5.

The estimation error under different initial values of the proposed RH-ESO.

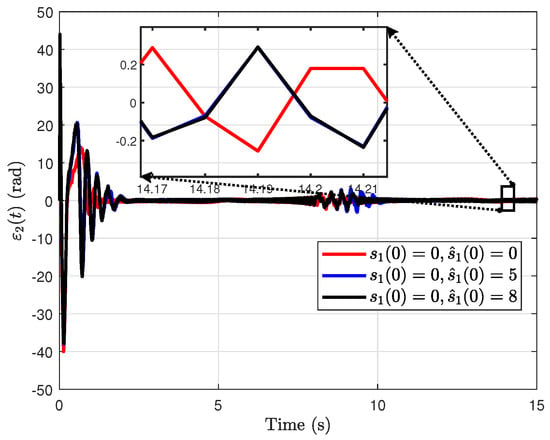

Figure 6.

The estimation error under different initial values of the proposed RH-ESO.

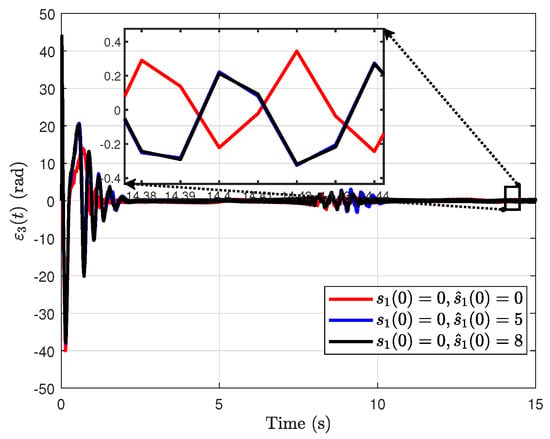

Figure 7.

The estimation error under different initial values of the proposed RH-ESO.

From Figure 6 and Figure 7, it is easy to observe that the estimation errors and take large values. According to (12)–(14), when the same error signal is used, the magnitudes of the terms and greatly exceed that of . This results in relatively large values of and during the initial phase of the simulation. In addition, Figure 5, Figure 6 and Figure 7 show that, when the reference is a constant signal, the closer the initial value of the observer is to the reference signal, the higher the final observation accuracy.

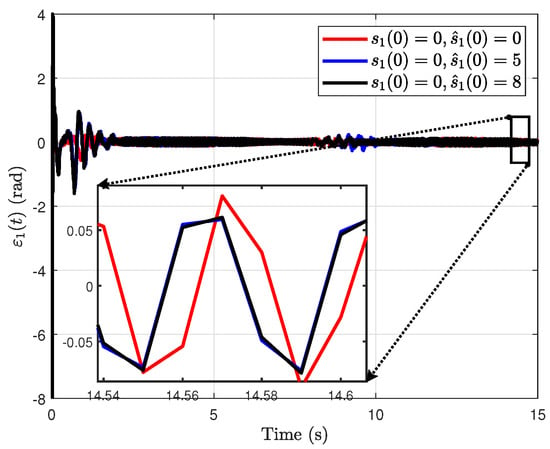

Secondly, the case in which the reference signal is is shown. The parameters of the proposed RH-ESO in this case are those in Table 2.

Figure 8.

The estimation error under different initial values of the proposed RH-ESO.

Figure 9.

The estimation error under different initial values of the proposed RH-ESO.

Figure 10.

The estimation error under different initial values of the proposed RH-ESO.

From Figure 9 and Figure 10, we can find that and remain relatively large at the beginning of the simulation. However, they are much smaller than those in Figure 6 and Figure 7. This reduction is due to the significantly smaller RH-ESO gain used in sinusoidal tracking. Therefore, when the observation error is large in the initial stage, the observer gain can be reduced appropriately to suppress this initial peak.

Furthermore, under these settings, the estimation accuracy is lower than that achieved with a constant reference, and changing the initial state of the observer does not lead to significant differences in the final observation error. This behavior arises because the disturbance depends on the time-varying states and control inputs, and the disturbance estimate lags behind the disturbance, which in turn causes a lag in the estimation of the system state. Therefore, the tracking error cannot converge precisely to zero but instead follows a sinusoid-like curve. In general, a high observer gain effectively reduces the steady-state error; however, it also causes excessive overshoot at the beginning of the simulation. Therefore, the observer gain should be selected according to the specific control requirements. In this simulation, the angle tracking error ultimately stays within ±0.05°, and the observation error during the initial phase is small and thus acceptable. Accordingly, the selected RH-ESO parameters are deemed appropriate.

5. Conclusions

This paper has proposed an MPC-enabled disturbance-rejection control approach for a pneumatic manipulator system subjected to external disturbances. An RH-ESO incorporating a decision variable has been designed to deal with the disturbances that model the complex nonlinear terms within the system. The optimal disturbance estimation error has been determined through a receding-horizon optimization procedure to obtain the best estimate of the disturbance. Using this optimal estimate, the MPC-enabled disturbance-rejection controller has been developed to enable precise angular trajectory tracking in the pneumatic manipulator system. Moreover, the recursive feasibility of Prob 1 and the uniform boundedness of the closed-loop system have been established through rigorous proofs. Finally, the simulation results demonstrate the effectiveness of the proposed approach.

Author Contributions

Conceptualization, Y.X.; methodology, X.H.; software, Y.X.; validation, X.H., D.Z. and L.W.; formal analysis, P.L.; investigation, Y.X.; resources, X.H.; data curation, D.Z.; writing—original draft preparation, Y.X.; writing—review and editing, D.Z.; supervision, D.Z.; project administration, L.W.; funding acquisition, Y.X. and X.H. All authors have read and agreed to the published version of the manuscript.

Funding

The authors would like to thank the anonymous reviewers for their detailed comments, which helped to improve the quality of the paper. This work was supported in part by the National Natural Science Foundation of China (Grant Nos. 62303061 and 62403353) and the National Key Laboratory of Science and Technology on Space-Born Intelligent Information Processing (Grant No. TJ-02-22-03).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used in this paper are provided in Section 4.

Conflicts of Interest

The authors Dongjie Zhu and Liangchao Wu were employed by the company Xingyu Electronics (Ningbo) Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| MPC | Model predictive control |
| RH-ESO | Receding-horizon-based extended state observer |
| SMC | Sliding mode control |
| ADRC | Active disturbance-rejection control |
| PAMs | Pneumatic artificial muscles |
| LMIs | Linear matrix inequalities |
| TD | Tracking differentiator |

| Nomenclature | |

| The real number set | |

| The non-negative integer set | |

| The set of positive integers | |

| The dimension of A is | |

| Matrix A is positive definite (or negative definite) | |

| The maximum eigenvalue of A | |

| I | The unit matrix with appropriate dimensions |

| The s step ahead prediction of state conditioned on measurements | |

| available at time instant i |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).