Abstract

The Shantou Xiaogongyuan Historic District is a significant cultural symbol of the “Century-Old Commercial Port,” embodying the historical memory of Chaoshan diaspora culture and modern trade. However, amid rapid urbanization, the area faces the degradation of architectural façade styles, the erosion of historical features, and inefficient traditional restoration methods, which often yield renovated façades that exhibit “form resemblance but spirit divergence.” To address these issues, this study proposes a method that integrates computer vision and generative design for historical building façade renewal. Focusing on the arcade buildings in the Xiaogongyuan District, an intelligent façade generation system was developed based on the pix2pix model, a type of Conditional Generative Adversarial Network (CGAN). A dataset of 200 annotated samples was constructed from field-collected façade images, comprising Functional Semantic Labeling (FSL) diagrams and Building Elevation (BE) diagrams. After 800 training epochs, the model converged stably, and the generated schemes reached compliance rates of 80% in style consistency, 60% in structural integrity, and 70% in authenticity. Additionally, a WeChat mini-program was developed that generates façade drawings in an average of 3 s, significantly improving design efficiency. The generated elevations can be imported directly into third-party modeling software for quick 3D visualization. In a practical application at the intersection of Shangping Road and Zhiping Road, the system generated design alternatives that balanced historical authenticity and modern functionality within hours, compared with the weeks required by traditional methods. This research establishes a reusable technical framework that quantifies traditional craftsmanship through artificial intelligence, offering a viable pathway for the cultural revitalization of the Xiaogongyuan District and a replicable, systematic approach for AI-assisted renewal of historic urban areas.

1. Introduction

1.1. Research Background

The Xiaogongyuan Arcade Complex in Shantou exemplifies modern East–West architectural fusion. Emerging after Shantou’s opening in 1860 and maturing by the 1930s as a commercial hub around the Sun Yat-sen Memorial Pavilion, it integrates Western structural and Nanyang (Southeast Asian) decorative elements within a Chaoshan spatial layout. This synthesis reflects the port city’s commercial culture and the artistic achievements of its overseas Chinese community. The arcades carry forward the exquisite decorative traditions of Chaoshan dwellings [1] and embody the historical memory of Shantou’s “Century-Old Commercial Port.”

After the Special Economic Zone was established, Shantou gradually expanded eastward, and the old district lost its central status and suffered a dual decline in its physical and social structures [2]. Rapid urbanization has introduced uniform modern styles and a commercialized simplification of decorative elements, producing homogenized arcade facades that are stripped of their original character. Concurrently, traditional building functions have become obsolete, while conventional restoration methods prove inefficient and risk damaging authentic features.

Artificial intelligence offers an efficient solution. For Xiaogongyuan’s numerous and diverse buildings, deep learning can rapidly generate designs that maintain historical character while incorporating modern functions, significantly improving renovation efficiency. Compared to traditional methods, AI technology can learn and understand complex relationships from historical building data, providing more intelligent and scientific support for design decisions [3], with the potential to address core issues such as homogenization and inefficiency.

1.2. Literature Review

As a historical relic of Shantou, the Xiaogongyuan arcades not only carry the city’s historical and cultural values but are also important for studying modern urban transformation through their spatial layout and road planning [4]. However, the district currently faces severe challenges, with widespread deterioration and numerous arcades requiring restoration [5]. Traditional design methods struggle to ensure design consistency and efficiency and fail to meet the demands of large-scale conservation and renewal.

At the level of historical conservation and contextual research, academia has extensively explored the formative causes and conservation strategies of arcade architecture. The diverse facades of the arcades in the Xiaogongyuan Historic District were shaped by multiple factors, including local climate and construction techniques [6]. In terms of conservation strategies, early government efforts focused on the restoration of individual structures and structural reinforcement [7], whereas current approaches emphasize adaptive reuse [8] and holistic conservation [9]. Scholars advocate that conservation work should integrate micro-regeneration [10], functional adaptation, and modern commercial-cultural tourism, aiming to balance cultural heritage with sustainable development while preserving historical context and collective memory. However, these conservation concepts still heavily rely on the experiential judgment of designers and lack efficient, quantifiable technical support, making it difficult to conduct rapid and scientific simulation and evaluation on a large scale, such as across the extensive Xiaogongyuan area.

In recent years, the emergence of artificial intelligence technologies has provided new perspectives and tools for this design dilemma. The academic community has begun exploring the application of AI technologies in the field of architectural heritage, driving the transformation of digital architectural research. For instance, technologies such as 3D scanning can efficiently complete the digital archiving of buildings, providing a high-precision data foundation for subsequent analysis [11]. Digitized architectural information can be leveraged by AI technologies to automatically detect structural pathologies [12] and systematically describe and analyze the cultural significance and conservation value of built heritage [13]. Furthermore, through the application of deep learning techniques, in-depth characteristic interpretation of traditional Chinese dwellings can be achieved, enabling more precise identification, description, and interpretation of these cultural attributes [14].

Deep learning is an important branch of machine learning, and since its breakthrough in 2012, it has achieved remarkable results in fields such as image recognition and natural language processing [15], with widespread applications in various aspects of digital architectural design. Among diverse deep learning models, Generative Adversarial Networks (GANs) initially gained attention for their exceptional image generation capabilities and efficiency [16], and are increasingly being utilized in architectural heritage conservation [17,18,19,20,21]. The framework employs artificial neural networks [22], primarily composed of a generator and a discriminator, which engage in mutual adversarial training and iterative optimization to ultimately produce highly realistic synthetic data [23]. Furthermore, various improved models derived from GANs, such as CycleGAN [24,25], CAIN-GAN [26], and pix2pix [27,28], have demonstrated powerful image generation and style transfer capabilities in the architectural domain, further expanding the application prospects of artificial intelligence in architectural design, conservation, and innovation.

The Conditional Generative Adversarial Network (CGAN) was proposed to control generation precisely by conditioning the network on auxiliary information. This characteristic makes it particularly suitable for the conservation and renewal of historic districts: CGAN accomplishes class-conditional image generation [29] and can generate building facades based on input conditions such as the decorative styles of a historic district [30], while effectively learning and summarizing the architectural characteristics of specific cities to achieve contextual continuity and stylistic control [31]. By modifying the input dataset, CGAN can also generate floor plan designs [32]. CGANs thus demonstrate significant potential for the digital restoration of architectural heritage. However, when confronted with the highly diverse stylistic decorations and complex components of historic buildings, the adaptability and refinement of current techniques require further development. This gap constitutes a main direction, and an area of untapped potential, for future research.

1.3. Problem Statement and Objectives

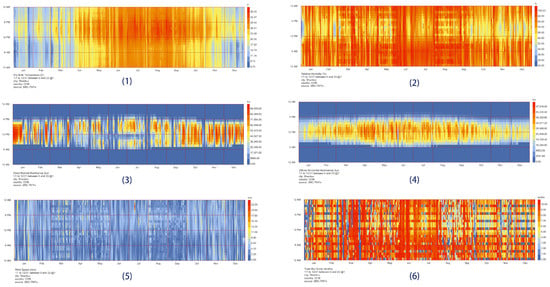

As integral urban cultural heritage, historic districts preserve architectural features and historical narratives. However, urbanization threatens their distinctive character. In Shantou, located in the subtropical monsoon zone, climatic conditions like high temperatures, humidity, and frequent typhoons accelerate the weathering of arcade facades. The salt-rich coastal atmosphere further accelerates the deterioration of building materials [33]. Figure 1 shows the climatic data of the Shantou region. Against this backdrop, the facade features of Xiaogongyuan, as the study subject, are confronting a degradation crisis, manifesting as stylistic alienation and damage across six major facade elements. The replacement of original materials (such as glazed tiles and washed river sand) with modern alternatives has led to a loss of authentic texture; interrupted colonnade continuity and simplified window lintels reveal the dual challenges of fading craftsmanship and commercial compromise; and mechanical replication of decorative patterns and fading painted colors reflect the discontinuity of traditional techniques. These issues collectively constitute the “form resemblance but spirit divergence” crisis in Xiaogongyuan’s facades. Figure 2 below illustrates these specifics.

Figure 1.

Climatic Data of Shantou Region. (1) Dry bulb temperature; (2) Relative humidity; (3) Direct normal illuminance; (4) Diffuse horizontal illuminance; (5) Wind speed; (6) Total sky cover.

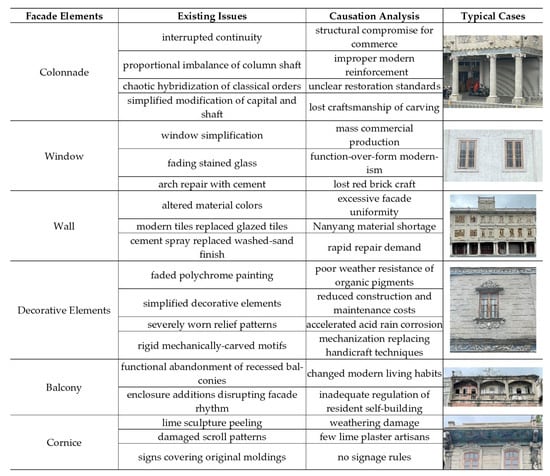

Figure 2.

Xiaogongyuan Arcade Facade Issue Analysis.

The historic district of Xiaogongyuan in Shantou exhibits widespread degradation of its arcade facades, compromising the authenticity and integrity of its historic appearance. Traditional restoration methods, which rely on manual surveying and subjective judgment, are not only inefficient but also prone to stylistic inconsistencies due to varying designer interpretations. In response, this study explores the application of deep learning and artificial intelligence technologies—specifically, a method based on Conditional Generative Adversarial Networks (CGANs)—to the facade renovation of Xiaogongyuan. By training on facade image datasets, the proposed system automatically generates architectural styles and performs style transfer, enabling rapid and visually consistent facade enhancement. This intelligent approach provides technical support for the preservation and innovative renewal of historic architecture in urban contexts. The specific research objectives are as follows:

(1) The ultimate goal of this study is to develop a deep learning-based auxiliary tool for architectural facade renovation. Integrating image recognition and style transfer, the tool will automatically generate diverse design options based on input conditions (e.g., architectural style, materials). It features a visual interface for real-time previews, allowing designers to quickly obtain tailored solutions, thereby reducing costs and enhancing efficiency through intelligent support.

(2) The model will also support the renovation of buildings in Xiaogongyuan by generating facade plans that align with its cultural, environmental, and renewal needs. Trained on collected facade images and data, it automatically learns features such as style, color, and materials to provide design solutions that balance historical continuity with contemporary adaptability.

(3) This research will optimize facade designs based on multiple needs such as residential living, tourism development, etc., improve the overall appearance of the district, promote the revitalization of historical and cultural districts, and achieve sustainable development of Shantou’s urban image.

This study possesses significant theoretical and practical value. Theoretically, it merges AI with historic preservation, advancing deep learning applications in heritage digitization and providing new methodologies for style renewal through a CGAN-based facade model, thereby enriching specialized image generation scenarios and establishing a theory for digital stylistic inheritance. Practically, it tackles the style homogenization and character loss in Xiaogongyuan by employing an intelligent design method that utilizes a facade database and deep learning model to rapidly generate design proposals, which shortens design cycles, cuts costs, and ensures the retention of regional features in color, material, and style.

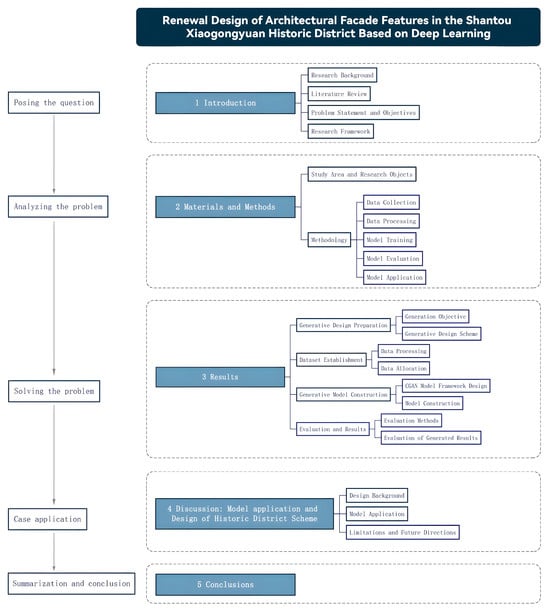

1.4. Research Framework

The research framework of this paper is shown in the figure below (Figure 3).

Figure 3.

Research Framework Diagram.

2. Materials and Methods

2.1. Study Area and Research Objects

This study takes the Xiaogongyuan Historic District in Shantou as its core research area. The name is also commonly used for Shantou’s old town as a whole, at whose center the district lies. The district radiates outward from the Sun Yat-sen Memorial Pavilion and covers 12 major streets, including Guoping Road and Anping Road. As one of China’s largest and best-preserved modern arcade districts, it showcases the architectural art of the early period after Shantou’s opening as a port, with facades that blend multiple characteristics, including the Nanyang style and traditional Chaoshan decorative craftsmanship. The area was selected not only for its well-preserved continuous arcade facades but also because those facades are undergoing systematic degradation, from material replacement to the homogenization of decorative features. Combining cultural value with urgent restoration needs, it provides a typical sample for exploring the use of artificial intelligence in assisting historical building renewal.

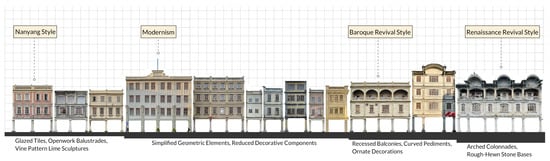

The Shantou arcade architectural complex is a typical representative of the fusion of modern Chinese and Western architectural cultures. Its architectural form and spatial organization exhibit unique composite characteristics. In terms of architectural style, the arcades incorporate Western architectural elements while adapting to local climatic conditions, resulting in a distinctive arcade typology. From a spatial perspective, the arcades demonstrate high commercial adaptability. Standardized bay and depth modules, combined with floor height design, create a vertical functional division of “shop below, residence above.” The continuous covered corridors effectively address Shantou’s hot summer climate, providing a comfortable pedestrian shopping environment while reflecting the functional ingenuity of modern commercial architecture. Along Xiaogongyuan Street, the arcades typically consist of 3–4 stories, forming a uniform skyline. The bay width predominantly ranges from 4 to 5 m, with depths of 2 to 2.5 m, creating uninterrupted, all-weather arcade streets [34]. The radial layout centered around Sun Yat-sen Memorial Pavilion enhances the visibility of arcade facades along the main streets. This uniform yet rhythmic skyline has become one of the most distinctive urban landscape features of Shantou’s old town, shaping a highly characteristic spatial sequence in the historic district. The facade aesthetics of the Xiaogongyuan Historic District are illustrated in the figure below (Figure 4).

Figure 4.

Architectural Facade Style Map of Shantou Xiaogongyuan Historic District.

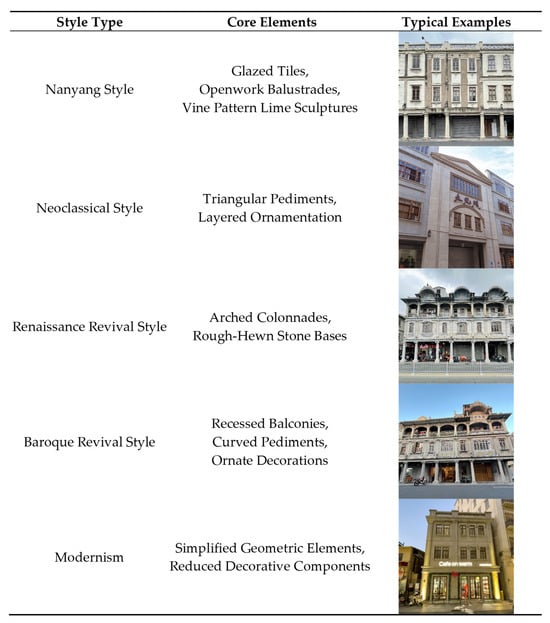

The eclectic characteristics of the Xiaogongyuan arcades serve as material witnesses to cultural collisions across different periods, concretely manifested through the coexistence of multiple architectural styles. This stylistic hybridity is not a mere patchwork but a sophisticated reorganization based on practical functionality and aesthetic expression. The ground level incorporates Nanyang-style open corridors, where continuous arcades combined with adjustable wooden louver systems form a passive climate adaptation strategy for subtropical conditions. The upper facades, meanwhile, skillfully employ simplified classical orders and decorative pediments, achieving a localized interpretation of Western architectural aesthetics through proportional adjustments. The stylistic features of the Xiaogongyuan arcade facades are detailed in the figure below (Figure 5).

Figure 5.

Summary Diagram of Arcade Facade Styles in Xiaogongyuan.

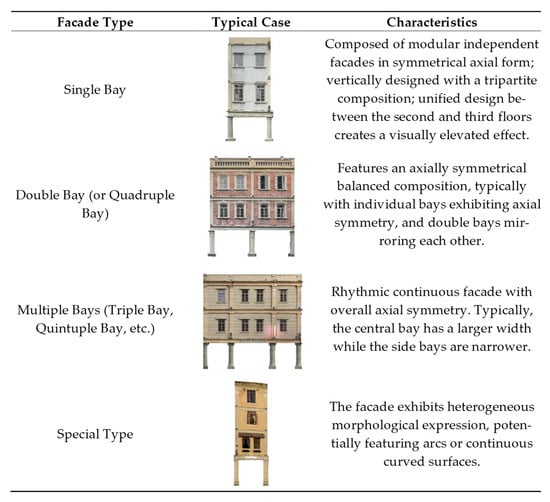

The facades of Xiaogongyuan arcades predominantly adopt a tripartite design approach, reflecting rich cultural layering. The ground level features neatly arranged simplified European classical orders as structural supports. The middle section consists of walls, windows, and decorative elements, with some arcades incorporating recessed balconies at the top. The uppermost section is composed of parapets and pediments. Common column styles include simplified Tuscan and Ionic orders, typically with wider central spans. Bay divisions are determined by the number of columns, commonly appearing as single, double, triple, or quintuple bays. The wall surfaces in the middle section skillfully integrate diverse Western classical architectural styles with traditional Chaoshan decorative components. Wall materials exhibit rich variety, accompanied by windows of varying designs. Typical layouts feature one window per bay or two windows per bay. In most cases of single- or double-window configurations, the columns corresponding to the wall sections are embedded within the walls, employing quasi-Doric or square composite designs that enhance structural stability while maintaining aesthetic harmony. The uppermost pediments and parapets combine traditional Chaoshan patterns with Rococo influences, forming a unique and regionally distinctive decorative style that highlights the defining characteristics of Shantou’s modern architecture. Specific arcade facade typologies are detailed in the figure below (Figure 6).

Figure 6.

Analysis Diagram of Arcade Facade Typology and Characteristics in Xiaogongyuan.

2.2. Methodology

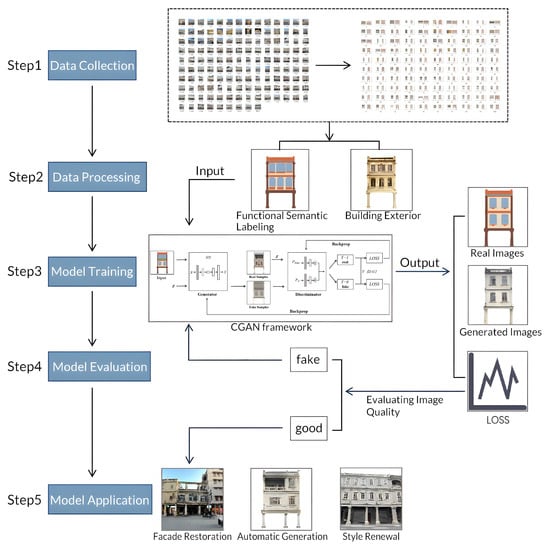

This study adopts a deep learning-based research methodology comprising five steps: data collection, data processing, model training, model evaluation, and model application. The specific methods are as follows:

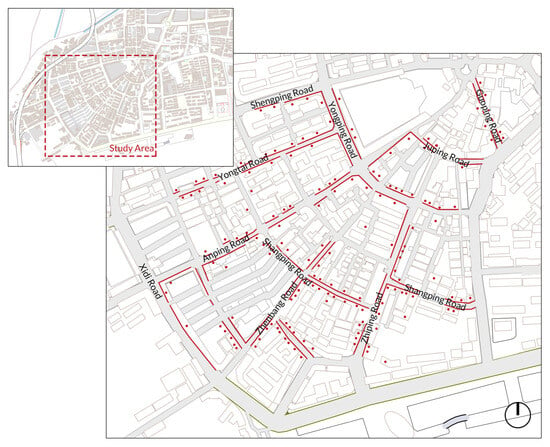

(1) Data Collection: Data collection serves as the foundation for constructing and operating deep learning models. This step aims to acquire image data of building facades in Shantou’s Xiaogongyuan Historic District. Through field investigations of the district’s architectural facade characteristics, it was observed that most arcades integrate traditional Chaoshan craftsmanship with Western architectural styles, forming a diverse composite typology. Their facades exhibit distinctive local features in colonnade forms, window and door styles, and decorative elements. For example, traditional decorative techniques such as stone carving and porcelain inlay from the Chaoshan region are reflected on some arcade facades. To ensure sample diversity and representativeness, the study selected facades along ten typical roads within the historic district (Yongping Road, Shengping Road, Anping Road, Zhiping Road, Guoping Road, Juping Road, Shangping Road, Zhenbang Road, Yongtai Road, and Xidi Road) as research objects, covering arcade buildings of different eras, styles, and functional types. The sample collection scope is illustrated in the figure below (Figure 7).

Figure 7.

Research Sample Collection Scope Map.

Among these, Guoping Road and Anping Road—serving as the core commercial axes of the Xiaogongyuan Historic District—exhibit arcades with pronounced modern tendencies. Their facades feature abundant decorative elements, though commercial signage severely obstructs the architectural character. In contrast, Yongping Road and Juping Road demonstrate relatively uniform and continuous facade designs. Most arcades here are three-story structures of masonry and reinforced concrete, showcasing a hybrid style primarily characterized by the fusion of simplified Roman-style colonnades and modern architectural influences.

The arcades along Yongtai Road feature vibrant colors and diverse forms, with some three-story structures incorporating recessed balconies. Facade decorative elements predominantly draw from botanical and animal motifs, exhibiting distinct Nanyang stylistic influences. In contrast, Xidi Road’s arcades are primarily characterized by eclecticism, with well-preserved facades showcasing clear decorative patterns. The columns feature simplified Ionic capitals and composite shafts. Beyond these dominant styles, the Xiaogongyuan arcade complex also incorporates diverse stylistic elements such as Baroque Revival and Nanyang styles, reflecting the architectural cultural fusion characteristic of modern Shantou as a treaty port, as well as the diversity and complexity of its arcade facades.

During field studies, cameras documented architectural styles, materials, and colors. The collected arcade facade images encompass typical styles while preserving historical authenticity and distinctive features. Data was digitized and categorized by building typology and style to form structured datasets for model training.

Additional data was gathered online via social media to understand public and expert perceptions of facades, informing model conditions. Literature reviews of academic papers and online resources provided theoretical foundations for applying deep learning to facade renewal design.

(2) Data Processing: The collected facade photographs are preprocessed and annotated before training. First, all images are cleaned and filtered to remove noisy and low-quality samples, ensuring that image quality meets the requirements for model training. Second, image-processing software is used for correction and enhancement, and each sample is processed into two types of image. The first type is the Functional Semantic Labeling (FSL) diagram [30], which distinguishes architectural facade elements (such as walls, windows, columns, and decorative components) and annotates each element with a different color block. For example, wall sections are marked in yellow, windows in blue, columns in brown, and decorative components in orange. This color-block annotation clearly identifies the constituent elements of the facade, giving the deep learning model clear learning targets and enabling it to learn the local facade style more accurately. The second type is the Building Elevation (BE) diagram [30], which displays the facade information of the arcade buildings, including decorative components, architectural structures, and materials. All processed image samples are standardized to a resolution of 512 × 512 pixels to ensure dimensional consistency of the model input. The processed data serve as training and validation sets for training and optimizing the deep learning model, laying the data foundation for the subsequent generation of facade renewal solutions.

(3) Model Training: After data processing, this study uses the pix2pix model to generate historical building facade images in the specific style of Xiaogongyuan. pix2pix was selected for three reasons. First, this study has paired training data, which matches the supervised learning framework of pix2pix. Second, its deterministic output is better suited than that of stochastic generative models to preserving the accuracy of key façade structures. Third, the model is technically mature, as demonstrated by its successful application in earlier architectural image translation tasks. pix2pix is an image translation model based on the Conditional Generative Adversarial Network (CGAN); it learns the mapping between FSL diagrams and real facade images through adversarial training between a generator and a discriminator. During training, the parameters of the generator and discriminator are optimized iteratively to reduce the difference between generated and real facade images, so that the generated facade retains the accuracy of the original structure while incorporating authentic and natural architectural style characteristics, thus achieving the digital generation and preservation of the historical building’s appearance.

(4) Model Evaluation: This study employs a comprehensive evaluation framework combining subjective and objective methods to systematically assess the performance of the trained pix2pix model. The subjective evaluation is conducted by a panel of 20 architecture students and supervising instructors through structured questionnaires and interviews. This process assesses both basic visual quality—including clarity, detail retention, and similarity to real images—and calculates a comprehensive Conservation Authenticity Score based on four weighted dimensions: Style Consistency (30%), evaluating adherence to characteristic decorative elements and compositional rules; Structural Integrity (25%), assessing logical correctness of load-bearing elements and structural relationships; Material Compatibility (25%), examining appropriate use and visual plausibility of traditional materials; and Weathering Pattern Retention (20%), quantifying preservation of natural aging characteristics that contribute to historical value. For objective evaluation, we employ dual approaches: monitoring the generator and discriminator loss curves to ensure training stability, and introducing the Structural Similarity Index (SSIM) to quantitatively measure the perceptual and structural fidelity between generated and reference images. The evaluation strategy is dynamically adjusted based on results, with model refinements made through methods such as augmenting training data or modifying loss functions when generated samples show significant deviations from real images in style or structure, ultimately ensuring the solutions meet both computational metrics and professional architectural standards.

(5) Model Application: This step applies the trained model to the facade renovation design of historical buildings in the Xiaogongyuan area of Shantou. Specific applications include: constructing a test set of actual cases by selecting typical damaged or stylistically mixed arcade facades in the district as inputs; generating multiple facade renovation schemes with the trained pix2pix model, covering the typical facade styles of Xiaogongyuan; importing the generated schemes into the NURBS surface modeling tool Rhino 7 (software version details are given in Appendix A) for 3D visualization and assessing their coordination with the surrounding environment; comparing the facades generated by the pix2pix model with manually designed facades in terms of historical authenticity, coordination, and time consumption, and conducting a comprehensive evaluation; and selecting the best generated scheme for further design refinement. If the schemes generated by the pix2pix model significantly outperform manually designed schemes in aspects such as timeliness, the method can be extended to other areas of architectural heritage preservation and restoration, thereby improving the efficiency and quality of professional design.
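For reference, the adversarial training described in step (3) corresponds to the standard pix2pix objective, restated below as a sketch. The notation is introduced here and does not come from the source: x denotes the input FSL diagram, y the real BE diagram, z the noise input (supplied through dropout in the original pix2pix formulation), G the generator, D the discriminator, and λ the weight of the L1 term. The source does not report the loss weights or other hyperparameters used in this study.

```latex
\mathcal{L}_{cGAN}(G, D) = \mathbb{E}_{x,y}\big[\log D(x, y)\big]
  + \mathbb{E}_{x,z}\big[\log\big(1 - D(x, G(x, z))\big)\big]

\mathcal{L}_{L1}(G) = \mathbb{E}_{x,y,z}\big[\lVert y - G(x, z) \rVert_{1}\big]

G^{*} = \arg\min_{G} \max_{D} \; \mathcal{L}_{cGAN}(G, D) + \lambda \, \mathcal{L}_{L1}(G)
```

The adversarial term pushes generated facades toward the appearance of real elevations, while the L1 term keeps each output close to its paired reference, which is consistent with the stated aim of preserving the original structure.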

The research methodology flowchart is shown in the figure below (Figure 8).

Figure 8.

Research Process Flowchart.
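The weighted scoring and the SSIM check described in step (4) can be sketched as follows. This is a minimal illustration under stated assumptions, not the study's evaluation code: the function and key names are hypothetical, ratings are assumed to lie on a 0 to 100 scale, and `global_ssim` is a simplified single-window variant of SSIM (the standard metric averages the same statistic over local windows).

```python
from statistics import fmean

# Dimension weights as stated in step (4); they sum to 1.0.
WEIGHTS = {
    "style_consistency": 0.30,
    "structural_integrity": 0.25,
    "material_compatibility": 0.25,
    "weathering_retention": 0.20,
}


def authenticity_score(ratings):
    """Weighted sum of per-dimension ratings (assumed scale: 0..100)."""
    missing = set(WEIGHTS) - set(ratings)
    if missing:
        raise ValueError(f"missing dimensions: {sorted(missing)}")
    return sum(WEIGHTS[name] * ratings[name] for name in WEIGHTS)


def panel_score(panel):
    """Mean weighted score over the ratings of all panel members."""
    return fmean(authenticity_score(r) for r in panel)


def global_ssim(img_a, img_b, dynamic_range=255.0):
    """Single-window SSIM for two flat grayscale pixel sequences.

    Simplification (assumption): the whole image is one window, so
    local structure is not weighted as in windowed SSIM.
    """
    if len(img_a) != len(img_b) or len(img_a) == 0:
        raise ValueError("images must be non-empty and the same size")
    c1 = (0.01 * dynamic_range) ** 2  # stabilizer for the luminance term
    c2 = (0.03 * dynamic_range) ** 2  # stabilizer for the contrast term
    mu_a, mu_b = fmean(img_a), fmean(img_b)
    var_a = fmean((p - mu_a) ** 2 for p in img_a)
    var_b = fmean((q - mu_b) ** 2 for q in img_b)
    cov = fmean((p - mu_a) * (q - mu_b) for p, q in zip(img_a, img_b))
    numerator = (2 * mu_a * mu_b + c1) * (2 * cov + c2)
    denominator = (mu_a ** 2 + mu_b ** 2 + c1) * (var_a + var_b + c2)
    return numerator / denominator


# Hypothetical ratings from one reviewer, for illustration only.
example = {
    "style_consistency": 80,
    "structural_integrity": 60,
    "material_compatibility": 70,
    "weathering_retention": 50,
}
print(round(authenticity_score(example), 2))
```

Keeping the weights in a single table makes it easy to audit that they sum to one and to rerun the scoring if the panel revises the relative importance of a dimension.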

3. Results

3.1. Generative Design Preparation

The generative design preparation phase involves systematically constructing the technical framework for facade generation design of Xiaogongyuan arcades. It primarily consists of three key steps: defining generation objectives, formulating generative technical solutions, and selecting hardware equipment and technologies that align with project requirements.

3.1.1. Generation Objective

The aim of this study is to develop a deep learning-based architectural facade generation design system, focusing on the facade renewal needs of the Xiaogongyuan Historic District in Shantou. By constructing a Conditional Generative Adversarial Network (CGAN) model, the system learns the style features, material expressions, and other aspects of the arcade facades, enabling it to automatically generate renewal plans that align with the historical characteristics when current architectural images are input. The generative model integrates image recognition and style transfer functions, allowing designers to preview diverse plans in real time through a visual interface. While reducing renewal costs and improving design efficiency, the system seeks a multi-objective balance of historical authenticity preservation, functional adaptability improvement, and overall streetscape optimization, providing technical support for the sustainable development of the Xiaogongyuan Historic District.

3.1.2. Generative Design Scheme

The generative design process in this study consists of the following steps. The researchers first systematically collect and process data on the arcade facades of the Xiaogongyuan Historic District in Shantou and organize them into a dataset according to the design requirements. This dataset is used to train the CGAN model. During training, users can observe the generation results in real time through a visual interface and make adjustments as needed. Once the model outputs facade designs that align with the characteristics of arcade facades, it is considered trained and can be used as the Shantou Arcade Facade Generator. At this stage, designers only need to input plan images that the model can recognize, and the trained model automatically outputs multiple arcade facade design options.

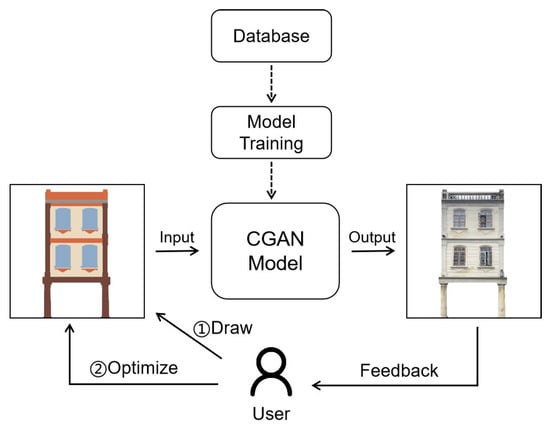

The generation process in this study follows a basic framework of “data preparation—model training—plan generation.” Given the special requirements of historical building preservation, a manual review process is added during the output stage to ensure that the generated results not only meet modern functional needs but also maintain the authenticity of the historical style. This process provides a scalable technical path for the digital renewal of historic district facades. The design generation process is shown in the figure (Figure 9).

Figure 9.

Generation Flowchart.

3.2. Dataset Establishment

3.2.1. Data Processing

The study selects the Xiaogongyuan Historic District in Shantou, Guangdong Province, as the research subject, and collects architectural facade image data through field research. During the research period, a total of 237 original facade images were obtained, of which 37 were discarded due to poor shooting angles or insufficient image resolution. Ultimately, 200 valid samples were retained. Building on this foundation, data augmentation techniques—including random horizontal flipping and scaling—were employed to construct a significantly larger and more diverse training set, thereby effectively enhancing the model’s generalization capability and mitigating overfitting. All selected facade image samples must undergo the following processing steps:

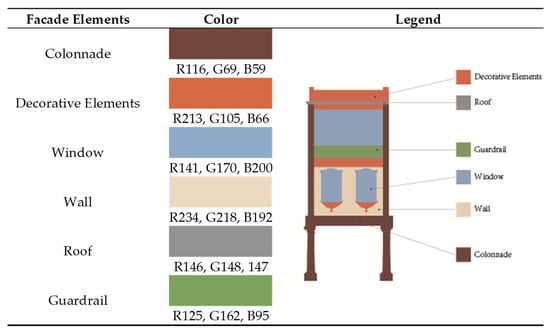

(1) Valid samples are imported into CAD and Adobe Photoshop 2021 for correction, brightness adjustment, and other processing, and are converted into line drawings. During preprocessing, temporary attachments (such as billboards) are removed first to ensure the integrity of facade features. The samples then undergo color separation, in which different color blocks distinguish the architectural facade elements, ultimately producing Functional Segmentation Layout (FSL) diagrams. Based on the compositional characteristics of Xiaogongyuan arcade facades, the main facade elements are divided into four categories, each marked with a distinct color: colonnades in dark brown (R116, G69, B59), decorative components in bright orange (R213, G105, B66), windows in light blue (R141, G170, B200), and walls in light yellow (R234, G218, B192). In addition, roofs in light gray (R146, G148, B147) and railings in green (R125, G162, B95) serve as supplementary elements in the annotation. The color coding specifications are detailed in Figure 10.

Figure 10. Color Coding Specification Table for Facade Elements of Xiaogongyuan Arcades.

According to the actual composition of the facade samples, each image may contain all or part of the annotated elements mentioned above. The color block annotations enhance the visual boundaries of different architectural elements through color contrast, reducing the model’s sensitivity to interference from textures, shadows, and other factors, allowing the machine to focus more on extracting structural features.

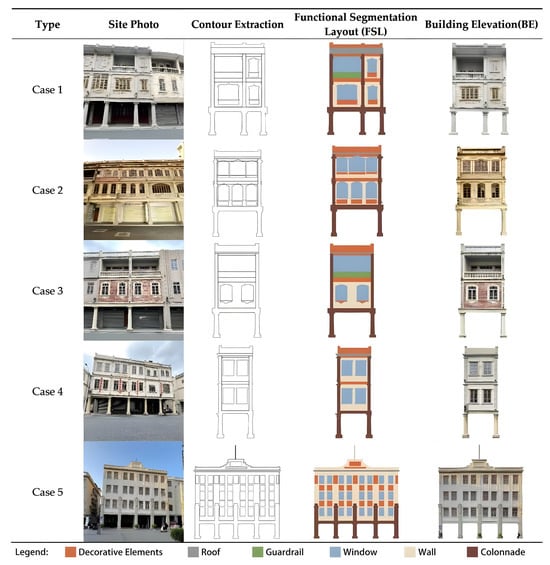

(2) After color block annotation is complete, the architectural elevation drawings (BE) are also retained as training targets. The processed elevation drawings clearly display important elements such as the materials and textures of Xiaogongyuan arcade facades, which supports subsequent learning and generation by the model. The processed image data are presented in Figure 11.

Figure 11. Classification Table of Facade Image Data for Xiaogongyuan Arcades.
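As an illustration of how the color coding from step (1) can be made machine-readable, the following minimal Python sketch maps each pixel of an FSL diagram to the nearest annotation color. The RGB values are those listed above; the class names, the function name `fsl_to_class_map`, and the nearest-color rule are illustrative assumptions and are not described in the original workflow.

```python
import numpy as np

# RGB codes taken from the annotation scheme in step (1).
# The dictionary keys are hypothetical class names chosen for this sketch.
FSL_PALETTE = {
    "colonnade": (116, 69, 59),
    "decoration": (213, 105, 66),
    "window": (141, 170, 200),
    "wall": (234, 218, 192),
    "roof": (146, 148, 147),
    "railing": (125, 162, 95),
}
CLASS_NAMES = list(FSL_PALETTE)


def fsl_to_class_map(fsl_rgb: np.ndarray) -> np.ndarray:
    """Assign every pixel of an H x W x 3 uint8 image to its nearest palette color.

    Returns an H x W array of indices into CLASS_NAMES.
    """
    palette = np.array([FSL_PALETTE[n] for n in CLASS_NAMES], dtype=np.float32)
    pixels = fsl_rgb.astype(np.float32)[:, :, None, :]           # (H, W, 1, 3)
    dist = np.sum((pixels - palette[None, None]) ** 2, axis=-1)   # (H, W, 6)
    return np.argmin(dist, axis=-1).astype(np.uint8)
```

Matching by nearest color rather than exact equality is a design choice for this sketch: it tolerates the small color drift that image export or compression may introduce at block boundaries.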

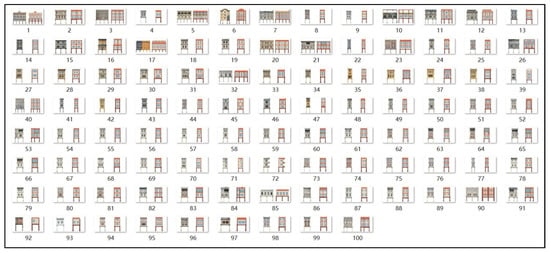

Each valid sample is processed into two types of images: a Functional Segmentation Layout diagram and an Architectural Elevation drawing. The 200 samples thus yield a total of 400 images after processing. The baseline data are shown in Figure 12.

Figure 12. Base Data.

For the purpose of dataset generation, all original images are initially standardized to a resolution of 512 × 512 pixels. Subsequently, two such images are assembled side-by-side, yielding a composite image with a total resolution of 1024 × 512 pixels. Figure 13 illustrates a standardized format data sample.

Figure 13. Standard-Format Data Diagram.
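The side-by-side assembly described above can be sketched as follows. This is a minimal example under stated assumptions: the text does not specify which image occupies which half, so placing the FSL diagram on the left and the elevation drawing on the right is an assumption, and the function names `make_pair` and `split_pair` are hypothetical.

```python
import numpy as np

TILE = 512  # side length of each standardized image, as stated in the text


def make_pair(fsl: np.ndarray, be: np.ndarray) -> np.ndarray:
    """Concatenate two 512 x 512 RGB images into one 1024 x 512 (W x H) composite.

    Assumption: FSL diagram on the left, elevation drawing (BE) on the right.
    """
    expected = (TILE, TILE, 3)
    if fsl.shape != expected or be.shape != expected:
        raise ValueError(f"both images must have shape {expected}")
    # Arrays are stored as (height, width, channels), so axis=1 is the width.
    return np.concatenate([fsl, be], axis=1)


def split_pair(pair: np.ndarray) -> tuple:
    """Recover the left and right halves of a composite image."""
    return pair[:, :TILE], pair[:, TILE:]
```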

3.2.2. Data Allocation

Upon completion of dataset organization, the data will be divided into three subsets through sampling: training set, validation set, and test set. The training set comprises 60% of the total data volume, amounting to 120 samples, to ensure sufficient sample diversity. Both the validation set and test set each account for 20% of the total data, with 40 samples per set, aiming to support subsequent cross-validation.
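The 60/20/20 allocation can be reproduced with a short seeded shuffle. The sketch below is illustrative only: the sampling procedure actually used is not specified in the text, and the function name `split_dataset`, the seed, and the integer sample identifiers are assumptions.

```python
import random


def split_dataset(sample_ids, ratios=(0.6, 0.2, 0.2), seed=0):
    """Shuffle sample identifiers and split them into train/validation/test lists."""
    ids = list(sample_ids)
    random.Random(seed).shuffle(ids)  # fixed seed keeps the split reproducible
    n_train = round(len(ids) * ratios[0])
    n_val = round(len(ids) * ratios[1])
    train = ids[:n_train]
    val = ids[n_train:n_train + n_val]
    test = ids[n_train + n_val:]      # remainder goes to the test set
    return train, val, test


train_ids, val_ids, test_ids = split_dataset(range(200))
print(len(train_ids), len(val_ids), len(test_ids))  # 120 40 40
```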

3.3. Generative Model Construction

3.3.1. CGAN Model Framework Design

This study employs the pix2pix algorithm based on a Conditional Generative Adversarial Network (CGAN) model for training. This model demonstrates strong accuracy and stability in architectural image style transfer tasks, enabling accurate historical style transfer while preserving architectural structural features.

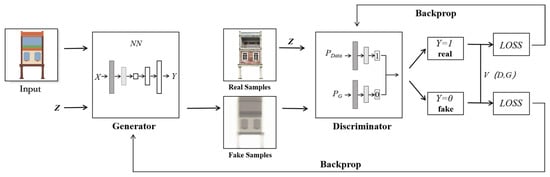

The pix2pix model generator adopts the U-Net [15] architecture to preserve the structural features of input images, ensuring that architectural details and overall contours remain intact during training. The discriminator utilizes the PatchGAN [15] architecture, which judges the authenticity of local image regions to enhance the quality of generated images. In the training mechanism, the generator receives input images and random vectors and learns to generate fake images; the discriminator compares generated images with real target images to assess authenticity. When the discriminator identifies an image as fake, this judgment is fed back to the generator through the adversarial loss. During adversarial training, the generator continuously optimizes its parameters based on the adversarial loss and the L1 loss to reduce discrepancies with real target images, ultimately achieving style transfer from source to target images. The framework of the model is shown in Figure 14.

Figure 14. CGAN Algorithm Model Framework Diagram.
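For reference, the combination of adversarial loss and L1 loss described above corresponds to the standard pix2pix objective, written here in its usual form. In this notation, which is introduced for illustration, $x$ is the input FSL diagram, $y$ the real elevation drawing, $z$ the random vector, $G$ the generator, and $D$ the discriminator. The weight $\lambda$ balances the two terms; its value is not stated in this section.

```latex
\begin{align}
\mathcal{L}_{cGAN}(G, D) &= \mathbb{E}_{x,y}\big[\log D(x, y)\big]
  + \mathbb{E}_{x,z}\big[\log\big(1 - D(x, G(x, z))\big)\big] \\
\mathcal{L}_{L1}(G) &= \mathbb{E}_{x,y,z}\big[\lVert y - G(x, z) \rVert_1\big] \\
G^{*} &= \arg\min_{G}\max_{D}\; \mathcal{L}_{cGAN}(G, D) + \lambda\, \mathcal{L}_{L1}(G)
\end{align}
```

The L1 term ties each generated facade to its paired target, while the adversarial term rewards outputs that the discriminator cannot tell apart from real elevations.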

3.3.2. Model Construction

This study constructs a CGAN-based arcade facade generation model using open-source pix2pix implementations from online platforms such as GitHub and CSDN. The model was implemented with tools including PyCharm 2024.3 (Community Edition) and PyTorch 1.8.1 (detailed software versions are listed in Appendix A). The operating system used for this setup is Windows 11.

The research conducted model training based on the previously established dataset of arcade facade images from Shantou Xiaogongyuan area. All samples underwent standardized preprocessing procedures, including key steps such as resolution unification and color space conversion. During the training process, parameters like learning rate and batch size were adjusted to achieve continuous optimization of model performance under GPU acceleration. The final generation results were evaluated to ensure compliance with historical preservation design requirements, providing reliable technical support for the digital renewal of historic districts.
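Because each training sample is a pair of images, the augmentation mentioned in Section 3.2.1 (random horizontal flipping and scaling) has to be applied identically to both halves of a pair. The following is a minimal sketch of one way to do this, not the implementation used in the study. The function names, the scale-then-crop strategy, the maximum scale factor of 1.1, and the nearest-neighbor resize are all assumptions made for illustration.

```python
import numpy as np


def resize_nearest(img: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Nearest-neighbor resize; it never blends colors, so FSL color blocks stay pure."""
    rows = np.arange(out_h) * img.shape[0] // out_h
    cols = np.arange(out_w) * img.shape[1] // out_w
    return img[rows][:, cols]


def augment_pair(fsl, be, rng, max_scale=1.1):
    """Apply the same random flip and scale-then-crop to an FSL/BE image pair."""
    if rng.random() < 0.5:                      # random horizontal flip
        fsl, be = fsl[:, ::-1], be[:, ::-1]
    h, w = fsl.shape[:2]
    scale = rng.uniform(1.0, max_scale)         # random enlargement factor
    new_h, new_w = int(round(h * scale)), int(round(w * scale))
    top = int(rng.integers(0, new_h - h + 1))   # shared crop offset for both images
    left = int(rng.integers(0, new_w - w + 1))
    out = []
    for img in (fsl, be):
        big = resize_nearest(img, new_h, new_w)
        out.append(big[top:top + h, left:left + w])
    return out[0], out[1]
```

Drawing the flip decision, scale factor, and crop offset once per pair keeps the pixel correspondence between the FSL diagram and its elevation drawing intact, which the L1 loss depends on.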

3.4. Evaluation and Results

3.4.1. Evaluation Methods

This study primarily employs two methods to evaluate the results generated by the model, as detailed below:

- (1) Subjective Evaluation: During the model iteration process, we formed an evaluation panel consisting of 20 architecture students and their supervising instructors. Through questionnaires and interview surveys, a subjective evaluation of the quality of the test-generated images was conducted. The evaluation criteria cover the following two aspects: first, the basic visual quality of the generated images, including clarity, detail retention, and similarity to real images; second, a comprehensive Conservation Authenticity Score, which is calculated as a weighted combination of four dimensions: Style Consistency (30%), Structural Integrity (25%), Material Compatibility (25%), and Retention of Weathering Patterns (20%). This study organizes a concise and universal set of evaluation criteria, as shown in Table 1.

Table 1. Quality Assessment Metrics for Generated Façades.

- (2) Objective Evaluation: We have introduced the Structural Similarity Index (SSIM). This metric assesses the visual fidelity of the generated results by comparing the luminance, contrast, and structural information between the generated image and the input color block reference image. By calculating and analyzing the SSIM values of both, we provide an objective quantitative basis for evaluating image generation quality.
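The weighted combination behind the Conservation Authenticity Score can be written out as a small function. The weights are those given above; the dictionary keys, the function name, and the rating scale (any common numeric scale shared by the four dimensions) are assumptions for this sketch, since the scoring scale is not stated in this section.

```python
# Weights as stated in the text; the key names are hypothetical.
WEIGHTS = {
    "style_consistency": 0.30,
    "structural_integrity": 0.25,
    "material_compatibility": 0.25,
    "weathering_retention": 0.20,
}


def conservation_authenticity_score(ratings: dict) -> float:
    """Weighted sum of the four dimension ratings (all on the same numeric scale)."""
    missing = set(WEIGHTS) - set(ratings)
    if missing:
        raise KeyError(f"missing ratings for: {sorted(missing)}")
    return sum(WEIGHTS[name] * ratings[name] for name in WEIGHTS)
```

Because the weights sum to 1, the score stays on the same scale as the individual ratings.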

3.4.2. Evaluation of Generated Results

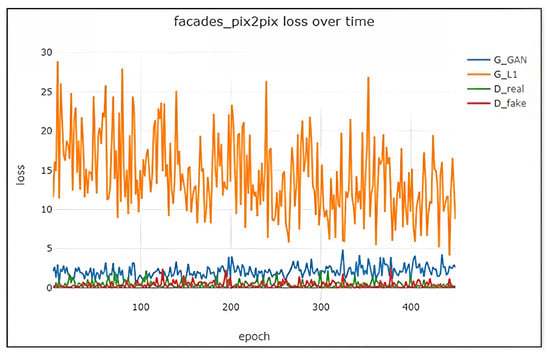

To evaluate the training effectiveness of the model, this study systematically monitored the changes in the loss functions of both the generator and discriminator. Figure 15 displays the evolution curves of four core metrics during the training process: generator adversarial loss (G_GAN), generator L1 reconstruction loss (G_L1), discriminator loss on real samples (D_real), and discriminator loss on generated samples (D_fake).

By analyzing the convergence trends and numerical relationships of these curves, the model’s effectiveness in learning facade features can be revealed, and the validity of the training strategy can be verified. As shown in Figure 15, over 500 training epochs, both G_GAN and G_L1 gradually decreased with the training progress. Among them, G_L1 consistently dominated the training process, indicating that the model prioritized ensuring the accuracy of facade structures. The losses of D_real and D_fake eventually stabilized around 0.7 and 1.2, respectively. As initial training results, the model has established basic facade generation capabilities, though there remains room for optimization. The specific experimental model parameters are listed in Table 2 below.

Figure 15. Training Log Loss Value Statistics Chart 1 for pix2pix Model.

Table 2. Parameter Values Used in the First Study.

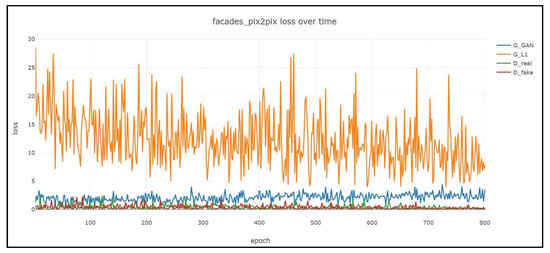

Based on the initial training results, further optimization of model parameters was conducted. In this training phase, the learning rate was reduced to 0.0001. A smaller learning rate ensures more stable optimization and prevents oscillations in the L1 loss. Simultaneously, the learning rate decay cycle was extended and the number of training epochs was increased, allowing the model more time for fine-tuning at lower learning rates, which contributed to a reduction in the L1 loss. The specific experimental model parameters are listed in Table 3 below. The training results are shown in the figure below (Figure 16).

Table 3. Parameter Values Used in the Second Study.

Figure 16. Training Log Loss Value Statistics Chart 2 for pix2pix Model.
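To make the effect of an extended decay cycle concrete, the sketch below implements a constant-then-linear-decay schedule of the kind commonly used in pix2pix implementations. Only the base learning rate (0.0001) and the total of 800 epochs come from the text; the 400/400 split between the constant and decay phases and the function name are hypothetical, and the schedule actually used in the study may differ.

```python
def linear_decay_lr(epoch, base_lr=1e-4, n_constant=400, n_decay=400):
    """Learning rate at a 0-indexed epoch.

    The rate stays at base_lr for n_constant epochs, then falls linearly
    to zero over the following n_decay epochs.
    """
    if epoch < n_constant:
        return base_lr
    remaining = max(0, n_constant + n_decay - epoch)
    return base_lr * remaining / n_decay


for e in (0, 400, 600, 800):
    print(e, linear_decay_lr(e))
```

A longer decay phase means more epochs are spent at small learning rates, which is consistent with the fine-tuning effect on the L1 loss described above.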

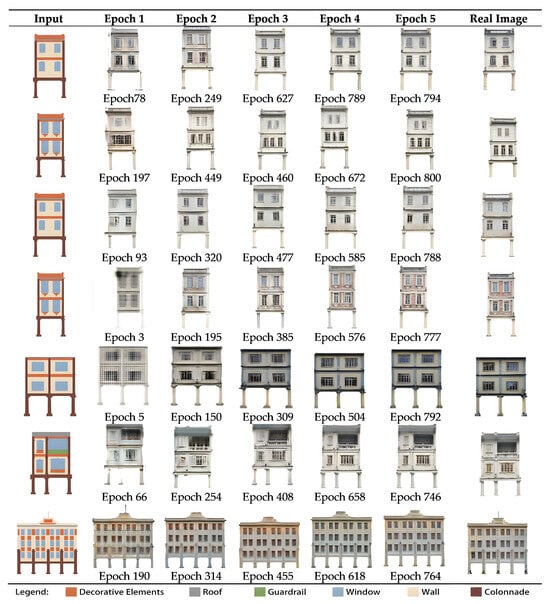

Over 800 training epochs, all loss values exhibited typical dynamic equilibrium characteristics—the adversarial game between the generator and discriminator eventually stabilized. During the initial training phase (0–200 epochs), all loss functions fluctuated drastically, indicating the model was exploring the feature space. When training progressed to 201–450 epochs, the losses began to decrease, and the generator started to grasp the patterns of style transfer. Beyond 450 epochs, the curves gradually stabilized with minor oscillations and converged toward specific values. As training approached 800 epochs, G_L1 steadily converged to approximately 6.5, showing clear signs of convergence. The generator adversarial loss (G_GAN) and the discriminator loss on real samples (D_real) stabilized after 550 epochs, with G_GAN converging to around 2.3 and D_real eventually reaching 0.11. G_L1 remained significantly higher than other loss terms, ensuring that the generated images strictly preserved the structural features of the input facades. However, the secondary upward trend of G_L1 after 450 epochs may indicate that the model began to overfit localized damaged features in the training data. Based on the above analysis, the current model has achieved basic facade generation functionality, but it still exhibits insufficient learning of arcade-specific decorative elements. The evolution of generated images during the training process is shown in the figure below (Figure 17).

Figure 17. Generated Image Variations Across Different Training Epochs. (Intermediate output images from the pix2pix model during the training iteration process. These images inherently demonstrate lower resolution as they represent non-final optimization stages.)

As shown in Figure 17, the trained generative model has acquired reliable capability to reconstruct architectural facade styles, enabling relatively accurate restoration of the distinctive features of Xiaogongyuan’s arcade buildings. By comparing images generated by the model with actual images, it is evident that the model not only maintains consistency in the overall facade style but also flexibly and effectively transfers and reshapes facade elements within the images, ensuring similarity and realism in details. The model demonstrates strong adaptability in style restoration, capable of handling complex architectural characteristics while achieving a sound balance between style consistency and element transformation. This characteristic endows the model with high practical value, allowing it to flexibly adjust and produce architectural facade effects that meet design requirements under different environments and needs.

Although the generative model excels in restoring architectural facade styles and transferring elements, some limitations remain. The model still exhibits certain constraints when processing intricate details. Some decorative details (such as reliefs, carvings, etc.) may show slight distortions or blurring during the generation process, affecting the overall image refinement. Despite overall style consistency, the authenticity and detail richness of generated images may decline under specific lighting or viewing angles, particularly in complex background environments and subtle variations in building materials. Additionally, the model’s generalization ability requires further enhancement. When confronted with different styles or entirely new architectural types, it may need more diverse data for additional training to improve its adaptability and accuracy.

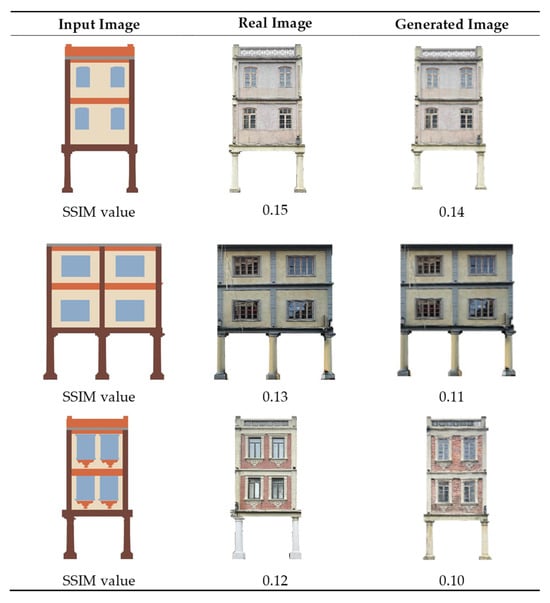

Using the SSIM algorithm with the input scheme as the reference image, we calculated its SSIM values against both the real image and the generated image. Selected SSIM values are shown in Figure 18.

Figure 18. Exploration of SSIM Evaluation Method for Generated Facades of Arcade Buildings.

The SSIM comparison data show that the structural similarity between input images and real images remains in the range of 0.12–0.15, while the similarity between input images and generated images ranges from 0.10 to 0.14. The two sets of values differ by no more than 0.03, which suggests that the generated images preserve structure to a degree comparable with the real images.

Notably, the consistently low SSIM values may be related to the algorithm’s inherent sensitivity to structural, luminance, and contrast features—when the generation task involves significant style transformation or material reconstruction, the pixel-level structural similarity might naturally remain low even if visual results meet expectations. However, whether this numerical characteristic truly reflects an inherent relationship between the algorithm’s principles and the nature of the generation task requires further investigation.
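To show how luminance, contrast, and structure enter the metric, the following sketch computes a simplified SSIM from whole-image statistics. This is an assumption-laden simplification: standard SSIM implementations evaluate the same expression over local sliding windows and average the results, so the values produced here are not comparable with those reported above. The function name `global_ssim` is hypothetical; the constants follow the commonly used defaults of 0.01 and 0.03 times the data range.

```python
import numpy as np


def global_ssim(x: np.ndarray, y: np.ndarray, data_range: float = 255.0) -> float:
    """Single-window SSIM over two grayscale images of identical shape."""
    x = x.astype(np.float64)
    y = y.astype(np.float64)
    c1 = (0.01 * data_range) ** 2   # stabilizes the luminance term
    c2 = (0.03 * data_range) ** 2   # stabilizes the contrast/structure term
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = ((x - mx) * (y - my)).mean()
    num = (2 * mx * my + c1) * (2 * cov + c2)
    den = (mx ** 2 + my ** 2 + c1) * (vx + vy + c2)
    return float(num / den)
```

The covariance term indicates why a flat color-block input and a textured elevation drawing may score low even when their layouts agree: large uniform regions contribute little covariance with textured regions.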

4. Discussion: Model Application and Design of Historic District Scheme

4.1. Design Background

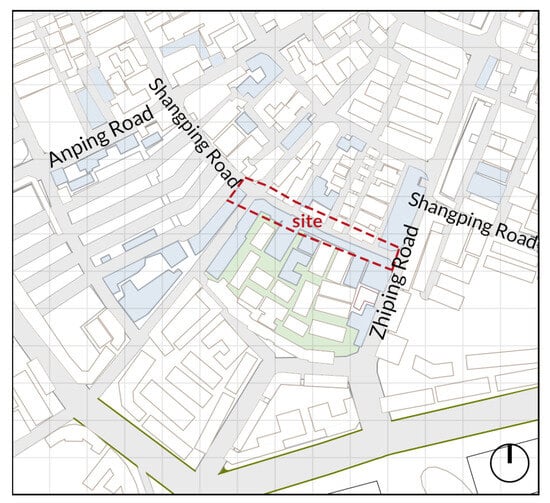

This study selects the intersection zone of Shangping Road and Zhiping Road as the experimental site. The arcade facades in this area show significant deterioration due to long-term neglect and require urgent renewal. Multiple architectural styles coexist here, including Baroque decorations, Nanyang-style colonnades, and modern renovation elements. Original decorative patterns are partially damaged or missing. This typical phenomenon of stylistic hybridity in historic districts provides ideal conditions to test the generative model’s adaptability in complex real-world environments. The design scope is illustrated in the figure below (Figure 19).

Figure 19. Design Area.

The complexity of the architectural style in this area is primarily reflected in three aspects: In terms of time, the reconstructions and extensions from different periods have formed distinct historical layering features; in terms of culture, the collision of Western architectural language with local construction techniques has given rise to a unique eclectic style; in terms of materiality, both natural aging and human interventions have jointly led to severe damage to the architectural details. This multi-layered complexity poses special challenges for digital generation technology—it needs to identify and deconstruct mixed stylistic elements while reasonably hypothesizing and reconstructing the architectural features based on the fragmented physical evidence. Existing rule-based computational design methods often struggle to address such non-standard historical architectural characteristics, while deep learning technology, by learning style patterns and construction logic from a large number of samples, offers a new solution to this problem. It is particularly noteworthy that the diverse cultural symbols placed on the facades of buildings in this area provide an excellent sample for testing the capabilities of generative models in cross-cultural architectural feature extraction and fusion.

4.2. Model Application

Upon completing model training, the study applied the model to assess its generative capabilities. Prior to application, 40 functional segmentation layout images absent from the original dataset were prepared and input into the trained model. The discrepancies between the generated images and real images were examined. By comparing structural integrity, detail accuracy, and overall fidelity between generated and actual images, the model’s practical performance was evaluated, with its potential strengths and limitations analyzed. This process aimed to ensure the model could not only replicate features of known data but also demonstrate high generalization ability when confronted with entirely new samples.

The quality assessment of generated solutions is primarily based on the following three criteria:

- (1) Structural Integrity: Load-bearing components such as colonnades and arches are completely generated without distortions like structural misalignment or fragmentation;

- (2) Style Consistency: The output images maintain the stylistic features (e.g., decorative patterns, color schemes) of the input images used during model training;

- (3) Generation Authenticity: The images generated by the model exhibit high fidelity in key characteristics such as facade morphology and proportional scales, ensuring realism.

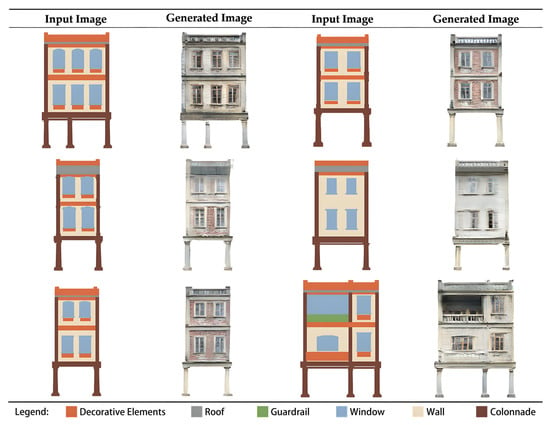

A selection of generated images is presented in Figure 20.

Figure 20. Generated Images.

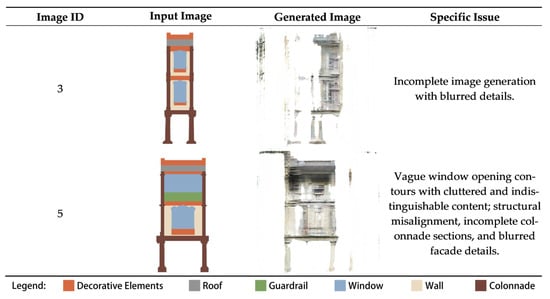

As shown in the figure, the images generated by the model maintain high fidelity in core characteristics such as architectural facade morphology and proportional scales, with particularly accurate and refined handling of details like colonnades. The output images exhibit style consistency with the real images in the training set, demonstrating complete facade structures without issues such as fragmentation or structural misalignment. However, minor blurring occurred in some detailed decorations (e.g., relief textures) during the generation process, especially for complex elements such as pediment decorations, where resolution and detail representation require further improvement. Additionally, two remaining output images showed suboptimal quality failing to meet evaluation standards. Specific cases are detailed in the figure below (Figure 21).

Figure 21. Generated Images of Non-Compliant Quality. (Examples of unsuccessful iterations generated by the trained model, illustrating specific failure cases in style transfer.)

Based on the generated image results and evaluation criteria above, the performance assessment of the generative model is presented in Table 4. Among the 40 test samples, style consistency achieved the highest compliance rate (80%), while generation authenticity and structural integrity reached 70% and 60%, respectively. This indicates that the model performs best in restoring stylistic features but requires improvement in generating complex structures. When reproducing certain subtle decorative elements, the model may be constrained by the diversity and detail depth of the training data, resulting in reduced clarity and realism in these areas. To address these issues, subsequent optimization should enhance training focused on high-detail regions to improve overall image precision and detail richness.

Table 4. Generative Model Performance Evaluation.
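The compliance rates can be related to sample counts with simple arithmetic. In the sketch below, the counts of 32, 28, and 24 compliant samples are back-calculated from the stated rates (80% as given in the abstract, 70%, and 60%) over 40 test samples; they are implied values for illustration and are not reported in the text. The function and dictionary names are hypothetical.

```python
def compliance_rate(n_compliant: int, n_total: int) -> float:
    """Percentage of test samples that satisfy a given criterion."""
    if n_total <= 0:
        raise ValueError("n_total must be positive")
    return 100.0 * n_compliant / n_total


N_TEST = 40  # number of test samples stated in the text

# Counts implied by the stated percentages (not reported directly).
IMPLIED_COUNTS = {
    "style_consistency": 32,
    "generation_authenticity": 28,
    "structural_integrity": 24,
}

rates = {name: compliance_rate(n, N_TEST) for name, n in IMPLIED_COUNTS.items()}
print(rates)
```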

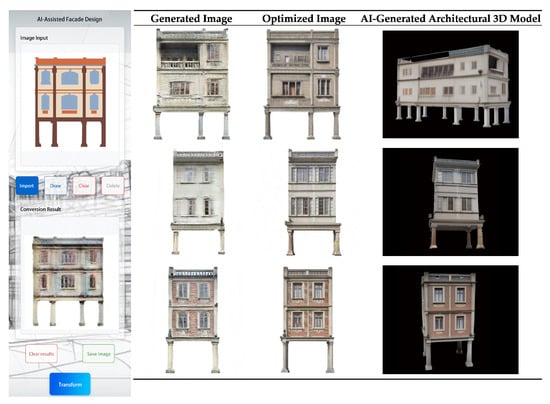

Based on the generated imagery, this study has established a technical pipeline from 2D generation to 3D modeling. First, a facade design mini-program was developed (https://dorahsy.github.io/two/ (accessed on 1 December 2025)), through which the facade image is generated. As shown in Figure 22, the left column displays the initially generated images based on the conditional generative adversarial network; the middle column presents the optimized facade images after style adjustments, in which the completeness and style consistency of detailed decorative elements were significantly improved; the right column further transforms the optimized 2D images into editable 3D architectural models through AI techniques, as an exploration of dimensional expansion in the digital preservation of historic architecture. These three sets of visual outcomes form a technical chain of facade generation, facade optimization, and 3D conversion. This exploration not only visually demonstrates the application potential of generative algorithms in architectural heritage conservation but also establishes a technical closed loop from 2D to 3D and from images to models, providing a verifiable methodological framework for the digital renewal of historic districts. Particularly noteworthy is that the parametric component design of the 3D models not only preserves the typical characteristics of historic architectural elements but also meets the technical requirements of modern building information management, demonstrating the innovative value of this study in the digital transmission of cultural heritage.

Figure 22. Optimized Generated Images.

Relying on the aforementioned research results, this study conducted a renovation of the arcade facades at the intersection of Shangping Road and Zhiping Road. During the renovation process, it was necessary to update the original arcade features by integrating the cultural connotations of historic architecture with the functional requirements of modern buildings. The generative method based on conditional generative adversarial networks can meet the needs of accurate restoration and innovative optimization.

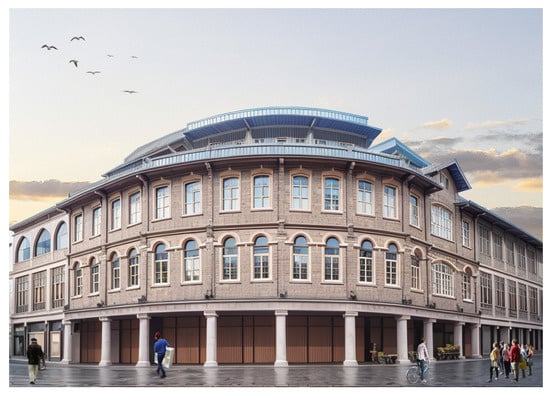

During the renovation, generative image technology was used to preliminarily restore the original facades, ensuring that the architectural appearance complies with historical features requirements. The renovated arcade features not only meet the functional demands of modern urban life but also preserve the unique charm of historic architecture, injecting new vitality into the intersection area of Shangping Road and Zhiping Road, and providing a new direction for the digital preservation and innovative renovation of architectural heritage. The figure below (Figure 23) shows the effect of the renovated arcade facades.

Figure 23. Design Rendering.

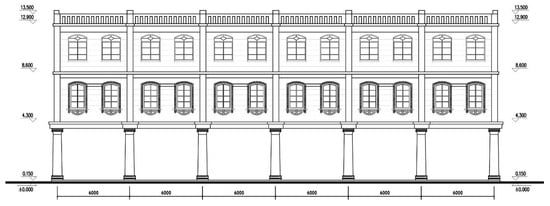

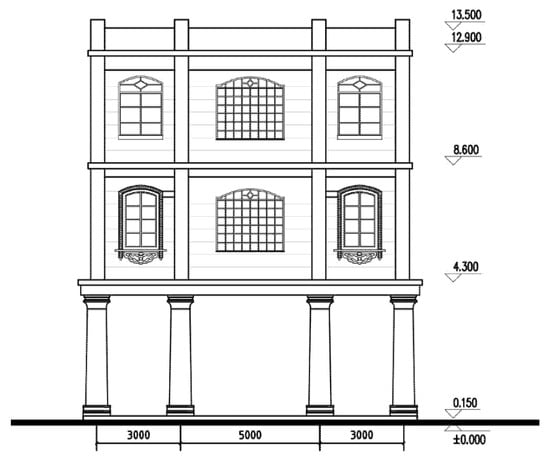

To further refine the facade renewal process, the study also produced detailed architectural elevation drawings. These drawings encompass various detailed designs of the renovated arcade facades, including structural elements, decorative features, window opening styles, etc., accurately representing the proportions, scales, and spatial layout after renovation. In practical projects, such drawings help construction teams better visualize the design intent, providing crucial reference for actual construction and ensuring the accurate translation of design concepts into physical architectural forms. The figures below (Figure 24 and Figure 25) show the renovated arcade elevation drawings.

Figure 24. Renovated Arcade Facade Drawing No. 1 (1:200).

Figure 25. Renovated Arcade Facade Drawing No. 2 (1:200).

4.3. Limitations and Future Directions

This study on deep learning-based facade generation for Shantou’s Xiaogongyuan arcades has several limitations. First, the limited dataset of 200 facade images restricts the capture of detailed decorative elements, causing blurring in high-precision generation. However, some scholars, such as David Newton [35], argue that such ambiguous outputs can inspire architectural creativity through intentional “incompleteness.” Second, the pix2pix framework prioritizes structural restoration over material texture simulation and construction logic. These constraints, documented in the literature, prompted developments such as pix2pixHD [36] for higher-resolution output. Third, while 3D conversion was attempted, the results lacked parametric details and practical applicability.

Future research could expand in the following directions:

- (1) Enhancing datasets with high-precision scanning and multimodal deep learning;

- (2) Integrating AR technologies for public participation and extending the methodology to other historic Overseas Chinese districts. These advances could transition AI’s role from tool to collaborative decision-maker in cultural heritage preservation and urban renewal.

5. Conclusions

This study focuses on the arcade building complex in the Xiaogongyuan historical district of Shantou and explores an intelligent design method based on deep learning technology to address the need for facade updates. By constructing a Conditional Generative Adversarial Network (CGAN) model, the study achieves automatic generation and style transfer for building facades, providing a new technological path for the digital preservation and renewal of historical districts. The research demonstrates the advantages and effectiveness of this method through data collection and processing, model construction and training, and evaluation of generation outcomes.

The innovative value of this study lies in both its methodology and practical application. This research is the first to systematically apply CGAN technology to the facade renewal of Shantou’s arcades, offering a new approach and technical framework for the digital renewal of historical buildings. In addition, the generative model developed in this study enables the rapid output of multiple design alternatives, effectively alleviating issues such as style deviations and low efficiency in traditional restoration processes. In practical applications, the study establishes a digital “Xiaogongyuan Arcade Facade Database” through deep learning of decorative patterns and material characteristics, providing new ideas for the renewal and preservation of historical districts, while also offering a new technological platform for the gradually disappearing traditional crafts.

However, despite the achievements, there are still some limitations. In terms of data, the limited amount of data and insufficient coverage of specific decorative patterns may affect the comprehensiveness of the model, leading to issues such as low-resolution generated images. In terms of technology, while the generation results are promising, there is still room for improvement in simulating material textures with higher precision. In terms of application, the quality of 3D models is still suboptimal and requires further technical refinement.

Overall, this study demonstrates the feasibility and innovativeness of applying deep learning technology in the renewal of historical building facades. Its core value lies in using artificial intelligence to quantify the features and patterns of traditional craftsmanship, establishing a reusable technical framework that balances preservation efficiency with authenticity. This provides an empirical case for the application of AI in cultural heritage preservation. In the future, through cross-regional cooperation and continuous technological optimization, it will be possible to further promote the realization of a new model for historical district protection based on “digital preservation—intelligent renewal—sustainable revitalization,” offering a new solution for the global preservation of similar cultural heritage.

Author Contributions

Conceptualization, W.Y., T.W. and C.Z.; methodology, W.Y., T.W. and C.Z.; software, W.Y. and T.W.; validation, W.Y. and T.W.; formal analysis, W.Y.; investigation, W.Y.; resources, W.Y. and T.W.; data curation, W.Y. and T.W.; writing—original draft preparation, W.Y.; writing—review and editing, W.Y., T.W. and C.Z.; visualization, W.Y. and T.W.; supervision, W.Y., T.W. and C.Z.; project administration, W.Y., T.W. and C.Z.; funding acquisition, W.Y., T.W. and C.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by 2025 Ministry of Education’s Industry-University Collaborative Talent Cultivation Project, Research on practical teaching of virtual human living environment design driven by “AI + human factors” (2507053415); Shantou University Scientific Research Startup Funding Project (NTF22015); Shantou University’s 2025 “Artificial Intelligence+” Higher Education Teaching Reform Key Project (STUAI2025006).

Data Availability Statement

The datasets used and analyzed during the current study are available from Wanying Yan (21wyyan@alumni.stu.edu.cn) on reasonable request. All historical facade photographs were taken by Wanying Yan specifically for this research project; all architectural drawings, diagrams, and generated images were produced by Wanying Yan; any previously published images have been properly cited and necessary permissions have been obtained.

Acknowledgments

We acknowledge the financial support from the 2025 Ministry of Education’s Industry-University Collaborative Talent Cultivation Project, Shantou University Scientific Research Startup Funding Project, and Shantou University’s 2025 “Artificial Intelligence+” Higher Education Teaching Reform Key Project. We also extend our gratitude to our classmates for their invaluable assistance and insightful discussions throughout this study.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

The hardware configuration of the experimental equipment used in this study is as follows: GPU—NVIDIA GeForce RTX 3060 Laptop; CPU—12th Gen Intel(R) Core(TM) i7-12700 HX with a base speed of 2.30 GHz; RAM—16 GB.

The primary software tools employed include Rhino7, Python 3.8.20, PyCharm 2024.3 (Community Edition), PyTorch 1.8.1, with CUDA version 12.6.65.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).