Abstract

Structure detection (SD) has emerged as a critical technology for ensuring the safety and longevity of infrastructure, particularly in housing and civil engineering. Traditional SD methods often rely on manual inspections, which are time-consuming, labor-intensive, and prone to human error, especially in complex environments such as dense urban settings or aging buildings with deteriorated materials. Recent advances in autonomous systems—such as Unmanned Aerial Vehicles (UAVs) and climbing robots—have shown promise in addressing these limitations by enabling efficient, real-time data collection. However, challenges persist in accurately detecting and analyzing structural defects (e.g., masonry cracks, concrete spalling) amidst cluttered backgrounds, hardware constraints, and the need for multi-scale feature integration. The integration of machine learning (ML) and deep learning (DL) has revolutionized SD by enabling automated feature extraction and robust defect recognition. For instance, RepConv architectures have been widely adopted for multi-scale object detection, while attention mechanisms like TAM (Technology Acceptance Model) have improved spatial feature fusion in complex scenes. Nevertheless, existing works often focus on singular sensing modalities (e.g., UAVs alone) or neglect the fusion of complementary data streams (e.g., ground-based robot imagery) to enhance detection accuracy. Furthermore, computational redundancy in multi-scale processing and inconsistent bounding box regression in detection frameworks remain underexplored. This study addresses these gaps by proposing a generalized safety inspection system that synergizes UAV and stair-climbing robot data. We introduce a novel multi-scale targeted feature extraction path (Rep-FasterNet TAM block) to unify automated RepConv-based feature refinement with dynamic-scale fusion, reducing computational overhead while preserving critical structural details. 
For detection, we combine traditional methods with remote sensor fusion to mitigate feature loss during image upsampling and downsampling, supported by a structural GIOU model (GIOU = IOU − (C − U)/C, where C is the area of the smallest box enclosing the predicted and ground-truth boxes and U is the area of their union) that enhances bounding box regression through shape/scale-aware constraints and real-time analysis. By situating our work within the context of recent reviews on ML/DL for SD, we demonstrate how our hybrid approach bridges the gap between autonomous inspection hardware and AI-driven defect analysis. The system targets the conditions that make detection difficult in housing safety assessments, including large or dense objects, complex backgrounds, and hardware limitations; within the Rep-FasterNet TAM block, a multi-scale attention module enhances spatial feature consistency. Experiments demonstrate that our system increases masonry and concrete assessment accuracy by 11.6% and 20.9%, respectively, while reducing the manual drawing restoration workload by 16.54%. These results validate the effectiveness of unifying autonomous inspection hardware with AI-driven analysis and offer a scalable solution for SD in large-scale housing infrastructure.
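As an illustration of the GIOU expression quoted above, the following minimal sketch evaluates it for two axis-aligned boxes. The function name `giou`, the `(x1, y1, x2, y2)` box convention, and the sample boxes are assumptions made for this example; the sketch is not the detection code used in the study.

```python
def giou(box_a, box_b):
    """GIOU = IOU - (C - U) / C for boxes given as (x1, y1, x2, y2).

    Assumes both boxes have positive area (hypothetical convention).
    """
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)

    # Intersection rectangle (zero if the boxes do not overlap).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h

    union = area_a + area_b - inter          # U in the formula
    iou = inter / union

    # Smallest axis-aligned box enclosing both inputs: C in the formula.
    enclose_w = max(ax2, bx2) - min(ax1, bx1)
    enclose_h = max(ay2, by2) - min(ay1, by1)
    enclose = enclose_w * enclose_h

    return iou - (enclose - union) / enclose


identical = giou((0, 0, 2, 2), (0, 0, 2, 2))      # perfect overlap
disjoint = giou((0, 0, 1, 1), (2, 0, 3, 1))       # no overlap, still informative
```

Unlike plain IOU, which is zero for every pair of non-overlapping boxes, the penalty term keeps the value informative for disjoint boxes, which is why it is useful as a regression signal.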

1. Improving Identification Technology Through Integrating Footage from an Unmanned Aerial Vehicle (Dajiang Technologies Inc., Shenzhen, Guangdong, China) and Stair-Climbing Robot (CABRiolet Inc., Beijing, China)

The unmanned aerial vehicle (UAV) footage used to detect residential building clusters was collected in Kaihua, Zhejiang Province, and in urban areas of Guizhou Province, China; example photos are shown in Figure 1. The dataset is in PASCAL VOC format and contains 492 images. Expert teams from Zhejiang Provincial Construction Engineering Quality Inspection Station Co., Ltd., Hangzhou, P. R. China and Zhejiang Dahuo Inspection Co., Ltd, Hangzhou, P. R. China used a labeling tool to annotate the residential buildings with two classes, Civil Architecture (C) and Industrial Architecture (I). During labeling, boxes of 70 × 70 and 50 × 50 were used for the C and I classes, respectively, with 10,500 targets labeled for each class. These datasets were used in the experiments conducted in this project.
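For readers unfamiliar with the PASCAL VOC layout mentioned above, the following minimal sketch parses one annotation and counts the labeled targets per class. The file name, image size, box coordinates, and the class labels `C` and `I` as they would appear in the XML are hypothetical; only the general VOC structure (`object`, `name`, `bndbox`) is assumed.

```python
import xml.etree.ElementTree as ET
from collections import Counter

# Hypothetical annotation in PASCAL VOC layout (not taken from the dataset).
SAMPLE_XML = """<annotation>
  <filename>uav_0001.jpg</filename>
  <size><width>1920</width><height>1080</height><depth>3</depth></size>
  <object>
    <name>C</name>
    <bndbox><xmin>100</xmin><ymin>200</ymin><xmax>170</xmax><ymax>270</ymax></bndbox>
  </object>
  <object>
    <name>I</name>
    <bndbox><xmin>400</xmin><ymin>300</ymin><xmax>450</xmax><ymax>350</ymax></bndbox>
  </object>
</annotation>"""


def parse_voc(xml_text):
    """Return a list of (class_name, (xmin, ymin, xmax, ymax)) tuples."""
    root = ET.fromstring(xml_text)
    boxes = []
    for obj in root.iter("object"):
        bndbox = obj.find("bndbox")
        coords = tuple(
            int(bndbox.find(tag).text) for tag in ("xmin", "ymin", "xmax", "ymax")
        )
        boxes.append((obj.find("name").text, coords))
    return boxes


boxes = parse_voc(SAMPLE_XML)
class_counts = Counter(name for name, _ in boxes)
# Width and height of each box, to check against the expected label sizes.
box_sizes = [(name, x2 - x1, y2 - y1) for name, (x1, y1, x2, y2) in boxes]
```

Running the same loop over every annotation file would give the per-class target totals and allow the 70 × 70 and 50 × 50 box sizes to be verified.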

Figure 1.

Examples of UAV photos and videos obtained in the survey.

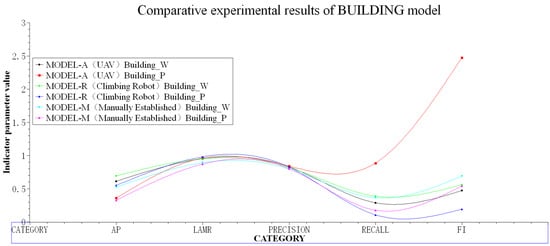

1.1. DasViewer Model

Figure 2 shows the overall detection block diagram of the DasViewer model [,,,]. DasViewer (ZhiYing R10) is professional building modeling and analysis software developed in China, capable of automatic data processing, 3D modeling, image feature extraction, and analysis (instruction manual: https://www.daspatial.com.cn/dasviewer, accessed on 1 January 2024). By combining the DasViewer model with advanced target detection technology, we propose an efficient and accurate method for detecting and identifying buildings that makes full use of the broad field of view and the detailed information obtained from high-altitude photography. As shown in Figure 2, the attention mechanism calculates attention weights that sharpen the focus on individual buildings, which addresses target occlusion and visual confusion and improves the accuracy of single-building detection in drone aerial photos. The aerial photos are enhanced to improve the clarity and contrast of building boundaries, which reduces the impact of complex environments and changing lighting on detection. Adaptive pooling is adopted to improve the spatial resolution of detection so that smaller building details and boundaries are captured. Furthermore, the SMU activation function is used to suppress background noise and enhance building edge details, which improves clarity and stability. The platform is equipped with a high-definition visible light camera and an infrared thermal imager (Beijing Zhibolian Technology Co. Ltd, Beijing, China), as shown in Figure 1. The high-definition camera has a resolution of up to 40 million pixels and can conduct a high-precision inspection of the exterior walls of buildings from a distance of 10 m. The infrared thermal imager captures hidden hazards that are invisible to the naked eye by measuring the wall temperature associated with sunlight exposure.
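The text does not give the form of the SMU activation. The sketch below assumes the commonly published Smooth Maximum Unit form, a smooth approximation of max(x, αx) obtained by replacing |z| with z·erf(μz). The function name `smu` and the default values of `alpha` and `mu` are illustrative assumptions, not settings reported in this study.

```python
import math


def smu(x, alpha=0.25, mu=1.0):
    """Smooth Maximum Unit (assumed form): smooth version of max(x, alpha * x).

    Uses max(a, b) = ((a + b) + |a - b|) / 2 with |z| approximated by z * erf(mu * z).
    alpha and mu are illustrative defaults, not values from the paper.
    """
    return 0.5 * ((1 + alpha) * x + (1 - alpha) * x * math.erf(mu * (1 - alpha) * x))


# With a very large mu the function approaches a leaky ReLU with slope alpha.
positive_limit = smu(2.0, alpha=0.25, mu=1e6)    # close to 2.0
negative_limit = smu(-2.0, alpha=0.25, mu=1e6)   # close to -0.5
```

With a smaller `mu` the transition around zero is smoother, so weak negative responses are attenuated gradually rather than clipped.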

Figure 2.

DasViewer diagrammatic figure.

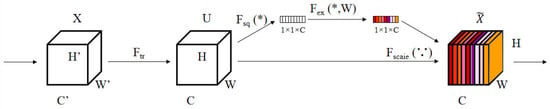

1.2. Technology Acceptance Model (TAM) Attention Mechanism

The attention mechanism was first proposed and used in natural language processing and machine translation for aligning text, where it achieved good results. In computer vision, researchers have explored using attention in convolutional neural networks to improve network performance. The basic principle is simple: in each layer of the network, different features, whether different channels or different spatial positions, differ in importance. Subsequent layers should attend to the important information and suppress the unimportant information (Figure 3, quoted from the 2017 SENet [Squeeze-and-Excitation Network]). For example, in gender classification, greater emphasis should be placed on features such as hair length, which are more indicative of gender, than on features such as waist width and height-to-head ratio, which are less relevant [,].

Figure 3.

Algorithm flowchart of the technology acceptance model attention mechanism.

A flowchart of TAM is shown in Figure 4. The TAM is a triplet attention mechanism consisting of three parallel branches that calculate attention weights between different dimensions to capture dependencies across the channel and spatial dimensions. The first two branches use rotation operations to capture cross-dimensional interactions between the channel dimension and each spatial dimension, while the third branch is similar to a convolutional attention module and builds spatial attention. The outputs of the three branches are aggregated through simple averaging. The model is suited to processing images captured by a UAV or climbing robot: it can focus precisely on the nodes and other targets of buildings, improving detection performance against complex backgrounds and from high-altitude perspectives.
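The three-branch structure described above can be sketched with NumPy as follows. This is a simplified illustration under stated assumptions: the convolution that a real attention gate would apply to the pooled maps is replaced by a hypothetical 1 × 1 weighted mix (`w_max`, `w_mean`, `bias`), and all function names and weights are placeholders rather than the trained block used in this work.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def z_pool(x):
    """Stack max and mean over the first axis: (D, A, B) -> (2, A, B)."""
    return np.stack([x.max(axis=0), x.mean(axis=0)])


def gate(x, weights):
    """Reweight x with an attention map over its last two axes.

    `weights` = (w_max, w_mean, bias) is a 1x1 mix standing in for the
    convolution of a full implementation (simplifying assumption).
    """
    w_max, w_mean, bias = weights
    pooled = z_pool(x)
    attn = sigmoid(w_max * pooled[0] + w_mean * pooled[1] + bias)  # shape (A, B)
    return x * attn  # broadcast over the pooled (first) axis


def triplet_attention(x, w_cw, w_ch, w_hw):
    """x has shape (C, H, W); returns the average of the three branches."""
    # Branch 1: rotate so H is pooled, giving channel-width interaction.
    b1 = gate(x.transpose(1, 0, 2), w_cw).transpose(1, 0, 2)
    # Branch 2: rotate so W is pooled, giving channel-height interaction.
    b2 = gate(x.transpose(2, 1, 0), w_ch).transpose(2, 1, 0)
    # Branch 3: pool over C, giving plain spatial (H, W) attention.
    b3 = gate(x, w_hw)
    return (b1 + b2 + b3) / 3.0


x = np.random.default_rng(0).normal(size=(4, 5, 6))   # toy feature map
w = (1.0, 1.0, 0.0)                                    # placeholder weights
out = triplet_attention(x, w, w, w)
```

Because each branch multiplies the input by weights between 0 and 1, the output keeps the input shape and can only attenuate features, which is the suppression behavior the attention principle relies on.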

Figure 4.

Flowchart of the technology acceptance model.

1.3. Identification Parameters

1.3.1. Parameter: Preliminary Inspection of Current Structural Condition [,,]

Using a UAV and a stair-climbing robot equipped with infrared imaging devices, the building structure was investigated. Relevant data and information about its expansion, renovation, and usage history were obtained and preliminarily analyzed.

1.3.2. Parameter: Structural Material Strength Testing [,]

Testing the strength of a structural material generates a significant rebound force: the maximum value measured by a common rebound tester (Beijing Zhibolian Technology Co. Ltd, Beijing, China) reaches 60 MPa, and, according to the specification, the final value calculated after correction for carbonation depth also reached 60 MPa. The resulting reaction force is large relative to the weight of the UAV and the stair-climbing robot. At the same time, the detection requirements for this parameter are relatively high: the test must be performed horizontally or vertically, in full contact with the test surface. Therefore, after several attempts, we decided to use an intelligent device to complete the process.

1.3.3. Parameter: Component Cross-Sectional Size Inspection [,,,,,]

This parameter was detected and investigated through point cloud scanning using infrared imaging equipment carried by the UAV and stair-climbing robot. Under good lighting conditions, the collected data were accurate and effective, with the best precision obtained for individual components.

1.3.4. Parameter: Inspection of Reinforcement Configuration []

This parameter was detected and investigated using a UAV and a stair-climbing robot equipped with ultrasonic steel bar scanning devices. Based on the principle that ultrasonic waves propagate at different speeds in concrete and in steel bars, a steel bar layout map was generated, and the position of the main steel bars in the structure was accurately located.

1.3.5. Parameter: Appearance Quality Inspection [,,]

A UAV and a stair-climbing robot were employed to comprehensively detect and investigate defects and cracks using ultrasonic concrete flaw detectors and crack detection devices. Based on the principle that ultrasonic waves propagate at different speeds in concrete and in air, the wave speed measured across a suspected defect was compared with the wave speed of the concrete as a whole and entered into the calculation formula. This enabled defect conditions to be accurately identified and the width and depth of cracks to be measured.
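The calculation formula is not stated in the text. As a hedged illustration of how a wave-speed comparison can yield a crack depth, the sketch below assumes one common time-of-flight geometry: two transducers placed symmetrically across a surface-opening crack, with the pulse diffracting around the crack tip so that the path length is 2·sqrt((l/2)² + h²) = v·t. The function names, units, and sample values are assumptions and may differ from the procedure actually used.

```python
import math


def wave_speed(distance_mm, transit_time_us):
    """Pulse velocity in mm/us (numerically equal to km/s) in sound concrete."""
    return distance_mm / transit_time_us


def crack_depth(spacing_mm, transit_time_us, sound_speed):
    """Estimate crack depth h (mm) from a reading taken across the crack.

    Assumes the crack lies midway between the transducers and the pulse
    travels around its tip: v * t = 2 * sqrt((l / 2)**2 + h**2).
    """
    ratio = sound_speed * transit_time_us / spacing_mm
    if ratio <= 1.0:
        # No extra delay relative to sound concrete: no crack depth detected.
        return 0.0
    return 0.5 * spacing_mm * math.sqrt(ratio ** 2 - 1.0)


# Hypothetical readings: 200 mm spacing, reference reading on sound concrete.
v_sound = wave_speed(200.0, 50.0)                  # 4.0 mm/us
depth = crack_depth(200.0, 62.5, v_sound)          # delayed pulse across the crack
```

The longer the transit time across the crack relative to sound concrete, the deeper the estimated crack.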

1.3.6. Parameter: Calculation of Structural Seismic Bearing Capacity []

YJK software (structural modeling software, YCAD) was used to conduct a structural bearing capacity calculation of the entire building and obtain a corresponding structural model and calculation data. The calculations were conducted in accordance with the GB 50011 standard [,] of the Ministry of Housing and Urban–Rural Development of the People’s Republic of China, and the final value of the structural seismic bearing capacity was analyzed.

The equipment used to determine the above parameters is shown in Figure 5.

Figure 5.

Images of the main equipment used in identification and testing.

2. Evaluation Indicators

Average precision (AP) is a metric commonly used to evaluate the performance of object detection algorithms. The value of AP is the area enclosed by the Precision–Recall (PR) curve and the x- and y-axes. The mean average precision (M-AP) is used when there are multiple object detection categories; it is obtained by summing the AP values of all categories and dividing by the number of categories.
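A minimal sketch of these two definitions is given below. It integrates the PR curve with the trapezoidal rule, which is one simple choice; detection benchmarks often use interpolated variants instead, and the text does not say which was applied here. The function names and the sample AP values are hypothetical.

```python
def average_precision(recalls, precisions):
    """Area under the PR curve (recall on x, precision on y), trapezoidal rule."""
    points = sorted(zip(recalls, precisions))
    if not points:
        return 0.0
    # Extend the curve horizontally from recall 0 to the first measured point.
    prev_recall, prev_precision = 0.0, points[0][1]
    area = 0.0
    for recall, precision in points:
        area += (recall - prev_recall) * (precision + prev_precision) / 2.0
        prev_recall, prev_precision = recall, precision
    return area


def mean_average_precision(ap_by_class):
    """Sum the per-class AP values and divide by the number of classes."""
    return sum(ap_by_class.values()) / len(ap_by_class)


ap_example = average_precision([0.5, 1.0], [1.0, 0.5])
# Hypothetical per-class AP values, for illustration only.
map_example = mean_average_precision({"Building_W": 0.8, "Building_P": 0.6})
```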

The model was evaluated on the test set, and the Precision and Recall corresponding to each predicted bounding box were calculated. In simple terms, each image in the test dataset was sent in turn to the object detector [,], and the corresponding prediction results were obtained. Each predicted object was then traversed in the order in which it was obtained, and the current Precision and Recall were calculated for each predicted bounding box. The formulas used to calculate Precision and Recall are as follows:

$$\text{Precision} = \frac{TP}{TP + FP}$$

$$\text{Recall} = \frac{TP}{TP + FN} = \frac{TP}{GP}$$

where TP represents the number of correctly predicted targets, FP represents the number of incorrectly predicted targets, FN represents the number of targets that were not detected, and GP represents the total number of actual targets (all ground truths).
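The Precision and Recall definitions above, together with the F1 value reported in the results, can be sketched as follows. The second function mirrors the described traversal, updating Precision and Recall after each predicted box. The function names and the sample counts are hypothetical.

```python
def precision_recall_f1(tp, fp, fn):
    """Precision, Recall, and their harmonic mean (F1) from raw counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


def running_precision_recall(is_true_positive, num_ground_truth):
    """Traverse predictions in order; return (precision, recall) after each one."""
    curve = []
    tp = fp = 0
    for hit in is_true_positive:
        if hit:
            tp += 1
        else:
            fp += 1
        curve.append((tp / (tp + fp), tp / num_ground_truth))
    return curve


# Hypothetical counts: 8 correct boxes, 2 false alarms, 8 missed targets.
p, r, f1 = precision_recall_f1(tp=8, fp=2, fn=8)
curve = running_precision_recall([True, False, True], num_ground_truth=4)
```

The list returned by `running_precision_recall` is the set of points from which a PR curve, and hence AP, is built.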

3. Comparative Analysis of Results

3.1. Comparison and Analysis of Experimental Results

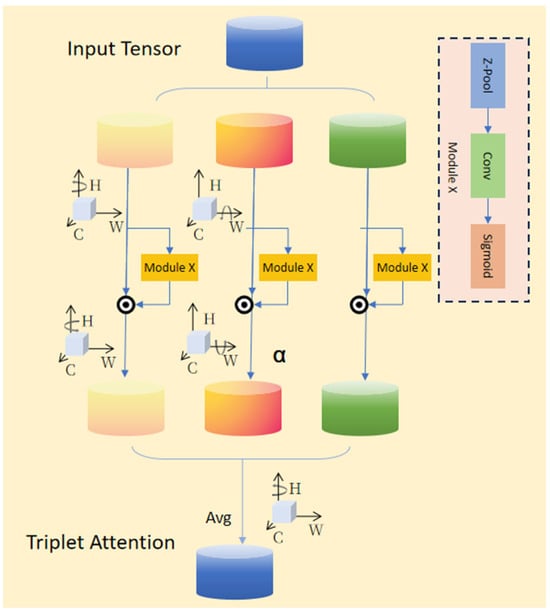

3.1.1. Comparison Between Image Collection Modeling and Modeling After Manual Image Collection

Through in-depth analysis and processing of the images captured by the UAV and the stair-climbing robot, the performance indicators of UAV, stair-climbing robot, and manual image collection were compared for the Building_W and Building_P categories; the results are presented in the line chart in Figure 6. The indicators were AP, LAMR, Precision, Recall, and F1 value, which together reflect the performance of the three models in the target detection task. The models were created with the acquisition methods MODEL-A, MODEL-R, and MODEL-M []. All of them are based on deep learning target detection algorithms, and each has its own characteristics and advantages.

Figure 6.

Line chart showing the comparative experiment results of the three models.

The detection performances of the UAV and the stair-climbing robot on the aerial images of the Building_W and Building_P categories are shown in Table 1 []. For the Building_W category, the MODEL series generally exhibited high Precision, ranging from 79.52% to 83.89%, which demonstrates that the models identify buildings reliably. Recall was relatively low, indicating that the models may miss some buildings. The F1 value, the harmonic mean of Precision and Recall, ranged from 0.47 to 0.69, indicating a relatively balanced overall performance. The Building_P model provided a high performance, and its AP and F1 values were at the leading level. For the Building_P category, on the other hand, the detection difficulty increased significantly. Despite lighting issues, the MODEL series retained some detection capability. MODEL-A and MODEL-R performed particularly well in model establishment and collection: measured against MODEL-M as the basic level, their AP and F1 values were higher.

Table 1.

Comparative experiment results of the three models.

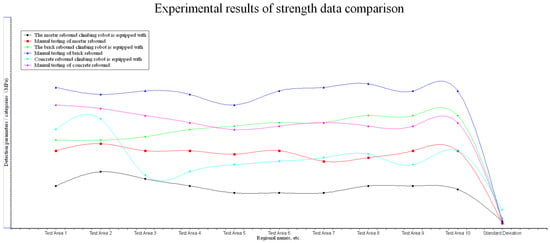

3.1.2. Comparison Between Strength Data Collection and Manual Collection of Strength Data

The strength data collection module is shown in Figure 7, which presents the results of the different detection parameters collected when the stair-climbing robot was equipped with the device. These parameters were mortar rebound (mortar strength detection), brick rebound (brick strength detection), and concrete rebound (compressive strength detection of concrete components), each compared with the manual test. They were chosen to enhance the ability of the stair-climbing robot to collect data of different scales and types during operation, thereby optimizing target detection and collection performance. The mechanism of the stair-climbing robot performed particularly well overall: it was able to integrate different types of information at different scales and to help the device accurately capture key targets during data collection []. At the same time, through adaptive adjustment of weight allocation, the stair-climbing robot was able to focus more on important features (such as high-position components), further improving the accuracy and stability of target detection.

Figure 7.

Line chart comparing strength data collection.

All tests were conducted strictly in accordance with the current national standards. The climbing robot was fitted with the various devices and applied the mechanical rebound force to vertical components in the same horizontal direction. Data were collected during operation, and operational performance was significantly improved []. In particular, the equipment carried by the climbing robot performed very well when the robot was working above the ergonomic position. The deviation between detection values of the same type was between 1.619 and 5.095, as shown in Table 2. The standard deviation of the values detected by the robot-carried equipment was greater than that of manual detection, and the quality of the collected detection data was also relatively low. This indicates that more experiments are required to improve the stability and effectiveness of the intelligent detection performed by the robot-carried equipment, or of the final estimated value.
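The comparison of deviation and standard deviation described above can be sketched as follows. The readings are hypothetical values chosen only to show the calculation and are not the data in Table 2; the function and variable names are likewise assumptions.

```python
from statistics import mean, stdev


def summarize(readings):
    """Mean and sample standard deviation of one set of rebound readings."""
    return {"mean": mean(readings), "std": stdev(readings)}


# Hypothetical rebound readings on the same component (not from Table 2).
robot_readings = [38.0, 42.0, 36.0, 46.0, 40.0]
manual_readings = [39.0, 41.0, 40.0, 42.0, 38.0]

robot = summarize(robot_readings)
manual = summarize(manual_readings)

# Deviation between the two collection methods for the same detection type.
mean_deviation = abs(robot["mean"] - manual["mean"])
# A larger standard deviation indicates less stable readings.
robot_less_stable = robot["std"] > manual["std"]
```

Averaging repeated readings, as the outlook in this paper suggests, reduces the spread of the mean roughly in proportion to the square root of the number of readings, provided the readings are independent.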

Table 2.

Comparison of strength data collection experiment performances.

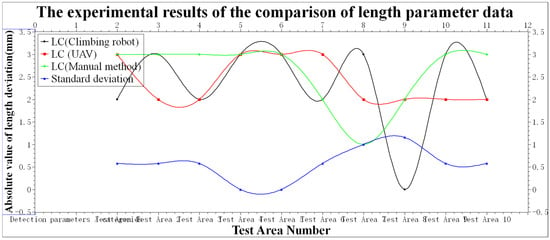

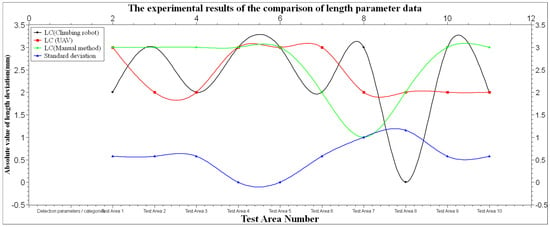

3.1.3. Collection and Comparison of Other Parameters with Manually Collected Data of Component Dimensions and Crack Widths

A comparison of the detection parameters is shown in Figure 8. Here, the results obtained from data collected by UAVs or climbing robots were compared with those of mainstream manual detection methods. The point-line graph clearly shows the differences in performance among the detection types. Because UAV images usually contain complex background information, multi-scale targets, and the special visual features of high-altitude perspectives, selecting appropriate detection components is particularly important. As the figure shows, the UAV and climbing robot demonstrated higher performance in building detection tasks than the manual methods. This was mainly due to their advantage in collecting real-time and accurate infrared light [], as well as the optimizations made for the characteristics of aerial photography images. The UAV and climbing robot also performed better when identifying and detecting the quality of building exteriors. This indicates that mounting an infrared light instrument directly on a UAV or climbing robot further enhances the image processing capability of these technologies in detection and identification.

Figure 8.

Line chart comparing length parameter data collection.

As shown in Table 3, there were no significant differences between the detection methods in measuring component size and crack width. Specifically, the crack values detected by the equipment carried by the UAV were 18.50% higher than those obtained manually, while the values detected by the equipment carried by the robot were 25.58% higher than those obtained manually. In addition, the equipment carried by the UAV and robot performed well on key indicators such as crack depth and size detection, ensuring the accuracy and completeness of target detection []. Both detection methods also performed strongly in terms of annotation categories and flexible operation. These characteristics enabled the UAV and stair-climbing robot to perform well in detection tasks, providing an effective solution for related applications.
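Percentages such as those above express how much a device-based value exceeds the manual value, relative to the manual value. A minimal sketch of that arithmetic follows; the crack widths are hypothetical and are not taken from Table 3.

```python
def percent_difference(measured, reference):
    """Relative difference of `measured` from `reference`, in percent."""
    return (measured - reference) / reference * 100.0


# Hypothetical crack widths in mm (illustration only, not Table 3 data).
manual_width = 0.40
device_width = 0.46
difference = percent_difference(device_width, manual_width)
```

A positive result means the device reading is larger than the manual reading; a negative result means it is smaller.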

Table 3.

Comparison of other parameter data collected.

3.2. Technology Application

The experimental results for the detection task completed by the two machines are shown in Figures 6–9, which give detailed comparisons of the models and their performance with respect to strength parameters, images, size parameters, and crack width, together with their practical effectiveness during identification and detection. The UAV and climbing robot differ in how they process images and data, and there is room to improve the strength parameters, details, and accuracy. Both the UAV and climbing robot performed particularly well in identifying parameters in old buildings, especially in cases where no original design drawings were available for modeling []. The resulting model not only accurately detected the center position of buildings and components, but also showed strong applicability to diverse shapes and visually confusing objects. However, in some complex scenarios, such as high building density or poor lighting or wind conditions, applicability remained a challenge.

Figure 9.

Line graph showing comparison of crack parameter data collected.

4. Conclusions and Outlook

We applied a UAV and stair-climbing robot to improve identification technology and achieved significant improvements in building identification detection tasks during image collection and model establishment. Through their application, the problems of target occlusion and visual confusion were effectively handled. Through comparative tests, deviations of all parameters were found to be within the ideal range. Improvements were observed in adapting to morphological changes and varying scene backgrounds during size and crack depth detection. Use of a UAV and stair-climbing robot enabled detailed features to be detected, further improving the detection and identification effect. At the same time, the application of infrared scanning technology improved the expression ability, generalization ability, and applicability of the neural network. The experimental results showed that the overall detection efficiency increased by more than 30%. The efficiency of drawing restoration was also greatly improved, with the workload reduced from 3 people and 7 days to 1 person and 7 days. These results verify the effectiveness and superiority of the new identification method in detection and identification application tasks [].

In future research, we will address several critical challenges identified during our field deployments. Specifically, we will explore potential improvements such as optimizing the force control algorithm of the UAV and stair-climbing robot, adding a shock absorber, or taking multiple measurements and averaging them to reduce variability.

To address these limitations, we will explore hybrid approaches combining advanced attention mechanisms with reinforcement learning methods specifically optimized for resource-constrained operation. This includes developing adaptive detection models that dynamically balance precision and recall based on remaining battery life and mission priorities. Furthermore, we will investigate tight integration between real-time monitoring drones and climbing robots to create synergistic systems where each platform compensates for the other’s operational weaknesses, enabling more efficient large-scale structural assessment while maintaining detection accuracy across diverse urban environments.

By explicitly addressing these performance–operation tradeoffs, we aim to develop more robust and practical solutions for urban renewal applications, ultimately advancing toward fully autonomous building inspection systems capable of precise, high-quality structural assessment under real-world constraints.

Author Contributions

Conceptualization, Z.S. and R.W.; methodology, Z.S.; software, W.L.; validation, W.L. and W.W.; formal analysis, W.W.; investigation, W.L.; resources, Z.S.; data curation, R.W.; writing—original draft preparation, Z.S. and L.W.; writing—review and editing, R.W.; visualization, W.L.; supervision, W.W.; project administration, Z.S. and L.W.; funding acquisition, W.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the 2024 Construction Research Project of Zhejiang Provincial Department of Housing and Urban–Rural Development, via grant number [2024K354]. The APC was funded by the Supply and Demand Connection Employment Education Project of the Ministry of Education (School–Enterprise Cooperation Project in the Field of New Intelligent Detection Technology of Zhejiang Dahe Detection Co., Ltd., 2025071822739).

Data Availability Statement

The original contributions of this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

Author Wenhao Lu was employed by the company Zhejiang Dahe Testing Co., Ltd. Author Wei Wang was employed by the company Zhejiang Provincial Construction Engineering Quality Inspection Station Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest. The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper. The authors declare that this study received funding from Zhejiang Dahe Testing Co., Ltd. and Zhejiang Construction Engineering Quality Inspection Station Co., Ltd. The funder was not involved in the study design, collection, analysis, interpretation of data, the writing of this article or the decision to submit it for publication.

References

- Xin, W. Research on the Application of Unmanned Aerial Vehicle Technology in Residential Building Surveying. Hous. Living 2025, 10, 169–172.

- Ye, C. Research on the Application of Unmanned Aerial Vehicle Aerial Surveying Technology in Engineering Measurement. China Equip. Eng. 2025, 8, 219–221.

- Zhu, Y.; Li, Y.; Zhang, X.; Zhang, W. Urban Airspace Unmanned Aerial Vehicle Safety Path Planning Based on Risk Constraints. J. Beijing Univ. Aeronaut. Astronaut. 2025, 12.

- Wu, K.; Bai, C.; Du, J.; Zhang, H.; Bai, X. Detection Model and Method for Corn Plant Center in Unmanned Aerial Vehicle Images. Comput. Eng. Appl. 2025, 61, 324–336.

- Hu, J.; Zhou, M.; Shen, F. Improved Detection Algorithm for Small Targets in Unmanned Aerial Vehicle Based on RTDETR. Comput. Eng. Appl. 2024, 60, 198–206.

- Zhang, W. Application of Unmanned Aerial Vehicle Technology in Building Deformation Monitoring. Sci. Technol. Innov. 2025, 7, 181–184.

- Liu, B.; Yin, X.; Sun, C.; Ge, H.; Wei, Z.; Jiang, Y.; Yang, X. Research on 3D Scene Navigation Method for Unmanned Aerial Vehicles Based on Deep Reinforcement Learning. J. Graph. 2025, 46, 1010–1017.

- Fang, Z. Research on the Principle and Function of Human-like Gait Climbing Robot Mechanism. Master’s Thesis, South China University of Technology, Guangzhou, China, 2022.

- Hou, J.; Xu, B.; Liu, S. Application and Analysis of Using Unmanned Aerial Vehicle to Conduct Exterior Wall Leakage Detection by High-Definition Visible Light and Infrared Thermal Imaging Method. China Build. Waterproofing 2024, 6, 39–42.

- Li, X.; Li, X.; Zhang, J.; Zheng, P. Structural Safety Detection and Analysis of Buildings after Fire. Qual. Eng. 2024, 42 (Suppl. S1), 35–41.

- Jia, L.; Lin, M.; Qi, L.; Tan, J. Research on Multi-Scale Target Detection for Unmanned Aerial Vehicle Images. Semicond. Optoelectron. 2024, 45, 501–507+514.

- Li, J. Research on Gait Planning and Control of Human-like Robot Climbing. Master’s Thesis, Qingdao University of Technology, Qingdao, China, 2018.

- Yuan, X.; Zhang, Y.; Kang, J.; Zhang, S. Application of Unmanned Aerial Vehicle Intelligent Inspection in New Energy Power Station. Hydropower Stn. Electr. Equip. Technol. 2024, 47, 59–61.

- Xu, J.H.; Xie, P. Key Points for Safety Assessment of Concrete Structure Buildings. J. Wuhu Vocat. Tech. Coll. 2023, 25, 39–43.

- Zhao, T. An Example of Structural Inspection and Reinforcement Treatment for a Kindergarten. Shanxi Archit. 2023, 49, 71–74.

- Liu, G. Design Research on Safety Operation System for Substations Based on Inspection Robots. Light Light. 2025, 49, 142–145.

- Fan, B.; Wang, X.; Shan, C.; Chen, G.; Lin, H. Application of Unmanned Aerial Vehicle in Pine Wood Nematode Disease Monitoring. Agric. Sci. Technol. Innov. 2025, 5, 57–59.

- Zhong, S.; Wang, L. MCS-RETR: An Improved UAV Aerial Image Target Detection Method Based on RT-DETR. J. Aeronaut. 2025, 13. Available online: http://kns.cnki.net/kcms/detail/11.1929.v.20250428.1511.004.html (accessed on 17 October 2025).

- Chen, X. Application of UAV Image Processing Technology in Surveying Engineering. Eng. Constr. Des. 2025, 7, 189–191.

- Zhou, K. Technical Application Points of UAV in Residential Building Construction Surveying. Hous. Resid. 2025, 7, 169–172.

- GB 50011–2010; Partial Revised Provisions of “Code for Seismic Design of Buildings” (2016 Edition) (2024 Edition). Engineering Standards in Construction. Ministry of Housing and Urban-Rural Development of China: Beijing, China, 2010; pp. 72–79.

- Xiao, C.; Yuan, H. Research on Building Exterior Wall Leakage and Hollowing Detection Based on Infrared Thermal Imaging Technology. N. Archit. 2025, 10, 70–73.

- Raju, S.S.; Sagar, M.; Shashikant, G.B. Adoption of Drone Technology in Construction—A Study on Interaction between Various Challenges. World J. Eng. 2025, 22, 128–140.

- Yiğit, Y.A.; Uysal, M. Automatic Crack Detection and Structural Inspection of Cultural Heritage Buildings Using UAV Photogrammetry and Digital Twin Technology. J. Build. Eng. 2024, 94, 109952.

- Cağatay, T.; Yeşim, Z.İ. Trends in Flying Robot Technology (Drone) in the Construction Industry: A Review. Archit. Civ. Eng. Environ. 2023, 16, 47–68.

- Elghaish, F.; Matarneh, S.; Talebi, S.; Kagioglou, M.; Hosseini, M.R.; Abrishami, S. Toward Digitalization in the Construction Industry with Immersive and Drone Technologies: A Critical Literature Review. Smart Sustain. Built Environ. 2020, 10, 345–363.

- Ruan, X. Research on Autonomous Obstacle Crossing of Unmanned Intelligent Morphing Vehicle Based on Vision. Master’s Thesis, Hefei University of Technology, Hefei, China, 2023.

- Ding, H. New Findings from Xuzhou University of Technology in the Area of Robotics Described (Stairs-climbing Capacity of a W-shaped Track Robot). J. Robot. Mach. Learn. 2019, 22, 128–286.

- Zhang, B.; Wu, S.; Chen, Y. Application Difficulties and Optimization Measures of Thermal Imaging Technology in House Hollowing Detection. Real Estate World 2024, 24, 152.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).