Abstract

The traditional inference of thermal comfort relies mainly on questionnaire surveys or invasive physiological signal monitoring. However, these methods have limited real-time capability and low accuracy, and they can interfere with the daily work and life of indoor occupants. With the development of intelligent building technology, non-intrusive techniques based on video analysis have gradually become a research hotspot. Such techniques not only avoid the limitations of traditional methods but can also monitor thermal comfort dynamically. At present, the established and relatively complete non-intrusive recognition methods usually rely on additional equipment or cameras with specific viewing angles, which limits their deployment in a wider range of scenarios. Therefore, to improve the accuracy of non-intrusive prediction of the thermal comfort of indoor occupants, it is necessary to establish a non-intrusive thermal comfort inference model. This study designed a thermal adaptive behavior-recognition model based on cross-modal knowledge distillation. To avoid the terminal-deployment difficulties caused by large models and the time-consuming nature of optical flow estimation, a multi-teacher network was used to transfer the knowledge of different modalities to a single student model. This reduced the number of model parameters and the computational complexity while improving the recognition accuracy. The experimental results show that the proposed vision-based thermal adaptive behavior-recognition model can identify the thermal adaptive behavior of indoor occupants non-intrusively and accurately, which can both improve the comfort of indoor environments and enable the intelligent adjustment of HVAC systems.

1. Introduction

The efficient operation of heating, ventilation, and air conditioning (HVAC) systems, the core technology of building environment control, is important not only for energy use but also because it directly affects the thermal comfort of occupants [1], with a significant impact on their health, learning, and work efficiency. With increasing urbanization, people now spend more than 90% of their time indoors, which makes the regulation of indoor thermal environments an important factor affecting health and well-being [2]. Previous studies have shown that excessive indoor temperatures or improper regulation can cause a variety of health problems, such as diabetes, cardiovascular diseases, and respiratory diseases [3,4,5]. Therefore, improving the thermal comfort of occupants in thermal zones while optimizing the operational efficiency of HVAC systems to reduce energy consumption is a problem that the construction industry must solve.

Researchers worldwide are currently conducting comprehensive and detailed research on human thermal comfort in indoor building environments. The traditional intrusive measurement methods mainly include questionnaires, physiological measurements, and environmental measurements, each of which has specific application scenarios and limitations in its ability to assess thermal comfort. Questionnaire surveys are the most direct way to obtain the psychological state of indoor occupants regarding a building's thermal environment. Usually, a paper questionnaire is distributed and the respondents are asked to answer the questions. By analyzing questionnaire results, Fanger proposed two classical environmental satisfaction indices: the predicted mean vote (PMV) and the predicted percentage dissatisfied (PPD). These indices provide important data support and lay a theoretical foundation for thermal comfort research. In recent years, researchers have continuously refined this theoretical model and have conducted extensive research on extending and improving the PMV-PPD index [6,7]. With the development of electronic devices, researchers have begun to adopt electronic questionnaires. For example, Zagreus et al. proposed a computer-based electronic questionnaire method [8], and Sanguinetti et al. developed a thermal sensation feedback system based on a mobile phone application [9]. Although these tools improve the efficiency of feedback collection, they still require participants to interrupt their activities frequently to report their thermal comfort, which makes them difficult to adopt widely in real buildings. In addition, as the number of survey participants increases, the workload of information collection and analysis increases significantly.
More importantly, this method suffers from a time lag: a long period elapses between residents completing the questionnaire and the completion of the analysis, and the indoor environment may change during this period. Therefore, it is difficult to determine the thermal comfort level of indoor occupants in real time.

Physiological measurements obtain accurate physiological data through direct contact with the human body or through implanted devices, allowing thermal comfort to be evaluated scientifically. Commonly measured physiological indicators include skin temperature, heart rate, and perspiration. In early studies, invasive sensors such as cabled thermocouples and thermistors were widely used, and their high accuracy and reliability provided strong support for research. However, the connecting cables limit the convenience of such devices. With the continuous progress of high-precision invasive sensor technology, thermal comfort research based on electroencephalography (EEG) has become a frontier direction in this field [10]. Wang et al. used EEG to analyze in detail the brain activity patterns of 15 office workers in different thermal environments, revealing the significant influence of temperature changes on brain workload; this provided an important scientific basis for optimizing the indoor thermal environment [11]. Similarly, Wu et al. conducted an experiment with 22 college students and identified thermal sensations from EEG signals. Their results showed that EEG can accurately identify people's comfort levels in different thermal environments, offering a new research approach for improving indoor thermal environments [12]. Although physiological sensors can obtain high-precision data, their invasive nature may interfere with the comfort of the subjects; thus, their large-scale or long-term use still faces many challenges.

With the development of Internet of Things technology, sensors can be integrated into wearable devices, which are gradually replacing traditional dedicated devices for collecting human physiological parameters [13,14]. For example, Ghahramani et al. integrated infrared sensors into eyeglasses to measure the skin temperature of the face, cheekbones, nose, and ears; these measurements were used to assess human thermal comfort and to regulate heating and cooling in buildings [15]. Li et al. used a smart bracelet to measure wrist temperature and heart rate in order to monitor and evaluate the thermal state of the human body, particularly for online monitoring under different activity states [16]. Fan et al. proposed a thermal comfort detection method based on inertial measurement unit (IMU) data collected by wearable devices, which identified thermal comfort-related activities in real time using convolutional neural networks [17]. Collecting physiological data through wearable devices with integrated sensors reduces the limitations imposed by cables and enables dynamic assessment of thermal sensation. However, since not all indoor occupants are willing or able to wear such devices, their adoption rate is low and their practical application still faces challenges.

Early non-intrusive approaches concentrated on the relationship between the indoor physical environment and thermal comfort [18] and on the use of images or videos to measure thermal comfort, with the goal of associating image features with changes in thermal conditions [19]. Environmental methods use a variety of sensors to measure physical parameters of the thermal environment, such as air temperature, humidity, air velocity, and radiant temperature. These methods do not collect physiological signals directly from occupants; instead, they use supervised learning and other algorithms to correlate changes in the environmental parameters with occupant feedback and then assess thermal comfort [20]. Environmental measurements can characterize the physical thermal environment and, on this basis, assess the thermal state of occupants. However, this approach still faces challenges, including differences in location between the sensors and the occupants and the uneven spatial distribution of air temperature and radiation.

With the integration of the Internet of Things (IoT), machine learning (ML), and artificial intelligence (AI) technologies [21], non-invasive methods for measuring human thermal comfort have gradually been developed. These methods require neither direct contact with the human body nor additional equipment; instead, they use surveillance video to observe the behavior and reactions of building occupants and infer their thermal comfort state [22]. They mainly rely on infrared cameras and ordinary cameras, assisted by computer processing. Infrared cameras have been widely used to measure the skin temperature of the face, hands, and other exposed body parts. Burzo et al. manually measured facial temperature with a handheld thermal imager, together with physiological signals, to predict the thermal comfort level of their subjects [23]. Ranjan et al. used a handheld thermal imager to extract the skin temperature of the hands and head to predict heating and cooling demand [24]. Cosma et al. used a programmable thermal imager to automatically extract the skin and clothing temperatures of different body parts to predict individual thermal preferences in real time [25,26]. Although these techniques can effectively assess thermal comfort, they rely on high-precision skin temperature measurements, which may be difficult to obtain with low-cost thermal imaging devices. In particular, the surface radiance, the distance between the camera and the skin, and the internal temperature of the sensor can all affect measurement accuracy.

With the rapid development of computer vision, vision-based skin temperature measurement has been widely applied in thermal comfort research. Eulerian video magnification (EVM) can amplify subtle motion, color, or texture changes in a video, and many researchers have used it to measure human body temperature without contact. For example, Cheng et al. established a relationship between skin saturation and skin temperature based on blood vessel and skin color changes, and used images to extract the facial skin temperature as a feedback signal for an HVAC system [27]. Jung et al. captured head and face images with a camera, applied RGB video magnification to analyze small changes in blood flow, and then extracted the thermal comfort information of building occupants from the video [28]. However, such methods usually require the camera to be close to the human body, which is difficult to achieve in public buildings with many occupants or in complex environments.

In recent years, with the development of intelligent building technology, non-intrusive methods based on video analysis have gradually become the focus of research [29]. Such methods infer the thermal comfort state of occupants by recognizing human posture and behavior, which not only avoids the shortcomings of traditional methods but also enables real-time monitoring of individual thermal adaptive behavior [30,31]. By combining computer vision and artificial intelligence, existing monitoring equipment in buildings can be used to achieve personalized indoor environmental control [32]. Although multi-modal data fusion has achieved good results in thermal adaptive behavior recognition, extracting optical flow and skeleton information from RGB video is computationally very expensive. Such high computing requirements cause the model to consume more resources and time, especially in online scenarios, which results in higher latency. This limits the usefulness of these methods in practical applications with embedded devices and low-latency requirements [33,34]. In addition, multi-modal thermal adaptive behavior-recognition methods are complex because of their large number of parameters, complex network structures, and high-dimensional feature representations. As a result, these models consume substantial computing resources and time during training and inference, which makes them difficult to apply on lightweight devices or under strict real-time requirements [35]. Because of their limited hardware resources, lightweight devices inside buildings (such as intelligent monitoring terminals and embedded systems) cannot support the operation of complex deep learning models.
At the same time, these devices often need to operate for long periods on a limited power supply, so reducing the computational load and energy consumption is particularly important.

Current research focuses on how to reduce model complexity while maintaining behavior-recognition accuracy. There are two main approaches to this problem. The first is to design a lighter network structure that reduces computation and energy consumption by reducing the number of model parameters and the depth or width of the network [36,37]. The second is to use model compression and acceleration techniques, such as pruning and knowledge distillation [38,39], to compress large, complex models into small, efficient models while preserving accuracy as much as possible. Among these methods, knowledge distillation has shown unique advantages as an effective model compression and transfer learning technique. By transferring knowledge from a large teacher model to a small student model, knowledge distillation makes the model lightweight and fast while maintaining high accuracy. In addition, knowledge distillation can exploit the strong representation ability of teacher models to improve the adaptability and generalization ability of student models, overcoming the shortcomings of traditional methods in computational efficiency and adaptability [40]. Therefore, applying knowledge distillation to thermal adaptive behavior recognition may provide an effective solution for lightweight devices and scenarios with high real-time requirements.

In addition, although current thermal adaptation behavior-recognition methods achieve high accuracy, they are usually based on complex network models, which makes terminal deployment difficult. Wang et al. [41] proposed a framework that combines multiple data streams to jointly infer thermal adaptation behaviors. Specifically, this framework includes three pipelines that take RGB frames, optical flow, and keypoint heat maps as inputs. Each pipeline is trained separately on its own data stream. Finally, the recognition results of the three pipelines are combined by weighted averaging to produce the final thermal adaptation behavior-recognition result. The advantage of this approach is that the data streams complement each other, resulting in high accuracy. The cost, however, is that the recognition model consists of three sub-networks with a large number of parameters. Moreover, all three data streams are still required during online testing, and optical flow estimation is well known to be time-consuming.
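The weighted late fusion described above can be sketched as follows, assuming that each pipeline outputs class logits that are converted to probabilities with Softmax before averaging. The function names, the equal weights, and the three-class logits are hypothetical illustrations, not values taken from [41].

```python
import math


def softmax(logits):
    """Numerically stable Softmax over a list of class logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def late_fusion(stream_logits, weights):
    """Weighted average of per-stream class probabilities.

    stream_logits: dict mapping a stream name to its list of class logits.
    weights: dict mapping the same stream names to non-negative weights.
    """
    total_w = sum(weights[name] for name in stream_logits)
    num_classes = len(next(iter(stream_logits.values())))
    fused = [0.0] * num_classes
    for name, logits in stream_logits.items():
        probs = softmax(logits)
        w = weights[name] / total_w  # normalize so the fused scores sum to 1
        for c in range(num_classes):
            fused[c] += w * probs[c]
    return fused


# Hypothetical logits for three behavior classes from the three pipelines.
logits = {
    "rgb": [2.0, 0.5, 0.1],
    "flow": [1.5, 1.2, 0.0],
    "heatmap": [0.3, 2.2, 0.1],
}
weights = {"rgb": 1.0, "flow": 1.0, "heatmap": 1.0}  # assumed equal weights
fused = late_fusion(logits, weights)
pred = max(range(len(fused)), key=fused.__getitem__)
```

Every stream, including optical flow, must be computed for each test clip before fusion can take place, which is exactly the online cost that a distilled single-stream student avoids.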

The advantage of knowledge distillation lies in transferring the “knowledge” learned by a large, complex, high-performance teacher model to a lightweight, efficient student model. During offline training, the recognition accuracy of the student model is improved with the help of the large teacher model. During online testing, only the lightweight student model, which inherits the core capabilities of the teacher, is used. This balances accuracy, speed, and resource consumption and provides a feasible path for terminal deployment. In our work, we adopted knowledge distillation and took the model proposed by Wang et al. as the benchmark. We used the optical flow and joint (keypoint heat map) pipelines as the teacher networks and the RGB stream as the student network. During training, the teacher networks guided the student network to learn optical flow and joint knowledge. In the online testing phase, only the student network was used, which greatly reduced the number of model parameters and improved the efficiency of thermal adaptation behavior inference.

The method proposed in this study is a preliminary exploration of the terminal deployment of thermal adaptation behavior-recognition models. We aimed to establish a thermal adaptation behavior-recognition framework based on knowledge distillation that keeps the model lightweight while still exploiting multi-modal features to improve recognition accuracy, enabling the model to meet the dynamic thermal needs of multiple occupants in open-plan offices. This study examined the application of this framework in specific scenarios, focusing on recognition accuracy and model lightweighting. Although the theoretical framework is still at an early stage and faces practical challenges, this study seeks to address them. The main contributions of this study can be summarized as follows:

- (1)

- The image sequence carrying optical flow information and the joint heat map sequence carrying skeleton information were used as inputs to the teacher models, and the RGB image sequence was used as the input to the student model. An output distillation loss was established between the outputs of the teacher and student models, yielding a thermal adaptive behavior-recognition model based on cross-modal knowledge distillation. By transferring optical flow and joint information from the teacher models to the student model, the student model can not only extract dynamic behavior features from the RGB image sequence but also learn the optical flow and skeleton information transferred from the teachers, thus improving its recognition performance.

- (2)

- The traditional distillation loss usually focuses on the predicted probability of the target class; in other words, it ensures that the student's prediction for the target class is consistent with the teacher's. However, beyond the target class, the relationships among non-target classes are a crucial form of implicit knowledge. These inter-class relationships capture similarities and differences between classes, and the teacher model may have learned them. In this study, the distillation loss was decoupled, and a dynamic thermal adaptation behavior-recognition model based on knowledge distillation in both the feature space and the output space was constructed. In this model, the student can fully learn from the high-level features of the teacher and dynamically adjust its predicted probabilities for the target and non-target classes.

- (3)

- The proposed thermal adaptive behavior-recognition model was tested on real surveillance video data and compared with existing methods in three aspects: recognition accuracy, running time, and model complexity. The experimental results show that, although recognition accuracy decreased in some cases, the proposed method offers clear advantages in thermal adaptive behavior inference time, and the number of parameters is significantly reduced. This provides an effective option for the embedded development of the algorithm and its deployment on indoor terminals in buildings.
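Contribution (2) decouples the distillation loss into target-class and non-target-class parts. One published formulation of this idea is Decoupled Knowledge Distillation (DKD; Zhao et al., 2022), which splits the classical KL term into a target-class part (TCKD, over the binary split "target vs. all others") and a non-target-class part (NCKD, over the renormalized non-target classes) with independent weights. The sketch below follows that DKD-style decomposition for a single sample; the function names and the default values of alpha, beta, and T are assumptions, and this is not necessarily the exact loss used in this study.

```python
import math


def softmax_t(logits, T=1.0):
    """Temperature-scaled Softmax (numerically stable)."""
    m = max(logits)
    exps = [math.exp((z - m) / T) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions; terms with p_i = 0 contribute 0."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q) if pi > 0)


def decoupled_kd(student_logits, teacher_logits, target, alpha=1.0, beta=8.0, T=4.0):
    """DKD-style loss: (alpha * TCKD + beta * NCKD) * T**2 (assumed defaults)."""
    ps = softmax_t(student_logits, T)
    pt = softmax_t(teacher_logits, T)
    # Target-class part: binary distribution [p(target), p(all non-target classes)].
    tckd = kl([pt[target], 1.0 - pt[target]], [ps[target], 1.0 - ps[target]])
    # Non-target part: distribution over non-target classes, renormalized to sum to 1.
    ns = [p for i, p in enumerate(ps) if i != target]
    nt = [p for i, p in enumerate(pt) if i != target]
    ns_sum, nt_sum = sum(ns), sum(nt)
    nckd = kl([p / nt_sum for p in nt], [p / ns_sum for p in ns])
    return (alpha * tckd + beta * nckd) * T * T
```

Because alpha and beta are independent, the non-target relationships (NCKD) can be weighted more heavily than in classical KD, where their contribution is implicitly tied to the teacher's confidence on the target class.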

This paper is organized as follows: Section 1 introduces the problem addressed in this work and the research content; Section 2 discusses the principles and current state of knowledge distillation; Section 3 combines cross-modal knowledge distillation with a decoupled distillation loss to propose a thermal adaptive behavior-recognition algorithm based on cross-modal knowledge distillation; Section 4 tests the model on a real surveillance video dataset, verifies the effectiveness of the proposed method, and analyzes the experimental results in depth; and Section 5 summarizes the study.

2. Related Work

2.1. Thermal Adaptation Behavior-Recognition Algorithm

Human action recognition plays a crucial role in understanding thermal comfort from video data and has become an active research field in recent years. Depending on the methodology, thermal adaptation behavior recognition can be divided into two approaches: traditional algorithms and deep learning models. Traditional algorithms [14] use ordinary computer or phone cameras to observe human poses associated with thermal discomfort, define thermal discomfort-related poses, and develop a sub-algorithm with manually set thresholds for each pose. This approach depends heavily on accurate coordinates of key joints, which are difficult to obtain with computer cameras because they are affected by the imaging angle, the distance to the subject, and the occupant's behavioral habits.
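To illustrate why such rule-based methods depend on accurate joint coordinates, the sketch below implements one hypothetical threshold rule: a hand-to-face pose (such as might accompany wiping sweat) is flagged when a wrist lies close to the nose relative to shoulder width. The keypoint names, the rule itself, and the 0.5 threshold are assumptions for illustration, not the rules used in [14].

```python
import math


def dist(a, b):
    """Euclidean distance between two 2-D image points (x, y)."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def hand_near_face(kp, threshold=0.5):
    """Hypothetical rule: flag a hand-to-face pose when either wrist lies closer
    to the nose than `threshold` times the shoulder width (scale normalization)."""
    shoulder_width = dist(kp["left_shoulder"], kp["right_shoulder"])
    if shoulder_width == 0:
        return False  # degenerate detection: the rule cannot be normalized
    d = min(dist(kp["left_wrist"], kp["nose"]), dist(kp["right_wrist"], kp["nose"]))
    return d / shoulder_width < threshold


# Hypothetical pixel coordinates from a pose estimator.
kp = {
    "left_shoulder": (0, 0), "right_shoulder": (100, 0), "nose": (50, -60),
    "left_wrist": (0, 100), "right_wrist": (60, -40),
}
print(hand_near_face(kp))
```

A small error in the detected wrist or nose position, or a camera angle that shortens the apparent shoulder width, can flip the decision, which is the weakness of hand-crafted thresholds noted above.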

Current deep learning-based thermal adaptation behavior-recognition algorithms can be divided into skeleton-based and video-based methods, depending on whether human joints are detected. Most existing skeleton-based work has focused on fusing the spatial and temporal features of skeletal key points, as in ST-GCN [42]. Most existing video-based methods take RGB video frames as input and use deep learning models such as I3D, SlowFast, CNNs, or Bi-LSTMs for end-to-end recognition. In recent years, automatic feature extraction with neural networks has significantly improved action recognition accuracy compared with traditional algorithms. The feasibility of deep learning in this field has been demonstrated, attracting attention from both academia and industry. However, existing single-modal thermal adaptation behavior-recognition methods are constrained by data quality and require specialized feature engineering for each modality. As a result, these models have limited generalization capability and are difficult to apply in complex real-world situations.

As a result, there is a growing trend toward integrating complementary information from multi-modal data to build more robust and efficient models for recognizing thermal adaptive behaviors. Wang et al. proposed a thermal adaptive action-recognition method that integrates three data modalities: RGB, optical flow, and joint heat maps. A dedicated network was set up for each data stream, and the resulting model achieved high recognition accuracy. This model preserves the information of each modality through parallel processing and result fusion. It performs exceptionally well when heterogeneous features must be used explicitly, but its computational cost is high and it is not conducive to deployment on terminals. On this basis, we propose a knowledge distillation model that uses optical flow and joint heat map information as the source domains and RGB data as the target domain. Through the distillation loss, key information, such as the motion patterns in optical flow and the skeletal structure features, is distilled into the RGB representation. Consequently, a single network can learn the unified features contained in the distillation-enhanced RGB modality, achieving feature homogeneity and reducing computational overhead.

2.2. Basic Theory of Cross-Modal Learning

The core goal of cross-modal learning is to enhance the understanding and processing capabilities of models by integrating information from different modalities, such as vision, text, and audio. Data from a single modality often cannot fully capture the multi-dimensional information of a complex environment, whereas cross-modal learning can significantly improve model performance through information complementation and sharing between modalities. In recent years, this technique has made significant progress in multiple tasks, including video recognition, image-text retrieval, and model compression.

Knowledge distillation is increasingly applied to cross-modal learning. Alkhulaifi et al. pointed out that knowledge distillation achieves model compression by transferring knowledge from a large model (the teacher model) to a small model (the student model), which makes it especially suitable for resource-constrained environments [43]. This approach is particularly important in cross-modal learning because it enables knowledge to be transferred from one modality to another. For example, in a vision-language task, a teacher model can extract information from the visual modality and distill it into a student model operating on the text modality, thereby improving the student's generalization ability and its robustness to missing modal information.

To address the problem of incomplete modalities in multi-modal learning, Wang et al. proposed a multi-modal learning framework based on knowledge distillation [44]. Traditional multi-modal learning usually requires every sample to have complete modal data, but modal incompleteness is very common in real-world applications, and model performance degrades greatly when some modalities are missing. To solve this problem, Wang et al. trained a separate teacher model for each modality and transferred complementary information from the complete modalities to the student model. This enables the student model to obtain enough information to make accurate predictions even when modalities are incomplete. The experimental results show that the framework performs well on datasets for tasks related to neurodegenerative diseases, effectively improving the robustness of cross-modal learning.

In video recognition, cross-modal learning further enhances a model's understanding of video content by combining the visual and text modalities. The BIKE framework proposed by Wu et al. achieved remarkable progress in video-recognition tasks through bidirectional cross-modal knowledge exploration (from video to text and from text to video) [45]. The framework uses a pre-trained vision-language model such as CLIP to generate auxiliary textual attributes and captures key temporal information in videos through a temporal concept spotting mechanism. Through this bidirectional knowledge exploration, BIKE greatly improves video-recognition accuracy, especially in zero-shot and few-shot learning, which clearly demonstrates the advantages of cross-modal learning. The role of contrastive learning in cross-modal learning is also significant. Zolfaghari et al. proposed CrossCLR to mitigate the inefficiency of existing video-text embedding methods by redesigning the cross-modal contrastive loss [46]. By considering both inter-modal and intra-modal similarities, this method keeps semantically related features from different modalities and from the same modality close to each other in the embedding space, significantly improving the performance of video-text retrieval and video captioning. Cross-modal contrastive learning effectively integrates information from different modalities, further enhances the overall performance of the model, and shows strong potential for complex tasks.

2.3. Knowledge Distillation Mechanism

With the rapid development of deep learning, deep neural networks have shown excellent performance in object detection [47], speech processing [48], image classification [49,50], and other fields. However, these high-performance models are often accompanied by a rapid expansion in model scale, which makes training and deployment more difficult. For resource-limited edge devices and real-time systems in particular, deploying these models effectively has become a problem. Knowledge distillation produces a lightweight student model that significantly lowers the deployment threshold while maintaining performance. As an important technique for optimizing deep learning models, knowledge distillation was originally used for model compression: it reduces the computational burden while maintaining accuracy by transferring knowledge from large teacher models to small student models. In recent years, knowledge distillation has been widely used in complex tasks such as multi-modal learning and cross-view recognition, especially when computing resources are limited. With the development of multi-teacher and cross-modal distillation, knowledge can be extracted from multiple teacher models to improve the overall performance of student models. This technology shows great potential in video action recognition, cross-modal information fusion, and model integration, not only improving model accuracy and efficiency but also effectively addressing challenges such as missing modalities and viewpoint changes.

Hinton et al. proposed knowledge distillation (KD) in 2015 [51]. This technique distills the knowledge of a large teacher model into a small student model, yielding a lightweight model that maintains accuracy. Knowledge distillation is widely used in model compression, and it also plays an important role in complex tasks such as multi-modal learning and cross-view recognition.

Knowledge distillation methods can be divided into three types according to the type of knowledge extracted: response-based, feature-based, and relation-based [43]. Response-based distillation uses the logits output by the teacher model, and the student model is trained to imitate this output; this approach has been widely used in model compression [52,53,54,55]. Feature-based distillation focuses on the intermediate-layer feature representations of the teacher and student models. By learning the teacher's feature responses, the student model can better capture the intrinsic structure of the data. Relation-based distillation focuses on the relationships between different layers or training samples, using this information as a supervisory signal to help the student model capture complex relationships.
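Feature-based distillation is often implemented as a regression loss between intermediate features, with a small projection that maps student features to the teacher's dimensionality (as in FitNets-style hint learning). The pure-Python sketch below assumes a mean-squared-error objective and a fixed, hypothetical projection matrix; in practice the projection is learned together with the student, and real features are high-dimensional tensors.

```python
def project(features, weight):
    """Linear map: weight is a list of rows (teacher_dim x student_dim)."""
    return [sum(w * f for w, f in zip(row, features)) for row in weight]


def feature_kd_loss(student_feat, teacher_feat, weight):
    """Mean squared error between projected student features and teacher features."""
    projected = project(student_feat, weight)
    if len(projected) != len(teacher_feat):
        raise ValueError("projected student features must match the teacher feature size")
    return sum((p - t) ** 2 for p, t in zip(projected, teacher_feat)) / len(teacher_feat)


student = [1.0, 2.0]                        # hypothetical 2-D student feature
teacher = [1.0, 2.0, 4.0]                   # hypothetical 3-D teacher feature
W = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]    # hypothetical projection (learned in practice)
loss = feature_kd_loss(student, teacher, W)
```

Minimizing this loss pushes the student's intermediate representation toward the teacher's, which is how a student can pick up structure it would not extract from its own input modality alone.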

In the specific implementation, the core of knowledge distillation is to introduce a distillation temperature $T$ into the Softmax function and compute softened class probabilities using Formula (1):

$$q_i = \frac{\exp(z_i/T)}{\sum_{j=1}^{C}\exp(z_j/T)} \tag{1}$$

where $z_i$ is the logit of the $i$-th class, $C$ is the total number of classes, $j$ indexes the classes in the sum, $\exp(\cdot)$ is the exponential function, and $T$ is the distillation temperature. When $T = 1$, Formula (1) reduces to the standard Softmax; a higher value of $T$ produces a flatter (softer) class probability distribution.
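A minimal Python sketch of Formula (1) follows. The function names and example logits are illustrative assumptions and do not come from the original study. For several temperatures, the sketch prints the largest class probability and the entropy of the softened distribution, which shows how a higher $T$ flattens the distribution.

```python
import math


def gsoftmax(logits, T=1.0):
    """Temperature-scaled Softmax of Formula (1): q_i = exp(z_i/T) / sum_j exp(z_j/T)."""
    scaled = [z / T for z in logits]
    m = max(scaled)  # subtracting the maximum avoids overflow and leaves q unchanged
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(p):
    """Shannon entropy in nats; a larger value means a flatter distribution."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)


# Hypothetical logits for a 4-class example (not taken from the paper).
logits = [5.0, 2.0, 1.0, -1.0]
for T in (1.0, 4.0, 10.0):
    q = gsoftmax(logits, T)
    print(f"T={T:>4}: max prob = {max(q):.3f}, entropy = {entropy(q):.3f}")
```

The ranking of the classes does not change with $T$. Only the sharpness of the distribution changes, and that sharpness controls how much information about the non-maximal classes reaches the student.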

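The loss function $L$ consists of two parts, the classification loss $L_{\mathrm{cls}}$ and the distillation loss $L_{\mathrm{KD}}$, as shown in Formula (2):

$$L = \lambda_{\mathrm{cls}}\, L_{\mathrm{cls}} + \lambda_{\mathrm{KD}}\, L_{\mathrm{KD}} \tag{2}$$

where $\lambda_{\mathrm{cls}}$ and $\lambda_{\mathrm{KD}}$ are weighting coefficients that determine the relative contribution of each loss term to the total loss.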

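The classification loss is generally the standard multi-class cross-entropy loss, and the formula is as follows:

$$L_{\mathrm{cls}} = \mathrm{CE}\big(y, p^{\mathcal{S}}\big) = -\sum_{i=1}^{C} y_i \log p_i^{\mathcal{S}} \tag{3}$$

where $y$ is the one-hot encoding of the ground-truth label, $p^{\mathcal{S}}$ is the probability distribution output by the student model, and $\mathrm{CE}(\cdot,\cdot)$ denotes the cross-entropy loss function.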

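The distillation loss measures the difference between the soft labels output by the teacher model and those output by the student model. Either the cross-entropy loss or the Kullback–Leibler (KL) divergence is usually chosen. With cross-entropy, the distillation loss is given by Formula (4):

$$L_{\mathrm{KD}} = \mathrm{CE}\big(p^{\mathcal{T}}, p^{\mathcal{S}}\big) = -\sum_{i=1}^{C} p_i^{\mathcal{T}} \log p_i^{\mathcal{S}} \tag{4}$$

where $p^{\mathcal{T}}$ is the probability distribution output by the teacher model. The soft labels of both models are obtained with the temperature-scaled Softmax of Formula (1).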

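When the KL divergence is selected as the distillation loss, the formula is as follows:

$$L_{\mathrm{KD}} = \mathrm{KL}\big(p^{\mathcal{T}} \,\|\, p^{\mathcal{S}}\big) = \sum_{i=1}^{C} p_i^{\mathcal{T}} \log \frac{p_i^{\mathcal{T}}}{p_i^{\mathcal{S}}} \tag{5}$$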
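The following plain-Python sketch combines Formulas (1) to (5) for a single sample. Several choices in it are illustrative assumptions rather than settings reported in this study: the function names, the weights `lam_cls` and `lam_kd`, and the use of $T = 1$ for the hard-label term.

```python
import math


def softmax_t(logits, T=1.0):
    """Formula (1): temperature-scaled Softmax."""
    m = max(logits)
    exps = [math.exp((z - m) / T) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def cross_entropy(target, pred, eps=1e-12):
    """CE(target, pred) = -sum_i target_i * log(pred_i), as in Formulas (3) and (4)."""
    return -sum(t * math.log(p + eps) for t, p in zip(target, pred))


def kl_divergence(p_teacher, p_student, eps=1e-12):
    """KL(p_teacher || p_student) = sum_i p_teacher_i * log(p_teacher_i / p_student_i), Formula (5)."""
    return sum(pt * math.log((pt + eps) / (ps + eps))
               for pt, ps in zip(p_teacher, p_student) if pt > 0)


def total_loss(student_logits, teacher_logits, label, T, lam_cls, lam_kd, use_kl=True):
    """Formula (2): lam_cls * L_cls + lam_kd * L_KD for a single sample."""
    num_classes = len(student_logits)
    y = [1.0 if c == label else 0.0 for c in range(num_classes)]  # one-hot hard label
    l_cls = cross_entropy(y, softmax_t(student_logits, 1.0))       # hard-label term at T = 1 (assumption)
    p_t = softmax_t(teacher_logits, T)                               # teacher soft labels
    p_s = softmax_t(student_logits, T)                               # student soft predictions
    l_kd = kl_divergence(p_t, p_s) if use_kl else cross_entropy(p_t, p_s)
    return lam_cls * l_cls + lam_kd * l_kd


loss = total_loss([1.0, 2.0, 0.5], [3.0, 1.0, 0.2], label=0, T=4.0, lam_cls=1.0, lam_kd=1.0)
```

The two choices of distillation loss produce the same gradients with respect to the student logits. This is because CE($p^{\mathcal{T}}$, $p^{\mathcal{S}}$) equals KL($p^{\mathcal{T}} \| p^{\mathcal{S}}$) plus the entropy of the teacher distribution, and that entropy does not depend on the student. Hinton et al. [51] additionally multiply the soft term by $T^2$. The sketch omits that factor because Formula (2) does not include it.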

In recent years, multi-teacher knowledge distillation has become an important advance in this field. Unlike the traditional single-teacher setting, a multi-teacher setting can extract knowledge from multiple perspectives and transfer it comprehensively to the student model. The multi-teacher distillation strategy proposed by Kang and Gwak combines feature-, response-, and relation-based distillation to transfer the strengths of multiple teacher models to a lightweight student model, significantly reducing computing resource consumption while improving classification accuracy [56]. Such methods are particularly advantageous for multi-modal fusion tasks, especially video action recognition and multi-view information integration. Knowledge distillation also shows distinct advantages in cross-modal learning. By distilling the temporal information of the optical flow modality into the RGB modality, Dai et al. enabled a model that relies only on the RGB stream to reach the performance level of a two-stream model [57]. This approach not only reduces model complexity but also maintains high recognition accuracy when the optical flow modality is unavailable.

Cross-view recognition is another application field of knowledge distillation. The nonlinear knowledge transfer model proposed by Rahmani and Mian uses cross-view knowledge transfer to achieve more accurate action recognition across different viewpoints [58]. By capturing behavioral features from multiple viewpoints, it improves the model's adaptability to viewpoint changes. This approach is closely related to multi-modal fusion and demonstrates the flexibility of knowledge distillation in handling multi-modal and multi-view challenges in complex environments. In addition, Allen-Zhu and Li analyzed the relationship between knowledge distillation and model ensembling from a theoretical perspective. They showed that the advantages of multiple independently trained teacher models can be aggregated into a single student model through knowledge distillation to improve performance [59]. They also pointed out that self-distillation, as an implicit form of ensembling, can improve the performance of a student model without an external teacher model, which has important implications for continual learning and model optimization.

Knowledge distillation shows great potential for model compression, cross-modal learning, and cross-view recognition. With well-designed knowledge representations and distillation strategies, student models can substantially reduce computational resource requirements without significantly sacrificing accuracy, which makes them suitable for resource-constrained edge devices and real-time systems. Future research will continue to explore how to optimize distillation methods and improve their application to multi-modal and multi-view tasks.

The model proposed in this study provides an efficient solution for multi-modal thermal behavior-recognition tasks. Its core contributions are threefold. First, it constructs a unified model that generalizes across modalities, which reduces engineering complexity because no dedicated modules need to be designed for specific modalities. Second, it introduces a new fusion approach in which multi-modal information is fused at the feature extraction stage to make better use of complementary information. Third, it uses knowledge distillation to train a lightweight student model, which significantly lowers the deployment threshold while maintaining high performance.

3. System Description

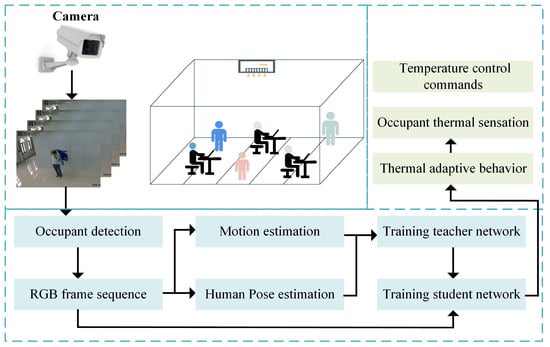

In this section, we introduce a thermal adaptive behavior-recognition model based on the multi-modal feature fusion of human appearance, motion, and pose information. As shown in Figure 1, we used off-the-shelf surveillance cameras as sensors to monitor a human subject's response to the current ambient temperature. This work combines the advantages of 3D ResNet and cross-modal knowledge distillation. The cross-modal knowledge distillation framework consists of teacher networks and a student network, and the teacher networks distill their learned knowledge into the student network. Two teacher networks and one student network were used. Motion features and pose features were the inputs of teacher network 1 and teacher network 2, respectively, and RGB video frames were the input of the student network. Motion and pose information is transferred from the teacher models to the student model. As a result, the student model not only extracts dynamic appearance features from the RGB frame sequence but also learns the motion and pose features transferred from the teachers, which improves its recognition performance. A detailed description of the model architecture and the training process is provided below.

Figure 1.

Cross-modal knowledge-distillation-based thermal adaptive behavior-recognition model.

3.1. Data

The thermal adaptation action (TAA) dataset [60] was used to train this model. It comprises 17,296 video clips covering 16 distinct thermal adaptation behaviors. The dataset was collected with nine video capture devices: three Microsoft Kinect sensors (Microsoft, Redmond, WA, USA), three EZVIZ C3W cameras (EZVIZ, Hangzhou, China), and three Q8S sensors (Festo, Shanghai, China). These devices provided different viewing angles, as detailed in Table 1. There, 0° indicates that the device was positioned directly in front of the subject, −45° indicates that it was placed to the right-front of the subject, and +45° indicates that it was located to the left-front of the subject.

Table 1.

The specific settings of the collection equipment.

Specifically, the adaptive behaviors of occupants feeling hot included “fan self with an object”, “fan self with hands”, “fan self with one’s shirt”, “roll up sleeves”, “wipe perspiration”, “head scratching”, “take off a jacket”, and “take off a hat/cap”, which were labeled “A001” to “A008”, respectively. The adaptive behaviors of occupants feeling cold included “sneeze/cough”, “stamp one’s feet”, “rub one’s hands”, “blow into one’s hands”, “cross one’s arms”, “narrowed shoulders”, “put on a jacket”, and “put on a hat/cap”, which were labeled “A009” to “A016”, respectively. In addition, the frame rate was adjusted to 15 frames per second (FPS). To train the model, the original thermal adaptation behavior dataset was further processed. An optical flow dataset and a joint heat map dataset were constructed from it, so the multi-modal dataset comprised three parts: RGB frames, optical flow frames, and joint heat map frames. Optical flow data are largely invariant to appearance, which is important for recognizing thermal adaptation actions. Joint heat map data provide a structured and robust representation of body posture that couples well with deep learning architectures, which makes them an important complementary modality for action recognition. Finally, the dataset was divided into training, validation, and testing sets in proportions of approximately 70%, 20%, and 10%, containing 12,113, 3463, and 1720 video clips, respectively.
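The label scheme and split sizes described above fit in a small lookup table. The class names and clip counts below come from the text. The helper `thermal_sensation` and the variable names are hypothetical and serve only as illustration. The sketch also recomputes the split proportions from the clip counts.

```python
HEAT_ACTIONS = [
    "fan self with an object", "fan self with hands", "fan self with one's shirt",
    "roll up sleeves", "wipe perspiration", "head scratching",
    "take off a jacket", "take off a hat/cap",
]
COLD_ACTIONS = [
    "sneeze/cough", "stamp one's feet", "rub one's hands", "blow into one's hands",
    "cross one's arms", "narrowed shoulders", "put on a jacket", "put on a hat/cap",
]

# A001-A016 in the order given in the text.
LABELS = {f"A{i:03d}": name for i, name in enumerate(HEAT_ACTIONS + COLD_ACTIONS, start=1)}


def thermal_sensation(code):
    """Hypothetical helper: 'hot' for A001-A008, 'cold' for A009-A016."""
    idx = int(code[1:])
    if not 1 <= idx <= 16:
        raise ValueError(f"unknown action code: {code}")
    return "hot" if idx <= 8 else "cold"


# Clip counts reported for each subset; the proportions follow from them.
SPLIT_COUNTS = {"train": 12113, "validation": 3463, "test": 1720}
TOTAL = sum(SPLIT_COUNTS.values())
PROPORTIONS = {name: n / TOTAL for name, n in SPLIT_COUNTS.items()}
```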

3.1.1. Optical Flow Estimation

Optical flow estimation (OFE) is a key technology in computer vision. It analyzes the motion of pixels or feature points across the frames of a video sequence. The basic idea is to infer the motion of a pixel by comparing pixel intensity changes between adjacent frames. Among the many optical flow estimation algorithms, TV-L1 [61] is widely used because it offers a good balance between computational efficiency and accuracy. TV-L1 is a variational method: it computes the optical flow by minimizing an energy function that contains a data term and a smoothness term. The data term keeps the flow consistent with the input image sequence. The smoothness term keeps the flow field spatially smooth and reduces the interference of noise. The algorithm performs particularly well in scenes with large displacements and shows excellent performance in practical applications. It combines total variation (TV) regularization with an L1-norm data term, which makes it robust to noise and illumination changes. It can therefore still provide good optical flow estimates when surveillance video is noisy or indoor lighting is poor. In addition, the algorithm is fast. It can achieve a processing rate of 30 FPS for 320 × 240 video input, so it is considered suitable for real-time processing.
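For reference, a standard form of the TV-L1 energy is given below. The exact variant used in [61] may differ in details such as weighting or the coarse-to-fine scheme. Here $I_0$ and $I_1$ are two consecutive grayscale frames, $\mathbf{u} = (u_1, u_2)$ is the flow field over the image domain $\Omega$, and $\lambda$ balances the L1 data term against the total variation of each flow component:

$$E(\mathbf{u}) = \int_{\Omega} \Big( \lambda \,\big|I_1(\mathbf{x} + \mathbf{u}(\mathbf{x})) - I_0(\mathbf{x})\big| + |\nabla u_1(\mathbf{x})| + |\nabla u_2(\mathbf{x})| \Big)\, d\mathbf{x}$$

The first term penalizes brightness differences after warping with an L1 norm, which tolerates outliers better than a quadratic penalty. The last two terms penalize the total variation of the flow, which keeps the flow piecewise smooth while preserving motion boundaries.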

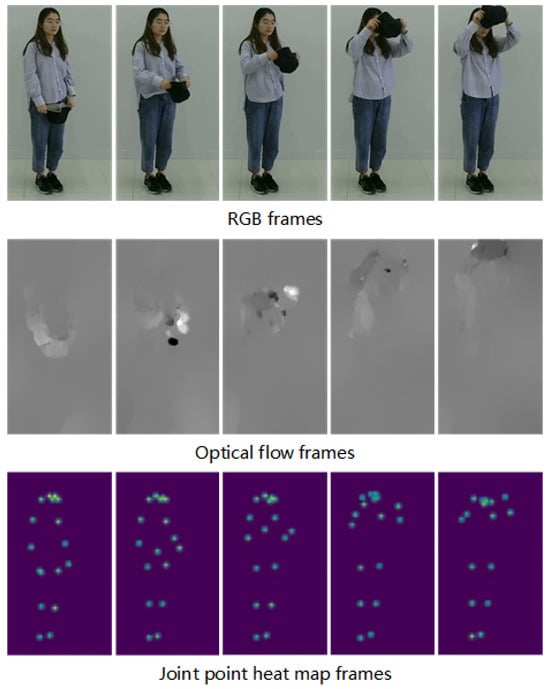

In view of the accuracy and computational efficiency advantages of the TV-L1 optical flow algorithm, especially when dealing with complex dynamic scenes, we chose this algorithm to extract the dense optical flow from video frames, representing a motion feature description of human thermal adaptive behavior. This step was crucial for subsequent thermal adaptive behavior recognition. The visual results of the optical flow features extracted using the TV-L1 algorithm on the heat adaptation behavior dataset video are shown in Figure 2.

Figure 2.

Sample frames of the dataset. The three rows show the RGB frames, TV-L1 optical flow frames, and joint heat map frames from HRNet pose estimation, respectively.

3.1.2. Human Pose Estimation

For indoor human pose estimation in building monitoring, the first and most critical preprocessing step is to obtain the joint points. Given its good performance on the COCO key point detection task, a high-resolution network (HRNet) [62] pre-trained on the COCO-KeyPoints dataset [63] was used as the indoor human pose extractor. HRNet is used in a top-down manner: persons are detected first, and the key points of each person are then estimated. Its advantages have been widely demonstrated.

To reduce the influence of HRNet's key point errors on the model results, we used heat maps derived from the key point position confidences as the model input. The heat maps retain uncertainty information about the spatial positions of the key points. This allows the network to learn to handle that uncertainty and to rely more on high-confidence key points, which reduces, to some extent, the impact of key point localization errors.
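One common way to turn key point estimates into heat maps is to place a 2D Gaussian at each predicted joint and scale its peak by the detection confidence. The sketch below assumes this construction and a Gaussian width of σ = 2 pixels. The function name and parameters are hypothetical, and the exact conversion used in this study may differ.

```python
import math


def keypoint_heatmaps(keypoints, height, width, sigma=2.0):
    """Render one confidence-weighted Gaussian heat map per key point.

    keypoints: list of (x, y, confidence) tuples in pixel coordinates,
               e.g. the 17 COCO key points estimated by HRNet for one frame.
    Returns a list of height x width maps; map k peaks at (x_k, y_k) with value
    confidence_k, so unreliable joints contribute weaker evidence.
    """
    two_sigma_sq = 2.0 * sigma ** 2
    maps = []
    for x, y, conf in keypoints:
        heat = [[conf * math.exp(-((col - x) ** 2 + (row - y) ** 2) / two_sigma_sq)
                 for col in range(width)]
                for row in range(height)]
        maps.append(heat)
    return maps


# Two hypothetical key points on a 5 x 6 frame: one confident, one uncertain.
maps = keypoint_heatmaps([(3, 2, 0.9), (0, 0, 0.4)], height=5, width=6)
```

Scaling by confidence, instead of thresholding low-confidence joints away, keeps the uncertainty visible to the network. This matches the motivation given above.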

HRNet connects its high-to-low-resolution subnetworks in parallel rather than in series, as is more commonly done. It can therefore maintain high-resolution representations throughout the network instead of recovering them from low-resolution representations through upsampling. As a result, HRNet's heat maps have higher spatial accuracy.

3.2. Model Training

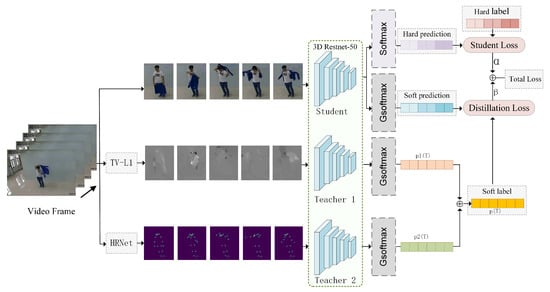

Both teacher networks adopted 3D ResNet-50. The optical flow (motion) stream and the skeletal heat map stream were the inputs of teacher network 1 and teacher network 2, respectively. The input to the student network was RGB video frames. The student network also used 3D ResNet-50 as its backbone, as shown in Figure 3.
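The three input streams can be summarized as a small configuration. In the sketch below, the following values are illustrative assumptions, not reported settings:

- the channel counts (2 for horizontal and vertical optical flow, 17 for one heat map per COCO key point, 3 for RGB)
- the clip length of 16 frames
- the 112 × 112 resolution

The sketch also encodes the deployment idea behind the framework. The teachers are needed only during training, so only the RGB student stream runs at inference time.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StreamConfig:
    name: str
    role: str          # "teacher" (training only) or "student" (deployed)
    modality: str
    in_channels: int   # per-frame channels fed to the 3D ResNet-50 stem (assumed)


# Assumed channel counts: 2 for (dx, dy) optical flow, 17 for one heat map per
# COCO key point, 3 for RGB. Clip length and resolution below are also assumptions.
STREAMS = [
    StreamConfig("teacher_1", "teacher", "TV-L1 optical flow", 2),
    StreamConfig("teacher_2", "teacher", "HRNet joint heat maps", 17),
    StreamConfig("student", "student", "RGB frames", 3),
]


def clip_shape(cfg, clip_len=16, height=112, width=112):
    """(C, T, H, W) shape of one input clip for a 3D convolutional backbone."""
    return (cfg.in_channels, clip_len, height, width)


def deployed_streams(streams):
    """Only the student stream is needed at inference time."""
    return [s for s in streams if s.role == "student"]
```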

Figure 3.

Cross-modal knowledge distillation frame diagram.

3.2.1. Cross-Modal Knowledge Distillation

The cross-modal knowledge distillation framework is composed of teacher networks and a student network, and the teacher networks distill their learned knowledge into the student network.

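Within the student network, the input video sequence is denoted as $x^{\mathcal{S}}$. The logit vector produced by the student network is defined as $z^{\mathcal{S}} = \big[z_1^{\mathcal{S}}, z_2^{\mathcal{S}}, \ldots, z_C^{\mathcal{S}}\big] \in \mathbb{R}^{1\times C}$, where $C$ denotes the total number of categories. Introducing the distillation temperature $T$ into the traditional Softmax layer yields the generalized Softmax (GSoftmax) layer, as shown in Formula (6). In the GSoftmax layer, the logit vector is converted into a probability distribution $p^{\mathcal{S}}$, as shown in Formula (7). This probability distribution has dimensions $1 \times C$.

$$\mathrm{GSoftmax}(z, T)_i = \frac{\exp(z_i/T)}{\sum_{j=1}^{C}\exp(z_j/T)} \tag{6}$$

$$p^{\mathcal{S}} = \mathrm{GSoftmax}\big(z^{\mathcal{S}}, T\big) = \big[p_1^{\mathcal{S}}, p_2^{\mathcal{S}}, \ldots, p_C^{\mathcal{S}}\big] \tag{7}$$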

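Within teacher network 1, the input video sequence of the motion modality is denoted as $x^{\mathcal{T}_1}$. The logit vector produced by teacher network 1 is defined as $z^{\mathcal{T}_1} = \big[z_1^{\mathcal{T}_1}, z_2^{\mathcal{T}_1}, \ldots, z_C^{\mathcal{T}_1}\big] \in \mathbb{R}^{1\times C}$, where $C$ denotes the total number of categories. According to Formulas (8) and (9), the GSoftmax layer converts this logit vector into a probability distribution $p^{\mathcal{T}_1}$ with dimensions $1 \times C$:

$$p_i^{\mathcal{T}_1} = \frac{\exp\big(z_i^{\mathcal{T}_1}/T\big)}{\sum_{j=1}^{C}\exp\big(z_j^{\mathcal{T}_1}/T\big)} \tag{8}$$

$$p^{\mathcal{T}_1} = \big[p_1^{\mathcal{T}_1}, p_2^{\mathcal{T}_1}, \ldots, p_C^{\mathcal{T}_1}\big] \tag{9}$$

Similarly, the same operations are performed on the skeleton-modality data input to teacher network 2, yielding $p^{\mathcal{T}_2}$.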

During knowledge distillation, the training target of the student network is the probability distribution obtained as a weighted average of the class probabilities generated by teacher network 1 and teacher network 2. This integrates the “soft labels” of the two teachers, which convey their combined knowledge to the student network.
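The weighted averaging of the two teachers' soft labels can be sketched as follows. The equal weights of 0.5 are a hypothetical default, and the example probabilities are invented for illustration.

```python
def multi_teacher_soft_label(p_teacher1, p_teacher2, w1=0.5, w2=0.5):
    """Weighted average of two teachers' class-probability vectors ("soft labels").

    w1 and w2 are hypothetical weights; they must be non-negative and sum to 1
    so that the result is still a probability distribution.
    """
    if w1 < 0 or w2 < 0 or abs(w1 + w2 - 1.0) > 1e-9:
        raise ValueError("weights must be non-negative and sum to 1")
    if len(p_teacher1) != len(p_teacher2):
        raise ValueError("both teachers must score the same set of classes")
    return [w1 * a + w2 * b for a, b in zip(p_teacher1, p_teacher2)]


# Hypothetical 3-class soft labels from the motion (T1) and pose (T2) teachers.
p_motion = [0.7, 0.2, 0.1]
p_pose = [0.5, 0.1, 0.4]
target = multi_teacher_soft_label(p_motion, p_pose)
```

The convex combination of two probability vectors is itself a valid probability vector, so the averaged soft label can be used directly as $p^{\mathcal{T}}$ in the distillation loss.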

Distillation uses the class probabilities generated by the teacher models as “soft labels” for training the student model. When training the student model, the objective function $L$ consists of two parts. The first part is the classification loss $L_{\mathrm{cls}}$ between the student's predictions and the ground-truth hard labels $y$, which supervises the student network's predictions. The second part is the distillation loss $L_{\mathrm{KD}}$ between the student and teacher models, which measures the difference between the outputs of the teacher and student networks.

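The total loss function can be expressed as the weighted sum of the two parts, as shown in Formula (10):

$$L = \lambda_{\mathrm{cls}}\, L_{\mathrm{cls}} + \lambda_{\mathrm{KD}}\, L_{\mathrm{KD}} \tag{10}$$

where $\lambda_{\mathrm{cls}}$ and $\lambda_{\mathrm{KD}}$ are the weighting coefficients of the two terms.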

The standard multi-class cross-entropy loss is used to calculate the classification loss, and the formula is as follows:

$$L_{\mathrm{cls}} = -\sum_{c=1}^{C} y_c \log p_c^{\mathcal{S}} \tag{11}$$

3.2.2. Decoupling Distillation Loss Function

In the knowledge distillation process, the traditional KD loss function is mainly concerned with the prediction probability of the target class, that is, the output agreement between the student model and the teacher model in the same class when predicting. However, in addition to the target categories, the relationship between the non-target categories is very important. These relationships can be seen as implicit knowledge that is critical to a model’s ability to generalize on new data. The knowledge of inter-class relations involves similarities and differences between different classes. For example, in an image classification task, some categories may be visually very similar, while others may be significantly different. The teacher model may have learned these complex inter-class relationships, while traditional KD loss functions often ignore this useful information by focusing only on the prediction of the target class.

Zhao et al. [64] proposed a decoupled knowledge distillation (DKD) method that divides classification predictions into two levels: (1) a binary prediction for the target class versus all non-target classes, and (2) a multi-class prediction among the non-target classes. On this basis, the classical KD loss is decoupled and reformulated into two parts: binary logit distillation for the target class and multi-class logit distillation for the non-target classes. These are referred to as target class knowledge distillation (TCKD) and non-target class knowledge distillation (NCKD), respectively. The DKD method argues that TCKD and NCKD should be considered separately because their contributions come from different aspects, and that decoupling them yields better logit-based distillation.

Traditional knowledge distillation does not differentiate between target and non-target classes. The student model focuses solely on recognizing the target class while indiscriminately weakening all non-target categories, overlooking the nuanced inter-class relationships. In contrast, NCKD compels the student to distinguish among non-target categories, imitating the teacher model’s reasoning process and learning the subtle discriminative relationships between classes. That is, although none of the non-target classes are equal to the target class, some are very similar to the target class, while others differ significantly. Specifically, NCKD can learn the degree of difference between non-target behaviors, which can further enhance the model’s ability to distinguish.

For example, consider the action “blow into one’s hands”: “rub one’s hands” and “cross one’s arms” are very similar to it. Traditional KD makes the student model focus only on recognizing “blow into one’s hands” while treating the other 15 actions equally. In contrast, DKD lets the student model attend to “blow into one’s hands” and also to how similar each non-target action is to it. For instance, “rub one’s hands” and “cross one’s arms” are similar actions, whereas “stamp one’s feet” has very low similarity. By learning such subtle differences between actions, DKD improves the model's recognition accuracy.

In this section, the decoupled knowledge distillation loss function is used as the loss function of the above model. In this way, the student network can not only learn classification knowledge from labels, but also learn more behavioral feature representations from the teacher network.

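Consider the logits $z = [z_1, z_2, \ldots, z_C]$ produced for training sample $i$ of target class $t$. After the GSoftmax layer at distillation temperature $T$, the classification probabilities can be expressed as $p = [p_1, p_2, \ldots, p_C] \in \mathbb{R}^{1\times C}$. The probability $p_j$ that the sample belongs to class $j$ is calculated with Formula (12):

$$p_j = \frac{\exp(z_j/T)}{\sum_{k=1}^{C}\exp(z_k/T)} \tag{12}$$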

To separate the predictions related to the target class from those unrelated to it, we define $b = [p_t, p_{\setminus t}] \in \mathbb{R}^{1\times 2}$ as the binary probabilities of the target class and of all non-target classes combined. Here $p_t$ is the probability that training sample $i$ belongs to the target class $t$, and $p_{\setminus t}$ is the probability that it belongs to any non-target class.

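The probability of the target class is calculated with Formula (13), and that of the non-target classes with Formula (14):

$$p_t = \frac{\exp(z_t/T)}{\sum_{j=1}^{C}\exp(z_j/T)} \tag{13}$$

$$p_{\setminus t} = \frac{\sum_{k=1,\,k\neq t}^{C}\exp(z_k/T)}{\sum_{j=1}^{C}\exp(z_j/T)} \tag{14}$$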

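At the same time, $\hat{p} = [\hat{p}_1, \ldots, \hat{p}_{t-1}, \hat{p}_{t+1}, \ldots, \hat{p}_C] \in \mathbb{R}^{1\times(C-1)}$ is defined to model the probabilities among the non-target classes independently, without considering the target class. Each element is calculated with Formula (15):

$$\hat{p}_i = \frac{\exp(z_i/T)}{\sum_{k=1,\,k\neq t}^{C}\exp(z_k/T)} \tag{15}$$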
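The decomposition of Formulas (12) to (15) can be checked numerically. The sketch below uses hypothetical function names and logits. It computes $b$ and $\hat{p}$ for one sample and confirms that each non-target probability factorizes as $p_i = p_{\setminus t}\,\hat{p}_i$. The derivation that follows relies on this identity.

```python
import math


def gsoftmax(logits, T=1.0):
    """Formula (12): temperature-scaled Softmax."""
    m = max(logits)
    exps = [math.exp((z - m) / T) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def binary_and_nontarget(logits, target, T=1.0):
    """Return b = [p_t, p_not_t] (Formulas (13)-(14)) and p_hat (Formula (15)).

    p_hat is a Softmax over the C - 1 non-target logits only, so it ignores the
    target class entirely.
    """
    p = gsoftmax(logits, T)
    p_t = p[target]
    p_not_t = sum(pi for i, pi in enumerate(p) if i != target)
    p_hat = gsoftmax([z for i, z in enumerate(logits) if i != target], T)
    return [p_t, p_not_t], p_hat


# Hypothetical logits for one sample whose target class is index 0.
logits = [2.0, 0.5, 1.5, -1.0]
b, p_hat = binary_and_nontarget(logits, target=0, T=4.0)
```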

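The classical distillation loss uses the KL divergence as the loss function. Next, the KL divergence is reformulated in terms of the binary probabilities $b$ and the non-target probabilities $\hat{p}$. With superscripts $\mathcal{T}$ and $\mathcal{S}$ denoting the teacher and student models, the classical KL divergence can be expressed as follows:

$$\mathrm{KD} = \mathrm{KL}\big(p^{\mathcal{T}} \,\|\, p^{\mathcal{S}}\big) = p_t^{\mathcal{T}} \log\frac{p_t^{\mathcal{T}}}{p_t^{\mathcal{S}}} + \sum_{i=1,\,i\neq t}^{C} p_i^{\mathcal{T}} \log\frac{p_i^{\mathcal{T}}}{p_i^{\mathcal{S}}} \tag{16}$$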

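From Formulas (12), (14), and (15), we obtain $\hat{p}_i = p_i / p_{\setminus t}$, that is, $p_i = p_{\setminus t}\,\hat{p}_i$. The above formula can therefore be rewritten as follows:

$$\mathrm{KD} = p_t^{\mathcal{T}} \log\frac{p_t^{\mathcal{T}}}{p_t^{\mathcal{S}}} + p_{\setminus t}^{\mathcal{T}} \sum_{i=1,\,i\neq t}^{C} \hat{p}_i^{\mathcal{T}} \left(\log\frac{\hat{p}_i^{\mathcal{T}}}{\hat{p}_i^{\mathcal{S}}} + \log\frac{p_{\setminus t}^{\mathcal{T}}}{p_{\setminus t}^{\mathcal{S}}}\right) \tag{17}$$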

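Since $\sum_{i\neq t}\hat{p}_i^{\mathcal{T}} = 1$, transforming the above formula gives the following:

$$\mathrm{KD} = p_t^{\mathcal{T}} \log\frac{p_t^{\mathcal{T}}}{p_t^{\mathcal{S}}} + p_{\setminus t}^{\mathcal{T}} \log\frac{p_{\setminus t}^{\mathcal{T}}}{p_{\setminus t}^{\mathcal{S}}} + p_{\setminus t}^{\mathcal{T}} \sum_{i=1,\,i\neq t}^{C} \hat{p}_i^{\mathcal{T}} \log\frac{\hat{p}_i^{\mathcal{T}}}{\hat{p}_i^{\mathcal{S}}} \tag{18}$$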

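Thus, the distillation loss between the student model and the teacher model can be expressed as follows:

$$\mathrm{KD} = \mathrm{KL}\big(b^{\mathcal{T}} \,\|\, b^{\mathcal{S}}\big) + \big(1 - p_t^{\mathcal{T}}\big)\,\mathrm{KL}\big(\hat{p}^{\mathcal{T}} \,\|\, \hat{p}^{\mathcal{S}}\big) \tag{19}$$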

The KD loss is thereby decoupled and reformulated as a weighted sum of two terms. $\mathrm{KL}(b^{\mathcal{T}}\|b^{\mathcal{S}})$ measures the similarity between the teacher's and student's binary probabilities for the target class and is called target class knowledge distillation (TCKD). $\mathrm{KL}(\hat{p}^{\mathcal{T}}\|\hat{p}^{\mathcal{S}})$ measures the similarity between the teacher's and student's probabilities over the non-target classes and is called non-target class knowledge distillation (NCKD). The formula can then be expressed as follows:

$$\mathrm{KD} = \mathrm{TCKD} + \big(1 - p_t^{\mathcal{T}}\big)\,\mathrm{NCKD} \tag{20}$$

Finally, after the classical KD loss is decomposed into TCKD and NCKD, two hyperparameters, $\alpha$ and $\beta$, are introduced to form the final decoupled knowledge distillation loss:

$$\mathrm{DKD} = \alpha\,\mathrm{TCKD} + \beta\,\mathrm{NCKD} \tag{21}$$

where the hyperparameters $\alpha$ and $\beta$ are the weights of TCKD and NCKD, respectively, and can be adjusted to balance the two terms. In the classical KD loss, TCKD has a fixed weight of 1 and NCKD is weighted by $1 - p_t^{\mathcal{T}}$. The two weights are therefore coupled, and the importance of each term cannot be adjusted independently.

Specifically, TCKD transfers knowledge about the difficulty of the training samples and yields more significant improvements on more challenging training data. NCKD transfers knowledge among the non-target classes. In classical KD, NCKD is suppressed when its weight $1 - p_t^{\mathcal{T}}$ is small, that is, when the teacher is highly confident in the target class.
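A compact plain-Python sketch of the DKD loss for a single sample follows. The function names are hypothetical. The sketch computes TCKD, NCKD, and the classical KD loss, so the decomposition $\mathrm{KD} = \mathrm{TCKD} + (1 - p_t^{\mathcal{T}})\,\mathrm{NCKD}$ can be verified numerically. `alpha` and `beta` deliberately have no defaults, because their values are a tuning choice.

```python
import math


def softmax_t(logits, T):
    """Temperature-scaled Softmax."""
    m = max(logits)
    exps = [math.exp((z - m) / T) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def kl(p, q):
    """KL(p || q) for discrete distributions with q_i > 0 wherever p_i > 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def dkd_terms(student_logits, teacher_logits, target, T):
    """Return (TCKD, NCKD, classical KD, teacher target probability p_t^T)."""
    p_s, p_t = softmax_t(student_logits, T), softmax_t(teacher_logits, T)
    b_s = [p_s[target], 1.0 - p_s[target]]          # binary probabilities b^S
    b_t = [p_t[target], 1.0 - p_t[target]]          # binary probabilities b^T
    hat_s = softmax_t([z for i, z in enumerate(student_logits) if i != target], T)
    hat_t = softmax_t([z for i, z in enumerate(teacher_logits) if i != target], T)
    tckd = kl(b_t, b_s)                              # KL(b^T || b^S)
    nckd = kl(hat_t, hat_s)                          # KL(p_hat^T || p_hat^S)
    kd = kl(p_t, p_s)                                # classical KD, Formula (16)
    return tckd, nckd, kd, p_t[target]


def dkd_loss(student_logits, teacher_logits, target, alpha, beta, T):
    """Formula (21): DKD = alpha * TCKD + beta * NCKD."""
    tckd, nckd, _, _ = dkd_terms(student_logits, teacher_logits, target, T)
    return alpha * tckd + beta * nckd
```

Calling `dkd_loss` with `alpha=1.0` and `beta=1.0 - p_t` (where `p_t` is the teacher's target probability) reproduces the classical KD loss, which makes the coupling explicit. Choosing β independently keeps NCKD influential even when the teacher is confident.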

4. Results

In this section, the experimental results of the network model on the TAA thermal adaptive behavior dataset are analyzed in detail; the recognition accuracy of the model is evaluated through performance tests and ablation experiments; and the effects of different distillation strategies and parameter settings on the performance of the student model are compared. The experimental results show that, although some accuracy of the simplified student model was lost, the number of parameters and the calculation cost of the model were significantly reduced. By adjusting the distillation temperature, the performance of the student model was further optimized, and finally, a good recognition effect was achieved for various thermal adaptation behaviors.

4.1. Experimental Setup and Implementation

In order to verify the validity of the network model, experiments were conducted on the TAA dataset of thermal adaptive behavior. In this section, the setup and implementation of the experiments (including the ablation experiments) and the performance testing are introduced; additionally, the experimental results are analyzed and discussed in detail.

The algorithm was implemented in the open-source PyTorch 1.7.0 deep learning framework and optimized with stochastic gradient descent (SGD). The momentum and weight decay parameters were set to and , respectively, and the cross-entropy loss was used to back-propagate gradients. The initial learning rate was . Training was performed end-to-end for 50 epochs, with the values of and set to . The experiments were run on the Ubuntu operating system (version ) using a workstation equipped with an NVIDIA Titan RTX GPU and 32 GB of RAM.

4.2. Experimental Results and Analysis

To verify the effectiveness of the proposed recognition method, its accuracy was first compared on the TAA dataset with that of other existing RGB-based recognition methods. The results of this comparison are shown in Table 2. The proposed thermal adaptive behavior-recognition model based on cross-modal knowledge distillation achieved a top-1 recognition accuracy of 89.24%, second only to Wang et al.'s method [41]. Its accuracy was also considerably higher than that of the two-stream method (71.04%), which indicates a clear advantage in recognition accuracy.

Table 2.

Comparison of recognition accuracy of different methods on TAA dataset .

Table 3 shows the number of parameters and GFLOPs of the teacher and student networks on the TAA dataset. Wang et al. [41] used three separate network models to learn and infer thermal adaptation behavior categories from appearance, motion, and pose features, so their model has three times as many parameters as the proposed method. More complex models are generally believed to have deeper and wider network structures, more parameters, and stronger nonlinear fitting capabilities. These properties allow them to learn more complex and refined feature representations. However, although such models can achieve higher recognition accuracy, their large number of parameters makes terminal deployment difficult. The proposed method loses about 7% accuracy, but its number of parameters and GFLOPs are reduced to one-third of those of Wang et al.'s model [41].

Table 3.

Comparison of model parameters and GFLOPs.

In terms of model efficiency, this method greatly reduces the number of parameters and the consumption of computing resources while maintaining a high recognition accuracy, which indicates that it may be more efficient in practical applications. Considering the recognition accuracy and model efficiency comprehensively, this method provided a good balance point for the TAA dataset. In a resource-constrained environment in particular, the thermal adaptive behavior-recognition model based on cross-modal knowledge distillation is a more suitable choice.

Tables 4 and 5 show the recognition accuracy of heat-related and cold-related thermal adaptive behaviors, respectively, on the TAA dataset. The recognition accuracy of heat-related adaptive behaviors generally exceeded 80%. Among the behaviors associated with feeling hot, the method achieved the highest accuracy for “wipe perspiration” and “fan self with an object” (94.44% and 92.59%, respectively). The accuracy for “fan self with one’s shirt” and “take off a hat/cap” was 83.18% and 84.11%, respectively. Among the cold-related behaviors, “narrowed shoulders” and “stamp one’s feet” had the highest accuracy, at 94.39% and 96.3%, respectively, while “sneeze/cough” had the lowest, at 82.41%.

Table 4.

The recognition results of heat-related discomfort actions on the TAA dataset.

Table 5.

The recognition results of cold-related discomfort actions on the TAA dataset.

Although the overall accuracy of this method was lower than that of Wang et al.'s method [41], our method still performed better in identifying some thermal adaptive behaviors. In particular, its recognition accuracy exceeded that of Duan et al.'s method [60] for six behaviors: “fan self with an object”, “fan self with hands”, “wipe perspiration”, “stamp one’s feet”, “rub one’s hands”, and “narrowed shoulders”.

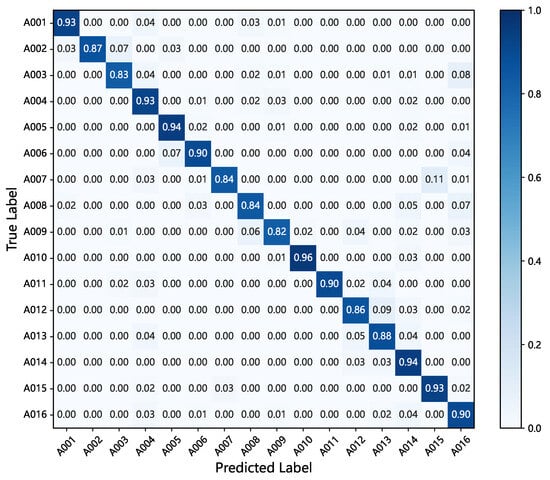

To further analyze the differences among the 16 thermal adaptation behaviors, the per-class recognition results were examined using the confusion matrix shown in Figure 4. The model performed well on most actions. For thermal adaptation behaviors such as “fan self with an object”, “roll up sleeves”, “stamp one’s feet”, and “narrowed shoulders”, the accuracy exceeded 90%. For behaviors such as “fan self with one’s shirt”, “take off a jacket”, “take off a hat/cap”, “sneeze/cough”, and “blow into one’s hands”, the prediction accuracy was lower. Among these, 11% of “take off a jacket” instances were incorrectly predicted as “put on a jacket”, while 3% of “put on a jacket” instances were incorrectly predicted as “take off a jacket”. Thus, the model confuses putting on and taking off a jacket. Both behaviors involve a jacket, and the relative positions of body parts are extremely similar while the actions occur. Behavior inference based on RGB data streams is strongly affected by objects. In future research, we will design a model that increases the inference weight of temporal features and focuses on the temporal displacement of objects. Specifically, we expect that tracking temporal changes in the jacket's position will reduce the misjudgment rate between putting on and taking off a jacket. Although the accuracy for these behaviors was relatively low, it still exceeded 80%, so the model can still be used to identify the thermal adaptation behavior of building occupants.
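Per-class accuracy and pairwise confusion rates, such as the 11% and 3% quoted above, are read from the rows of the confusion matrix. The sketch below shows that computation. The function names are hypothetical. The 2 × 2 counts are invented so that they reproduce the two reported rates; the actual matrix in Figure 4 covers all 16 classes.

```python
def per_class_accuracy(cm):
    """cm[i][j] = number of clips of true class i predicted as class j.

    Returns the row-normalised diagonal, i.e. the fraction of each true class
    that was recognised correctly (per-class recall).
    """
    return [row[i] / sum(row) if sum(row) else 0.0 for i, row in enumerate(cm)]


def confusion_rate(cm, true_cls, pred_cls):
    """Fraction of clips of true_cls that were predicted as pred_cls."""
    row = cm[true_cls]
    total = sum(row)
    return row[pred_cls] / total if total else 0.0


# Hypothetical counts (not the paper's data): index 0 = "take off a jacket",
# index 1 = "put on a jacket".
cm = [[89, 11],
      [3, 97]]
```

Rows are normalized rather than columns, so each rate answers the question "of the clips that truly show this action, what fraction went where?". This is the reading used in the analysis above.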

Figure 4.

The confusion matrix of the recognition results.

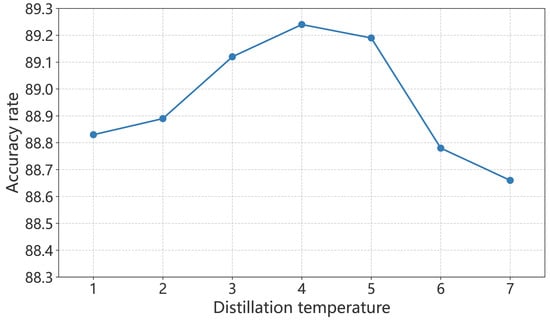

Figure 5 shows the student model's recognition accuracy for thermal adaptation behaviors at different distillation temperatures. As the distillation temperature increased, the accuracy of the student network first increased and then decreased, peaking at a distillation temperature of 4. A low distillation temperature makes the soft labels too sharp, so they carry little information beyond the hard label. A high temperature makes them too smooth and washes out useful information about the relative class probabilities. This experiment therefore identified the distillation temperature at which the student network learns most effectively from the teacher networks for thermal adaptive behavior recognition, which helps the model perform well in online testing.

Figure 5.

Recognition accuracy curve under different distillation temperatures.

4.3. Ablation Experiment

Table 6 shows the accuracy of the student model distilled with motion and/or pose teachers. Using either motion or pose information as the teacher improved the recognition performance of the student model to some extent. When both were used together as teachers, the student model reached a recognition accuracy of 89.24%, about 7% lower than that of the three-stream fusion method.

Table 6.

Comparison of recognition accuracy of different teacher models (%); M and P, respectively, represent the motion mode and the pose mode as teachers.

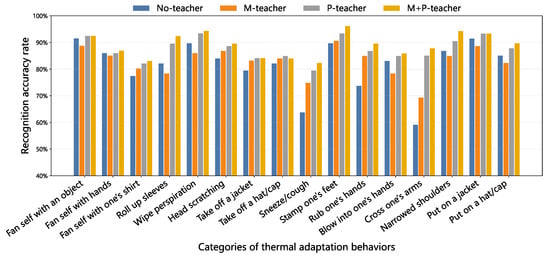

Figure 6 shows how different teacher modalities affect the student model's recognition accuracy for each of the 16 behavior classes. Four teacher settings are compared: no teacher (blue), the motion-modality teacher M (orange), the pose-modality teacher P (yellow), and the combined M + P teachers (gray). In most cases, the student model guided by a teacher achieved higher accuracy than the model without teacher guidance, which indicates that distillation from the teacher models effectively improves the student model. For most behavior categories, guidance from teacher M or teacher P alone also yielded higher accuracy than no guidance. This indicates that both motion and pose information are useful features for improving the model's recognition ability.

Figure 6.

Comparison of recognition accuracy for various thermal adaptation behaviors among different teacher network models.
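A per-class comparison such as Figure 6 can be computed from a model's predictions as the fraction of each true class's samples that were labeled correctly, after which the classes where a teacher-guided student beats the unguided one can be counted. The sketch below is a minimal illustration under that assumption; the class label "A001", the predictions, and the function names are hypothetical and are not taken from this study.

```python
from collections import defaultdict

def per_class_accuracy(y_true, y_pred):
    """Return {class: fraction of that class's samples predicted correctly}."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for t, p in zip(y_true, y_pred):
        total[t] += 1
        correct[t] += int(t == p)
    return {c: correct[c] / total[c] for c in sorted(total)}

def classes_improved(baseline, guided):
    """List the classes where the guided model's accuracy exceeds the baseline's."""
    return [c for c in baseline if guided.get(c, 0.0) > baseline[c]]

# Hypothetical labels for two behavior classes (not data from this study).
y_true = ["A001", "A001", "A013", "A013"]
no_teacher = per_class_accuracy(y_true, ["A001", "A013", "A001", "A001"])
mp_teacher = per_class_accuracy(y_true, ["A001", "A001", "A013", "A001"])
print(no_teacher, mp_teacher, classes_improved(no_teacher, mp_teacher))
```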

In some categories (e.g., “A013: crossed one’s arms”), the recognition accuracy without teacher guidance was unusually low, possibly because the behavioral features of that class are difficult to capture from single-modality data or because the student model learned that category poorly. The student model guided by the M + P teacher achieved the highest recognition accuracy in most categories, indicating that combining motion and pose information allows the teacher to capture behavioral characteristics more completely and thus to provide more informative guidance, which markedly improves the student model's behavior classification accuracy. This result highlights the important role of cross-modal knowledge distillation in improving the performance of student models.
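One common way for a combined M + P teacher to guide a single student in logit-based distillation is to average the teachers' temperature-softened outputs into one soft target and add a hard-label cross-entropy term. The sketch below illustrates that idea under stated assumptions: equal teacher weights, alpha = 0.5, a default T = 4, and the T² scaling commonly applied to the soft term are choices made for illustration, not necessarily the loss used in this study, and all function names are hypothetical.

```python
import math

def softmax(logits, T=1.0):
    """Temperature-scaled softmax, shifted by the max logit for numerical stability."""
    m = max(logits)
    exps = [math.exp((z - m) / T) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]

def kl_divergence(p, q):
    """KL(p || q) in nats for two discrete distributions over the same classes."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def multi_teacher_kd_loss(student_logits, teacher_logits_list, label,
                          T=4.0, alpha=0.5, weights=None):
    """Hypothetical loss: alpha * T^2 * KL(teacher mix || student) + (1 - alpha) * CE."""
    n = len(teacher_logits_list)
    if weights is None:
        weights = [1.0 / n] * n  # assumption: teachers weighted equally
    teacher_probs = [softmax(t, T) for t in teacher_logits_list]
    # Weighted average of the teachers' softened distributions (e.g., M and P teachers).
    target = [sum(w * probs[i] for w, probs in zip(weights, teacher_probs))
              for i in range(len(student_logits))]
    soft_loss = (T ** 2) * kl_divergence(target, softmax(student_logits, T))
    # Standard cross-entropy between the student (at T = 1) and the true label.
    hard_loss = -math.log(softmax(student_logits, 1.0)[label])
    return alpha * soft_loss + (1.0 - alpha) * hard_loss

# Hypothetical logits for three classes (illustrative only).
student = [1.5, 0.3, -0.2]
motion_teacher = [2.5, 0.1, -1.0]
pose_teacher = [1.8, 1.2, -0.5]
print(multi_teacher_kd_loss(student, [motion_teacher, pose_teacher], label=0))
```

Averaging probabilities rather than logits keeps the target a valid distribution; per-teacher weights could instead be tuned or learned, which is one way the single-teacher (M or P) and combined (M + P) settings in Table 6 differ.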

5. Conclusions

In this study, a lightweight thermal adaptive behavior-recognition method based on knowledge distillation was proposed. By designing a knowledge distillation framework consisting of a multi-teacher network and a student network, the motion knowledge and skeleton (pose) knowledge learned by the teacher networks were transferred to the student network, which effectively improved the performance of the student network on the action recognition task. The experimental results showed that knowledge distillation is an effective model compression technique. The proposed method reduced the complexity and computational cost of the model while maintaining the accuracy of thermal adaptation behavior recognition, and it provides a basis for deploying real-time thermal adaptation behavior recognition on terminal devices inside buildings. Nevertheless, the current method has some limitations. The logit-based distillation method introduces additional hyperparameters, such as the distillation temperature, which may need to be retuned when thermal adaptation behavior is recognized in different indoor settings. To make the model adaptable to different indoor scenarios without manual tuning, future work will (1) learn universal representations from unlabeled data through self-supervised learning; (2) integrate reinforcement learning methods so that the model can learn in real time from online environments; and (3) increase the diversity of scenes and camera viewpoints in the training dataset, for example, by including libraries, offices, and conference rooms.

Author Contributions

Methodology, Y.W.; Formal analysis, X.L.; Investigation, W.Y.; Data curation, D.S.; Writing—original draft, W.D.; Writing—review & editing, W.D. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the Foundation of Shandong Jianzhu University under grant X24046 and in part by the Youth Project of the Natural Science Foundation of Shandong Province under grant ZR2023QA092.

Institutional Review Board Statement

This study does not involve sensitive personal information or commercial interests, uses only anonymized survey data, and poses no potential risk to participants; therefore, ethical review and approval were not required.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding authors.

Acknowledgments

The authors acknowledge Cunqian Wang for reviewing and editing this paper.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Yang, Z.; Ghahramani, A.; Becerik-Gerber, B. Building occupancy diversity and HVAC (heating, ventilation, and air conditioning) system energy efficiency. Energy 2016, 109, 641–649.

- Allen, J.G.; Macomber, J.D. Healthy Buildings: How Indoor Spaces Drive Performance and Productivity; Harvard University Press: Cambridge, MA, USA, 2020.

- van Marken Lichtenbelt, W.; Hanssen, M.; Pallubinsky, H.; Kingma, B.; Schellen, L. Healthy excursions outside the thermal comfort zone. Build. Res. Inf. 2017, 45, 819–827.

- Lima, F.; Ferreira, P.; Leal, V. A review of the relation between household indoor temperature and health outcomes. Energies 2020, 13, 2881.

- Wang, Y.; Wang, Z.; Zhang, N.; Ji, W.; Zhu, Y.; Cao, B. Field studies on thermal comfort in China over the past 30 years. Build. Environ. 2024, 269, 112449.

- Zhang, H.; Arens, E.; Huizenga, C.; Han, T. Thermal sensation and comfort models for non-uniform and transient environments: Part I: Local sensation of individual body parts. Build. Environ. 2010, 45, 380–388.

- Nicol, F.; Humphreys, M.; Roaf, S. Adaptive Thermal Comfort: Principles and Practice; Routledge: London, UK, 2012.

- Zagreus, L.; Huizenga, C.; Arens, E.; Lehrer, D. Listening to the occupants: A Web-based indoor environmental quality survey. Indoor Air 2004, 14, 65–74.

- Sanguinetti, A.; Pritoni, M.; Salmon, K.; Meier, A.; Morejohn, J. Upscaling participatory thermal sensing: Lessons from an interdisciplinary case study at University of California for improving campus efficiency and comfort. Energy Res. Soc. Sci. 2017, 32, 44–54.

- Chaudhuri, T.; Soh, Y.C.; Li, H.; Xie, L. Machine learning driven personal comfort prediction by wearable sensing of pulse rate and skin temperature. Build. Environ. 2020, 170, 106615.

- Wang, X.; Li, D.; Menassa, C.C.; Kamat, V.R. Investigating the effect of indoor thermal environment on occupants’ mental workload and task performance using electroencephalogram. Build. Environ. 2019, 158, 120–132.

- Wu, M.; Li, H.; Qi, H. Using electroencephalogram to continuously discriminate feelings of personal thermal comfort between uncomfortably hot and comfortable environments. Indoor Air 2020, 30, 534–543.

- Li, H.; Liu, Y.; Wu, H.; Lin, B.; Lei, L.; He, J. Thermal preference prediction through infrared thermography technology: Recognizing adaptive behaviors. Build. Environ. 2024, 262, 111829.

- Zheng, P.; Wang, C.; Liu, Y.; Lin, B.; Wu, H.; Huang, Y.; Zhou, X. Thermal adaptive behavior and thermal comfort for occupants in multi-person offices with air-conditioning systems. Build. Environ. 2022, 207, 108432.

- Ghahramani, A.; Castro, G.; Becerik-Gerber, B.; Yu, X. Infrared thermography of human face for monitoring thermoregulation performance and estimating personal thermal comfort. Build. Environ. 2016, 109, 1–11.

- Li, W.; Zhang, J.; Zhao, T.; Liang, R. Experimental research of online monitoring and evaluation method of human thermal sensation in different active states based on wristband device. Energy Build. 2018, 173, 613–622.

- Fan, C.; He, W.; Liao, L. Real-time machine learning-based recognition of human thermal comfort-related activities using inertial measurement unit data. Energy Build. 2023, 294, 113216.

- Wang, X.; Qiao, Y.; Wu, N.; Li, Z.; Qu, T. On optimization of thermal sensation satisfaction rate and energy efficiency of public rooms: A case study. J. Clean. Prod. 2018, 176, 990–998.

- Yang, B.; Li, X.; Hou, Y.; Meier, A.; Cheng, X.; Choi, J.H.; Wang, F.; Wang, H.; Wagner, A.; Yan, D.; et al. Non-invasive (non-contact) measurements of human thermal physiology signals and thermal comfort/discomfort poses-A review. Energy Build. 2020, 224, 110261.

- Yu, L.; Qin, S.; Zhang, M.; Shen, C.; Jiang, T.; Guan, X. A review of deep reinforcement learning for smart building energy management. IEEE Internet Things J. 2021, 8, 12046–12063.

- Elhanashi, A.; Dini, P.; Saponara, S.; Zheng, Q. Integration of deep learning into the IoT: A survey of techniques and challenges for real-world applications. Electronics 2023, 12, 4925.

- Jin, R.; Xu, P.; Gu, J.; Xiao, T.; Li, C.; Wang, H. Review of optimization control methods for HVAC systems in Demand Response (DR): Transition from model-driven to model-free approaches and challenges. Build. Environ. 2025, 280, 113045.

- Burzo, M.; Wicaksono, C.; Abouelenien, M.; Perez-Rosas, V.; Mihalcea, R.; Tao, Y. Multimodal sensing of thermal discomfort for adaptive energy saving in buildings. In Proceedings of the iiSBE Net Zero Built Environment 2014 Symposium, Gainesville, FL, USA, 6–7 March 2014.

- Ranjan, J.; Scott, J. ThermalSense: Determining dynamic thermal comfort preferences using thermographic imaging. In Proceedings of the 2016 ACM International Joint Conference on Pervasive and Ubiquitous Computing, Heidelberg, Germany, 12–16 September 2016; pp. 1212–1222.

- Cosma, A.C.; Simha, R. Using the contrast within a single face heat map to assess personal thermal comfort. Build. Environ. 2019, 160, 106163.

- Cosma, A.C.; Simha, R. Machine learning method for real-time non-invasive prediction of individual thermal preference in transient conditions. Build. Environ. 2019, 148, 372–383.

- Cheng, X.; Yang, B.; Hedman, A.; Olofsson, T.; Li, H.; Van Gool, L. NIDL: A pilot study of contactless measurement of skin temperature for intelligent building. Energy Build. 2019, 198, 340–352.

- Jung, W.; Jazizadeh, F. Vision-based thermal comfort quantification for HVAC control. Build. Environ. 2018, 142, 513–523.

- Kim, S.; Park, C.S. Quantification of aleatoric and epistemic uncertainty in Data-Driven occupant behavior model for building performance simulation. Energy Build. 2025, 340, 115818.

- Ji, Y.; Zhao, M.; Tian, W.; Wang, X.; Xie, J.; Liu, J. Research on the feature of thermal environment and auxiliary behavior of air-conditioning in buildings with substandard central heating. Energy Build. 2025, 341, 115827.

- Yan, H.; Pan, Y.; Dong, M.; Zhang, H.; Li, J.; Zhao, S. Energy efficiency and comfort: Analysis of thermal responses and behaviors of residents with high and low air conditioning dependency. Energy Build. 2025, 338, 115695.

- Yu, M.; Tang, Z.; Tao, Y.; Ma, L.; Liu, Z.; Dai, L.; Zhou, H.; Liu, M.; Li, Z. Thermal comfort prediction in multi-occupant spaces based on facial temperature and human attributes identification. Build. Environ. 2024, 262, 111772.

- Cen, C.; Tan, E.; Valliappan, S.; Ang, E.; Chen, Z.; Wong, N.H. Students’ thermal comfort and cognitive performance in tropical climates: A comparative study. Energy Build. 2025, 341, 115817.

- Zhao, Y.; Genovese, P.V.; Li, Z. Intelligent Thermal Comfort Controlling System for Buildings Based on IoT and AI. Future Internet 2020, 12, 30.

- Li, Y.; Liu, J.; Wang, L. Lightweight Network Research Based on Deep Learning: A Review. In Proceedings of the 2018 37th Chinese Control Conference (CCC), Wuhan, China, 25–27 July 2018; pp. 9021–9026.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

- Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2018, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6848–6856.

- Blalock, D.W.; Ortiz, J.J.G.; Frankle, J.; Guttag, J.V. What is the State of Neural Network Pruning? In Proceedings of the Third Conference on Machine Learning and Systems, MLSys 2020, Austin, TX, USA, 2–4 March 2020; Dhillon, I.S., Papailiopoulos, D.S., Sze, V., Eds.; 2020. Available online: https://arxiv.org/abs/2003.03033 (accessed on 9 October 2025).

- Romero, A.; Ballas, N.; Kahou, S.E.; Chassang, A.; Gatta, C.; Bengio, Y. FitNets: Hints for Thin Deep Nets. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, 7–9 May 2015; Conference Track Proceedings. Bengio, Y., LeCun, Y., Eds.; 2015.

- Wang, J.; Bian, C.; Zhou, X.; Lyu, F.; Niu, Z.; Feng, W. Online Knowledge Distillation for Efficient Action Recognition. In Proceedings of the 2022 IEEE 2nd International Conference on Computer Communication and Artificial Intelligence (CCAI), Beijing, China, 6–8 May 2022; pp. 177–181.

- Wang, Y.; Wang, Y.; Duan, W.; Zheng, Y.; Duan, P. Joint measurement of thermal discomfort by occupant pose, motion and appearance in indoor surveillance videos for building energy saving. J. Circuits Syst. Comput. 2024, 33, 2450051.

- Yan, S.; Xiong, Y.; Lin, D. Spatial temporal graph convolutional networks for skeleton-based action recognition. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

- Alkhulaifi, A.; Alsahli, F.; Ahmad, I. Knowledge distillation in deep learning and its applications. PeerJ Comput. Sci. 2021, 7, e474.

- Wang, Q.; Zhan, L.; Thompson, P.M.; Zhou, J. Multimodal Learning with Incomplete Modalities by Knowledge Distillation. In Proceedings of the KDD ’20: The 26th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Virtual Event, CA, USA, 23–27 August 2020; Gupta, R., Liu, Y., Tang, J., Prakash, B.A., Eds.; ACM: New York, NY, USA, 2020; pp. 1828–1838.

- Wu, W.; Wang, X.; Luo, H.; Wang, J.; Yang, Y.; Ouyang, W. Bidirectional Cross-Modal Knowledge Exploration for Video Recognition with Pre-trained Vision-Language Models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2023, Vancouver, BC, Canada, 17–24 June 2023; pp. 6620–6630.