Abstract

With the rapid advancement of digital technologies, smart construction has emerged as a transformative approach within the construction industry. Central to the success of human-machine collaboration is human-machine trust, which plays a critical role in safety, performance, and the adoption of intelligent systems. This study develops and empirically tests a comprehensive structural equation model to explore the formation mechanism of human-machine trust in smart construction. Drawing on the three-domain framework, five primary constructs (role cognition, controllability, technology attachment, equipment reliability, and autonomy) are identified across individual and system dimensions. The model also incorporates trust propensity and task complexity as contextual moderators. A questionnaire survey of construction professionals in China yielded 288 valid responses, and partial least squares structural equation modelling (PLS-SEM) was employed to analyze the data. The results confirm that all five constructs significantly and positively influence human-machine trust, with role cognition and autonomy having the strongest effects. Furthermore, trust propensity positively moderates the impact of individual traits, while task complexity negatively moderates the effect of equipment characteristics on trust formation. These findings provide theoretical insights and practical guidance for the design of trustworthy intelligent systems, which can foster safer and more effective human-machine collaboration in smart construction.

1. Introduction

Driven by the global wave of digitalization and intelligence, the construction industry is undergoing a profound transformation toward automation and smart development, commonly referred to as smart construction. This emerging paradigm seeks to optimize the entire life cycle of construction projects by integrating cutting-edge technologies such as robotics, artificial intelligence (AI), the Internet of Things (IoT), and big data analytics [1]. This transformation fundamentally reshapes traditional construction methods and management practices. For instance, the application of robotic technologies enhances the safety of complex and hazardous tasks, automated equipment improves construction precision, and intelligent monitoring systems facilitate real-time data analytics and risk prediction throughout the construction process.

Despite the opportunities introduced by smart construction, new challenges and uncertainties have emerged. In smart construction, human-machine collaboration becomes the norm, and workers increasingly take on the roles of “technical operators” and “equipment managers”. This shift imposes greater demands on workers’ technological acceptance, psychological adjustment, and behavioral adaptation. Notably, understanding how workers interact with automated equipment under high-risk and uncertain conditions has become a critical concern in smart construction research [2,3].

Human-machine trust is widely acknowledged as a cornerstone of effective collaboration within intelligent and automated systems. In smart construction contexts, where tasks are often complex, environments dynamic, and safety requirements high, trust is vital for guiding human behavior, reducing cognitive burden, and ensuring reliable coordination between humans and machines. Human-machine trust refers to the extent to which users believe that an automated system is capable, dependable, and can perform as expected [4]. When well-calibrated, trust facilitates the delegation of control to machines, thereby enhancing efficiency and safety on construction sites. Recent research on human-machine trust has predominantly focused on domains such as autonomous driving, medical robotics, and intelligent manufacturing. These studies typically examine how system-related factors (e.g., reliability, autonomy) and user-related factors (e.g., role cognition, perceived controllability, technology attachment) influence trust in structured and stable environments. However, limited attention has been given to trust formation in smart construction, which is often characterized by high variability, task complexity, and operational uncertainty. The unique dynamics of human-machine collaboration in construction thus present distinct challenges that differ significantly from other domains.

Accordingly, it is crucial to explore the mechanisms and influencing factors of human-machine trust tailored to smart construction settings. Such exploration can help inform the design of trustworthy intelligent systems and promote safe and efficient collaboration in practice. Prior studies frequently examine system performance or user behavior in isolation, overlooking the multifaceted interplay among human, machine, and environmental factors [5]. In particular, few investigations have considered how trust evolves in response to factors such as system autonomy, feedback quality, and contextual variables like task complexity and role ambiguity. This lack of integrated research presents both theoretical and practical gaps in the development of dependable intelligent systems suited to the construction industry.

The mechanisms of trust formation within construction contexts remain unclear in existing research and require further investigation. To address this issue, the present study proposes a new research framework that incorporates individual attributes, equipment characteristics, and contextual factors to explain how human-machine trust forms in smart construction settings. It focuses on key variables such as role cognition, controllability, technology attachment, equipment reliability, and autonomy, while also examining the moderating effects of trust propensity and task complexity. This framework aims to capture the core pathways of trust formation and provide empirical insights for enhancing human-machine collaboration on construction sites.

2. Human-Machine Trust

2.1. The Connotation of Human-Machine Trust

With the rapid advancement of human-machine interaction, trust in machines has become a critical factor influencing whether individuals accept and rely on intelligent technologies. From autonomous vehicles and medical AI systems to construction robots, the level of trust humans place in machines directly affects both the effectiveness and safety of these technologies in practice. Despite extensive attention in the literature, no universally accepted definition of human-machine trust has been established. Definitions vary depending on disciplinary context and research objectives.

A widely cited definition is proposed by Lee and See [6], which has been adopted in numerous studies [7,8]. They suggest that trust encompasses an attitudinal stance, shaped by an individual’s perception of a system’s ability to achieve a goal under conditions of uncertainty and vulnerability. In their view, attitude influences willingness to rely on the system, but willingness and actual reliance do not necessarily equate to trust. Thus, trust is defined as an attitude toward an agent (e.g., an autonomous system) that is expected to assist in achieving a task (e.g., driving) under uncertain conditions. From a behavioral perspective, Hoffman et al. [9] define trust as the confidence users place in a system based on its demonstrated ability and reliability in specific tasks. Parasuraman and Riley [10], drawing from a psychological view, conceptualize trust as a mental state—an expectation or belief that the system will perform correctly. Merritt and Ilgen [11] further emphasize the role of perceived performance and emotional reliance. Sheridan [12], from an interactional perspective, considers trust as a dynamic outcome of user-system interaction, shaped by both machine capabilities and user experiences. Meanwhile, a system design perspective highlights the importance of transparency, predictability, and interface usability in fostering trust.

Some researchers have further advanced the understanding of human-machine trust. Hoff et al. [7] argue that in smart manufacturing environments, trust is influenced not only by machine capabilities, task complexity, and environmental uncertainty but also by users’ trust propensity and risk perception. Gao et al. [13] explore trust in autonomous driving, emphasizing the roles of information transparency, user-friendly interfaces, and perceived controllability. In the context of construction robotics, Wang [14] identifies trust as a key driver of operator reliance. However, trust bias—either over-trust or under-trust—may lead to unsafe behaviors on construction sites.

Despite differences in perspectives and definitions, core components of human-machine trust can be identified. These include machine reliability and autonomy, user-related factors such as role cognition, perceived controllability, and technology attachment, as well as task complexity. Trust is not static but evolves continuously across different contexts and interaction processes. A systematic understanding of how trust is formed and influenced is essential for promoting the effective use of intelligent technologies and enhancing human-machine collaboration.

2.2. Research Status of Human-Machine Trust

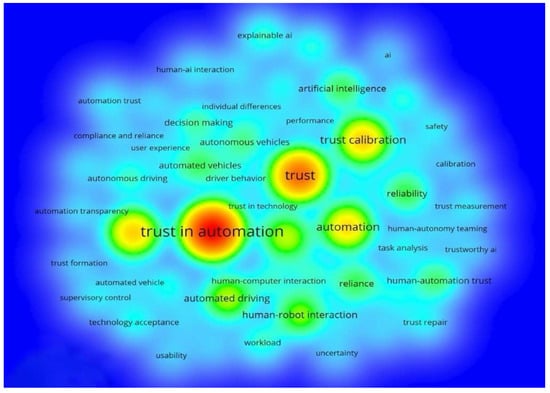

To gain a comprehensive understanding of the current research landscape on human-machine trust, a bibliometric analysis was conducted using VOSviewer 1.6.20, based on peer-reviewed publications retrieved from the Web of Science Core Collection. The search covered the period from January 2014 to December 2024 and employed the keywords “human-machine trust”, “trust in automation”, and “human-robot interaction” across titles, abstracts, and author keywords. A total of 563 English-language journal articles and reviews were selected for analysis. The keyword co-occurrence network is presented in Figure 1.

Figure 1.

Keyword co-occurrence network.

As shown in Figure 1, the most frequent co-occurring keywords cluster around core themes such as “trust in automation” [15,16], “trust” [12,17], and “trust calibration” [18]. These thematic areas indicate that current research places strong emphasis on understanding how trust is formed between users and intelligent systems across various high-risk domains.

Recent studies suggest that trust formation is influenced by multiple factors, including system performance, reliability, user experience, and task complexity. Chen et al. [19] demonstrated that machine transparency and task characteristics significantly shape users’ initial trust levels. Similarly, Zhou et al. [20] found that personality traits and prior experiences modulate individual tendencies to form trust in automated systems. Equipment-related factors, such as error rate and decision clarity [21], have also been shown to affect perceived system dependability.

Despite this progress, relatively few studies have explored trust formation mechanisms specifically within smart construction environments. In such contexts, trust is not only a psychological factor but also a key determinant of safety and performance. Most existing models are not tailored to smart construction, underscoring the need for research that identifies context-specific trust factors in construction.

2.3. Human-Machine Trust Hypothesis Model Development

Some studies have deepened the understanding of human-machine trust by highlighting its multi-dimensional nature across various high-risk environments such as robotics, autonomous systems, and smart construction. For example, Hancock et al. [22] emphasized that trust is shaped by a complex interplay of system reliability, user experience, and task conditions. Robinette et al. [23] further noted that trust can be easily disrupted by inconsistent robot behavior, even when system functionality remains intact. The three-domain model proposed by Madhavan and Wiegmann [24], which classifies trust antecedents into human, machine, and environmental dimensions, continues to be supported by recent literature. At the human level, Zhou et al. [20] confirmed that individual factors such as personality traits and trust propensity significantly influence trust calibration in collaborative scenarios. At the machine level, Schaefer et al. [25] found that users’ trust is largely driven by perceived autonomy and system performance consistency. At the environmental level, Lyons and Guznov [26] revealed that task complexity and environmental uncertainty amplify the importance of system transparency and adaptability.

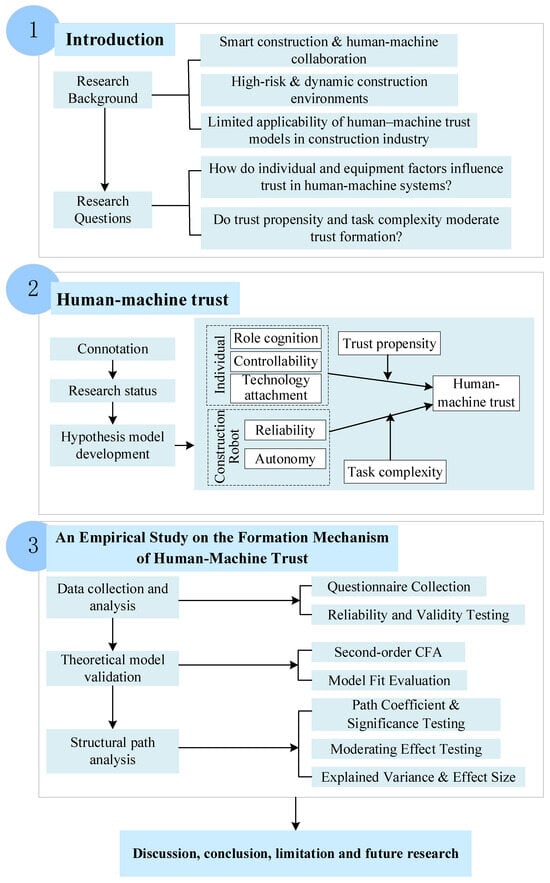

Drawing from this foundation, the study identifies five core constructs and two moderators that influence human-machine trust within the context of China’s smart construction industry. These are organized into three dimensions: individual attributes (role cognition, controllability, and technology attachment), equipment attributes (reliability and autonomy), and contextual factors (task complexity and trust propensity, which act as moderators), providing a comprehensive analytical framework for trust formation.

2.3.1. Individual-Related Factors

At construction sites, workers’ acceptance of and trust in smart devices are largely influenced by their individual characteristics. First, role cognition influences how individuals perceive their position and level of involvement when collaborating with intelligent systems, especially in the early period after intelligent equipment is introduced. Rooted in role theory, this concept describes the extent to which workers recognize themselves as actively participating in or overseeing system operations. When individuals perceive themselves as occupying a more central role in the collaborative process, they are more likely to engage with automation proactively and to develop trust more readily [27,28]. Conversely, when their perceived role becomes vague due to increasing automation, workers may become more hesitant to rely on the system [29].

Second, controllability refers to an individual’s perceived ability to monitor, influence, and respond to automation errors during human-machine interaction, which significantly affects trust calibration in high-risk environments. In high-autonomy systems, such as those used in mining and construction, workers often act as supervisors who must remain vigilant and respond to rare but critical failures. Studies in aviation and advanced manufacturing have demonstrated that low perceived controllability leads to either automation complacency or disengagement, whereas high controllability promotes active monitoring and resilience of trust [30,31,32]. In construction settings, when workers believe that errors are manageable and that they retain partial control, they are more likely to maintain trust even after unexpected events.

Third, technology attachment, defined as an individual’s emotional bond and psychological reliance on technology, is another important antecedent of trust. Research suggests that workers with higher attachment levels are more willing to trust newly introduced systems, particularly under uncertainty [33,34]. Familiarity and prior positive experiences with intelligent devices strengthen this bond, helping users interpret system behavior more favorably and accelerating the development of early-stage trust [24].

Based on the above literature review results, the following hypotheses are developed (see Figure 2):

Figure 2.

Research framework.

H1.

Role cognition positively influences construction workers’ trust in automated equipment.

H2.

Controllability positively influences construction workers’ trust in automated equipment.

H3.

Technology attachment positively influences construction workers’ trust in automated equipment.

2.3.2. Equipment-Related Factors

In smart construction, the increasing integration of automated equipment, such as intelligent lifting machinery, inspection robots, and collaborative construction devices, has made human-machine trust a critical factor influencing both operational safety and task performance. Among various system-level characteristics, equipment reliability plays a foundational role in shaping initial trust perceptions. Reliability refers to the consistency, predictability, and stability of equipment behavior across different task conditions. During early-stage interactions, reliable system performance helps reduce users’ uncertainty, enabling them to form positive expectations about the machine’s competence and intentions. Robinette et al. [23] emphasized that equipment functionality strongly predicts trust in initial phases of human-machine interaction, while Hancock et al. [22] identified system reliability as the most influential technical factor in trust formation, more impactful than surface-level features such as appearance or voice.

In construction settings, where tasks are high-risk and time-sensitive, workers are especially sensitive to system failures. Equipment that operates with stability, accurate responses, and behavioral consistency is more likely to be trusted to assist in task execution. However, studies have also shown that the relationship between reliability and trust is not always linear. Excessively flawless performance may cause workers to over-trust the system and relax their vigilance, leading to potential safety risks [26]. Conversely, repeated malfunctions or behavior inconsistent with worker expectations can rapidly erode trust, sometimes resulting in rejection of the equipment altogether.

Meanwhile, equipment autonomy is defined as the degree to which automated equipment can operate independently without continuous human intervention. While earlier studies emphasized the potential risks associated with excessive autonomy, recent findings suggest that enhancing the autonomy of automated equipment within controllable boundaries can help strengthen workers’ trust. Moderate levels of autonomy reduce the need for constant manual oversight, increase the perceived capability of the equipment, and allow workers to focus on supervisory or high-level tasks, which promotes more active human-machine collaboration [35,36]. Hence, the following hypotheses are proposed:

H4.

Equipment reliability has a significant positive effect on trust in automated equipment.

H5.

Equipment autonomy has a significant positive effect on trust in automated equipment.

2.3.3. Moderating Role of Trust Propensity

In smart construction, trust propensity significantly influences how workers form trust in intelligent equipment. As a stable personality trait, trust propensity reflects an individual’s general tendency to trust others or systems in uncertain situations [37]. Recent research shows that this trait is shaped by personality, education, and prior experience [20]. It not only affects initial trust judgments but also shapes how users interpret system reliability and feedback [38].

Trust propensity therefore serves not only as a foundational factor in interpersonal trust-building but also as a critical psychological variable in the formation of human-machine trust on construction sites. It shapes individuals’ subjective evaluations of equipment performance, reliability, and interactive feedback, particularly in situations characterized by limited information and early-stage interaction. Workers with higher trust propensity are more likely to evaluate new automated tools positively and to extend an initial baseline of trust, thereby accelerating the development of human-machine trust. Hence, the following hypothesis is proposed:

H6.

Trust propensity has a moderating effect on the formation of human-machine trust.

2.3.4. Moderating Role of Task Complexity

In smart construction, task complexity (for example, technical difficulty, safety demands, and scheduling pressure) directly influences human-machine trust. As complexity increases, workers face greater uncertainty and risk, relying more on intelligent equipment for support. Under such conditions, trust is shaped not only by actual system performance but also by whether users perceive the system as capable of assisting effectively in challenging scenarios. Higher task complexity may elevate users’ cognitive load and sensitivity to risk, leading to more cautious trust formation. For instance, Sultana and Nemati [39] found that in complex task settings, users are more likely to trust systems with high explainability and transparency. Similarly, Sapienza et al. [40] emphasized that interpretability and clarity in system behavior are crucial for trust development under demanding operational contexts.

This effect becomes particularly salient in complex construction tasks. When automation demonstrates stable performance in such settings, trust is more likely to form and strengthen. Conversely, during simple tasks with low perceived stakes, trust may not increase even if the system performs flawlessly. Hence, the following hypothesis is proposed:

H7.

Task complexity has a moderating effect on the formation of human-machine trust.

3. Research Methodology

Based on an extensive literature review, a questionnaire survey was conducted to collect empirical data for analyzing the factors influencing human-machine trust in smart construction environments. The analysis involved mean score analysis, correlation analysis, and structural equation modeling (SEM), using the Statistical Package for the Social Sciences (SPSS) 24.0 and SmartPLS 4 (version 4.1.0.9). Mean score analysis was used to assess the relative importance of each dimension contributing to human-machine trust, including individual attributes (e.g., controllability, technology attachment) and equipment characteristics (e.g., reliability, autonomy). Spearman’s rank correlation analysis was performed to explore the relationships among trust, task complexity, and demographic variables. SEM was employed to examine the direct and moderating effects of key variables, such as role cognition and task complexity, on human-machine trust formation. These analytical techniques have been widely applied in construction management and human-automation interaction research to uncover complex relationships between technical and psychological factors [22,41].
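As an illustration of the rank correlation step, the following is a minimal sketch of Spearman's coefficient, computed as the Pearson correlation of average ranks so that tied Likert scores are handled. The function names and the two short score lists are hypothetical and are not taken from the survey data; the study itself used SPSS for this analysis.

```python
from math import sqrt

def average_ranks(values):
    """Return 1-based ranks, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with the value at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def pearson(x, y):
    """Pearson correlation of two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation applied to the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))

# Hypothetical scores for five respondents (illustrative only).
trust_scores = [3, 4, 2, 5, 4]
complexity_scores = [2, 3, 1, 5, 4]
rho = spearman_rho(trust_scores, complexity_scores)
print(round(rho, 3))
```

Ranking before correlating is what makes the measure suitable for ordinal Likert responses, since only the ordering of the answers matters.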

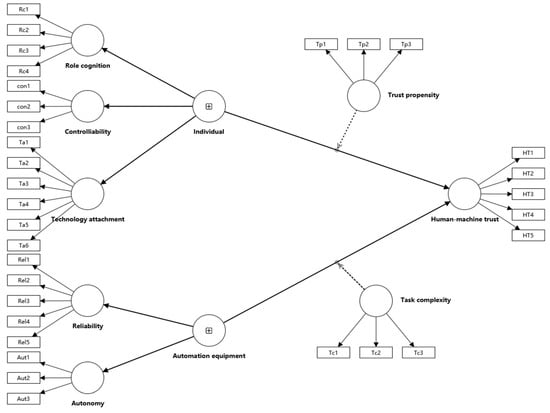

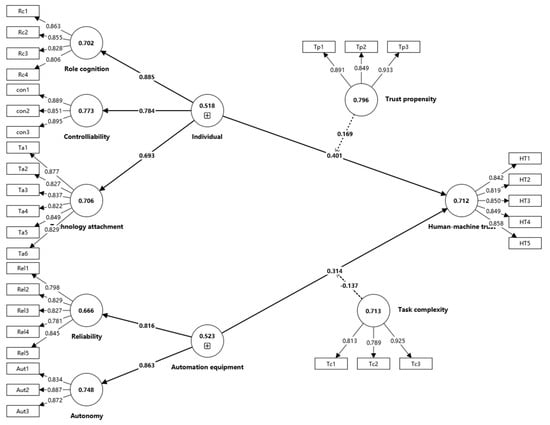

The questionnaire used in this study is divided into three main sections. The first section collects respondents’ demographic information, which is also used to examine the moderating effects of individual characteristics on the formation of human-machine trust. The second section focuses on measuring key factors influencing the formation of trust in intelligent equipment within smart construction environments. The third section aims to assess the overall level of trust the respondents have in such systems. A total of 32 items were included in the questionnaire, each measured using a 5-point Likert scale, ranging from 1 (strongly disagree) to 5 (strongly agree). These items were developed based on an extensive literature review and were adapted to the context of construction, particularly focusing on human-machine collaboration. Specifically, seven dimensions were designed to explore the trust formation mechanism: role cognition (4 items), controllability (3 items), technology attachment (6 items), autonomy (3 items), perceived reliability (5 items), trust propensity (3 items), and task complexity (3 items). For example, the “technology attachment” dimension includes six items, such as “I have become accustomed to using intelligent equipment in construction tasks” and “I feel emotionally attached to the intelligent equipment used on-site”. All items were tailored to ensure relevance to the construction industry, with a particular emphasis on the practical application and user experience of smart construction equipment. The initial SEM model is shown in Figure 3.

Figure 3.

The initial model.

The respondents were primarily professionals engaged in smart construction within China’s construction industry, including intelligent equipment operators, automated construction managers, and smart system technicians. The survey was conducted from January to April 2025 and yielded 302 responses. To maintain the integrity of the data, a two-step screening procedure was implemented: (1) incomplete responses were excluded, and (2) responses exhibiting zero standard deviation (SD) across all items, that is, the same answer to every question, were discarded. After data screening, 288 valid responses were retained. A summary of respondent demographics is presented in Table 1.

Table 1.

Demographic information of the respondents.
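The two-step screening procedure described above can be sketched as follows. This is a minimal illustration, not the authors' actual script: the list-of-lists layout, the use of `None` for a missing answer, and the function name `screen_responses` are all assumptions.

```python
from statistics import pstdev

def screen_responses(responses):
    """Keep responses that are complete and not straight-lined.

    `responses` is a list of lists of Likert scores (1-5); None marks a
    missing answer. Both conventions are assumptions for this sketch.
    """
    valid = []
    for answers in responses:
        # Step 1: exclude incomplete responses.
        if any(a is None for a in answers):
            continue
        # Step 2: exclude responses with zero standard deviation,
        # i.e. the same score chosen for every item.
        if pstdev(answers) == 0:
            continue
        valid.append(answers)
    return valid

# Hypothetical responses (illustrative only).
sample = [
    [4, 5, 3, 4],     # kept
    [3, 3, 3, 3],     # zero SD, dropped
    [5, None, 4, 2],  # incomplete, dropped
    [2, 4, 4, 5],     # kept
]
kept = screen_responses(sample)
print(len(kept), "of", len(sample), "responses retained")
```

Applied to the survey, a procedure of this kind reduced the 302 collected responses to the 288 valid ones reported above.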

4. Results

4.1. Data Reliability and Validity Test

4.1.1. Internal Consistency Results

To evaluate the internal consistency reliability of the measurement instrument, this study used Cronbach’s alpha (α) and composite reliability (CR) for each latent variable. A construct is generally considered to have good internal consistency and stability when both α and CR exceed 0.70. Both indicators show that the measurement model exhibits strong psychometric properties. Specifically, α values for all variables exceed the conventional threshold of 0.70, ranging from 0.807 (task complexity) to 0.917 (technology attachment), demonstrating acceptable to excellent internal reliability.
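For readers who wish to reproduce these two statistics outside SmartPLS, the sketch below uses the standard textbook formulas: α = k/(k − 1) · (1 − Σ item variances / variance of the summed score), and CR = (Σλ)² / ((Σλ)² + Σ(1 − λ²)) for standardized loadings λ. The item scores and loadings are hypothetical and do not come from the study.

```python
from statistics import variance

def cronbach_alpha(items):
    """items: one list of scores per questionnaire item (same respondents)."""
    k = len(items)
    item_variance_sum = sum(variance(column) for column in items)
    # Total score of each respondent across the k items.
    totals = [sum(scores) for scores in zip(*items)]
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))

def composite_reliability(loadings):
    """loadings: standardized outer loadings of one construct."""
    loading_sum_sq = sum(loadings) ** 2
    error_variance = sum(1 - l ** 2 for l in loadings)
    return loading_sum_sq / (loading_sum_sq + error_variance)

# Hypothetical data: three items answered by five respondents.
items = [
    [4, 5, 3, 4, 2],
    [4, 4, 3, 5, 2],
    [5, 5, 2, 4, 1],
]
alpha = cronbach_alpha(items)
cr = composite_reliability([0.8, 0.8, 0.8])  # hypothetical loadings

THRESHOLD = 0.70  # cut-off stated in the text
print(round(alpha, 3), alpha > THRESHOLD)
print(round(cr, 3), cr > THRESHOLD)
```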

4.1.2. Convergent Validity Results

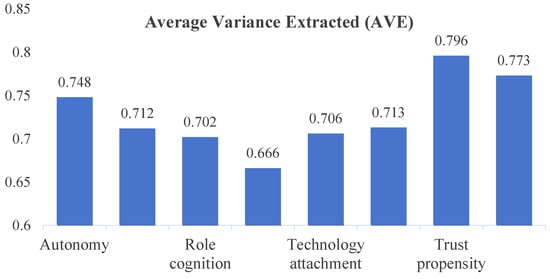

This study evaluates the convergent validity of the measurement model using confirmatory factor analysis (CFA), which tests how well the observed indicators converge on their latent variable. According to the measurement model evaluation standard, a construct is generally considered to have good convergent validity when the standardized factor loading (outer loading) of each measurement item is greater than 0.70 and the average variance extracted (AVE) is greater than 0.50. The AVE is calculated using Formula (1):

$$\mathrm{AVE} = \frac{\sum_{i=1}^{n} \lambda_i^{2}}{n} \tag{1}$$

where $\lambda_i$ represents the standardized factor loading of each indicator, and $n$ is the number of items associated with the latent construct. The detailed results of outer loadings and AVE values for each construct are presented in Table 2 and Figure 4.

Table 2.

Outer loadings and AVE values of measurement constructs.

Figure 4.

AVE Values of Latent Constructs.

The results of the confirmatory factor analysis demonstrate that all constructs exhibit adequate convergent validity. Specifically, the outer loadings of all measurement items are above the recommended threshold of 0.70, indicating that each item contributes substantially to its corresponding latent construct. Additionally, AVE values for all constructs exceed the minimum acceptable level of 0.50, ranging from 0.666 to 0.796, which confirms that the constructs explain a sufficient proportion of the variance in their indicators. These results collectively indicate that the measurement model possesses sound convergent validity and that the observed variables effectively reflect their intended latent dimensions. The measurement model diagram is shown in Figure 5.

Figure 5.

Measurement model diagram.
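The convergent validity check in this subsection amounts to averaging the squared standardized loadings of a construct and comparing the result with the 0.50 cut-off. The sketch below shows this; the three loadings are hypothetical values chosen for illustration, not entries from Table 2.

```python
def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized loadings of one construct."""
    return sum(l ** 2 for l in loadings) / len(loadings)

def has_convergent_validity(loadings, loading_min=0.70, ave_min=0.50):
    """Apply both criteria from the text: every loading > 0.70 and AVE > 0.50."""
    ave = average_variance_extracted(loadings)
    return all(l > loading_min for l in loadings) and ave > ave_min

# Hypothetical outer loadings for a three-item construct.
loadings = [0.82, 0.85, 0.79]
ave = average_variance_extracted(loadings)
print(round(ave, 3), has_convergent_validity(loadings))
```

An AVE above 0.50 means the construct explains, on average, more than half of the variance of its indicators.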

4.1.3. Assessment of Multicollinearity

To test whether there is a multicollinearity problem among the predictor variables in the structural model, this study uses the variance inflation factor (VIF). The higher the VIF value, the stronger the correlation between the variables. It is generally believed that when the VIF is less than 5, the multicollinearity problem is not serious, and a VIF value below 3 indicates that the collinearity is at a low level. The VIF is calculated as Formula (2):

$$\mathrm{VIF}_i = \frac{1}{1 - R_i^{2}} \tag{2}$$

where $R_i^{2}$ is the coefficient of determination obtained when regressing the $i$-th variable on all other predictor variables. The VIF values for all predictor variables in this study are presented in Table 3.

Table 3.

Variance inflation factor (VIF).

According to widely accepted guidelines, VIF values below 3.3 indicate the absence of serious multicollinearity problems, while values under 5 are generally considered acceptable in less conservative contexts. In this study, all VIF values range from 1.000 to 1.649, indicating that collinearity among the independent variables is minimal. This suggests that each predictor contributes unique explanatory power to the model and that the regression estimates are unlikely to be distorted due to multicollinearity.
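To make the VIF values easier to interpret, the sketch below converts between a VIF and the underlying R² from the auxiliary regression, using VIF = 1/(1 − R²). Only the largest reported value, 1.649, is taken from the text; the function names and the labels returned by `collinearity_level` are illustrative assumptions, with the cut-offs of 3 and 5 borrowed from the thresholds mentioned earlier in this subsection.

```python
def vif_from_r_squared(r_squared):
    """VIF of a predictor given the R^2 of regressing it on the others."""
    if not 0 <= r_squared < 1:
        raise ValueError("r_squared must lie in [0, 1)")
    return 1.0 / (1.0 - r_squared)

def r_squared_from_vif(vif):
    """Invert the formula: share of a predictor explained by the others."""
    return 1.0 - 1.0 / vif

def collinearity_level(vif):
    """Label a VIF using the 3 and 5 cut-offs mentioned in the text."""
    if vif < 3:
        return "low"
    if vif < 5:
        return "not serious"
    return "potentially serious"

largest_vif = 1.649  # largest value reported in this study
shared = r_squared_from_vif(largest_vif)
print(round(shared, 3), collinearity_level(largest_vif))
```

Read this way, the largest reported VIF implies that roughly 39% of that predictor's variance is shared with the other predictors, which is well within the low range.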

4.1.4. Discriminant Validity Results (Fornell-Larcker)

This study used the Fornell-Larcker criterion to evaluate discriminant validity. Under this criterion, if the correlation coefficient between a construct and every other construct is less than the square root of that construct’s AVE, the constructs in the research model have adequate discriminant validity. The square root of AVE values and the correlation coefficients between variables are presented in Table 4.

Table 4.

Square root of the AVE values and correlation coefficients between variables.

According to the Fornell-Larcker criterion, discriminant validity is established when the square root of each construct’s AVE is greater than its correlations with any other construct. The results show that the diagonal elements (i.e., the square roots of the AVEs) are consistently higher than the off-diagonal correlation coefficients in their corresponding rows and columns. This indicates that each latent variable shares more variance with its associated indicators than with other constructs, thereby confirming the presence of acceptable discriminant validity within the model.
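The comparison of diagonal and off-diagonal elements can be expressed as a short check. In this minimal sketch, the construct names `A` and `B`, the dictionary layout, and the correlation values are hypothetical; the two AVE values are simply the endpoints of the range reported above and are not tied to specific constructs.

```python
from math import sqrt

def fornell_larcker_ok(ave, correlations):
    """Return True if every inter-construct correlation is smaller than
    the square root of the AVE of both constructs involved.

    ave: dict mapping construct name -> AVE
    correlations: dict mapping (construct_a, construct_b) -> correlation
    """
    for (a, b), r in correlations.items():
        if abs(r) >= sqrt(ave[a]) or abs(r) >= sqrt(ave[b]):
            return False
    return True

# Hypothetical constructs using the reported AVE range endpoints.
ave = {"A": 0.666, "B": 0.796}
passes = fornell_larcker_ok(ave, {("A", "B"): 0.55})
fails = fornell_larcker_ok(ave, {("A", "B"): 0.85})
print(passes, fails)
```

Because the square root of the smallest reported AVE (0.666) is about 0.816, any inter-construct correlation below that value satisfies the criterion for every construct.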

4.1.5. R-Square of the Model Base Results

The R-squared (R²) and adjusted R-squared (adjusted R²) values of each endogenous variable are shown in Table 5. R² indicates the degree to which the exogenous variables explain the endogenous variable, that is, the explanatory power of the model; adjusted R² corrects R² for the number of variables in the model and is therefore more robust. The coefficient of determination for human-machine trust indicates a substantial level of explanatory power in the structural model. The R² value of 0.417 suggests that approximately 41.7% of the variance in human-machine trust is accounted for by its predictors. After adjusting for the number of predictors and sample size, the adjusted R² value is 0.405, which is close to the original estimate and indicates a stable and reliable model fit. These results confirm that the model provides a meaningful explanation of human-machine trust, capturing a considerable proportion of its variability through the independent variables included.

Table 5.

Model fitting.
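The adjustment can be checked with the usual formula, adjusted R² = 1 − (1 − R²)(n − 1)/(n − k − 1). In the sketch below, R² = 0.417 and n = 288 come from the text, whereas the number of predictors k is not stated in this section; k = 6 (two second-order constructs, two moderators, and two interaction terms) is an assumption, chosen because it is consistent with the reported value of 0.405.

```python
def adjusted_r_squared(r_squared, n_samples, n_predictors):
    """Adjusted R^2 = 1 - (1 - R^2) * (n - 1) / (n - k - 1)."""
    return 1 - (1 - r_squared) * (n_samples - 1) / (n_samples - n_predictors - 1)

R_SQUARED = 0.417      # reported in the text
N_SAMPLES = 288        # valid responses reported in the text
N_PREDICTORS = 6       # assumption: not stated in the source

adjusted = adjusted_r_squared(R_SQUARED, N_SAMPLES, N_PREDICTORS)
print(round(adjusted, 3))
```

With a sample of this size, the penalty for additional predictors is small, which is why the adjusted value stays close to the unadjusted one.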

4.1.6. F-Square of the Model Base Results (Effect Size f²)

To further evaluate the explanatory contribution of each exogenous variable to the endogenous variable in the structural model, this study introduced the effect size (f²) indicator. f² measures the change in the explanatory power (R²) of the endogenous variable when a specific exogenous variable is removed from the model. According to the criteria of Cohen [42], an f² value of 0.02 indicates a weak effect, 0.15 indicates a medium effect, and 0.35 indicates a strong effect. The effect size is computed as Formula (3):

$$f^{2} = \frac{R^{2}_{\text{included}} - R^{2}_{\text{excluded}}}{1 - R^{2}_{\text{included}}} \tag{3}$$

where $R^{2}_{\text{included}}$ and $R^{2}_{\text{excluded}}$ are the R² values of the endogenous variable when the exogenous variable is included in and removed from the model, so that the numerator represents the contribution of that variable to explanatory power. The results of the effect size (f²) analysis for all relevant constructs are summarized in Table 6.

Table 6. Effect size f2.

The f2 results for Human-machine trust indicate varying degrees of explanatory power among the associated predictors. According to Cohen’s guidelines, f2 values between 0.02 and 0.15 indicate a small effect, values between 0.15 and 0.35 indicate a medium effect, and values above 0.35 are considered large. Individual → Human-machine trust (f2 = 0.167) demonstrates a medium effect, suggesting that individual characteristics play a meaningful role in shaping trust in human-machine interaction. Automation equipment → Human-machine trust (f2 = 0.122) falls in the upper part of the small range, indicating that the technological dimension also contributes but to a slightly lesser extent. The interaction terms, Trust propensity × Individual (f2 = 0.045) and Task complexity × Automation equipment (f2 = 0.031), exhibit small effect sizes, reflecting limited moderating influence.
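The classification above can be expressed as a small lookup. The sketch below applies Cohen's cut-offs (0.02, 0.15, 0.35) to the four f2 values reported in the text; the function name and the "negligible" label for values under 0.02 are illustrative choices, not terms used by the authors.

```python
def cohen_f2_label(f2: float) -> str:
    """Map an f^2 value onto Cohen's conventional effect-size bands."""
    if f2 >= 0.35:
        return "large"
    if f2 >= 0.15:
        return "medium"
    if f2 >= 0.02:
        return "small"
    return "negligible"  # label assumed here for values below 0.02


# f^2 values as reported in the text for paths into Human-machine trust.
reported_f2 = {
    "Individual": 0.167,
    "Automation equipment": 0.122,
    "Trust propensity x Individual": 0.045,
    "Task complexity x Automation equipment": 0.031,
}

labels = {path: cohen_f2_label(value) for path, value in reported_f2.items()}
for path, label in labels.items():
    print(f"{path}: {label}")
```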

To validate the higher-level groupings of “Individual” and “Automation equipment” as second-order constructs, a second-order CFA was conducted. This analysis confirmed that the first-order constructs (role cognition, controllability, and technology attachment for Individuals; reliability and autonomy for Automation equipment) significantly loaded onto their respective second-order constructs with standardized loadings exceeding 0.70. CR and AVE values of the two second-order constructs were both above the recommended thresholds of 0.70 and 0.50, respectively. The model exhibited good overall fit (χ2/df = 1.894, GFI = 0.808, AGFI = 0.812, CFI = 0.927, RMSEA = 0.041), providing empirical support for the theoretical classification based on the three-domain model proposed by Madhavan and Wiegmann [24].
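For readers who want to reproduce the CR and AVE checks, the following sketch uses the usual formulas for standardized loadings: CR = (Σλ)² / ((Σλ)² + Σ(1 − λ²)) and AVE = Σλ² / n. The loadings in the example are hypothetical placeholders, not the values estimated in this study, and the function names are assumptions.

```python
from typing import Sequence


def composite_reliability(loadings: Sequence[float]) -> float:
    """Composite reliability from standardized loadings."""
    total = sum(loadings)
    error_variance = sum(1.0 - value ** 2 for value in loadings)
    return total ** 2 / (total ** 2 + error_variance)


def average_variance_extracted(loadings: Sequence[float]) -> float:
    """Average variance extracted: mean of the squared standardized loadings."""
    return sum(value ** 2 for value in loadings) / len(loadings)


# HYPOTHETICAL loadings of three first-order constructs on one second-order construct.
example_loadings = [0.80, 0.75, 0.70]

cr = composite_reliability(example_loadings)
ave = average_variance_extracted(example_loadings)

# Thresholds stated in the text: CR above 0.70 and AVE above 0.50.
meets_thresholds = cr > 0.70 and ave > 0.50
print(round(cr, 3), round(ave, 3), meets_thresholds)
```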

4.2. SEM Analysis

4.2.1. Path Analysis

This study used structural equation modeling (SEM) for path analysis and reported the standardized path coefficient (Original Sample), standard error (Standard Error), T value (T Statistics), and significance level (p Values) to verify the significance of each path relationship in the research model and the validity of the theoretical hypothesis. The results of hypothesis testing and the corresponding path coefficients are presented in Table 7.

Table 7. Model hypothesis verification results and path coefficients.

The structural path analysis reveals that the five first-order constructs, namely Role cognition, Controllability, and Technology attachment (under Individual) as well as Reliability and Autonomy (under Automation equipment), each exhibit statistically significant effects on Human-machine trust, thereby supporting the hypothesized relationships (H1–H5). Specifically, Role cognition displays the strongest influence (Original Sample = 0.885, T = 71.322, p < 0.001), followed by Autonomy (0.863, T = 63.625, p < 0.001) and Reliability (0.816, T = 37.455, p < 0.001). The other individual-related factors, Controllability (0.784, T = 32.154, p < 0.001) and Technology attachment (0.693, T = 24.472, p < 0.001), also yield substantial and significant path coefficients. These results suggest that both personal cognitive and emotional factors (e.g., role understanding and perceived control) and the technical performance of automation (e.g., reliable behavior and autonomous capability) contribute meaningfully to trust formation. Notably, the magnitude of the coefficients for role cognition and autonomy indicates that interpersonal and interpretive aspects may outweigh purely functional ones in the development of Human-machine trust within the construction context.
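Because the T statistic is the path coefficient divided by its standard error, the reported coefficients and T values imply a standard error for each path. The sketch below recovers those implied standard errors and a normal-approximation 95% interval. The coefficients and T values come from the text; the use of z = 1.96 is an assumption made here, since PLS-SEM software typically reports bootstrap percentile intervals that may differ.

```python
def implied_standard_error(coefficient: float, t_statistic: float) -> float:
    """Standard error implied by T = |coefficient| / SE."""
    return abs(coefficient) / t_statistic


def normal_interval(coefficient: float, standard_error: float, z: float = 1.96):
    """Approximate interval: coefficient +/- z * SE (normal approximation, assumed)."""
    half_width = z * standard_error
    return coefficient - half_width, coefficient + half_width


# (coefficient, T statistic) pairs as reported in the path analysis.
reported_paths = {
    "Role cognition": (0.885, 71.322),
    "Autonomy": (0.863, 63.625),
    "Reliability": (0.816, 37.455),
    "Controllability": (0.784, 32.154),
    "Technology attachment": (0.693, 24.472),
}

summary = {}
for name, (coefficient, t_statistic) in reported_paths.items():
    se = implied_standard_error(coefficient, t_statistic)
    summary[name] = (se, normal_interval(coefficient, se))
    low, high = summary[name][1]
    print(f"{name}: SE ~ {se:.4f}, approx. 95% interval [{low:.3f}, {high:.3f}]")
```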

4.2.2. Moderating Effect Test

The results of the moderating effect test confirm that both interaction terms exert statistically significant moderating influences on Human-machine trust. Specifically, the path Trust propensity × Individual → Human-machine trust yields a positive and significant coefficient (Original Sample = 0.169, T Statistics = 3.296, p Values = 0.001), indicating that trust propensity strengthens the effect of individual traits on Human-machine trust. This implies that when individuals have a higher tendency to trust, the influence of their individual traits on trust in machines becomes more pronounced. Conversely, the interaction path Task complexity × Automation equipment → Human-machine trust presents a negative and significant coefficient (Original Sample = −0.137, T Statistics = 2.927, p Values = 0.003), suggesting that higher task complexity weakens the positive impact of automation equipment on trust formation. Overall, the results support both moderating hypotheses, highlighting the contextual dependencies in trust development within human-machine systems.
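The reported p values can be cross-checked against the T statistics. The sketch below converts a T statistic to a two-tailed p value using the standard normal distribution, which is an approximation assumed here (with large bootstrap samples the t and normal distributions are nearly identical); the function name is hypothetical.

```python
import math


def two_tailed_p(t_statistic: float) -> float:
    """Two-tailed p value under a standard normal approximation."""
    return math.erfc(abs(t_statistic) / math.sqrt(2.0))


# T statistics reported for the two interaction paths.
p_trust_propensity = two_tailed_p(3.296)
p_task_complexity = two_tailed_p(2.927)

print(round(p_trust_propensity, 3), round(p_task_complexity, 3))
```

Rounded to three decimals, both values agree with the p values given in the text (0.001 and 0.003).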

5. Discussion

5.1. Key Factors Influencing Human-Machine Trust Formation

5.1.1. Individual-Level Factors

- Technology Attachment

This study highlights the pivotal role of technology attachment in shaping human-machine trust within construction settings. Individuals with higher attachment tend to show greater acceptance and tolerance toward intelligent systems, viewing them as dependable partners rather than mere tools. While this fosters stable trust, even in the face of minor system errors, it may also lead to “trust inertia”, where users become less vigilant in monitoring system performance over time. This aligns with Steinhauser [43], who emphasized the dual influence of emotional bonding and perceived reliability in trust formation. However, excessive attachment may cause users to overlook subtle risk cues, potentially compromising safety in complex or changing environments. Therefore, promoting a resilient trust structure, one that balances confidence with ongoing critical awareness, should be a core objective in both system design and user training. The statistical results support this finding, with a standardized path coefficient β = 0.693, a T-value of 24.472, and p < 0.001, indicating a highly significant positive effect of technology attachment on trust. This highlights that emotional connection to technology is a robust and reliable predictor of trust in smart construction environments.

- Role Cognition

In smart construction systems, users assume dual roles as both operational executors and supervisory monitors. Without clear interface prompts, task boundaries, or role guidance, this dual responsibility often leads to cognitive ambiguity, which in turn disrupts the formation of stable and rational trust judgments. The structural path analysis confirms that role cognition exerts the strongest effect on human-machine trust among all first-order constructs (β = 0.885, T = 71.322, p < 0.001), highlighting its foundational importance. This result is particularly salient in smart construction, where workers often operate in fast-changing, high-risk environments that demand not only real-time decision-making but also situational oversight. Compared to autonomous driving or manufacturing, the construction site presents greater unpredictability and demands closer human-machine coordination, making clear human-machine role boundaries essential. When users perceive the system as an auxiliary tool that supports task efficiency and execution, they tend to develop grounded, functional trust. However, when users misidentify the system as the primary decision-maker, trust may become irrationally inflated, leading to “overtrust” or even “blind trust”. Such trust miscalibration can be especially dangerous on construction sites where automation errors, if misunderstood or unanticipated, can directly impact human safety. This imbalance has been widely observed in autonomous driving scenarios [7], where poorly defined human-machine boundaries increase the risk of misuse and delayed response. Therefore, intelligent system interfaces should incorporate explicit role definitions, layered information presentation, and responsibility prompts to reinforce users’ role cognition and support accurate trust calibration in dynamic operational environments.

- Controllability

Controllability plays a crucial role in shaping trust by influencing users’ willingness to delegate authority to automated systems. This study finds a significant positive relationship between controllability and human-machine trust (β = 0.784, T = 32.154, p < 0.001), suggesting that when users believe they can intervene or override the system if necessary, they are more likely to form and maintain trust. Consistent with Hoffman [44], individuals exposed to uncertainty or risk show greater psychological resistance when their perceived control is lacking. Importantly, this perception does not always align with actual control: even in systems with restricted intervention mechanisms, users may maintain trust if they feel they understand the system’s logic or have alternative decision paths. Therefore, trust-oriented system design should go beyond offering actual override functions—such as manual control switches and action logs—and also address users’ psychological needs through real-time feedback and transparent system reasoning. In essence, a trustworthy intelligent system must support both operational control and cognitive assurance to ensure user confidence across varying task conditions.

5.1.2. System-Level Factors

- Reliability

The results of this study demonstrate a strong positive association between perceived reliability and overall trust (path coefficient = 0.816, T = 37.455, p < 0.001). This relationship is particularly salient during the initial phase of system interaction, where early impressions (such as consistent response, timely feedback, and error-free execution) play a formative role in establishing a trust baseline. This observation aligns with the “initial impression reinforcement” pathway proposed by Hoff and Bashir [7], whereby early reliable system behavior shapes subsequent trust expectations and tolerance thresholds. Therefore, establishing reliability from the outset is not merely a functional requirement but a psychological imperative in cultivating durable trust.

- Autonomy

Autonomy is a defining feature of intelligent systems, directly affecting users’ perceptions of task delegation, control, and system intelligence. In this study, autonomy emerged as the second strongest predictor of trust among all constructs (path coefficient = 0.863, T = 63.625, p < 0.001), reflecting its central role in trust dynamics. Trust formation does not follow a linear pattern with increasing autonomy. Users generally exhibit the most stable trust toward systems with moderate autonomy, where machine initiative is balanced by human oversight. In contrast, excessive autonomy can lead to alienation or overreliance, depending on users’ trust propensity and task demands. Thus, adaptive autonomy—where system autonomy adjusts to user preferences and task complexity—may be optimal for maintaining consistent trust. From a design perspective, this calls for user-adjustable autonomy settings, explainable decision-making outputs, and fallback interaction options to enable dynamic co-adaptation between humans and machines.

5.2. Moderating Effects of Trust Propensity and Task Complexity

5.2.1. Moderating Effect of Trust Propensity

The moderating effect test confirms that trust propensity significantly enhances the impact of individual-level characteristics on human-machine trust (β = 0.169, T = 3.296, p = 0.001). This indicates that individuals with a higher general tendency to trust are more susceptible to the influence of their own cognitive and emotional traits—such as perceived control and role cognition—when forming trust in intelligent systems. In practical terms, users with high trust propensity are more likely to quickly establish trust and tolerate minor system errors, while those with lower trust propensity tend to remain cautious even when system performance is stable. These findings support the necessity of adaptive interface designs that can tailor feedback transparency and interaction complexity according to users’ trust profiles.

5.2.2. Moderating Effect of Task Complexity

The analysis also reveals a significant negative moderating effect of task complexity on the relationship between automation equipment and human-machine trust (β = −0.137, T = 2.927, p = 0.003). This suggests that as task complexity increases, the positive effect of automation equipment on trust formation becomes weaker. This phenomenon can be attributed to users’ increasing cognitive load and uncertainty under complex conditions, which may reduce their willingness to rely on system automation. Users may shift their attention from system functions to external stressors, making trust more fragile and contingent on situational clarity. Overall, these findings highlight that trust in intelligent systems is not purely a function of design or personality but emerges through dynamic interactions with task environments and user predispositions. Recognizing and accommodating these moderating influences is essential for sustaining reliable human-machine collaboration.
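One common way to read such interaction coefficients is through conditional ("simple slope") effects: with standardized variables, the effect of the predictor at a given moderator level is the main effect plus the interaction coefficient times the moderator's z-score. The sketch below illustrates this. The interaction coefficients (0.169 and −0.137) are the reported values, but the main effect of 0.40 is a hypothetical placeholder because the second-order path coefficients are not restated in this section, and the function name is an assumption.

```python
def conditional_effect(main_effect: float, interaction: float, moderator_z: float) -> float:
    """Effect of the predictor on trust at a given standardized moderator level."""
    return main_effect + interaction * moderator_z


HYPOTHETICAL_MAIN_EFFECT = 0.40  # placeholder only, NOT a value reported in the paper

# Reported interaction coefficients.
TRUST_PROPENSITY_X_INDIVIDUAL = 0.169
TASK_COMPLEXITY_X_EQUIPMENT = -0.137

slopes = {}
for z in (-1.0, 0.0, 1.0):  # low, mean, and high moderator levels
    slopes[z] = (
        conditional_effect(HYPOTHETICAL_MAIN_EFFECT, TRUST_PROPENSITY_X_INDIVIDUAL, z),
        conditional_effect(HYPOTHETICAL_MAIN_EFFECT, TASK_COMPLEXITY_X_EQUIPMENT, z),
    )
    print(f"z = {z:+.0f}: individual slope = {slopes[z][0]:.3f}, "
          f"equipment slope = {slopes[z][1]:.3f}")
```

Under these assumptions, the individual-level slope grows as trust propensity rises, while the equipment slope shrinks as task complexity rises, which matches the direction of the two moderating effects discussed above.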

6. Conclusions

This study investigated the formation mechanism of human-machine trust in smart construction systems by developing and validating a structural equation model that incorporates both individual-level and system-level factors, as well as contextual moderators. The empirical results confirm that the five first-order constructs, namely role cognition, controllability, and technology attachment (under the individual dimension) as well as reliability and autonomy (under the automation equipment dimension), all have significant positive effects on human-machine trust. Among them, role cognition (β = 0.885) and system autonomy (β = 0.863) emerged as the most influential predictors, indicating that improving workers’ role clarity and enhancing system autonomy can substantially strengthen trust levels. For instance, because the path coefficients are standardized, a one-standard-deviation improvement in role cognition is associated with an estimated 0.885-standard-deviation increase in human-machine trust. This highlights the importance of clearly defined interaction roles and adaptive automation in fostering stable and rational trust.

Moreover, the study reveals two critical moderating mechanisms: Trust propensity strengthens the effect of individual traits on trust, while task complexity weakens the positive impact of automation equipment. These findings suggest that human-machine trust is not static but dynamically shaped by the interplay of user predispositions and task environments. Accordingly, system interfaces should support flexible feedback, autonomy adjustment, and adaptive guidance based on user trust profiles and task characteristics. In sum, this research not only contributes to the theoretical understanding of human-machine trust formation in smart construction contexts but also provides quantitative insights for practical implications—prioritizing improvements in role cognition and autonomy could yield the most significant gains in trust.

More importantly, strengthening human-machine trust in smart construction is critical to improving site safety, enhancing collaboration efficiency, and promoting the adoption of intelligent technologies. Future research could explore trust-building mechanisms across different construction phases and investigate how trust calibration strategies impact worker performance, risk perception, and technology acceptance over time.

7. Limitations and Future Research

Despite advancing the understanding of human-machine trust mechanisms in smart construction contexts, this study is subject to two limitations that warrant attention. First, the data were collected from a relatively concentrated sample, primarily consisting of workers from specific regions and job categories, which may limit the generalizability of the findings. Second, the study adopted a cross-sectional research design, capturing trust perceptions at a single point in time. As trust is inherently dynamic, especially in long-term human-machine interactions, this design restricts the ability to observe how trust is built, degraded, or recovered over time. Future studies should further refine and expand the measurement dimensions of trust, incorporating constructs such as trust calibration, trust repair strategies, and real-time adaptability.

To address these limitations, future research is encouraged to adopt longitudinal or mixed-method approaches to capture the temporal dynamics of trust, including its formation, breakdown, and reconstruction. In addition, incorporating more diverse and heterogeneous samples (such as users from different cultural backgrounds or experience levels, or users working with systems featuring varying degrees of automation) could provide richer insights into trust variability. Lastly, integrating objective data sources such as physiological indicators (e.g., heart rate, eye tracking) or behavioral metrics (e.g., response time, system override frequency) may help uncover the unconscious dimensions of trust and offer a more holistic view of how users cognitively and emotionally engage with intelligent systems. By advancing a more robust understanding of trust dynamics, such research could help the construction industry accelerate the safe deployment of smart equipment, reduce worker resistance to automation, and inform training programs that align human trust tendencies with system performance. These efforts will ultimately support the high-quality development of intelligent construction.

Author Contributions

Conceptualization, Y.D.; methodology, Y.D.; software, Y.D. and K.L.; validation, Y.D. and K.L.; formal analysis, W.H. and Y.G.; investigation, W.H. and Y.G.; resources, Y.D. and L.Z.; data curation, L.Z.; writing—original draft, W.H. and K.L.; writing—review and editing, Y.D. and W.H.; visualization, W.H.; supervision, Y.D. and K.L.; project administration, Y.D. and K.L.; funding acquisition, K.L. and L.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the General Project of Philosophy and Social Science Research in Colleges and Universities in Jiangsu Province (2024SJYB1031), the Suzhou Science and Technology Plan (Basic Research) Project (SJC2023002), the National Natural Science Foundation of China (72301131), and the Research and Practice Innovation Plan for Graduate Students in Jiangsu Province (SJCX24_1929).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used to support the findings of this study are available from the corresponding author upon reasonable request.

Acknowledgments

The authors express their gratitude to the experts who participated in this research survey.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

References

1. Datta, S.D.; Islam, M.; Sobuz, H.R.; Ahmed, S.; Kar, M. Artificial intelligence and machine learning applications in the project lifecycle of the construction industry: A comprehensive review. Heliyon 2024, 10, e26888.

2. Zhang, M.; Xu, R.; Wu, H.; Pan, J.; Luo, X. Human–robot collaboration for on-site construction. Autom. Constr. 2023, 150, 104812.

3. Wei, H.H.; Zhang, Y.; Sun, X.; Chen, J.; Li, S. Intelligent robots and human-robot collaboration in the construction industry: A review. J. Intell. Constr. 2023, 1, 9180002.

4. Wischnewski, M.; Krämer, N.; Müller, E. Measuring and understanding trust calibrations for automated systems: A survey of the state-of-the-art and future directions. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, Hamburg, Germany, 23–28 April 2023; pp. 1–16.

5. Karwowski, W. A review of human factors challenges of complex adaptive systems: Discovering and understanding chaos in human performance. Hum. Factors 2012, 54, 983–995.

6. Lee, J.G.; Kim, K.J.; Lee, S.; Shin, D.-H. Can autonomous vehicles be safe and trustworthy? Effects of appearance and autonomy of unmanned driving systems. Int. J. Hum. Comput. Interact. 2015, 31, 682–691.

7. Hoff, K.A.; Bashir, M. Trust in automation: Integrating empirical evidence on factors that influence trust. Hum. Factors 2015, 57, 407–434.

8. Khastgir, S.; Birrell, S.; Dhadyalla, G.; Jennings, P. Calibrating trust to increase the use of automated systems in a vehicle. In Advances in Human Aspects of Transportation: Proceedings of the AHFE 2016 International Conference on Human Factors in Transportation, Orlando, FL, USA, 27–31 July 2016; Springer International Publishing: Berlin/Heidelberg, Germany, 2017; pp. 535–546.

9. Hoffman, R.R.; Johnson, M.; Bradshaw, J.M.; Underbrink, A. Trust in automation. IEEE Intell. Syst. 2013, 28, 84–88.

10. Parasuraman, R.; Riley, V. Humans and automation: Use, misuse, disuse, abuse. Hum. Factors 1997, 39, 230–253.

11. Merritt, S.M.; Ilgen, D.R. Not all trust is created equal: Dispositional and history-based trust in human-automation interactions. Hum. Factors 2008, 50, 194–210.

12. Sheridan, T.B. Human–robot interaction: Status and challenges. Hum. Factors 2016, 58, 525–532.

13. Gao, Q.; Chen, L.; Shi, Y.; Luo, Y.; Shen, M.; Gao, Z. Trust calibration through perceptual and predictive information of the external context in autonomous vehicle. Transp. Res. Part F Traffic Psychol. Behav. 2024, 107, 537–548.

14. Wang, X.; Yu, H.; McGee, W.; Menassa, C.C.; Kamat, V.R. Enabling Building Information Model-driven human-robot collaborative construction workflows with closed-loop digital twins. Comput. Ind. 2024, 161, 104112.

15. Langer, M.; König, C.J.; Back, C.; Hemsing, V. Trust in artificial intelligence: Comparing trust processes between human and automated trustees in light of unfair bias. J. Bus. Psychol. 2023, 38, 493–508.

16. Chiou, E.K.; Lee, J.D. Trusting automation: Designing for responsivity and resilience. Hum. Factors 2023, 65, 137–165.

17. Xu, X.; Ma, L.; Ding, L. A framework for BIM-enabled life-cycle information management of construction project. Int. J. Adv. Robot. Syst. 2014, 11, 126.

18. De Visser, E.J.; Peeters, M.M.M.; Jung, M.F.; Kohn, S. Towards a theory of longitudinal trust calibration in human–robot teams. Int. J. Soc. Robot. 2020, 12, 459–478.

19. Chen, J.Y.C. Transparent Human–Agent Communications. Int. J. Hum. Comput. Interact. 2022, 38, 1737–1738.

20. Zhou, J.; Luo, S.; Chen, F. Effects of personality traits on user trust in human–machine collaborations. J. Multimodal User Interfaces 2020, 14, 387–400.

21. Sarkar, S.; Araiza-Illan, D.; Eder, K. Effects of faults, experience, and personality on trust in a robot co-worker. arXiv 2017, arXiv:1703.02335.

22. Hancock, P.A.; Billings, D.R.; Schaefer, K.E.; Chen, J.Y.C.; de Visser, E.J.; Parasuraman, R. A meta-analysis of factors affecting trust in human-robot interaction. Hum. Factors 2011, 53, 517–527.

23. Robinette, P.; Howard, A.M.; Wagner, A.R. Effect of robot performance on human–robot trust in time-critical situations. IEEE Trans. Hum. Mach. Syst. 2017, 47, 425–436.

24. Madhavan, P.; Wiegmann, D.A. Similarities and differences between human–human and human–automation trust: An integrative review. Theor. Issues Ergon. Sci. 2007, 8, 277–301.

25. Schaefer, K.E.; Chen, J.Y.C.; Szalma, J.L.; Hancock, P.A. A meta-analysis of factors influencing the development of trust in automation: Implications for understanding autonomy in future systems. Hum. Factors 2016, 58, 377–400.

26. Lyons, J.B.; aldin Hamdan, I.; Vo, T.Q. Explanations and trust: What happens to trust when a robot partner does something unexpected? Comput. Hum. Behav. 2023, 138, 107473.

27. Hinds, P.J.; Roberts, T.L.; Jones, H. Whose job is it anyway? A study of human-robot interaction in a collaborative task. Hum. Comput. Interact. 2004, 19, 151–181.

28. Kim, S.S.Y.; Watkins, E.A.; Russakovsky, O.; Fong, R.; Monroy-Hernández, A. Humans, AI, and context: Understanding end-users’ trust in a real-world computer vision application. In Proceedings of the 2023 ACM Conference on Fairness, Accountability, and Transparency, Chicago, IL, USA, 12–15 June 2023; pp. 77–88.

29. Song, Y.; Luximon, Y. Trust in AI agent: A systematic review of facial anthropomorphic trustworthiness for social robot design. Sensors 2020, 20, 5087.

30. Parasuraman, R.; Manzey, D.H. Complacency and bias in human use of automation: An attentional integration. Hum. Factors 2010, 52, 381–410.

31. Lee, J.D.; See, K.A. Trust in automation: Designing for appropriate reliance. Hum. Factors 2004, 46, 50–80.

32. Demir, K.A.; Döven, G.; Sezen, B. Industry 5.0 and human-robot co-working. Procedia Comput. Sci. 2019, 158, 688–695.

33. Gillath, O.; Ai, T.; Branicky, M.S.; Keshmiri, S.; Davison, R.B.; Spaulding, R. Attachment and trust in artificial intelligence. Comput. Hum. Behav. 2021, 115, 106607.

34. Chen, R.; Wang, R.; Fang, F.; Sadeh, N. Missing Pieces: How Framing Uncertainty Impacts Longitudinal Trust in AI Decision Aids—A Gig Driver Case Study. arXiv 2024, arXiv:2404.06432.

35. Faas, C.; Bergs, R.; Sterz, S.; Langer, M.; Feit, A.M. Give Me a Choice: The Consequences of Restricting Choices Through AI-Support for Perceived Autonomy, Motivational Variables, and Decision Performance. arXiv 2024, arXiv:2410.07728.

36. Hagos, D.H.; Alami, H.E.; Rawat, D.B. AI-Driven Human-Autonomy Teaming in Tactical Operations: Proposed Framework, Challenges, and Future Directions. arXiv 2024, arXiv:2411.09788.

37. Jessup, S.A.; Schneider, T.R.; Alarcon, G.M.; Ryan, T.J.; Capiola, A. The measurement of the propensity to trust automation. In Virtual, Augmented and Mixed Reality. Applications and Case Studies: 11th International Conference, VAMR 2019, Held as Part of the 21st HCI International Conference, HCII 2019, Orlando, FL, USA, 26–31 July 2019; Proceedings, Part II 21; Springer International Publishing: Berlin/Heidelberg, Germany, 2019; pp. 476–489.

38. Juvina, I.; Collins, M.G.; Larue, O.; Kennedy, W.G.; De Visser, E.; De Melo, C. Toward a unified theory of learned trust in interpersonal and human-machine interactions. ACM Trans. Interact. Intell. Syst. 2019, 9, 1–33.

39. Sultana, T.; Nemati, H.R. Impact of Explainable AI and Task Complexity on Human-Machine Symbiosis. In Proceedings of the AMCIS, Online, 9–13 August 2021.

40. Sapienza, A.; Cantucci, F.; Falcone, R. Modeling interaction in human–machine systems: A trust and trustworthiness approach. Automation 2022, 3, 242–257.

41. Schomakers, E.M.; Biermann, H.; Ziefle, M. Users’ preferences for smart home automation–investigating aspects of privacy and trust. Telemat. Inform. 2021, 64, 101689.

42. Cohen, J. Statistical Power Analysis for the Behavioral Sciences; Routledge: London, UK, 2013.

43. Steinhauser, S. Human–Computer Interaction: Paths to Understanding Trust in Artificial Intelligence. In Transformation in Health Care: Game-Changers in Digitalization, Technology, AI and Longevity; Springer Nature: Cham, Switzerland, 2025; pp. 87–99.

44. Hoffman, R.R. A taxonomy of emergent trusting in the human–machine relationship. In Cognitive Systems Engineering; CRC Press: Boca Raton, FL, USA, 2017; pp. 137–164.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).