Abstract

Background/Objectives: This study aimed to evaluate the ability of ChatGPT-5, a multimodal large language model, to perform automated ASPECTS assessment on non-contrast CT (NCCT) in patients with acute ischemic stroke. Methods: This retrospective, single-center study included 199 patients with anterior circulation AIS who underwent baseline NCCT before reperfusion therapy between November 2020 and February 2025. Each NCCT was evaluated by two human readers and by ChatGPT-5 using four representative images (two ganglionic and two supraganglionic). Interobserver agreement was measured with the intraclass correlation coefficient (ICC), and prognostic performance was analyzed using multivariable logistic regression and receiver operating characteristic (ROC) analysis for 3-month functional independence (mRS ≤ 2). Results: ChatGPT-5 demonstrated good-to-excellent agreement with expert consensus (ICC = 0.845; 95% CI, 0.792–0.884; κ = 0.79). ChatGPT-ASPECTS were independently associated with 3-month functional independence (OR = 1.28 per point; p = 0.004), comparable to consensus-ASPECTS (OR = 1.31; p = 0.003). Prognostic discrimination was similar between ChatGPT-5 and consensus scoring (AUC = 0.78 vs. 0.80; p = 0.41). Conclusions: ChatGPT-5 achieved high reliability and strong prognostic validity in automated ASPECTS assessment without task-specific training. These findings highlight the emerging potential of large language models for quantitative image interpretation, though clinical implementation will require multicenter validation and regulatory approval.

1. Introduction

Acute ischemic stroke (AIS) remains one of the leading causes of mortality and long-term disability worldwide [1]. Rapid and accurate evaluation of early ischemic changes (EIC) on initial non-contrast computed tomography (NCCT) is essential for clinical decision making, particularly in selecting candidates for reperfusion therapies such as intravenous thrombolysis and mechanical thrombectomy [2,3].

The Alberta Stroke Program Early CT Score (ASPECTS) provides a validated and systematic 10-point scale for quantifying EIC within the middle cerebral artery (MCA) territory and serves as an integral component of modern stroke triage protocols [4].

Despite its clinical utility, manual ASPECTS assessment is subjective and prone to interobserver variability, leading to inconsistencies that may affect patient selection and outcome prediction [5,6]. This challenge has prompted the development of artificial intelligence (AI) tools, especially those based on deep learning (DL) and machine learning, to automate ASPECTS assessment and enhance reproducibility [7,8]. In previous studies, dedicated automated ASPECTS software and DL-based models have demonstrated non-inferior performance to human experts, achieving good-to-excellent agreement while markedly reducing the time required for image interpretation and triage decisions [2,9].

The emergence of large language models (LLMs) represents a new frontier for artificial intelligence in medical imaging [10]. Trained on vast multimodal datasets, these models are capable of processing both textual and visual information, enabling applications beyond traditional natural language processing tasks [11,12]. Recent iterations such as GPT-4 and GPT-4o have shown substantial improvements in logical reasoning and accuracy on medical image-based examinations [13,14]. However, their reliability in performing highly specialized quantitative image interpretation tasks, such as ASPECTS assessment, has not yet been systematically validated.

This study aimed to evaluate the ability of ChatGPT-5 for automated ASPECTS assessment on NCCT scans in patients with AIS to support rapid and objective decision making in stroke triage.

2. Materials and Methods

2.1. Study Design and Patient Selection

This retrospective, single-center study was conducted at the Department of Radiology, Bolu Abant Izzet Baysal University Faculty of Medicine, and approved by the institutional ethics committee (approval number: 2025/415). Due to the retrospective nature of the study, the requirement for written informed consent was waived. Patients who presented with AIS between November 2020 and February 2025 and underwent baseline NCCT before reperfusion therapy were included. Inclusion criteria were as follows: (1) acute anterior circulation infarction involving the MCA territory, (2) availability of diagnostic-quality NCCT images, and (3) complete clinical, angiographic, and outcome data. Patients with posterior circulation infarctions, significant motion artifacts, chronic parenchymal sequelae, hemorrhagic transformation, or incomplete data were excluded to restrict the cohort to anterior circulation strokes, for which ASPECTS is validated, and to minimize image-quality and chronic lesion confounders that could compromise reliable ASPECTS assessment.

2.2. Imaging Protocol

All NCCT examinations were performed on a 64-slice multidetector CT scanner (Revolution EVO, GE Healthcare, Milwaukee, WI, USA) using a standardized protocol: 120 kV, 320 mAs, 0.5 mm detector collimation, gantry rotation 400 ms, pitch 0.641, FOV 450–500 mm, and axial slice thickness 5 mm (with thin-section reconstructions available as needed).

2.3. ASPECTS Assessment by Human Readers

Each baseline NCCT was independently evaluated by one radiologist (≥5 years of experience in general radiology) and one neurologist (≥5 years of experience in stroke imaging) using the ASPECTS method [4]. ASPECTS was determined on two standard axial NCCT levels: the ganglionic level (through the thalamus and basal ganglia) and the supraganglionic level (at the level of the corona radiata). The MCA territory was divided into 10 regions: the caudate, lentiform nucleus, internal capsule, insula, and six cortical regions (M1–M6). One point was subtracted for each region showing early ischemic change, defined as parenchymal hypoattenuation or focal loss of gray–white matter differentiation relative to the contralateral hemisphere. Final ASPECTS values ranged from 0 to 10.
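The scoring rule described above can be sketched in a few lines of Python. This is a minimal illustration, not the software used in the study; the region labels and the function name `aspects_score` are assumptions introduced here for clarity.

```python
# Hypothetical labels for the 10 ASPECTS regions of the MCA territory.
ASPECTS_REGIONS = (
    "caudate", "lentiform", "internal_capsule", "insula",
    "M1", "M2", "M3", "M4", "M5", "M6",
)


def aspects_score(affected_regions):
    """Return 10 minus the number of distinct regions with early ischemic change."""
    affected = set(affected_regions)  # a region is counted once even if listed twice
    unknown = affected - set(ASPECTS_REGIONS)
    if unknown:
        raise ValueError(f"Unknown ASPECTS region(s): {sorted(unknown)}")
    return len(ASPECTS_REGIONS) - len(affected)


# Example: hypoattenuation in the insula, lentiform nucleus, and M2.
example = aspects_score(["insula", "lentiform", "M2"])
```

A scan with no early ischemic change yields 10, and involvement of all ten regions yields 0, matching the 0 to 10 range stated above.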

Both readers were blinded to all clinical and angiographic information and to each other’s results. In cases of disagreement, a consensus score was established by a neuroradiologist with more than 10 years of experience, whose decision was accepted as the reference standard (ASPECTS-consensus).

2.4. AI-Based ASPECTS Assessment Using ChatGPT-5

For the automated assessment, ASPECTS scoring was performed using ChatGPT-5 (OpenAI, San Francisco, CA, USA), accessed through the web-based ChatGPT interface (https://chatgpt.com) with the vision-capable model and default settings, without any fine-tuning or Custom GPT. For each patient, four representative axial NCCT images (two at the ganglionic level and two at the supraganglionic level corresponding to standard ASPECTS planes) were exported from the PACS as 8-bit grayscale JPEG files (512 × 512 pixels) [2,9]. These images were uploaded into ChatGPT-5, and the following standardized prompt was entered for each case: “Please determine the ASPECT score from these non-contrast head CT images for the right/left hemisphere affected by acute ischemic stroke.” Each patient was analyzed in a separate chat session to prevent model carryover effects from previous evaluations [15]. Each case was analyzed once using the hosted ChatGPT-5 model via the web-based interface. File names were anonymized, and any potentially identifiable personal information embedded in the image metadata was removed to ensure complete data anonymization and patient confidentiality [16].

2.5. Clinical and Angiographic Data

Clinical and demographic variables, including age, sex, vascular risk factors (hypertension, diabetes mellitus, coronary artery disease, atrial fibrillation, and hyperlipidemia), and stroke laterality, were collected from the electronic medical record system. Angiographic outcomes were evaluated using the modified Thrombolysis in Cerebral Infarction (mTICI) scale; successful reperfusion was defined as mTICI 2b–3. Functional outcome was determined using the modified Rankin Scale (mRS) at 3 months post-stroke; mRS ≤ 2 was defined as functional independence [17]. Hemorrhagic transformation (HT) was evaluated on follow-up imaging within 48 h after treatment and classified according to the Heidelberg Bleeding Classification [18].

2.6. Statistical Analysis

All statistical analyses were performed using R software (version 4.3.1, R Foundation for Statistical Computing, Vienna, Austria). The distribution of continuous variables was assessed using the Shapiro–Wilk test. Continuous data were summarized as mean ± standard deviation when approximately normally distributed and as median (interquartile range) otherwise. For group comparisons of continuous variables, Student’s t-test was used for normally distributed variables and the Mann–Whitney U test for non-normally distributed variables. Categorical variables were compared using the Chi-square test or Fisher’s exact test, as appropriate. These tests were chosen according to the measurement scale and distribution of the variables and are standard methods in stroke outcome research. To evaluate the interobserver agreement among the three assessors (radiologist, neurologist, and ChatGPT-5), the Intraclass Correlation Coefficient (ICC) was calculated. ICC analysis was performed using a two-way random effects model under the assumption of absolute agreement. ICC values were interpreted as follows: below 0.50, poor; between 0.50 and 0.75, moderate; between 0.75 and 0.90, good; and above 0.90, excellent agreement. Agreement between ChatGPT-5 and consensus was further assessed with Bland–Altman analysis. For functional independence (3-month mRS ≤ 2), multivariable logistic regression models included ASPECTS (per 1-point increase) and clinical covariates (age, sex, successful reperfusion [mTICI 2b–3], and other prespecified factors where applicable). Model discrimination was assessed using the area under the receiver operating characteristic (ROC) curve (AUC), with pairwise comparisons performed using the DeLong test. Statistical significance was set at p < 0.05.
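For readers unfamiliar with the ICC form named above, the following is a minimal pure-Python sketch of the single-rater, two-way random effects, absolute-agreement coefficient, commonly written ICC(2,1), computed from the usual ANOVA mean squares. It is not the R code used for the analyses; the function names are hypothetical, and the handling of the exact boundary values (0.50, 0.75, 0.90) in `interpret_icc` is an assumption, since the text does not specify it.

```python
def icc_2_1(ratings):
    """ICC(2,1) for a table with one row per subject and one column per rater."""
    n = len(ratings)       # number of subjects
    k = len(ratings[0])    # number of raters
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    ss_rows = k * sum((m - grand) ** 2 for m in row_means)   # between subjects
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)   # between raters
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    msr = ss_rows / (n - 1)
    msc = ss_cols / (k - 1)
    mse = ss_error / ((n - 1) * (k - 1))
    return (msr - mse) / (msr + (k - 1) * mse + k * (msc - mse) / n)


def interpret_icc(icc):
    """Map an ICC to the categories listed in the text (boundary handling assumed)."""
    if icc < 0.50:
        return "poor"
    if icc < 0.75:
        return "moderate"
    if icc <= 0.90:
        return "good"
    return "excellent"


# Two raters who rank patients identically but differ by a constant 2 points:
shifted = icc_2_1([[1, 3], [2, 4], [3, 5]])
```

Because the absolute-agreement form penalizes differences in rater means, the constant offset in the toy example lowers the coefficient to one third even though the two raters order the patients identically.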

3. Results

A total of 199 patients (101 men and 98 women; mean age, 70.2 ± 12.5 years) with AIS were included between November 2020 and February 2025. Baseline clinical and angiographic characteristics, including vascular risk factors, occlusion sites, and treatment details, are summarized in Table 1.

Table 1.

Baseline Clinical and Angiographic Characteristics of the Study Population.

Pairwise interobserver reliability among ChatGPT-5, the radiologist, and the neurologist demonstrated good-to-excellent agreement (ICC range, 0.807–0.854; all p < 0.001) (Table 2). Specifically, ICC values were 0.854 (95% CI, 0.797–0.894) between the radiologist and neurologist, 0.845 (95% CI, 0.792–0.884) between ChatGPT-5 and the consensus, 0.821 (95% CI, 0.765–0.868) between ChatGPT-5 and the radiologist, and 0.807 (95% CI, 0.745–0.856) between ChatGPT-5 and the neurologist. Overall, three-rater agreement was low (ICC [2,1] = 0.451; 95% CI, 0.335–0.555; p < 0.001), reflecting variability among all raters. For dichotomized ASPECT categories (<7 vs. ≥7), ChatGPT-5 and consensus readers showed substantial categorical agreement (Cohen’s κ = 0.79; 95% CI, 0.71–0.86; p < 0.001).
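The categorical agreement statistic reported above can be illustrated with a short sketch of Cohen's κ applied to scores dichotomized at ASPECTS ≥ 7. The scores below are invented for illustration only and are not study data; the function names are hypothetical.

```python
def dichotomize(scores, threshold=7):
    """Label each ASPECTS value as True when it is at or above the threshold."""
    return [score >= threshold for score in scores]


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two raters assigning categorical labels to the same cases."""
    labels_a, labels_b = list(labels_a), list(labels_b)
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    categories = set(labels_a) | set(labels_b)
    # Chance agreement from the product of each rater's marginal proportions.
    expected = sum(
        (labels_a.count(c) / n) * (labels_b.count(c) / n) for c in categories
    )
    if expected == 1.0:
        return 1.0  # both raters used a single identical category throughout
    return (observed - expected) / (1.0 - expected)


# Hypothetical scores for eight patients (not study data).
model_scores = [10, 9, 8, 7, 6, 5, 8, 9]
consensus_scores = [9, 8, 7, 6, 6, 5, 8, 6]
kappa = cohens_kappa(dichotomize(model_scores), dichotomize(consensus_scores))
```

In this toy example the raters agree on 6 of 8 cases (0.75) against a chance agreement of 0.50, so κ is 0.5.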

Table 2.

Interobserver Agreement and Categorical Concordance for ASPECTS assessment.

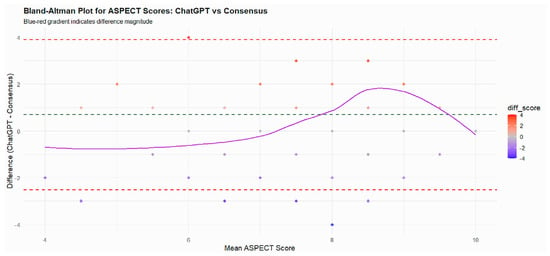

The mean ASPECT score assigned by ChatGPT-5 (8.64 ± 1.78) was slightly higher than that of the consensus (7.96 ± 1.36), yielding a mean difference of 0.68 (95% CI, 0.42–0.93; p < 0.001). Both ASPECTS distributions fulfilled the normality assumption on Shapiro–Wilk testing; therefore, the within-patient difference between ChatGPT-5 and consensus scores was evaluated using a paired t-test. The Bland–Altman plot demonstrated a small positive bias, with ChatGPT-5 assigning on average slightly higher ASPECTS values than the neuroradiology consensus. Most data points clustered near the zero-difference line and within the 95% limits of agreement, indicating good concordance overall, whereas larger positive differences were mainly observed at higher mean ASPECTS values, consistent with a mild tendency of ChatGPT-5 to overestimate ASPECTS in cases with relatively limited ischemic changes (Figure 1).

Figure 1.

The Bland–Altman plot illustrates the agreement between ASPECT scores obtained from ChatGPT and the consensus scoring by experts. Each point represents a patient, plotted according to the mean of the two scores (x-axis) and the difference between ChatGPT and consensus scores (y-axis). The solid purple line represents the LOESS trend, showing how the differences vary with the mean ASPECT score. The green dashed line indicates the mean difference (bias), and the red dashed lines represent the limits of agreement (±1.96 SD). Most differences cluster around zero, and the majority fall within the limits of agreement, indicating good agreement between ChatGPT and consensus scores. Slight deviations at the extremes suggest minor over- or underestimation by ChatGPT in very low or high ASPECT scores, but overall reliability is high.
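The bias and limits of agreement shown in a Bland–Altman plot follow directly from the paired differences. The sketch below, using only the Python standard library, shows the calculation on invented scores; it is not the plotting code used for Figure 1, and the function name is hypothetical.

```python
from statistics import mean, stdev


def bland_altman(scores_a, scores_b):
    """Return (bias, lower limit, upper limit) for paired measurements.

    The bias is the mean of the paired differences (a minus b), and the
    limits of agreement are bias ± 1.96 sample standard deviations.
    """
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    bias = mean(diffs)
    sd = stdev(diffs)
    return bias, bias - 1.96 * sd, bias + 1.96 * sd


# Hypothetical paired scores (not study data): model first, consensus second.
bias, lower, upper = bland_altman([9, 8, 10, 7, 9], [8, 8, 9, 7, 8])
```

A positive bias, as in this toy example (0.6 points), corresponds to the first rater scoring higher on average than the second.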

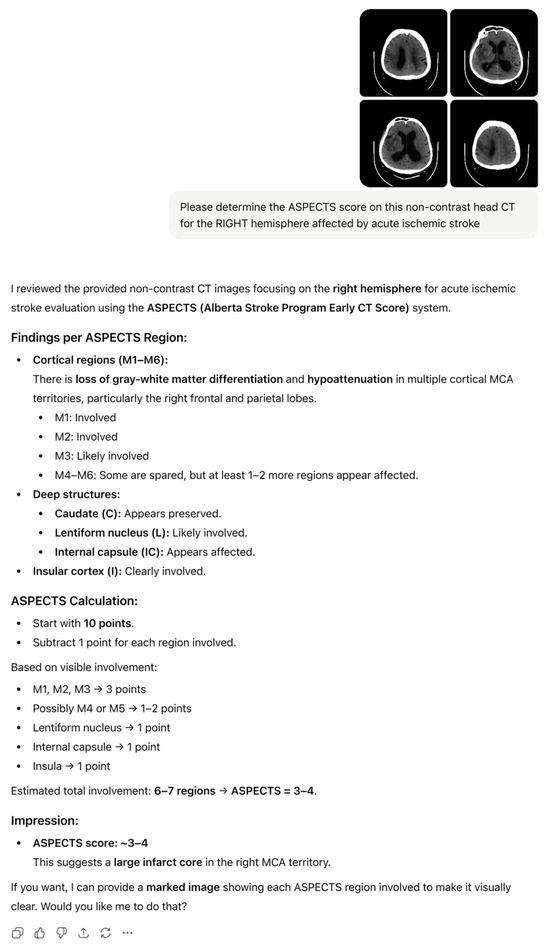

For both ChatGPT-5 and consensus ASPECT scores dichotomized as <7 or ≥7, chi-square analyses showed no significant associations with mTICI success, functional independence, or HT (all p > 0.25). Representative examples of ChatGPT-5 output, including region-wise ASPECTS breakdown and narrative interpretation for the left and right hemispheres, are shown in Figure 2 and Figure 3.

Figure 2.

ChatGPT-5-generated ASPECTS assessment for left MCA ischemia on baseline NCCT, with region-wise scoring and total ASPECTS.

Figure 3.

ChatGPT-5-generated ASPECTS assessment for right MCA ischemia with a large infarct core, showing regional involvement and estimated total ASPECTS.

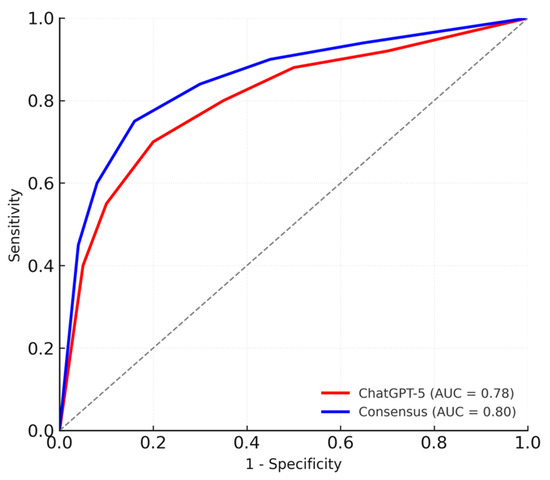

In multivariable logistic regression, higher ChatGPT-ASPECTS were independently associated with 3-month functional independence (mRS ≤ 2) (OR = 1.28 per point; 95% CI: 1.09–1.52; p = 0.004). When the same model was applied using consensus-ASPECTS, comparable associations were observed (OR = 1.31 per point; 95% CI: 1.11–1.54; p = 0.003) (Table 3). In both models, ASPECTS was entered as a continuous predictor (per 1-point increase), which allowed us to use the full information of the scale rather than relying solely on dichotomized categories. The discriminative ability of the logistic regression models was also comparable between ChatGPT-5 and consensus scoring (AUC = 0.78 vs. 0.80; p = 0.41, DeLong test), with both ROC curves lying well above the diagonal reference line and closely overlapping across the full range of 1 − specificity, indicating similar and good discrimination for 3-month functional independence (Figure 4).
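Because ASPECTS enters the model as a continuous predictor, the per-point odds ratio compounds multiplicatively across larger score differences, provided the log-odds are linear in ASPECTS as the logistic model assumes. A minimal sketch follows; the function name is hypothetical.

```python
import math


def odds_ratio_for_difference(or_per_point, points):
    """Odds ratio implied by a logistic model for a difference of `points` units.

    With coefficient beta = ln(OR per point), a k-point difference
    corresponds to exp(k * beta), which equals OR ** k.
    """
    beta = math.log(or_per_point)
    return math.exp(points * beta)


# Using the reported per-point estimate for ChatGPT-ASPECTS (OR = 1.28):
three_point_or = odds_ratio_for_difference(1.28, 3)
```

Under this assumption, a 3-point higher ASPECTS corresponds to roughly 2.1-fold higher adjusted odds of functional independence (1.28³ ≈ 2.10).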

Table 3.

Multivariable Logistic Regression Analysis for Predictors of Functional Independence (mRS ≤ 2 at 3 Months).

Figure 4.

ROC Curves Comparing ChatGPT-5 and Consensus ASPECTS for Predicting Functional Independence.
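The AUC has a direct probabilistic reading: it is the probability that a randomly chosen patient with a good outcome receives a higher model score than a randomly chosen patient with a poor outcome, with ties counted as one half. The sketch below computes it this way on invented values; it is not the R code used for the study, does not implement the DeLong comparison, and the function name is hypothetical.

```python
def roc_auc(scores, outcomes):
    """AUC computed as the proportion of concordant positive/negative pairs."""
    positives = [s for s, y in zip(scores, outcomes) if y]
    negatives = [s for s, y in zip(scores, outcomes) if not y]
    if not positives or not negatives:
        raise ValueError("Both outcome classes are required to compute an AUC.")
    concordant = 0.0
    for p in positives:
        for q in negatives:
            if p > q:
                concordant += 1.0
            elif p == q:
                concordant += 0.5  # ties contribute half a concordant pair
    return concordant / (len(positives) * len(negatives))


# Hypothetical predicted probabilities and outcomes (1 = mRS <= 2), not study data.
auc = roc_auc([0.9, 0.8, 0.7, 0.4, 0.3, 0.6], [1, 1, 0, 0, 0, 1])
```

Here 8 of the 9 positive/negative pairs are concordant, giving an AUC of about 0.89.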

4. Discussion

This study evaluated the ability of ChatGPT-5 to perform automated ASPECTS assessment on NCCT scans in patients with AIS. Using a standardized prompt and four representative NCCT images, ChatGPT-5 demonstrated good-to-excellent reliability and substantial concordance with expert consensus, suggesting potential utility as an assistive tool for rapid stroke triage.

The interobserver reliability analysis revealed strong agreement between ChatGPT-5 and the expert consensus (ICC = 0.845; 95% CI, 0.792–0.884), meeting the threshold for good-to-excellent agreement. This level of consistency is comparable to that reported for several dedicated DL systems developed for automated ASPECTS assessment, such as the Heuron ASPECTS software (ICC = 0.78) and other convolutional network-based models (mean ICC ≈ 0.72–0.83) [7,19,20]. These findings demonstrate that a general-purpose multimodal LLM, when guided by task-specific prompts and targeted image selection, can achieve diagnostic reliability previously attainable only with specially designed DL architectures [13,21,22]. Moreover, categorical agreement at the clinically relevant dichotomized threshold (ASPECTS < 7 vs. ≥7; κ = 0.79) further supports ChatGPT-5’s consistency for binary clinical decision making.

Previous studies on automated ASPECTS assessment have mainly used deep learning models trained on large stroke imaging datasets. These systems have shown high accuracy, with reported sensitivities around 90% and overall accuracies close to 83% [9,19,21]. Despite their success, they require extensive dataset preparation and model training, and their performance can vary when applied to scans from different institutions or imaging protocols [23,24,25]. In contrast, ChatGPT-5 is a general-purpose AI model that is not specifically trained on stroke imaging. Its comparable reliability demonstrates that LLMs can perform structured quantitative interpretation through cross-modal reasoning without task-specific retraining [26].

Recent studies have shown that LLMs such as GPT-4 can perform advanced reasoning in radiology reporting, diagnostic decision support, and quality improvement tasks [27,28]. These text-based applications demonstrate their adaptability to radiologic workflows and clinical reasoning.

Previous evaluations of multimodal LLMs in imaging domains such as musculoskeletal, chest, and abdominal radiography have typically shown moderate diagnostic accuracy, suggesting that model performance depends heavily on the task structure [22,29]. The high reliability observed in ASPECTS assessment may be related to its reliance on symmetry-based lesion detection and binary regional assessment, which appear to align well with the visual reasoning patterns observed in LLMs [21].

Pairwise ICC values between ChatGPT-5 and each human reader, and between the two human readers, were good-to-excellent, indicating high overall agreement; however, the overall three-rater ICC was low, likely reflecting systematic level shifts in scoring behavior rather than random unreliability of the model. In line with this, ChatGPT-5 tended to assign slightly higher ASPECT scores than human experts (mean difference = 0.68, p < 0.001), indicating a mild conservative bias that may lead to underestimation of ischemic extent. This conservative tendency seemed to be most pronounced in cases with very subtle early ischemic changes (near-threshold loss of gray–white differentiation or minimal insular ribbon blurring), in which neuroradiology experts judged regional involvement while ChatGPT-5 frequently retained a higher ASPECTS value. Such borderline findings are a well-known source of interobserver variability and likely account for a substantial proportion of the outlier cases with larger disagreement between human and AI-based ASPECTS assessment. Similar upward scoring trends have been reported with previous DL systems, likely reflecting the model’s tendency to avoid false-positive lesion detection in mildly hypo-attenuated regions [7]. Despite this minor difference, ChatGPT-ASPECTS were independently associated with 3-month functional independence (mRS ≤ 2) in multivariable analysis (OR = 1.28 per point, p = 0.004), consistent with the consensus results (OR = 1.31, p = 0.003). Both ChatGPT-5 and consensus scoring achieved comparable prognostic performance for functional outcomes (AUC = 0.78 vs. 0.80, p = 0.41, DeLong test), suggesting that ChatGPT-5 can extract clinically meaningful imaging information rather than relying solely on pixel-level pattern recognition.

No significant relationship was observed between ASPECTS categories and either mTICI success or HT, consistent with previous studies showing that ASPECTS is primarily predictive of functional outcomes [30,31].

ChatGPT-5’s agreement with expert readers suggests potential as a supplementary decision-support tool, particularly in centers lacking dedicated neuroradiology expertise, as it can directly interpret standard DICOM-converted images and generate an ASPECTS estimate within seconds of NCCT acquisition. This accessibility and speed may enhance the applicability of AI-assisted triage, especially in community hospitals and resource-limited environments. At the same time, any automated ASPECTS tool carries a risk of diagnostic error, including under- or overestimation of ischemic changes, and therefore must be used under careful human supervision. Real-world implementation will also require addressing issues of reproducibility and regulatory compliance. ChatGPT-5 should therefore presently be regarded as a research-grade adjunct rather than an independent clinical decision engine [28,29,32].

An additional conceptual limitation is that ChatGPT-5 is a general-purpose LLM rather than a model specifically optimized for clinical or radiology tasks. In biomedical applications, further performance gains often require domain-adaptation strategies such as retrieval-augmented generation (RAG), fine-tuning on curated medical datasets, and systematic prompt engineering with human feedback. These approaches can help reduce hallucinations, improve alignment with expert knowledge, and increase task fidelity, but they also demand high-quality labeled data, rigorous validation, and additional computational infrastructure. Exploring such strategies for LLM-based ASPECTS assessment and acute stroke imaging represents a natural direction for future work [33].

This study has several limitations. First, its retrospective, single-center design limits the generalizability of the findings. Differences in CT scanner types, acquisition parameters, and reconstruction protocols across institutions may influence model performance. Second, we used a single run of the hosted ChatGPT-5 model for each case and did not systematically repeat the analyses to quantify intra-model variability. Moreover, because ChatGPT-5 is a closed-source, cloud-based system that can be updated by the vendor, the exact internal model state at the time of our experiment cannot be fully reproduced; future studies should incorporate explicit model versioning and repeated runs to formally characterize the stability and reproducibility of LLM-based ASPECTS assessment. Third, ChatGPT-5 was provided with only four representative axial NCCT slices (two ganglionic and two supraganglionic levels) rather than the entire scan volume. Although these standardized planes generally capture the bulk of MCA territory ischemia and allow consistent comparison across cases, restricting the input to four 2D images may reduce sensitivity for very small or subtle infarcts located outside these slices and may partly explain the slightly higher ASPECTS values assigned by ChatGPT-5 compared with the neuroradiology consensus. Future multimodal LLM workflows that can directly ingest and interpret the full NCCT volume (e.g., slice-wise or 3D volumetric analysis of the entire DICOM series) may enable more comprehensive lesion detection and more accurate regional scoring. Fourth, the model operated without access to clinical context, information that human readers routinely incorporate into image interpretation. Fifth, the binary ASPECTS threshold (ASPECTS < 7 vs. ≥7) may have reduced prognostic sensitivity in this cohort, as few patients had low scores. Future studies should assess alternative cutoffs and explore continuous relationships between ASPECTS and outcomes. Finally, ChatGPT-5, like other LLMs, operates as a closed-source ‘black box’ system and remains susceptible to variability and potential interpretive errors. Occasional errors or inconsistencies cannot be completely ruled out, especially as future model updates may alter its behavior. Incorporating interpretability methods, such as visual attention mapping, may help clarify model decision pathways. These approaches could also improve transparency and user trust in LLM-based imaging systems.

5. Conclusions

ChatGPT-5 demonstrated high reliability and strong prognostic validity in automated ASPECTS assessment on NCCT for AIS. Its near-human performance achieved without task-specific training highlights the expanding role of multimodal LLMs in quantitative image interpretation. Although these findings support the feasibility of LLM-based stroke imaging analysis, clinical implementation will require multicenter prospective validation, standardized prompting frameworks, and regulatory approval before integration into clinical workflow.

Author Contributions

Project conceptualization and study design: S.G. and A.B.Y.; Data collection: S.G., S.E., A.E.S., H.Ö., Y.Y. and T.S.; Statistical analysis: A.B.Y.; Manuscript drafting: S.G.; writing—review and editing: S.G. and A.B.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Non-Interventional Clinical Research Ethics Committee of Bolu Abant Izzet Baysal University (Decision no: 2025/415, Date: 23 September 2025).

Informed Consent Statement

The requirement for written informed consent was waived due to the retrospective design.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| AIS | Acute ischemic stroke |
| AI | Artificial intelligence |
| ASPECTS | Alberta Stroke Program Early CT Score |
| AUC | Area under the curve |
| DL | Deep learning |
| EIC | Early ischemic change |
| HT | Hemorrhagic transformation |
| ICC | Intraclass correlation coefficient |
| LLM | Large language model |
| mRS | Modified Rankin Scale |
| mTICI | Modified Thrombolysis in Cerebral Infarction |
| NCCT | Non-contrast computed tomography |
| OR | Odds ratio |
| RAG | Retrieval-augmented generation |
| ROC | Receiver operating characteristic |
| SD | Standard deviation |

References

1. Kim, J.; Thayabaranathan, T.; Donnan, G.A.; Howard, G.; Howard, V.J.; Rothwell, P.M.; Feigin, V.; Norrving, B.; Owolabi, M.; Pandian, J.; et al. Global Stroke Statistics 2019. Int. J. Stroke 2020, 15, 819–838.

2. Wei, J.; Shang, K.; Wei, X.; Zhu, Y.; Yuan, Y.; Wang, M.; Ding, C.; Dai, L.; Sun, Z.; Mao, X.; et al. Deep Learning-Based Automatic ASPECTS Calculation Can Improve Diagnosis Efficiency in Patients with Acute Ischemic Stroke: A Multicenter Study. Eur. Radiol. 2025, 35, 627–639.

3. Powers, W.J.; Rabinstein, A.A.; Ackerson, T.; Adeoye, O.M.; Bambakidis, N.C.; Becker, K.; Biller, J.; Brown, M.; Demaerschalk, B.M.; Hoh, B.; et al. Guidelines for the Early Management of Patients with Acute Ischemic Stroke: 2019 Update to the 2018 Guidelines for the Early Management of Acute Ischemic Stroke: A Guideline for Healthcare Professionals From the American Heart Association/American Stroke Association. Stroke 2019, 50, E344–E418.

4. Barber, P.A.; Demchuk, A.M.; Zhang, J.; Buchan, A.M. Validity and Reliability of a Quantitative Computed Tomography Score in Predicting Outcome of Hyperacute Stroke before Thrombolytic Therapy. Lancet 2000, 355, 1670–1674.

5. Farzin, B.; Fahed, R.; Guilbert, F.; Poppe, A.Y.; Daneault, N.; Durocher, A.P.; Lanthier, S.; Boudjani, H.; Khoury, N.N.; Roy, D.; et al. Early CT Changes in Patients Admitted for Thrombectomy: Intrarater and Interrater Agreement. Neurology 2016, 87, 249–256.

6. Wilson, A.T.; Dey, S.; Evans, J.W.; Najm, M.; Qiu, W.; Menon, B.K. Minds Treating Brains: Understanding the Interpretation of Non-Contrast CT ASPECTS in Acute Ischemic Stroke. Expert Rev. Cardiovasc. Ther. 2018, 16, 143–153.

7. Kuang, H.; Najm, M.; Chakraborty, D.; Maraj, N.; Sohn, S.I.; Goyal, M.; Hill, M.D.; Demchuk, A.M.; Menon, B.K.; Qiu, W. Automated ASPECTS on Noncontrast CT Scans in Patients with Acute Ischemic Stroke Using Machine Learning. Am. J. Neuroradiol. 2019, 40, 33–38.

8. Lee, E.J.; Kim, Y.H.; Kim, N.; Kang, D.W. Deep into the Brain: Artificial Intelligence in Stroke Imaging. J. Stroke 2017, 19, 277.

9. Chiang, P.L.; Lin, S.Y.; Chen, M.H.; Chen, Y.S.; Wang, C.K.; Wu, M.C.; Huang, Y.T.; Lee, M.Y.; Chen, Y.S.; Lin, W.C. Deep Learning-Based Automatic Detection of ASPECTS in Acute Ischemic Stroke: Improving Stroke Assessment on CT Scans. J. Clin. Med. 2022, 11, 5159.

10. Zong, H.; Wu, R.; Cha, J.; Wang, J.; Wu, E.; Li, J.; Zhou, Y.; Zhang, C.; Feng, W.; Shen, B. Large Language Models in Worldwide Medical Exams: Platform Development and Comprehensive Analysis. J. Med. Internet Res. 2024, 26, e66114.

11. Yang, X.; Chen, W. The Performance of ChatGPT on Medical Image-Based Assessments and Implications for Medical Education. BMC Med. Educ. 2025, 25, 1192.

12. Fink, M.A.; Bischoff, A.; Fink, C.A.; Moll, M.; Kroschke, J.; Dulz, L.; Heußel, C.P.; Kauczor, H.U.; Weber, T.F. Potential of ChatGPT and GPT-4 for Data Mining of Free-Text CT Reports on Lung Cancer. Radiology 2023, 308, e231362.

13. Keshavarz, P.; Bagherieh, S.; Nabipoorashrafi, S.A.; Chalian, H.; Rahsepar, A.A.; Kim, G.H.J.; Hassani, C.; Raman, S.S.; Bedayat, A. ChatGPT in Radiology: A Systematic Review of Performance, Pitfalls, and Future Perspectives. Diagn. Interv. Imaging 2024, 105, 251–265.

14. Handa, P.; Chhabra, D.; Goel, N.; Krishnan, S. Exploring the Role of ChatGPT in Medical Image Analysis. Biomed. Signal Process. Control 2023, 86, 105292.

15. Şahin, M.F.; Ateş, H.; Keleş, A.; Özcan, R.; Doğan, Ç.; Akgül, M.; Yazıcı, C.M. Responses of Five Different Artificial Intelligence Chatbots to the Top Searched Queries About Erectile Dysfunction: A Comparative Analysis. J. Med. Syst. 2024, 48, 1–6.

16. Deng, J.; Heybati, K.; Shammas-Toma, M. When Vision Meets Reality: Exploring the Clinical Applicability of GPT-4 with Vision. Clin. Imaging 2024, 108, 110101.

17. Bilgin, C.; Ibrahim, M.; Ghozy, S.; Jabal, M.S.; Shehata, M.; Kobeissi, H.; Kadirvel, R.; Brinjikji, W.; Rabinstein, A.A.; Kallmes, D.F. Disability-Free Outcomes after Mechanical Thrombectomy: A Systematic Review and Meta-Analysis of the Randomized Controlled Trials. Interv. Neuroradiol. 2024, 15910199231224826.

18. Von Kummer, R.; Broderick, J.P.; Campbell, B.C.V.; Demchuk, A.; Goyal, M.; Hill, M.D.; Treurniet, K.M.; Majoie, C.B.L.M.; Marquering, H.A.; Mazya, M.V.; et al. The Heidelberg Bleeding Classification. Stroke 2015, 46, 2981–2986.

19. Lee, S.J.; Park, G.; Kim, D.; Jung, S.; Song, S.; Hong, J.M.; Shin, D.H.; Lee, J.S. Clinical Evaluation of a Deep-Learning Model for Automatic Scoring of the Alberta Stroke Program Early CT Score on Non-Contrast CT. J. Neurointerv. Surg. 2023, 16, 61.

20. Adamou, A.; Beltsios, E.T.; Bania, A.; Gkana, A.; Kastrup, A.; Chatziioannou, A.; Politi, M.; Papanagiotou, P. Artificial Intelligence-Driven ASPECTS for the Detection of Early Stroke Changes in Non-Contrast CT: A Systematic Review and Meta-Analysis. J. Neurointerv. Surg. 2023, 15, E298–E304.

21. Cao, Z.; Xu, J.; Song, B.; Chen, L.; Sun, T.; He, Y.; Wei, Y.; Niu, G.; Zhang, Y.; Feng, Q.; et al. Deep Learning Derived Automated ASPECTS on Non-Contrast CT Scans of Acute Ischemic Stroke Patients. Hum. Brain Mapp. 2022, 43, 3023–3036.

22. Temel, M.H.; Erden, Y.; Bağcıer, F. Evaluating Artificial Intelligence Performance in Medical Image Analysis: Sensitivity, Specificity, Accuracy, and Precision of ChatGPT-4o on Kellgren-Lawrence Grading of Knee X-Ray Radiographs. Knee 2025, 55, 79–84.

23. Qiu, W.; Kuang, H.; Teleg, E.; Ospel, J.M.; Sohn, S.I.; Almekhlafi, M.; Goyal, M.; Hill, M.D.; Demchuk, A.M.; Menon, B.K. Machine Learning for Detecting Early Infarction in Acute Stroke with Non-Contrast-Enhanced CT. Radiology 2020, 294, 638–644.

24. Shamout, F.E.; Shen, Y.; Wu, N.; Kaku, A.; Park, J.; Makino, T.; Jastrzębski, S.; Witowski, J.; Wang, D.; Zhang, B.; et al. An Artificial Intelligence System for Predicting the Deterioration of COVID-19 Patients in the Emergency Department. NPJ Digit. Med. 2021, 4, 80.

25. Gauriau, R.; Bizzo, B.C.; Comeau, D.S.; Hillis, J.M.; Bridge, C.P.; Chin, J.K.; Pawar, J.; Pourvaziri, A.; Sesic, I.; Sharaf, E.; et al. Head CT Deep Learning Model Is Highly Accurate for Early Infarct Estimation. Sci. Rep. 2023, 13, 189.

26. Brown, T.B.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models Are Few-Shot Learners. arXiv 2020, arXiv:2005.14165.

27. Bhayana, R.; Bleakney, R.R.; Krishna, S. GPT-4 in Radiology: Improvements in Advanced Reasoning. Radiology 2023, 307, 230987.

28. Temperley, H.C.; O’Sullivan, N.J.; Mac Curtain, B.M.; Corr, A.; Meaney, J.F.; Kelly, M.E.; Brennan, I. Current Applications and Future Potential of ChatGPT in Radiology: A Systematic Review. J. Med. Imaging Radiat. Oncol. 2024, 68, 257–264.

29. Lacaita, P.G.; Galijasevic, M.; Swoboda, M.; Gruber, L.; Scharll, Y.; Barbieri, F.; Widmann, G.; Feuchtner, G.M. The Accuracy of ChatGPT-4o in Interpreting Chest and Abdominal X-Ray Images. J. Pers. Med. 2025, 15, 194.

30. Maingard, J.; Paul, A.; Churilov, L.; Mitchell, P.; Dowling, R.; Yan, B. Recanalisation Success Is Independent of ASPECTS in Predicting Outcomes after Intra-Arterial Therapy for Acute Ischaemic Stroke. J. Clin. Neurosci. 2014, 21, 1344–1348.

31. Tian, B.; Tian, X.; Shi, Z.; Peng, W.; Zhang, X.; Yang, P.; Li, Z.; Zhang, X.; Lou, M.; Yin, C.; et al. Clinical and Imaging Indicators of Hemorrhagic Transformation in Acute Ischemic Stroke After Endovascular Thrombectomy. Stroke 2022, 53, 1674–1681.

32. Shen, Y.; Heacock, L.; Elias, J.; Hentel, K.D.; Reig, B.; Shih, G.; Moy, L. ChatGPT and Other Large Language Models Are Double-Edged Swords. Radiology 2023, 307, e230163.

33. Lee, R.C.; Hadidchi, R.; Coard, M.C.; Rubinov, Y.; Alamuri, T.; Liaw, A.; Chandrupatla, R.; Duong, T.Q. Use of Large Language Models on Radiology Reports: A Scoping Review. J. Am. Coll. Radiol. 2025; in press.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).